Apple-Harvesting Robot Based on the YOLOv5-RACF Model

Abstract

1. Introduction

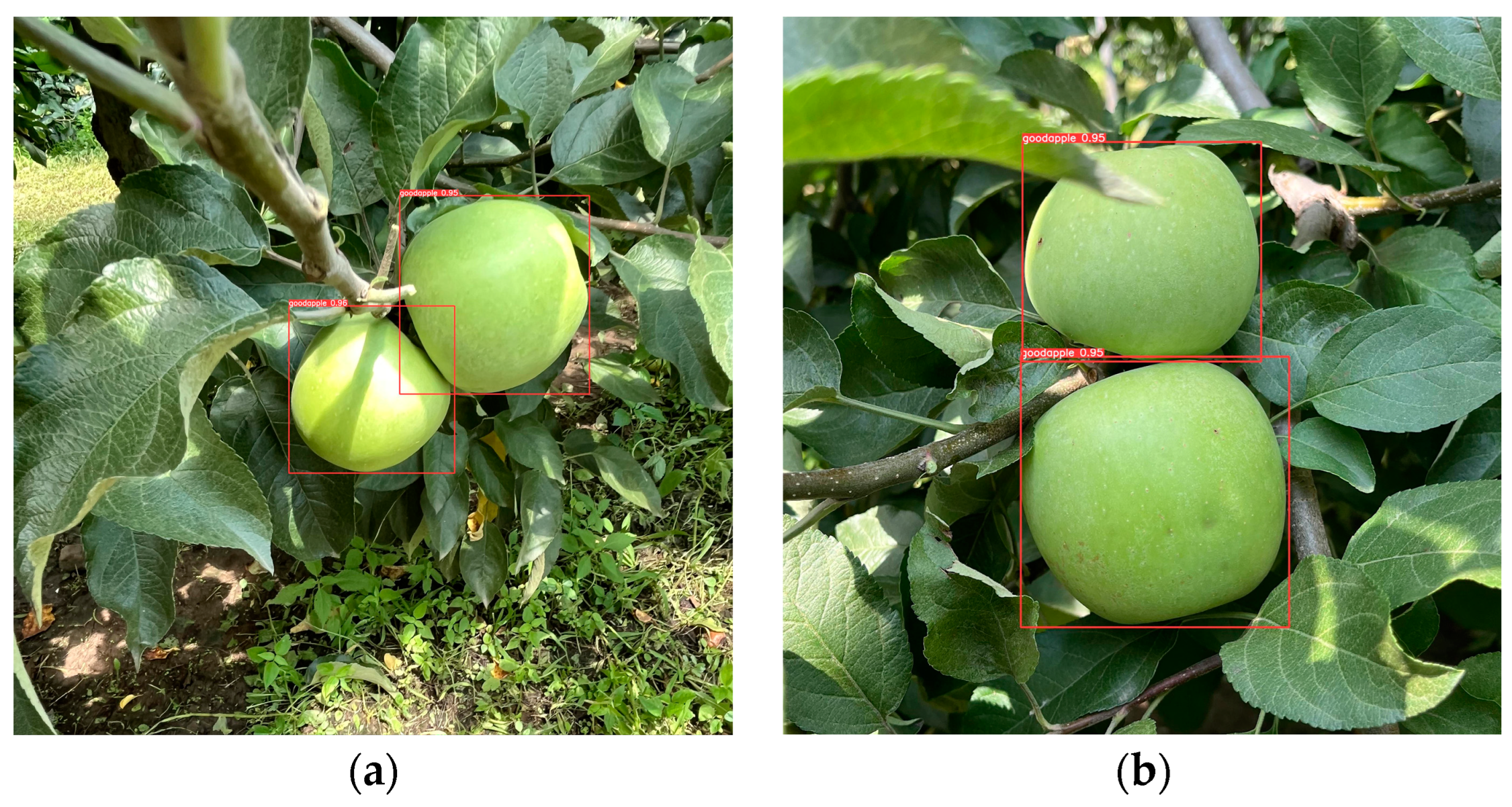

- We propose the YOLOv5-RACF algorithm, which uses YOLOv5 for target detection, the random sample consensus (RANSAC) algorithm for apple contour fitting, and a custom activation function that filters outliers, in order to determine the diameter of each apple. The objective is to control the opening and closing angle of the robotic arm gripper, reduce mechanical damage during fruit harvesting, and classify fruit by size.

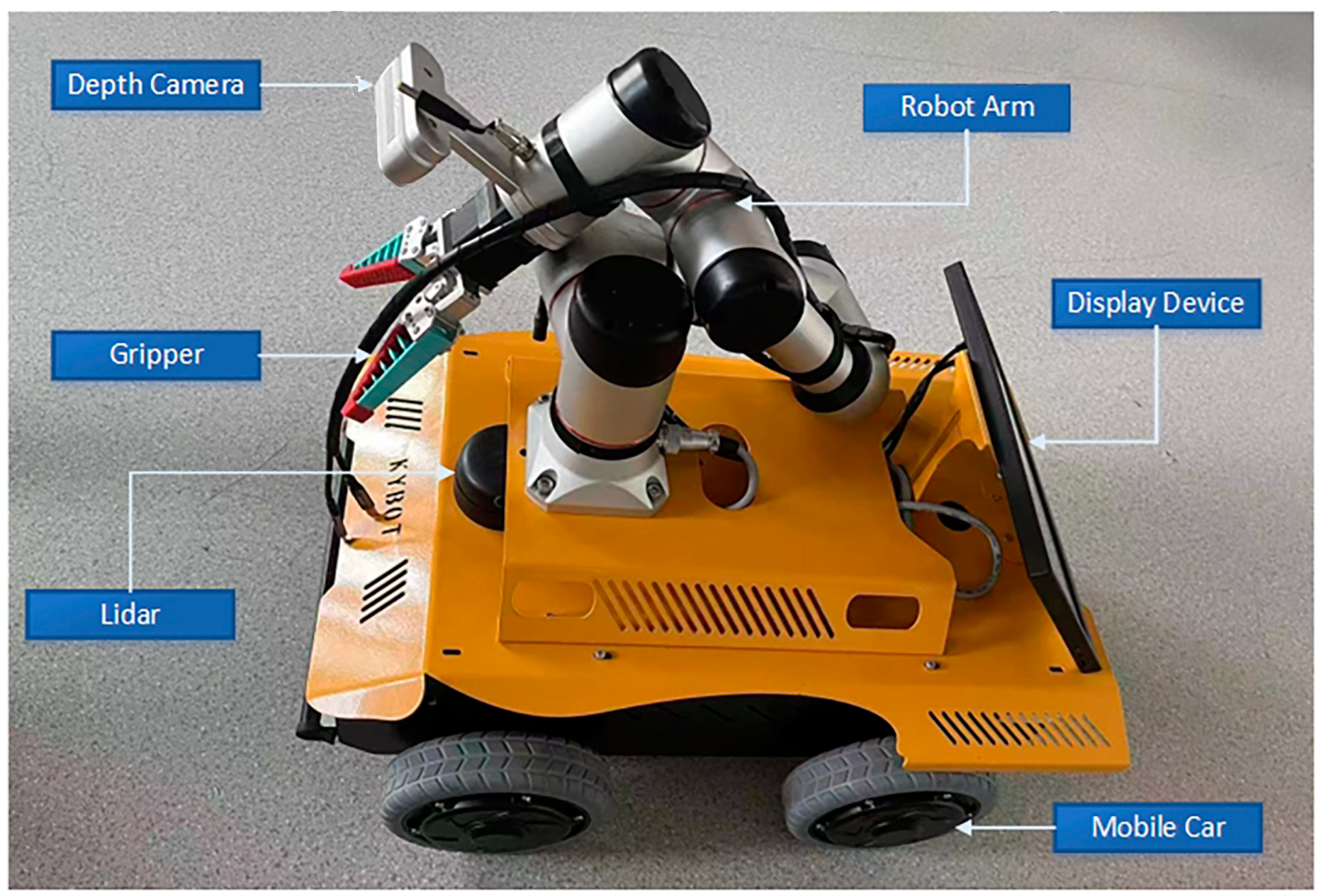

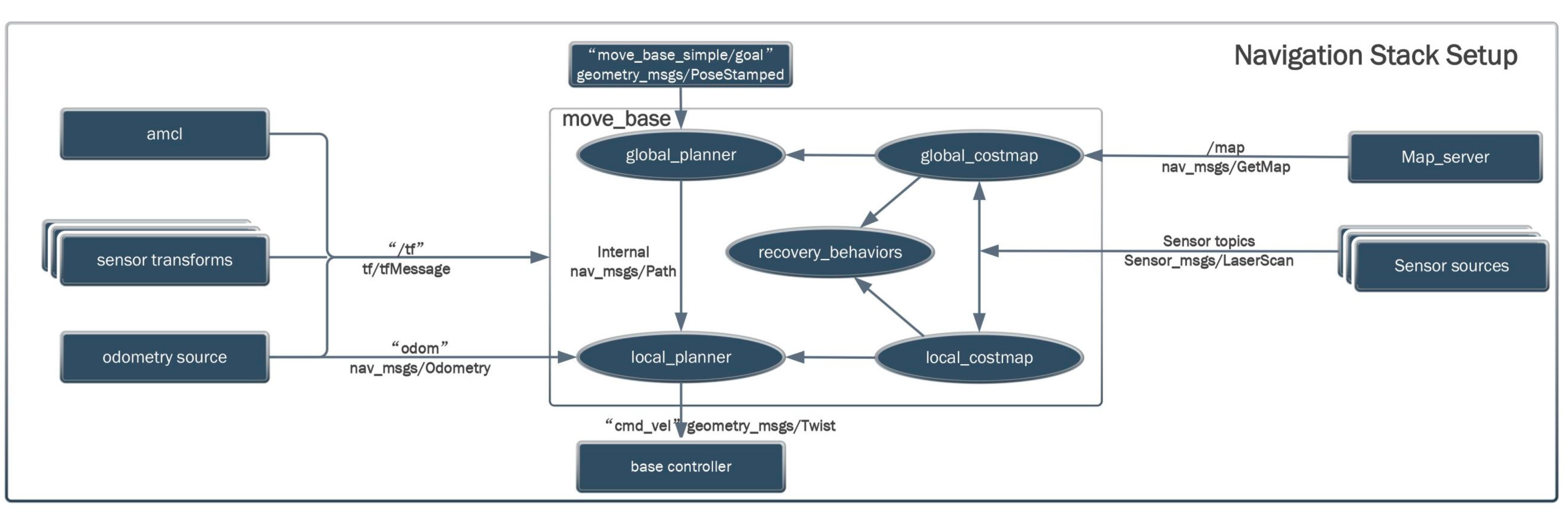

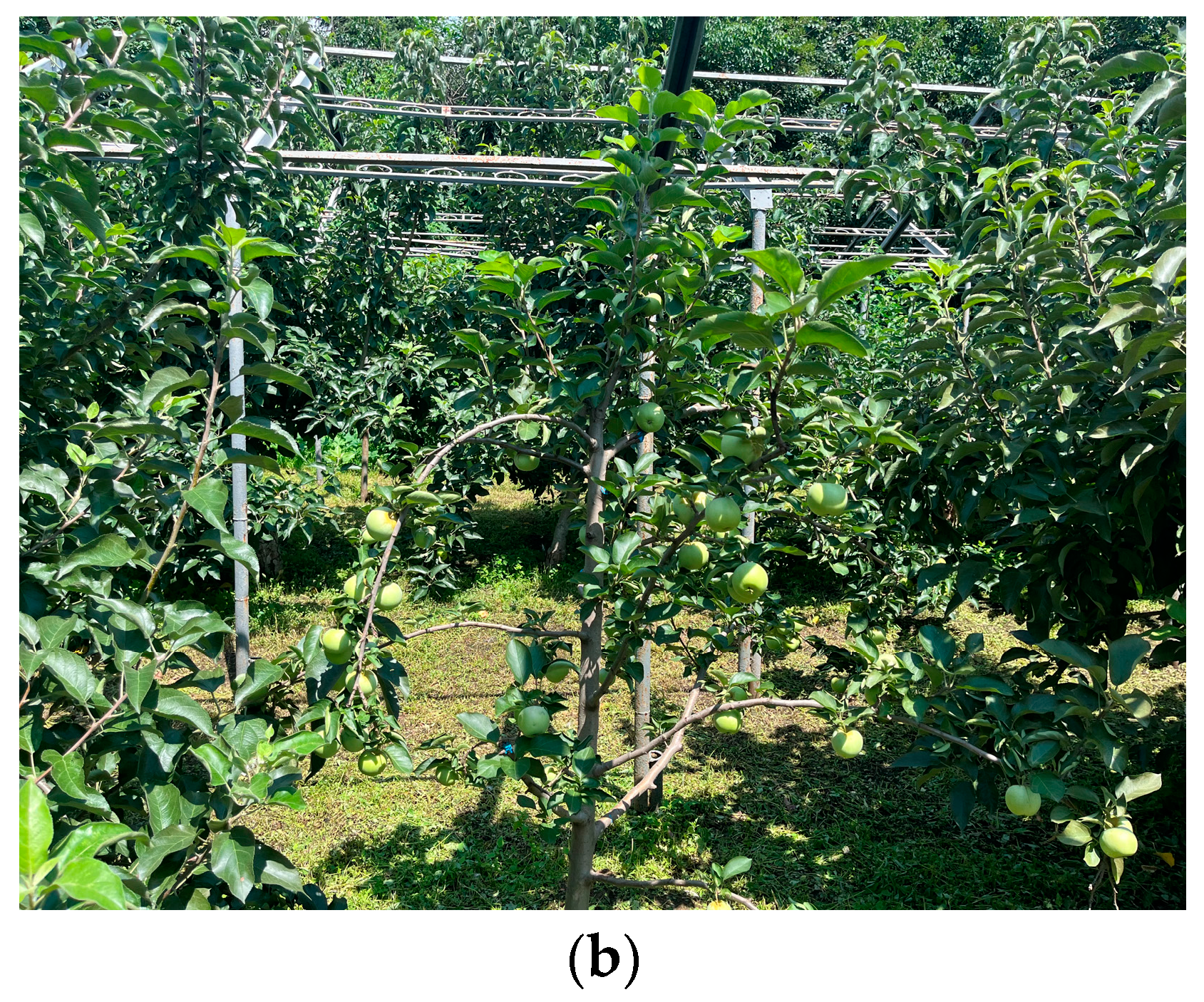

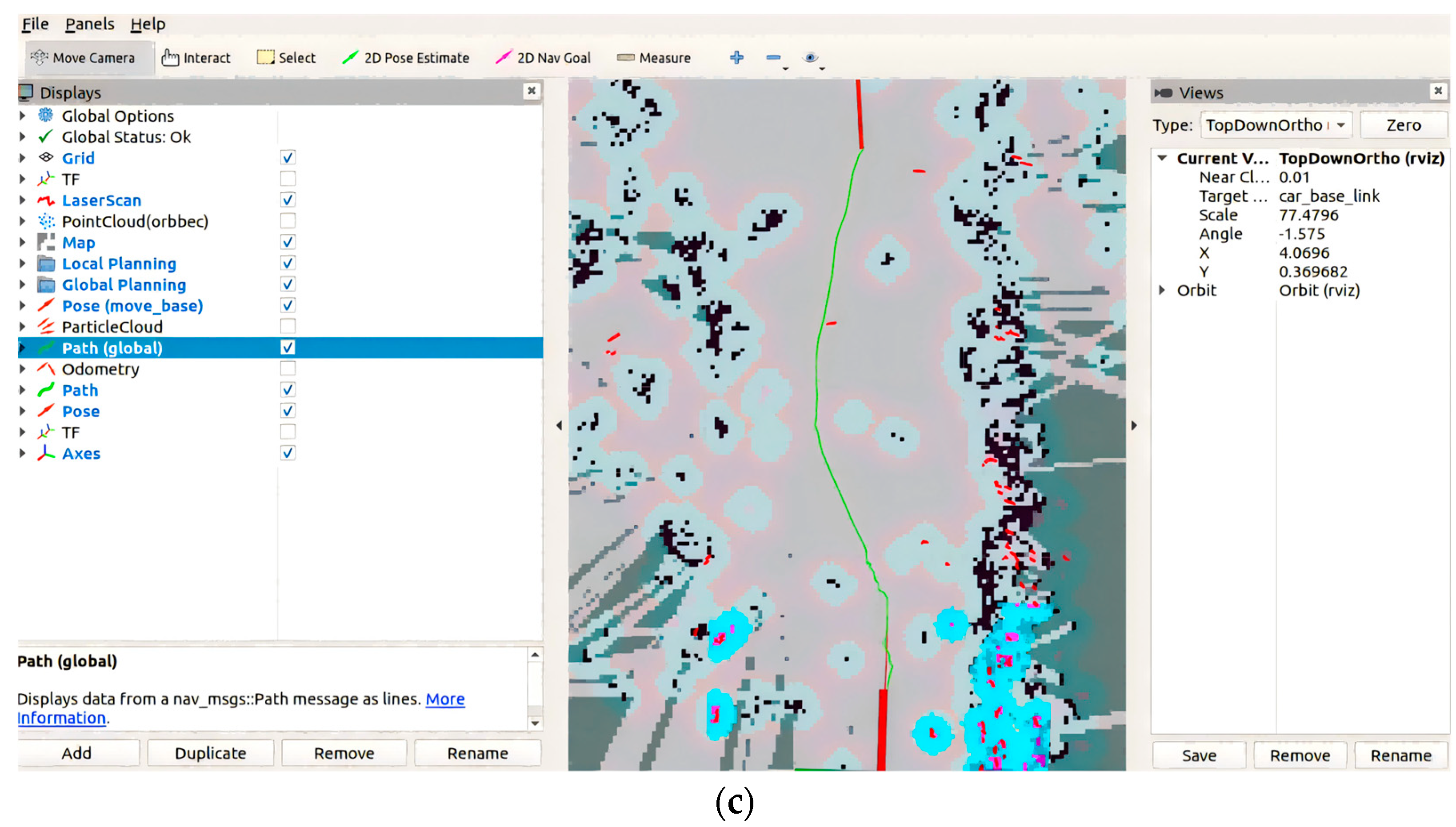

- We intend to equip the robot’s autonomous navigation hardware with LiDAR and an inertial measurement unit (IMU), and to combine several algorithms for high-precision map construction, navigation, and obstacle avoidance. The gmapping algorithm creates high-precision maps of closed environments, and the Dijkstra, A*, and timed elastic band (TEB) algorithms handle navigation and obstacle avoidance.

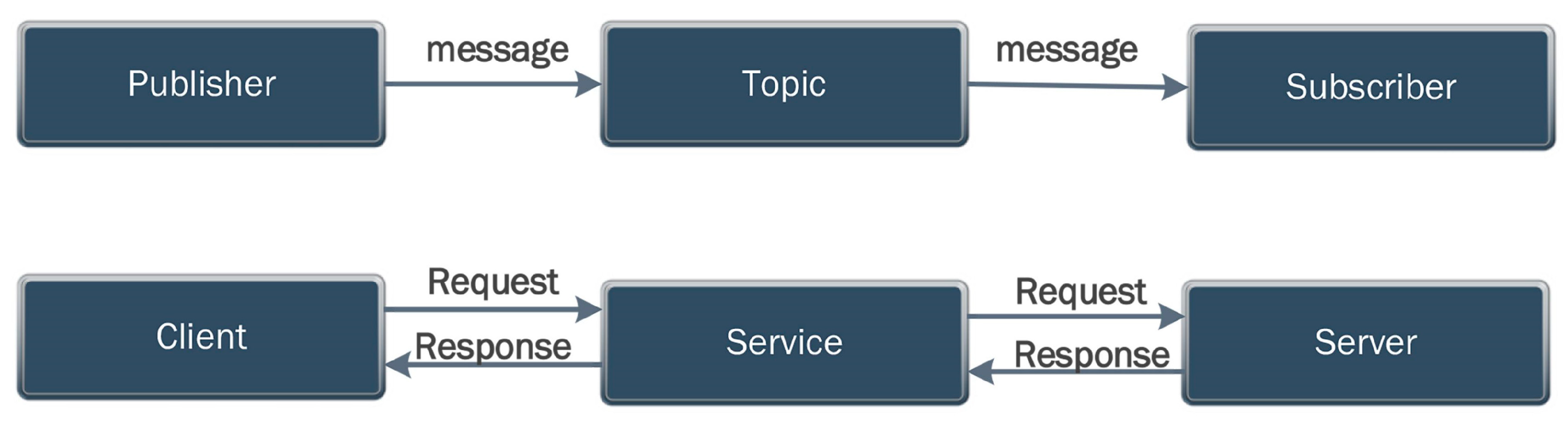

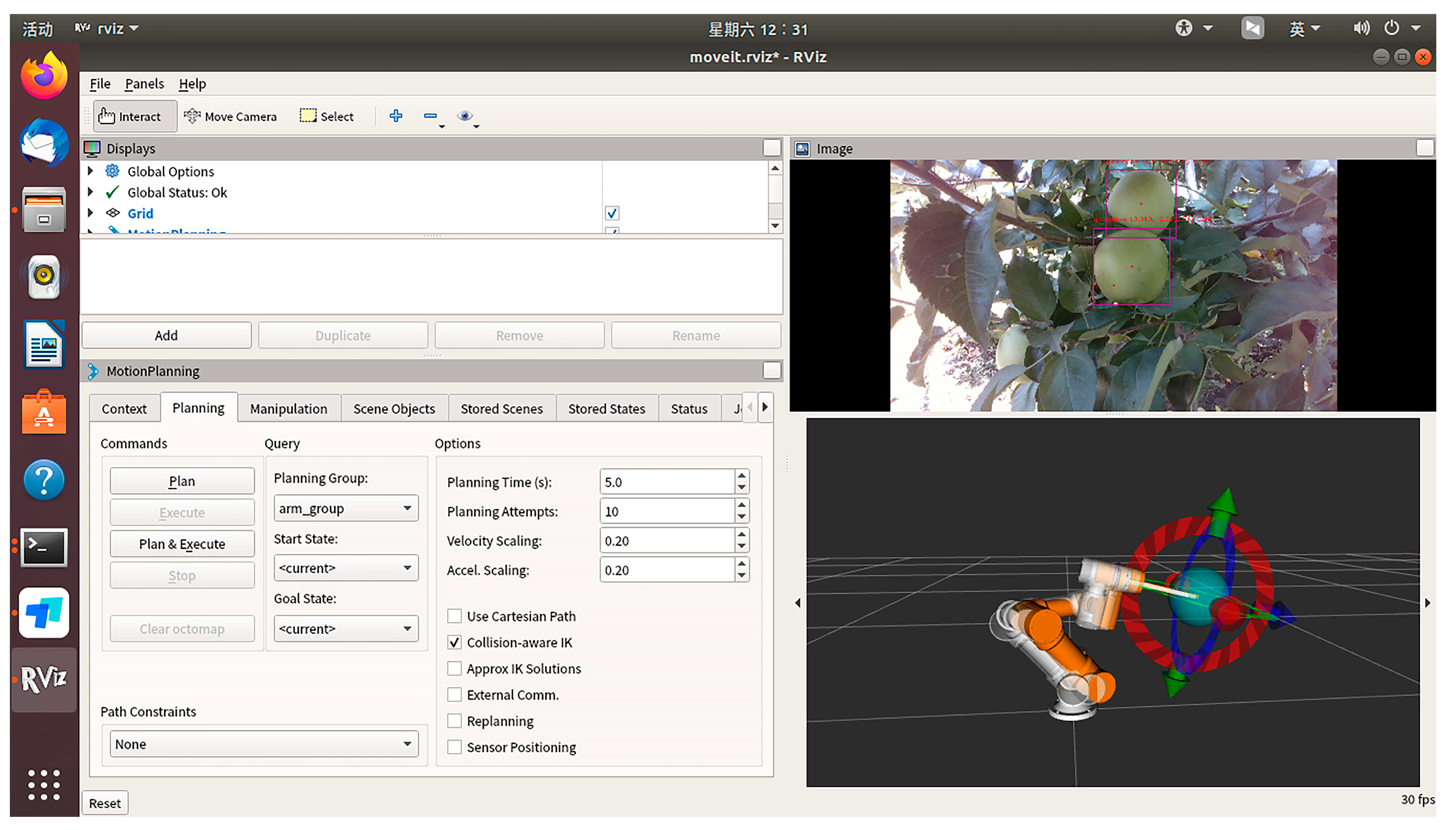

- We aim to integrate the robot’s grasping system with the autonomous navigation and obstacle avoidance system within the ROS framework. Through ROS communication mechanisms, the system picks apples while navigating autonomously and avoiding obstacles. This integration enhances the robot’s environmental perception and path planning capabilities and improves its efficiency in autonomous operation in complex environments. To validate the effectiveness of the picking robot system, orchard trials were conducted.

2. Materials and Methods

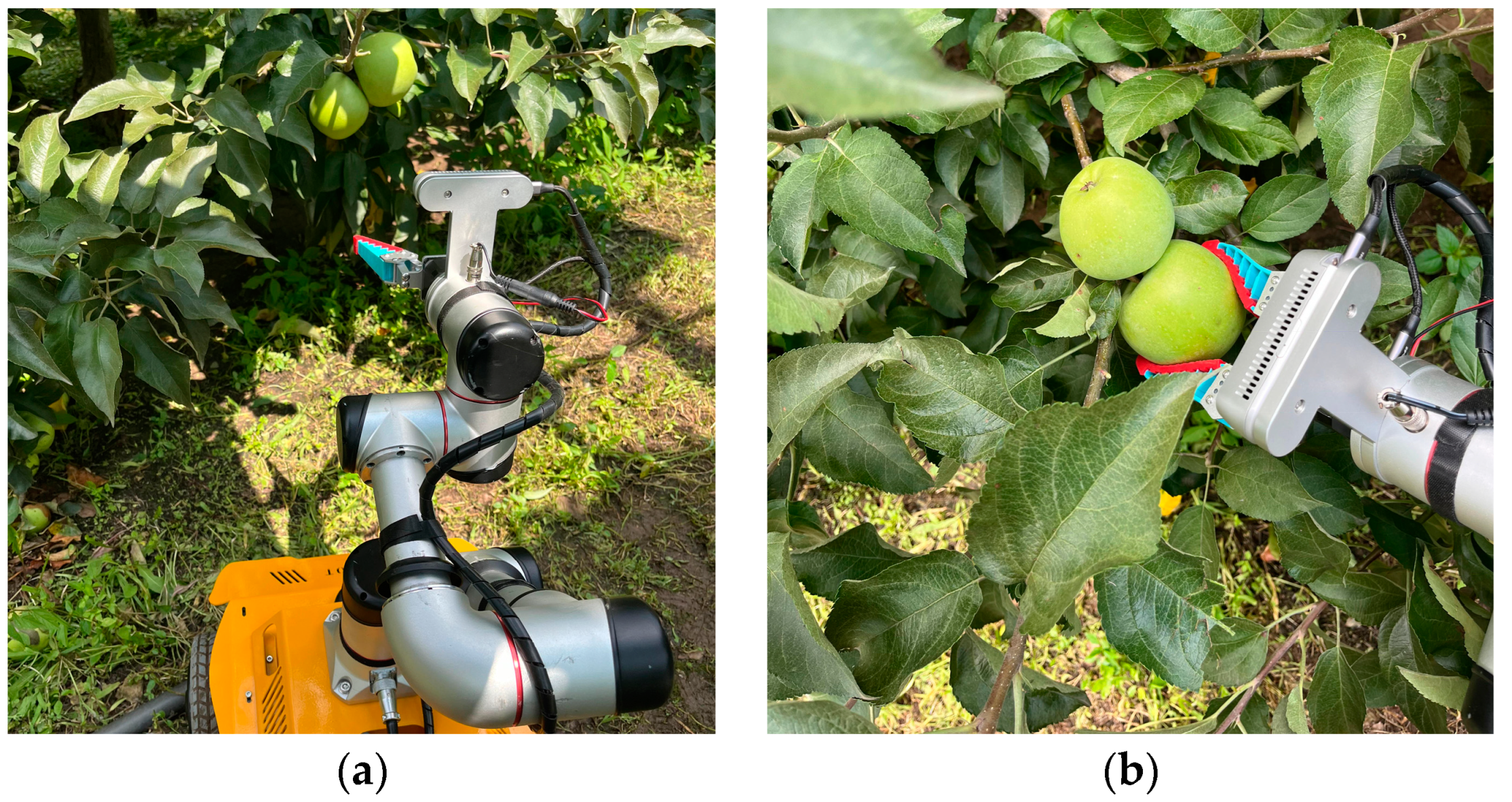

2.1. Experimental Hardware and Software System

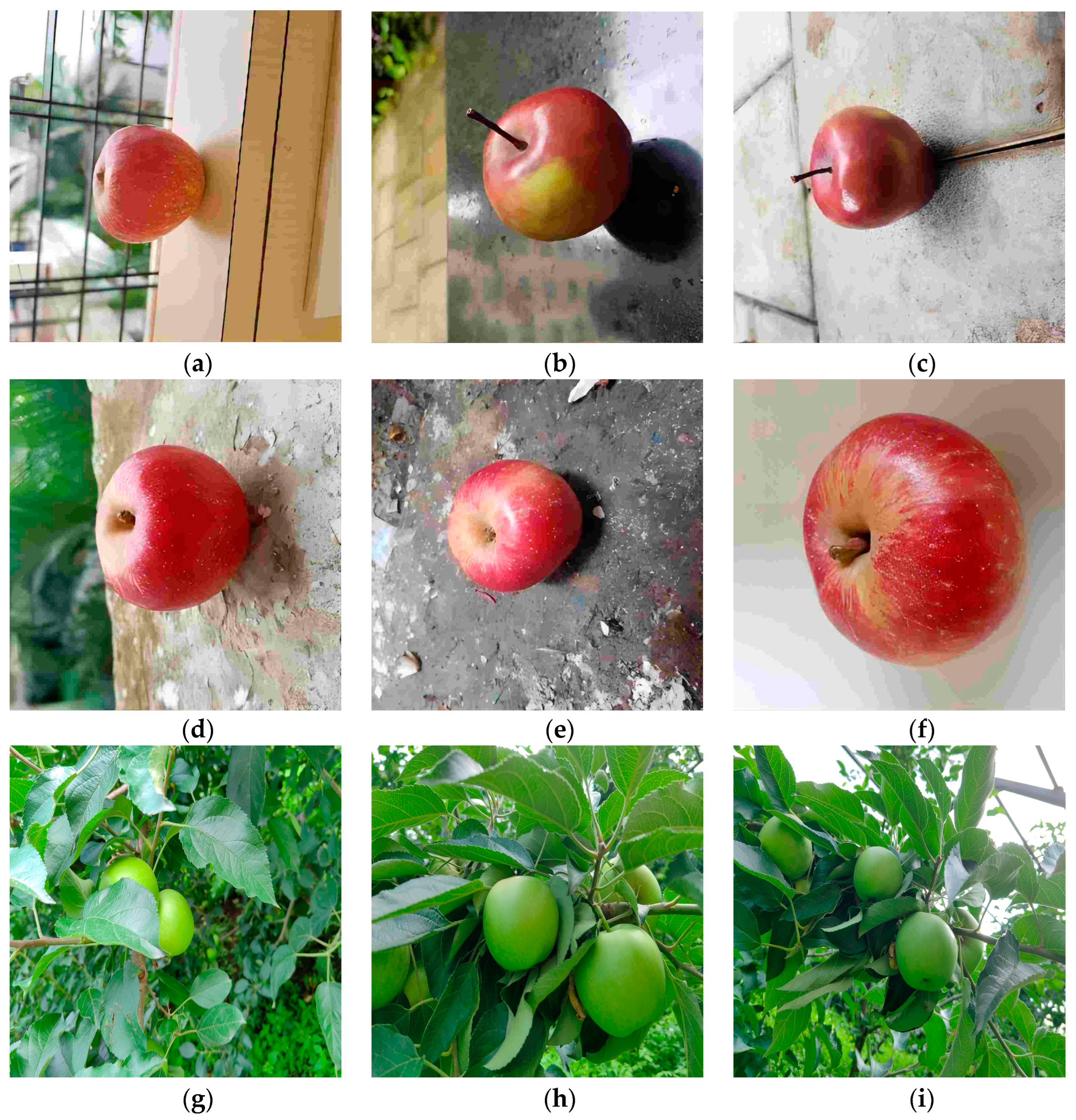

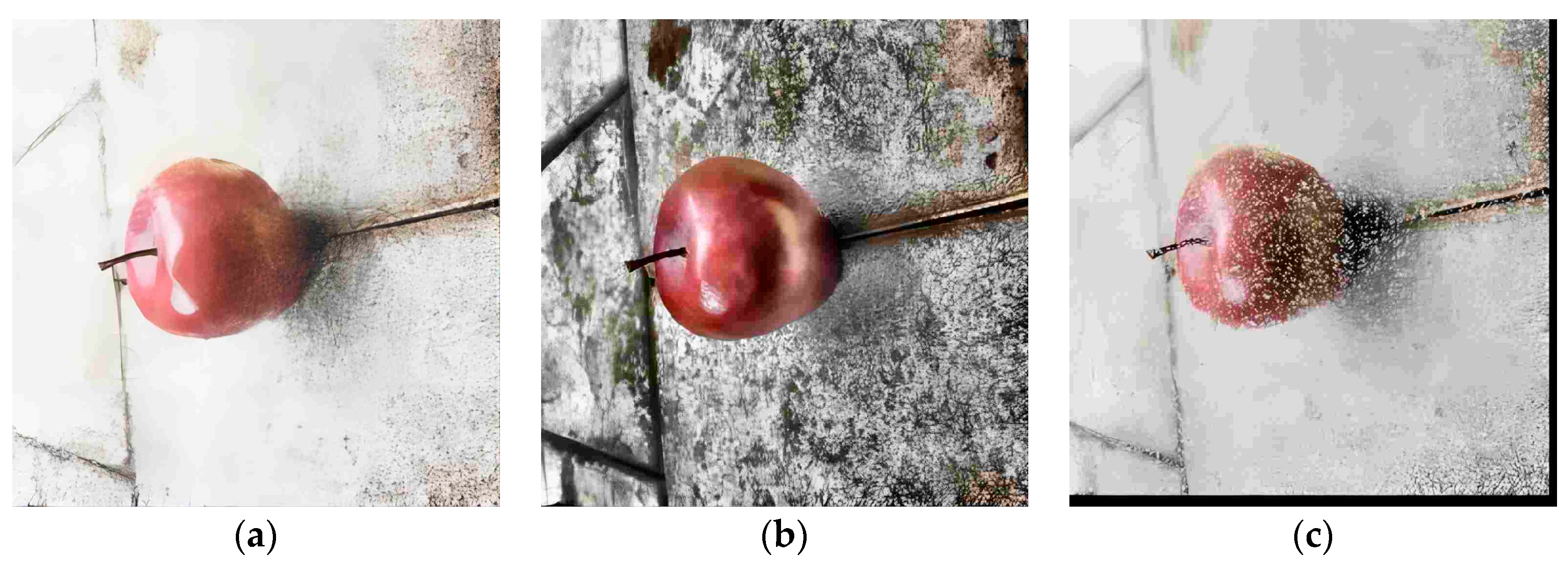

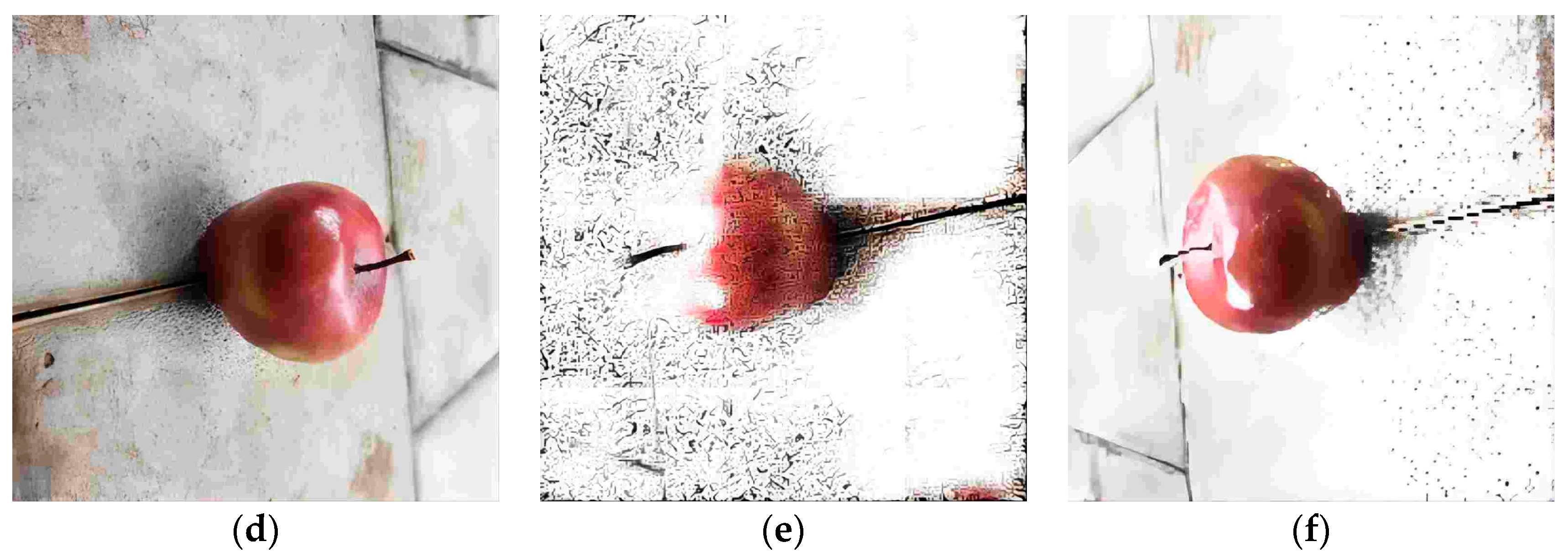

2.2. Data Acquisition and Enhancement

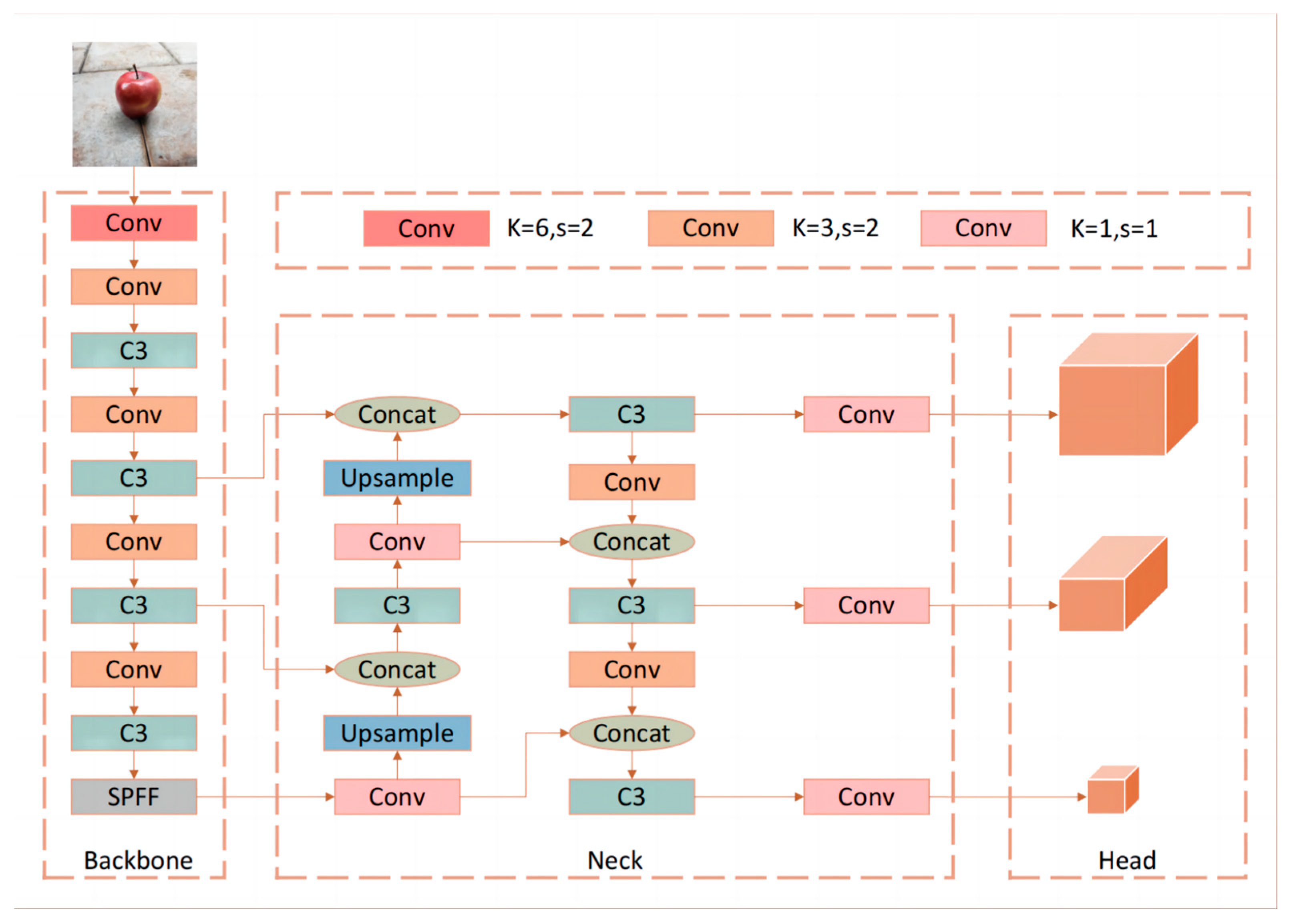

2.3. YOLOv5

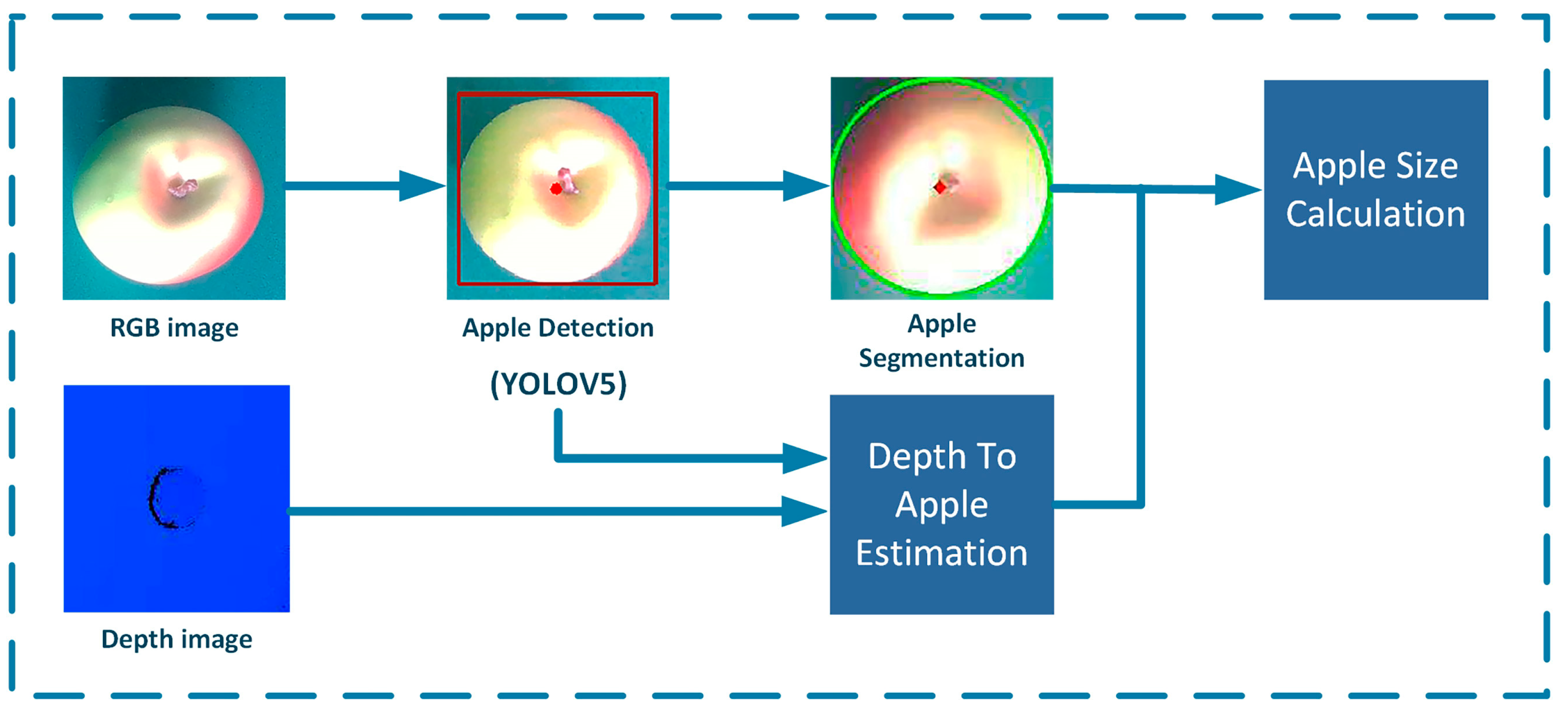

2.4. Apple Target Localization

2.5. Apple Size Calculation Method

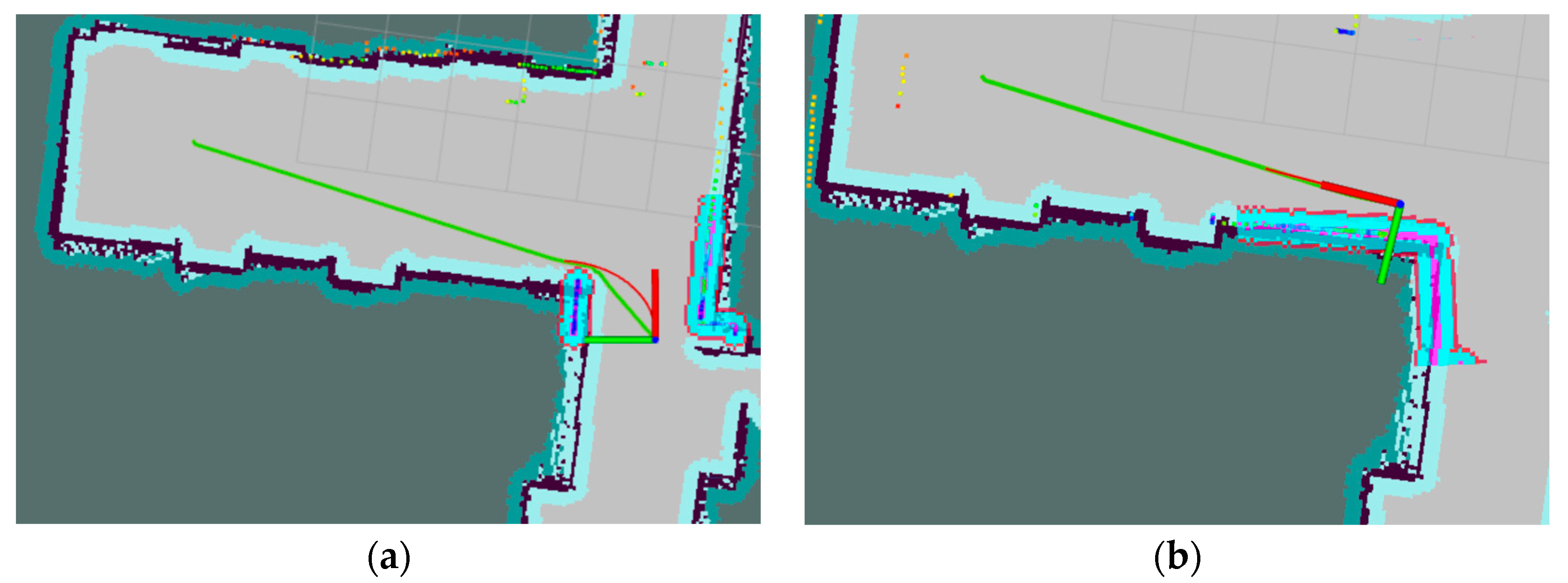
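The contour-fitting step named in the Introduction (RANSAC fitting of the apple contour to obtain a diameter) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the iteration count, the inlier tolerance, and the synthetic contour are all assumptions made for illustration, and the custom activation function used in YOLOv5-RACF to filter outliers is not reproduced here. The sketch works in pixel units only; converting to millimetres would additionally need depth and camera intrinsics.

```python
import math
import random


def circle_from_three_points(p1, p2, p3):
    """Return (cx, cy, r) of the circle through three points, or None if collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-9:
        return None  # collinear points do not define a circle
    s1, s2, s3 = x1 * x1 + y1 * y1, x2 * x2 + y2 * y2, x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    return cx, cy, math.hypot(x1 - cx, y1 - cy)


def ransac_circle(points, iterations=200, tolerance=1.0, seed=0):
    """Fit a circle by RANSAC; return the best (cx, cy, r) and its inlier count."""
    rng = random.Random(seed)  # fixed seed (assumption) for reproducible sampling
    best, best_inliers = None, -1
    for _ in range(iterations):
        model = circle_from_three_points(*rng.sample(points, 3))
        if model is None:
            continue
        cx, cy, r = model
        # A point is an inlier if it lies within `tolerance` of the circle.
        inliers = sum(
            1 for x, y in points if abs(math.hypot(x - cx, y - cy) - r) <= tolerance
        )
        if inliers > best_inliers:
            best, best_inliers = model, inliers
    return best, best_inliers


# Synthetic contour (assumption): 40 points on a circle of radius 30 px centred
# at (50, 40), plus interior points standing in for leaf or branch occlusion.
contour = [
    (50 + 30 * math.cos(2 * math.pi * k / 40), 40 + 30 * math.sin(2 * math.pi * k / 40))
    for k in range(40)
]
outliers = [(50, 40), (55, 45), (45, 35), (60, 40), (40, 48), (52, 30), (47, 52), (58, 33)]

(cx, cy, r), n_inliers = ransac_circle(contour + outliers)
diameter_px = 2 * r
print(f"centre=({cx:.1f}, {cy:.1f}), diameter={diameter_px:.1f} px, inliers={n_inliers}")
```

Because each candidate circle is built from only three sampled points, contour points contaminated by occlusion are simply outvoted rather than averaged into the fit, which is the property that makes RANSAC attractive for partially hidden fruit.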

2.6. Laser-Based 2D Navigation Algorithm

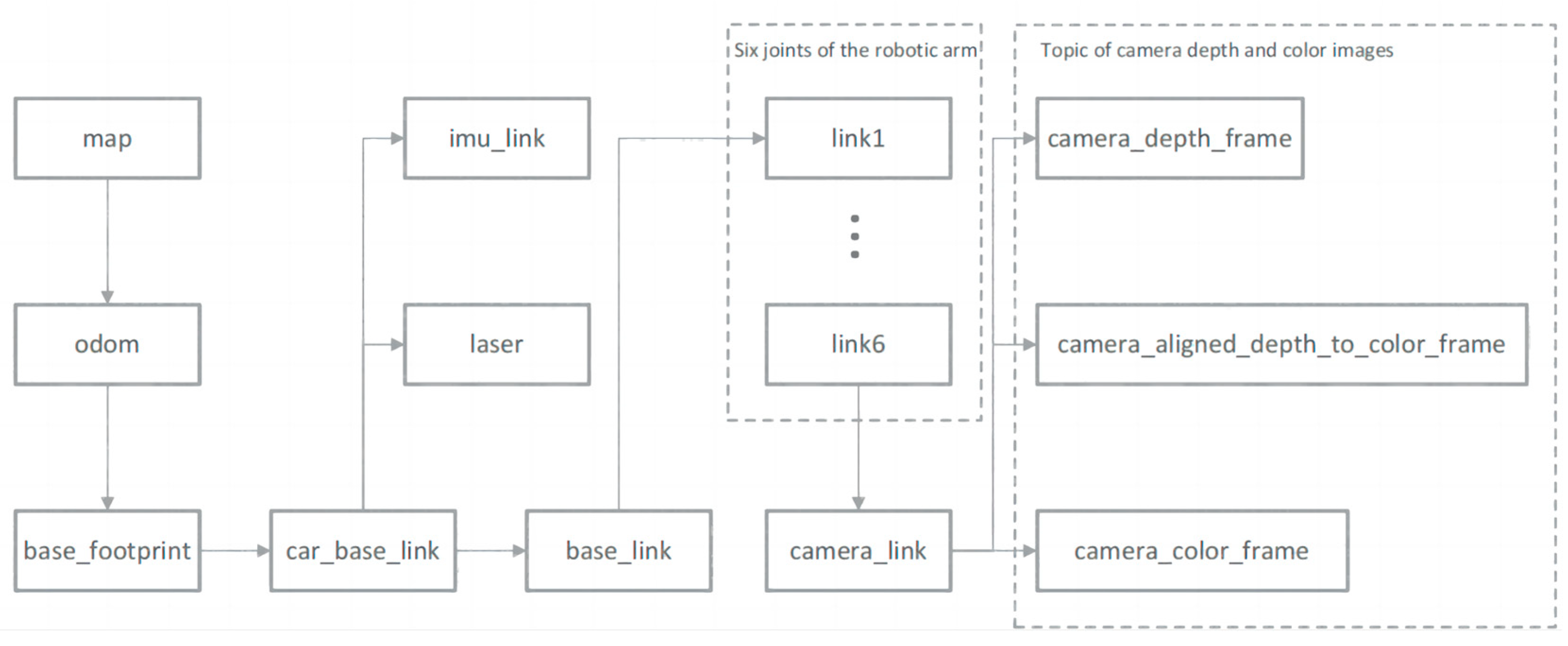
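Of the planners listed in the Introduction, Dijkstra and A* are graph searches over the occupancy map. The sketch below shows A* on a small 4-connected grid; the grid, the unit step cost, and the Manhattan heuristic are illustrative assumptions rather than the configuration used on the robot, and the TEB local planner is not covered. Setting the heuristic to zero turns the same loop into Dijkstra's algorithm.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of strings ('#' marks an obstacle).

    Returns a list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk the parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > cost[cell]:
            continue  # stale heap entry; a cheaper route was already expanded
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = g + 1
                if new_cost < cost.get((nr, nc), float("inf")):
                    cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(
                        open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc))
                    )
    return None


# Hypothetical map: a wall in column 2 forces a detour through the bottom row.
grid = [
    "..#..",
    "..#..",
    ".....",
]
path = astar(grid, (0, 0), (0, 4))
print(path)
```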

2.7. Coordinate System Transformation Based on the ROS System

2.8. Integration of the Navigation System for the Harvesting Robot

3. Experimentation and Results

3.1. Experimental Setup

3.2. Evaluation Metrics

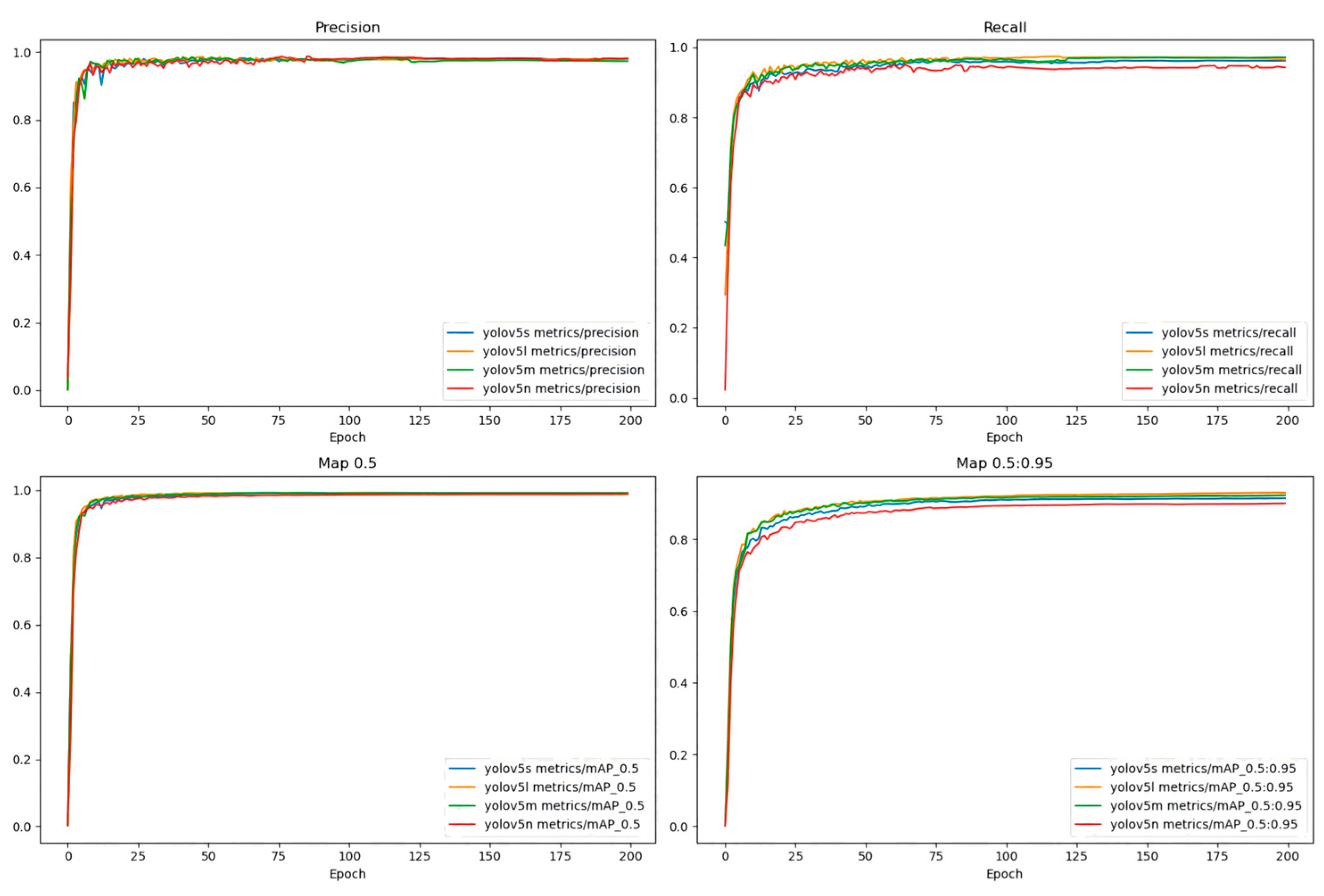

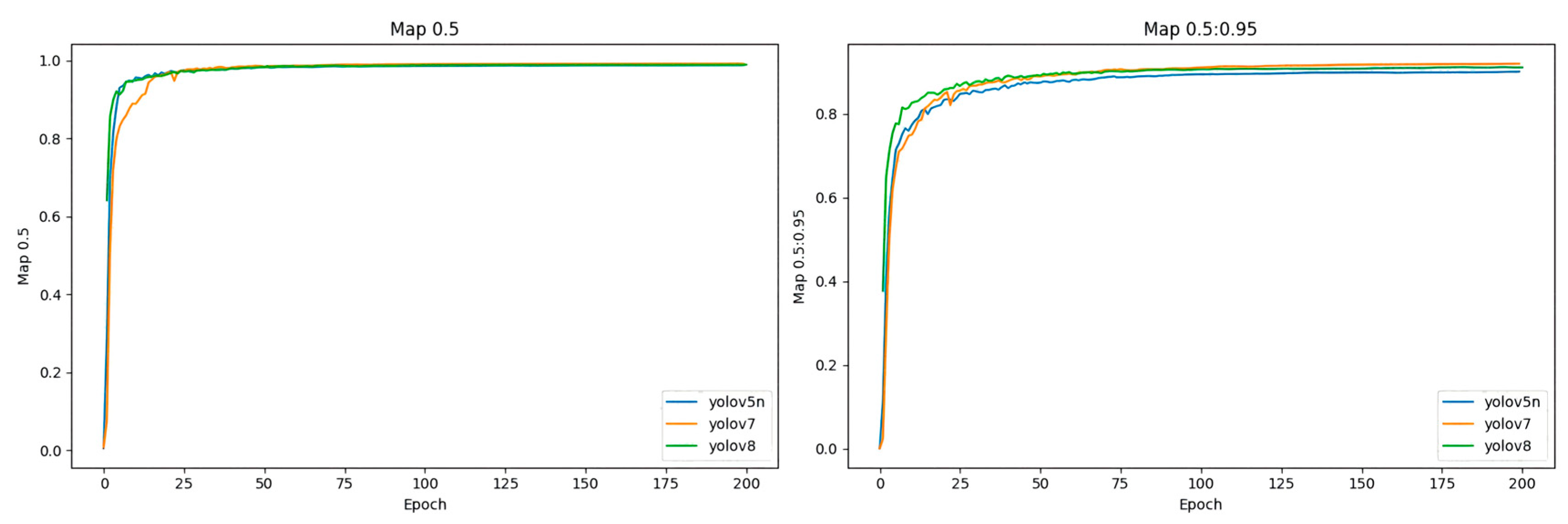

3.3. Comparison of Different YOLOv5 Detection Algorithms

3.4. Target Detection Results

3.5. System Integration and Control of the Robot

3.6. Autonomous Navigation and Grasping Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Label | Original Image Data | Augmented Image Data | Training | Validation |
|---|---|---|---|---|
| goodapple | 3070 | 6864 | 8952 | 982 |
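As a quick consistency check on the dataset table, the short sketch below adds up the image counts and derives the split proportions. It assumes that the augmented images are in addition to the originals, which is consistent with the training and validation counts summing to the same total; the variable names are illustrative.

```python
# Image counts copied from the dataset table.
original, augmented = 3070, 6864
training, validation = 8952, 982

total = original + augmented
# Under the stated assumption, the split covers every image exactly once.
split_is_consistent = (training + validation == total)

train_fraction = training / total
augmentation_ratio = augmented / original

print(f"total images: {total}, split consistent: {split_is_consistent}")
print(f"training share: {train_fraction:.1%}, validation share: {1 - train_fraction:.1%}")
print(f"augmented images per original image: {augmentation_ratio:.2f}")
```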

| teb_local_planner_params | global_costmap_params | local_costmap_params |
|---|---|---|
| dt_ref: 0.3; max_vel_x: 0.5; min_vel_theta: 0.2; acc_lim_theta: 3.2; yaw_goal_tolerance: 0.1; inflation_dist: 0.6; obstacle_poses_affected: 30; weight_kinematics_forward_drive: 1000; weight_optimaltime: 1; weight_dynamic_obstacle: 10; weight_preferred_direction: 0.5 | global_frame: /map; update_frequency: 1.0; transform_tolerance: 0.5; inflation_radius: 0.6 | global_frame: /odom; update_frequency: 5.0; transform_tolerance: 0.5; inflation_radius: 0.6 |
| dt_hysteresis: 0.1; max_vel_theta: 1.0; acc_lim_x: 2.5; xy_goal_tolerance: 0.2; min_obstacle_dist: 0.5; costmap_obstacles_behind_robot_dist: 1.0; weight_kinematics_nh: 1000; weight_kinematics_turning_radius: 1; weight_obstacle: 50; weight_viapoint: 1; weight_adapt_factor: 2 | robot_base_frame: /base_footprint; publish_frequency: 0.5; cost_scaling_factor: 5.0 | robot_base_frame: /base_footprint; publish_frequency: 2.0; cost_scaling_factor: 5.0 |

| Network | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | Parameters | GFLOPs |
|---|---|---|---|---|---|---|
| YOLOv5s | 97.978 | 96.144 | 99.051 | 91.409 | 7,022,326 | 15.9 |
| YOLOv5l | 98.042 | 96.56 | 99.144 | 92.99 | 46,138,294 | 108.2 |
| YOLOv5m | 97.335 | 97.129 | 99.133 | 92.312 | 20,871,318 | 48.2 |
| YOLOv5n | 98.131 | 94.272 | 98.748 | 90.02 | 1,765,270 | 4.2 |
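To make the trade-off between accuracy and model size in the table easier to read, the sketch below derives two summary figures per network: the F1 score, computed with the standard formula F1 = 2PR/(P + R), and the parameter count in millions. The F1 score is not reported in the table; it is added here only as an illustrative derived metric, and the dictionary layout and function name are assumptions.

```python
# (precision %, recall %, parameter count) copied from the comparison table.
results = {
    "YOLOv5s": (97.978, 96.144, 7_022_326),
    "YOLOv5l": (98.042, 96.56, 46_138_294),
    "YOLOv5m": (97.335, 97.129, 20_871_318),
    "YOLOv5n": (98.131, 94.272, 1_765_270),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Derived figures: (F1 in percent, parameters in millions).
summary = {
    name: (f1_score(p, r), params / 1e6) for name, (p, r, params) in results.items()
}

for name, (f1, params_m) in summary.items():
    print(f"{name}: F1 = {f1:.2f} %, parameters = {params_m:.2f} M")
```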

| Network | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | Parameters | GFLOPs |
|---|---|---|---|---|---|---|
| YOLOv5n | 98.131 | 94.272 | 98.748 | 90.02 | 1,765,270 | 4.2 |
| YOLOv7 | 96.77 | 97.53 | 99.22 | 91.96 | 37,196,556 | 105.1 |
| YOLOv8 | 98.204 | 95.08 | 98.902 | 91.017 | 3,011,043 | 8.2 |

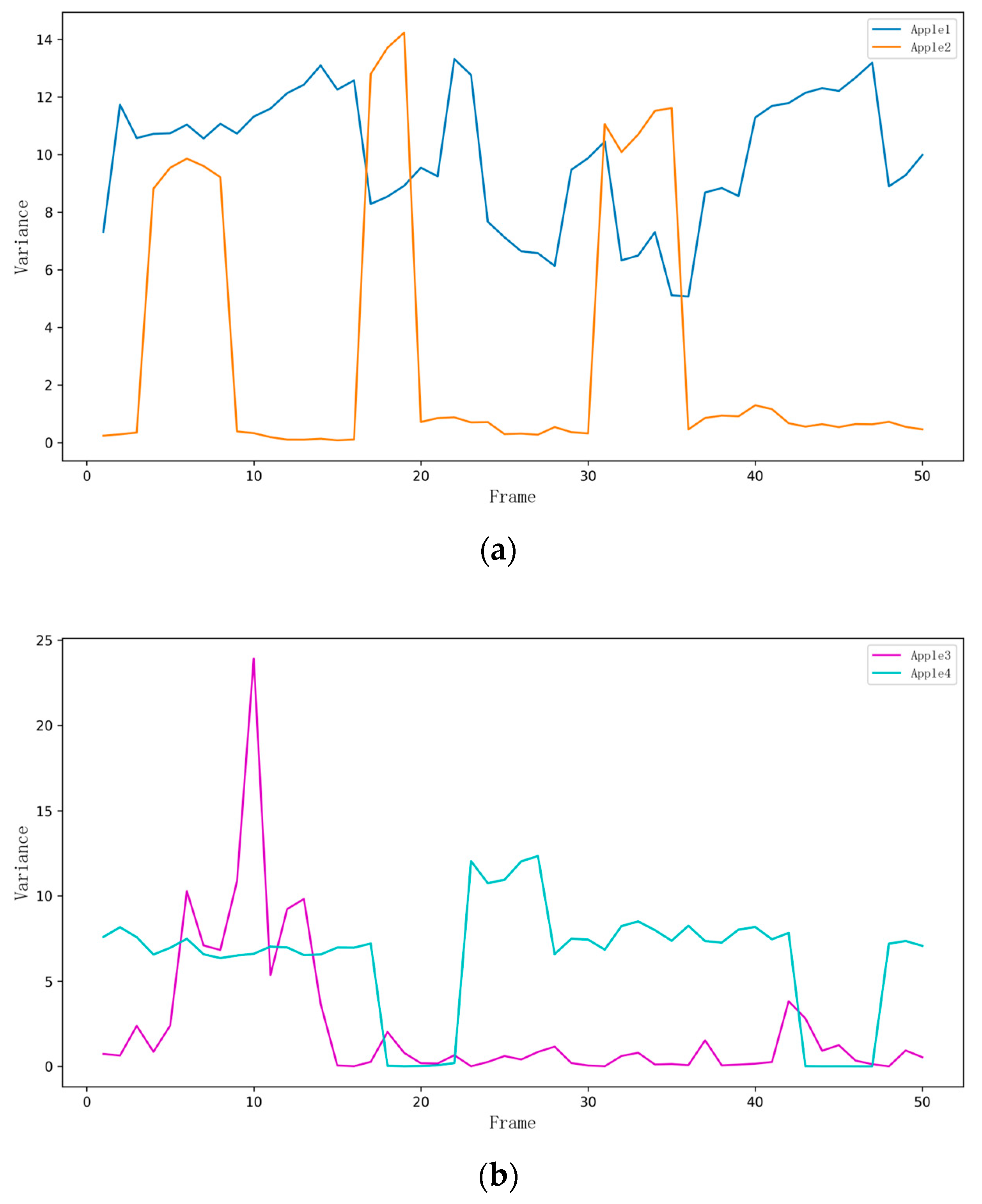

| Object | Actual Measurement Value/mm | Camera Measurement Value/mm | Standard Deviation/mm | Variance/mm² |
|---|---|---|---|---|
| Apple1 | 86.93 | 83.792108 | 3.127892 | 9.924321 |
| Apple2 | 72.18 | 70.813700 | 1.366300 | 3.237878 |
| Apple3 | 78.93 | 77.812189 | 1.117811 | 2.324057 |
| Apple4 | 72.53 | 70.320186 | 2.255963 | 6.267101 |
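The first two columns of the measurement table can be compared directly. The sketch below computes the absolute and relative error of the camera measurement against the actual diameter for each apple. These are plain differences and need not match the table's standard deviation and variance columns, whose derivation is not given in this section; the function and variable names are illustrative.

```python
# (actual diameter in mm, camera-measured diameter in mm) copied from the table.
measurements = {
    "Apple1": (86.93, 83.792108),
    "Apple2": (72.18, 70.813700),
    "Apple3": (78.93, 77.812189),
    "Apple4": (72.53, 70.320186),
}


def measurement_error(actual, measured):
    """Return (absolute error in mm, relative error in percent of the actual value)."""
    abs_err = abs(actual - measured)
    return abs_err, 100.0 * abs_err / actual


report = {name: measurement_error(*pair) for name, pair in measurements.items()}
mean_relative_error = sum(rel for _, rel in report.values()) / len(report)

for name, (abs_err, rel_err) in report.items():
    print(f"{name}: absolute error = {abs_err:.3f} mm, relative error = {rel_err:.2f} %")
print(f"mean relative error = {mean_relative_error:.2f} %")
```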


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, F.; Zhang, W.; Wang, S.; Jiang, B.; Feng, X.; Zhao, Q. Apple-Harvesting Robot Based on the YOLOv5-RACF Model. Biomimetics 2024, 9, 495. https://doi.org/10.3390/biomimetics9080495
