Abstract

The question “How does it work” has motivated many scientists. Through the study of natural phenomena and behaviors, many intelligence algorithms have been proposed to solve various optimization problems. This paper aims to offer an informative guide for researchers who are interested in tackling optimization problems with intelligence algorithms. First, a special neural network was comprehensively discussed, and it was called a zeroing neural network (ZNN). It is especially intended for solving time-varying optimization problems, including origin, basic principles, operation mechanism, model variants, and applications. This paper presents a new classification method based on the performance index of ZNNs. Then, two classic bio-inspired algorithms, a genetic algorithm and a particle swarm algorithm, are outlined as representatives, including their origin, design process, basic principles, and applications. Finally, to emphasize the applicability of intelligence algorithms, three practical domains are introduced, including gene feature extraction, intelligence communication, and the image process.

1. Introduction

The question “How does it work?” has motivated many scientists. In past decades, biomimetics has promoted lots of science and engineering research and changed people’s lives dramatically. Neural networks, which are inspired by biological neurons, have achieved significant success and been used in impactful applications [,]. For instance, ChatGPT, which is based on a multi-layered deep neural network, has been important since it came out. As a large language model, it can be used for various natural language-processing tasks, and it has been widely applied in social media, customer service chatbots, and virtual assistants. Neural networks aim to solve complex problems, and they have been widely used in tackling optimization problems or mathematical programming. Optimization is a typical mathematical problem, and it is widely encountered in all engineering disciplines. Literally, it refers to finding an optimal/desirable solution. Optimization problems are complex and numerous, so approaches to solving these problems are always an active topic. Optimization approaches can be essentially distinguished into deterministic and stochastic. However, the former requires enormous computational resources. This is the motivation for exploring more efficient methods.

As a class of neural networks, recurrent neural networks (RNNs) have demonstrated great computational power, and they have been investigated in many engineering and scientific fields over the past decades. The authors of [] proposed a gradient-based RNN (GBRNN) model for solving matrix inversion problems. However, there are some restrictions for methods under traditional neural networks, such as GBRNN. The ability of GBRNN to deal with time-varying problems is limited. In [], the author verified that the residual error function of GBRNN will accumulate over time, which means it is unable to efficiently deal with time-varying problems. This paper focuses on a special RNN, named a zeroing neural network (ZNN), specifically for solving time-varying problems. Unlike the existing surveys, this paper presents a new classification method based on the performance index of ZNNs. According to the different performance abilities of ZNNs, it can be classified into the following three categories: (1) an accelerated-convergence ZNN, the type of ZNN that has fast convergence characteristics; (2) a noise-tolerance ZNN, the type of ZNN that has noise tolerance characteristics; (3) a discrete-time ZNN, the type of ZNN that can achieve higher computational accuracy and is more easily implemented in hardware. It is worth noting that these three types of ZNNs do not exist in isolation; they can be organically integrated.

As an optimization technique, swarm intelligence algorithms are driven by natural phenomena and behaviors, and they are particularly suited for problems that are non-linear, multi-modal, and have complex search spaces. These algorithms excel in exploring diverse regions of the solution space and efficiently finding near-optimal solutions. Similarly, many swarm intelligence algorithms have been applied in many scientific and engineering fields [,,,]. For example, a bio-inspired algorithm named the ant colony algorithm has been proposed for vehicle heterogeneity and backhaul mixed-load problems []. This algorithm is intended to jointly optimize the vehicle type, the vehicle number and travel routes, and minimize the total service cost. Through the introduction of intelligent algorithms, many fields have ushered in new developments and opportunities.

This paper aims to present a comprehensive survey of intelligence algorithms, including a neural network designed to solve time-varying problems, a swarm intelligence algorithm, and some practical fields that combine the rest of the intelligent algorithms. The algorithms’ origins, basic principles, recent advances, future challenges, and applications are described in detail. The paper is organized as follows. In Section 3, ZNNs are discussed in detail, including their origin, basic principle, operation mechanism, model variants, and applications. The rest of the intelligence algorithms are discussed in Section 4, including the genetic algorithm, the particle swarm algorithm, and some real applications. Conclusions are drawn in Section 5.

2. Related Work

There are review articles on neural networks, as well as bio-inspired algorithms, mostly devoted to the origins and inspirations of algorithms and categorizing existing algorithms. Ref. [] classified bio-inspired algorithms into seven main groups: stochastic algorithms, evolutionary algorithms, physical algorithms, probabilistic algorithms, swarm intelligence algorithms, immune algorithms, and natural algorithms. In another example, some researchers have started from a specific domain and explored the study of algorithms applied to that domain. For example, ref. [] started from the field of electrical drives and discussed the bio-inspired algorithms applied to this field. There have been numerous reviews of bio-inspired algorithms written in various ways. However, very few of them have focused on a specific algorithm. Therefore, starting with how to solve the optimization problem, this paper divides the optimization problem into a time-varying/dynamic optimization problem and a time-invariant/static optimization problem, highlighting a neural network ZNN for time-varying/dynamic optimization problems. For the rest of the algorithms, the authors prefer that the readers have some understanding of these algorithms and their origins, fundamentals, and applications.

ZNNs are a subfield of neural networks, and they belong to a neural network based on neurodynamics. To the best of our knowledge, only a few attempts have been made to comprehensively review ZNNs. In [], the authors focused on mathematical programming problems in optimization problems by categorizing mathematical programming problems and discussing the ZNN models corresponding to different mathematical programming problems. Ref. [] only explored the application of ZNN models to different mathematical programming problems and did not discuss neural networks such as ZNNs in depth. Ref. [] exhaustively described the application areas involved in ZNNs, as well as the model variants, but like ref. [], it did not provide a good introduction to ZNNs themselves, mention the origin of ZNNs, or mention the topology of ZNNs. The ZNN model is divided into different application areas. This classification model involves a lot of subjectivity and uncertainty. Compared to other works, this paper provides an in-depth overview of ZNNs, and it comprehensively introduces their origin, structure, operation mechanism, model variants, and applications. A novel classification of ZNNs is first proposed, categorizing models into accelerated convergence ZNNs, noise tolerance ZNNs, and discrete-time ZNNs, each offering unique advantages for solving time-varying optimization problems. The main contribution of this paper is that it identifies the structural topology of ZNNs, analyzes and explains the operating mechanism of ZNNs to some extent, and classifies ZNNs structurally.

Remark 1.

Neural networks and bio-inspired optimization algorithms have a lot in common. Firstly, both neural networks and bio-inspired optimization algorithms are types of bio-heuristic algorithms; in essence, both types of algorithms are summarized from inspiration gained from nature. For example, Ref. [] explored neural networks and bio-inspired optimization algorithms as two different classes of bio-heuristic algorithms and discussed their applications in the field of sustainable energy systems. Ref. [] described a bio-inspired algorithm, i.e., the particle swarm algorithm, and neural networks in terms of concepts, methodology, and performance. Secondly, all the related previous research [,,] has considered neural networks and bio-inspired optimization algorithms as the same concept, i.e., “computational intelligence”. Both neural networks and bio-inspired optimization algorithms are considered powerful computing tools.

Neural networks and bio-inspired optimization algorithms have many intersections and correlations. Firstly, bio-inspired optimization algorithms are widely used in neural network parameter optimization [,]. Especially in [], the author paid special attention to the optimization of recurrent neural network parameters using bio-inspired optimization algorithms and provided a comprehensive analysis. Secondly, neural networks and bio-inspired optimization algorithms are used in common applications, and they are always used for comparison, such as comparing intelligent feature selection and classification [,], as well as structural health monitoring [].

Recurrent neural networks are a class of neural networks, and we believe that it is feasible to combine them with bio-inspired optimization algorithms in one text. Particularly in this paper, from the perspective of solving optimization problems, the author classified optimization problems as dynamic/time-varying and static/time-invariant, and in particular, they introduced a recurrent neural network that has been widely used to solve dynamic/time-varying optimization problems.

Remark 2.

The writing logic of the paper revolves around how to solve the optimization problem. By observing the laws or habits of organisms in nature, researchers have been surprised to find that modeling the laws and habits of these organisms can be constructively helpful in solving practical problems. As a result, many such algorithms have been born, and they are collectively known as intelligent algorithms. Since these algorithms mainly realize computational functions, some researchers refer to them as “computational intelligence” [,,]. In this paper, optimization problems are classified into time-varying/dynamic optimization problems and time-invariant/static optimization problems, and a class of neural networks for solving time-varying/dynamic optimization problems is described in detail in Section 2, while the rest of the bio-inspired algorithms used for optimization problems, including swarm intelligence algorithms and genetic algorithms, are described in Section 3.

In the field of application, neural networks and bio-inspired optimization algorithms have a broad and deep intersection. Bio-inspired optimization algorithms, especially swarm intelligence algorithms, are widely used for hyperparameter optimization and training process optimization for neural networks. In development trends, there is an increasing number of studies that have attempted to combine swarm intelligence algorithms with neural networks in order to create hybrid algorithms, for example, using PSO to optimize the architecture or parameters of convolutional neural networks []. Some research has focused on the co-evolution of swarm intelligence algorithms and neural networks, optimizing both simultaneously within the same system and promoting each to evolve towards better solutions. As future prospects, future research may develop more efficient swarm intelligence optimization methods, further enhancing the performance and training efficiency of neural networks. As technology advances, the combination of swarm intelligence algorithms and neural networks is expected to play a crucial role in more emerging fields, such as autonomous driving, smart manufacturing, and personalized medicine.

Remark 3.

Biomimetic algorithms, bio-inspired algorithms, and bio-inspired intelligence algorithms can be viewed as synonyms, all denoting algorithms that draw on the good design and functionality found in biological systems. Intelligent algorithms refer to a class of algorithms that use artificial intelligence techniques to solve complex problems. These algorithms often mimic intelligent behaviors observed in biological systems to find optimal or near-optimal solutions in complex environments. They possess adaptive, learning, and optimization capabilities that enable them to perform well in dynamic and uncertain situations. In addition to the bio-heuristic algorithms mentioned above, intelligent algorithms include several statistically or probabilistically inspired algorithms such as Bayesian optimization, random forest algorithms, and K-means algorithms. The conclusion is that bio-inspired algorithms are a subset of intelligent algorithms.

3. Bio-Inspired Neural Network Models

3.1. Origin

The interior of each neuron cell is filled with a conducting ionic solution, and the cell is surrounded by a membrane of high resistivity. Ion-specific pumps maintain an electrical potential difference between the inside and the outside of the cell by transporting ions such as and across the membrane. Neuron cells respond to outside stimulation by dynamically changing the conductivity of specific ion species in synapses and cell membranes. Based on the response mechanisms of biological neuron cells, John Hopfield [] used a neural dynamics equation to describe this change in conductivity. He applied his physical talents to the field of neurobiology in order to help people better understand how the brain works.

In 1982, Hopfield [] introduced the concept of “computational energy”, and he proposed the Hopfield neural network (HNN) model. Two years later, he proposed the continuous-time HNN model [], which pioneered a new way for neural networks to be used for associative memory and optimization calculations. This pioneering research work has a strong impetus to the study of neural networks. In 1985, Hopfield and Tank [] proposed an HNN model for solving a difficult but well-defined optimization problem, the traveling-salesman problem, and used it to illustrate HNNs’ computational power. After that, numerous neural networks have been proposed, owing to this seminal work.

Similarly, Zhang introduced the concept of an “error function” and proposed a kind of RNN named a zeroing neural network (ZNN). ZNNs are easier to implement in hardware, and they belong to a non-training model. Essentially, the reason why ZNNs can effectively tackle time-varying problems is that they can utilize time-derivative information. For any given random initial input values, ZNNs operate in a neurodynamic manner, and they evolve in the direction of a decreasing error function, eventually reaching a steady state. The ZNN neural dynamics can be formulated as follows:

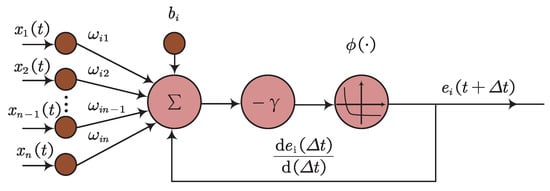

where is the error function, is the design parameter, and denotes the activation function. The key step in designing the ZNN model is to construct an error function, . For an arbitrary time-varying problem, , the error function can be formulated as , where is the time-varying unknown variable to be solved. The structure of a ZNN is described in Figure 1, and input and output relationships can be described as follows:

where is the ith neuron’s sub-error, is the weight coefficient, and refers to the next time. When all sub-error increments converge to zero, the system achieves stability, i.e., . Based on the superiority of ZNNs in real-time processing, many fruitful academic outcomes have been reported [,,,,,]. The following subsections discuss the convergence and stability of ZNNs, as well as model variants and applications.

Figure 1.

ZNN structure.

3.2. Convergence and Stability

The key performance aspects of ZNNs are convergence and stability. Generally speaking, this can be proven via three approaches, i.e., Lyapunov theory, ordinary differential equations, and Laplace transformation.

Proof.

(Based on Lyapunov theory). For example, to solve a time-varying, nonlinear minimization problem, the target functions are and ; ref. [] designed an error function as

Then, a Lyapunov function candidate is constructed:

it is obvious that is positive definiteness. Then, the formulation of its time derivative can be described as

which is negative definiteness. That means the residual error of the ZNN model is globally converged to zero. Notably, by substituting the definition of the error function, , the global convergence of all extant continuous-time ZNN models can be analyzed using a similar methodology. This analytical approach represents the predominant method for verifying continuous-time ZNN models, and it has been extensively investigated in [,,,]. □

Proof.

(Based on an ordinary differential equation). The ordinary differential equation is mainly used to verify the convergent speed of ZNN models activated via linear functions. For example, when considering the problem in [], solving the ith subsystem of the design formula , it can lead to

where denotes the initial value of . Finally, the residual error of the ZNN model globally and exponentially is set to zero. There has been extensive research presented in [,]. □

Proof.

(Based on Laplace transformation). Let us use Laplace transformation for and get

and, further, have

Due to , the final value theorem can be applied. Based on the final value theorem, we get

the proof is completed. This approach was studied in [,,]. □

3.3. Accelerated Convergence

To solve some of the shortcomings of conventional neural networks, e.g., long training times and high computational resource consumption, an accelerated convergence neural network undoubtedly provides an effective solution to these issues. In past decades, there have been two main kinds of accelerated convergence neural networks: finite-time neural networks and predefined-time neural networks. What finite-time neural networks and predefined-time neural networks have in common is importing activation functions (AFs). For convenience, the main usages of AFs are listed as follows:

- •

- General linear AF:

- •

- Power AF:where n is an odd integer, and n > 3.

- •

- Bipolar sigmoid AF:where .

- •

- Hyperbolic sine AF:where .

- •

- Power-sigmoid AF:

- •

- Constant-sign–bi-power AF:

- •

- Sign–bi-power AF:where with the parameters and sgnn defined as

- •

- Weighted-sign–bi-power AF:where are tunable positive design parameters.

- •

- Nonlinear AF 1:where .

- •

- Nonlinear AF 2:where , and .

3.3.1. Finite-Time Neural Network

The finite-time convergence of neural network models refers to the ability of a neural network to converge to a satisfactory state or achieve a predetermined level of performance within a finite period of time. In [], Xiao proposed two nonlinear ZNN models based on nonlinear activation functions (Equations (18) and (19)) to efficiently solve linear matrix equations. According to Lyapunov theory, two such nonlinear ZNNs are proven to be convergent within finite time and the lower convergence bound. According to this paper, the convergence bound is

where denotes the convergence upper bound.

In [], a ZNN with a specially constructed activation function was proposed and investigated to find the root of nonlinear equations. Then, comparing it with gradient neural networks, Xiao [] pointed out that this model has better consistency in actual situations and a stronger ability in dynamical systems. The specially constructed activation function can be defined as Equation (16) and named the sign–bi-power function or Li function []. Due to its finite-time convergence, most researchers have employed it. For example, ref. [] proposed an improved ZNN model for solving a time-varying linear equation system. Such a ZNN model is activated via an array of continuous sign–bi-power functions (Equation (15)). In [], to solve dynamic matrix inversion problems, two finite-time ZNNs with the sign–bi-power activation function were proposed by designing two novel error functions. Furthermore, Lv [] introduced a weighted sign–bi-power function (Equation (17)) to ZNNs. The ZNN model makes full use of all the items of the weighted sign–bi-power function, and thus, it obtains a lower upper bound for the convergence time.

Differing from the previous processing method, ref. [] proposed a new design formula, which also can accelerate a neural network to finite-time convergence. This neural network achieves a breakthrough in convergence performance. According to this paper, an indefinite matrix-valued, time-varying error function, , is defined as follows:

Then, the new design formula for is proposed as follows:

where denotes an activation function array, and is used to adjust the convergence rate with the design parameters ; p and q denote positive odd integers and satisfy .

3.3.2. Predefined-Time Neural Network

The predefined-time convergence of a neural network refers to the ability of a neural network to converge to a satisfactory state or achieve a predetermined level of performance within a predefined time. In some actual applications [,] that require the fulfillment of strict time constraints, there is a requirement that a neural network model can guarantee a timely convergence. For instance, the common problem in numerous fields of science and engineering is solving the dynamic-matrix square root (DMSR) [,]. It is preferable to solve the DMSR via a neural network with explicitly and antecedently definable convergence time. Li [] proposed a predefined-time neural network, the convergence time of which can be explicitly defined as a prior constant parameter. In this paper, the maximum predefined time can be formulated as

where and are prior constant parameters. Similarly, Xiao [] presented and introduced a new ZNN model using a versatile activation function for solving time-dependent matrix inversion. Unlike the existing ZNN models, the proposed ZNN model not only converges to zero within a predefined, finite time but also tolerates several noises in solving the time-dependent matrix inversion. Differing from the method of using AFs, ref. [] proposed a very parameter-focused neural network for improving the ZNN model’s convergence speed, which is more compatible with the characteristics of the actual hardware parameter.

3.4. Noise Tolerance Neural Network

Noise in a neural network refers to the uncertainty or randomness in data, which can originate from various sources, including sensor errors, environmental interference, and incidental factors during data collection. In neural networks, noise can have significant impacts on model training and performance. Jim [] first discussed the impact of noise on recurrent neural networks and pointed out that the introduction of noise will also help improve the convergence and generalization performance of recurrent neural network models. However, for the most part, the impact of noise on neural networks is negative. To eliminate the impact of noise, most researchers are devoted to designing a noise filter, such as a Gaussian filter []. This has achieved major success in image-recognition denoising []. But, in practical engineering applications, it is hard to gauge in advance which noise it is and, thus, hard to design the corresponding filter. Then, researchers focused on improving the robustness of neural networks. Liao [] analyzed the traditional ZNN model and verified that it has a tolerance for low-amplitude noise during multiple noise interferences. There are two main approaches to tolerating noise interference: (1) introducing AFs and (2) introducing integral terms. In [,], some AFs were introduced to improve the robustness of neural networks. To tolerate harmonic noise interference, a Li activation function [] was introduced in order to further improve the convergence rate and robustness. The convergence and robustness of harmonic noises of the proposed neural network models were proven through theoretical analyses.

Research [] first proposed a noise-tolerance ZNN model with a single integral term for solving the time-varying matrix inversion problem, which can be mathematically formulated as follows:

where and . Essentially, a single-integral-structure ZNN model uses the error integration information to mitigate constant bias errors. No matter how large the matrix-form constant noise is, these kinds of ZNN models can converge to the theoretical solution with an arbitrarily small residual error. These design models can serve as a fundamental framework for future research on solving time-varying problems, and they can open a door to solving time-varying problems with constant noise. Based on [], the research [] presented a ZNN model with a single term to compute the matrices’ inversion, which was subjected to null space and specific range constraints under various noises. This is an important topic to further enhance the robustness of ZNN models. Thus, Liao [] further extended the single integral framework in [] and designed a double-integral-structure ZNN model to solve various types of time-varying problems, whose structure can be described as follows:

Then, in [], to solve matrix inversion problems with linear noise rejection, this paper illustrated that a double-integral-structure ZNN model can effectively tolerate linear noise. Similarly, to enhance robustness in solving matrix inversion problems, ref. [] proposed a variable-parameter, noise-tolerant ZNN model. Compared with a single-integral-structure ZNN model for matrix inversion, numerical simulations revealed, a double-integral-structure ZNN model has better robustness under the same external noise interference.

3.5. Convergence Accuracy

As mentioned before, a series of continuous-time ZNN models have been proposed and designed to solve different problems. However, the fact is that step sizes in simulating continuous-time systems are variable, while a digital computer often requires constant time steps. It is hard to implement continuous-time ZNN models using digital computers. Additionally, continuous-time ZNN models always work in ideal conditions, i.e., it is supposed that neurons communicate and respond without any delay. Nevertheless, because of the existence of the sampling gap, a time delay is inevitable; it unavoidably leads to a reduction in the computing accuracy of ZNN models. Thus, researchers proposed and investigated discrete-time ZNN models. For example, Xiang [] proposed a discrete-time ZNN model for solving dynamic matrix pseudoinversion. There are some challenges in constructing discrete-time ZNN model, and they are listed as follows.

- •

- In view of time, any time-varying problem can be considered a causal system. The computation should be based on existing data, which include present and/or previous data. For instance, when solving the time-varying matrix inversion problem discretely, at time instant , we can only use known information, such as and , not unknown information, such as and , to compute the inverse of , i.e., . Therefore, a fundamental requirement for constructing the discrete-time ZNN model is that unknown data cannot be used.

- •

- This means that a potential numerical differentiation formula for discretizing a continuous-time ZNN model should have and should only have one point ahead of the target point. Thus, in the process of discretizing the continuous-time ZNN model using the numerical differentiation formulation, the discrete-time ZNN model has only one unknown point, , to be computed. Thus, no matter how minimal the truncation error of each formula is, discrete-time ZNN models cannot be constructed using backward and multiple-point central differentiation rules. Only the one-step-ahead forward differentiation formulas can be considered for discrete-time ZNNs.

- •

- Time is valuable for solving time-varying problems. It is crucial to design a very straightforward discrete-time ZNN model with minimal time consumption.

In [], Zhang proposed the first discrete-time ZNN model for solving constant matrix inversion. This model built a critical bridge between discrete-time ZNN frameworks and traditional Newton iteration. At the beginning, ref. [,] constructed the discrete-time ZNN models using the Euler forward difference. Notably, the error pattern of discrete-time ZNN models derived from the Euler forward difference approach is , where is the sampling interval. For instance, the Euler-type discrete-time ZNN model for time-varying matrix inversion is directly formulated as []

where k denotes the iteration index, and . Especially by omitting the term and setting , the Euler-type discrete-time ZNN model (24) is simplified to

which aligns with the traditional Newton iteration. Hence, the traditional Newton iteration can be considered a special case of the Euler-type discrete-time ZNN model (24). Furthermore, a linkage between the Getz–Marsden dynamic system and discrete-time ZNN models was established in []. To enhance the accuracy of ZNN model discretization, a Taylor-type numerical differentiation formula was proposed in [] for first-order derivative approximation. This formula exhibits a truncation error of , and it is expressed as:

subsequently, a novel, Taylor-type, discrete-time ZNN model was developed for time-varying matrix inversion,

This Taylor-type, discrete-time ZNN model converges to the theoretical solution of the time-varying problem with a residual error of

Recently, a numerical differentiation rule with a truncation error of was established in [] for first-order derivative approximation. By utilizing this new formula, a five-step discrete-time ZNN model was proposed for time-varying matrix inversion, with a residual error of . It is imperative to note that discrete-time ZNN models can be considered time-delay systems, and consequently, a high value of h may induce oscillations in the model. To mitigate instability, reducing the value of h is a direct remedy. However, decreasing h significantly decelerates the convergence of discrete-time ZNN models.

3.6. Time-Varying Linear System Solving

Time-varying linear systems have extensive applications in engineering fields such as circuit design [], communication systems [,,], and signal processing [,]. Many engineering problems can be effectively modeled as time-varying linear systems [,]. Time-varying linear systems can be constructed using a series of time-varying matrices that store system information, which changes over time.

One notable contribution in this field is the work of Lu [], who proposed a novel ZNN model for solving time-varying underdetermined linear systems. This model satisfied variable constraints and effectively converged the residual errors. Through extensive theoretical analyses and numerical simulations, the author demonstrated the effectiveness and validity of the proposed ZNN model and showcased its applicability in controlling the PUMA560 robot under physical constraints. Additionally, Xiao [] designed a ZNN model specifically for time-varying linear matrix equations. Their study included a theoretical analysis of the maximum convergence time, and it concluded with exceptional performance in solving time-varying linear equations. Zhang [] proposed a varying-gain ZNN model for solving linear systems, which can be represented as . Due to the varying properties parameter, this ZNN model can achieve finite-time convergence. Moreover, several other ZNN models, proposed in [,], have been presented for solving time-varying Sylvester equations of the form .

Similarly, a complex time-varying linear system has also been mainly discussed and investigated. The complex number domain is widely and profoundly used in science and engineering, which not only exists as a mathematical tool but also plays a key role in solving many practical problems. For the online solving of complex time-varying linear equations, ref. [] proposed and investigated a neural network. This proposed neural network adequately utilizes time-derivative information for time-varying complex matrix coefficients, and it was theoretically proven that it can converge to the theoretical solution with finite time. In [], a complex-valued, nonlinear, recurrent neural network was designed for time-varying matrix inversion solving in complex number fields. This paper proposed a complex-valued, nonlinear ZNN model that was established on the basis of a nonlinear evolution formula and that possessed better finite-time convergence. Long [] mainly discussed the finite-time stabilization of a complex-valued ZNN. This article investigated the finite-time stabilization of a complex-valued ZNN with a proportional delay via the direct analysis method without separating the real and imaginary parts of complex values. To achieve higher precision and a higher convergence rate, ref. [] proposed a new ZNN model. Compared with the GBRNN model and the existing ZNN models, the illustrative results showed that the new ZNN model has higher precision and a higher convergence rate.

3.7. Time-Varying Nonlinear System Solving

Nonlinear systems are prevalent in many real-world applications, and real-time solutions for nonlinear systems have been a hot topic. In [], a continuous-time ZNN model was presented and investigated for online time-varying nonlinear optimization. This paper focused on the convergence of continuous-time ZNN models, and it theoretically verified that the continuous-time ZNN model is provided with a global exponential convergence property. For the purpose of satisfying both finite-time convergence and noise tolerance, Xiao [] proposed a finite-time robust ZNN to solve time-varying nonlinear minimization under various external disturbances. Ref. [] proposed a finite-time, varying-parameter, convergent-differential ZNN for solving nonlinear optimization. The proposed ZNN model has super exponential convergence, finite-time convergence, and strong robustness.

Quadratic minimization is a fundamental problem in nonlinear optimization, and it has wide applicability. The goal of quadratic minimization is to find values of variables that minimize a quadratic objective function subject to certain constraints. Ref. [] proposed a segmented variable-parameter ZNN model for solving time-varying quadratic problems. They achieved strong robustness by keeping the time-varying parameters stable. The general time-varying quadratic problem can be expressed as follows:

where the vector is the solution that should be tackled using the model, , , , , is a symmetric positive definite matrix, and ; these time-varying coefficients are known. In [], a single integral structure with a nonlinearly activated function ZNN model was proposed to solve dynamic quadratic minimization with additive noises considered. Differing from the above-mentioned ZNN model, ref. [] proposed a new design formula. Based on this formula, a finite-time and noise-tolerance ZNN was proposed for solving time-varying quadratic optimization problems under various levels of additive noise interference.

3.8. Robot Control

As an effective approach to solving time-varying problems, ZNNs have been widely applied to robot control, such as redundant manipulators [] and multiple-robot control [,]. For example, because the end-effector task may be incomplete due to a manipulator’s physical limitations or space limitations, it is important to adjust the manipulator configuration from one state to another state. In [], Tang proposed a refined self-motion control scheme based on ZNNs, which can be described as follows:

where and are the joint-angle-velocity vector and joint-angle vector, respectively; denotes the given joint-angle vector; denotes the initial joint-angel vector; is the 2-norm of the vector; is defined according to the self-motion task; and is the Jacobian matrix. are the positive design parameters, and k is used to scale the magnitude of the manipulators. In addition, and denote the time-varying joint-angle lower bound and upper bound; and represent the joint-angle-velocity lower bound and upper bound. This self-motion can adjust the manipulator configuration from the initial state to the final state and keep the end effector immobile at its current orientation or position. Considering the higher accuracy, ref. [] proposed a discrete-time ZNN model to solve the motion planning of redundant manipulators.

To effectively manage and control muti-robots, Liao [] proposed a strategy for real-time control. This strategy achieves the real-time measuring and minimizing of inter-robot distances, which can be formulated as

where the complex number is the position information of the jth follower robot, the real part and the imaginary part represent the x-coordinate and y-coordinate, and are two neighboring robots. Based on leader–follower frameworks, the desired formation is merely known by leader robots, and follower robots just follow the motion of the leaders (this paper chose the robots at the edges as leaders). Furthermore, to verify the correctness of this strategy, the author employed a wheeled mobile robot, and the kinematic model can be described as

Then, according to differential flatness, the left- and right-wheel velocity can be represented with finite-order differentials of the position information, which can be formulated as follows:

To achieve real-time control, the author constructed a complex ZNN model to find the real-time positions. Compared to the GBRNN model, this model can eliminate a large lagging error.

3.9. Discussion and Challenges

Overall, research on ZNNs has achieved considerable success in solving time-varying problems. However, there are still many challenges to be solved in the future. This paper provides some prospective suggestions for future directions.

- (1)

- Keep exploring and discovering more useful, one-step-ahead numerical differential formulas to construct discrete-time ZNNs. One of the goals is that further discrete-time ZNN models possess larger step sizes and higher computational accuracy. Larger step sizes consume less computation time. Such advancements align closely with the development of applied mathematics and computational mathematics.

- (2)

- How to achieve faster convergence still remains an open problem. Additionally, it is meaningful in the development of ZNNs to understand how to achieve convergence conditions.

- (3)

- Apart from global stability, more theory is also needed to improve robustness.

All of these future developments will coincide with the advancement of mathematical theory, particularly in applied mathematics and computational mathematics. For instance, it is necessary to derive new models for addressing inequality constraints in time-varying optimization problems. These advancements will parallel the progress in computational mathematics, which is crucial for constructing and developing neural networks. It is important to recognize that different types of neural networks, such as discrete-time ZNN models or accelerated-convergence ZNN models, each have their own applicable ranges. Therefore, it is unrealistic to expect that an isolated ZNN model can solve all computational problems.

4. Intelligence Algorithms with Applications

Bio-inspired intelligence algorithms can be categorized into two specific categories, genetic algorithms and swarm intelligence algorithms. This section mainly discusses one of the classic evaluation algorithms, i.e., genetic algorithms, and one of the classic swarm intelligence algorithms, i.e., the particle swarm optimization algorithm. And it introduces their origins, design process, and basic principles. It also introduces a number of swarm intelligence algorithms, such as the ant colony optimization algorithm, an artificial fish swarm algorithm, and the Harris hawks optimizer. Then, we select some practical domains, i.e., gene feature extraction, intelligence communication, and the image process, to emphasize the applicability of intelligent algorithms.

4.1. Bio-Inspired Intelligence Algorithm

Bio-inspired optimization algorithms draw inspiration from biological evolution and swarm intelligence, aiming to solve complex optimization problems. These algorithms leverage the principles underlying biological systems, such as evolution, swarm intelligence, and natural selection, to tackle optimization, search, and decision-making tasks across diverse domains.

One prominent category of bio-inspired algorithms is evolutionary algorithms, which include genetic algorithms, evolutionary strategies, evolutionary programming, and genetic programming. These algorithms simulate the process of natural evolution, where candidate solutions/individuals evolve and adapt over successive generations through mechanisms such as selection, crossover/reproduction, and mutation, ultimately converging toward optimal or near-optimal solutions.

Another is swarm intelligence algorithms, which are inspired by the collective behavior of decentralized, self-organized systems observed in nature, such as ant colonies and bird flocks [,]. Swarm intelligence algorithms include particle swarm optimization, the egret swarm optimization algorithm [], the beetle antennae search algorithm [,], and the gray wolf algorithm [], which mimic the cooperative behaviors of social insects to efficiently explore solution spaces and find high-quality solutions.

These intelligence algorithms offer the following advantages:

- •

- Distributed robustness: Individuals are distributed across the search space, and they interact with each other. Due to the lack of a centralized control center, these algorithms exhibit strong robustness. Even if certain individuals fail or become inactive, the overall optimization process is not significantly affected.

- •

- Simple structure and easy implementation: Individuals have simple structures and behavior rules. They typically only perceive local information and interact with others through simple mechanisms. This simplicity makes the algorithms easy to implement and understand.

- •

- Self-organization: Individuals exhibit complex self-organizing behaviors through interactions and information exchanges. The intelligent behavior of the entire swarm emerges from the interactions among simple individuals.

4.1.1. Genetic Algorithm

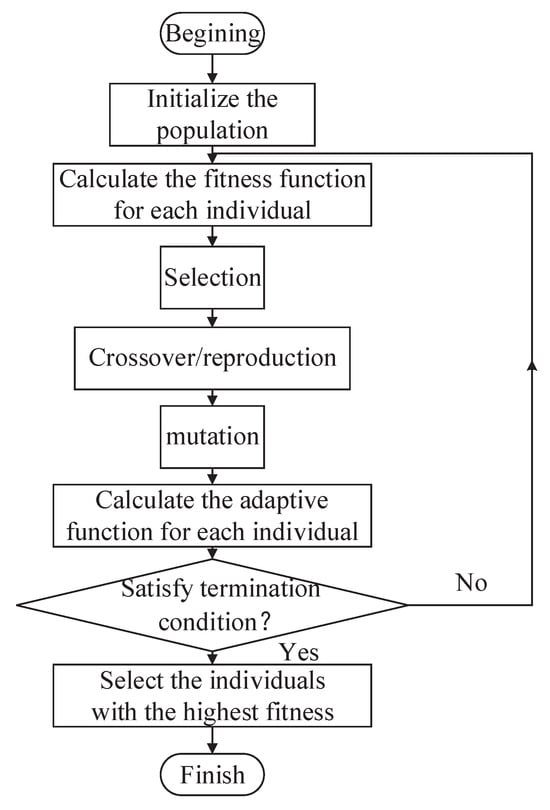

In 1975, Holland [] first proposed the genetic algorithm (GA), which was inspired by natural selection. As the most successful type of evolutionary algorithm, GA is a stochastic optimization algorithm with a global search potential. Essentially, GA emulates the process of natural selection in finding optimal solutions for complex optimization and search problems. The core idea of GA is the concept of the survival of the fittest. The fitness function is used to measure the merits of individuals, and the individuals with better performance have a higher probability of being selected to produce offspring in the next generation. Through the iterative process of reproduction and selection, GA gradually improves the solution set and converges toward the optimal or near-optimal solution. The design procedure can be described as follows, and the flow diagram is shown in Figure 2.

Figure 2.

General procedure of GA.

- (1)

- Initialize the population.

- (2)

- Calculate the fitness function for each individual.

- (3)

- Select individuals with a high fitness function, and let them undergo crossover/reproduction and mutation to generate offspring.

- (4)

- Calculate the fitness function for each individual.

- (5)

- If the termination condition is satisfied, select the individual with the highest fitness, or else return to step (2).

Due to its remarkable performance in optimization, GA has been applied in many optimization problems, especially where the search space is large, complex, or poorly known. Ou [] developed a new hybrid knowledge extraction framework by combining GA and backpropagation neural networks. The efficacy of the framework was demonstrated through a case study using the Wisconsin breast cancer dataset. Furthermore, Li [] investigated the application of the harmonic search algorithm in this framework.

4.1.2. Particle Swarm Optimization Algorithm

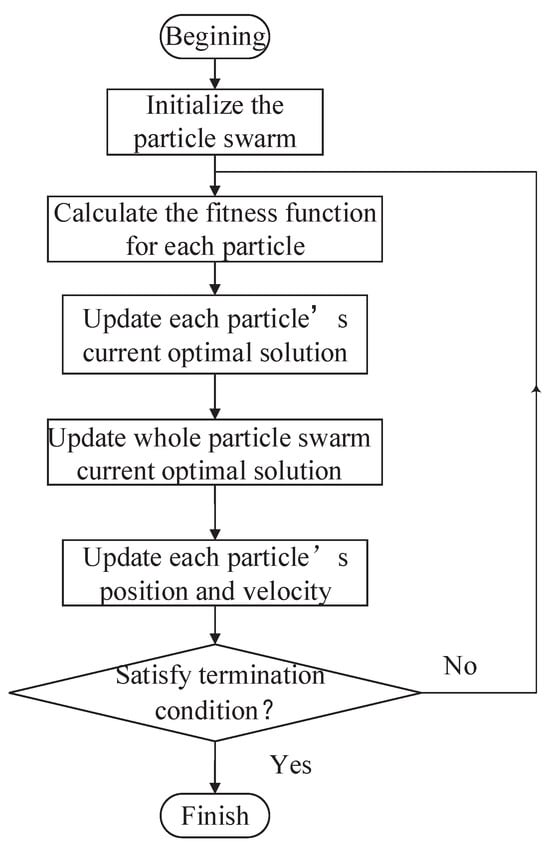

In 1995, by observing the social behavior of birds flocking and searching for food, Kennedy and Eberhart proposed particle swarm optimization (PSO) algorithm, which is stochastic and computational-intelligence-oriented []. ”Particles” usually refers to population members with an arbitrarily small mass and volume. Each particle denotes a solution with four vectors, including the current position, the current optimal solution, the current optimal solution found via its neighbor particles, and velocity. In each iteration, each particle updates its current position in the search space based on the current optimal position and the current neighbor particle’s optimal position. The renewal principle of each particle’s position and velocity can be described as follows:

where denotes ith particle’s position in jth iteration; denotes the ith particle’s velocity in the jth iteration; denotes ith particle’s current optimal position; denotes whole particles’ optimal positions; denotes cognitive and social parameters; and . The design procedure can be described as follows, and the flow diagram is shown in Figure 3.

Figure 3.

General procedure of PSO.

- (1)

- Initialize the particle swarm.

- (2)

- Calculate the fitness function for each particle.

- (3)

- Update each particle’s current optimal solution.

- (4)

- Update the whole particle’s current optimal solution.

- (5)

- Update each particle’s position and velocity.

- (6)

- If the termination condition is satisfied, select the individual with the highest fitness or else return to step (2).

Due to the PSO algorithm’s simple concept, easy implementation, computational efficiency, and unique searching mechanism, it has extensive applications in various engineering optimizations. To solve the problem associated with Takagi–Sugeno fuzzy neural networks, Peng [] proposed an enhanced chaotic quantum-inspired PSO algorithm. This algorithm effectively solves problems such as a slow convergence time and a long calculation time. Additionally, Yang [] proposed an improved PSO (IPSO) algorithm to identify parameters of the Preisach model for modeling hysteresis phenomena. Compared with traditional PSO methods, IPSO is provided with superior convergence, less computation time, and higher accuracy.

4.2. Ant Colony Optimization Algorithm

The ant colony optimization (ACO) algorithm was first introduced by M. Dorigo and colleagues in 1996 as a nature-inspired, meta-heuristic method aimed at solving combinatorial optimization problems. This algorithm draws inspiration from stigmergy, where communication within a swarm is achieved through environmental manipulation. It has been demonstrated that ants communicate by depositing pheromones on the ground or objects to send specific signals to other ants. Various pheromones are used for different tasks within a colony. For example, ants mark distinct paths from their nests to a food source to guide other ants for transportation. The shortest path is naturally selected because longer paths allow more time for pheromone evaporation before re-deposition.

In the ACO’s original version, the problem must be represented as a graph, where each ant represents a tour, and the goal is to identify the shortest one. There are two key matrices: distance and pheromones, which ants use to update their routes. Through multiple iterations and updates of these matrices, a tour is established that all ants follow, which is considered the best solution for the problem. ACO has various adaptations that can address problems with continuous variables, constraints, multiple objectives, and more. Bullnheimer [] introduced an innovative rank-based variant of the ant system. In this version, the paths of the ants are sorted from the shortest to the longest after each iteration. The algorithm assigns different weights based on the path length, with shorter paths receiving higher weights. Hu [] developed a new ACO called the “continuous orthogonal ACO” to solve continuous problems, using a pheromone deposition mechanism that allows ants to efficiently search for solutions. Gupta [] introduced the concept of depth into a recursive ACO, where depth determines the number of recursions, each based on a standard ant colony algorithm. Gao [] combined the K-means clustering algorithm with the ACO and introduced three immigrant schemes to solve the dynamic location routing problem. Hemmatian [] applied an elitist ACO algorithm to the multi-objective optimization of hybrid laminates, aiming to minimize the cost during the computation process.

4.3. Artificial Fish Swarm

In nature, fish can identify more nutritious areas by either searching individually or following others, and areas with more fish tend to be richer in nutrients. In 2002, Li [] initially introduced a stochastic, population-based AFS optimization algorithm. The algorithm’s core idea is to imitate fish behaviors like swarming, preying, and following, combining these with a local search to generate a global optimum []. The AFS algorithm generally has the same advantages as genetic algorithms, while the AFS algorithm can reach faster convergence and needs fewer parameter adjustments. Unlike genetic algorithms, AFS does not involve crossover and mutation processes, making it simpler to execute. It operates as a population-based optimizer: the system begins with a set of randomly generated potential solutions and iteratively searches for the best one []. Due to random search and the parallel optimization method, AFS has global search capabilities and fast convergence. The fish group is represented by a set of points or solutions, with each agent symbolizing a candidate solution. The feasible solution space represents the “waters” where artificial fish move and search for the optimum. The algorithm reaches the optimal solution through survival, competition, and coordination mechanisms. In the AFS algorithm, the swarm behavior is characterized by movement towards the central point of the visual scope. Numerous researchers have developed and improved the AFS algorithm, resulting in a variety of novel variants. For example, a binary AFS was proposed to solve 0–1 multidimensional knapsack problems. In this approach, a 0/1-bit binary string represents a point, and each bit of a trial point is generated by copying the relevant bit from itself or another specified point with equal probability. Zhang introduced a Pareto-improved AFS algorithm for addressing a multi-objective fuzzy disassembly-line-balancing problem, which helped reduce uncertainty. To enhance the global search ability and convergence speed, Zhu proposed a new quantum AFS algorithm based on principles of quantum computing, such as quantum bits and quantum gates.

4.4. Harris Hawks Optimizer

The Harris hawks optimizer (HHO) was developed by Heidari [], and it is based on the natural hunting strategies of Harris hawks. This algorithm uses different equations to update the positions of hawks in a search space, emulating the different hunting techniques these birds use to capture prey. The following equation is used to provide exploratory behavior for HHO []:

where denotes the position in the tth iteration, is the prey position, and b are a random number in , L and U are the parameters’ lower and upper bounds, respectively, indicates a hawk randomly selected from the current population, and represents the mean position of the current population of hawks. The exploitation algorithm employs two distinct besieging strategies: soft and hard:

where represents the distance between the solution and the prey at the tth iteration, and C are random variables.

4.5. The Rest of the Swarm Intelligence Algorithms

Swarm intelligence algorithms are predominantly derived from simulations of natural ecosystems, especially those based on insects and animals. These algorithms are optimized by simulating the processes of foraging or information exchange within these biomes, and most utilize directional iterative methods based on probabilistic search strategies. With the progression of swarm intelligence, optimization algorithms have transcended merely incorporating biological population traits. Some studies have introduced human biological characteristics into swarm intelligence algorithms, such as the human immune system []. Recent innovations in swarm intelligence algorithms, as well as enhancements to classical algorithms, mainly aim at reducing parameters, streamlining processes, accelerating computation speeds, and improving search capabilities, particularly for high-dimensional and multi-objective optimization problems []. The application domains of swarm intelligence algorithms are expanding, with their usage guiding further development directions.

In addition to the well-known swarm intelligence algorithms, many extended algorithms have also been extensively discussed and utilized. The “cuckoo search” algorithm, inspired by the brood parasitic behavior of some cuckoo species and the Lévy flight behavior of birds and fruit flies, addresses optimization problems []. Pigeon-inspired optimization, used for air–robot path planning, employs a map and compass operator model based on the magnetic field and the sun, along with a landmark operator model grounded in physical landmarks []. The bat algorithm, which leverages the echolocation behavior of bats, is effective for solving single- and multi-objective problems within continuous solution spaces []. The gray wolf optimizer, inspired by the social hierarchy and hunting strategies of gray wolves, simulates their natural leadership dynamics []. An artificial immune system (ARTIS) encompasses attributes of natural immune systems such as diversity, distributed computation, error tolerance, dynamic learning and adaptation, and self-monitoring, functioning as a robust framework for distributed adaptive systems across various domains []. The fruit fly optimization algorithm (FOA), based on the foraging behavior of fruit flies, exploits their keen sense of smell and vision to locate superior food sources and gathering spots []. Glow-worm swarm optimization, inspired by glow-worm behavior, is utilized for computing multiple optima of multimodal functions simultaneously []. Lastly, invasive weed optimization, inspired by the colonization strategies of weeds, is a numerical stochastic optimization algorithm that mimics the robustness, adaptability, and randomness of invasive weeds using a simple yet effective optimization approach [].

4.6. Gene Feature Extraction

Gene feature extraction refers to the process of extracting meaningful information from genomic data, particularly focusing on the characteristics or attributes of genes. It plays a key role in understanding the biological functions of genes, deciphering genetic mechanisms underlying diseases, and developing computational models for predictive analysis. First, researchers perform original data preprocessing to ensure data quality. Second, what needs to be done is the selection of relevant features; for example, the gene HER2 is commonly found in breast cancer and stomach cancer. Then, meaningful features are extracted, such as gene expression levels, sequence features, and functional annotations. Finally, based on the extracted features, certain tasks can be achieved, such as classifying cancer genes. Intelligent algorithms are closely related to gene feature extraction, and they are often combined in genomics and bioinformatics research to better understand the function and biological significance of genes.

To prevent some preprocessing that may introduce noise or information loss, ref. [] proposed a new preprocessing method named mean–standard deviation to solve this problem. In addition, to process overlapping biclusters, Chu [] introduced a new biclustering algorithm called weight-adjacency-difference-matrix binary biclustering. The results of the GO enrichment analysis showed that this method was provided with biological significance using real datasets. Liu [] proposed the Harris hawks optimization algorithm to accurately select a subset of feature cancer genes. By applying this method to eight published microarray gene expression datasets, the results showed a 100% classification accuracy in gastric cancer, acute lymphoblastic leukemia, and ovarian cancer and an average classification accuracy of 95.33% across a variety of other cancers. Then, ref. [] improved the Harris hawks optimization algorithm, and the experimental results showed that the classification accuracy of the algorithm was greater than 96.128% for tumors in the colon, nervous system, and lungs, versus 100% for the rest. Compared with seven other algorithms, the experimental results demonstrated the superiority of this algorithm in classification accuracy, fitness value, and the AUC value in feature selection for gene expression data. Inspired by the predatory behavior of humpback whales, Mirjalili [] proposed a whale optimization algorithm to select the optimal feature gene subset. Compared with other advanced feature selection algorithms, the experimental results showed that the whale optimization algorithm has significant advantages in various evaluation indicators.

4.7. Intelligence Communication

Intelligence communication is an emerging, hot field of communication engineering that uses intelligence algorithms to improve the quality of communication. Communication systems can be described simply in three parts: (1) the transmitter, which is responsible for converting information into a signal suitable for transmission, sending it into the transmission-medium encoder, and sending the information to be transmitted; (2) the transmission-medium encoder, in which the transmission medium mainly plays the role of transmitting signals; and (3) the receiver, which receives the signal from the transmission medium and converts it into an intelligible form of information.

Singnal-to-noise ratio estimation is an important task in improving communication quality. Based on deep learning, Xie [] proposed a new signal-to-noise ratio estimation algorithm using constellation diagrams. This algorithm converted the received signal into a constellation diagram and constructed three deep neural networks to achieve signal-to-noise ratio estimation. The result showed that this algorithm is superior to the traditional algorithm, especially in a low-signal-to-noise-ratio situation.

Cognitive radio is an intelligent wireless communication technology aimed at enhancing the efficiency and reliability of radio spectrum utilization. Traditional wireless communication systems typically allocate fixed spectrum resources to specific users or services, but this allocation method may lead to spectrum wasting and congestion []. Cognitive radio dynamically senses, analyzes, and adapts to the radio spectrum environment to achieve the intelligent management and optimization of spectrum resources. False alarm probability and the missed detection probability are usually used to evaluate the performance of spectrum sensing. Consequently, there is a key problem in how to determine a threshold of the false alarm probability and the missed detection probability. There have been many studies related to the selection of threshold and cognitive radio networks [,]. Peng [] proposed two new adaptive threshold algorithms, which had exploited the Markovian behavior of a primary user to reduce the Bayesian cost of a false alarm and missed detection in spectrum sensing. These two algorithms achieved higher detection accuracy for TV white spaces. To improve the performance of the detection time of a cognitive radio network, ref. [] proposed a fast cooperative energy detection under an accuracy constraints algorithm. The results illustrated that it could achieve a minimal detection time by appropriately choosing the number of secondary users. Differing from the above-mentioned improvements, Liu [] combined a butterfly network and Clos network and proposed a new topology network to enhance the throughput of the network.

4.8. Image Processing

Image processing is a field of study within computer science and engineering that focuses on analyzing, enhancing, and manipulating digital images. With the development of computer science, more and more intelligence algorithms are used in image processing [,,,]. Due to the outbreak of COVID-19 worldwide, there is a large burden on clinicians. Sun [] proposed an adaptive-feature-selection, deep-forest algorithm for the classification of the computed tomography chest images of COVID-19 patients. Through the evaluation of the COVID-19 dataset with 1495 patients and 1027 community-acquired pneumonia patients, this algorithm exhibited better performance. To enhance image quality, Wang [] investigated multifractal theory for texture features through the testing of some textures. The author verified the robustness of the proposed approach in three aspects: noise tolerance, the degree of image blurring, and the compression ratio. From a coding perspective, Li [] proposed an early merge-mode decision approach for 3D high-efficiency video coding. The proposed approach can effectively decrease the computational cost. In terms of image denoising, Zhu and Chan [] proposed an approach for restoring images, which is based on mean curvature energy minimization. This approach not only retains all the valid information but also filters out noise.

4.9. Other Applications

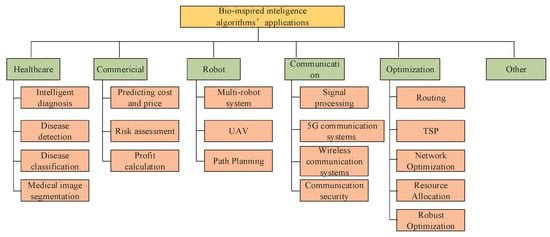

Recently, significant research has been directed towards applying intelligence algorithm techniques to address clustering challenges in wireless IoT networks. For instance, the study in [] proposed a clustering framework specifically for wireless IoT sensor networks. This framework aims to enhance energy efficiency and balance energy consumption using a fitness function optimized via a chicken swarm optimization algorithm. An improved ACO-based UAV path-planning architecture was proposed in [], which considered obstacles within a controlled area monitored via several radars. The study in [] proposed a set-based PSO algorithm with adaptive weights to develop an optimal path-planning scheme for a UAV surveillance system. The objective was to minimize energy consumption and enhance the disturbance rejection response of UAVs. Ref. [] introduced a novel ACO-based fuzzy identification algorithm designed to determine initial consequent parameters and an initial membership function matrix for silted conditions. Zhou [] presented a new ACO algorithm for continuous domains. This algorithm effectively minimizes total fuel costs by appropriately allocating power demands to generator modules while adhering to various physical and operational constraints. The work in [] applied the ACO algorithm to develop a new SVM model. The algorithm explored a finite subset of possible values to identify parameters that minimize the generalization error. In the context of flux-cored arc welding, a fusion welding process where a tubular wire electrode is continuously fed into the weld area, the welding input parameters are crucial for determining the quality of the weld joint. Table 1 shows some applications and corresponding papers on bio-inspired intelligence algorithms. Figure 4 depicts when each intelligent algorithm was introduced and some of its main applications.

Table 1.

Bio-inspired intelligence algorithm and applications.

Figure 4.

Applications of bio-inspired intelligence algorithms.

4.10. Discussion and Future Direction

In summary, intelligence algorithms offer versatile solutions across diverse domains, ranging from gene feature extraction to image processing. Whether an intelligence algorithm is bio-inspired or not, both types can tackle complex optimization problems effectively and drive advancements in various fields of research and application. However, there are some challenges, such as local optima traps or overfitting. This has encouraged researchers to explore new optimization strategies. This paper provides some prospective suggestions for future directions.

- (1)

- Multi-objective optimization: Extending intelligence algorithms to multi-objective optimization domains is still a hot topic.

- (2)

- Adaptive and self-learning techniques: Developing adaptive and self-learning algorithms that automatically adjust parameters and strategies based on problem and environmental changes improves algorithm robustness and adaptability.

- (3)

- Hybridization and fusion algorithms: Integrating intelligence algorithms with other optimization methods, such as deep learning and reinforcement learning, to leverage the strengths of various algorithms enhances solution efficiency and accuracy.

5. Conclusions

In this paper, we have provided a comprehensive survey of intelligence algorithms. First, we presented an in-depth overview of ZNNs and comprehensively introduced their origin, structure, operation mechanism, model variants, and applications. A novel classification of ZNNs was first proposed, categorizing models into accelerated-convergence ZNNs, noise-tolerance ZNNs, and discrete-time ZNNs, each offering unique advantages in solving time-varying optimization problems. These three types of ZNNs can also be integrated to solve a variety of time-varying optimization algorithms. Concerning applications, the ZNN models were applied in different areas. From different perspectives, some future directions for ZNNs were suggested. Then, we analyzed and outlined other bio-inspired intelligence algorithms, such as GA and PSO, introducing their origins, basic principles, design procedures, and applications. For the rest of the intelligence algorithms, real applications were introduced to emphasize the applicability of intelligent algorithms. In summary, this paper has offered an informative guide for researchers interested in tackling optimization problems using intelligence algorithms.

Author Contributions

Conceptualization, H.L., B.L. and S.L.; methodology, H.L. and B.L.; validation, J.L. and S.L.; formal analysis, J.L. and B.L.; investigation, H.L. and S.L.; writing—original draft preparation, H.L.; writing—review and editing, H.L., J.L. and S.L.; supervision, B.L., H.L. and S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62066015 and Grant 62006095.

Data Availability Statement

Some or all of the data and models that support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sun, Q.; Wu, X. A deep learning-based approach for emotional analysis of sports dance. PeerJ Comput. Sci. 2023, 9, e1441. [Google Scholar] [CrossRef] [PubMed]

- Cao, X.; Peng, C.; Zheng, Y.; Li, S.; Ha, T.T.; Shutyaev, V.; Katsikis, V.; Stanimirovic, P. Neural Networks for Portfolio Analysis in High-Frequency Trading. Available online: https://ieeexplore.ieee.org/abstract/document/10250899 (accessed on 13 September 2023).

- Zhang, Y.; Li, S.; Weng, J.; Liao, B. GNN Model for Time-Varying Matrix Inversion With Robust Finite-Time Convergence. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 559–569. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Li, Z.; Yi, C.; Chen, K. Zhang neural network versus gradient neural network for online time-varying quadratic function minimization. In Advanced Intelligent Computing Theories and Applications. With Aspects of Artificial Intelligence, Proceedings of the 4th International Conference on Intelligent Computing, ICIC 2008, Shanghai, China, 15–18 September 2008; Proceedings 4; Springer: Berlin/Heidelberg, Germany, 2008; pp. 807–814. [Google Scholar]

- Xu, H.; Li, R.; Pan, C.; Li, K. Minimizing energy consumption with reliability goal on heterogeneous embedded systems. J. Parallel Distrib. Comput. 2019, 127, 44–57. [Google Scholar] [CrossRef]

- Peng, C.; Liao, B. Heavy-head sampling for fast imitation learning of machine learning based combinatorial auction solver. Neural Process. Lett. 2023, 55, 631–644. [Google Scholar] [CrossRef]

- Xu, H.; Li, R.; Zeng, L.; Li, K.; Pan, C. Energy-efficient scheduling with reliability guarantee in embedded real-time systems. Sustain. Comput. Inform. Syst. 2018, 18, 137–148. [Google Scholar] [CrossRef]

- Qin, F.; Zain, A.M.; Zhou, K.Q. Harmony search algorithm and related variants: A systematic review. Swarm Evol. Comput. 2022, 74, 101126. [Google Scholar] [CrossRef]

- Wu, W.; Tian, Y.; Jin, T. A label based ant colony algorithm for heterogeneous vehicle routing with mixed backhaul. Appl. Soft Comput. 2016, 47, 224–234. [Google Scholar] [CrossRef]

- Sindhuja, P.; Ramamoorthy, P.; Kumar, M.S. A brief survey on nature inspired algorithms: Clever algorithms for optimization. Asian J. Comput. Sci. Technol. 2018, 7, 27–32. [Google Scholar] [CrossRef]

- Sakunthala, S.; Kiranmayi, R.; Mandadi, P.N. A review on artificial intelligence techniques in electrical drives: Neural networks, fuzzy logic, and genetic algorithm. In Proceedings of the 2017 International Conference on Smart Technologies for Smart Nation (SmartTechCon), Bengaluru, India, 17–19 August 2017; pp. 11–16. [Google Scholar]

- Lachhwani, K. Application of neural network models for mathematical programming problems: A state of art review. Arch. Comput. Methods Eng. 2020, 27, 171–182. [Google Scholar] [CrossRef]

- Wang, T.; Zhang, Z.; Huang, Y.; Liao, B.; Li, S. Applications of Zeroing neural networks: A survey. IEEE Access 2024, 12, 51346–51363. [Google Scholar] [CrossRef]

- Zheng, Y.J.; Chen, S.Y.; Lin, Y.; Wang, W.L. Bio-inspired optimization of sustainable energy systems: A review. Math. Probl. Eng. 2013, 2013, e354523. [Google Scholar] [CrossRef]

- Zajmi, L.; Ahmed, F.Y.; Jaharadak, A.A. Concepts, methods, and performances of particle swarm optimization, backpropagation, and neural networks. Appl. Comput. Intell. Soft Comput. 2018, 2018, e9547212. [Google Scholar] [CrossRef]

- Cosma, G.; Brown, D.; Archer, M.; Khan, M.; Pockley, A.G. A survey on computational intelligence approaches for predictive modeling in prostate cancer. Expert Syst. Appl. 2017, 70, 1–19. [Google Scholar] [CrossRef]

- Goel, L. An extensive review of computational intelligence-based optimization algorithms: Trends and applications. Soft Comput. 2020, 24, 16519–16549. [Google Scholar] [CrossRef]

- Ding, S.; Li, H.; Su, C.; Yu, J.; Jin, F. Evolutionary artificial neural networks: A review. Artif. Intell. Rev. 2013, 39, 251–260. [Google Scholar] [CrossRef]

- Abd Elaziz, M.; Dahou, A.; Abualigah, L.; Yu, L.; Alshinwan, M.; Khasawneh, A.M.; Lu, S. Advanced metaheuristic optimization techniques in applications of deep neural networks: A review. Neural Comput. Appl. 2021, 33, 14079–14099. [Google Scholar] [CrossRef]

- Alqushaibi, A.; Abdulkadir, S.J.; Rais, H.M.; Al-Tashi, Q. A review of weight optimization techniques in recurrent neural networks. In Proceedings of the 2020 International Conference on Computational Intelligence (ICCI), Bandar Seri Iskandar, Malaysia, 8–9 October 2020; pp. 196–201. [Google Scholar]

- Ganapathy, S.; Kulothungan, K.; Muthurajkumar, S.; Vijayalakshmi, M.; Yogesh, P.; Kannan, A. Intelligent feature selection and classification techniques for intrusion detection in networks: A survey. EURASIP J. Wirel. Commun. Netw. 2013, 2013, 271. [Google Scholar] [CrossRef]

- Vijh, S.; Gaurav, P.; Pandey, H.M. Hybrid bio-inspired algorithm and convolutional neural network for automatic lung tumor detection. Neural Comput. Appl. 2023, 35, 23711–23724. [Google Scholar] [CrossRef]

- Hassani, S.; Dackermann, U. A systematic review of optimization algorithms for structural health monitoring and optimal sensor placement. Sensors 2023, 23, 3293. [Google Scholar] [CrossRef]

- Gad, A.G. Particle swarm optimization algorithm and its applications: A systematic review. Arch. Comput. Methods Eng. 2022, 29, 2531–2561. [Google Scholar] [CrossRef]

- Hopfield, J.J. Neurons, Dynamics and Computation. Phys. Today 1994, 47, 40–46. [Google Scholar] [CrossRef]

- Hopfield, J.J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558. [Google Scholar] [CrossRef] [PubMed]

- Hopfield, J.J. Neurons with graded response have collective computational properties like those of two-state neurons. Proc. Natl. Acad. Sci. USA 1984, 81, 3088–3092. [Google Scholar] [CrossRef] [PubMed]

- Hopfield, J.; Tank, D. Neural Computation of Decisions in Optimization Problems. Biol. Cybern. 1985, 52, 141–152. [Google Scholar] [CrossRef] [PubMed]

- Liao, B.; Hua, C.; Cao, X.; Katsikis, V.N.; Li, S. Complex noise-resistant zeroing neural network for computing complex time-dependent Lyapunov equation. Mathematics 2022, 10, 2817. [Google Scholar] [CrossRef]

- Liao, B.; Han, L.; He, Y.; Cao, X.; Li, J. Prescribed-time convergent adaptive ZNN for time-varying matrix inversion under harmonic noise. Electronics 2022, 11, 1636. [Google Scholar] [CrossRef]

- Liao, B.; Huang, Z.; Cao, X.; Li, J. Adopting nonlinear activated beetle antennae search algorithm for fraud detection of public trading companies: A computational finance approach. Mathematics 2022, 10, 2160. [Google Scholar] [CrossRef]

- Liu, Z.; Wu, X. Structural Analysis of the Evolution Mechanism of Online Public Opinion and its Development Stages Based on Machine Learning and Social Network Analysis. Int. J. Comput. Intell. Syst. 2023, 16, 99. [Google Scholar] [CrossRef]

- Chen, S.; Zhou, C.; Li, J.; Peng, H. Asynchronous introspection theory: The underpinnings of phenomenal consciousness in temporal illusion. Minds Mach. 2017, 27, 315–330. [Google Scholar] [CrossRef]

- Luo, M.; Ke, W.; Cai, Z.; Liu, A.; Li, Y.; Cheang, C. Using Imbalanced Triangle Synthetic Data for Machine Learning Anomaly Detection. Comput. Mater. Contin. 2019, 58, 15–26. [Google Scholar] [CrossRef]

- Jin, L.; Zhang, Y. Continuous and discrete Zhang dynamics for real-time varying nonlinear optimization. Numer. Algorithms 2016, 73, 115–140. [Google Scholar] [CrossRef]

- Zhang, Z.; Zheng, L.; Weng, J.; Mao, Y.; Lu, W.; Xiao, L. A New Varying-Parameter Recurrent Neural-Network for Online Solution of Time-Varying Sylvester Equation. IEEE Trans. Cybern. 2018, 48, 3135–3148. [Google Scholar] [CrossRef] [PubMed]

- Xiao, L.; Liao, B. A convergence-accelerated Zhang neural network and its solution application to Lyapunov equation. Neurocomputing 2016, 193, 213–218. [Google Scholar] [CrossRef]

- Peng, C.; Ling, Y.; Wang, Y.; Yu, X.; Zhang, Y. Three new ZNN models with economical dimension and exponential convergence for real-time solution of moore-penrose pseudoinverse. In Proceedings of the 2014 International Joint Conference on Neural Networks (IJCNN), Beijing, China, 6–11 July 2014; pp. 2788–2793. [Google Scholar]

- Guo, D.; Peng, C.; Jin, L.; Ling, Y.; Zhang, Y. Different ZFs lead to different nets: Examples of Zhang generalized inverse. In Proceedings of the 2013 Chinese Automation Congress, Changsha, China, 7–8 November 2013; pp. 453–458. [Google Scholar]

- Lv, X.; Xiao, L.; Tan, Z.; Yang, Z.; Yuan, J. Improved gradient neural networks for solving Moore–Penrose inverse of full-rank matrix. Neural Process. Lett. 2019, 50, 1993–2005. [Google Scholar] [CrossRef]

- Xiao, L. A nonlinearly activated neural dynamics and its finite-time solution to time-varying nonlinear equation. Neurocomputing 2016, 173, 1983–1988. [Google Scholar] [CrossRef]

- Liu, M.; Liao, B.; Ding, L.; Xiao, L. Performance analyses of recurrent neural network models exploited for online time-varying nonlinear optimization. Comput. Sci. Inf. Syst. 2016, 13, 691–705. [Google Scholar] [CrossRef]

- Sun, Z.; Wang, G.; Jin, L.; Cheng, C.; Zhang, B.; Yu, J. Noise-suppressing zeroing neural network for online solving time-varying matrix square roots problems: A control-theoretic approach. Expert Syst. Appl. 2022, 192, 116272. [Google Scholar] [CrossRef]

- Jin, L.; Zhang, Y.; Li, S.; Zhang, Y. Modified ZNN for time-varying quadratic programming with inherent tolerance to noises and its application to kinematic redundancy resolution of robot manipulators. IEEE Trans. Ind. Electron. 2016, 63, 6978–6988. [Google Scholar] [CrossRef]

- Xiao, L.; Liao, B.; Li, S.; Chen, K. Nonlinear recurrent neural networks for finite-time solution of general time-varying linear matrix equations. Neural Netw. 2018, 98, 102–113. [Google Scholar] [CrossRef]

- Xiao, L.; Lu, R. Finite-time solution to nonlinear equation using recurrent neural dynamics with a specially-constructed activation function. Neurocomputing 2015, 151, 246–251. [Google Scholar] [CrossRef]

- Xiao, L. A nonlinearly-activated neurodynamic model and its finite-time solution to equality-constrained quadratic optimization with nonstationary coefficients. Appl. Soft Comput. 2016, 40, 252–259. [Google Scholar] [CrossRef]

- Liao, B.; Zhang, Y. From different ZFs to different ZNN models accelerated via Li activation functions to finite-time convergence for time-varying matrix pseudoinversion. Neurocomputing 2014, 133, 512–522. [Google Scholar] [CrossRef]

- Lv, X.; Xiao, L.; Tan, Z. Improved Zhang neural network with finite-time convergence for time-varying linear system of equations solving. Inf. Process. Lett. 2019, 147, 88–93. [Google Scholar] [CrossRef]

- Xiao, L.; Tan, H.; Jia, L.; Dai, J.; Zhang, Y. New error function designs for finite-time ZNN models with application to dynamic matrix inversion. Neurocomputing 2020, 402, 395–408. [Google Scholar] [CrossRef]