Task-Motion Planning System for Socially Viable Service Robots Based on Object Manipulation

Abstract

1. Introduction

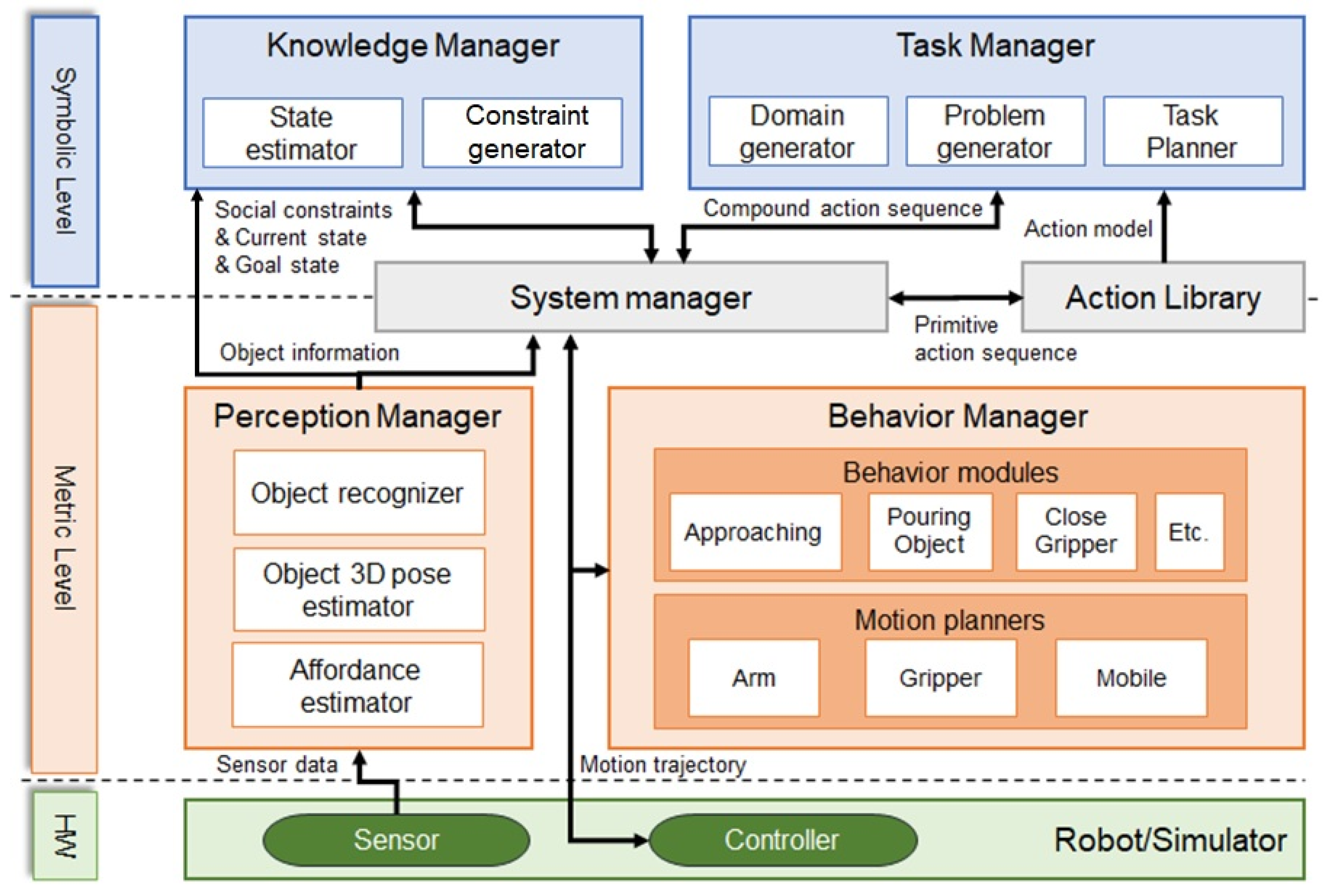

2. Task and Motion Planning System Architecture

2.1. Overview

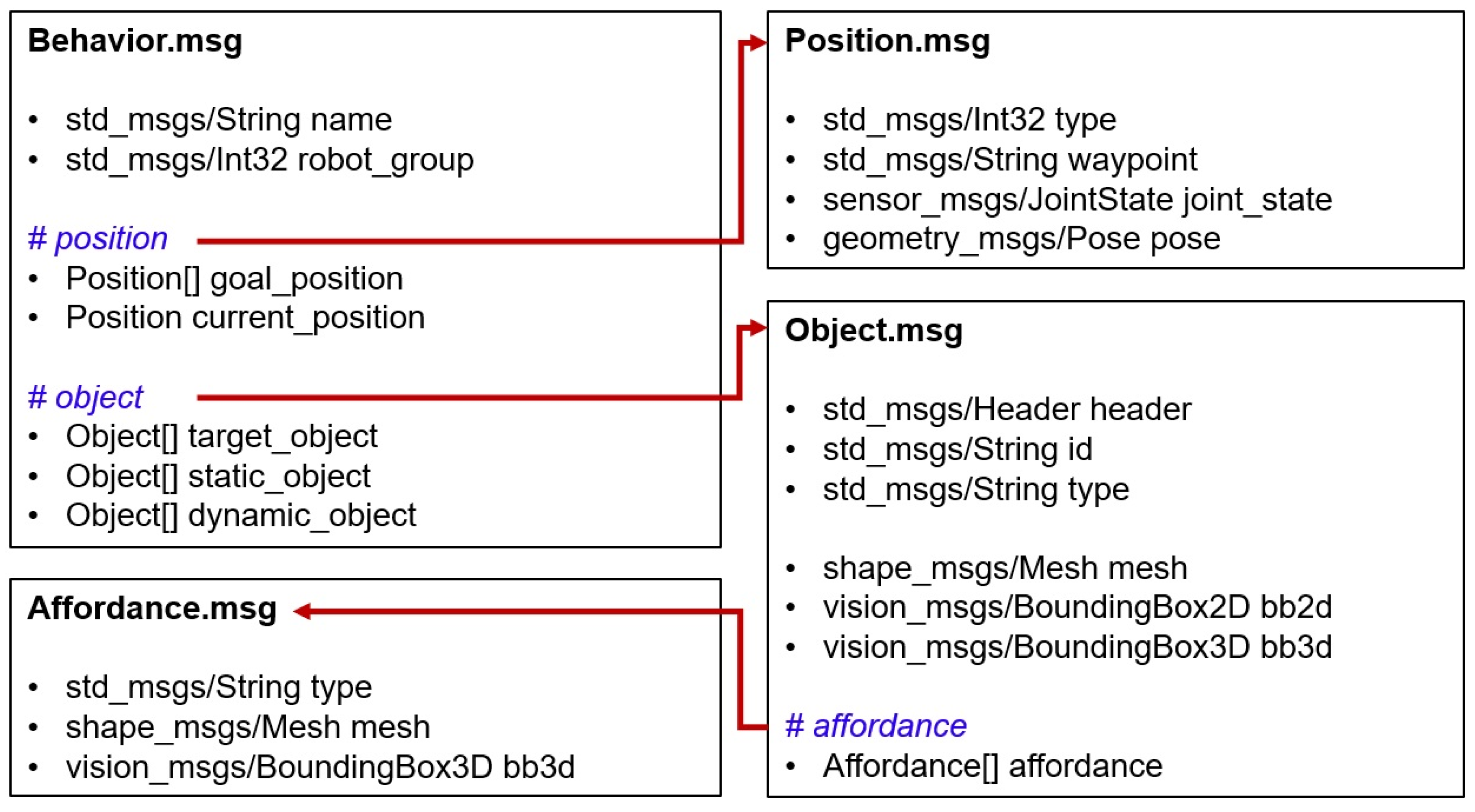

2.2. Functions of System Modules

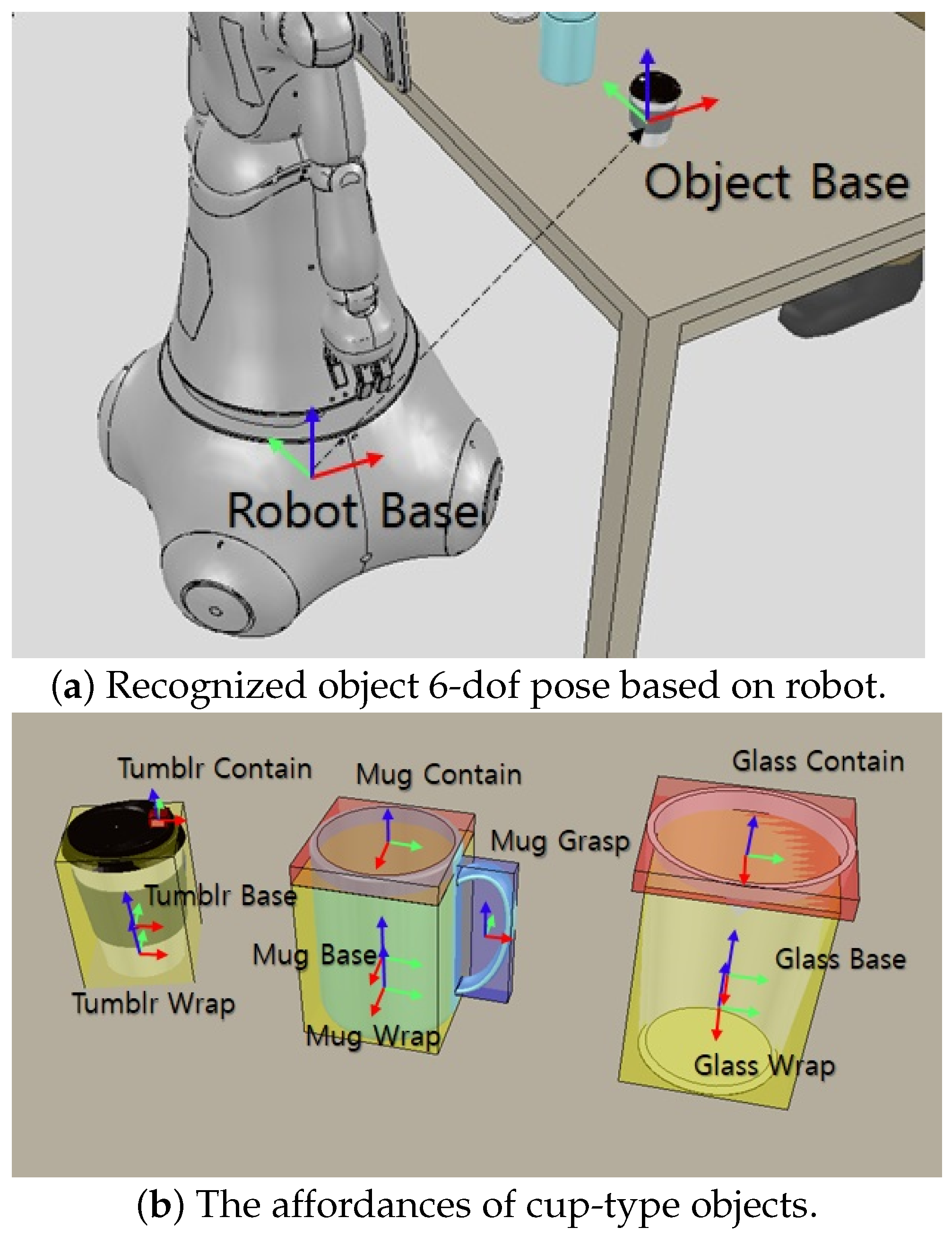

2.2.1. Perceptual Reasoning

- Contain: Storing liquids or objects (e.g., the opening of a cup);

- Grasp: Enclosing with the hand (e.g., a tool handle);

- Wrap: Holding with the hand wrapped around the object (e.g., the outside of a cup).

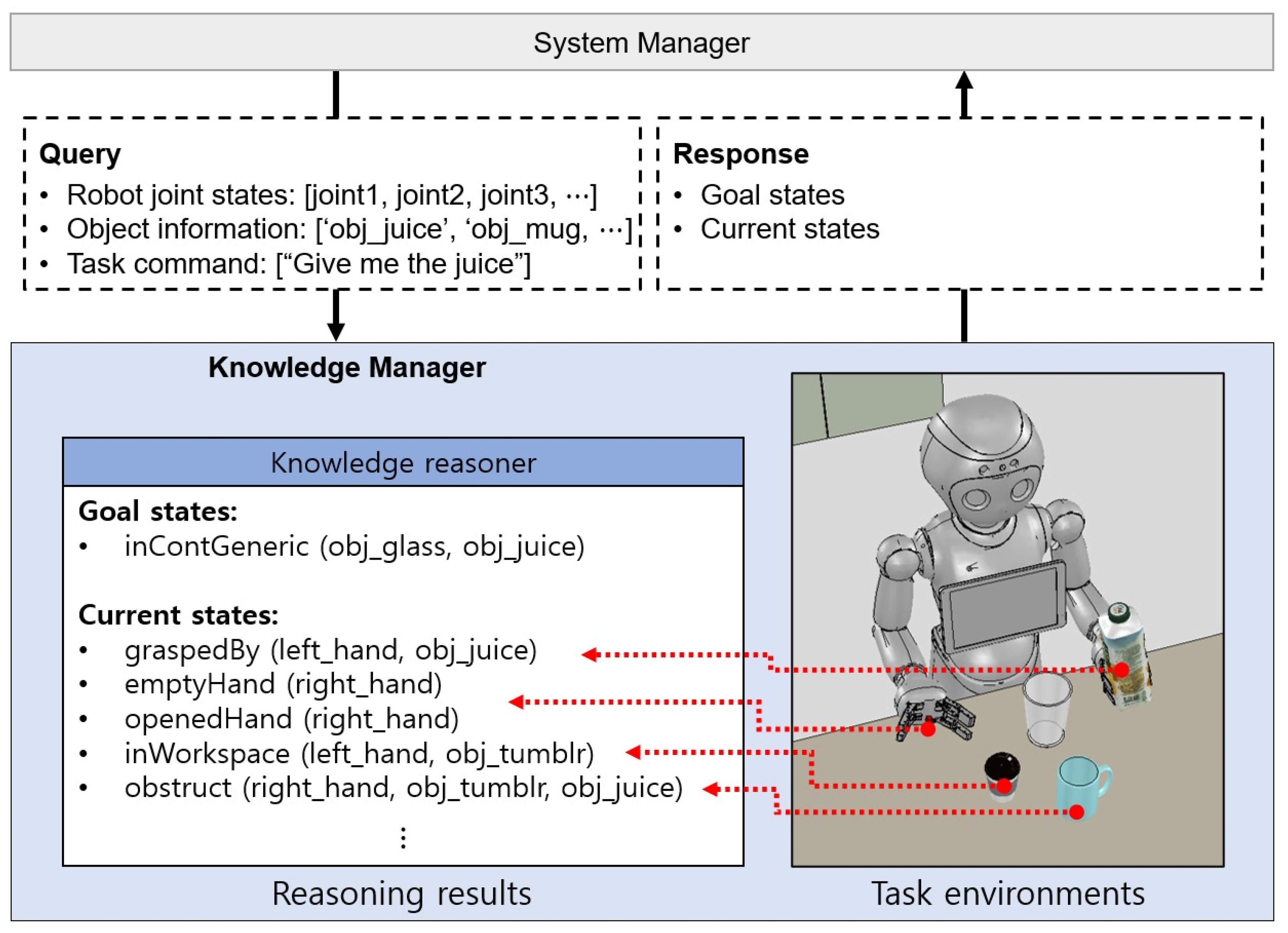

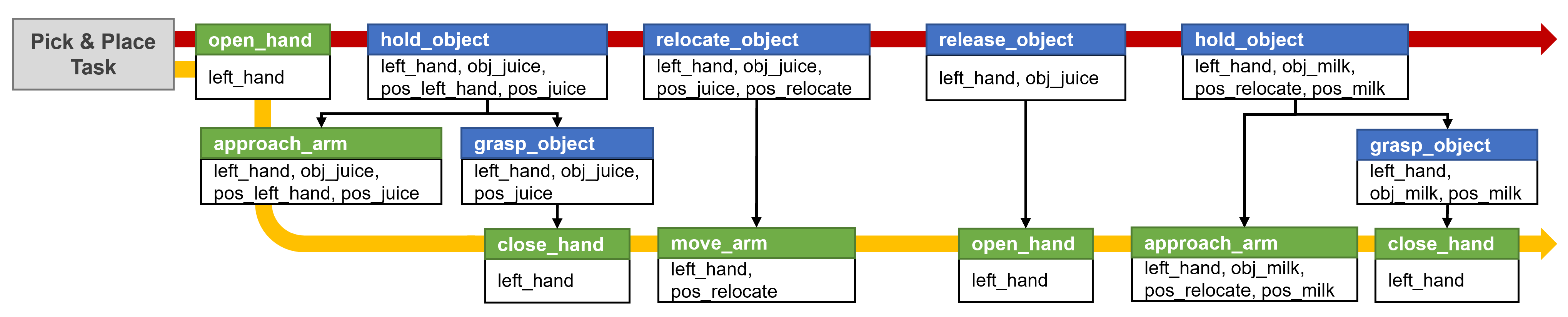

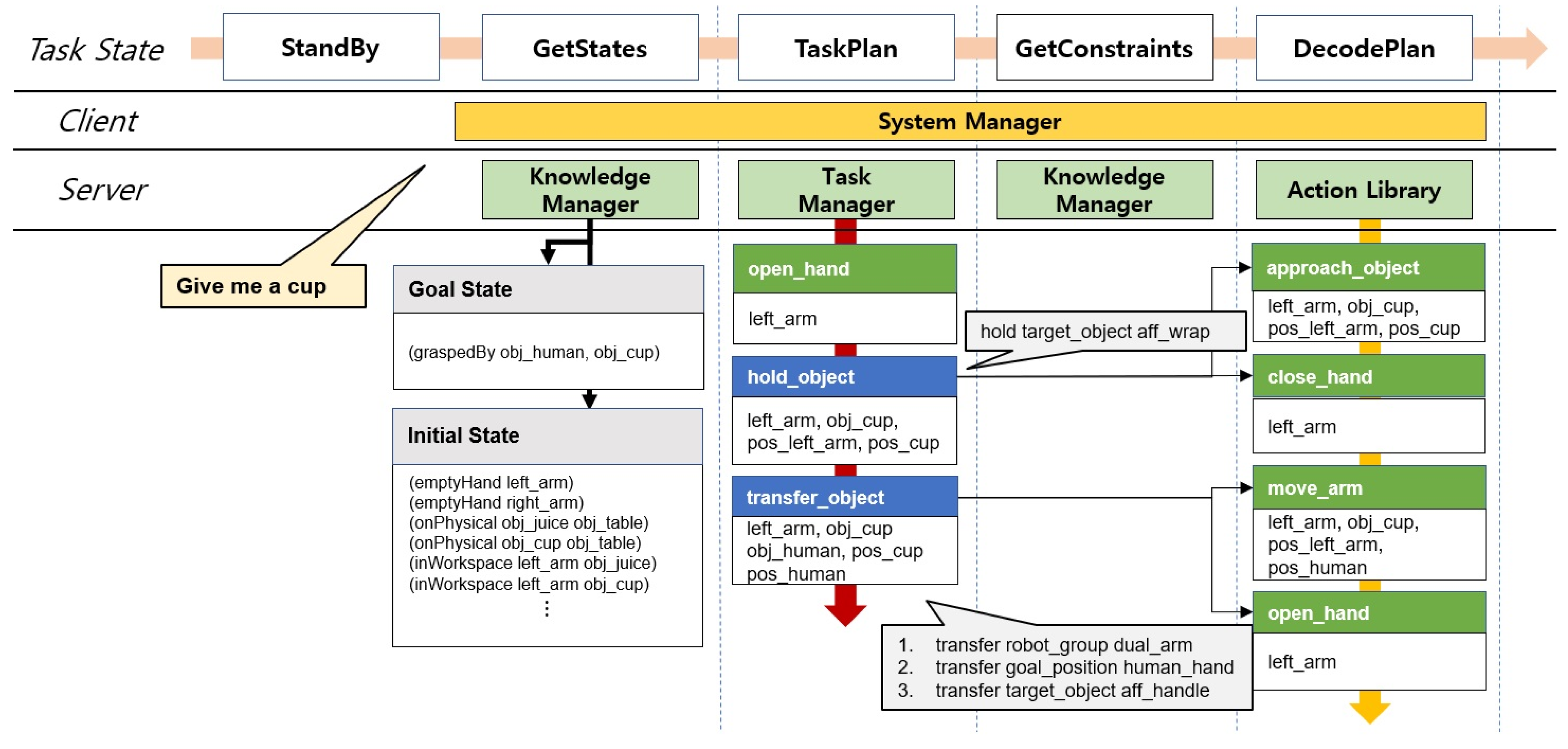

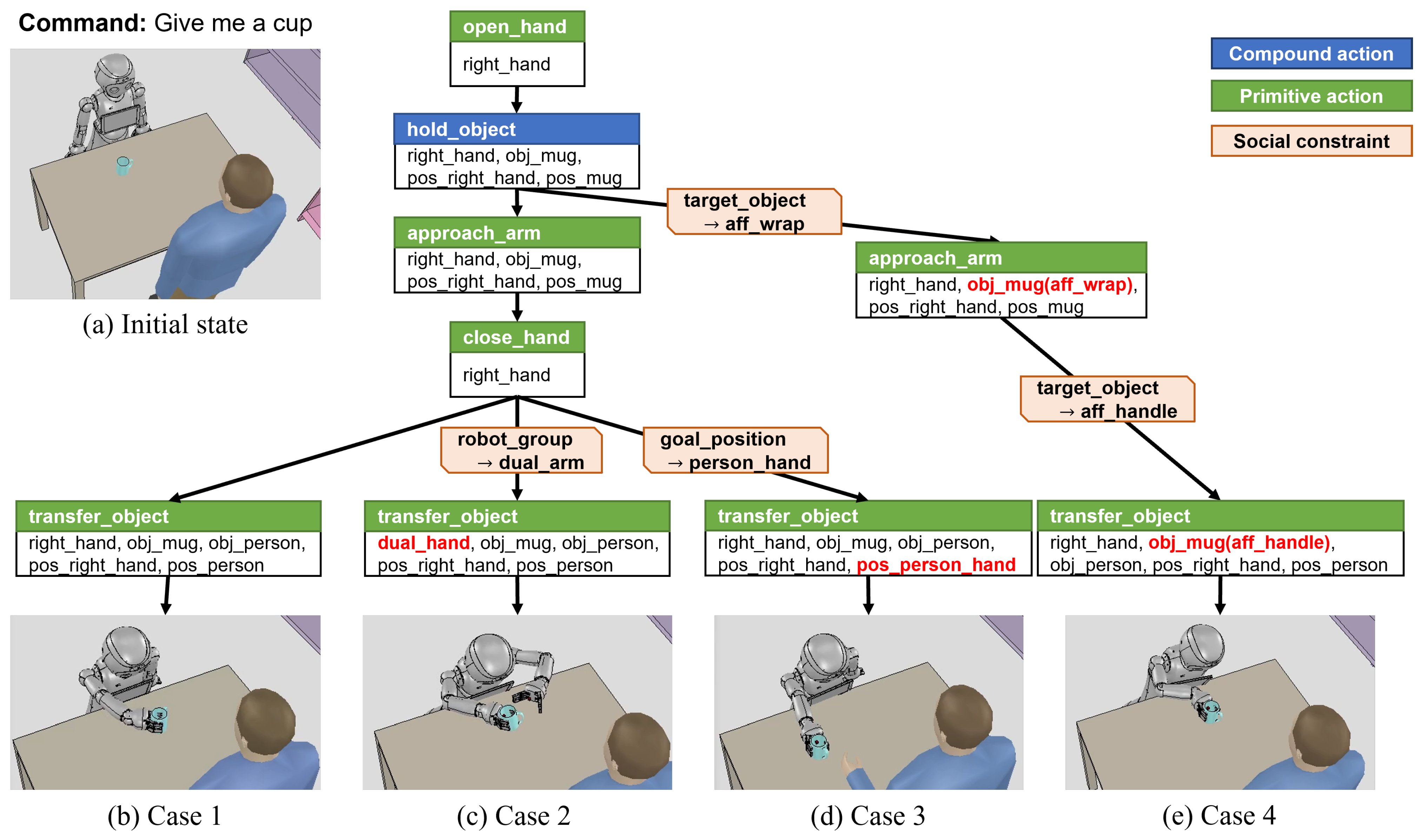

2.2.2. Symbolic Task Planning

2.2.3. Motion Planning with Social Constraints

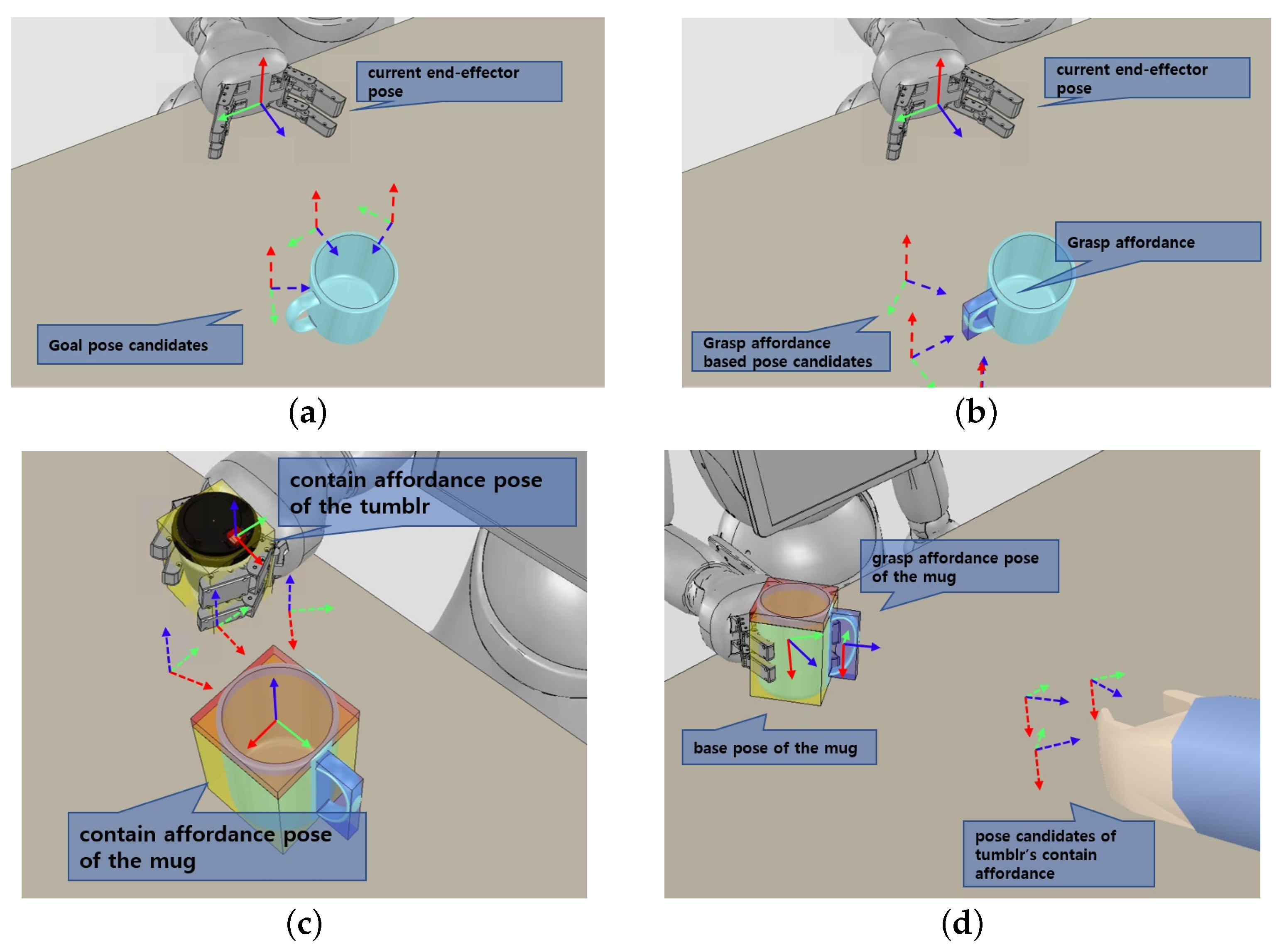

- ApproachToObject behavior: This motion moves the robot’s gripper into a pre-grasp pose at the graspable affordance of an object. Our approach motion planner uses URDF files describing the robot, 3D mesh files of the target object (e.g., in STEP format), and 6D pose data. To compute a collision-free path between the current and desired poses, the planner provides multiple candidate goal poses from which the end effector approaches the target object from the front, as shown in Figure 7a. If the object’s graspable affordance is provided as an optional input, goal poses are generated to approach that affordance and the path is planned accordingly, as shown in Figure 7b.

- PourObject behavior: Pouring transfers a beverage from one container into another and is needed for manipulation tasks such as serving a drink. Some studies plan pouring motions for stable pouring using force sensors or algorithms that determine object affordances [39,40]. We implement a planner that reflects object affordances but plans only the tilting motion of the container, without modeling the flow of the beverage. When the pouring behavior module receives the bounding boxes and affordances of the target objects, the planner creates several candidate goal poses near the receiving container, based on its contain affordance coordinates, and selects a goal pose that allows a collision-free pitching motion, as shown in Figure 7c.

- TransferObject behavior: The transferring motion carries an object that the robot is holding. The transferring behavior module receives the 3D bounding boxes of the objects, the 6D goal pose of the delivery position, and, optionally, the object affordances. It plans a motion path from the current pose of the end effector holding the object to the goal pose and returns the result. If object affordances are provided, the path is planned so that a specific part of the object faces the target point, as shown in Figure 7d.

- OpenCloseHand behavior: The robot gripper motion planner creates a motion trajectory to hold or release objects. The approach_arm action is executed earlier in the action sequence and brings the gripper into a pre-grasp position. As a result, the object can be held simply by closing the gripper joints. We therefore use predefined gripper joint trajectories to open and close the robot gripper.

- MoveBase behavior: The mobile motion planner calculates the motion that moves the robot’s mobile base to a target pose. This behavior module computes the path with an RRT* planner and takes into account the direction from which the robot should approach the goal pose.

- OpenDoor behavior: Doors are manipulation objects that service robots frequently encounter indoors, and several studies [12,41] compute door-opening motions for robots. For the experiments in Section 3.2, we implement a simple module that plans the motion for opening a container whose door allows objects to be placed inside. For containers whose doors open to the left or right, the door-opening path is computed from the robot’s current position with respect to the axis of the door hinge.
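The candidate-goal idea behind the ApproachToObject behavior can be illustrated with a minimal planar sketch. PourObject appears to pick its goal pose in a similar way. The sketch rests on several assumptions:

- The target is described by a position and a frontal yaw angle.
- Candidates are spread over a small fan of yaw offsets at a fixed standoff distance.
- A collision checker is supplied as a callable.

`approach_candidates`, `standoff`, `fan_deg`, and `is_collision_free` are hypothetical names. This is not the authors' planner, which works from URDF and mesh models.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical pose format (an assumption): (x, y, z, yaw) in metres and radians.
Pose = Tuple[float, float, float, float]


def approach_candidates(
    obj_xyz: Tuple[float, float, float],
    front_yaw: float,
    standoff: float = 0.10,
    fan_deg: Sequence[float] = (-30.0, -15.0, 0.0, 15.0, 30.0),
    affordance_xyz: Optional[Tuple[float, float, float]] = None,
    is_collision_free: Callable[[Pose], bool] = lambda pose: True,
) -> List[Pose]:
    """Illustrative sketch: pre-grasp poses in front of an object or its affordance."""
    # Aim at the graspable affordance when one is given, otherwise at the object itself.
    cx, cy, cz = affordance_xyz if affordance_xyz is not None else obj_xyz
    candidates = []
    for offset in fan_deg:
        a = front_yaw + math.radians(offset)
        # Place the gripper `standoff` metres away from the target along direction a.
        x = cx + standoff * math.cos(a)
        y = cy + standoff * math.sin(a)
        # The gripper faces back toward the target; wrap yaw to [-pi, pi].
        yaw = math.atan2(math.sin(a + math.pi), math.cos(a + math.pi))
        pose = (x, y, cz, yaw)
        if is_collision_free(pose):  # keep only poses the (assumed) checker accepts
            candidates.append(pose)
    return candidates
```

Consider an object at (1.0, 0.0, 0.8) whose front faces the robot (`front_yaw = math.pi`). The call returns five poses 10 cm in front of it. A stricter `is_collision_free` simply prunes the list, and a motion planner could then try the remaining goals in turn.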

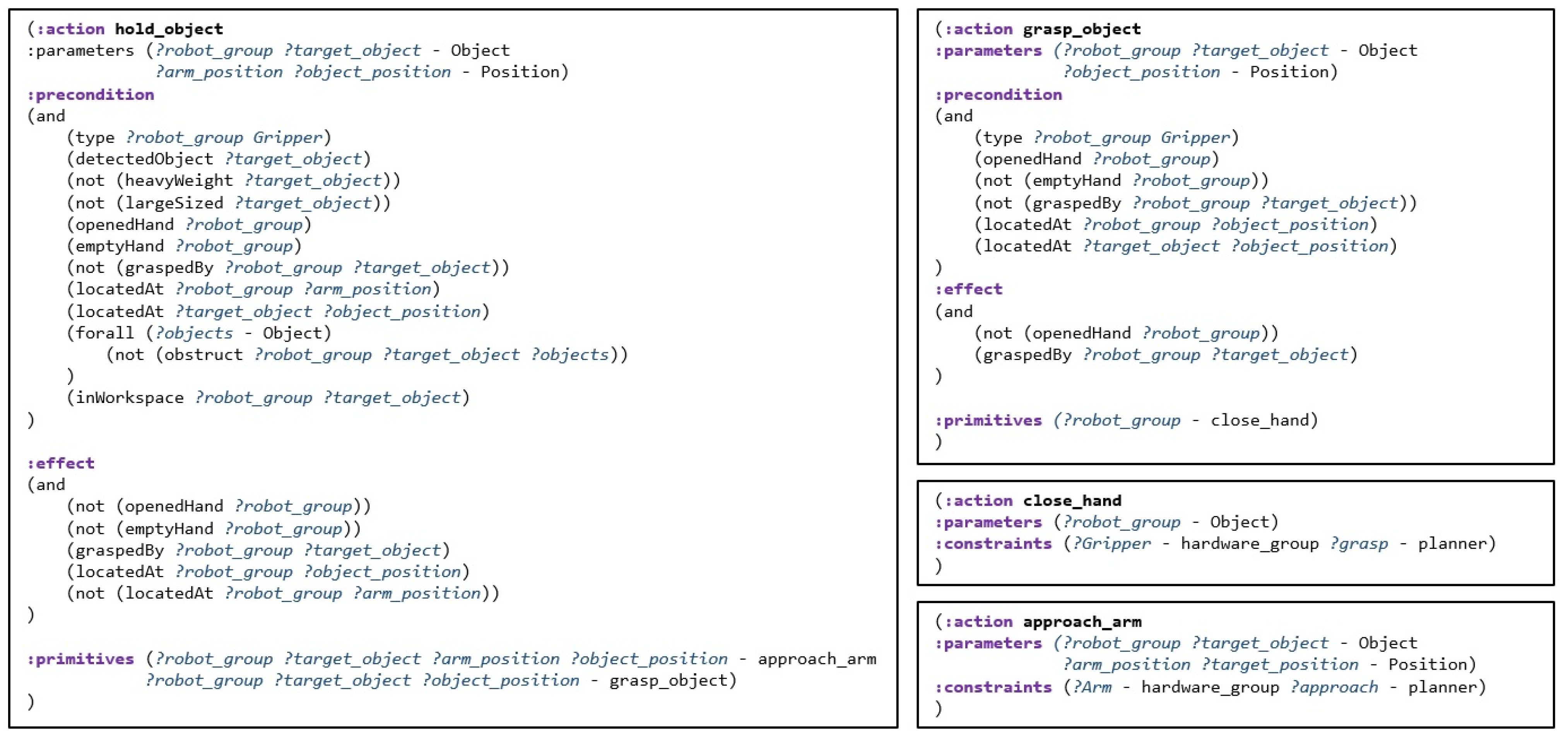
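The MoveBase behavior is described only as an RRT* planner that considers the approach direction. The sketch below is a textbook RRT* (best-parent selection plus rewiring) for a point-sized base among circular obstacles.

To respect the approach direction, it assumes the following:

- The tree is grown toward a pre-goal point placed `approach_dist` behind the goal along its heading.
- The path is finished with a straight segment along that heading.

The function name, parameters, and the pre-goal construction are illustrative assumptions, not the authors' implementation.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def rrt_star_base(
    start: Point,
    goal_pose: Tuple[float, float, float],             # goal (x, y, yaw)
    obstacles: Sequence[Tuple[float, float, float]],   # circles (cx, cy, r)
    bounds: Tuple[Tuple[float, float], Tuple[float, float]],
    step: float = 0.5,
    radius: float = 1.0,
    iters: int = 1000,
    approach_dist: float = 0.7,
    goal_bias: float = 0.1,
    seed: int = 0,
) -> Optional[List[Point]]:
    """Illustrative RRT* sketch; the pre-goal approach trick is an assumption."""
    rng = random.Random(seed)
    gx, gy, gyaw = goal_pose
    # Pre-goal point behind the goal, so the final segment follows the goal heading.
    pre = (gx - approach_dist * math.cos(gyaw), gy - approach_dist * math.sin(gyaw))

    def free(p: Point) -> bool:
        return all(math.dist(p, (cx, cy)) > r for cx, cy, r in obstacles)

    def edge_free(a: Point, b: Point, n: int = 20) -> bool:
        # Discretised collision check along the straight segment a-b.
        return all(free((a[0] + (b[0] - a[0]) * k / n, a[1] + (b[1] - a[1]) * k / n))
                   for k in range(n + 1))

    nodes: List[Point] = [start]
    parent: List[Optional[int]] = [None]

    def cost(i: int) -> float:
        # Path length from the root, recomputed so rewiring never leaves stale costs.
        c = 0.0
        while parent[i] is not None:
            c += math.dist(nodes[i], nodes[parent[i]])
            i = parent[i]
        return c

    for _ in range(iters):
        if rng.random() < goal_bias:
            sample = pre
        else:
            sample = (rng.uniform(*bounds[0]), rng.uniform(*bounds[1]))
        nearest = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        d = math.dist(nodes[nearest], sample)
        if d < 1e-9:
            continue
        s = min(step, d) / d
        new = (nodes[nearest][0] + (sample[0] - nodes[nearest][0]) * s,
               nodes[nearest][1] + (sample[1] - nodes[nearest][1]) * s)
        if not edge_free(nodes[nearest], new):
            continue
        # RRT* step 1: connect through the cheapest collision-free neighbour.
        near = [i for i in range(len(nodes))
                if math.dist(nodes[i], new) <= radius and edge_free(nodes[i], new)] or [nearest]
        best = min(near, key=lambda i: cost(i) + math.dist(nodes[i], new))
        nodes.append(new)
        parent.append(best)
        new_id = len(nodes) - 1
        new_cost = cost(new_id)
        # RRT* step 2: rewire neighbours whose path gets shorter through the new node.
        for i in near:
            if new_cost + math.dist(new, nodes[i]) < cost(i):
                parent[i] = new_id

    reach = [i for i in range(len(nodes))
             if math.dist(nodes[i], pre) <= step and edge_free(nodes[i], pre)]
    if not reach or not edge_free(pre, (gx, gy)):
        return None
    node: Optional[int] = min(reach, key=lambda j: cost(j) + math.dist(nodes[j], pre))
    path: List[Point] = []
    while node is not None:
        path.append(nodes[node])
        node = parent[node]
    path.reverse()
    if math.dist(path[-1], pre) > 1e-9:
        path.append(pre)
    path.append((gx, gy))  # last segment runs along the goal heading
    return path
```

Costs are recomputed by walking up the tree rather than cached. This keeps the sketch short and correct after rewiring, at the price of speed.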

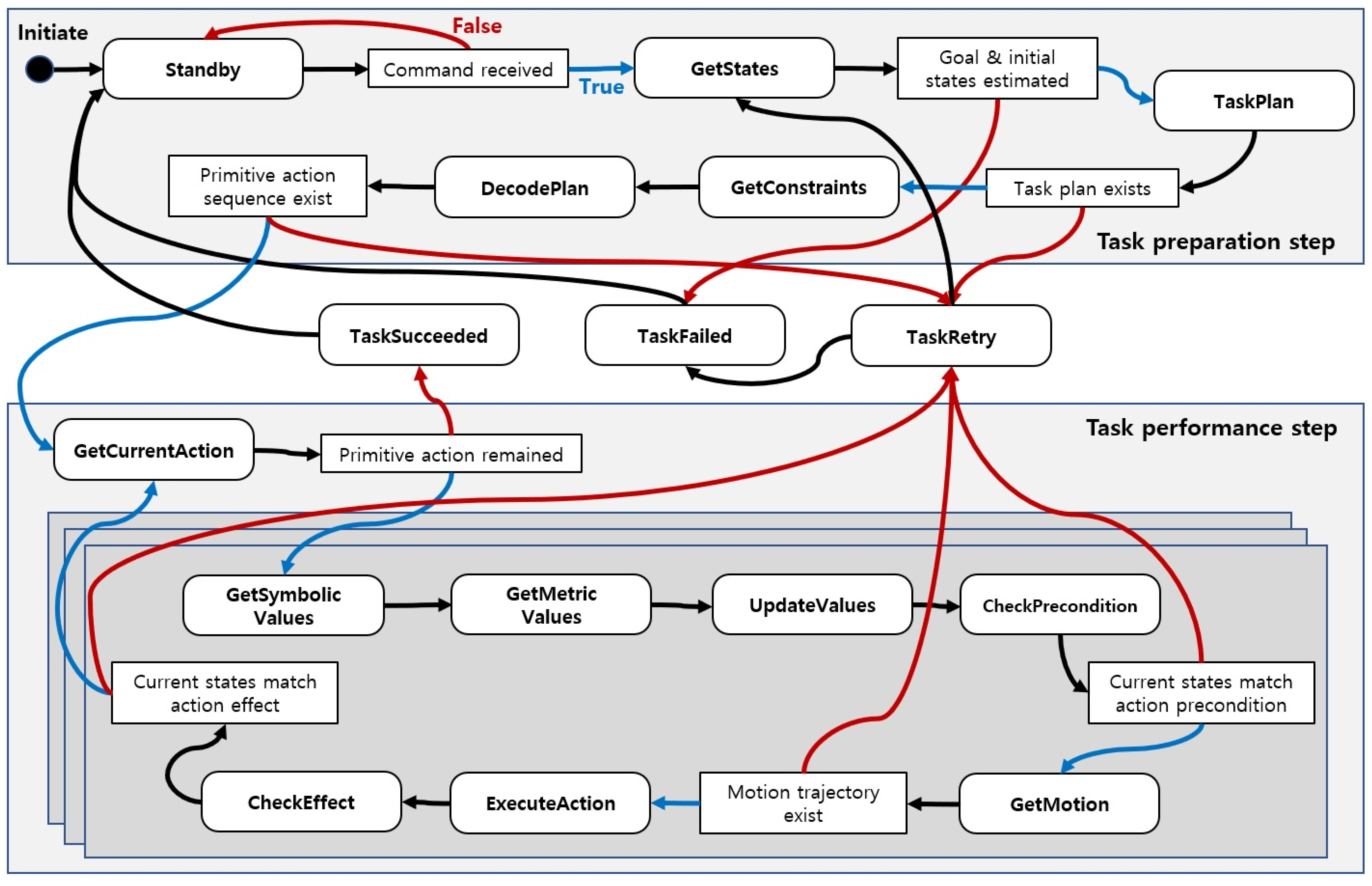
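The OpenDoor behavior computes the opening path with respect to the door's hinge axis. A minimal sketch of that geometry rotates the handle position about the hinge. It assumes three things:

- The hinge is vertical.
- The grasped handle stays at a constant height.
- The waypoints are evenly spaced.

`door_arc_waypoints`, `ccw`, and the waypoint format are hypothetical. Which rotation sign corresponds to a left- or right-opening door depends on the chosen frame.

```python
import math
from typing import List, Tuple


def door_arc_waypoints(
    hinge_xy: Tuple[float, float],
    handle_xyz: Tuple[float, float, float],
    open_angle_deg: float = 90.0,
    ccw: bool = True,
    n: int = 10,
) -> List[Tuple[float, float, float, float]]:
    """Illustrative sketch: (x, y, z, door_angle) waypoints for a grasped handle."""
    hx, hy = hinge_xy
    x0, y0, z0 = handle_xyz
    dx, dy = x0 - hx, y0 - hy  # handle position relative to the hinge axis
    # Assumption: doors opening to opposite sides rotate in opposite directions.
    total = math.radians(open_angle_deg) * (1.0 if ccw else -1.0)
    waypoints = []
    for k in range(n + 1):
        a = total * k / n
        # Rotate the handle offset by angle a about the vertical hinge axis.
        x = hx + dx * math.cos(a) - dy * math.sin(a)
        y = hy + dx * math.sin(a) + dy * math.cos(a)
        waypoints.append((x, y, z0, a))
    return waypoints
```

The door angle in the last field could be added to the gripper's initial yaw so that the grasp stays fixed relative to the door while the handle sweeps the arc. The waypoints would still need collision and reachability checks before execution.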

2.2.4. Task Management

Task States and Transition Rules

- Standby: In the Standby state, the system waits, before or after performing a task, until the next user command is received; a ROS service receives a predefined command script as input.

- GetStates: When the user gives the robot a command, the system manager forwards it to the knowledge manager. The knowledge manager then infers the goal states required to execute the command, as well as the current states of the robot and the task environment, and returns its response to the system manager. If inference fails, the system concludes that the intent of the command was not understood, and the task state returns to Standby to obtain a new command.

- TaskPlan: Once the goal-state and current-state predicates are obtained, the system manager forwards them to the task manager and requests a task plan. If an action sequence is generated successfully, the task state moves to GetConstraints. If no sequence can be planned, the state passes through TaskRetry back to GetStates, where the current state is inferred again and planning is retried. If retries keep failing, the system concludes that the task cannot be performed in the current situation, and the task state returns to Standby to receive a new command.

- GetConstraints: When task planning is complete, the system manager receives a compound action sequence. In the GetConstraints state, the system manager asks the knowledge manager to assign social constraints to each compound action.

- DecodePlan: In this state, the compound action sequence produced by the task planner is decomposed into a series of primitive actions. The system manager requests action models from the action library to determine which primitive actions make up each compound action. The social constraints assigned in the previous state are inherited as parameters of the resulting primitive actions.

- GetCurrentAction: After the primitive action sequence is decoded, the system takes the primitive actions from the sequence one at a time. When no actions remain after execution, the task is considered successful; the state transitions to TaskSucceeded and then to Standby to await the next command.

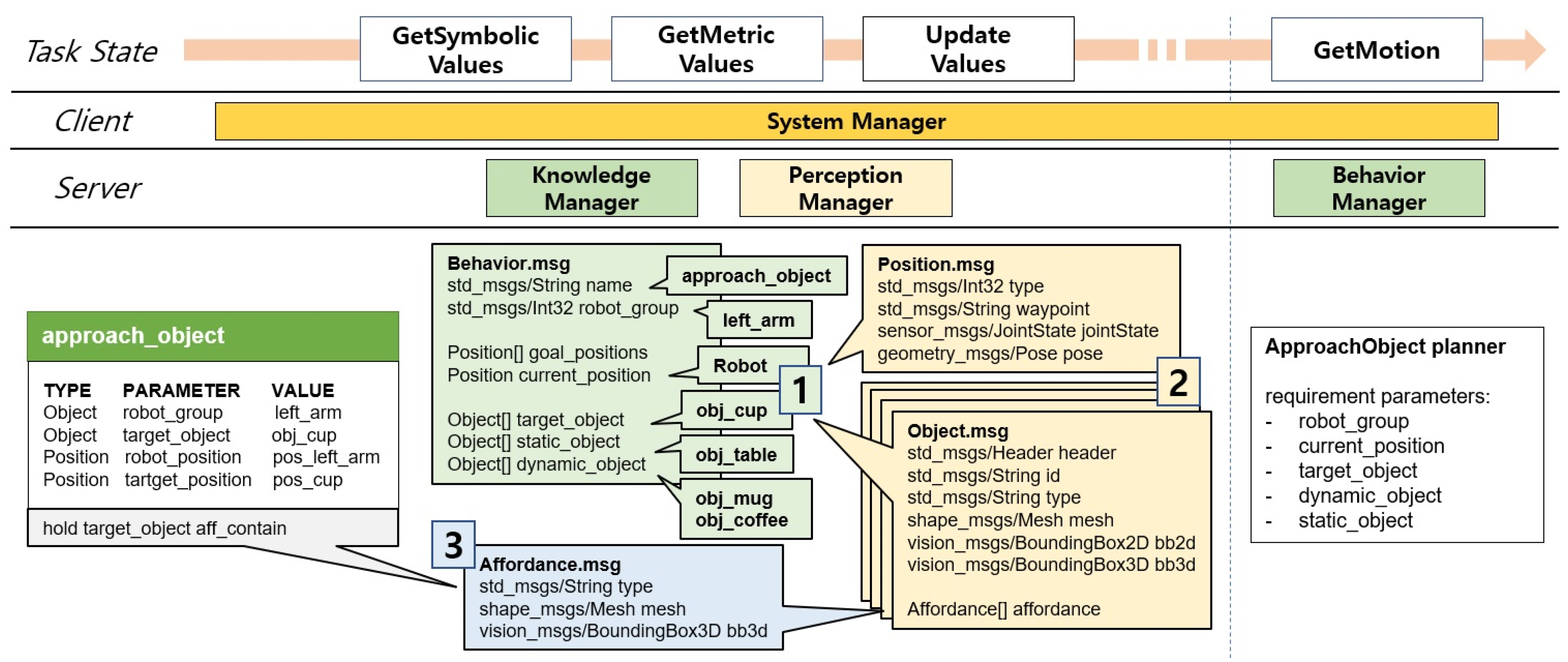

- GetSymbolicValues: Once the action to be performed is determined, the system manager retrieves the model of this action from the action library. From this model, the system manager extracts the symbolic parameter values of the action and transitions to the GetMetricValues state to ground them.

- GetMetricValues: In the GetMetricValues state, the primitive action’s symbolic parameter values are converted into geometric values. The system manager matches each symbolic value with the corresponding value recognized by the perception manager and updates it.

- UpdateValues: Once the metric values of the primitive action are assigned, they are updated to reflect the social constraints obtained in the GetConstraints state. The social constraints are inherited from the compound action; see Section 2.2.4 for the detailed process.

- CheckPrecondition: Once all action parameter values are grounded, and before motion planning begins, the system compares the action’s preconditions with the current states. If they do not match, the environment is assumed to have changed, and the task state moves to TaskRetry.

- GetMotion: In this state, the system manager sends the metric values to the behavior manager to generate the motion trajectory. If no feasible motion exists, the task state transitions to TaskRetry, and the task is retried from the GetStates state.

- ExecuteAction: The system manager delivers the motion trajectory to the robot hardware controller, and the robot follows it. Once the robot has completed the motion, the task state transitions to CheckEffect.

- CheckEffect: As in the CheckPrecondition state, the system compares the effects of the action with the current states. If they are consistent, the system manager judges the current action successful, and the task state returns to GetCurrentAction to continue with the next action in the sequence; otherwise, it moves to TaskRetry.

- TaskRetry: Tasks can fail for many reasons. For example, a momentary failure to recognize an object can prevent task planning, or the robot may fail to grasp an object because its position or orientation was recognized incorrectly. Such failures are detected in the GetMotion and CheckEffect states. The system then enters TaskRetry, where the task is re-planned using knowledge inference or the objects are recognized again. The operation fails if the number of failures exceeds a predetermined limit.
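The transition rules above can be summarised as a small table-driven state machine. The sketch makes several assumptions:

- Each state produces a boolean outcome. For GetCurrentAction, `ok` means that an action is still left in the sequence.
- A retry counter is incremented on every entry into TaskRetry.
- The retry limit `max_retries` is hypothetical.

It encodes only the transitions stated in the text and is not the authors' system manager.

```python
from typing import Tuple

# Illustrative sketch (assumed encoding): next state when a state's check succeeds,
# or when the state has a single exit.
ON_SUCCESS = {
    "Standby": "GetStates",                   # a command was received
    "GetStates": "TaskPlan",
    "TaskPlan": "GetConstraints",
    "GetConstraints": "DecodePlan",
    "DecodePlan": "GetCurrentAction",
    "GetCurrentAction": "GetSymbolicValues",  # an action remains in the sequence
    "TaskSucceeded": "Standby",
    "GetSymbolicValues": "GetMetricValues",
    "GetMetricValues": "UpdateValues",
    "UpdateValues": "CheckPrecondition",
    "CheckPrecondition": "GetMotion",
    "GetMotion": "ExecuteAction",
    "ExecuteAction": "CheckEffect",
    "CheckEffect": "GetCurrentAction",
}

# Next state on failure, only for states whose failure exit the text describes.
ON_FAILURE = {
    "Standby": "Standby",                 # no command yet: keep waiting
    "GetStates": "Standby",               # user intent could not be inferred
    "TaskPlan": "TaskRetry",
    "GetCurrentAction": "TaskSucceeded",  # sequence exhausted
    "CheckPrecondition": "TaskRetry",
    "GetMotion": "TaskRetry",
    "CheckEffect": "TaskRetry",
}


def step(state: str, ok: bool = True, retries: int = 0, max_retries: int = 3) -> Tuple[str, int]:
    """Return (next_state, retry_count) for one transition."""
    if state == "TaskRetry":
        # Give up and wait for a new command once the retry budget is exceeded.
        nxt = "Standby" if retries > max_retries else "GetStates"
    elif not ok and state in ON_FAILURE:
        nxt = ON_FAILURE[state]
    else:
        nxt = ON_SUCCESS[state]
    if nxt == "TaskRetry":
        retries += 1
    elif nxt == "Standby":
        retries = 0
    return nxt, retries
```

A driver loop would call `step` repeatedly and feed in the outcome of each module call. As in the text, failed TaskPlan, CheckPrecondition, GetMotion, and CheckEffect states all funnel through TaskRetry back to GetStates.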

Data Flow in Task States

3. System Evaluation

3.1. System Environments

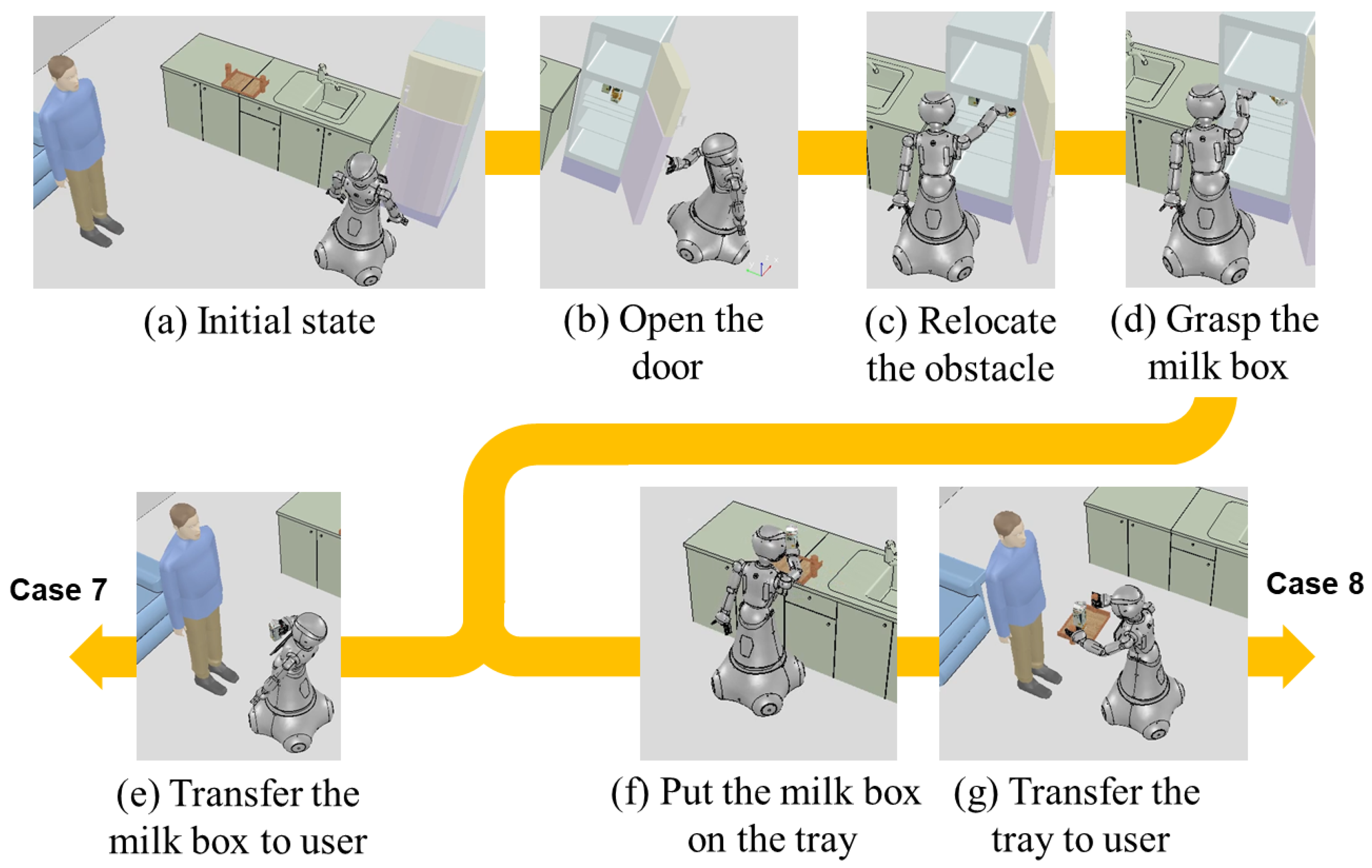

3.2. Task Simulation Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Action Library

| # | Action | Description | Primitives |
|---|---|---|---|
| 1 | approach_arm | Move the robot end-effector to a pre-grasp pose with a single arm | - |
| 2 | approach_base | Move robot base to approach objects | - |
| 3 | close_container | Close container object | approach_base approach_arm grasp_object close_door open_hand |
| 4 | close_door | Close door | - |
| 5 | close_hand | Close robot gripper joints | - |
| 6 | deliver_object | Deliver a grasped object to someone | approach_base transfer_object |
| 7 | grasp_object | Grasp an object by closing the gripper | close_hand |
| 8 | hold_object | Hold an object with a single arm | approach_base approach_arm grasp_object |
| 9 | move_arm | Move robot end-effector from A to B | - |
| 10 | move_base | Move robot base from A to B | - |
| 11 | open_container | Open container object | approach_base approach_arm grasp_object open_door open_hand approach_base |
| 12 | open_door | Open door | - |
| 13 | open_hand | Open robot gripper joints | - |
| 14 | pour_object | Pour object into or onto another object | - |
| 15 | putin_object | Put object into container object | - |
| 16 | putup_object | Put object on top of another object | - |
| 17 | relocate_obstacle | Relocate object from A to B | - |
| 18 | stackup_object | Stack grasped object on top of another | putup_object open_hand |
| 19 | takeout_object | Take out object from container object | approach_base hold_object |
| 20 | transfer_object | Hand over a grasped object to someone | - |
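The Primitives column can be read as a decomposition table for the DecodePlan state. The sketch below makes three assumptions:

- Entries marked "-" are executable primitives.
- Nested compound actions (for example, grasp_object inside hold_object) are expanded recursively.
- Following the DecodePlan description, the social constraints attached to a compound action are copied to every primitive it yields.

`ACTION_LIBRARY` and `decode` are illustrative names, not the authors' action library interface.

```python
from typing import Dict, List, Optional, Tuple

# Primitives column of Appendix A; an empty list marks an action with no listed primitives.
ACTION_LIBRARY: Dict[str, List[str]] = {
    "approach_arm": [], "approach_base": [],
    "close_container": ["approach_base", "approach_arm", "grasp_object", "close_door", "open_hand"],
    "close_door": [], "close_hand": [],
    "deliver_object": ["approach_base", "transfer_object"],
    "grasp_object": ["close_hand"],
    "hold_object": ["approach_base", "approach_arm", "grasp_object"],
    "move_arm": [], "move_base": [],
    "open_container": ["approach_base", "approach_arm", "grasp_object",
                       "open_door", "open_hand", "approach_base"],
    "open_door": [], "open_hand": [], "pour_object": [],
    "putin_object": [], "putup_object": [], "relocate_obstacle": [],
    "stackup_object": ["putup_object", "open_hand"],
    "takeout_object": ["approach_base", "hold_object"],
    "transfer_object": [],
}


def decode(action: str, constraints: Optional[Dict[str, str]] = None) -> List[Tuple[str, Dict[str, str]]]:
    """Illustrative sketch: expand an action into (primitive, inherited constraints) pairs."""
    inherited = dict(constraints or {})  # copy so sibling primitives never share one dict
    children = ACTION_LIBRARY[action]
    if not children:
        return [(action, inherited)]
    plan: List[Tuple[str, Dict[str, str]]] = []
    for child in children:
        # Assumption: nested compound actions are expanded recursively.
        plan.extend(decode(child, inherited))
    return plan
```

For example, `decode("takeout_object")` expands hold_object and then grasp_object, yielding approach_base, approach_base, approach_arm, and close_hand.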

References

1. Cakmak, M.; Takayama, L. Towards a comprehensive chore list for domestic robots. In Proceedings of the 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Tokyo, Japan, 3–6 March 2013; pp. 93–94.

2. World Health Organization. World Health Statistics 2021; WHO Press: Geneva, Switzerland, 2021.

3. Gonzalez-Aguirre, J.A.; Osorio-Oliveros, R.; Rodríguez-Hernández, K.L.; Lizárraga-Iturralde, J.; Morales Menendez, R.; Ramírez-Mendoza, R.A.; Ramírez-Moreno, M.A.; Lozoya-Santos, J.d.J. Service robots: Trends and technology. Appl. Sci. 2021, 11, 10702.

4. Sakagami, Y.; Watanabe, R.; Aoyama, C.; Matsunaga, S.; Higaki, N.; Fujimura, K. The intelligent ASIMO: System overview and integration. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; Volume 3, pp. 2478–2483.

5. Fujita, M. On activating human communications with pet-type robot AIBO. Proc. IEEE 2004, 92, 1804–1813.

6. Zachiotis, G.A.; Andrikopoulos, G.; Gomez, R.; Nakamura, K.; Nikolakopoulos, G. A survey on the application trends of home service robotics. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; pp. 1999–2006.

7. Pandey, A.K.; Gelin, R. A mass-produced sociable humanoid robot: Pepper: The first machine of its kind. IEEE Robot. Autom. Mag. 2018, 25, 40–48.

8. Ribeiro, T.; Gonçalves, F.; Garcia, I.S.; Lopes, G.; Ribeiro, A.F. CHARMIE: A collaborative healthcare and home service and assistant robot for elderly care. Appl. Sci. 2021, 11, 7248.

9. Yi, J.s.; Luong, T.A.; Chae, H.; Ahn, M.S.; Noh, D.; Tran, H.N.; Doh, M.; Auh, E.; Pico, N.; Yumbla, F.; et al. An Online Task-Planning Framework Using Mixed Integer Programming for Multiple Cooking Tasks Using a Dual-Arm Robot. Appl. Sci. 2022, 12, 4018.

10. Bai, X.; Yan, W.; Cao, M.; Xue, D. Distributed multi-vehicle task assignment in a time-invariant drift field with obstacles. IET Control Theory Appl. 2019, 13, 2886–2893.

11. Bolu, A.; Korçak, Ö. Adaptive task planning for multi-robot smart warehouse. IEEE Access 2021, 9, 27346–27358.

12. Urakami, Y.; Hodgkinson, A.; Carlin, C.; Leu, R.; Rigazio, L.; Abbeel, P. DoorGym: A scalable door opening environment and baseline agent. arXiv 2019, arXiv:1908.01887.

13. Tang, Z.; Xu, L.; Wang, Y.; Kang, Z.; Xie, H. Collision-free motion planning of a six-link manipulator used in a citrus picking robot. Appl. Sci. 2021, 11, 11336.

14. Zakharov, S.; Shugurov, I.; Ilic, S. DPOD: 6D pose object detector and refiner. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1941–1950.

15. Sajjan, S.; Moore, M.; Pan, M.; Nagaraja, G.; Lee, J.; Zeng, A.; Song, S. Clear grasp: 3D shape estimation of transparent objects for manipulation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3634–3642.

16. Tsitos, A.C.; Dagioglou, M.; Giannakopoulos, T. Real-time feasibility of a human intention method evaluated through a competitive human-robot reaching game. In Proceedings of the 2022 ACM/IEEE International Conference on Human-Robot Interaction, Sapporo Hokkaido, Japan, 7–10 March 2022; pp. 1080–1084.

17. Singh, R.; Miller, T.; Newn, J.; Sonenberg, L.; Velloso, E.; Vetere, F. Combining planning with gaze for online human intention recognition. In Proceedings of the 17th International Conference on Autonomous Agents and Multiagent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 488–496.

18. Hanheide, M.; Göbelbecker, M.; Horn, G.S.; Pronobis, A.; Sjöö, K.; Aydemir, A.; Jensfelt, P.; Gretton, C.; Dearden, R.; Janicek, M.; et al. Robot task planning and explanation in open and uncertain worlds. Artif. Intell. 2017, 247, 119–150.

19. Liu, H.; Cui, L.; Liu, J.; Zhang, Y. Natural language inference in context-investigating contextual reasoning over long texts. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 13388–13396.

20. Garrett, C.R.; Chitnis, R.; Holladay, R.; Kim, B.; Silver, T.; Kaelbling, L.P.; Lozano-Pérez, T. Integrated task and motion planning. Annu. Rev. Control. Robot. Auton. Syst. 2021, 4, 265–293.

21. Guo, H.; Wu, F.; Qin, Y.; Li, R.; Li, K.; Li, K. Recent trends in task and motion planning for robotics: A Survey. ACM Comput. Surv. 2023, 55, 289.

22. Mao, X.; Huang, H.; Wang, S. Software Engineering for Autonomous Robot: Challenges, Progresses and Opportunities. In Proceedings of the 2020 27th Asia-Pacific Software Engineering Conference (APSEC), Singapore, 1–4 December 2020; pp. 100–108.

23. Houliston, T.; Fountain, J.; Lin, Y.; Mendes, A.; Metcalfe, M.; Walker, J.; Chalup, S.K. NUClear: A loosely coupled software architecture for humanoid robot systems. Front. Robot. AI 2016, 3, 20.

24. García, S.; Menghi, C.; Pelliccione, P.; Berger, T.; Wohlrab, R. An architecture for decentralized, collaborative, and autonomous robots. In Proceedings of the 2018 IEEE International Conference on Software Architecture (ICSA), Seattle, WA, USA, 30 April–4 May 2018; pp. 75–7509.

25. Jiang, Y.; Walker, N.; Kim, M.; Brissonneau, N.; Brown, D.S.; Hart, J.W.; Niekum, S.; Sentis, L.; Stone, P. LAAIR: A layered architecture for autonomous interactive robots. arXiv 2018, arXiv:1811.03563.

26. Keleştemur, T.; Yokoyama, N.; Truong, J.; Allaban, A.A.; Padir, T. System architecture for autonomous mobile manipulation of everyday objects in domestic environments. In Proceedings of the 12th ACM International Conference on Pervasive Technologies Related to Assistive Environments, Rhodes, Greece, 5–7 June 2019; pp. 264–269.

27. Aleotti, J.; Micelli, V.; Caselli, S. An affordance sensitive system for robot to human object handover. Int. J. Soc. Robot. 2014, 6, 653–666.

28. Do, T.T.; Nguyen, A.; Reid, I. AffordanceNet: An end-to-end deep learning approach for object affordance detection. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 5882–5889.

29. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

30. Beetz, M.; Beßler, D.; Haidu, A.; Pomarlan, M.; Bozcuoğlu, A.K.; Bartels, G. KnowRob 2.0—A 2nd generation knowledge processing framework for cognition-enabled robotic agents. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 512–519.

31. Chitta, S.; Sucan, I.; Cousins, S. MoveIt! [ROS topics]. IEEE Robot. Autom. Mag. 2012, 19, 18–19.

32. Miller, A.T.; Allen, P.K. GraspIt! A versatile simulator for robotic grasping. IEEE Robot. Autom. Mag. 2004, 11, 110–122.

33. Sucan, I.A.; Moll, M.; Kavraki, L.E. The open motion planning library. IEEE Robot. Autom. Mag. 2012, 19, 72–82.

34. Helmert, M. The fast downward planning system. J. Artif. Intell. Res. 2006, 26, 191–246.

35. Jeon, J.; Jung, H.; Yumbla, F.; Luong, T.A.; Moon, H. Primitive Action Based Combined Task and Motion Planning for the Service Robot. Front. Robot. AI 2022, 9, 713470.

36. Jeon, J.; Jung, H.; Luong, T.; Yumbla, F.; Moon, H. Combined task and motion planning system for the service robot using hierarchical action decomposition. Intell. Serv. Robot. 2022, 15, 487–501.

37. Bruno, B.; Chong, N.Y.; Kamide, H.; Kanoria, S.; Lee, J.; Lim, Y.; Pandey, A.K.; Papadopoulos, C.; Papadopoulos, I.; Pecora, F.; et al. Paving the way for culturally competent robots: A position paper. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28 August–1 September 2017; pp. 553–560.

38. Fox, M.; Long, D. PDDL2.1: An extension to PDDL for expressing temporal planning domains. J. Artif. Intell. Res. 2003, 20, 61–124.

39. Pan, Z.; Park, C.; Manocha, D. Robot motion planning for pouring liquids. In Proceedings of the International Conference on Automated Planning and Scheduling, London, UK, 12–17 June 2016; Volume 26, pp. 518–526.

40. Tsuchiya, Y.; Kiyokawa, T.; Ricardez, G.A.G.; Takamatsu, J.; Ogasawara, T. Pouring from Deformable Containers Using Dual-Arm Manipulation and Tactile Sensing. In Proceedings of the IEEE International Conference on Robotic Computing (IRC), Naples, Italy, 25–27 February 2019; pp. 357–362.

41. Su, H.R.; Chen, K.Y. Design and implementation of a mobile robot with autonomous door opening ability. Int. J. Fuzzy Syst. 2019, 21, 333–342.

| Case | Command | Goal Predicates | Social Constraints |
|---|---|---|---|
| 1 | Give me a cup | graspedBy obj_human, obj_cup | - |
| 2 | Give me a cup | graspedBy obj_human, obj_cup | hold_object target_object aff_wrap; transfer_object target_object aff_handle |
| 3 | Give me a cup | graspedBy obj_human, obj_cup | transfer_object robot_group dual_arm |
| 4 | Give me a cup | graspedBy obj_human, obj_cup | transfer_object goal_position person_hand |
| 5 | Give me a coffee | inContGeneric obj_cup, obj_coffee | pour_object target_object obj_mug |
| 6 | Give me a juice | inContGeneric obj_cup, obj_juice | pour_object target_object obj_glass |
| 7 | Give me a milk | graspedBy obj_human, obj_milk | - |
| 8 | Give me a milk | graspedBy obj_human, obj_milk; belowOf obj_tray, obj_milk | transfer_object target_object, obj_tray |

| Measurement | Case 1 | Case 2 | Case 3 | Case 4 | Case 5 | Case 6 | Case 7 | Case 8 |
|---|---|---|---|---|---|---|---|---|
| Success rate | 100% | 100% | 86% | 100% | 92% | 98% | 98% | 92% |
| Avg. execution time: knowledge reasoning | 0.08 s | 0.08 s | 0.05 s | 0.37 s | 1.52 s | 1.81 s | 1.62 s | 1.38 s |
| Avg. execution time: task planning | 0.32 s | 0.43 s | 0.28 s | 0.25 s | 0.47 s | 0.31 s | 0.49 s | 0.51 s |
| Avg. execution time: motion planning | 3.41 s | 4.74 s | 9.06 s | 5.54 s | 8.42 s | 6.38 s | 7.47 s | 8.62 s |
| Avg. execution time: robot movement | 34.42 s | 31.38 s | 57.45 s | 32.6 s | 24.19 s | 13.78 s | 245.12 s | 320.89 s |
| Avg. execution time: total operation | 38.23 s | 36.63 s | 66.84 s | 38.76 s | 34.60 s | 22.28 s | 254.7 s | 331.4 s |
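In every column, the total operation time equals the sum of the four stage times. For Case 1, for example, 0.08 + 0.32 + 3.41 + 34.42 = 38.23 s. The short check below recomputes the totals from the table. It also computes the share of each total spent on robot movement, which ranges from about 62% (Case 6) to about 97% (Case 8). `TIMES` and `summarize` are illustrative names; the numbers are copied from the table.

```python
# Stage times in seconds, copied from the table:
# (knowledge reasoning, task planning, motion planning, robot movement, reported total)
TIMES = {
    1: (0.08, 0.32, 3.41, 34.42, 38.23),
    2: (0.08, 0.43, 4.74, 31.38, 36.63),
    3: (0.05, 0.28, 9.06, 57.45, 66.84),
    4: (0.37, 0.25, 5.54, 32.60, 38.76),
    5: (1.52, 0.47, 8.42, 24.19, 34.60),
    6: (1.81, 0.31, 6.38, 13.78, 22.28),
    7: (1.62, 0.49, 7.47, 245.12, 254.70),
    8: (1.38, 0.51, 8.62, 320.89, 331.40),
}


def summarize(times):
    """Return {case: (recomputed total, robot-movement share)} after checking totals."""
    out = {}
    for case, (*stages, reported) in times.items():
        total = sum(stages)
        # The reported total should match the stage sum up to the table's rounding.
        if abs(total - reported) >= 0.01:
            raise ValueError(f"Case {case}: stages sum to {total:.2f} s, table says {reported} s")
        out[case] = (round(total, 2), stages[3] / total)
    return out


for case, (total, share) in summarize(TIMES).items():
    print(f"Case {case}: {total:.2f} s total, {share:.0%} robot movement")
```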

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jeon, J.; Jung, H.-r.; Pico, N.; Luong, T.; Moon, H. Task-Motion Planning System for Socially Viable Service Robots Based on Object Manipulation. Biomimetics 2024, 9, 436. https://doi.org/10.3390/biomimetics9070436