A Fish-like Binocular Vision System for Underwater Perception of Robotic Fish

Abstract

1. Introduction

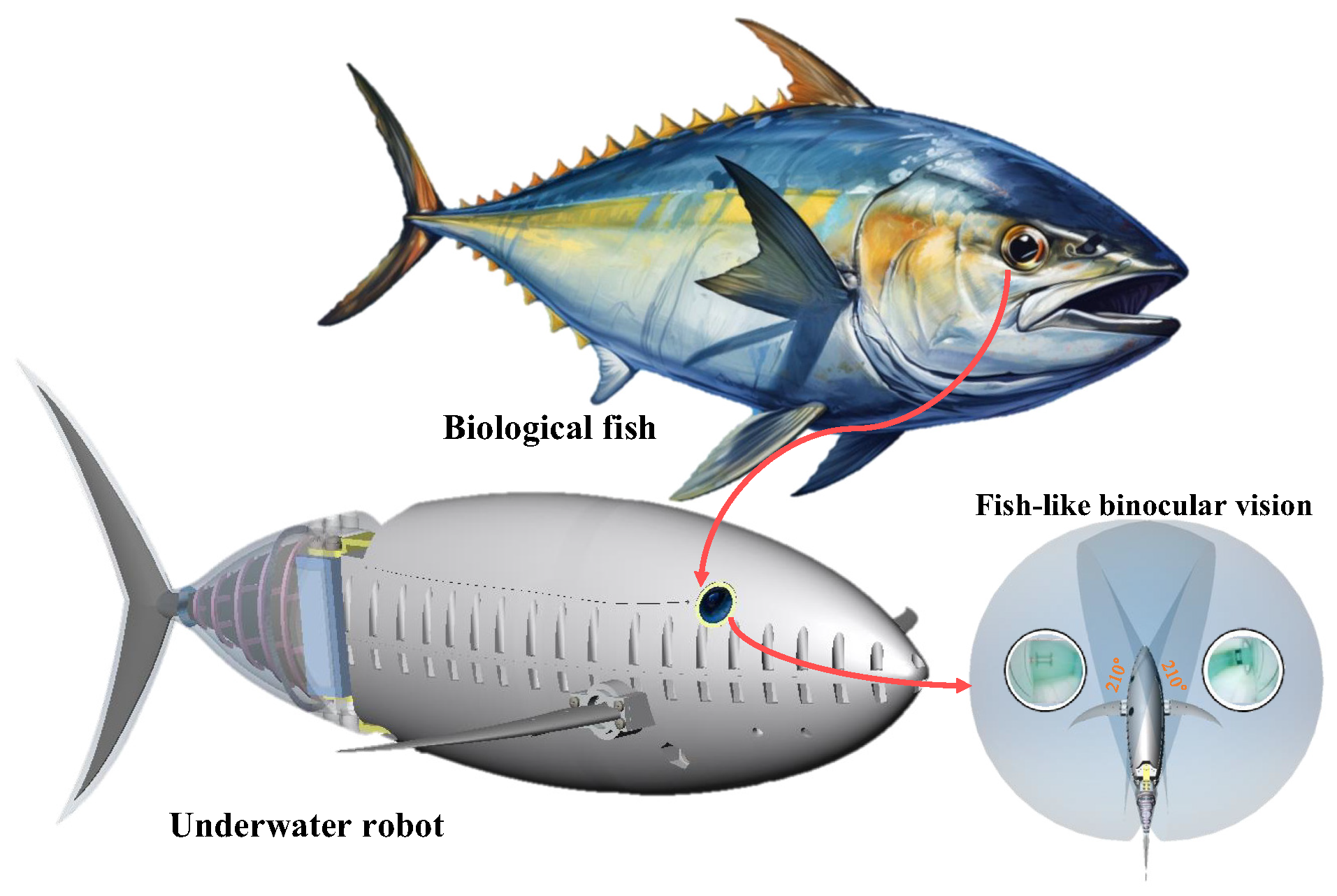

2. Fish-like Binocular Vision System

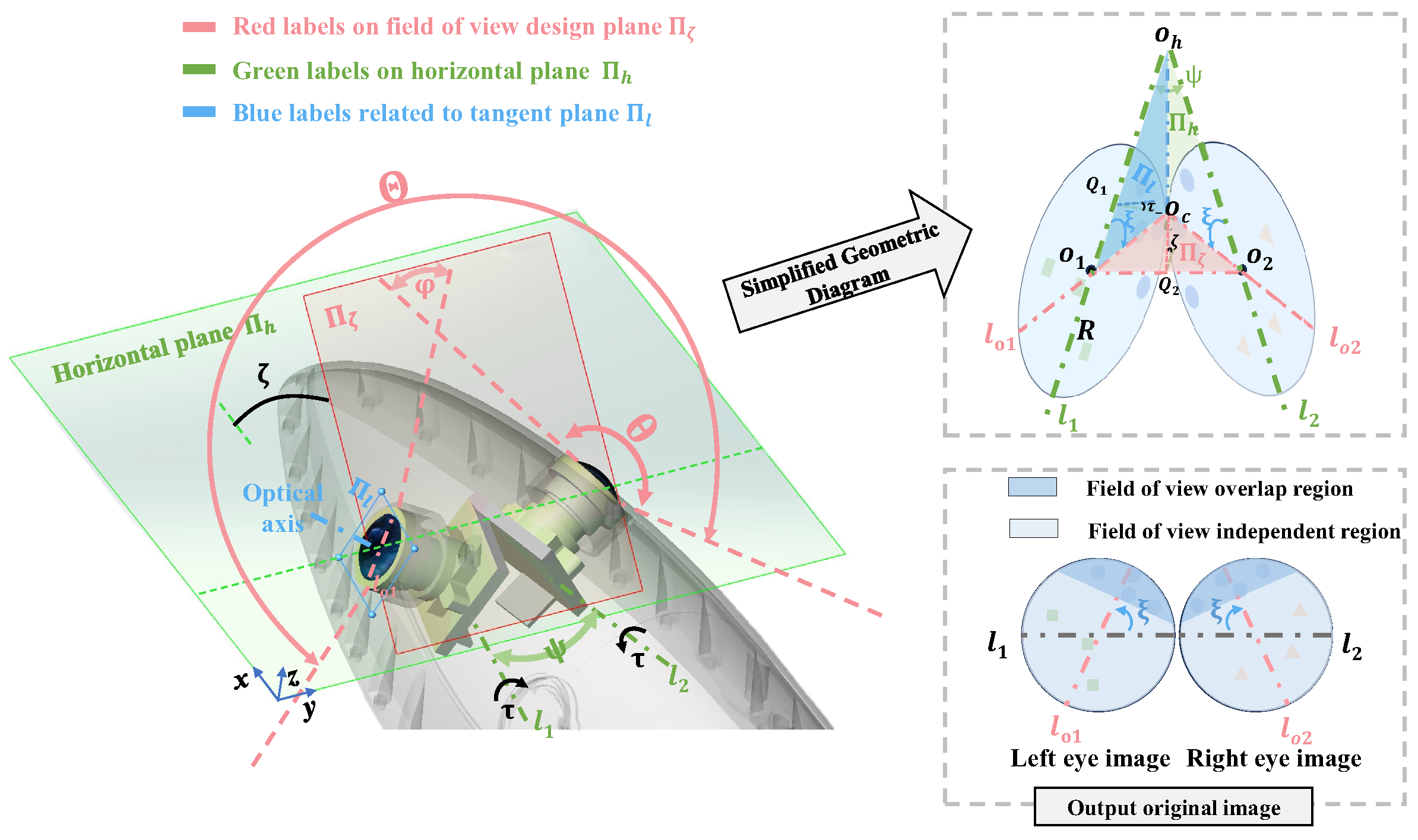

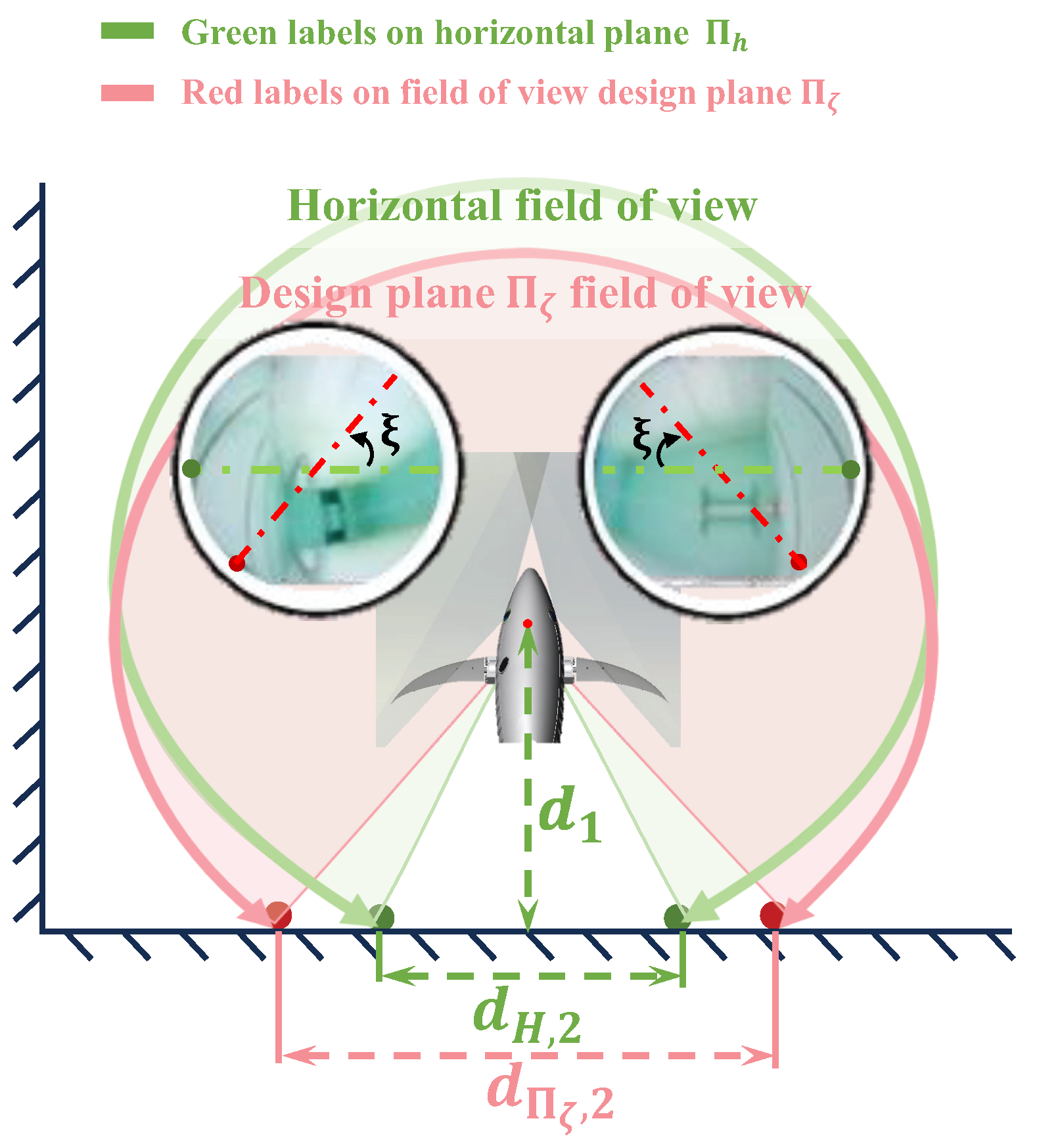

2.1. Field of View Design

2.2. Deployment on Robotic Fish

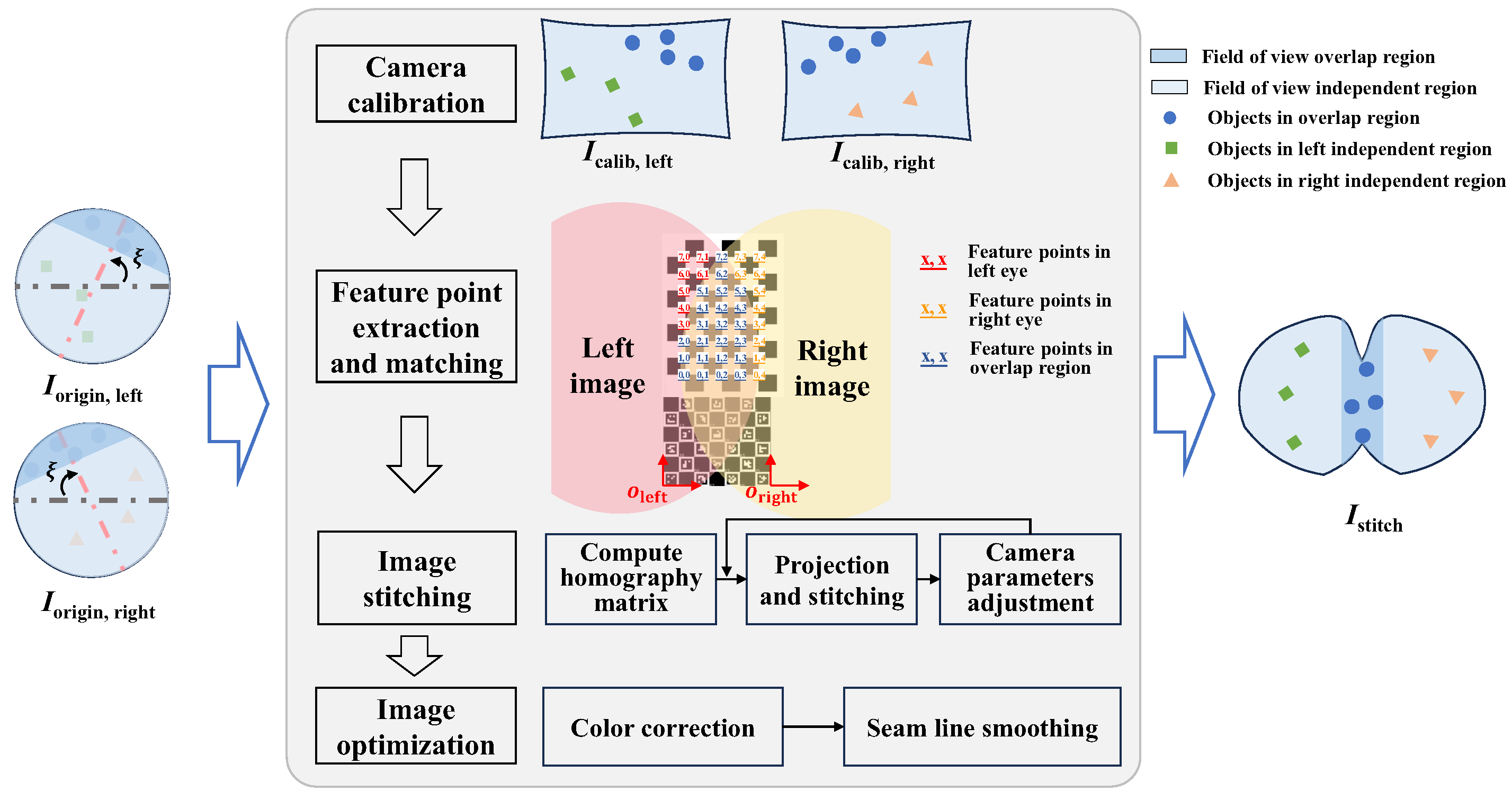

3. Binocular Visual Field Stitching for Fish-like Vision System

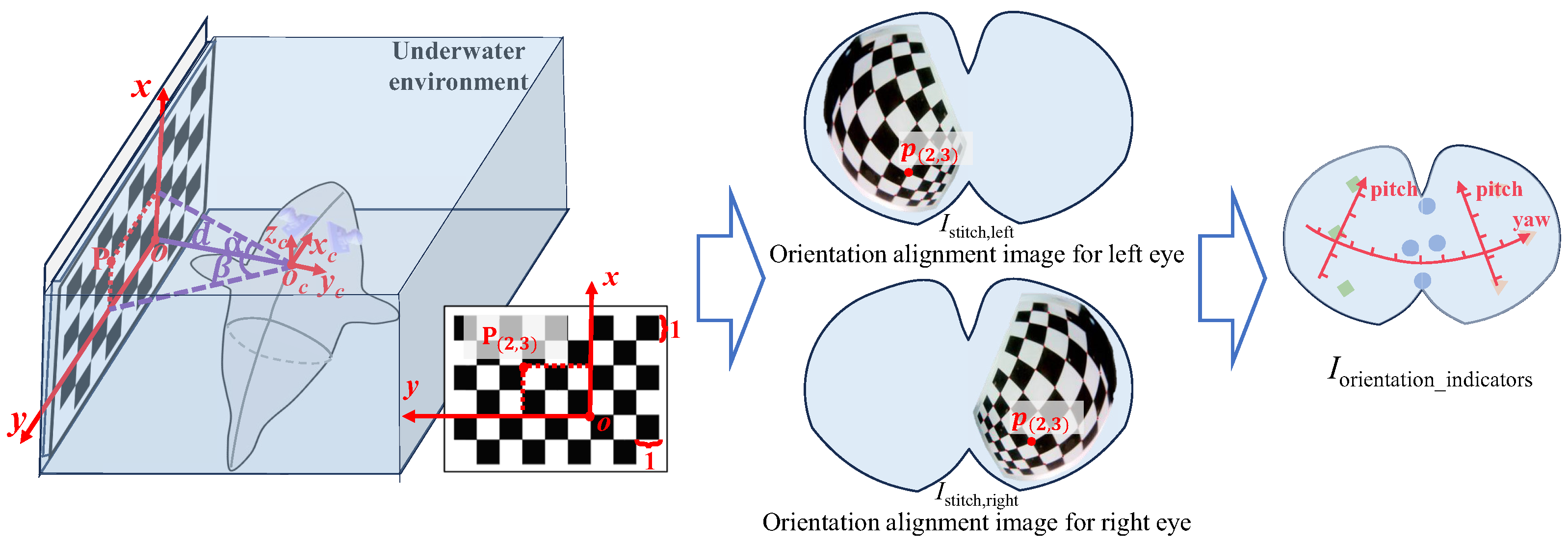

4. Orientation Alignment for Fish-like Vision System

5. Experiments and Results

5.1. Field of View Test

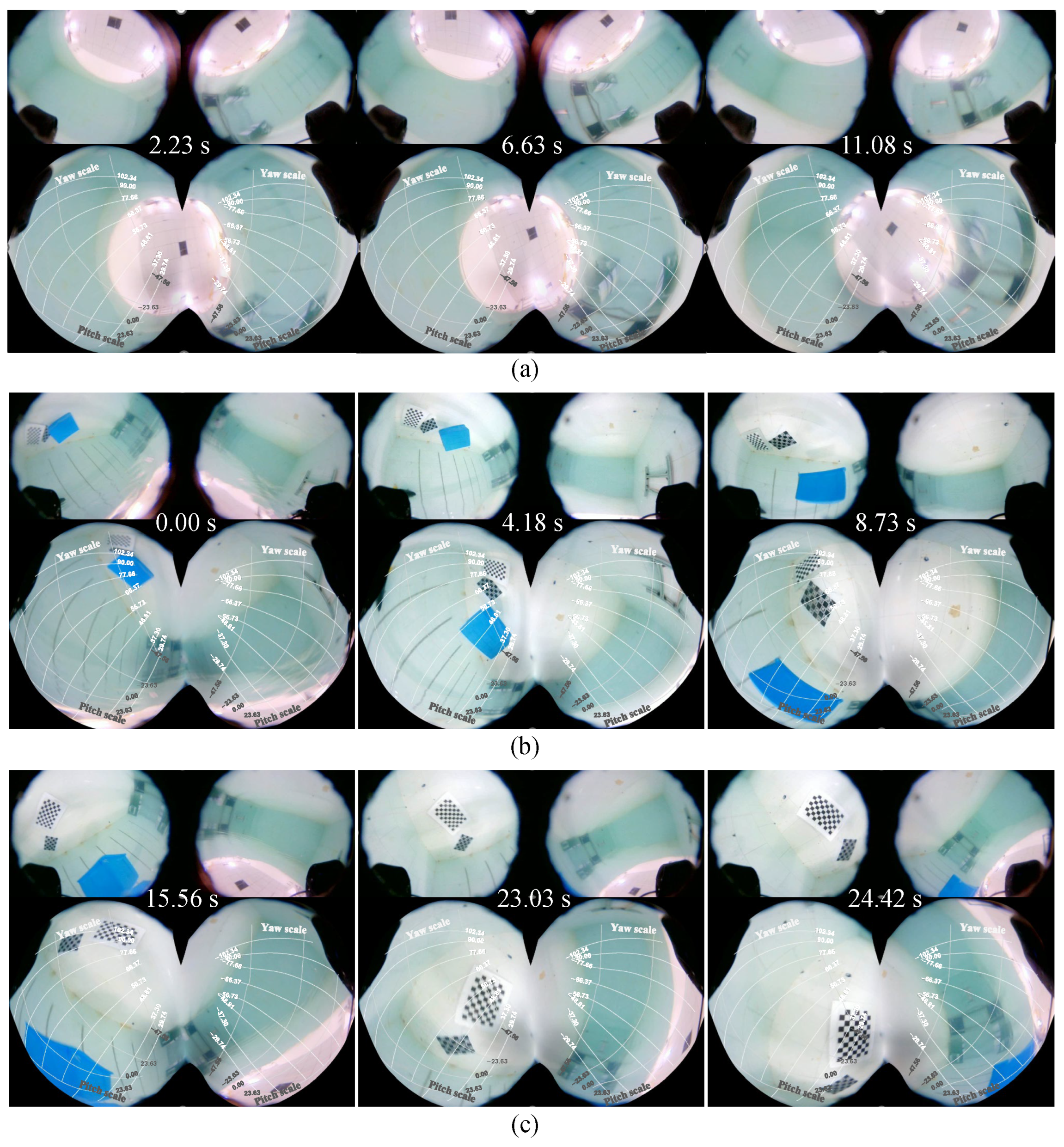

5.2. Visual Field Stitching Test

5.3. Orientation Indicator Test

5.4. Comprehensive Performance Test

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| i | −2 | −1 | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|
| Pitch scale (°) | 23.63 | 12.34 | 0 | −12.34 | −23.63 | −33.27 | −41.19 | −47.56 |

| j | −2 | −1 | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Yaw scale (Left) (°) | 113.6 | 102.3 | 90 | 77.66 | 66.37 | 56.73 | 48.81 | 42.44 | 37.30 | 33.15 | 29.74 |
| Yaw scale (Right) (°) | −113.6 | −102.3 | −90 | −77.66 | −66.37 | −56.73 | −48.81 | −42.44 | −37.30 | −33.15 | −29.74 |
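The two angle tables above are given without the derivation that produced them. Their values are, however, consistent with a simple arctangent pattern: the tangent of each pitch angle is almost exactly proportional to its index i (about 0.219 per step), and each left-hand yaw value is 90° minus the arctangent of the same multiple of j. The sketch below checks that observation numerically. The relations pitch = −atan(k·i) and yaw = 90° − atan(k·j), the constant k, and the helper names `estimate_k`, `pitch_model` and `yaw_left_model` are hypothetical, inferred from the table values only; they are not the authors' stated method.

```python
import math

# Values transcribed from the pitch and yaw tables above (degrees).
PITCH = {-2: 23.63, -1: 12.34, 0: 0.0, 1: -12.34, 2: -23.63,
         3: -33.27, 4: -41.19, 5: -47.56}
YAW_LEFT = {-2: 113.6, -1: 102.3, 0: 90.0, 1: 77.66, 2: 66.37, 3: 56.73,
            4: 48.81, 5: 42.44, 6: 37.30, 7: 33.15, 8: 29.74}


def estimate_k(pitch):
    """Hypothetical helper: average tan(|angle|) / |index| over nonzero pitch entries."""
    ratios = [math.tan(math.radians(abs(a))) / abs(i)
              for i, a in pitch.items() if i != 0]
    return sum(ratios) / len(ratios)


def pitch_model(i, k):
    # Assumed relation (inferred from the table): pitch_i = -atan(k * i), in degrees.
    return -math.degrees(math.atan(k * i))


def yaw_left_model(j, k):
    # Assumed relation (inferred from the table): left yaw_j = 90 - atan(k * j), in degrees.
    return 90.0 - math.degrees(math.atan(k * j))


k = estimate_k(PITCH)
# Largest absolute difference between the assumed model and each tabulated row.
max_pitch_err = max(abs(pitch_model(i, k) - a) for i, a in PITCH.items())
max_yaw_err = max(abs(yaw_left_model(j, k) - a) for j, a in YAW_LEFT.items())

print(f"k = {k:.4f}")
print(f"max pitch error = {max_pitch_err:.3f} deg, max yaw error = {max_yaw_err:.3f} deg")
```

The right-hand yaw row is the exact negation of the left-hand row, so the same assumed model covers it with a sign change. The one-decimal entries at j = −2 and j = −1 produce the largest residuals, consistent with their coarser rounding.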

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tong, R.; Wu, Z.; Wang, J.; Huang, Y.; Chen, D.; Yu, J. A Fish-like Binocular Vision System for Underwater Perception of Robotic Fish. Biomimetics 2024, 9, 171. https://doi.org/10.3390/biomimetics9030171


Chicago/Turabian Style: Tong, Ru, Zhengxing Wu, Jinge Wang, Yupei Huang, Di Chen, and Junzhi Yu. 2024. "A Fish-like Binocular Vision System for Underwater Perception of Robotic Fish." Biomimetics 9, no. 3: 171. https://doi.org/10.3390/biomimetics9030171
