CoDC: Accurate Learning with Noisy Labels via Disagreement and Consistency

Abstract

:1. Introduction

- A weighted cross-entropy loss is proposed to remit the overfitting phenomenon, and the loss weights are learned directly from information derived from the historical training process.

- We propose a novel method called CoDC to alleviate the negative effect of label noise. CoDC maintains disagreement at the feature level and consistency at the prediction level using a balance loss function, which can effectively improve the generalization of networks.

2. Related Work

3. Materials and Methods

3.1. Preliminary

3.2. Training with Disagreement and Consistency

| Algorithm 1 CoDC algorithm |

Input: two networks with weights and , learning rate , noise rate , epoch and , iteration ;

|

4. Results

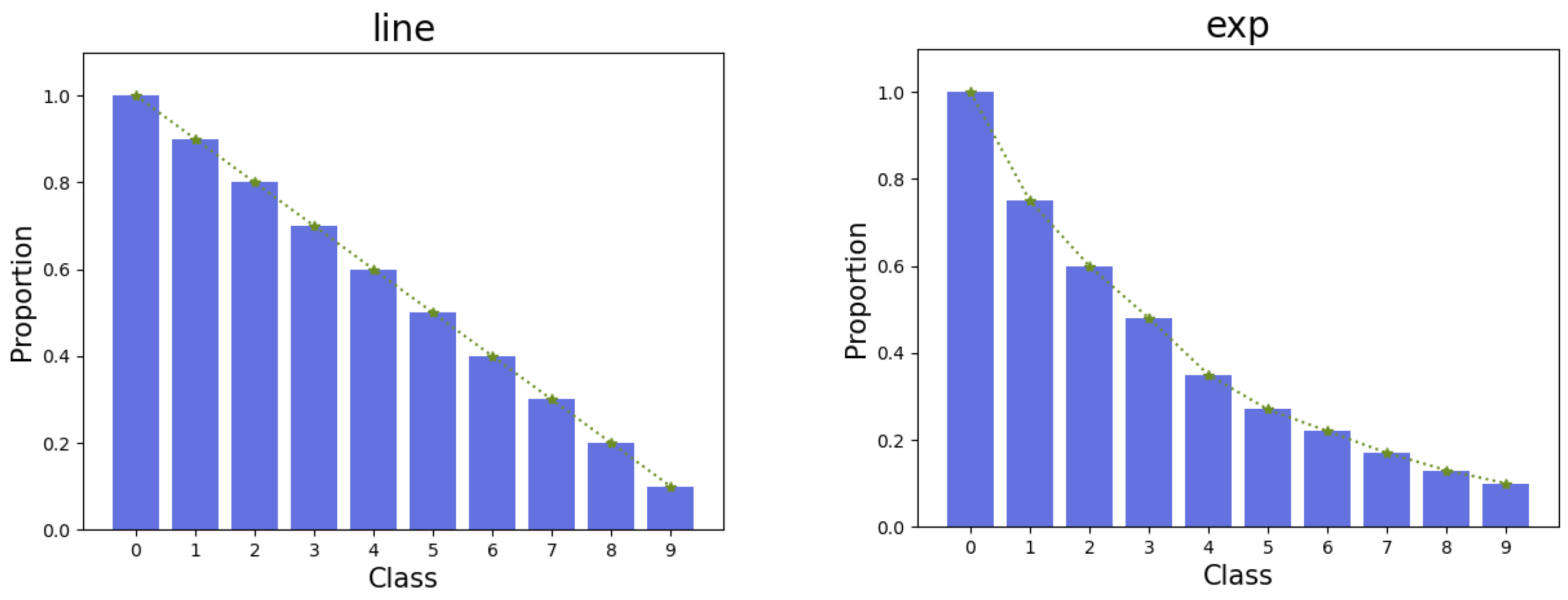

4.1. Datasets and Implementation Details

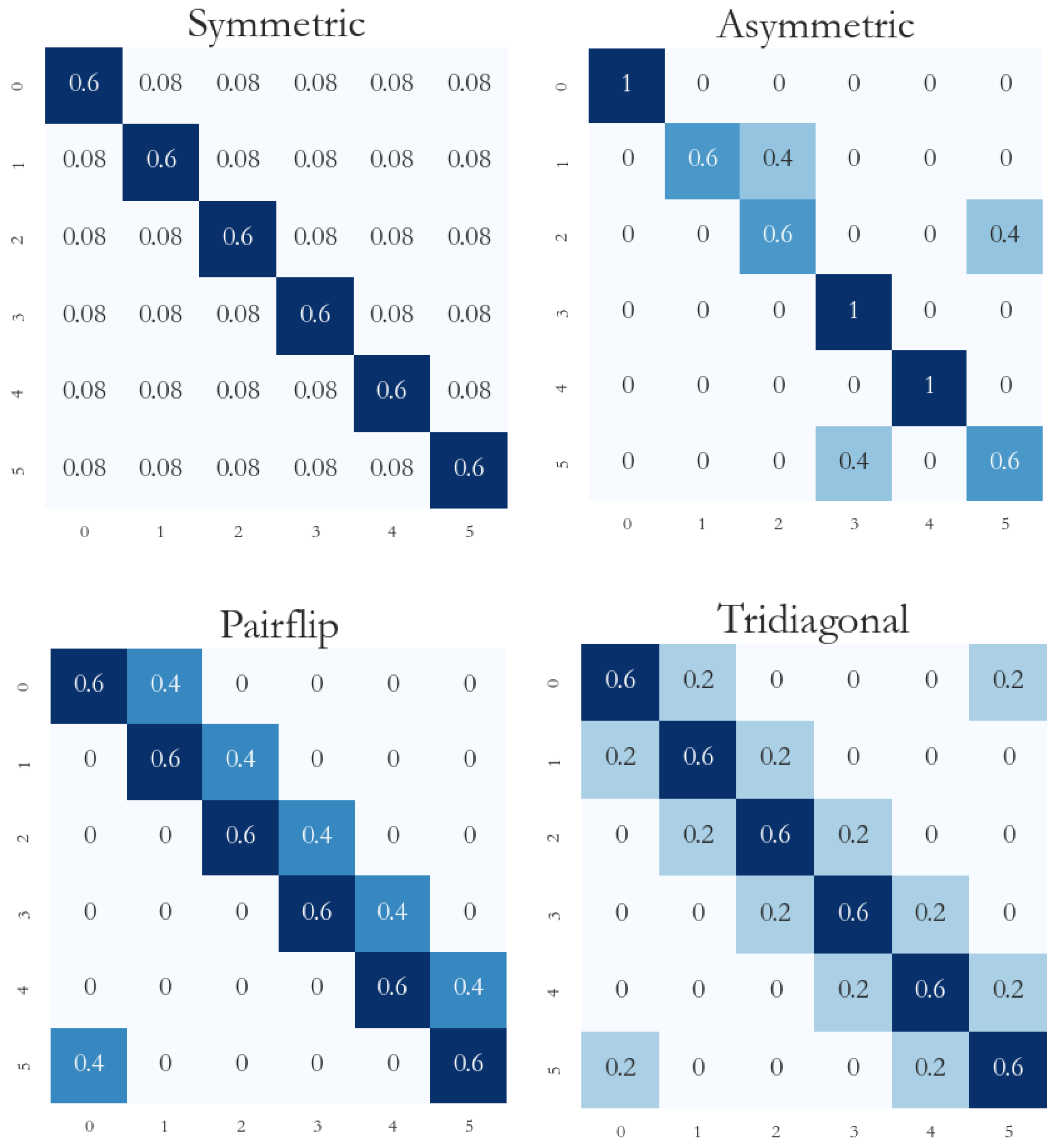

- (1)

- Symmetric noise: the clean labels in each class are uniformly flipped to labels of other wrong classes.

- (2)

- Asymmetric noise: considers the visual similarity in the flipping process, which is closer to real-world noise; for example, the labels of cats and dogs will be reversed, and the labels of planes and birds will be reversed. Asymmetric noise is an unbalanced type of noise.

- (3)

- Pairflip noise: this is realized by flipping clean labels in each class to adjacent classes.

- (4)

- Tridiagonal noise: realized by two consecutive pairflips of two classes in opposite directions.

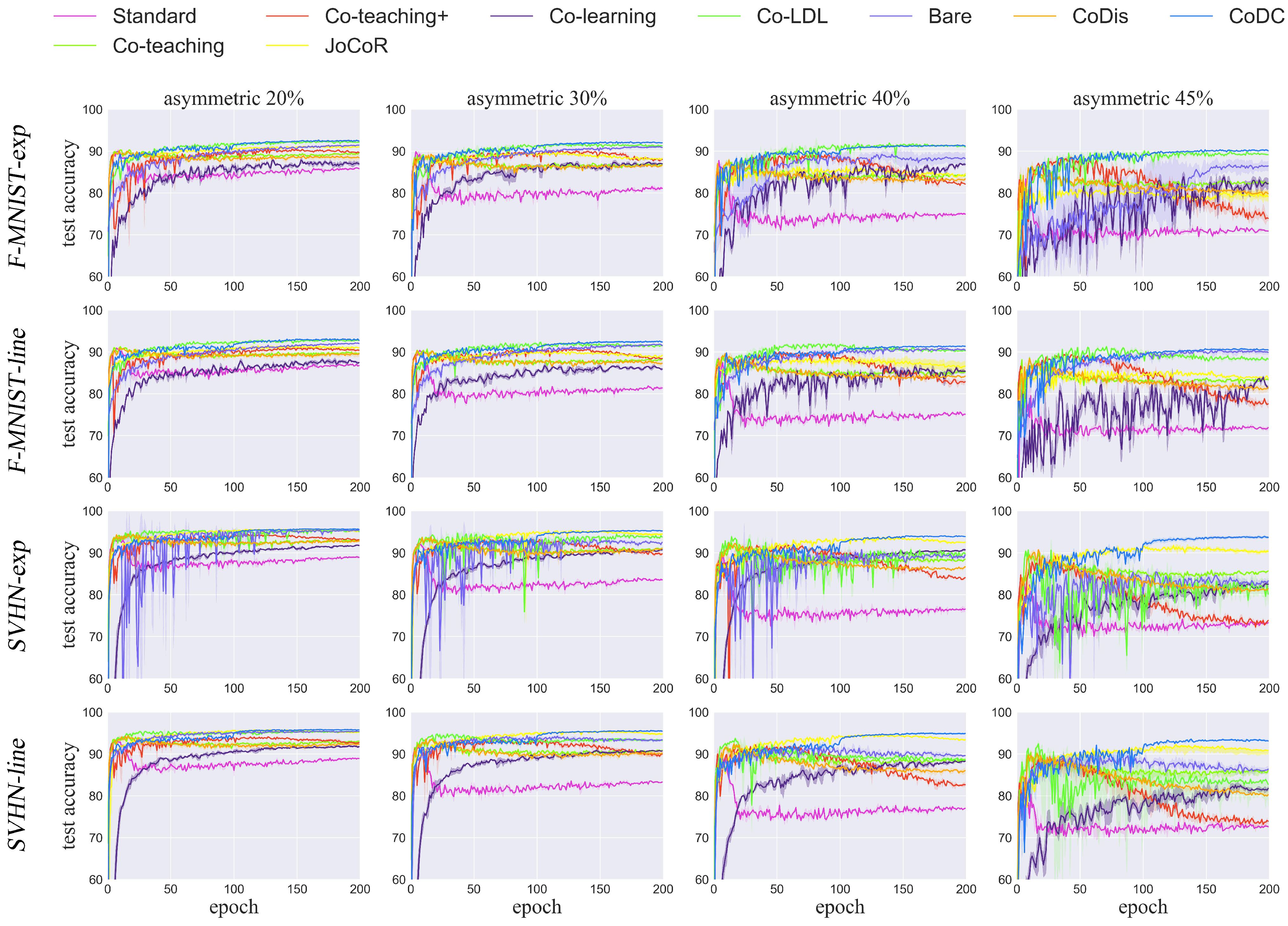

4.2. Comparison with SOTA Methods

- (1)

- Standard: trains a single network and uses only standard cross-entropy loss.

- (2)

- Co-teaching [17]: trains two networks simultaneously; the two networks guide each other during learning.

- (3)

- Co-teaching-plus [18]: trains two networks simultaneously while considering the small loss samples of the two networks’ diverging samples.

- (4)

- JoCoR [31]: trains two networks simultaneously and applies the co-regularization method to maximize the consistency between the two networks.

- (5)

- Co-learning [47]: a simple and effective method for learning with noisy labels; it combines supervised and self-supervised learning to regularize the network and improve generalization performance.

- (6)

- Co-LDL [29]: an end-to-end framework proposed to train high-loss samples using label distribution learning and enhance the learned representations by a self-supervised module, further boosting model performance and the use of training data.

- (7)

- CoDis [19]: trains two networks simultaneously and applies the covariance regularization method to maintain the divergence between the two networks.

- (8)

- Bare [48]: proposes an adaptive sample selection strategy to provide robustness against label noise.

4.3. Ablation Study

4.4. Comparison of Running Time

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Basheri, M. Intelligent Breast Mass Classification Approach Using Archimedes Optimization Algorithm with Deep Learning on Digital Mammograms. Biomimetics 2023, 8, 463. [Google Scholar] [CrossRef] [PubMed]

- Albraikan, A.A.; Maray, M.; Alotaibi, F.A.; Alnfiai, M.M.; Kumar, A.; Sayed, A. Bio-Inspired Artificial Intelligence with Natural Language Processing Based on Deceptive Content Detection in Social Networking. Biomimetics 2023, 8, 449. [Google Scholar] [CrossRef] [PubMed]

- Xie, T.; Yin, M.; Zhu, X.; Zhu, X.; Sun, J.; Meng, C.; Bei, S. A Fast and Robust Lane Detection via Online Re-Parameterization and Hybrid Attention. Sensors 2023, 23, 8285. [Google Scholar] [CrossRef] [PubMed]

- Tewes, T.J.; Welle, M.C.; Hetjens, B.T.; Tipatet, K.S.; Pavlov, S.; Platte, F.; Bockmühl, D.P. Understanding Raman Spectral Based Classifications with Convolutional Neural Networks Using Practical Examples of Fungal Spores and Carotenoid-Pigmented Microorganisms. AI 2023, 4, 114–127. [Google Scholar] [CrossRef]

- Lee, H.U.; Chun, C.J.; Kang, J.M. Causality-Driven Efficient Feature Selection for Deep-Learning-Based Surface Roughness Prediction in Milling Machines. Mathematics 2023, 11, 4682. [Google Scholar] [CrossRef]

- Pinto, J.; Ramos, J.R.C.; Costa, R.S.; Oliveira, R. A General Hybrid Modeling Framework for Systems Biology Applications: Combining Mechanistic Knowledge with Deep Neural Networks under the SBML Standard. AI 2023, 4, 303–318. [Google Scholar] [CrossRef]

- Liao, H.; Zhu, W. YOLO-DRS: A Bioinspired Object Detection Algorithm for Remote Sensing Images Incorporating a Multi-Scale Efficient Lightweight Attention Mechanism. Biomimetics 2023, 8, 458. [Google Scholar] [CrossRef] [PubMed]

- Song, F.; Li, P. YOLOv5-MS: Real-time multi-surveillance pedestrian target detection model for smart cities. Biomimetics 2023, 8, 480. [Google Scholar] [CrossRef] [PubMed]

- Zhu, P.; Yao, X.; Wang, Y.; Cao, M.; Hui, B.; Zhao, S.; Hu, Q. Latent heterogeneous graph network for incomplete multi-view learning. IEEE Trans. Multimed. 2022, 25, 3033–3045. [Google Scholar] [CrossRef]

- Liu, B.; Feng, L.; Zhao, Q.; Li, G.; Chen, Y. Improving the Accuracy of Lane Detection by Enhancing the Long-Range Dependence. Electronics 2023, 12, 2518. [Google Scholar] [CrossRef]

- Chen, T.; Xie, G.S.; Yao, Y.; Wang, Q.; Shen, F.; Tang, Z.; Zhang, J. Semantically meaningful class prototype learning for one-shot image segmentation. IEEE Trans. Multimed. 2021, 24, 968–980. [Google Scholar] [CrossRef]

- Nanni, L.; Loreggia, A.; Brahnam, S. Comparison of Different Methods for Building Ensembles of Convolutional Neural Networks. Electronics 2023, 12, 4428. [Google Scholar] [CrossRef]

- Ghosh, A.; Kumar, H.; Sastry, P.S. Robust loss functions under label noise for deep neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31. [Google Scholar]

- Mahajan, D.; Girshick, R.; Ramanathan, V.; He, K.; Paluri, M.; Li, Y.; Bharambe, A.; Van Der Maaten, L. Exploring the limits of weakly supervised pretraining. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 181–196. [Google Scholar]

- Yan, Y.; Rosales, R.; Fung, G.; Subramanian, R.; Dy, J. Learning from multiple annotators with varying expertise. Mach. Learn. 2014, 95, 291–327. [Google Scholar] [CrossRef]

- Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding deep learning (still) requires rethinking generalization. Commun. ACM 2021, 64, 107–115. [Google Scholar] [CrossRef]

- Han, B.; Yao, Q.; Yu, X.; Niu, G.; Xu, M.; Hu, W.; Tsang, I.; Sugiyama, M. Co-teaching: Robust training of deep neural networks with extremely noisy labels. In Proceedings of the 32nd Conference on Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; Advances in Neural Information Processing Systems. Volume 31. [Google Scholar]

- Yu, X.; Han, B.; Yao, J.; Niu, G.; Tsang, I.; Sugiyama, M. How does disagreement help generalization against label corruption? In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7164–7173. [Google Scholar]

- Xia, X.; Han, B.; Zhan, Y.; Yu, J.; Gong, M.; Gong, C.; Liu, T. Combating Noisy Labels with Sample Selection by Mining High-Discrepancy Examples. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 1833–1843. [Google Scholar]

- Liu, J.; Jiang, D.; Yang, Y.; Li, R. Agreement or Disagreement in Noise-tolerant Mutual Learning? In Proceedings of the 26th International Conference on Pattern Recognition, Montreal, QC, Canada, 21–25 August 2022; pp. 4801–4807. [Google Scholar]

- Wang, Y.; Ma, X.; Chen, Z.; Luo, Y.; Yi, J.; Bailey, J. Symmetric cross entropy for robust learning with noisy labels. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–3 November 2019; pp. 322–330.

- Zhang, Z.; Sabuncu, M. Symmetric cross entropy for robust learning with noisy labels. In Proceedings of the Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018. [Google Scholar]

- Ma, X.; Huang, H.; Wang, Y.; Romano, S.; Erfani, S.; Bailey, J. Normalized loss functions for deep learning with noisy labels. In Proceedings of the International Conference on Machine Learning, Online, 13–18 July 2020; pp. 6543–6553. [Google Scholar]

- Kim, Y.; Yun, J.; Shon, H.; Kim, J. Joint negative and positive learning for noisy labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 9442–9451. [Google Scholar]

- Patrini, G.; Rozza, A.; Krishna Menon, A.; Nock, R.; Qu, L. Joint negative and positive learning for noisy labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1944–1952. [Google Scholar]

- Lukasik, M.; Bhojanapalli, S.; Menon, A.; Kumar, S. Does label smoothing mitigate label noise? In Proceedings of the International Conference on Machine Learning, Online, 13–18 July 2020; pp. 6448–6458. [Google Scholar]

- Pham, H.; Dai, Z.; Xie, Q.; Le, Q.V. Meta pseudo labels? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 11557–11568. [Google Scholar]

- Yi, K.; Wu, J. Probabilistic end-to-end noise correction for learning with noisy labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7017–7025. [Google Scholar]

- Sun, Z.; Liu, H.; Wang, Q.; Zhou, T.; Wu, Q.; Tang, Z. Co-ldl: A co-training-based label distribution learning method for tackling label noise. IEEE Trans. Multimed. 2021, 24, 1093–1104. [Google Scholar] [CrossRef]

- Liu, J.; Zhou, Z.; Leung, T.; Li, L.J.; Li, F.-F. Mentornet: Learning data-driven curriculum for very deep neural networks on corrupted labels. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 2304–2313. [Google Scholar]

- Wei, H.; Feng, L.; Chen, X.; An, B. Combating noisy labels by agreement: A joint training method with co-regularization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 13726–13735. [Google Scholar]

- Li, J.; Socher, R.; Hoi, S.C. Dividemix: Learning with noisy labels as semi-supervised learning. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020. [Google Scholar]

- Cordeiro, F.R.; Sachdeva, R.; Belagiannis, V.; Reid, I.; Carneiro, G. Longremix: Robust learning with high confidence samples in a noisy label environment. Pattern Recognit. 2023, 133, 109013. [Google Scholar] [CrossRef]

- Kong, K.; Lee, J.; Kwak, Y.; Cho, Y.; Kim, S.; Song, W. Penalty based robust learning with noisy labels. Neurocomputing 2022, 489, 112–127. [Google Scholar] [CrossRef]

- Sindhwani, V.; Niyogi, P.; Belkin, M. A co-regularization approach to semi-supervised learning with multiple views. In Proceedings of the International Conference on Machine Learning Workshop on Learning with Multiple Views, Bonn, Germany, 7–11 August 2005; pp. 74–79. [Google Scholar]

- Yao, Y.; Sun, Z.; Zhang, C.; Shen, F.; Wu, Q.; Zhang, J.; Tang, Z. Jo-src: A contrastive approach for combating noisy labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 5192–5201. [Google Scholar]

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-Mnist: A Novel Image Dataset for Benchmarking Machine Learning Algorithms. Available online: https://arxiv.org/pdf/1708.07747.pdf (accessed on 25 August 2017).

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading digits in natural images with unsupervised feature learning. In Proceedings of the 25th Conference on Neural Information Processing Systems (NeurIPS) Workshop on Deep Learning and Unsupervised Feature Learning, Granada, Spain, 12 December 2011; pp. 462–471. [Google Scholar]

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Technical report; University of Toronto: Toronto, ON, Canada, 9 April 2009. [Google Scholar]

- Xiao, T.; Xia, T.; Yang, Y.; Huang, C.; Wang, X. Learning from massive noisy labeled data for image classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2691–2699. [Google Scholar]

- Li, W.; Wang, L.; Li, W.; Agustsson, E.; Van, G.L. Webvision Database: Visual Learning and Understanding from Web Data. Available online: https://arxiv.org/pdf/1708.02862.pdf (accessed on 9 August 2017).

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Zhang, Y.; Niu, G.; Sugiyama, M. Imagenet: Learning noise transition matrix from only noisy labels via total variation regularization. In Proceedings of the Conference on Machine Learning, Online, 18–24 July 2021; pp. 12501–12512. [Google Scholar]

- Yang, Y.; Xu, Z. Rethinking the value of labels for improving class-imbalanced learning. In Proceedings of the Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; Volume 33, pp. 19290–19301. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vegas, NV, USA, 26–30 June 2016; pp. 770–778. [Google Scholar]

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31. [Google Scholar]

- Tan, C.; Xia, J.; Wu, L.; Li, S.Z. Co-learning: Learning from noisy labels with self-supervision. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 1405–1413. [Google Scholar]

- Patel, D.; Sastry, P.S. Adaptive sample selection for robust learning under label noise. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 3932–3942. [Google Scholar]

| Noise Type | Sym | Asym | Pair | Trid | |||||

|---|---|---|---|---|---|---|---|---|---|

| Setting | 20% | 40% | 20% | 40% | 20% | 40% | 20% | 40% | |

| Standard | 87.46 ± 0.09 | 72.46 ± 0.68 | 89.34 ± 0.15 | 77.73 ± 0.19 | 84.23 ± 0.23 | 61.46 ± 0.32 | 85.86 ± 0.4 | 66.44 ± 0.28 | |

| Co-teaching | 91.20 ± 0.03 | 88.06 ± 0.06 | 91.29 ± 0.16 | 86.32 ± 0.09 | 89.97 ± 0.09 | 84.99 ± 0.11 | 90.54 ± 0.05 | 86.29 ± 0.09 | |

| Co-teaching-plus | 92.85 ± 0.03 | 91.42 ± 0.03 | 93.42 ± 0.02 | 89.38 ± 0.17 | 92.90 ± 0.03 | 88.38 ± 0.16 | 92.75 ± 0.08 | 90.40 ± 0.04 | |

| JoCoR | 94.11 ± 0.02 | 93.24 ± 0.01 | 94.24 ± 0.02 | 91.18 ± 0.16 | 93.90 ± 0.01 | 91.37 ± 0.21 | 94.03 ± 0.01 | 92.93 ± 0.01 | |

| F-MNIST | Co-learning | 90.03 ± 0.29 | 90.03 ± 0.21 | 90.88 ± 0.22 | 88.67 ± 0.48 | 91.08 ± 0.18 | 87.59 ± 0.35 | 90.98 ± 0.16 | 90.27 ± 0.24 |

| Co-LDL | 94.68 ± 0.10 | 93.97 ± 0.14 | 95.02 ± 0.13 | 93.71 ± 0.57 | 94.84 ± 0.08 | 93.66 ± 0.11 | 94.88 ± 0.11 | 94.26 ± 0.09 | |

| CoDis | 90.97 ± 0.05 | 87.92 ± 0.10 | 91.55 ± 0.08 | 85.77 ± 0.35 | 90.23 ± 0.04 | 83.92 ± 0.08 | 90.33 ± 0.02 | 86.10 ± 0.09 | |

| Bare | 93.59 ± 0.16 | 92.79 ± 0.13 | 93.79 ± 0.12 | 93.47 ± 0.10 | 93.60 ± 0.14 | 92.75 ± 0.13 | 93.64 ± 0.11 | 93.02 ± 0.14 | |

| CoDC | 94.87 ± 0.06 | 94.05 ± 0.08 | 94.66 ± 0.08 | 93.63 ± 0.04 | 94.64 ± 0.09 | 93.67 ± 0.09 | 94.89 ± 0.06 | 94.09 ± 0.08 | |

| Standard | 87.21 ± 0.10 | 70.97 ± 0.34 | 89.55 ± 0.05 | 77.79 ± 0.21 | 84.84 ± 0.10 | 61.39 ± 0.35 | 86.37 ± 0.2 | 67.25 ± 0.44 | |

| Co-teaching | 91.98 ± 0.04 | 89.21 ± 0.23 | 91.91 ± 0.35 | 88.00 ± 0.43 | 91.24 ± 0.27 | 85.94 ± 0.06 | 92.03 ± 0.04 | 87.94 ± 0.04 | |

| Co-teaching-plus | 94.71 ± 0.01 | 92.86 ± 0.03 | 94.37 ± 0.03 | 88.14 ± 0.06 | 94.15 ± 0.02 | 88.68 ± 0.14 | 94.74 ± 0.01 | 91.88 ± 0.06 | |

| JoCoR | 96.41 ± 0.02 | 95.70 ± 0.01 | 96.17 ± 0.02 | 93.89 ± 0.37 | 96.29 ± 0.01 | 93.88 ± 0.26 | 96.54 ± 0.01 | 95.29 ± 0.09 | |

| SVHN | Co-learning | 93.18 ± 0.10 | 92.08 ± 0.11 | 92.74 ± 0.18 | 88.07 ± 0.43 | 93.39 ± 0.11 | 89.07 ± 0.16 | 93.48 ± 0.07 | 92.21 ± 0.08 |

| Co-LDL | 96.66 ± 0.05 | 95.51 ± 0.33 | 96.20 ± 0.07 | 91.80 ± 0.30 | 96.29 ± 0.07 | 91.56 ± 0.23 | 96.42 ± 0.07 | 95.03 ± 0.06 | |

| CoDis | 91.67 ± 0.04 | 89.19 ± 0.08 | 92.10 ± 0.06 | 87.27 ± 0.18 | 91.25 ± 0.07 | 85.09 ± 0.11 | 91.83 ± 0.03 | 88.34 ± 0.01 | |

| Bare | 96.20 ± 0.06 | 94.92 ± 0.10 | 95.83 ± 0.12 | 92.44 ± 0.25 | 95.84 ± 0.18 | 91.66 ± 0.30 | 95.98 ± 0.07 | 94.46 ± 0.13 | |

| CoDC | 96.64 ± 0.03 | 95.89 ± 0.03 | 96.26 ± 0.06 | 94.60 ± 0.08 | 96.26 ± 0.04 | 95.13 ± 0.04 | 96.48 ± 0.04 | 95.18 ± 0.05 | |

| Standard | 75.74 ± 0.33 | 58.64 ± 0.81 | 82.32 ± 0.14 | 72.19 ± 0.32 | 76.38 ± 0.35 | 55.03 ± 0.27 | 76.26 ± 0.26 | 58.72 ± 0.26 | |

| Co-teaching | 82.24 ± 0.18 | 77.16 ± 0.10 | 80.76 ± 0.11 | 72.85 ± 0.11 | 82.55 ± 0.10 | 75.74 ± 0.14 | 82.50 ± 0.12 | 76.28 ± 0.12 | |

| Co-teaching-plus | 81.96 ± 0.12 | 71.49 ± 0.33 | 79.68 ± 0.13 | 70.96 ± 0.69 | 79.71 ± 0.14 | 58.39 ± 0.76 | 81.15 ± 0.05 | 64.79 ± 0.47 | |

| JoCoR | 85.23 ± 0.09 | 79.77 ± 0.18 | 85.62 ± 0.21 | 73.50 ± 1.25 | 81.75 ± 0.26 | 68.29 ± 0.30 | 82.78 ± 0.11 | 73.53 ± 0.28 | |

| CIFAR-10 | Co-learning | 88.82 ± 0.23 | 85.99 ± 0.22 | 88.58 ± 0.28 | 82.66 ± 0.44 | 89.13 ± 0.11 | 79.68 ± 0.27 | 89.47 ± 0.19 | 86.40 ± 0.23 |

| Co-LDL | 91.57 ± 0.15 | 88.89 ± 0.26 | 90.89 ± 0.22 | 84.21 ± 0.26 | 91.10 ± 0.17 | 85.03 ± 0.15 | 91.12 ± 0.22 | 87.62 ± 0.19 | |

| CoDis | 82.36 ± 0.24 | 77.04 ± 0.09 | 84.58 ± 0.05 | 75.30 ± 0.32 | 82.53 ± 0.23 | 70.86 ± 0.22 | 82.69 ± 0.07 | 74.59 ± 0.05 | |

| Bare | 85.30 ± 0.61 | 78.90 ± 0.70 | 86.44 ± 0.39 | 81.20 ± 0.46 | 84.98 ± 0.57 | 74.53 ± 0.28 | 85.53 ± 0.45 | 77.40 ± 0.59 | |

| CoDC | 92.35 ± 0.07 | 90.15 ± 0.13 | 91.53 ± 0.10 | 84.25 ± 0.12 | 92.03 ± 0.05 | 89.46 ± 0.15 | 92.59 ± 0.15 | 89.70 ± 0.18 | |

| Standard | 46.10 ± 0.53 | 33.20 ± 0.58 | 46.11 ± 0.53 | 33.20 ± 0.58 | 46.01 ± 0.33 | 33.16 ± 0.32 | 46.30 ± 0.21 | 33.29 ± 0.31 | |

| Co-teaching | 50.87 ± 0.31 | 43.38 ± 0.35 | 48.88 ± 0.29 | 35.92 ± 0.34 | 49.57 ± 0.35 | 35.11 ± 0.45 | 50.14 ± 0.31 | 39.77 ± 0.38 | |

| Co-teaching-plus | 51.72 ± 0.33 | 44.31 ± 0.67 | 51.48 ± 0.28 | 34.20 ± 0.64 | 50.71 ± 0.77 | 34.29 ± 0.27 | 51.54 ± 0.29 | 41.36 ± 0.29 | |

| JoCoR | 51.61 ± 0.37 | 42.78 ± 0.26 | 51.21 ± 0.09 | 42.68 ± 0.23 | 51.46 ± 0.32 | 42.01 ± 0.21 | 51.58 ± 0.06 | 42.77 ± 0.34 | |

| CIFAR-100 | Co-learning | 62.43 ± 0.31 | 57.18 ± 0.35 | 63.04 ± 0.36 | 49.69 ± 0.31 | 62.53 ± 0.31 | 49.29 ± 0.41 | 63.26 ± 0.35 | 59.57 ± 0.43 |

| Co-LDL | 66.60 ± 0.24 | 61.51 ± 0.34 | 66.85 ± 0.25 | 59.98 ± 0.50 | 66.59 ± 0.29 | 58.87 ± 0.35 | 66.53 ± 0.26 | 60.87 ± 0.30 | |

| CoDis | 50.65 ± 0.35 | 43.44 ± 0.27 | 50.14 ± 0.38 | 35.43 ± 0.38 | 50.80 ± 0.41 | 35.15 ± 0.29 | 51.50 ± 0.40 | 41.43 ± 0.45 | |

| Bare | 63.32 ± 0.32 | 55.33 ± 0.62 | 61.62 ± 0.26 | 40.88 ± 1.17 | 61.22 ± 0.51 | 40.92 ± 1.40 | 62.88 ± 0.28 | 48.82 ± 0.51 | |

| CoDC | 72.42 ± 0.22 | 68.76 ± 0.16 | 70.94 ± 0.11 | 57.37 ± 0.33 | 70.78 ± 0.27 | 56.14 ± 0.19 | 70.78 ± 0.17 | 61.67 ± 0.16 | |

| Noise Type | Asym.20% | Asym.30% | Asym.40% | Asym.45% | |

|---|---|---|---|---|---|

| Standard | 85.77 ± 0.17 | 80.99 ± 0.45 | 74.90 ± 0.25 | 70.90 ± 0.37 | |

| Co-teaching | 89.41 ± 0.13 | 86.83 ± 0.16 | 84.21 ± 0.12 | 82.27 ± 0.21 | |

| Co-teaching-plus | 90.00 ± 0.17 | 88.60 ± 0.20 | 82.36 ± 0.26 | 76.19 ± 0.57 | |

| JoCoR | 91.00 ± 0.10 | 88.12 ± 0.12 | 84.10 ± 0.17 | 78.41 ± 0.29 | |

| F-MNIST-exp | Co-learning | 86.70 ± 0.25 | 86.95 ± 0.29 | 86.78 ± 0.41 | 83.02 ± 0.66 |

| Co-LDL | 92.16 ± 0.11 | 91.40 ± 0.16 | 91.28 ± 0.21 | 89.31 ± 0.20 | |

| CoDis | 89.11 ± 0.17 | 87.11 ± 0.16 | 83.84 ± 0.26 | 80.88 ± 0.13 | |

| Bare | 91.43 ± 0.15 | 90.89 ± 0.18 | 88.45 ± 1.31 | 86.41 ± 1.15 | |

| CoDC | 92.45 ± 0.08 | 92.24 ± 0.10 | 91.37 ± 0.06 | 90.20 ± 0.11 | |

| Standard | 86.94 ± 0.22 | 81.38 ± 0.33 | 75.26 ± 0.39 | 71.68 ± 0.32 | |

| Co-teaching | 89.79 ± 0.10 | 87.89 ± 0.15 | 85.24 ± 0.26 | 83.43 ± 0.14 | |

| Co-teaching-plus | 90.61 ± 0.14 | 87.79 ± 0.20 | 82.83 ± 0.35 | 77.28 ± 0.58 | |

| JoCoR | 91.12 ± 0.15 | 89.02 ± 0.17 | 85.29 ± 0.26 | 84.01 ± 0.26 | |

| F-MNIST-line | Co-learning | 87.84 ± 0.23 | 86.22 ± 0.41 | 85.66 ± 0.43 | 83.09 ± 1.04 |

| Co-LDL | 92.73 ± 0.21 | 91.47 ± 0.24 | 89.98 ± 0.38 | 88.39 ± 0.47 | |

| CoDis | 90.13 ± 0.10 | 87.79 ± 0.16 | 84.77 ± 0.26 | 81.46 ± 0.13 | |

| Bare | 92.11 ± 0.13 | 91.79 ± 0.09 | 90.55 ± 0.28 | 89.92 ± 0.61 | |

| CoDC | 93.04 ± 0.09 | 92.72 ± 0.06 | 91.39 ± 0.15 | 90.59 ± 0.05 | |

| Standard | 89.02 ± 0.21 | 83.54 ± 0.21 | 76.37 ± 0.40 | 73.09 ± 0.27 | |

| Co-teaching | 93.08 ± 0.16 | 91.22 ± 0.24 | 88.49 ± 0.14 | 85.21 ± 0.28 | |

| Co-teaching-plus | 93.10 ± 0.13 | 89.57 ± 0.19 | 83.58 ± 0.43 | 73.28 ± 0.69 | |

| JoCoR | 95.01 ± 0.08 | 94.62 ± 0.08 | 92.65 ± 0.11 | 89.95 ± 0.07 | |

| SVHN-exp | Co-learning | 91.67 ± 0.18 | 90.60 ± 0.23 | 86.52 ± 0.40 | 81.97 ± 0.58 |

| Co-LDL | 95.33 ± 0.27 | 93.77 ± 0.35 | 89.58 ± 0.61 | 82.08 ± 1.56 | |

| CoDis | 92.80 ± 0.17 | 91.09 ± 0.19 | 86.89 ± 0.11 | 81.25 ± 0.13 | |

| Bare | 95.42 ± 0.14 | 92.51 ± 0.23 | 89.48 ± 0.33 | 83.31 ± 0.65 | |

| CoDC | 95.68 ± 0.04 | 95.28 ± 0.07 | 93.97 ± 0.05 | 93.47 ± 0.10 | |

| Standard | 88.90 ± 0.15 | 83.33 ± 0.20 | 76.92 ± 0.29 | 72.57 ± 0.24 | |

| Co-teaching | 92.92 ± 0.10 | 91.17 ± 0.10 | 88.33 ± 0.25 | 85.77 ± 0.26 | |

| Co-teaching-plus | 92.73 ± 0.13 | 89.41 ± 0.20 | 82.28 ± 0.37 | 73.94 ± 0.47 | |

| JoCoR | 95.34 ± 0.04 | 94.88 ± 0.07 | 93.56 ± 0.06 | 91.00 ± 0.07 | |

| SVHN-line | Co-learning | 91.90 ± 0.09 | 90.70 ± 0.16 | 88.08 ± 0.25 | 81.80 ± 0.46 |

| Co-LDL | 95.28 ± 0.12 | 93.40 ± 0.19 | 88.79 ± 0.25 | 83.66 ± 0.40 | |

| CoDis | 92.94 ± 0.17 | 90.78 ± 0.19 | 86.71 ± 0.11 | 80.93 ± 0.13 | |

| Bare | 95.40 ± 0.11 | 93.38 ± 0.22 | 89.62 ± 0.16 | 86.35 ± 0.72 | |

| CoDC | 95.96 ± 0.06 | 95.77 ± 0.06 | 95.01 ± 0.06 | 93.56 ± 0.06 |

| Method | Acc |

|---|---|

| Standard | 67.22 |

| Co-teaching | 69.21 |

| Co-teaching-plus | 59.32 |

| JoCoR | 70.30 |

| Co-learning | 68.72 |

| Co-LDL | 71.10 |

| CoDis | 71.60 |

| Bare | 70.32 |

| CoDC | 72.81 |

| Dataset | WebVision | ILSVRC12 | |||

|---|---|---|---|---|---|

| Method | top1 | top5 | top1 | top5 | |

| Co-teaching | 63.58 | 85.20 | 61.48 | 84.70 | |

| Co-teaching-plus | 68.56 | 86.64 | 65.60 | 86.60 | |

| JoCoR | 61.84 | 83.72 | 59.16 | 84.16 | |

| Co-LDL | 69.74 | 84.26 | 68.63 | 84.61 | |

| CoDis | 70.52 | 87.88 | 66.88 | 87.20 | |

| Bare | 69.60 | 88.84 | 66.48 | 88.76 | |

| CoDC | 76.96 | 91.56 | 73.44 | 92.08 | |

| Modules | Acc | |||

|---|---|---|---|---|

| 33.20 | ||||

| ✓ | 64.74 | |||

| ✓ | ✓ | 65.91 | ||

| ✓ | ✓ | ✓ | 68.38 | |

| ✓ | ✓ | ✓ | ✓ | 68.76 |

| Method | Acc (%) | Time (h) |

|---|---|---|

| Co-teaching | 77.16 | 1.34 |

| Co-teaching-plus | 71.49 | 1.62 |

| Co-LDL | 88.89 | 1.87 |

| Bare | 78.90 | 4.02 |

| CoDC | 90.15 | 1.76 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dong, Y.; Li, J.; Wang, Z.; Jia, W. CoDC: Accurate Learning with Noisy Labels via Disagreement and Consistency. Biomimetics 2024, 9, 92. https://doi.org/10.3390/biomimetics9020092

Dong Y, Li J, Wang Z, Jia W. CoDC: Accurate Learning with Noisy Labels via Disagreement and Consistency. Biomimetics. 2024; 9(2):92. https://doi.org/10.3390/biomimetics9020092

Chicago/Turabian StyleDong, Yongfeng, Jiawei Li, Zhen Wang, and Wenyu Jia. 2024. "CoDC: Accurate Learning with Noisy Labels via Disagreement and Consistency" Biomimetics 9, no. 2: 92. https://doi.org/10.3390/biomimetics9020092

APA StyleDong, Y., Li, J., Wang, Z., & Jia, W. (2024). CoDC: Accurate Learning with Noisy Labels via Disagreement and Consistency. Biomimetics, 9(2), 92. https://doi.org/10.3390/biomimetics9020092