1. Introduction

Drone technology is maturing rapidly and offers high flexibility, efficiency, and autonomy. As its range of applications expands, drones can replace humans in high-risk tasks, and searching for trapped people in disaster areas is one important example. Terrain constraints can make search and rescue missions extremely challenging for ground teams, whereas drones can navigate around ground obstacles and search significantly faster than humans. Effective path planning both reduces the cost of search and rescue operations and improves their overall efficiency. The unmanned aerial vehicle (UAV) path planning problem can be defined as an optimization problem whose primary objective is to find the most cost-effective and efficient route between the start and end points while satisfying all constraints [1]. Compared with manual control of the UAV flight path by an operator [2], planning the flight path with metaheuristic algorithms can yield significantly higher efficiency and accuracy [3]. However, in areas with dense obstacles, drones often struggle to find the optimal path and may even risk collisions. Consequently, many researchers have recently explored the UAV path planning problem, aiming to identify flight routes that minimize cost, enhance safety, and reduce travel time [4].
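As a rough illustration of treating path planning as constrained optimization, the sketch below scores a candidate route by its length plus a penalty for constraint violations. The function name `path_cost`, the spherical obstacle model, and the penalty value are all hypothetical choices made for this example; they are not the cost model used in this paper.

```python
import math

def path_cost(waypoints, obstacles, penalty=1000.0):
    """Length of a 3D polyline plus a penalty per waypoint inside an obstacle.

    Assumptions (illustrative only): obstacles are spheres given as
    (center, radius), and only waypoints, not whole segments, are checked.
    """
    length = sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))
    violations = sum(
        1
        for point in waypoints
        for center, radius in obstacles
        if math.dist(point, center) < radius
    )
    return length + penalty * violations

obstacles = [((5.0, 0.0, 0.0), 1.0)]
direct = [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0), (10.0, 0.0, 0.0)]  # passes through the obstacle
detour = [(0.0, 0.0, 0.0), (5.0, 3.0, 0.0), (10.0, 0.0, 0.0)]  # longer but collision-free
```

Under this scoring, the slightly longer `detour` route is preferred over the `direct` route because it avoids the penalty, which is the trade-off an optimizer would have to discover on its own.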

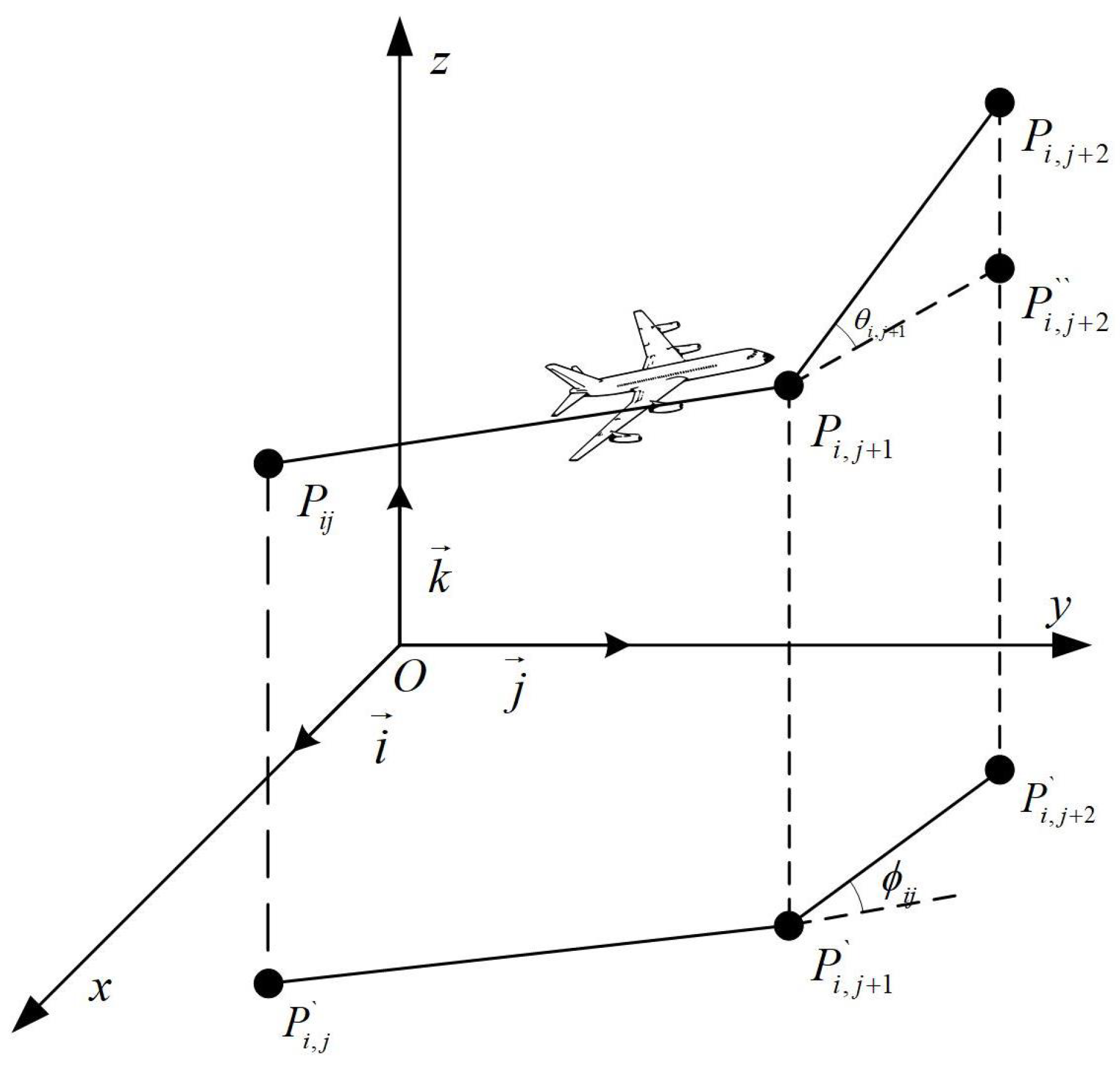

UAV search and rescue is fundamentally a three-dimensional path planning problem, and the main approaches to optimizing UAV paths are traditional optimization methods and metaheuristic algorithms. Traditional optimization algorithms seek the optimal solution of a given problem and include Dijkstra’s algorithm [5], integer programming [6], and linear programming [7]. Dijkstra’s algorithm finds the shortest path exactly; however, it may need to visit every node in the graph and cannot handle negative edge weights. Consequently, it is often combined with supplementary algorithms or modified to improve its performance. Because the standard Dijkstra’s algorithm struggles with complex constraints, Subaselvi Sundarraj et al. [8] proposed an improved particle swarm optimization (PSO) algorithm that integrates weight control with Dijkstra’s algorithm. Deng et al. [9] enhanced Dijkstra’s algorithm by applying the integral theorem to improve its handling of uncertain edge weights. However, this improvement yields favorable results only in specific scenarios, and the algorithm’s computational efficiency remains inadequate in more complex environments. Linear programming methods, such as the simplex and interior point algorithms, can guarantee an optimal solution whenever the linear program is feasible and bounded. However, these methods often struggle with problems involving uncertainty, and their results may not be globally optimal when the underlying problem is complex or highly nonlinear. Therefore, Jiang et al. [10] combined linear programming, fuzzy clustering, and pigeon optimization algorithms to improve the performance of linear programming on nonlinear problems. The integrated approach significantly improves both optimization effectiveness and computational efficiency, but it remains susceptible to falling into local optima. Cheng et al. [11] proposed a mixed logical linear programming algorithm to enhance both the computational efficiency and the solution quality of linear programming. In addition, Kvitko et al. [12] integrated chaos theory with neural network modeling, offering a novel approach to the path planning problem. Moysis et al. [13] introduced a 3D path planning method that uses chaotic mapping to generate pseudo-random bit sequences, enhancing both the randomness and efficiency of path design. Although traditional algorithms have made significant progress, they typically require the objective function to be continuously differentiable and are prone to getting stuck in local optima [14]. These limitations have prompted researchers to prefer metaheuristic algorithms.
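For reference, the textbook form of Dijkstra’s algorithm mentioned above can be sketched as follows. This is a minimal priority-queue version, not any of the improved variants cited; the adjacency-list format and the small example graph are assumptions made for illustration. The explicit check on negative weights reflects the limitation noted in the text.

```python
import heapq

def dijkstra(graph, source):
    """Shortest distances from source; graph maps node -> [(neighbor, weight), ...]."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry, a shorter route was already found
        for neighbor, weight in graph.get(node, []):
            if weight < 0:
                raise ValueError("Dijkstra's algorithm requires non-negative weights")
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist

# Hypothetical four-node graph used only to exercise the function.
graph = {
    "A": [("B", 2.0), ("C", 5.0)],
    "B": [("C", 1.0), ("D", 4.0)],
    "C": [("D", 1.0)],
    "D": [],
}
distances = dijkstra(graph, "A")
```

In this example the shortest route to `D` goes through `B` and `C` rather than along either direct edge. On a dense 3D grid map the queue can grow to cover most of the graph, which is the cost the text refers to.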

Metaheuristic optimization algorithms are inspired by natural and physical phenomena. They are generally classified into four categories [15]: swarm intelligence-based, physics-based, human-based, and evolution-based algorithms. Metaheuristic algorithms are more stochastic than traditional optimization algorithms, and their parameters can be adjusted to suit specific problems. This inherent stochasticity allows them to tackle complex nonlinear problems effectively [16] and has made them popular among researchers for their ability to yield satisfactory solutions. Applications include real-valued parameter optimization and constrained engineering problems [17], energy management [18], flow shop scheduling [19], brain tumor classification [20], biomedical feature selection [21], combinatorial optimization [22], supply chain management [23], wireless sensor network localization [24], and clustering [25].

Swarm intelligence algorithms simulate the social behaviors of swarming organisms. Particle swarm optimization (PSO) [26] is the most popular among researchers; it simulates the foraging behavior of birds and is characterized by a simple structure and few parameters [27]. Jiang et al. [28] divided the flock into several sub-flocks that perform PSO-based updates and iterations simultaneously. In the later stages of iteration, each sub-flock shares its optimal position, which significantly enhances both the exploration and exploitation capabilities of PSO. However, this strategy does not address the convergence speed of PSO. Sun et al. [29] divided the flock into main flocks and sub-flocks: the main flocks conduct a random search of the search space to maintain diversity, while the sub-flocks explore regions near the local optimum. The synergy between these two populations significantly improves convergence speed; however, the algorithm remains prone to falling into local optima. Shao et al. [30] designed an adaptive linear variation of acceleration coefficients and maximum velocities to introduce random mutations for low-quality particles, thereby improving solution quality and reducing the risk of falling into local optima. However, the convergence accuracy of this algorithm remains lower than that of more recent swarm intelligence algorithms. To enhance convergence accuracy, Jiang et al. [31] incorporated an information feedback model into the Flamingo Search Algorithm (FSA). However, the stability of the modified algorithm remains relatively weak. Therefore, the authors of [32] combined PSO, which incorporates three inertia weight coefficients, with the Symbiotic Organism Search (SOS) algorithm to improve both convergence accuracy and stability.

Evolution-based optimization algorithms identify near-optimal solutions by simulating the natural concept of survival of the fittest. Common approaches include the genetic algorithm (GA) [33] and differential evolution (DE) [34]. The genetic algorithm recasts the optimization problem as a process of chromosome crossover and recombination, as in biological evolution. However, it suffers from poor population diversity and is less suited to continuous problems. Krishna et al. [35] combined a genetic algorithm with the K-means algorithm and demonstrated the convergence of this hybrid approach. However, achieving accurate convergence remains a significant challenge.

Physics-based optimization algorithms simulate physical phenomena; one example is black hole (BH) optimization. Pashaei et al. [36] demonstrated that the binary black hole algorithm offers a notable improvement in computational complexity; however, it still depends on the choice of initial parameters. Human-based algorithms, such as HBBO, simulate the two phases of human exploration and exploitation. Liu et al. [37] proposed a particle swarm algorithm that incorporates human behavior, significantly enhancing both the convergence speed and accuracy of the algorithm. Despite these improvements, the algorithm still cannot guarantee the discovery of a globally optimal solution.

From the above discussion, it is evident that most metaheuristic algorithms are prone to getting stuck in local optima, lacking sufficient population diversity, and converging slowly.
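To make the PSO discussion concrete, the following is a minimal sketch of the canonical inertia-weight PSO, in which each particle is pulled toward its own best position and the swarm’s best position. It is not any of the cited variants; the parameter values (`w`, `c1`, `c2`, swarm size, iteration count) and the sphere test function are assumptions chosen only for illustration.

```python
import random

def pso(objective, bounds, n_particles=30, n_iters=200, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize objective over box bounds with canonical inertia-weight PSO."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                      # personal best positions
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]     # global best so far
    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia + cognitive pull (own best) + social pull (swarm best)
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # clamp to bounds
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val

def sphere(x):
    """Simple unimodal test function with its minimum of 0 at the origin."""
    return sum(v * v for v in x)

best_pos, best_val = pso(sphere, [(-5.0, 5.0)] * 3)
```

Because every particle is attracted to the same global best, diversity can collapse quickly on multimodal functions. That is the local-optimum weakness that the sub-flock and mutation strategies described above try to mitigate.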

Compared with other metaheuristic algorithms, the nutcracker optimizer (NOA) [38] is characterized by its simple structure, significant randomness, and a low tendency to get trapped in local optima. Developed by Abdel-Basset et al., NOA is a population-based optimization method. Owing to its strong optimization capability, it has attracted researchers’ attention since its introduction and has been applied in multiple areas, including solar PV model parameter extraction [39], multi-objective problems [40], fresh produce distribution [41], detection of news truthfulness and reliability [42], power system scheduling [43], and medical image classification [44]. Despite these successful applications, the nutcracker optimizer suffers from slow convergence and limited convergence accuracy. The sinh cosh optimizer (SCHO) [45] exhibits excellent exploitation capability while maintaining a good balance between exploration and exploitation. This study therefore integrates the SCHO algorithm into the nutcracker optimizer to address limitations of the NOA algorithm such as inefficient search and incomplete use of reference point information. The resulting algorithm is referred to as ISCHNOA. The contributions of this paper are as follows:

To address the inefficient search caused by the randomized foraging strategy and wide search range, we integrate the SCHO algorithm into the foraging strategy of the NOA algorithm, improving search efficiency by guiding the search toward high-quality food.

To address the rapid loss of population diversity and slow convergence in the storage strategy, we employ a nonlinear function to steer the search direction of the current optimal individual while preserving population diversity in later iterations to prevent convergence to a local optimum.

Because nutcrackers do not fully exploit information about reference point locations, this paper uses an improved SCHO algorithm to incorporate that information, which strengthens the collaborative search capability of the nutcracker population.

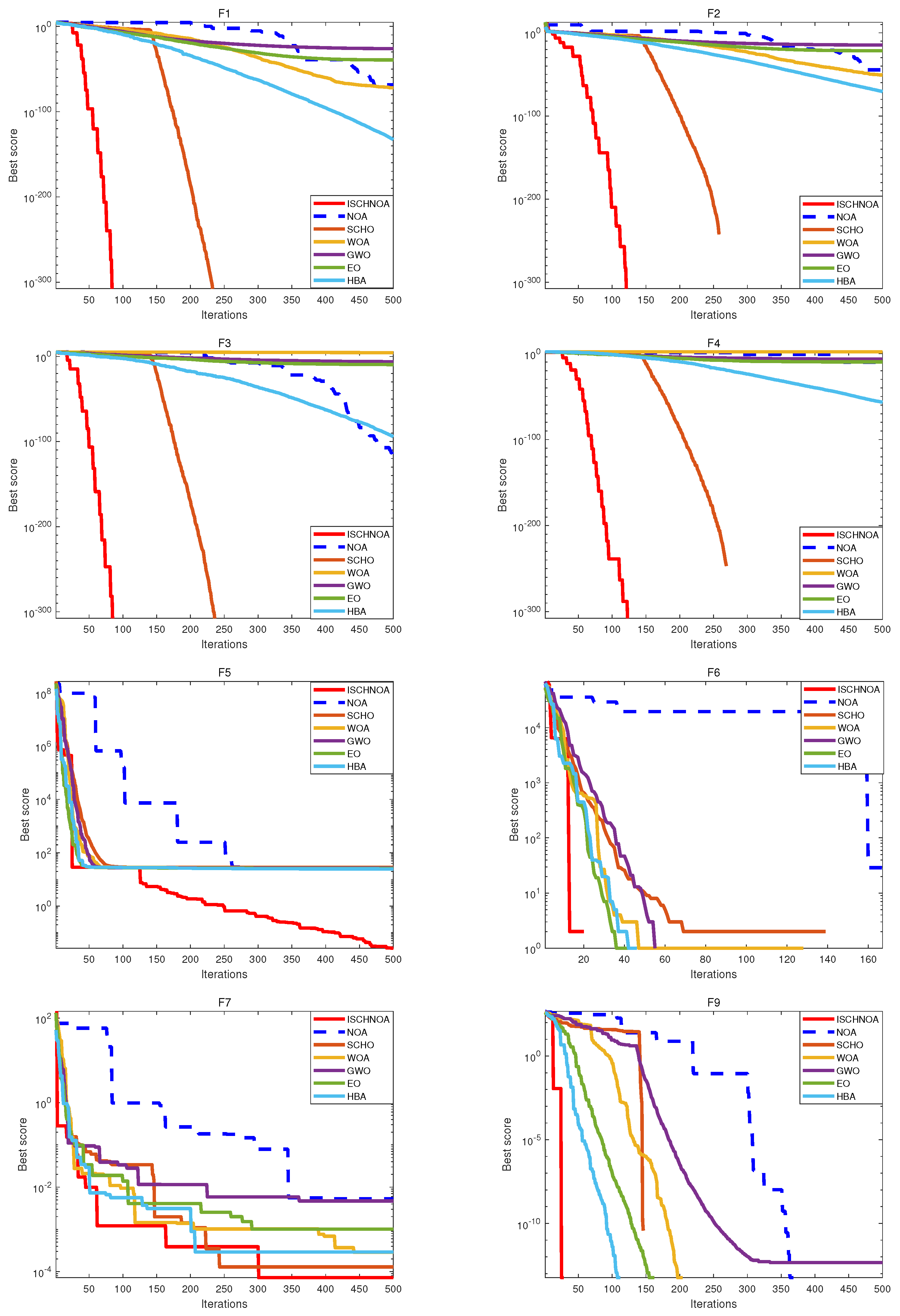

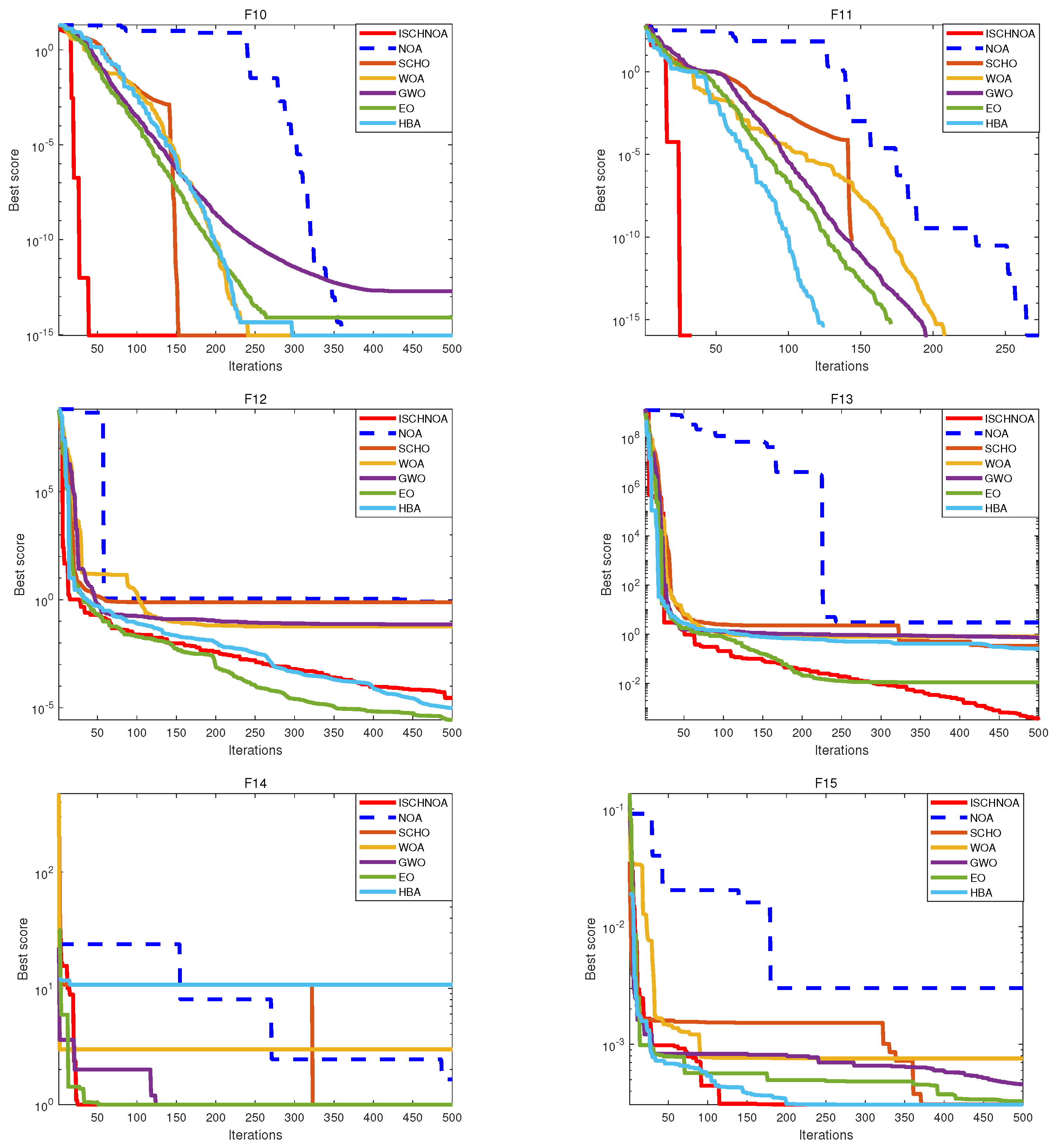

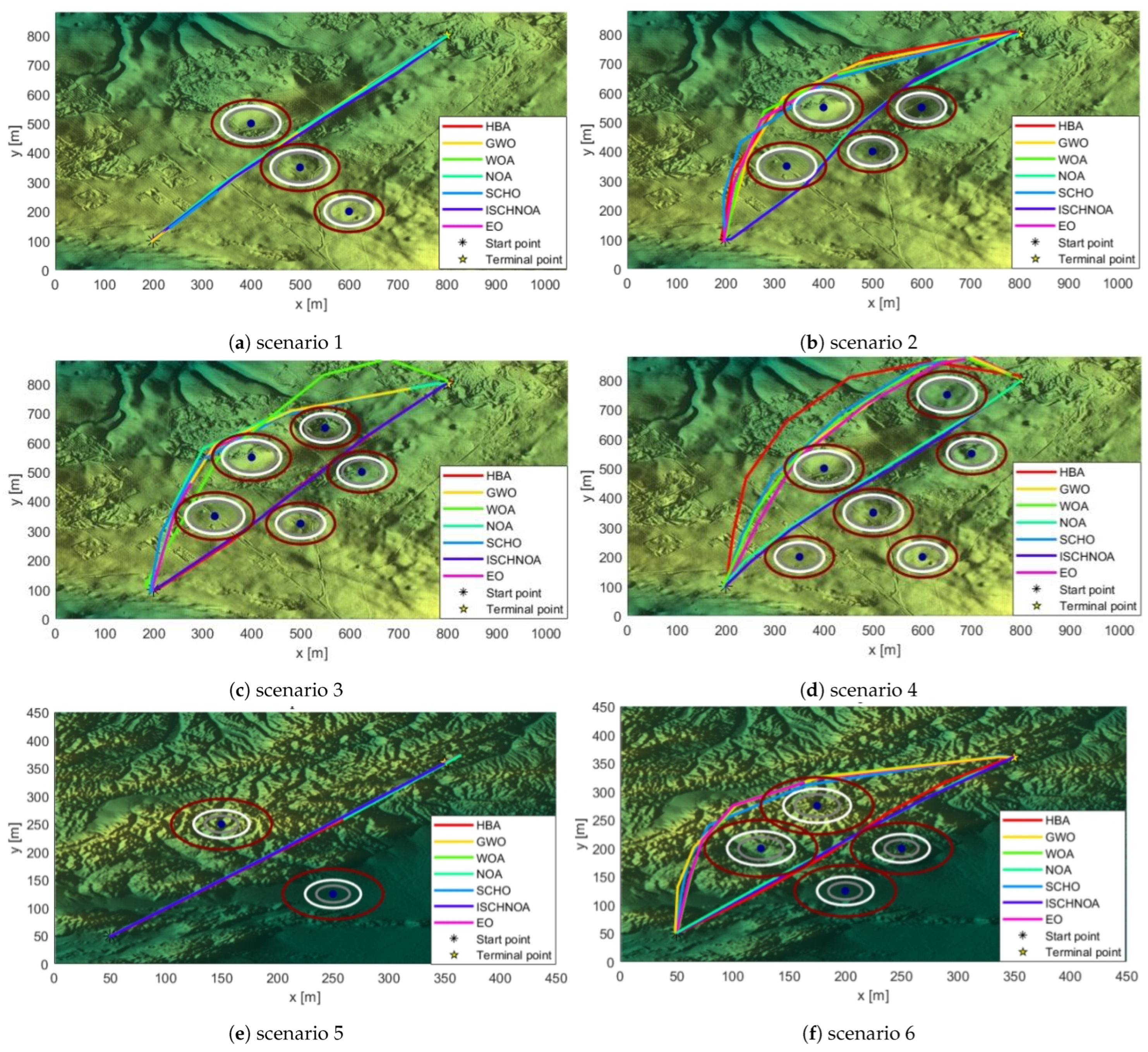

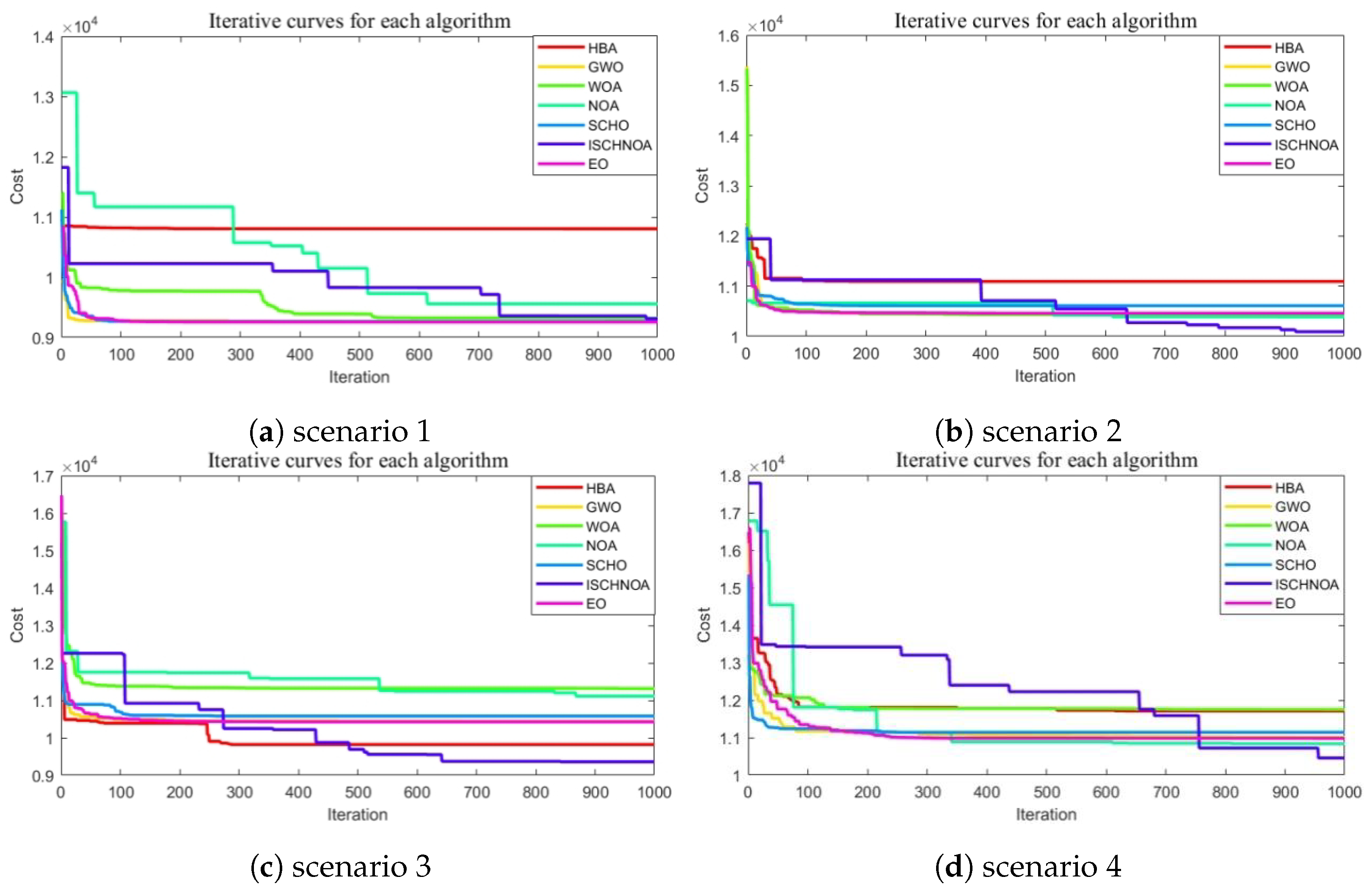

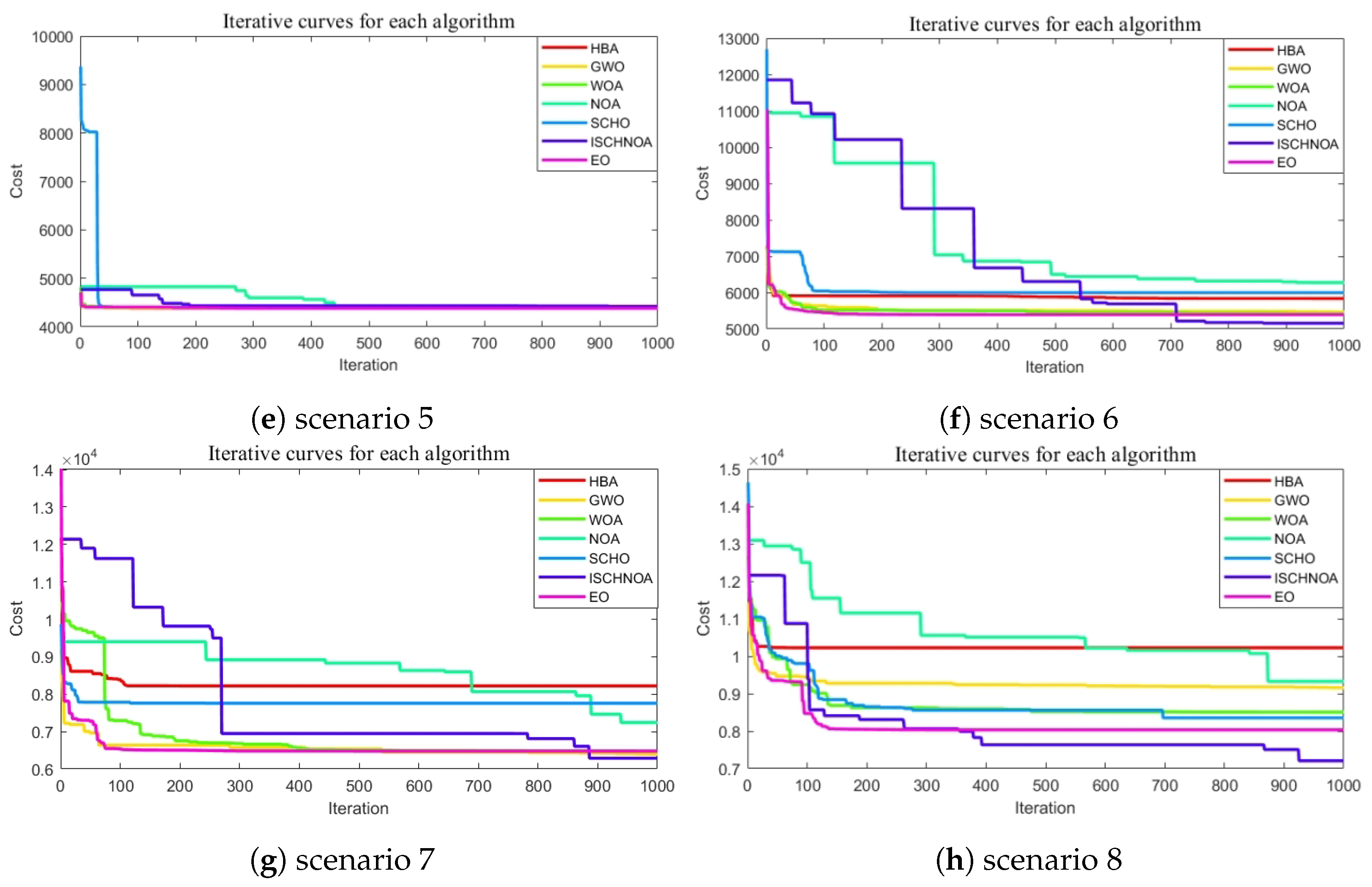

The performance of the improved algorithm is assessed on 14 classical benchmark test functions and on the CEC2014 and CEC2020 test suites. The improved algorithm is also applied to real map models with progressively increasing densities to validate its effectiveness.

ISCHNOA performs competitively against both recently proposed NOA variants and other high-performing algorithms. The remainder of this paper is structured as follows:

Section 2 provides a description of the UAV path planning problem.

Section 3 presents the NOA algorithm.

Section 4 introduces the ISCHNOA algorithm.

Section 5 assesses the performance of the ISCHNOA algorithm and applies it to 3D maps in real-world scenarios.

Section 6 provides a summary of the research.