Application of Machine Learning Based on Structured Medical Data in Gastroenterology

Abstract

1. Introduction

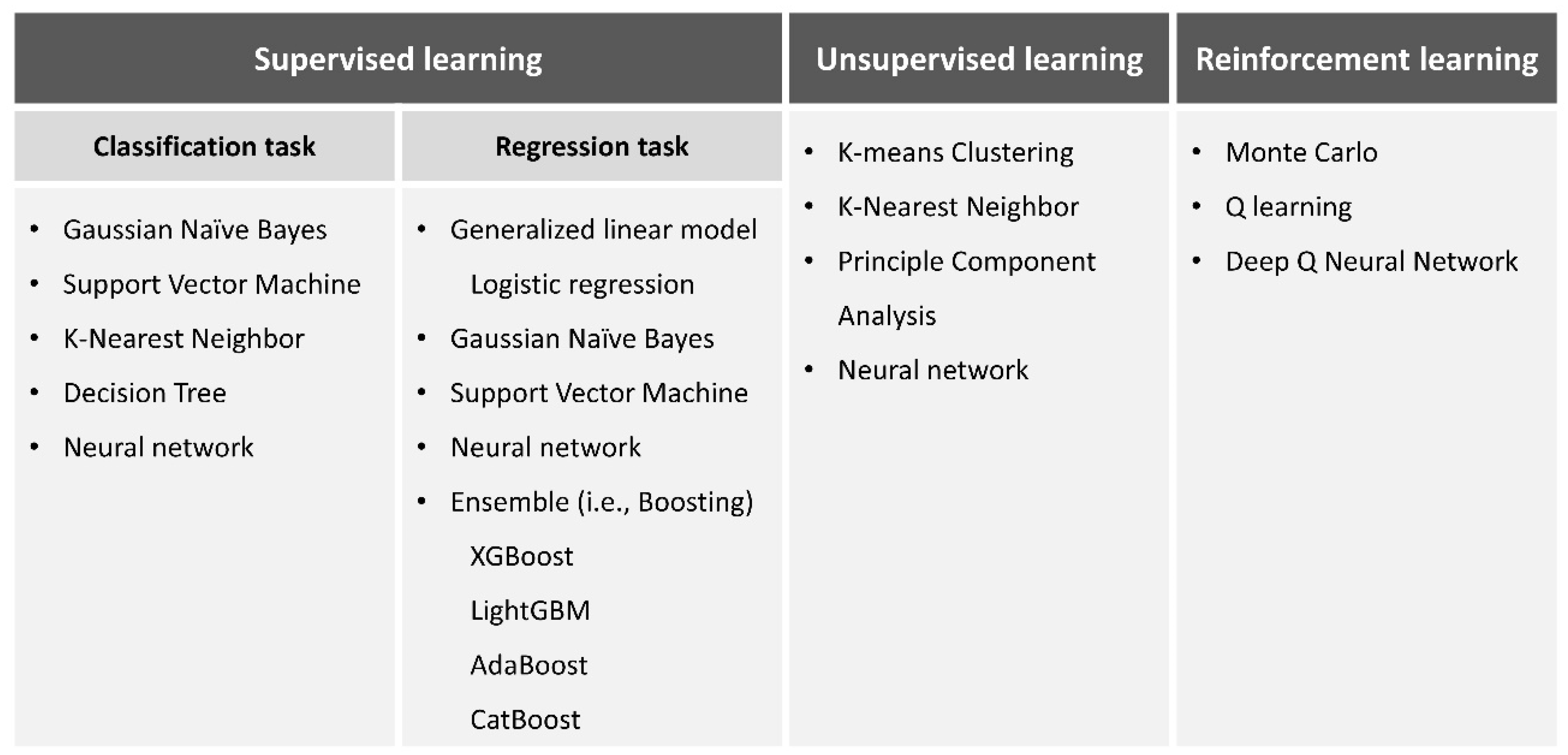

2. ML Technology

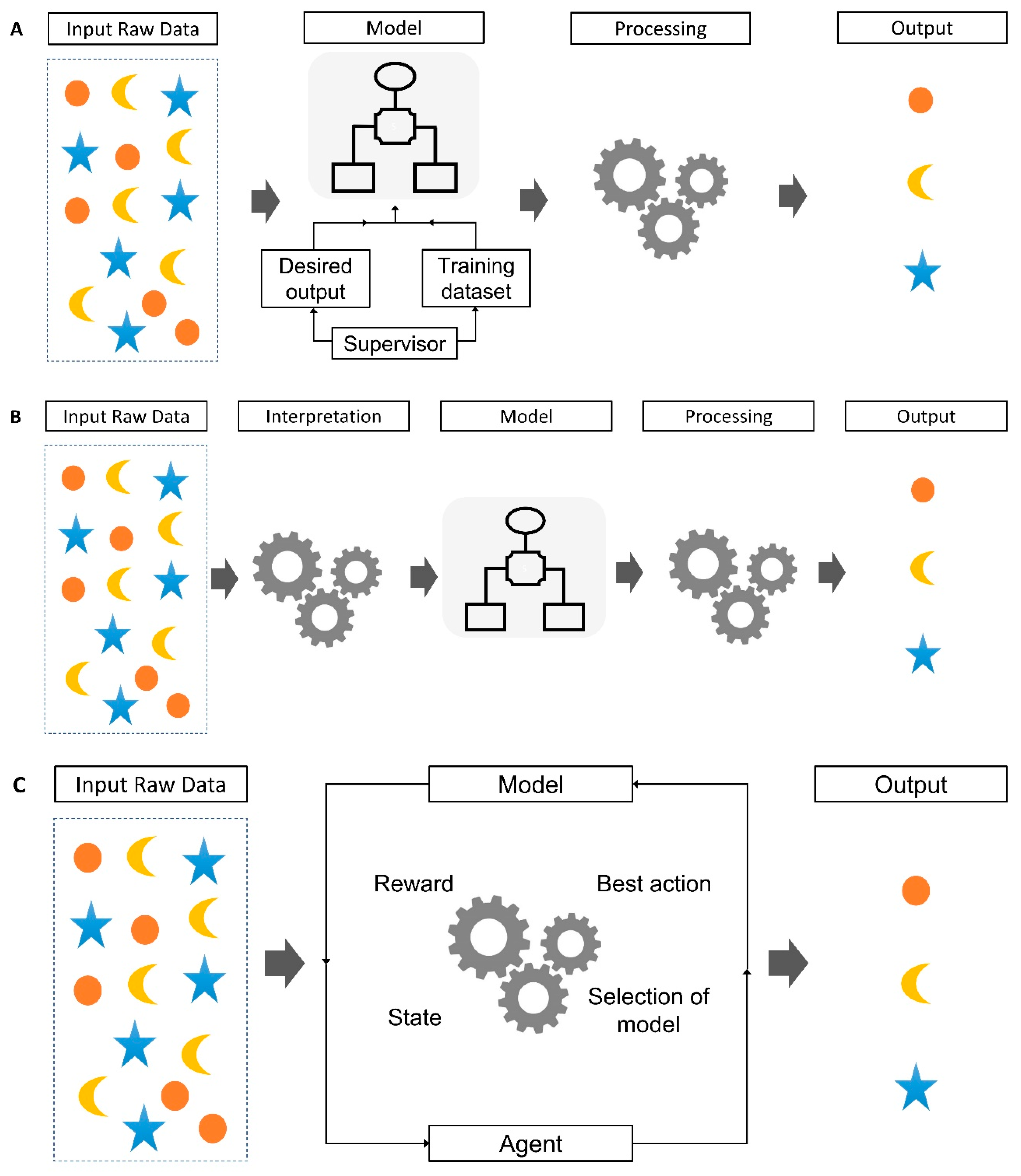

2.1. Supervised Learning

2.2. Unsupervised Learning

2.3. Reinforcement Learning

2.4. Recent Advancement of ML Analysis Models

2.5. Boosting (Hypothesis Boosting)

2.6. Bagging

2.7. Stacking (Stacked Generalization)

3. Application of ML in Gastroenterology

3.1. General Subjects

3.2. Gastrointestinal Hemorrhage

3.3. Gastric Cancer

3.4. Gastrointestinal Tumors and Cancers

4. Challenges and Future Directions for ML Application

5. Large Language Model

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Mintz, Y.; Brodie, R. Introduction to artificial intelligence in medicine. Minim. Invasive Ther. Allied Technol. 2019, 28, 73–81.

- Sakamoto, T.; Goto, T.; Fujiogi, M.; Lefor, A.K. Machine learning in gastrointestinal surgery. Surg. Today 2022, 52, 995–1007.

- McCarthy, J.; Minsky, M.L.; Rochester, N.; Shannon, C.E. A proposal for the Dartmouth summer research project on artificial intelligence, August 31, 1955. AI Mag. 2006, 27, 12.

- Zhou, J.; Hu, N.; Huang, Z.-Y.; Song, B.; Wu, C.-C.; Zeng, F.-X.; Wu, M. Application of artificial intelligence in gastrointestinal disease: A narrative review. Ann. Transl. Med. 2021, 9, 1188.

- Bang, C.S. Artificial Intelligence in the Analysis of Upper Gastrointestinal Disorders. Korean J. Helicobacter Up. Gastrointest. Res. 2021, 21, 300–310.

- Bang, C.S. Deep Learning in Upper Gastrointestinal Disorders: Status and Future Perspectives. Korean J. Gastroenterol. 2020, 75, 120–131.

- Camacho, D.M.; Collins, K.M.; Powers, R.K.; Costello, J.C.; Collins, J.J. Next-Generation Machine Learning for Biological Networks. Cell 2018, 173, 1581–1592.

- Gong, E.J.; Bang, C.S. Interpretation of Medical Images Using Artificial Intelligence: Current Status and Future Perspectives. Korean J. Gastroenterol. 2023, 82, 43–45.

- Bi, Q.; Goodman, K.E.; Kaminsky, J.; Lessler, J. What is Machine Learning? A Primer for the Epidemiologist. Am. J. Epidemiol. 2019, 188, 2222–2239.

- Adadi, A.; Adadi, S.; Berrada, M. Gastroenterology Meets Machine Learning: Status Quo and Quo Vadis. Adv. Bioinform. 2019, 2019, 1870975.

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012.

- Deo, R.C. Machine Learning in Medicine. Circulation 2015, 132, 1920–1930.

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160.

- Ahn, J.C.; Connell, A.; Simonetto, D.A.; Hughes, C.; Shah, V.H. Application of artificial intelligence for the diagnosis and treatment of liver diseases. Hepatology 2021, 73, 2546–2563.

- Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement Learning: A Survey. J. Artif. Intell. Res. 1996, 4, 237–285.

- Yang, Y.J.; Bang, C.S. Application of artificial intelligence in gastroenterology. World J. Gastroenterol. 2019, 25, 1666–1683.

- Gong, E.J.; Bang, C.S.; Lee, J.J.; Yang, Y.J.; Baik, G.H. Impact of the Volume and Distribution of Training Datasets in the Development of Deep-Learning Models for the Diagnosis of Colorectal Polyps in Endoscopy Images. J. Pers. Med. 2022, 12, 1361.

- Mahesh, B. Machine learning algorithms—A review. Int. J. Sci. Res. 2020, 9, 381–386.

- L’Heureux, A.; Grolinger, K.; Elyamany, H.F.; Capretz, M.A.M. Machine Learning with Big Data: Challenges and Approaches. IEEE Access 2017, 5, 7776–7797.

- Zhou, Z.-H. Boosting. In Encyclopedia of Database Systems; Liu, L., Özsu, M.T., Eds.; Springer: New York, NY, USA, 2018; pp. 331–334.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Naimi, A.I.; Balzer, L.B. Stacked generalization: An introduction to super learning. Eur. J. Epidemiol. 2018, 33, 459–464.

- Ting, K.M.; Witten, I.H. Issues in Stacked Generalization. J. Artif. Intell. Res. 1999, 10, 271–289.

- Wolpert, D.H. Stacked generalization. Neural Netw. 1992, 5, 241–259.

- Bang, C.S.; Ahn, J.Y.; Kim, J.H.; Kim, Y.I.; Choi, I.J.; Shin, W.G. Establishing Machine Learning Models to Predict Curative Resection in Early Gastric Cancer with Undifferentiated Histology: Development and Usability Study. J. Med. Internet Res. 2021, 23, e25053.

- Klang, E.; Freeman, R.; Levin, M.A.; Soffer, S.; Barash, Y.; Lahat, A. Machine Learning Model for Outcome Prediction of Patients Suffering from Acute Diverticulitis Arriving at the Emergency Department—A Proof of Concept Study. Diagnostics 2021, 11, 2102.

- Yoshii, S.; Mabe, K.; Watano, K.; Ohno, M.; Matsumoto, M.; Ono, S.; Kudo, T.; Nojima, M.; Kato, M.; Sakamoto, N. Validity of endoscopic features for the diagnosis of Helicobacter pylori infection status based on the Kyoto classification of gastritis. Dig. Endosc. 2020, 32, 74–83.

- Konishi, T.; Goto, T.; Fujiogi, M.; Michihata, N.; Kumazawa, R.; Matsui, H.; Fushimi, K.; Tanabe, M.; Seto, Y.; Yasunaga, H. New machine learning scoring system for predicting postoperative mortality in gastroduodenal ulcer perforation: A study using a Japanese nationwide inpatient database. Surgery 2021, 171, 1036–1042.

- Liu, Y.; Lin, D.; Li, L.; Chen, Y.; Wen, J.; Lin, Y.; He, X. Using machine-learning algorithms to identify patients at high risk of upper gastrointestinal lesions for endoscopy. J. Gastroenterol. Hepatol. 2021, 36, 2735–2744.

- Shung, D.L.; Au, B.; Taylor, R.A.; Tay, J.K.; Laursen, S.B.; Stanley, A.J.; Dalton, H.R.; Ngu, J.; Schultz, M.; Laine, L. Validation of a Machine Learning Model That Outperforms Clinical Risk Scoring Systems for Upper Gastrointestinal Bleeding. Gastroenterology 2020, 158, 160–167.

- Herrin, J.; Abraham, N.S.; Yao, X.; Noseworthy, P.A.; Inselman, J.; Shah, N.D.; Ngufor, C. Comparative Effectiveness of Machine Learning Approaches for Predicting Gastrointestinal Bleeds in Patients Receiving Antithrombotic Treatment. JAMA Netw. Open 2021, 4, e2110703.

- Seo, D.-W.; Yi, H.; Park, B.; Kim, Y.-J.; Jung, D.H.; Woo, I.; Sohn, C.H.; Ko, B.S.; Kim, N.; Kim, W.Y. Prediction of Adverse Events in Stable Non-Variceal Gastrointestinal Bleeding Using Machine Learning. J. Clin. Med. 2020, 9, 2603.

- Sarajlic, P.; Simonsson, M.; Jernberg, T.; Bäck, M.; Hofmann, R. Incidence, associated outcomes, and predictors of upper gastrointestinal bleeding following acute myocardial infarction: A SWEDEHEART-based nationwide cohort study. Eur. Heart J.-Cardiovasc. Pharmacother. 2022, 8, 483–491.

- Levi, R.; Carli, F.; Arévalo, A.R.; Altinel, Y.; Stein, D.J.; Naldini, M.M.; Grassi, F.; Zanoni, A.; Finkelstein, S.; Vieira, S.M.; et al. Artificial intelligence-based prediction of transfusion in the intensive care unit in patients with gastrointestinal bleeding. BMJ Health Care Inform. 2021, 28, e100245.

- Leung, W.K.; Cheung, K.S.; Li, B.; Law, S.Y.; Lui, T.K. Applications of machine learning models in the prediction of gastric cancer risk in patients after Helicobacter pylori eradication. Aliment. Pharmacol. Ther. 2021, 53, 864–872.

- Arai, J.; Aoki, T.; Sato, M.; Niikura, R.; Suzuki, N.; Ishibashi, R.; Tsuji, Y.; Yamada, A.; Hirata, Y.; Ushiku, T.; et al. Machine learning-based personalised prediction of gastric cancer incidence using the endoscopic and histological findings at the initial endoscopy. Gastrointest. Endosc. 2022, 95, 864–872.

- Zhou, C.; Hu, J.; Wang, Y.; Ji, M.-H.; Tong, J.; Yang, J.-J.; Xia, H. A machine learning-based predictor for the identification of the recurrence of patients with gastric cancer after operation. Sci. Rep. 2021, 11, 1571.

- Zhou, C.M.; Wang, Y.; Ye, H.T.; Yan, S.; Ji, M.; Liu, P.; Yang, J.J. Machine learning predicts lymph node metastasis of poorly differentiated-type intramucosal gastric cancer. Sci. Rep. 2021, 11, 1300.

- Mirniaharikandehei, S.; Heidari, M.; Danala, G.; Lakshmivarahan, S.; Zheng, B. Applying a random projection algorithm to optimize machine learning model for predicting peritoneal metastasis in gastric cancer patients using CT images. Comput. Methods Progr. Biomed. 2021, 200, 105937.

- Zhou, C.; Wang, Y.; Ji, M.-H.; Tong, J.; Yang, J.-J.; Xia, H. Predicting Peritoneal Metastasis of Gastric Cancer Patients Based on Machine Learning. Cancer Control 2020, 27, 107327482096890.

- Li, M.; He, H.; Huang, G.; Lin, B.; Tian, H.; Xia, K.; Yuan, C.; Zhan, X.; Zhang, Y.; Fu, W. A Novel and Rapid Serum Detection Technology for Non-Invasive Screening of Gastric Cancer Based on Raman Spectroscopy Combined with Different Machine Learning Methods. Front. Oncol. 2021, 11, 665176.

- Liu, D.; Wang, X.; Li, L.; Jiang, Q.; Li, X.; Liu, M.; Wang, W.; Shi, E.; Zhang, C.; Wang, Y.; et al. Machine Learning-Based Model for the Prognosis of Postoperative Gastric Cancer. Cancer Manag. Res. 2022, 14, 135–155.

- Chen, Y.; Wei, K.; Liu, D.; Xiang, J.; Wang, G.; Meng, X.; Peng, J. A Machine Learning Model for Predicting a Major Response to Neoadjuvant Chemotherapy in Advanced Gastric Cancer. Front. Oncol. 2021, 11, 675458.

- Rahman, S.A.; Maynard, N.; Trudgill, N.; Crosby, T.; Park, M.; Wahedally, H.; the NOGCA Project Team and AUGIS. Prediction of long-term survival after gastrectomy using random survival forests. Br. J. Surg. 2021, 108, 1341–1350.

- Wang, S.; Dong, D.; Zhang, W.; Hu, H.; Li, H.; Zhu, Y.; Zhou, J.; Shan, X.; Tian, J. Specific Borrmann classification in advanced gastric cancer by an ensemble multilayer perceptron network: A multicenter research. Med. Phys. 2021, 48, 5017–5028.

- Christopherson, K.M.; Das, P.; Berlind, C.; Lindsay, W.D.; Ahern, C.; Smith, B.D.; Subbiah, I.M.; Koay, E.J.; Koong, A.C.; Holliday, E.B.; et al. A Machine Learning Model Approach to Risk-Stratify Patients with Gastrointestinal Cancer for Hospitalization and Mortality Outcomes. Int. J. Radiat. Oncol. Biol. Phys. 2021, 111, 135–142.

- Shimizu, H.; Nakayama, K.I. A universal molecular prognostic score for gastrointestinal tumors. NPJ Genom. Med. 2021, 6, 6.

- Wang, J.; Xie, Z.; Zhu, X.; Niu, Z.; Ji, H.; He, L.; Hu, Q.; Zhang, C. Differentiation of gastric schwannomas from gastrointestinal stromal tumors by CT using machine learning. Abdom. Imaging 2021, 46, 1773–1782.

- Wang, M.; Feng, Z.; Zhou, L.; Zhang, L.; Hao, X.; Zhai, J. Computed-Tomography-Based Radiomics Model for Predicting the Malignant Potential of Gastrointestinal Stromal Tumors Preoperatively: A Multi-Classifier and Multicenter Study. Front. Oncol. 2021, 11, 582847.

- Maheswari, G.U.; Sujatha, R.; Mareeswari, V.; Ephzibah, E. The role of metaheuristic algorithms in healthcare. In Machine Learning for Healthcare; Chapman and Hall/CRC: Boca Raton, FL, USA, 2020; pp. 25–40.

- Chen, R.J.; Lu, M.Y.; Chen, T.Y.; Williamson, D.F.K.; Mahmood, F. Synthetic data in machine learning for medicine and healthcare. Nat. Biomed. Eng. 2021, 5, 493–497.

- Kokosi, T.; Harron, K. Synthetic data in medical research. BMJ Med. 2022, 1, e000167.

- Goncalves, A.; Ray, P.; Soper, B.; Stevens, J.; Coyle, L.; Sales, A.P. Generation and evaluation of synthetic patient data. BMC Med. Res. Methodol. 2020, 20, 108.

- Nayyar, A.; Gadhavi, L.; Zaman, N. Chapter 2—Machine learning in healthcare: Review, opportunities and challenges. In Machine Learning and the Internet of Medical Things in Healthcare; Singh, K.K., Elhoseny, M., Singh, A., Elngar, A.A., Eds.; Academic Press: Cambridge, MA, USA, 2021; pp. 23–45.

- ChatGPT (Mar 14 Version) [Large Language Model]. [Internet]. OpenAI. 2023. Available online: https://chat.openai.com/chat (accessed on 6 September 2023).

- Bard [Large Language Model]. Google AI. 2023. Available online: https://bard.google.com (accessed on 6 September 2023).

- Rajpurkar, P.; Lungren, M.P. The current and future state of AI interpretation of medical images. N. Engl. J. Med. 2023, 388, 1981–1990.

- Şendur, H.N.; Şendur, A.B.; Cerit, M.N. ChatGPT from radiologists’ perspective. Br. J. Radiol. 2023, 96, 20230203.

- Haug, C.J.; Drazen, J.M. Artificial Intelligence and Machine Learning in Clinical Medicine, 2023. N. Engl. J. Med. 2023, 388, 1201–1208.

| Reference | Published Year | Aim of Study | Design of Study | Number of Subjects | Type of Machine Learning Model | Input Variables | Outcomes |
|---|---|---|---|---|---|---|---|
| Klang E et al. [26] | 2021 | Prediction of outcomes in acute diverticulitis | Retrospective | 4497 patients | XGBoost | 31 clinical and biologic variables | Internal test performance: Sensitivity: 88%, Negative predictive value: 99%; External test AUC: 0.85 |
| Yoshii S et al. [27] | 2020 | Diagnosis of Helicobacter pylori infection status based on the Kyoto classification of gastritis | Prospective | 498 patients | Generalized linear model | 16 endoscopic features | Internal test performance: overall diagnostic accuracy: 82.9% |
| Konishi T et al. [28] | 2021 | Prediction of postoperative mortality in gastroduodenal ulcer perforation | Retrospective | 25,886 patients | Lasso and XGBoost | 45 clinical candidate predictors | Internal test performance: Lasso (AUC: 0.84), XGBoost (AUC: 0.88) |
| Liu Y et al. [29] | 2021 | Prediction of patient risk of upper gastrointestinal lesions to identify high risk for endoscopy | Retrospective | 620 patients | Support vector machine | 48 clinical symptoms, serological results, and pathological variables | Internal test accuracy: 91.2% |
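The outcome columns in these tables report sensitivity, specificity, negative predictive value, accuracy, and AUC. As a minimal sketch of how such figures are derived from a held-out test set, the Python below computes them from binary labels and model scores. The function names and the toy data are illustrative assumptions, not taken from any of the cited studies, and the sketch assumes both classes are present and that the model predicts at least one negative.

```python
from typing import Dict, Sequence


def confusion_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Sensitivity, specificity, NPV and accuracy for binary labels (1 = event)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    return {
        "sensitivity": tp / (tp + fn),   # share of true events that were flagged
        "specificity": tn / (tn + fp),   # share of non-events that were cleared
        "npv": tn / (tn + fn),           # share of negative predictions that were right
        "accuracy": (tp + tn) / len(pairs),
    }


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive case outscores a random
    negative case (ties count as one half); a simple O(n^2) pairwise version."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical toy test set: 3 events, 5 non-events.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]                      # thresholded predictions
scores = [0.9, 0.8, 0.3, 0.1, 0.2, 0.4, 0.05, 0.7]    # predicted risk scores

results = confusion_metrics(y_true, y_pred)
toy_auc = auc(y_true, scores)
print(results, round(toy_auc, 3))
```

Threshold-based measures (sensitivity, specificity, NPV, accuracy) depend on the cut-off chosen for a positive prediction, whereas AUC summarizes ranking quality across all cut-offs, which is one reason the two kinds of figures are not directly comparable between studies.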

| Reference | Published Year | Aim of Study | Design of Study | Number of Subjects | Type of Machine Learning Model | Input Variables | Outcomes |
|---|---|---|---|---|---|---|---|
| Shung DL et al. [30] | 2019 | Risk of hospital-based intervention or death in patients with upper gastrointestinal hemorrhage | Retrospective | 2357 patients | XGBoost | 24 clinical and biologic variables | Internal test AUC: 0.91; External test AUC: 0.90 |
| Herrin J et al. [31] | 2021 | Prediction of gastrointestinal hemorrhage in patients receiving antithrombotic treatment | Retrospective | 306,463 patients | Regularized Cox proportional hazards regression model and the XGBoost model | 32 clinical variables | Internal test AUC: 0.67 at 6 months, 0.66 at 12 months |
| Seo DW et al. [32] | 2020 | Prediction of adverse events in stable non-variceal gastrointestinal hemorrhage | Retrospective | 1439 patients | Random forest | 38 clinicopathological variables | Internal test AUC: 0.92 |
| Sarajlic P et al. [33] | 2021 | Predictors of upper gastrointestinal hemorrhage following acute myocardial infarction | Nationwide cohort, prospective | 149,477 (acute myocardial infarction patients with antithrombotic therapy) | Random forest | 25 predictor variables | C-index: 0.73 |
| Levi R et al. [34] | 2020 | Prediction of rebleeding in patients admitted to the intensive care unit with gastrointestinal hemorrhage | Retrospective | 14,620 patients | Ensemble machine learning model | 20 clinical and pathological variables | Internal test AUC: 0.81 |

| Reference | Published Year | Aim of Study | Design of Study | Number of Subjects | Type of Machine Learning Model | Input Variables | Outcomes |
|---|---|---|---|---|---|---|---|
| Leung W et al. [35] | 2020 | Prediction of gastric cancer risk in patients after Helicobacter pylori eradication | Retrospective | 89,568 patients | XGBoost | 26 clinical variables | Internal test performance: AUC: 0.97, Sensitivity: 98.1%, Specificity: 93.6% |
| Arai J et al. [36] | 2021 | Prediction of gastric cancer incidence | Retrospective | 1099 patients | GBDT | 8 clinical and biological variables | C-index: 0.84 |
| Zhou C et al. [37] | 2021 | Prediction of recurrence of gastric cancer after operation | Retrospective | 2012 patients | Logistic regression | 10 clinical variables | Internal test accuracy: 0.801 |
| Zhou CM et al. [38] | 2021 | Prediction of lymph node metastasis of poorly differentiated-type intramucosal gastric cancer | Retrospective | 1169 patients with postoperative gastric cancer | XGBoost | 10 clinicopathological variables | Internal test accuracy: 0.95 |
| Mirniaharikandehei S et al. [39] | 2021 | Prediction of gastric cancer metastasis before surgery | Retrospective | 159 patients | GBM with random projection algorithm | 5 clinical variables and abdominal computed tomography images | Internal test performance: Accuracy: 71.2%, Precision: 65.8%, Sensitivity: 43.1%, Specificity: 87.1% |
| Zhou C et al. [40] | 2020 | Prediction of peritoneal metastasis of gastric cancer | Retrospective | 1080 patients | GBM, light GBM | 20 clinical and biological variables | Internal test accuracy: 0.91 |
| Bang et al. [25] | 2021 | Prediction of the possibility of curative resection in undifferentiated-type early gastric cancer prior to endoscopic submucosal dissection | Retrospective | 3105 undifferentiated-type early gastric cancers | XGBoost | 8 clinical variables | External test accuracy: 89.8% |
| Li M et al. [41] | 2021 | Differentiation of serum samples from stomach cancer patients and healthy controls | Retrospective | 109 patients (including 35 in stage I, 14 in stage II, 35 in stage III, and 25 in stage IV) and 104 healthy volunteers | Random forest in conjunction with Raman spectroscopy | Serum samples | Internal test performance: Accuracy: 92.8%, Sensitivity: 94.7%, Specificity: 90.8% |
| Liu D et al. [42] | 2021 | Prediction of prognosis of postoperative gastric cancer | Retrospective | 17,690 patients with gastric cancer (additional external test set: 955) | Lasso regression | 12 clinicopathological variables | Internal test and external test AUC: 0.8 |
| Chen Y et al. [43] | 2021 | Prediction of major pathological response to neoadjuvant chemotherapy in advanced gastric cancer | Retrospective | 221 patients | Lasso regression | 15 clinicopathological variables | C-index: 0.763 |
| Rahman SA et al. [44] | 2021 | Prediction of long-term survival after gastrectomy | Retrospective | 2931 patients | Non-linear random survival forests bootstrapping | 29 clinical and pathological variables | Internal test performance: time-dependent AUC at 5 years: 0.80, C-index: 0.76 |
| Wang S et al. [45] | 2020 | Borrmann classification in advanced gastric cancer | Retrospective | 597 AGC patients (additional 292 patients for external test) | Ensemble multi-layer neural network | Computed tomography images (Borrmann I/II/III vs. IV and Borrmann II vs. III) | External test performance: Borrmann I/II/III vs. IV AUC: 0.7, Borrmann II vs. III AUC: 0.73 |

| Reference | Published Year | Aim of Study | Design of Study | Number of Subjects | Type of Machine Learning Model | Input Variables | Outcomes |
|---|---|---|---|---|---|---|---|
| Christopherson KM et al. [46] | 2020 | Prediction of 30-day unplanned hospitalization for gastrointestinal malignancies | Prospective | 1341 patients (consecutive patients undergoing gastrointestinal radiation treatment) | GBDT | 787 predefined candidate clinical and treatment variables | Internal test performance: AUC: 0.82 |
| Shimizu H et al. [47] | 2021 | Development of universal molecular prognostic score based on the expression state of 16 genes in colorectal cancer | Retrospective | Over 1200 patients | Lasso regression | Gene scoring | The established universal genetic prognostic classifier was applied to patients with gastric cancer and showed acceptable prediction on Kaplan–Meier curves |
| Wang J et al. [48] | 2020 | Differentiation of gastric schwannomas from gastrointestinal stromal tumors using computed tomography images | Retrospective | 188 patients (49 with schwannomas and 139 with gastrointestinal stromal tumors) | Logistic regression | 8 clinical characteristics and computed tomography findings | Internal test performance: AUC: 0.97 |
| Wang M et al. [49] | 2021 | Prediction of risk stratification for gastrointestinal stromal tumors | Retrospective | 180 patients with gastrointestinal stromal tumors (additional 144 patients for external test) | Random forest | Computed tomography images (Top 10 features with importance value above 5) | External test performance: AUC: 0.90 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, H.-J.; Gong, E.-J.; Bang, C.-S. Application of Machine Learning Based on Structured Medical Data in Gastroenterology. Biomimetics 2023, 8, 512. https://doi.org/10.3390/biomimetics8070512
