Abstract

The sand cat is a creature well adapted to living in the desert. Sand cat swarm optimization (SCSO) is a biomimetic swarm intelligence algorithm inspired by the lifestyle of the sand cat. Although the SCSO has achieved good optimization results, it still has drawbacks, such as being prone to falling into local optima, low search efficiency, and limited optimization accuracy, which stem from limitations in some innate biological conditions. To address these shortcomings, this paper proposes three improvement strategies: a novel opposition-based learning strategy, a novel exploration mechanism, and a biological elimination update mechanism. Based on the original SCSO, a multi-strategy improved sand cat swarm optimization (MSCSO) is proposed. To verify the effectiveness of the proposed algorithm, the MSCSO algorithm is applied to two types of problems: global optimization and feature selection. The global optimization tests include twenty non-fixed dimensional functions (Dim = 30, 100, and 500) and ten fixed dimensional functions, while feature selection comprises 24 datasets. Mathematical and statistical comparisons with several state-of-the-art (SOTA) algorithms, made from multiple perspectives, show that the proposed MSCSO algorithm has good optimization ability and can adapt to a wide range of optimization problems.

1. Introduction

With the development of the information age, data volume has grown explosively. The problems encountered in fields such as engineering [1], ecology [2], information [3], manufacturing [4], design [5], and management [6] are becoming increasingly complex, and most of them are multi-objective [7] and high-dimensional [8]. Swarm intelligence algorithms are an important way to solve optimization problems [9]: with simple principles, easy implementation, and excellent performance, they have been favored by more and more scholars, and research in this area is also increasing [10]. Heuristic intelligence algorithms use random search to approach the global optimum. Because they do not rely on function gradients, they do not require the objective function to be continuously differentiable, which makes it possible to optimize objective functions that cannot be handled by gradient descent [11,12].

The SCSO is a recently proposed, efficient swarm intelligence algorithm that simulates the living habits of sand cats for optimization. It belongs to the class of evolutionary algorithms that simulate biological behavior [13]. The SCSO algorithm has a simple structure and is easy to implement. Compared with cat swarm optimization (CSO) [14], the grey wolf optimizer (GWO) [15], the whale optimization algorithm (WOA) [16], the salp swarm algorithm (SSA) [17], the gravitational search algorithm (GSA) [18], particle swarm optimization (PSO) [19], black widow optimization (BWO) [20], and other algorithms, it has solid local exploitation capabilities. Although the SCSO algorithm has achieved good results, its low population diversity and single exploration angle lead to slow convergence and low solution accuracy, and it is prone to falling into local optima during the exploration stage of complex problems. Because natural organisms are abundant and their survival habits are easy to understand and accept, evolutionary algorithms that simulate biological patterns have become a hot research topic; examples include the Genghis Khan shark optimizer (GKSO) [21], Harris hawks optimization (HHO) [22], the snake optimizer (SO) [23], the dung beetle optimizer (DBO) [24], and the crayfish optimization algorithm (COA) [25]. The food chain underlies the survival of the fittest, and many organisms have inherent shortcomings that carry over to the evolutionary algorithms simulating their habits. Although such algorithms can solve numerous optimization problems, their optimization performance sometimes leaves room for improvement. Therefore, after a mathematical model of a biological habit has been constructed, it can be refined with additional mathematical theories to obtain a better model for solving optimization problems [26]. This approach often achieves good optimization results. Farzad Kiani et al. proposed chaotic sand cat swarm optimization [27], Seyyedabbasi proposed a binary sand cat swarm optimization algorithm [28], Amjad Qtaish et al. proposed memory-based sand cat swarm optimization [29], Wu et al. proposed a modified sand cat swarm optimization algorithm [30], and Farzad Kiani et al. proposed an enhanced sand cat swarm optimization inspired by the political system [31]. Our research team is also committed to improving the effectiveness of original biological intelligence algorithms by introducing mathematical theories. We have proposed algorithms such as the enhanced snake optimizer (ESO) [32], hybrid golden jackal optimization and golden sine algorithm with dynamic lens-imaging learning (LSGJO) [33], and the reptile search algorithm considering different flight heights (FRSA) [34], to provide some ideas for solving optimization problems.

Although SCSO has achieved good results on some optimization problems, it is not perfect. On higher-dimensional and multi-feature problems, the SCSO algorithm converges slowly, and its tendency to fall into local optima is fully exposed. To improve the effectiveness of the SCSO and help it overcome some physiological limitations, this paper proposes three novel strategies: a novel opposition-based learning strategy, a novel exploration mechanism, and a biological elimination update mechanism. These strategies help the SCSO quickly jump out of local optima, accelerate convergence speed, and improve optimization accuracy.

To verify the effectiveness of the MSCSO algorithm proposed in this paper, the algorithm was applied to two kinds of problems, global optimization (containing 30 functions) [35] and feature selection (containing 24 datasets) [36], both of which are common complex problems in many fields. Global optimization includes unimodal multidimensional functions, multimodal multidimensional functions, and multimodal fixed-dimensional functions. Unimodal functions are used to test the exploitation ability of optimization algorithms, multimodal functions are used to test their exploration ability, and multidimensional functions are used to test their stability. Feature selection is considered an NP-hard problem: a dataset with N features has 2^N possible feature subsets. Metaheuristic algorithms are widely used to find good solutions to NP-hard problems. For example, Ma et al. [37], Wu et al. [38], and Fan et al. [39] used global optimization functions to test their proposed algorithms, while Wang et al. [40], Lahmar et al. [41], and Turkoglu et al. [42] used feature selection datasets. Finally, comparison with many advanced algorithms on global optimization and feature selection problems shows that the improved algorithm proposed in this paper has excellent performance.

The main contributions of this paper are as follows:

- Three improvement strategies (novel opposition-based learning strategy, novel exploration mechanism, and biological elimination update mechanism) are used to improve the optimization performance of the SCSO algorithm.

- Thirty standard test functions for intelligent optimization algorithm testing are used to evaluate the proposed MSCSO and compare the results with 11 other advanced optimization algorithms.

- Twenty-four feature selection datasets were used to evaluate the proposed MSCSO and compare the results with other advanced optimization algorithms.

The remainder of this paper is organized as follows: Section 1 introduces the background of intelligent optimization algorithms, Section 2 introduces the original SCSO, and Section 3 presents the improvement strategies designed in this paper together with the proposed MSCSO. Section 4 applies the proposed MSCSO to global optimization and feature selection problems and describes the corresponding statistical results. Section 5 summarizes the entire paper and looks forward to future research directions.

2. Original SCSO

The sand cat is the only type of cat that lives in the desert and can walk on soft, hot sand. With its superb hearing, it can detect low-frequency noise, locate and track prey moving on or under the ground, and then capture it. The SCSO is a novel biomimetic optimization algorithm that simulates this behavior to carry out the optimization process. In the SCSO, the detection and tracking of prey by sand cats corresponds to the exploration phase of the algorithm, while the capture of prey corresponds to the exploitation phase.

In SCSO, the generation method of the initial solutions for the sand cat is shown in Equations (1) and (2).

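$$S_i = [x_{i,1}, x_{i,2}, \ldots, x_{i,d}], \quad i \in [1, N] \quad (1)$$

$$x_{i,j} = lb_j + rand \cdot (ub_j - lb_j) \quad (2)$$

where $S_i$ represents the position of the i-th sand cat, $i \in [1, N]$, $N$ is the population size, $d$ is the dimension of the problem, $x_{i,j}$ indicates the position of the i-th sand cat in the j-th dimension, $rand$ is a random number between 0 and 1, and $ub_j$ and $lb_j$ are the upper and lower boundaries of the j-th dimensional space, respectively.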
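As an illustration of the initialization step described above, the following minimal sketch samples each coordinate uniformly between its lower and upper boundary. The function name `init_population` and its parameters are illustrative choices, not names taken from the paper.

```python
import random


def init_population(n_cats, lower, upper, rng=None):
    """Sample n_cats positions uniformly inside the box [lower, upper].

    lower and upper are per-dimension bounds of equal length (assumed names).
    """
    if rng is None:
        rng = random.Random()
    return [
        # One coordinate per dimension: lb_j + rand * (ub_j - lb_j)
        [lb + rng.random() * (ub - lb) for lb, ub in zip(lower, upper)]
        for _ in range(n_cats)
    ]


# Example: five sand cats in a two-dimensional search space.
population = init_population(5, lower=[-10.0, 0.0], upper=[10.0, 1.0],
                             rng=random.Random(0))
```

Passing a seeded `random.Random` makes the sketch reproducible, which is convenient when the same initial population has to be shared between algorithms under comparison.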

Due to its unique ear canal structure, the sand cat can perceive low-frequency noise, which helps it judge the situation from the noise, search for or track prey, and switch between the stages of surrounding (exploration) and hunting (exploitation). The sand cat's sensing of low-frequency noise is modeled by Equations (3)–(5).

$$r_G = s_M - \frac{s_M \cdot t}{T} \quad (3)$$

$$R = 2 \cdot r_G \cdot rand - r_G \quad (4)$$

$$r = r_G \cdot rand \quad (5)$$

where $s_M$ is the constant 2, $r_G$ decreases linearly from 2 to 0, $t$ represents the current number of iterations, $T$ represents the maximum number of iterations, $R$ represents the transition control between exploration and exploitation, each occurrence of $rand$ is an independent random number between 0 and 1, and $r$ represents the sensitivity range of each sand cat.
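The sensitivity schedule can be sketched as follows. This is a minimal illustration assuming the usual SCSO formulation, in which the general sensitivity falls linearly from 2 to 0, the transition parameter R is drawn uniformly from [-r_G, r_G], and the per-cat range r is drawn from [0, r_G). The function names are illustrative.

```python
import random

S_M = 2.0  # hearing-sensitivity constant stated in the text


def general_sensitivity(t, t_max, s_m=S_M):
    """r_G: decreases linearly from s_m at t = 0 to 0 at t = t_max."""
    return s_m - s_m * t / t_max


def transition_and_range(r_g, rng):
    """Return (R, r) for one sand cat.

    R is uniform in [-r_g, r_g] and controls exploration vs. exploitation;
    r is uniform in [0, r_g) and is the cat's own sensitivity range.
    """
    big_r = 2.0 * r_g * rng.random() - r_g
    small_r = r_g * rng.random()
    return big_r, small_r


rng = random.Random(42)
r_g = general_sensitivity(t=10, t_max=500)
R, r = transition_and_range(r_g, rng)
```

Under these assumptions |R| can exceed 1 only while r_G > 1, that is, during the first half of the iterations, so exploration moves can occur only early in the run.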

When |R| > 1, the SCSO enters the exploration phase, and its position update method is shown in Equation (6).

where represents the position of the i-th sand cat in the j-th dimension during the t-th iteration. represents the position of the i-th sand cat in the j-th dimension during the (t+1)-th iteration. is the position of the r-th sand cat in the j-th dimension of the sand cat group during the t-th iteration. is a random number between 0 and 1.

When |R| ≤ 1, the SCSO enters the exploitation phase, and its position update method is shown in Equations (7) and (8).

$$x_{rnd,j}^{t} = \left| rand \cdot x_{b,j}^{t} - x_{i,j}^{t} \right| \quad (7)$$

$$x_{i,j}^{t+1} = x_{b,j}^{t} - r \cdot x_{rnd,j}^{t} \cdot \cos(\theta) \quad (8)$$

where $x_{rnd,j}^{t}$ represents the random position at the t-th iteration, which ensures that the sand cat can approach its prey. $x_{b,j}^{t}$ is the position of the optimal individual in the sand cat group in the j-th dimension during the t-th iteration. $rand$ is a random number between 0 and 1. $\theta$ is assigned by the roulette wheel algorithm. $x_{i,j}^{t+1}$ represents the position of the i-th sand cat in the j-th dimension during the (t + 1)-th iteration.

The pseudocode of the SCSO is shown in Algorithm 1.

| Algorithm 1 | The pseudocode of the SCSO |
|---|---|
| 1. | Initialize the population Si |
| 2. | Calculate the fitness function based on the objective function |
| 3. | Initialize r, r_G, and R |
| 4. | While (t <= T) |
| 5. | For each search agent |
| 6. | Obtain a random angle θ based on the Roulette Wheel Selection (0° ≤ θ ≤ 360°) |
| 7. | If (abs(R) > 1) |
| 8. | Update the search agent position based on Equation (6) |
| 9. | Else |
| 10. | Update the search agent position based on Equation (8) |
| 11. | End |
| 12. | End |
| 13. | t = t + 1 |
| 14. | End |
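The loop in Algorithm 1 can be sketched in plain Python as below. This is a hedged illustration, not the authors' implementation. Two details are assumptions: the exploration move follows the commonly published SCSO form, in which a cat moves relative to a randomly chosen member of the group, and a uniformly drawn angle stands in for the roulette wheel selection. The name `scso` and its parameters are illustrative.

```python
import math
import random


def scso(objective, lower, upper, n_cats=30, t_max=200, seed=0):
    """Minimal SCSO sketch for minimization; returns (best_position, best_fitness)."""
    rng = random.Random(seed)
    dim = len(lower)
    # Steps 1-2: initialize the population and evaluate it.
    cats = [[lb + rng.random() * (ub - lb) for lb, ub in zip(lower, upper)]
            for _ in range(n_cats)]
    best = min(cats, key=objective)[:]
    best_fit = objective(best)

    for t in range(t_max):
        r_g = 2.0 - 2.0 * t / t_max              # general sensitivity, 2 -> 0
        for i in range(n_cats):
            r = r_g * rng.random()               # sensitivity range of this cat
            big_r = 2.0 * r_g * rng.random() - r_g
            # ASSUMPTION: a uniform angle replaces roulette wheel selection.
            theta = math.radians(rng.uniform(0.0, 360.0))
            # ASSUMPTION: exploration is relative to a random group member.
            candidate = cats[rng.randrange(n_cats)]
            new_pos = []
            for j in range(dim):
                if abs(big_r) > 1.0:             # exploration
                    x = r * (candidate[j] - rng.random() * cats[i][j])
                else:                            # exploitation around the best cat
                    rnd = abs(rng.random() * best[j] - cats[i][j])
                    x = best[j] - r * rnd * math.cos(theta)
                new_pos.append(min(max(x, lower[j]), upper[j]))  # keep in bounds
            cats[i] = new_pos
            fit = objective(new_pos)
            if fit < best_fit:                   # track the best solution so far
                best, best_fit = new_pos[:], fit
    return best, best_fit


def sphere(x):
    """Simple unimodal test function with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


best, best_fit = scso(sphere, [-10.0] * 5, [10.0] * 5, n_cats=20, t_max=100)
```

The sketch clips positions to the search box after every move; the pseudocode does not state a boundary rule for the original SCSO, so this is one reasonable choice among several.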

3. Proposed MSCSO

The SCSO was proposed by Seyyedabbasi and Kiani in 2022 as a biomimetic intelligent algorithm. By simulating the strong hunting and survival abilities of sand cats, the SCSO achieves excellent optimization ability. However, according to the no-free-lunch principle, no single method can solve all problems [43], and the SCSO also encounters difficulties on some optimization problems. This paper introduces novel mathematical theories to improve the SCSO, enhance its effectiveness, and help it overcome some physiological limitations. Three novel strategies are proposed, namely, a novel opposition-based learning strategy, a novel exploration mechanism, and a biological elimination update mechanism, to help the SCSO quickly jump out of local optima, accelerate convergence speed, and improve optimization accuracy.

3.1. Nonlinear Lens Imaging Strategy

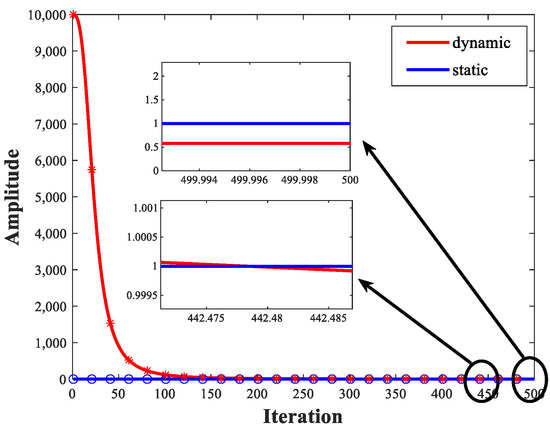

The lens imaging strategy is a form of opposition-based learning [44]. An object on one side of a convex lens is imaged through the lens onto the other side, and the image point may be a better solution than the original one. However, in traditional convex lens imaging mechanisms, the imaging coefficient is usually a fixed value, which is not conducive to population diversity. Therefore, this paper proposes a novel lens imaging strategy that expands the diversity of the population and increases the possibility of obtaining high-quality solutions by using a dynamically updated imaging coefficient, which is calculated by Equations (9)–(11). Figure 1 shows the variations of the static lens imaging strategy and the dynamic lens imaging strategy. The dynamic lens imaging strategy can search more effective regions, which improves the population's diversity and enhances the algorithm's global search ability.

where is the original solution; is a new solution obtained through the lens imaging strategy.

Figure 1.

Variations of the static lens imaging strategy and the dynamic lens imaging strategy.
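To make the idea of lens-imaging opposition concrete, the sketch below uses the widely used lens-imaging formula, in which a point is mirrored about the midpoint of its bounds and scaled by an imaging coefficient k. The dynamic schedule `dynamic_k` is purely hypothetical and is not the paper's Equations (9)–(11); it only illustrates that the coefficient can change with the iteration counter.

```python
def lens_opposite(x, lower, upper, k):
    """Lens-imaging opposite of x; with k = 1 this is plain opposition lb + ub - x."""
    return [
        (lb + ub) / 2.0 + (lb + ub) / (2.0 * k) - xj / k
        for xj, lb, ub in zip(x, lower, upper)
    ]


def dynamic_k(t, t_max, k_min=1.0, k_max=10.0):
    """HYPOTHETICAL schedule: k grows from k_min to k_max over the run."""
    return k_min + (k_max - k_min) * (t / t_max) ** 0.5


def lens_learning_step(x, objective, lower, upper, t, t_max):
    """Keep the lens image only if it improves the objective (greedy choice)."""
    image = lens_opposite(x, lower, upper, dynamic_k(t, t_max))
    return image if objective(image) < objective(x) else x


# With k = 1 the image of 1 in [0, 10] is the ordinary opposite point 9.
example = lens_opposite([1.0], [0.0], [10.0], k=1.0)
```

A larger k pulls the image toward the midpoint of the bounds, so varying k over the run changes how far the opposite point lands from the original solution.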

3.2. Novel Exploration Mechanisms

In the exploitation phase of the original SCSO, the sensitivity range of the sand cat is assumed to be a circle, and the direction of movement is determined by a random angle θ on that circle. Because the selected angle lies between 0° and 360°, cos θ lies between −1 and 1. In this way, each member of the group can move in a different circumferential direction in the search space. SCSO uses a roulette wheel selection algorithm to select a random angle for each sand cat.

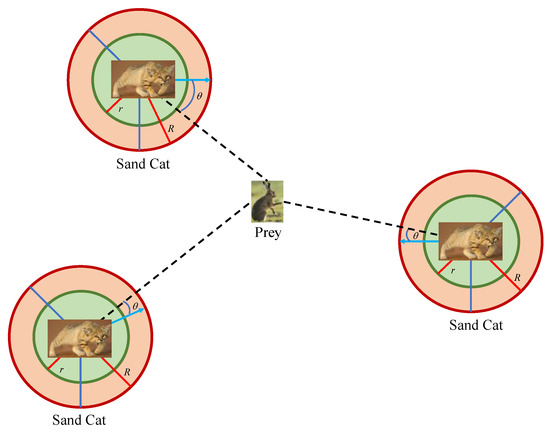

Inspired by this idea, this paper proposes a novel exploration mechanism that adds a random angle θ, enabling the sand cat to search for prey in different directions during the exploration phase. The novel exploration mechanism is represented by Equation (12). Adding the random angle increases the randomness of exploration while still letting the sand cat move toward the position of the optimal individual. Figure 2 shows the variation of the random angle θ. In this way, the sand cat can approach the hunting position while the added angle reduces the risk of getting trapped in local optima.

where represents the position of the i-th sand cat in the j-th dimension during the (t+1)-th iteration. is a random number between 1 and 2. is a random number between 1 and d. represents the position of the i-th sand cat in the dimension during the t-th iteration. represents the position of the i-th sand cat in the dimension during the t-th iteration.

Figure 2.

The variation form of random angle θ.

3.3. Elimination and Update Mechanism

Although the sand cat is a highly viable organism, the number of sand cats still changes during the exploration and exploitation stages because of changes in the external environment. Some sand cats may even be attacked and killed by species higher in the food chain. Inspired by this phenomenon, this paper proposes an elimination and update mechanism that keeps the population size of sand cats constant during the optimization process. The mechanism randomly selects 10% of the individuals for elimination and generates a new individual for each of them. If the fitness value of the new individual is lower, the old individual is replaced, which is in line with the survival of the fittest in biological competition. The update mechanism is shown in Equation (13).

where, and are random numbers between 0 and 1, respectively.
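The elimination and update step can be sketched as follows. The 10% selection rate and the replace-if-better rule come from the text; how the new individual is generated is an assumption here, with uniform resampling inside the bounds standing in for Equation (13). The function name and parameters are illustrative.

```python
import random


def eliminate_and_update(cats, fits, objective, lower, upper, rate=0.1, rng=None):
    """Challenge a random 10% of the population with freshly generated individuals.

    cats and fits are updated in place; the population size never changes.
    """
    if rng is None:
        rng = random.Random()
    n_replace = max(1, int(rate * len(cats)))
    for i in rng.sample(range(len(cats)), n_replace):
        # ASSUMPTION: uniform resampling stands in for the paper's Equation (13).
        trial = [lb + rng.random() * (ub - lb) for lb, ub in zip(lower, upper)]
        trial_fit = objective(trial)
        if trial_fit < fits[i]:          # lower fitness wins (minimization)
            cats[i], fits[i] = trial, trial_fit
    return cats, fits
```

Because an individual is replaced only when the newcomer is better, the step can never worsen any fitness value in the population.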

Applying the proposed improvement strategies to the SCSO yields the MSCSO algorithm. The pseudocode of MSCSO is shown in Algorithm 2.

| Algorithm 2 | The pseudocode of MSCSO |
|---|---|
| 1. | Initialize the population |
| 2. | Calculate the fitness function based on the objective function |
| 3. | Initialize r, r_G, and R |
| 4. | While (t <= T) |
| 5. | For each search agent |
| 6. | Obtain a random angle θ based on the Roulette Wheel Selection (0° ≤ θ ≤ 360°) |
| 7. | Obtain new position by Equation (10) |
| 8. | Calculate the fitness function values to obtain the optimal position |
| 9. | If (abs(R) > 1) |
| 10. | Update the search agent position based on Equation (12) |
| 11. | Else |
| 12. | Update the search agent position based on Equation (8) |
| 13. | End |
| 14. | Update new position using Equation (13) |
| 15. | Check the boundaries of new position and calculate fitness value |
| 16. | End |
| 17. | Find the current best solution |
| 18. | t = t + 1 |
| 19. | End |

3.4. Time Complexity of MSCSO

In the process of optimizing practical problems, in addition to pursuing accuracy, time is also a very significant factor. The time complexity of an algorithm is an essential indicator for measuring the algorithm [45]. Therefore, it is crucial to analyze the time complexity of the improved algorithm compared to the original algorithm. The time complexity is mainly reflected in three parts: algorithm initialization, fitness evaluation, and update solution.

As noted above, the time an algorithm needs is determined mainly by its initialization, fitness evaluation, and solution update [46]. The computational complexity of SCSO is $O(N \times d \times T)$, where $N$ is the population size, $d$ is the dimension of the problem, and $T$ is the number of iterations. MSCSO has three more parts than SCSO. The new opposition-based learning strategy and the elimination update mechanism each process at most the whole population once per iteration, so each contributes at most $O(N \times d \times T)$; the novel exploration mechanism replaces the original exploration mechanism and therefore does not increase the time complexity. The time complexity of MSCSO is thus also $O(N \times d \times T)$, which is the same order as that of SCSO.

3.5. Population Diversity of MSCSO

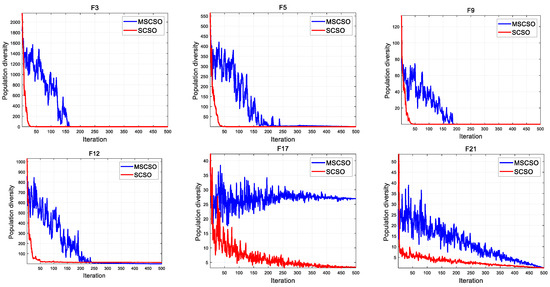

Population diversity is an important part of the qualitative analysis of algorithms. This section compares how the populations of SCSO and MSCSO change during optimization through population diversity experiments. Taking global optimization as an example, unimodal and multimodal functions were selected; the non-fixed dimensional functions were tested with a dimension of 30, and the fixed-dimensional functions with their own dimensions. The population diversity can be calculated by Equations (14) and (15) [47]. The population diversity curves of SCSO and MSCSO are shown in Figure 3.

where denotes the degree of dispersion between the population and the centroid c in each iteration, and represents the d-th dimension value of the i-th individual during the t-th iteration.

Figure 3.

The population diversity curves of SCSO and MSCSO.

During the entire algorithm optimization process, MSCSO has higher population diversity values and better population diversity than SCSO. This indicates that MSCSO has better search ability during the exploration phase, which can avoid the algorithm falling into local optima and premature convergence.
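A population diversity measure of the kind described above can be computed as in the following sketch. It assumes the common moment-of-inertia form, that is, the square root of the summed squared distances between every individual and the population centroid; whether this matches Equations (14) and (15) term by term is an assumption.

```python
import math


def population_diversity(cats):
    """Dispersion of the population around its centroid (assumed form)."""
    n, dim = len(cats), len(cats[0])
    # Centroid: per-dimension mean over all individuals.
    centroid = [sum(cat[d] for cat in cats) / n for d in range(dim)]
    return math.sqrt(
        sum((cat[d] - centroid[d]) ** 2 for cat in cats for d in range(dim))
    )


# Two cats at (0, 0) and (2, 0): the centroid is (1, 0), so the value is sqrt(2).
spread = population_diversity([[0.0, 0.0], [2.0, 0.0]])
```

Recording this value once per iteration gives a diversity curve; a curve that collapses to zero early indicates that the whole population has gathered at a single point.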

3.6. Exploration and Exploitation of MSCSO

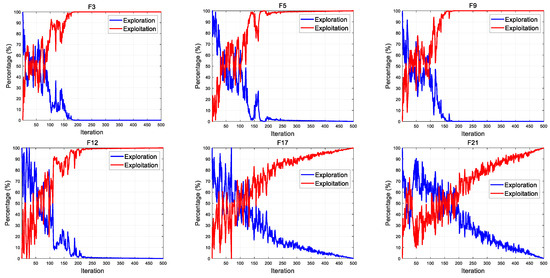

During the optimization process, different algorithms have different design ideas, resulting in differences in exploration and exploitation. Therefore, when designing a new algorithm, it is necessary to measure the exploration and exploitation of the algorithm in order to conduct a practical analysis of the search strategies that affect these two factors. The percentage of exploration and exploitation can be calculated by Equations (16)–(18) [47]. The exploration and exploitation of MSCSO is shown in Figure 4.

where denotes the dimension-wise diversity measurement, and is the maximum diversity in the whole iteration process.

Figure 4.

The exploration and exploitation of MSCSO.

In the early part of the optimization process, MSCSO has a high exploration proportion, indicating that MSCSO has good search ability, which helps prevent the algorithm from falling into local optima and converging prematurely. In the later part, the exploitation proportion gradually increases, and convergence accelerates on the basis of the earlier search to obtain the optimization results. Throughout the optimization process, the exploration and exploitation of MSCSO maintain a dynamic balance, indicating that the algorithm has good stability and optimization performance.
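The exploration and exploitation percentages can be illustrated with the sketch below. It assumes the commonly used dimension-wise diversity measure, the mean absolute deviation from the per-dimension median, and expresses each iteration's diversity relative to the maximum diversity observed over the run. The function names are illustrative, and agreement with Equations (16)–(18) is an assumption.

```python
import statistics


def dimension_wise_diversity(cats):
    """Mean over dimensions of the mean absolute deviation from the median."""
    n, dim = len(cats), len(cats[0])
    total = 0.0
    for j in range(dim):
        column = [cat[j] for cat in cats]
        med = statistics.median(column)
        total += sum(abs(med - v) for v in column) / n
    return total / dim


def exploration_exploitation(div_history):
    """Return per-iteration (exploration %, exploitation %) lists."""
    div_max = max(div_history)
    if div_max == 0:
        raise ValueError("diversity is zero in every iteration")
    exploration = [100.0 * d / div_max for d in div_history]
    exploitation = [100.0 * abs(d - div_max) / div_max for d in div_history]
    return exploration, exploitation


# Diversity shrinking from 4 to 1 shifts the balance toward exploitation.
xpl, xpt = exploration_exploitation([4.0, 2.0, 1.0])
```

In this formulation the two percentages always sum to 100 in each iteration, so plotting them together shows directly when the search shifts from exploration to exploitation.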

4. Experiments and Results Analysis

4.1. Benchmark Datasets

In this section, we test the performance and effectiveness of the proposed algorithm. Global optimization and feature selection are common problems and have become leading choices for evaluating the overall exploration and exploitation ability of optimization algorithms. For global optimization, this paper selected 30 well-known functions commonly used for optimization testing as the test set, including 20 non-fixed dimensional functions and ten fixed dimensional functions. For feature selection, this paper selected 24 datasets commonly used for testing. The details of the global optimization function set are shown in Appendix A Table A1. The details of the datasets for feature selection are shown in Table 1; the datasets can be obtained from the website: https://archive.ics.uci.edu/datasets (accessed on 15 October 2023).

Table 1.

The feature selection datasets.

4.2. Parameter Settings

To compare the results with other algorithms, the global optimization experiments use 11 well-known algorithms as benchmarks, including GA [48], PSO [19], GWO [15], HHO [22], ACO [49], WOA [16], SOGWO [50], EGWO [51], TACPSO [52], SCSO [13], etc. These algorithms have been used as comparative methods in many studies and perform well in global optimization. The parameter settings of the algorithms are shown in Table 2.

Table 2.

Parameters and assignments setting for algorithms.

The feature selection problem is a binary optimization problem. When applying traditional optimization algorithms to the feature selection problem, binary transformation is required first, and a transfer function is used to map continuous values to their corresponding binary values [53].

Any optimization problem can be expressed as the minimization or maximization of an objective function [54]. In feature selection, the goal is to minimize the number of selected features while achieving the highest accuracy. Therefore, the objective function of the feature selection problem is shown in Equation (19).

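$$fitness = \alpha \cdot \gamma + (1 - \alpha) \cdot \frac{|S|}{|F|} \quad (19)$$

where $\gamma$ represents the classification error rate, $|S|$ represents the number of selected features, $|F|$ represents the total number of features, and $\alpha$ is the weight assigned to the classification error rate, $\alpha \in [0, 1]$.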
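The trade-off between error rate and subset size can be sketched as a small function. The weighted-sum form and the default weight of 0.99 are assumptions based on common practice in wrapper feature selection; the paper's own weight value is not given in this section.

```python
def feature_selection_fitness(error_rate, n_selected, n_total, alpha=0.99):
    """Weighted sum of classification error and selected-feature ratio.

    alpha weights the error rate; (1 - alpha) weights the subset size.
    The default alpha is an ASSUMED value, not one taken from the paper.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * error_rate + (1.0 - alpha) * n_selected / n_total


# Same error rate, fewer features: the smaller subset gets the lower fitness.
small = feature_selection_fitness(0.10, n_selected=5, n_total=40)
large = feature_selection_fitness(0.10, n_selected=30, n_total=40)
```

With a weight close to 1, accuracy dominates and the number of selected features mainly breaks ties between subsets of similar accuracy.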

Table 3 shows eight binary transfer functions (including four S-shaped and four V-shaped transfer functions). This paper conducted extensive simulations to verify the efficiency of these transfer functions and found that V4 is the most feasible transfer function.

Table 3.

Details of binary transfer functions.
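As an illustration of binary conversion with a V-shaped transfer function, the sketch below uses the standard V4 form, |(2/π)·arctan((π/2)·x)|. The binarization rule shown, which selects a feature when a uniform random number falls below the transfer value, is an assumption; other rules, such as flipping the current bit, are also common for V-shaped functions.

```python
import math
import random


def v4(x):
    """Standard V4 transfer function: maps any real value into [0, 1)."""
    return abs((2.0 / math.pi) * math.atan((math.pi / 2.0) * x))


def binarize(position, rng):
    """Map a continuous position to a 0/1 feature mask.

    ASSUMED rule: feature j is selected when rand < V4(x_j).
    """
    return [1 if rng.random() < v4(x) else 0 for x in position]


rng = random.Random(0)
mask = binarize([0.0, 3.5, -2.0, 0.4], rng)
```

Under this rule a coordinate of exactly 0 is never selected, and coordinates with a large magnitude are selected with probability close to 1.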

In the global optimization experiment, all algorithms adopt unified parameter settings to ensure the fairness of the results. Each algorithm is run independently 30 times, with a population size of 50 and 500 iterations. The experiments were run on a 64-bit Windows 10 system with an 11th Gen Intel (R) Core (TM) i7-11700K CPU at 3.60 GHz and 64 GB of memory, using MATLAB 2016b as the simulation software.

4.3. Results Analysis

In global optimization problems, higher-dimensional problems better demonstrate the robustness and performance of an algorithm. Therefore, this paper focuses on the 20 non-fixed dimensional functions and uses three dimensions, 30, 100, and 500, to fully validate the effectiveness of the proposed and benchmark algorithms. Five statistical metrics are adopted to assess the effectiveness of all algorithms: Mean, standard deviation (Std), p-value, Wilcoxon rank sum test, and Friedman test. The iterative convergence curves and box plots of the algorithms are also drawn to give a complete picture.
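The Friedman-style ranking used to compare algorithms can be illustrated by computing average ranks: on each test problem the algorithms are ranked by their mean result (rank 1 is best, ties share the average of their ranks), and the ranks are then averaged over all problems. The sketch below uses made-up numbers purely for illustration; it computes only the average ranks, not the Friedman test statistic.

```python
def average_ranks(scores):
    """scores[p][a] is the mean result of algorithm a on problem p (lower is better).

    Returns one average rank per algorithm; tied results share the mean rank.
    """
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=lambda a: row[a])
        pos = 0
        while pos < n_alg:
            end = pos
            # Extend the group while the next result ties with the current one.
            while end + 1 < n_alg and row[order[end + 1]] == row[order[pos]]:
                end += 1
            shared = (pos + end) / 2.0 + 1.0   # mean of 1-based ranks pos+1..end+1
            for k in range(pos, end + 1):
                totals[order[k]] += shared
            pos = end + 1
    return [total / len(scores) for total in totals]


# Illustrative values only: three algorithms on two problems.
ranks = average_ranks([[0.1, 0.2, 0.3],
                       [0.1, 0.1, 0.5]])
```

Here the first algorithm receives ranks 1 and 1.5, giving an average rank of 1.25; a lower average rank means better overall performance.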

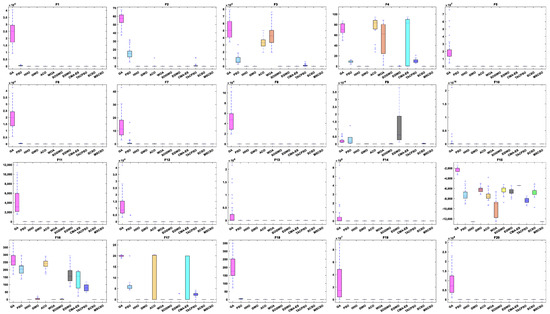

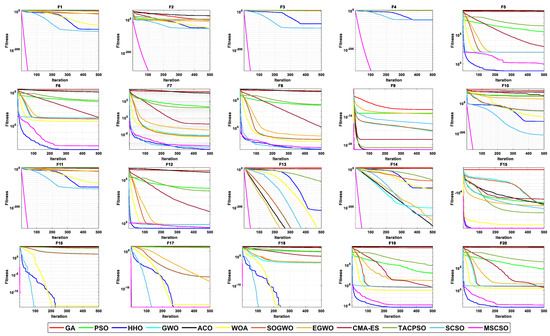

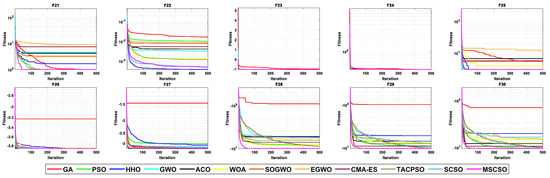

Table 4 shows the results of 5 statistical metrics for 12 optimization algorithms in solving 30-dimensional non-fixed dimensional functions. Figure 5 shows the iteration curves of the 12 optimization algorithms in solving 30-dimensional non-fixed dimensional functions. Through the convergence curve, the MSCSO algorithm proposed in this paper outperforms other algorithms in terms of the convergence speed and optimization accuracy in F1, F2, F3, F4, F9, F10, F11, F13, F14, F15, F16, F17, and F18 functions. Figure 6 is a box diagram of the results of 12 optimization algorithms solving 30-dimensional non-fixed dimensional functions.

Table 4.

Comparison of results on 30-dimensional non-fixed dimensional functions.

Figure 5.

The convergence curves of 30-dimensional non-fixed dimensional functions.

Figure 6.

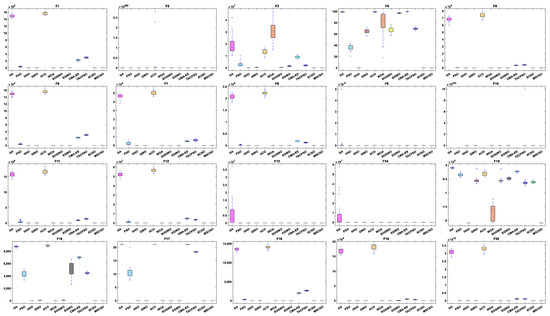

Boxplot analysis of 30-dim non-fixed dimensional functions.

The results in Table 4, Figure 5, and Figure 6 show that, on the 30-dimensional non-fixed dimensional functions, MSCSO obtained the best value on 13 functions, more than any of the other 11 algorithms. The Wilcoxon rank sum test and Friedman test summarize the overall results of each algorithm. In the Wilcoxon rank sum test, MSCSO achieved results of 190/22/8; in the Friedman test, MSCSO ranked first with a value of 2.3750. These results indicate that MSCSO achieved better results than the other algorithms on the 30-dimensional non-fixed dimensional functions.

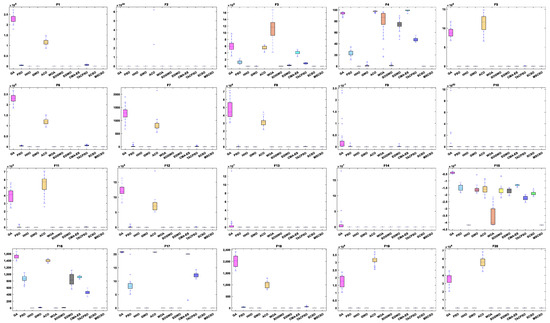

Table 5 shows the results of 5 statistical metrics for 12 optimization algorithms in solving 100-dimensional non-fixed dimensional functions. Figure 7 shows the iteration curves of the 12 optimization algorithms in solving 100-dimensional non-fixed dimensional functions. Through the convergence curve, the MSCSO algorithm proposed in this paper outperforms other algorithms in terms of convergence speed and optimization accuracy in F1, F2, F3, F4, F9, F10, F11, F13, F14, F15, F16, F17, and F18 functions. Figure 8 is a box diagram of the results of 12 optimization algorithms solving 100-dimensional non-fixed dimensional functions.

Table 5.

Comparison of results on 100-dimensional non-fixed dimensional functions.

Figure 7.

The convergence curves of 100-dimensional non-fixed dimensional functions.

Figure 8.

Boxplot analysis of 100-dimensional non-fixed dimensional functions.

The results in Table 5, Figure 7, and Figure 8 show that, on the 100-dimensional non-fixed dimensional functions, MSCSO obtained the best value on 12 functions, more than any of the other 11 algorithms. The Wilcoxon rank sum test and Friedman test summarize the overall results of each algorithm. In the Wilcoxon rank sum test, MSCSO achieved results of 194/20/6; in the Friedman test, MSCSO ranked first with a value of 2.2125. These results indicate that MSCSO achieved better results than the other algorithms on the 100-dimensional non-fixed dimensional functions.

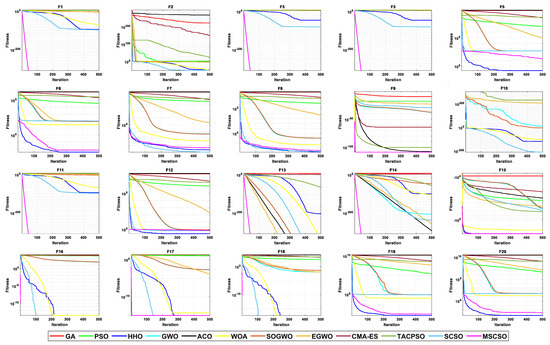

Table 6 shows the results of 5 statistical metrics for 12 optimization algorithms in solving 500-dimensional non-fixed dimensional functions. Figure 9 shows the iteration curves of the 12 optimization algorithms in solving 500-dimensional non-fixed dimensional functions. Through the convergence curve, the MSCSO algorithm proposed in this paper outperforms other algorithms in terms of convergence speed and optimization accuracy in F1, F2, F3, F4, F9, F10, F11, F13, F14, F15, F16, F17, and F18 functions. Figure 10 is a box diagram of the results of 12 optimization algorithms solving 500-dimensional non-fixed dimensional functions.

Table 6.

Comparison of results on 500-dimensional non-fixed dimensional functions.

Figure 9.

The convergence curves of 500-dimensional non-fixed dimensional functions.

Figure 10.

Boxplot analysis of 500-dimensional non-fixed dimensional functions.

The results in Table 6, Figure 9, and Figure 10 show that, on the 500-dimensional non-fixed dimensional functions, MSCSO obtained the best value on 12 functions, more than any of the other 11 algorithms. The Wilcoxon rank sum test and Friedman test summarize the overall results of each algorithm. In the Wilcoxon rank sum test, MSCSO achieved results of 191/24/5; in the Friedman test, MSCSO ranked first with a value of 2.2500. These results indicate that MSCSO achieved better results than the other algorithms on the 500-dimensional non-fixed dimensional functions.

Table 7 shows the results of 5 statistical metrics for 12 optimization algorithms on the fixed-dimensional functions. Figure 11 shows the iteration curves of the 12 optimization algorithms in solving fixed-dimensional functions. The convergence curves show that the MSCSO algorithm proposed in this paper converges faster than the other algorithms on functions F21, F23, F24, F28, F29, and F30. On functions F21, F23, F24, F25, F26, F28, F29, and F30, its optimization accuracy is higher than that of the other algorithms. Figure 12 is a box diagram of the results of 12 optimization algorithms solving fixed-dimensional functions.

Table 7.

Comparison of results on fixed-dimensional functions.

Figure 11.

The convergence curves of fixed-dimensional functions.

Figure 12.

Boxplot analysis of fixed-dimensional functions.

The results in Table 7, Figure 11, and Figure 12 show that, on the fixed-dimensional functions, MSCSO obtained the second highest number of best values among the 12 algorithms, only one fewer than the best-performing TACPSO. The Wilcoxon rank sum test and Friedman test summarize the overall results of each algorithm. In the Wilcoxon rank sum test, MSCSO achieved a result of 60/24/26; in the Friedman test, MSCSO ranked first with a value of 4.3750. These results indicate that MSCSO achieved better overall results than the other algorithms on the fixed-dimensional functions.

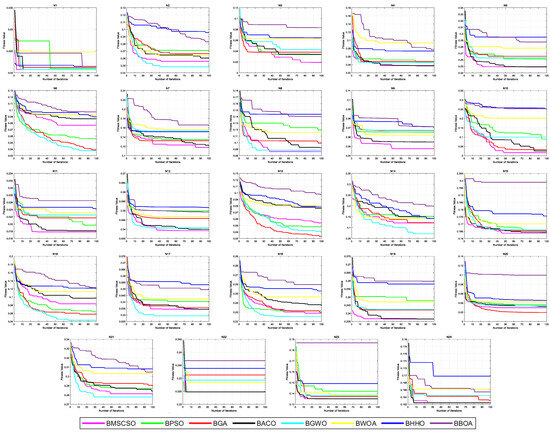

Accuracy and fitness values are commonly used comparative indicators in feature selection problems. To demonstrate the effectiveness of the algorithms comprehensively, the average (Mean) and standard deviation (Std) of the accuracy and of the fitness values are calculated, and each is ranked with Friedman's test. The iterative convergence curves of the algorithms are also drawn.

Table 8 shows the proposed and benchmark algorithm results for solving 24 feature selection datasets. The results include the average and standard deviation of the feature selection accuracy. In 24 datasets, MSCSO achieved 15 optimal values, with the highest number among all algorithms. Table 9 shows the Friedman test results for the average and standard deviation of feature selection accuracy, with the sum of ranks achieving 46.5 and the average of ranks achieving 1.9375, ranking first among all algorithms.

Table 8.

Classification accuracy results on feature selection datasets.

Table 9.

Friedman’s test results of classification accuracy on feature selection datasets.

The results in Table 10 include the average and standard deviation of feature selection fitness values. In 24 datasets, MSCSO achieved 13 optimal values, the highest number among all algorithms. Table 11 shows the Friedman test results for the average and standard deviation of the feature selection fitness values, with the sum of ranks being 46 and the average of ranks being 1.9167, ranking first among all algorithms. Figure 13 shows the convergence curves of feature selection datasets.

Table 10.

Fitness results on feature selection datasets.

Table 11.

Friedman test results of fitness results on feature selection datasets.

Figure 13.

The convergence curves of feature selection datasets.

This section validates the proposed MSCSO algorithm on two types of problems: global optimization and feature selection. MSCSO and other advanced algorithms were tested on 30 well-known global optimization functions (20 non-fixed-dimensional functions with Dim = 30, 100, and 500, and 10 fixed-dimensional functions) and on 24 feature selection datasets. For the global optimization problems, five statistical metrics were used to assess the effectiveness of all algorithms: Mean, Std, p-value, the Wilcoxon rank sum test, and the Friedman test. For the feature selection problem, the Mean and Std of the accuracy and fitness values were calculated, and Friedman’s test was applied to each separately. The convergence curves, box plots, and experimental data tables show that MSCSO achieved the best overall results, demonstrating strong exploitation ability and efficient exploration of the search space. The effectiveness of the proposed strategies and methods has therefore been verified.

5. Conclusions and Future Works

The SCSO is a highly effective biomimetic swarm intelligence algorithm proposed in recent years, which simulates the life habits of sand cats to solve optimization problems and achieves good optimization results. On some problems, however, its optimization performance is not ideal. Once a mathematical model has been built from biological habits, the model itself can be refined with additional mathematical theory, and this approach often yields better optimization results. To enhance the effectiveness of the SCSO and help it overcome some physiological limitations, this paper proposes three novel strategies: a new opposition-based learning strategy, a new exploration mechanism, and a biological elimination update mechanism. These strategies help the SCSO jump out of local optima more easily, accelerate convergence, and improve optimization accuracy.

To verify the effectiveness of the proposed MSCSO algorithm, it was applied to two types of problems: global optimization (thirty functions) and feature selection (twenty-four datasets). These are common complex problems in many fields. For the global optimization problems, five statistical metrics were used to assess the effectiveness of all algorithms: Mean, Std, p-value, the Wilcoxon rank sum test, and the Friedman test. For the feature selection problem, the Mean and Std of the accuracy and fitness values were calculated, and Friedman’s test was applied to each separately. The convergence curves, box plots, and experimental data tables show that MSCSO achieved the best overall results, demonstrating strong exploitation ability and efficient exploration of the search space. The experimental and statistical results show that MSCSO has advantages over other advanced algorithms in jumping out of local optima, convergence speed, and optimization accuracy. These results indicate that MSCSO can adapt to a wide range of optimization problems and support the algorithm’s robustness.

Although the strategies proposed in this article improve the optimization ability of the original SCSO, analysis of the underlying mathematical models shows that the balance between exploration and exploitation is too rigid, which prevents the algorithm from adapting its exploration and exploitation to the problem at hand. In later research, nonlinear dynamic adjustment factors can be introduced for the exploration and exploitation stages of the algorithm. Future work will also focus on applying the proposed algorithm to more practical problems, such as feature selection for text and image data. For the hyperparameter optimization problems faced by machine learning and deep learning, more effective heuristic algorithms will be explored in order to contribute to the improvement of artificial intelligence technology.
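To make the idea of a nonlinear dynamic adjustment factor concrete, the sketch below contrasts a linearly decreasing control factor with one possible nonlinear alternative. This is purely illustrative: the text does not specify any particular form, and the function names, the range from 2 to 0, and the cosine schedule are assumptions chosen only for the example.

```python
import math

def linear_factor(t, t_max, s_max=2.0):
    """Control factor that decreases linearly from s_max to 0."""
    return s_max * (1 - t / t_max)

def cosine_factor(t, t_max, s_max=2.0):
    """Assumed nonlinear alternative: slow decay early, faster decay late."""
    return s_max * 0.5 * (1 + math.cos(math.pi * t / t_max))

t_max = 100
for t in (0, 25, 50, 75, 100):
    print(t, round(linear_factor(t, t_max), 4), round(cosine_factor(t, t_max), 4))
```

With this schedule the factor stays larger than the linear one in the first half of the run, which favors exploration, and smaller in the second half, which favors exploitation; both schedules share the same start and end values.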

Author Contributions

Conceptualization, L.Y., J.Y. and P.Y.; methodology, P.Y. and L.Y.; software, P.Y. and G.L.; writing—original draft, L.Y. and Y.L.; writing—review and editing, Y.L., T.Z. and J.Y.; data curation, J.Y. and G.L.; visualization, G.L. and J.Y.; supervision, T.Z. and Y.L.; funding acquisition, T.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Guizhou Provincial Science and Technology Projects (Grant No. Qiankehejichu-ZK [2022] General 320), the National Natural Science Foundation (Grant No. 72061006), and the Academic New Seedling Foundation Project of Guizhou Normal University (Grant No. Qianshixinmiao-[2021] A30).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this article are publicly available and are described in the main text.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

The global optimization functions.

| Name | Dim | Range | Optimal value | Type |
|---|---|---|---|---|
| Sphere | 30, 100, 500 | [−100, 100] | 0 | Unimodal |
| Schwefel 2.22 | 30, 100, 500 | [−1.28, 1.28] | 0 | Unimodal |
| Schwefel 1.2 | 30, 100, 500 | [−100, 100] | 0 | Unimodal |
| Schwefel 2.21 | 30, 100, 500 | [−100, 100] | 0 | Unimodal |
| Rosenbrock | 30, 100, 500 | [−30, 30] | 0 | Unimodal |
| Step | 30, 100, 500 | [−100, 100] | 0 | Unimodal |
| Quartic | 30, 100, 500 | [−1.28, 1.28] | 0 | Unimodal |
| Exponential | 30, 100, 500 | [−10, 10] | 0 | Unimodal |
| Sum Power | 30, 100, 500 | [−1, 1] | 0 | Unimodal |
| Sum Square | 30, 100, 500 | [−10, 10] | 0 | Unimodal |
| Zakharov | 30, 100, 500 | [−10, 10] | 0 | Unimodal |
| Trid | 30, 100, 500 | [−10, 10] | 0 | Unimodal |
| Elliptic | 30, 100, 500 | [−100, 100] | 0 | Unimodal |
| Cigar | 30, 100, 500 | [−100, 100] | 0 | Unimodal |
| Generalized Schwefel’s Problem 2.26 | 30, 100, 500 | [−500, 500] | −418.9829 × n | Multimodal |
| Generalized Rastrigin’s Function | 30, 100, 500 | [−5.12, 5.12] | 0 | Multimodal |
| Ackley’s Function | 30, 100, 500 | [−32, 32] | 0 | Multimodal |
| Generalized Griewank Function | 30, 100, 500 | [−600, 600] | 0 | Multimodal |
| Penalized 1 | 30, 100, 500 | [−50, 50] | 0 | Multimodal |
| Penalized 2 | 30, 100, 500 | [−50, 50] | 0 | Multimodal |
| Shekel’s Foxholes Function | 2 | [−65.536, 65.536] | 1 | Multimodal |
| Kowalik’s Function | 4 | [−5, 5] | 0.0003 | Multimodal |
| Six-Hump Camel-Back Function | 2 | [−5, 5] | −1.0316 | Multimodal |
| Branin Function | 2 | [−5, 5] | 0.398 | Multimodal |
| Goldstein-Price Function | 2 | [−2, 2] | 3 | Multimodal |
| Hartman’s Function 1 | 3 | [0, 1] | −3.86 | Multimodal |
| Hartman’s Function 2 | 6 | [0, 1] | −3.32 | Multimodal |
| Shekel’s Family 1 | 4 | [0, 10] | −10.1532 | Multimodal |
| Shekel’s Family 2 | 4 | [0, 10] | −10.4028 | Multimodal |
| Shekel’s Family 3 | 4 | [0, 10] | −10.5364 | Multimodal |
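For readers who wish to reproduce entries of Table A1, the following sketch implements four of the listed functions using their standard textbook definitions and checks them against the tabulated optimal values. The function names and the choice of which functions to show are made for this example; the definitions are the commonly used ones and are assumed, not confirmed by the text, to match those used in the experiments.

```python
import math

def sphere(x):
    """Sphere: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)

def rastrigin(x):
    """Generalized Rastrigin: minimum 0 at the origin."""
    return sum(v * v - 10 * math.cos(2 * math.pi * v) + 10 for v in x)

def ackley(x):
    """Ackley: minimum 0 at the origin."""
    n = len(x)
    mean_sq = sum(v * v for v in x) / n
    mean_cos = sum(math.cos(2 * math.pi * v) for v in x) / n
    return -20 * math.exp(-0.2 * math.sqrt(mean_sq)) - math.exp(mean_cos) + 20 + math.e

def schwefel_226(x):
    """Generalized Schwefel 2.26: minimum near -418.9829 * n at x_i = 420.9687."""
    return -sum(v * math.sin(math.sqrt(abs(v))) for v in x)

dim = 30
origin = [0.0] * dim
print(sphere(origin), rastrigin(origin), ackley(origin))
print(schwefel_226([420.9687] * dim) / dim)
```

The same functions accept vectors of length 100 or 500, matching the non-fixed-dimensional settings in the table.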

References

- Yuan, Y.; Wei, J.; Huang, H.; Jiao, W.; Wang, J.; Chen, H. Review of resampling techniques for the treatment of imbalanced industrial data classification in equipment condition monitoring. Eng. Appl. Artif. Intell. 2023, 126, 106911.

- Liang, Y.-C.; Minanda, V.; Gunawan, A. Waste collection routing problem: A mini-review of recent heuristic approaches and applications. Waste Manag. Res. 2022, 40, 519–537.

- Kuo, R.; Li, S.-S. Applying particle swarm optimization algorithm-based collaborative filtering recommender system considering rating and review. Appl. Soft Comput. 2023, 135, 110038.

- Fan, S.-K.S.; Lin, W.-K.; Jen, C.-H. Data-driven optimization of accessory combinations for final testing processes in semiconductor manufacturing. J. Manuf. Syst. 2022, 63, 275–287.

- Huynh, N.-T.; Nguyen, T.V.T.; Tam, N.T.T.; Nguyen, Q. Optimizing Magnification Ratio for the Flexible Hinge Displacement Amplifier Mechanism Design. In Lecture Notes in Mechanical Engineering, Proceedings of the 2nd Annual International Conference on Material, Machines and Methods for Sustainable Development (MMMS2020), Nha Trang, Vietnam, 12–15 November 2020; Springer: Cham, Switzerland, 2021.

- Kler, R.; Gangurde, R.; Elmirzaev, S.; Hossain, M.S.; Vo, N.T.M.; Nguyen, T.V.T.; Kumar, P.N. Optimization of Meat and Poultry Farm Inventory Stock Using Data Analytics for Green Supply Chain Network. Discret. Dyn. Nat. Soc. 2022, 2022, 8970549.

- Yu, K.; Liang, J.J.; Qu, B.; Luo, Y.; Yue, C. Dynamic Selection Preference-Assisted Constrained Multiobjective Differential Evolution. IEEE Trans. Syst. Man Cybern. Syst. 2021, 52, 2954–2965.

- Yu, K.; Sun, S.; Liang, J.J.; Chen, K.; Qu, B.; Yue, C.; Wang, L. A bidirectional dynamic grouping multi-objective evolutionary algorithm for feature selection on high-dimensional classification. Inf. Sci. 2023, 648, 119619.

- Tang, J.; Liu, G.; Pan, Q. A review on representative swarm intelligence algorithms for solving optimization problems: Applications and trends. IEEE/CAA J. Autom. Sin. 2021, 8, 1627–1643.

- Yu, K.; Zhang, D.; Liang, J.J.; Chen, K.; Yue, C.; Qiao, K.; Wang, L. A Correlation-Guided Layered Prediction Approach for Evolutionary Dynamic Multiobjective Optimization. IEEE Trans. Evol. Comput. 2023, 27, 1398–1412.

- Wei, J.; Huang, H.; Yao, L.; Hu, Y.; Fan, Q.; Huang, D. New imbalanced bearing fault diagnosis method based on Sample-characteristic Oversampling TechniquE (SCOTE) and multi-class LS-SVM. Appl. Soft Comput. 2021, 101, 107043.

- Yu, K.; Zhang, D.; Liang, J.J.; Qu, B.; Liu, M.; Chen, K.; Yue, C.; Wang, L. A Framework Based on Historical Evolution Learning for Dynamic Multiobjective Optimization. IEEE Trans. Evol. Comput. 2023. early access.

- Seyyedabbasi, A.; Kiani, F. Sand Cat swarm optimization: A nature-inspired algorithm to solve global optimization problems. Eng. Comput. 2023, 39, 2627–2651.

- Chu, S.-C.; Tsai, P.-W.; Pan, J.-S. Cat swarm optimization. In PRICAI 2006: Trends in Artificial Intelligence, Proceedings of the 9th Pacific Rim International Conference on Artificial Intelligence, Guilin, China, 7–11 August 2006; Proceedings 9; Springer: Cham, Switzerland, 2006; pp. 854–858.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948.

- Hayyolalam, V.; Kazem, A.A.P. Black widow optimization algorithm: A novel meta-heuristic approach for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2020, 87, 103249.

- Hu, G.; Guo, Y.; Wei, G.; Abualigah, L. Genghis Khan shark optimizer: A novel nature-inspired algorithm for engineering optimization. Adv. Eng. Inform. 2023, 58, 102210.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Hashim, F.A.; Hussien, A.G. Snake Optimizer: A novel meta-heuristic optimization algorithm. Knowl.-Based Syst. 2022, 242, 108320.

- Xue, J.; Shen, B. Dung beetle optimizer: A new meta-heuristic algorithm for global optimization. J. Supercomput. 2023, 79, 7305–7336.

- Jia, H.; Rao, H.; Wen, C.; Mirjalili, S. Crayfish optimization algorithm. Artif. Intell. Rev. 2023, 1–61.

- Wei, J.; Wang, J.; Huang, H.; Jiao, W.; Yuan, Y.; Chen, H.; Wu, R.; Yi, J. Novel extended NI-MWMOTE-based fault diagnosis method for data-limited and noise-imbalanced scenarios. Expert Syst. Appl. 2023, 238, 121799.

- Kiani, F.; Nematzadeh, S.; Anka, F.A.; Fındıklı, M. Chaotic Sand Cat Swarm Optimization. Mathematics 2023, 11, 2340.

- Seyyedabbasi, A. Binary Sand Cat Swarm Optimization Algorithm for Wrapper Feature Selection on Biological Data. Biomimetics 2023, 8, 310.

- Qtaish, A.; Albashish, D.; Braik, M.; Alshammari, M.T.; Alreshidi, A.; Alreshidi, E. Memory-Based Sand Cat Swarm Optimization for Feature Selection in Medical Diagnosis. Electronics 2023, 12, 2042.

- Wu, D.; Rao, H.; Wen, C.; Jia, H.; Liu, Q.; Abualigah, L. Modified Sand Cat Swarm Optimization Algorithm for Solving Constrained Engineering Optimization Problems. Mathematics 2022, 10, 4350.

- Kiani, F.; Anka, F.A.; Erenel, F. PSCSO: Enhanced sand cat swarm optimization inspired by the political system to solve complex problems. Adv. Eng. Softw. 2023, 178, 103423.

- Yao, L.; Yuan, P.; Tsai, C.-Y.; Zhang, T.; Lu, Y.; Ding, S. ESO: An Enhanced Snake Optimizer for Real-world Engineering Problems. Expert Syst. Appl. 2023, 230, 120594.

- Yuan, P.; Zhang, T.; Yao, L.; Lu, Y.; Zhuang, W. A Hybrid Golden Jackal Optimization and Golden Sine Algorithm with Dynamic Lens-Imaging Learning for Global Optimization Problems. Appl. Sci. 2022, 12, 9709.

- Yao, L.; Li, G.; Yuan, P.; Yang, J.; Tian, D.; Zhang, T. Reptile Search Algorithm Considering Different Flight Heights to Solve Engineering Optimization Design Problems. Biomimetics 2023, 8, 305.

- Zhong, C.; Li, G.; Meng, Z. Beluga whale optimization: A novel nature-inspired metaheuristic algorithm. Knowl.-Based Syst. 2022, 251, 109215.

- Abed-Alguni, B.H.; Alawad, N.A.; Al-Betar, M.A.; Paul, D. Opposition-based sine cosine optimizer utilizing refraction learning and variable neighborhood search for feature selection. Appl. Intell. 2023, 53, 13224–13260.

- Ma, C.; Huang, H.; Fan, Q.; Wei, J.; Du, Y.; Gao, W. Grey wolf optimizer based on Aquila exploration method. Expert Syst. Appl. 2022, 205, 117629.

- Wu, R.; Huang, H.; Wei, J.; Ma, C.; Zhu, Y.; Chen, Y.; Fan, Q. An improved sparrow search algorithm based on quantum computations and multi-strategy enhancement. Expert Syst. Appl. 2022, 215, 119421.

- Fan, Q.; Huang, H.; Chen, Q.; Yao, L.; Yang, K.; Huang, D. A modified self-adaptive marine predators algorithm: Framework and engineering applications. Eng. Comput. 2021, 38, 3269–3294.

- Wang, Y.; Ran, S.; Wang, G.-G. Role-Oriented Binary Grey Wolf Optimizer Using Foraging-Following and Lévy Flight for Feature Selection. Appl. Math. Model. 2023, in press.

- Lahmar, I.; Zaier, A.; Yahia, M.; Boaullègue, R. A Novel Improved Binary Harris Hawks Optimization For High dimensionality Feature Selection. Pattern Recognit. Lett. 2023, 171, 170–176.

- Turkoglu, B.; Uymaz, S.A.; Kaya, E. Binary Artificial Algae Algorithm for feature selection. Appl. Soft Comput. 2022, 120, 108630.

- Hu, G.; Zhong, J.; Zhao, C.; Wei, G.; Chang, C.-T. LCAHA: A hybrid artificial hummingbird algorithm with multi-strategy for engineering applications. Comput. Methods Appl. Mech. Eng. 2023, 415, 116238.

- Long, W.; Jiao, J.; Xu, M.; Tang, M.; Wu, T.; Cai, S. Lens-imaging learning Harris hawks optimizer for global optimization and its application to feature selection. Expert Syst. Appl. 2022, 202, 117255.

- Chen, H.; Xu, Y.; Wang, M.; Zhao, X. A balanced whale optimization algorithm for constrained engineering design problems. Appl. Math. Model. 2019, 71, 45–59.

- Jia, H.; Li, Y.; Wu, D.; Rao, H.; Wen, C.; Abualigah, L. Multi-strategy Remora Optimization Algorithm for Solving Multi-extremum Problems. J. Comput. Des. Eng. 2023, 10, qwad044.

- Nadimi-Shahraki, M.H.; Zamani, H. DMDE: Diversity-maintained multi-trial vector differential evolution algorithm for non-decomposition large-scale global optimization. Expert Syst. Appl. 2022, 198, 116895.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Dhargupta, S.; Ghosh, M.; Mirjalili, S.; Sarkar, R. Selective opposition based grey wolf optimization. Expert Syst. Appl. 2020, 151, 113389.

- Komathi, C.; Umamaheswari, M. Design of gray wolf optimizer algorithm-based fractional order PI controller for power factor correction in SMPS applications. IEEE Trans. Power Electron. 2019, 35, 2100–2118.

- Ziyu, T.; Dingxue, Z. A modified particle swarm optimization with an adaptive acceleration coefficients. In Proceedings of the 2009 Asia-Pacific Conference on Information Processing, Shenzhen, China, 18–19 July 2009; pp. 330–332.

- Ewees, A.A.; Ismail, F.H.; Sahlol, A.T. Gradient-based optimizer improved by Slime Mould Algorithm for global optimization and feature selection for diverse computation problems. Expert Syst. Appl. 2023, 213, 118872.

- Sun, L.; Si, S.; Zhao, J.; Xu, J.; Lin, Y.; Lv, Z. Feature selection using binary monarch butterfly optimization. Appl. Intell. 2023, 53, 706–727.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).