Abstract

The objective of this paper is to present a novel design of intelligent neuro-supervised networks (INSNs) for studying the dynamics of a mathematical model of Parkinson’s disease illness (PDI). The model is governed by a system of three differential equations that represent the rhythms of brain electrical activity measured at different locations on the cerebral cortex. The proposed INSNs are multilayer neural networks trained by backpropagation with the Levenberg–Marquardt (LM) and Bayesian regularization (BR) optimization approaches. The reference data for the input grids and target samples of the INSNs were generated with a reliable numerical solver based on the Adams method. This was done for several scenarios of the PDI model, obtained by varying the sensor locations in order to measure the impact of the rhythms of brain electrical activity. The INSNs for both backpropagation procedures were implemented on the generated datasets, which were randomly segmented into training, testing, and validation samples, by optimizing a fitness function based on the mean squared error (MSE). The outcomes of exhaustive simulations of the proposed INSNs with both the LM and BR methodologies were compared with reference solutions of the PDI models. The comparison used MSE learning curves, adaptive control parameters of the algorithms, absolute error, error histograms, and the regression index. The outcomes endorse the efficacy of both INSN solvers for the different PDI scenarios, with the BR-based method being more accurate, albeit at the cost of more computation.

1. Introduction

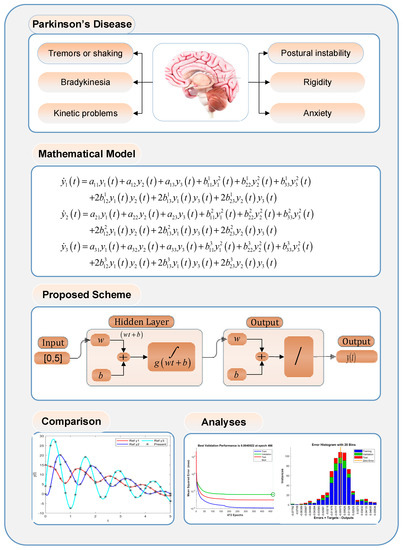

Parkinson’s disease illness (PDI) is a neurological disorder normally caused by the early and significant death of dopaminergic neurons. The resulting dopamine deficiency within the basal ganglia leads to movement disorders [1]. PDI patients may suffer from tremors, kinetic problems, postural instability, rigidity, and anxiety, as highlighted in Part 1 of the graphical abstract in Figure 1. In 2016, around 6.1 million people were affected by PDI [2], and a rapid increase in the number of PDI patients has been observed over the past two decades [3,4]. Current therapeutic treatments for PDI are based on restoring dopamine levels. These remedies provide symptomatic relief but are not disease modifying; therefore, PDI remains incurable [5]. Mathematical modeling of PDI may help to better understand the dynamics of the disease and, thus, to improve its treatment. Different mathematical models of PDI have been proposed [6]. For instance, in [7], Anninou et al. developed a mathematical model of PDI by exploiting the concept of fuzzy cognitive maps, and they proposed a generic algorithm based on nonlinear Hebbian learning. Recently, artificial intelligence methodologies have been widely used to model different diseases [8,9,10]. As per our exhaustive search, however, they have not yet been exploited to study the dynamics of PDI. It therefore seems promising to exploit the well-established strengths of machine learning and artificial intelligence techniques for this purpose.

Figure 1.

Graphical abstract of the PDI study using INSNs.

The objectives of the current investigation are as follows:

- Study the dynamics of a mathematical model of PDI, governed by a system of three differential equations representing the rhythms of brain electrical activity measured at different locations on the cerebral cortex.

- Construct intelligent neuro-supervised networks (INSNs) from multilayer neural networks trained by backpropagation with the Levenberg–Marquardt (LM) and Bayesian regularization (BR) approaches.

- Optimize a fitness function based on the mean squared error for several scenarios of the PDI model, obtained by varying the sensor locations to measure the impact of the rhythms of brain electrical activity.

- Compare the outcomes of exhaustive simulations of the proposed INSNs via both LM and BR with reference solutions of the PDI models by means of MSE learning curves, adaptive control parameters of the algorithms, absolute error, error histograms, and the regression index.

The structure of the remaining paper is as follows: Section 2 presents the details of the related works; Section 3 provides the mathematical model of PDI along with a description of the proposed INSNs; Section 4 discusses the simulation results for different scenarios of PDI; and Section 5 concludes the study by noting some potential future research directions.

2. Related Works

In PDI, freezing of gait is a common problem. It is a sudden, temporary inability to initiate or continue walking, during which the patient feels as if his or her feet are glued to the ground [11]. Freezing episodes often occur during gait initiation or when turning, and they may considerably affect mobility and independence. The exact cause of freezing in PDI is not yet fully understood. It is widely believed to result from a combination of motor and cognitive factors, such as:

(i) motor fluctuations, where the person alternates between periods of good mobility (on periods) and periods of poor mobility (off periods);

(ii) dual-task interference, where performing two tasks at once, such as talking while walking or carrying an object, increases the risk of freezing;

(iii) emotional factors, such as anxiety and stress, which can trigger or worsen freezing of gait.

Parakkal et al. investigated freezing of gait in PDI patients and suggested an ankle push-off model [11]. In this model, a simplified neuromechanical model of gait is used to observe variability and freezing in PDI. The stance leg is represented as an inverted pendulum (IP) actuated at the ankle. It is subject to push-off forces from the trailing leg and to pathological forces from the plantar flexors of the stance leg. Further, the effect of the swing leg on walking is modeled in a biped model (BM), and freezing and irregular walking are studied in both the BM and the IP model. The plantar flexors (PF) of the swing leg push the center of mass forward, whereas the PF of the stance leg produce an opposing torque. The study in [11] demonstrated that the opposing forces produced by the PF can induce freezing. It also explained gait irregularities that occur close to freezing, such as step length reduction and irregular walking patterns.

Heyete et al. [12] presented a Bayesian mathematical model (BMM) to identify predictors of long-term motor and cognitive outcomes and of the progression rate in PDI. A BMM of motor and cognitive outcomes in PDI may aid understanding of the complex interactions among various factors and help predict disease progression. The BMM exploits probability theory and integrates data from multiple sources, including clinical assessments, demographic details, and neuroimaging results, to predict different motor and cognitive outcomes. Belozyotov et al. [13] presented a mathematical model to study the behavior of PDI through the patient’s EEG signal recorded at three different locations of the cerebral cortex (CC). The recordings are treated as three time series describing the disease curve in a three-dimensional phase space. A 3D system of quadratic differential equations is then constructed, and its solution provides the disease curve. Further, chaotic dynamics were observed in this PDI model [13], which can capture the complex interactions among neurons and how their activity evolves over time. The chaotic attractors formed by the CC signals provide information about normal processes or disease progression, depending on the nature of the chaotic graph.

Borah et al. [14] presented a fractional order model of PDI by exploiting the mathematical foundations of fractional calculus. Fractional calculus generalizes conventional integer order calculus to differentiation and integration of real order. Controllers designed to control chaos in bio-mathematical models, including the PDI model presented, allow stable behavior to be attained. Further, the design of anti-controllers for generating chaos when turbulence is required is also demonstrated in [14]. Detailed analyses of the chaotic fractional order PDI model are conducted in [14], where the absence of chaos reflects the onset of the disease. That work also develops anti-control schemes based on linear state feedback, sliding mode, and single-state sinusoidal feedback.

Intelligent computing approaches have been introduced to effectively model or optimize different engineering, mathematical, and applied science problems [15,16,17,18]. In [19], a convolutional neural network (CNN) framework is introduced for emotion recognition. It takes as input features extracted from speech files, namely the mel-scale spectrogram, chromagram, Tonnetz representation, mel-frequency cepstral coefficients, and spectral contrast. In [20], the yield of soybean crops under drought conditions is estimated through imagery from an unmanned aerial vehicle and a CNN. That study further demonstrates that fusing 1-D and 2-D inputs in a CNN-based deep learning model enhances the estimation accuracy. In [21], an improved denoising autoencoder (DAE) is developed that integrates the concept of confidence level into the conventional DAE. It targets fast and accurate recommendations in recommender systems, which the e-commerce industry needs to provide reliable recommendations to users. The DAE structure proposed in [21] is applied to the MovieLens 100K and 1M datasets, where it outperforms standard DAE structures in terms of precision, recall, and MAP metrics. In [22], swarm intelligence is exploited for the identification of fractional order nonlinear autoregressive exogenous systems through the established strength of particle swarm optimization (PSO). In [23], a hybrid bi-directional gated recurrent unit (BiGRU) and bi-directional long short-term memory (BiLSTM) model is presented for electricity theft detection in smart grids, with preprocessing through feature engineering. Before the BiGRU and BiLSTM are used for classification, the data imbalance issue is addressed with a K-means minority oversampling scheme. The balanced data are then given as input to the BiGRU and BiLSTM models for better classification accuracy.
In [24], fractional calculus concepts are incorporated into the optimization mechanism of PSO to enhance its optimization strength for effective parameter estimation of nonlinear Hammerstein autoregressive exogenous systems. Further, the key term separation principle is introduced into the PSO to accurately estimate the actual parameters of the Hammerstein nonlinear system while avoiding redundant parameters. In [25], marine-predators-based optimization heuristics are presented for parameter estimation of Hammerstein output error systems. The marine predators algorithm is a recently introduced swarm intelligence optimization approach that mimics the behavior of predators catching prey. It uses Brownian and Lévy movement strategies to determine the optimal encounter between predator and prey. In [26], a weather classification model based on a hybrid CNN and generative adversarial network is developed for photovoltaic power forecasting. In [27], feedforward artificial neural networks (FANN) optimized with the Levenberg–Marquardt algorithm are presented for analysis of the power law fluidic problem of a moving wedge and flat plate. In [28], FANN optimized with the Bayesian regularization algorithm are exploited for the peristaltic motion of a third grade fluid in a planar channel. In [29], FANN optimized with the Levenberg–Marquardt algorithm are proposed to study the dynamics of multi-walled carbon nanotubes coated with gold nanoparticles under different velocity slip conditions in curved channel peristaltic motion. In [30], the efficacy of ANN optimized with the Levenberg–Marquardt and Bayesian regularization algorithms is analyzed for the Cattaneo–Christov heat flux model with bioconvection nanofluid flow. In [31], intelligent algorithms and control schemes are presented for battery management in electric vehicles. That work details current advancements, major challenges, and future prospects in the domain of battery management systems.
In [32], a comprehensive survey of applications of intelligent transportation systems is presented in the context of big data, identifying research gaps and potential future research directions in this domain. In [33], a variety of issues related to interoperability in the Internet of Things (IoT) are discussed, such as searching and processing in the IoT, implementation, event modeling, and workflow processes. In [34,35], automatic detection of motor imagery EEG signals is achieved for robust brain computer interface systems. In [36], alcoholic EEG signals are recognized using a CNN and geometrical features. Graphical features are among the newest approaches for identifying underlying patterns in EEG signals. They have been used for effective depression detection [37] as well as seizure recognition [38].

Several other intelligent computing algorithms have also been reported:

- a chimp-inspired optimization scheme [39], an intelligent optimization algorithm that solves different problems with reasonable accuracy by balancing the exploration and exploitation phases;

- a Kohonen neural network [40], an unsupervised self-organizing (SO) competitive neural network that performs automatic clustering and updates the network weights through SO feature mapping, and that has been applied effectively to intrusion detection of network viruses;

- a Mayfly algorithm [41], a swarm intelligence-based heuristic that has successfully solved different engineering optimization problems, including the asymmetric traveling salesman problem, owing to its population diversity and enhanced local search capability;

- a simplified slime mould algorithm [42], a modified version of the slime mould heuristic that introduces enhanced adaptive oscillation for better exploration during the early search phase, applied to wireless sensor network optimization problems;

- a code pathfinder algorithm [43], a discrete complex code pathfinder heuristic with improved exploration capability that efficiently solves the wind farm layout optimization problem;

- a firefighting strategy-based marine predators approach [44], an improved variant of the marine predators heuristic for forest fire rescue problems. It introduces opposition-based learning for a more uniform initial population and an adaptive weight factor to balance exploration and exploitation.
Further examples include:

- a chaotic grey wolf optimizer [45], a modified grey wolf optimizer that incorporates chaotic maps and an adaptive convergence factor for robust and accurate parameter estimation of control autoregressive systems;

- a subtraction average based optimizer [46], an optimization approach in which search agents update their positions in the search space using a subtraction average;

- an enhanced dragonfly heuristic [47], an enhanced version of the dragonfly algorithm with improved global and local search mechanisms for the four color map problem;

- a non-dominated sorting genetic algorithm [48], a modified variant of the genetic algorithm with a special congestion approach and an adaptive crossover scheme to effectively solve multi-objective and multi-modal optimization problems;

- a green anaconda optimizer [49], an optimization heuristic that mimics the natural behavior of green anacondas to solve various benchmark optimization challenges.

Intelligent computing-based methodologies have been proposed for bioinformatics and biotechnology applications as well:

- a combination of a graph neural network and CNN for efficient breast cancer classification [50];

- deep learning and transfer learning through a regional CNN for white blood cell detection [51];

- a fine-tuned neural network and a long short-term memory-based neural network for skin disease [52];

- a convolutional autoencoder and transfer learning-based scheme for Alzheimer’s disease visualization [53];

- a perceptron neural network for bacterial behavior programming [54];

- a deep neural architecture with a generative adversarial network for brain tumor classification [55];

- a deep neural network for epidemic prediction of COVID-19 [56];

- deep learning for sequential analysis of biomolecules [57];

- elastic net and neural networks for identification tasks in plant genomics [58];

- data mining and machine learning algorithms based on spectral clustering, random forest, and neural networks for cancer diagnosis from gene data [8];

- a stacking ensemble model for infectious diseases [9], based on autoregressive integrated moving average, exponential smoothing, neural network autoregression, gradient-boosting regression tree, and extreme gradient boosting models;

- supervised machine learning algorithms for lung disease detection, respiratory sound analysis, and related tasks [10].

Motivated by these widespread applications, this study exploits artificial intelligence techniques to study the dynamics of PDI.

3. Proposed Methodology

Before developing the INSNs, this section introduces the mathematical model of PDI. Let the rhythms of cerebral activity at k points of the cerebral cortex be measured by EEG and defined as [13]:

where shows the discrete approximation of and represent the white Gaussian noise. Accurate acquisition of the EEG signals is of great significance, and the signal must be denoised before further processing. Multiscale principal component analysis (MSPCA), which combines principal component analysis with wavelets [59], plays a vital role in signal denoising. It has been used for robust motor imagery brain computer interface classification [60,61]. The system of differential equations is constructed as [13]:

The (k × j) matrix of unknown coefficients D, matrix Y, and matrix are introduced in (3), (4), and (5), respectively:

Considering k = 3 and [13]:

For practical application, let the EEG signal of the subject be recorded at three different points of the cerebral cortex and treated as three time series describing the behavior in three-dimensional space. In standard medical procedure, measuring sensors are placed at defined points on the cerebral cortex. In this study, we considered the magnitude of the electrical impulses at points P3, P4, and O1, as well as at C3, C4, and T5, designated by the coordinates y1, y2, and y3. A three-dimensional system based on these time series was constructed as:

System (7) simulates the impulses at C3, C4, and T5, while the systems of differential equations in (8) and (9) simulate the impulses at the P3, P4, and O1 points:

Next, the implementation details of the proposed intelligent neuro-supervised networks are presented. The proposed scheme is implemented in two steps:

- Reference dataset generation: First, the reference dataset for the INSNs is generated by numerically solving the PDI models (7) to (9). The Adams method is used through the ‘NDSolve’ routine of Mathematica to solve the systems of differential equations over the input interval. The step size is 0.2, i.e., a total of 251 inputs (time instances) and, accordingly, 753 outputs (measurements), with 251 discrete instances for each of y1, y2, and y3. The parameter values of the quantities of interest are taken from [13], as are the initial values representing the sensor locations for the electrical rhythms of the brain. Further justification of the parameters on the basis of theoretical analyses, i.e., global and local stability and population dynamics, can be found in [13].

- Developing neuro-supervised networks: The INSNs are constructed as neural networks with a logistic activation function to solve the PDI models (7) to (9). For backpropagation, two different optimization algorithms are used: Levenberg–Marquardt (LM) and Bayesian regularization (BR). With LM, 20 hidden neurons are used for all three PDI models (7) to (9). With BR, 50 hidden neurons are used for PDI model (7) and 100 for the remaining two PDI models (8) and (9).
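The reference-data step can be sketched as follows. Mathematica’s `NDSolve` Adams method is a variable-order, variable-step scheme; this sketch assumes a simpler fixed-step fourth-order Adams–Bashforth method instead, started with classical Runge–Kutta steps. The coefficients of (7) to (9) are not reproduced here, so `placeholder_rhs` uses the Rössler system purely as a hypothetical stand-in. The Rössler system is another three-dimensional ODE with a quadratic term. The time grid and initial values below are likewise illustrative assumptions, not those of [13].

```python
import numpy as np

def placeholder_rhs(t, y, a=0.2, b=0.2, c=5.7):
    # Hypothetical stand-in (Roessler system), NOT the PDI model (7), (8) or (9).
    y1, y2, y3 = y
    return np.array([-y2 - y3, y1 + a * y2, b + y3 * (y1 - c)])

def rk4_step(f, t, y, h):
    # Classical Runge-Kutta step, used only to start the multistep method
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h / 2 * k1)
    k3 = f(t + h / 2, y + h / 2 * k2)
    k4 = f(t + h, y + h * k3)
    return y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)

def adams_bashforth4(f, t, y0):
    """Fixed-step 4th-order Adams-Bashforth solution on a uniform grid t (len(t) >= 4)."""
    h = t[1] - t[0]
    y = np.zeros((t.size, len(y0)))
    y[0] = y0
    for n in range(3):                                # RK4 start-up for the first three steps
        y[n + 1] = rk4_step(f, t[n], y[n], h)
    hist = [f(t[n], y[n]) for n in range(4)]          # derivative history f_0 ... f_3
    for n in range(3, t.size - 1):
        y[n + 1] = y[n] + h / 24 * (55 * hist[-1] - 59 * hist[-2]
                                    + 37 * hist[-3] - 9 * hist[-4])
        hist.append(f(t[n + 1], y[n + 1]))
    return y

# Illustrative grid: 251 time instances and 3 states give 753 target values
t = np.linspace(0.0, 5.0, 251)
Y = adams_bashforth4(placeholder_rhs, t, np.array([1.0, 1.0, 1.0]))
targets = Y.T.ravel()   # all y1 values, then all y2 values, then all y3 values
```

Stacking the three state trajectories gives 3 × 251 = 753 target values, matching the count stated above. In principle, only the right-hand side function would change for (7), (8), or (9).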

The LM- and BR-based optimizers adjust the weights of the neural networks by minimizing the deviation from the reference numerical solution in the mean squared error (MSE) sense. The MSE and the absolute error (AE) used to assess the performance of the proposed INSNs are defined as:
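The following NumPy sketch makes the two optimizers concrete. It trains a one-input network with a single logistic hidden layer and a linear output. This architecture (one network per state variable) is an assumption, not the exact MATLAB configuration used here.

- With `bayesian=False`, each epoch takes a Levenberg–Marquardt step Δw = (JᵀJ + μI)⁻¹Jᵀe on the sum of squared errors. The damping factor μ is adapted by ×0.1 on success and ×10 on failure; these are assumed values.
- With `bayesian=True`, the objective becomes βE_D + αE_W, where E_D is the sum of squared errors and E_W the sum of squared weights. α and β are then re-estimated from the effective number of parameters γ, using the Gauss–Newton approximation of MacKay’s evidence framework.

The function names, initialization, and starting hyperparameters are hypothetical. MSE and AE are computed at the end in their usual form.

```python
import numpy as np

def logistic(z):
    # Overflow-free logistic: 1 / (1 + exp(-z)) == 0.5 * (1 + tanh(z / 2))
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def unpack(w, hidden):
    # Assumed layout: [input weights | hidden biases | output weights | output bias]
    return w[:hidden], w[hidden:2 * hidden], w[2 * hidden:3 * hidden], w[3 * hidden]

def forward(w, x, hidden):
    w1, b1, w2, b2 = unpack(w, hidden)
    h = logistic(np.outer(x, w1) + b1)               # hidden activations, shape (n, hidden)
    return h @ w2 + b2, h                             # linear output neuron

def jacobian(w, x, hidden):
    # d(output)/d(parameters): one row per sample, columns in unpack() order
    w2 = unpack(w, hidden)[2]
    _, h = forward(w, x, hidden)
    dh = h * (1.0 - h) * w2                           # chain rule through the logistic layer
    return np.hstack([dh * x[:, None], dh, h, np.ones((x.size, 1))])

def train(x, y, hidden=20, epochs=200, bayesian=False, seed=0):
    """LM on the sum of squared errors; bayesian=True adds MacKay-style regularization."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.5, size=3 * hidden + 1)    # hypothetical initialization
    n, N, I = x.size, w.size, np.eye(w.size)
    alpha, beta = (0.01, 1.0) if bayesian else (0.0, 1.0)  # assumed starting hyperparameters
    mu = 1e-3                                         # LM damping factor

    def objective(w_, a, b):
        e_ = y - forward(w_, x, hidden)[0]
        return b * (e_ @ e_) + a * (w_ @ w_), e_

    F, e = objective(w, alpha, beta)
    for _ in range(epochs):
        J = jacobian(w, x, hidden)
        JtJ = J.T @ J
        g = beta * (J.T @ e) - alpha * w              # minus one half of the gradient of F
        accepted = False
        while mu < 1e10:
            dw = np.linalg.solve(beta * JtJ + (alpha + mu) * I, g)
            F_new, e_new = objective(w + dw, alpha, beta)
            if F_new < F:                             # success: accept, lean towards Gauss-Newton
                w, e, accepted = w + dw, e_new, True
                mu = max(mu * 0.1, 1e-12)
                break
            mu *= 10.0                                # failure: lean towards gradient descent
        if not accepted:
            break
        if bayesian:
            # Effective number of parameters gamma, then re-estimate alpha and beta
            Jn = jacobian(w, x, hidden)
            gamma = N - alpha * np.trace(np.linalg.inv(beta * (Jn.T @ Jn) + alpha * I))
            gamma = min(max(gamma, 1e-6), n - 1e-6)
            alpha = gamma / (2.0 * (w @ w))
            beta = (n - gamma) / (2.0 * (e @ e))
        F = objective(w, alpha, beta)[0]
    return w

# Smoke test on a hypothetical smooth target (not PDI data)
x = np.linspace(0.0, 5.0, 251)
y = np.sin(x)
w_lm = train(x, y, hidden=10)
err = y - forward(w_lm, x, 10)[0]
mse, ae = np.mean(err ** 2), np.abs(err)              # MSE and pointwise absolute error
```

The sin(x) target is only a smoke test. In the INSN setting, x would be the time grid and y one of y1, y2, or y3 from the reference dataset.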

The proposed INSNs may play a significant role in solving PDI mathematical models. As PDI is a complex neurological disorder, its accurate mathematical modeling can help in understanding the underlying mechanisms, predicting its progression, and developing effective treatment strategies. The proposed INSNs are capable of analyzing complex data, identifying patterns, and making predictions. They may thus contribute to advancing knowledge of PDI and improving patient care. Therefore, in this study, the authors propose a neural network-based intelligent framework for solving the PDI mathematical model. This framework could be extended toward clinical contributions, such as early and efficient diagnosis and prediction of PDI. Moreover, the proposed INSNs could assist in optimizing PDI treatment strategies by considering factors such as age, symptoms, and medication history. They could also help predict the most effective treatment options and dosages for individual patients. This may support personalized medicine approaches and improved patient outcomes.

INSNs can be computationally demanding, especially when dealing with large datasets. Training and optimizing INSNs for PDI models may require significant computational resources and time. This may limit their practical implementation, particularly for researchers with limited computing resources.

4. Performance Analyses

The simulation results of the proposed INSNs for PDI models 1, 2, and 3 presented in (7), (8), and (9), respectively, are provided in this section by considering both the BR and LM optimization algorithms.

To analyze the performance of the proposed INSNs, a reference dataset is first generated with the Adams solver for PDI models 1, 2, and 3, presented in (7), (8), and (9), respectively, for inputs in [0, 5] with a step size of 0.025. The dataset for each of the three PDI models is randomly segmented into training, testing, and validation sets in proportions of 80%, 10%, and 10%, respectively. A block diagram of the proposed INSN layer structure is presented in Part 3 of Figure 1; the structure is implemented in the MATLAB fitting tool.
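A random 80/10/10 partition of the kind described above can be sketched as follows. It is similar in spirit to MATLAB’s random data division. The function `divide_random`, its seed, and its rounding rule are hypothetical choices for illustration.

```python
import numpy as np

def divide_random(n_samples, train=0.8, val=0.1, test=0.1, seed=0):
    """Randomly partition sample indices; returns (train_idx, val_idx, test_idx)."""
    if not np.isclose(train + val + test, 1.0):
        raise ValueError("ratios must sum to 1")
    perm = np.random.default_rng(seed).permutation(n_samples)   # shuffled indices
    n_train = int(round(train * n_samples))
    n_val = int(round(val * n_samples))
    # Any rounding remainder goes to the test set
    return perm[:n_train], perm[n_train:n_train + n_val], perm[n_train + n_val:]

tr, va, te = divide_random(251)
```

For 251 samples, this yields 201 training, 25 validation, and 25 test indices. The three sets are disjoint and together cover every sample.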

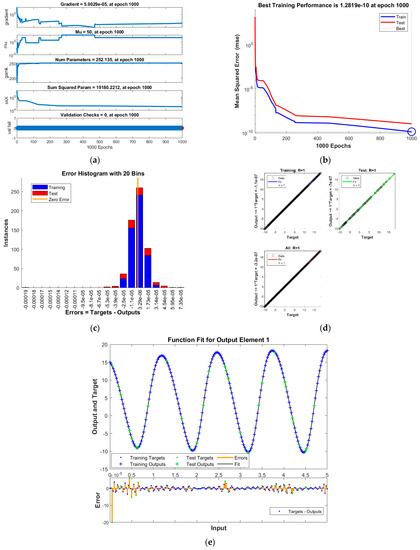

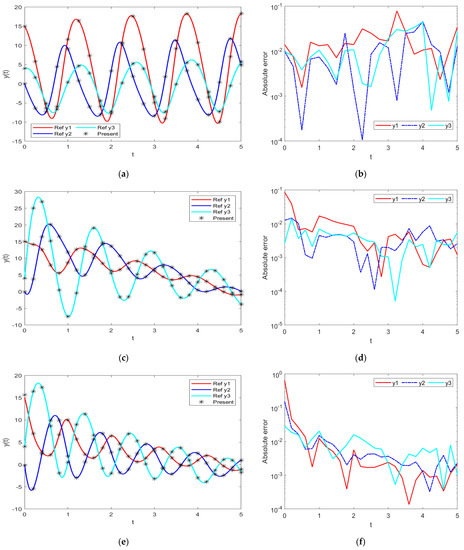

The results of INSNs with BR (INSN-BR) are given in Figure 2, Figure 3, Figure 4 and Figure 5, and the results of INSNs with LM (INSN-LM) are provided in Figure 6, Figure 7, Figure 8 and Figure 9. The results of INSN-BR are provided in Figure 2, Figure 3 and Figure 4 for PDI models 1, 2, and 3, respectively. In each of these figures, panel (a) provides the state transition values, (b) the learning curves, (c) the histogram analyses, (d) the regression results, and (e) the fitting results.

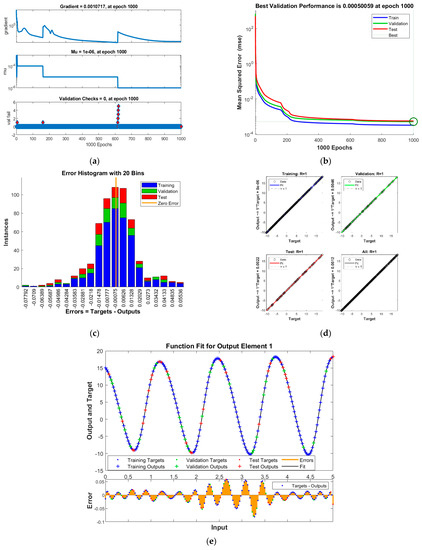

Figure 2.

Results of INSN-BR for PDI model 1: (a) State transition values; (b) Learning curve; (c) Histogram; (d) Regression results; (e) Fitting results.

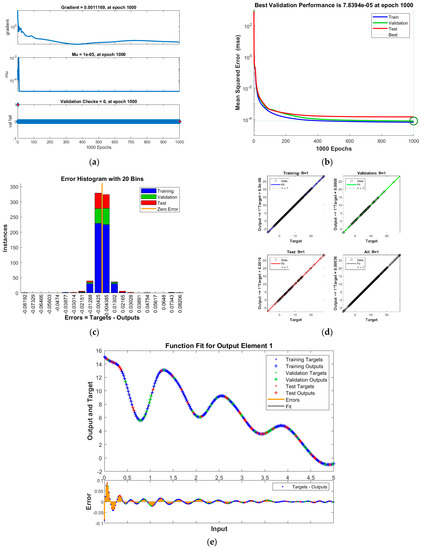

Figure 3.

Results of INSN-BR for PDI model 2: (a) State transition values; (b) Learning curve; (c) Histogram; (d) Regression results; (e) Fitting results.

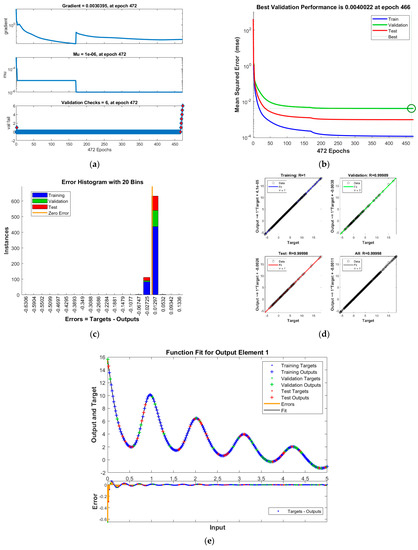

Figure 4.

Results of INSN-BR for PDI model 3: (a) State transition values; (b) Learning curve; (c) Histogram; (d) Regression results; (e) Fitting results.

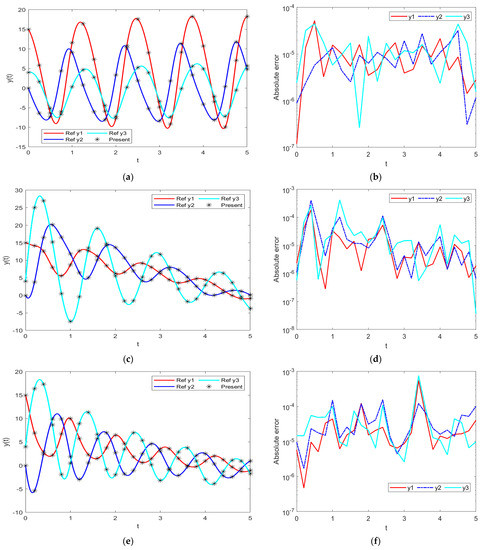

Figure 5.

Comparative results of the proposed INSN-BR with the reference numerical solution: (a) PDI model 1; (b) PDI model 1; (c) PDI model 2; (d) PDI model 2; (e) PDI model 3; (f) PDI model 3.

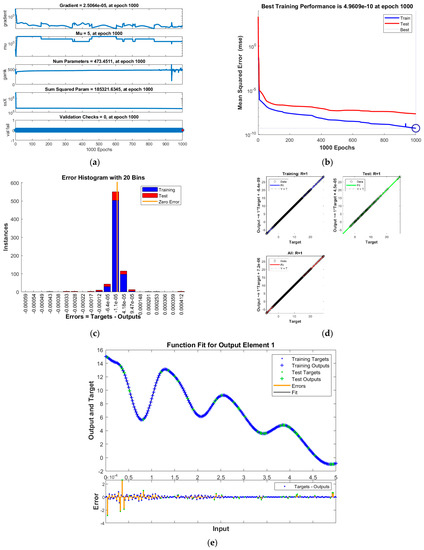

Figure 6.

Results of INSN-LM for PDI model 1: (a) State transition values; (b) Learning curve; (c) Histogram; (d) Regression results; (e) Fitting results.

Figure 7.

Results of INSN-LM for PDI model 2: (a) State transition values; (b) Learning curve; (c) Histogram; (d) Regression results; (e) Fitting results.

Figure 8.

Results of INSN-LM for PDI model 3: (a) State transition values; (b) Learning curve; (c) Histogram; (d) Regression results; (e) Fitting results.

Figure 9.

Comparative results of the proposed INSN-LM with the reference numerical solution: (a) PDI model 1; (b) PDI model 1; (c) PDI model 2; (d) PDI model 2; (e) PDI model 3; (f) PDI model 3.

The best training performance of the proposed INSN-BR is 1.2819 × 10⁻¹⁰ at 1000 epochs, 4.9609 × 10⁻¹⁰ at 1000 epochs, and 1.531 × 10⁻⁹ at 33 epochs for PDI models 1, 2, and 3, respectively. The corresponding gradients are [5.0029 × 10⁻⁵, 2.5064 × 10⁻⁵, 3.7703 × 10⁻⁸] and the learning rates are [50, 5, 500,000], respectively. Further, the histogram analyses show that the bin at the zero-error reference line corresponds to errors of around 3.29 × 10⁻⁶, −1.1 × 10⁻⁵, and −3.7 × 10⁻⁶ for PDI models 1, 2, and 3, respectively. Moreover, the regression results show a correlation coefficient of R = 1 for all three PDI models, which confirms the correctness of the proposed INSN-BR.
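The histogram and regression panels can be reproduced from any pair of target and output vectors. The sketch below makes three assumptions: errors are computed as target minus output, the histogram uses 20 equal-width bins, and R is the Pearson correlation between outputs and targets. As we understand MATLAB-style regression plots, this R is the quantity they show; the coefficient of determination would be R². The sketch reports the bin centre nearest to zero error together with R. The function `error_summary` is hypothetical.

```python
import numpy as np

def error_summary(target, output, bins=20):
    """Error statistics of the kind shown in histogram (c) and regression (d) panels."""
    target = np.asarray(target, dtype=float)
    output = np.asarray(output, dtype=float)
    err = target - output                                  # assumed convention: target - output
    counts, edges = np.histogram(err, bins=bins)           # equal-width error bins
    centres = 0.5 * (edges[:-1] + edges[1:])
    return {
        "mse": float(np.mean(err ** 2)),
        "max_ae": float(np.max(np.abs(err))),
        "counts": counts,
        "centre_nearest_zero": float(centres[np.argmin(np.abs(centres))]),
        "R": float(np.corrcoef(output, target)[0, 1]),     # Pearson correlation coefficient
    }

t = np.linspace(0.0, 1.0, 101)
s = error_summary(t, t + 1e-3 * np.sin(40 * t))            # hypothetical near-perfect fit
```

A near-perfect fit like this one gives R close to 1 even though the errors are not zero. Small absolute errors and R = 1 are therefore complementary checks rather than redundant ones.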

To further demonstrate the accuracy of the proposed INSN-BR, the absolute error is calculated for all three PDI models. The results are presented in Figure 5, along with a comparison of the INSN-BR solutions with the reference numerical solutions. The results in Figure 5 endorse the efficacy of the proposed INSN-BR.

The results of the proposed INSN-LM are provided in Figure 6, Figure 7 and Figure 8 for PDI models 1, 2, and 3, respectively. Figure 6a, Figure 7a, and Figure 8a provide the state transition values; Figure 6b, Figure 7b, and Figure 8b provide the learning curves; Figure 6c, Figure 7c, and Figure 8c present the histogram analyses; Figure 6d, Figure 7d, and Figure 8d provide the regression results; and Figure 6e, Figure 7e, and Figure 8e present the fitting plots.

The best validation performance of the proposed INSN-LM is 5.0059 × 10⁻³ at 1000 epochs, 7.8394 × 10⁻⁵ at 1000 epochs, and 4.0022 × 10⁻³ at 466 epochs for PDI models 1, 2, and 3, respectively. The corresponding gradients are [0.0010, 0.0011, 0.0030] and the learning rates are [1 × 10⁻⁶, 1 × 10⁻⁵, 1 × 10⁻⁶], respectively. Further, the histogram analyses show that the bin at the zero-error reference line corresponds to errors of around −7.7 × 10⁻⁴, −4.25 × 10⁻³, and 1.29 × 10⁻² for PDI models 1, 2, and 3, respectively. Moreover, the regression results show a correlation coefficient of R = 1 for all three PDI models, which confirms the correctness of the proposed INSN-LM. To further demonstrate the accuracy of the proposed INSN-LM, the absolute error is calculated for all three PDI models. The results are presented in Figure 9, along with a comparison of the INSN-LM solutions with the reference numerical solutions. The results in Figure 9 endorse the efficacy of the proposed INSN-LM.

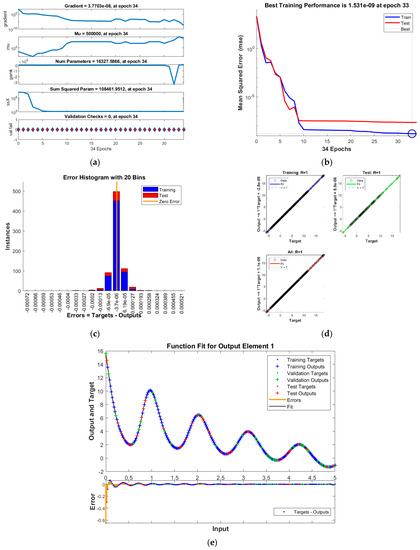

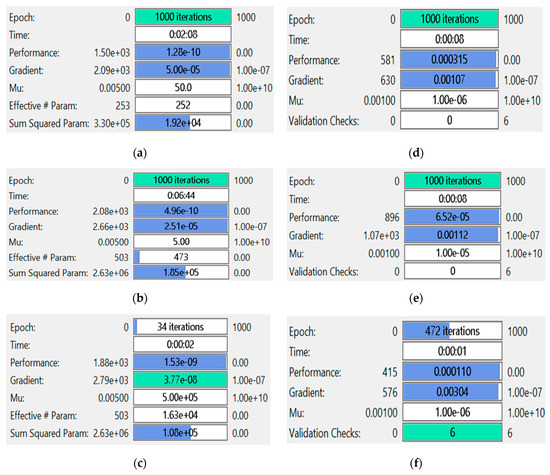

INSN-BR and INSN-LM are also compared for all three PDI models with respect to the MSE-based fitness values, the number of epochs, the computation time, and BR/LM parameters such as gradient and learning rate. The results are presented in Figure 10.

Figure 10.

Performance comparison of INSN-BR and INSN-LM for PDI models: (a) INSN-BR for PDI model 1; (b) INSN-BR for PDI model 2; (c) INSN-BR for PDI model 3; (d) INSN-LM for PDI model 1; (e) INSN-LM for PDI model 2; (f) INSN-LM for PDI model 3.

Figure 10a–c provide the results of INSN-BR for PDI models 1, 2, and 3, respectively, while the corresponding results of INSN-LM are given in Figure 10d–f, where # denotes “number of”. INSN-BR attains performance values of 1.2819 × 10⁻¹⁰, 4.9609 × 10⁻¹⁰, and 1.531 × 10⁻⁹ in times (h:mm:ss) of 0:02:08, 0:06:44, and 0:00:02 with 1000, 1000, and 34 epochs, respectively. INSN-LM, meanwhile, attains performance values of 3.12 × 10⁻⁴, 6.54 × 10⁻⁵, and 1.10 × 10⁻⁴ in times of 0:00:08, 0:00:08, and 0:00:01 with 1000, 1000, and 472 epochs. The results clearly indicate that INSN-BR provides more accurate results than INSN-LM, but at the cost of considerably longer training times for PDI models 1 and 2.
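Converting the reported wall-clock times to seconds quantifies the accuracy/cost trade-off. The short sketch below uses only the values quoted above. `to_seconds` is a hypothetical helper that assumes the h:mm:ss format.

```python
def to_seconds(hms):
    """Convert an 'h:mm:ss' string to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return 3600 * h + 60 * m + s

# Values reported above for PDI models 1, 2, and 3
time_br = ["0:02:08", "0:06:44", "0:00:02"]
time_lm = ["0:00:08", "0:00:08", "0:00:01"]
mse_br = [1.2819e-10, 4.9609e-10, 1.531e-9]
mse_lm = [3.12e-4, 6.54e-5, 1.10e-4]

time_ratio = [to_seconds(b) / to_seconds(l) for b, l in zip(time_br, time_lm)]
mse_ratio = [l / b for b, l in zip(mse_br, mse_lm)]
print(time_ratio)   # [16.0, 50.5, 2.0]
print(mse_ratio)    # about 2.4e6, 1.3e5 and 7.2e4
```

On these figures, INSN-BR needed roughly 16 and 50 times the training time of INSN-LM for models 1 and 2. In exchange, its reported MSE was lower by factors between about 7 × 10⁴ and 2.4 × 10⁶.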

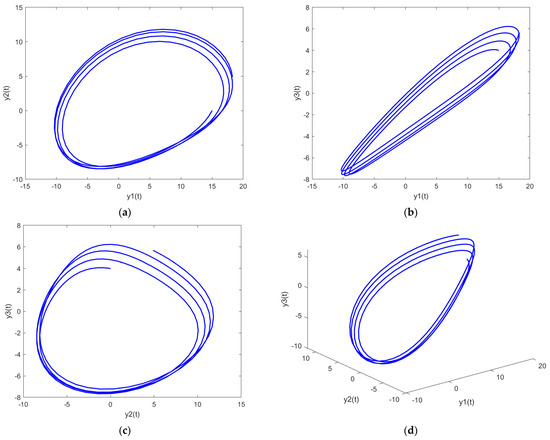

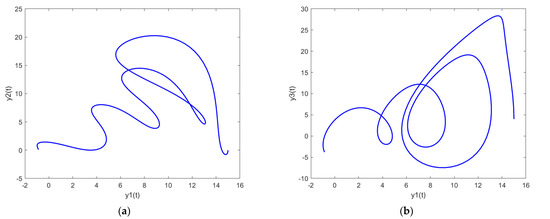

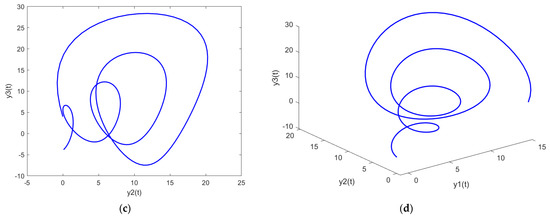

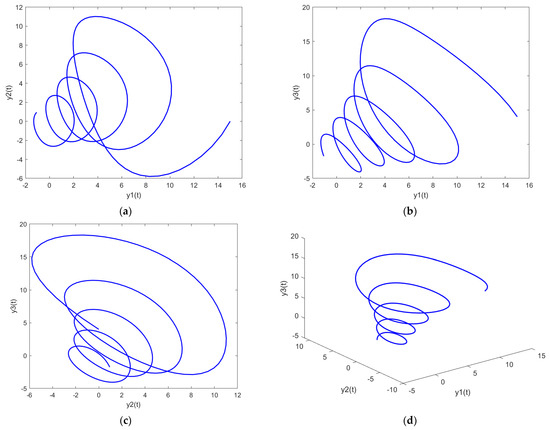

To further analyze the behavior of the PDI models (7) to (9), parametric plots are also drawn and presented in Figure 11, Figure 12 and Figure 13 for PDI models 1, 2, and 3, respectively.

Figure 11.

Parametric plots of PDI model 1: (a) y1 vs. y2; (b) y1 vs. y3; (c) y2 vs. y3; (d) y1 vs. y2 vs. y3.

Figure 12.

Parametric plots of PDI model 2: (a) y1 vs. y2; (b) y1 vs. y3; (c) y2 vs. y3; (d) y1 vs. y2 vs. y3.

Figure 13.

Parametric plots of PDI model 3: (a) y1 vs. y2; (b) y1 vs. y3; (c) y2 vs. y3; (d) y1 vs. y2 vs. y3.

Figure 11a, Figure 12a and Figure 13a show the parametric plots of y1 and y2 for PDI models 1, 2, and 3, respectively. Similarly, Figure 11b, Figure 12b and Figure 13b provide the parametric plots of y1 and y3, and Figure 11c, Figure 12c and Figure 13c provide the parametric plots of y2 and y3 for PDI models 1, 2, and 3. To deepen the analysis, 3D parametric plots are also constructed and presented in Figure 11d, Figure 12d and Figure 13d for PDI models 1, 2, and 3, respectively. The parametric plots in Figure 11, Figure 12 and Figure 13 further illustrate the phase-space behavior of the PDI models.

5. Conclusions

- This study presented intelligent neuro-supervised networks (INSNs) to study the dynamics of Parkinson’s disease illness (PDI) through the rhythms of brain electrical activity measured at different locations on the cerebral cortex, represented by a system of three differential equations. Two types of INSNs, INSN-LM and INSN-BR, are constructed from multilayer neural networks trained by backpropagation with the Levenberg–Marquardt and Bayesian regularization algorithms, respectively. The Adams solver is used to generate the reference data for the input grids and target samples of the INSNs. This is done for different PDI models, obtained by varying the sensor locations in order to measure the impact of the rhythms of brain electrical activity. The dataset for each of the three PDI models is randomly segmented into training, testing, and validation sets in proportions of 80%, 10%, and 10%, respectively. The networks are trained by optimizing a fitness function based on the mean squared error criterion. The values of the mean squared error and absolute error endorse the accuracy and correctness of the proposed INSN-LM and INSN-BR for all three PDI models. Further, the analyses by means of error histograms, learning curves, control parameters, and the regression index all confirm the efficacy of the proposed INSNs for the PDI models. INSN-BR is more accurate than INSN-LM, albeit at the cost of a higher computational budget.

- In future work, it would be promising to incorporate fractional gradient-based algorithms [62,63] for backpropagation in INSNs for analyzing PDI models, and to investigate early and efficient diagnosis and prediction of PDI through the proposed INSNs.
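The training procedure summarized in the first conclusion above (a random 80/10/10 split, an MSE-based fitness function and Levenberg–Marquardt backpropagation) can be illustrated with the following minimal pure-Python sketch. It is a deliberately reduced stand-in, not the INSN implementation: the one-neuron model a·tanh(b·x), the noise-free data from the hypothetical `make_data`, the other helper names and the damping schedule (μ starting at 1e-3, divided by 10 after an accepted step, multiplied by 10 after a rejected one, capped at 1e10) are all assumptions. Each iteration solves the damped normal equations (JᵀJ + μI)Δw = −Jᵀe, where J is the Jacobian of the model outputs with respect to the weights and e is the residual vector.

```python
import math
import random


def make_data(n=100):
    """Hypothetical noise-free samples of a smooth target curve, standing in
    for the Adams-generated reference data (NOT the PDI data)."""
    xs = [-2.0 + 4.0 * k / (n - 1) for k in range(n)]
    ys = [1.5 * math.tanh(0.8 * x) for x in xs]
    return xs, ys


def split_80_10_10(n, seed=0):
    """Randomly permute sample indices and cut them 80/10/10 into
    training, testing and validation index lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train, n_test = (8 * n) // 10, n // 10
    return idx[:n_train], idx[n_train:n_train + n_test], idx[n_train + n_test:]


def predict(w, x):
    """Hypothetical one-neuron model a * tanh(b * x) with w = (a, b)."""
    return w[0] * math.tanh(w[1] * x)


def mse(w, xs, ys, idx):
    """Mean squared error over the samples listed in idx (the fitness)."""
    return sum((predict(w, xs[i]) - ys[i]) ** 2 for i in idx) / len(idx)


def lm_fit(xs, ys, idx, w0=(0.5, 0.5), mu=1e-3, max_iter=200):
    """Levenberg-Marquardt: solve (J^T J + mu I) dw = -J^T e; accept the
    step and shrink mu if the training MSE drops, else grow mu and retry."""
    w = list(w0)
    loss = mse(w, xs, ys, idx)
    for _ in range(max_iter):
        g11 = g12 = g22 = r1 = r2 = 0.0  # entries of J^T J and J^T e
        for i in idx:
            t = math.tanh(w[1] * xs[i])
            j1, j2 = t, w[0] * xs[i] * (1.0 - t * t)  # d(pred)/da, d(pred)/db
            e = w[0] * t - ys[i]                       # residual
            g11, g12, g22 = g11 + j1 * j1, g12 + j1 * j2, g22 + j2 * j2
            r1, r2 = r1 + j1 * e, r2 + j2 * e
        while mu < 1e10:
            a11, a22 = g11 + mu, g22 + mu
            det = a11 * a22 - g12 * g12  # Cramer's rule on the 2x2 system
            step = ((-r1 * a22 + r2 * g12) / det, (-r2 * a11 + r1 * g12) / det)
            trial = [w[0] + step[0], w[1] + step[1]]
            trial_loss = mse(trial, xs, ys, idx)
            if trial_loss < loss:
                w, loss, mu = trial, trial_loss, mu / 10.0
                break
            mu *= 10.0
        else:
            break  # damping hit its cap without finding a decrease
        if loss < 1e-24:
            break
    return w, loss


xs, ys = make_data()
train, test, val = split_80_10_10(len(xs))
w, train_mse = lm_fit(xs, ys, train)
print(len(train), len(test), len(val))  # 80 10 10
print(f"test MSE {mse(w, xs, ys, test):.2e}, validation MSE {mse(w, xs, ys, val):.2e}")
```

Bayesian regularization, as in INSN-BR, would instead minimize a combined objective F = βE_D + αE_W, where E_D is the sum of squared errors and E_W the sum of squared weights, with α and β re-estimated during training; that extension is not implemented in this sketch.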

Author Contributions

Conceptualization, M.A.Z.R.; methodology, R.M. and N.I.C.; software, R.M.; validation, M.A.Z.R.; writing—original draft preparation, R.M.; writing—review and editing, N.I.C. and M.A.Z.R.; supervision, C.-Y.C. and M.A.Z.R.; project administration, C.-Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kalia, L.V.; Lang, A.E. Parkinson’s disease. Lancet 2015, 386, 896–912.

- Feigin, V.L.; Nichols, E.; Alam, T.; Bannick, M.S.; Beghi, E.; Blake, N.; Fischer, F. Global, regional, and national burden of neurological disorders, 1990–2016: A systematic analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 2019, 18, 459–480.

- Dorsey, E.; Sherer, T.; Okun, M.S.; Bloem, B.R. The emerging evidence of the Parkinson pandemic. J. Parkinson’s Dis. 2018, 8, S3–S8.

- Deuschl, G.; Beghi, E.; Fazekas, F.; Varga, T.; Christoforidi, K.A.; Sipido, E.; Feigin, V.L. The burden of neurological diseases in Europe: An analysis for the Global Burden of Disease Study 2017. Lancet Public Health 2020, 5, e551–e567.

- Bakshi, S.; Chelliah, V.; Chen, C.; van der Graaf, P.H. Mathematical biology models of Parkinson’s disease. CPT Pharmacomet. Syst. Pharmacol. 2019, 8, 77–86.

- Sarbaz, Y.; Pourakbari, H. A review of presented mathematical models in Parkinson’s disease: Black-and gray-box models. Med. Biol. Eng. Comput. 2016, 54, 855–868.

- Anninou, A.P.; Groumpos, P.P. Modeling of Parkinson’s disease using fuzzy cognitive maps and non-linear Hebbian learning. Int. J. Artif. Intell. Tools 2014, 23, 1450010.

- Babichev, S.; Yasinska-Damri, L.; Liakh, I. A Hybrid Model of Cancer Diseases Diagnosis Based on Gene Expression Data with Joint Use of Data Mining Methods and Machine Learning Techniques. Appl. Sci. 2023, 13, 6022.

- Mahajan, A.; Sharma, N.; Aparicio-Obregon, S.; Alyami, H.; Alharbi, A.; Anand, D.; Sharma, M.; Goyal, N. A Novel Stacking-Based Deterministic Ensemble Model for Infectious Disease Prediction. Mathematics 2022, 10, 1714.

- Brunese, L.; Mercaldo, F.; Reginelli, A.; Santone, A. A Neural Network-Based Method for Respiratory Sound Analysis and Lung Disease Detection. Appl. Sci. 2022, 12, 3877.

- Parakkal Unni, M.; Menon, P.P.; Wilson, M.R.; Tsaneva-Atanasova, K. Ankle push-off based mathematical model for freezing of gait in Parkinson’s disease. Front. Bioeng. Biotechnol. 2020, 8, 552635.

- Hayete, B.; Wuest, D.; Laramie, J.; McDonagh, P.; Church, B.; Eberly, S.; Ravina, B. A Bayesian mathematical model of motor and cognitive outcomes in Parkinson’s disease. PLoS ONE 2017, 12, e0178982.

- Belozyotov, V.Y.; Zaytsev, V.G. Mathematical modelling of Parkinson’s illness by chaotic dynamics methods. Probl. Math. Model. Theory Differ. Equ. 2017, 9, 21–39.

- Borah, M.; Das, D.; Gayan, A.; Fenton, F.; Cherry, E. Control and anticontrol of chaos in fractional-order models of Diabetes, HIV, Dengue, Migraine, Parkinson’s and Ebola virus diseases. Chaos Solitons Fractals 2021, 153, 111419.

- Danciu, D. A CNN-based approach for a class of non-standard hyperbolic partial differential equations modeling distributed parameters (nonlinear) control systems. Neurocomputing 2015, 164, 56–70.

- Mwata-Velu, T.Y.; Avina-Cervantes, J.G.; Cruz-Duarte, J.M.; Rostro-Gonzalez, H.; Ruiz-Pinales, J. Imaginary Finger Movements Decoding Using Empirical Mode Decomposition and a Stacked BiLSTM Architecture. Mathematics 2021, 9, 3297.

- Stoean, R.; Ionescu, L.; Stoean, C.; Boicea, M.; Atencia, M.; Joya, G. A deep learning-based surrogate for the xrf approximation of elemental composition within archaeological artefacts before restoration. Procedia Comput. Sci. 2021, 192, 2002–2011.

- Atencia, M.; Stoean, R.; Joya, G. Uncertainty quantification through dropout in time series prediction by echo state networks. Mathematics 2020, 8, 1374.

- Issa, D.; Demirci, M.F.; Yazici, A. Speech emotion recognition with deep convolutional neural networks. Biomed. Signal Process Control 2020, 59, 101894.

- Zhou, J.; Zhou, J.; Ye, H.; Ali, M.L.; Chen, P.; Nguyen, H.T. Yield estimation of soybean breeding lines under drought stress using unmanned aerial vehicle-based imagery and convolutional neural network. Biosyst. Eng. 2021, 204, 90–103.

- Khan, Z.A.; Chaudhary, N.I.; Abbasi, W.A.; Ling, S.H.; Raja, M.A.Z. Design of Confidence-Integrated Denoising Auto-Encoder for Personalized Top-N Recommender Systems. Mathematics 2023, 11, 761.

- Malik, M.F.; Chang, C.-L.; Chaudhary, N.I.; Khan, Z.A.; Kiani, A.K.; Shu, C.-M.; Raja, M.A.Z. Swarming intelligence heuristics for fractional nonlinear autoregressive exogenous noise systems. Chaos Solitons Fractals 2023, 167, 113085.

- Munawar, S.; Javaid, N.; Khan, Z.A.; Chaudhary, N.I.; Raja, M.A.Z.; Milyani, A.H.; Ahmed Azhari, A. Electricity Theft Detection in Smart Grids Using a Hybrid BiGRU–BiLSTM Model with Feature Engineering-Based Preprocessing. Sensors 2022, 22, 7818.

- Altaf, F.; Chang, C.-L.; Chaudhary, N.I.; Cheema, K.M.; Raja, M.A.Z.; Shu, C.-M.; Milyani, A.H. Novel Fractional Swarming with Key Term Separation for Input Nonlinear Control Autoregressive Systems. Fractal Fract. 2022, 6, 348.

- Mehmood, K.; Chaudhary, N.I.; Khan, Z.A.; Cheema, K.M.; Raja, M.A.Z.; Milyani, A.H.; Azhari, A.A. Nonlinear Hammerstein System Identification: A Novel Application of Marine Predator Optimization Using the Key Term Separation Technique. Mathematics 2022, 10, 4217.

- Wang, F.; Zhang, Z.; Liu, C.; Yu, Y.; Pang, S.; Duić, N.; Shafie-Khah, M.; Catalão, J.P. Generative adversarial networks and convolutional neural networks based weather classification model for day ahead short-term photovoltaic power forecasting. Energy Convers. Manag. 2019, 181, 443–462.

- Mahmood, T.; Ali, N.; Raja, M.A.Z.; Chaudhary, N.I.; Cheema, K.M.; Shu, C.-M.; Milyani, A.H. Intelligent backpropagated predictive networks for dynamics of the power-law fluidic model with moving wedge and flat plate. Waves Random Complex Media 2023, 1–26.

- Mahmood, T. Novel adaptive Bayesian regularization networks for peristaltic motion of a third-grade fluid in a planar channel. Mathematics 2022, 10, 358.

- Raja, M.A.Z.; Sabati, M.; Parveen, N.; Awais, M.; Awan, S.E.; Chaudhary, N.I.; Shoaib, M.; Alquhayz, H. Integrated intelligent computing application for effectiveness of Au nanoparticles coated over MWCNTs with velocity slip in curved channel peristaltic flow. Sci. Rep. 2021, 11, 22550.

- Raja, M.A.Z.; Khan, Z.; Zuhra, S.; Chaudhary, N.I.; Khan, W.U.; He, Y.; Islam, S.; Shoaib, M. Cattaneo-christov heat flux model of 3D hall current involving biconvection nanofluidic flow with Darcy-Forchheimer law effect: Backpropagation neural networks approach. Case Stud. Therm. Eng. 2021, 26, 101168.

- Lipu, M.H.; Hannan, M.A.; Karim, T.F.; Hussain, A.; Saad, M.H.M.; Ayob, A.; Miah, M.S.; Mahlia, T.I. Intelligent algorithms and control strategies for battery management system in electric vehicles: Progress, challenges and future outlook. J. Clean. Prod. 2021, 292, 126044.

- Kaffash, S.; Nguyen, A.T.; Zhu, J. Big data algorithms and applications in intelligent transportation system: A review and bibliometric analysis. Int. J. Prod. Econ. 2021, 231, 107868.

- Ahmad, A.; Cuomo, S.; Wu, W.; Jeon, G. Intelligent algorithms and standards for interoperability in Internet of Things. Future Gener. Comput. Syst. 2019, 92, 1187–1191.

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Aziz, M.Z.; Siuly, S.; Ding, W. Toward the development of versatile brain–computer interfaces. IEEE Trans. Artif. Intell. 2021, 2, 314–328.

- Yu, X.; Aziz, M.Z.; Sadiq, M.T.; Fan, Z.; Xiao, G. A new framework for automatic detection of motor and mental imagery EEG signals for robust BCI systems. IEEE Trans. Instrum. Meas. 2021, 70, 1006612.

- Sadiq, M.T.; Akbari, H.; Siuly, S.; Li, Y.; Wen, P. Alcoholic EEG signals recognition based on phase space dynamic and geometrical features. Chaos Solitons Fractals 2022, 158, 112036.

- Akbari, H.; Sadiq, M.T.; Payan, M.; Esmaili, S.S.; Baghri, H.; Bagheri, H. Depression Detection Based on Geometrical Features Extracted from SODP Shape of EEG Signals and Binary PSO. Trait. Du Signal 2021, 38, 13–26.

- Akbari, H.; Sadiq, M.T.; Jafari, N.; Too, J.; Mikaeilvand, N.; Cicone, A.; Serra-Capizzano, S. Recognizing seizure using Poincaré plot of EEG signals and graphical features in DWT domain. Bratisl. Med. J./Bratisl. Lek. Listy 2023, 124, 12–24.

- Xiang, Y.; Zhou, Y.; Huang, H.; Luo, Q. An Improved Chimp-Inspired Optimization Algorithm for Large-Scale Spherical Vehicle Routing Problem with Time Windows. Biomimetics 2022, 7, 241.

- Zhou, G.; Miao, F.; Tang, Z.; Zhou, Y.; Luo, Q. Kohonen neural network and symbiotic-organism search algorithm for intrusion detection of network viruses. Front. Comput. Neurosci. 2023, 17, 1079483.

- Zhang, T.; Zhou, Y.; Zhou, G.; Deng, W.; Luo, Q. Discrete Mayfly Algorithm for spherical asymmetric traveling salesman problem. Expert Syst. Appl. 2023, 221, 119765.

- Wei, Y.; Wei, X.; Huang, H.; Bi, J.; Zhou, Y.; Du, Y. SSMA: Simplified slime mould algorithm for optimization wireless sensor network coverage problem. Syst. Sci. Control Eng. 2022, 10, 662–685.

- Li, N.; Zhou, Y.; Luo, Q.; Huang, H. Discrete complex-valued code pathfinder algorithm for wind farm layout optimization problem. Energy Convers. Manag. X 2022, 16, 100307.

- Chen, J.; Luo, Q.; Zhou, Y.; Huang, H. Firefighting multi strategy marine predators algorithm for the early-stage Forest fire rescue problem. Appl. Intell. 2022, 53, 15496–15515.

- Mehmood, K.; Chaudhary, N.I.; Khan, Z.A.; Cheema, K.M.; Raja, M.A.Z. Variants of Chaotic Grey Wolf Heuristic for Robust Identification of Control Autoregressive Model. Biomimetics 2023, 8, 141.

- Trojovský, P.; Dehghani, M. Subtraction-Average-Based Optimizer: A New Swarm-Inspired Metaheuristic Algorithm for Solving Optimization Problems. Biomimetics 2023, 8, 149.

- Zhong, L.; Zhou, Y.; Zhou, G.; Luo, Q. Enhanced discrete dragonfly algorithm for solving four-color map problems. Appl. Intell. 2023, 53, 6372–6400.

- Deng, W.; Zhang, X.; Zhou, Y.; Liu, Y.; Zhou, X.; Chen, H.; Zhao, H. An enhanced fast non-dominated solution sorting genetic algorithm for multi-objective problems. Inf. Sci. 2022, 585, 441–453.

- Dehghani, M.; Trojovský, P.; Malik, O.P. Green Anaconda Optimization: A New Bio-Inspired Metaheuristic Algorithm for Solving Optimization Problems. Biomimetics 2023, 8, 121.

- Zhang, Y.D.; Satapathy, S.C.; Guttery, D.S.; Górriz, J.M.; Wang, S.H. Improved breast cancer classification through combining graph convolutional network and convolutional neural network. Inf. Process Manag. 2021, 58, 102439.

- Kutlu, H.; Avci, E.; Özyurt, F. White blood cells detection and classification based on regional convolutional neural networks. Med. Hypotheses 2020, 135, 109472.

- Srinivasu, P.N.; SivaSai, J.G.; Ijaz, M.F.; Bhoi, A.K.; Kim, W.; Kang, J.J. Classification of skin disease using deep learning neural networks with MobileNet V2 and LSTM. Sensors 2021, 21, 2852.

- Oh, K.; Chung, Y.C.; Kim, K.W.; Kim, W.S.; Oh, I.S. Classification and visualization of Alzheimer’s disease using volumetric convolutional neural network and transfer learning. Sci. Rep. 2019, 9, 18150.

- Becerra, A.G.; Gutiérrez, M.; Lahoz-Beltra, R. Computing within bacteria: Programming of bacterial behavior by means of a plasmid encoding a perceptron neural network. BioSystems 2022, 213, 104608.

- Ghassemi, N.; Shoeibi, A.; Rouhani, M. Deep neural network with generative adversarial networks pre-training for brain tumor classification based on MR images. Biomed. Signal Process Control 2020, 57, 101678.

- Cinaglia, P.; Cannataro, M. Forecasting COVID-19 epidemic trends by combining a neural network with rt estimation. Entropy 2022, 24, 929.

- Roethel, A.; Biliński, P.; Ishikawa, T. BioS2Net: Holistic Structural and Sequential Analysis of Biomolecules Using a Deep Neural Network. Int. J. Mol. Sci. 2022, 23, 2966.

- Abbas, Z.; Tayara, H.; Chong, K.T. ENet-6mA: Identification of 6mA Modification Sites in Plant Genomes Using ElasticNet and Neural Networks. Int. J. Mol. Sci. 2022, 23, 8314.

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Aziz, M.Z. Motor imagery BCI classification based on novel two-dimensional modelling in empirical wavelet transform. Electron. Lett. 2020, 56, 1367–1369.

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Aziz, M.Z.; ur Rehman, N.; Ding, W.; Xiao, G. Motor imagery BCI classification based on multivariate variational mode decomposition. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 1177–1189.

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Zeming, F.; Rehman, A.U.; Ullah, I.; Li, G.; Xiao, G. Motor imagery EEG signals decoding by multivariate empirical wavelet transform-based framework for robust brain–computer interfaces. IEEE Access 2019, 7, 171431–171451.

- Herzog, B. Fractional Stochastic Search Algorithms: Modelling Complex Systems via AI. Mathematics 2023, 11, 2061.

- Xu, C.; Mao, Y. Auxiliary model-based multi-innovation fractional stochastic gradient algorithm for Hammerstein output-error systems. Machines 2021, 9, 247.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).