1. Introduction

According to the traditional Chinese medicine system, the human ear is the place where the meridians of the human body converge, which can be helpful in disease diagnoses and treatment [

1,

2]. Modern medicine has also paid attention to the ear acupoints and systematic ear acupoint therapy for the efficient diagnoses and treatment of various diseases [

3,

4,

5,

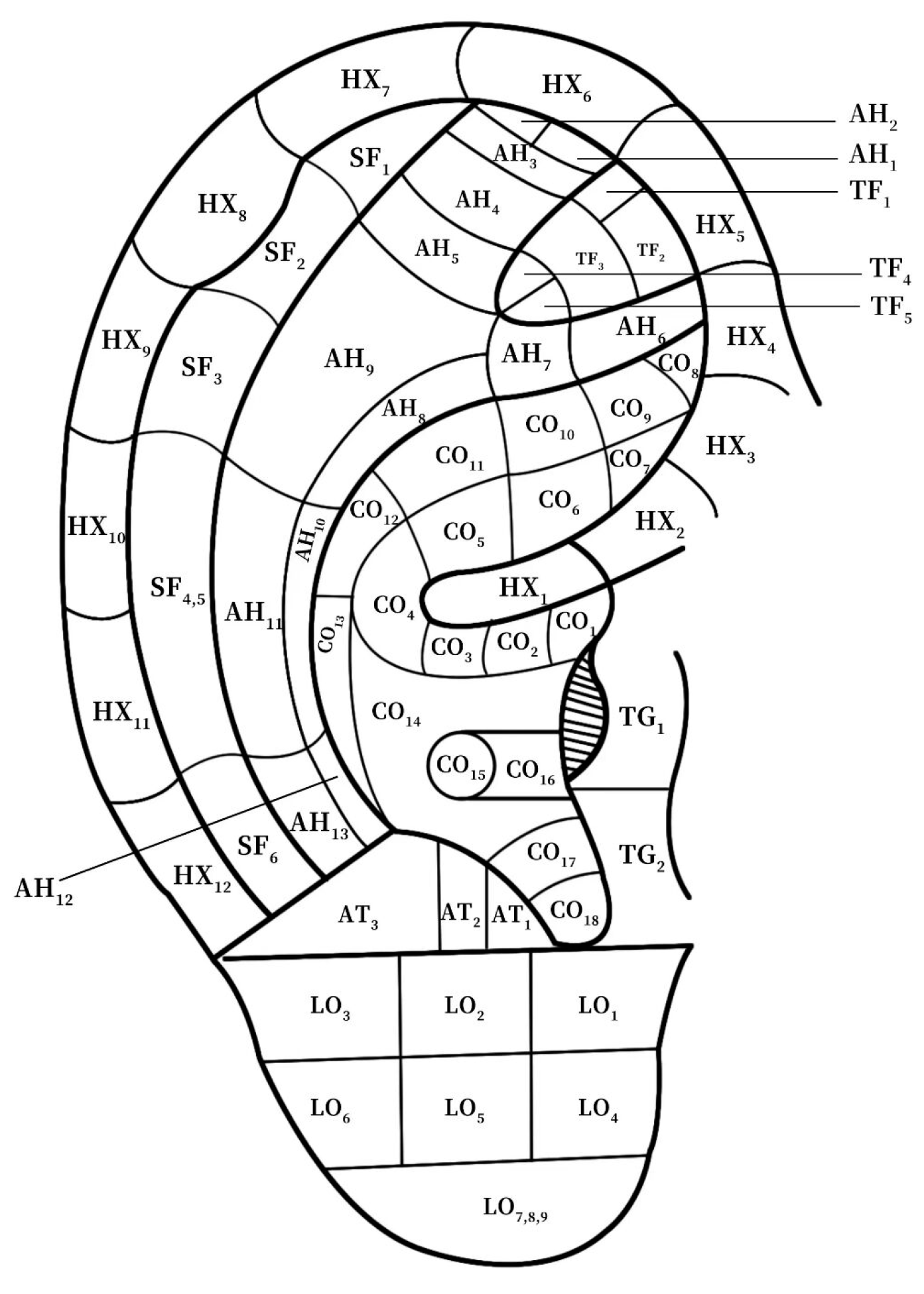

6]. Obviously, ear image identification is the premise of disease diagnoses and treatment. Due to the large differences in the human ear in individuals and the dense acupoint area, there are difficulties in actual ear diagnosis. According to the most widely used Chinese national standard for ear acupoints, GB/T13734-2008, the segmentation of the auricular region area mainly focuses on dividing the anatomical structure of these acupoints, combining the naming and positioning of the acupoint area and the point. The partition of ear acupoints can be divided into nine large areas, namely the helix, antihelix, cymba conchae, cavum conchae, fossae helicis, fossae triangularis auriculae, tragus, antitragus, and earlobe. Herein, according to the respective standards, the helix can be divided into 12 areas, the antihelix into 13 areas, the concha auriculae (including the cymba conchae and cavum conchae) into 18 areas, the fossae helicis into 6 areas, the fossae triangularis auriculae into 5 areas, the tragus into 4 areas, the antitragus into 4 areas, and the earlobe into 9 areas [

7], which can be distinguished by an orderly connection of 91 human ear acupoints scattered on the ears. According to the preliminary investigation, the current acupoint positioning is still in a very primitive operation stage, with many urgent problems needing to be solved:

(1) The locations of the acupoints described in traditional textbooks are neither intuitive nor clear;

(2) Practitioners need extensive training and considerable effort to locate acupoints accurately;

(3) It is difficult for non-professionals to find the right acupoints for daily healthcare.
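As a quick consistency check on the GB/T13734-2008 subdivision described above, the per-region area counts can be held in a small lookup table. This Python sketch is illustrative only; the dictionary `EAR_AREA_COUNTS` and the helper `total_areas` are hypothetical names, not part of the standard or of the authors' software.

```python
# Hypothetical lookup table of the area counts quoted from GB/T13734-2008 [7].
# The cymba conchae and cavum conchae are counted together as the concha auriculae.
EAR_AREA_COUNTS = {
    "helix": 12,
    "antihelix": 13,
    "concha auriculae": 18,  # cymba conchae + cavum conchae
    "fossae helicis": 6,
    "fossae triangularis auriculae": 5,
    "tragus": 4,
    "antitragus": 4,
    "earlobe": 9,
}


def total_areas(counts: dict) -> int:
    """Return the total number of acupoint areas over all regions."""
    return sum(counts.values())


if __name__ == "__main__":
    print(total_areas(EAR_AREA_COUNTS))  # 71
```

Summing the counts gives 71 areas; the table has eight entries rather than nine because the two conchal areas share one count in the standard.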

Although acupoint practice has remained at an early, largely manual stage, the medical value of TCM acupoints is recognized in China and worldwide and has broad prospects. This is especially true of acupoint massage, which can be performed at home, is easy to carry out, and offers a very economical and convenient form of healthcare with good results. If the difficulty of locating acupoints can be reduced so that people without professional training can also find them quickly and accurately and apply massage, this would greatly benefit the popularization of TCM acupoints and the development of traditional Chinese medicine. Using a computer to identify and display ear acupoint areas and acupoints can not only effectively promote the development of traditional Chinese medicine but also opens a new field of application for computer vision.

Several studies have addressed the detection, normalization, feature extraction, and recognition of the human external ear (auricle). For example, Li Yibo et al. used the gradient vector flow snake (GVF snake) algorithm to automatically detect and segment the auricle [8]; Li Sujuan et al. proposed a normalization method for human ear images [9]; and Gao Shuxin et al. used the active shape model (ASM) algorithm to detect the contour of the outer ear [10].

Building on ASM, Timothy F. Cootes et al. added an appearance model, forming the relatively mature active appearance model (AAM) algorithm for image identification [11]. The original AAM algorithm was not robust to interference from the external environment. To address this, E. Antonakos et al. combined histogram of oriented gradients (HOG) features with the AAM algorithm to reduce the impact of lighting and occlusion on the recognition of target images [12], and the Lucas–Kanade algorithm was introduced into the AAM search process to improve its efficiency [13]. For images with complex feature point connections, AAM feature point identification often requires many iterations and easily falls into a local optimum; Gauss–Newton optimization [14], Bayesian formulations [15], and other methods have therefore been introduced into the AAM optimization process to improve its efficiency. In terms of applications, researchers have mainly used AAM for face identification, with good results [16,17,18,19]. Chang Menglong et al. used the ASM algorithm to locate acupoints that coincide with facial feature points [20]. Compared with ASM, AAM can identify and divide ear acupoint areas more accurately and establish the contour of the object from the training set. Based on AAM, Wang Yihui et al. localized ear regions in human ear images by separately connecting the feature points that make up each region [21].

The basic idea of the AAM algorithm is to describe an object, originally a face, by two components: shape and texture. Recognition and tracking are achieved by modeling both. The shape model is built from a set of key points, while the texture model is built from the gray-level vectors sampled within the shape. Because fitting an AAM to an image locates all of the model's points at once, the matching process detects the face and its facial features simultaneously. The AAM algorithm has therefore been widely applied to both face detection and facial feature detection.

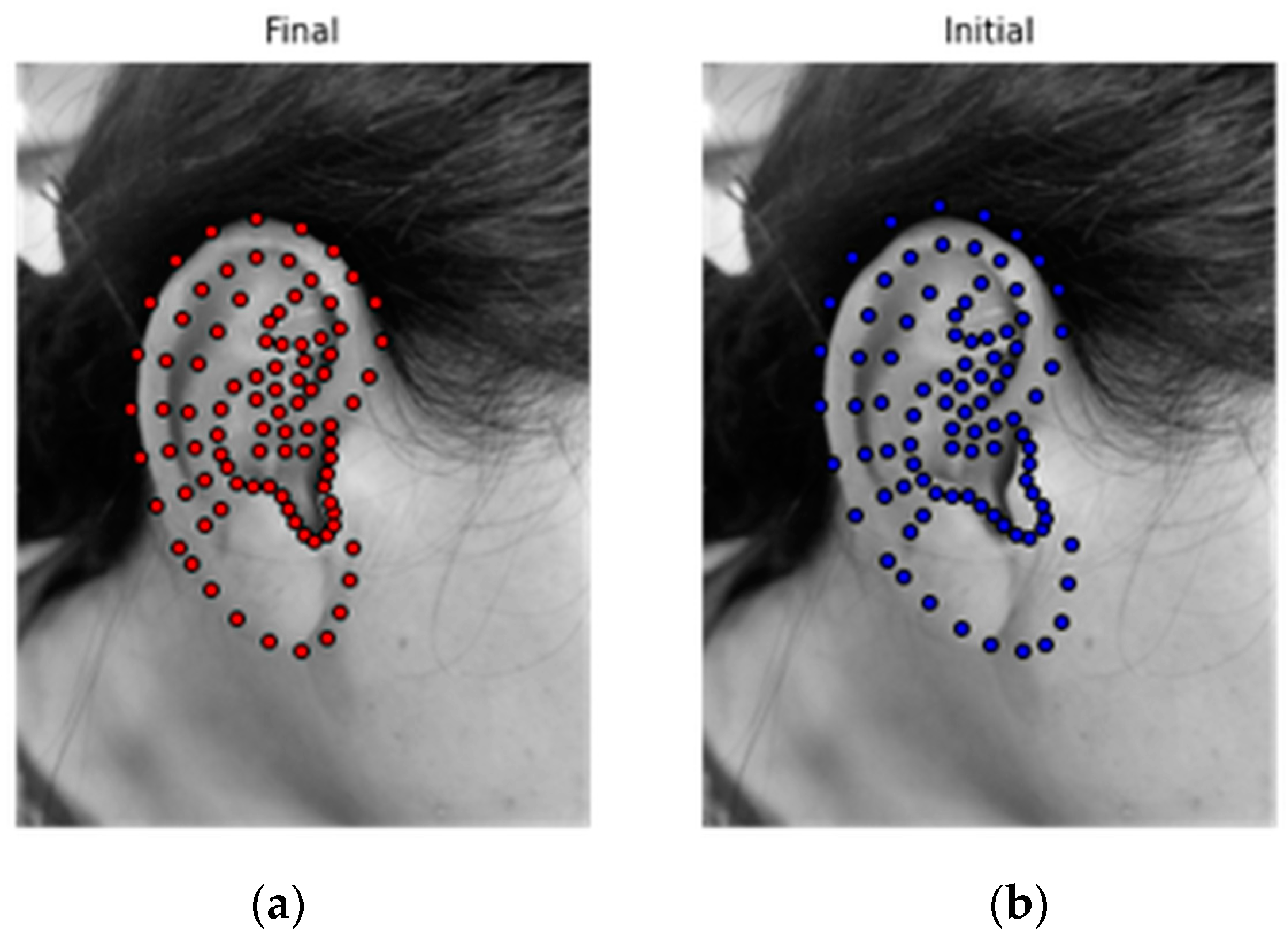

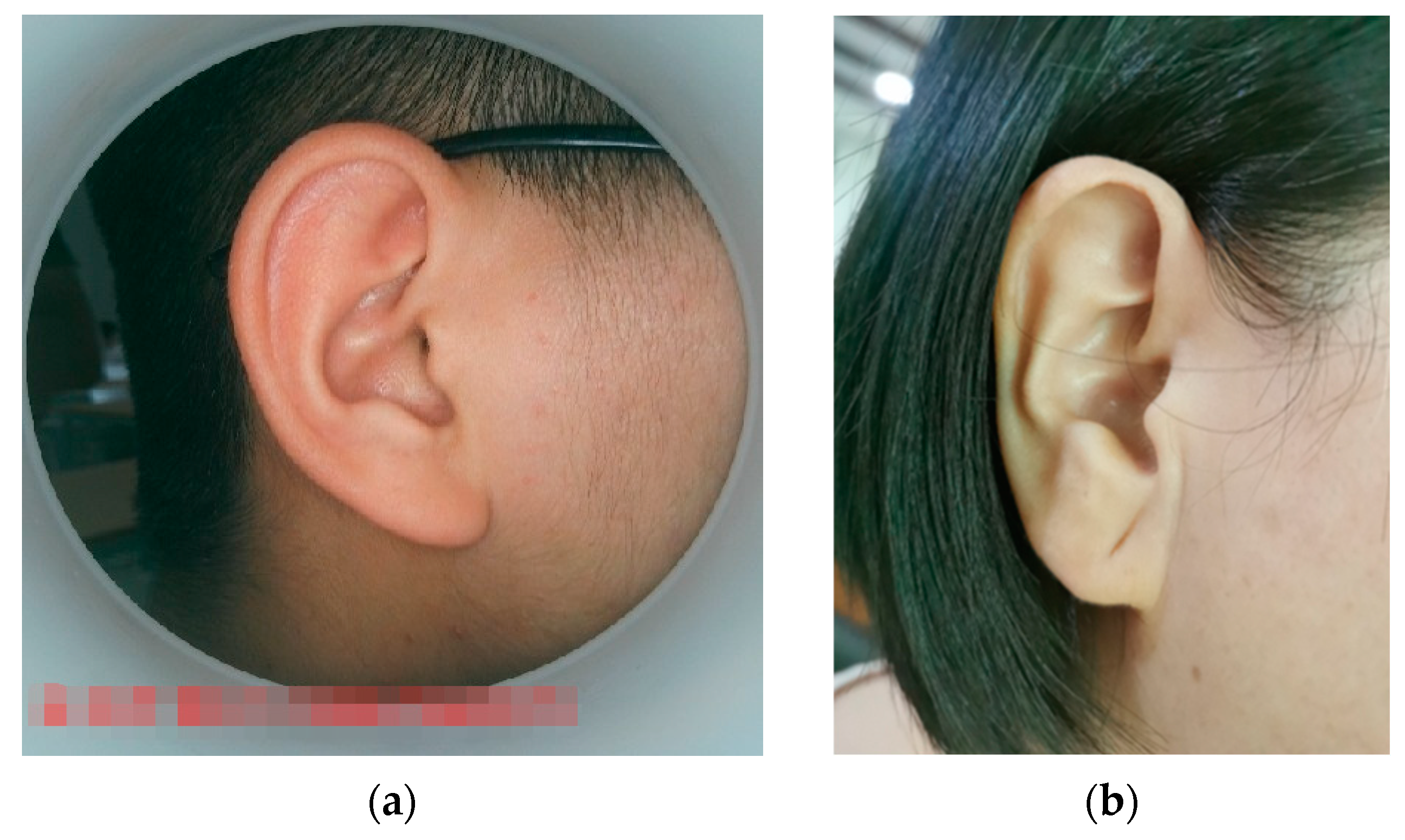

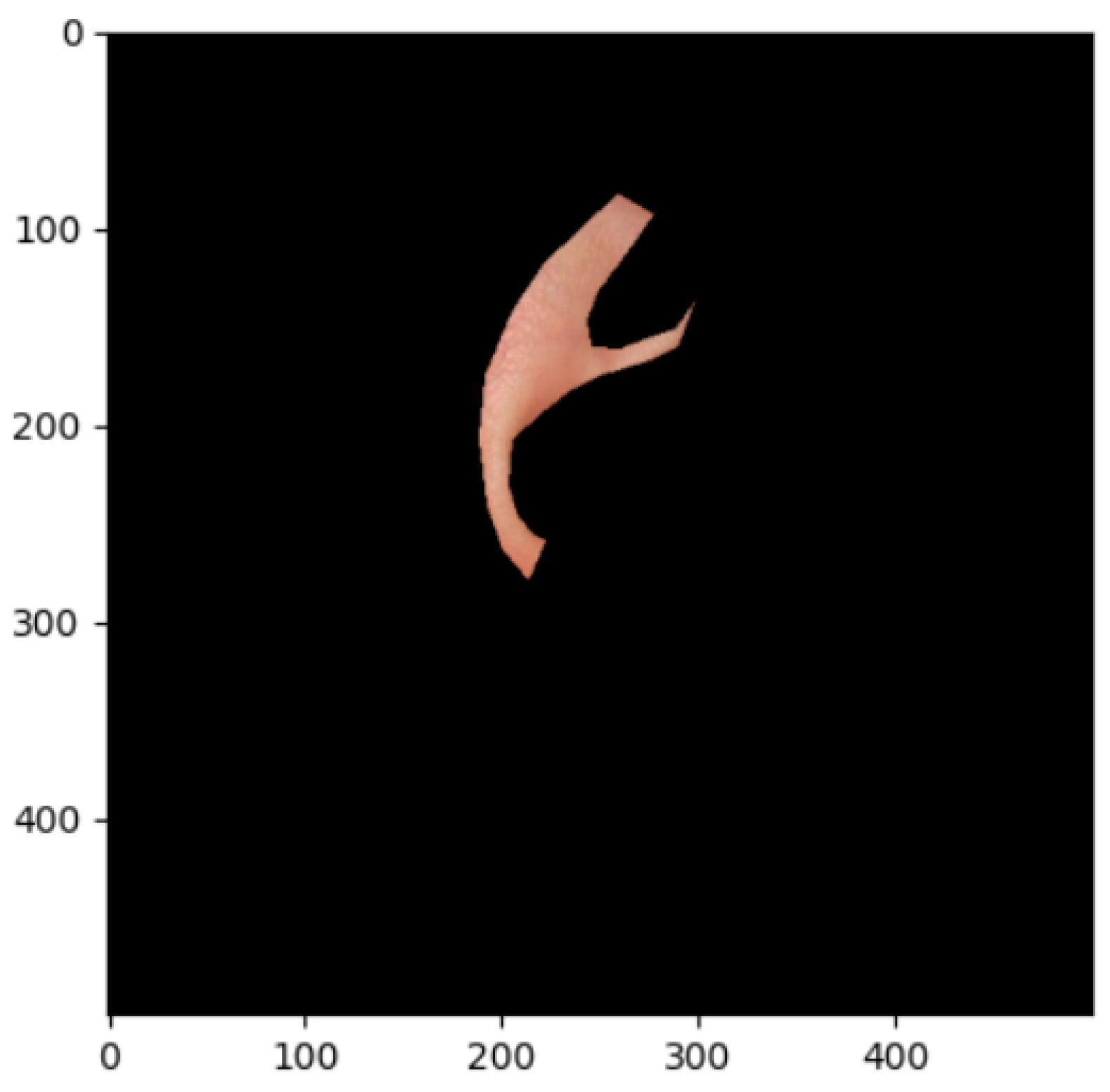

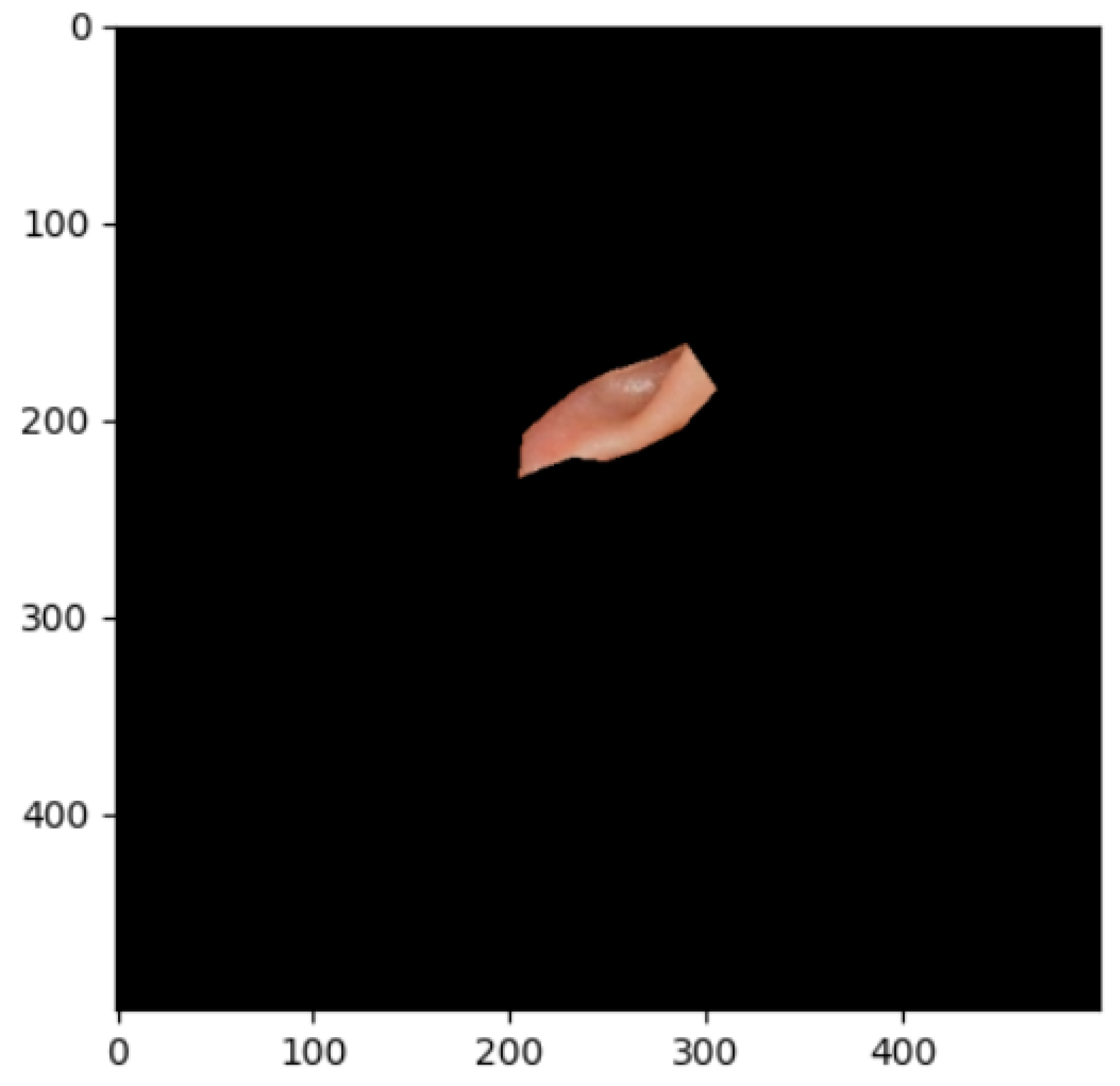

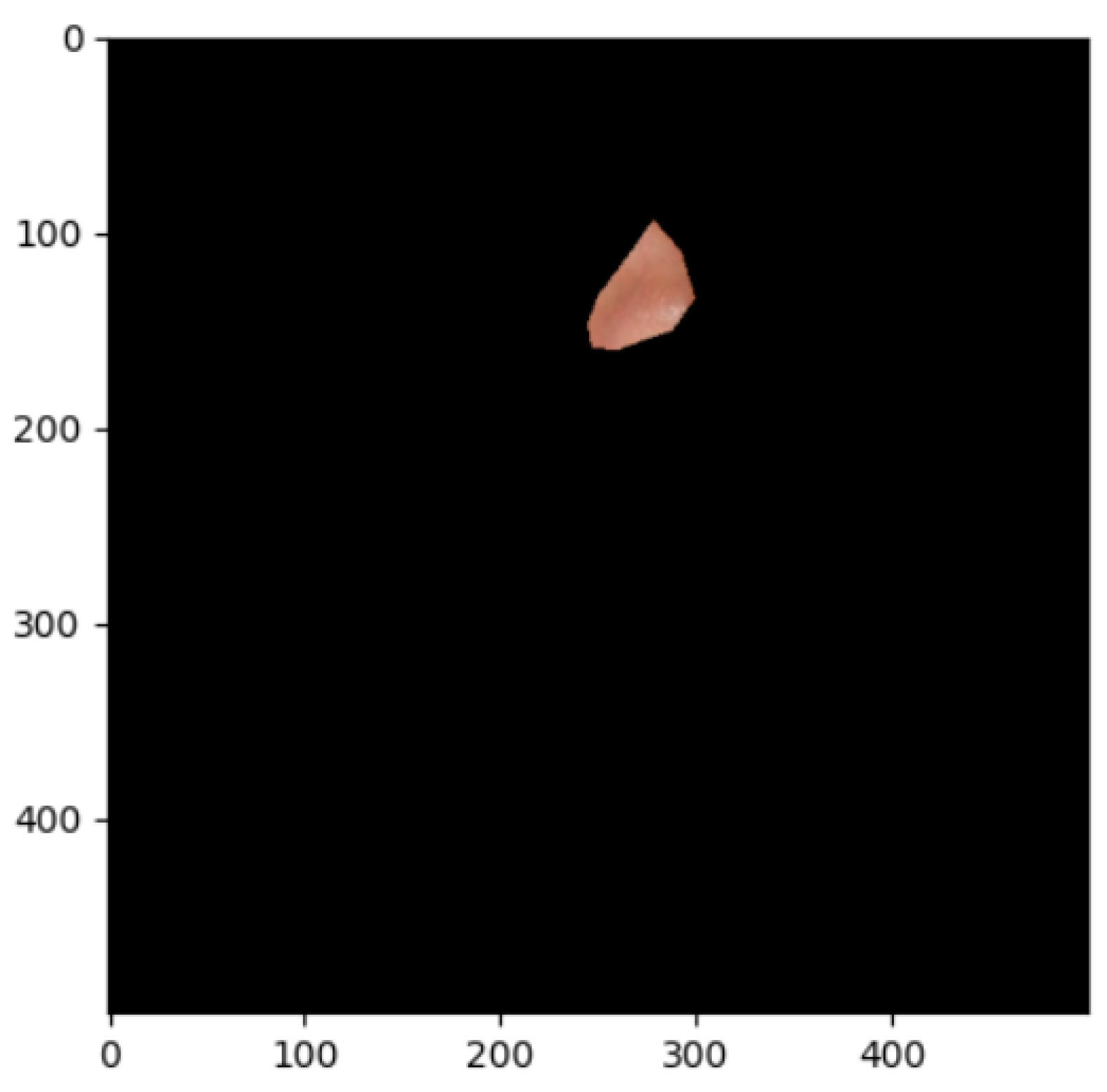

To improve the identification, segmentation, and feature point matching of ear acupoint region images, and to visually show the distribution of acupoint areas over the whole ear, the AAM algorithm was used to obtain 91 feature points from a human ear image, from which the ear acupoints and acupoint regions were identified. An ear region division method that visually represents the structure of an arbitrary ear was constructed, and ear area segmentation and highlighting of specified acupoints were achieved. The complete AAM-based ear image processing method was applied to practical ear diagnosis.

3. AAM Algorithm Training Process

3.1. Establishment of the Shape Model

The input feature points are converted into a vector $a_i$ that characterizes the shape of each image:

$$a_i = (x_{i1}, y_{i1}, x_{i2}, y_{i2}, \ldots, x_{i,N/2}, y_{i,N/2})^{T}, \qquad i = 1, 2, \ldots, n,$$

where $i$ is the index of the image, $a_i$ describes the shape in image $i$, $N/2$ is the number of feature points, $x$ and $y$ are the horizontal and vertical coordinates measured from the top-left corner of the image, and $n$ is the total number of training images.
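A minimal sketch of building the shape vectors $a_i$ described above, assuming the landmarks of each image are stored as an array of (x, y) pixel coordinates. The interleaved ordering $(x_1, y_1, x_2, y_2, \ldots)$ is an assumption (storing all x values and then all y values is also common), and the function names are hypothetical.

```python
import numpy as np


def shape_vector(points) -> np.ndarray:
    """Flatten (N/2, 2) landmarks [(x1, y1), (x2, y2), ...] into a length-N
    shape vector (x1, y1, x2, y2, ...)."""
    pts = np.asarray(points, dtype=float)
    if pts.ndim != 2 or pts.shape[1] != 2:
        raise ValueError("expected an array of shape (num_points, 2)")
    return pts.reshape(-1)  # row-major flattening interleaves x and y


def stack_shapes(landmark_sets) -> np.ndarray:
    """Place the shape vectors a_1, ..., a_n of n training images as the
    columns of an N x n matrix."""
    return np.column_stack([shape_vector(p) for p in landmark_sets])
```

With 91 feature points per ear image, each shape vector has N = 182 entries, matching the order of the weight matrix used in the alignment step.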

(1) Normalization of the shape model

The feature points are aligned to the average ear shape using the Procrustes method. The transformation applied to the shape vector $a_i$ of each image has four degrees of freedom: two for translation, one for rotation, and one for scale. After each shape is transformed by these four pose parameters, the residual between the transformed shape and the reference (average) shape is denoted $Z_i$, and normalization becomes the problem of minimizing the weighted error

$$E_i = Z_i^{T} W Z_i,$$

where $W$ is a diagonal matrix of order $N$ (here $N = 182$) whose weights satisfy

$$\omega_k = \left( \sum_{l=1}^{N/2} V_{R_{kl}} \right)^{-1},$$

with $V_{R_{kl}}$ the variance, across the training samples, of the distance between point $k$ and point $l$. Points whose positions are stable relative to the other points therefore receive larger weights; each point's weight is applied to both its $x$ and $y$ coordinates.
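The alignment step can be sketched as a generalized Procrustes analysis using the distance-variance point weights described above. This is a minimal NumPy sketch, not the authors' implementation: the helper names are hypothetical, the closed-form weighted similarity fit represents 2-D points as complex numbers (an implementation choice), and a small `eps` is assumed to guard against zero distance variance.

```python
import numpy as np


def point_weights(shapes: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """w_k = 1 / sum_l Var(R_kl): R_kl is the distance between points k and l,
    and the variance is taken across training samples.

    shapes: array of shape (n_samples, num_points, 2)."""
    diffs = shapes[:, :, None, :] - shapes[:, None, :, :]  # (n, P, P, 2)
    dists = np.linalg.norm(diffs, axis=-1)                 # (n, P, P)
    return 1.0 / (dists.var(axis=0).sum(axis=1) + eps)     # eps avoids division by zero


def align(shape: np.ndarray, target: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Weighted similarity alignment (translation, rotation, scale) of `shape`
    onto `target`, minimizing sum_k w_k * |T(p_k) - q_k|^2."""
    z = shape[:, 0] + 1j * shape[:, 1]       # points as complex numbers
    zt = target[:, 0] + 1j * target[:, 1]
    cz = np.sum(w * z) / np.sum(w)           # weighted centroids
    czt = np.sum(w * zt) / np.sum(w)
    z0, zt0 = z - cz, zt - czt
    # Optimal scale and rotation combined into one complex factor c = s * exp(i*theta).
    c = np.sum(w * np.conj(z0) * zt0) / np.sum(w * np.abs(z0) ** 2)
    out = c * z0 + czt
    return np.column_stack([out.real, out.imag])


def generalized_procrustes(shapes: np.ndarray, n_iter: int = 10):
    """Iteratively align all shapes to a unit-size mean shape.
    Returns (aligned shapes, mean shape, point weights)."""
    w = point_weights(shapes)
    mean = shapes[0] - shapes[0].mean(axis=0)  # first shape as initial reference
    mean = mean / np.linalg.norm(mean)
    aligned = shapes.astype(float)
    for _ in range(n_iter):
        aligned = np.stack([align(s, mean, w) for s in shapes])
        new_mean = align(aligned.mean(axis=0), mean, w)  # keep orientation fixed
        new_mean -= new_mean.mean(axis=0)
        mean = new_mean / np.linalg.norm(new_mean)
    return aligned, mean, w
```

Writing each point as $x + iy$ turns the rotation-plus-scale part of the transform into multiplication by a single complex number, so once both shapes are centered on their weighted centroids the weighted least-squares fit has a one-line closed form.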

(2) Principal component analysis (PCA)

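The normalized vectors $a_i$ are collected into a matrix $A$. Multiplying $A$ (with the mean shape subtracted from each column) by its transpose gives the covariance matrix of the feature point positions, which describes how those positions vary:

$$S = \frac{1}{n} \sum_{i=1}^{n} (a_i - a_{avg})(a_i - a_{avg})^{T}.$$

Let the eigenvalues and eigenvectors of $S$ be $(\lambda_1, \lambda_2, \ldots, \lambda_N)$ and $(n_1, n_2, \ldots, n_N)$, respectively, sorted by decreasing eigenvalue. The shape model is then

$$a = a_{avg} + \sum_{i=1}^{t} b_i n_i,$$

where $a_{avg}$ is the mean shape obtained after alignment, the eigenvector $n_i$ gives the $i$-th mode of variation and its direction, the eigenvalue $\lambda_i$ represents the weight of that mode in the model (it is the variance of the shape parameter $b_i$), and $t \le N$ is the number of retained modes.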
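The PCA step can be sketched with NumPy as follows. This is an illustrative sketch under stated assumptions: the aligned shape vectors are the columns of `A`, the covariance uses the $1/n$ normalization, and the number of retained modes is chosen by a cumulative-variance threshold `var_keep`, a hypothetical parameter that the text does not specify.

```python
import numpy as np


def build_pca_model(A: np.ndarray, var_keep: float = 0.98):
    """A: N x n matrix whose columns are aligned shape vectors a_i.
    Returns (a_avg, P, lambdas); the columns of P are the retained eigenvectors n_i."""
    a_avg = A.mean(axis=1)
    X = A - a_avg[:, None]
    S = X @ X.T / A.shape[1]                 # covariance matrix S (N x N)
    lambdas, vecs = np.linalg.eigh(S)        # eigh returns ascending eigenvalues
    order = np.argsort(lambdas)[::-1]        # sort modes by decreasing variance
    lambdas = np.clip(lambdas[order], 0.0, None)  # drop tiny negative round-off
    vecs = vecs[:, order]
    cum = np.cumsum(lambdas) / lambdas.sum()
    t = min(int(np.searchsorted(cum, var_keep)) + 1, len(lambdas))
    return a_avg, vecs[:, :t], lambdas[:t]


def synthesize(a_avg: np.ndarray, P: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Generate a shape a = a_avg + sum_i b_i n_i."""
    return a_avg + P @ b


def project(a: np.ndarray, a_avg: np.ndarray, P: np.ndarray) -> np.ndarray:
    """Shape parameters b of a shape vector a (P has orthonormal columns)."""
    return P.T @ (a - a_avg)
```

New ear shapes are generated by choosing the parameters $b_i$; a common convention (assumed here, not stated in the text) limits each $|b_i|$ to about $3\sqrt{\lambda_i}$ so that generated shapes stay close to the training set.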

3.2. Establishment of the Appearance Model

In addition to the shape model, an appearance model is introduced. It captures how the color (gray level) varies within the region enclosed by the feature points, so that the model can better cope with complex image variations and different lighting conditions during image capture.

(1) Normalization of the shape model

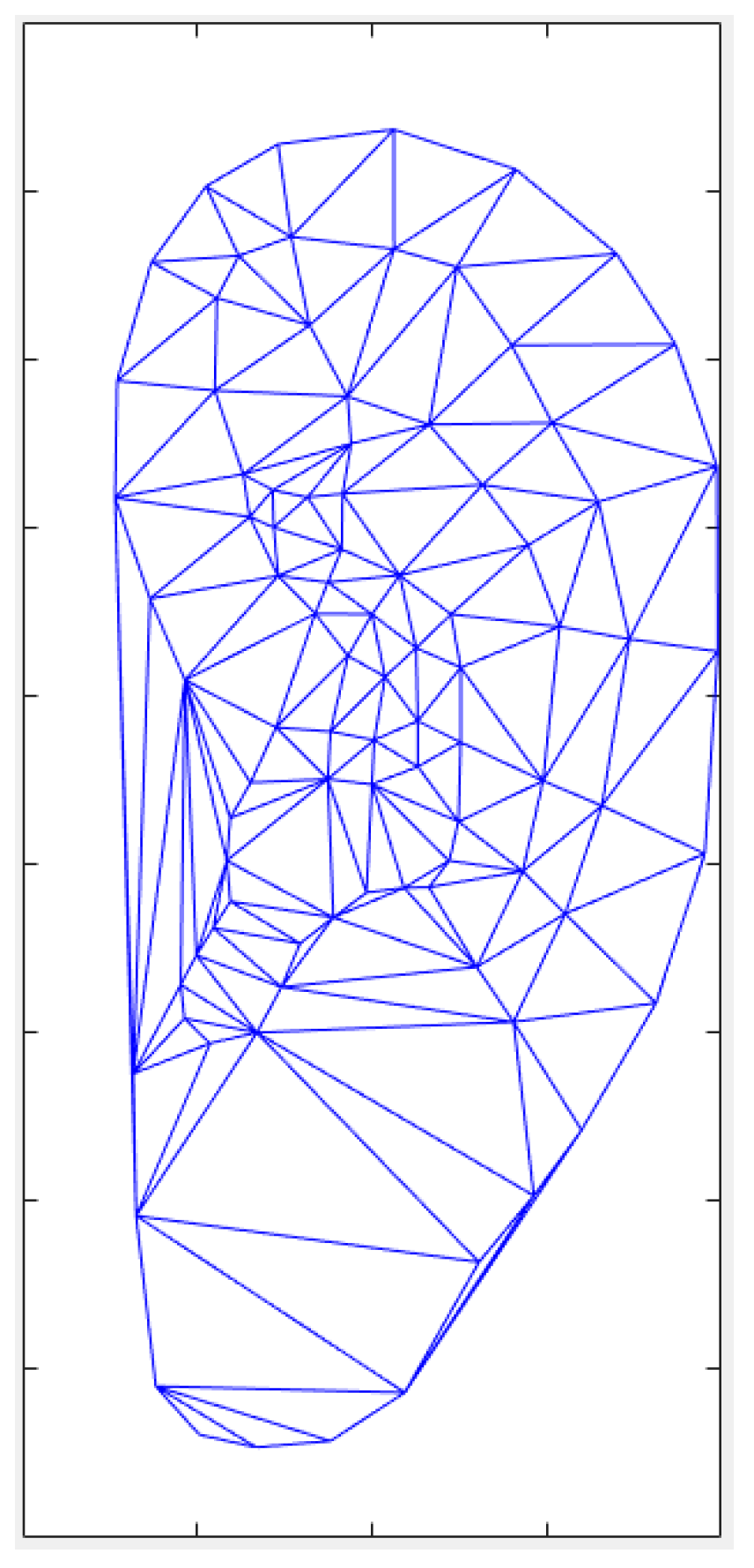

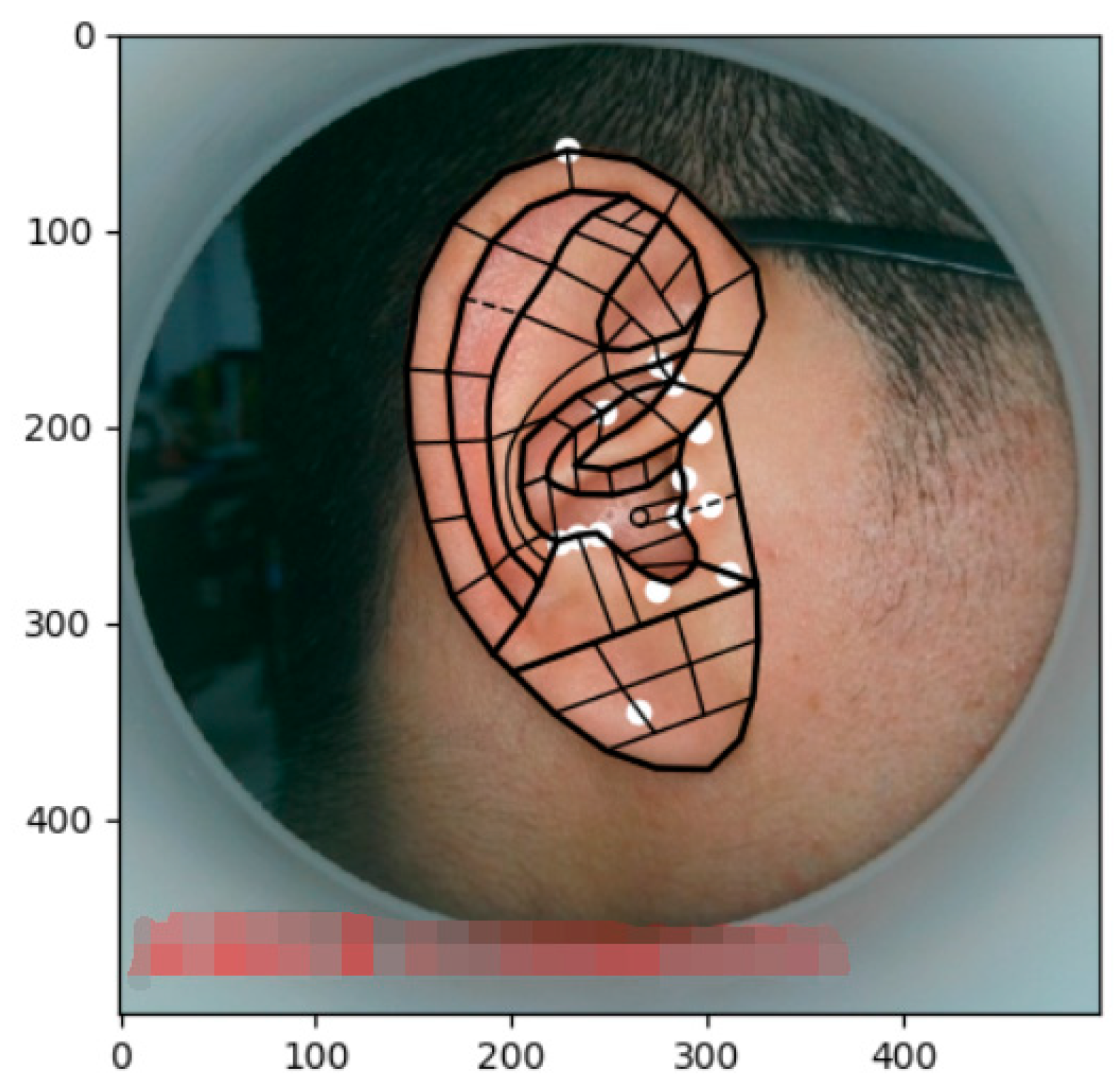

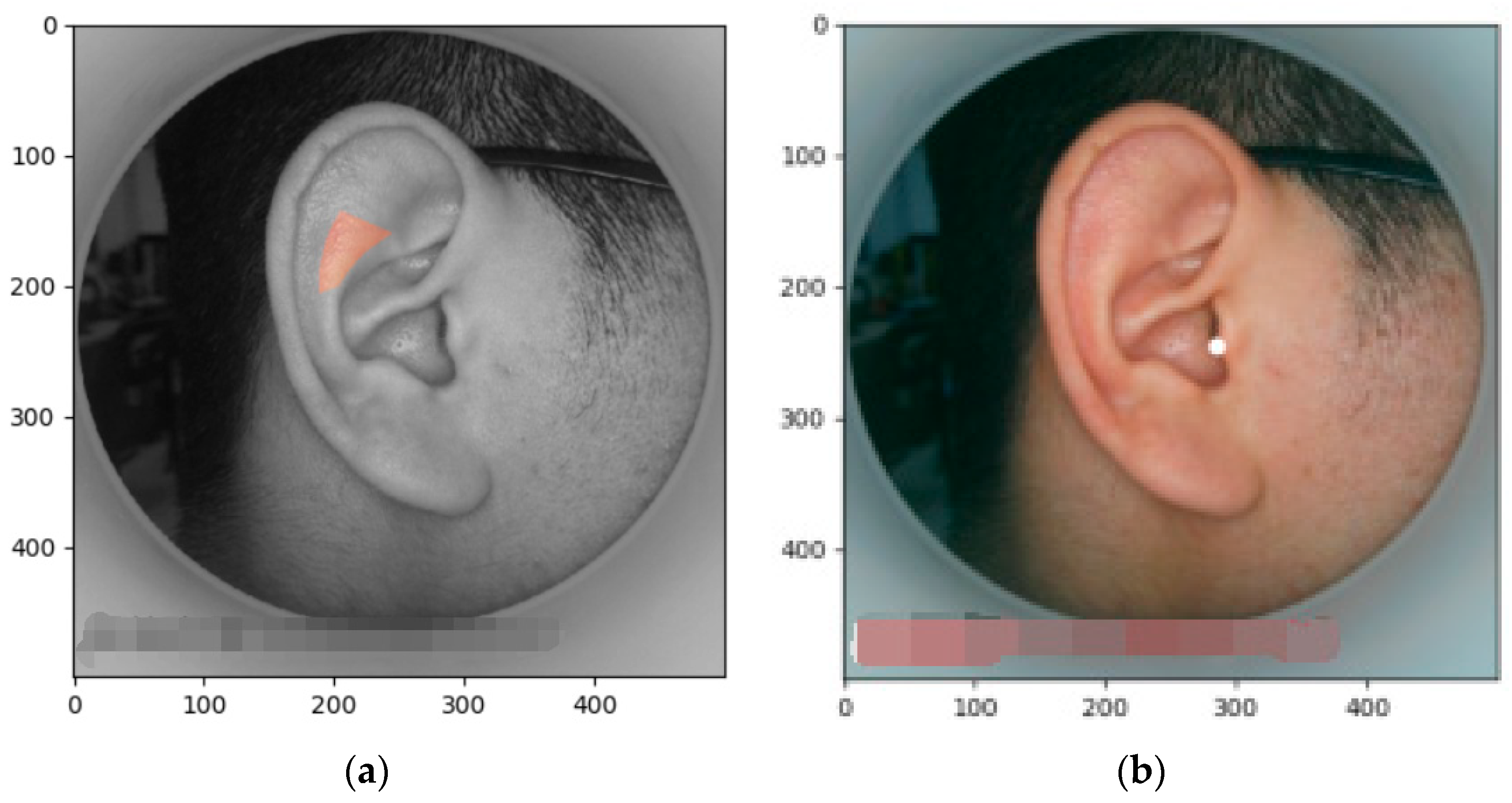

To facilitate computing and storing the appearance model, the large ear region is divided into several small regions. The Delaunay triangulation algorithm is used to divide the ear into triangular regions [17]. The result is shown in Figure 2; the required set of triangles is then obtained by manually removing a small number of redundant triangles.

The feature points are connected into triangles, and the shape of the ear is composed of these triangles. Adjusting the triangles therefore changes the shape of the entire ear, and warping each triangle onto its counterpart in the mean shape produces the mean-shape image. As shown in Figure 2, when a triangle with vertices $p_i$, $p_j$, and $p_k$ is deformed into a new triangle with vertices $p_i'$, $p_j'$, and $p_k'$, any point $p$ inside the original triangle maps to a point $p'$ inside the new triangle, and both points have the same coefficients with respect to the three vertices:

$$p = \alpha p_i + \beta p_j + \gamma p_k, \qquad p' = \alpha p_i' + \beta p_j' + \gamma p_k', \qquad \alpha + \beta + \gamma = 1.$$

Since $p$ and the three vertices of the triangle before deformation are known, the new point $p'$ follows once $\alpha$, $\beta$, and $\gamma$ are determined from this geometric relationship. Letting $p = [x, y]^{T}$ and the three vertices be $p_i = [x_i, y_i]^{T}$, $p_j = [x_j, y_j]^{T}$, and $p_k = [x_k, y_k]^{T}$, solving for the position of $p$ relative to $p_i$, $p_j$, and $p_k$ gives

$$\alpha = 1 - (\beta + \gamma),$$

$$\beta = \frac{(x - x_i)(y_k - y_i) - (y - y_i)(x_k - x_i)}{(x_j - x_i)(y_k - y_i) - (y_j - y_i)(x_k - x_i)},$$

$$\gamma = \frac{(y - y_i)(x_j - x_i) - (x - x_i)(y_j - y_i)}{(x_j - x_i)(y_k - y_i) - (y_j - y_i)(x_k - x_i)}.$$

This procedure implements a piecewise linear affine warp. Each training sample is then sampled within the triangles of the mean shape, so that the construction of the appearance model is not affected by shape variation; this completes the normalization of shape.
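The piecewise affine mapping can be sketched directly from the barycentric relationship between $p$ and the triangle vertices. The Python helpers below (hypothetical names) compute $\alpha$, $\beta$, $\gamma$ for a point, test whether the point lies inside the triangle, and map it into the deformed triangle. Building the full shape-free image would repeat this for every pixel of every mean-shape triangle, usually as an inverse warp with interpolation; that part is omitted here.

```python
import numpy as np


def barycentric(p, pi, pj, pk):
    """Return (alpha, beta, gamma) such that p = alpha*pi + beta*pj + gamma*pk."""
    (x, y), (xi, yi), (xj, yj), (xk, yk) = p, pi, pj, pk
    den = (xj - xi) * (yk - yi) - (yj - yi) * (xk - xi)  # twice the signed area
    if abs(den) < 1e-12:
        raise ValueError("degenerate triangle")
    beta = ((x - xi) * (yk - yi) - (y - yi) * (xk - xi)) / den
    gamma = ((y - yi) * (xj - xi) - (x - xi) * (yj - yi)) / den
    alpha = 1.0 - (beta + gamma)
    return alpha, beta, gamma


def inside(coeffs, tol: float = 1e-9) -> bool:
    """A point lies in the triangle when all three coefficients are non-negative."""
    return all(c >= -tol for c in coeffs)


def warp_point(p, tri_src, tri_dst) -> np.ndarray:
    """Map p from triangle tri_src = (pi, pj, pk) into tri_dst = (pi', pj', pk')
    using the same coefficients (alpha, beta, gamma)."""
    a, b, g = barycentric(p, *tri_src)
    pi_, pj_, pk_ = (np.asarray(v, dtype=float) for v in tri_dst)
    return a * pi_ + b * pj_ + g * pk_
```

For example, the point (0.25, 0.25) in the unit right triangle has coefficients (0.5, 0.25, 0.25) and maps to (2.5, 2.5) when that triangle is scaled by 2 and shifted by (2, 2).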

(2) Normalization of the appearance model

With the normalization in Equation (1), the shape model and the transformation of each ear image to the mean shape are obtained. To convert the image information into a vector, the gray values of the pixels are arranged in a fixed order as $g = (g_1, g_2, g_3, \ldots, g_n)^{T}$, where $n$ is the number of pixels in the shape-independent texture image. To overcome the effect of differences in overall illumination, this shape-independent gray-level vector is normalized, that is, transformed into a vector with a mean of 0 and a variance of 1.

Normalizing $g$ yields a more canonical texture sample vector $g'$ [16]:

$$g' = \frac{g - m \mathbf{1}}{\sigma},$$

where $\mathbf{1}$ is a vector of ones. For all elements of $g'$ to have a mean of 0 and a variance of 1, the displacement factor $m$ is the mean of the $g_i$ and the scaling factor $\sigma$ is their standard deviation:

$$m = \frac{1}{n} \sum_{i=1}^{n} g_i, \qquad \sigma = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (g_i - m)^2}.$$
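A minimal sketch of this normalization in NumPy, assuming the shape-free texture is already available as a 1-D array of gray values; `normalize_texture` is a hypothetical helper. Full AAM implementations often repeat this step while also aligning each texture to the mean texture; only the zero-mean, unit-variance step described here is shown.

```python
import numpy as np


def normalize_texture(g) -> np.ndarray:
    """g' = (g - m) / sigma, where m is the mean and sigma the standard
    deviation of the elements of g (population form, dividing by n)."""
    g = np.asarray(g, dtype=float)
    m = g.mean()
    sigma = g.std()  # ddof=0 matches sigma^2 = (1/n) * sum (g_i - m)^2
    if sigma == 0:
        raise ValueError("a constant texture cannot be normalized")
    return (g - m) / sigma
```

Because a global change of brightness and contrast, $g \mapsto a g + c$ with $a > 0$, cancels out in this formula, a texture and its uniformly brightened copy map to the same normalized vector.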

(3) Principal component analysis (PCA)

After the pixel values of each region are arranged as vectors, the mean appearance (the aligned initial model) and the corresponding directions of variation are obtained by PCA in the same way as for the shape model:

$$g = A_{avg} + \sum_{i=1}^{t'} b_i' n_i',$$

where $A_{avg}$ is the mean appearance obtained after alignment, the eigenvector $n_i'$ gives each mode of texture variation and its direction, and the eigenvalue $\lambda_i'$ represents the weight (variance) of that mode in the model.
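Because a texture vector has one entry per pixel, forming its covariance matrix explicitly can be expensive. A common alternative, sketched below, obtains the same eigenvectors $n_i'$ and eigenvalues $\lambda_i'$ from a thin SVD of the centered data matrix. The function names are hypothetical, and the parameter clamp to $\pm 3\sqrt{\lambda_i'}$ is a widely used AAM convention assumed here rather than stated in the text.

```python
import numpy as np


def appearance_model(G: np.ndarray, n_modes: int):
    """G: (num_pixels x n) matrix whose columns are normalized texture vectors g'.
    Returns (A_avg, P, lambdas) without forming the num_pixels x num_pixels
    covariance matrix explicitly."""
    A_avg = G.mean(axis=1)
    X = G - A_avg[:, None]
    U, s, _ = np.linalg.svd(X, full_matrices=False)  # thin SVD, singular values descending
    lambdas = s ** 2 / G.shape[1]                     # eigenvalues of (1/n) X X^T
    return A_avg, U[:, :n_modes], lambdas[:n_modes]


def clamp_params(b: np.ndarray, lambdas: np.ndarray, k: float = 3.0) -> np.ndarray:
    """Limit each parameter b'_i to +/- k * sqrt(lambda'_i)."""
    lim = k * np.sqrt(lambdas)
    return np.clip(b, -lim, lim)
```

The left singular vectors of the centered data are exactly the eigenvectors of its covariance, so this route yields the same model while only decomposing a matrix whose small dimension is the number of training images.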

7. Conclusions

This paper studied methods for locating and displaying ear acupoints and acupoint areas based on the AAM algorithm. Using AAM, shape and appearance models of the human ear were constructed, 91 feature points of the target ear image were extracted, and the acupoint areas were divided; from these 91 feature points, 9 representative regions of the ear were segmented and each ear acupoint was displayed. The research supports image-based ear acupoint positioning, promotes the development of ear acupoint diagnosis and treatment in smart Chinese medicine, and can help the subsequent visual identification of the ear acupoint regions corresponding to diseases. The AAM algorithm also has drawbacks: it is sensitive to factors such as lighting changes and facial expressions, requires post-processing, and has limited average detection speed and real-time tracking performance. Furthermore, in some cases, manual annotation of the anatomical structure of the auricle may lead to low accuracy in some ear point segmentations. In future work, we will collect images to build a larger dataset, including ear images, tongue images, and others, to extend acupoint segmentation and recognition to a wider range of body regions, and will introduce deep learning to speed up acupoint area identification and segmentation. We hope that this AAM-based identification method will be applied to ear diagnosis and treatment in practical medical engineering.