Abstract

An interactive artificial ecological optimization algorithm (SIAEO) based on environmental stimulus and a competition mechanism is proposed for complex numerical optimization. The original artificial ecological optimization algorithm (AEO) executes its consumption and decomposition stages sequentially and can therefore become trapped in local optima. First, an environmental stimulus defined by population diversity lets the population execute the consumption operator and the decomposition operator interactively, which reduces the inhomogeneity of the algorithm. Second, the three predation modes of the consumption stage are regarded as three different tasks, and the task to execute is determined by the maximum cumulative success rate of each individual's task executions. Furthermore, a biological competition operator is introduced to modify the regeneration strategy, so that the SIAEO algorithm also accounts for exploitation in the exploration stage, breaks the equal-probability execution mode of the AEO, and promotes competition among operators. Finally, a stochastic mean suppression alternation exploitation strategy is introduced in the later exploitation process, which greatly improves the ability of the SIAEO algorithm to escape local optima. The SIAEO is compared with other improved algorithms on the CEC2017 and CEC2019 test sets.

1. Introduction

In the realm of optimization, emerging heuristic algorithms have become a focus of research because they are convenient and easy to understand. Swarm intelligence (SI) is an important branch of bio-inspired heuristic computing. An SI algorithm uses the collaborative and competitive relationships within a population to guide the population toward the optimal solution, which has attracted many researchers. Several novel algorithms have been put forward in recent years, for example SFO [1], HHO [2], BMO [3], EJS [4], MCSA [5], SDABWO [6], STOA [7], SCA [8] and SSA [9]. Because swarm-based intelligent optimization algorithms do not need the gradient information required by traditional optimization algorithms, they have been widely applied in engineering optimization, such as time series prediction [10], image segmentation [11], feature selection [12] and cloud computing task scheduling [13].

The AEO algorithm [14] is an SI algorithm introduced by Zhao et al. in 2019. It is designed according to the energy flow and material circulation in a natural ecosystem, and its model is established through three stages: production, consumption and decomposition. The first stage involves the producer, which obtains energy directly from nature and represents the plants in the ecosystem. The second stage involves the consumers, which are mainly animals. The final stage involves the decomposer, which feeds on both the producer and the consumers.

In the population of the AEO algorithm, all individuals are called consumers except for one producer and one decomposer. Consumers can be divided into herbivores, carnivores and omnivores according to their different ways of foraging. Energy levels in an ecosystem decrease along the food chain, so the producer has the highest energy level and the decomposer has the lowest. The AEO algorithm balances global search and local exploitation well, is easy to implement, has few design parameters, and has been applied successfully in many fields. One study [15] improved AEO by introducing a self-adaptive nonlinear weight, optional cross-learning and Gaussian mutation strategies, and used the resulting IAEO algorithm to handle the optimization problem of PEMFC. In [16], the authors applied an EAEO, which combines AEO with the sine and cosine operator, to the practical problem of distributed generation allocation in distribution systems so as to cut down the power loss of the distribution network. In [17], the authors proposed the MAEO algorithm for parameter estimation of the PEMFC by introducing the linear operator H to balance exploration and exploitation. The authors of [18] proposed a hybrid multi-population algorithm, HMPA, for multiple engineering optimization problems. HMPA adopts a new population division method that divides the population into several subpopulations, each of which dynamically exchanges solutions. It hybridizes AEO and HHO, and multiple strategies are mixed to maximize its efficiency. In [19], the authors proposed a new random vector functional link (RVFL) network combined with the AEO algorithm to calculate the optimal outcome of the SWGH system. The authors of [20] proposed a new fitness function reconstruction technology based on the AEO algorithm for photovoltaic array reconfiguration. The authors of [21] used the AEO algorithm to obtain the ideal tuning parameters of a PID controller for an AVR system.

Although the AEO algorithm has been used successfully in the above domains, it has two drawbacks. First, the AEO algorithm explores with all individuals and then executes exploitation in sequence, which increases the computational burden and slows down the optimization. Second, the update of the AEO in the exploration stage places individuals at the end of the biological chain and does not account for biological competition in nature, that is, every creature preys on other species and is itself the prey of others, so the solution accuracy is not high.

To improve the behavior of the AEO and the accuracy of its solutions, this paper draws on environmental stimulus and competition mechanisms. When the population diversity in the environment is large, fewer similar organisms are distributed per unit of space and the exploration ability is strong; in this case, exploitation should be strengthened, and vice versa. Based on this, this paper proposes an improved artificial ecological optimization algorithm (SIAEO) that combines environmental stimulus with a biological competition mechanism. The AEO is improved in the following two aspects: (1) An environmental stimulus mechanism is introduced into the AEO algorithm. The external environmental stimulus, defined by population diversity, lets the population execute the consumption and decomposition operations interactively, which reduces the computational complexity of the algorithm. (2) In the consumption stage, biological competition is considered: the case in which an individual dies due to predation is added, and the maximum cumulative success rate of each individual performing the different tasks guides the individual toward a more suitable consumption update strategy, which contributes to exploitation during the exploration stage. In the decomposition stage, two randomly chosen individuals are introduced to define a potential exploitation update formula, which helps the algorithm escape local optima and better balances the global search and local optimization of the algorithm.

K-means [22,23] is a widely used algorithm for complex clustering problems. After the number of classes and the clustering centers are determined, the nearest-neighbor principle is used to allocate samples to the categories determined by the K clustering centers; the in-class distance is minimized and the inter-class distance is maximized by constantly updating the K clustering centers. The determination of the K cluster centers is a high-dimensional optimization problem.
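To make concrete why choosing the K centers is an optimization problem, the following minimal Python sketch evaluates a candidate set of centers by the total squared in-class distance under nearest-center assignment. The function names `decode_centers` and `kmeans_objective`, the flat encoding of the centers into one decision vector, and the squared Euclidean distance are illustrative assumptions, not details taken from the paper.

```python
def decode_centers(vector, k, dim):
    """Split a flat decision vector of length k*dim into k centers (assumed encoding)."""
    return [vector[i * dim:(i + 1) * dim] for i in range(k)]


def kmeans_objective(centers, samples):
    """Total squared distance from each sample to its nearest center."""
    total = 0.0
    for s in samples:
        # Nearest-neighbor principle: each sample belongs to its closest center.
        total += min(sum((a - b) ** 2 for a, b in zip(s, c)) for c in centers)
    return total


samples = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
flat = [0.0, 0.5, 10.0, 10.5]          # two 2-D centers packed into one vector
centers = decode_centers(flat, k=2, dim=2)
print(kmeans_objective(centers, samples))
```

An optimizer such as AEO or SIAEO would then search over the flat vector, whose length grows as K times the number of features, which is why the problem quickly becomes high-dimensional.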

To demonstrate the effectiveness and functionality of the SIAEO algorithm, the experiments consist of two parts. First, 40 benchmark functions from CEC2017 and CEC2019 are used to measure the performance of the SIAEO on complex high-dimensional numerical optimization, and the results are compared with those of nine other well-performing algorithms to establish the numerical optimization potential of the SIAEO. Second, the validity of the SIAEO for engineering optimization and for finding the optimal K-means clustering centers is verified on four engineering optimization problems and on nine benchmark data sets from the UCI machine learning repository. The results confirm the significance and competitiveness of the SIAEO–K-means algorithm.

The rest of this article is organized as follows. Section 2 outlines the AEO, and Section 3 presents the improvement strategies of the improved AEO algorithm. In Section 4, numerical examples, practical application examples and cluster optimization show the performance of the SIAEO. In the conclusions, we review the entire paper and point out directions for future research.

2. AEO Algorithm

For the D-dimensional optimization problem:

$$\min f(x), \quad x = (x_1, x_2, \ldots, x_D), \quad L \le x \le U,$$

where $f$ represents the optimization function, $x$ is the decision variable, and $U$ and $L$ are the upper and lower bounds of $x$, respectively.

The AEO algorithm, which was proposed in 2019, simulates the energy flow of an ecosystem. According to the composition of the food chain, it is divided into three stages: production, consumption and decomposition. The detailed steps by which the AEO algorithm solves the optimization problem are:

- (1) Initialization: Let N denote the population size. N individuals are randomly generated in the D-dimensional decision space to compose the initial population. The j-th dimension of the i-th individual is created by

  $$x_{ij} = L_j + r\,(U_j - L_j), \quad i = 1, \ldots, N, \; j = 1, \ldots, D, \tag{1}$$

  in which $r$ is a random number within (0, 1).

- (2) Production phase: Suppose the individuals of the t-th population are ranked in descending order of objective value, so that $x_1$ is the producer and $x_N$ is the current best individual. In the production stage, only a temporary location update is performed on the producer $x_1$. The update is a linear combination of the best individual $x_N$ and a randomly generated individual $x_{rand}$:

  $$x_1(t+1) = (1 - a)\,x_N(t) + a\,x_{rand}(t), \tag{2}$$

  where $a = (1 - t/T)\,r_1$ is the coefficient used for linear weighting, $x_{rand} = r\,(U - L) + L$ is a member randomly generated in the solution space, $t$ and $T$ are the current iteration and the final iteration, respectively, and $r_1$ and $r$ are random values in [0, 1]. Equation (2) shows that in the early stage of the algorithm the producer tends to explore extensively, while in the later stage it focuses on further exploitation near $x_N$.

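- (3) Consumption phase: In this stage, the second to N-th individuals are temporarily updated according to a random walk strategy derived from Lévy flight. Let $v_1$ and $v_2$ be two random numbers with standard normal distribution; the consumption factor is defined as

  $$C = \frac{1}{2}\,\frac{v_1}{|v_2|}, \quad v_1, v_2 \sim N(0, 1), \tag{3}$$

  in which $N(0, 1)$ represents the standard normal distribution. The i-th individual is classified with equal probability and updated by using the herbivorous (1), carnivorous (2) or omnivorous (3) formula, i.e.,

  $$x_i(t+1) = \begin{cases} x_i(t) + C\,\bigl(x_i(t) - x_1(t)\bigr), & \text{herbivore} \\ x_i(t) + C\,\bigl(x_i(t) - x_j(t)\bigr), & \text{carnivore} \\ x_i(t) + C\,\bigl(r_2\,(x_i(t) - x_1(t)) + (1 - r_2)\,(x_i(t) - x_j(t))\bigr), & \text{omnivore} \end{cases} \tag{4}$$

  where $j$ is a positive integer with a random value in $[2, i-1]$ and $r_2$ is a random value in [0, 1].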

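- (4) Decomposition phase: The temporarily updated population is further revised to obtain the final revised population:

  $$x_i(t+1) = x_N(t) + 3u\,\bigl(e\,x_N(t) - h\,x_i(t)\bigr), \quad i = 1, \ldots, N, \tag{5}$$

  where $u$ is a random number that is normally distributed, $e = r_3\,\mathrm{randi}([1, 2]) - 1$, $h = 2 r_3 - 1$, and $r_3$ is a random number in [0, 1].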

In summary, the AEO algorithm randomly creates initial solutions. In updating the population of each generation, the position of the producer is revised by Equation (2), the consumers are updated by Equation (4) according to the equal-probability decision method, and the final decomposition process is performed by Equation (5). The algorithm ends when the termination conditions are met.
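The four steps above can be sketched in Python as follows. This is a minimal illustration of the commonly described form of the baseline AEO, not the authors' MATLAB implementation. The function name `aeo_minimize`, the greedy acceptance after each phase, the clipping to the bounds, the small constant guarding the division, and the choice to always treat the first consumer as a herbivore are assumptions made for the sketch.

```python
import random


def aeo_minimize(f, lower, upper, n=20, iters=200, seed=0):
    """Baseline AEO sketch: production, consumption, decomposition per iteration."""
    rng = random.Random(seed)
    dim = len(lower)

    def rand_point():
        return [lower[j] + rng.random() * (upper[j] - lower[j]) for j in range(dim)]

    def clip(x):
        return [min(max(v, lower[j]), upper[j]) for j, v in enumerate(x)]

    def greedy(pop, fit, cand):
        # Assumed acceptance rule: keep a candidate only if it improves.
        for i, x in enumerate(cand):
            x = clip(x)
            fx = f(x)
            if fx < fit[i]:
                pop[i], fit[i] = x, fx

    pop = [rand_point() for _ in range(n)]
    fit = [f(x) for x in pop]
    for t in range(1, iters + 1):
        # Sort worst-to-best so pop[0] is the producer and pop[-1] the best.
        order = sorted(range(n), key=lambda i: fit[i], reverse=True)
        pop = [pop[i] for i in order]
        fit = [fit[i] for i in order]
        best = pop[-1]

        # Production: blend the best individual with a random point.
        a = (1 - t / iters) * rng.random()
        xr = rand_point()
        cand = [[(1 - a) * best[j] + a * xr[j] for j in range(dim)]]

        # Consumption: each consumer picks a feeding mode with equal probability.
        for i in range(1, n):
            c = 0.5 * rng.gauss(0, 1) / (abs(rng.gauss(0, 1)) + 1e-12)
            xi, x1 = pop[i], pop[0]
            xj = pop[rng.randint(1, i - 1)] if i > 1 else x1
            mode = rng.randrange(3) if i > 1 else 0
            if mode == 0:    # herbivore: feeds on the producer
                step = [xi[j] - x1[j] for j in range(dim)]
            elif mode == 1:  # carnivore: feeds on a higher-energy consumer
                step = [xi[j] - xj[j] for j in range(dim)]
            else:            # omnivore: feeds on both
                r2 = rng.random()
                step = [r2 * (xi[j] - x1[j]) + (1 - r2) * (xi[j] - xj[j])
                        for j in range(dim)]
            cand.append([xi[j] + c * step[j] for j in range(dim)])
        greedy(pop, fit, cand)

        # Decomposition: every individual is moved around the current best.
        best = pop[min(range(n), key=lambda i: fit[i])]
        cand = []
        for xi in pop:
            r3 = rng.random()
            d = 3 * rng.gauss(0, 1)
            e = r3 * rng.randint(1, 2) - 1
            h = 2 * r3 - 1
            cand.append([best[j] + d * (e * best[j] - h * xi[j]) for j in range(dim)])
        greedy(pop, fit, cand)

    i_best = min(range(n), key=lambda i: fit[i])
    return pop[i_best], fit[i_best]


sphere = lambda x: sum(v * v for v in x)
x_best, f_best = aeo_minimize(sphere, [-10.0] * 5, [10.0] * 5, n=20, iters=100, seed=1)
print(f_best)
```

Note that every individual passes through both the consumption and the decomposition operator in each iteration; this sequential execution is what the SIAEO replaces with an interactive choice between the two.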

3. An Improved Interactive Artificial Ecological Optimization Algorithm Based on Environmental Stimulus and Biological Competition Mechanism

In an ecosystem, the survival and death of organisms, the predation of animals, and the stability and balance of the ecological chain are all greatly affected by the environment. During predation, animals respond to environmental stimuli of different intensity by adjusting their predation patterns. The incentive mechanism describes the relationship between environmental stimulus and individual response. Combined with the AEO algorithm, an environmental stimulus S is defined to guide individuals to switch tasks between consumption and decomposition, so that consumption and decomposition are executed interactively rather than sequentially, which reduces the complexity of the algorithm. During predation, animals also form their own preferences, which are influenced by natural enemies. Furthermore, since the survival of organisms follows the competition mechanism of survival of the fittest, introducing competition in the consumption stage better simulates the real situation of the ecosystem.

The purpose of this paper is to improve the exploration and exploitation ability of the AEO algorithm. Therefore, an environmental stimulus is introduced so that the consumption and decomposition tasks are executed interactively, and the incentive mechanism and biological competition relations are integrated into the AEO algorithm. In the consumption phase, individuals change by searching the environment, and the largest cumulative task success rate guides each individual to its best-performing feeding mode. The equal-probability execution of the AEO is abandoned, the convergence of the AEO is accelerated, and the exploitation ability of the AEO in the exploration stage is intensified. At the same time, during the decomposition stage, the introduction of random individuals boosts the exploration ability in the exploitation stage.

3.1. Environmental Stimulus

Environmental stimulus describes the external drive of an individual to perform a task. During the search, the timing of the consumption and decomposition operators can be governed by population diversity.

Population diversity is an indicator that describes the differences between individuals and reflects the distribution of populations [24]. For the t-generation population, population diversity [25] was measured as:

Here, N is the size of the population, the normalizing length is the size of the solution space, D is the dimension, and the population center is the mean position of all individuals.
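Because the diversity formula itself is not legible here, the following Python sketch shows one widely used distance-to-center diversity measure that matches the quantities named above. It assumes that the size of the solution space is the length of the diagonal of the search box and that diversity is the mean Euclidean distance to the population center divided by that length; the paper's exact formula may differ, and the function name `population_diversity` is illustrative.

```python
import math


def population_diversity(pop, lower, upper):
    """Mean distance to the population center, normalized by the box diagonal."""
    n, dim = len(pop), len(lower)
    # Population center: mean position of all individuals, dimension by dimension.
    center = [sum(x[j] for x in pop) / n for j in range(dim)]
    # Assumed size of the solution space: diagonal length of the search box.
    diag = math.sqrt(sum((upper[j] - lower[j]) ** 2 for j in range(dim)))
    total = sum(math.dist(x, center) for x in pop)
    return total / (n * diag)


print(population_diversity([(0.0, 0.0), (1.0, 1.0)], [0.0, 0.0], [1.0, 1.0]))
```

Under this assumed form the value is 0 when all individuals coincide and grows as the population spreads out, which is the behavior the environmental stimulus relies on.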

Environmental stimulus is defined as:

where the sensitivity coefficient describes how strongly the stimulus responds to diversity. Following the value in reference [25] and simulation experiments, this coefficient is set to 50 in this paper.

When S indicates that the diversity of the population is high, the algorithm is in the exploration stage. Increasing the exploitation potential of the algorithm while it explores then helps to improve the calculation accuracy. Therefore, in this case the consumption stage of the AEO algorithm is executed, and the update formula is modified using a competition relationship to enhance the exploitation ability. When S indicates that the population diversity is relatively poor, the individuals of the population have converged to the vicinity of the optimal solution. At this time, exploitation should be strengthened to help the algorithm find the best solution, but the spread of the population should also be maintained to prevent individuals from becoming trapped in a local optimum. Therefore, in this case the decomposition stage of the AEO algorithm is executed, in which individuals learn from the best individual and a random mean is integrated to enhance the exploration performance. In this way, the global and local search of the AEO are balanced and the calculation precision of the algorithm is improved.

3.2. Incentive Mechanism

In the incentive mechanism, the individual’s success in performing a search task refers to the improvement of its fitness, and the individual’s propensity to perform a task is measured by the cumulative success rate. The cumulative success rate should increase when the individual successfully performs the task and decrease when the individual fails to perform the task. The greater the cumulative success rate, the greater the propensity to perform the task, and vice versa.

Drawing on the incentive mechanism, the three consumer predation modes in the consumption stage (Task 1, Task 2 and Task 3) are regarded as three different search tasks. The cumulative number of times that individual i has successfully executed task j up to the t-th iteration is used to calculate the cumulative success rate. The specific method is as follows:

- (a) Cumulative success rate initialization. For each individual in the initial population, a three-dimensional cumulative success rate vector is initialized, whose j-th element represents the initial cumulative success rate of the i-th individual performing the j-th task. The elements are assigned from random values, and Formula (10) ensures that the sum of the elements after initialization is 1. A three-dimensional count vector is then initialized to store the number of times individual i has successfully executed task j.

- (b) Cumulative success rate update process. First, the update of the count vector is introduced. An indicator marks whether the i-th individual performs the j-th task in the t-th generation: when individual i performs task j, the indicator is 1; otherwise, it is 0. Since each individual in each generation performs only one of the three tasks in Equation (4), only one of the three indicators of individual i is 1. Consider the new individual obtained by individual i in the t-th generation after performing task j. If the new individual is superior to the original one, that is, individual i successfully performs task j, then the possibility of executing task j next time should be increased while the chance of executing the other tasks should be weakened. The j-th element of the corresponding count vector increases by 1 while the remaining elements remain unchanged. Here, the individual's fitness is defined as the objective function value.

If the new individual is inferior to the original one, that is, individual i fails to execute task j, then the possibility of executing task j next time should be reduced and the chance of executing the other tasks should be increased. The j-th element of the corresponding count vector remains unchanged, while the current value of each of the other elements increases by 1, that is

At this point, the vector of the cumulative success rate of the next generation of individual i is updated with the count vector as:

For each individual, the cumulative success rate vector can thus be calculated. The task with the largest cumulative success rate, Task*, is taken as the definite indicator of the task to be executed in the next consumption phase. In the consumption phase of the original AEO, the update formulas are executed with equal probability, without considering the incentive mechanism. Here, Formula (13) is used to calculate the cumulative success rate of each individual performing the three tasks, and the strategy corresponding to Task* is chosen according to this indicator of task propensity, so as to motivate individuals to explore more promising areas in a more appropriate way. The pseudocode of the incentive mechanism is given as Algorithm 1.
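The bookkeeping described in steps (a) and (b) can be sketched in Python as below. The count update follows the verbal rules above; the count vector starting from zeros, the success rate taken as the normalized count vector, and the treatment of an unchanged fitness as a failure are assumptions, since Formulas (9) to (13) are not legible here. All function names are illustrative.

```python
import random


def init_success_rate(rng):
    """Random three-element rate vector, normalized so the elements sum to 1."""
    raw = [rng.random() for _ in range(3)]
    s = sum(raw)
    return [r / s for r in raw]


def update_counts(counts, task, improved):
    """Success: +1 for the executed task. Failure: +1 for the two other tasks."""
    return [c + 1 if (k == task) == improved else c for k, c in enumerate(counts)]


def success_rate(counts, previous):
    """Assumed form of the rate update: counts normalized to sum to 1."""
    total = sum(counts)
    return [c / total for c in counts] if total else list(previous)


def preferred_task(rate):
    """Index of Task*, the task with the largest cumulative success rate."""
    return max(range(3), key=lambda k: rate[k])


rng = random.Random(0)
rate = init_success_rate(rng)
counts = [0, 0, 0]                                       # assumed initial counts
counts = update_counts(counts, task=1, improved=True)    # Task 2 succeeded
counts = update_counts(counts, task=0, improved=False)   # Task 1 failed
rate = success_rate(counts, rate)
print(counts, rate, preferred_task(rate))
```

Rewarding the other two tasks on a failure, rather than decrementing the failed one, keeps every count non-negative while still shifting preference away from the task that did not improve the individual.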

| Algorithm 1: Incentive mechanism (N, , , ) | |
|---|---|
| 1 | For the t-th generation population |
| 2 | Calculate the population diversity and the environmental stimulus |
| 3 | |
| 4 | for i = 1:N |
| 5 | |
| 6 | Perform Task*; |
| 7 | end for % Get the updated population |
| 8 | |
| 9 | |
| 10 | |
| 11 | Update the optimal solution and fitness value |
| 12 | |
| 13 | |
| 14 | end if |
| 15 | |
| 16 | |
| 17 | |
| 18 | |
| 19 | |
| 20 | for i = 1:N |
| 21 | Execute the decomposition phase |
| 22 | end for |
| 23 | |
| Output: generation population | |

3.3. Updating Based on Competition Mechanism

Due to ecological competition, the individuals in the consumption stage of the ecosystem both eat lower-ranking organisms and are eaten by higher-ranking natural enemies. Equation (4) does not take the influence of natural enemies into account, which gives the algorithm strong exploration ability but poor exploitation ability in the consumption stage. With Equation (5), the algorithm is apt to fall into a local optimum because only the lead of the optimal individual is considered. When the execution strategy of the algorithm is chosen according to the environmental stimulus, each strategy should have both exploration and exploitation capability. Therefore, the influence of predation by natural enemies is integrated into Equation (4), and the task to be executed is determined according to the maximum cumulative success rate. That is, the task with the largest cumulative success rate, Task*, is executed and the individual is updated as

where the two index variables are random positive integers drawn from their respective intervals, and the remaining coefficients are random numbers in (0, 1).

The pull of random individuals is integrated into Equation (5) so that the algorithm can escape from local optima. For two individuals randomly selected from the current population, a more reasonable updating formula is put forward to replace the decomposition strategy of the original algorithm. Formula (15) replaces the original individual position updating strategy of Formula (5):

The other parameters are the same as in Equation (5). In Equation (15), the insertion of two different individuals from the population widens the exploration of the algorithm and helps it avoid local optima.

3.4. Implementation Steps of SIAEO Algorithm

Building on the above, the incentive mechanism and the competition relationship are integrated into the AEO: the environmental stimulus is defined according to population diversity, and the next-generation search strategy is determined according to the cumulative success rate of the search tasks, so as to improve the convergence speed and the calculation precision of the AEO. The balance between global search and local optimization is further promoted by modifying the update formulas of the consumption and decomposition stages through the competition relationship. Based on this, this paper provides an improved artificial ecological optimization algorithm (SIAEO) that integrates environmental stimulus and a competition mechanism. The execution steps of the SIAEO are as follows:

Step 1: Set algorithm parameters.

Step 2: According to Equation (1), the initial population is randomly generated, and the cumulative success rate of each individual performing the three different search tasks is initialized.

Step 3: The diversity of the population and the environmental stimulus are calculated through Equations (6)–(8), and the individuals are rearranged in ascending order of fitness value.

Step 4: According to the value of the environmental stimulus S, the corresponding strategy is executed to obtain the new population, as follows:

If S indicates high population diversity, execute the consumption operator: each individual is updated with the formula in Formula (14) that corresponds to its maximum cumulative success rate, and the cumulative success rate of each individual performing the three search tasks is then recalculated;

If S indicates low population diversity, use Equation (15) to perform the decomposition operator.

Step 5: Check whether the iteration limit has not yet been reached; if so, advance the iteration counter and return to Step 3. Otherwise, the algorithm ends and outputs the best solution and the corresponding function value.
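The control flow of Steps 3 to 5 can be sketched in Python as follows. Only the interactive choice between the two operators is illustrated: the diversity test and both operators are passed in as callables because Formulas (6) to (8), (14) and (15) are not legible here. The function name `run_siaeo_skeleton` and the toy stand-ins in the usage example are hypothetical.

```python
def run_siaeo_skeleton(pop, fitness, is_diverse, consume, decompose, max_iter):
    """Per iteration, run exactly one of the two operators, chosen by diversity."""
    history = []
    for _ in range(max_iter):
        pop = sorted(pop, key=fitness)      # Step 3: ascending order of fitness
        if is_diverse(pop):                 # Step 4: high diversity -> consumption
            pop = consume(pop)
            history.append("consumption")
        else:                               # Step 4: low diversity -> decomposition
            pop = decompose(pop)
            history.append("decomposition")
    best = min(pop, key=fitness)
    return best, fitness(best), history


# Toy one-dimensional stand-ins, used only to show the switching behavior.
best, f_best, history = run_siaeo_skeleton(
    pop=[8.0, -4.0, 2.0],
    fitness=abs,
    is_diverse=lambda p: max(p) - min(p) > 1.0,
    consume=lambda p: [x / 2 for x in p],
    decompose=lambda p: [x * 0.9 for x in p],
    max_iter=5,
)
print(history)
```

In the toy run the population spread shrinks until the diversity test fails, after which only the decomposition stand-in is executed, mirroring the switch from consumption to decomposition described in Step 4.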

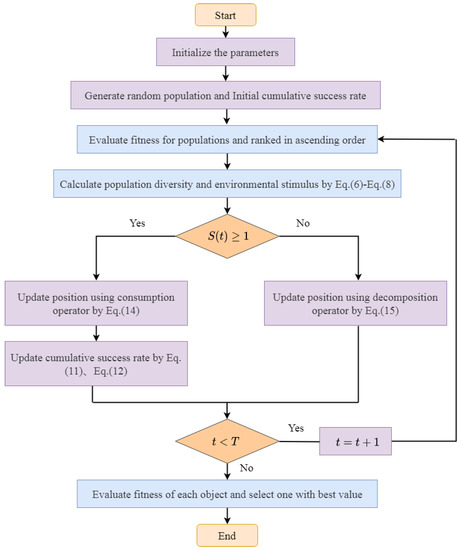

The framework of the SIAEO is displayed in Figure 1.

Figure 1. The flowchart of the SIAEO algorithm.

3.5. Time Complexity Analysis of the Algorithm

In the SIAEO, if the meanings of , and are shown above, then the time complexity of the beginning phase is . The time complexity of the population diversity and the production are and , while consumption and the decomposition phase are both . According to the incentive mechanism, only one of the two can be executed in an iteration, but because the consumption operator and the decomposition operator contain exploration and exploitation capacity in the meantime, the stimulus incentive mechanism properly uses these two operators and better balances the relationship between them. The complexity of evaluating fitness values for each individual is . The complexity of sorting fitness values is . As a consequence, the total computational time complexity of the SIAEO algorithm is:

4. Numerical Experiment

To test the performance of the SIAEO on complicated problems and its practical applicability, several experiments were carried out. In Section 4.1, the latest standard test set, CEC2019, was selected to analyze the validity of each improvement strategy separately. In Section 4.2, the CEC2017 test set was selected for high-dimensional numerical experiments, and CEC2019 was selected to test the performance difference between the SIAEO and other algorithms. In Section 4.3, the SIAEO is applied to four engineering minimization problems. Finally, the high-dimensional K-means clustering problem is selected to demonstrate the precision and accuracy of clustering by the SIAEO.

4.1. Strategy Effectiveness Analysis

To validate the influence of each single improvement strategy on the SIAEO algorithm, experiments were set up to compare the SIAEO algorithm with four variants: the SAEO, which only adds the environmental stimulus strategy; the CAEO, which only adds the incentive mechanism and the biological competition mechanism in the consumption stage; the DAEO, which only adds the local escape operator in the decomposition stage; and the original AEO [14].

4.1.1. Test Functions

The effectiveness of the improved strategies was evaluated using the ten multi-modal functions of the CEC2019 standard test set [26]. Table 1 gives the range of variables, the dimensions and the theoretical optimal value of the CEC2019 test functions. Although the dimension of the CEC2019 test set is not high, each function has many local optima, particularly f4, f6, f7 and f8, which greatly challenges the global minimization ability of intelligent algorithms.

Table 1. CEC2019 benchmark functions.

4.1.2. Strategy Effectiveness Verification of the SIAEO

Table 2 compares the outcomes of the SIAEO with the SAEO, CAEO, DAEO and AEO algorithms on the CEC2019 test set. The same parameter settings were used for all five algorithms: each algorithm was run independently 30 times with the same population size and the same maximum number of function evaluations. The average (Ave) and standard deviation (Std) describe the error between the obtained optimal value and the theoretical value over the 30 runs, and the average CPU time (Time) of the 30 runs was also calculated. Two-tailed t-tests and Friedman tests were applied for the statistical comparison of all methods. Bold data mark the best result among the five algorithms. The symbols (+), (=) and (−) signify that, according to a two-tailed t-test at the chosen significance level, the SIAEO is superior to, equal to or inferior to the comparison algorithm, respectively, and the (#) rows count the corresponding results. The Friedman rank is the ranking produced by the Friedman test.
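As a small illustration of how the Ave and Std entries described above can be obtained from repeated runs, the following Python sketch summarizes the error over a list of per-run best values. The function name `summarize_runs`, the use of the absolute error, and the sample (n − 1) standard deviation are assumptions; the paper does not state which variant of the standard deviation was used.

```python
import statistics


def summarize_runs(best_values, theoretical_optimum):
    """Return (Ave, Std) of the error between each run's best value and the optimum."""
    errors = [abs(v - theoretical_optimum) for v in best_values]
    return statistics.mean(errors), statistics.stdev(errors)


# Three hypothetical runs on a function whose theoretical optimum is 1.0.
ave, std = summarize_runs([1.0, 1.2, 1.4], theoretical_optimum=1.0)
print(ave, std)
```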

Table 2. Comparison of results of SIAEO's improved strategy on CEC2019 functions.

Table 2 shows that, among the 10 functions of the CEC2019, all five algorithms reached the optimal value on the f1 function. On the other test functions, the SAEO, CAEO, DAEO and SIAEO each have their own advantages. The SAEO has the best performance on the f3 and f4 functions. The CAEO obtained the best mean and standard deviation on the f2 function. The DAEO obtained the best average results on three functions (f6, f8 and f9), and the SIAEO obtained the best average results on the f5, f7 and f10 functions. On the whole, each strategy individually contributes to the improvement of the AEO algorithm in some way, and each strategy has distinct effects on different test functions. Therefore, the SIAEO algorithm, which combines the three improved strategies, is more promising and competitive.

4.1.3. Analysis of Statistical Test Results

The last four lines of Table 2 show that the results of the two statistical tests, the two-tailed t-test and the Friedman test, favor the improved SIAEO algorithm. For the AEO, SAEO, CAEO and DAEO algorithms, the number of functions on which performance is similar to the SIAEO is 2, 6, 4 and 7, respectively, while the number of functions on which they are inferior to the SIAEO is 7, 3, 5 and 2, respectively. On the one hand, this shows that the SIAEO algorithm has significant advantages over the AEO algorithm and confirms the effectiveness of the improvement strategies. On the other hand, it reveals the relative influence of the three strategies in the iterative optimization process. The DAEO algorithm, which only adds the local escape operator in the decomposition stage, gains more than the other strategies: on as many as seven functions it is close to the SIAEO's performance. The SAEO, which only adds the environmental stimulus mechanism to reduce computational complexity, is close to the SIAEO's performance on six functions.

The Friedman test ranks the five algorithms, with smaller values indicating better algorithms. The SIAEO algorithm obtained the minimum value of 2.419 and ranked first overall, followed by the DAEO, CAEO, SAEO and AEO algorithms, which demonstrates the superiority of the improved strategy.
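The mean rank that underlies such a Friedman ranking can be sketched in Python as follows: the algorithms are ranked on each function (1 is best, ties share the average rank), and the ranks are averaged over all functions. The function name `friedman_mean_ranks` and the toy numbers are illustrative and are not values from Table 2.

```python
def friedman_mean_ranks(results):
    """results[f][a] is the error of algorithm a on function f (lower is better)."""
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=lambda a: row[a])
        i = 0
        while i < n_alg:
            # Extend j over the block of algorithms tied with position i.
            j = i
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            avg_rank = (i + j) / 2 + 1      # tied algorithms share the average rank
            for k in range(i, j + 1):
                totals[order[k]] += avg_rank
            i = j + 1
    return [t / len(results) for t in totals]


toy = [[1.0, 2.0, 3.0],
       [1.0, 1.0, 3.0],
       [2.0, 1.0, 3.0]]
print(friedman_mean_ranks(toy))
```

The algorithm with the smallest mean rank is placed first, which is the sense in which a smaller Friedman value indicates a better algorithm.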

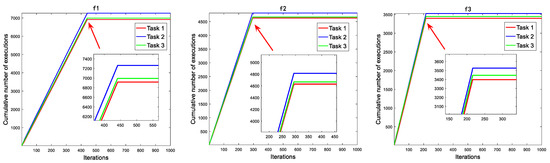

4.1.4. The Tendency Analysis of Consumption Operator

There are three predation modes in the consumption stage, denoted Task1, Task2 and Task3. As the iterations increase, the algorithm shifts from global search to local search. However, the original algorithm executes the three tasks with equal probability, which wastes part of the computational resources in the late iterations. The incentive mechanism improves this situation to a large extent. In each iteration, we recorded how often individuals performed each of the three tasks and accumulated these counts over the iterations. Figure 2 shows the results on the CEC2019 test functions. Task2 is performed far more often than the other tasks, followed by Task3, and the number of executions of Task1 decreases in the final stage of the iteration.

Figure 2. The cumulative number of tasks performed.

Figure 2 shows that in the early iterations of the SIAEO, the algorithm focuses on global exploration and executes the consumption operator, so the cumulative number of tasks performed increases with each iteration. The improved carnivorous and omnivorous strategies have a higher probability of being selected because they combine exploration and exploitation, resulting in a steeper cumulative line. At the end of the iteration, the environmental stimulus guides the algorithm to perform the decomposition operator. Because the consumption operator is no longer executed, individuals do not perform the three tasks, so the cumulative curve becomes a horizontal straight line in the late iterations.

4.2. Numerical Optimization Experiment

4.2.1. Parameter Setting

To show the effectiveness and stability of the SIAEO in handling high-dimensional optimization problems, a total of 40 benchmark test functions from CEC2019 [26] and CEC2017 [27] were numerically optimized. The CEC2017 test set is composed of 30 functions, including single-peak functions (F1–F3), simple multi-peak functions (F4–F10), mixed functions (F11–F20) and composite functions (F21–F30). In the experiment, the SIAEO algorithm was compared with the improved AEO algorithms IAEO [15] and EAEO [16], the original AEO [14], and other representative swarm intelligence algorithms, including AOA [28], TSA [29], HHO [2], QPSO [30], WOA [31] and GWO [32]. Table 3 shows the parameter values of each algorithm used in the experiment. To be fair, all algorithms were run independently 30 times with the same population size and the same maximum number of function evaluations. The experiments were run on a computer with an Intel(R) Core i7 processor at 3.60 GHz, 8 GB of memory and a 64-bit Windows 7 operating system, and the programs were written in MATLAB R2014a.

Table 3.

Algorithm parameter setting.

4.2.2. Comparison of Results between SIAEO and Comparison Algorithm

Table 4 and Table 5 report the results of running the SIAEO and the nine other algorithms independently 30 times on the CEC2017 and CEC2019 test sets. The mean (Ave), the standard deviation (Std) and the average CPU time (Time) over the 30 runs are calculated. The minimum values are shown in bold. The symbols “+”, “−” and “=” in parentheses after Time indicate that the SIAEO is significantly better than, worse than or similar to the competing algorithm according to the t-test between the SIAEO and each of the other nine comparison algorithms. The last four rows of Table 4 and Table 5 show the number of best solutions achieved on all test functions ((#)best), the counts of t-test “+”, “−” and “=” results, and the Friedman test results.

Table 4.

Comparison between the SIAEO and comparison algorithm on the CEC2017 test set (D = 100).

Table 5.

Comparison between the SIAEO and comparison algorithm on the CEC2019 test set.

The data in Table 4 demonstrate that the SIAEO performed best on the high-dimensional and challenging CEC2017 test set in terms of the number of optimal solutions achieved, with a (#)best value of 21. Specifically, the SIAEO achieved the best results on six of the seven simple multi-peak functions (F4–F10). For mixed functions (F11–F20) and composite functions (F21–F30), it performed best on seven and eight functions, respectively. For the three unimodal functions (F1–F3), its performance is slightly inferior to that of the EAEO. In general, the SIAEO performs slightly less well on unimodal functions, but its performance on simple multimodal functions, mixed functions and composite functions is the most promising. Thus, the SIAEO algorithm has more advantages and more comprehensive performance in solving high-dimensional complex optimization problems.

The data in Table 5 show that the SIAEO’s (#)best value is nine, which indicates that the SIAEO has a significant advantage in solving the fixed-dimension CEC2019 test set. In conclusion, the SIAEO algorithm shows more promising performance on both the high-dimensional CEC2017 test set and the fixed-dimensional CEC2019 test set, which confirms that the SIAEO outperforms the other nine comparison algorithms in terms of scalability.

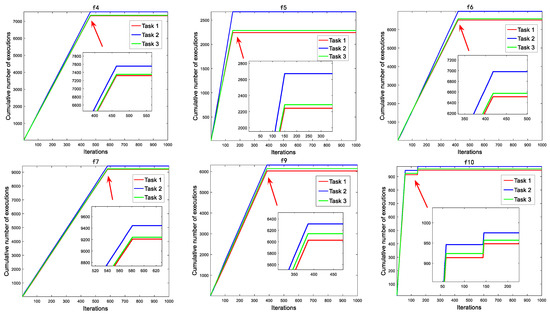

Figure 3 compares the convergence curves of the SIAEO and the other algorithms on three simple multi-peak functions (F5, F6, and F8), three mixed functions (F15, F16, and F19), and three composite functions (F21, F23, and F24) of the CEC2017 test set. For ease of observation, the convergence curve is plotted with as the ordinate. Figure 3 illustrates that although the SIAEO algorithm converges more slowly than the AEO algorithm on simple multi-peak, mixed and composite functions, it has strong exploration and exploitation performance. It is clearly better than the other nine reference algorithms in terms of solving precision and has a strong ability to escape local optima.

Figure 3.

Convergence curve of nine benchmark functions of the CEC2017.

4.2.3. Analysis of Statistical Test Results

Statistical testing [33] is an essential mathematical method for analyzing the results of intelligent optimization algorithms. At a significance level of 0.05, three statistical tests, namely the two-tailed t-test, the Wilcoxon signed-rank test and the Friedman test, are used to assess the performance of the comparison algorithms.

- (1) Two-tailed t-test

In Table 4 and Table 5, the t-test results of the SIAEO and the other nine comparison algorithms are given in parentheses after Time, and (#)best gives the number of winning results. To assess all algorithms comprehensively, the comprehensive performance CP is defined as the number of “+” results minus the number of “−” results, i.e., CP = “(#)+” − “(#)−”. When CP > 0, the SIAEO is superior to the comparison algorithm, and vice versa. Table 6 shows the CP values of the SIAEO against the other comparison algorithms on the CEC2017 and CEC2019 test sets. The data in Table 6 indicate that the SIAEO is an excellent algorithm for the CEC2017 test set. The CP value of the SIAEO compared with the AEO is 13, which demonstrates the benefit of the SIAEO improvement scheme. For the EAEO and IAEO, the CP values are 29 and 16, respectively, which suggests that the proposed algorithm is significantly superior to both. Against the other six intelligent algorithms, the SIAEO achieved an overwhelming superiority: the CP value is 30 for four of them and 28 for the WOA and HHO. For the CEC2019 test set, the CP values of the SIAEO algorithm are positive compared with the AEO, EAEO and IAEO, while the CP values against the other six comparison algorithms are close to the maximum. Overall, the SIAEO algorithm has more advantages and better prospects in solving high-dimensional minimization problems.

Table 6.

The CP values of the SIAEO and comparison algorithms on CEC2017 and CEC2019 test sets.
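As a minimal sketch of the CP definition given above, the following snippet counts the t-test marks of one comparison algorithm and returns “(#)+” − “(#)−”. The function name and the list of marks are hypothetical, and an ASCII hyphen stands in for the “−” symbol.

```python
def comprehensive_performance(marks):
    """Return CP = (#)+ minus (#)- for one comparison algorithm.

    marks: one t-test mark per test function, each "+", "-" or "=".
    """
    plus = marks.count("+")
    minus = marks.count("-")
    return plus - minus


# Hypothetical marks over 30 test functions (not data from Table 6).
marks = ["+"] * 20 + ["="] * 6 + ["-"] * 4
cp = comprehensive_performance(marks)
print(cp)  # 20 wins minus 4 losses
```

With 30 test functions the largest possible CP is 30, which is reached only when every comparison is marked “+”.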

- (2) Wilcoxon signed-rank test

For the CEC2017 and CEC2019 test sets, the Wilcoxon signed-rank test with a significance level of 0.05 was used to test the difference between the SIAEO and the other competitive algorithms. In Table 7, R+ and R− represent the sums of the ranks over all test functions on which the SIAEO is better or worse than its competitor, respectively. The greater the difference between R+ and R−, the better the performance of the algorithm. p represents the probability value corresponding to the test result. When p < 0.05, a significant difference exists between the two algorithms. Significance indicates whether the difference is significant: “+” means that the proposed algorithm is significantly better than the comparison algorithm, and “=” means that its performance is similar to that of the comparison algorithm. Table 7 shows that the SIAEO significantly outperforms the other nine algorithms except for the IAEO on the CEC2019 test set. Not only is p < 0.05, but R+ is also far greater than R−; in particular, for the EAEO, AOA, TSA, HHO, QPSO, WOA and GWO, R− is 0. For the CEC2017 test set, although the SIAEO is not significantly superior to the EAEO and AEO in terms of p, its R+ and R− values indicate better potential performance than the EAEO and AEO algorithms. In general, the SIAEO has a significant advantage over the nine comparison algorithms based on the p-value. Moreover, it outperforms all competitors in terms of R+ and R− and shows a more promising performance in high-dimensional cases.

Table 7.

Wilcoxon sign rank test results of the SIAEO and comparison algorithms at 0.05 significance level.
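The rank sums R+ and R− described above can be sketched as follows. This is a simplified illustration under stated assumptions: lower results are better (minimization), zero differences are dropped, tied absolute differences share their average rank, and no p-value is computed. The function name and the example values are hypothetical.

```python
def signed_rank_sums(proposed, competitor):
    """Return (R+, R-) for paired per-function results, lower being better.

    R+ sums the ranks of the functions on which `proposed` is better,
    R- sums the ranks of the functions on which it is worse.
    """
    # Positive difference: the proposed algorithm has the lower (better) value.
    diffs = [c - p for p, c in zip(proposed, competitor) if c != p]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the absolute differences are tied.
        while (end + 1 < len(order)
               and abs(diffs[order[end + 1]]) == abs(diffs[order[start]])):
            end += 1
        average_rank = (start + end) / 2 + 1  # ranks are 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = average_rank
        start = end + 1
    r_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    r_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return r_plus, r_minus


# Hypothetical mean results on four functions (not data from Table 7).
print(signed_rank_sums([1.0, 2.0, 3.0, 4.0], [1.5, 1.0, 3.0, 6.0]))
```

An R− of 0, as reported for several competitors, arises in this sketch only when the proposed algorithm is never worse on any function.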

- (3) The Friedman test

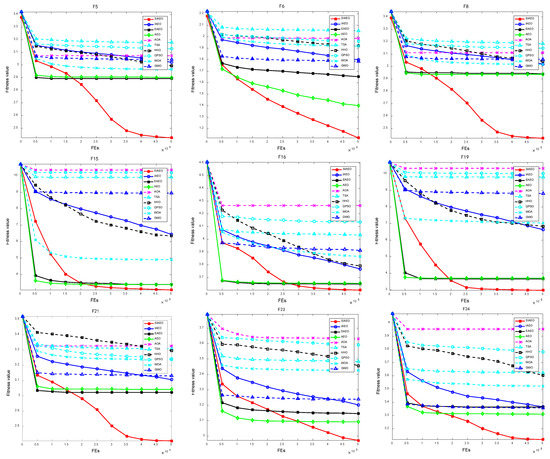

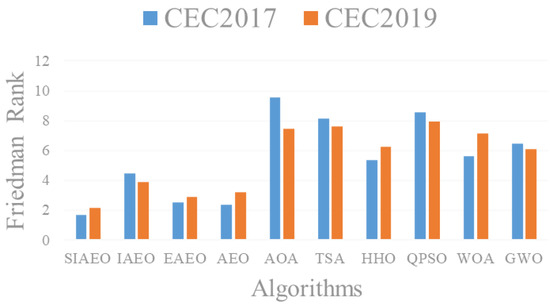

The nonparametric Friedman test [34] was used to test the overall performance of the ten algorithms. The mean value over the 30 independent runs is used as input data to calculate the overall ranking of each algorithm. The Friedman test results and rankings for the CEC2017 and CEC2019 test sets are shown in the last row of Table 4 and Table 5. The results in Table 4 and Table 5 indicate that the SIAEO ranked first both on the 100-dimensional CEC2017 and on the fixed-dimension CEC2019. The AEO, EAEO and IAEO rank 2nd, 3rd and 4th, respectively. In addition, the ranking does not change with the dimension, which illustrates that the SIAEO has significant robustness and the ability to solve high-dimensional problems. Figure 4 shows the differences in Friedman ranking and illustrates the clear advantage of the SIAEO over the other algorithms.

Figure 4.

Friedman test results of the SIAEO and comparison algorithms on CEC2017 and CEC2019.
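The ranking step of the Friedman test can be sketched as below: on each test function the algorithms are ranked by their mean result (lower is better, ties share the average rank), and the ranks are then averaged over all functions. The function names and the input values are hypothetical, and the statistic uses the basic formula without a tie correction.

```python
def average_ranks(mean_results):
    """mean_results[f][a]: mean result of algorithm a on function f (lower is better).

    Returns the average rank of each algorithm over all functions.
    """
    n_algorithms = len(mean_results[0])
    totals = [0.0] * n_algorithms
    for row in mean_results:
        for a, value in enumerate(row):
            smaller = sum(1 for v in row if v < value)
            equal = sum(1 for v in row if v == value)  # includes the value itself
            totals[a] += smaller + (equal + 1) / 2     # average rank within ties
    return [total / len(mean_results) for total in totals]


def friedman_statistic(mean_results):
    """Friedman chi-square statistic computed from the average ranks."""
    n = len(mean_results)     # number of test functions
    k = len(mean_results[0])  # number of algorithms
    ranks = average_ranks(mean_results)
    return 12 * n / (k * (k + 1)) * (sum(r * r for r in ranks) - k * (k + 1) ** 2 / 4)


# Hypothetical mean results of three algorithms on three functions.
results = [[1.0, 2.0, 3.0], [1.0, 3.0, 2.0], [2.0, 1.0, 3.0]]
print(average_ranks(results))
print(friedman_statistic(results))
```

The algorithm with the smallest average rank is placed first in the overall ranking.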

4.3. Engineering Optimization Experiment

In this subsection, to validate the ability of the SIAEO to solve optimization problems in real-world applications, four real-world engineering problems are solved using the proposed algorithm. In the comparison results, the optimal value is shown in bold.

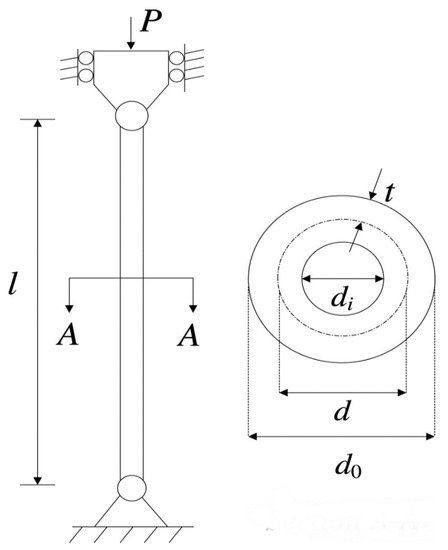

4.3.1. Three-Bar Truss Design Problem

This example considers a 3-bar planar truss structure (Figure 5) whose volume is to be minimized while meeting the stress restrictions on each of the truss members [35]. Optimization of two-stage rod length with the variable vector can be mathematically formulated as below:

Figure 5.

Sketch map of the three-bar truss design problem.

The results of the SIAEO and the other comparative methods are given in Table 8, which also lists the best decision variables of the optimal solution for all compared approaches. Table 8 shows that by supplying optimal variables at with minimum objective function value: , the proposed SIAEO achieved outcomes better than or comparable to those of the other optimization methods.
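For readers who wish to evaluate candidate solutions, the sketch below encodes the three-bar truss problem in the form that is widely used in the metaheuristics literature. It is an assumption that this matches the formulation used here: the constants (l = 100 cm, P = 2 kN/cm², σ = 2 kN/cm²), the function names and the evaluated points are illustrative and are not taken from Table 8.

```python
import math

BAR_LENGTH = 100.0  # l in cm (assumed standard value)
LOAD = 2.0          # P in kN/cm^2 (assumed standard value)
STRESS = 2.0        # sigma in kN/cm^2 (assumed standard value)


def truss_volume(x1, x2):
    """Objective: volume of the truss for cross-sectional areas x1 and x2."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * BAR_LENGTH


def truss_constraints(x1, x2):
    """Stress constraints g1, g2, g3; a design is feasible when all are <= 0.

    Requires x1 > 0 so that the denominator is non-zero.
    """
    root2 = math.sqrt(2.0)
    denom = root2 * x1 ** 2 + 2.0 * x1 * x2
    g1 = (root2 * x1 + x2) / denom * LOAD - STRESS
    g2 = x2 / denom * LOAD - STRESS
    g3 = 1.0 / (root2 * x2 + x1) * LOAD - STRESS
    return g1, g2, g3


def is_feasible(x1, x2):
    return all(g <= 0.0 for g in truss_constraints(x1, x2))


# An arbitrary feasible design, used only to show the calls.
print(truss_volume(0.8, 0.4), is_feasible(0.8, 0.4))
```

A penalty term added to `truss_volume` for violated constraints is one common way to hand such a problem to a population-based optimizer, although the constraint handling used in the experiments is not specified in this section.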

Table 8.

Optimal results of various methods for the Three bar truss design problem.

4.3.2. Himmelblau’s Nonlinear Problems

The mathematical description of Himmelblau’s nonlinear problems [40] using the vector can be stated as:

The best results obtained using various methods are shown in Table 9; they reveal that the SIAEO outperforms existing approaches and is practical for engineering use.

Table 9.

Optimal results of various methods for the Himmelblau’s nonlinear problems.

4.3.3. Tubular Column Design Problem

Figure 6 illustrates the uniform tubular column design problem [44]. Its purpose is to design a uniform tubular column at the lowest possible cost. The following is a mathematical description of the problem using the variable vector .

Figure 6.

Sketch map of the tubular column design problem.

Although the tubular column design problem has few optimization variables, it has many constraints, which increases the difficulty of finding feasible solutions. The best results of each algorithm on this problem are given in Table 10. The SIAEO method’s variation is clearly lower than the other approaches; in addition, the SIAEO method’s best result has the best performance by supplying optimal variables at with a minimum objective function value: .

Table 10.

Optimal results of each algorithm for the tubular column design.

4.3.4. Gas Transmission Compressor Design Problem

This four-variable mechanical design problem was first proposed by Beightler and Phillips [46]. The goal is to find the variables that allow natural gas to be delivered to the gas pipeline transmission system at the lowest possible cost. The following is a mathematical description of the problem with the vector .

The objective function of this problem is complex and highly nonlinear, which puts forward higher requirements for the optimization algorithm.

The optimal results of different methods for solving such a problem are given in Table 11. The SIAEO method’s variation is clearly lower than the other approaches; in addition, the SIAEO method’s best result has the best performance by supplying optimal variables at with a minimum objective function value: .

Table 11.

Optimal results of various methods for the Gas transmission compressor design problem.

4.4. Application of SIAEO in Clustering Problem

K-means clustering is a very effective clustering method [51,52]. When the number of classes is determined, the determination of the clustering center is the key to this method. The finding of the clustering center can be turned into the optimization problem of minimum class spacing. For the data set composed of V samples, there are classes in total, the cluster center of class is , and the number of data in the cluster of class i is , then the clustering center of K-means clustering is the optimal of the following optimization model.

To demonstrate the SIAEO algorithm’s competitiveness in solving high-dimensional practical problems, this section verifies its optimization ability on high-dimensional clustering by using the SIAEO to search for the optimal clustering centers of K-means clustering.

4.4.1. SIAEO—K-Means Algorithm

Table 12 gives, for nine data sets from the UCI standard database [53], the number of data instances, the number of classes (Classes), the data dimension (Features) and the number of dimensions of the decision variables (Dimension) in optimization problem (17). The process of solving optimization problem (17) with the SIAEO algorithm is as follows. Firstly, N individuals of size Dimension are generated as N groups of clustering centers. For each individual, that is, each group of clustering centers, the K-means clustering method is adopted to determine the number of data contained in each category. Individual fitness values are then calculated using Formula (17) to determine the optimal individual. Finally, the SIAEO algorithm is used to update each individual until the optimal cluster centers are found.

Table 12.

Characteristics of the nine datasets.
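The fitness evaluation in the process above can be sketched as follows. The sketch rests on two assumptions that should be checked against Formula (17): an individual is a flat vector of Classes × Features values holding the cluster centers one after another, and the fitness is the sum of the Euclidean distances from each sample to its nearest center. All names and data are hypothetical.

```python
import math


def decode_centers(individual, n_classes, n_features):
    """Split a flat decision vector into n_classes centers of n_features values."""
    return [individual[k * n_features:(k + 1) * n_features]
            for k in range(n_classes)]


def clustering_fitness(individual, data, n_classes, n_features):
    """Sum of distances from every sample to its nearest center (lower is better)."""
    centers = decode_centers(individual, n_classes, n_features)
    total = 0.0
    for sample in data:
        # Assign the sample to the closest center, as in a K-means assignment step.
        total += min(math.dist(sample, center) for center in centers)
    return total


# Hypothetical two-class, two-feature data set.
data = [(0.0, 0.0), (0.0, 1.0), (4.0, 4.0), (4.0, 5.0)]
individual = [0.0, 0.5, 4.0, 4.5]  # centers (0, 0.5) and (4, 4.5)
print(clustering_fitness(individual, data, n_classes=2, n_features=2))
```

Any population-based optimizer can then minimize `clustering_fitness` over the flat vector, which is how the role of the SIAEO is described in this section.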

4.4.2. Experimental Setup and Performance Evaluation

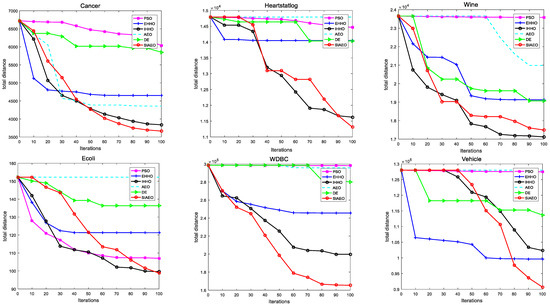

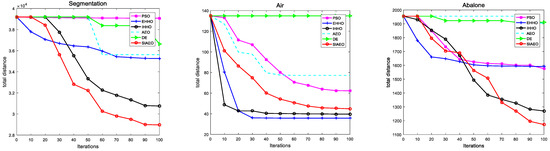

In the experiment, and , and the independent operation was performed 30 times. The IAEO, EAEO, AEO, DE and PSO were selected to combine with K-means as the comparison algorithm. Table 13 provides the mean Ave, standard deviation Std and Rank gained by the SIAEO and comparison algorithm to search the optimization model established by Formula (17) of the data in Table 12. The last three lines of Table 13 give the evaluation index. The count indicates the number of data sets of the optimal result obtained by each optimization algorithm. The Avg_Rank represents the average ranking and Total Rank quantitatively expresses the contrast in the optimization ability of each algorithm. For nine data sets, Figure 7 depicts the search process of each contrast algorithm to reflect the characteristics of the algorithm and to explore the global optimal solution during operation.

Table 13.

Comparison of K-means optimization results of six algorithms on nine datasets.

Figure 7.

Convergence curves between the SIAEO–K-means and other algorithms on all data sets.

4.4.3. Comparison and Analysis of Results

Table 13 shows that, compared with the other algorithms, the SIAEO obtains the best intra-class distance on seven data sets and has an average rank of 1.33, placing it first overall. The EAEO and IAEO came in second and third place, the PSO and DE came in 4th and 5th place, respectively, and the AEO ranked last, differing greatly from the SIAEO. Figure 7 shows that the SIAEO has a stronger capability than the other algorithms to escape local minima and explore the global minimum, making it an outstanding heuristic algorithm for solving high-dimensional clustering problems.

5. Conclusions

In artificial ecosystem optimization algorithms, the relationship between exploration and exploitation has always been the focus of research. In this paper, an environmental stimulus incentive mechanism defined by population diversity is introduced to help the population switch between consumption and decomposition, and the maximum cumulative success rate of individuals performing different tasks is used to guide each individual toward the more suitable update strategy in the consumption stage. This method decreases the complexity of the AEO and improves the calculation precision.

At the same time, a new exploration and updating method for the consumption stage is proposed, which uses biological competition to introduce random search features. On this basis, an improved artificial ecosystem optimization algorithm (SIAEO) based on environmental stimulus incentive mechanisms and biological competition was proposed. Two groups of experiments were conducted to confirm the superiority of the SIAEO. The first group confirmed the validity of the SIAEO, other intelligent algorithms and the AEO variant algorithms in solving the CEC2017 and CEC2019 benchmark functions. According to the experimental findings, the SIAEO has a greater solving precision and convergence speed than the contrast algorithms. The SIAEO’s better stability and robustness are further demonstrated through statistical tests and the convergence curves.

The second group of experiments verified the usefulness of the SIAEO. Four engineering minimization problems verify the efficiency of the SIAEO on complex nonlinear engineering search problems. The SIAEO–K-means model was established to optimize the K-means clustering centers, and nine UCI standard data sets were used in the experiment. The results showed that SIAEO–K-means obtained higher evaluation index values and had better performance on high-dimensional clustering data sets. According to the two groups of experimental data, the SIAEO exceeds the comparative algorithms in optimization ability and in performance on high-dimensional problems.

As the AEO is a new heuristic algorithm, more effective improvement strategies for balancing its exploration and exploitation abilities remain to be studied in order to improve its optimization ability. The authors of [54] provided a dynamic data stream clustering method based on intelligent algorithms, which offers a reference for combining dynamic data clustering with heuristic algorithms. Another study [55] required an optimized estimation of the hydraulic jump roller length. These new requirements call for further exploration of the large-scale clustering ability of the AEO algorithm and its deeper application in the mechanical field in the future.

Author Contributions

W.G.: supervision, methodology, formal analysis, writing—original draft, writing—review and editing. M.W.: software, data curation, conceptualization, visualization, formal analysis, writing—original draft. F.D.: methodology, supervision, data curation, writing—review and editing. Y.Q.: methodology, formal analysis, data curation, writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China No. 61976176.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

This study did not conduct data archiving, as the algorithm has randomness, and the results obtained each time are different.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shadravan, S.; Naji, H.R.; Bardsiri, V.K. The sailfish optimizer: A novel nature-inspired metaheuristic algorithm for solving constrained engineering optimization problems. Eng. Appl. Artif. Intell. 2019, 80, 20–34.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Sulaiman, M.H.; Mustaffa, Z.; Saari, M.M.; Daniyal, H. Barnacles Mating Optimizer: A Bio-Inspired Algorithm for Solving Optimization Problems. In Proceedings of the 2018 19th IEEE/ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD), Busan, Republic of Korea, 27–29 June 2018; pp. 265–270.

- Hu, G.; Wang, J.; Li, M.; Hussien, A.G.; Abbas, M. EJS: Multi-strategy enhanced jellyfish search algorithm for engineering applications. Mathematics 2023, 11, 851.

- Hu, G.; Yang, R.; Qin, X.; Wei, G. MCSA: Multi-strategy boosted chameleon-inspired optimization algorithm for engineering applications. Comput. Methods Appl. Mech. Eng. 2023, 403, 115676.

- Hu, G.; Du, B.; Wang, X.; Wei, G. An enhanced black widow optimization algorithm for feature selection. Knowl.-Based Syst. 2022, 235, 107638.

- Dhiman, G.; Kaur, A. STOA: A bio-inspired based optimization algorithm for industrial engineering problems. Eng. Appl. Artif. Intell. 2019, 82, 148–174.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for Solving Optimization Problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Alqaness, M.A.A.; Ewees, A.A.; Fan, H.; Aziz, M. Optimization method for forecasting confirmed cases of COVID-19 in China. Clin. Med. 2020, 9, 674.

- Ewees, A.A.; Elaziz, M.A.; Al-Qaness, M.A.A.; Khalil, H.A.; Kim, S. Improved artificial bee colony using Sine-cosine algorithm for multi-level thresholding image segmentation. IEEE Access 2020, 8, 26304–26315.

- Rajamohana, S.; Umamaheswari, K. Hybrid approach of improved binary particle swarm optimization and shuffled frog leaping for feature selection. Comput. Electr. Eng. 2018, 67, 497–508.

- Attiya, I.; Elaziz, M.A.; Xiong, S. Job scheduling in cloud computing using a modified harris hawks optimization and simulated annealing algorithm. Comput. Intell. Neurosci. 2020, 16, 3504642.

- Zhao, W.; Wang, L.; Zhang, Z. Artificial ecosystem-based optimization: A novel nature-inspired meta-heuristic algorithm. Neural Comput. Appl. 2020, 32, 9383–9425.

- RizkALllah, R.M.; ELFergany, A.A.; SMIEEE. Artificial Ecosystem Optimizer for Parameters Identification of Proton Exchange Membrane Fuel Cells Model. Int. J. Hydrog. Energy 2020, 46, 37612–37627.

- Sultana, H.M.; Menesy, A.S.; Kamel, S.; Korashy, A.; Almohaimeed, S.A.; Abdel-Akher, M. An improved artificial ecosystem optimization Algorithm for optimal configuration of a hybrid PV/WT/FC energy system. Alex. Eng. J. 2021, 60, 1001–1025.

- Menesy, A.S.; Sultan, H.M.; Korashy, A.; Banakhr, F.A.; Ashmawy, M.G.; Kamel, A.S. Effective parameter extraction of different polymer electrolyte membrane fuel cell stack models using a modified artificial ecosystem optimization algorithm. IEEE Access 2020, 8, 31892–31909.

- Barshandeh, S.; Piri, F.; Sangani, S.R. HMPA: An innovative hybrid multi-population algorithm based on artificial ecosystem-based and Harris Hawks optimization algorithms for engineering problems. Eng. Comput. 2022, 38, 1581–1625.

- Essa, F.A.; Mohamed, A. Prediction of power consumption and water productivity of seawater greenhouse system using random vector functional link network integrated with artificial ecosystem-based optimization. Process. Saf. Environ. 2020, 144, 322–329.

- Yousri, D.; Babu, T.S.; Mirjalili, S.; Rajasekar, N.; Elaziz, M.A. A novel objective function with artificial ecosystem-based optimization for relieving the mismatching power loss of large-scale photovoltaic array. Energy Convers. Manag. 2020, 225, 113385.

- Calasan, M.; Mice, M.; Djurovic, Z.; Mageed, H.M. Artificial ecosystem-based optimization for optimal tuning of robust PID controllers in AVR systems with limited value of excitation voltage. Int. J. Electr. Eng. Educ. 2020, 1–28.

- Mohammadrezapour, O.; Kisi, O.; Pourahmad, F. Fuzzy c-means and K-means clustering with genetic algorithm for identification of homogeneous regions of groundwater quality. Neural Comput. Appl. 2020, 32, 3763–3775.

- Capó, M.; Pérez, A.; Lozano, J.A. An efficient approximation to the k-means clustering for massive data. Knowl.-Based Syst. 2017, 117, 56–69.

- Peng, Z.; Zheng, J.; Zou, J. A population diversity maintaining strategy based on dynamic environment evolutionary model for dynamic multiobjective optimization. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; pp. 274–281.

- Ursem, R. Diversity-guided evolutionary algorithms. In Parallel Problem Solving from Nature; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2002; Volume 2439, pp. 462–471.

- Price, K.V.; Awad, N.H.; Ali, M.Z.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the 100-Digit Challenge Special Session and Competition on Single Objective Numerical Optimization; Technical Report; Nanyang Technological University: Singapore, 2018.

- Awad, N.H.; Ali, M.Z.; Liang, J.J.; Qu, B.Y.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2017 Special Session and Competition on Single Objective Real-Parameter Numerical Optimization; Technical Report; Nanyang Technological University: Singapore, 2016.

- Hashim, F.A.; Hussain, K.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W. Archimedes optimization algorithm: A new metaheuristic algorithm for solving optimization problems. Appl. Intell. 2021, 51, 1531–1551.

- Kaur, S.; Awasthi, L.; Sangal, A.; Dhiman, G. Tunicate Swarm Algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 2020, 90, 103541.

- Sun, J.; Fang, W.; Wu, X.; Palade, V.; Xu, W. Quantum-behaved particle swarm optimization: Analysis of individual particle behavior and parameter selection. Evol. Comput. 2012, 20, 349–393.

- Seyedali, M.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Mirjalili, S.; Mohammad, S.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

- Friedman, M. A comparison of alternative tests of significance for the problem of m rankings. Ann. Math. Stat. 1940, 11, 86–92.

- Nowcki, H. Optimization in pre-contract ship design. In Computer Applications in the Automation of Shipyard Operation and Ship Design; Fujita, Y., Lind, K., Williams, T.J., Eds.; Elsevier: North-Holland, NY, USA, 1974; Volume 2, pp. 327–338.

- Feng, Z.; Niu, W.; Liu, S. Cooperation search algorithm: A novel metaheuristic evolutionary intelligence algorithm for numerical optimization and engineering optimization problems. Appl. Soft. Comput. 2020, 98, 106734.

- Ray, T.; Saini, P. Engineering design optimization using a swarm with an intelligent information sharing among individuals. Eng. Optim. 2001, 33, 735–748.

- Sadollah, A.; Bahreininejad, A.; Eskandar, H.; Hamdi, M. Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Appl. Soft Comput. 2013, 13, 2592–2612.

- Abualigah, L.; Shehab, M.; Diaba, A.; Abraham, A. Selection scheme sensitivity for a hybrid salp swarm algorithm: Analysis and applications. Eng. Comput. 2020, 38, 1149–1175.

- Himmelblau, D. Applied Nonlinear Programming; McGraw-Hill Companies: New York, NY, USA, 1972.

- Deb, K. An efficient constraint handling method for genetic algorithms. Comput. Methods Appl. Mech. Eng. 2000, 186, 311–338.

- He, S.; Prempain, E.; Wu, H. An improved particle swarm optimizer for Mechanical design optimization problems. Eng. Optim. 2004, 36, 585–605.

- Dimopoulos, G. Mixed-variable engineering optimization based on evolutionary and social metaphors. Comput. Methods Appl. Mech. Eng. 2007, 196, 803–817.

- Bard, J. Engineering Optimization: Theory and Practice; John Wiley & Sons: New York, NY, USA, 1997.

- Hsu, L.; Liu, C. Developing a fuzzy proportional-derivative controller optimization engine for engineering design optimization problems. Eng. Optim. 2007, 39, 679–700.

- Beightler, C.; Phillips, D. Applied Geometric Programming; Wiley: New York, NY, USA, 1976.

- Arora, S.; Singh, S. Butterfly optimization algorithm: A novel approach for global optimization. Soft Comput. 2018, 23, 715.

- Cheng, M.; Prayogo, D. Symbiotic organisms search: A new metaheuristic optimization algorithm. Comput. Struct. 2014, 139, 98–112.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948.

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for Global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

- Peña, M.; Lozano, A.; Larranaga, P. An empirical comparison of four initialization methods for the k-means algorithm. Pattern Recognit. Lett. 1999, 20, 1027–1040.

- Askari, S. Fuzzy C-Means clustering algorithm for data with unequal cluster sizes and contaminated with noise and outliers: Review and development. Expert. Syst. Appl. 2021, 165, 113856.

- Dheeru, D.; Taniskidou, E. UCI Repository of machine learning databases. Available online: http://www.ics.uci.edu/ (accessed on 2 June 2023).

- Yeoh, J.M.; Caraffini, F.; Homapour, E.; Santucci, V.; Milani, A. A clustering system for dynamic data streams based on metaheuristic optimisation. Mathematics 2019, 7, 1229.

- Agresta, A.; Biscarini, C.; Caraffini, F.; Santucci, V. An intelligent optimised estimation of the hydraulic jump roller length. In Applications of Evolutionary Computation: 26th European Conference, EvoApplications; Springer: Cham, Switzerland, 2023; pp. 475–490.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).