Deep Reinforcement Learning-Based End-to-End Control for UAV Dynamic Target Tracking

Abstract

1. Introduction

- This paper proposes a DRL-based end-to-end control framework for UAV dynamic target tracking, which simplifies the traditional modular paradigm by replacing it with a single integrated neural network. The policy is trained only on the task of flying towards a fixed target, yet it can then be used to track a dynamic target.

- A neural network architecture, reward functions, and SAC-based speed command perception are designed to train the policy network for UAV dynamic target tracking. The trained policy network takes the input image and outputs the speed control command, which realizes UAV dynamic target tracking based on speed command perception.

- The numerical results show that the proposed framework for simplifying the traditional modular paradigm is feasible and that the end-to-end control method allows the UAV to track a dynamic target whose speed and direction change rapidly.
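To make the "image in, speed command out" idea more concrete, the following is a minimal sketch of how a SAC-style stochastic policy head is commonly turned into a bounded speed command. It is not the authors' implementation: the function name `sample_speed_command`, the per-axis `mean` and `log_std` inputs (assumed to come from the policy network), and the symmetric limit `max_speed` are all hypothetical and chosen only for illustration.

```python
import math
import random


def sample_speed_command(mean, log_std, max_speed, rng=random):
    """Sample a tanh-squashed Gaussian action and scale it to a speed command.

    mean, log_std: per-axis outputs of a (hypothetical) policy head.
    max_speed: assumed symmetric speed limit in m/s.
    Returns (command, log_prob); log_prob refers to the squashed action
    u = tanh(z) in [-1, 1], before scaling by max_speed.
    """
    command = []
    log_prob = 0.0
    for mu, ls in zip(mean, log_std):
        std = math.exp(ls)
        eps = rng.gauss(0.0, 1.0)      # reparameterization noise
        z = mu + std * eps             # unbounded Gaussian sample
        u = math.tanh(z)               # squash into [-1, 1]
        # Log-density of z under N(mu, std^2).
        log_prob += -0.5 * eps * eps - ls - 0.5 * math.log(2.0 * math.pi)
        # Change-of-variables correction for the tanh squashing.
        log_prob -= math.log(1.0 - u * u + 1e-6)
        command.append(max_speed * u)
    return command, log_prob
```

Because of the tanh squashing, every sampled command stays within the assumed limit regardless of the network output, which is one common reason this parameterization is used for velocity-level control.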

2. Preliminaries

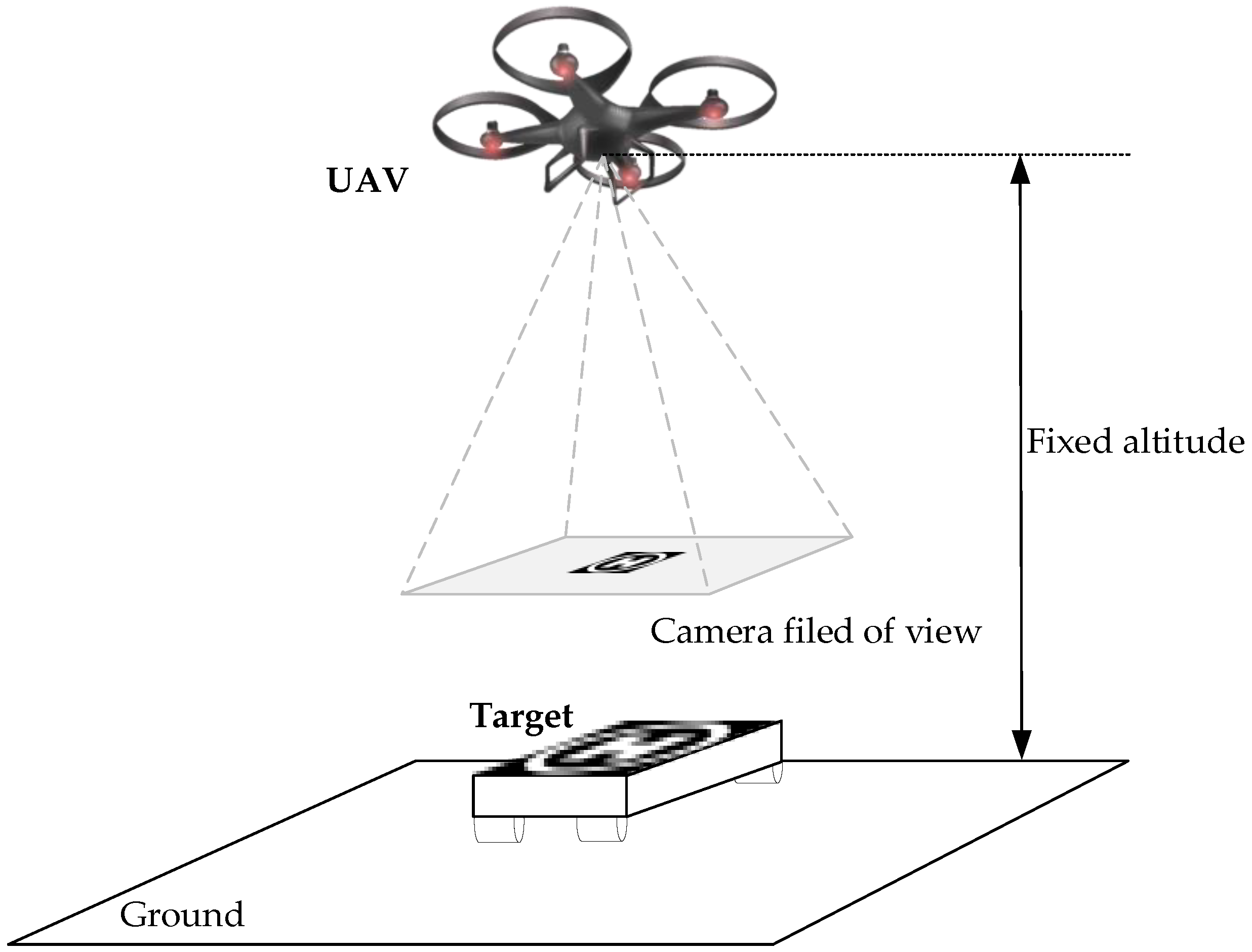

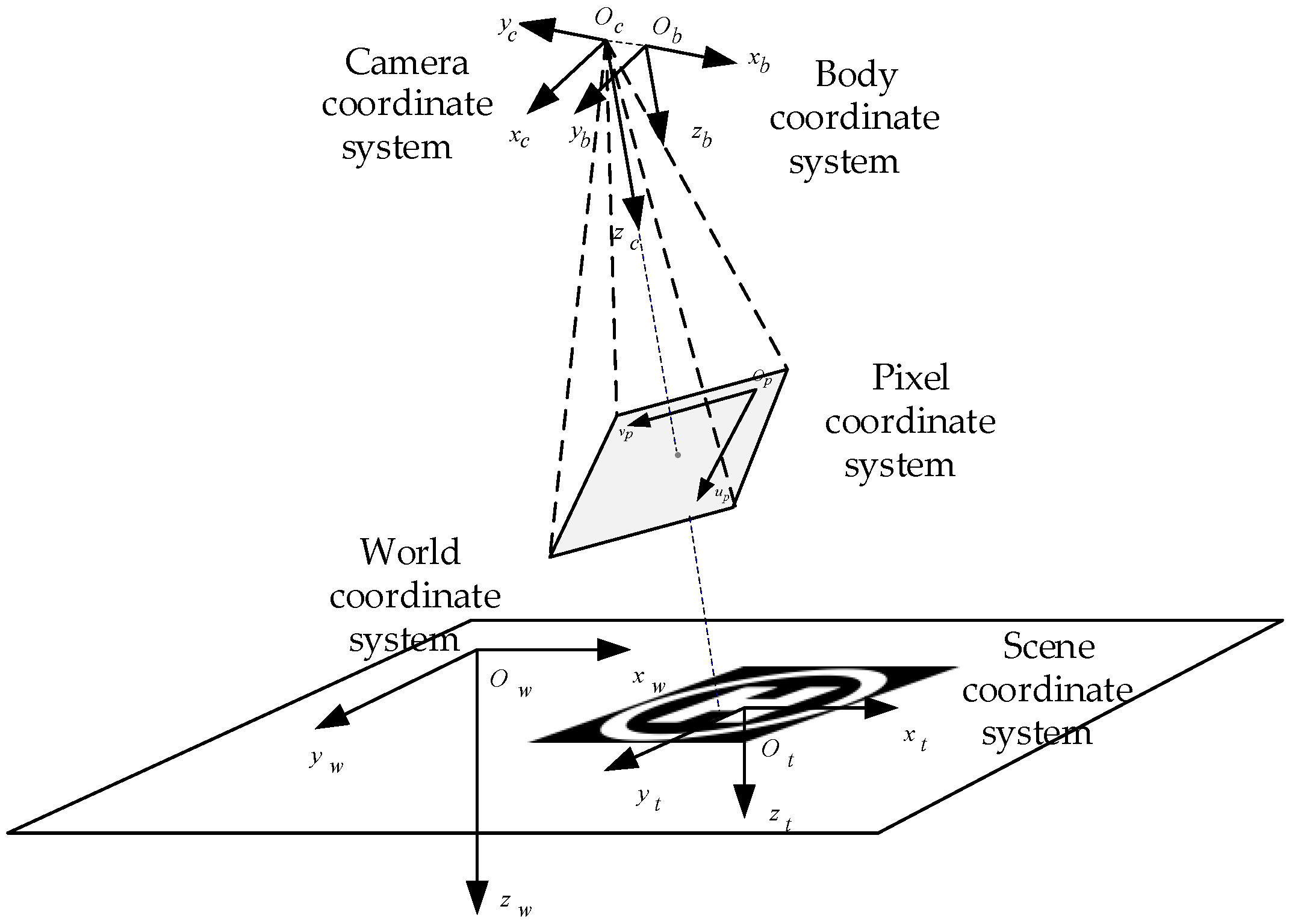

2.1. Problem Formulation

2.2. UAV Model

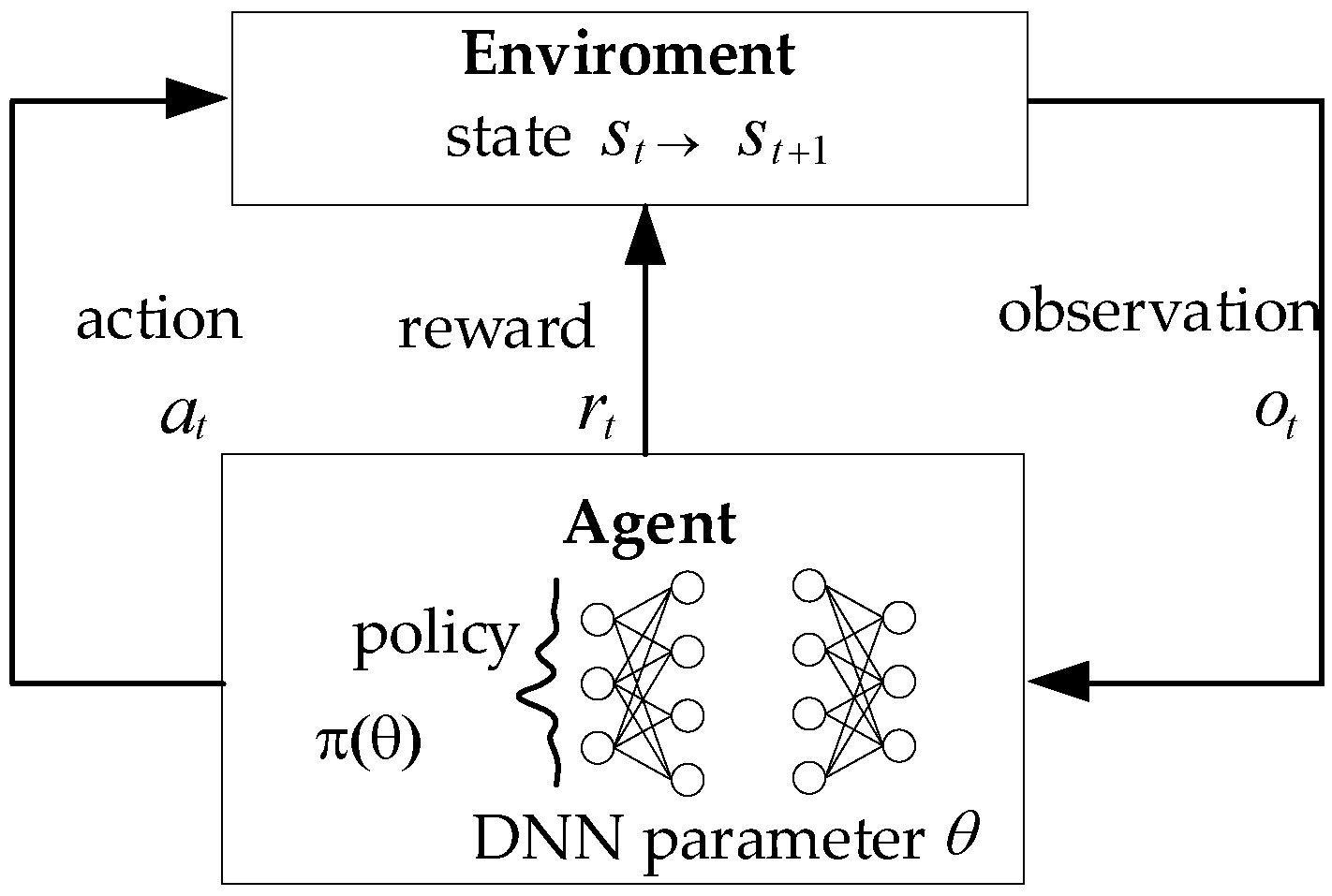

2.3. DRL and SAC
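For orientation, the standard maximum-entropy objective that soft actor-critic (SAC) optimizes, as given in the general SAC literature rather than taken from this section, can be written as follows. Here $\alpha$ is the temperature weighting the entropy term, $\mathcal{H}$ is the policy entropy, and $\rho_\pi$ is the state-action distribution induced by the policy $\pi$.

$$
J(\pi) = \sum_{t=0}^{T} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi} \left[ r(s_t, a_t) + \alpha \, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right],
\qquad
\mathcal{H}\big(\pi(\cdot \mid s_t)\big) = -\mathbb{E}_{a \sim \pi(\cdot \mid s_t)} \left[ \log \pi(a \mid s_t) \right]
$$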

3. End-to-End Control for UAV Dynamic Target Tracking

3.1. Framework

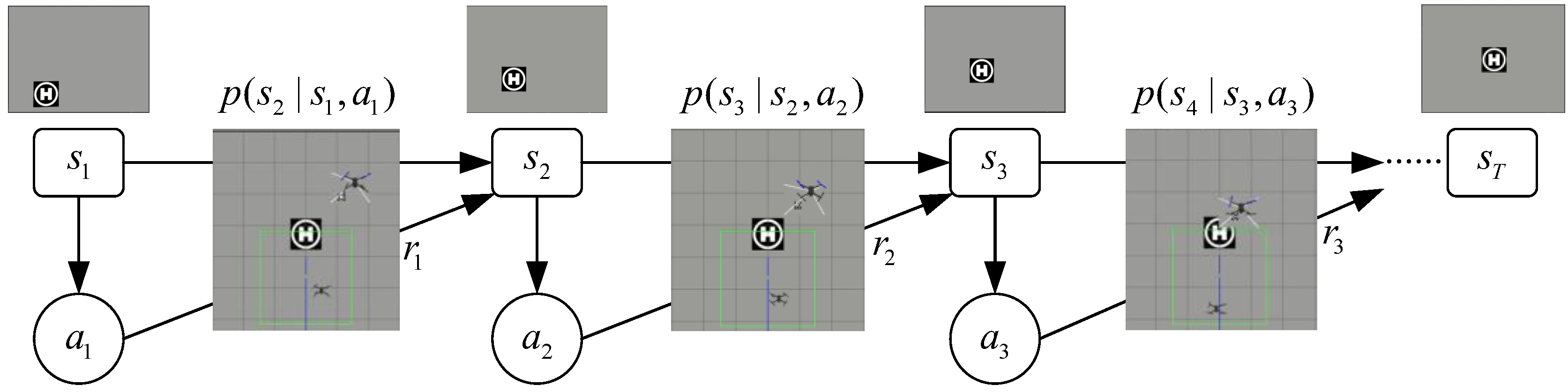

3.1.1. Markov Decision Process for Target Tracking

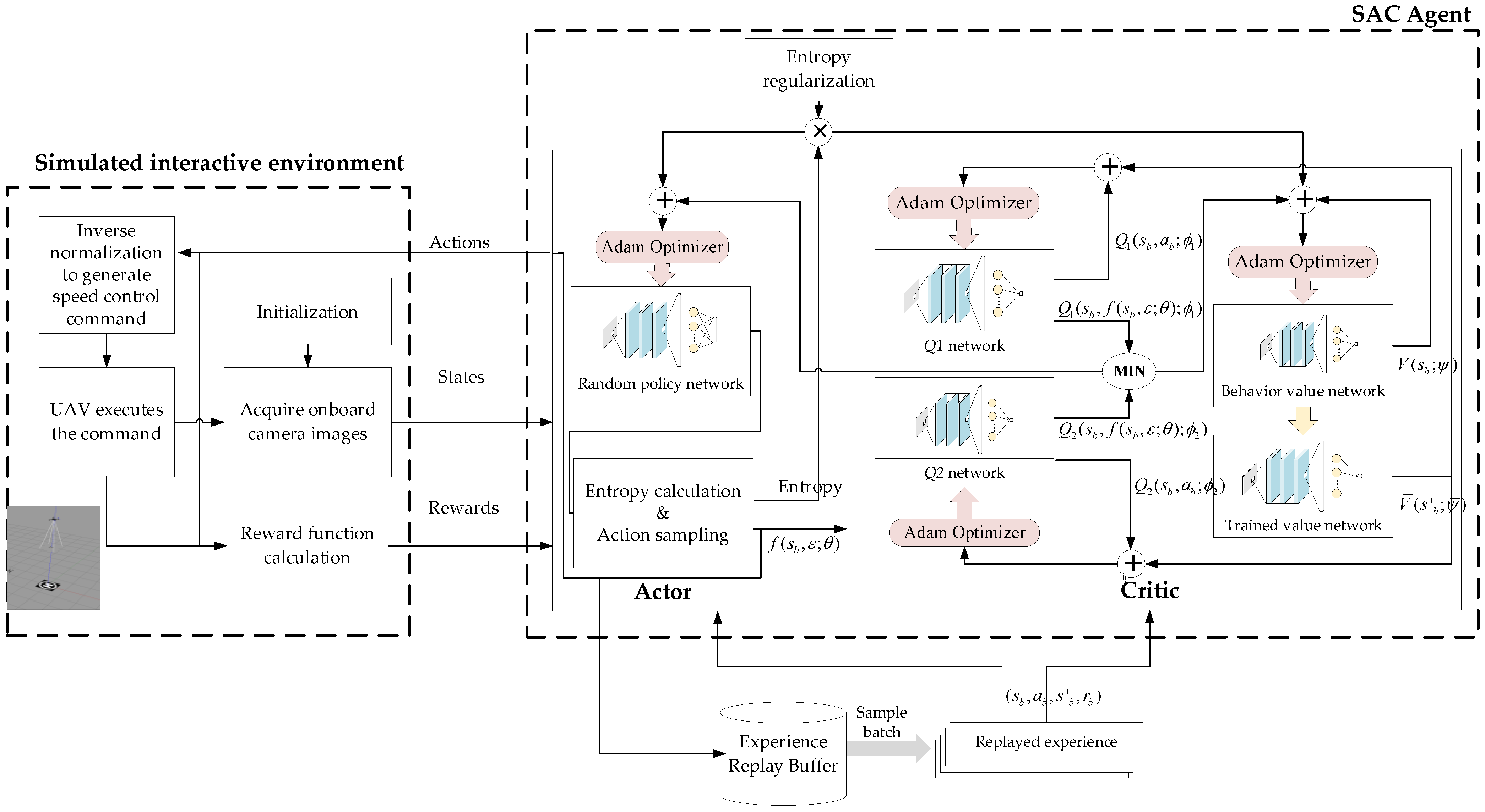

3.1.2. Interactive Environment and Agents

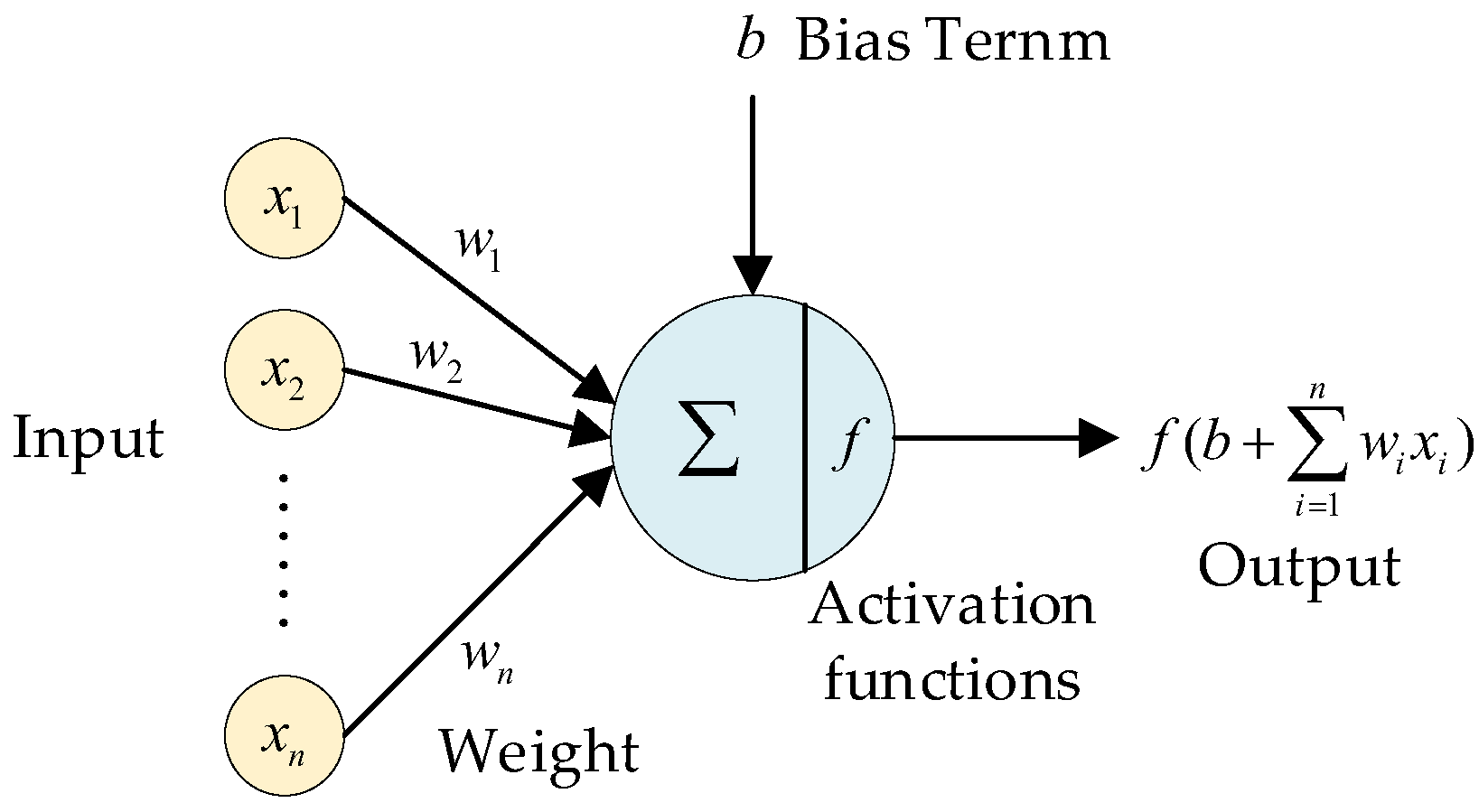

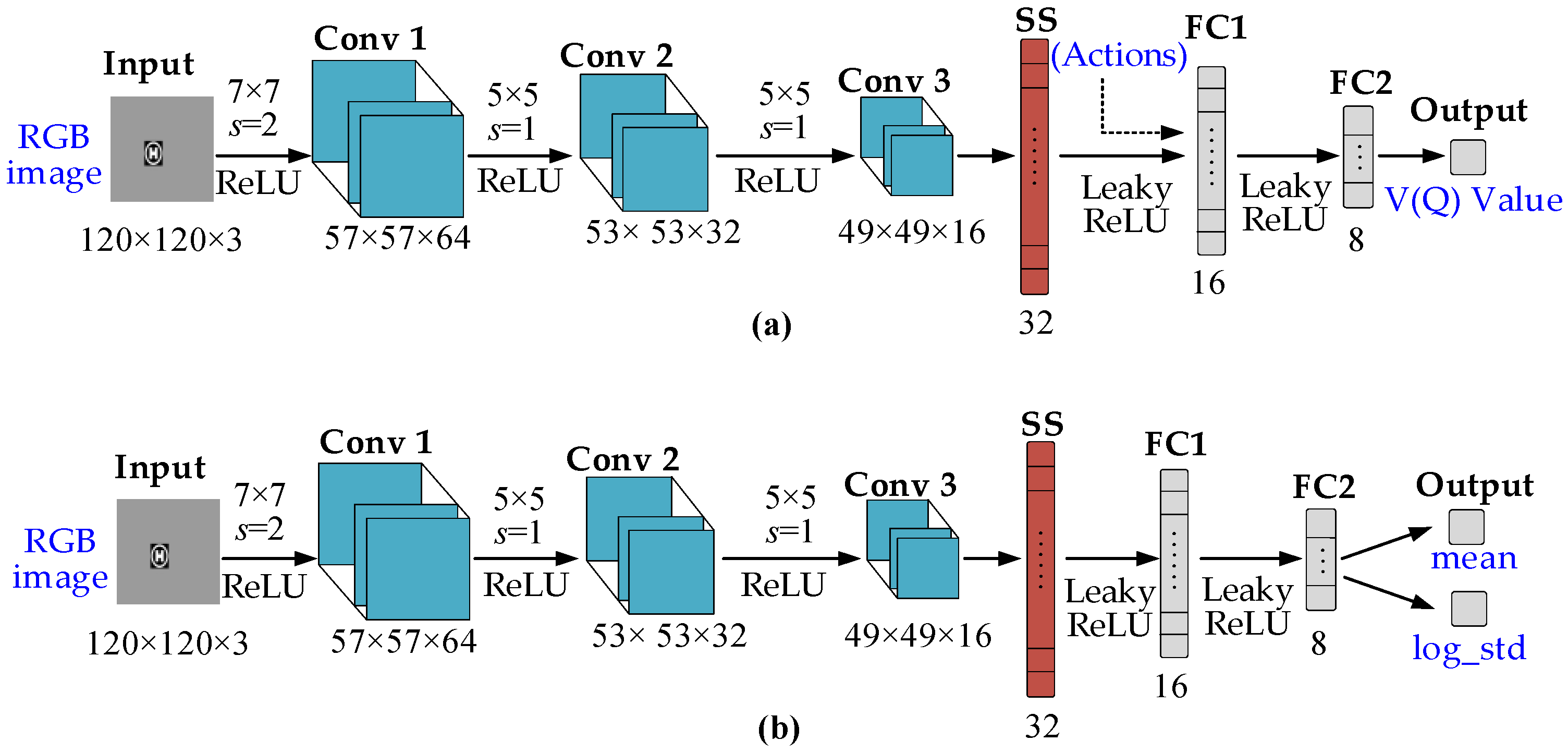

3.2. Neural Network Architecture for End-to-End Learning

3.3. Reward Function for Target Tracking

3.4. SAC-Based Speed Command Perception

| Algorithm 1. SAC-Based Training Algorithm |
|---|
| (Algorithm steps not recovered; the surviving loop markers are "the end of a time step" and "the end of an episode".) |

4. Numerical Simulations

4.1. Training Results

4.2. Dynamic Target Tracking

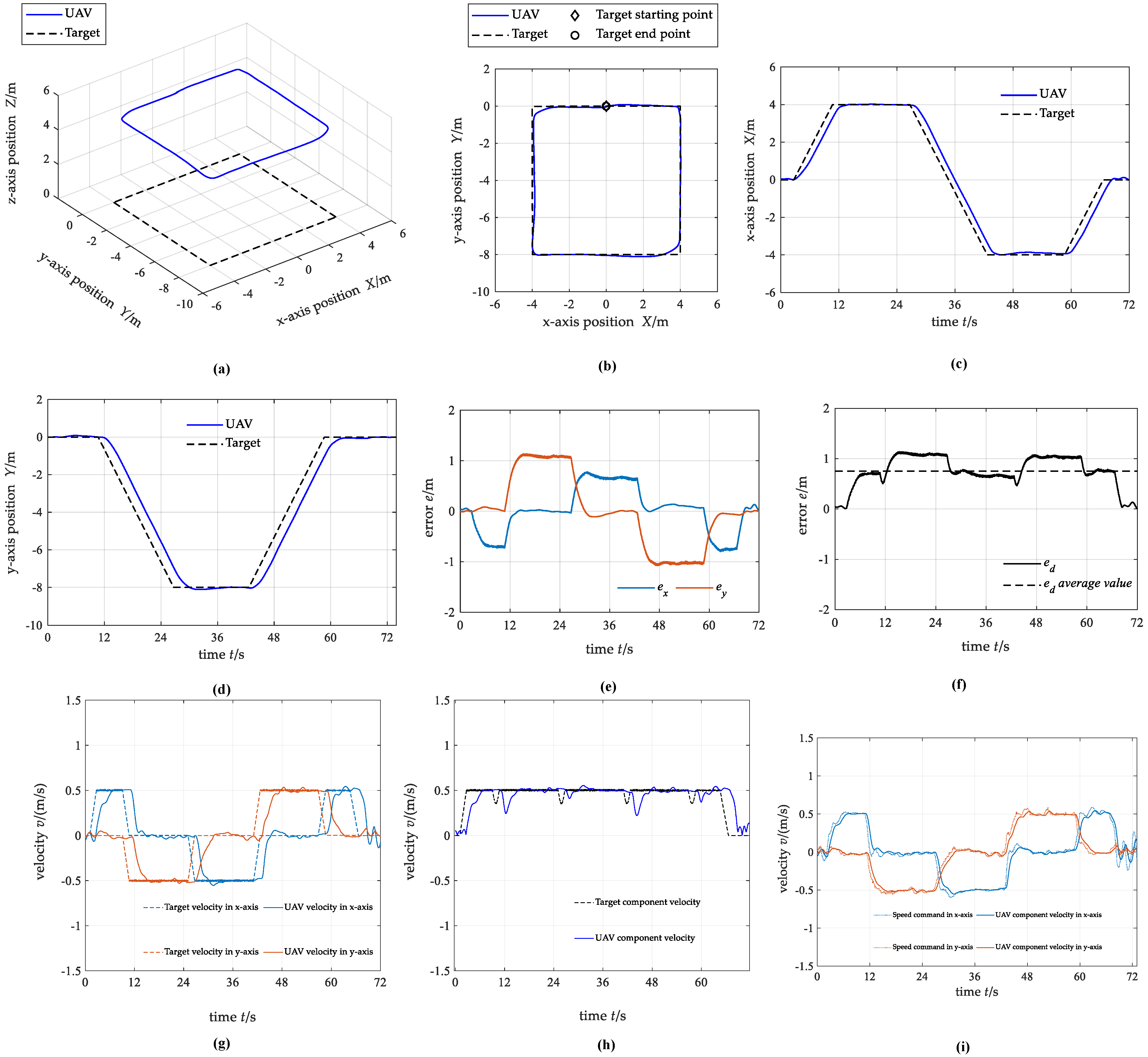

4.2.1. Case 1: Square Trajectory

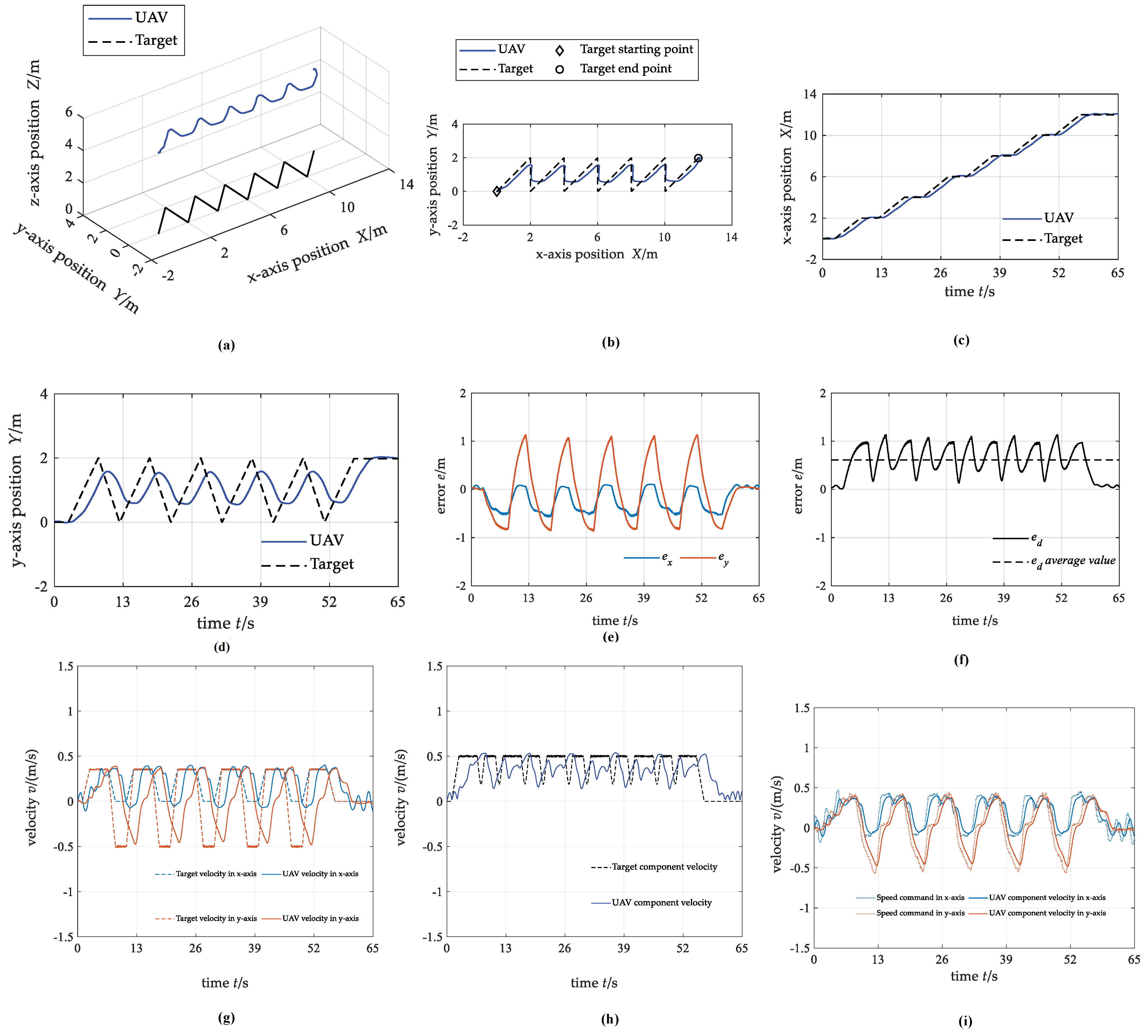

4.2.2. Case 2: Polygonal Trajectory

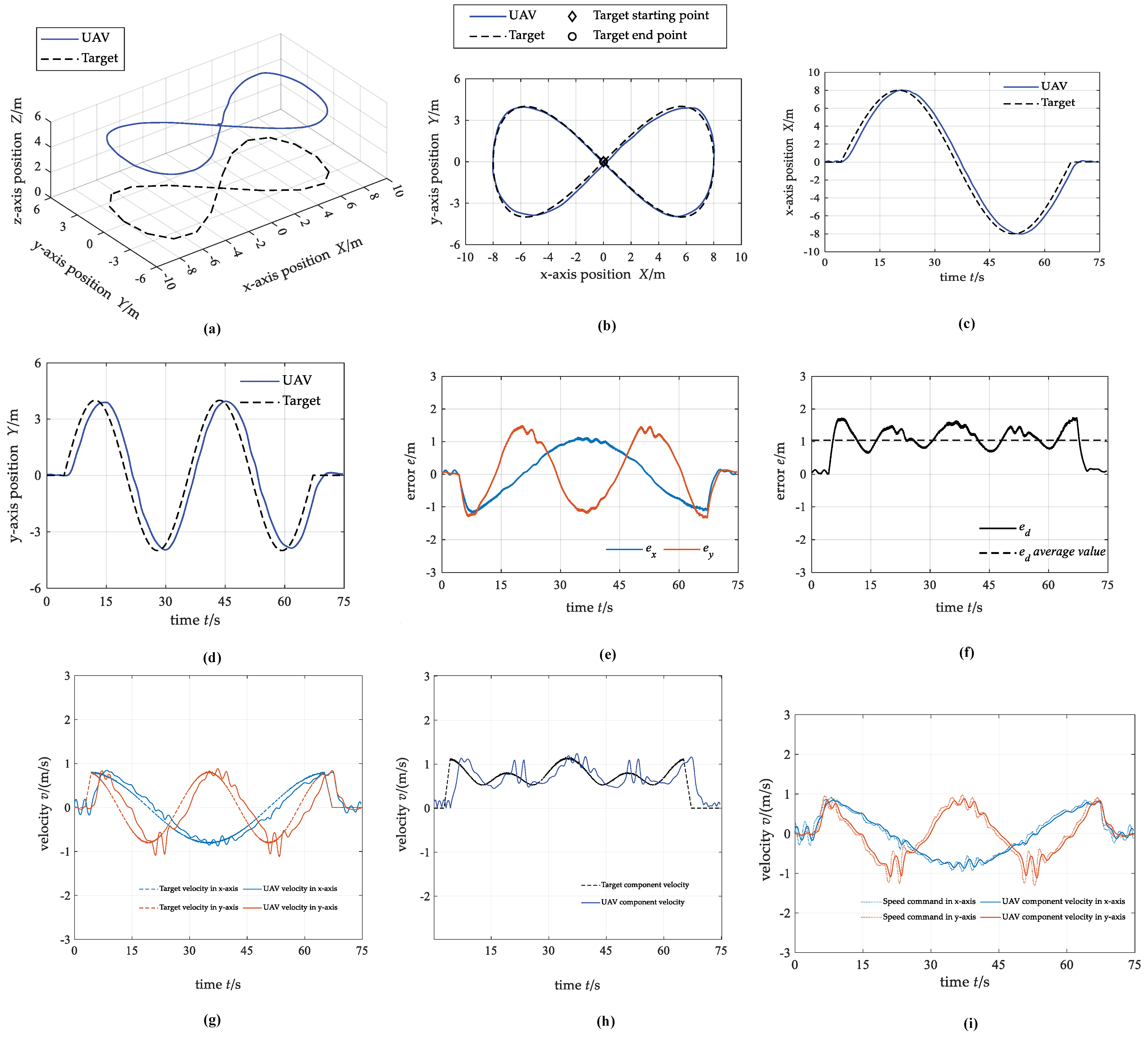

4.2.3. Case 3: Curve Trajectory

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Training Parameters | Value |
|---|---|
| Size of experience replay buffer | 10,000 |
| Size of experience replay batch | 128 |
| Discounting factor | 0.99 |
| Maximum number of steps each episode | 50 |
| Learning rate of each neural network | 0.0003 |
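As an illustration of how the training parameters in the table above might be wired into code, here is a minimal sketch of a configuration object and a fixed-capacity experience replay buffer. The numeric values come from the table; the class names `TrainingConfig` and `ReplayBuffer`, the field names, and the uniform sampling strategy are assumptions made for this example and are not taken from the paper.

```python
import random
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    # Values copied from the training-parameter table; field names are hypothetical.
    replay_buffer_size: int = 10_000
    batch_size: int = 128
    discount: float = 0.99
    max_steps_per_episode: int = 50
    learning_rate: float = 3e-4


class ReplayBuffer:
    """Fixed-capacity FIFO buffer with uniform random sampling (an assumption)."""

    def __init__(self, capacity):
        # A deque with maxlen silently drops the oldest item when full.
        self._storage = deque(maxlen=capacity)

    def add(self, transition):
        self._storage.append(transition)

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement from the stored transitions.
        return rng.sample(list(self._storage), batch_size)

    def __len__(self):
        return len(self._storage)
```

With a buffer of 10,000 transitions and episodes capped at 50 steps, the buffer holds at least the 200 most recent episodes, so old experience is discarded fairly quickly under this setup.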

| Case | Target Trajectory | Target Velocity |
|---|---|---|
| Case 1 | Square trajectory | 0.5 m/s |
| Case 2 | Polygonal trajectory | 0.5 m/s |
| Case 3 | Curve trajectory | 0.5–1.2 m/s |
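To illustrate what a test case such as Case 1 involves, the sketch below generates the position of a target moving around a square at the constant 0.5 m/s listed in the table. The side length, the starting corner at the origin, and the counter-clockwise direction are assumptions for this example only; the table does not specify them, and the function name `square_target_position` is hypothetical.

```python
def square_target_position(t, speed=0.5, side=4.0):
    """Return (x, y) of a target circling a square at constant speed.

    t: elapsed time in seconds (t >= 0).
    speed: target speed in m/s (0.5 m/s as in Case 1).
    side: side length in metres (assumed value, not from the paper).
    The target starts at (0, 0) and moves counter-clockwise.
    """
    # Distance travelled along the perimeter, wrapped to one lap.
    s = (speed * t) % (4.0 * side)
    edge, d = divmod(s, side)
    edge = int(edge)
    if edge == 0:
        return (d, 0.0)            # bottom edge, moving in +x
    if edge == 1:
        return (side, d)           # right edge, moving in +y
    if edge == 2:
        return (side - d, side)    # top edge, moving in -x
    return (0.0, side - d)         # left edge, moving in -y
```

The speed is constant along each edge, but the velocity direction switches by 90 degrees at every corner, which is the kind of abrupt change in direction that a tracking policy has to handle in this case.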


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, J.; Liu, H.; Sun, J.; Wu, K.; Cai, Z.; Ma, Y.; Wang, Y. Deep Reinforcement Learning-Based End-to-End Control for UAV Dynamic Target Tracking. Biomimetics 2022, 7, 197. https://doi.org/10.3390/biomimetics7040197
