Abstract

The basic Salp Swarm Algorithm (SSA) offers advantages such as a simple structure and few parameters. However, it is prone to falling into local optima and remains inadequate for seed classification tasks that involve hyperparameter optimization of machine learning classifiers such as Support Vector Machines (SVMs). To overcome these limitations, an Enhanced Knowledge-based Salp Swarm Algorithm (EKSSA) is proposed. The EKSSA incorporates three key strategies: adaptive adjustment mechanisms for two control parameters to better balance exploration and exploitation within the salp population; a Gaussian walk-based position update strategy after the initial update phase, enhancing the global search ability of individuals; and a dynamic mirror learning strategy that expands the search domain through solution mirroring, thereby strengthening local search capability. The proposed algorithm was evaluated on thirty-two CEC benchmark functions, where it demonstrated superior performance compared to eight state-of-the-art algorithms: Randomized Particle Swarm Optimizer (RPSO), Grey Wolf Optimizer (GWO), Archimedes Optimization Algorithm (AOA), Hybrid Particle Swarm Butterfly Algorithm (HPSBA), Aquila Optimizer (AO), Honey Badger Algorithm (HBA), Salp Swarm Algorithm (SSA), and Sine–Cosine Quantum Salp Swarm Algorithm (SCQSSA). Furthermore, an EKSSA-SVM hybrid classifier was developed for seed classification, reaching 98.1133% accuracy on the five-category Rice Varieties dataset.

1. Introduction

Swarm intelligence algorithms (SIAs) [1,2,3,4] are usually inspired by the collective behavior of animal swarms in nature and can be applied to complex optimization problems that arise from modeling real-world tasks. The behavior and characteristics of individual organisms are also considered in the design of these algorithms. Widely used SIAs include Particle Swarm Optimization (PSO) [1], Grey Wolf Optimizer (GWO) [5], Salp Swarm Algorithm (SSA) [6], Aquila Optimizer (AO) [7], and Duck Swarm Algorithm (DSA) [3]. Besides numerical optimization problems, constrained engineering optimization problems are also an effective means of verifying proposed algorithms. In principle, SIAs and their variants can be applied to any problem that can be formulated as an optimization problem.

The Salp Swarm Algorithm (SSA) is a metaheuristic optimization technique modeled after the swarming and foraging behavior of salps in marine environments [6,8]. Its search is driven by a leader and its followers, and it has the advantages of a simple structure and few parameters. The basic SSA has been extended with various strategies for optimization problems in order to avoid becoming stuck in local optima. Wang et al. [9] proposed a modified SSA with tent chaotic population initialization, T-distribution mutation, and an adaptive position update strategy to solve the node coverage task of wireless sensor networks (WSNs). Zhou et al. [10] designed an SSA-optimized A* algorithm together with a refined B-spline interpolation strategy for the path planning problem. Khorashadizade et al. [11] designed an improved SSA via a robust search strategy and a novel local search method for solving feature selection (FS) tasks. Zhang et al. [12] proposed cosine opposition-based learning (COBL) to modify the SSA and used it to address the FS problem. Wang et al. [13] designed a spherical evolution algorithm with spherical and hypercube search in a two-stage strategy. In addition, multi-perspective initialization, Newton interpolation inertia weight, and followers' update model strategies were also used in the improved SSA. A Gaussian Mixture Model (GMM) combined with the SSA [14] was proposed for data clustering in big data processing. Wang et al. [15] used symbiosis theory and the Gaussian distribution to improve the exploitation capability of the SSA, which was applied to optimize multiple parameters in fuel cell optimization systems. However, the performance of the basic SSA can be further improved by knowledge-enhanced strategies, in which a novel Gaussian mutation, dynamic adjustment of hyperparameters, and mirror learning can be investigated for specific tasks.

For classification tasks, SIAs are usually utilized to optimize the hyperparameters of the classifier [16,17,18]. An improved pelican optimization algorithm (IPOA) [16] was designed to optimize a combined model of variational mode decomposition (VMD) and long short-term memory (LSTM), which is used to forecast ultra-short-term wind speed. Li et al. [17] proposed an improved Parrot Optimizer (IPO) with an aerial search strategy to train the multilayer perceptron (MLP), which enhanced the exploration and optimization ability of the basic Parrot Optimizer. Its performance was evaluated using CEC benchmark functions and an oral English teaching quality classification dataset. Song et al. [18] proposed a modified pelican optimization algorithm with multiple strategies and used it for a high-dimensional feature selection task with K-nearest neighbor (KNN) and support vector machine (SVM) classifiers. Panneerselvam et al. [19] proposed a dynamic salp swarm algorithm combined with a weighted extreme learning machine to handle class imbalance in classification datasets with a higher accuracy. Wang et al. [20] proposed a hierarchical and distributed strategy to enhance the Gravitational Search Algorithm, which is inspired by the structure of Multi-Layer Perceptrons (MLPs), resulting in significantly improved performance compared to existing methods. Yang et al. [21] proposed a self-learning salp swarm algorithm (SLSSA) to train the MLP classifier using UCI datasets; its performance was also verified on CEC2014 benchmark functions, at the cost of a longer computational time than the basic SSA.

In the field of smart agriculture, applying SIAs to classifiers can improve the efficiency of plant disease diagnosis [22] and seed classification [23,24], and thus reduce economic costs. Pranshu et al. [25] applied the five most popular machine learning approaches to the rice varieties classification problem. Din et al. [26] used a pre-trained Deep Convolutional Neural Network, named RiceNet, to identify rice grain varieties, which achieved better prediction accuracy than traditional machine learning (ML) methods. Iqbal et al. [27] used three lightweight networks to perform the rice varieties classification task, which can be extended to mobile devices. However, deep neural network methods need more computing resources than the combination of an ML classifier and an SIA.

To address the aforementioned challenges, this study proposes an enhanced-knowledge Salp Swarm Algorithm (EKSSA) to optimize the critical parameters of SVM in seed classification tasks. The proposed algorithm incorporates several strategic improvements to enhance its optimization performance. Specifically, adaptive adjustment mechanisms for two control parameters are introduced to balance the exploration and exploitation capabilities of the salp population. Furthermore, a novel position update strategy based on Gaussian walk theory is applied after the basic position update phase to enhance the global search ability of individual salps. Additionally, a dynamic mirror learning strategy is designed to prevent premature convergence to local optima by creating mirrored search regions, thereby improving local search efficiency. The effectiveness of the EKSSA is evaluated through experiments on thirty-two CEC benchmark functions and two practical seed classification datasets, demonstrating its performance in both optimization accuracy and classification tasks. In summary, the contributions of the designed EKSSA are as follows:

- To enhance the performance of the basic SSA, an enhanced-knowledge Salp Swarm Algorithm (EKSSA) is proposed, and its effectiveness is rigorously evaluated through comparisons with other state-of-the-art optimization algorithms.

- The exploration and exploitation of the followers are balanced using different adjustment strategies, based on the exponential function, for two control parameters.

- A novel Gaussian mutation strategy and a dynamic mirror learning strategy are introduced to enhance global search capability and prevent EKSSA from becoming trapped in local optima.

- Thirty-two CEC benchmark functions are applied to evaluate the performance of the designed EKSSA, and the combination of EKSSA and the SVM algorithm, named EKSSA-SVM, is evaluated on two seed classification datasets.

The remainder of this paper is structured as follows: Section 2 presents the basic SSA. Section 3 introduces the mathematical model of the proposed EKSSA. Section 4 presents and discusses the experimental results of comparative algorithms. In Section 5, the application of EKSSA for optimizing hyperparameters of SVM in seed classification is described. Finally, Section 6 concludes the study and suggests potential future research directions.

2. The Basic Salp Swarm Algorithm

The Salp Swarm Algorithm (SSA) is a metaheuristic optimization technique modeled after the swarming and foraging behavior of salps; its search is driven by a leader and a chain of followers. The mathematical model of this behavior corresponds to the optimization process of the SSA. There are two key stages in the basic SSA: the Population Initial Stage and the Position Update Stage.

2.1. Population Initial Stage

The optimization problem is assumed to have a D-dimensional search space, and the initial positions of the salp population are defined as:

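$$x_j^i = lb_j + r \cdot (ub_j - lb_j), \quad i = 1, 2, \ldots, N, \quad j = 1, 2, \ldots, D \tag{1}$$

where $x_j^i$ denotes the initial position of the $i$th salp in the $j$th dimension, $i = 1, 2, \ldots, N$, $j = 1, 2, \ldots, D$. $N$ is the number of initial solutions and $D$ is the dimension of the problem. $r$ represents a random value uniformly distributed in $[0, 1]$. $ub_j$ and $lb_j$ indicate the upper and lower boundary values of the search space, respectively.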

2.2. Position Update Stage

In the individual search process, the food source F for the salp chain is treated as the target, which is the optimal objective. In the search space, the position of the leader is updated by:

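$$x_j^1 = \begin{cases} F_j + c_1\left((ub_j - lb_j)\,c_2 + lb_j\right), & c_3 \ge 0.5 \\ F_j - c_1\left((ub_j - lb_j)\,c_2 + lb_j\right), & c_3 < 0.5 \end{cases} \tag{2}$$

where $x_j^1$ represents the position of the leader in the $j$th dimension. $F_j$ indicates the $j$th dimensional food source. $c_2$ and $c_3$ denote random values in $[0, 1]$, drawn uniformly as in [6].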

After the leader position is updated with respect to the food source, the balance between exploration and exploitation is controlled by the parameter $c_1$, which is defined as follows:

$$c_1 = 2 e^{-\left(\frac{4l}{T}\right)^2} \tag{3}$$

where $l$ denotes the current iteration and $T$ indicates the maximum number of iterations. Notably, the follower's position is updated by:

$$x_j^i = \frac{1}{2}\left(x_j^i + x_j^{i-1}\right) \tag{4}$$

where $x_j^i$ denotes the position of the $i$th follower in the $j$th dimension when $i \ge 2$.
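The two stages of the basic SSA can be sketched in a few lines of Python. This is a minimal illustration of the standard algorithm of [6], not the code used in this study: the function names `basic_ssa` and `sphere`, the single-leader chain, the 0.5 threshold for the leader's sign choice, the boundary clamping, and the immediate update of the food source are all assumptions made for the sketch.

```python
import math
import random


def sphere(x):
    """Sphere test function; global minimum 0 at the origin."""
    return sum(v * v for v in x)


def basic_ssa(objective, dim, lb, ub, n_salps=30, max_iter=200, seed=0):
    """Minimise `objective` over [lb, ub]^dim with a basic SSA sketch."""
    rng = random.Random(seed)
    # Population initial stage: uniform random positions inside the bounds.
    salps = [[lb + rng.random() * (ub - lb) for _ in range(dim)]
             for _ in range(n_salps)]
    fitness = [objective(s) for s in salps]
    best = min(range(n_salps), key=fitness.__getitem__)
    food, food_fit = salps[best][:], fitness[best]

    for l in range(1, max_iter + 1):
        # c1 decays from about 2 towards 0 as the iterations advance.
        c1 = 2.0 * math.exp(-((4.0 * l / max_iter) ** 2))
        for i in range(n_salps):
            if i == 0:
                # Leader: move around the food source.
                for j in range(dim):
                    step = c1 * ((ub - lb) * rng.random() + lb)
                    if rng.random() >= 0.5:
                        salps[i][j] = food[j] + step
                    else:
                        salps[i][j] = food[j] - step
            else:
                # Follower: midpoint between itself and the salp ahead of it.
                for j in range(dim):
                    salps[i][j] = 0.5 * (salps[i][j] + salps[i - 1][j])
            # Clamp to the search space, then evaluate.
            salps[i] = [min(max(v, lb), ub) for v in salps[i]]
            fit = objective(salps[i])
            if fit < food_fit:
                food, food_fit = salps[i][:], fit
    return food, food_fit


best_x, best_f = basic_ssa(sphere, dim=5, lb=-10.0, ub=10.0)
print(best_f)
```

Because the food source is only replaced by a strictly better position, the returned fitness can never be worse than the best individual of the initial population.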

3. The Proposed Enhanced Knowledge Salp Swarm Algorithm

In this study, to overcome the tendency of the SSA to fall into local optima, we use the improved method to optimize the hyperparameters of the SVM classifier for seed classification tasks. We propose an adjustment strategy for the control parameter to balance the optimization process of the follower position. In addition, the Gaussian mutation strategy and the mirror learning strategy are employed to enhance the overall performance of the proposed EKSSA. Moreover, the position update strategy of the follower is also novel. The improved strategies are introduced in detail below.

To improve the balance between exploration and exploitation of the followers, the adjustment parameter is calculated by:

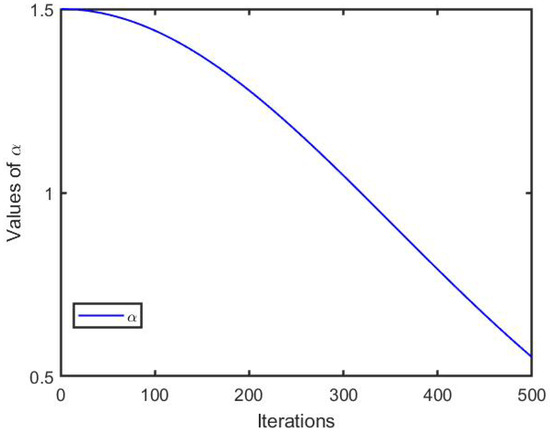

where the adjustment parameter is used to balance the follower position and l indicates the current iteration, counted up to the maximum number of iterations. The parameter curve is depicted in Figure 1; a nonlinear schedule is used because nonlinear strategies can effectively enhance the search ability of the EKSSA during the optimization process. In the early stage of the salp search, a larger value helps followers cover a better search space. In the later stage of the salp search, a reduced value helps followers search for the optimal value of the optimization problem.

Figure 1.

The curve of the adjustment parameter.

Then, the follower’s position from Equation (4) can be redefined as:

where the updated quantity is the position of the ith follower in the jth dimension for the followers of the chain, the random number lies in (0,1) according to the Gaussian law, and the jth dimensional food source is the best position found during the search process.

Notably, a new Gaussian mutation strategy is proposed to avoid falling into local optima, and its expression is:

where the standard deviation of the Gaussian variation is set to 0.5, and the remaining terms are the position of the ith follower in the jth dimension and the jth dimensional food source. The expression of the Gaussian walk is defined as:

In addition, the mirror learning strategy is employed to prevent the EKSSA from converging on local optima, thereby strengthening its global search performance. It is defined as:

where the random number lies in (0,1) according to the Gaussian law, k denotes the scaling factor of the mirror learning strategy, and the two bounds are the upper and lower boundary values of the individual, respectively. The adjustment strategy for k is defined as:

where the random number lies in (0,1) according to the Gaussian law and l is the current iteration, counted up to the maximum number of iterations.
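The general idea behind the two strategies can be illustrated with generic stand-ins. The sketch below is not the authors' formulation: `gaussian_walk` assumes a Gaussian perturbation (standard deviation 0.5) scaled by the distance to the food source, and `mirror_point` swaps in lens-imaging opposition-based learning, a related known technique that also uses a scaling factor k and the upper and lower bounds. The greedy acceptance rule and all function names are likewise assumptions.

```python
import random


def gaussian_walk(x, food, sigma=0.5, rng=random):
    """Perturb x with Gaussian noise scaled by its distance to the food source.

    Illustrative assumption only; not the expression used in the paper.
    """
    return [xj + rng.gauss(0.0, sigma) * (fj - xj) for xj, fj in zip(x, food)]


def mirror_point(x, lb, ub, k=1.0):
    """Lens-imaging style mirror of x inside [lb, ub] with scaling factor k.

    With k = 1 this reduces to the classic opposite point lb + ub - x.
    """
    mid = 0.5 * (lb + ub)
    return [mid + (mid - xj) / k for xj in x]


def clamp(x, lb, ub):
    """Pull every coordinate back into [lb, ub]."""
    return [min(max(v, lb), ub) for v in x]


def greedy_accept(objective, current, candidate):
    """Keep the candidate only if it improves the objective (minimisation)."""
    return candidate if objective(candidate) < objective(current) else current


def sphere(x):
    return sum(v * v for v in x)


# Hypothetical usage on one salp in a [-10, 10] search space.
rng = random.Random(0)
salp, food = [4.0, -3.0], [0.5, 0.2]
salp = greedy_accept(sphere, salp, clamp(gaussian_walk(salp, food, rng=rng), -10.0, 10.0))
salp = greedy_accept(sphere, salp, clamp(mirror_point(salp, -10.0, 10.0, k=2.0), -10.0, 10.0))
print(salp)
```

For k of at least 1, the mirrored point of any position inside the bounds stays inside the bounds, so the clamp only matters for the Gaussian step.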

3.1. Computational Complexity Analysis

The test platform can influence the optimization time of the same algorithm, so the computational complexity of the designed EKSSA is analyzed independently of the platform. Let N be the population size of the EKSSA, T the maximum number of iterations, and D the dimension. The computational complexity of the proposed EKSSA has four contributions: the initialization of the salp population; the position update in the basic global and local search phase; the Gaussian mutation and mirror learning strategies, which add to the update complexity; and the fitness sorting. Therefore, the overall computational complexity of the EKSSA can be expressed as:

For comparison, the computational complexity of the basic SSA is:
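As a rough worked sketch, the contributions listed above can be combined under two assumptions that are not taken from the analysis itself: each update strategy does a constant amount of work per dimension of every salp in every iteration, and the fitness sorting is a comparison sort costing $O(N \log N)$ per iteration. Under these assumptions:

$$
\begin{aligned}
O(\text{EKSSA}) &= \underbrace{O(ND)}_{\text{initialization}} + O\Big(T\big(\underbrace{ND}_{\text{basic update}} + \underbrace{ND}_{\text{mutation and mirror}} + \underbrace{N\log N}_{\text{sorting}}\big)\Big) = O\big(TN(D + \log N)\big), \\
O(\text{SSA}) &= O(ND) + O\big(T(ND + N\log N)\big) = O\big(TN(D + \log N)\big).
\end{aligned}
$$

In this sketch the two algorithms share the same asymptotic order, and the extra strategies only increase the constant factor of the $ND$ term.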

3.2. Flowchart and Pseudo-Code of the EKSSA

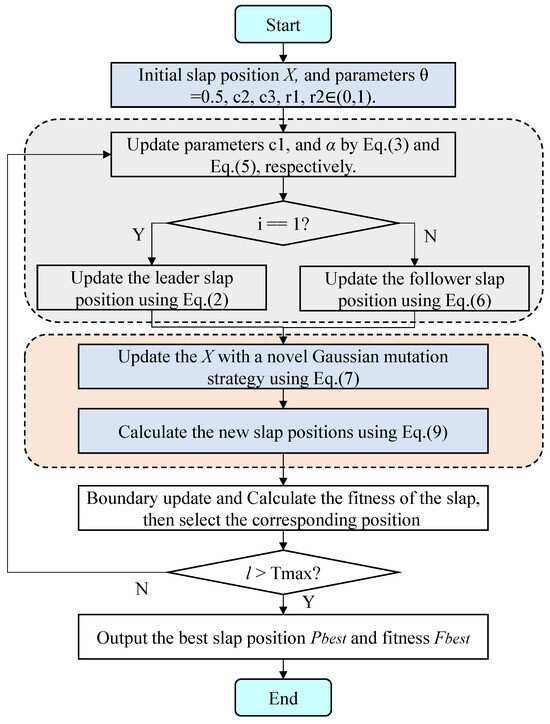

Figure 2 illustrates the flowchart of the EKSSA, detailing its optimization process, which can be summarized in four stages. Stage 1 is the initialization of the salp positions; Stage 2 includes the parameter update and the leader and follower position updates of the proposed EKSSA; Stage 3 involves the individual position update by the Gaussian mutation and mirror learning strategies; Stage 4 retains the best salps according to their fitness during the optimization process. After the iterations of the designed method, the best solution and its fitness value are returned.

Figure 2.

The flowchart of the designed EKSSA.

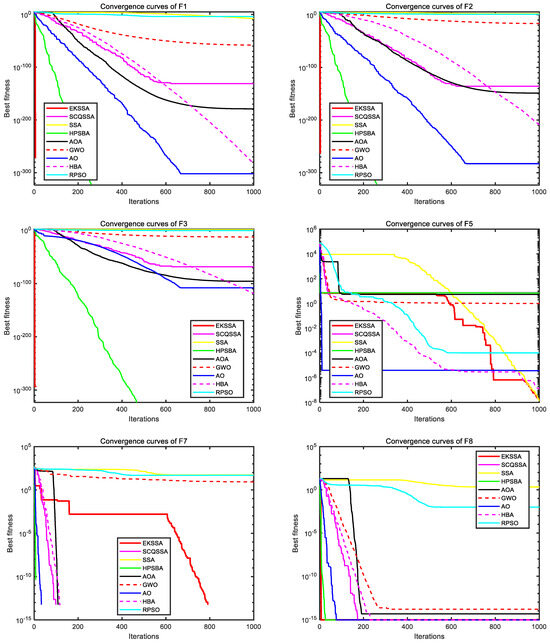

To understand the main architecture of the method, the pseudo-code of the designed EKSSA is displayed in Algorithm 1. In particular, input, output, and the main code of the EKSSA are listed.

Algorithm 1.

Pseudo-code of the EKSSA.

4. Results and Analysis

This section details the experimental setup, including the selected benchmark functions, algorithm hyperparameters, and the presentation of results from the CEC benchmarks and boxplot analyses.

4.1. Selected Benchmark Test Functions

A comprehensive evaluation of the proposed algorithm's performance was conducted on 32 functions from the CEC benchmark suite [28,29,30,31], where F1 to F6 are unimodal (U), F7 to F10 are multimodal (M), and F11 to F18 are fixed-dimensional (M) functions. The dimension of F1 to F10 was set to 30. Table 1 details these 18 functions. A further table lists eight functions (F19 to F26) from CEC2017 [30] and six functions (F27 to F32) from CEC2022 [31]. The experiments in this study were run in Matlab 2018a on a Windows 10 system with 16 GB of memory and an Intel(R) (Santa Clara, CA, USA) Core(TM) i5-10210U CPU @2.11 GHz.

Table 1.

Eighteen test functions for the performance evaluation.

4.2. Hyperparameter Settings

In our work, the performance and effectiveness of the designed EKSSA were assessed on a suite of 32 numerical optimization benchmark functions. The comparison algorithms are Randomized Particle Swarm Optimizer (RPSO) [32], Grey Wolf Optimizer (GWO) [5], Archimedes Optimization Algorithm (AOA) [33], Hybrid Particle Swarm Butterfly Algorithm (HPSBA) [34], Aquila Optimizer (AO) [7], Honey Badger Algorithm (HBA) [35], Salp Swarm Algorithm (SSA) [6], Sine–Cosine Quantum Salp Swarm Algorithm (SCQSSA) [36], and the proposed EKSSA. Table 2 presents the hyperparameter settings of the comparison approaches. The population size of all comparison approaches is set to 30 in this study, that is, N = 30. Each test function is executed independently 30 times, and the maximum number of iterations, which is set to 1000 in this study, serves as the termination condition.

Table 2.

Comparison of algorithm hyperparameter settings.
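The evaluation protocol of repeated independent runs followed by summary statistics can be sketched as below. This is only an illustration: `toy_optimizer` is a hypothetical random-search stand-in for any of the compared algorithms, the seeds are arbitrary, and the use of the sample standard deviation for Std is an assumption.

```python
import random
import statistics


def summarize_runs(values):
    """Return Best, Worst, Mean, and Std of final fitness values (minimisation)."""
    return {
        "Best": min(values),
        "Worst": max(values),
        "Mean": statistics.mean(values),
        "Std": statistics.stdev(values),  # sample standard deviation (assumed)
    }


def run_independently(optimizer, n_runs=30):
    """Call `optimizer(seed)` once per run, each with a distinct seed."""
    return [optimizer(seed) for seed in range(n_runs)]


def toy_optimizer(seed, n_samples=1000):
    """Hypothetical stand-in: random search on the 2-D sphere function."""
    rng = random.Random(seed)
    return min(sum(rng.uniform(-5, 5) ** 2 for _ in range(2))
               for _ in range(n_samples))


stats = summarize_runs(run_independently(toy_optimizer))
print(stats)
```

A smaller Std across the 30 runs means the final result depends less on the random seed.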

4.3. Analysis of CEC Benchmark Function Results

As shown in Table 3, the proposed EKSSA obtains the theoretical optimum on F1, F2, F3, F7, and F9, where its Best, Worst, Mean, and Std values are all the best. A smaller Std value indicates better stability of an algorithm across runs. For the fixed-dimensional functions F16, F17, and F18, the EKSSA achieves the theoretical optimal value with the smallest Std among the comparison methods. The RPSO performs better than the others on F13, F14, and F15, reaching the theoretical optimum with the best Std. In addition, the HPSBA obtains the same results as the EKSSA on F1, F2, F3, F7, and F9; however, its performance on the other benchmark functions needs improvement. For F7 and F8, HBA, AO, HPSBA, SCQSSA, and EKSSA have better results than the others. For F9, HBA, AO, AOA, HPSBA, SCQSSA, and EKSSA achieve the theoretical optimal value. Overall, Table 3 shows that the EKSSA holds the top rank against the eight other methods over the suite of 18 test functions, and the overall ranking is EKSSA > HBA > AO > AOA > GWO > RPSO > SSA > HPSBA > SCQSSA. Table 4 reports the WSR test p-values of the comparison algorithms against the EKSSA for F1 to F18.

Table 3.

Comparative evaluation of nine algorithms using 18 benchmark functions.

Table 4.

WSR test p-values of the eight comparison algorithms against the designed EKSSA.

Table 5 reports the results on 14 test functions drawn from the CEC2017 and CEC2022 benchmarks, including the Mean, Std, Time, and the p-value of the WSR test. For the Mean on F19, F20, F22, F23, F31, and F32, the proposed EKSSA obtains the best values compared to the other algorithms. RPSO obtains the best Mean on F26, F27, and F29. These results indicate that the EKSSA is effective on complex numerical optimization problems. This is supported by the Friedman test results presented in Table 6, which ranks the algorithms based on their performance on the 14 test functions of the CEC2017 and CEC2022 benchmark suites. The overall ranking is EKSSA > HBA > SSA > GWO > RPSO > AO > AOA > HPSBA > SCQSSA.

Table 5.

Results of the nine comparison methods on the CEC2017 and CEC2022 benchmark functions with WSR test.

Table 6.

Friedman test results of the nine comparison methods on the CEC2017 and CEC2022 benchmark functions.
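A Friedman-style ranking orders the algorithms by their mean rank across the test functions. The sketch below shows one way to compute such mean ranks; the function names and the small score table are hypothetical and do not reproduce the values of Table 5 or Table 6.

```python
def average_ranks(row):
    """Rank one row of scores (lower is better); ties share the average rank."""
    order = sorted(range(len(row)), key=row.__getitem__)
    ranks = [0.0] * len(row)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and row[order[end + 1]] == row[order[pos]]:
            end += 1
        # Average of the 1-based positions pos+1 .. end+1.
        shared = (pos + end) / 2.0 + 1.0
        for idx in order[pos:end + 1]:
            ranks[idx] = shared
        pos = end + 1
    return ranks


def friedman_mean_ranks(scores):
    """scores[f][a] is the mean error of algorithm a on function f."""
    per_function = [average_ranks(row) for row in scores]
    n_alg = len(scores[0])
    return [sum(r[a] for r in per_function) / len(scores) for a in range(n_alg)]


# Hypothetical mean errors of three algorithms on four functions.
names = ["A", "B", "C"]
scores = [
    [0.10, 0.30, 0.20],
    [1.50, 1.50, 2.00],
    [0.01, 0.05, 0.03],
    [4.00, 3.00, 5.00],
]
mean_ranks = friedman_mean_ranks(scores)
ranking = sorted(names, key=lambda n: mean_ranks[names.index(n)])
print(mean_ranks, ranking)
```

The algorithm with the smallest mean rank is placed first in the overall ranking.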

The empirical results in Table 3, Table 4, Table 5 and Table 6 indicate a modest increase in the optimization time of the EKSSA compared to the basic SSA. This observed difference aligns with the conclusions drawn from the theoretical computational complexity analysis. For the high-dimensional F1, the run time of the designed EKSSA is 1.6713E-01 s and that of the SSA is 1.6116E-01 s, so the EKSSA takes approximately 0.006 s longer. For the fixed-dimensional F16, the run times of the EKSSA and the SSA are 2.7603E-01 s and 2.7019E-01 s, respectively, which is again a difference of approximately 0.006 s. Although the computational cost is slightly higher, the results on the test functions show that the performance of the proposed EKSSA is significantly improved compared to the SSA.

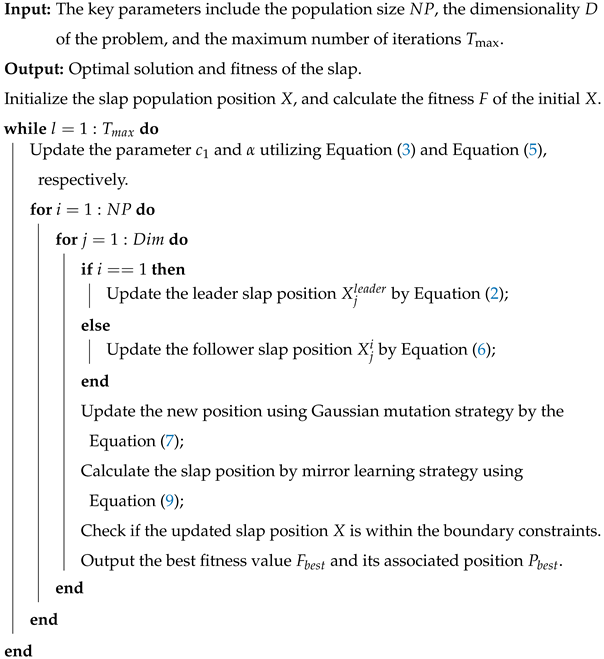

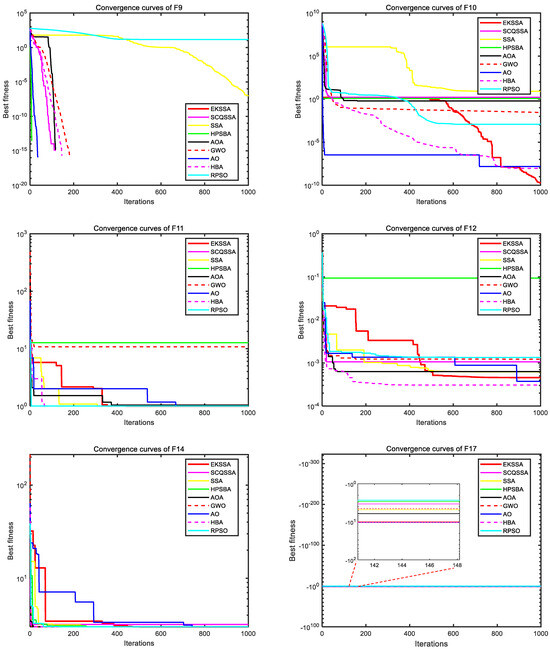

Figure 3 and Figure 4 depict the convergence curves, which can be used to analyze the convergence speed and accuracy of the comparison methods. In Figure 3, the EKSSA converges faster and to better values than the comparison methods on F1, F2, F3, and F8. In Figure 4, the convergence curve of the EKSSA reaches the best value on F10. For F5, F7, F10, and F12, the EKSSA curves have multiple inflection points, indicating a good ability to escape from local optima. For the other functions, the proposed EKSSA does not outperform all peers, indicating potential for further enhancement of its convergence properties in future work.

Figure 3.

Convergence curves of the comparison algorithms on F1 to F3, F5, F7, and F8.

Figure 4.

Convergence curves of the comparison algorithms on F9 to F12, F14, and F17.

4.4. Boxplot Results Analysis

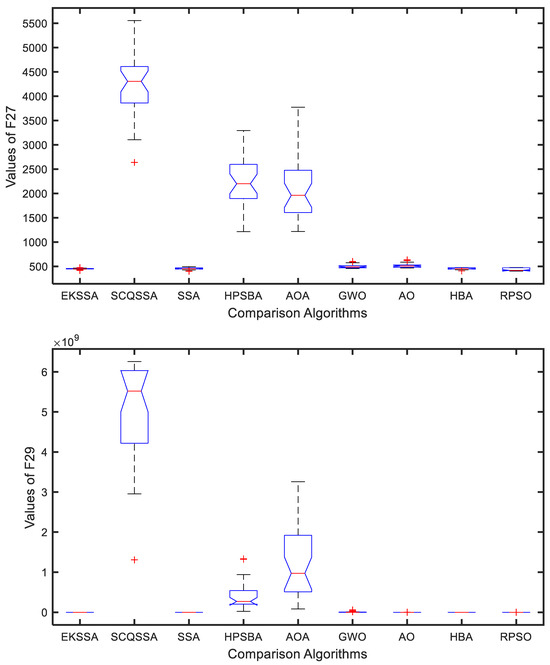

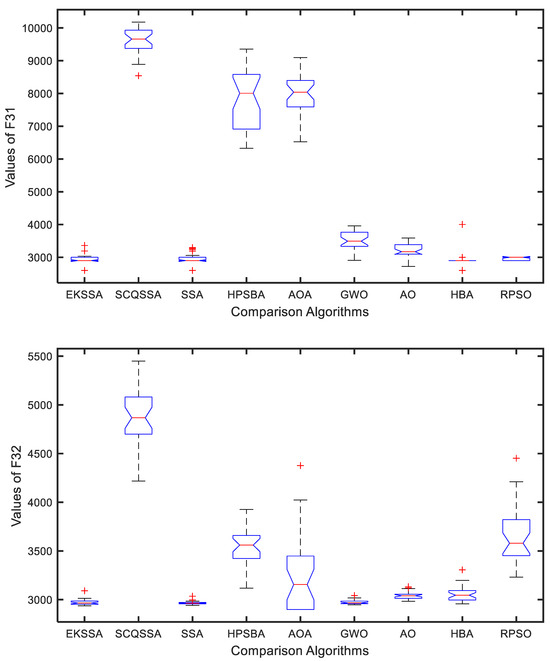

Boxplots can be used to assess the stability of the comparison approaches. Figure 5 and Figure 6 show the boxplot results of the nine comparison methods on F27, F29, F31, and F32. Notably, each algorithm was independently run 30 times on each test function. In Figure 5, the EKSSA is better than the others on F27, while EKSSA, SSA, and HBA have similar boxplots on F29. In Figure 6, the EKSSA shows a few outliers on F31 and F32. The boxplot results demonstrate that the proposed EKSSA has better stability on the numerical optimization problems.

Figure 5.

Boxplot for F27 and F29 of the comparison methods.

Figure 6.

Boxplot for F31 and F32 of the comparison methods.

4.5. Ablation Results Analysis

To evaluate the contribution of each proposed strategy, ablation studies were conducted, and the results are summarized in Table 7. In this table, EKSSA1 denotes the algorithm that incorporates only the adaptive adjustment strategies for the two control parameters. EKSSA2 corresponds to the version that uses solely the novel position update strategy via the Gaussian walk. EKSSA3 represents the configuration with only the dynamic mirror learning strategy. For comparison, the baseline SSA and the fully integrated EKSSA, which combines all three strategies, are also included. From Table 7, EKSSA1, EKSSA2, and EKSSA3 are all superior to the basic SSA, indicating that each proposed improvement strategy is effective. The EKSSA that combines all strategies has the best performance, which indicates that the strategies are complementary and that their fusion effectively enhances the optimization ability of the algorithm.

Table 7.

Ablation results of the proposed strategies of the EKSSA.

5. Results of EKSSA-SVM for Seed Classification

High-quality seeds can help farmers achieve better profits and safe food. The classification of seeds through intelligent technology not only improves efficiency but also reduces the cost of manual screening. Thus, it is necessary to study ML algorithms for identifying seed varieties. The efficiency and accuracy of existing methods still hold potential for further improvement. Notably, there is also a gap in seed classification regarding the optimization of SVM hyperparameters using SIAs. In this research, two seed classification datasets were used to verify the performance of the proposed EKSSA. The SVM [37] served as the baseline model for seed classification, and the EKSSA was employed to optimize its hyperparameters, specifically the penalty coefficient c and the kernel parameter g.

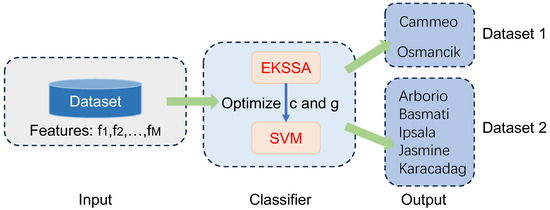

Figure 7 presents the diagram of the EKSSA-SVM for seed classification tasks using two open-source Rice Varieties datasets. Rice Varieties Dataset 1 has two categories, with 1630 Cammeo and 2180 Osmancik samples [38]. Seven features are used: Area, Diameter, Major-axis length, Minor-axis length, Eccentricity, Convex-area, and Extent. In addition, Rice Varieties Dataset 2 has five categories: Arborio, Basmati, Ipsala, Jasmine, and Karacadag [39]; 10,000 samples were used for each category in this study. Sixteen features are used: Area, Perimeter, Major-Axis, Minor-Axis, Eccentricity, Eqdiasq, Solidity, Convex-Area, Extent, Aspect-Ratio, Roundness, Compactness, Shapefactor1, Shapefactor2, Shapefactor3, and Shapefactor4. The accuracy metric (Acc/%) is defined as:

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN} \times 100\%$$

where $TP$ indicates the number of true positive seed samples and $TN$ denotes the number of true negative seed samples. $FP$ and $FN$ are the numbers of false positive and false negative seed samples, respectively.
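Accuracy is the share of correctly classified samples among all samples. A minimal sketch of the computation is given below; the function name and the confusion counts in the usage line are hypothetical and are not taken from Table 8 or Table 9.

```python
def accuracy_percent(tp, tn, fp, fn):
    """Accuracy in percent from the four confusion-matrix counts."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("at least one sample is required")
    return 100.0 * (tp + tn) / total


# Hypothetical counts: 90 of 100 test samples are classified correctly.
print(accuracy_percent(tp=40, tn=50, fp=6, fn=4))  # 90.0
```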

Figure 7.

The diagram of the EKSSA-SVM for seed classification.

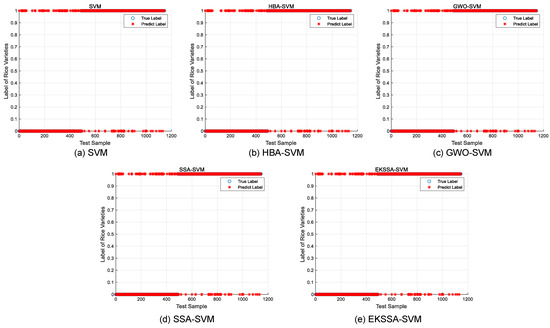

Notably, the features of the seed classification task are first normalized to the range [0, 1] before being input into the classifier. The optimization of the hyperparameters c and g significantly influences the predictive accuracy of the SVM model in the seed classification task. In these experiments, each dataset was partitioned into training and testing sets with a ratio of 7:3. The search intervals for c and g were set to [0.1, 5] and [0.1, 10], respectively. The population size was set to 10, and the maximum number of iterations was set to 15. The comparison methods are the four top-ranked of the nine algorithms, namely EKSSA, HBA, SSA, and GWO. The results for Rice Varieties Dataset 1 and Dataset 2 are listed in Table 8 and Table 9, respectively. In addition, the true and predicted labels of the five methods are depicted in Figure 8.
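The data preparation and the mapping from a salp position to the SVM hyperparameters can be sketched as follows. This is an illustrative outline only: the helper names, the shuffling seed, and the clamping of positions to the search intervals are assumptions, and the SVM training step itself is omitted.

```python
import random


def min_max_normalize(rows):
    """Scale every feature column to [0, 1]; constant columns map to 0."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    spans = [max(c) - min(c) for c in cols]
    return [[(v - lo) / sp if sp > 0 else 0.0
             for v, lo, sp in zip(row, lows, spans)] for row in rows]


def split_7_3(samples, seed=0):
    """Shuffle and split into 70% training and 30% testing samples."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    cut = round(0.7 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


C_RANGE = (0.1, 5.0)    # search interval for the penalty coefficient c
G_RANGE = (0.1, 10.0)   # search interval for the kernel parameter g


def decode_position(position):
    """Clamp a 2-D salp position to the (c, g) search intervals."""
    c = min(max(position[0], C_RANGE[0]), C_RANGE[1])
    g = min(max(position[1], G_RANGE[0]), G_RANGE[1])
    return c, g


features = [[1.0, 10.0], [3.0, 10.0], [2.0, 10.0]]
print(min_max_normalize(features))
print(decode_position([7.0, -1.0]))
```

In such a setup, each salp encodes one candidate pair (c, g), and its fitness would be the classification error of an SVM trained with that pair.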

Table 8.

Comparison results on the two-category Rice Varieties dataset (Dataset 1).

Table 9.

Comparison results on the five-category Rice Varieties dataset (Dataset 2).

Figure 8.

Prediction results of the comparison approaches for Rice Varieties Dataset 1.

For Rice Varieties Dataset 1 in Table 8, the test Acc (%) results of KNN, SVM, HBA-SVM, GWO-SVM, SSA-SVM, and EKSSA-SVM are 88.58, 90.5512, 90.6387, 90.8136, 90.7262, and 90.8136, respectively, with the c and g values of the SVM-based methods listed in Table 8. Although the classification accuracy of EKSSA-SVM and GWO-SVM is the same, their optimized c and g values are different.

For Rice Varieties Dataset 2 in Table 9, the basic SVM reaches an accuracy of 97.7867%. SSA-SVM, GWO-SVM, and HBA-SVM reach 98.0667%, 97.9600%, and 98.0667% Acc, respectively, each with its own optimized c and g values. The proposed EKSSA-SVM has 98.1133% Acc for the Rice Varieties classification task when c is set to 3.89383 and g is set to 6.03773. This is 0.3266, 0.0466, 0.1533, and 0.0466 percentage points higher than SVM, HBA-SVM, GWO-SVM, and SSA-SVM, respectively.
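The percentage-point gains of EKSSA-SVM follow directly from the accuracies quoted above for Dataset 2. The short check below recomputes them; the function name `gains_over` is introduced only for this illustration.

```python
def gains_over(baselines, proposed):
    """Percentage-point gain of the proposed accuracy over each baseline."""
    return {name: round(proposed - acc, 4) for name, acc in baselines.items()}


# Test accuracies (%) on Rice Varieties Dataset 2 as quoted in the text.
baseline_acc = {
    "SVM": 97.7867,
    "HBA-SVM": 98.0667,
    "GWO-SVM": 97.9600,
    "SSA-SVM": 98.0667,
}
gains = gains_over(baseline_acc, proposed=98.1133)
print(gains)
```

Since HBA-SVM and SSA-SVM share the same accuracy of 98.0667%, the gain over both is the same 0.0466 percentage points.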

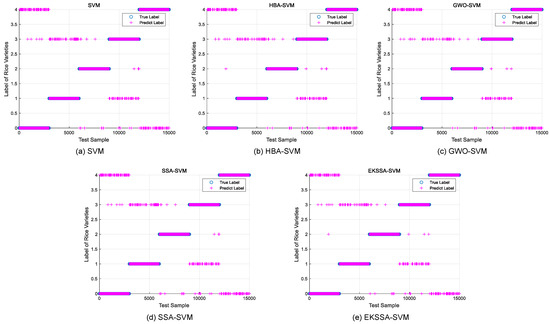

From Table 8 and Table 9, the values of the parameters c and g found by the proposed EKSSA-SVM differ between datasets. In addition, the true and predicted labels for the five-category Rice Varieties dataset are depicted in Figure 9 for SVM, HBA-SVM, GWO-SVM, SSA-SVM, and EKSSA-SVM. Except for Jasmine, there are obvious misclassified samples in the other rice varieties of Dataset 2. Thus, the predictions of the EKSSA-SVM could be further improved, for example by a quantum strategy.

Figure 9.

Prediction results of the comparison approaches for Rice Varieties Dataset 2.

6. Conclusions and Future Work

To address the tendency of the SSA to fall into local optima, the EKSSA was designed and used to fill a gap in seed classification by optimizing the hyperparameters of the SVM. Different adjustment strategies for two control parameters are used to balance the exploration and exploitation of the salps. Moreover, a novel position update strategy inspired by Gaussian walk theory improves the global search ability of individual salps after the basic position update stage. Notably, a dynamic mirror learning strategy is designed to mirror the search range of the optimization problem and strengthen the local search capability. The performance of the EKSSA is verified on thirty-two CEC benchmark functions, where it is compared with eight advanced algorithms: RPSO, GWO, AOA, HPSBA, AO, HBA, SSA, and SCQSSA. In addition, a combined EKSSA-SVM classifier is proposed for the seed classification problem with a higher accuracy; the EKSSA-SVM has 98.1133% Acc on the five-category Rice Varieties dataset when c is set to 3.89383 and g is set to 6.03773. In future work, chaotic population initialization and quantum strategies will be used to improve the performance of the EKSSA, which will be applied to the task of diagnosing plant leaf diseases [40].

Author Contributions

Conceptualization, Q.L. and Y.Z.; methodology, Q.L. and Y.Z.; software, Q.L.; validation, Q.L. and Y.Z.; formal analysis, Q.L. and Y.Z.; investigation, Q.L. and Y.Z.; resources, Y.Z.; data curation, Q.L. and Y.Z.; writing—original draft preparation, Q.L.; writing—review and editing, Q.L. and Y.Z.; visualization, Q.L. and Y.Z.; supervision, Y.Z.; project administration, Y.Z.; funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Guangdong Basic and Applied Basic Research Foundation, grant numbers 2023A1515140021 and 2022A1515110757, and the Guangdong Academy of Agricultural Sciences Talent Introduction Project for 2022, grant number R2022YJ-YB3023.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The Rice Varieties datasets can be found at https://www.muratkoklu.com/datasets/.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948. [Google Scholar]

- Chakraborty, A.; Kar, A.K. Swarm intelligence: A review of algorithms. In Nature-Inspired Computing and Optimization; Springer: Cham, Switzerland, 2017; pp. 475–494. [Google Scholar]

- Zhang, M.; Wen, G. Duck swarm algorithm: Theory, numerical optimization, and applications. Clust. Comput. 2024, 27, 6441–6469. [Google Scholar] [CrossRef]

- Zhang, K.; Yuan, F.; Jiang, Y.; Mao, Z.; Zuo, Z.; Peng, Y. A Particle Swarm Optimization-Guided Ivy Algorithm for Global Optimization Problems. Biomimetics 2025, 10, 342. [Google Scholar] [CrossRef]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61. [Google Scholar] [CrossRef]

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191. [Google Scholar] [CrossRef]

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-Qaness, M.A.; Gandomi, A.H. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250. [Google Scholar] [CrossRef]

- Abualigah, L.; Shehab, M.; Alshinwan, M.; Alabool, H. Salp swarm algorithm: A comprehensive survey. Neural Comput. Appl. 2020, 32, 11195–11215. [Google Scholar] [CrossRef]

- Wang, J.; Zhu, Z.; Zhang, F.; Liu, Y. An improved salp swarm algorithm for solving node coverage optimization problem in WSN. Peer-to-Peer Netw. Appl. 2024, 17, 1091–1102. [Google Scholar] [CrossRef]

- Zhou, H.; Shang, T.; Wang, Y.; Zuo, L. Salp Swarm Algorithm Optimized A* Algorithm and Improved B-Spline Interpolation in Path Planning. Appl. Sci. 2025, 15, 5583. [Google Scholar] [CrossRef]

- Khorashadizade, M.; Abbasi, E.; Fazeli, S.A.S. Improved salp swarm optimization algorithm based on a robust search strategy and a novel local search algorithm for feature selection problems. Chemom. Intell. Lab. Syst. 2025, 258, 105343.

- Zhang, H.; Qin, X.; Gao, X.; Zhang, S.; Tian, Y.; Zhang, W. Improved salp swarm algorithm based on Newton interpolation and cosine opposition-based learning for feature selection. Math. Comput. Simul. 2024, 219, 544–558.

- Wang, Y.; Cai, Z.; Guo, L.; Li, G.; Yu, Y.; Gao, S. A spherical evolution algorithm with two-stage search for global optimization and real-world problems. Inf. Sci. 2024, 665, 120424.

- Saravanakumar, R.; TamilSelvi, T.; Pandey, D.; Pandey, B.K.; Mahajan, D.A.; Lelisho, M.E. Big data processing using hybrid Gaussian mixture model with salp swarm algorithm. J. Big Data 2024, 11, 167.

- Wang, R.; Li, K.; Chen, P.; Tang, H. Multiple subpopulation Salp swarm algorithm with Symbiosis theory and Gaussian distribution for optimizing warm-up strategy of fuel cell power system. Appl. Energy 2025, 393, 126050.

- Guo, L.; Xu, C.; Ai, X.; Han, X.; Xue, F. A Combined Forecasting Model Based on a Modified Pelican Optimization Algorithm for Ultra-Short-Term Wind Speed. Sustainability 2025, 17, 2081.

- Li, F.; Dai, C.; Hussien, A.G.; Zheng, R. IPO: An Improved Parrot Optimizer for Global Optimization and Multilayer Perceptron Classification Problems. Biomimetics 2025, 10, 358.

- Song, H.M.; Wang, J.S.; Hou, J.N.; Wang, Y.C.; Song, Y.W.; Qi, Y.L. Multi-strategy fusion pelican optimization algorithm and logic operation ensemble of transfer functions for high-dimensional feature selection problems. Int. J. Mach. Learn. Cybern. 2025, 16, 4433–4470.

- Panneerselvam, R.; Balasubramaniam, S. Multi-Class Skin Cancer Classification using a hybrid dynamic salp swarm algorithm and weighted extreme learning machines with transfer learning. Acta Inform. Pragensia 2023, 12, 141–159.

- Wang, Y.; Gao, S.; Yu, Y.; Cai, Z.; Wang, Z. A gravitational search algorithm with hierarchy and distributed framework. Knowl.-Based Syst. 2021, 218, 106877.

- Yang, Z.; Jiang, Y.; Yeh, W.C. Self-learning salp swarm algorithm for global optimization and its application in multi-layer perceptron model training. Sci. Rep. 2024, 14, 27401.

- Khan, I.R.; Sangari, M.S.; Shukla, P.K.; Aleryani, A.; Alqahtani, O.; Alasiry, A.; Alouane, M.T.H. An automatic-segmentation-and hyper-parameter-optimization-based artificial rabbits algorithm for leaf disease classification. Biomimetics 2023, 8, 438.

- Koklu, M.; Ozkan, I.A. Multiclass classification of dry beans using computer vision and machine learning techniques. Comput. Electron. Agric. 2020, 174, 105507.

- Islam, M.M.; Himel, G.M.S.; Moazzam, M.G.; Uddin, M.S. Artificial Intelligence-based Rice Variety Classification: A State-of-the-Art Review and Future Directions. Smart Agric. Technol. 2025, 10, 100788.

- Saxena, P.; Priya, K.; Goel, S.; Aggarwal, P.K.; Sinha, A.; Jain, P. Rice varieties classification using machine learning algorithms. J. Pharm. Negat. Results 2022, 13, 3762–3772.

- Din, N.M.U.; Assad, A.; Dar, R.A.; Rasool, M.; Sabha, S.U.; Majeed, T.; Islam, Z.U.; Gulzar, W.; Yaseen, A. RiceNet: A deep convolutional neural network approach for classification of rice varieties. Expert Syst. Appl. 2024, 235, 121214.

- Iqbal, M.J.; Aasem, M.; Ahmad, I.; Alassafi, M.O.; Bakhsh, S.T.; Noreen, N.; Alhomoud, A. On application of lightweight models for rice variety classification and their potential in edge computing. Foods 2023, 12, 3993.

- Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Wu, G.; Mallipeddi, R.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2017 Competition on Constrained Real-Parameter Optimization; Technical Report; National University of Defense Technology: Changsha, China; Kyungpook National University: Daegu, Republic of Korea; Nanyang Technological University: Singapore, 2017.

- Bujok, P.; Kolenovsky, P. Eigen crossover in cooperative model of evolutionary algorithms applied to CEC 2022 single objective numerical optimisation. In Proceedings of the 2022 IEEE Congress on Evolutionary Computation (CEC), Padua, Italy, 18–23 July 2022; pp. 1–8.

- Liu, W.; Wang, Z.; Zeng, N.; Yuan, Y.; Alsaadi, F.E.; Liu, X. A novel randomised particle swarm optimizer. Int. J. Mach. Learn. Cybern. 2021, 12, 529–540.

- Hashim, F.A.; Hussain, K.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W. Archimedes optimization algorithm: A new metaheuristic algorithm for solving optimization problems. Appl. Intell. 2021, 51, 1531–1551.

- Zhang, M.; Wang, D.; Yang, M.; Tan, W.; Yang, J. HPSBA: A modified hybrid framework with convergence analysis for solving wireless sensor network coverage optimization problem. Axioms 2022, 11, 675.

- Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey Badger Algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 192, 84–110.

- Jia, F.; Luo, S.; Yin, G.; Ye, Y. A novel variant of the salp swarm algorithm for engineering optimization. J. Artif. Intell. Soft Comput. Res. 2023, 13, 131–149.

- Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 27.

- Cinar, I.; Koklu, M. Classification of rice varieties using artificial intelligence methods. Int. J. Intell. Syst. Appl. Eng. 2019, 7, 188–194.

- Koklu, M.; Cinar, I.; Taspinar, Y.S. Classification of rice varieties with deep learning methods. Comput. Electron. Agric. 2021, 187, 106285.

- Wang, A.; Song, Z.; Xie, Y.; Hu, J.; Zhang, L.; Zhu, Q. Detection of Rice Leaf SPAD and Blast Disease Using Integrated Aerial and Ground Multiscale Canopy Reflectance Spectroscopy. Agriculture 2024, 14, 1471.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).