Abstract

Steady-State Visual Evoked Potentials (SSVEPs) have emerged as an efficient means of interaction in brain–computer interfaces (BCIs), enabling bioinspired, efficient language output for individuals with aphasia. To address the underutilization of SSVEP frequency information and the redundant computation of existing transformer-based deep learning methods, this paper analyzes signals in both the time and frequency domains and proposes a stacked encoder–decoder (SED) network architecture based on an xLSTM model and a spatial attention mechanism, termed SED-xLSTM, which is the first to apply xLSTM to the SSVEP speller field. The model takes the low-channel spectrogram as input and employs the filter bank technique to make full use of harmonic information. By leveraging a gating mechanism, SED-xLSTM effectively extracts and fuses high-dimensional spatial-channel semantic features from SSVEP signals. Experimental results on three public datasets demonstrate the superior performance of SED-xLSTM in terms of classification accuracy and information transfer rate, particularly outperforming existing methods under cross-validation across various temporal scales.

1. Introduction

In the fields of neuroscience and cognitive psychology, exploring how the brain processes and responds to external stimuli is crucial. With the continuous progress of bionics technology, the working principles of the brain have become better understood, and applications such as spellers, robotic arms, and BCI medical recovery [1,2,3] have become a reality. As a special electroencephalogram (EEG) phenomenon, the SSVEP is a reaction of the visual cortex to periodic visual stimulation, which has the advantages of outstanding classification performance and strong anti-interference ability [4,5]. Visual stimuli at specific frequencies trigger electrical changes in the brain at the corresponding frequencies, which can be captured and analyzed by EEG devices, providing an efficient means of communication for non-invasive brain–computer interfaces. Setting a variety of visual flicker stimulation frequencies increases the number of SSVEP signal categories, thereby building a rich instruction set that enables individuals with aphasia to communicate with the external world via biomimetic spelling paradigms [6].

Conventional SSVEP signal classification methods, such as Canonical Correlation Analysis (CCA) [7], are limited by noise sensitivity and linear signal characteristics, which makes them less effective at capturing phase information. To address these issues, several improved algorithms have been proposed. FB-CCA [8] was the first to apply the filter bank technique to CCA, combining fundamental and harmonic frequencies to enhance SSVEP detection. The more representative methods include TRCA and its variants [9,10,11], which improved the signal-to-noise ratio by maximizing the reproducibility of task-related data and removing background EEG activity. To address the issue of redundant spatial filters in TRCA, TDCA [12] employed a discriminative model that maximizes inter-class distinctions and reduces intra-class distinctions for feature extraction. Alternatively, SS-CCA [13] and PRCA [14] improved performance by introducing a temporal delay to signals and by replicating periodic repeat components, respectively.

Recently, deep learning has driven significant innovation in BCIs by offering powerful nonlinear feature extraction, making it increasingly advantageous over conventional algorithms in solving complex classification tasks [15,16]. For time-domain data, EEGNet [17] and SSVEPNet [18] stand out as prominent models, both leveraging convolutional neural networks (CNNs) to recognize diverse stimulus signals. The filter bank technique can be incorporated into deep learning methods as well [19,20]. However, time-domain signals are susceptible to noise, and their periodic components are difficult to observe directly. To address this, researchers have explored frequency features using the Fast Fourier Transform (FFT) to analyze richer amplitude and phase information. The CCNN [21] demonstrated the effectiveness of combining complex spectral features with convolutional neural networks. Subsequently, PLFA and MS1D_CNN [22,23] utilized spatial attention and Squeeze-and-Excitation [24] modules, respectively, to enhance frequency features. To alleviate the problem of insufficient data, a 3DCNN [25] employed a deep transfer learning strategy in the frequency domain.

To reveal the complex topological relationships among EEG channels, graph neural networks have shown application prospects in the field of EEG due to their robust trustworthiness and ability to discover potential influences [26]. The first GCNN-based approach to EEG emotion recognition [27] adopted a graph structure to model signals and capture the correlations between different regions of the brain. On this basis, the DGCNN [28] utilized a dynamic graph convolution method to learn the adjacency matrix between channels and evaluate the connection topology within the brain network. Specifically for SSVEP classification, the DDGCNN [29] introduced hierarchical dynamic graph learning and dense linking, employing dynamic convolutional kernels and graph dynamic channel fusion techniques to reconstruct the DGCNN and reduce computational complexity, which further verified the feature contributions of different brain regions to decoding.

Driven by the development of large language models, transformer-based models have been extensively applied in signal processing for their powerful sequence modeling capability and have achieved excellent results [30,31,32,33]. However, the self-attention mechanism of the Transformer [34] reduces computational efficiency and wastes resources due to redundant computation. The emergence of xLSTM [35], another novel large language model architecture, not only overcomes the limitations of traditional LSTM [36] models in handling long sequences and parallel computation but also offers different insights for the field of signal processing.

In this paper, a neural network, SED-xLSTM, is proposed for speller stimulus classification of SSVEPs, integrating xLSTM and spatial attention [37] with Transformer patterns to construct encoders and decoders, which are widely adopted in the realm of image segmentation. The approach initially maps frequency-domain information into high-dimensional semantic representations containing spatial-frequency and correlation features via two distinct encoders. Subsequently, the xLSTM-based decoder reconstructs the combined features and removes redundancy to ultimately accomplish the classification. Additionally, the filter bank technique is deployed before entering the SED-xLSTM to fully utilize harmonic frequencies for further improving performance. Furthermore, we compare SED-xLSTM with other baseline methods and replace xLSTM with another mainstream sequence model as well. The experimental results illustrate that SED-xLSTM exhibits distinct advantages in average accuracy and average information transfer rate (ITR) under cross-validation across four different temporal scales.

2. Datasets and Processing

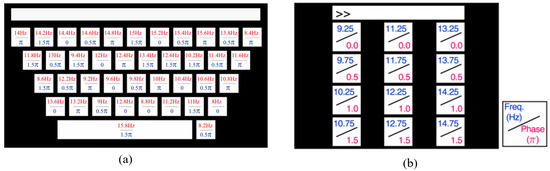

Datasets 1 and 2 are the Benchmark and BETA versions of the public SSVEP dataset provided by Tsinghua University [38]. Both datasets use a virtual keyboard paradigm covering 40 stimulus frequencies from 8.0 Hz to 15.8 Hz with a frequency interval of 0.2 Hz, as shown in Figure 1a. In addition, four different phases (0, 0.5π, π, and 1.5π) are employed to modulate these frequencies. The datasets provide references for developers to construct a decoding system that can rapidly and accurately map the collected SSVEP signals to the corresponding keyboard keys. Dataset 1 is collected from 35 participants with six test blocks for each participant, and Dataset 2 contains 70 participants with four test blocks each. Each subject is required to gaze at the designated stimulus target to generate a detectable SSVEP response, which is then collected by the recording device. The sampling rate is 250 Hz, and the EEG signals are collected using a 64-channel recorder. Each recorded trial consists of 0.5 s before the stimulation, the stimulation period itself, and 0.5 s after the stimulation. In Dataset 1, the stimulus duration is 5 s, whereas in Dataset 2, it is 2 s for the first 15 participants and 3 s for the others.

Figure 1.

(a) The stimulus paradigm of dataset 1 and 2; (b) The stimulus paradigm of dataset 3. The numbers on the keyboard correspond to the flickering frequency of the display light source during the evoked experiment.

Dataset 3 is the publicly available dataset from the University of California, San Diego (UCSD) [39]. It is derived from 10 healthy subjects and contains 12 frequency stimuli ranging from 9.25 Hz to 14.75 Hz with an interval of 0.50 Hz and four phases of 0, 0.5π, π, and 1.5π, as shown in Figure 1b. Each participant performs experiments with the 12 stimulus frequencies at a 256 Hz sampling rate. Every experiment consists of 15 separate tests, and each test contains 1114 sampling points.

For Datasets 1 and 2, the experiment selects all subjects in Dataset 1 and the last 55 subjects in Dataset 2 [22] and chooses 16 of the 40 stimuli from all test blocks of each subject. In addition to the eight typical stimulus frequencies [22,23], we select eight extra stimulus types that are spaced as far as possible from these typical stimuli, so that the stimulation samples remain discriminable as the number of classes increases. The frequencies of the 16 stimuli form two series, from 8.2 Hz to 15.2 Hz and from 8.6 Hz to 15.6 Hz, each with an interval of 1 Hz. In addition, we choose a total of nine electrodes (O1, O2, PO3, Oz, PO4, PO5, PO6, POZ, and PZ) [40] and cut out the 0.5 s before and after the stimulation in order to keep the continuity of the stimulus signal. Hence, the retained signal lengths in the two datasets are 5 s and 3 s, respectively. For Dataset 3, since the stimulation starts from the 39th sampling point (after 0.15 s), our experiment selects all 12 stimulus frequencies and the 4 s stimulus signals corresponding to 0.15 s–4.15 s from eight electrodes (O1, O2, PO3, Oz, PO4, PO7, PO8, and POZ) [21]. The signal clipping process corresponds to Figure 2b.
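The clipping step can be illustrated with a minimal NumPy sketch. The function name `trim_epoch`, the `(channels, samples)` layout, and the zero-filled placeholder arrays are assumptions made for illustration; only the sampling rates, epoch lengths, and time offsets come from the text.

```python
import numpy as np

def trim_epoch(epoch: np.ndarray, fs: float, start_s: float, keep_s: float) -> np.ndarray:
    """Keep `keep_s` seconds of a (channels, samples) epoch, starting `start_s` seconds in."""
    start = int(round(start_s * fs))
    stop = start + int(round(keep_s * fs))
    if stop > epoch.shape[-1]:
        raise ValueError("requested window exceeds the epoch length")
    return epoch[..., start:stop]

# Dataset 1 style epoch: 0.5 s pre + 5 s stimulation + 0.5 s post at 250 Hz, 9 electrodes.
benchmark_epoch = np.zeros((9, 1500))
benchmark_stim = trim_epoch(benchmark_epoch, fs=250, start_s=0.5, keep_s=5.0)

# Dataset 3 style epoch: 1114 samples at 256 Hz, stimulation from about 0.15 s, 8 electrodes.
# round(0.15 * 256) = 38, i.e. the 39th sample when counting from one.
ucsd_epoch = np.zeros((8, 1114))
ucsd_stim = trim_epoch(ucsd_epoch, fs=256, start_s=0.15, keep_s=4.0)

print(benchmark_stim.shape, ucsd_stim.shape)
```

Under these assumptions the retained signals contain 1250 and 1024 samples per channel, matching the 5 s and 4 s lengths stated above.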

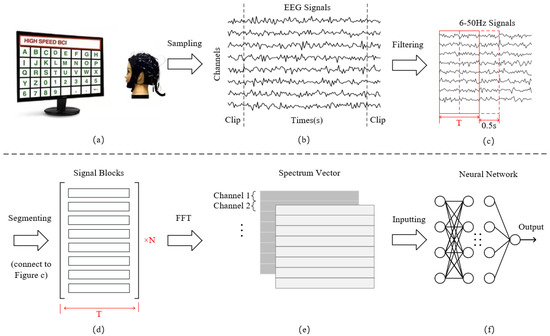

Figure 2.

(a) Sample collection; (b) Signal filtering and extraction of stimulation periods; (c,d) Signal segmentation; (e) Fast Fourier Transform; (f) Decoding classification.

After the above operation, the EEG signals of the three datasets are filtered using a bandpass FIR filter with a passband of 6 to 50 Hz, implemented with the EEGLAB toolbox in MATLAB. Since every subject has a different response onset time and duration for the same visual stimulus frequency, four different time window lengths of 0.5 s, 1 s, 1.5 s, and 2 s are adopted, as shown in Figure 2c,d. Signals of each length are segmented with a sliding step of 0.5 s until the end of the last segment coincides with the end of the original signal. The overlap between consecutive segments therefore equals the window length minus 0.5 s. Segmenting the data with different time lengths not only enhances system stability but also expands smaller datasets. The time-domain feature dimension obtained by segmentation is $C \times S$, where $C$ is the number of channels and $S$ is the number of sample points.
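As a rough illustration of the segmentation, the sketch below cuts a signal into overlapping windows with a 0.5 s step. The function name `sliding_segments` and the random placeholder signal are assumptions; the window lengths, step, and sampling rate follow the text.

```python
import numpy as np

def sliding_segments(signal: np.ndarray, fs: float, win_s: float, step_s: float = 0.5) -> np.ndarray:
    """Cut a (channels, samples) signal into overlapping windows.

    Returns an array of shape (n_segments, channels, win_samples).
    """
    win = int(round(win_s * fs))
    step = int(round(step_s * fs))
    n_total = signal.shape[-1]
    if win > n_total:
        raise ValueError("window is longer than the signal")
    starts = range(0, n_total - win + 1, step)
    return np.stack([signal[:, s:s + win] for s in starts])

fs = 250
stim = np.random.default_rng(0).standard_normal((9, 5 * fs))  # placeholder: 5 s, 9 channels
for win_s in (0.5, 1.0, 1.5, 2.0):
    segs = sliding_segments(stim, fs, win_s)
    print(win_s, segs.shape)
```

For a 5 s signal at 250 Hz this yields 10, 9, 8, and 7 segments for the four window lengths, and the last segment always ends at the final sample.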

Subsequently, we carry out a 512-point FFT for each channel of each sample. When the input signal is real-valued, the FFT result exhibits conjugate symmetry, which implies that the second half of the spectrum is a mirror image of the first half. Therefore, we can obtain the real and imaginary parts of the spectrum by employing only half of the FFT result, specifically 256 points. The conversion expression is defined as

$$
X = \mathrm{FFT}(x) = \mathrm{Re}(X) + i\,\mathrm{Im}(X),
$$

where $x$ represents the time-domain signal, $i$ is the imaginary unit, and $\mathrm{Re}(X)$ and $\mathrm{Im}(X)$ represent the real and imaginary parts of the complex spectrum, respectively. The amplitude is obtained as the modulus of the complex number, computed from the real and imaginary parts, while the phase is determined by the arctangent of the ratio of the imaginary part to the real part. The two vectors are spliced into a 3D frequency-domain feature matrix along the channel and frequency-point dimensions. The resulting dimension of the input data is $C \times F \times 2$, where the value of $F$ is 256 and 2 represents the two dimensions of the real and the imaginary part, as illustrated in Figure 2e,f.
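The frequency-domain conversion can be sketched with NumPy as follows. The function names and the placement of the real and imaginary parts on the last axis are assumptions for illustration; the 512-point FFT and the 256 retained bins follow the text.

```python
import numpy as np

def complex_spectrum_features(segment: np.ndarray, n_fft: int = 512) -> np.ndarray:
    """Map a (channels, samples) segment to a (channels, n_fft // 2, 2) array.

    The last axis holds the real and imaginary parts of the first n_fft // 2 bins.
    Segments shorter than n_fft are zero-padded by np.fft.fft.
    """
    spectrum = np.fft.fft(segment, n=n_fft, axis=-1)[:, : n_fft // 2]
    return np.stack([spectrum.real, spectrum.imag], axis=-1)

def amplitude_phase(features: np.ndarray):
    """Recover amplitude (modulus) and phase (arctangent) from real/imaginary parts."""
    re, im = features[..., 0], features[..., 1]
    return np.hypot(re, im), np.arctan2(im, re)

fs = 250
t = np.arange(fs) / fs                                  # one 1 s window
segment = np.tile(np.sin(2 * np.pi * 10.0 * t), (9, 1))  # synthetic 10 Hz response, 9 channels
features = complex_spectrum_features(segment)
amplitude, phase = amplitude_phase(features)
peak_hz = np.argmax(amplitude[0]) * fs / 512
print(features.shape, peak_hz)
```

With a 250 Hz sampling rate and 512 FFT points, adjacent bins are about 0.49 Hz apart, so the amplitude peak of the synthetic 10 Hz signal lands within half a hertz of the stimulus frequency.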

3. Preliminaries

xLSTM [35] is an extension of LSTM (Long Short-Term Memory), which introduces a new gating mechanism and memory structure to enhance the performance of LSTM in natural language processing. In the biological nervous system, neurons control the flow of information by regulating the strength of synapses and the efficiency of signal transmission. xLSTM inherits the brain-like memory pattern of LSTM, simulating the selective memory and forgetting of the brain through gate structure and cell state, thereby realizing flexible management of long and short-term memory. Owing to the favorable scalability, xLSTM has been gradually applied in the fields of image [41,42,43] and signal processing [44,45].

xLSTM is composed of stacked sLSTM [35] and mLSTM [35] blocks, which are variants of LSTM. sLSTM adds a scalar update mechanism on the basis of LSTM, employing exponential gating and a normalization technique to optimize the accuracy and stability of the model. Meanwhile, mLSTM extends the memory cell from a scalar $c \in \mathbb{R}$ to a matrix $C \in \mathbb{R}^{d \times d}$, enhancing the memory ability of the model and enabling it to perform parallel computing on data. Since mLSTM has no interactions between hidden states across successive timesteps, it can be fully parallelized on modern hardware to achieve fast computation. In this paper, the mLSTM is utilized as the basic module, which, following [35], is defined as

$$
\begin{aligned}
C_t &= f_t\,C_{t-1} + i_t\,v_t k_t^{\top}, \\
n_t &= f_t\,n_{t-1} + i_t\,k_t, \\
h_t &= o_t \odot \frac{C_t q_t}{\max\left\{\left|n_t^{\top} q_t\right|,\ 1\right\}},
\end{aligned}
$$

where $C_t$ denotes the cell state, $n_t$ is the normalizer state, and $h_t$ represents the hidden state. Additionally, mLSTM stores a pair of vectors, the key $k_t$ and the value $v_t$, to achieve higher separability according to the covariance update rule [46], and retrieves them with the query $q_t$. The forget gate $f_t$ controls the attenuation rate, the input gate $i_t$ regulates the learning rate, while the output gate $o_t$ scales the retrieval vector. Moreover, mLSTM invokes an additional state $m_t$ to stabilize the gates, avoiding overflow caused by exponential activation functions:

$$
\begin{aligned}
m_t &= \max\left(\log f_t + m_{t-1},\ \log i_t\right), \\
i_t' &= \exp\left(\log i_t - m_t\right), \\
f_t' &= \exp\left(\log f_t + m_{t-1} - m_t\right).
\end{aligned}
$$

During the process of state update, xLSTM retains and extends the dual states and multiple-gate structure of LSTM, providing a stable structural foundation for long-term memory retention and fine-grained control of reading and writing. In contrast, the GRU simplifies the gate structure and compresses semantic information into a single vector, sacrificing some expressive capacity for greater conciseness and efficiency. Because EEG signals contain multi-scale components, such as transient events and artifacts, the GRU is more likely to confuse long-term features in the presence of strong noise. The Transformer model, despite its proficiency in capturing global dependencies and its capability for parallel computation, is prone to overlooking the local structures of EEG frequency-domain features and lacks sensitivity to fine-grained information.
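To make the mLSTM state update concrete, the following is a minimal single-head NumPy sketch of one recurrent step in the stabilized form described in the xLSTM paper [35]: a matrix memory $C_t$, a normalizer $n_t$, and a stabilizer $m_t$. It is not the implementation used in SED-xLSTM. The parameter names, the random initialization, and the choice of a sigmoid forget gate (the xLSTM paper also allows an exponential one) are assumptions for illustration.

```python
import numpy as np

def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    return -np.logaddexp(0.0, -z)

def init_params(d_in, d, rng):
    """Hypothetical random parameters for one mLSTM head."""
    s = 1.0 / np.sqrt(d_in)
    p = {name: rng.standard_normal((d, d_in)) * s for name in ("Wq", "Wk", "Wv", "Wo")}
    p.update({name: np.zeros(d) for name in ("bq", "bk", "bv", "bo")})
    p.update({"wi": rng.standard_normal(d_in) * s, "wf": rng.standard_normal(d_in) * s,
              "bi": 0.0, "bf": 0.0})
    return p

def mlstm_step(x, state, params):
    """One stabilized mLSTM step. state = (C, n, m): C is (d, d), n is (d,), m is a scalar."""
    C, n, m = state
    d = C.shape[0]
    q = params["Wq"] @ x + params["bq"]
    k = (params["Wk"] @ x) / np.sqrt(d) + params["bk"]
    v = params["Wv"] @ x + params["bv"]
    i_tilde = params["wi"] @ x + params["bi"]                 # log of the exponential input gate
    log_f = log_sigmoid(params["wf"] @ x + params["bf"])      # log of the sigmoid forget gate
    o = np.exp(log_sigmoid(params["Wo"] @ x + params["bo"]))  # sigmoid output gate

    m_new = max(log_f + m, i_tilde)          # stabilizer state
    i_gate = np.exp(i_tilde - m_new)         # stabilized input gate, at most 1
    f_gate = np.exp(log_f + m - m_new)       # stabilized forget gate, at most 1

    C_new = f_gate * C + i_gate * np.outer(v, k)   # covariance-style memory update
    n_new = f_gate * n + i_gate * k
    h_tilde = C_new @ q / max(abs(n_new @ q), 1.0)
    return o * h_tilde, (C_new, n_new, m_new)

rng = np.random.default_rng(0)
d_in, d = 16, 8
params = init_params(d_in, d, rng)
state = (np.zeros((d, d)), np.zeros(d), 0.0)
for x in 50.0 * rng.standard_normal((12, d_in)):   # deliberately large inputs
    h, state = mlstm_step(x, state, params)
print(h.shape, bool(np.isfinite(h).all()))
```

Because the stabilizer is subtracted inside both exponentials, neither gate can exceed one, which is why the deliberately large inputs in the example do not overflow.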

4. SED-xLSTM

4.1. Overview

The SED-xLSTM aims to classify SSVEP speller instructions based on the extended LSTM, and it is roughly composed of five main components: the spatial attention encoder, the xLSTM-based encoder EM-block and decoder DM-block, the feature recalibration, and the output layer. The spatial attention mechanism is derived from the CBAM module [37], which is commonly used in image processing to enhance the features of key regions. In this model, the encoders and the decoder all apply the stacked structure according to Transformer architecture to learn the spectrogram semantic information of the signal in a hierarchical manner, enabling the model to progressively deepen the understanding of the input content. Considering that the feature fusion process leads to the generation of redundancy, we optimize and calibrate the fused features adopting the feature recalibration technique [24] to adaptively adjust the weights before they enter the decoder. Furthermore, SED-xLSTM is trained and calibrated in a data-driven manner, and the recognition of speller commands is conducted in an offline experiment.

From the perspective of EEG electrode channels, SSVEP signals are spatially correlated across channels, so neuronal activities in certain regions of the scalp may respond synchronously while the visual stimulus is evoked. Therefore, the model integrates spectral information from multiple channels into patch blocks within both branches for holistic processing, thereby capturing the synergy among local channels. In addition, the patch-based processing not only preserves the discrete attribute of the data but also prevents the model from overly relying on specific channels. Consequently, the xLSTM is leveraged to learn the cooperative dependencies of these multi-channel features, which contain the harmonic information as well.

4.2. Detailed Structure

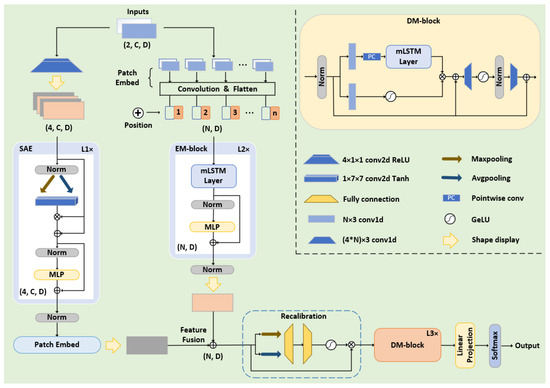

The pipeline and parameters of the model are illustrated in Figure 3. As mentioned in Section 2, the original input dimension of the model is $C \times F \times 2$. Before entering the EM-block, we utilize a convolutional neural network to divide the spectral features into several fixed-size patches, as in ViT [47], which are flattened and mapped to $\mathbb{R}^{N \times d_m}$. Here, $N$ represents the number of patches, while $d_m$ corresponds to the embedding dimension, which is consistent with $F$. Subsequently, we incorporate trainable positional encoding into each patch to learn the sequential dependencies and heighten the sensitivity of the model to positional information. The EM-block consists of two mLSTM layers and multilayer perceptron layers in series, with skip connections implemented. In the mLSTM layers, the number and size of the parallel heads are set to 4 and 128, respectively. The height and width of each patch are 3 (UCSD: 2) and 64, respectively.
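The patch embedding step can be sketched as below. A strided convolution over non-overlapping patches is equivalent to a linear projection of each flattened patch, which is what the sketch uses. The `(C, F, 2)` input layout, the function name `patch_embed`, and the random weights are assumptions for illustration; the patch sizes and the embedding dimension of 256 follow the text.

```python
import numpy as np

def patch_embed(features, patch_h, patch_w, weight, pos):
    """features: (C, F, 2) -> tokens: (N, d_m), with N = (C // patch_h) * (F // patch_w).

    weight: (patch_h * patch_w * 2, d_m) linear projection of each flattened patch.
    pos:    (N, d_m) positional encoding (trainable in a real model).
    """
    C, F, parts = features.shape
    gh, gw = C // patch_h, F // patch_w
    x = features[: gh * patch_h, : gw * patch_w]
    x = x.reshape(gh, patch_h, gw, patch_w, parts)
    x = x.transpose(0, 2, 1, 3, 4).reshape(gh * gw, patch_h * patch_w * parts)
    return x @ weight + pos

rng = np.random.default_rng(0)
d_m, F, patch_w = 256, 256, 64
for name, (C, patch_h) in {"Benchmark/BETA": (9, 3), "UCSD": (8, 2)}.items():
    feats = rng.standard_normal((C, F, 2))
    n_patches = (C // patch_h) * (F // patch_w)
    weight = rng.standard_normal((patch_h * patch_w * 2, d_m)) * 0.02
    pos = np.zeros((n_patches, d_m))
    tokens = patch_embed(feats, patch_h, patch_w, weight, pos)
    print(name, tokens.shape)
```

Under these assumptions, nine electrodes with 3 × 64 patches give 12 tokens, and eight electrodes with 2 × 64 patches give 16 tokens.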

Figure 3.

Detailed parameters and architecture of SED-xLSTM.

The FFT can reveal frequency components and periodic patterns that are often not apparent and are difficult to capture directly from the time-domain data. Frequency-domain features are more discriminative, enabling the spatial attention mechanism to focus more accurately on key regions and patterns, thereby achieving more effective feature enhancement. Since the response frequency of the brain to stimuli is particularly prominent on the EEG channel, the data is propagated in the form of 3D features in the spatial attention encoder (SAE) to maintain spatial coherence. This module compresses the input content along the channel dimension via max pooling and average pooling, and subsequently maps the compression results to a spatial weight matrix through a convolutional network and the Tanh activation function. The matrix is element-wise multiplied with the input content to obtain the key feature information, which is fed into a multilayer perceptron, again with skip connections. After the feature enhancement by this encoder, the data is ultimately mapped to $\mathbb{R}^{N \times d_m}$ by an additional patch embedding layer for feature fusion with the output of the EM-block.
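The spatial attention operation described above can be approximated by the following NumPy sketch in the style of CBAM. The `(maps, electrodes, bins)` layout, the 7 × 7 kernel size, the bias-free single convolution, and the random kernel are assumptions for illustration; the pooling along the channel dimension and the Tanh activation follow the text.

```python
import numpy as np

def conv2d_same(x, kernel):
    """Naive 'same' 2D cross-correlation with zero padding (odd kernel sizes only).

    x: (in_ch, H, W), kernel: (in_ch, kh, kw) -> output: (H, W).
    """
    in_ch, kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(x, ((0, 0), (ph, ph), (pw, pw)))
    H, W = x.shape[1:]
    out = np.zeros((H, W))
    for i in range(kh):
        for j in range(kw):
            out += np.tensordot(kernel[:, i, j], padded[:, i:i + H, j:j + W], axes=1)
    return out

def spatial_attention(x, kernel):
    """x: (maps, H, W). Returns the reweighted input and the (H, W) weight map."""
    pooled = np.stack([x.max(axis=0), x.mean(axis=0)])  # compress along the channel dimension
    weights = np.tanh(conv2d_same(pooled, kernel))       # Tanh activation, as stated in the text
    return x * weights, weights

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 9, 256))            # e.g. two spectral maps, 9 electrodes, 256 bins
kernel = rng.standard_normal((2, 7, 7)) * 0.1   # hypothetical 7x7 kernel over the two pooled maps
out, weights = spatial_attention(x, kernel)
print(out.shape, weights.shape)
```

The weight map has one value per electrode and frequency bin, so the same spatial weighting is shared by all input maps.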

The feature recalibration module optimizes the fusion process by introducing a dynamic weighting mechanism that allocates weights based on the importance and relevance of features. The fused features are squeezed via max pooling and average pooling along the patch dimension and then pass through two fully connected layers and GELU activation for excitation. The patch weights obtained from the excitation operation are applied to the original fused feature map, thereby suppressing unimportant and redundant information.
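A squeeze-and-excitation style sketch of this recalibration over the patch dimension is given below. Several details are assumptions not stated in the text: the two pooled descriptors share the fully connected layers and are summed, the final gate is a sigmoid, the hidden width is 4, and GELU uses its tanh approximation.

```python
import numpy as np

def gelu(z):
    """Tanh approximation of the GELU activation."""
    return 0.5 * z * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (z + 0.044715 * z ** 3)))

def recalibrate(tokens, w1, b1, w2, b2):
    """tokens: (N, d_m). Returns the reweighted tokens and the per-patch weights (N,)."""
    def excite(descriptor):
        # Two fully connected layers with GELU in between.
        return gelu(descriptor @ w1 + b1) @ w2 + b2

    # Squeeze: one max-pooled and one average-pooled value per patch.
    logits = excite(tokens.max(axis=1)) + excite(tokens.mean(axis=1))
    weights = 1.0 / (1.0 + np.exp(-logits))   # assumed sigmoid gate
    return tokens * weights[:, None], weights

rng = np.random.default_rng(0)
n_patches, d_m, hidden = 12, 256, 4
tokens = rng.standard_normal((n_patches, d_m))
w1 = rng.standard_normal((n_patches, hidden)) * 0.1
w2 = rng.standard_normal((hidden, n_patches)) * 0.1
recalibrated, weights = recalibrate(tokens, w1, np.zeros(hidden), w2, np.zeros(n_patches))
print(recalibrated.shape, weights.round(2))
```

Each patch receives a single weight between 0 and 1 under these assumptions, so patches judged less informative are scaled down as a whole before entering the decoder.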

Within the DM-block, a gating mechanism-based network structure is implemented for xLSTM to control the storage and update of effective information, and two convolutional modules are integrated to enhance non-linear expressiveness. The kernel size and stride of the convolutional layers are respectively set to 3 and 1, and the parameters of xLSTM are identical to those in the EM-block. In addition to the primary modules, the model extensively incorporates layer normalization and dropout techniques to stabilize training and mitigate overfitting, and the final instruction classification task is accomplished by the output layer.

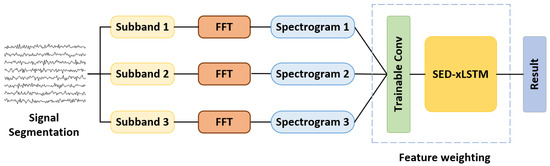

4.3. Model with Filter Bank

Apart from the fundamental frequency, SSVEP data contains multiple harmonic components that can serve as classification features as well. Based on this theory, the filter bank technique is leveraged on this model to further improve the classification performance, which is shown in Figure 4. To make the frequency index on the spectrogram express the feature information as richly as possible, we first filter the signal into n bands through bandpass filters and subsequently perform FFT on the n groups of signals to obtain the frequency-domain features. Compared with the method of obtaining sub-frequencies by zeroing out the unnecessary frequencies, this approach makes the interval frequencies of the adjacent two indexes on the spectrogram more refined. The amplitude and phase information are then concatenated along the channel dimension and a trainable convolutional layer is implemented to weight and reshape the spectrograms, resulting in an input shape of . In this experiment, the number of filter banks is set at 3, consistent with other research [25,40], to maintain a balance between model complexity and performance. For the filters, the lower cutoff frequencies are all set at 6 Hz, while the upper cutoff frequencies are 50 Hz, 64 Hz, and 80 Hz, respectively, covering the frequency range of 3 to 5 times the fundamental stimulation frequency. To mitigate the overfitting impact caused by the noise in the input features based on the filter bank, we adjust the double mLSTM layer to a single layer and increase the dropout rate to 0.4 in the decoder of SED-xLSTM.
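The filter-then-FFT order described above can be sketched as follows. The filter design is an assumption: the text does not state the filter type used for the sub-bands, so the sketch uses a Hamming-windowed sinc FIR filter with 101 taps built from NumPy alone. It also returns amplitude spectra only and omits the trainable convolutional layer that weights the branches. The three passbands follow the text.

```python
import numpy as np

BANDS = [(6.0, 50.0), (6.0, 64.0), (6.0, 80.0)]  # passbands stated in the text (Hz)

def bandpass_fir(low_hz, high_hz, fs, numtaps=101):
    """Hamming-windowed sinc band-pass filter (difference of two low-pass filters)."""
    n = np.arange(numtaps) - (numtaps - 1) / 2

    def lowpass(cut_hz):
        fc = cut_hz / fs                       # cutoff in cycles per sample
        return 2 * fc * np.sinc(2 * fc * n)

    return (lowpass(high_hz) - lowpass(low_hz)) * np.hamming(numtaps)

def filter_bank_spectra(segment, fs, bands=BANDS, n_fft=512):
    """segment: (C, T) with T >= 101. Returns (n_bands, C, n_fft // 2) amplitude spectra."""
    out = []
    for low, high in bands:
        h = bandpass_fir(low, high, fs)
        filtered = np.stack([np.convolve(ch, h, mode="same") for ch in segment])
        out.append(np.abs(np.fft.fft(filtered, n=n_fft, axis=-1)[:, : n_fft // 2]))
    return np.stack(out)

fs = 250
t = np.arange(2 * fs) / fs
# Synthetic channels: a 10 Hz fundamental and a 70 Hz component standing in for a high harmonic.
x = np.stack([np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 70 * t)])
spectra = filter_bank_spectra(x, fs)
print(spectra.shape, spectra[:, 1].max(axis=-1).round(1))
```

In this toy example the 70 Hz component survives only in the widest band, which illustrates how the wider sub-bands retain higher harmonics that the 6 to 50 Hz band removes.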

Figure 4.

The filter branches obtained by the filter bank are weighted by a trainable convolutional layer to express harmonic information.

4.4. Training Settings

The SED-xLSTM network is trained by default with the Adam optimizer and the cross-entropy loss function, using a batch size of 64. The initial learning rate is set to 0.001. Additionally, a learning rate decay mechanism and an early stopping mechanism are implemented during the training process. The learning rate is halved if the validation loss does not decrease for 5 consecutive epochs, and training is terminated if the loss does not decrease for more than 25 consecutive epochs. The network is trained on one Nvidia RTX 3060 GPU and two A10 GPUs to accelerate computation.
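The learning rate decay and early stopping rules can be replayed on a list of validation losses with plain Python, as in the sketch below. The function name and the exact counting convention (the counter for the learning rate restarts after each halving) are assumptions; deep learning frameworks may count patience slightly differently.

```python
def run_schedule(val_losses, lr=1e-3, lr_patience=5, stop_patience=25, factor=0.5):
    """Replay validation losses; return (final_lr, stopping_epoch or None)."""
    best = float("inf")
    since_best = 0   # epochs since the best validation loss (early stopping)
    since_lr = 0     # non-improving epochs since the last learning rate change
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, since_best, since_lr = loss, 0, 0
            continue
        since_best += 1
        since_lr += 1
        if since_lr >= lr_patience:      # 5 consecutive epochs without improvement
            lr *= factor
            since_lr = 0
        if since_best > stop_patience:   # more than 25 consecutive epochs without improvement
            return lr, epoch
    return lr, None

# A loss that improves once and then plateaus.
final_lr, stop_epoch = run_schedule([1.0] * 40)
print(final_lr, stop_epoch)
```

With a loss that never improves after the first epoch, the rate is halved five times before training stops at the 26th non-improving epoch.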

5. Performance Analysis

5.1. Baseline Methods

In this paper, we conduct a comparative evaluation of our approach against six other baseline methods for EEG signal classification: TRCA [9], CCNN [21], EEGformer [32], PLFA [22], EEGNet [17], and SSVEPNet [18]. TRCA is a traditional knowledge-driven method, while the others are data-driven methods based on deep learning.

TRCA enhances the signal-to-noise ratio of task-related EEG components significantly by maximizing the reproducibility of time-locked activity across task trials to identify the optimal weighting coefficients.

CCNN includes a convolution layer and a fully connected layer and takes the complex spectrum of the signal as input data, which confirms the potential advantage of spectrum representation in SSVEP decoding tasks.

EEGformer employs a one-dimensional convolutional neural network to automatically extract features from the EEG channels and integrates the Transformer to successively learn the temporal, regional, and synchronous characteristics of the EEG signals.

PLFA introduces the spatial attention mechanism to enhance the discriminative frequency information.

EEGNet integrates depthwise separable convolution and spatial pooling techniques to achieve automatic feature extraction of EEG signals.

SSVEPNet adopts a CNN-BiLSTM network architecture, combining spectrum normalization and label smoothing techniques to suppress overfitting.

5.2. Comparison Experiment

The performance of the methods is measured using accuracy and information transfer rate (ITR) as metrics under identical data preprocessing conditions. Each classifier is independently trained for a 16-class classification task on the first two datasets and a 12-class classification task on the last dataset, with five-fold cross-validation across various data lengths. The ITR is defined as

$$
\mathrm{ITR} = \frac{60}{T}\left[\log_2 N + P\log_2 P + (1-P)\log_2\!\left(\frac{1-P}{N-1}\right)\right],
$$

where $N$ denotes the number of classification targets, $P$ is the accuracy rate, and $T$ denotes the selection time of a single target in seconds. The ITR is expressed in bits/min.
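As a worked example, the ITR can be computed with the standard formula for SSVEP spellers, ITR = (60 / T) [log2 N + P log2 P + (1 - P) log2((1 - P) / (N - 1))]. The function name is an assumption, and so is the choice of T in the example: the text does not state whether the selection time includes a gaze-shifting interval, so the window length alone is used here.

```python
import math

def itr_bits_per_min(n_targets: int, accuracy: float, selection_time_s: float) -> float:
    """Information transfer rate in bits/min for N targets, accuracy P, and selection time T (s)."""
    if n_targets < 2:
        raise ValueError("at least two targets are required")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    p, n = accuracy, n_targets
    bits = math.log2(n)
    if p > 0.0:
        bits += p * math.log2(p)               # P * log2(P) tends to 0 as P -> 0
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return 60.0 / selection_time_s * bits

# 16 targets, 1 s selection time: perfect accuracy versus chance level.
print(itr_bits_per_min(16, 1.0, 1.0))
print(round(itr_bits_per_min(16, 1 / 16, 1.0), 6))
```

The two calls show the extremes of the metric: perfect 16-class accuracy with a 1 s selection time gives 60 × log2(16) bits/min, while chance-level accuracy carries no information.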

Table 1, Table 2 and Table 3 display the average accuracy of the various methods across the three datasets at different time window lengths. Due to the increase in time duration, the periodic characteristics contained in the signals are more obvious. As the time window length is set to 2 s, the proposed method achieves the highest accuracy performance on all datasets, with values of , , and , respectively. Compared with the second-place SSVEPNet on Datasets 1 and 3, the average accuracy is higher by and , while it outperforms the second-place EEGformer by on Dataset 2. After preprocessing with the filter bank (FB), the performance of the model is , , and , respectively. In all experimental scenarios, the proposed method attains the optimal classification accuracy and reveals remarkable distinctions between SED-xLSTM and the baseline methods and between FBSED-xLSTM and the other seven methods via paired sample t-tests with a degree of freedom of 3 (*: p < 0.05, **: p < 0.01, ***: p < 0.001).

Table 1.

Average accuracy of all methods on Benchmark dataset (%).

Table 2.

Average accuracy of all methods on BETA dataset (%).

Table 3.

Average accuracy of all methods on UCSD dataset (%).

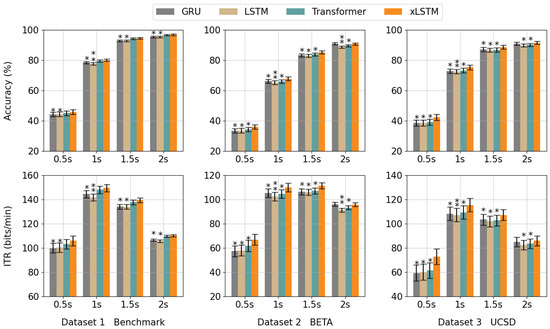

Figure 5 illustrates the ITR metric for various methods. SED-xLSTM achieves optimal performance when the time length is set to 1s, with values of bits/min and bits/min on Datasets 1 and 3, respectively. Similarly, it exceeds the second-place SSVEPNet by 5.54 bits/min and 7.14 bits/min. On the other dataset, the model performs comparable performance in the cases of 1 s and 1.5 s, with values of bits/min and bits/min. The second-place EEGformer is 9.06 bits/min and 5.36 bits/min lower, respectively. Additionally, this trend is observed in FBSED-xLSTM as well. Its ITR can reach a maximum of bits/min and bits/min on Datasets 1 and 3, while the values for 1 s and 1.5 s on Dataset 2 are bits/min and bits/min, respectively.

Figure 5.

Average ITR of all methods at four time lengths for three datasets (bits/min).

Among the existing sequence models, LSTM [36], GRUs [48], and Transformers [34] are widely recognized as methods with excellent sequence modeling capabilities. Nonetheless, due to the difficulty of parallelizing computations and the limitation in capability to handle long sequences in LSTM and GRUs, as well as the extensive computations for Transformers to gradually learn local information, we take advantage of xLSTM to circumvent the aforementioned limitations of conventional models in processing sequential data. Figure 6 exhibits a comparative evaluation of xLSTM against other sequence modules under the condition of identical numbers of layers or heads across various datasets. The results reveal that xLSTM maintains optimal performance in the majority of cases. It is worth noting that the Transformer module performs with no remarkable distinction compared to xLSTM on Dataset 1, achieving accuracy (ITR) values of ( bits/min), ( bits/min), ( bits/min), and ( bits/min) across the four data lengths. The GRU also shows no significant distinction to xLSTM at the data length of 2 s on Datasets 2 and 3, with accuracy (ITR) values of ( bits/min) and ( bits/min), respectively.

Figure 6.

The first row represents the average accuracy of various sequence modules on each dataset, while the second row corresponds to the average ITR. The paired sample t-test with a degree of freedom of 3 is conducted (*: p < 0.05, **: p < 0.01).

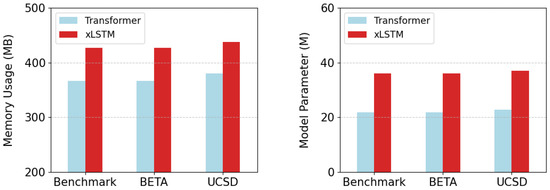

Furthermore, the model size and the computational memory usage are employed as two extra metrics for comparing xLSTM and the Transformer module, as illustrated in Figure 7. Because the number of FFT points is fixed, the two metrics remain constant across the data lengths of the same dataset. In Datasets 1 and 2, which share the same sample size, the number of parameters and the memory usage per batch of the xLSTM module are 36.1 M and 427.04 MB. For the Transformer module, the values are 21.8 M and 366.47 MB, which are 14.3 M and 60.57 MB lower than those of xLSTM, respectively. In Dataset 3, the model size and memory usage of xLSTM are 37.0 M and 437.97 MB, while the results for the Transformer are 22.7 M and 380.41 MB, which are 14.3 M and 57.56 MB lower, respectively. In the multi-head mechanism of mLSTM, each head independently performs complex matrix operations, and the embedding dimension of the model is mapped to a higher dimension through projection blocks for forward propagation. In contrast, the projection dimension in the Transformer is relatively lower, resulting in a smaller model size and memory footprint.

Figure 7.

The comparison of the two modules in terms of memory usage and model parameters.

To further validate the stability of the model on untrained subjects and comprehensively consider two performance metrics, accuracy and ITR, cross-subject generalization experiments are implemented in this paper at time lengths of 1s and 1.5s. For each dataset, the subjects are evenly divided into five groups. The data of each group is successively utilized for validation, while the remaining parts serve as the training set. Table 4 displays the generalization results of FBSED-xLSTM. In the case of 1s, the average accuracy and ITR of the five subsets on each dataset are , , and bits/min, bits/min, bits/min, respectively. As for 1.5 s, the values are , , and bits/min, bits/min, bits/min, respectively. In a prior training strategy, the data distribution of the training and validation sets is more consistent. However, the two datasets come from various subjects in cross-subject training. The model overly learns the signal features of the training subjects, and the substantial individual differences diminish the generalization capacity of the model in new subject data.
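The cross-subject protocol can be sketched as a subject-level five-fold split, in which no subject appears in both the training and the validation set. The function name `subject_folds` and the random shuffling with a fixed seed are assumptions; the text only states that subjects are evenly divided into five groups.

```python
import numpy as np

def subject_folds(n_subjects: int, n_folds: int = 5, seed: int = 0):
    """Yield (train_subjects, val_subjects) index arrays for a subject-level split."""
    rng = np.random.default_rng(seed)
    groups = np.array_split(rng.permutation(n_subjects), n_folds)
    for k, val in enumerate(groups):
        train = np.concatenate([g for j, g in enumerate(groups) if j != k])
        yield np.sort(train), np.sort(val)

# Subject counts used in the experiments: 35 (Dataset 1), 55 (Dataset 2), 10 (Dataset 3).
for n_subjects in (35, 55, 10):
    sizes = [len(val) for _, val in subject_folds(n_subjects)]
    print(n_subjects, sizes)
```

With 35, 55, and 10 subjects, each validation group contains 7, 11, and 2 subjects, respectively.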

Table 4.

For the fixed time length, the first row shows the accuracy (%) of each subset, while the second row denotes the ITR (bits/min).

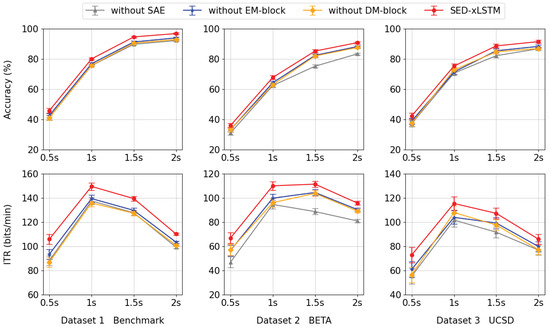

5.3. Ablation Study

To verify the validity of the SAE- and xLSTM-based modules proposed in this paper, we designed and conducted a series of ablation studies in which we progressively removed certain components from the model to observe their impact on model performance at four time lengths, as shown in Figure 8. In experiments involving the removal of the EM-block and DM-block, the accuracy and ITR metrics demonstrate a marked decline in comparison to the intact SED-xLSTM model, with the magnitude of reduction being roughly equivalent. This outcome indicates that the EM-block and DM-block have comparable impacts on the performance of the model. Furthermore, in the absence of the SAE module, the performance of the method declines the most across all datasets, which manifests the effectiveness of feature fusion from two encoders. This ablation study emphasizes the necessity of integrating the three sub-networks simultaneously and underscores the significance of combining their functionalities to optimize overall performance.

Figure 8.

From left to right, the first row presents the accuracy results for each component across various datasets, while the second row shows the corresponding ITR values in the same sequence.

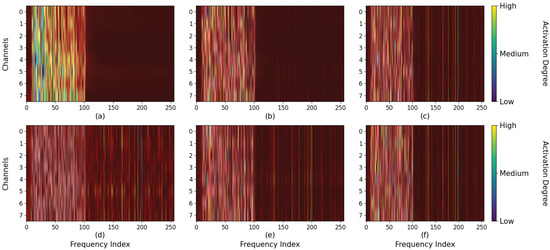

Moreover, class activation mapping is carried out in this experiment to explore how the spatial attention mechanism and filter bank enhance the discriminative ability of the model. Figure 9 shows the class activation results for the amplitude features of a fixed sample from Dataset 3 at a stimulation frequency of 9.25 Hz. When the number of spatial attention encoders is 0, the model mainly focuses on the low-frequency region, and the harmonic information at high frequencies is not fully utilized. In addition, frequencies close to the stimulus frequency also affect the judgment of the model. As the number of encoder layers increases, the harmonic information in the high-frequency region is gradually utilized, and the model reduces its focus on neighboring frequencies. For the filter bank, the input of the model consists of the spectral features of three specific filtering ranges. The harmonic features are amplified and weighted by class activation, which enhances the discrimination ability.

Figure 9.

The first row of subplots (a–c) shows the class activation patterns of the model when the number of spatial attention encoders is set to 0, 1, and 4, respectively. The second row of subplots (d–f) shows the regions that contribute effectively to the results for the three filtering parameters.
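To make the idea of a class activation map concrete, the following sketch computes a Grad-CAM-style map from one layer's feature maps and the gradients of the target class score with respect to them. This section does not specify which class activation variant is used, so Grad-CAM is an assumption here, and the function name and the toy arrays are hypothetical.

```python
import numpy as np

def grad_cam(feature_maps, gradients):
    """Grad-CAM-style map from arrays of shape (K, H, W).

    feature_maps: activations of K feature maps at the chosen layer.
    gradients:    d(class score)/d(feature_maps), same shape.
    """
    weights = gradients.mean(axis=(1, 2))              # one weight per map, shape (K,)
    cam = np.tensordot(weights, feature_maps, axes=1)  # weighted sum over K, shape (H, W)
    cam = np.maximum(cam, 0.0)                         # keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam             # scale to [0, 1] when possible

# Toy example: 2 maps over 1 channel row and 3 frequency bins (made-up numbers).
A = np.array([[[1.0, 2.0, 3.0]],
              [[3.0, 2.0, 1.0]]])
G = np.array([[[1.0, 1.0, 1.0]],
              [[-1.0, -1.0, -1.0]]])
cam = grad_cam(A, G)
print(cam)  # [[0. 0. 1.]]
```

In a spectrogram setting, the width axis would index frequency bins, so high values in the map mark the frequency regions that support the predicted class.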

6. Discussion

The method proposed in this study aims to tackle the redundant computation in Transformer-based approaches by introducing a gating mechanism, thereby offering a new alternative for signal decoding. The SSVEP spectrogram is essentially channel-oriented sequence data and, like text data, contains rich internal contextual dependencies. By virtue of its unique structure, xLSTM exhibits high sensitivity to noise within the signal, which enables it to achieve higher-precision classification. Meanwhile, since spectrogram features lack distinct standard characteristics such as the texture and color commonly found in natural images, we incorporated network integration into our strategy to enhance the overall feature representation.

Despite the relatively superior performance of SED-xLSTM, it is subject to certain limitations. Firstly, the SSVEP is characterized by a continuous and periodic response to visual stimuli of specific frequencies. When the time length of the sample is relatively short, the number of signal cycles collected is limited. This leads to a decrease in frequency resolution and signal-to-noise ratio during spectral analysis, making it difficult to fully capture stable evoked frequency components. Additionally, a short sample is more susceptible to interference, which can mask the true characteristics of the SSVEP. Therefore, a shorter time window restricts the full manifestation of the significant frequency characteristics of the SSVEP signal, especially at the time length of 0.5 s. Secondly, only 16 stimuli are decoded in this paper instead of the 40 stimuli presented in the first two datasets, which also affects the generalizability of the model. Looking ahead, we will concentrate on instruction recognition within short time windows to enhance the real-time response rate of BCI systems and achieve a broader classification of stimulus frequencies.
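The first limitation can be illustrated numerically: the spacing between DFT bins is the reciprocal of the window length, so a 0.5 s window resolves frequencies only in 2 Hz steps and captures fewer than five cycles of a 9.25 Hz response. The sketch below is illustrative only; the 250 Hz sampling rate, the 2 s window, and the function name are assumptions not taken from this section.

```python
import numpy as np

def window_stats(window_s, stim_hz, fs=250.0):
    """Return (DFT bin spacing in Hz, number of stimulus cycles) for one window.

    fs is an assumed sampling rate; the bin spacing equals 1 / window_s regardless of fs.
    """
    n_samples = int(round(window_s * fs))
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    bin_spacing = freqs[1] - freqs[0]
    cycles = stim_hz * window_s
    return bin_spacing, cycles

for window_s in (0.5, 1.0, 1.5, 2.0):
    spacing, cycles = window_stats(window_s, stim_hz=9.25)
    print(f"{window_s:.1f} s window: bin spacing {spacing:.3f} Hz, {cycles:.2f} cycles of 9.25 Hz")
```

When neighboring stimulus frequencies lie closer together than the bin spacing, their spectral peaks fall into the same or adjacent bins, which is consistent with the difficulty reported at 0.5 s.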

7. Conclusions

This paper addresses two issues: the insufficient extraction of SSVEP frequency-domain information by existing deep learning models and the redundant computation of Transformer models. It proposes SED-xLSTM, a model built on xLSTM, a novel gating-based architecture, to enhance the classification performance of speller instructions with few channels. The model employs a stacked encoder–decoder architecture for instruction classification and incorporates spatial attention mechanisms for feature enhancement. To fully exploit harmonic information, we further enhance the performance of the model by applying filter bank techniques in the preprocessing stage. In this paper, we pioneer the application of xLSTM to the decoding of SSVEP-based spellers and introduce the design principles of the model and the signal preprocessing procedures in detail, bridging the gap between natural language and SSVEP signals. Comparative experiments on three public datasets at four different time lengths demonstrate that SED-xLSTM outperforms the baseline methods in classification accuracy and ITR. Moreover, the xLSTM module proves highly competitive in this experiment when benchmarked against existing mainstream sequential model architectures.

Author Contributions

Conceptualization, L.D. and C.X.; methodology and visualization, L.D.; software, L.D.; validation, C.X., R.X. and X.W.; formal analysis, L.D. and W.Y.; investigation, C.X.; resources, Y.L.; data curation, L.D.; writing—original draft preparation, L.D.; writing—review and editing, L.D. and C.X.; supervision, C.X.; project administration, Y.L. and W.Y.; funding acquisition, C.X. All authors have read and agreed to the published version of the manuscript.

Funding

This paper is supported by the National Natural Science Foundation of China (NSFC) under Grant No. 62306106, and by the Natural Science Foundation of Hubei Province under Grant No. 2023AFB377.

Data Availability Statement

https://github.com/dlyres/SED-xLSTM (accessed on 18 August 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Singh, S.; Singh, S. Systematic review of spell-checkers for highly inflectional languages. Artif. Intell. Rev. 2020, 53, 4051–4092.

- Yan, Z.; Chang, Y.; Yuan, L.; Wei, F.; Wang, X.; Dong, X.; Han, H. Deep Learning-Driven Robot Arm Control Fusing Convolutional Visual Perception and Predictive Modeling for Motion Planning. J. Organ. End User Comput. (JOEUC) 2024, 36, 1–29.

- Ramadan, R.A.; Altamimi, A.B. Unraveling the potential of brain-computer interface technology in medical diagnostics and rehabilitation: A comprehensive literature review. Health Technol. 2024, 14, 263–276.

- Zander, T.O.; Kothe, C.; Welke, S.; Rötting, M. Utilizing secondary input from passive brain-computer interfaces for enhancing human-machine interaction. In Foundations of Augmented Cognition, Neuroergonomics and Operational Neuroscience, Proceedings of the 5th International Conference, FAC 2009 Held as Part of HCI International, San Diego, CA, USA, 19–24 July 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 759–771.

- Wu, J.; Wang, J. An Analysis of Traditional Methods and Deep Learning Methods in SSVEP-Based BCI: A Survey. Electronics 2024, 13, 2767.

- Chen, X.; Huang, X.; Wang, Y.; Gao, X. Combination of augmented reality based brain-computer interface and computer vision for high-level control of a robotic arm. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 3140–3147.

- Lin, Z.; Zhang, C.; Wu, W.; Gao, X. Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE Trans. Biomed. Eng. 2006, 53, 2610–2614.

- Chen, X.; Wang, Y.; Gao, S.; Jung, T.P.; Gao, X. Filter bank canonical correlation analysis for implementing a high-speed SSVEP-based brain–computer interface. J. Neural Eng. 2015, 12, 046008.

- Nakanishi, M.; Wang, Y.; Chen, X.; Wang, Y.-T.; Gao, X.; Jung, T.-P. Enhancing detection of SSVEPs for a high-speed brain speller using task-related component analysis. IEEE Trans. Biomed. Eng. 2017, 65, 104–112.

- Jin, J.; Wang, Z.; Xu, R.; Liu, C.; Wang, X.; Cichocki, A. Robust similarity measurement based on a novel time filter for SSVEPs detection. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 4096–4105.

- Deng, Y.; Ji, Z.; Wang, Y.; Zhou, S.K. OS-SSVEP: One-shot SSVEP classification. Neural Netw. 2024, 180, 106734.

- Liu, B.; Chen, X.; Shi, N.; Wang, Y.; Gao, S.; Gao, X. Improving the performance of individually calibrated SSVEP-BCI by task-discriminant component analysis. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1998–2007.

- Cherloo, M.N.; Amiri, H.K.; Daliri, M.R. Spatio-spectral CCA (SS-CCA): A novel approach for frequency recognition in SSVEP-based BCI. J. Neurosci. Methods 2022, 371, 109499.

- Ke, Y.; Liu, S.; Ming, D. Enhancing SSVEP identification with less individual calibration data using periodically repeated component analysis. IEEE Trans. Biomed. Eng. 2023, 71, 1319–1331.

- Zhang, X.; Yao, L.; Wang, X.; Monaghan, J.; McAlpine, D.; Zhang, Y. A survey on deep learning-based non-invasive brain signals: Recent advances and new frontiers. J. Neural Eng. 2021, 18, 031002.

- Xu, D.; Tang, F.; Li, Y.; Zhang, Q.; Feng, X. An analysis of deep learning models in SSVEP-based BCI: A survey. Brain Sci. 2023, 13, 483.

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 2018, 15, 056013.

- Pan, Y.; Chen, J.; Zhang, Y.; Zhang, Y. An efficient CNN-LSTM network with spectral normalization and label smoothing technologies for SSVEP frequency recognition. J. Neural Eng. 2022, 19, 056014.

- Ding, W.; Shan, J.; Fang, B.; Wang, C.; Sun, F.; Li, X. Filter bank convolutional neural network for short time-window steady-state visual evoked potential classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 2615–2624.

- Yao, H.; Liu, K.; Deng, X.; Tang, X.; Yu, H. FB-EEGNet: A fusion neural network across multi-stimulus for SSVEP target detection. J. Neurosci. Methods 2022, 379, 109674.

- Ravi, A.; Beni, N.H.; Manuel, J.; Jiang, N. Comparing user-dependent and user-independent training of CNN for SSVEP BCI. J. Neural Eng. 2020, 17, 026028.

- Lin, Y.; Zang, B.; Guo, R.; Liu, Z.; Gao, X. A deep learning method for SSVEP classification based on phase and frequency characteristics. J. Electron. Inf. Technol. 2022, 44, 446–454.

- Wang, X.; Cui, X.; Liang, S.; Chen, C. A Fast Recognition Method of SSVEP Signals Based on Time-Frequency Multiscale. J. Electron. Inf. Technol. 2023, 45, 2788–2795.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Xiong, H.; Song, J.; Liu, J.; Han, Y. Deep transfer learning-based SSVEP frequency domain decoding method. Biomed. Signal Process. Control 2024, 89, 105931.

- Zhang, H.; Wu, B.; Yuan, X.; Pan, S.; Tong, H.; Pei, J. Trustworthy graph neural networks: Aspects, methods, and trends. Proc. IEEE 2024, 112, 97–139.

- Jang, S.; Moon, S.E.; Lee, J.S. EEG-based video identification using graph signal modeling and graph convolutional neural network. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 3066–3070.

- Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans. Affect. Comput. 2018, 11, 532–541.

- Zhang, S.; An, D.; Liu, J.; Chen, J.; Wei, Y.; Sun, F. Dynamic decomposition graph convolutional neural network for SSVEP-based brain–computer interface. Neural Netw. 2024, 172, 106075.

- Liu, J.; Wang, R.; Yang, Y.; Zong, Y.; Leng, Y.; Zheng, W.; Ge, S. Convolutional Transformer-based Cross Subject Model for SSVEP-based BCI Classification. IEEE J. Biomed. Health Inform. 2024, 28, 6581–6593.

- Xie, J.; Zhang, J.; Sun, J.; Ma, Z.; Qin, L.; Li, G.; Zhou, H.; Zhan, Y. A transformer-based approach combining deep learning network and spatial-temporal information for raw EEG classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 2126–2136.

- Wan, Z.; Li, M.; Liu, S.; Huang, J.; Tan, H.; Duan, W. EEGformer: A transformer–based brain activity classification method using EEG signal. Front. Neurosci. 2023, 17, 1148855.

- Mao, S.; Gao, S. Enhancing SSEVP Classification via Hybrid Convolutional-Transformer Networks. In Proceedings of the 2024 IEEE 6th International Conference on Power, Intelligent Computing and Systems (ICPICS), Shenyang, China, 26–28 July 2024; pp. 562–570.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998.

- Beck, M.; Pöppel, K.; Spanring, M.; Auer, A.; Prudnikova, O.; Kopp, M.; Klambauer, G.; Brandstetter, J.; Hochreiter, S. xLSTM: Extended long short-term memory. arXiv 2024, arXiv:2405.04517.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Liu, B.; Huang, X.; Wang, Y.; Chen, X.; Gao, X. BETA: A large benchmark database toward SSVEP-BCI application. Front. Neurosci. 2020, 14, 627.

- Nakanishi, M.; Wang, Y.; Wang, Y.T.; Jung, T.P. A Comparison Study of Canonical Correlation Analysis Based Methods for Detecting Steady-State Visual Evoked Potentials. PLoS ONE 2015, 10, e0140703.

- Chen, J.; Zhang, Y.; Pan, Y.; Xu, P.; Guan, C. A transformer-based deep neural network model for SSVEP classification. Neural Netw. 2023, 164, 521–534.

- Chen, T.; Ding, C.; Zhu, L.; Xu, T.; Ji, D.; Wang, Y.; Zang, Y.; Li, Z. xLSTM-UNet can be an effective 2D & 3D medical image segmentation backbone with Vision-LSTM (ViL) better than its Mamba counterpart. arXiv 2024, arXiv:2407.01530.

- Alkin, B.; Beck, M.; Pöppel, K.; Hochreiter, S.; Brandstetter, J. Vision-LSTM: xLSTM as generic vision backbone. arXiv 2024, arXiv:2406.04303.

- Zhu, Q.; Cai, Y.; Fan, L. Seg-LSTM: Performance of xLSTM for semantic segmentation of remotely sensed images. arXiv 2024, arXiv:2406.14086.

- Ding, L.; Wang, H.; Fu, L. STSE-xLSTM: A Deep Learning Framework for Automated Seizure Detection in Long Video Sequences Using Spatio-Temporal and Attention Mechanisms. In Proceedings of the 2024 10th International Conference on Computer and Communications (ICCC), Chengdu, China, 13–16 December 2024; pp. 781–785.

- Li, Y.; Sun, Q.; Murthy, S.M.; Alturki, E.; Schuller, B.W. GatedxLSTM: A Multimodal Affective Computing Approach for Emotion Recognition in Conversations. arXiv 2025, arXiv:2503.20919.

- Dayan, P.; Willshaw, D.J. Optimising synaptic learning rules in linear associative memories. Biol. Cybern. 1991, 65, 253–265.

- Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).