Abstract

The multi-strategy optimized dream optimization algorithm (MSDOA) is proposed to address the inadequate search capability, slow convergence, and susceptibility to local optima of intelligent optimization algorithms applied to UAV three-dimensional path planning, with the aim of improving the global search efficiency and accuracy of UAV path planning in 3D environments. First, Bernoulli chaotic mapping is used for population initialization to widen the individual search range and enhance population diversity. Next, an adaptive perturbation mechanism and a lens imaging reverse learning strategy are incorporated into the exploration phase to update the population, improving exploration ability and accelerating convergence while mitigating premature convergence. Finally, an Adaptive Individual-level Mixed Strategy (AIMIS) is developed to make the search process more flexible and to strengthen the algorithm’s global search capability. The performance of the algorithm is evaluated through simulation experiments on the CEC2017 benchmark functions. The results indicate that the proposed algorithm achieves higher optimization accuracy, faster convergence, and greater robustness than the other swarm intelligence algorithms compared. Specifically, MSDOA ranks first on 28 of the 29 benchmark functions in the CEC2017 test suite, demonstrating its strong global search capability and convergence performance. Furthermore, UAV path planning simulations across multiple scenario models show that MSDOA adapts better to complex three-dimensional environments. In the most challenging scenario, compared with the standard DOA, MSDOA reduces the best cost function fitness by 9% and the average cost function fitness by 12%, generating more efficient, smoother, and higher-quality flight paths.

1. Introduction

With the maturation of the underlying technology, unmanned aerial vehicles (UAVs) have been widely applied in recent years owing to their small size, light weight, strong adaptability, high concealment, and low risk [1,2]. Their application scenarios continue to expand across multiple fields, ranging from military reconnaissance and disaster monitoring to agricultural spraying and logistics transportation [3,4,5,6]. Autonomous flight capability and high mission execution efficiency make UAVs a key tool in numerous industries [7,8,9]. However, as the scope of application expands, UAVs face growing challenges, especially path planning in complex environments [10,11]. Consequently, achieving efficient, safe, and accurate flight path planning in complex environments [12] has become one of the core issues in UAV technology research [13].

UAV path planning algorithms can be divided into two main categories: traditional algorithms and intelligent optimization algorithms. The first category includes the A* algorithm [14], Dijkstra’s algorithm [15], the artificial potential field method [16], and the rapidly-exploring random tree [17]. The second category consists of meta-heuristic algorithms [18]. Unlike traditional algorithms, which rely on specific problem models, meta-heuristic algorithms use heuristic rules and strategies to search globally for the optimal or a near-optimal solution to a given problem [19]. As a result, research on UAV path planning has shifted from traditional algorithms toward meta-heuristics [20]. Meta-heuristic algorithms solve complex problems by imitating optimization processes found in nature or society [21]. In UAV path planning, they can effectively handle uncertainty, complex environments, and dynamically changing conditions [22]. Numerous meta-heuristic algorithms, including particle swarm optimization (PSO) [23,24], ant colony optimization (ACO) [25,26], grey wolf optimization (GWO) [27,28], the sparrow search algorithm (SSA) [29,30], and Harris hawks optimization (HHO) [31,32], have been successfully applied to UAV path planning. Although these algorithms perform robustly across a range of optimization problems, their application to UAV path planning still faces challenges such as limited convergence accuracy and susceptibility to local optima.

Researchers have proposed various improvements to address these challenges of meta-heuristic algorithms in UAV path planning. Xiao et al. [33] combined Logistic chaotic map initialization with the Nutcracker Optimization Algorithm to improve the quality of the initial population. Wang et al. [34] combined Tent chaotic mapping with a Gaussian mutation strategy to overcome the slow convergence and tendency to fall into local optima of the standard BKA in high-dimensional data and complex function optimization. Zhou et al. [35] introduced a nonlinear control mechanism to optimize the convergence factor of GWO, improving the algorithm’s adaptability and robustness. Hu et al. [36] proposed a co-evolutionary multi-group particle swarm optimization (CMPSO) that improves global optimization ability by introducing two different group learning mechanisms and an activity-level-based grouping mechanism to avoid convergence to local optima. Zhang et al. [37] introduced a Cauchy mutation strategy and adaptive weights into the search process of the Sine–cosine Algorithm (SCA) to improve its global search ability and convergence efficiency. Xu et al. [38] combined the whale optimization algorithm with the dung beetle optimization algorithm to improve local search ability.

The dream optimization algorithm (DOA) proposed by Gao et al. [39] in 2024 is a novel intelligent optimization algorithm inspired by the characteristics of human dreaming. The algorithm incorporates a basic memory strategy, a forgetting and replenishing strategy that balances exploration and exploitation, and a dream sharing strategy to enhance the ability to escape local optima. The optimization process is divided into two phases: exploration and exploitation. DOA exhibits advantages such as fast convergence speed, strong stability, and high optimization precision, making it suitable for complex engineering problems. However, despite its rapid convergence, the algorithm is prone to becoming trapped in local optima, and its original mechanism tends to rapidly lose population diversity, leading to a poor ability to escape local optima. This paper proposes a multi-strategy dream optimization algorithm (MSDOA), with the following key contributions:

- The Bernoulli chaotic map is used to initialize the population, enhancing the diversity of the initial population, distributing individuals more evenly across the search space, and expanding its coverage.

- An adaptive hybrid perturbation mechanism is proposed that combines Cauchy mutation and Lévy flight strategies and dynamically adjusts the perturbation parameters during the forgetting and supplementation phase of the dream process. This enhances exploration of the solution space while preserving high local search accuracy, thereby accelerating convergence.

- To escape local optima, a lens imaging reverse learning strategy is employed during the exploration phase. It simulates a symmetric mapping of individuals in the search space to produce “mirror image” solutions, improving the ability to escape local traps.

- A new perturbation mechanism, the Adaptive Individual-level Mixed Strategy (AIMIS), is presented to improve global optimization performance. AIMIS combines two individual-level perturbation strategies: a global perturbation that uses boundary information to expand the search space and a local perturbation that exploits differences among individuals to enhance precision.

The remainder of this paper is organized as follows: Section 2 presents the problem formulation of UAV path planning. Section 3 introduces the standard dream optimization algorithm. Section 4 outlines the technical details of the proposed MSDOA. Section 5 provides comparative experimental results and analysis between MSDOA and other state-of-the-art intelligent optimization algorithms. Section 6 summarizes the main conclusions of this work.

2. Problem Description of UAV Path Planning

UAV path planning is formulated as the minimization of a comprehensive cost function that combines the flight path length, threat, altitude, and smoothness costs, each weighted by a coefficient. The individual cost terms are calculated as follows:

2.1. Flight Path Length Cost

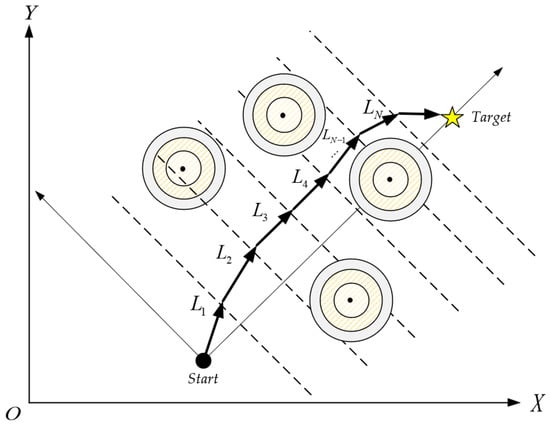

The flight path length cost of the UAV reflects the distance traveled from the starting point to the destination. Each waypoint Lij is described by its coordinates (xij, yij, zij), and the Euclidean distances between consecutive waypoints are accumulated along the path. A top view of the UAV flight path is shown in Figure 1. The flight path length cost Fdistance is modeled as shown in Equation (1).

where Lij and Li,j+1 denote the j-th and (j + 1)-th waypoints of the i-th flight path.

Figure 1.

Top-down view of flight path.
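Equation (1) is not reproduced in this text. Assuming it is the usual sum of Euclidean distances between consecutive waypoints Lij and Li,j+1, the path length cost can be sketched as below; the function name `path_length_cost` and the sample waypoints are illustrative, not taken from the paper.

```python
import math

def path_length_cost(waypoints):
    """Assumed form of Eq. (1): sum of Euclidean distances between consecutive waypoints."""
    return sum(math.dist(p, q) for p, q in zip(waypoints, waypoints[1:]))

path = [(0.0, 0.0, 100.0), (3.0, 4.0, 100.0), (3.0, 4.0, 112.0)]
print(path_length_cost(path))  # 17.0 (segment lengths 5 + 12)
```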

2.2. Threat Cost

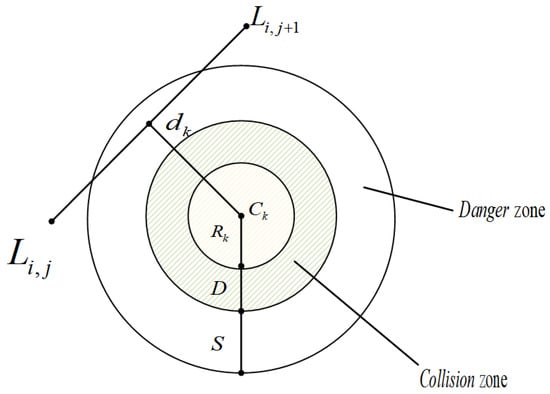

To reach the set target safely, the UAV must above all avoid colliding with obstacles. In this paper, each threat is assumed to be a cylinder, and the flight area is divided into a safety area, a threat area, and a collision area. Figure 2 shows the threat prediction diagram, which illustrates the relationship among these three areas. The threat cost Fthreat is expressed by Equation (2).

where the summand represents the threat cost of the path segment between waypoints Lij and Li,j+1 with respect to the k-th threat, as defined in Equation (3).

where k indexes the obstacles present, Ck denotes the center of the k-th obstacle, and Rk its radius; D is the diameter of the UAV, dk is the distance between the current UAV position and the center of the k-th obstacle, and S is the critical safety distance.

Figure 2.

Threat prediction map.
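Equation (3) is likewise not reproduced here. The sketch below assumes a piecewise form that is common for cylindrical threats: zero cost in the safety area (dk > S + D + Rk), a cost of (S + D + Rk) - dk inside the threat area, and an infinite cost in the collision area (dk ≤ D + Rk). Measuring dk as the horizontal distance from the obstacle axis Ck to each path segment is also an assumption, and all function names are hypothetical.

```python
import math

def point_segment_distance_2d(c, a, b):
    """Horizontal (x-y) distance from a cylinder axis at c to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(c[0] - a[0], c[1] - a[1])
    # Parameter of the closest point on the segment, clamped to [0, 1]
    s = max(0.0, min(1.0, ((c[0] - a[0]) * dx + (c[1] - a[1]) * dy) / seg_len_sq))
    return math.hypot(c[0] - (a[0] + s * dx), c[1] - (a[1] + s * dy))

def threat_cost(waypoints, threats, uav_diameter, safety_dist):
    """Assumed piecewise threat cost summed over path segments and cylindrical threats.

    threats: list of ((cx, cy), radius) pairs (hypothetical representation).
    """
    total = 0.0
    for a, b in zip(waypoints, waypoints[1:]):
        for center, radius in threats:
            d_k = point_segment_distance_2d(center, a, b)
            if d_k <= uav_diameter + radius:                  # collision area
                return math.inf
            if d_k <= safety_dist + uav_diameter + radius:    # threat area
                total += safety_dist + uav_diameter + radius - d_k
            # safety area: no contribution
    return total

path = [(0.0, 0.0, 100.0), (10.0, 0.0, 100.0)]
print(threat_cost(path, [((5.0, 3.0), 1.0)], uav_diameter=0.5, safety_dist=2.0))  # 0.5
```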

2.3. Flight Altitude Cost

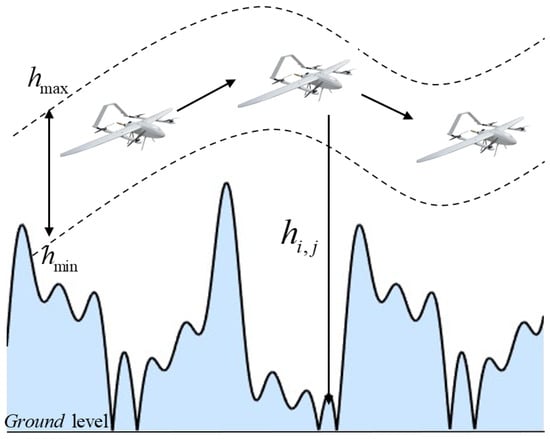

When flying at high altitudes, UAVs are more susceptible to external environmental influences, which increases the risk of flight accidents; conversely, flying too low poses the danger of ground collision. The altitude cost Faltitude, which constrains the trajectory between the maximum and minimum altitudes, is therefore expressed by Equation (4). Figure 3 illustrates the altitude constraint, showing the allowable flight corridor between hmin and hmax as well as the terrain-relative height hij.

where Hij represents the height cost of the current path point, calculated as shown in Equation (5).

where hmax and hmin represent the maximum and minimum flight altitudes of the UAV, respectively; hij denotes the altitude above ground level.

Figure 3.

Flight height constraint diagram.
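Equations (4) and (5) are not reproduced here either. A minimal sketch, assuming a frequently used form in which each waypoint is penalized by its distance from the middle of the corridor [hmin, hmax] and any waypoint outside the corridor makes the cost infinite. The 100 m and 300 m limits in the example are the values used in Section 5.2.1; the function name is hypothetical.

```python
import math

def altitude_cost(heights, h_min, h_max):
    """Assumed form of Eqs. (4)-(5): deviation from the corridor mid-height, infinite outside."""
    mid = (h_max + h_min) / 2.0
    total = 0.0
    for h in heights:                 # h: height above ground level of each waypoint
        if not (h_min <= h <= h_max):
            return math.inf           # outside the allowed corridor
        total += abs(h - mid)
    return total

print(altitude_cost([150.0, 200.0, 260.0], 100.0, 300.0))  # 110.0
```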

2.4. Smoothing Cost

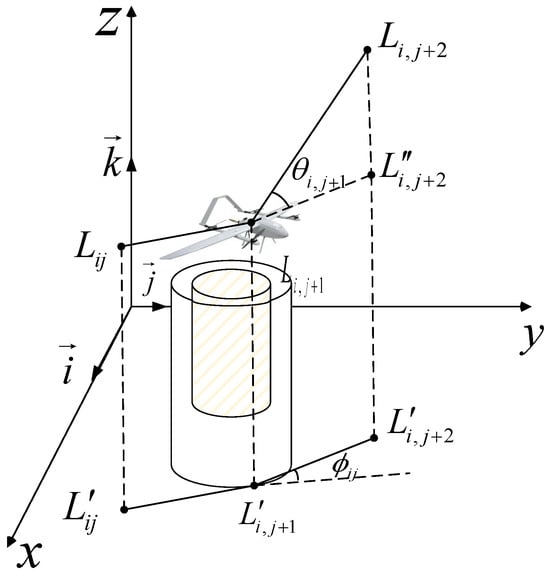

Path smoothness is crucial during UAV operations and depends mainly on the yaw and pitch angles. To enhance it, a smoothing cost is incorporated into the path optimization, with the aim of reducing the curvature of the flight trajectory and mitigating abrupt changes in the yaw and pitch angles.

In Figure 4, the yaw angle ϕij measures the deviation between the vectors of two consecutive path segments, while the pitch angle θij is the angle between a segment vector and its projection onto the horizontal plane. Using the unit vector along the z-axis, the vector between two consecutive points is determined according to Equation (6). The projection vector, the yaw angle ϕij, and the pitch angle θij are defined in Equations (7) and (8).

Figure 4.

Flight angle diagram.

Therefore, the smoothing cost function is expressed in Equation (9).

where a1 and a2 represent the penalty coefficients for yaw angle and pitch angle, respectively.
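The sketch below computes the angles described above and combines them as a1 times the sum of yaw angles plus a2 times the sum of pitch-angle changes between consecutive segments. That combination is an assumption standing in for Equation (9), as is computing the yaw angle from the horizontal projections of consecutive segments; a1 = a2 = 1 are placeholder values and the function names are illustrative.

```python
import math

def _angle(u, v):
    """Angle in radians between two vectors (0 if either has zero length)."""
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0.0:
        return 0.0
    dot = sum(a * b for a, b in zip(u, v))
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def smoothing_cost(waypoints, a1=1.0, a2=1.0):
    """Assumed form: a1 * sum(yaw angles) + a2 * sum(|pitch change|)."""
    segs = [tuple(q[k] - p[k] for k in range(3)) for p, q in zip(waypoints, waypoints[1:])]
    # Pitch: signed angle between each segment and its horizontal projection
    pitch = [math.atan2(s[2], math.hypot(s[0], s[1])) for s in segs]
    # Yaw: angle between the horizontal projections of consecutive segments
    yaw = [_angle(s[:2], t[:2]) for s, t in zip(segs, segs[1:])]
    pitch_change = [abs(b - a) for a, b in zip(pitch, pitch[1:])]
    return a1 * sum(yaw) + a2 * sum(pitch_change)

print(smoothing_cost([(0, 0, 100), (10, 0, 100), (10, 10, 100)]))  # 90-degree turn: pi/2
```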

2.5. Total Cost Function

The total cost function of traversing a path includes the flight path length cost, threat cost, altitude cost, and smoothing cost. The mathematical model is applicable to general path planning problems. In this study, the optimal flight path of the UAV is determined by minimizing the cost function defined in Equation (10).

where w1, w2, w3, and w4 are the weighting coefficients for the flight path length cost, threat cost, altitude cost, and smoothing cost, respectively.

Based on the principles of safety and operational efficiency, the weighting coefficients w1, w2, w3, and w4 are defined as follows:

The altitude cost receives the highest weight, w3 = 10. Given the practical need for high-altitude flight in real-world missions, altitude safety is treated as the top priority in the path planning process.

w1 = 5 reflects that the distance cost also carries significant weight, emphasizing that while ensuring safety, it is still important to control the total flight path length to improve operational efficiency.

The lower weight of w2 = 2 suggests that most threats can be effectively avoided through altitude selection, allowing altitude and distance constraints to primarily guide the path planning process.

Finally, w4 = 1: although smoothness contributes to the stability of the flight control system, mission safety and execution efficiency are prioritized over path smoothness in this configuration.
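With the weights above, Equation (10) reduces to a weighted sum of the four cost terms. The following minimal sketch assumes the four costs have already been computed; the dictionary keys and the sample cost values are hypothetical.

```python
# Weights w1..w4 as stated in the text (distance, threat, altitude, smoothness)
WEIGHTS = {"distance": 5.0, "threat": 2.0, "altitude": 10.0, "smooth": 1.0}

def total_cost(costs, weights=WEIGHTS):
    """Weighted sum of the individual cost terms; an infinite term propagates."""
    return sum(weights[name] * costs[name] for name in weights)

sample = {"distance": 17.0, "threat": 0.5, "altitude": 110.0, "smooth": 1.5}
print(total_cost(sample))  # 1187.5
```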

3. Standard Dream Optimization Algorithm

This section describes the fundamental principles and procedure of the standard DOA, presenting its mathematical model and analyzing its mechanisms.

3.1. Initialization

Population initialization is a crucial step in the DOA: the initial population is generated within the search space to start the optimization process, as given in Equation (11).

where N represents the number of individuals in the population, Xi denotes the i-th individual in the population, Xl and Xu indicate the lower and upper boundaries of the search space, and rand is a D-dimensional vector where each dimension is a random number between 0 and 1.
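From the definitions above, Equation (11) presumably takes the standard form Xi = Xl + rand · (Xu − Xl), drawing each coordinate independently and uniformly between its bounds. A short sketch of this random initialization, which serves as the baseline for the chaotic initialization in Section 4.1; the function name `random_init` is illustrative.

```python
import random

def random_init(n, lower, upper, rng=random):
    """Eq. (11) sketch: each coordinate is X_l + U(0, 1) * (X_u - X_l)."""
    return [[lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
            for _ in range(n)]

population = random_init(20, [0.0, -5.0], [1.0, 5.0], random.Random(0))
```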

3.2. Exploration Phase

The exploration phase of the algorithm begins by partitioning the population into five distinct groups based on variations in memory capacity. These differences in memory capability are reflected in the parameter kq, which represents the number of forgotten dimensions for each group. Prior to the “dreaming” event, a memory strategy is applied, where all individuals in a group observe the best-performing individual from previous iterations. Subsequently, the forgetting and replenishment strategy, as well as the dream-sharing strategy, are executed. Accounting for the disparities in memory capacity among individuals, each individual randomly selects certain information dimensions to forget, referred to as “forgotten dimensions.” During subsequent updates, individuals adjust their positions solely along these marked forgotten dimensions.

3.2.1. Memory Strategies

Dreams are inherently connected to existing memories. Before dreaming, the individuals in group q recall the position of the best individual in the group and adjust their own positions accordingly, as shown in Equation (12).

where Xi(t+1) represents the position of the i-th individual at iteration t + 1, Xbest,q(t) denotes the position of the best individual in the q-th group at iteration t, and q = 1, 2, 3, 4, 5 is the group number.
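A sketch of the memory strategy in Equation (12), in which every member of group q is reset to the best position of its group. How the population is split into the five groups is not specified here, so contiguous, nearly equal index blocks are an assumption, as is treating the problem as minimization; the function names are illustrative.

```python
def split_into_groups(n, n_groups=5):
    """Split indices 0..n-1 into n_groups nearly equal contiguous blocks (q = 1..5)."""
    return [list(range(q * n // n_groups, (q + 1) * n // n_groups)) for q in range(n_groups)]

def memory_strategy(population, fitness, groups):
    """Eq. (12) sketch: every member of group q copies the group's best position."""
    new_pop = [row[:] for row in population]
    for idx in groups:
        if not idx:
            continue
        best = min(idx, key=lambda i: fitness[i])   # minimization problem
        for i in idx:
            new_pop[i] = population[best][:]
    return new_pop

pop = [[float(v)] for v in [5, 3, 8, 1, 9, 2, 7, 4, 6, 0]]
fit = [row[0] for row in pop]
print(memory_strategy(pop, fit, split_into_groups(len(pop))))
# [[3.0], [3.0], [1.0], [1.0], [2.0], [2.0], [4.0], [4.0], [0.0], [0.0]]
```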

3.2.2. Forgetting and Supplementation Strategy

The forgetting and supplementation strategy exploits differences in memory to stochastically discard and replenish specific dimensions, thereby strengthening both global and local search. The position update is given in Equation (13).

where Xi,j(t+1) denotes the position of the i-th individual in the j-th dimension at iteration t + 1, Xbest,q,j(t) is the position of the best individual of the q-th group in the j-th dimension at iteration t, Xu,j and Xl,j are the upper and lower bounds of the search space in the j-th dimension, t is the current iteration, Tmax is the maximum number of iterations, and Td is the maximum iteration count of the exploration phase.
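Equation (13) is not reproduced in this text, so the sketch below shows only the structure of the forgetting and supplementation step: kq dimensions are chosen at random as forgotten dimensions and re-sampled around the group best, while the remaining dimensions keep the remembered values. The cosine amplitude that shrinks from 1 to 0 over the exploration phase (t from 0 to Td) is a hypothetical placeholder for the paper's coefficient, and the function name is illustrative.

```python
import math
import random

def forget_and_supplement(best_q, k_q, lower, upper, t, t_d, rng=random):
    """Structural sketch of Eq. (13): re-sample k_q forgotten dimensions around the group best.

    The cosine amplitude (1 at t = 0, 0 at t = t_d) is a hypothetical placeholder.
    """
    x = list(best_q)                                   # memory: start from the group best
    amp = 0.5 * (math.cos(math.pi * (t / t_d)) + 1.0)
    for j in rng.sample(range(len(x)), k_q):           # randomly chosen forgotten dimensions
        span = upper[j] - lower[j]
        x[j] += amp * (rng.random() - 0.5) * span      # supplement with a bounded random offset
        x[j] = min(max(x[j], lower[j]), upper[j])      # keep within the search bounds
    return x
```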

3.2.3. Dream-Sharing Strategies

The dream-sharing strategy in DOA also builds on the memory strategy, allowing individuals to randomly acquire positional information from other individuals in the forgotten dimensions. The update formula is given in Equation (14).

where m is the index of an individual randomly selected from the N individuals of the population.
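In the same spirit, a sketch of dream sharing: starting from the remembered group best, each forgotten dimension is copied from a randomly chosen individual m. Using the group best as the starting point follows the statement that dream sharing builds on the memory strategy; the exact form of Equation (14) may differ, and the function name is illustrative.

```python
import random

def dream_sharing(x_best_q, population, k_q, rng=random):
    """Sketch of Eq. (14): forgotten dimensions are taken from random individuals m."""
    x = list(x_best_q)                                 # memory: start from the group best
    for j in rng.sample(range(len(x)), k_q):           # randomly chosen forgotten dimensions
        m = rng.randrange(len(population))             # random individual (0-based index)
        x[j] = population[m][j]
    return x
```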

3.3. Exploitation Phase

The exploitation phase no longer uses grouping. Instead, the best solution found in the exploration phase is selected, and the position of each individual in the forgotten dimensions is then updated in a manner similar to Equations (12) and (13). Equation (15) shows that in the dimensions other than K1, K2, …, Kk, individuals retain the position of the global best solution from previous iterations, preserving this information during the dreaming phase. In contrast, Equation (16) shows that in dimensions K1, K2, …, Kk, individuals discard this information and regenerate new positions through self-organization.

3.3.1. Memory Strategies

3.3.2. Forgetting and Supplementation Strategy

4. Multi-Strategy Dream Optimization Algorithm

Building on the analysis of the basic version in the previous section, this section establishes the mathematical model of the multi-strategy dream optimization algorithm, describes the improved mechanisms in detail, and presents the corresponding pseudo-code and flowchart.

4.1. Improved Algorithm Based on Bernoulli Chaotic Map

The initial population of the dream optimization algorithm is generated randomly, which often results in an uneven distribution and reduced population diversity and can, in turn, slow convergence. To address this issue, a chaotic mapping mechanism is employed to enhance population diversity and improve algorithmic efficiency. The nonlinear and aperiodic (ergodic) behavior of chaotic maps enables the generation of more diverse and effective initial solutions. The Logistic, Tent, and Bernoulli chaotic maps were considered for population initialization; among them, the Bernoulli chaotic map exhibits a wider search range and generates initial solutions more uniformly. Bernoulli chaotic map [40] initialization produces a more uniform sequence than random initialization, facilitating faster searches for optimal solutions and mitigating the risk of becoming trapped in local minima.

This work employs the Bernoulli chaotic map to initialize the population, yielding a more uniform population distribution and rapidly generating initial path points with strong randomness. Chaotic values are generated in the chaotic variable space using the Bernoulli mapping relationship given in Equation (17).

where zt is the chaotic variable at step t and λ is the control parameter (chaotic component) of the map.

The generated chaotic values are then mapped into the initial population of the algorithm through linear transformation, with the mapping formula given in Equation (18).
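Equation (17) is not reproduced here. The sketch assumes the standard Bernoulli shift map, zt+1 = zt/(1 − λ) for 0 < zt ≤ 1 − λ and zt+1 = (zt − (1 − λ))/λ otherwise, with λ = 0.4 as an assumed parameter value, followed by the linear mapping X = Xl + z · (Xu − Xl) for Equation (18). Running one chaotic sequence per dimension and reseeding values that round-off pushes to 0 or 1 are implementation choices of this sketch, and the function names are illustrative.

```python
import random

def bernoulli_map(z, lam=0.4):
    """One step of the Bernoulli shift map on (0, 1); lam = 0.4 is an assumed value."""
    if z <= 1.0 - lam:
        return z / (1.0 - lam)
    return (z - (1.0 - lam)) / lam

def bernoulli_init(n, lower, upper, lam=0.4, rng=random):
    """Chaotic initialization: iterate the map per dimension, then map linearly to the bounds."""
    z = [rng.random() for _ in lower]                  # one chaotic sequence per dimension
    population = []
    for _ in range(n):
        z = [bernoulli_map(v, lam) for v in z]
        z = [v if 0.0 < v < 1.0 else rng.random() for v in z]   # reseed degenerate values
        population.append([lo + v * (hi - lo) for v, lo, hi in zip(z, lower, upper)])
    return population
```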

4.2. Adaptive Hybrid Perturbation Strategy

During the forgetting and supplementation phase, this paper introduces an adaptive individual-level hybrid perturbation mechanism to enhance coverage of the solution space and further improve search efficiency. The mechanism integrates three perturbation methods:

- A basic uniform random perturbation to enhance population diversity;

- A Cauchy mutation [41] factor Cy, which exploits the heavy-tailed Cauchy distribution to improve the ability to escape local optima, as given in Equation (19);

- A Lévy flight-based perturbation RL [42], which enables long-distance jumps and improves global exploration, as given in Equation (20).

The selection of these perturbation strategies is governed by an adaptive probability control mechanism, which dynamically adjusts the selection probabilities based on the current iteration progress. This adaptive scheduling enables a smooth transition from broad exploration in the early stages to refined exploitation in the later phases of optimization. μ represents the perturbation term generated according to the adaptive strategy.

Under this mechanism, the update rule for the i-th individual and the j-th variable at iteration t is defined in Equation (21).

where the perturbation term μ in this update is determined by Equation (22):

where h1 is a uniformly distributed random number, and the three selection probabilities, one for each perturbation type, are dynamically adjusted at iteration t.
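Equations (19) to (22) are not reproduced here. The sketch below shows one way the three perturbation types can be drawn and selected with a uniform random number h1: a uniform perturbation on [−1, 1], a standard Cauchy variate obtained by inverse-CDF sampling, and a Lévy step generated with Mantegna's algorithm (β = 1.5 is an assumed exponent). The linear probability schedule in `selection_probs`, which favors Lévy flights early and Cauchy and uniform perturbations later, is hypothetical, and how μ enters Equation (21) is left to the paper.

```python
import math
import random

def cauchy_sample(rng=random):
    """Standard Cauchy variate via the inverse CDF: tan(pi * (u - 0.5))."""
    return math.tan(math.pi * (rng.random() - 0.5))

def levy_step(beta=1.5, rng=random):
    """Mantegna's algorithm for a Levy-stable step with exponent beta."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.gauss(0.0, sigma)
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)

def selection_probs(t, t_max):
    """Hypothetical schedule returning (uniform, cauchy, levy) probabilities."""
    r = t / t_max
    p_levy = 0.5 * (1 - r)          # long jumps dominate early
    p_cauchy = 0.3 + 0.2 * r        # heavier use of Cauchy mutation later
    return 1.0 - p_levy - p_cauchy, p_cauchy, p_levy

def hybrid_perturbation(t, t_max, rng=random):
    """Draw h1 ~ U(0, 1) and return the perturbation term mu of the selected type."""
    p_uni, p_cau, _ = selection_probs(t, t_max)
    h1 = rng.random()
    if h1 < p_uni:
        return rng.uniform(-1.0, 1.0)
    if h1 < p_uni + p_cau:
        return cauchy_sample(rng)
    return levy_step(rng=rng)
```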

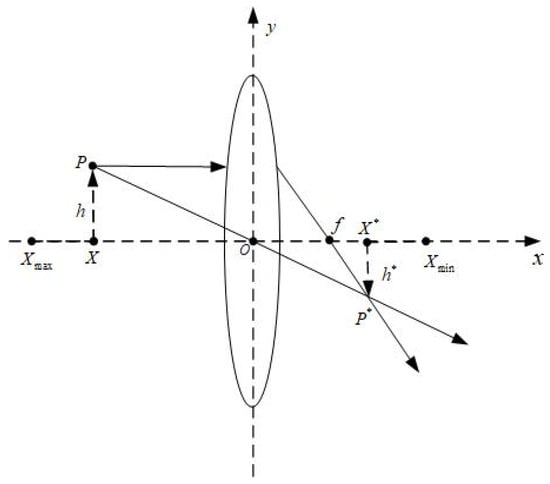

4.3. Lens Imaging Learning Strategy for Population Update

The original dream optimization algorithm has strong exploitation capability but is prone to falling into local optima. To address this issue, this paper introduces a lens imaging reverse learning strategy into the population update process. Compared with the traditional opposition-based (reverse) learning strategy, lens imaging reverse learning incorporates a scaling factor k, as illustrated in Figure 5. The reverse solution of the current solution is generated, its fitness is compared with that of the original solution, and the better of the two is retained, further increasing the algorithm’s probability of escaping local optima. The strategy is described by Equation (23), and the scaling coefficient k is given by Equation (24).

where X*i,j(t) is the generated inverse solution and Xi,j(t) is the current solution.

Figure 5.

Principle diagram of lens imaging inverse learning.
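The lens imaging reverse solution is commonly written as X* = (a + b)/2 + (a + b)/(2k) − X/k for a dimension with bounds [a, b]; with k = 1 it reduces to ordinary opposition-based learning, X* = a + b − X. The sketch below assumes this form for Equation (23), takes k as an input because Equation (24) is not reproduced, and keeps the fitter of the current and reverse solutions (minimization). The function names are illustrative.

```python
def lens_opposite(x, lower, upper, k):
    """Assumed Eq. (23): x* = (a+b)/2 + (a+b)/(2k) - x/k per dimension, clipped to [a, b]."""
    out = []
    for xj, a, b in zip(x, lower, upper):
        xs = (a + b) / 2 + (a + b) / (2 * k) - xj / k
        out.append(min(max(xs, a), b))
    return out

def lens_update(x, f, lower, upper, k):
    """Greedy choice between x and its lens imaging reverse solution (minimization)."""
    x_opp = lens_opposite(x, lower, upper, k)
    return x_opp if f(x_opp) < f(x) else x

sphere = lambda v: sum(c * c for c in v)
print(lens_update([8.0], sphere, [-10.0], [10.0], k=2.0))  # [-4.0]
```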

4.4. Adaptive Individual-Level Mixed Strategy

The proposed Adaptive Individual-level Mixed Strategy (AIMIS) addresses the limitations of the forgetting and supplementation strategy and the dream-sharing strategy in DOA. These mechanisms rely heavily on local perturbations, which can lead to convergence to local optima and restrict the algorithm’s global search capability. AIMIS integrates two distinct perturbation mechanisms at the individual level to enhance global optimization performance. The first is a global perturbation based on boundary information and chaotic sequences, which expands the search scope. The second is a local perturbation based on differential information between individuals, which improves search precision. A greedy selection mechanism then retains the candidate with the best fitness among the perturbation candidates, ensuring the quality of each perturbation. Compared with fixed perturbation methods, AIMIS disrupts the local convergence of individuals and reconstructs their perturbation paths, improving the algorithm’s ability to escape local optima and its efficiency in exploring diverse regions of the search space. In essence, AIMIS disperses the population more widely across the solution space and prevents it from concentrating in particular regions, thereby increasing global search capability. The strategy is formulated in Equation (25).

where H is a random binary value (0 or 1), and the two individuals in the differential term are randomly selected from the population.
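Equation (25) is not reproduced in this text, so the following is a hypothetical sketch that mirrors only the description above: a random binary switch H chooses between a global move (a chaotic offset scaled by the width of the search bounds) and a local move along the difference of two randomly selected individuals, and greedy selection keeps the best of several candidates. The logistic map used as the chaotic sequence, the trial count, and all function names are assumptions.

```python
import random

def aimis_candidate(x, population, lower, upper, z, rng=random):
    """One hypothetical AIMIS-style candidate (not the paper's Eq. (25))."""
    H = rng.randint(0, 1)                      # random binary switch
    if H == 1:
        # Global move: chaotic offset scaled by the bound width (boundary information)
        cand = [xj + (zj - 0.5) * (b - a) for xj, zj, a, b in zip(x, z, lower, upper)]
    else:
        # Local move: differential step between two randomly selected individuals
        r1, r2 = rng.sample(range(len(population)), 2)
        r = rng.random()
        cand = [xj + r * (p - q) for xj, p, q in zip(x, population[r1], population[r2])]
    return [min(max(c, a), b) for c, a, b in zip(cand, lower, upper)]  # clip to bounds

def aimis_step(x, f, population, lower, upper, z, n_trials=3, rng=random):
    """Greedy selection: keep the fittest of x and several candidates (minimization)."""
    best, f_best = x, f(x)
    for _ in range(n_trials):
        z = [4.0 * v * (1.0 - v) for v in z]   # logistic map as a stand-in chaotic sequence
        cand = aimis_candidate(x, population, lower, upper, z, rng)
        f_cand = f(cand)
        if f_cand < f_best:
            best, f_best = cand, f_cand
    return best
```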

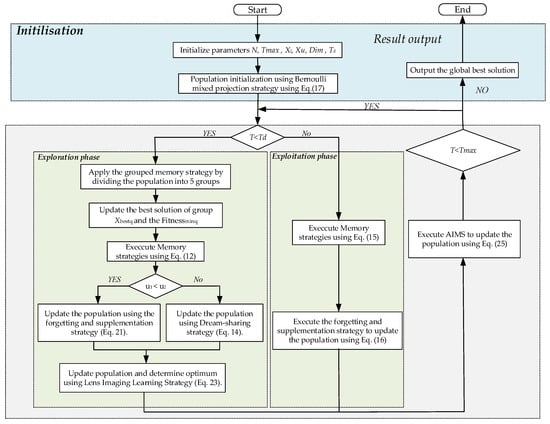

4.5. The MSDOA Algorithm Flow

Based on the mathematical model of the multi-strategy dream optimization algorithm described above, Figure 6 presents the overall flowchart of MSDOA. Its execution can be summarized in the following seven steps:

Figure 6.

Flowchart of MSDOA.

Step 1: Initialize the parameters: population size N, maximum number of iterations Tmax, variable lower limits Xl, variable upper limits Xu, problem dimension Dim, and the iteration threshold Td that separates the exploration and exploitation phases.

Step 2: Initialize the population using the Bernoulli chaotic map of Equations (17) and (18).

Step 3: If the current iteration t < Td, enter the exploration phase; otherwise, enter the exploitation phase.

Step 4: In the exploration phase, the group memory strategy is applied to identify the best individual in each group. Two random numbers, u1 and u2, are then drawn. If u1 < u2, the forgetting and supplementation strategy with adaptive hybrid perturbation is applied; otherwise, the dream-sharing strategy guides the individual’s search. The sub-populations are then merged, and the lens imaging reverse learning strategy of Equation (23) is applied to enhance the search capability of the population.

Step 5: In the exploitation phase, the memory strategy is applied directly, followed by the forgetting and supplementation strategy to improve local search ability.

Step 6: At the end of each iteration, the Adaptive Individual-level Mixed Strategy updates the population positions according to Equation (25), further avoiding local optima and improving the algorithm’s global search capability.

Step 7: Check whether the current iteration number equals Tmax. If so, terminate and output the global best solution; otherwise, return to Step 3 for the next iteration.

The detailed procedural steps of MSDOA are further illustrated in the corresponding pseudo-code, as shown in Algorithm 1.

Algorithm 1: Pseudo-code of MSDOA

Input: Initialize parameters N, Tmax, Xl, Xu, Dim, Td.

Output: The global best solution Xgbest and f(Xgbest)

5. Simulation and Results Analysis

The present study evaluated the performance of the MSDOA algorithm against a diverse set of widely adopted optimization algorithms, including the classical and well-established particle swarm optimization (PSO) [43], Grey wolf optimizer (GWO) [44], Harris hawks optimization algorithm (HHO) [45], as well as the recently published and highly competitive Crested Porcupine Optimizer (CPO) [46], BKA [47], and Sand Cat swarm optimization (SCSO) [48]. The assessment was conducted using standard benchmark test functions and path planning simulation experiments, examining key metrics such as global search capability, convergence rate, and stability. This comprehensive comparative analysis aimed to elucidate the advantages of the MSDOA approach in addressing complex optimization challenges.

5.1. Comparison of Algorithms in the CEC2017 Test Set

To further evaluate the efficacy of MSDOA on high-dimensional, complex test functions, benchmark functions from the CEC2017 test suite are used for simulation testing. The suite includes unimodal, multimodal, hybrid, and composition functions of high complexity, which allows the adaptability and optimization efficiency of the algorithm to be assessed across a wide range of problem characteristics. In all experiments, the maximum number of iterations of the exploration phase was set to Td = 0.9 × Tmax. Standardized testing protocols were followed, and each test function was run independently 30 times with 500 iterations per run to reduce the influence of randomness on the results.

The optimization performance of the algorithms was assessed with three metrics: the best fitness value (Best), the mean error (Mean), and the standard deviation (Std). As shown in Table 1, MSDOA ranked first on twenty-eight benchmark functions and third on F14, indicating that incorporating multiple optimization strategies into the basic DOA substantially improves optimization accuracy and search speed. Notably, MSDOA achieved considerably lower mean error and standard deviation values than the other algorithms, highlighting its stability and robustness across diverse test cases.

Table 1.

Comparison of optimization results of test functions.

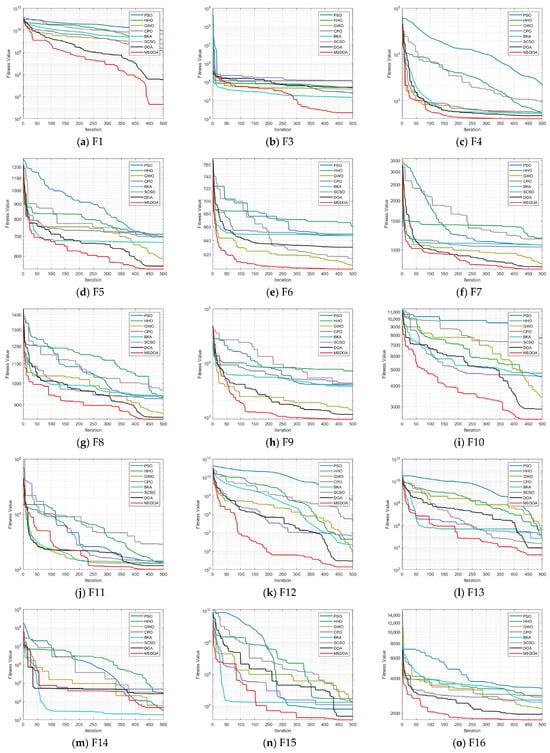

Figure 7 presents the convergence curves of the algorithms on different test functions, with MSDOA shown in red. The fitness of MSDOA decreases rapidly and reaches substantially lower values than the other algorithms by the maximum number of iterations, indicating that MSDOA both converges quickly and attains higher optimization accuracy. On the unimodal functions F1, F3, and F6, MSDOA converges very quickly to a very low final error, indicating good local search accuracy. On the multimodal functions F5, F7, F9, and F10, it effectively escapes local optima and maintains a continuous improvement trend. On the structurally complex hybrid functions F12, F13, and F20, MSDOA adaptively adjusts its strategy across different function regions to achieve global convergence. Even on the most challenging composition functions F23, F26, and F30, it maintains a stable decline and eventually reaches an excellent solution level, demonstrating strong robustness and generalization ability.

Figure 7.

Convergence curve of test function algorithm.

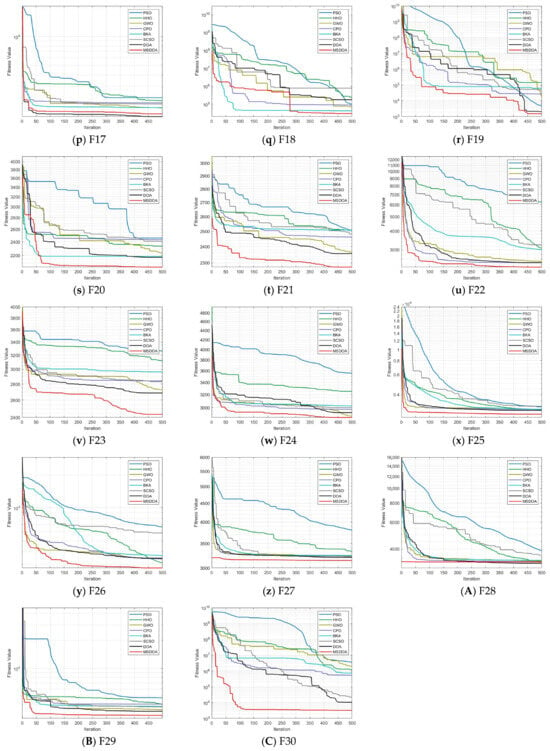

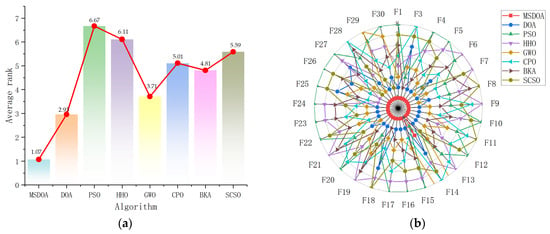

The rank analysis in Figure 8a and the radar chart in Figure 8b demonstrate the superior performance and stability of MSDOA across the 30 test functions. With an average rank of 1.07, MSDOA clearly outperforms all other algorithms. Moreover, the uniform shape of the radar chart and the concentration of its vertices near the center further confirm the algorithm’s stability and its leading performance on the CEC2017 benchmark.

Figure 8.

Ranking charts of optimization results on the CEC2017 benchmark. (a) The average rank chart. (b) The radar chart.

These results show that MSDOA exhibits strong adaptability, high search efficiency, and robust global optimization capability on the CEC2017 test set, suggesting that it is well suited to a wide range of complex real-world optimization problems.

5.2. Performance Test and Analysis of UAV Track Planning Under Different Algorithms

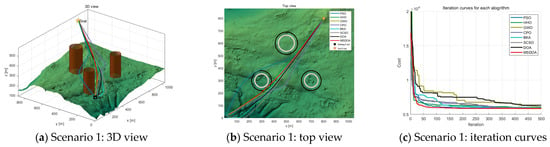

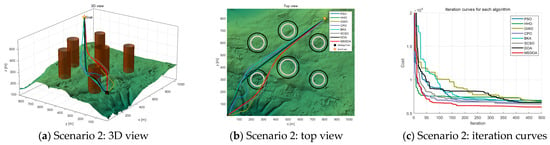

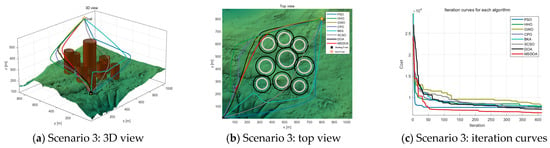

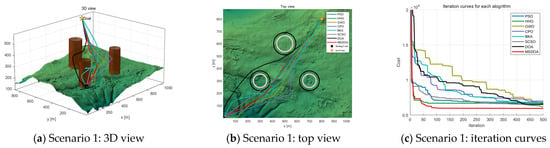

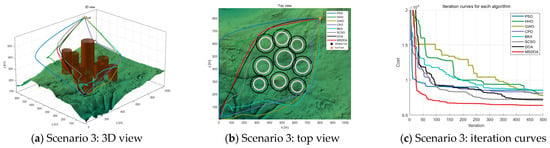

5.2.1. Performance Analysis Under Different Obstacles

Three scenarios were developed to evaluate the algorithm on UAV path planning in three-dimensional mountainous terrain; Table 2 gives the details of each scenario. In every scenario, the UAV path was planned with six waypoints through increasing obstacle densities: three obstacles in scenario 1, six in scenario 2, and nine in scenario 3. These scenarios assess MSDOA in environments ranging from simple to highly complex terrain. The minimum flight altitude was set to 100 m and the maximum to 300 m. Both the maximum turning angle and the maximum climbing angle were limited to 45° to ensure maneuverability and flight safety.

Table 2.

Scenario information.

As the number of obstacles increases, the search becomes more difficult. Figure 9, Figure 10 and Figure 11 show the side view, top view, and convergence curve of the planned paths for scenarios 1, 2 and 3, respectively, and Table 3 lists the cost function fitness values of each optimization algorithm in each scenario. As environmental complexity grows, the paths generated by every algorithm become more convoluted, exposing differences in adaptability. In the simple environment of Figure 9a, all algorithms avoid the obstacles; PSO and CPO produce more winding paths, but the optimal solutions of the different algorithms differ only slightly. Figure 9c shows that MSDOA converges faster in the early iterations, indicating higher optimization efficiency and reliable planning capability even in simple environments.
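Convergence curves such as the one in Figure 9c usually plot the best fitness found so far at each iteration. A minimal sketch of how such a curve, and the iteration at which a target value is first reached, can be derived from per-iteration fitness values (the helper names and the sample values are hypothetical):

```python
from itertools import accumulate
from typing import List, Optional


def best_so_far(fitness_per_iter: List[float]) -> List[float]:
    """Convergence curve for minimization: running minimum of the fitness values."""
    return list(accumulate(fitness_per_iter, min))


def iterations_to_reach(curve: List[float], target: float, tol: float = 0.0) -> Optional[int]:
    """1-based iteration at which the curve first comes within tol of target, or None."""
    for iteration, value in enumerate(curve, start=1):
        if value <= target + tol:
            return iteration
    return None


raw = [120.0, 130.0, 100.0, 105.0, 90.0, 95.0]  # hypothetical best fitness of each iteration
curve = best_so_far(raw)
print(curve)                                   # [120.0, 120.0, 100.0, 100.0, 90.0, 90.0]
print(iterations_to_reach(curve, curve[-1]))   # 5
```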

Figure 9.

Comparison of path planning results in scenario 1 (number of waypoints = 6).

Figure 10.

Comparison of path planning results in scenario 2 (number of waypoints = 6).

Figure 11.

Comparison of path planning results in scenario 3 (number of waypoints = 6).

Table 3.

Complex scenario simulation experiment data (number of waypoints = 6).

Figure 10 shows the planning results in the complex environment (scenario 2), where the paths of the different algorithms diverge and their adaptability varies. Most algorithms lose accuracy and robustness at this level of complexity. DOA stands out among the comparison algorithms with an optimal value of 6410, while MSDOA outperforms all algorithms with an optimal value of 5941, which it reaches after only about 150 iterations, indicating high search efficiency.

Figure 11 shows the path planning results of the different optimization algorithms in the highly complex scenario (scenario 3). As environmental complexity increases, the performance gaps between the algorithms widen; notably, the paths generated by BKA and PSO exceed the map constraints. As Table 3 shows, MSDOA reduces the cost function fitness by 7.81% compared to DOA, and by 13.3%, 10.7%, 11.6%, 12.1%, 15.8%, and 8.48% compared to PSO, HHO, GWO, CPO, BKA, and SCSO, respectively, while converging rapidly and stably. In addition, the mean and standard deviation of the MSDOA results confirm its stability and its robustness on highly complex tasks.
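The percentage reductions quoted from Table 3 are presumably relative to the competing algorithm’s cost; that interpretation is an assumption here. Under it, the reduction is (baseline - improved) / baseline × 100, which for the scenario 2 best values of DOA (6410) and MSDOA (5941) quoted above gives about 7.3%:

```python
def percent_reduction(baseline: float, improved: float) -> float:
    """Relative reduction of a minimization cost, as a percentage of the baseline cost."""
    if baseline == 0:
        raise ValueError("baseline cost must be non-zero")
    return (baseline - improved) / baseline * 100.0


# Best costs in scenario 2 (6 waypoints) as quoted in the text: DOA 6410, MSDOA 5941.
print(f"{percent_reduction(6410, 5941):.2f}%")  # 7.32%
```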

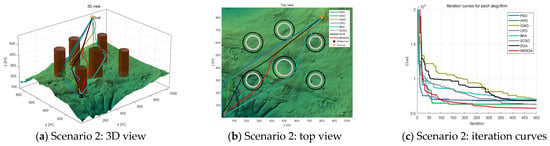

5.2.2. Performance Analysis with Different Numbers of Waypoints

The number of waypoints strongly affects both the computational cost and the trajectory search performance of the algorithm. Table 4 lists the cost function fitness values of each optimization algorithm in each scenario when the number of waypoints is increased from 6 to 12. The optimal values of all algorithms improve, but MSDOA shows stronger adaptability and reliability. As Figure 12, Figure 13 and Figure 14 illustrate, the flight paths generated by the algorithms become more tortuous as the number of waypoints increases. In scenario 1, the MSDOA path is smoother than those of the other algorithms. Comparing the routes in Figure 13 and Figure 14 with those in Figure 10 and Figure 11 shows that the paths of some weaker algorithms exceed the constraint range and contain more turns and detours. MSDOA, in contrast, still avoids all obstacles and finds shorter, smoother paths. In the most complex scenario, MSDOA reduces the best fitness by 9% and the average fitness by 12% relative to standard DOA.
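Statements about shorter and smoother paths can be quantified with simple geometric measures. The sketch below, assuming a path is a list of (x, y, z) waypoints, computes the total path length and the cumulative 3D heading change between consecutive segments; these are illustrative metrics, not the paper’s cost function, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) waypoint


def path_length(path: List[Point]) -> float:
    """Total Euclidean length of the polyline through the waypoints."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def total_turning_deg(path: List[Point]) -> float:
    """Sum of 3D heading changes between consecutive segments (0 for a straight line)."""
    total = 0.0
    for a, b, c in zip(path, path[1:], path[2:]):
        u = [b[k] - a[k] for k in range(3)]
        v = [c[k] - b[k] for k in range(3)]
        nu, nv = math.hypot(*u), math.hypot(*v)
        if nu == 0 or nv == 0:
            continue  # repeated waypoint: no defined heading
        cos_angle = sum(p * q for p, q in zip(u, v)) / (nu * nv)
        total += math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    return total


straight = [(0, 0, 100), (100, 0, 100), (200, 0, 100)]
zigzag = [(0, 0, 100), (100, 100, 100), (200, 0, 100)]
print(path_length(straight), total_turning_deg(straight))                  # 200.0 0.0
print(round(path_length(zigzag), 2), round(total_turning_deg(zigzag), 6))  # 282.84 90.0
```

With more waypoints, a path has more interior vertices at which heading changes can accumulate, which is one way to make the observed increase in tortuosity measurable.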

Table 4.

Complex scenario simulation experiment data (number of waypoints = 12).

Figure 12.

Comparison of path planning results in scenario 1 (number of waypoints = 12).

Figure 13.

Comparison of path planning results in scenario 2 (number of waypoints = 12).

Figure 14.

Comparison of path planning results in scenario 3 (number of waypoints = 12).

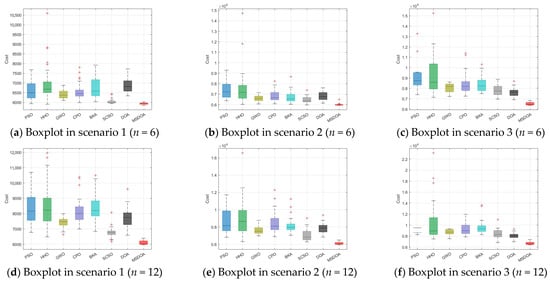

The box plots in Figure 15 show that MSDOA has small cost fluctuations, indicating robust and scalable performance in complex environments. Figure 15a–c corresponds to the scenarios with 6 waypoints and Figure 15d–f to those with 12 waypoints. In both settings, the median cost of MSDOA is clearly lower than that of the comparison algorithms, including PSO, HHO, and BKA, and its interquartile range remains consistently smaller, confirming its stability. Regardless of scenario complexity or the number of waypoints, MSDOA shows stronger global search capability, attains higher optimization accuracy, and is less prone to converging to local optima. These findings confirm its effectiveness for UAV path planning.
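The box-plot statistics discussed above (median and interquartile range) can be computed from an algorithm’s per-run costs as shown below. This sketch uses Python’s `statistics.quantiles` with the inclusive method, which matches linear-interpolation quartiles, and flags outliers with the common 1.5 × IQR rule; the plotting tool used for Figure 15 may compute quartiles or whiskers differently, and the sample costs are invented.

```python
import statistics
from typing import Dict, List


def box_stats(costs: List[float]) -> Dict[str, object]:
    """Quartiles, interquartile range, and 1.5 x IQR outliers of one algorithm's run costs."""
    q1, median, q3 = statistics.quantiles(costs, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [c for c in costs if c < low_fence or c > high_fence]
    return {"q1": q1, "median": median, "q3": q3, "iqr": iqr, "outliers": outliers}


runs = [100.0, 102.0, 103.0, 105.0, 140.0]  # invented costs from five independent runs
print(box_stats(runs))
# {'q1': 102.0, 'median': 103.0, 'q3': 105.0, 'iqr': 3.0, 'outliers': [140.0]}
```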

Figure 15.

Boxplot comparison of path planning costs.

In summary, MSDOA converges robustly on the three-dimensional UAV path planning problem in complex environments. First, the Bernoulli chaotic map generates a more diverse initial population. During exploration, the adaptive perturbation mechanism and the lens imaging reverse learning strategy accelerate convergence toward high-quality solutions. Finally, the Adaptive Individual-level Mixed Strategy (AIMS) improves solution accuracy in the final convergence stage, so that the algorithm performs well across diverse scenarios.
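The Bernoulli chaotic initialization and the lens imaging reverse learning step can be sketched as follows. The exact MSDOA equations are given earlier in the paper and are not reproduced in this section, so this sketch assumes commonly used forms: the Bernoulli shift map with λ = 0.4, and the lens imaging opposite point x* = (a + b)/2 + (a + b)/(2k) - x/k within bounds [a, b], followed by greedy selection. With k = 1 the latter reduces to standard opposition-based learning. The function names and default parameters are hypothetical, and the adaptive perturbation and AIMS steps are not shown.

```python
import random
from typing import Callable, List, Optional, Sequence

Vector = List[float]


def bernoulli_init(n_pop: int, lb: Sequence[float], ub: Sequence[float],
                   lam: float = 0.4, seed: Optional[int] = None) -> List[Vector]:
    """Population whose coordinates follow a Bernoulli shift map sequence, scaled to [lb, ub]."""
    rng = random.Random(seed)
    z = rng.random()
    population = []
    for _ in range(n_pop):
        individual = []
        for lo, hi in zip(lb, ub):
            # Bernoulli shift map: z / (1 - lam) on (0, 1 - lam], (z - (1 - lam)) / lam on (1 - lam, 1).
            z = z / (1 - lam) if z <= 1 - lam else (z - (1 - lam)) / lam
            if z <= 0.0 or z >= 1.0:
                z = rng.random()  # reseed if the sequence hits a degenerate fixed point
            individual.append(lo + z * (hi - lo))
        population.append(individual)
    return population


def lens_opposite(x: Vector, lb: Sequence[float], ub: Sequence[float], k: float = 1.0) -> Vector:
    """Lens imaging opposite point, clipped to the bounds; k = 1 gives plain opposition (a + b - x)."""
    return [min(hi, max(lo, (lo + hi) / 2 + (lo + hi) / (2 * k) - xi / k))
            for xi, lo, hi in zip(x, lb, ub)]


def lens_update(population: List[Vector], cost: Callable[[Vector], float],
                lb: Sequence[float], ub: Sequence[float], k: float = 1.0) -> List[Vector]:
    """Greedy selection: keep whichever of x and its lens-opposite point has the lower cost."""
    updated = []
    for x in population:
        x_opp = lens_opposite(x, lb, ub, k)
        updated.append(x_opp if cost(x_opp) < cost(x) else x)
    return updated


pop = bernoulli_init(n_pop=4, lb=[0.0, 0.0], ub=[10.0, 10.0], seed=1)
pop = lens_update(pop, cost=lambda v: sum(c * c for c in v), lb=[0.0, 0.0], ub=[10.0, 10.0])
print(pop)
```

The greedy selection step means the lens imaging update can never worsen an individual’s cost, which is why such strategies are usually described as accelerating convergence without sacrificing solution quality.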

6. Conclusions

This study proposed MSDOA, a multi-strategy improved dream optimization algorithm, for solving complex 3D path planning problems for UAVs. The algorithm initializes the population with a Bernoulli chaotic map to widen individual search ranges and enhance population diversity. During the exploration phase, it updates the population with an adaptive perturbation mechanism and a lens imaging reverse learning strategy, which improves exploration, accelerates convergence, and mitigates premature convergence. In addition, an Adaptive Individual-level Mixed Strategy (AIMS) makes the search process more flexible and strengthens the algorithm’s global search capability. Comparative experiments on the CEC2017 benchmark functions validated these improvements: MSDOA outperforms mainstream swarm intelligence algorithms in convergence speed and optimal value search ability, particularly on multimodal, nonlinear, and high-dimensional optimization problems. When applied to UAV 3D path planning under various scene models and compared with other algorithms, MSDOA produced more efficient, higher-quality paths in complex 3D environments, yielding smoother and safer UAV trajectories.

Author Contributions

Conceptualization, X.Y.; software, W.G. and S.Z.; validation, X.Y. and S.Z.; formal analysis, Z.F.; investigation, L.L.; resources, L.L. and X.W.; data curation, P.L.; writing—original draft preparation, T.J. and W.G.; writing—review and editing, S.Z. and X.W.; visualization, Z.F. and W.G.; supervision, X.Y. and T.J.; project administration, S.Z. and W.G.; funding acquisition, Z.F. and X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Open Project of the Shijiazhuang Key Laboratory of Intelligent Research on VTOL Fixed-Wing UAVs and the Science Research Project of the Hebei Education Department, under grant numbers KF2024-1 and QN2023137, respectively.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data are available on request.

Acknowledgments

The authors would like to thank the anonymous reviewers and external experts for their valuable feedback and suggestions, which greatly helped improve the quality of this work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mohsan, S.A.; Khan, M.A.; Noor, F.; Ullah, I.; Alsharif, M.H. Towards the unmanned aerial vehicles (UAVs): A comprehensive review. Drones 2022, 6, 147.

- Javed, S.; Hassan, A.; Ahmad, R.; Ahmed, W.; Ahmed, R.; Saadat, A.; Guizani, M. State-of-the-Art and Future Research Challenges in UAV Swarms. IEEE Internet Things J. 2024, 11, 19023–19045.

- Zhang, X.; Zheng, J.; Su, T.; Ding, M.; Liu, H. An effective dynamic constrained two-archive evolutionary algorithm for cooperative search-track mission planning by UAV swarms in air intelligent transportation. IEEE Trans. Intell. Transp. Syst. 2023, 25, 944–958.

- Zhang, X.; Fu, Q.Y.; Li, J.; Zhao, W.J. Cooperative Search-Track Mission Planning for Multi-UAV Based on a Distributed Approach in Uncertain Environment. In Proceedings of 2021 5th Chinese Conference on Swarm Intelligence and Cooperative Control; Springer Nature: Singapore, 2022; pp. 526–535.

- Qu, C.; Boubin, J.; Gafurov, D.; Zhou, J.; Aloysius, N.; Nguyen, H.; Calyam, P. UAV Swarms in Smart Agriculture: Experiences and Opportunities. In Proceedings of the 2022 IEEE 18th International Conference on e-Science (e-Science), Salt Lake City, UT, USA, 11–14 October 2022; pp. 148–158.

- Lu, J.; Liu, Y.; Jiang, C.; Wu, W. Truck-drone joint delivery network for rural area: Optimization and implications. Transp. Policy 2025, 163, 273–284.

- Baniasadi, P.; Foumani, M.; Smith-Miles, K.; Ejov, V. A transformation technique for the clustered generalized traveling salesman problem with applications to logistics. Eur. J. Oper. Res. 2020, 285, 444–457.

- Katkuri, A.V.R.; Madan, H.; Khatri, N.; Abdul-Qawy, A.S.H.; Patnaik, K.S. Autonomous UAV navigation using deep learning-based computer vision frameworks: A systematic literature review. Array 2024, 23, 100361.

- Meng, W.; Zhang, X.; Zhou, L.; Guo, H.; Hu, X. Advances in UAV Path Planning: A Comprehensive Review of Methods, Challenges, and Future Directions. Drones 2025, 9, 376.

- Zhang, D.; Xuan, Z.; Zhang, Y.; Yao, J.; Li, X.; Li, X. Path planning of unmanned aerial vehicle in complex environments based on state-detection twin delayed deep deterministic policy gradient. Machines 2023, 11, 108.

- Gómez Arnaldo, C.; Zamarreño Suárez, M.; Pérez Moreno, F.; Delgado-Aguilera Jurado, R. Path Planning for Unmanned Aerial Vehicles in Complex Environments. Drones 2024, 8, 288.

- Liu, J.; Luo, W.; Zhang, G.; Li, R. Unmanned Aerial Vehicle Path Planning in Complex Dynamic Environments Based on Deep Reinforcement Learning. Machines 2025, 13, 162.

- He, Y.; Hou, T.; Wang, M. A new method for unmanned aerial vehicle path planning in complex environments. Sci. Rep. 2024, 14, 9257.

- Wang, J.; Li, Y.; Li, R.; Chen, H.; Chu, K. Trajectory planning for UAV navigation in dynamic environments with matrix alignment Dijkstra. Soft Comput. 2022, 26, 12599–12610.

- Fan, J.; Chen, X.; Liang, X. UAV trajectory planning based on bi-directional APF-RRT* algorithm with goal-biased. Expert Syst. Appl. 2023, 213, 119137.

- Zhu, X.; Gao, Y.; Li, Y.; Li, B. Fast Dynamic P-RRT*-Based UAV Path Planning and Trajectory Tracking Control Under Dense Obstacles. Actuators 2025, 14, 211.

- Hooshyar, M.; Huang, Y.M. Meta-heuristic algorithms in UAV path planning optimization: A systematic review (2018–2022). Drones 2023, 7, 687.

- Debnath, D.; Vanegas, F.; Sandino, J.; Hawary, A.F.; Gonzalez, F. A Review of UAV Path-Planning Algorithms and Obstacle Avoidance Methods for Remote Sensing Applications. Remote Sens. 2024, 16, 4019.

- Ma, Y.; Zhang, Z.; Yao, M.; Fan, G. A Self-Adaptive Improved Slime Mold Algorithm for Multi-UAV Path Planning. Drones 2025, 9, 219.

- Vinod Chandra, S.S.; Anand, H.S. Nature inspired meta heuristic algorithms for optimization problems. Computing 2022, 104, 251–269.

- Jiang, Y.; Xu, X.-X.; Zheng, M.-Y.; Zhan, Z.-H. Evolutionary Computation for Unmanned Aerial Vehicle Path Planning: A Survey. Artif. Intell. Rev. 2024, 57, 267.

- Ait Saadi, A.; Soukane, A.; Meraihi, Y.; Benmessaoud Gabis, A.; Mirjalili, S.; Ramdane-Cherif, A. UAV path planning using optimization approaches: A survey. Arch. Comput. Methods Eng. 2022, 29, 4233–4284.

- Sonny, A.; Yeduri, S.R.; Cenkeramaddi, L.R. Autonomous UAV path planning using modified PSO for UAV-assisted wireless networks. IEEE Access 2023, 11, 70353–70367.

- Meng, Q.; Chen, K.; Qu, Q. PPSwarm: Multi-UAV path planning based on hybrid PSO in complex scenarios. Drones 2024, 8, 192.

- Zhang, Y.; Yu, H. Application of Hybrid Swarming Algorithm on a UAV Regional Logistics Distribution. Biomimetics 2023, 8, 96.

- Bui, D.N.; Duong, T.N.; Phung, M.D. Ant colony optimization for cooperative inspection path planning using multiple unmanned aerial vehicles. In Proceedings of the 2024 IEEE/SICE International Symposium on System Integration (SII), Ha Long, Vietnam, 8–11 January 2024; pp. 675–680.

- Teng, Z.; Dong, Q.; Zhang, Z.; Huang, S.; Zhang, W.; Wang, J.; Chen, X. An Improved Grey Wolf Optimizer Inspired by Advanced Cooperative Predation for UAV Shortest Path Planning. arXiv 2025, arXiv:2506.03663.

- Zhang, C.; Liu, Y.; Hu, C. Path planning with time windows for multiple UAVs based on gray wolf algorithm. Biomimetics 2022, 7, 225.

- Zhang, J.; Zhu, X.; Li, J. Intelligent path planning with an improved sparrow search algorithm for workshop UAV inspection. Sensors 2024, 24, 1104.

- Liu, G.; Shu, C.; Liang, Z.; Peng, B.; Cheng, L. A modified sparrow search algorithm with application in 3D route planning for UAV. Sensors 2021, 21, 1224.

- You, G.; Hu, Y.; Lian, C.; Yang, Z. Mixed-strategy Harris Hawk optimization algorithm for UAV path planning and engineering applications. Appl. Sci. 2024, 14, 10581.

- Shi, H.; Lu, F.; Wu, L.; Yang, G. Optimal trajectories of multi-UAVs with approaching formation for target tracking using improved Harris Hawks optimizer. Appl. Intell. 2022, 52, 14313–14335.

- Chang, X.; Yang, H.; Zhang, B. Multi-Unmanned aerial vehicle path planning based on improved nutcracker optimization algorithm. Drones 2025, 9, 116.

- Wang, S.; Xu, B.; Zheng, Y.; Yue, Y.; Xiong, M. Path Optimization Strategy for Unmanned Aerial Vehicles Based on Improved Black Winged Kite Optimization Algorithm. Biomimetics 2025, 10, 310.

- Zhou, X.; Shi, G.; Zhang, J. Improved grey wolf algorithm: A method for UAV path planning. Drones 2024, 8, 675.

- Hu, G.; Cheng, M.; Houssein, E.H.; Jia, H. CMPSO: A novel co-evolutionary multigroup particle swarm optimization for multi-mission UAVs path planning. Adv. Eng. Inform. 2025, 63, 102923.

- Zhang, R.; Li, S.; Ding, Y.; Qin, X.; Xia, Q. UAV path planning algorithm based on improved Harris Hawks optimization. Sensors 2022, 22, 5232.

- Xu, T.; Chen, C. DBO-AWOA: An Adaptive Whale Optimization Algorithm for Global Optimization and UAV 3D Path Planning. Sensors 2025, 25, 2336.

- Lang, Y.; Gao, Y. Dream Optimization Algorithm (DOA): A novel metaheuristic optimization algorithm inspired by human dreams and its applications to real-world engineering problems. Comput. Methods Appl. Mech. Eng. 2025, 436, 117718.

- Du, X.; Zhou, Y. A Novel Hybrid Differential Evolutionary Algorithm for Solving Multi-objective Distributed Permutation Flow-Shop Scheduling Problem. Int. J. Comput. Intell. Syst. 2025, 18, 1–22.

- Shan, W.; He, X.; Liu, H.; Heidari, A.A.; Wang, M.; Cai, Z.; Chen, H. Cauchy mutation boosted Harris hawk algorithm: Optimal performance design and engineering applications. J. Comput. Des. Eng. 2023, 10, 503–526.

- Yu, X.; Duan, Y.; Cai, Z. Sub-population improved grey wolf optimizer with Gaussian mutation and Lévy flight for parameters identification of photovoltaic models. Expert Syst. Appl. 2023, 232, 120827.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 4, pp. 1942–1948.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Abdel-Basset, M.; Mohamed, R.; Abouhawwash, M. Crested Porcupine Optimizer: A new nature-inspired metaheuristic. Knowl.-Based Syst. 2024, 284, 111257.

- Wang, J.; Wang, W.C.; Hu, X.X.; Qiu, L.; Zang, H.F. Black-winged kite algorithm: A nature-inspired meta-heuristic for solving benchmark functions and engineering problems. Artif. Intell. Rev. 2024, 57, 98.

- Seyyedabbasi, A.; Kiani, F. Sand Cat swarm optimization: A nature-inspired algorithm to solve global optimization problems. Eng. Comput. 2023, 39, 2627–2651.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).