CCESC: A Crisscross-Enhanced Escape Algorithm for Global and Reservoir Production Optimization

Abstract

1. Introduction

- A novel algorithm, CCESC, is proposed. It combines the crowd evacuation dynamics of the Escape algorithm (ESC) with a crisscross (CC) strategy to improve search efficiency, solution diversity, and overall optimization performance.

- CCESC is validated on 29 CEC2017 benchmark functions against nine other algorithms (ESC, DE, GWO, MFO, SCA, PSO, PO, BA, and FA), with statistical significance confirmed by Wilcoxon signed-rank and Friedman tests.

- The practical value of CCESC is demonstrated on reservoir production optimization, where it attains the highest mean NPV (9.457 × 10^8 USD) and the lowest standard deviation among the seven compared algorithms.

2. The Original ESC

3. Proposed CCESC

3.1. Crisscross Strategy

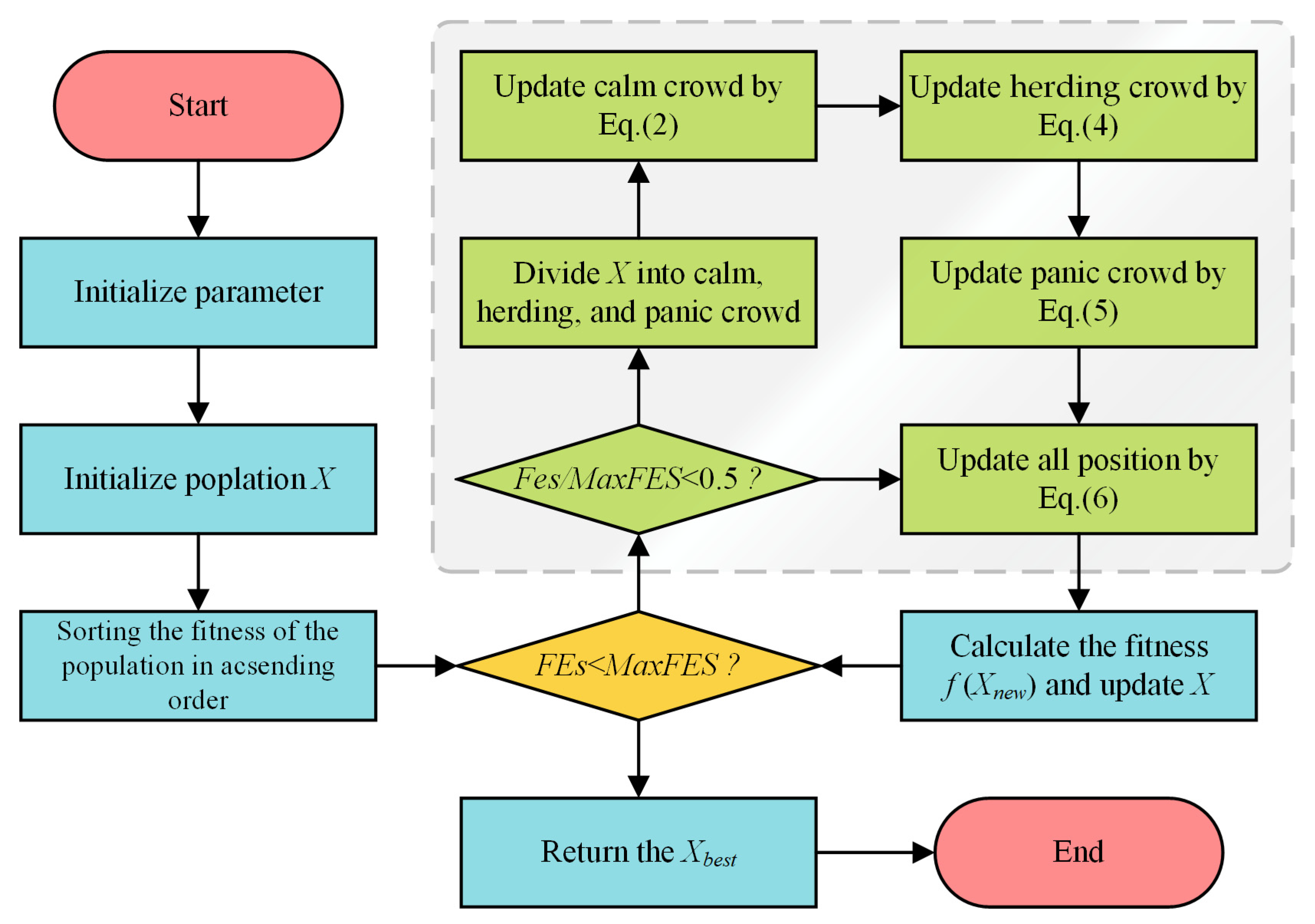

3.2. The Proposed CCESC

Algorithm 1. Pseudo-code of the CCESC

- Set parameters: …, …, population size
- Initialize population
- … = 0
- For …
  - Evaluate the fitness value of …
  - Find the global min and fitness
- End For
- Sort population by fitness in ascending order
- Store the top individuals in the Elite Pool
- While …
  - IF …
    - Generate Panic Index by Equation (1)
    - Divide population into: Calm group (proportion c), Conforming group (proportion h), and Panic group (proportion p)
    - Update Calm Group by Equation (2)
    - Update Herding crowd by Equation (4)
    - Update Panic Group by Equation (5)
  - ELSE
    - Update population by Equation (6)
  - End IF
  - For … /*CC*/
    - Perform HCS to update …
    - Perform VCS to update …
    - Update …
  - End For
- End While
- Return …
- End
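The HCS and VCS steps of Algorithm 1 are not written out in this section. As a rough illustration only, the sketch below follows the horizontal and vertical crossover operators as they are commonly stated in the crisscross optimization literature. The function names, the random pairing scheme, the greedy selection step, the vertical crossover probability `p_vc`, and the sphere objective are all assumptions made for this example and are not taken from the authors' implementation. Bound handling is omitted.

```python
import random


def sphere(x):
    """Toy objective (an assumption for this demo): sum of squares."""
    return sum(v * v for v in x)


def horizontal_crossover(pop, fitness, objective, rng):
    """HCS sketch: arithmetic crossover between randomly paired individuals
    in every dimension, followed by greedy selection."""
    n, dim = len(pop), len(pop[0])
    order = list(range(n))
    rng.shuffle(order)
    for k in range(0, n - 1, 2):
        i, j = order[k], order[k + 1]
        child_i, child_j = [], []
        for d in range(dim):
            r1, r2 = rng.random(), rng.random()              # in [0, 1)
            c1, c2 = rng.uniform(-1, 1), rng.uniform(-1, 1)  # expansion factors
            xi, xj = pop[i][d], pop[j][d]
            child_i.append(r1 * xi + (1 - r1) * xj + c1 * (xi - xj))
            child_j.append(r2 * xj + (1 - r2) * xi + c2 * (xj - xi))
        for idx, child in ((i, child_i), (j, child_j)):
            f = objective(child)
            if f < fitness[idx]:  # keep the child only if it improves
                pop[idx], fitness[idx] = child, f


def vertical_crossover(pop, fitness, objective, rng, p_vc=0.6):
    """VCS sketch: blend two dimensions of the same individual."""
    n, dim = len(pop), len(pop[0])
    dims = list(range(dim))
    rng.shuffle(dims)
    for k in range(0, dim - 1, 2):
        if rng.random() >= p_vc:  # skip this dimension pair
            continue
        d1, d2 = dims[k], dims[k + 1]
        for i in range(n):
            r = rng.random()
            child = list(pop[i])
            child[d1] = r * pop[i][d1] + (1 - r) * pop[i][d2]
            f = objective(child)
            if f < fitness[i]:  # greedy selection again
                pop[i], fitness[i] = child, f


def crisscross_step(pop, fitness, objective, rng):
    """One CC pass: HCS, then VCS, then return the current best."""
    horizontal_crossover(pop, fitness, objective, rng)
    vertical_crossover(pop, fitness, objective, rng)
    best = min(range(len(pop)), key=fitness.__getitem__)
    return pop[best], fitness[best]


rng = random.Random(0)
population = [[rng.uniform(-5, 5) for _ in range(4)] for _ in range(6)]
scores = [sphere(x) for x in population]
before = list(scores)
best_x, best_f = crisscross_step(population, scores, sphere, rng)
print(best_f)
```

Because both operators accept a child only when it improves on its parent, a CC pass in this sketch can never worsen any individual's fitness; whether CCESC applies the same acceptance rule is not stated here.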

4. Experimental Results and Analysis

4.1. Benchmark Functions Overview

4.2. Performance Comparison with Other Algorithms

5. Application to Production Optimization

5.1. Reservoir Model Description

5.2. Analysis and Discussion of Experimental Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Function | Function Name | Class | Optimum |
|---|---|---|---|
| F1 | Shifted and Rotated Bent Cigar Function | Unimodal | 100 |
| F2 | Shifted and Rotated Zakharov Function | Unimodal | 300 |
| F3 | Shifted and Rotated Rosenbrock’s Function | Multimodal | 400 |
| F4 | Shifted and Rotated Rastrigin’s Function | Multimodal | 500 |
| F5 | Shifted and Rotated Expanded Schaffer’s F6 Function | Multimodal | 600 |
| F6 | Shifted and Rotated Lunacek Bi-Rastrigin Function | Multimodal | 700 |
| F7 | Shifted and Rotated Non-Continuous Rastrigin’s Function | Multimodal | 800 |
| F8 | Shifted and Rotated Lévy Function | Multimodal | 900 |
| F9 | Shifted and Rotated Schwefel’s Function | Multimodal | 1000 |
| F10 | Hybrid Function 1 (N = 3) | Hybrid | 1100 |
| F11 | Hybrid Function 2 (N = 3) | Hybrid | 1200 |
| F12 | Hybrid Function 3 (N = 3) | Hybrid | 1300 |
| F13 | Hybrid Function 4 (N = 4) | Hybrid | 1400 |
| F14 | Hybrid Function 5 (N = 4) | Hybrid | 1500 |
| F15 | Hybrid Function 6 (N = 4) | Hybrid | 1600 |
| F16 | Hybrid Function 7 (N = 5) | Hybrid | 1700 |
| F17 | Hybrid Function 8 (N = 5) | Hybrid | 1800 |
| F18 | Hybrid Function 9 (N = 5) | Hybrid | 1900 |
| F19 | Hybrid Function 10 (N = 6) | Hybrid | 2000 |
| F20 | Composition Function 1 (N = 3) | Composition | 2100 |
| F21 | Composition Function 2 (N = 3) | Composition | 2200 |
| F22 | Composition Function 3 (N = 4) | Composition | 2300 |
| F23 | Composition Function 4 (N = 4) | Composition | 2400 |
| F24 | Composition Function 5 (N = 5) | Composition | 2500 |
| F25 | Composition Function 6 (N = 5) | Composition | 2600 |
| F26 | Composition Function 7 (N = 6) | Composition | 2700 |
| F27 | Composition Function 8 (N = 6) | Composition | 2800 |
| F28 | Composition Function 9 (N = 3) | Composition | 2900 |
| F29 | Composition Function 10 (N = 3) | Composition | 3000 |
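To make the "Optimum" column concrete, the sketch below implements the textbook base forms of three of the listed functions (Bent Cigar, Zakharov, Rastrigin) and adds the constant offset from the table. This is a simplification under stated assumptions: the shift vectors and rotation matrices of the official CEC2017 suite are omitted, so the minimum sits at the origin here, and the helper `with_bias` is a hypothetical name introduced for this example.

```python
import math


def bent_cigar(x):
    """Base Bent Cigar: x_1^2 + 10^6 * sum of x_i^2 for i >= 2."""
    return x[0] ** 2 + 1e6 * sum(v * v for v in x[1:])


def zakharov(x):
    """Base Zakharov: sum x_i^2 + (sum 0.5*i*x_i)^2 + (sum 0.5*i*x_i)^4."""
    s1 = sum(v * v for v in x)
    s2 = sum(0.5 * (i + 1) * v for i, v in enumerate(x))
    return s1 + s2 ** 2 + s2 ** 4


def rastrigin(x):
    """Base Rastrigin: sum of x_i^2 - 10*cos(2*pi*x_i) + 10."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def with_bias(base, bias):
    """Add the constant offset listed in the Optimum column."""
    return lambda x: base(x) + bias


# No shift or rotation is applied (assumption), so the optimum is the origin.
f1 = with_bias(bent_cigar, 100.0)
f2 = with_bias(zakharov, 300.0)
f4 = with_bias(rastrigin, 500.0)

origin = [0.0] * 10
print(f1(origin), f2(origin), f4(origin))  # 100.0 300.0 500.0
```

Reported averages in the results tables are raw function values, which is why well-converged entries cluster near these offsets (for example, values close to 3.0000 × 10^2 on F2).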

| Algorithm | F1 Avg | F1 Std | F2 Avg | F2 Std | F3 Avg | F3 Std |
|---|---|---|---|---|---|---|
| CCESC | 3.5255 × 10^3 | 3.8954 × 10^3 | 1.0835 × 10^3 | 5.3427 × 10^2 | 4.7483 × 10^2 = | 2.9002 × 10^1 |
| ESC | 3.3872 × 10^3 = | 3.9748 × 10^3 | 6.3777 × 10^2 − | 4.5533 × 10^2 | 4.6240 × 10^2 + | 3.0354 × 10^1 |
| DE | 2.7647 × 10^3 = | 4.4090 × 10^3 | 1.9190 × 10^4 + | 5.0403 × 10^3 | 4.8943 × 10^2 + | 8.7689 × 10^0 |
| GWO | 1.4755 × 10^9 + | 1.1091 × 10^9 | 3.1543 × 10^4 + | 1.0232 × 10^4 | 6.1372 × 10^2 + | 1.0357 × 10^2 |
| MFO | 1.0161 × 10^10 + | 7.5321 × 10^9 | 9.2600 × 10^4 + | 6.6262 × 10^4 | 1.3525 × 10^3 + | 7.9515 × 10^2 |
| SCA | 1.2752 × 10^10 + | 2.3869 × 10^9 | 3.8462 × 10^4 + | 7.0213 × 10^3 | 1.4624 × 10^3 + | 3.2765 × 10^2 |
| PSO | 3.1524 × 10^3 = | 3.0479 × 10^3 | 3.0000 × 10^2 − | 4.8342 × 10^−3 | 4.6206 × 10^2 − | 2.5274 × 10^1 |
| PO | 4.2669 × 10^7 + | 5.6668 × 10^7 | 5.6686 × 10^3 + | 3.8437 × 10^3 | 5.2065 × 10^2 + | 2.3061 × 10^1 |
| BA | 5.5491 × 10^5 + | 2.4723 × 10^5 | 3.0010 × 10^2 − | 7.6706 × 10^−2 | 4.7381 × 10^2 = | 3.5388 × 10^1 |
| FA | 1.4343 × 10^10 + | 1.4273 × 10^9 | 6.1478 × 10^4 + | 1.0700 × 10^4 | 1.3581 × 10^3 + | 1.2564 × 10^2 |

| Algorithm | F4 Avg | F4 Std | F5 Avg | F5 Std | F6 Avg | F6 Std |
|---|---|---|---|---|---|---|
| CCESC | 5.5037 × 10^2 | 7.8167 × 10^0 | 6.0000 × 10^2 | 6.5666 × 10^−6 | 7.9386 × 10^2 | 9.0650 × 10^0 |
| ESC | 5.2816 × 10^2 − | 7.8871 × 10^0 | 6.0010 × 10^2 + | 1.1095 × 10^−1 | 7.6211 × 10^2 − | 9.3598 × 10^0 |
| DE | 6.1306 × 10^2 + | 8.8681 × 10^0 | 6.0000 × 10^2 = | 0.0000 × 10^0 | 8.4353 × 10^2 + | 7.2832 × 10^0 |
| GWO | 6.0056 × 10^2 + | 2.4028 × 10^1 | 6.0595 × 10^2 + | 3.0534 × 10^0 | 8.5675 × 10^2 + | 3.3805 × 10^1 |
| MFO | 7.0553 × 10^2 + | 4.2114 × 10^1 | 6.3645 × 10^2 + | 1.1182 × 10^1 | 1.2015 × 10^3 + | 2.3191 × 10^2 |
| SCA | 7.7670 × 10^2 + | 1.7574 × 10^1 | 6.4961 × 10^2 + | 4.5914 × 10^0 | 1.1169 × 10^3 + | 3.3466 × 10^1 |
| PSO | 6.9392 × 10^2 + | 4.1274 × 10^1 | 6.4342 × 10^2 + | 6.5115 × 10^0 | 1.0271 × 10^3 + | 6.1418 × 10^1 |
| PO | 7.3187 × 10^2 + | 4.6764 × 10^1 | 6.5719 × 10^2 + | 7.5367 × 10^0 | 1.1326 × 10^3 + | 7.4220 × 10^1 |
| BA | 8.4090 × 10^2 + | 7.4849 × 10^1 | 6.7434 × 10^2 + | 7.0809 × 10^0 | 1.6100 × 10^3 + | 1.8629 × 10^2 |
| FA | 7.5838 × 10^2 + | 1.1129 × 10^1 | 6.4328 × 10^2 + | 3.3706 × 10^0 | 1.3901 × 10^3 + | 3.4637 × 10^1 |

| Algorithm | F7 Avg | F7 Std | F8 Avg | F8 Std | F9 Avg | F9 Std |
|---|---|---|---|---|---|---|
| CCESC | 8.5047 × 10^2 | 6.8607 × 10^0 | 9.0126 × 10^2 | 1.2168 × 10^0 | 3.5882 × 10^3 | 2.4471 × 10^2 |
| ESC | 8.2653 × 10^2 − | 6.7751 × 10^0 | 9.2665 × 10^2 + | 2.1323 × 10^1 | 3.2137 × 10^3 − | 7.9416 × 10^2 |
| DE | 9.1027 × 10^2 + | 6.2547 × 10^0 | 9.0000 × 10^2 − | 9.6743 × 10^−14 | 5.8136 × 10^3 + | 2.8900 × 10^2 |
| GWO | 8.8931 × 10^2 + | 2.1397 × 10^1 | 1.7638 × 10^3 + | 6.3256 × 10^2 | 4.0596 × 10^3 + | 6.3352 × 10^2 |
| MFO | 1.0012 × 10^3 + | 4.9928 × 10^1 | 7.6299 × 10^3 + | 2.2116 × 10^3 | 5.5813 × 10^3 + | 9.2648 × 10^2 |
| SCA | 1.0519 × 10^3 + | 1.7228 × 10^1 | 5.2489 × 10^3 + | 1.0536 × 10^3 | 8.1075 × 10^3 + | 3.3474 × 10^2 |
| PSO | 9.4191 × 10^2 + | 2.4900 × 10^1 | 4.0330 × 10^3 + | 5.9325 × 10^2 | 4.8212 × 10^3 + | 5.3623 × 10^2 |
| PO | 9.8016 × 10^2 + | 3.0531 × 10^1 | 5.0865 × 10^3 + | 7.6591 × 10^2 | 5.7686 × 10^3 + | 7.8686 × 10^2 |
| BA | 1.0472 × 10^3 + | 5.6682 × 10^1 | 1.2756 × 10^4 + | 4.4659 × 10^3 | 5.4802 × 10^3 + | 6.2913 × 10^2 |
| FA | 1.0552 × 10^3 + | 1.3119 × 10^1 | 5.2547 × 10^3 + | 5.3521 × 10^2 | 8.0185 × 10^3 + | 2.9787 × 10^2 |

| Algorithm | F10 Avg | F10 Std | F11 Avg | F11 Std | F12 Avg | F12 Std |
|---|---|---|---|---|---|---|
| CCESC | 1.1399 × 10^3 | 2.6975 × 10^1 | 3.0823 × 10^5 | 2.0745 × 10^5 | 1.3200 × 10^4 | 1.5007 × 10^4 |
| ESC | 1.1684 × 10^3 + | 3.6141 × 10^1 | 3.9234 × 10^5 = | 2.4493 × 10^5 | 1.5499 × 10^4 = | 1.0559 × 10^4 |
| DE | 1.1568 × 10^3 + | 2.4300 × 10^1 | 1.6569 × 10^6 + | 7.9478 × 10^5 | 3.0594 × 10^4 + | 2.0254 × 10^4 |
| GWO | 1.8756 × 10^3 + | 7.1996 × 10^2 | 6.9142 × 10^7 + | 8.6664 × 10^7 | 2.2559 × 10^7 + | 5.8838 × 10^7 |
| MFO | 5.7541 × 10^3 + | 5.0815 × 10^3 | 3.7185 × 10^8 + | 5.0196 × 10^8 | 9.3223 × 10^7 + | 3.0688 × 10^8 |
| SCA | 2.0663 × 10^3 + | 2.1876 × 10^2 | 1.1999 × 10^9 + | 3.7840 × 10^8 | 3.7641 × 10^8 + | 1.7320 × 10^8 |
| PSO | 1.2075 × 10^3 + | 3.2471 × 10^1 | 3.3489 × 10^4 − | 2.0523 × 10^4 | 1.2396 × 10^4 = | 1.5268 × 10^4 |
| PO | 1.3055 × 10^3 + | 6.5945 × 10^1 | 3.3512 × 10^7 + | 3.5698 × 10^7 | 1.4785 × 10^5 + | 1.1272 × 10^5 |
| BA | 1.3242 × 10^3 + | 8.0755 × 10^1 | 2.2536 × 10^6 + | 1.7036 × 10^6 | 3.0314 × 10^5 + | 1.1221 × 10^5 |
| FA | 3.5574 × 10^3 + | 4.3103 × 10^2 | 1.4741 × 10^9 + | 3.8706 × 10^8 | 6.0224 × 10^8 + | 1.6634 × 10^8 |

| Algorithm | F13 Avg | F13 Std | F14 Avg | F14 Std | F15 Avg | F15 Std |
|---|---|---|---|---|---|---|
| CCESC | 2.4961 × 10^4 | 1.9010 × 10^4 | 5.2832 × 10^3 | 5.9862 × 10^3 | 1.9362 × 10^3 | 1.8960 × 10^2 |
| ESC | 1.4643 × 10^4 − | 1.3725 × 10^4 | 7.2748 × 10^3 = | 7.5329 × 10^3 | 2.0617 × 10^3 + | 2.1360 × 10^2 |
| DE | 6.4083 × 10^4 + | 4.3831 × 10^4 | 6.7605 × 10^3 + | 3.7226 × 10^3 | 2.0987 × 10^3 + | 1.3508 × 10^2 |
| GWO | 2.1191 × 10^5 + | 3.0916 × 10^5 | 3.4786 × 10^5 + | 7.0974 × 10^5 | 2.3503 × 10^3 + | 2.6201 × 10^2 |
| MFO | 1.6461 × 10^5 + | 3.2360 × 10^5 | 3.0154 × 10^7 + | 1.6484 × 10^8 | 3.2261 × 10^3 + | 3.8883 × 10^2 |
| SCA | 1.2213 × 10^5 + | 6.0385 × 10^4 | 1.3110 × 10^7 + | 1.1163 × 10^7 | 3.5654 × 10^3 + | 2.4475 × 10^2 |
| PSO | 6.2203 × 10^3 − | 3.9012 × 10^3 | 7.7850 × 10^3 + | 6.5680 × 10^3 | 2.9417 × 10^3 + | 3.9223 × 10^2 |
| PO | 4.2016 × 10^4 + | 2.9247 × 10^4 | 3.8824 × 10^4 + | 2.5295 × 10^4 | 3.0436 × 10^3 + | 3.1588 × 10^2 |
| BA | 6.5404 × 10^3 − | 4.0829 × 10^3 | 9.1369 × 10^4 + | 4.9670 × 10^4 | 3.2934 × 10^3 + | 4.4017 × 10^2 |
| FA | 1.9705 × 10^5 + | 8.7921 × 10^4 | 6.6901 × 10^7 + | 3.0210 × 10^7 | 3.4141 × 10^3 + | 1.5962 × 10^2 |

| Algorithm | F16 Avg | F16 Std | F17 Avg | F17 Std | F18 Avg | F18 Std |
|---|---|---|---|---|---|---|
| CCESC | 1.7798 × 10^3 | 5.8233 × 10^1 | 1.9710 × 10^5 | 1.7757 × 10^5 | 8.0167 × 10^3 | 8.9592 × 10^3 |
| ESC | 1.8868 × 10^3 + | 1.2433 × 10^2 | 2.8857 × 10^5 = | 3.3036 × 10^5 | 7.3902 × 10^3 = | 5.8539 × 10^3 |
| DE | 1.8644 × 10^3 + | 5.9255 × 10^1 | 3.2283 × 10^5 + | 1.6230 × 10^5 | 7.2213 × 10^3 = | 4.1013 × 10^3 |
| GWO | 1.9867 × 10^3 + | 1.4623 × 10^2 | 4.5734 × 10^5 + | 4.0013 × 10^5 | 4.0608 × 10^5 + | 4.8087 × 10^5 |
| MFO | 2.5587 × 10^3 + | 2.0382 × 10^2 | 3.1724 × 10^6 + | 6.9905 × 10^6 | 1.3544 × 10^7 + | 3.6473 × 10^7 |
| SCA | 2.3984 × 10^3 + | 1.2507 × 10^2 | 3.0253 × 10^6 + | 1.4421 × 10^6 | 2.6512 × 10^7 + | 1.5133 × 10^7 |
| PSO | 2.4593 × 10^3 + | 2.9558 × 10^2 | 1.4883 × 10^5 = | 8.6525 × 10^4 | 1.0108 × 10^4 = | 1.0643 × 10^4 |
| PO | 2.3168 × 10^3 + | 2.2105 × 10^2 | 5.6943 × 10^5 + | 3.8407 × 10^5 | 6.4285 × 10^5 + | 6.9384 × 10^5 |
| BA | 2.8405 × 10^3 + | 3.2629 × 10^2 | 2.2019 × 10^5 = | 1.3705 × 10^5 | 6.4719 × 10^5 + | 2.9608 × 10^5 |
| FA | 2.5289 × 10^3 + | 1.0249 × 10^2 | 3.6599 × 10^6 + | 1.6108 × 10^6 | 9.3336 × 10^7 + | 4.5101 × 10^7 |

| Algorithm | F19 Avg | F19 Std | F20 Avg | F20 Std | F21 Avg | F21 Std |
|---|---|---|---|---|---|---|
| CCESC | 2.1155 × 10^3 | 6.9545 × 10^1 | 2.3516 × 10^3 | 6.5957 × 10^0 | 2.4905 × 10^3 | 7.2399 × 10^2 |
| ESC | 2.2513 × 10^3 + | 1.1968 × 10^2 | 2.3285 × 10^3 − | 8.4475 × 10^0 | 3.0059 × 10^3 + | 1.1838 × 10^3 |
| DE | 2.1315 × 10^3 = | 5.9738 × 10^1 | 2.4108 × 10^3 + | 7.5239 × 10^0 | 3.7545 × 10^3 + | 1.9345 × 10^3 |
| GWO | 2.3525 × 10^3 + | 1.2895 × 10^2 | 2.3855 × 10^3 + | 2.5234 × 10^1 | 4.5912 × 10^3 + | 1.3127 × 10^3 |
| MFO | 2.6958 × 10^3 + | 2.0705 × 10^2 | 2.5007 × 10^3 + | 3.7422 × 10^1 | 6.4010 × 10^3 + | 1.5213 × 10^3 |
| SCA | 2.5807 × 10^3 + | 1.4258 × 10^2 | 2.5478 × 10^3 + | 1.9573 × 10^1 | 8.8320 × 10^3 + | 1.9163 × 10^3 |
| PSO | 2.6068 × 10^3 + | 1.9247 × 10^2 | 2.4698 × 10^3 + | 3.5134 × 10^1 | 5.1165 × 10^3 + | 2.2689 × 10^3 |
| PO | 2.4862 × 10^3 + | 1.6903 × 10^2 | 2.5023 × 10^3 + | 4.4799 × 10^1 | 2.7944 × 10^3 + | 1.0815 × 10^3 |
| BA | 2.9724 × 10^3 + | 2.2133 × 10^2 | 2.6438 × 10^3 + | 6.9904 × 10^1 | 6.7510 × 10^3 + | 1.7252 × 10^3 |
| FA | 2.5833 × 10^3 + | 1.0234 × 10^2 | 2.5417 × 10^3 + | 9.7253 × 10^0 | 3.8372 × 10^3 + | 1.2854 × 10^2 |

| Algorithm | F22 Avg | F22 Std | F23 Avg | F23 Std | F24 Avg | F24 Std |
|---|---|---|---|---|---|---|
| CCESC | 2.6986 × 10^3 | 9.0945 × 10^0 | 2.8647 × 10^3 | 8.6917 × 10^0 | 2.8898 × 10^3 | 7.5304 × 10^0 |
| ESC | 2.6880 × 10^3 − | 1.1336 × 10^1 | 2.8517 × 10^3 − | 1.0709 × 10^1 | 2.8976 × 10^3 + | 1.5369 × 10^1 |
| DE | 2.7545 × 10^3 + | 8.0664 × 10^0 | 2.9596 × 10^3 + | 1.1611 × 10^1 | 2.8874 × 10^3 − | 2.5098 × 10^−1 |
| GWO | 2.7491 × 10^3 + | 3.8744 × 10^1 | 2.9293 × 10^3 + | 5.6197 × 10^1 | 3.0034 × 10^3 + | 6.0756 × 10^1 |
| MFO | 2.8435 × 10^3 + | 3.8511 × 10^1 | 2.9993 × 10^3 + | 4.2330 × 10^1 | 3.3859 × 10^3 + | 5.9192 × 10^2 |
| SCA | 2.9857 × 10^3 + | 3.3269 × 10^1 | 3.1556 × 10^3 + | 3.0030 × 10^1 | 3.2041 × 10^3 + | 5.2181 × 10^1 |
| PSO | 3.2924 × 10^3 + | 1.9323 × 10^2 | 3.3705 × 10^3 + | 1.0796 × 10^2 | 2.8805 × 10^3 − | 6.3784 × 10^0 |
| PO | 2.9715 × 10^3 + | 6.9490 × 10^1 | 3.1048 × 10^3 + | 6.8115 × 10^1 | 2.9227 × 10^3 + | 2.7850 × 10^1 |
| BA | 3.3136 × 10^3 + | 1.5781 × 10^2 | 3.3232 × 10^3 + | 1.2567 × 10^2 | 2.9127 × 10^3 + | 2.4890 × 10^1 |
| FA | 2.9154 × 10^3 + | 9.4232 × 10^0 | 3.0645 × 10^3 + | 1.1776 × 10^1 | 3.5518 × 10^3 + | 1.2014 × 10^2 |

| Algorithm | F25 Avg | F25 Std | F26 Avg | F26 Std | F27 Avg | F27 Std |
|---|---|---|---|---|---|---|
| CCESC | 4.0531 × 10^3 | 1.1532 × 10^2 | 3.2141 × 10^3 | 9.3902 × 10^0 | 3.1715 × 10^3 | 6.4540 × 10^1 |
| ESC | 4.0841 × 10^3 = | 1.8511 × 10^2 | 3.2302 × 10^3 + | 1.7731 × 10^1 | 3.1789 × 10^3 = | 5.3878 × 10^1 |
| DE | 4.6572 × 10^3 + | 1.2058 × 10^2 | 3.2068 × 10^3 − | 3.3581 × 10^0 | 3.1779 × 10^3 = | 5.7495 × 10^1 |
| GWO | 4.7819 × 10^3 + | 3.5736 × 10^2 | 3.2393 × 10^3 + | 1.7523 × 10^1 | 3.4680 × 10^3 + | 2.3047 × 10^2 |
| MFO | 5.9247 × 10^3 + | 6.3639 × 10^2 | 3.2603 × 10^3 + | 2.9104 × 10^1 | 4.6385 × 10^3 + | 1.0065 × 10^3 |
| SCA | 6.9798 × 10^3 + | 3.3407 × 10^2 | 3.3936 × 10^3 + | 4.5056 × 10^1 | 3.8195 × 10^3 + | 9.7138 × 10^1 |
| PSO | 6.3088 × 10^3 + | 2.3791 × 10^3 | 3.3135 × 10^3 = | 2.8523 × 10^2 | 3.1467 × 10^3 = | 6.2260 × 10^1 |
| PO | 6.2389 × 10^3 + | 1.5609 × 10^3 | 3.3153 × 10^3 + | 4.9880 × 10^1 | 3.3089 × 10^3 + | 5.5567 × 10^1 |
| BA | 8.8007 × 10^3 + | 2.6331 × 10^3 | 3.4038 × 10^3 + | 9.5290 × 10^1 | 3.1534 × 10^3 = | 6.4405 × 10^1 |
| FA | 6.4862 × 10^3 + | 1.8155 × 10^2 | 3.3339 × 10^3 + | 1.6953 × 10^1 | 3.8787 × 10^3 + | 9.0911 × 10^1 |

| Algorithm | F28 Avg | F28 Std | F29 Avg | F29 Std |
|---|---|---|---|---|
| CCESC | 3.4364 × 10^3 | 5.4729 × 10^1 | 7.3284 × 10^3 | 1.8753 × 10^3 |
| ESC | 3.5012 × 10^3 + | 1.2967 × 10^2 | 1.0768 × 10^4 + | 2.2758 × 10^3 |
| DE | 3.5250 × 10^3 + | 6.9103 × 10^1 | 1.1751 × 10^4 + | 3.3232 × 10^3 |
| GWO | 3.7476 × 10^3 + | 1.7946 × 10^2 | 5.8495 × 10^6 + | 5.5302 × 10^6 |
| MFO | 4.1130 × 10^3 + | 2.4497 × 10^2 | 7.5618 × 10^5 + | 1.0771 × 10^6 |
| SCA | 4.5913 × 10^3 + | 2.9433 × 10^2 | 7.0828 × 10^7 + | 3.0432 × 10^7 |
| PSO | 3.9840 × 10^3 + | 3.2357 × 10^2 | 5.0228 × 10^3 − | 1.7910 × 10^3 |
| PO | 4.3708 × 10^3 + | 3.4611 × 10^2 | 6.2783 × 10^6 + | 4.9233 × 10^6 |
| BA | 4.9522 × 10^3 + | 5.0689 × 10^2 | 1.2934 × 10^6 + | 7.2635 × 10^5 |
| FA | 4.7103 × 10^3 + | 1.5665 × 10^2 | 9.7735 × 10^7 + | 2.3550 × 10^7 |

| Algorithm | Overall rank | +/=/− | Average rank |
|---|---|---|---|
| CCESC | 1 | ~ | 2.1034 |
| ESC | 2 | 11/9/9 | 2.4828 |
| DE | 3 | 21/4/4 | 3.3448 |
| GWO | 5 | 29/0/0 | 5.2069 |
| MFO | 8 | 29/0/0 | 7.4483 |
| SCA | 9 | 29/0/0 | 8.3448 |
| PSO | 4 | 17/6/6 | 4.2414 |
| PO | 6 | 29/0/0 | 6.1034 |
| BA | 7 | 24/3/2 | 7.1379 |
| FA | 10 | 29/0/0 | 8.5862 |
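As a hedged illustration of how an average-rank column of this kind can be produced, the sketch below ranks the ten algorithms on each function by mean value (lower is better, ties share the mean rank) and averages the ranks across functions. It uses only the F1 to F3 averages from the results tables, so it will not reproduce the reported column, which spans all 29 functions; the function name `average_ranks` and the tie-handling rule are assumptions. The final check relies on a general property: with 10 algorithms, the average ranks must sum to 10 × 11 / 2 = 55.

```python
def average_ranks(scores):
    """scores[f][a] is the mean value of algorithm a on function f
    (lower is better). Returns each algorithm's mean rank over all
    functions; tied values share the average of their rank positions."""
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=row.__getitem__)
        pos = 0
        while pos < n_alg:
            end = pos
            while end + 1 < n_alg and row[order[end + 1]] == row[order[pos]]:
                end += 1
            shared = (pos + end) / 2 + 1  # mean of 1-based ranks pos+1..end+1
            for k in range(pos, end + 1):
                totals[order[k]] += shared
            pos = end + 1
    return [t / len(scores) for t in totals]


names = ["CCESC", "ESC", "DE", "GWO", "MFO", "SCA", "PSO", "PO", "BA", "FA"]

# Avg columns for F1, F2, F3, copied from the results tables.
avg_f1_f3 = [
    [3.5255e3, 3.3872e3, 2.7647e3, 1.4755e9, 1.0161e10,
     1.2752e10, 3.1524e3, 4.2669e7, 5.5491e5, 1.4343e10],
    [1.0835e3, 6.3777e2, 1.9190e4, 3.1543e4, 9.2600e4,
     3.8462e4, 3.0000e2, 5.6686e3, 3.0010e2, 6.1478e4],
    [4.7483e2, 4.6240e2, 4.8943e2, 6.1372e2, 1.3525e3,
     1.4624e3, 4.6206e2, 5.2065e2, 4.7381e2, 1.3581e3],
]

ranks = average_ranks(avg_f1_f3)
for name, r in zip(names, ranks):
    print(f"{name}: {r:.4f}")

# Average-rank column as reported in the table above.
reported_avg = [2.1034, 2.4828, 3.3448, 5.2069, 7.4483,
                8.3448, 4.2414, 6.1034, 7.1379, 8.5862]
n = len(names)
expected_total = n * (n + 1) / 2  # 55.0 for ten algorithms
print(sum(reported_avg), expected_total)
```

The reported column passes this consistency check to within rounding, which supports reading it as a mean rank over the 29 functions.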

| Algorithm | Mean NPV (USD) | Std (USD) | Best (USD) | Worst (USD) |
|---|---|---|---|---|
| CCESC | 9.457 × 10^8 | 1.532 × 10^7 | 9.782 × 10^8 | 9.146 × 10^8 |
| ESC | 8.859 × 10^8 | 2.510 × 10^7 | 9.403 × 10^8 | 8.308 × 10^8 |
| DE | 8.986 × 10^8 | 3.121 × 10^7 | 9.553 × 10^8 | 8.391 × 10^8 |
| GWO | 9.053 × 10^8 | 2.784 × 10^7 | 9.648 × 10^8 | 8.557 × 10^8 |
| MFO | 8.247 × 10^8 | 4.542 × 10^7 | 8.905 × 10^8 | 7.692 × 10^8 |
| SCA | 8.704 × 10^8 | 3.487 × 10^7 | 9.251 × 10^8 | 8.107 × 10^8 |
| PSO | 8.552 × 10^8 | 3.939 × 10^7 | 9.106 × 10^8 | 7.895 × 10^8 |
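The NPV table can be read more easily in relative terms. The short sketch below takes the mean and standard deviation values from the table and computes two derived quantities: the percentage gain in mean NPV of CCESC over each competitor, and each algorithm's coefficient of variation. Both derived metrics and the helper names are choices made for this illustration and do not appear in the source.

```python
# (mean NPV, standard deviation) in USD, copied from the NPV table.
npv_usd = {
    "CCESC": (9.457e8, 1.532e7),
    "ESC": (8.859e8, 2.510e7),
    "DE": (8.986e8, 3.121e7),
    "GWO": (9.053e8, 2.784e7),
    "MFO": (8.247e8, 4.542e7),
    "SCA": (8.704e8, 3.487e7),
    "PSO": (8.552e8, 3.939e7),
}


def relative_gain_pct(reference_mean, other_mean):
    """Percentage by which reference_mean exceeds other_mean."""
    return (reference_mean - other_mean) / other_mean * 100.0


def coefficient_of_variation_pct(mean, std):
    """Standard deviation as a percentage of the mean."""
    return std / mean * 100.0


ref_mean, _ = npv_usd["CCESC"]
gains = {
    name: relative_gain_pct(ref_mean, mean)
    for name, (mean, _) in npv_usd.items()
    if name != "CCESC"
}
cv = {
    name: coefficient_of_variation_pct(mean, std)
    for name, (mean, std) in npv_usd.items()
}

for name, gain in gains.items():
    print(f"CCESC vs {name}: +{gain:.2f}% mean NPV")
for name, value in cv.items():
    print(f"{name}: CV = {value:.2f}%")
```

On these figures, CCESC has both the highest mean NPV and the smallest spread relative to its mean, and its worst run (9.146 × 10^8 USD) still exceeds the mean NPV of every other algorithm in the table.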


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, Y.; Li, X. CCESC: A Crisscross-Enhanced Escape Algorithm for Global and Reservoir Production Optimization. Biomimetics 2025, 10, 529. https://doi.org/10.3390/biomimetics10080529
