1. Introduction

With the rapid pace of urbanization and ongoing infrastructure development, high-performance concrete (HPC) has found widespread application in high-rise buildings [1], bridge engineering [2], nuclear power plants [3], and other sectors due to its excellent mechanical properties, durability, and workability [4,5]. Among the various performance indicators, concrete compressive strength is a critical factor influencing the safety and durability of engineering structures [6,7,8]. Accurate prediction of the compressive strength of HPC not only ensures structural safety and durability but also optimizes material utilization, reduces waste, and advances sustainable development within the construction sector. Traditionally, compressive strength has been determined experimentally. However, this approach is time-consuming and costly, and it is also affected by environmental factors and human error, making it difficult to meet the dual demands of efficiency and accuracy in engineering design [9]. Consequently, the development of an efficient, stable, and highly accurate compressive strength prediction model has become a focal point of research in civil engineering and materials science [10,11,12].

In addressing the challenge of predicting concrete strength, various modeling approaches have been proposed, including multiple linear regression (MLR) [13,14], decision tree (DT) [15,16], support vector machine (SVM) [17,18], and other statistical and machine learning models [19]. However, due to the inherent nonlinearities and multivariate coupling in concrete materials, these methods often struggle to fully capture the complex nonlinear relationships between inputs and outputs, leading to limited prediction accuracy [20]. In contrast, artificial neural networks (ANN) [21], particularly backpropagation neural networks (BPNN) [22,23,24,25], have been widely used in concrete performance prediction because of their strong nonlinear modeling capabilities and adaptability.

Despite the modeling accuracy of BPNNs, their training relies on gradient descent optimization, which is prone to local minima and sensitive to the initial weights and the network architecture [26]. This sensitivity can adversely affect the model’s predictive accuracy, training efficiency, and convergence speed [27]. To mitigate these limitations, researchers have integrated various intelligent optimization algorithms with BPNNs to optimize their weights and thresholds, thereby enhancing the model’s global search capability and generalization ability. FZ El-Hassani et al. [28] developed a GA-optimized BPNN model for thyroid disease diagnosis, effectively addressing local minima and slow convergence. F Ma et al. [29] applied a GA-optimized BPNN to forecast regional logistics demand, improving prediction accuracy and reducing the number of iterations required. A Abdurrakhman et al. [30] proposed a PSO-optimized adaptive BPNN model to predict and optimize the output power of biogas-fueled generators, achieving high accuracy and effective parameter tuning. Z Wang et al. [31] proposed an XGBoost-assisted OTDBO-BPNN for predicting HPC compressive strength, which achieved superior accuracy by enhancing the global search and convergence performance of DBO through four strategic improvements. In addition, recent research has demonstrated the effectiveness of combining deep learning and 3D point cloud technologies to enhance the performance of intelligent optimization models in human–machine interaction and rehabilitation engineering [32,33], providing further insight for the development of hybrid intelligent frameworks in civil engineering contexts. Meanwhile, recent studies have also emphasized the significance of co-optimizing neural networks using adaptive evolutionary algorithms [34] and the increasing industrial relevance of hybrid nature-inspired population-based optimization methods [35], further supporting the rationale for our proposed hybrid framework.

Inspired by biomimetic principles, whereby natural systems often balance global exploration with local exploitation to adapt efficiently to complex environments [36,37], this paper introduces a novel BPNN model optimized by AP-IVYPSO, a hybrid of the ivy algorithm (IVYA) [38] and particle swarm optimization (PSO) [39] that switches between them using an adaptive probability based on fitness improvement. The IVYA, drawing inspiration from the natural growth process of ivy plants, mimics biological behaviors to perform efficient local search, while PSO simulates the social behavior of bird flocks to perform global search. By combining these biomimetic algorithms, the proposed model balances exploration and exploitation, addressing challenges faced by traditional optimization techniques.

The proposed model leverages the global search capability of PSO and the local search characteristics of the IVYA. By employing a fitness-based adaptive probability strategy, the model dynamically adjusts the update rules of PSO and IVYA, improving the accuracy, stability, and generalization ability of concrete strength predictions. To evaluate the model’s effectiveness, experiments were conducted on a publicly available concrete dataset, and the results were compared with those of traditional models such as BPNN, PSO-BP, GA-BP, and IVY-BP. The results show that the AP-IVYPSO-BP model outperforms these models across various evaluation metrics, particularly in robustness and prediction accuracy. The main contributions of this paper can be summarized as follows:

We introduce a new hybrid algorithm, AP-IVYPSO, which combines the IVYA and PSO, and incorporates an adaptive probability strategy based on fitness improvement. This biomimetic-inspired approach strikes a balance between global search capability and local search efficiency, effectively addressing the challenges faced by single optimization algorithms—such as getting stuck in local optima, slow convergence, and instability—when dealing with complex nonlinear problems.

Through comparison experiments with 10 widely recognized optimization algorithms (PSO, IVYA, HFPSO, HJSPSO, BOA, WOA, GOOSE, PSOBOA, NSM-BO, and FDB-AGSK) on 26 benchmark test functions, AP-IVYPSO demonstrates exceptional optimization capability and high stability.

When applied to optimize a BP neural network, AP-IVYPSO effectively mitigates the local optima issue typically faced by traditional gradient descent methods, significantly improving the stability of the model. Compared with existing prediction models, the AP-IVYPSO-BP model performs better on multiple evaluation metrics (R² = 0.9542, MAE = 3.0404, and RMSE = 3.7991), further validating the proposed approach.

The remainder of this paper is organized as follows:

Section 2 introduces the fundamentals of BPNN, PSO, and the IVYA.

Section 3 presents the construction and parameter optimization mechanism of the AP-IVYPSO-BP model.

Section 4 details the experimental setup, performance evaluation, and comparison with baseline models.

Section 5 presents the concluding remarks of this study and outlines directions for future research.

2. Materials and Methods

2.1. BP Neural Network

In recent years, the BPNN, a classical multilayer feedforward neural network, has been widely applied in nonlinear modeling, regression prediction, and classification tasks [40,41]. Its main strengths are its ability to capture intricate nonlinear relationships and its strong generalization capability, combined with a relatively interpretable model structure.

A BPNN is generally composed of three parts: an input layer, one or more hidden layers, and an output layer, with the layers interconnected by adjustable weights. During training, the network processes input data through forward propagation to produce outputs and then applies error backpropagation to iteratively adjust the weights and biases based on the difference between predicted and expected values. This learning mechanism enables the model to progressively reduce prediction errors and extract deep correlations among input features.
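The forward and backward passes described above can be illustrated with a minimal sketch. It assumes a single hidden layer with sigmoid activations, a linear output unit for regression, a squared-error loss, and plain per-sample gradient descent; the class name `TinyBPNN`, the toy data, and the learning rate are hypothetical choices for illustration, not the configuration used in this study.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyBPNN:
    """One hidden layer (sigmoid) and a linear output unit, for regression."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Forward propagation: inputs -> hidden activations -> scalar prediction
        h = [sigmoid(sum(w * xk for w, xk in zip(row, x)) + b)
             for row, b in zip(self.W1, self.b1)]
        y = sum(w * hj for w, hj in zip(self.W2, h)) + self.b2
        return h, y

    def train_step(self, x, target, lr=0.1):
        # Error backpropagation for the loss 0.5 * (y - target) ** 2
        h, y = self.forward(x)
        err = y - target                                   # dL/dy
        for j, hj in enumerate(h):
            delta_h = err * self.W2[j] * hj * (1.0 - hj)   # dL/dz_j, uses W2 before its update
            self.W2[j] -= lr * err * hj
            for k, xk in enumerate(x):
                self.W1[j][k] -= lr * delta_h * xk
            self.b1[j] -= lr * delta_h
        self.b2 -= lr * err
        return 0.5 * err * err


def mse(net, data):
    return sum((net.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


# Toy regression data (hypothetical): target = 1 + x0 + 0.5 * x1 on a 5 x 5 grid
data = [([a / 4, b / 4], 1 + a / 4 + 0.5 * b / 4) for a in range(5) for b in range(5)]
net = TinyBPNN(n_in=2, n_hidden=4)
before = mse(net, data)
for epoch in range(200):
    for x, t in data:
        net.train_step(x, t, lr=0.1)
after = mse(net, data)
print(f"MSE before: {before:.4f}, after: {after:.4f}")
```

The update inside `train_step` is the chain rule applied to the squared error. Its outcome depends on the random initial weights, which is the sensitivity that the swarm-based optimizers discussed below are meant to address.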

Although the use of gradient descent during training may lead to local optima, BPNNs remain popular due to their structural flexibility and adaptability. To further improve convergence speed and prediction accuracy, recent studies have integrated BPNNs with intelligent optimization algorithms, resulting in hybrid models with enhanced robustness and global search capabilities.

In this study, the BP neural network is used as the foundational model for predicting concrete strength. Instead of relying on the conventional backpropagation training method, its weights and biases are optimized through an external global search using a swarm intelligence algorithm.

Each candidate solution represents an initial set of network parameters. The swarm intelligence algorithm iteratively adjusts these parameters to minimize the prediction error on the validation dataset. This approach mitigates common issues associated with conventional training techniques, such as local minima and vanishing gradients.
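To make this encoding concrete, the following sketch shows one way a flat candidate vector can be decoded into layer weights and biases and scored by validation RMSE. The layer sizes, the row-major weight layout, the tanh hidden activation, and the linear output are assumptions for illustration; the helper names (`n_params`, `decode`, `predict`, `fitness_rmse`) are hypothetical.

```python
import math


def n_params(layers):
    # Weights (fan_in * fan_out) plus biases (fan_out) for every pair of layers
    return sum(a * b + b for a, b in zip(layers[:-1], layers[1:]))


def decode(theta, layers):
    """Split a flat candidate vector into per-layer (W, b) pairs (row-major W)."""
    params, pos = [], 0
    for fan_in, fan_out in zip(layers[:-1], layers[1:]):
        W = [theta[pos + r * fan_in: pos + (r + 1) * fan_in] for r in range(fan_out)]
        pos += fan_in * fan_out
        b = theta[pos: pos + fan_out]
        pos += fan_out
        params.append((W, b))
    return params


def predict(params, x):
    a = list(x)
    for idx, (W, b) in enumerate(params):
        z = [sum(w * ai for w, ai in zip(row, a)) + bj for row, bj in zip(W, b)]
        # tanh on hidden layers, identity on the output layer (assumed choice)
        a = z if idx == len(params) - 1 else [math.tanh(v) for v in z]
    return a[0]  # single-output regression


def fitness_rmse(theta, layers, X_val, y_val):
    """Fitness of one candidate: RMSE of the decoded network on validation data."""
    params = decode(theta, layers)
    se = sum((predict(params, x) - y) ** 2 for x, y in zip(X_val, y_val))
    return math.sqrt(se / len(y_val))


print(n_params([8, 10, 6, 1]))  # 163
```

For a hypothetical 8-10-6-1 network, each candidate vector has 163 entries (80 + 10 + 60 + 6 + 6 + 1), which is the dimension of the search space the optimizer must explore.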

2.2. PSO Algorithm

PSO, introduced by Kennedy and Eberhart in 1995, is a population-based metaheuristic inspired by the collective behavior observed in bird flocks and fish schools during foraging. By exchanging information among individuals within a population, the algorithm efficiently balances exploration of the global search space and exploitation of promising local regions to address complex optimization problems [42,43].

In PSO, each individual, referred to as a particle, represents a candidate solution and is characterized by its position vector $x_i$ and velocity vector $v_i$, where $i = 1, 2, \ldots, N$, $N$ denotes the population size, $x_i, v_i \in \mathbb{R}^D$, and $D$ denotes the problem dimensionality. At each iteration, a particle’s trajectory is updated based on three fundamental influences:

- (1) Inertia term: This retains a particle’s previous velocity, helping it continue in its current search direction and contributing to global exploration.

- (2) Cognitive term: This component reflects the particle’s own experience, guiding its movement toward its personal best position $p_i$.

- (3) Social term: Representing collective intelligence, this term steers particles toward the best position $g$ found so far by the entire swarm.

During the search, each particle’s position is therefore influenced by both its own best position found so far and the global best position found by the swarm.

The formulas for updating the particle’s position and velocity are presented in Equations (1) and (2), respectively:

$$x_i^{t+1} = x_i^{t} + v_i^{t+1} \quad (1)$$

$$v_i^{t+1} = \omega\, v_i^{t} + c_1 r_1 \left(p_i - x_i^{t}\right) + c_2 r_2 \left(g - x_i^{t}\right) \quad (2)$$

where $\omega$ denotes the inertia weight that regulates the trade-off between exploration and exploitation, $t$ denotes the current iteration index, $t = 1, 2, \ldots, T$, and $T$ is the maximum number of iterations. The parameters $c_1$ and $c_2$ are the cognitive and social acceleration coefficients, while $r_1$ and $r_2$ are uniformly distributed random numbers in the range [0, 1] that introduce stochasticity into the search process. The termination condition is reaching the maximum number of iterations, ensuring a balance between convergence quality and computational efficiency.
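Equations (1) and (2) can be sketched as follows for a minimization problem. The linearly decreasing inertia schedule (0.9 to 0.4), the coefficients c1 = c2 = 1.5, the clamping of positions to the bounds, and the sphere test function are assumptions made for this illustration, not settings taken from this study.

```python
import random


def sphere(x):
    return sum(v * v for v in x)


def pso(f, dim, lb, ub, n=30, T=200, c1=1.5, c2=1.5, w_max=0.9, w_min=0.4, seed=1):
    rng = random.Random(seed)
    X = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    V = [[0.0] * dim for _ in range(n)]
    P = [x[:] for x in X]                    # personal bests p_i
    Pf = [f(x) for x in X]
    g_idx = min(range(n), key=Pf.__getitem__)
    g, gf = P[g_idx][:], Pf[g_idx]           # global best g
    for t in range(T):
        w = w_max - (w_max - w_min) * t / T  # assumed linear inertia schedule
        for i in range(n):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Eq. (2): inertia + cognitive + social terms
                V[i][d] = (w * V[i][d]
                           + c1 * r1 * (P[i][d] - X[i][d])
                           + c2 * r2 * (g[d] - X[i][d]))
                # Eq. (1): move, then clamp to the search bounds
                X[i][d] = min(max(X[i][d] + V[i][d], lb), ub)
            fx = f(X[i])
            if fx < Pf[i]:
                P[i], Pf[i] = X[i][:], fx
                if fx < gf:                  # global best refreshed immediately
                    g, gf = X[i][:], fx
    return g, gf


best_x, best_f = pso(sphere, dim=5, lb=-5.0, ub=5.0)
print(best_f)
```

Here the global best is updated as soon as any particle improves on it (an asynchronous variant); a synchronous implementation would refresh it once per iteration instead.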

In the task of predicting concrete compressive strength, PSO is employed to optimize the parameters of the BP neural network. Each particle’s position vector encodes a complete set of initial weights and biases for the BP network, and its trajectory through the search space traces the path taken toward better network parameters. The population consists of several particles that co-evolve within the search space, which is the complex, non-convex space of network parameters. PSO dynamically adjusts each particle’s velocity and position based on both its personal best position and the global best position, guiding the entire population toward better solutions. The fitness function is the RMSE on the validation set. Through this iterative search, a good set of network parameters can be identified more rapidly, improving prediction accuracy and enhancing the model’s robustness.

Figure 1 illustrates the operational workflow of the PSO algorithm.

2.3. Ivy Algorithm

The IVYA is an optimization method based on swarm intelligence, drawing inspiration from the adaptive and exploratory nature of vine plants in the natural world. Vines exhibit dynamic behaviors such as climbing, stretching, and expanding as they seek vital resources like sunlight and nutrients. This biological strategy provides a conceptual foundation for tackling global optimization problems. The IVYA emulates several phases of ivy development, including propagation, vertical climbing, and lateral spreading [40]. The algorithm consists of the following four primary stages:

- (1) Population Initialization. At the outset, a population of potential solutions is generated. Let $N$ denote the number of individuals (the population size) and $D$ denote the dimensionality of the optimization problem. Each individual is represented as a $D$-dimensional vector $I_i = (I_{i1}, I_{i2}, \ldots, I_{iD})$, where $i = 1, 2, \ldots, N$, and the entire ivy population is given by $I = \{I_1, I_2, \ldots, I_N\}$. The initial positions of the individuals are randomly determined within the defined search boundaries using Equation (3):

$$I_i = I_{\min} + \mathrm{rand}(1, D) \odot \left(I_{\max} - I_{\min}\right) \quad (3)$$

where $\mathrm{rand}(1, D)$ denotes a $D$-dimensional vector of random numbers uniformly distributed between 0 and 1, and $\odot$ represents the Hadamard (elementwise) product of two vectors. The search boundaries are defined by $I_{\min}$ and $I_{\max}$, which represent the lower and upper bounds of the decision space, respectively.

- (2) Controlled Growth Dynamics. The population evolves in a structured manner that mimics ivy growth. The growth rate is assumed to vary over time according to the differential equation given in Equation (4), whose terms are a velocity factor, a nonlinear correction term, and the current growth rate. Individual updates are defined by Equation (5), which relates the growth variations at two successive time steps through a Gaussian-distributed random vector.

- (3) Sunlight-Driven Adaptation. In nature, vines grow directionally toward light sources, often attaching to structures that support upward movement. The IVYA captures this phototropic tendency by encouraging individuals to improve based on their best-performing neighbor. The optimal peer $I_{ii}$ for individual $I_i$ is chosen using Equation (6):

$$I_{ii} = \begin{cases} I_{(k-1)}, & k > 1 \\ I_i, & k = 1 \end{cases} \quad (6)$$

where $k$ represents the index of individual $I_i$ in the population sorted by fitness from best to worst, with $k \in \{1, 2, \ldots, N\}$. The selection procedure is as follows:

Sort the population by fitness from best to worst, producing a sorted sequence $I_{(1)}, I_{(2)}, \ldots, I_{(N)}$.

Find the position $k$ of individual $I_i$ in this sorted list.

If $k > 1$, the optimal peer is the immediately better-ranked neighbor $I_{(k-1)}$.

If $k = 1$, meaning $I_i$ is the current best individual $I_{(1)}$, then $I_{ii} = I_i$ itself.

This selection ensures that each individual learns from the nearest superior peer in the fitness ranking, mimicking the natural tendency of vines to grow toward more favorable structures.

The new state of each individual is calculated with Equations (7) and (8), in which a vector containing the absolute values of normally distributed components enhances diversity and exploration in the search space.

To replicate this behavior, the algorithm allows each individual

to identify and refer to the nearest neighbor

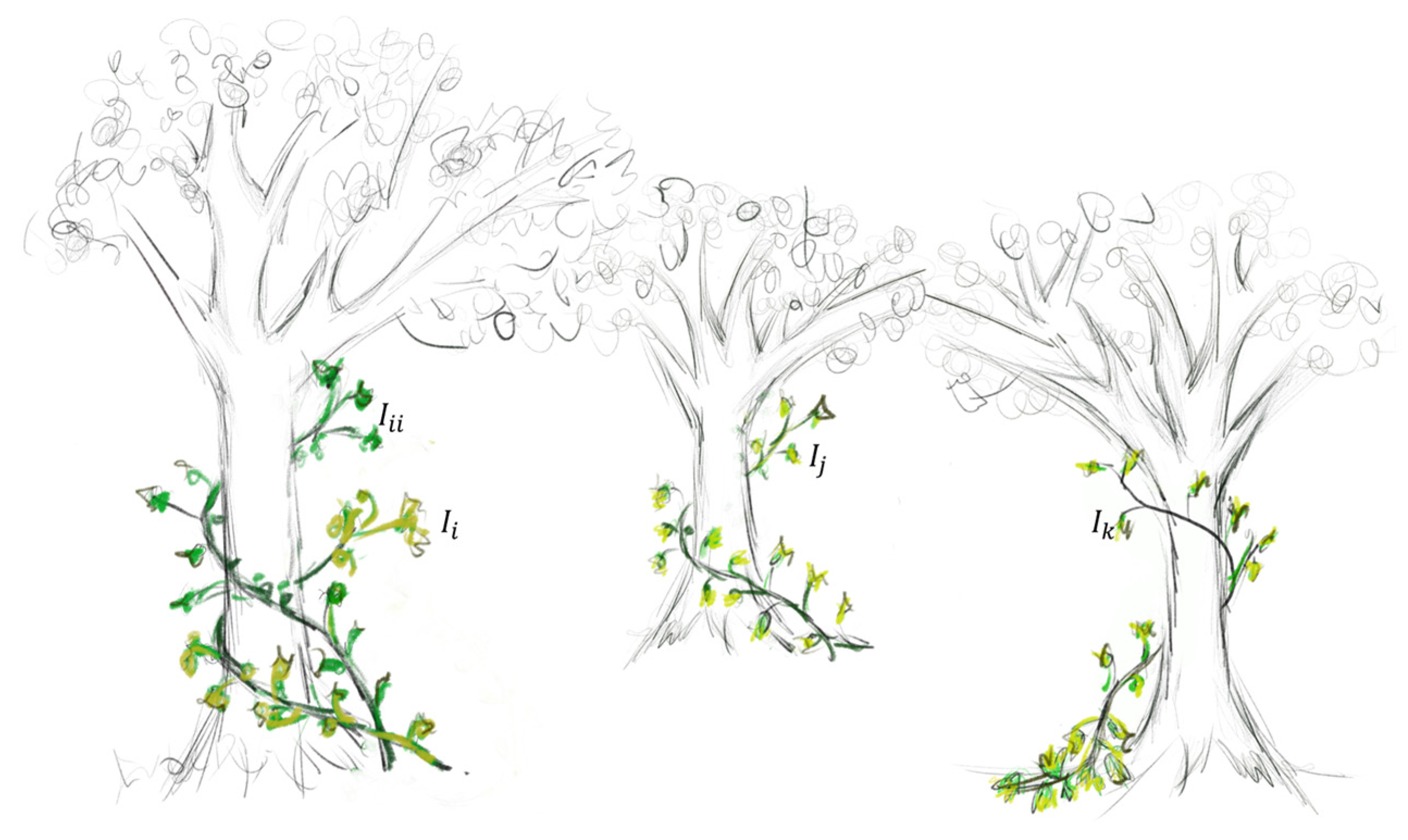

with superior fitness as a guide for its own evolution process. This mechanism, which mimics the natural tendency of vines to grow toward favorable conditions, is illustrated in

Figure 2.

The last primary stage is as follows:

- (4) Growth behavior and evolutionary adjustment. Once an individual has explored the global space and located its closest high-quality neighbor, it aligns its search direction toward the current global best solution. This phase emphasizes exploiting the local region around the global best solution to refine it, as formulated in Equations (9) and (10).
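Two of the steps above, random initialization by Equation (3) and nearest-better-neighbor selection by Equation (6), are simple enough to sketch directly. The sketch assumes a minimization problem (lower cost is better) and breaks ties by index; it does not reproduce the growth and update rules of Equations (4), (5), and (7)–(10). The function names are hypothetical.

```python
import random


def init_population(n, lb, ub, seed=0):
    """Eq. (3): I_i = I_min + rand(1, D) ⊙ (I_max - I_min), applied elementwise."""
    rng = random.Random(seed)
    return [[lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)]
            for _ in range(n)]


def nearest_better_neighbor(costs):
    """Eq. (6): return, for every individual i, the index of its guide I_ii.

    Costs are minimised, so 'best to worst' means ascending cost; Python's
    sort is stable, so ties keep their original index order.
    """
    order = sorted(range(len(costs)), key=costs.__getitem__)
    guide = [0] * len(costs)
    for k, i in enumerate(order):
        # k == 0 is the current best individual, which is its own guide
        guide[i] = i if k == 0 else order[k - 1]
    return guide


pop = init_population(4, lb=[-1.0, 0.0], ub=[1.0, 10.0])
print(nearest_better_neighbor([3.0, 1.0, 2.0]))  # [2, 1, 1]
```

In the example, individual 1 has the lowest cost and guides itself, individual 2 is guided by individual 1, and individual 0 (the worst) is guided by individual 2.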

In this study, the IVYA is employed to optimize the initial weights and biases of the BP neural network, thereby enhancing the accuracy of the network’s prediction of concrete compressive strength. In practice, each IVYA individual is represented as a vector, which encodes a complete set of neural network weights and bias parameters. The population consists of multiple such individuals, and the search space is defined by the high-dimensional, non-convex error function space associated with the BP network parameters. IVYA efficiently explores this complex space through mechanisms such as simulating vine extension, selecting growth nodes, and perturbing local solutions. In each iteration of the algorithm, a new growth node is generated, corresponding to a new configuration of neural network parameters, which is then used to construct the corresponding BP model and assess its prediction performance on both the training and validation datasets. The fitness function is defined as the root mean square error (RMSE) on the validation set, with the goal of minimizing this error to improve the network’s generalization ability. This approach effectively mitigates common challenges in neural network training, such as vanishing gradients and local optima.

The search process is terminated when the maximum number of iterations is reached.

Figure 3 illustrates the procedural flow of the ivy algorithm.

3. Construction and Parameter Optimization Mechanism of the AP-IVYPSO-BP Model

This section describes the construction and parameter optimization mechanism of the AP-IVYPSO-BP model. To address the challenges faced by traditional BPNNs, including local optima and low convergence efficiency during training, this paper introduces the AP-IVYPSO algorithm to optimize the BPNN. The proposed model integrates the global search capability of PSO with the local search features of the IVYA. Unlike simple hybrid strategies that alternate update steps or linearly weight two algorithms, AP-IVYPSO adaptively switches between PSO and IVYA according to a fitness-driven probability function. This mechanism coordinates the fast exploration of PSO with the refined exploitation of IVYA. The strategy is inspired by the natural adaptation of vine plants exposed to sunlight, which selectively grow toward better environmental conditions. This combination enhances the accuracy, stability, and generalization ability of concrete compressive strength prediction.

Specifically, in addressing the typical regression problem of predicting concrete strength, the BP network’s training process is redefined as a parameter optimization problem, where the AP-IVYPSO algorithm directly updates the parameters within the network architecture through iterative adjustments.

3.1. Implementation Mechanism of AP-IVYPSO

To improve the dynamic balance between global search capability and local refinement ability in complex engineering problems, this paper introduces an intelligent optimization algorithm based on an adaptive probability-guided mechanism, named AP-IVYPSO. The method incorporates an adaptive probability control mechanism into the core iteration process, dynamically adjusting the tendency of individuals to select search strategies at various stages. This facilitates the complementary coordination between the global exploration ability of PSO and the local fine search capability of the IVYA. By guiding the switching of search strategies at different stages of the evolutionary process, AP-IVYPSO effectively enhances both the global convergence performance and the local convergence accuracy of the algorithm. As a result, it achieves adaptive adjustment of the search direction and a seamless integration of multi-stage search behaviors. The method demonstrates excellent adaptability and robustness, making it particularly suitable for solving complex, nonlinear, and multimodal engineering optimization problems.

3.1.1. Adaptive Probability-Guided Mechanism

In the AP-IVYPSO algorithm, the core idea is to determine dynamically, in each iteration, whether the current individual uses the PSO update strategy or the IVYA update strategy, based on an adaptive probability control mechanism. This probability is calculated using Equation (11):

$$P(t) = \exp\left(-5 \cdot \frac{t}{T}\right) \quad (11)$$

where $t$ is the current iteration number and $T$ is the maximum number of iterations. $\exp(\cdot)$ represents the natural exponential function, that is, the exponential function with base $e$. The term $t/T$ represents the relative progress of the current iteration. The factor 5 controls the rate of decay: $P(t)$ falls from 1 at $t = 0$ to $e^{-5} \approx 0.0067$ at $t = T$.

Each individual generates a random number $r \in [0, 1]$ during the iteration and selects its search strategy according to the following rule:

If $r < P(t)$, the PSO update strategy is selected;

Otherwise, the IVYA update strategy is chosen.

The function of this mechanism is as follows:

Early iterations ($t \ll T$): at this stage, $P(t) \approx 1$, indicating that individuals are more likely to adopt the PSO strategy, which enhances global search by exploring a wider solution space.

Later iterations ($t \approx T$): at this stage, $P(t) \approx e^{-5} \approx 0.0067$, so the algorithm shifts to the IVYA strategy, emphasizing local refinement and fine-tuning of solutions.

The core innovation of this mechanism is that it quantifies the trade-off between global and local search through a decaying probability function. Early in the optimization, a high $P(t)$ favors PSO, enabling the swarm to explore broadly and avoid premature convergence. As the search progresses, $P(t)$ decreases, making IVYA more likely to dominate, which improves fine-tuning and convergence precision.

This approach is grounded in adaptive optimization theory, where dynamically adjusting exploration and exploitation according to the convergence state is a widely used strategy for reducing the risk of becoming trapped in local optima in multimodal problems.
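The strategy-selection rule can be sketched in a few lines, assuming the decay form P(t) = exp(-5t/T) described for Equation (11) and one uniform draw r in [0, 1) per individual. The function names are hypothetical, and the two update strategies themselves are represented only by labels.

```python
import math
import random


def pso_probability(t, T, decay=5.0):
    """Eq. (11) as described: P(t) = exp(-5 t / T)."""
    return math.exp(-decay * t / T)


def choose_strategy(t, T, rng):
    # Each individual draws r ~ U[0, 1): PSO if r < P(t), otherwise IVYA
    return "PSO" if rng.random() < pso_probability(t, T) else "IVYA"


rng = random.Random(42)
T = 500
for t in (0, 100, 250, 500):
    picks = [choose_strategy(t, T, rng) for _ in range(10_000)]
    share = picks.count("PSO") / len(picks)
    print(f"t={t:3d}  P(t)={pso_probability(t, T):.4f}  PSO share={share:.3f}")
```

At t = 0 every draw selects PSO because P(0) = 1, whereas at t = T only about 0.7% of draws do, which reflects the gradual hand-over from exploration to exploitation described above.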

3.1.2. Global Search Strategy with PSO

When the condition $r < P(t)$ is met, individual $x_i$ adopts the standard PSO strategy to update its position and velocity, as specified in Equations (1) and (2). This strategy is guided by the individual’s best historical position and the global best information from the entire population, offering strong global search capability and parallel information sharing.

During the early iterations, when $P(t)$ is high, individuals are more likely to adopt the PSO strategy. This encourages the population to expand the search quickly, avoid local optima, and enhance both the global exploration ability and the search diversity of the algorithm. At this stage, the algorithm gathers richer search information on a global scale, which provides a solid foundation for the subsequent local search phase and allows solutions to be refined more effectively later.

3.1.3. Local or Global Search Strategy with IVYA

When $r \geq P(t)$, the individual adopts the IVYA strategy, with its search behavior guided by the “vine disturbance mechanism” to achieve either local exploitation or global exploration.

First, an adaptive disturbance threshold $\beta_1$ is generated as in Equation (12), where $\mathrm{rand}$ refers to a random number uniformly distributed in the interval [0, 1]. If the current individual’s fitness $f(x_i)$ is below the threshold determined by $\beta_1$, the individual is considered to be in a potentially optimal region, and a local search is performed as in Equation (13), where $x_j$ refers to another individual randomly selected from the population and $\mathcal{N}(0, 1)$ represents a standard normal random variable.

Otherwise, a global search is performed as in Equation (14).

This strategy effectively balances exploration and exploitation. Under the control of the adaptive probability mechanism, the algorithm adjusts the search behavior at different stages of the optimization process, using more local search behavior in promising areas and broader global search behavior when exploring new regions. This adaptive mechanism ensures that the algorithm can efficiently explore the solution space while avoiding local optima.

The IVYA’s role here is crucial: its vine disturbance mechanism simulates a localized perturbation process where solutions ‘grow’ along promising paths but with stochastic fine-scale adjustments. This is particularly important to compensate for PSO’s tendency to converge prematurely in high-dimensional, separable search spaces.

Moreover, the IVYA search contributes both local and global search modes. If the solution is near a potential optimum, the local mode is triggered to exploit it further. If the solution is suboptimal, global search provides a chance to escape poor regions. This behavior, governed by the disturbance threshold β1, ensures search robustness across various fitness landscapes.

3.1.4. GV Update and Greedy Selection Mechanism

The vine disturbance variable $GV_i$ controls the search disturbance amplitude in the IVYA strategy, and its update mechanism is given by Equation (15).

This update rule mimics the natural mutation and contraction behavior observed in vine growth, enabling the search process to be dynamically adjusted. By introducing controlled randomness, this mechanism enhances the algorithm’s capability to escape local optima. The update procedure involves checking and correcting the new position to ensure it stays within predefined boundaries, evaluating its fitness based on the objective function, and then applying a greedy selection mechanism. If the new position’s fitness is better than the current one, it replaces the old solution. Furthermore, if it outperforms the individual’s historical best or the global best solution, the respective records are updated accordingly. This approach guarantees effective evolution of the population each generation while maintaining diversity, which is essential for preventing premature convergence and improving overall search performance.
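The boundary check and greedy selection can be sketched as follows. The dictionary-based `state` layout and the function names are hypothetical, and the sketch assumes minimization with simple clamping as the boundary-handling rule; the exact boundary treatment used in this study may differ.

```python
def clamp(x, lb, ub):
    # Boundary check: project each coordinate back into [lb_d, ub_d]
    return [min(max(v, lo), hi) for v, lo, hi in zip(x, lb, ub)]


def greedy_update(state, i, candidate, f, lb, ub):
    """Greedy selection for individual i (minimisation).

    The candidate replaces the current position only if it is better; the
    personal-best and global-best records are then refreshed if improved.
    Returns True if the candidate was accepted.
    """
    cand = clamp(candidate, lb, ub)
    fc = f(cand)
    accepted = fc < state["fit"][i]
    if accepted:
        state["pos"][i], state["fit"][i] = cand, fc
        if fc < state["pbest_fit"][i]:
            state["pbest"][i], state["pbest_fit"][i] = cand[:], fc
        if fc < state["gbest_fit"]:
            state["gbest"], state["gbest_fit"] = cand[:], fc
    return accepted


def sphere(x):
    return sum(v * v for v in x)


state = {"pos": [[1.0, 1.0]], "fit": [2.0],
         "pbest": [[1.0, 1.0]], "pbest_fit": [2.0],
         "gbest": [1.0, 1.0], "gbest_fit": 2.0}
lb, ub = [-2.0, -2.0], [2.0, 2.0]
print(greedy_update(state, 0, [10.0, 0.0], sphere, lb, ub))  # False: clamped to [2, 0], cost 4
print(greedy_update(state, 0, [0.5, 0.0], sphere, lb, ub))   # True: cost 0.25
print(state["gbest"], state["gbest_fit"])                    # [0.5, 0.0] 0.25
```

Rejecting non-improving candidates keeps each individual’s fitness monotonically non-increasing, while diversity comes from the stochastic proposals rather than from accepting worse moves.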

3.1.5. Time Complexity Analysis

The overall time complexity of the proposed AP-IVYPSO algorithm can be estimated from its iterative structure. Let $N$ represent the population size, $D$ the problem dimension, and $T$ the maximum number of iterations. In each iteration, every individual performs either a PSO or an IVYA update, as determined by the adaptive probability strategy. Both update operations involve vector calculations of dimension $D$ and a single evaluation of the objective function.

The primary computational costs per generation include updating the position and velocity of each individual, perturbing the vine variable $GV_i$, and calculating the fitness of all individuals. Assuming that evaluating the objective function costs $O(C_f)$, the computational complexity of each iteration is $O(N \cdot (D + C_f))$. Therefore, the total time complexity of the entire algorithm is given by Equation (16):

$$O\left(T \cdot N \cdot (D + C_f)\right) \quad (16)$$

This complexity increases linearly with the number of iterations, the population size, and the problem dimension, which provides good scalability for practical engineering optimization problems. This paper balances algorithm performance with computational efficiency: in the experiments, the population size is consistently set to 30, which keeps the computational cost low while maintaining optimization capability.
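As a rough worked example, assume the settings reported in Section 3.2 (a population of 30 individuals and a maximum of 500 iterations) and count only the single objective evaluation that each individual performs per iteration, excluding the evaluation of the initial population:

$$N \cdot T = 30 \times 500 = 15{,}000 \ \text{objective function evaluations per run}$$

This equals the maximum of 15,000 evaluations on the x-axis of the convergence curves in Appendix A.1. Whenever one objective evaluation costs more than the $O(D)$ vector updates, these evaluations dominate the running time of a run.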

3.2. Performance Testing of AP-IVYPSO

To validate the performance and effectiveness of AP-IVYPSO, this study selected 26 widely used benchmark test functions [44,45,46,47], comprising 15 single-peak test functions (f1–f15) and 11 multi-peak test functions (f16–f26). Detailed information about the test functions is given in Table 1. Single-peak test functions have a single global optimum and relatively simple search spaces, so their optimization mainly serves to evaluate an algorithm’s convergence speed and accuracy. With no local optima to interfere with the search, single-peak functions are ideal for testing the algorithm’s local search capability and convergence stability.

In contrast, multi-peak test functions feature multiple local optima and one or more global optima, creating a more complex search space structure. These functions test the algorithm’s ability to escape from local optima and assess its global search performance, making them useful for evaluating the algorithm’s robustness and exploration capabilities in complex environments.

By testing both single-peak and multi-peak functions, we can comprehensively measure the algorithm’s performance across different problem types. Additionally, the results of 30 independent runs of AP-IVYPSO were compared with those of ten other widely recognized, high-performance optimization algorithms: PSO, IVYA, HFPSO [48], HJSPSO [49], BOA [50], WOA [51], GOOSE [52], PSOBOA [53], NSM-BO [54], and FDB-AGSK [55]. The parameter configurations for each algorithm are listed in Table 2. Three numerical evaluation metrics were used: the best fitness value, the average value, and the standard deviation, as defined in Equations (17)–(19), where $R$ represents the number of runs, set to 30 in this case. The maximum number of iterations for all algorithms is set to 500.
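The three statistics can be computed from the final fitness values of the R runs as in the sketch below. It assumes the sample standard deviation (denominator R − 1), computed with Python’s `statistics.stdev`; if Equation (19) uses the population form with denominator R, `statistics.pstdev` should be used instead. The function name and the example values are hypothetical.

```python
import statistics


def run_summary(final_fitness):
    """Best, mean and standard deviation of the final fitness over R runs (minimisation)."""
    return {
        "best": min(final_fitness),              # best fitness value over the R runs
        "mean": statistics.mean(final_fitness),  # average fitness value
        "std": statistics.stdev(final_fitness),  # sample std, denominator R - 1 (assumed)
    }


# Hypothetical final fitness values from R = 4 runs
print(run_summary([1.0, 2.0, 3.0, 4.0]))
```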

All experiments were performed on a Windows 10 operating system with 32 GB of RAM and an Intel(R) Core(TM) i9-14900KF processor (3.10 GHz), using MATLAB R2023a.

3.2.1. Numerical Results Analysis

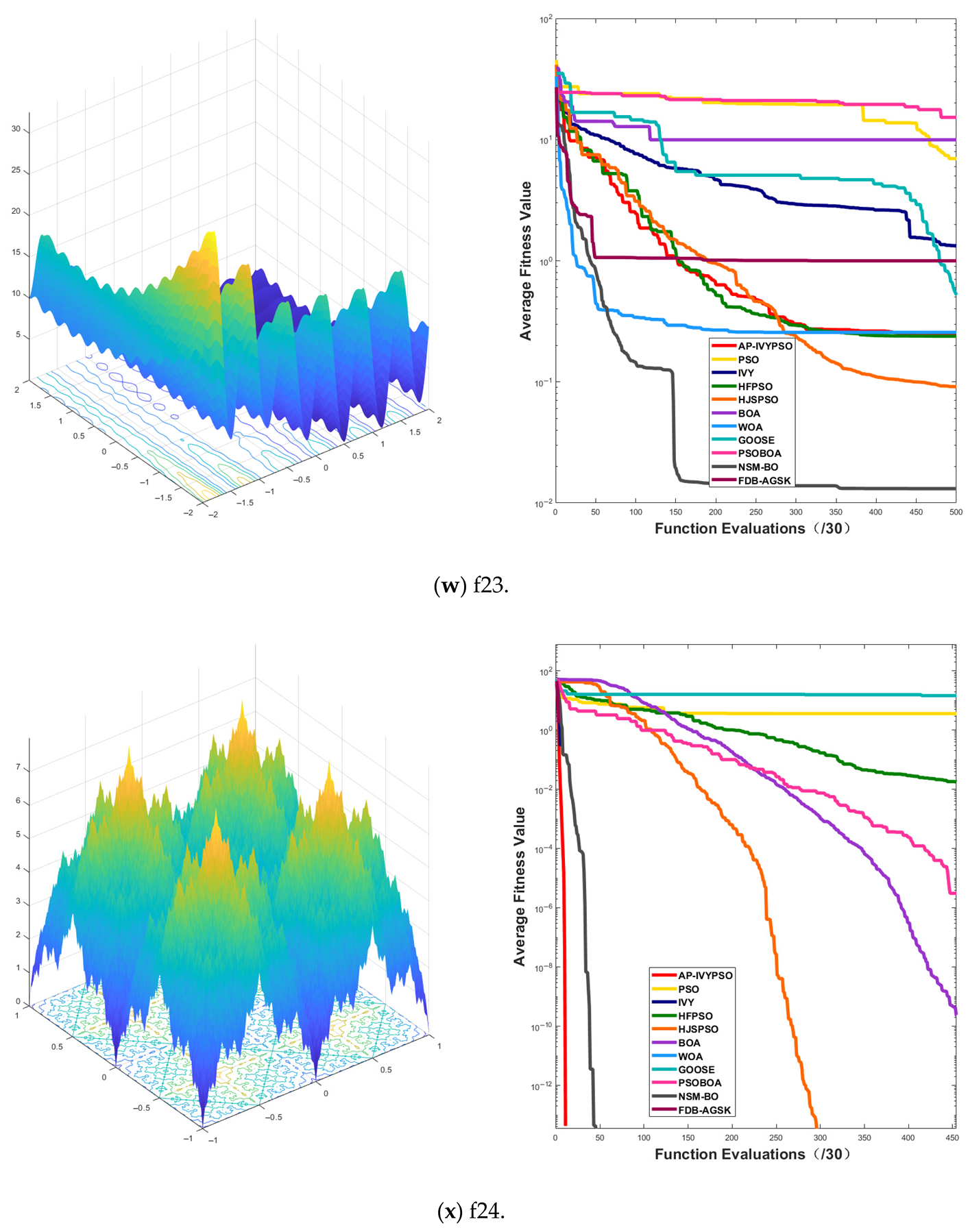

Table 3 presents the best fitness values and rankings achieved by AP-IVYPSO and the other ten algorithms across the 26 test functions. AP-IVYPSO achieved the best fitness value on 21 test functions (f1–f4, f6, f8–f20, and f24–f26), demonstrating its accuracy and local search capability. However, on functions f22 and f23, it ranked 10th and 4th, respectively, indicating weaker performance on these functions.

Table 4 shows the average fitness values, standard deviations, and rankings of AP-IVYPSO and the other ten algorithms across the 26 test functions. AP-IVYPSO achieved the best average fitness value on 23 test functions (f1–f6, f8–f21, and f24–f26), highlighting its global search capability and stability. On f7, f22, and f23, however, it ranked 8th, 8th, and 6th, respectively, reflecting weaker performance than the best-performing algorithms on these functions.

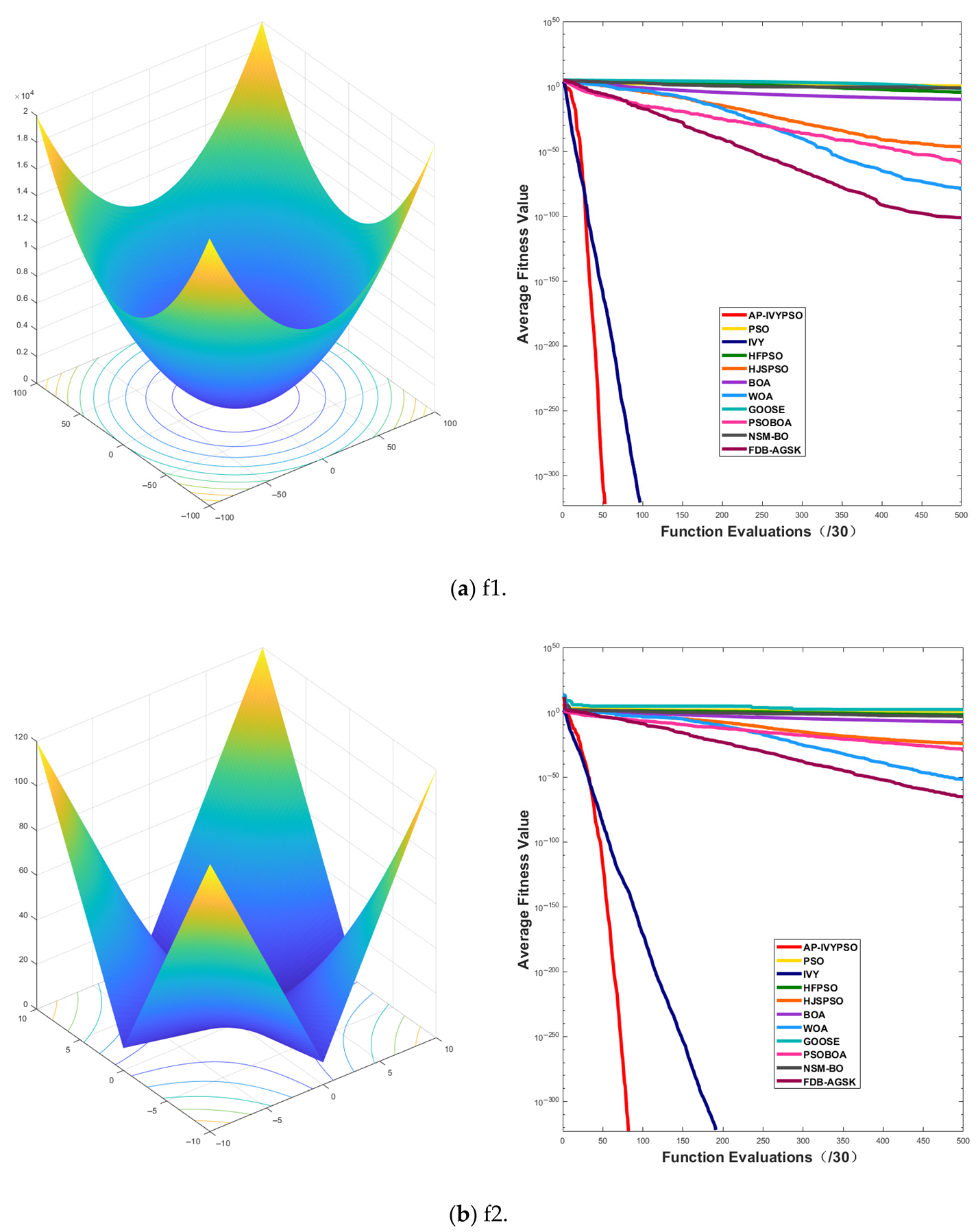

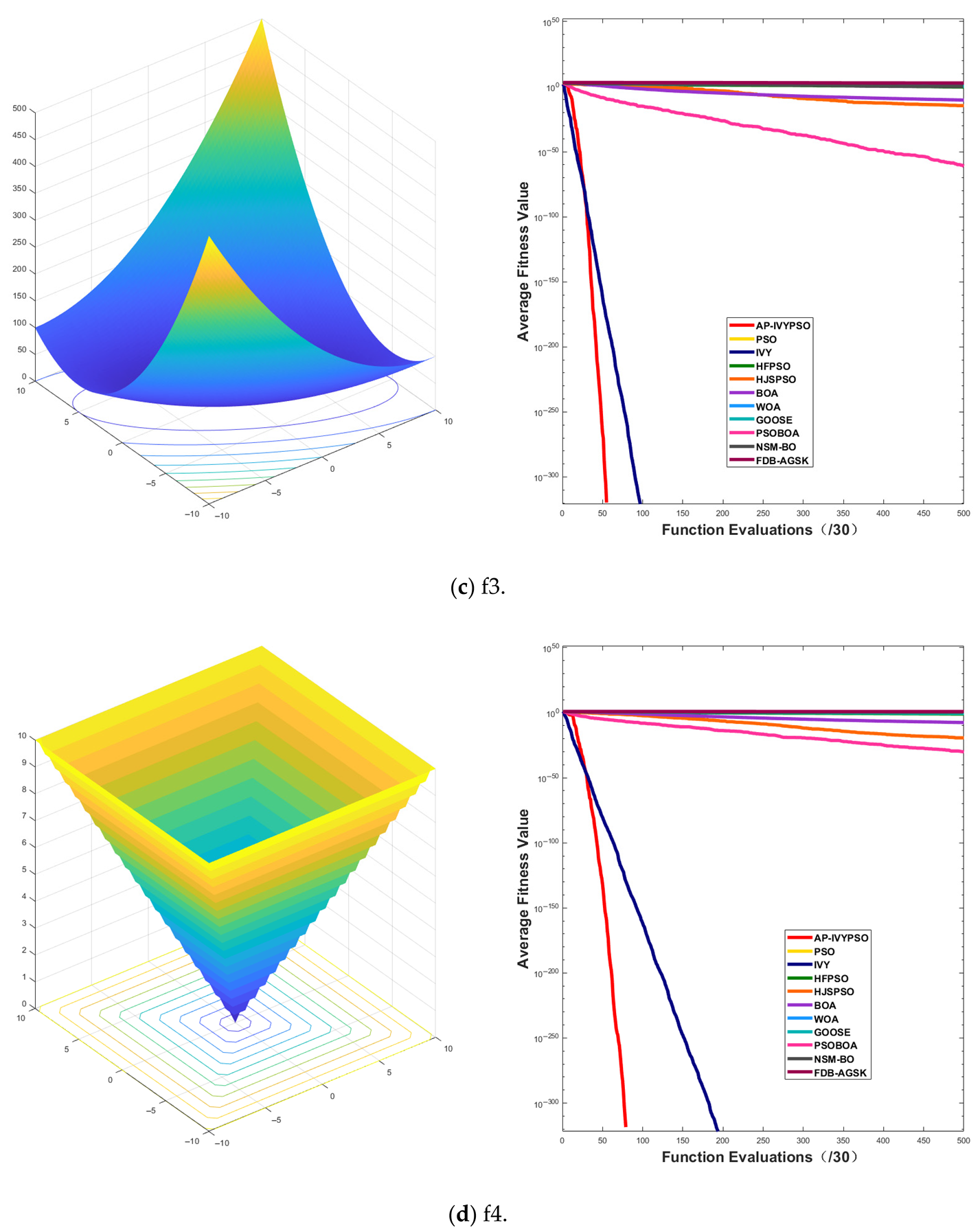

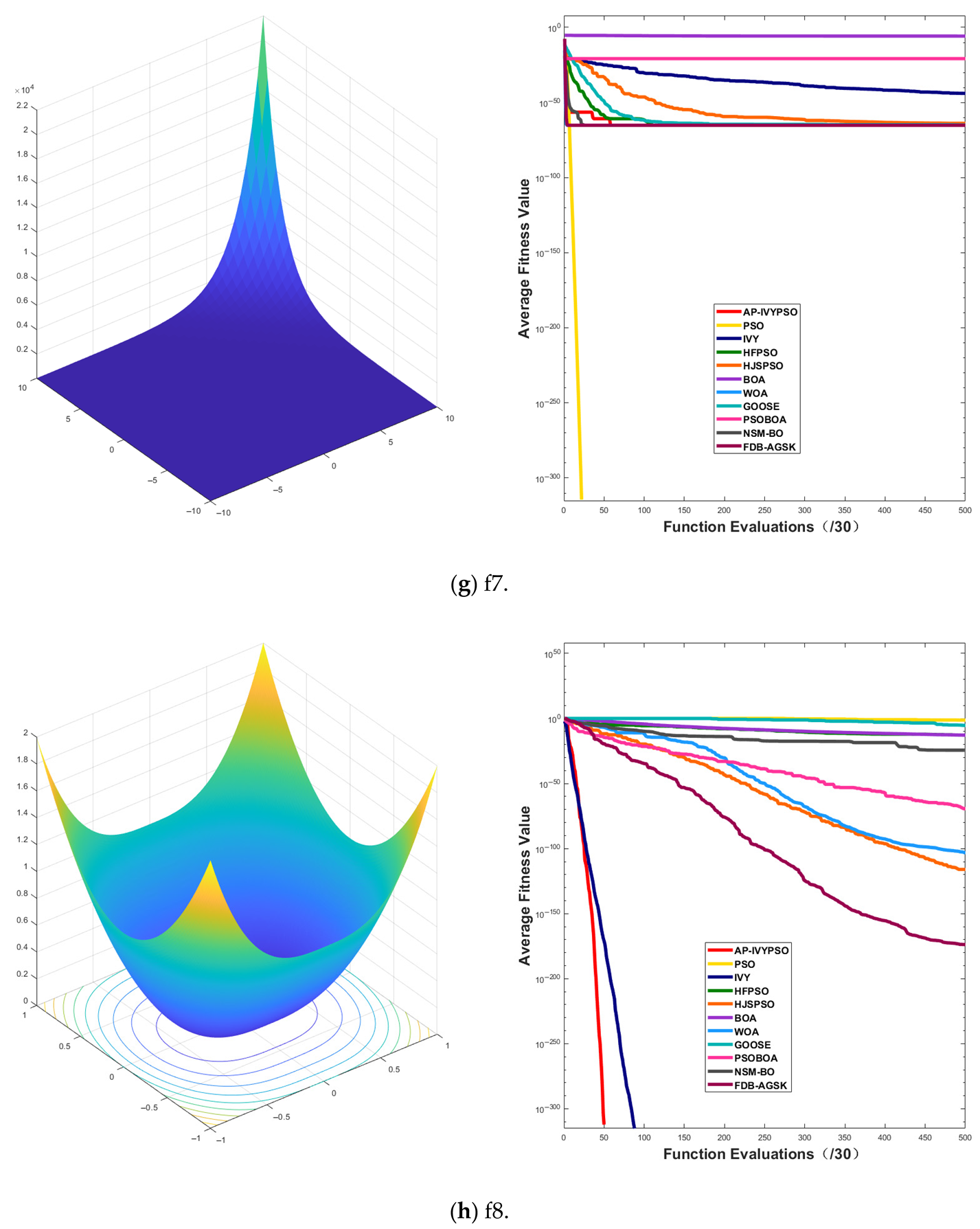

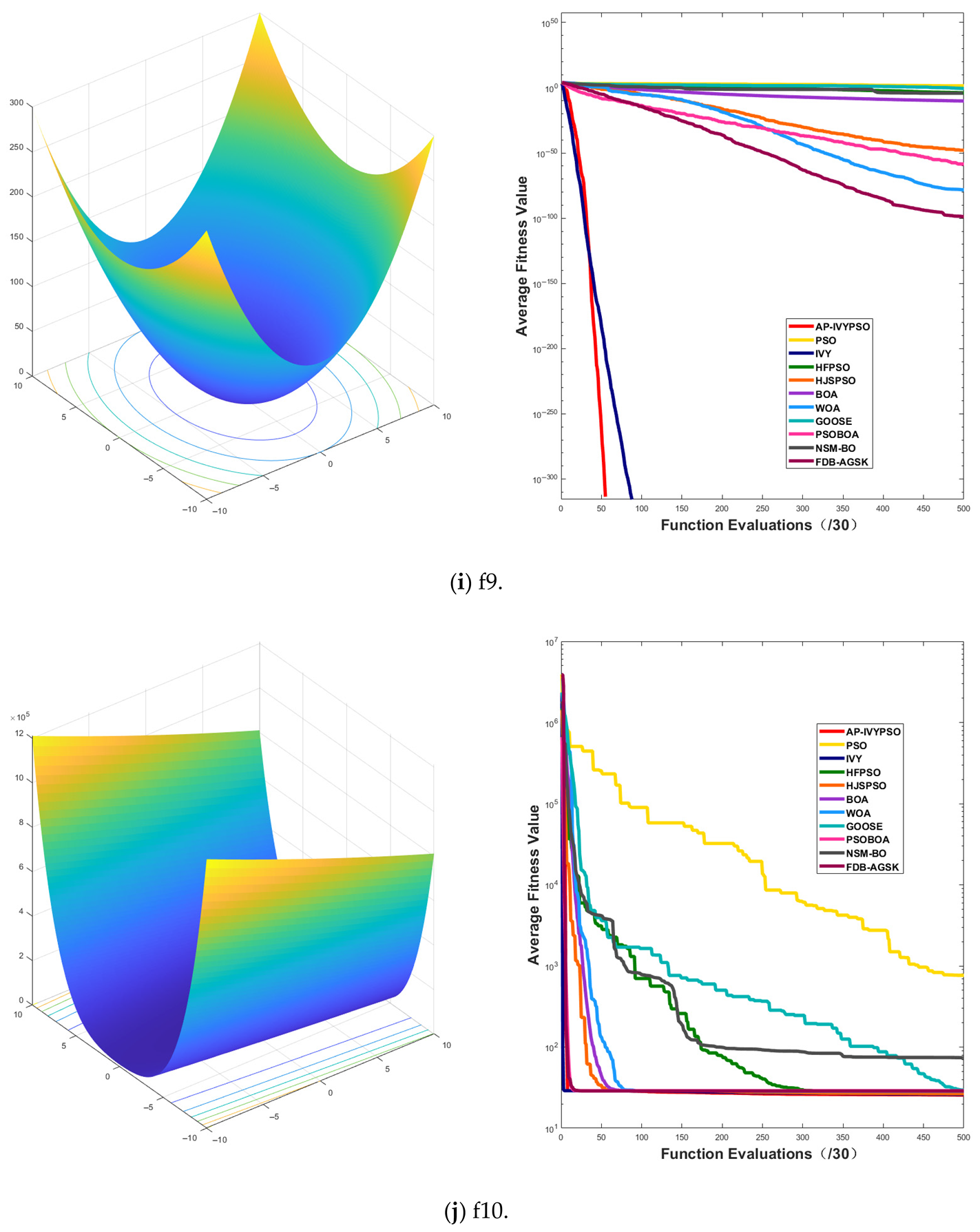

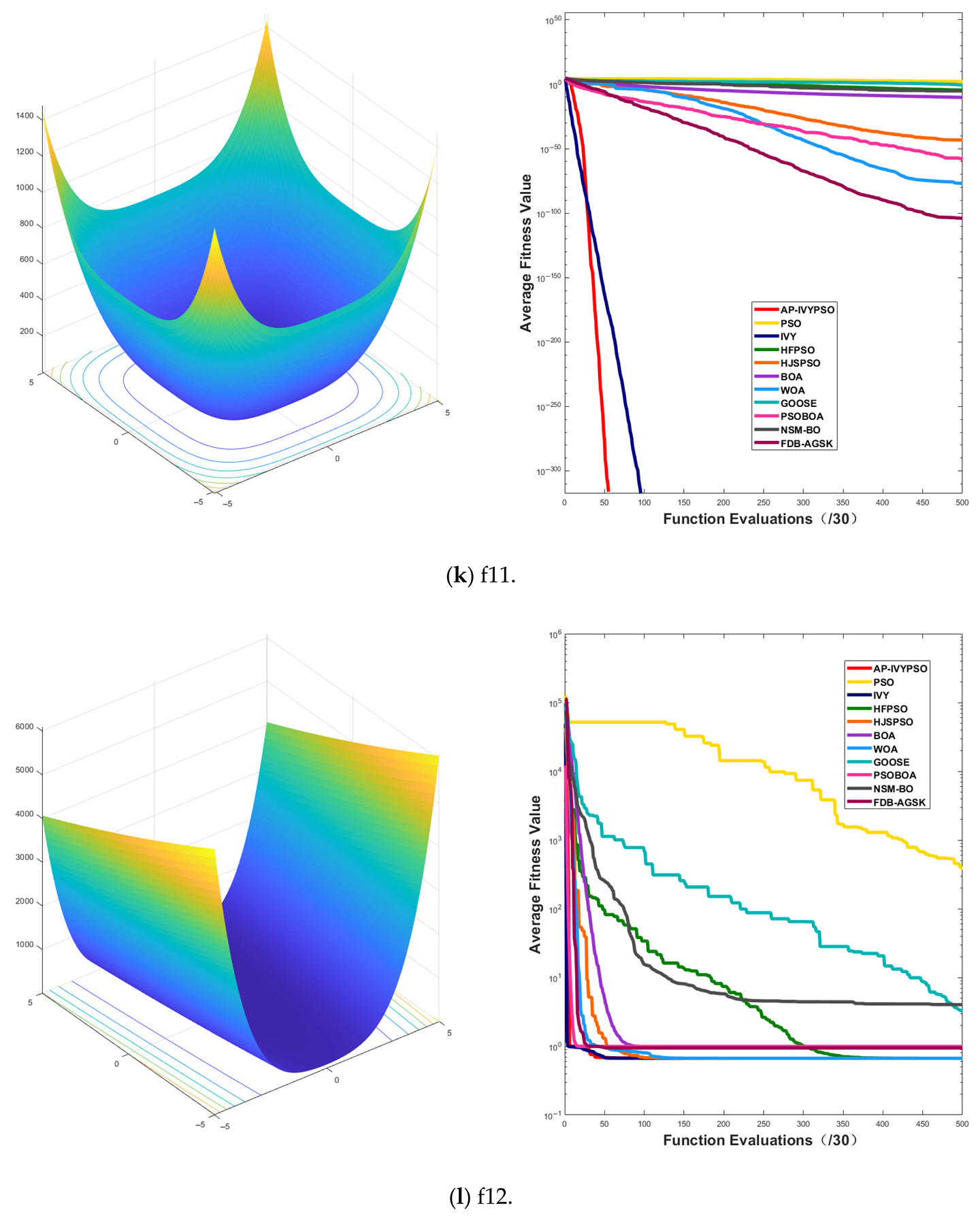

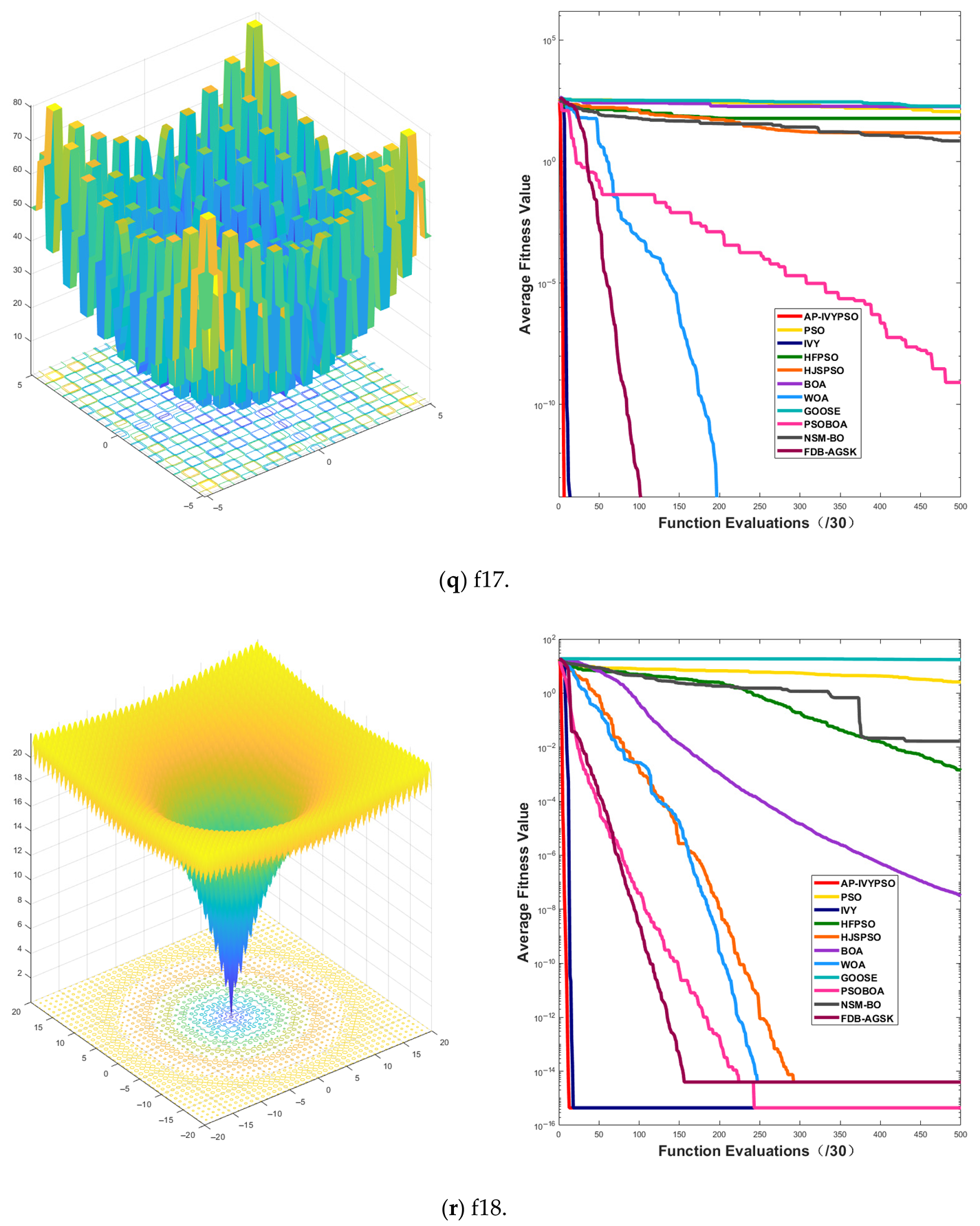

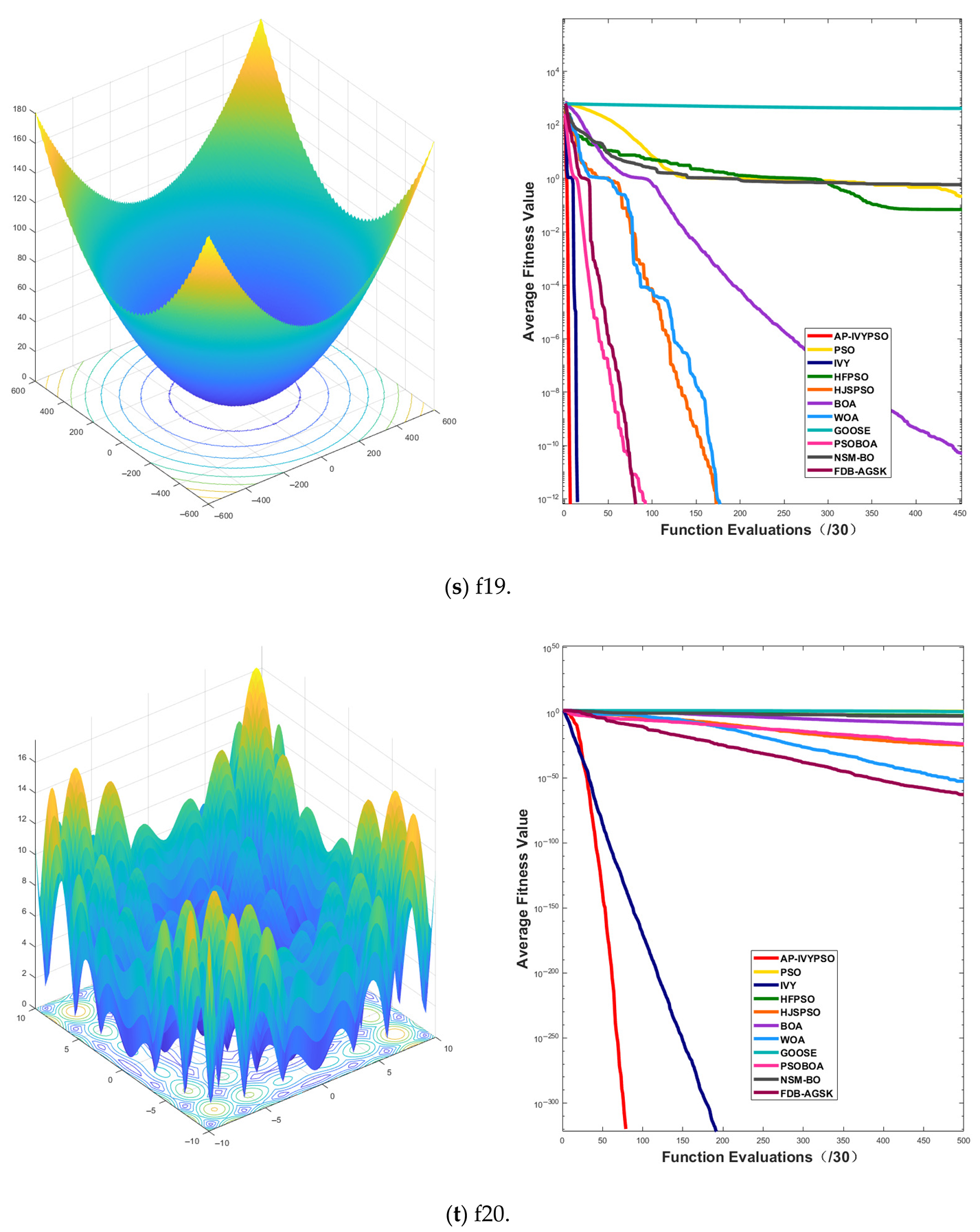

3.2.2. Convergence Curve Analysis

Appendix A.1 presents the convergence curves of the proposed AP-IVYPSO algorithm and the comparison algorithms, showing how the average fitness values on the benchmark test functions change with the number of objective function evaluations. This provides a more complete picture of the optimization process and overall performance of each algorithm. The x-axis indicates the number of objective function evaluations (up to 15,000), while the y-axis displays the average fitness values obtained from 30 independent runs, which reduces the random fluctuations of any single trial.

The figure reveals that the AP-IVYPSO algorithm exhibits faster convergence and superior average performance across 18 test functions (f1–f4, f8–f9, f11, f13–f20, and f24–f26), highlighting its robust global optimization capability. However, on functions f5, f7, f22, and f23, certain comparison algorithms achieved better optimization results, suggesting that these algorithms also demonstrate strong adaptability to specific problem instances.

3.2.3. Friedman Ranking and Wilcoxon Signed-Rank Test

To comprehensively evaluate the performance of the proposed AP-IVYPSO algorithm on the 26 test functions, the Friedman test was used to rank the nine algorithms. AP-IVYPSO achieved the lowest average rank (1.8587), placing first and demonstrating the best overall performance. IVY and FDB-AGSK followed in second and third place, respectively. The traditional PSO ranked 10th, while GOOSE ranked last, in 11th place. These results further confirm the advantage of AP-IVYPSO in optimization quality and stability. The Friedman ranking of each algorithm is shown in Table 5.
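The Friedman procedure behind such average ranks can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes a matrix of mean fitness values with one row per test function and one column per algorithm, where lower fitness is better. Tied values share the mean of the ranks they span, and the statistic uses the standard Friedman formula χ²_F = 12N/(k(k+1))·(Σ_j R_j² − k(k+1)²/4) for N functions and k algorithms. The function names `average_ranks` and `friedman_statistic` are hypothetical.

```python
import numpy as np


def average_ranks(scores):
    """Average Friedman rank of each algorithm (column) over all functions (rows).

    Lower fitness is assumed better, so rank 1 goes to the smallest value;
    tied values share the mean of the ranks they occupy.
    """
    scores = np.asarray(scores, dtype=float)
    n_funcs, k = scores.shape
    ranks = np.empty_like(scores)
    for i in range(n_funcs):
        order = np.argsort(scores[i], kind="mergesort")
        j = 0
        while j < k:
            t = j
            # Extend the run of tied values that starts at sorted position j.
            while t + 1 < k and scores[i, order[t + 1]] == scores[i, order[j]]:
                t += 1
            # Sorted positions j..t (0-based) hold ranks j+1..t+1; assign their mean.
            ranks[i, order[j:t + 1]] = (j + t) / 2 + 1
            j = t + 1
    return ranks.mean(axis=0)


def friedman_statistic(avg_ranks, n_funcs):
    """Friedman chi-square statistic computed from average ranks (no tie correction)."""
    r = np.asarray(avg_ranks, dtype=float)
    k = r.size
    return 12 * n_funcs / (k * (k + 1)) * (np.sum(r ** 2) - k * (k + 1) ** 2 / 4)
```

For example, with three functions and three algorithms, `average_ranks([[1, 2, 3], [1, 3, 2], [2, 2, 1]])` gives `[1.5, 2.5, 2.0]`. If SciPy is available, `scipy.stats.friedmanchisquare` can supply the corresponding p-value; it takes one sample per algorithm (a column of the matrix) as separate arguments.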

To further validate the advantage of the proposed AP-IVYPSO algorithm on the benchmark test functions, the Wilcoxon signed-rank test was performed for paired comparisons between AP-IVYPSO and each of the other eight algorithms, with the significance level set to α = 0.05. All p-values between AP-IVYPSO and the other algorithms were smaller than 0.05. This indicates statistically significant differences between AP-IVYPSO and every comparison algorithm and, together with the rankings above, confirms the superior optimization performance of the proposed algorithm. The Wilcoxon signed-rank test results for AP-IVYPSO and the other eight algorithms are displayed in Table 6.
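One such paired comparison can be sketched as below. The sketch assumes the two inputs are per-function results (for example, the mean fitness on each of the 26 functions) of AP-IVYPSO and one competitor. It uses the large-sample normal approximation without a tie correction in the variance, so its p-values are approximate; `scipy.stats.wilcoxon` offers exact and corrected variants. The function name `wilcoxon_signed_rank` is hypothetical.

```python
import math

import numpy as np


def wilcoxon_signed_rank(a, b):
    """Two-sided Wilcoxon signed-rank test using the normal approximation.

    Returns (W, p), where W is the smaller of the positive and negative rank sums.
    Zero differences are discarded; tied absolute differences get average ranks.
    """
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    d = d[d != 0]
    n = d.size
    if n == 0:
        return 0.0, 1.0  # identical samples: no evidence of a difference
    abs_d = np.abs(d)
    order = np.argsort(abs_d, kind="mergesort")
    ranks = np.empty(n)
    j = 0
    while j < n:
        t = j
        while t + 1 < n and abs_d[order[t + 1]] == abs_d[order[j]]:
            t += 1
        ranks[order[j:t + 1]] = (j + t) / 2 + 1  # mean of ranks j+1..t+1
        j = t + 1
    w_plus = ranks[d > 0].sum()
    w_minus = ranks[d < 0].sum()
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p = 2 * (1 - Phi(|z|))
    return float(w), p
```

A p-value below α = 0.05 rejects the hypothesis that the paired differences are symmetric about zero; which algorithm is better is then read from the sign of the dominant rank sum.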

In summary, AP-IVYPSO delivered the best overall performance across the benchmark tests, although individual comparison algorithms outperformed it on a few functions, such as f7, f22, and f23.

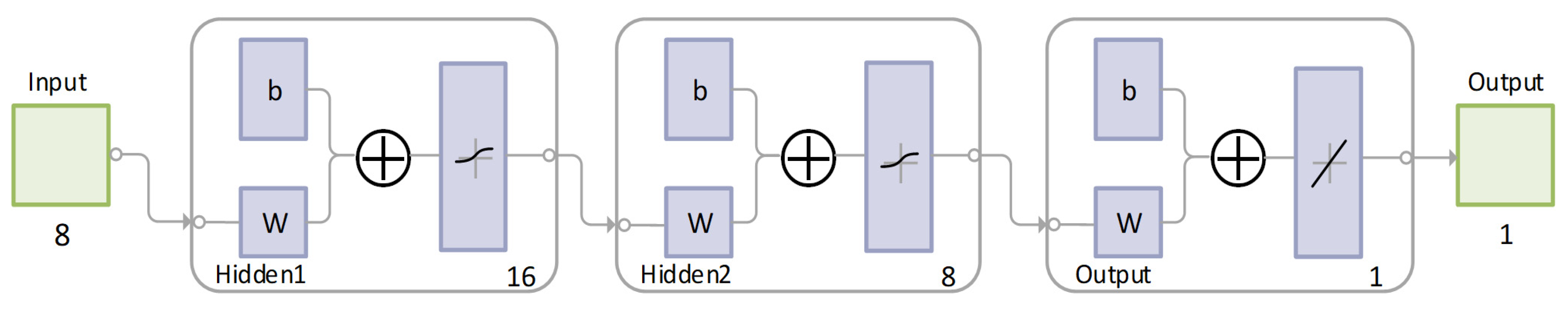

3.3. BPNN Model Parameter Optimization

The AP-IVYPSO-BP model uses a feedforward neural network to predict concrete compressive strength. The network consists of an input layer, two hidden layers, and an output layer; its basic structure is shown in Figure 4. The input layer receives features describing the concrete mix, and the output layer predicts the compressive strength of the concrete. The network’s weights and biases are optimized with the AP-IVYPSO algorithm to improve prediction accuracy.

The network is trained by minimizing the mean squared error (MSE) between the predicted values and the actual values. The AP-IVYPSO algorithm is used iteratively to optimize the weights and biases of the network, with each particle’s position representing a specific set of neural network parameters.
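How a particle position can stand for a full set of network parameters is sketched below. The sketch assumes the 8-16-8-1 layer sizes given in step (2) of Section 4.3, a layout in which each weight matrix (row-major) is followed by its bias vector, tanh hidden activations, and a linear output. The paper does not specify the layout or the activations, so these are assumptions, and all names are hypothetical.

```python
import numpy as np

# Layer sizes from step (2) of Section 4.3: 8 inputs, hidden layers of 16 and 8, 1 output.
LAYERS = (8, 16, 8, 1)


def n_params(layers=LAYERS):
    """Length of the flat vector that encodes all weights and biases."""
    return sum(a * b + b for a, b in zip(layers[:-1], layers[1:]))


def decode(position, layers=LAYERS):
    """Split a flat particle position into (W, b) pairs, layer by layer.

    Assumed layout: each weight matrix (shape fan_in x fan_out, row-major)
    followed by that layer's bias vector.
    """
    position = np.asarray(position, dtype=float)
    params, i = [], 0
    for fan_in, fan_out in zip(layers[:-1], layers[1:]):
        W = position[i:i + fan_in * fan_out].reshape(fan_in, fan_out)
        i += fan_in * fan_out
        b = position[i:i + fan_out]
        i += fan_out
        params.append((W, b))
    return params


def predict(position, X, layers=LAYERS):
    """Forward pass: tanh hidden layers (assumed) and a linear output layer."""
    h = np.asarray(X, dtype=float)
    params = decode(position, layers)
    for k, (W, b) in enumerate(params):
        h = h @ W + b
        if k < len(params) - 1:
            h = np.tanh(h)
    return h.ravel()


def mse_fitness(position, X, y, layers=LAYERS):
    """Mean squared error of the decoded network on (X, y); lower is fitter."""
    err = predict(position, X, layers) - np.asarray(y, dtype=float)
    return float(np.mean(err ** 2))
```

With these layer sizes, `n_params()` returns 289, which is the dimension of each particle's position vector under this encoding.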

The algorithm begins by initializing the positions and velocities of the particle swarm and evaluating each particle’s fitness based on the performance of the neural network on the training set. The AP-IVYPSO algorithm then optimizes the model through an adaptive probability mechanism based on fitness improvement. In each optimization iteration, the particle positions are updated according to the probabilities of the PSO or IVYA strategies. The optimization process continues until the maximum number of iterations is reached or a convergence criterion is satisfied. Once the optimization is complete, the optimal parameters identified are used to initialize the BPNN. The network is then trained on the training set using the backpropagation algorithm, which aims to minimize the prediction error.
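The loop just described can be illustrated with the simplified sketch below. It is not the published AP-IVYPSO. The adaptive probability rule (an exponentially smoothed credit of the fitness improvement each strategy produced, with the resulting probability clipped to [0.1, 0.9]) and the IVYA-style step (a random move toward the global best plus a small Gaussian perturbation) are hypothetical stand-ins for the paper's own update rules. The PSO coefficients are typical values chosen for illustration rather than those in Table 8, and the function name is hypothetical.

```python
import numpy as np


def ap_hybrid_minimize(f, dim, n_particles=30, iters=100, bounds=(-1.0, 1.0),
                       w=0.7, c1=1.5, c2=1.5, seed=0):
    """Illustrative PSO/IVYA-style hybrid with an adaptive strategy probability.

    The credit rule and the 'ivy' step are hypothetical stand-ins, not the
    published AP-IVYPSO update equations.
    """
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    x = rng.uniform(lo, hi, size=(n_particles, dim))
    v = np.zeros_like(x)
    fit = np.array([f(p) for p in x])
    pbest, pbest_fit = x.copy(), fit.copy()
    g = int(np.argmin(fit))
    gbest, gbest_fit = x[g].copy(), fit[g]
    credit = {"pso": 1.0, "ivy": 1.0}  # smoothed fitness improvement per strategy

    for _ in range(iters):
        # The probability of choosing PSO follows its recent share of improvement.
        p_pso = float(np.clip(credit["pso"] / (credit["pso"] + credit["ivy"]), 0.1, 0.9))
        gain = {"pso": 0.0, "ivy": 0.0}
        for i in range(n_particles):
            if rng.random() < p_pso:
                s = "pso"
                r1, r2 = rng.random(dim), rng.random(dim)
                v[i] = w * v[i] + c1 * r1 * (pbest[i] - x[i]) + c2 * r2 * (gbest - x[i])
                x[i] = x[i] + v[i]
            else:
                s = "ivy"
                # Stand-in local step: random pull toward the best plus small noise.
                x[i] = (x[i] + rng.random(dim) * (gbest - x[i])
                        + 0.01 * (hi - lo) * rng.standard_normal(dim))
            x[i] = np.clip(x[i], lo, hi)
            new_fit = f(x[i])
            gain[s] += max(0.0, fit[i] - new_fit)
            fit[i] = new_fit
            if new_fit < pbest_fit[i]:
                pbest[i], pbest_fit[i] = x[i].copy(), new_fit
                if new_fit < gbest_fit:
                    gbest, gbest_fit = x[i].copy(), new_fit
        for s in credit:
            credit[s] = 0.8 * credit[s] + 0.2 * gain[s] + 1e-12
    return gbest, float(gbest_fit)
```

In the AP-IVYPSO-BP setting, `f` would be the network error for a decoded parameter vector, and the returned best position would then initialize the BPNN before backpropagation training. Clipping the probability keeps both strategies in play, so neither can be starved by an early streak of improvements from the other.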

The reason for choosing AP-IVYPSO to optimize the BPNN lies in the complementary nature of the two algorithms: PSO enables efficient global exploration of the neural network’s high-dimensional weight space, while IVYA introduces precise local search adjustments through its disturbance mechanism. This helps fine-tune the network parameters for better predictive accuracy.

Moreover, the adaptive switching strategy of AP-IVYPSO is designed so that early optimization focuses on avoiding poor local minima, while later optimization emphasizes convergence around strong solutions. This makes it well suited to training BPNNs, which are known to suffer from poor initialization and from the convergence problems of gradient-based training.

3.4. Summary

The AP-IVYPSO-BP model integrates the global search ability of PSO and the local optimization capabilities of IVYA. By utilizing the adaptive probability mechanism, the model dynamically selects the most suitable optimization strategy, effectively balancing global and local search efforts. This balance significantly enhances the accuracy and stability of concrete compressive strength predictions. The next section will discuss the experimental setup, performance evaluation, and comparisons with benchmark models.

4. Experimental Evaluation and Result Interpretation

4.1. Dataset Overview for Experimentation

This study uses the high-performance concrete compressive strength dataset from the UCI Machine Learning Repository [56]. This dataset is widely used in research on predicting HPC properties and is regarded as representative and of practical value [57]. It comprises 1030 samples, each with eight input variables and one output variable. The input variables are cement, fly ash, blast furnace slag, water, superplasticizer, age, fine aggregate, and coarse aggregate, and the output variable is concrete compressive strength. All input variables except age are quantified in kilograms per cubic meter (kg/m³); age is measured in days, and compressive strength is expressed in megapascals (MPa).

The data were collected from controlled laboratory experiments simulating realistic HPC mix designs, ensuring high data reliability and consistency. The samples cover a broad range of mixture proportions and curing ages, which effectively represent the variability encountered in practical engineering scenarios. This diversity in the dataset allows for robust modeling of the nonlinear and complex relationships between mixture components and compressive strength.

In this study, each sample represents a unique high-performance concrete mix design. Learning the nonlinear mapping from the eight input variables to the compressive strength output requires searching a high-dimensional, non-convex space of network weights and biases. The swarm-based algorithms explore this space without using gradient information, and the space contains numerous local optima, which is why it is metaphorically described as an “inhospitable environment.” Both IVYA and PSO search this space for the optimal parameters of the neural network.

Specifically, each individual in the population represents a set of initial weights and biases for the BP neural network, encoded as a real-valued vector. The goal of the optimization algorithm is to minimize the model’s prediction error on the training or validation set. The BP neural network is initialized with the weights encoded by each individual and trained on the normalized high-performance concrete dataset, and its performance on the test set is evaluated to assess the fitness of that individual. In this way, the dynamic search process of swarm intelligence is applied directly to optimizing the parameters of the concrete strength prediction model.

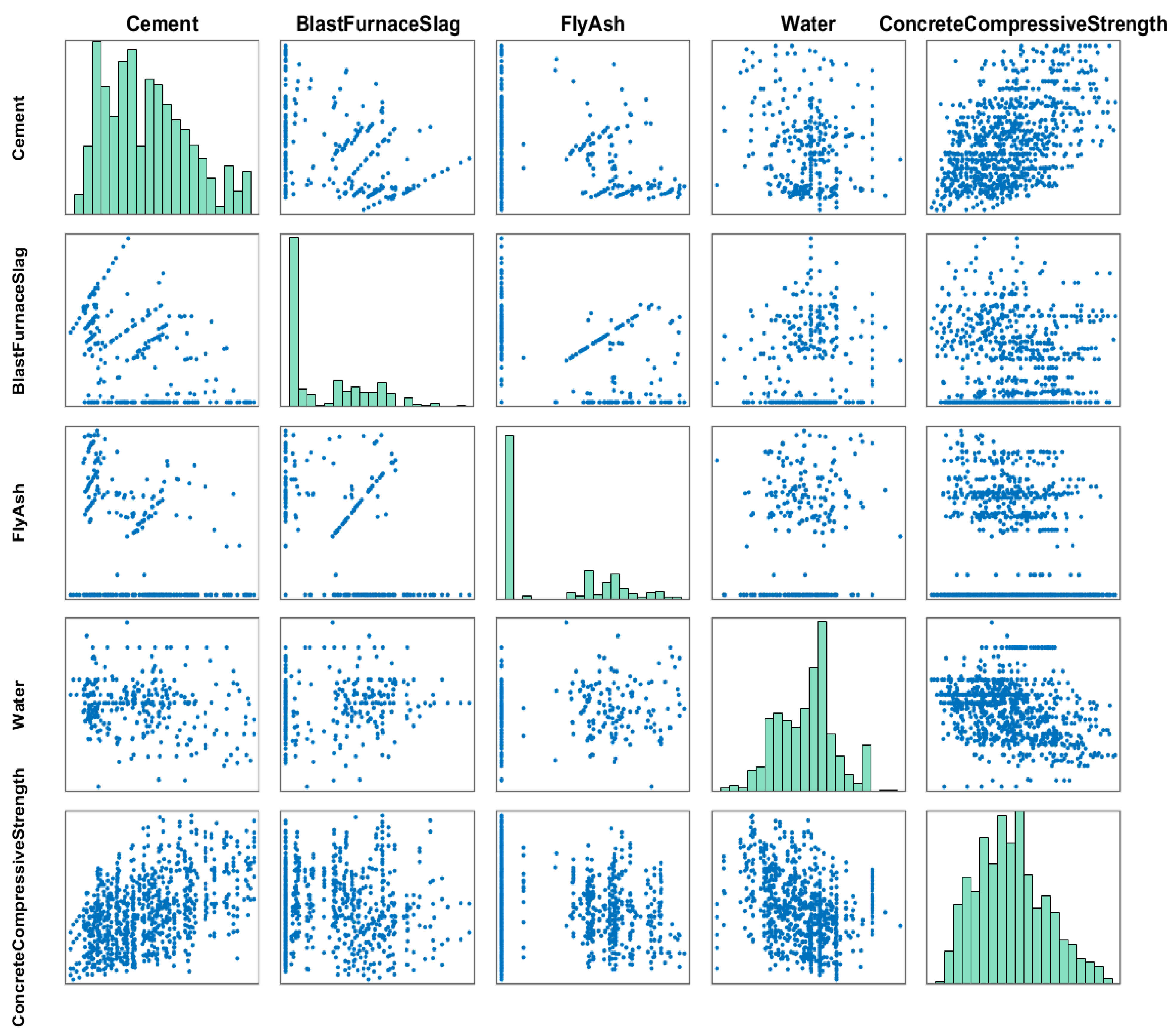

The compressive strength of HPC exhibits a distinctly nonlinear relationship with the mixture composition, as illustrated in Figure 5 and Figure 6. Detailed information about the input features is presented in Table 7. Because these data drive both the neural network training and the swarm-intelligence-based optimization, the algorithmic strategy is tailored to modeling the highly nonlinear strength behavior of concrete rather than being generic.

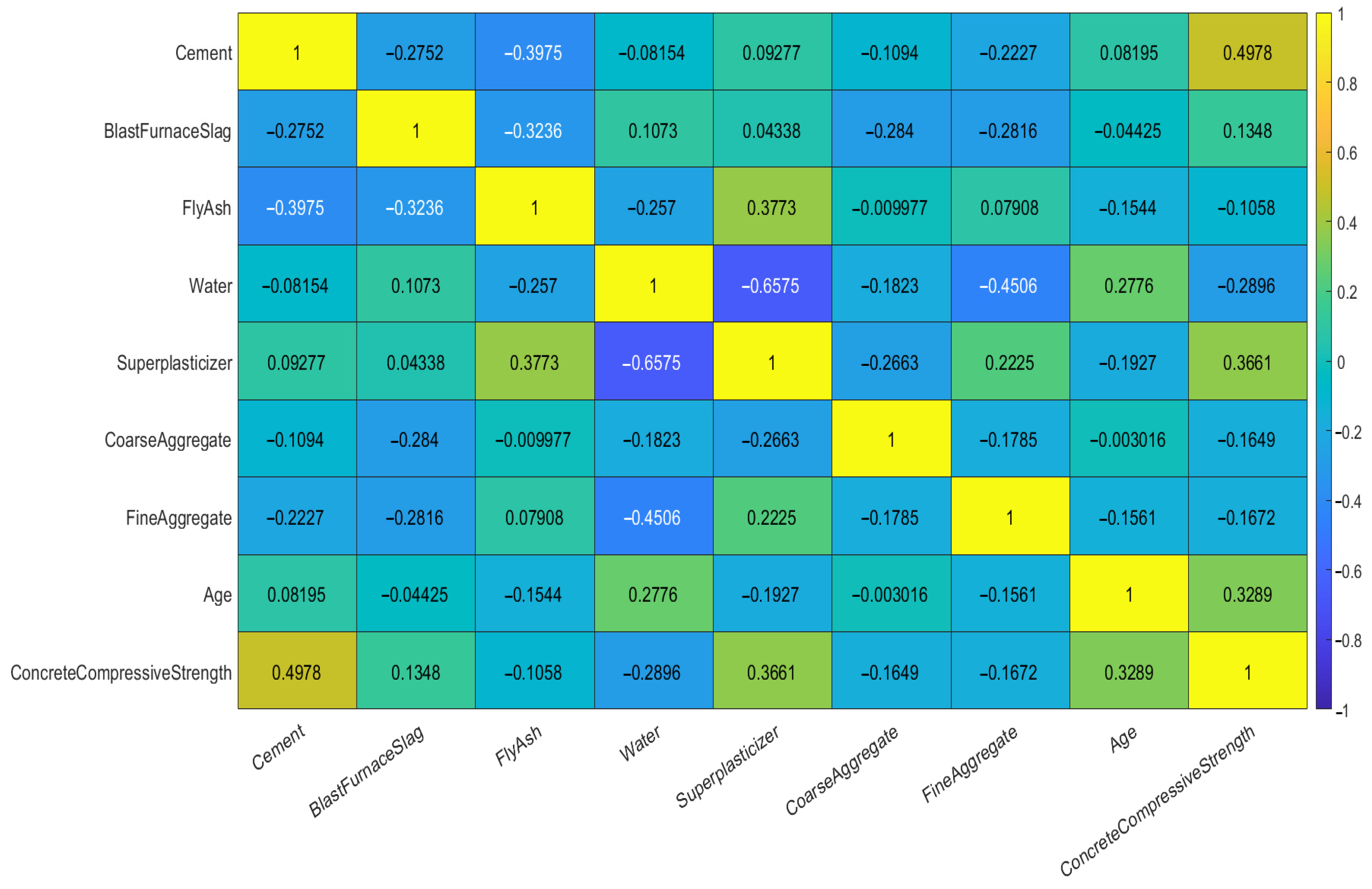

To better reveal the interactions between variables, this paper uses the Pearson correlation coefficient to analyze the correlations among the input variables and between each input variable and the output variable. The coefficient ranges from −1 to 1: values near 0 suggest a weak linear correlation, values approaching 1 indicate a strong positive correlation, and values close to −1 indicate a strong negative correlation. The resulting Pearson correlation matrix is displayed in Figure 7, from which the correlation patterns between variables can be discerned. The results show that the individual factors differ clearly in the direction and strength of their correlation with compressive strength.
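The coefficient can be computed as sketched below, assuming the data are arranged with one row per mix and one column per variable (the eight inputs plus strength). The helper names are hypothetical; `numpy.corrcoef` with `rowvar=False` produces the full pairwise matrix of the kind plotted as a heat map.

```python
import numpy as np


def pearson(x, y):
    """Pearson r: covariance of x and y divided by the product of their spreads."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.sum(xc * yc) / np.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2)))


def correlation_matrix(data):
    """Pairwise Pearson matrix for a (samples x variables) array."""
    return np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
```

Because Pearson's r only measures linear association, a near-zero value does not rule out a strong nonlinear effect, which is one reason the feature-importance analysis below complements it.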

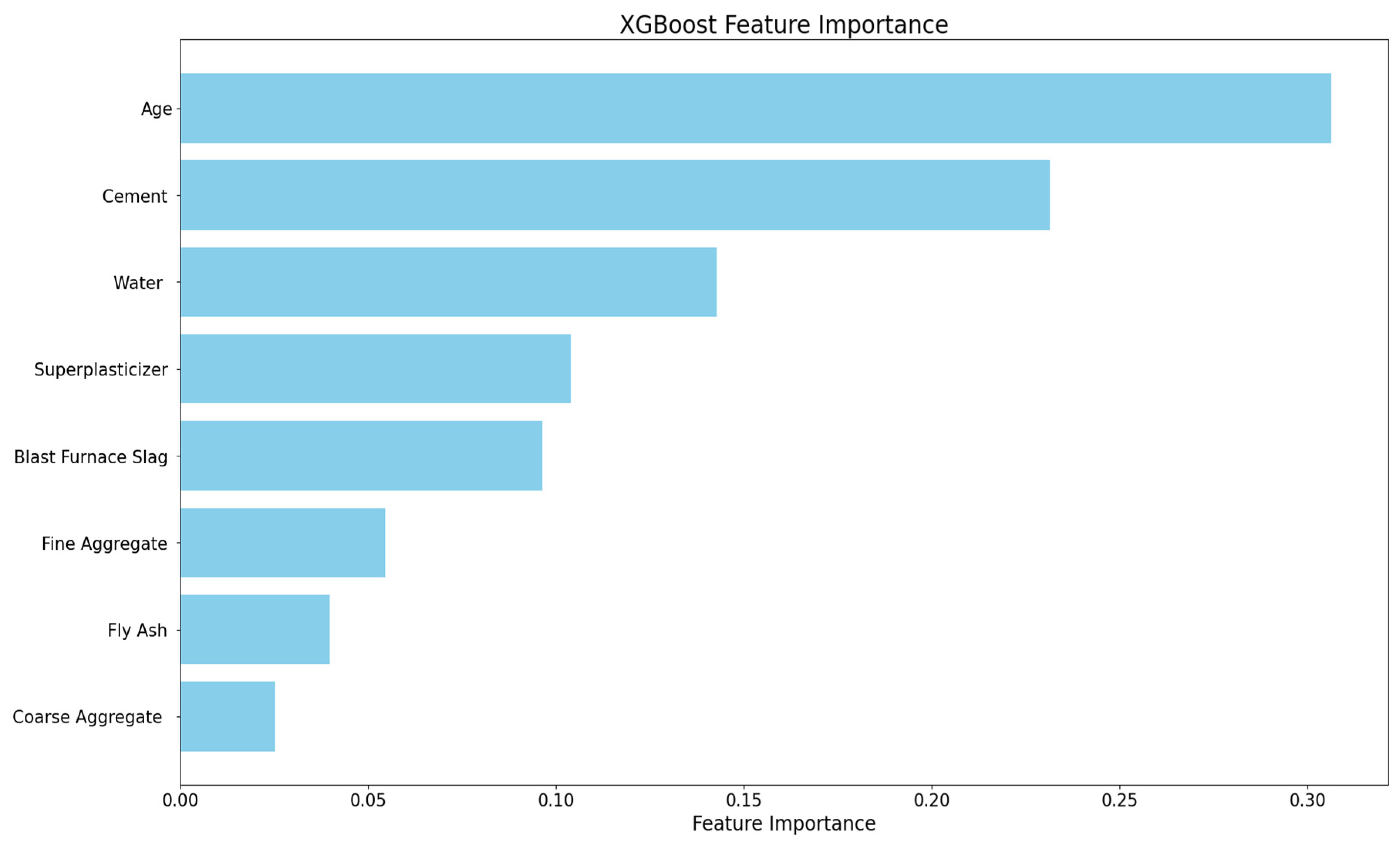

Furthermore, to quantify the influence of each input variable on the compressive strength of HPC, the XGBoost algorithm was applied in a Python 3.8 environment. The resulting feature importance rankings, illustrated in Figure 8, show that curing age is the most important predictor, followed closely by cement and water content. In contrast, fly ash and coarse aggregate have relatively small impacts.

To ensure stable convergence during the model training process and eliminate disparities in the magnitudes of different feature variables, the input features were normalized to the [0, 1] range using min-max normalization, while the output targets were standardized using the Z-score method. This preprocessing step enhances the training efficiency and prediction accuracy of the neural network, while mitigating potential convergence issues or instability arising from significant differences in data scales.
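Both transformations are sketched below. The paper does not say which samples the scaling statistics are computed from, so fitting them on the training set only and reusing them for the test set is an assumption here. Under this scheme, predictions would need to be mapped back with the inverse Z-score before errors are reported in MPa. Function names are hypothetical.

```python
import numpy as np


def minmax_fit(X):
    """Per-feature minimum and maximum, computed on the training inputs."""
    X = np.asarray(X, dtype=float)
    return X.min(axis=0), X.max(axis=0)


def minmax_apply(X, lo, hi):
    """Map each feature to [0, 1]; constant training features are divided by 1, not 0."""
    span = np.where(hi > lo, hi - lo, 1.0)
    return (np.asarray(X, dtype=float) - lo) / span


def zscore_fit(y):
    """Mean and population standard deviation of the training targets."""
    y = np.asarray(y, dtype=float)
    return y.mean(), y.std()


def zscore_apply(y, mu, sigma):
    """Standardize targets to zero mean and unit variance."""
    return (np.asarray(y, dtype=float) - mu) / sigma


def zscore_invert(z, mu, sigma):
    """Map standardized predictions back to the original units (MPa)."""
    return np.asarray(z, dtype=float) * sigma + mu
```

Test inputs scaled with training-set extremes can fall slightly outside [0, 1]; that is expected and usually harmless for a tanh or sigmoid network.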

In this study, the primary research goal is to accurately predict the compressive strength of high-performance concrete based on its mix design parameters. To achieve this, a hybrid prediction model named AP-IVYPSO-BP is proposed, in which the AP-IVYPSO is employed to optimize the initial weights and biases of a BPNN. By combining global search capability with nonlinear learning, this model aims to address the complex mapping relationship between concrete components and compressive strength, thereby improving prediction precision.

4.2. Performance Evaluation Metrics

To assess the predictive performance of the proposed model, this study uses three widely used evaluation metrics: the coefficient of determination (R²), mean absolute error (MAE), and root mean squared error (RMSE). MAE and RMSE measure the differences between actual and predicted values, with lower values indicating better prediction accuracy. R² measures the proportion of the variance in the observed values that is explained by the model’s predictions; values approaching 1 indicate better model performance, and R² can become negative when the predictions are worse than simply predicting the mean of the observations. The computational expressions are given in Equations (20) through (22):

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2} \tag{20}$$

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right| \tag{21}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2} \tag{22}$$

where $y_i$ is the actual output value of the $i$-th sample, $\bar{y}$ is the mean of the actual values, $\hat{y}_i$ is the predicted output value, and $n$ is the total number of samples.
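The three metrics can be computed directly from paired actual and predicted strengths, as in the minimal sketch below (the function name is hypothetical). It assumes both arrays are already in the same units, for example MPa after undoing the target standardization.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """Return (R2, MAE, RMSE) for paired actual and predicted values."""
    y = np.asarray(y_true, dtype=float)
    y_hat = np.asarray(y_pred, dtype=float)
    resid = y - y_hat
    ss_res = np.sum(resid ** 2)              # residual sum of squares
    ss_tot = np.sum((y - y.mean()) ** 2)     # total sum of squares about the mean
    r2 = 1.0 - ss_res / ss_tot
    mae = np.mean(np.abs(resid))
    rmse = np.sqrt(np.mean(resid ** 2))
    return float(r2), float(mae), float(rmse)
```

For instance, `regression_metrics([1, 2, 3], [2, 2, 2])` yields R² = 0: predicting a constant equal to the mean explains none of the variance, even though the MAE (2/3) is small.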

4.3. Overview of Experimental Procedures

In this experiment, 1000 samples are used for training, and the remaining 30 samples are held out for testing. The model is configured for 100 training iterations, and the parameters of the optimization algorithms are listed in Table 8. To balance computational efficiency and algorithm performance, the population size of all algorithms in this study was uniformly set to 30.

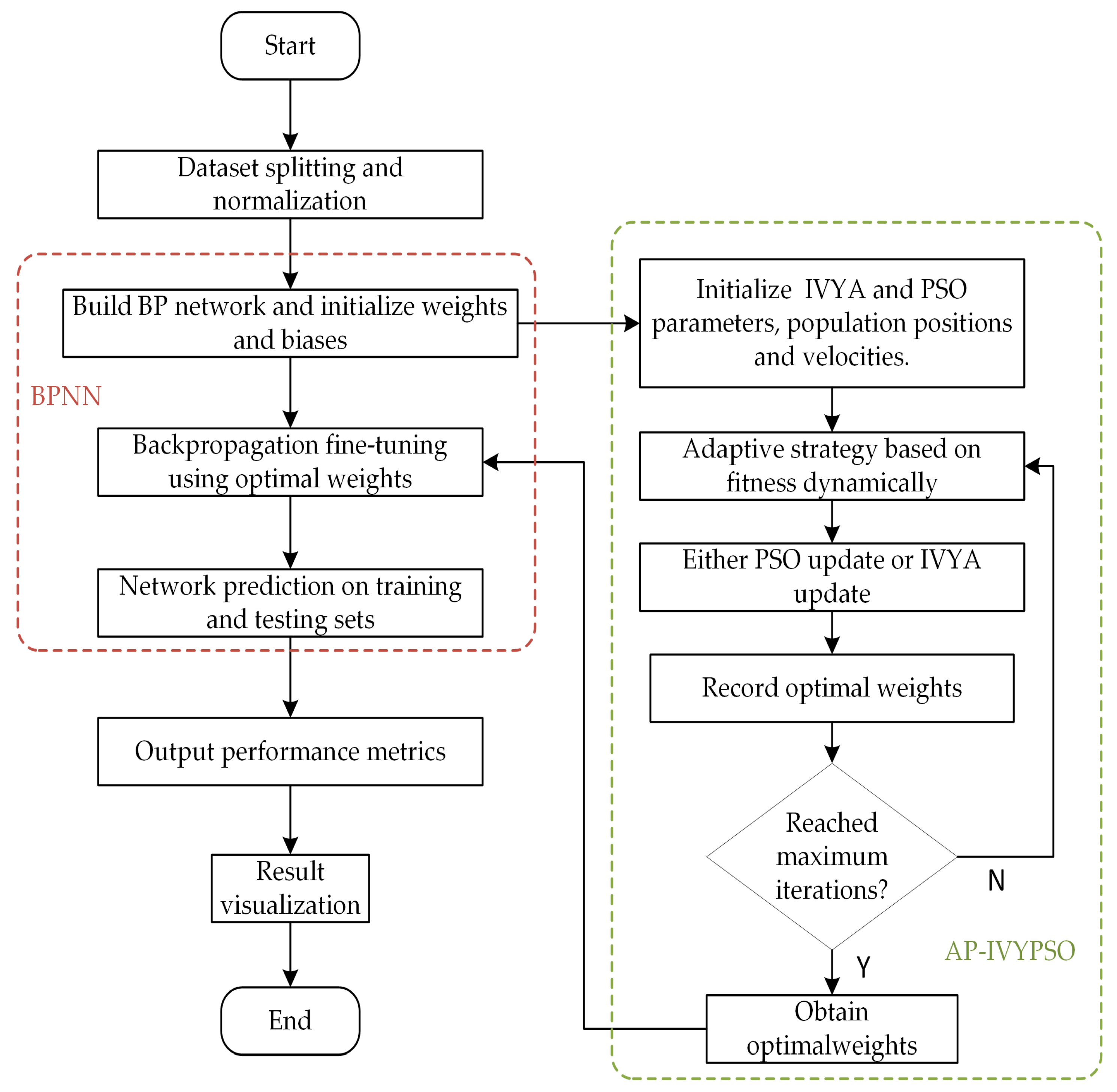

The flowchart of the experimental procedure is shown in Figure 9. The specific procedures for conducting this experiment are as follows:


- (1) Data Preprocessing and Dataset Division: The original HPC dataset is divided into a training set and a test set. The input features are normalized using the mapminmax function, and the output values are standardized using Z-score normalization. These preprocessing steps improve the model’s stability and speed up convergence during training.

- (2) Neural Network Architecture Configuration: A BPNN is constructed with two hidden layers of 16 and 8 neurons, respectively. The architecture is initialized according to the dimensionality of the input features and the number of output variables.

- (3) AP-IVYPSO Initialization: The maximum number of iterations and the population size of the AP-IVYPSO algorithm are set. Each “vine” individual represents a candidate combination of neural network weights and thresholds (biases), which are the optimization targets. Each individual dynamically selects the PSO or IVYA update strategy through an adaptive probability mechanism based on fitness improvement.

- (4) Fitness Evaluation: The fitness function is defined as the RMSE between predicted and actual values. Minimizing this fitness guides the “vine” individuals toward the optimal solution and thereby maximizes the network’s predictive performance.

- (5) Position Update and Local Search: Each “vine” individual updates its position either by applying a local disturbance strategy or by moving in the direction of a randomly selected leader. In PSO updates, the particle velocity and position are adjusted by the velocity update rule; in IVYA updates, the position is modified according to the heuristic growth dynamics of the vine. A mutation mechanism applied with a certain probability increases population diversity and helps avoid local optima.

- (6) Optimal Weight Selection: After all iterations, the “vine” individual with the best fitness is selected, and its neural network weights and thresholds are used to initialize the BPNN.

- (7) Model Training and Prediction: The BPNN is trained starting from the optimal weights obtained by AP-IVYPSO. Predictions are made for both the training and test sets, and model performance is evaluated using R², MAE, and RMSE. The results are visualized to assess the model’s accuracy.
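Assuming fully connected layers with one threshold (bias) per neuron, each “vine” individual in steps (3) to (6) encodes every weight and threshold of the 8-16-8-1 network from step (2), so the dimension D of the search space works out as follows. The paper does not state D explicitly; this figure follows only from the layer sizes and the stated assumption.

$$D = \underbrace{8 \times 16 + 16}_{\text{input} \to \text{hidden 1}} + \underbrace{16 \times 8 + 8}_{\text{hidden 1} \to \text{hidden 2}} + \underbrace{8 \times 1 + 1}_{\text{hidden 2} \to \text{output}} = 144 + 136 + 9 = 289$$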

4.4. Analysis of Compressive Strength Prediction for High-Performance Concrete

To assess the practicality and advantages of the AP-IVYPSO-BP model in predicting the compressive strength of high-performance concrete, we systematically compared it with four benchmark models: the unoptimized standard BPNN, the PSO-optimized PSO-BP model, the GA-optimized GA-BP model, and the IVYA-optimized IVY-BP model. By keeping the input features consistent, different optimization strategies were applied to adjust the BPNN parameters, ensuring a comprehensive and fair comparison of model performance under the same dataset and experimental conditions.

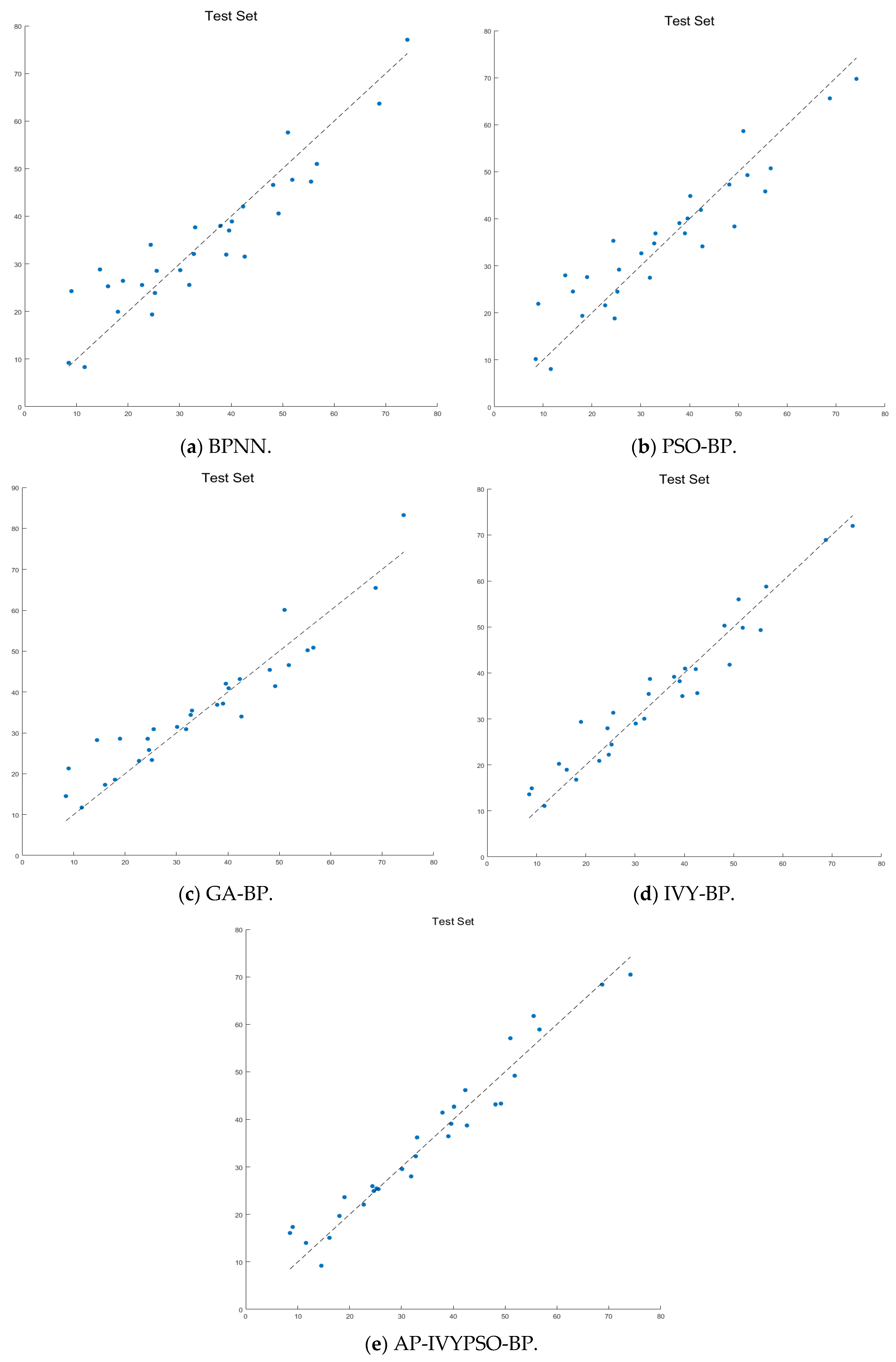

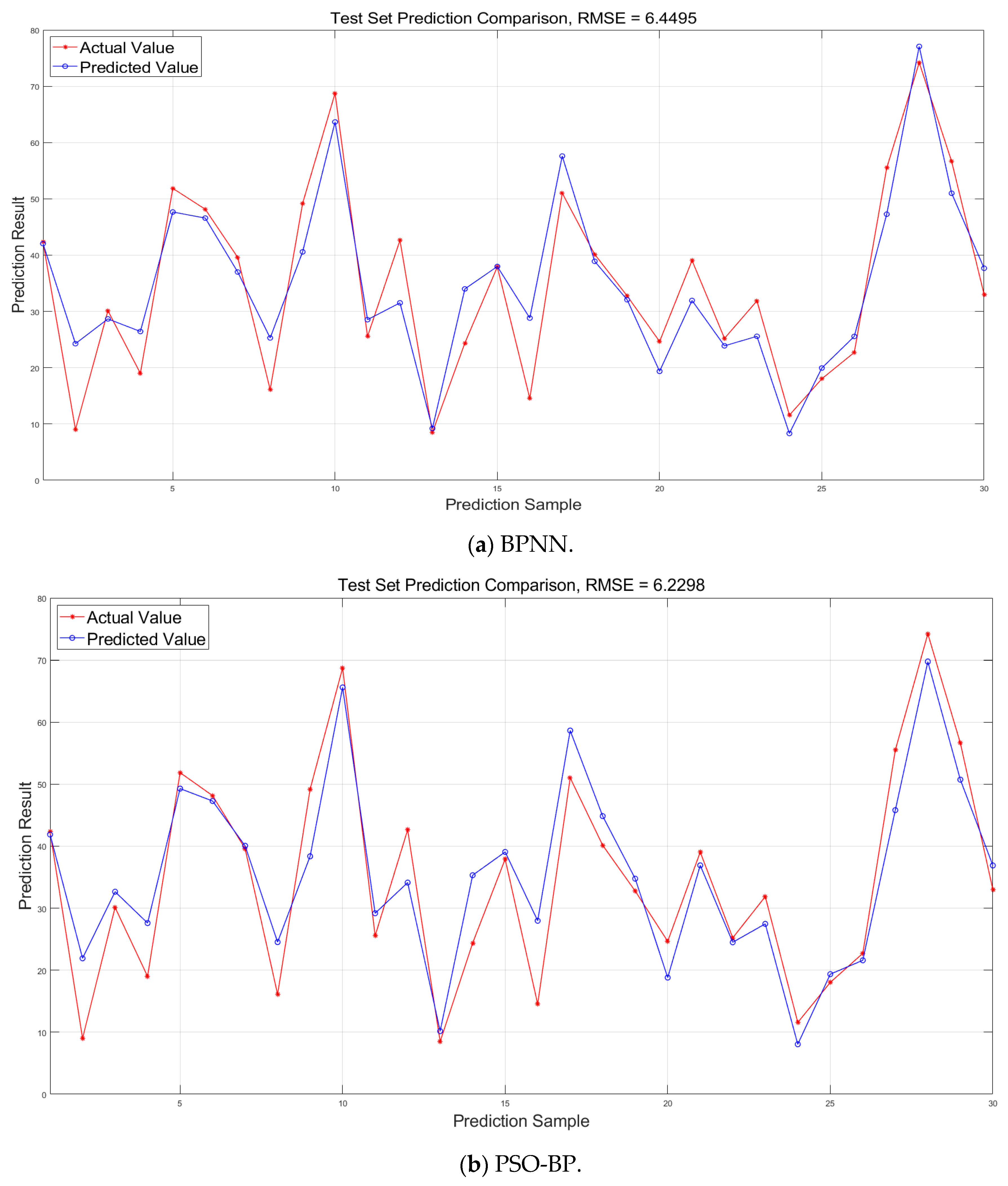

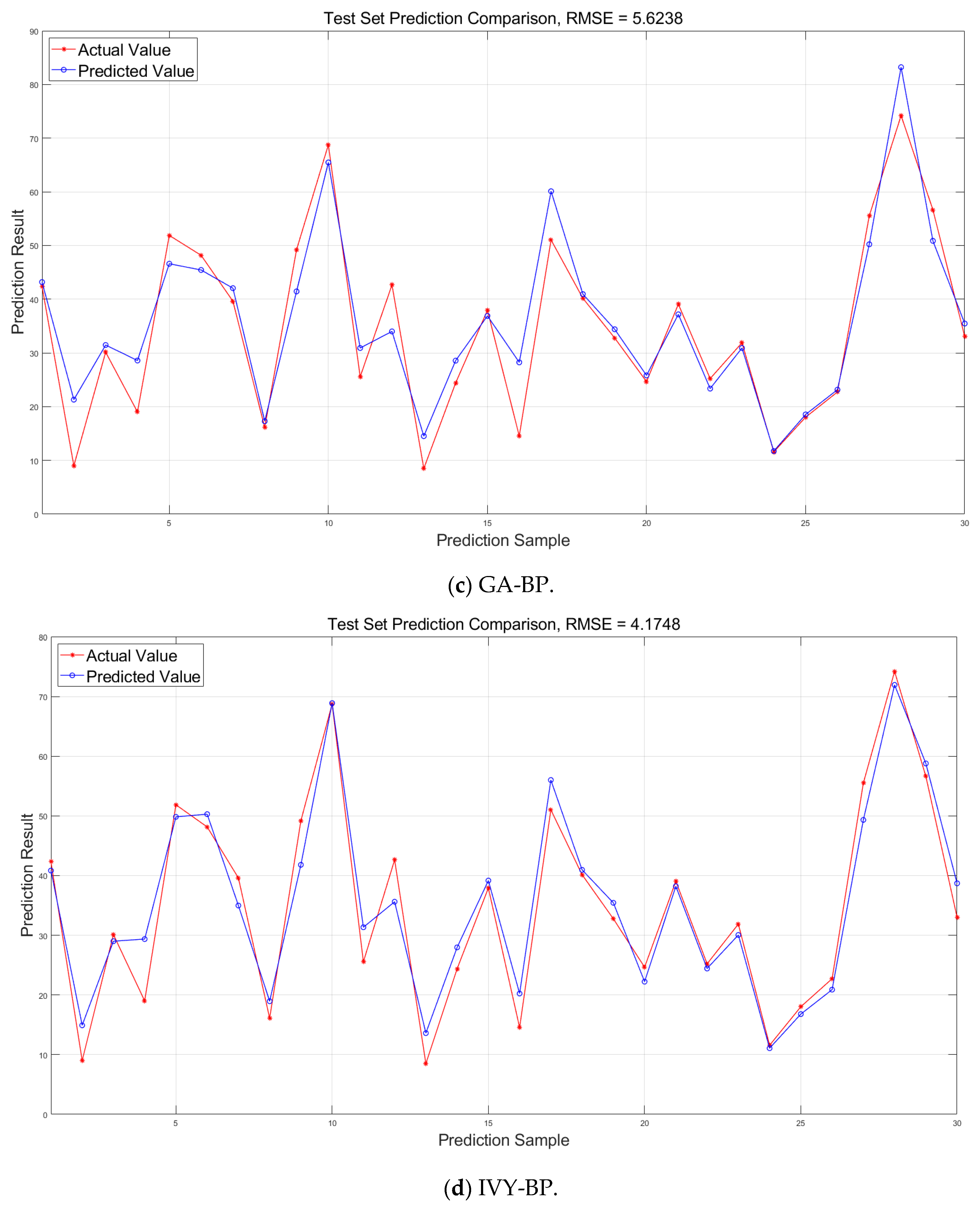

To compare the prediction performance more intuitively, Figure 10 presents scatter plots and Figure 11 presents prediction curves of actual versus predicted values. These visualizations show the performance of the BPNN, PSO-BP, GA-BP, IVY-BP, and AP-IVYPSO-BP models on the test set.

As shown in Figure 10, the predicted points of the AP-IVYPSO-BP model are the most tightly clustered and lie almost uniformly along the ideal diagonal (where predicted values equal actual values), indicating minimal deviation between actual and predicted values. In contrast, the scatter plots of the BPNN, PSO-BP, GA-BP, and IVY-BP models show greater dispersion, with the BPNN model exhibiting particularly pronounced prediction errors.

The prediction curves in Figure 11 show that the AP-IVYPSO-BP model fits the entire sample range well: its predicted curve closely follows the actual curve, with consistent fluctuations and trends. In comparison, the other models deviate clearly at certain sample points, particularly at extreme values and inflection points, indicating weaker fitting performance. The AP-IVYPSO-BP model also tracks regions with large fluctuations in the data well, capturing the complex nonlinear relationships. This underscores its superior fitting ability and stability on complex data structures.

Table 9 lists the prediction results and the runtime of each model, from which the following conclusions can be drawn.

The AP-IVYPSO-BP model outperforms all comparison models, especially in prediction performance on the test set. Specifically, it surpasses the traditional BPNN, PSO-BP, GA-BP, and IVY-BP models on all three evaluation metrics (R², MAE, and RMSE), achieving R² = 0.9542, MAE = 3.0404, and RMSE = 3.7991, which indicates small deviations between predicted and actual values. Among the compared models, AP-IVYPSO-BP therefore delivers the highest prediction accuracy for the compressive strength of high-performance concrete.

Compared with the traditional BPNN model, the PSO and GA algorithms already improve performance, and IVYA further improves the predictive capability of the BPNN. The AP-IVYPSO-BP model combines the global search ability of PSO with the local search characteristics of IVYA, dynamically adjusting the PSO and IVYA update mechanisms through an adaptive probability strategy based on fitness improvement. This dynamic balance between global and local search improves both prediction accuracy and model stability. Compared with the IVY-BP model, AP-IVYPSO-BP improves R² from 0.9485 to 0.9542, reduces MAE by 0.3155, and lowers RMSE by 0.3757. These improvements demonstrate the higher prediction accuracy of AP-IVYPSO-BP relative to the other optimization techniques.
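The gains over IVY-BP can also be expressed in relative terms. The IVY-BP values below are back-calculated from the reported differences, so this is a derived check on the figures above rather than an additional reported result:

$$\mathrm{MAE}_{\text{IVY-BP}} = 3.0404 + 0.3155 = 3.3559, \qquad \frac{0.3155}{3.3559} \approx 9.4\%$$

$$\mathrm{RMSE}_{\text{IVY-BP}} = 3.7991 + 0.3757 = 4.1748, \qquad \frac{0.3757}{4.1748} \approx 9.0\%$$

$$1 - R^2:\ 0.0515 \rightarrow 0.0458, \qquad \frac{0.0515 - 0.0458}{0.0515} \approx 11.1\%$$

Read this way, the seemingly small R² gain of 0.0057 corresponds to roughly a one-ninth reduction in the unexplained variance on the test set.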

In conclusion, these results suggest that integrating bio-inspired optimization algorithms with neural networks is an effective approach for improving regression accuracy in complex nonlinear problems. By leveraging both global and local search, the AP-IVYPSO-BP model generalizes well to the test set and performs robustly in predicting the compressive strength of high-performance concrete.

5. Conclusions

This paper introduces a novel hybrid prediction model, AP-IVYPSO-BP, that combines the bio-inspired IVYA with PSO to optimize a BPNN for accurately predicting the compressive strength of HPC. The model exploits the global search capability of PSO and the local search characteristics of IVYA while dynamically adjusting their update mechanisms through an adaptive probability strategy based on fitness improvement. This dynamic adjustment balances global and local search, improving prediction accuracy, model stability, and robustness.

To validate the proposed model’s effectiveness, experiments were conducted on a publicly available dataset containing 1030 high-performance concrete mix samples. The AP-IVYPSO-BP model was compared with the traditional BPNN, PSO-BP, GA-BP, and IVY-BP models. The experimental results show that AP-IVYPSO-BP outperforms the other models on all three evaluation metrics (R², MAE, and RMSE). Specifically, it achieved an R² of 0.9542, reflecting excellent fitting ability and prediction accuracy. Moreover, MAE and RMSE improved substantially compared with the baseline models, further highlighting the model’s superior performance in predicting concrete compressive strength.

The AP-IVYPSO-BP model provides an effective tool for accurately predicting concrete strength, contributing to better material utilization, reduced resource waste, and lower environmental impact, thereby supporting the sustainable development of the construction industry. Future research could apply this model to predicting the strength of other engineering materials and incorporate additional optimization algorithms and deep learning techniques to further improve its performance. Investigating the model’s practical applications in engineering management would also help realize its full potential for sustainable development.