Abstract

Hyper-parameters play a critical role in neural networks; they significantly impact both training effectiveness and overall model performance. Proper hyper-parameter settings can accelerate model convergence and improve generalization. Among the various hyper-parameters, the learning rate is particularly important. However, its best setting depends on the specific task and dataset, and no universal rule exists, so it is usually tuned by trial and error, which makes selecting the learning rate complex and time-consuming. Evolutionary computation algorithms can address this challenge by adjusting the learning rate automatically to improve training efficiency and model performance. We therefore propose a black widow optimization algorithm based on Lagrange interpolation (LIBWONN) to optimize the learning rate of ResNet18. We evaluate LIBWONN’s effectiveness on 24 benchmark functions from CEC2017 and CEC2022 and compare it with nine advanced metaheuristic algorithms. The experimental results indicate that LIBWONN outperforms the other algorithms in convergence and stability. Additionally, experiments on publicly available datasets from six different fields demonstrate that LIBWONN improves accuracy on the training and testing sets by 6.99% and 4.48%, respectively, compared with the standard BWO.

1. Introduction

Owing to its ability to extract features efficiently, its scalability, and its strong performance, image recognition technology is widely used in fields such as image classification and medical image analysis [1,2,3,4,5]. ResNet, proposed by He et al. [2], addresses the degradation problem in deep neural networks by adding shortcut connections. This design enables the network to be deeper without easily overfitting. The model performs excellently in tasks such as image classification [3] and has become a significant benchmark in deep learning [6,7,8,9,10,11].

The training process of ResNet is significantly affected by its learning rate [4], which determines the step size for updating model parameters and directly influences the convergence speed [12,13,14,15,16], ultimately impacting the model’s performance. Compared to a fixed learning rate, an adaptive learning rate can dynamically adjust based on the model’s performance. This approach effectively addresses complex optimization problems, thereby improving the stability and efficiency of model training [5].

An improperly set learning rate may lead to slow convergence or trap the model in a local optimum, which makes it difficult to find the global optimum. Numerous researchers have therefore conducted extensive studies on optimizing the learning rate to improve training effectiveness [17,18,19,20,21].

Ma et al. propose an efficient optimization method to address function approximation problems, enabling the solution of partial differential equations with deep learning. They employ particle methods (PMs) and smoothed particle methods (SPMs) for spatial discretization, allowing for a smaller learning rate to ensure the convergence of the optimization algorithm [8]. Franchini et al. study a stochastic gradient algorithm that gradually increases the mini-batch size in a predefined manner, automatically adjusting the learning rate in a line search process that is either monotonic or non-monotonic [9]. Wang et al. adopt a learning rate scheduler with an incremental proportional–integral–derivative controller for optimizing the parameters of stochastic gradient descent (SGD) [10]. Qin et al. developed the Adaptive Parallel Stochastic Gradient Descent (AP-SGD) algorithm to minimize scheduling costs. This method achieved significant parallelism by integrating an adaptive momentum technique into the learning process, thereby speeding up convergence with adaptive learning rates and acceleration coefficients [11]. However, these methods still suffer from inappropriate hyper-parameters, which result in low computational efficiency [22,23,24,25,26,27].

To address the aforementioned issues, this study proposes a black widow optimization algorithm based on Lagrange interpolation (LIBWONN). By dynamically constructing an interpolation function from known data points during iteration, the algorithm adjusts its parameters to obtain an optimal learning rate.

To verify the effectiveness of the proposed algorithm, nine optimization algorithms are selected as baselines, covering four categories: “Optimization based on principles from physics and mathematics (PSEQADE [28])”, “Optimization based on evolutionary cycles (COVIDOA [29], LSHADE-cnEpSin [30])”, “Optimization based on behaviors observed in animals and plants (ALA [31], SDO [32], SASS [33], BOA [34], WOA [35])”, and “Optimization inspired by human activities (DOA [36], CBSO [37], AGSK [38])”. Each of these algorithms represents a different approach to solving optimization problems, which ensures a comprehensive comparison. We conduct comparative experiments to analyze their convergence speed, solution accuracy, and robustness, and thereby highlight the strengths and weaknesses of LIBWONN.

The key contributions of this study are listed below:

(a) This paper proposes a Lagrange interpolation-based black widow optimization algorithm (LIBWONN) that improves training efficiency and performance by optimizing the learning rate of ResNet18. It overcomes the original BWO algorithm’s difficulty in escaping local optima and achieves more robust learning rate adjustments.

(b) Experiments are conducted on six publicly available datasets, with nine novel metaheuristic optimization algorithms selected as baselines. The experimental results demonstrate that LIBWONN outperforms the other algorithms and maintains good generalization and stability across multiple datasets.

2. Related Theoretical Description

2.1. ResNet18 Model

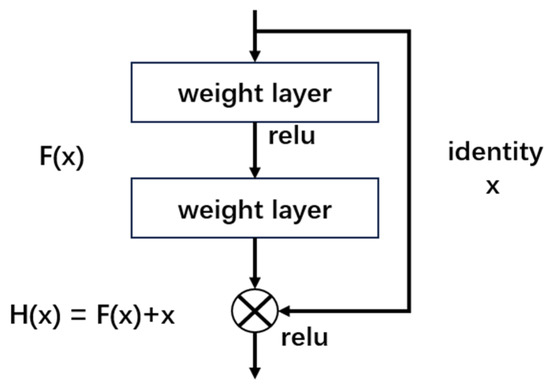

ResNet is a deep residual network model proposed by a research team at Microsoft Research. The number of convolutional layers in ResNet can be adjusted based on different tasks; adding more layers can improve accuracy to meet varying task requirements by increasing structural complexity with depth [38,39,40,41,42,43,44,45,46]. This paper adopts ResNet18, which is a commonly used ResNet model. The primary innovation of this model is the introduction of residual learning. Traditional deep neural networks frequently face issues with vanishing or exploding gradients, especially as the network depth increases, which makes training challenging. ResNet18 addresses these issues by using residual blocks, which allow shortcut connections between network layers, thus enabling the model to learn features more deeply and effectively. This can be represented by the formula below:

$$H(x) = F(x) + x \tag{1}$$

where H(x) is the expected output of the network, F(x) denotes the residual function, and x is the input signal or feature map.

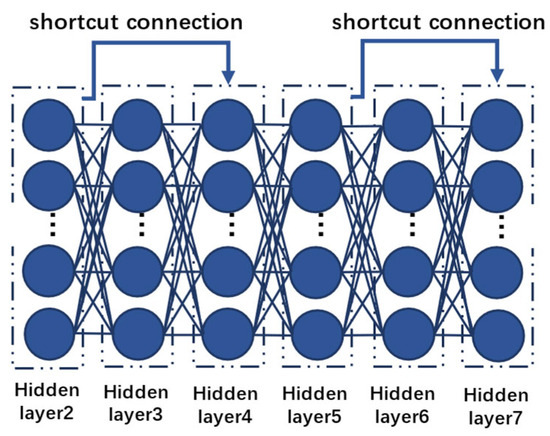

As shown in Figure 1, each residual block includes two main paths: one is the direct identity mapping, where x is added directly to the output of the residual function via a shortcut connection, as shown in Figure 2; the other is the path for learning the residual, which produces an output through two convolutional layers and the ReLU activation function.

Figure 1. The residual block for ResNet18.

Figure 2. The convolutional image of a residual block.
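The two paths of a residual block can be illustrated with a minimal sketch in plain Python. The helper names `relu` and `residual_block` are hypothetical, the residual path is passed in as an arbitrary function instead of two real convolutional layers, and applying ReLU after the addition is an assumption taken from the usual ResNet design rather than from the text above.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]


def residual_block(x, residual_fn):
    """Return ReLU(F(x) + x), where residual_fn plays the role of F."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        raise ValueError("F(x) must match the shape of x for the identity shortcut")
    # Shortcut connection: the input is added to the residual path output.
    return relu([f + a for f, a in zip(fx, x)])


# If the residual path outputs zeros, the block reduces to the identity
# mapping for non-negative inputs.
x = [0.5, 2.0, 1.0]
print(residual_block(x, lambda v: [0.0 for _ in v]))  # [0.5, 2.0, 1.0]
```

Because the shortcut passes x through unchanged, the block only has to learn the difference F(x) between the desired output and its input.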

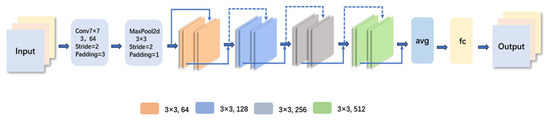

The structure of ResNet18 consists of several major components, and Figure 3 represents the architecture of this model. The input layer accepts an image of size 224 × 224 × 3. This is followed by a 7 × 7 convolutional layer with a stride of 2, outputting 64 channels, and then a 3 × 3 max pooling layer with a stride of 2. In the residual block, the network is divided into four stages. Stage 1 contains two residual blocks with 64 channels; Stage 2 has two residual blocks with 128 channels and a stride of 2; Stages 3 and 4 contain two residual blocks with 256 and 512 channels, respectively, each with a stride of 2. After a global average pooling layer, the feature map is converted into a fixed-length feature vector, which is passed through a fully connected layer to produce a classification result [35,36,37,38,39,40,41].

Figure 3. The model architecture diagram of ResNet.
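The layer sizes described above can be checked with a short calculation. The sketch below tracks only the spatial size and channel count through the network; the padding values (3 for the 7 × 7 convolution, 1 for the 3 × 3 layers) are assumptions that follow the common ResNet18 configuration and are not stated in the text, and the function names are illustrative.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet18_feature_sizes(input_size=224):
    """Return (component, spatial size, channels) after each major component."""
    sizes = []
    s = conv_out(input_size, kernel=7, stride=2, padding=3)  # padding assumed
    sizes.append(("conv 7x7", s, 64))
    s = conv_out(s, kernel=3, stride=2, padding=1)           # padding assumed
    sizes.append(("max pool 3x3", s, 64))
    # (channels, stride of the first block) for the four stages.
    stages = [(64, 1), (128, 2), (256, 2), (512, 2)]
    for idx, (channels, stride) in enumerate(stages, start=1):
        s = conv_out(s, kernel=3, stride=stride, padding=1)
        sizes.append((f"stage {idx}", s, channels))
    sizes.append(("global average pool", 1, 512))
    return sizes


for name, size, channels in resnet18_feature_sizes():
    print(f"{name:>20}: {size} x {size} x {channels}")
```

Under these assumptions the 224 × 224 input shrinks to 112, 56, 56, 28, 14, and finally 7 before global average pooling produces the 512-element feature vector.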

The learning rate is a critical hyper-parameter for training ResNet18, as it directly affects the convergence speed and performance of the model. An appropriate learning rate can accelerate convergence, allowing the model to achieve better results within fewer training iterations. Setting the learning rate too high can cause the model to overshoot the optimal solution during loss function optimization, resulting in unstable or divergent training. Conversely, if the learning rate is too low, convergence may be slow, which increases training time and may trap the model in local optima. For ResNet18, the depth structure with shortcut connections benefits from an appropriate learning rate, which helps to avoid the issue of vanishing gradients.

The effect of the learning rate on each layer of the ResNet18 model is captured by the equation below:

$$\theta_{t+1} = \theta_t - \eta \nabla L(\theta_t) \tag{2}$$

where $\theta_t$ represents the parameters of the current model, $\theta_{t+1}$ represents the parameters of the updated model, and η is the learning rate, which controls the step size of parameter updating. $\nabla L(\theta_t)$ is the gradient of the loss function.

For example, the influence on the convolutional and fully connected layers can be represented by Equations (3) and (4).
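The effect of the step size can be seen on a toy problem. The sketch below applies the update rule (new parameters = current parameters minus the learning rate times the gradient) to the one-dimensional loss L(θ) = θ², which is an illustrative stand-in chosen here and not a loss used in the experiments.

```python
def gradient_descent(theta0, lr, steps):
    """Minimise L(theta) = theta**2 with theta <- theta - lr * dL/dtheta."""
    theta = theta0
    for _ in range(steps):
        grad = 2.0 * theta          # gradient of theta**2
        theta = theta - lr * grad   # update with step size lr
    return theta


# Too small: barely moves. Moderate: converges. Too large: diverges.
for lr in (0.001, 0.1, 1.1):
    print(f"lr={lr}: theta after 50 steps = {gradient_descent(1.0, lr, 50):.4g}")
```

Each step multiplies θ by (1 − 2η), so a very small η shrinks θ slowly, a moderate η shrinks it quickly, and any η above 1 makes the magnitude of θ grow at every step.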

To verify the effectiveness of the ResNet18 model in image classification, five classic models are selected for comparison: the Recurrent Neural Network (RNN) [47], the Convolutional Neural Network (CNN) [2], the Long Short-Term Memory network (LSTM) [48], the Generative Adversarial Network (GAN) [49], and the Visual Geometry Group network (VGG) [50]. All models are trained on the FASHION-MNIST dataset with the LIBWONN optimization algorithm. As shown in Table 1, ResNet18 performs best in both training and testing, with a test accuracy of 95.33%, which is about 4.28% higher than that of the worst-performing model, GAN. CNN and VGG perform close to ResNet18 but are slightly inferior. RNN and LSTM perform worse, with accuracies about 2% to 3% lower than ResNet18. GAN performs the worst, with higher training and testing losses. The residual connections in ResNet18 effectively avoid the vanishing gradient problem in deep networks, ensuring faster convergence and higher accuracy. RNN and LSTM are better suited to sequential data and therefore perform worse in image classification than CNN and ResNet. Although VGG also achieves good results, it lacks the residual structure of ResNet18, which leads to slightly worse training and testing performance. Therefore, ResNet18, with its structural advantages, outperforms the other classic neural network models in this image classification task.

Table 1. Comparison of results from neural network models.

2.2. Black Widow Optimization Algorithm

Inspired by the biological characteristics of the black widow spider, the black widow optimization (BWO) algorithm is a novel nature-inspired metaheuristic optimization method. The algorithm simulates the strategies male black widow spiders use to locate females, combining pheromone-guided actions with movement strategies within their web to effectively search and optimize solutions in complex problem spaces. The specific principles of the two strategies employed by the BWO are explained as follows:

- Movement Strategy

The movement of the black widow spider within its web can be abstracted into linear and spiral movements:

$$X_i(t+1) = \begin{cases} X^*(t) - m\,X_{r_1}(t), & \text{if } rand < 0.3 \\ X^*(t) - \cos(2\pi\beta)\,X_i(t), & \text{otherwise} \end{cases} \tag{5}$$

where $X_i(t+1)$ is the new position of the current search agent, $X^*(t)$ is the best search agent from the previous iteration, m denotes a randomly generated floating-point number within the range [0.4, 0.9], $X_{r_1}(t)$ is a randomly selected search agent, $X_i(t)$ represents the i-th search agent, and β denotes a random floating-point number within the range [−1.0, 1.0].

When the spider moves linearly, it follows a determined direction for precise and effective searching in the current region. In contrast, spiral movement expands its search range, enhancing global exploration and helping to avoid local optima. The algorithm implements the black widow spider’s movement using a formula with random floating-point numbers, where m controls speed, and added randomness further enhances search diversity. By strategically combining both linear and spiral movements, the algorithm balances local exploitation and global exploration, ultimately improving its ability to solve complex optimization problems efficiently.
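A minimal sketch of the movement strategy is given below. It assumes the update form of the published BWO algorithm: a linear move X* − m·X_r1 taken with probability 0.3 and a spiral move X* − cos(2πβ)·X_i otherwise. The function name `move` and the `partner` argument (the randomly selected agent) are hypothetical names introduced for illustration.

```python
import math
import random


def move(agent, best, partner, rng):
    """Return a new position for one search agent (lists of floats)."""
    m = rng.uniform(0.4, 0.9)       # speed factor for the linear move
    beta = rng.uniform(-1.0, 1.0)   # angle factor for the spiral move
    if rng.random() < 0.3:
        # Linear movement relative to the best agent (assumed form).
        return [b - m * p for b, p in zip(best, partner)]
    # Spiral movement around the best agent (assumed form).
    return [b - math.cos(2.0 * math.pi * beta) * a for b, a in zip(best, agent)]


rng = random.Random(0)
print(move([1.0, 2.0], [0.0, 0.0], [0.5, 0.5], rng))
```

Passing a seeded `random.Random` instance keeps the sketch reproducible; a real implementation would also clip the new position to the search bounds.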

- Pheromone

Pheromones play a crucial role in the mating process of spiders, as male spiders tend to prioritize female spiders with higher pheromone levels. The formula for calculating pheromones is as follows:

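$$\mathrm{pheromone}(i) = \frac{\mathrm{fitness}_{max} - \mathrm{fitness}(i)}{\mathrm{fitness}_{max} - \mathrm{fitness}_{min}} \tag{6}$$

where $\mathrm{fitness}_{max}$ and $\mathrm{fitness}_{min}$ are the worst and best fitness values in the global iteration, respectively, and $\mathrm{fitness}(i)$ is the fitness of the i-th search agent.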

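When the pheromone level is less than or equal to 0.3, the search agent is updated by Equation (7):

$$X_i(t) = X^*(t) + \frac{1}{2}\left[X_{r_1}(t) - (-1)^{\sigma} X_{r_2}(t)\right] \tag{7}$$

where $r_1$ and $r_2$ are random integers generated within the range from 1 to the maximum size of the search agents, with $r_1 \ne r_2$, and $\sigma \in \{0, 1\}$ is a random binary number. The pseudocode for the BWO algorithm is given in Algorithm 1.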
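The pheromone strategy can be sketched as follows. The normalisation treats the largest fitness as the worst value (a minimisation problem), and the replacement rule X* + ½[X_r1 − (−1)^σ X_r2] is assumed from the published BWO formulation; the function names and the guard for identical fitness values are additions made for this illustration.

```python
import random


def pheromones(fitness):
    """Normalise fitness to [0, 1]; the best (lowest) fitness gets 1.0."""
    worst, best = max(fitness), min(fitness)
    if worst == best:
        return [1.0] * len(fitness)   # all agents are equally good
    return [(worst - f) / (worst - best) for f in fitness]


def replace_low_pheromone(population, fitness, best, rng, threshold=0.3):
    """Rebuild agents whose pheromone is <= threshold around the best agent."""
    new_population = []
    for agent, ph in zip(population, pheromones(fitness)):
        if ph <= threshold:
            r1, r2 = rng.sample(range(len(population)), 2)   # r1 != r2
            sign = rng.choice((1.0, -1.0))                   # (-1) ** sigma
            agent = [b + 0.5 * (x1 - sign * x2)
                     for b, x1, x2 in zip(best, population[r1], population[r2])]
        new_population.append(agent)
    return new_population


print(pheromones([4.0, 1.0, 2.5]))  # [0.0, 1.0, 0.5]
```

In this example only the first agent, whose pheromone is 0.0, would be rebuilt; the other two keep their positions.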

| Algorithm 1: BWO |
| --- |
| Input: MaxIter, pop, dim |
| Operation |
| /* Initialization */ |
| Initialize: MaxIter, pop, dim |
| Initialize: parameters m and β |
| /* Training Starts */ |
| while iteration < MaxIter do |
| if random < 0.3 then |
| move the search agent linearly |
| else |
| move the search agent spirally |
| end if |
| Calculate the pheromone for each search agent using Equation (6) |
| Revise search agents with low pheromone values using Equation (7) |
| Calculate the fitness value of the new search agents |
| if the new fitness is better than the best fitness then |
| update the best solution X* |
| end if |
| end while |
| /* Operation Ending */ |
| Output: X*, the best solution |

The algorithm takes as input the maximum number of iterations (MaxIter), population size (pop), and dimension (dim). It first initializes the parameters m and β. During the iterative process, the algorithm selects different operations based on a set probability to maintain population diversity and search capability.

In each iteration, the pheromone of each individual is calculated, which reflects the individual’s fitness. Individuals with lower pheromone values update their positions to avoid becoming trapped in the local optima. After updating, the fitness values are recalculated, and the global best solution is updated if a better solution is found. After the iterations end, the algorithm outputs the best solution X*. This process simulates the hunting behavior of black widow spiders to efficiently search for the global optimum.

2.3. Lagrange Interpolation Method

The BWO algorithm has excellent performance in solving complex optimization problems, but it still has some limitations, as it is sensitive to critical parameters such as the population size during initialization, the maximum number of iterations, and the dimensionality of the problem. In some cases, the BWO algorithm may encounter local optima and exhibit an unstable convergence speed, which can lead to a decrease in solution quality and a slowdown in optimization speed.

To address the issues in optimization algorithms, F. Miao, Y. Wu et al. propose a quadratic interpolation whale optimization algorithm for solving high-dimensional feature selection problems [26,27], while Z. Li, S. Li et al. propose a novel cubic interpolated beetle antennae search (CIBAS)-based robot arm calibration algorithm [51].

Therefore, this study also introduces mathematical interpolation methods to optimize the BWO algorithm. We select six interpolation methods (Lagrange interpolation, Newton interpolation [42], spline interpolation [43], quadratic interpolation [44], linear interpolation [45], and Chebyshev interpolation [46]) and conduct extensive comparative experiments on the FASHION-MNIST dataset with the ResNet18 model. From the data in Table 2, it can be clearly seen that Lagrange interpolation performs the best in optimizing the black widow optimization (BWO) algorithm. It achieves the lowest training and testing losses, as well as the highest training and testing accuracies, indicating superior convergence and generalization abilities. Furthermore, this method achieves 0.95 in precision, recall, and F1 score, resulting in the best overall classification performance. In contrast, the other interpolation methods show slightly lower testing accuracy and F1 scores, with quadratic and linear interpolation performing particularly poorly. This indicates that Lagrange interpolation is significantly more effective in optimizing the BWO algorithm, leading to faster convergence, stronger generalization, and more stable classification performance across various problem domains, especially in complex and large-scale optimization tasks.

Table 2. Comparison of results from interpolation methods.

Lagrange interpolation is employed to enhance the position-updating process of each spider, thereby further optimizing the BWO algorithm. When a spider needs to update its position toward the global optimum, a new position is calculated through Lagrange interpolation, which adopts several known optimal spider positions. Then, the new position is compared with the global optimal position to decide whether to update. This approach mitigates excessive jumps in the spider’s movement, enhancing the algorithm’s convergence and robustness, which helps avoid local optima and improve global search performance.

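For k + 1 known points $(x_j, y_j)$, the Lagrange interpolation polynomial is given by the following:

$$L(x) = \sum_{j=0}^{k} y_j\, l_j(x) \tag{8}$$

Each $l_j(x)$ is a Lagrange basis polynomial, which is expressed as follows:

$$l_j(x) = \prod_{\substack{i=0 \\ i \ne j}}^{k} \frac{x - x_i}{x_j - x_i} \tag{9}$$

where $x_j$ is the position of the independent variable and $y_j$ is the value of the function at this position.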

The Lagrange interpolation method calculates the value of an unknown point by using the values of three known points. The steps are roughly as follows:

1. Determine the coordinates of the three known points.

2. Calculate the Lagrange basis function for each point.

3. Obtain the Lagrange interpolation polynomial by summing the three basis functions, each weighted by its function value.
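The three steps above can be sketched in a few lines of Python. The function name `lagrange_interpolate` is illustrative, and the sample points are taken from y = x² purely as a check, since a three-point interpolant reproduces any parabola exactly.

```python
def lagrange_interpolate(points, x):
    """Evaluate the Lagrange polynomial through `points` at `x`.

    points: list of (x_j, y_j) pairs with distinct x_j.
    """
    total = 0.0
    for j, (xj, yj) in enumerate(points):
        basis = 1.0
        for i, (xi, _) in enumerate(points):
            if i != j:
                basis *= (x - xi) / (xj - xi)   # builds the basis l_j(x)
        total += yj * basis                      # weighted sum of the bases
    return total


# Step 1: three known points sampled from y = x**2.
pts = [(1.0, 1.0), (2.0, 4.0), (3.0, 9.0)]
# Steps 2 and 3 happen inside the function.
print(lagrange_interpolate(pts, 2.5))  # 6.25
```

At each known point exactly one basis function equals 1 and the others equal 0, so the polynomial passes through all three points.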

By using the Lagrange interpolation method to optimize the BWO algorithm (LIBWONN), its convergence speed and local search capability are enhanced. Using Lagrange interpolation, a new optimal fitness is derived from the current optimal fitness, global optimal fitness, and previous optimal fitness. Furthermore, the new optimal fitness is evaluated against the global optimal fitness to determine if an update to the global optimal fitness is necessary.

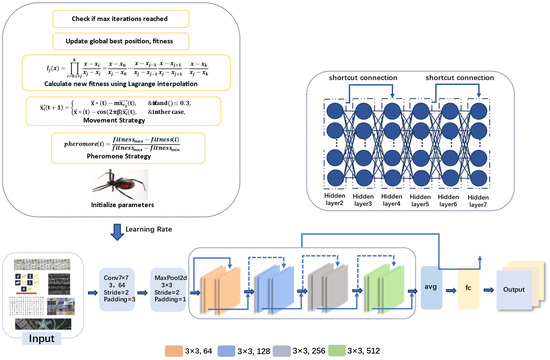

2.4. The Model Design Based on the LIBWONN Method

We employ the LIBWONN algorithm to dynamically adjust the learning rate of the ResNet18 model, which improves training efficiency. This approach allows the model to converge quickly in the early stages and gradually reduce the learning rate as it approaches the optimal solution, thereby reducing training time and the number of iterations. Additionally, this approach helps avoid local optima and enhances the model’s overall performance. The adaptive learning rate also improves model stability, reducing the risk of overfitting and enhancing generalization ability. Meanwhile, dynamic adjustment simplifies hyper-parameter tuning, reduces manual intervention, and increases training efficiency. Moreover, the model structure is as follows:

The model optimization process shown in Figure 4 is based on the Lagrange interpolation-based black widow optimization algorithm (LIBWONN), which simulates the hunting behavior of black widow spiders in nature to search for the optimal learning rate. The training data come from a public dataset and are normalized before being used to train a deep neural network. After each training session, the validation loss is calculated on a validation set. This loss value acts as the pheromone signal in the model and serves as the metric for evaluating the quality of the current learning rate, guiding the individuals during the optimization process.

Figure 4. The model structure of the LIBWONN method.

The core of the black widow optimization algorithm lies in simulating the predatory behavior of a spider population for search and optimization. Each individual (i.e., spider) represents a candidate learning rate, randomly selected within a defined boundary range. After training the model, the validation loss corresponding to each individual is calculated and used as its fitness value. The better the fitness (that is, the lower the validation loss), the stronger the pheromone released, attracting other individuals to move closer and accelerating convergence toward the optimal solution.

In each iteration, individuals update their positions not only under the guidance of the current best and global best solutions, but also by incorporating Lagrange interpolation to enhance the algorithm’s search capability and convergence performance. Unlike traditional update strategies that rely solely on current fitness values for local adjustments, the Lagrange interpolation mechanism leverages trend information derived from historical optimal solutions to predict potentially better learning rate positions. Specifically, during the optimization process, three key points are recorded: the best learning rate and its corresponding validation loss from the previous iteration, the best learning rate and its loss from the current iteration, and the global best learning rate with its loss. These three points are treated as inputs to the Lagrange interpolation function, which constructs a polynomial to estimate the functional relationship between the learning rate and loss.

The interpolated result yields a predicted learning rate position that is likely to produce a lower validation loss. This position is then evaluated through training, and if its corresponding loss outperforms the current global best, it is adopted as the new global optimum and releases stronger pheromones to guide future searches. Since the interpolation integrates multiple historically optimal points and captures the underlying optimization trend, it not only improves search accuracy and speeds up convergence but also enhances the algorithm’s ability to escape local optima and maintain population diversity. As a result, the LIBWONN algorithm demonstrates superior performance in dynamically tuning the learning rate, ultimately improving model generalization and classification accuracy.
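One possible reading of this prediction step is sketched below. The text does not state how the predicted position is read off the polynomial, so the sketch assumes that the proposal is the minimiser of the parabola through the three (learning rate, loss) points. The parabola is built from divided differences, which yields the same unique quadratic as the Lagrange form; the function name and the bounds are hypothetical.

```python
def propose_learning_rate(points, lower, upper):
    """Propose the minimiser of the parabola through three (lr, loss) points.

    Returns None when the points do not define a convex parabola.
    """
    (x1, y1), (x2, y2), (x3, y3) = sorted(points)
    if x1 == x2 or x2 == x3:
        return None                        # duplicate learning rates
    d1 = (y2 - y1) / (x2 - x1)             # first divided differences
    d2 = (y3 - y2) / (x3 - x2)
    a = (d2 - d1) / (x3 - x1)              # curvature of the interpolant
    if a <= 0.0:
        return None                        # no interior minimum to predict
    vertex = 0.5 * (x1 + x2) - d1 / (2.0 * a)
    return min(max(vertex, lower), upper)  # keep the proposal inside the bounds


# Previous best, current best, and global best as (learning rate, loss) pairs.
history = [(0.1, 0.9), (0.01, 0.5), (0.03, 0.4)]
print(propose_learning_rate(history, lower=1e-4, upper=1.0))
```

The proposal is only a candidate: as described above, it still has to be evaluated through training and is adopted only if its loss beats the current global best.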

In this way, during each iteration, the LIBWONN algorithm not only relies on traditional fitness evaluation and position updates but also uses Lagrange interpolation to predict potentially better solutions. This accelerates the search for the optimal learning rate and helps the neural network achieve better performance during training. Ultimately, the optimized model performs significantly better on the validation set, effectively improving classification accuracy and generalization ability. Moreover, by incorporating historical optimal points into the interpolation process, the algorithm enhances its ability to escape local optima and explores a wider solution space more effectively.

The pseudocode of the LIBWONN algorithm is given in Algorithm 2.

| Algorithm 2: LIBWONN |
| --- |
| Input: MaxIter, pop, dim |
| Operation |
| /* Initialization */ |
| Initialize: MaxIter, pop, dim |
| Initialize: parameters m and β |
| /* Training Starts */ |
| while iteration < MaxIter do |
| if random < 0.3 then |
| move the search agent linearly |
| else |
| move the search agent spirally |
| end if |
| Calculate the pheromone for each search agent using Equation (6) |
| Revise search agents with low pheromone values using Equation (7) |
| Calculate the fitness value of the new search agents |
| if the new fitness is better than the best fitness then |
| update the best solution X* |
| end if |
| if the fitness obtained by Lagrange interpolation is better than the global best fitness then |
| update the best solution X* |
| end if |
| end while |
| /* Operation Ending */ |
| Output: X*, the best solution |

The primary steps of the enhanced LIBWONN algorithm are as follows:

Step 1: Initialize the population parameters, such as the population size, maximum number of iterations, and population boundary range, and randomly initialize the optimal position and optimal fitness. Calculate the pheromone value by using Equation (6) based on the pheromone strategy.

Step 2: Update the spider positions by using Equation (5) according to the movement strategy. If the pheromone value is less than or equal to 0.3, the pheromone strategy updates the current individual position by using Equation (7).

Step 3: Adjust the current individual’s position and fitness.

Step 4: Update the global optimal position, optimal fitness, and pheromone.

Step 5: Use the current optimal fitness, global optimal fitness, and previous optimal fitness to calculate a new fitness value via Lagrange interpolation. Evaluate it against the global optimal fitness and update the global optimal fitness if needed.

Step 6: Verify whether the maximum iterations are completed. If not, proceed back to Step 2; otherwise, halt the iteration and return the optimal position and fitness.
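Steps 1 to 6 can be sketched end to end on a toy problem. Everything below is a minimal illustration under stated assumptions and not the implementation used in the experiments: `toy_validation_loss` is a hypothetical stand-in for training ResNet18 and measuring the validation loss, the movement and pheromone rules follow the published BWO form, m and β are redrawn once per iteration, and the interpolation step proposes the minimiser of the parabola through the previous, current, and global best points.

```python
import math
import random


def toy_validation_loss(lr):
    """Stand-in for 'train with lr and return the validation loss'."""
    return (math.log10(lr) + 2.0) ** 2 + 0.1   # minimum at lr = 1e-2


def parabola_minimum(points):
    """Minimiser of the parabola through three (x, y) points, or None."""
    (x1, y1), (x2, y2), (x3, y3) = sorted(points)
    if x1 == x2 or x2 == x3:
        return None
    d1 = (y2 - y1) / (x2 - x1)
    d2 = (y3 - y2) / (x3 - x2)
    a = (d2 - d1) / (x3 - x1)
    return None if a <= 0.0 else 0.5 * (x1 + x2) - d1 / (2.0 * a)


def libwonn(loss_fn, lower, upper, pop=10, max_iter=30, seed=0):
    rng = random.Random(seed)
    clip = lambda v: min(max(v, lower), upper)
    # Step 1: random population of learning rates and their fitness.
    agents = [rng.uniform(lower, upper) for _ in range(pop)]
    fitness = [loss_fn(a) for a in agents]
    idx = min(range(pop), key=fitness.__getitem__)
    g_best, g_fit = agents[idx], fitness[idx]
    prev_best = (g_best, g_fit)
    for _ in range(max_iter):
        # Step 2: movement strategy (linear or spiral).
        m, beta = rng.uniform(0.4, 0.9), rng.uniform(-1.0, 1.0)
        for i in range(pop):
            if rng.random() < 0.3:
                agents[i] = clip(g_best - m * agents[rng.randrange(pop)])
            else:
                agents[i] = clip(g_best - math.cos(2 * math.pi * beta) * agents[i])
        fitness = [loss_fn(a) for a in agents]
        # Pheromone strategy: rebuild agents with pheromone <= 0.3.
        worst, best = max(fitness), min(fitness)
        for i in range(pop):
            ph = 1.0 if worst == best else (worst - fitness[i]) / (worst - best)
            if ph <= 0.3:
                r1, r2 = rng.sample(range(pop), 2)
                sign = rng.choice((1.0, -1.0))
                agents[i] = clip(g_best + 0.5 * (agents[r1] - sign * agents[r2]))
                fitness[i] = loss_fn(agents[i])
        # Steps 3 and 4: current best and global best.
        idx = min(range(pop), key=fitness.__getitem__)
        cur_best = (agents[idx], fitness[idx])
        if cur_best[1] < g_fit:
            g_best, g_fit = cur_best
        # Step 5: interpolation proposal, kept only if it improves the best.
        cand = parabola_minimum([prev_best, cur_best, (g_best, g_fit)])
        if cand is not None:
            cand = clip(cand)
            cand_fit = loss_fn(cand)
            if cand_fit < g_fit:
                g_best, g_fit = cand, cand_fit
        prev_best = cur_best
    # Step 6: return the best position and fitness after max_iter iterations.
    return g_best, g_fit


print(libwonn(toy_validation_loss, lower=1e-4, upper=1.0))
```

Because a candidate replaces the global best only when its loss is lower, the returned fitness can never be worse than that of the best initial agent. In practice, each call to the loss function is a full training run, so the population size and iteration count dominate the cost.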

3. Experiments and Analyses

3.1. Dataset

A total of six public datasets are used in the experiments, which cover various scenarios such as clothing, handwritten digits, and street-view images. Their details are as follows:

- FASHION-MNIST [52]: A clothing image dataset of 28 × 28 pixel grayscale images in 10 clothing categories, such as T-shirts, trousers, and dresses. The training set contains 60,000 samples, and the test set contains 10,000 samples.

- MNIST [53]: This dataset for handwritten digit recognition contains 28 × 28 pixel images of digits from 0 to 9, with 60,000 samples for training and 10,000 samples for testing.

- Intel Image Classification [54]: This dataset includes images from six different categories, like buildings, forests, glaciers, mountains, oceans, and cities. All images are standardized to 150 × 150 pixels. The training set contains 14,034 samples, and the testing set has 3,000 samples.

- SVHN [55]: A digit recognition dataset of 32 × 32 pixel street-view images. It includes 10 categories corresponding to the digits 0–9 and has 73,257 training images and 26,032 test images.

- RICE [56]: It covers five common rice varieties: Arborio, Basmati, Ipsala, Jasmine, and Karacadag. Image dimensions are 224 × 224 pixels with a total of 3,800 images.

- CIFAR10 [57]: This dataset includes images from 10 different categories: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. The images are 32 × 32 pixels, with a training set of 50,000 images and a test set of 10,000 images.

3.2. Base Models

To validate the performance of the LIBWONN algorithm, nine novel and advanced optimization algorithms are selected for comparative experiments.

- DOA: Dream Optimization Algorithm (DOA) is a novel metaheuristic algorithm inspired by human dreams. The algorithm combines a basic memory strategy with a forgetting and replenishing strategy to balance exploration and exploitation.

- ALA: Artificial Lemming Algorithm (ALA) is a biologically inspired metaheuristic algorithm based on the four basic behaviors of lemmings in nature: long migrations, digging holes, foraging for food, and avoiding predators. The algorithm simulates the survival strategies of lemmings in complex environments, providing an effective search method for solving optimization problems.

- SDO: Sled Dog Optimizer (SDO) is mainly inspired by the various behavior patterns of sled dogs, focusing on simulating the processes of dogs pulling sleds, training, and retiring to construct a mathematical model.

- CBSO: Connected Banking System Optimizer (CBSO) is a population-based optimization algorithm that belongs to a multi-stage search strategy. It is inspired by the interconnectedness of banking systems, where different banks are connected in various ways, facilitating transactions and submissions.

- PSEQADE: Quantum Adaptive Population State Evaluation Differential Evolution Algorithm (PSEQADE) is an improved quantum heuristic differential evolution algorithm. It adopts a quantum adaptive mutation strategy to reduce excessive mutation and introduces a population state evaluation framework to enhance convergence accuracy and stability.

- COVIDOA: Coronavirus Optimization Algorithm (COVIDOA) is an evolutionary algorithm that simulates the biological lifecycle. It is inspired by the behavior of organisms at different stages such as growth, reproduction, and adaptation. The algorithm simulates the evolutionary process of individuals from youth to adulthood, adapting through mutation, recombination, selection, and reproduction based on environmental changes, thereby balancing global and local search capabilities.

- SASS: Social-Aware Salp Swarm Algorithm (SASS) is a population-based optimization algorithm inspired by the behavior of sand particles in a sandstorm. It mainly simulates the collective movement of sand particles under the influence of wind to perform global optimization. The goal of SASS is to improve collaboration among individuals in the group using a social awareness model, enhancing the balance between exploration and exploitation in the search process.

- LSHADE-cnEpSin: Latent Search Strategy Adaptive Differential Evolution with Compound Neighborhood-based Epistemic Population for Sine Function (LSHADE-cnEpSin) is an improved differential evolution algorithm. It enhances optimization performance, especially for high-dimensional complex problems, by using an adaptive mutation strategy and a control mechanism that balances global and local search.

- AGSK: Adaptive Gaining Sharing Knowledge (AGSK) is an algorithm that simulates the human knowledge-sharing process. It enhances global search capability and local search efficiency by introducing an adaptation strategy based on successful historical positional information, making it suitable for solving complex optimization problems.

- BOA: The Bobcat Optimization Algorithm (BOA) is a bio-inspired metaheuristic algorithm that simulates the natural hunting behavior of bobcats. It enhances the balance between global exploration and local exploitation by modeling two phases: the bobcat’s movement towards its prey (exploration) and the chase process to catch its prey (exploitation). BOA’s dual-phase position update strategy improves convergence speed and solution quality, making it effective for solving high-dimensional, complex, and constrained optimization problems.

- WOA: The Wombat Optimization Algorithm (WOA) is a bio-inspired metaheuristic algorithm that simulates the foraging behavior of wild wombats and their evasive maneuvers against predators. The algorithm models two phases: the wombat’s position changes during foraging (exploration) and its movements when diving into tunnels to escape predators (exploitation), effectively balancing global search and local search.

3.3. Performance Verification

To validate the effectiveness of the LIBWONN algorithm in adaptively optimizing the learning rate of the ResNet18 model, a set of comparative experiments is carried out, in which LIBWONN is compared with nine other state-of-the-art optimization algorithms. The selected algorithms are representative, which ensures a comprehensive and convincing evaluation of LIBWONN’s performance in optimizing the ResNet18 model. The experiments involve six public datasets to ensure the comprehensiveness and reliability of the evaluation results. The chosen datasets cover various fields and tasks, which allows for testing the algorithms’ performance across diverse applications and validating the applicability of the LIBWONN algorithm.

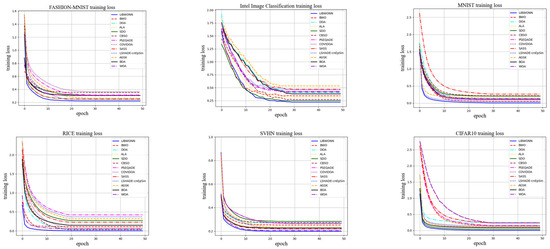

In Figure 5, the LIBWONN algorithm performs excellently on multiple datasets. Specifically, LIBWONN shows the fastest convergence speed and achieves convergence by the 10th iteration on the FASHION-MNIST, MNIST, and SVHN datasets, where the final loss value reaches its minimum. This indicates that the LIBWONN algorithm is able to effectively capture the features of the data on these relatively simple datasets.

Figure 5.

The training loss images on six datasets.

On the Intel Image Classification dataset, compared with the WOA model, LIBWONN’s initial convergence speed and final loss value are slightly inferior by 1.08%. This is mainly attributed to the higher complexity and diversity of the dataset, which requires more iterations for the model to adequately capture the data patterns. Despite its relatively weaker performance on this dataset, LIBWONN still demonstrates a good convergence capability and maintains a relatively low final loss value overall.

Overall, the LIBWONN model performs exceptionally well across multiple datasets, especially in tasks involving FASHION-MNIST, MNIST, and SVHN, where its accuracy reaches above 98%. Its efficient convergence speed and low loss values highlight its effectiveness in image classification. Moreover, when dealing with more complex datasets, LIBWONN also shows strong adaptability, indicating promising potential for further optimization and exploration in future research.

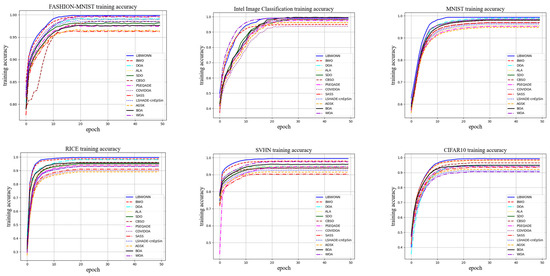

In Figure 6, we present a comparison of the training accuracy between different optimization algorithms and the proposed LIBWONN algorithm across the six datasets. The results show that LIBWONN achieves high accuracy, requires fewer training iterations, and converges rapidly. Additionally, compared to the other optimization algorithms, LIBWONN offers enhanced stability and smoother training curves.

Figure 6.

The training accuracy images on six datasets.

Notably, on the MNIST and SVHN datasets, the LIBWONN algorithm attains high accuracy within a short training time, highlighting its capability for fast convergence. However, on the Intel Image Classification dataset, its training accuracy exhibits more noticeable fluctuations. This can be attributed to the dataset’s greater diversity and complexity, as it comprises six different scene categories and intricate image structures, making generalization more challenging for the optimization algorithm. Factors such as noise, imbalanced sample distribution, and the limited size of the training dataset may lead the model to learn incorrect patterns, thereby causing accuracy fluctuations. In convolutional neural networks trained for image classification, dataset imbalance can further contribute to such variations. Nevertheless, when using models like ResNet, accuracy fluctuations typically smooth out over time rather than displaying random oscillations, which aligns with our expected trend.

Overall, the LIBWONN algorithm demonstrates outstanding performance across multiple datasets, particularly in terms of rapid convergence. However, when applied to complex datasets such as Intel Image Classification, the impact of dataset diversity and complexity on the model’s generalization ability must be carefully considered. By fine-tuning optimization parameters, employing data augmentation techniques, and leveraging other strategies, the model’s stability and accuracy can be further enhanced.

TP (true positive): the prediction matches the actual positive class. TN (true negative): the prediction matches the actual negative class. FP (false positive): the prediction is positive, but the actual class is negative. FN (false negative): the prediction is negative, but the actual class is positive.

Accuracy, the most intuitive metric, represents the proportion of correct predictions out of all the samples. However, it does not always provide a complete picture of a model’s performance, particularly in imbalanced datasets where errors in the minority class have a minimal impact on overall accuracy. To quantitatively verify the performance of the LIBWONN algorithm, we select several evaluation metrics such as accuracy, precision, recall, and F1-score. These metrics are standard in the field of machine learning and are used to comprehensively assess the model’s predictive capabilities.

Precision measures the ratio of true positive predictions to the total positive predictions made by the model. This metric shows how well the model identifies actual positive cases. A high precision score means the model is more careful in predicting positives, reducing the likelihood of false positives.

Recall evaluates the percentage of true positives among all actual positive samples, reflecting the model’s ability to detect positive instances. A high recall means the model is adept at identifying all positive samples, thereby decreasing false negative errors.

F1-Score, being the harmonic mean of the precision and recall, is used to assess the balance between these metrics. It reaches its highest value of 1 only when both precision and recall equal 1, and it decreases as either of them falls. This metric is crucial in applications where both precision and recall need to be taken into account.
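The four metrics described above can be sketched from the TP, TN, FP, and FN counts as follows. This is a minimal binary-classification illustration, not the evaluation code used in the paper: the `ConfusionCounts` container, the function names, and the sample counts are hypothetical, and the text does not state how per-class scores are averaged for the multi-class datasets.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Hypothetical container for binary confusion-matrix counts."""
    tp: int
    tn: int
    fp: int
    fn: int


def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def classification_metrics(c):
    """Compute accuracy, precision, recall, and F1 from confusion counts."""
    accuracy = safe_div(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn)
    precision = safe_div(c.tp, c.tp + c.fp)
    recall = safe_div(c.tp, c.tp + c.fn)
    # F1 is the harmonic mean of precision and recall.
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Illustrative counts only; they are not taken from the paper's tables.
counts = ConfusionCounts(tp=90, tn=85, fp=10, fn=15)
metrics = classification_metrics(counts)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because F1 is a harmonic mean, it is pulled toward the smaller of precision and recall, which is why it only reaches 1 when both equal 1.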

The p-value represents the probability of observing the current data or more extreme results under the assumption that the null hypothesis is true, serving as a measure of the evidence against the null hypothesis. A lower p-value indicates that the observed data are less compatible with the null hypothesis, providing a basis for its rejection. Typically, if the p-value is less than the significance level (e.g., 0.05), the result is considered statistically significant. This allows researchers to make data-driven decisions and draw conclusions about the relationships between variables. The mathematical formulas for the Wilcoxon signed-rank test and the Friedman test are shown in Equations (19) and (20), respectively. After standardization, hypothesis testing is conducted to validate the assumptions and ensure reliable results.
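As a rough illustration of how a signed-rank comparison between two algorithms can be computed, the sketch below uses the textbook form of the Wilcoxon signed-rank statistic with a normal approximation for the two-sided p-value. It is not the paper's Equation (19): the function names and the paired scores are hypothetical, zero differences are dropped, and no tie or continuity correction is applied, so the p-value is only approximate for small samples.

```python
import math


def average_ranks(values):
    """Rank values ascending (1-based); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank(a, b):
    """Return (W_plus, W_minus, approximate two-sided p-value)."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # drop zero differences
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    # Normal approximation of the null distribution of the rank sum.
    mean = n * (n + 1) / 4
    std = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(w_plus, w_minus) - mean) / std
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w_plus, w_minus, p_value


# Hypothetical paired scores (in %) for two optimizers on eight problems.
scores_a = [95, 97, 93, 99, 91, 96, 94, 98]
scores_b = [94, 95, 90, 95, 86, 90, 87, 90]
w_plus, w_minus, p = wilcoxon_signed_rank(scores_a, scores_b)
print(f"W+ = {w_plus}, W- = {w_minus}, p = {p:.4f}")
```

In practice a statistics library with exact small-sample handling would normally be preferred over this hand-rolled approximation.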

By thoroughly evaluating these metrics, we can better understand the performance of the LIBWONN algorithm across various datasets, as well as its potential and limitations in real-world applications, providing valuable insights into its practical applicability and robustness in different scenarios.

Table 2, Table 3, Table 4, Table 5, Table 6, Table 7 and Table 8 show the performance metrics of each benchmark model on six public datasets. In terms of the three comprehensive performance indicators, namely precision, recall, and F1-score, LIBWONN achieves the best results, which indicates that the LIBWONN algorithm has strong learning capabilities and significant advantages in classification accuracy, recall, and overall performance. This demonstrates that LIBWONN is able to effectively capture patterns and make accurate predictions across various datasets. Its superior performance in both precision and recall highlights its ability to balance the trade-off between minimizing false positives and false negatives, making it a highly reliable model for real-world applications.

Table 3.

SVHN dataset and comparison models.

Table 4.

MNIST dataset and comparison models.

Table 5.

FASHION-MNIST dataset and comparison models.

Table 6.

Intel Image Classification dataset and comparison models.

Table 7.

RICE dataset and comparison models.

Table 8.

CIFAR10 dataset and comparison models.

In the Wilcoxon signed-rank test, the p-value is less than 0.05 in most cases, indicating a significant difference between the other algorithms and LIBWONN. This suggests that the superior performance of LIBWONN is statistically significant rather than due to random chance. A p-value smaller than 0.05 typically means that the null hypothesis can be rejected, which supports the conclusion that LIBWONN outperforms the other algorithms in terms of accuracy, recall, and F1-score in these cases. This strengthens the validity of LIBWONN as a more effective and reliable algorithm for solving optimization and classification tasks.

LIBWONN performs exceptionally well on the MNIST and RICE datasets, with its training and testing loss being relatively low, and its accuracy exceeding 99%. All metrics reach their highest levels, showing a 3.44% improvement in performance on the training set compared to BWO, followed by a 2.16% improvement on the test set. Additionally, LIBWONN achieves excellent results on relatively complex datasets such as SVHN, Fashion-MNIST, and Intel Image Classification. Although its performance slightly declines on the texture-rich Fashion-MNIST and the diverse natural scenes of the Intel Image Classification dataset, LIBWONN still maintains a low loss, high accuracy, and outstanding precision, recall, and F1 scores. These results demonstrate LIBWONN’s consistent performance across a wide range of datasets. In the SVHN dataset’s training set, LIBWONN trails BWO by just 0.37%.

3.4. Testing Functions

The CEC 2017 and CEC 2022 testing functions, presented at the IEEE Congress on Evolutionary Computation, serve as essential benchmarks for evaluating optimization algorithms. The CEC 2017 functions encompass various optimization problems, such as unimodal, multimodal, composite, and dynamic types. These functions are designed to test the algorithm’s performance under different complexities and characteristics, thus evaluating its capabilities in both static and dynamic environments. The CEC 2022 testing functions introduce more complex challenges, including intricate multimodal, high-dimensional, irregular, and discontinuous functions, as well as constrained optimization problems. These features are intended to better reflect the challenges of modern applications by testing algorithms’ performance in high-dimensional spaces and complex landscapes, as well as under constraints. CEC 2022 emphasizes improving the robustness and adaptability of algorithms, thus providing a more comprehensive testing environment.

- Unimodal functions: Characterized by a single global optimum, they are used to test an algorithm’s local search proficiency and convergence speed. They are effective in evaluating the algorithm’s efficiency and accuracy in basic situations.

- Multimodal functions: Characterized by several local optima, they test the performance of an algorithm to navigate out of local optima as well as its global search capability. These functions are adopted to measure the performance of the algorithm in complex and nonlinear environments.

- Composite functions: They are composed of multiple functions with different characteristics, which simulate the complexity and diversity of real-world problems. They are adopted to verify the algorithm’s adaptability when handling mixed features and multiple levels of difficulty.

- Dynamic change functions: Their objective function changes over time, and they are used to evaluate the algorithm’s tracking ability and adaptability in dynamic environments. They are especially suitable for testing an algorithm’s ability to maintain its performance under continuously changing conditions.
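To make the distinction between the first two categories concrete, the sketch below implements two classic textbook functions: the sphere function (unimodal) and the Rastrigin function (multimodal). These are illustrative base functions only, not the CEC 2017 or CEC 2022 definitions, which apply shifts, rotations, and bias values to such base functions. The `shifted` helper and its arguments are hypothetical and only hint at that idea.

```python
import math


def sphere(x):
    """Unimodal: a single global minimum, f(0, ..., 0) = 0."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal: many regularly spaced local minima; global minimum f(0) = 0."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def shifted(func, shift, bias):
    """Move the optimum of func to `shift` and add a constant `bias`."""
    def wrapped(x):
        return func([v - s for v, s in zip(x, shift)]) + bias
    return wrapped


origin = [0.0, 0.0]
print(sphere(origin), rastrigin(origin))

# Near every integer point Rastrigin has a local minimum above the global one.
print(rastrigin([1.0, 1.0]))

# A shifted variant whose minimum value is the bias instead of zero.
shifted_sphere = shifted(sphere, shift=[1.0, 2.0], bias=100.0)
print(shifted_sphere([1.0, 2.0]))
```

An optimizer that only follows the local slope solves the sphere function easily but can stall in one of Rastrigin's local basins, which is the behavior multimodal benchmarks are meant to expose.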

The characteristics of these test functions lie in their diversity and complexity, thus allowing researchers to test the performance of algorithms through different dimensions and scenarios. The design of these functions takes into account various factors, including smoothness, differentiability, and the number of local optima. These factors directly affect the performance of optimization algorithms during the solution process. Moreover, Table 9 and Table 10 summarize the basic information regarding the CEC 2017 and CEC 2022 testing functions in our paper, including function numbers, function names, and global minimum values.

Table 9.

CEC2017 testing functions.

Table 10.

CEC2022 testing functions.

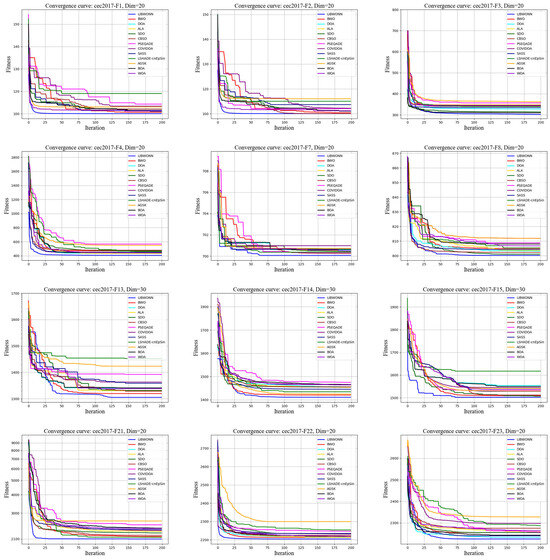

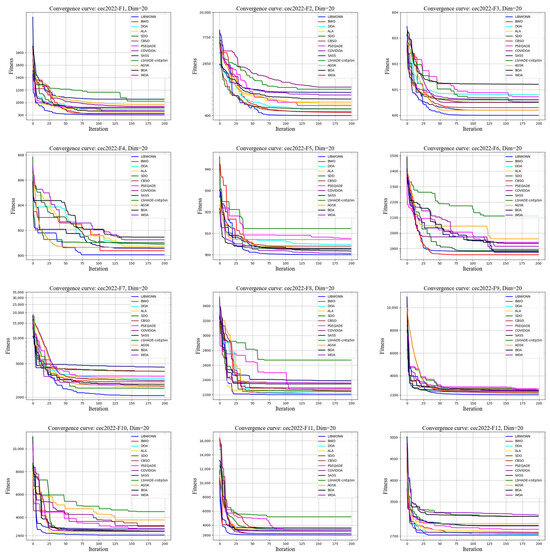

To thoroughly evaluate the performance of different optimization algorithms on the CEC test functions, we conducted multiple experiments using various algorithms to iteratively solve several test functions and plotted their fitness value curves. Figure 7 and Figure 8 illustrate the fitness changes over 200 iterations for different algorithms on the CEC2017 and CEC2022 test functions, providing a clear basis for subsequent analysis.

Figure 7.

CEC2017 testing functions.

Figure 8.

CEC2022 testing functions.

Figure 7 shows that LIBWONN and BWO exhibit rapid decreases in their fitness values within the first 50 iterations, demonstrating fast convergence speeds and strong search capabilities. Additionally, LIBWONN’s fitness curve shows smaller fluctuations, indicating better stability. This fast convergence makes these algorithms especially suitable for time-sensitive optimization problems, as they can quickly locate regions near the optimal solution. Although LIBWONN performs slightly worse than SDO and CBSO on the F3 and F7 test functions, it remains highly competitive overall and excels in handling complex and large-scale functions. Its efficient and stable characteristics give it advantages in practical applications, showing good robustness against parameter variations and environmental disturbances.

Figure 8 presents the performance of LIBWONN on the CEC2022 test functions, where it consistently demonstrates a rapid fitness decline, reflecting its excellent convergence ability and stability. The algorithm effectively balances exploration and exploitation, avoiding local optima and ensuring global optimization. Compared with the other algorithms, LIBWONN outperforms them in both convergence speed and final fitness values, showing strong adaptability, especially suited for high-dimensional and complex optimization tasks. Moreover, LIBWONN maintains stable performance across different test environments, highlighting its potential for applications in dynamic and complex scenarios.

To further quantify the algorithm’s performance, we evaluated it using three metrics: average value, best value, and standard deviation. The average fitness value reflects overall stability and reliability across multiple runs; a lower average indicates a consistent good performance. The best value measures the algorithm’s ability to find the optimal solution, while the standard deviation assesses the variability of its results, with smaller values indicating greater stability. Together, these metrics show that LIBWONN performs excellently across the various test functions, confirming its efficiency and broad applicability in complex optimization problems.

Combining the fitness curves and quantitative metrics, the LIBWONN algorithm demonstrates significant advantages in fast convergence, superior stability, and strong adaptability across multiple test functions, proving its potential and value in solving real-world complex optimization challenges.

The best value refers to the lowest fitness value achieved by the algorithm over multiple iterations, that is, the best solution the algorithm can reach in a given environment. The best value of the LIBWONN algorithm therefore directly reflects its efficiency in exploring the solution space. A lower best value indicates that the algorithm is capable of effectively finding solutions close to the global optimum.

The standard deviation is an indicator for assessing the variability of the algorithm’s results, thus reflecting the stability of LIBWONN on multiple runs. A smaller standard deviation shows a higher consistency of the algorithm’s results in different experiments, which indicates that LIBWONN can maintain a stable optimization performance in the face of various problems. It is particularly important in practical applications to ensure the reliability and reproducibility of the algorithm.

From an extensive analysis of these three metrics, we can thoroughly evaluate the performance of the LIBWONN optimization algorithm and affirm its effectiveness in handling complex optimization tasks. These quantitative results provide us with a more solid theoretical foundation, thus aiding us in understanding the significant advantages of the LIBWONN algorithm in practical applications.

- Average value (Ave)

- Best value (Best)

- Standard deviation (Std)
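For reference, the three metrics listed above are conventionally defined as follows. This is a sketch under assumptions: $f_i$ denotes the final fitness value of the $i$-th of $N$ independent runs, which are symbols introduced here for illustration, and the paper's own equations may differ in detail (for example, in using $1/N$ rather than $1/(N-1)$ for the standard deviation).

```latex
\mathrm{Ave} = \frac{1}{N} \sum_{i=1}^{N} f_i ,
\qquad
\mathrm{Best} = \min_{1 \le i \le N} f_i ,
\qquad
\mathrm{Std} = \sqrt{\frac{1}{N-1} \sum_{i=1}^{N} \left( f_i - \mathrm{Ave} \right)^2 }
```

For minimization problems, lower Ave and Best values indicate better solutions, and a lower Std indicates more consistent results.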

Each test function is uniformly set at 20 dimensions, except for F13, F14, and F15 in CEC2017, which are set at 30 dimensions.

The detailed results of the CEC2017 test functions are presented in Table 11. From the average values of each test function, it is evident that LIBWONN and BWO demonstrate superior performance across most functions, particularly for functions F1, F3, and F4. Their average values are consistently lower than those of the other algorithms, indicating that these two algorithms excel in optimizing these specific problems. This performance highlights LIBWONN and BWO’s strong ability to explore the solution space, suggesting that their algorithmic designs are highly effective in quickly locating regions near the optimal solution.

Table 11.

Comparison of results from 12 benchmark testing functions on CEC2017.

In terms of stability, the analysis of the standard deviation shows that LIBWONN and BWO generally exhibit low standard deviations, underscoring their high stability throughout the optimization process. For example, the standard deviations of LIBWONN and BWO remain within an acceptable range for the functions F1, F3, and F4, demonstrating consistent and reliable results.

The comparison of the best values further underscores the advantage of LIBWONN. In several of the test functions, LIBWONN achieves the best values, securing the first rank, which emphasizes its exceptional overall performance. This result confirms the efficacy of LIBWONN in optimization tasks and demonstrates its excellent adaptability and competitiveness across various complex problems.

Table 12 presents the detailed results of the CEC2022 test functions. Analyzing these results reveals that the LIBWONN algorithm continues to excel in several of the test functions, particularly F1 and F2, where its average fitness is notably lower than that of the other algorithms, further showcasing its optimization capabilities for complex problems. From the perspective of standard deviation, LIBWONN maintains relatively low standard deviation values, indicating remarkable stability. This suggests that LIBWONN can consistently deliver reliable optimization results across multiple experiments. In summary, LIBWONN’s performance in the CEC2022 test functions reinforces the advantages observed in CEC2017, confirming its continued effectiveness and reliability in solving complex optimization challenges.

Table 12.

Comparison of results from 12 benchmark testing functions on CEC2022.

3.5. Ablation Study and Sensitivity Analysis

To validate the contribution of each component of the LIBWONN algorithm to the performance of the ResNet18 model, and thereby to illustrate the efficacy of LIBWONN, two ablation experiments are carried out during the training of the ResNet18 model on the FASHION-MNIST dataset: (1) LIBWONN is replaced with a conventional optimization approach employing a fixed learning rate, and (2) the Lagrange interpolation in LIBWONN is removed and only the BWO algorithm is used, in order to isolate the contribution of the interpolation step to the optimization effectiveness of LIBWONN.

The experimental results indicate that LIBWONN exhibits significant performance advantages over the other optimization methods, as shown in Table 13. When replacing LIBWONN with a conventional optimization method using a fixed learning rate, both training and testing losses increased substantially, suggesting that a fixed learning rate fails to adapt effectively to the characteristics of the dataset, resulting in suboptimal model training. When removing the Lagrange interpolation and using only BWO for optimization, the testing accuracy improved to 92.50%, surpassing that of the fixed learning rate approach but still falling short of the 95.33% achieved by LIBWONN. This result demonstrates that while BWO effectively optimizes the learning rate, the absence of Lagrange interpolation reduces its precision and global search capability, preventing the model from achieving optimal performance.

Table 13.

The results of the ablation study.

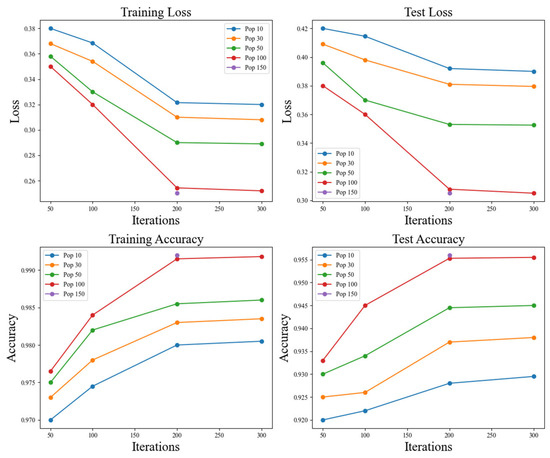

The sensitivity analysis examines the impact of population size and the number of iterations on the performance of LIBWONN in optimizing the ResNet18 model. The experimental results indicate that as the population size and the number of iterations increase, both the training loss and test loss gradually decrease, while the training accuracy and test accuracy continuously improve. This trend is consistent with the expected behavior of the optimization process. The results are shown in Table 14 and the line chart in Figure 9.

Table 14.

The results of the sensitivity analysis experiment.

Figure 9.

Line chart of sensitivity analysis results.

Specifically, when the population size reaches 100 and the number of iterations is set to 200, the training loss decreases to 0.2543, the test loss decreases to 0.3078, the training accuracy reaches 99.15%, and the test accuracy reaches 95.53%. In contrast, smaller population sizes (e.g., 10 or 30) and fewer iterations (e.g., 50 or 100) lead to a higher loss and lower accuracy, suggesting that a smaller population and a shorter optimization process fail to explore the search space sufficiently.

However, when the population size and the number of iterations increase to 150 and 300, respectively, the reduction in training and test loss tends to level off, and the improvement in test accuracy becomes less significant. For example, with 200 iterations, increasing the population size from 100 to 150 results in only a slight test accuracy improvement from 95.53% to 95.60%. Similarly, with 300 iterations, increasing the population size from 100 to 150 raises the test accuracy only marginally from 95.55% to 95.62%. This indicates that once the population size and the number of iterations reach a certain threshold, further increasing computational resources provides limited optimization benefits, exhibiting a convergence effect.
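The diminishing returns described above can be put next to a rough cost estimate. The sketch below takes the four test accuracies quoted in the text and compares each setting with the 100-individual, 200-iteration baseline. The cost model is an assumption made here for illustration: it counts one fitness evaluation per individual per iteration, whereas the actual number of evaluations in LIBWONN may be higher because BWO also evaluates offspring.

```python
# (population size, iterations) -> test accuracy in %, as quoted in the text.
reported = {
    (100, 200): 95.53,
    (150, 200): 95.60,
    (100, 300): 95.55,
    (150, 300): 95.62,
}


def evaluations(population, iterations):
    """Fitness evaluations, ASSUMING one per individual per iteration."""
    return population * iterations


baseline = (100, 200)
for setting, accuracy in sorted(reported.items()):
    relative_cost = evaluations(*setting) / evaluations(*baseline)
    gain = accuracy - reported[baseline]
    print(
        f"pop={setting[0]:3d} iters={setting[1]:3d} "
        f"cost x{relative_cost:.2f} gain {gain:+.2f} points"
    )
```

Under this assumption, the largest setting needs 2.25 times as many evaluations as the baseline for a gain of 0.09 percentage points, which matches the convergence effect noted in the text.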

4. Conclusions

This paper proposes an innovative adaptive learning rate method: a Lagrange interpolation-based black widow optimization algorithm developed to optimize the learning rate of the ResNet18 model, thereby significantly improving the training efficiency and performance of the model. The code developed in this paper is available at https://github.com/HJYJY/LIBWO (accessed on 30 May 2025).

To verify the effectiveness of LIBWONN, this paper selects nine new metaheuristic optimization algorithms (including DOA, ALA, SDO, CBSO, PSEQADE, COVIDOA, SASS, LSHADE-cnEpSin, AGSK) and conducts experiments on six public datasets covering various scenarios such as fashion, handwritten digits, and street-scene images. Additionally, 24 benchmark functions from CEC2017 and CEC2022 are chosen for the performance testing of the model. The experimental results demonstrate that LIBWONN performs excellently on multiple public datasets and significantly outperforms the original BWO algorithm without Lagrange interpolation.

By combining Lagrange interpolation techniques with the BWO algorithm, we overcome limitations of traditional learning rate optimization methods, especially in convergence rate and generalization capability, and mitigate BWO’s susceptibility to local optima. The LIBWONN algorithm dynamically constructs an interpolation function that can adaptively adjust the learning rate based on the training performance of the ResNet18 model. This mechanism enhances the robustness of the optimization process and reduces the risk of oscillation and divergence caused by inappropriate learning rate choices during training.
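The general idea of using an interpolation function to refine a candidate can be sketched with generic three-point (quadratic) Lagrange interpolation. This is not the LIBWONN implementation: the function names, the choice of three sample points, and the sample values are hypothetical, and this section does not specify the interpolation order or how LIBWONN selects its points. Here `x` stands for a candidate value such as a learning rate and `y` for its measured loss.

```python
def lagrange_quadratic(points):
    """Return p(x), the degree-2 Lagrange polynomial through three (x, y) points."""
    (x0, y0), (x1, y1), (x2, y2) = points

    def p(x):
        # Each basis polynomial equals 1 at its own node and 0 at the others.
        l0 = (x - x1) * (x - x2) / ((x0 - x1) * (x0 - x2))
        l1 = (x - x0) * (x - x2) / ((x1 - x0) * (x1 - x2))
        l2 = (x - x0) * (x - x1) / ((x2 - x0) * (x2 - x1))
        return y0 * l0 + y1 * l1 + y2 * l2

    return p


def interpolated_minimizer(points):
    """Vertex of the parabola through three points, or None if it has no minimum."""
    (x0, y0), (x1, y1), (x2, y2) = points
    # Leading coefficient of the interpolating parabola.
    a = (
        y0 / ((x0 - x1) * (x0 - x2))
        + y1 / ((x1 - x0) * (x1 - x2))
        + y2 / ((x2 - x0) * (x2 - x1))
    )
    num = (x1**2 - x2**2) * y0 + (x2**2 - x0**2) * y1 + (x0**2 - x1**2) * y2
    den = (x1 - x2) * y0 + (x2 - x0) * y1 + (x0 - x1) * y2
    if a <= 0 or den == 0:
        return None  # flat or concave fit: no interior minimum to propose
    return 0.5 * num / den


# Hypothetical samples of a loss curve y = (x - 2)^2.
samples = [(0.0, 4.0), (1.0, 1.0), (3.0, 1.0)]
fit = lagrange_quadratic(samples)
candidate = interpolated_minimizer(samples)
print(candidate, fit(candidate))
```

In a population-based search, a point proposed this way would typically still be evaluated and kept only if it improves on the existing candidates, since the parabola is only a local model of the loss.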

Compared with traditional fixed learning rate methods and simple adaptive learning rate algorithms, LIBWONN can smoothly and effectively adjust the learning rate of the ResNet18 model, thereby accelerating convergence and improving final performance. This innovative approach provides new insights for training deep learning models that can significantly improve training results and overall model performance when handling complex tasks. Additionally, it offers a new solution for addressing the problem in which metaheuristic optimization algorithms suffer from local optima.

However, this method faces the issue of high computational costs, as each individual requires training and validation loss computation, which may significantly increase the training time when applied to deep neural networks and large-scale datasets. Moreover, LIBWONN may still suffer from local optima; although the pheromone mechanism guides the search, individuals may prematurely converge in complex high-dimensional search spaces, limiting the optimization performance and ultimately affecting the model’s learning capability and generalization ability.

Our current research mainly focuses on low-dimensional problems, but as optimization algorithms become more widely applied in practice, studying high-dimensional problems will become increasingly important. In the future, we plan to carry out more systematic and comprehensive experiments on high-dimensional tasks to evaluate the performance and efficiency of interpolation methods in complex environments, while also exploring potential optimization strategies to reduce computational costs.

Furthermore, considering practical deployment, future work will also explore how LIBWONN can be adapted for real-world applications such as embedded systems and real-time learning scenarios. These environments often demand lightweight models and rapid inference, which necessitates the development of simplified or accelerated versions of LIBWONN. Such efforts will help enhance the algorithm’s applicability in industrial and edge computing settings.

Author Contributions

Conceptualization, P.W.; methodology, H.S.; software, J.H.; validation, C.H. and W.Q.; formal analysis, P.W.; investigation, T.C.; resources, J.G. and C.H.; data curation, J.G.; writing—original draft preparation, P.W. and J.H.; writing—review and editing, P.W. and Z.L.; visualization, P.W. and J.H.; supervision, J.G. and H.S.; project administration, H.S. and M.S.; funding acquisition, P.W. and Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the National Funded Postdoctoral Research Program GZC20241900, Natural Science Foundation Program of Xinjiang Uygur Autonomous Region 2024D01A141, Tianchi Talents Program of Xinjiang Uygur Autonomous Region and Postdoctoral Fund of Xinjiang Uygur Autonomous Region, the Key Laboratory of Remote Sensing Application and Innovation (LRSAI-2025004), Sichuan University students innovation and entrepreneurship training program (S202410621082), and Chengdu University of Information Technology key project of education reform (JYJG2024206).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The source code used in this work can be retrieved from the following GitHub link: https://github.com/HJYJY/LIBWO (accessed on 30 May 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yuan, J.; Zhu, A.; Xu, Q.; Wattanachote, K.; Gong, Y. CTIF-Net: A CNN-transformer iterative fusion network for salient object detection. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 3795–3805.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Xu, W.; Fu, Y.-L.; Zhu, D. ResNet and its application to medical image processing: Research progress and challenges. Comput. Methods Programs Biomed. 2023, 240, 107660. [Google Scholar] [CrossRef] [PubMed]

- Croitoru, F.-A.; Ristea, N.-C.; Ionescu, R.T.; Sebe, N. Learning rate curriculum. Int. J. Comput. Vis. 2024, 132, 1–24. [Google Scholar] [CrossRef]

- Razavi, M.; Mavaddati, S.; Koohi, H. ResNet deep models and transfer learning technique for classification and quality detection of rice cultivars. Expert Syst. Appl. 2024, 247, 123276. [Google Scholar] [CrossRef]

- Peña-Delgado, A.F.; Peraza-Vázquez, H.; Almazán-Covarrubias, J.H.; Cruz, N.T.; García-Vite, P.M.; Morales-Cepeda, A.B.; Ramirez-Arredondo, J.M. A Novel bio-inspired algorithm applied to selective harmonic elimination in a three-phase eleven-level inverter. Math. Probl. Eng. 2020, 2020, 8856040. [Google Scholar] [CrossRef]

- Sauer, T.; Xu, Y. On multivariate Lagrange interpolation. Math. Comput. 1995, 64, 1147–1170. [Google Scholar] [CrossRef]

- Ma, Z.; Mao, Z.; Shen, J. Efficient and stable SAV-based methods for gradient flows arising from deep learning. J. Comput. Phys. 2024, 505, 112911. [Google Scholar] [CrossRef]

- Franchini, G.; Porta, F.; Ruggiero, V.; Trombini, I.; Zanni, L. A Stochastic gradient method with variance control and variable learning rate for deep learning. J. Comput. Appl. Math. 2024, 451, 116083. [Google Scholar] [CrossRef]

- Wang, Z.; Zhang, J. Incremental PID controller-based learning rate scheduler for stochastic gradient descent. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 7060–7071. [Google Scholar] [CrossRef]

- Qin, W.; Luo, X.; Zhou, M.C. Adaptively-accelerated parallel stochastic gradient descent for high-dimensional and incomplete data representation learning. IEEE Trans. Big Data 2024, 10, 92–107. [Google Scholar] [CrossRef]

- Shen, L.; Chen, C.; Zou, F.; Jie, Z.; Sun, J.; Liu, W. A unified analysis of AdaGrad with weighted aggregation and momentum acceleration. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 14482–14490. [Google Scholar] [CrossRef] [PubMed]

- Shao, Y.; Yang, J.; Zhou, W.; Sun, H.; Xing, L.; Zhao, Q.; Zhang, L. An improvement of adam based on a cyclic exponential decay learning rate and gradient norm constraints. Electronics 2024, 13, 1778.

- Jia, X.; Feng, X.; Yong, H.; Meng, D. Weight decay with tailored adam on scale-invariant weights for better generalization. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 6936–6947.

- Wilson, A.C.; Roelofs, R.; Stern, M.; Srebro, N.; Recht, B. The marginal value of adaptive gradient methods in machine learning. Proc. Adv. Neural Inf. Process. Syst. 2017, 30, 4149–4159.

- Gharehchopogh, F.S. Quantum-inspired metaheuristic algorithms: Comprehensive survey and classification. Artif. Intell. Rev. 2023, 56, 5479–5543.

- Akgul, A.; Karaca, Y.; Pala, M.A.; Çimen, M.E.; Boz, A.F.; Yildiz, M.Z. Chaos theory, advanced metaheuristic algorithms and their newfangled deep learning architecture optimization applications: A review. Fractals 2024, 32, 2430001.

- Wang, X.; Hu, H.; Liang, Y.; Zhou, L. On the mathematical models and applications of swarm intelligent optimization algorithms. Arch. Comput. Methods Eng. 2022, 29, 3815–3842.

- Li, J.; Yang, S.X. Intelligent fish-inspired foraging of swarm robots with sub-group behaviors based on neurodynamic models. Biomimetics 2024, 9, 16.

- Chen, B.; Cao, L.; Chen, C.; Chen, Y.; Yue, Y. A comprehensive survey on the chicken swarm optimization algorithm and its applications: State-of-the-art and research challenges. Artif. Intell. Rev. 2024, 57, 170.

- Deng, W.; Ma, X.; Qiao, W. A hybrid intelligent optimization algorithm based on a learning strategy. Mathematics 2024, 12, 2570.

- Guan, T.; Wen, T.; Kou, B. Improved lion swarm optimization algorithm to solve the multi-objective rescheduling of hybrid flowshop with limited buffer. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 102077.

- Xu, Y.; Zhang, M.; Yang, M.; Wang, D. Hybrid quantum particle swarm optimization and variable neighborhood search for flexible job-shop scheduling problem. J. Manuf. Syst. 2024, 73, 334–348.

- Zhong, M.; Wen, J.; Ma, J.; Cui, H.; Zhang, Q.; Parizi, M.K. A hierarchical multi-leadership sine cosine algorithm to dissolving global optimization and data classification: The COVID-19 case study. Comput. Biol. Med. 2023, 164, 107212.

- Mohamed, M.T.; Alkhalaf, S.; Senjyu, T.; Mohamed, T.H.; Elnoby, A.M.; Hemeida, A. Sine cosine optimization algorithm combined with balloon effect for adaptive position control of a cart forced by an armature-controlled DC motor. PLoS ONE 2024, 19, e0300645.

- Miao, F.; Wu, Y.; Yan, G.; Si, X. A memory interaction quadratic interpolation whale optimization algorithm based on reverse information correction for high-dimensional feature selection. Appl. Soft Comput. 2024, 164, 111979.

- Wei, P.; Shang, M.; Zhou, J.; Shi, X. Efficient adaptive learning rate for convolutional neural network based on quadratic interpolation egret swarm optimization algorithm. Heliyon 2024, 10, e37814.

- Deng, W.; Wang, J.; Guo, A.; Zhao, H. Quantum differential evolutionary algorithm with quantum-adaptive mutation strategy and population state evaluation framework for high-dimensional problems. Inf. Sci. 2024, 676, 120787.

- Khalid, A.M.; Hosny, K.M.; Mirjalili, S. COVIDOA: A novel evolutionary optimization algorithm based on coronavirus disease replication lifecycle. Neural Comput. Appl. 2022, 34, 22465–22492.

- Salgotra, R.; Singh, U.; Saha, S.; Nagar, A. New Improved SALSHADE-cnEpSin Algorithm with Adaptive Parameters. IEEE Congr. Evol. Comput. (CEC) 2019, 2019, 3150–3156.

- Xiao, Y.; Cui, H.; Abu Khurma, R.; Castillo, P.A. Artificial lemming algorithm: A novel bionic meta-heuristic technique for solving real-world engineering optimization problems. Artif. Intell. Rev. 2025, 58, 84.

- Hu, G.; Cheng, M.; Houssein, E.H.; Hussien, A.G.; Abualigah, L. SDO: A novel sled dog-inspired optimizer for solving engineering problems. Adv. Eng. Inform. 2024, 62, 102783.

- Rao, A.N.; Vijayapriya, P. Salp Swarm Algorithm and Phasor Measurement Unit Based Hybrid Robust Neural Network Model for Online Monitoring of Voltage Stability. Wirel. Netw. 2021, 27, 843–860.

- Benmamoun, Z.; Khlie, K.; Bektemyssova, G.; Dehghani, M.; Gherabi, Y. Bobcat Optimization Algorithm: An effective bio-inspired metaheuristic algorithm for solving supply chain optimization problems. Sci. Rep. 2024, 14, 20099.

- Benmamoun, Z.; Khlie, K.; Dehghani, M.; Gherabi, Y. WOA: Wombat optimization algorithm for solving supply chain optimization problems. Mathematics 2024, 12, 1059.

- Lang, Y.; Gao, Y. Dream Optimization Algorithm (DOA): A novel metaheuristic optimization algorithm inspired by human dreams and its applications to real-world engineering problems. Comput. Methods Appl. Mech. Eng. 2025, 436, 117718.

- Nemati, M.; Zandi, Y.; Sabouri, J. Application of a novel metaheuristic algorithm inspired by connected banking system in truss size and layout optimum design problems and optimization problems. Sci. Rep. 2024, 14, 27345.

- Akhmedova, S.; Stanovov, V. Success-History Based Position Adaptation in Gaining-Sharing Knowledge Based Algorithm. In Proceedings of the Advances in Swarm Intelligence: 12th International Conference, Qingdao, China, 17–21 July 2021; Volume 12, pp. 174–181.

- Beheshti, Z.; Shamsuddin, S.M.H. A review of population-based meta-heuristic algorithms. Int. J. Adv. Soft. Comput. Appl. 2013, 5, 1–35.

- Wang, J.; Liang, Y.; Tang, J.; Wu, Z. Vehicle trajectory reconstruction using lagrange-interpolation-based framework. Appl. Sci. 2024, 14, 1173.

- Wang, R.; Feng, Q.; Ji, J. The discrete convolution for fractional cosine-sine series and its application in convolution equations. AIMS Math. 2024, 9, 2641–2656.

- Taylor, R.L. Newton interpolation. Numer. Anal. 2022, 12, 25–37.

- Smith, M.J. Spline interpolation. J. Comput. Math. 2021, 18, 58–72.

- Thompson, A.P. Quadratic interpolation: Formula, definition, and solved examples. Appl. Math. Comput. 2023, 20, 65–78.

- Acton, F.S. Linear vs. quadratic interpolations example from F.S. Acton ‘Numerical methods that work’. Numer. Methods J. 2020, 9, 101–110.

- Zhang, X. Research on interpolation and data fitting: Basis and applications. Math. Model. Appl. 2022, 35, 125–135.

- Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the 27th International Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Li, Z.; Li, S.; Luo, X. A novel machine learning system for industrial robot arm calibration. IEEE Trans. Circuits Syst. II Express Briefs 2023, 71, 2364–2368.

- Ma, R.; Hwang, K.; Li, M.; Miao, Y. Trusted model aggregation with zero-knowledge proofs in federated learning. IEEE Trans. Parallel Distrib. Syst. 2024, 35, 2284–2296.

- Tissera, M.D.; McDonnell, M.D. Deep extreme learning machines: Supervised autoencoding architecture for classification. Neurocomputing 2016, 174, 42–49.

- Abhishek, A.V.S.; Gurrala, V.R. Improving model performance and removing the class imbalance problem using augmentation. Int. J. Adv. Res. Eng. Technol. (IJARET) 2022, 13, 14–22.

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading digits in natural images with unsupervised feature learning. NIPS Workshop Deep Learn. Unsupervised Feature Learn. 2011, 2011, 4.

- Koklu, M.; Cinar, I.; Taspinar, Y.S. Classification of rice varieties with deep learning methods. Comput. Electron. Agric. 2021, 187, 106285.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. Master’s Thesis, University of Toronto, Toronto, ON, Canada, 2009; pp. 1–60.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).