Abstract

Teleoperation enables robots to perform tasks in dangerous or hard-to-reach environments on behalf of humans, but most methods offer limited operator immersion and little compliance during grasping. To enhance the operator's sense of immersion and achieve more compliant and adaptive grasping, we introduce a novel teleoperation method for dexterous robotic hands that integrates finger-to-finger force and vibrotactile feedback with a Fuzzy Logic-Dynamic Compliant Primitives (FL-DCP) controller. The controller uses fuzzy logic to identify the stiffness of the grasped object. Based on this estimate, it uses Dynamic Compliant Primitives to perform adaptive impedance control of the robotic hand in torque mode. The immersive bilateral teleoperation system continuously transmits real-time force information from the robotic hand back to the operator as finger-to-finger force and vibrotactile feedback, which enhances situational awareness and operational judgment. This bidirectional feedback loop increases the teleoperation success rate, reduces operator fatigue, and improves overall performance. Experimental results show that this bio-inspired method outperforms existing approaches in compliance and adaptability during teleoperated grasping. The method mirrors how humans naturally modulate muscle stiffness when interacting with different objects, combining human-like decision-making with precise robotic control to advance teleoperated systems and enable broader applications in remote environments.

1. Introduction

Shared control is a human-machine interaction technique that combines the strengths of human operators and automated systems to achieve more efficient, safe, and reliable control [1]. In a shared control system, humans and machines do not operate independently but collaborate to accomplish tasks jointly. By appropriately distributing control authority between the human operator and the automated system, shared control exploits both human intelligence and machine capabilities to achieve better performance. It is widely applied in robotics [2], autonomous vehicles [3], teleoperation [4,5,6], and rehabilitation [7,8,9].

With the recent wave of humanoid robot development, teleoperation technology has become increasingly important. First, it enables operation when human and robot are physically separated, so robots can replace humans working or exploring in dangerous, harsh, or hard-to-reach environments. Second, it can provide high-quality demonstration data for imitation learning and deep reinforcement learning [10,11,12]. This gives robots the ability to operate autonomously and accelerates their entry into people's daily lives [13].

Teleoperation technology has been studied extensively and has developed considerably over the past two decades. However, current hand teleoperation technology still has two main problems. The first is limited immersion, caused either by the absence of force feedback or by the lack of precise finger-to-finger force feedback. Some systems use data gloves without force feedback [14,15,16], while others use depth cameras to capture the hand position directly in real time [17,18,19]. Although some systems use haptic devices for teleoperation, they lack finger-to-finger mapping, let alone precise finger-to-finger force feedback [20,21]. Without precise finger-to-finger force feedback, the operator feels less immersed when grasping an object, which reduces the teleoperation success rate. The absence of force feedback also makes the operator prone to premature fatigue, since the operator sometimes needs to hold the hand fixed in one position. These problems make teleoperation harder for novices and lengthen training and adaptation times. The second problem is the lack of compliance during teleoperation. Most dexterous robotic hands and grippers currently grip objects in position control mode, which has the advantage of fast response [22,23,24]. However, even a small deformation of the object after contact can produce excessive gripping force. This may damage the object, cause irreversible plastic deformation, or lead to mechanical failure of the dexterous robotic hand or gripper. This problem can be addressed by controlling the joint torques of the dexterous robotic hand to realize impedance control [25]. However, such methods do not incorporate variable impedance control that is adapted specifically to objects of different stiffness.
This limitation can lead to suboptimal performance with diverse objects, because the system cannot adjust its compliance to match the characteristics of each object. Some existing methods combine machine learning and deep reinforcement learning with variable impedance control [26,27,28,29,30]. However, they are designed for the single end-effector of a robotic arm, which is a lower-dimensional problem. A dexterous hand has five fingertip end-effectors and therefore a much higher-dimensional space, so these methods are difficult to transfer directly to dexterous hands. In addition, it is challenging to couple these methods directly with teleoperation systems and train them there.

To address these problems, this paper proposes a novel compliant hand teleoperation method with finger-to-finger force and vibrotactile feedback based on an FL-DCP controller. The method has two primary objectives: to enhance the operator's sense of immersion during teleoperation, and to achieve more compliant grasping of objects. Providing the operator with more accurate information about the object being grasped at the remote site (force feedback and vibrotactile feedback) can significantly improve immersion and thereby the operator's decision-making. Compliant grasping of objects with varying stiffness protects both the manipulated object and the end-effector. Together, these two aspects increase the operator's teleoperation success rate and reduce operator fatigue. The innovations of this method are as follows:

- In the context of teleoperated hand manipulation, this approach is the first to combine a fuzzy logic module that estimates the stiffness of grasped objects with self-adaptive regulation of the impedance stiffness coefficient based on dynamic compliant primitives. This integration enables adaptive impedance control that closely mimics human muscle behavior, allowing the system to handle objects of different stiffness safely and effectively.

- The approach integrates compliant adaptive grasping methods into the teleoperation framework for hand manipulation, incorporating finger-to-finger force feedback and vibrotactile feedback on the master side. By integrating force and vibrotactile feedback, the operator gains enhanced situational awareness and operational judgment, leading to better operator confidence and reduced cognitive load. Not only does it increase the success rate of teleoperation and reduce operator fatigue, but it also provides a more immersive and realistic interaction experience.

2. Methodology

2.1. Hand Teleoperation Framework Based on Shared Control

The proposed method belongs to the domain of shared control. Teleoperated grasping can be divided into two stages. In the first stage, the object has not yet been touched. In the second stage, the object has been touched and grasping begins. During the first stage, the dexterous robotic hand operates in free space and the human operator has full control authority. During the second stage, the hand operates in constrained space. It identifies the object's stiffness with the FL-DCP controller and generates the desired impedance parameters. Real-time force and vibrotactile feedback to the operator supports decision-making and helps the operator provide better desired joint angles for completing the task.

In addition, the proposed method uses joint torque control mode instead of position control mode to realize compliant grasping. Impedance control computes the joint torques of the dexterous robotic hand from the error between the desired and actual positions. In the first stage, fixed-gain impedance control is used so that the hand responds effectively to the operator's desired position. In the second stage, adaptive impedance control is used so that the hand adapts to objects of different stiffness.

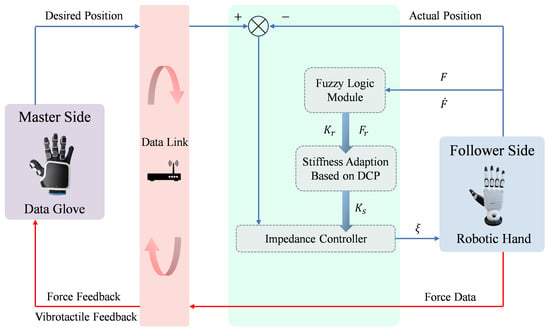

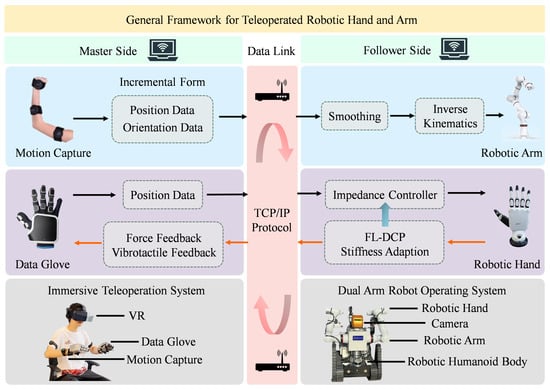

The general framework of the proposed method is depicted in Figure 1. First, the data glove on the master side captures the real-time movement angles of the operator's fingers. The glove is equipped with finger-to-finger force feedback and vibrotactile feedback modules. The captured data are then cleaned and smoothed before being transmitted to the dexterous robotic hand on the follower side. The dexterous robotic hand implements compliant control through impedance control and feedforward control, operating in joint torque control mode rather than position control mode. The hand continuously measures its current position and computes the difference from the desired position. This difference serves as the input to impedance control, which is detailed in Section 2.3.1. When the force between the dexterous robotic hand and the object exceeds 0.15 N, contact is deemed to have occurred. The hand then provides real-time force feedback to the master side and sends vibrotactile signals to notify the operator that contact has been established, and the system transitions into shared control mode. Upon contact, the hand identifies the object's stiffness with the fuzzy logic module described in Section 2.2. Drawing inspiration from Dynamic Movement Primitives (DMP) and from the way humans grasp objects of different stiffness, we propose Dynamic Compliant Primitives (DCP). DCP employ distinct stiffness coefficient profiles tailored to objects of different stiffness levels, as described in Section 2.3. Finally, Section 2.4 details how the fuzzy logic module and the DCP model are integrated to form the FL-DCP controller.

Figure 1.

General framework of hand teleoperation.

2.2. Fuzzy Logic Module

Human hands adjust their grasping stiffness coefficient and grasping force according to the stiffness of the target object, guided by force feedback, tactile feedback, and experience. Fuzzy logic theory offers a powerful and flexible approach to control problems characterized by uncertainty, nonlinearity, and complex interactions [31]. Its ability to emulate human reasoning and adapt to changing conditions makes it attractive for many advanced control applications. Employing a fuzzy logic module to identify and adapt to objects of different stiffness is therefore a logical choice. Compared with other algorithms, fuzzy logic theory offers two key advantages:

- It maintains robust performance during input saturation without requiring an extra anti-windup structure [32].

- It can effectively protect against impulse signals without the need for additional filtering mechanisms.

These features make fuzzy logic theory particularly suitable for applications where reliable and adaptive control is essential [33], such as in robotic grasping tasks.

This paper employs two fuzzy logic controllers. Both take the grasping force $F$ and the grasping force variation rate $\dot{F}$ as inputs. One outputs the reference maximum grasping force $F_{ref}$, and the other outputs the reference maximum grasping stiffness coefficient $K_{ref}$. The force sensor of the dexterous robotic hand measures $F$ in real time, and $\dot{F}$ is calculated as

$$\dot{F} = \frac{\mathrm{d}F(t)}{\mathrm{d}t}$$

where $F(t)$ represents the instantaneous value of the contact force between the object and the dexterous robotic hand.

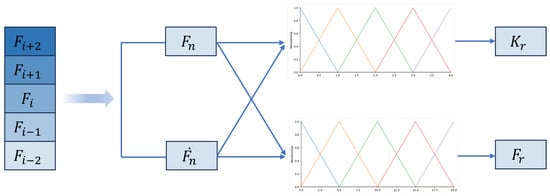

Figure 2 shows the design of the fuzzy logic module, including its inputs and outputs. Both input and output variables use standard triangular membership functions to represent linguistic terms, which gives a structured and intuitive fuzzification and defuzzification process. The fuzzy reasoning rules are listed in Table 1. The input variables $F$ and $\dot{F}$ are each defined by five fuzzy sets: extra small (XS), small (S), medium (M), large (L), and extra large (XL). The outputs of the fuzzy estimation are the reference grasping stiffness coefficient $K_{ref}$ and the reference grasping force $F_{ref}$. They are defined by the same five fuzzy sets: extra small (XS), small (S), medium (M), large (L), and extra large (XL).

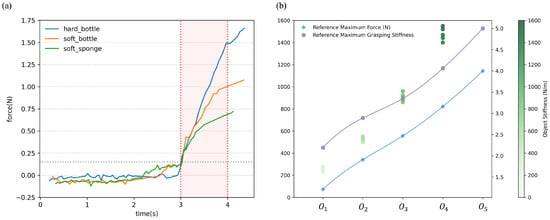

Figure 2.

Inputs and outputs of fuzzy logic module.
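The fuzzification step can be sketched in Python. This minimal sketch assumes that each crisp input is first normalized to [0, 1] over a chosen universe of discourse. It also assumes that the five sets XS to XL are evenly spaced triangles that overlap by half. The spacing and the 0–5 N force range in the usage line are illustrative assumptions, not the values used in Figure 2.

```python
LABELS = ("XS", "S", "M", "L", "XL")
CENTERS = (0.0, 0.25, 0.5, 0.75, 1.0)  # assumed evenly spaced peaks on [0, 1]
HALF_WIDTH = 0.25                      # assumed: neighbouring triangles overlap by half


def tri(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership with left foot a, peak b and right foot c."""
    if x == b:
        return 1.0
    if x <= a or x >= c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fuzzify(value: float, lo: float, hi: float) -> dict[str, float]:
    """Map a crisp input on [lo, hi] to membership degrees of XS..XL."""
    u = min(max((value - lo) / (hi - lo), 0.0), 1.0)  # normalize and saturate
    return {
        label: tri(u, c - HALF_WIDTH, c, c + HALF_WIDTH)
        for label, c in zip(LABELS, CENTERS)
    }


if __name__ == "__main__":
    # Hypothetical universe of discourse: grasping force F on 0-5 N.
    print(fuzzify(1.5, 0.0, 5.0))  # partly S (0.8), partly M (about 0.2)
```

With this half-overlap layout, the membership degrees of any input sum to one. This keeps the later defuzzification well conditioned.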

Table 1.

Fuzzy reasoning rules.

Fuzzy inference uses the standard Mamdani inference engine, and a crisp output is obtained with the centroid method:

$$y = \frac{\sum_{l=1}^{M} \bar{y}^{l}\left[\mu_{A^{l}}(k_{F}F) * \mu_{B^{l}}(k_{\dot{F}}\dot{F})\right]}{\sum_{l=1}^{M}\left[\mu_{A^{l}}(k_{F}F) * \mu_{B^{l}}(k_{\dot{F}}\dot{F})\right]}$$

Here, $y$ is the crisp output ($F_{ref}$ or $K_{ref}$), $\bar{y}^{l}$ denotes the centroid of the consequent set of the $l$-th activated rule, and $M$ is the total number of activated rules. $A^{l}$ and $B^{l}$ denote the two input fuzzy sets of that rule. $\mu_{A^{l}}$ and $\mu_{B^{l}}$ are the corresponding membership functions for the inputs $F$ and $\dot{F}$. The parameters $k_{F}$ and $k_{\dot{F}}$ are the coefficients of the two inputs, and the symbol $*$ denotes the t-norm operator used to combine the membership values.
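The following Python sketch shows one controller of the fuzzy logic module. Each fired rule $l$ gets a firing strength $w_l$ from the t-norm of its two input memberships. The crisp output is the centroid-weighted average $y = \sum_l \bar{y}^{l} w_l / \sum_l w_l$. The sketch rests on several assumptions:

- The t-norm $*$ is the min operator.
- The triangular sets are evenly spaced.
- The input coefficients are $k_F = k_{\dot F} = 1$.
- The input ranges are illustrative.

Table 1 is not reproduced here, so the rule base `RULES` is a hypothetical placeholder whose consequent grows with both inputs. Only the output ranges (1–4 N for $F_{ref}$, 1–5 for $K_{ref}$) come from Section 3.1.

```python
CENTERS = (0.0, 0.25, 0.5, 0.75, 1.0)  # assumed peaks of XS, S, M, L, XL on [0, 1]

# Hypothetical placeholder for Table 1: RULES[i][j] is the index (0=XS .. 4=XL)
# of the consequent set when F is in set i and dF/dt is in set j.
RULES = [[(i + j + 1) // 2 for j in range(5)] for i in range(5)]


def tri(x, a, b, c):
    """Triangular membership with left foot a, peak b and right foot c."""
    if x == b:
        return 1.0
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x < b else (c - x) / (c - b)


def memberships(value, lo, hi):
    """Membership degrees of XS..XL for a crisp input on [lo, hi]."""
    u = min(max((value - lo) / (hi - lo), 0.0), 1.0)
    return [tri(u, c - 0.25, c, c + 0.25) for c in CENTERS]


def infer(F, dF, out_lo, out_hi, F_range=(0.0, 5.0), dF_range=(0.0, 10.0),
          k_F=1.0, k_dF=1.0):
    """Crisp output y = sum(y_bar_l * w_l) / sum(w_l) over all fired rules."""
    mu_F = memberships(k_F * F, *F_range)
    mu_dF = memberships(k_dF * dF, *dF_range)
    # Peaks of the symmetric triangular output sets, used as their centroids.
    y_bar = [out_lo + c * (out_hi - out_lo) for c in CENTERS]
    num = den = 0.0
    for i in range(5):
        for j in range(5):
            w = min(mu_F[i], mu_dF[j])  # firing strength, t-norm = min
            if w > 0.0:
                num += y_bar[RULES[i][j]] * w
                den += w
    return num / den if den > 0.0 else out_lo


if __name__ == "__main__":
    F, dF = 1.0, 2.0                   # hypothetical sensor readings
    print(infer(F, dF, 1.0, 4.0))      # reference maximum grasping force F_ref
    print(infer(F, dF, 1.0, 5.0))      # reference maximum stiffness K_ref
```

Both outputs share one placeholder rule base here only for brevity. The paper uses two separate controllers, and each has its own rules.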

2.3. Adaptive Impedance Control Based on Dynamic Compliant Primitives

2.3.1. Impedance Control

The dynamics of a rigid body can be represented by the following equation [34,35]:

$$M(q)\ddot{q} + C(q,\dot{q})\dot{q} + G(q) = \boldsymbol{\tau} + \boldsymbol{\tau}_{ext}$$

Here, $q$ and $\dot{q}$ denote the joint angles and velocities, respectively. The variable $x$ stands for the position of the end-effector. $M(q)$, $C(q,\dot{q})\dot{q}$, and $G(q)$ represent the inertia, the Coriolis and centrifugal forces, and the gravitational force, respectively. $\boldsymbol{\tau}$ signifies the control input, while $\boldsymbol{\tau}_{ext}$ denotes any external forces acting on the robotic hand, whether from the environment or human interaction.

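In accordance with the principles of human muscle motor learning, the control input can be decomposed into two distinct components:

$$\boldsymbol{\tau} = \boldsymbol{\tau}_{imp} + \boldsymbol{\tau}_{ff}$$

In this context, $\boldsymbol{\tau}_{imp}$ represents the impedance component and $\boldsymbol{\tau}_{ff}$ represents the feedforward component.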

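The impedance component is typically defined as:

$$\boldsymbol{\tau}_{imp} = K e + D \dot{e}$$

with

$$e = q_{d} - q, \qquad \dot{e} = \dot{q}_{d} - \dot{q}$$

Here, $K$ and $D$ represent the stiffness coefficient and the damping parameter of impedance control, respectively. $e$ and $\dot{e}$ represent the position and velocity errors, respectively. $q_{d}$ and $\dot{q}_{d}$ represent the desired position and the desired velocity, respectively.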
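The control input $\boldsymbol{\tau} = \boldsymbol{\tau}_{imp} + \boldsymbol{\tau}_{ff}$, with $\boldsymbol{\tau}_{imp} = Ke + D\dot{e}$, can be sketched per joint in NumPy. This minimal sketch assumes diagonal (per-joint) stiffness and damping gains stored as vectors. The gains in the usage line are illustrative, not the values used on the robotic hand.

```python
import numpy as np


def control_torque(q, dq, q_d, dq_d, K, D, tau_ff=None):
    """Joint torque = impedance component + feedforward component.

    All arguments are per-joint arrays; K and D hold diagonal gains.
    """
    q, dq, q_d, dq_d = (np.asarray(v, dtype=float) for v in (q, dq, q_d, dq_d))
    e = q_d - q        # position error
    de = dq_d - dq     # velocity error
    tau_imp = np.asarray(K, dtype=float) * e + np.asarray(D, dtype=float) * de
    if tau_ff is None:
        tau_ff = np.zeros_like(tau_imp)
    return tau_imp + np.asarray(tau_ff, dtype=float)


if __name__ == "__main__":
    tau = control_torque(q=[0.0, 0.0], dq=[0.0, 0.0],
                         q_d=[0.1, 0.2], dq_d=[0.0, 0.0],
                         K=[2.0, 2.0], D=[0.1, 0.1])
    print(tau)  # [0.2 0.4]
```

Lowering `K` makes the hand yield more to the same position error. This is the knob that the adaptive stage tunes per object.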

Humans exhibit variable stiffness behavior when grasping objects [36]. This characteristic is crucial for delicate and complex tasks, such as handling highly deformable objects or performing precise operations. When humans grasp objects of different shapes, sizes, and textures, their hand muscles adjust their stiffness to suit the requirements of the grip. Teleoperated grasping with the dexterous robotic hand is therefore divided into two stages. Fixed-gain impedance control is used in the first stage, before the object is touched. Adaptive impedance control is used in the second stage, once the object is touched and grasping begins.
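The two-stage switching can be sketched as a small scheduler. It returns the fixed gain before contact and the adaptive gain afterwards, using the 0.15 N contact threshold from Section 2.1. The scheduler latches the contact flag, so it never returns to the first stage within a grasp. This latching is an assumption, because the release behavior is not specified.

```python
from dataclasses import dataclass


@dataclass
class StageScheduler:
    """Selects the impedance stiffness for the current grasp stage."""
    K_fixed: float               # fixed gain used in free space (stage 1)
    threshold: float = 0.15      # contact threshold in newtons
    in_contact: bool = False     # latched once contact is detected

    def stiffness(self, force: float, K_adaptive: float) -> float:
        if not self.in_contact and force > self.threshold:
            self.in_contact = True  # stage 1 -> stage 2 (shared control)
        return K_adaptive if self.in_contact else self.K_fixed


if __name__ == "__main__":
    sched = StageScheduler(K_fixed=3.0)
    for f in (0.05, 0.10, 0.20, 0.30):
        print(f, sched.stiffness(f, K_adaptive=1.5))
```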

2.3.2. The Definition and Derivation of Dynamic Compliant Primitives

Dynamic Movement Primitives (DMP) are widely used to learn robot trajectories from demonstrations and to generalize them [37]. Humans adapt their stiffness during grasp interactions, and robots should likewise be able to learn and generalize such human-like skills. Stiffness coefficient adaptation refers to dynamically adjusting the stiffness coefficient during grasping according to the stiffness of the object. Human hands use different stiffness settings for objects of different stiffness, but these adjustments follow a common trend. This regularity provides a foundation for grasping algorithms that let a dexterous robotic hand adapt automatically to objects of different stiffness. Achieving this requires a suitable representation of stiffness coefficient profiles. To this end, we extend the DMP framework to the Dynamic Compliant Primitives (DCP) framework for stiffness coefficient adaptation. Just as DMP learns and generalizes trajectories, DCP learns and generalizes stiffness coefficient profiles. The definition and derivation of DCP are presented as follows.

Like DMP models, DCP models fall into two types according to the nature of the task: discrete and rhythmic [38,39]. This paper uses the discrete type. The DCP model is formulated as a spring-damper system plus a nonlinear forcing term, derived from a standard dynamical system:

$$\tau \dot{z} = \alpha_{z}\left(\beta_{z}(g - x) - z\right) + f(s)$$

$$\tau \dot{x} = z$$

Here, $x$ denotes the stiffness coefficient profile, and $z$ is its scaled rate of change. $x_{0}$ and $g$ are the starting and target stiffness coefficient values, respectively. The positive constants $\alpha_{z}$ and $\beta_{z}$ set the stiffness ($\alpha_{z}\beta_{z}$) and damping ($\alpha_{z}$) of the spring-damper system. The positive constant $\tau$ is the speed modulation parameter, and adjusting it changes the time required to complete a task. The nonlinear function $f(s)$ is modeled as a linear combination of radial basis functions, which captures complex behavior in a structured manner. The variable $s$ is the state of a first-order dynamical system with the same speed modulation parameter $\tau$, governed by

$$\tau \dot{s} = -\alpha_{s} s$$

where $\alpha_{s} > 0$ is a predetermined constant and $s(0) = 1$.

From any positive initial value, $s$ converges monotonically to 0 as time approaches infinity, so it can be regarded as a phase variable. Starting from $s(0) = 1$, it decreases monotonically throughout its entire domain. As $s$ approaches 0, the forcing term $f(s)$ vanishes, and $x$ converges to the target stiffness coefficient $g$. Because the DCP model is driven by the phase $s$ rather than explicitly by time, it adapts easily to different scenarios without changing the shape of the stiffness coefficient profile. The two parameters $\tau$ and $\alpha_{s}$ determine the convergence rate of the system.
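As a quick numerical check of the canonical system, the Python sketch below integrates $\tau\dot{s} = -\alpha_s s$ with forward Euler. It compares the result with the closed-form solution $s(t) = \exp(-\alpha_s t/\tau)$. The values $\alpha_s = 4$, $\tau = 1$, and the step size are illustrative assumptions.

```python
import math


def canonical_phase(alpha_s: float, tau: float, dt: float, T: float, s0: float = 1.0):
    """Forward-Euler integration of tau * ds/dt = -alpha_s * s over [0, T]."""
    s = s0
    phase = [s]
    for _ in range(int(round(T / dt))):
        s += dt * (-alpha_s * s / tau)
        phase.append(s)
    return phase


if __name__ == "__main__":
    s = canonical_phase(alpha_s=4.0, tau=1.0, dt=1e-3, T=1.0)
    # Monotonically decreasing towards 0; close to exp(-4) at t = 1.
    print(s[-1], math.exp(-4.0))
```

Doubling $\tau$ halves the decay rate, which stretches the whole stiffness profile in time without changing its shape.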

The forcing term is defined by

$$f(s) = \frac{\sum_{i=1}^{N} \psi_{i}(s)\, w_{i}}{\sum_{i=1}^{N} \psi_{i}(s)}\, s\,(g - x_{0})$$

with

$$\psi_{i}(s) = \exp\left(-h_{i}(s - c_{i})^{2}\right)$$

The model uses normalized radial basis functions $\psi_{i}$. Each one is characterized by its center $c_{i}$ and bandwidth $h_{i}$ and has an associated weight $w_{i}$. The number of Gaussian functions $N$, the centers $c_{i}$, and the bandwidths $h_{i}$ are chosen according to the task scenario and the requirements of impedance control.
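The forcing term $f(s) = \frac{\sum_i \psi_i(s) w_i}{\sum_i \psi_i(s)}\, s\,(g - x_0)$, with $\psi_i(s) = \exp(-h_i(s - c_i)^2)$, can be evaluated directly. The NumPy sketch below places the centers evenly in time along the phase ($c_i = \exp(-\alpha_s\, i/(N-1))$), following the common DMP heuristic. It sets each bandwidth from the spacing to the next center. These choices are illustrative, because $c_i$, $h_i$, and $N$ are left task-dependent.

```python
import numpy as np


def make_basis(n: int, alpha_s: float):
    """Centers evenly spaced in time along the phase, widths from center spacing."""
    c = np.exp(-alpha_s * np.linspace(0.0, 1.0, n))
    d = np.diff(c)
    h = 1.0 / np.append(d, d[-1]) ** 2
    return c, h


def forcing_term(s: float, w, c, h, x0: float, g: float) -> float:
    """f(s) = sum(psi_i * w_i) / sum(psi_i) * s * (g - x0)."""
    psi = np.exp(-h * (s - c) ** 2)
    den = psi.sum()
    if den == 0.0:          # far outside all basis functions: no forcing
        return 0.0
    return float(psi @ np.asarray(w, dtype=float) / den * s * (g - x0))


if __name__ == "__main__":
    c, h = make_basis(10, alpha_s=4.0)
    print(forcing_term(0.5, np.ones(10), c, h, x0=1.0, g=5.0))  # about 2.0
```

The factor $s(g - x_0)$ makes the forcing vanish at the end of the motion. It also rescales the learned shape when the start or target stiffness changes.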

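Given a single stiffness coefficient profile demonstration $x_{demo}(t)$, with derivatives $\dot{x}_{demo}(t)$ and $\ddot{x}_{demo}(t)$, the target nonlinear function can be represented as follows:

$$f_{target}(t) = \tau^{2}\ddot{x}_{demo}(t) - \alpha_{z}\left(\beta_{z}\left(g - x_{demo}(t)\right) - \tau\dot{x}_{demo}(t)\right)$$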

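Then, the weights $w_{i}$ of DCP can be calculated using the LWR (locally weighted regression) method by minimizing, for each basis function, the cost function:

$$J_{i} = \sum_{t=1}^{P} \psi_{i}(t)\left(f_{target}(t) - w_{i}\,\xi(t)\right)^{2}, \qquad \xi(t) = s(t)\,(g - x_{0})$$

where $P$ is the number of samples in the demonstration.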
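The learning and reproduction steps can be sketched end to end in NumPy. Learning computes $f_{target}$ from a demonstration. It then sets each weight to the closed-form minimizer of $J_i = \sum_t \psi_i(t)(f_{target}(t) - w_i\xi(t))^2$, namely $w_i = \sum_t \psi_i \xi f_{target} / \sum_t \psi_i \xi^2$, with $\xi = s(g - x_0)$. Reproduction integrates the DCP toward a new target $g$. The sketch assumes:

- Common DMP defaults: $\alpha_z = 25$, $\beta_z = \alpha_z/4$ (critical damping), $\alpha_s = 4$, $N = 30$.
- Forward-Euler integration.
- A Sigmoid-shaped demonstration from about 1 to about 5.

None of these values is taken from the paper.

```python
import numpy as np

ALPHA_Z, BETA_Z, ALPHA_S, N_BASIS = 25.0, 25.0 / 4.0, 4.0, 30  # assumed defaults

# Basis centers evenly spaced in time along the phase; widths from the spacing.
C = np.exp(-ALPHA_S * np.linspace(0.0, 1.0, N_BASIS))
_d = np.diff(C)
H = 1.0 / np.append(_d, _d[-1]) ** 2


def psi(s):
    """Gaussian basis activations, shape (len(s), N_BASIS)."""
    return np.exp(-H * (np.atleast_1d(s)[:, None] - C) ** 2)


def learn_weights(x_demo, dt, tau=1.0):
    """Fit DCP weights to one demonstrated stiffness profile with LWR."""
    t = np.arange(len(x_demo)) * dt
    s = np.exp(-ALPHA_S * t / tau)          # canonical phase along the demo
    dx = np.gradient(x_demo, dt)
    ddx = np.gradient(dx, dt)
    x0, g = x_demo[0], x_demo[-1]
    f_target = tau**2 * ddx - ALPHA_Z * (BETA_Z * (g - x_demo) - tau * dx)
    xi = s * (g - x0)
    P = psi(s)
    # Closed-form minimizer of J_i for every basis function i.
    num = (P * (xi * f_target)[:, None]).sum(axis=0)
    den = (P * (xi**2)[:, None]).sum(axis=0) + 1e-10
    return num / den


def reproduce(w, x0, g, dt, steps, tau=1.0):
    """Integrate the DCP with forward Euler for a start x0 and target g."""
    x, z, s = x0, 0.0, 1.0
    out = [x]
    for _ in range(steps - 1):
        p = psi(s)[0]
        f = p @ w / (p.sum() + 1e-10) * s * (g - x0)
        dz = (ALPHA_Z * (BETA_Z * (g - x) - z) + f) / tau
        x += z / tau * dt                   # explicit Euler: use the current z
        z += dz * dt
        s += -ALPHA_S * s / tau * dt
        out.append(x)
    return np.array(out)


if __name__ == "__main__":
    dt = 1e-3
    t = np.linspace(0.0, 1.0, 1001)
    demo = 1.0 + 4.0 / (1.0 + np.exp(-12.0 * (t - 0.5)))  # Sigmoid demonstration
    w = learn_weights(demo, dt)
    same = reproduce(w, demo[0], demo[-1], dt, len(demo))
    new_goal = reproduce(w, demo[0], 3.0, dt, 2001)        # generalize to g = 3
    print(np.max(np.abs(same - demo)), new_goal[-1])
```

Reproducing with the demonstration's own endpoints recovers the demonstrated profile. Changing `g` keeps the shape but ends at the new target stiffness, which is the behavior illustrated by the reproduced contours.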

The DCP model learns from a concrete example how the stiffness coefficient varies with its independent variable, and in doing so captures and encodes the key features of this process. Once the model is established, it can generate appropriately adjusted stiffness coefficient profiles for new target objects with different stiffness properties. This approach preserves the fundamental behavior embodied in the original demonstration while adapting to unknown environmental conditions.

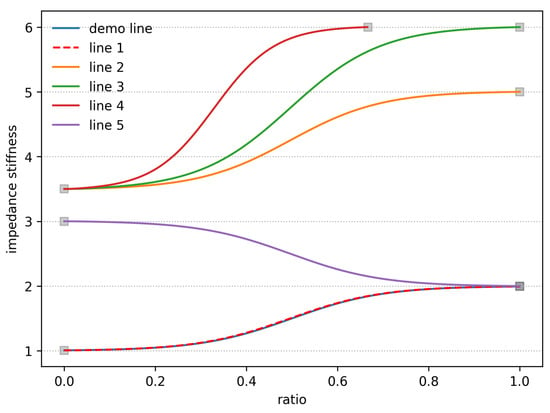

Figure 3 shows simulation results of the DCP model reproducing stiffness coefficient profiles under varied initial and final conditions. Once a stiffness coefficient profile has been learned, the system reproduces contours of similar shape in subsequent trials. Lines 1 to 5 in Figure 3 are the reproduced contours. Sometimes the starting point stays fixed while the endpoint varies. Sometimes both the starting and ending points differ from the demonstration. In both cases, the system generates a stiffness coefficient profile that closely resembles the demonstrated contour. This illustrates the versatility and reliability of DCP for compliant and precise grasping.

Figure 3.

Adaptive reproduction of stiffness coefficient profiles under varied initial and final conditions.

2.4. Fuzzy Logic-Dynamic Compliant Primitives Controller

To achieve variable stiffness coefficient control that closely emulates the adaptability of the human hand during grasping, we propose the FL-DCP control framework. It dynamically adjusts the stiffness coefficient of the robotic hand in response to object properties and interaction forces, ensuring compliance in manipulation tasks.

In the first phase, the control strategy learns a stiffness coefficient profile that transitions smoothly from a fixed initial stiffness coefficient $K_{0}$ to a fixed final stiffness coefficient $K_{f}$. The transition must be continuous and differentiable to avoid abrupt changes that could lead to instability, so we generate it with the Sigmoid function [40]. The Sigmoid function interpolates smoothly between the start and end points of the profile. This gives a natural progression that mimics how human muscles adapt to the objects being manipulated. During learning, the system captures the details of the desired stiffness coefficient variation, which can later be reproduced with high fidelity. The learned stiffness coefficient profile then serves as a template that guides the robotic hand's response in different grasping scenarios.
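The Sigmoid-shaped demonstration profile can be sketched in NumPy. The sketch assumes the logistic form $K(t) = K_0 + (K_f - K_0)/(1 + e^{-k(t/T - 1/2)})$, centered at mid-grasp. The slope $k$, duration $T$, and gain values are illustrative assumptions, because the exact Sigmoid parameters are not given.

```python
import numpy as np


def sigmoid_profile(K0: float, Kf: float, T: float = 1.0, n: int = 1001, k: float = 12.0):
    """Smooth, differentiable stiffness transition from about K0 to about Kf over T s."""
    t = np.linspace(0.0, T, n)
    K = K0 + (Kf - K0) / (1.0 + np.exp(-k * (t / T - 0.5)))
    return t, K


if __name__ == "__main__":
    t, K = sigmoid_profile(K0=1.0, Kf=5.0)
    print(K[0], K[len(K) // 2], K[-1])  # about 1.01, 3.0, 4.99
```

A larger `k` gives a sharper transition. The endpoints approach $K_0$ and $K_f$ asymptotically, so the learned DCP target is taken as the last sample of the profile.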

Once the stiffness coefficient profile has been learned, the system enters the reproduction phase. Here, the fuzzy logic module provides reference values for the grasping stiffness coefficient $K_{ref}$ and the grasping force $F_{ref}$, which are critical to achieving the intended level of compliance. The reproduced profile starts at the same fixed initial stiffness coefficient $K_{0}$. However, it now transitions to the reference grasping stiffness coefficient $K_{ref}$ rather than the fixed final stiffness coefficient $K_{f}$. This adjustment lets the system respond flexibly to the requirements of each grasping task. Because the final stiffness coefficient is set to the $K_{ref}$ estimated by the fuzzy logic module, the controller accommodates the properties of different objects.

Central to the DCP model is the input variable $R$, the ratio of the current contact force to the reference grasping force $F_{ref}$:

$$R = \frac{\bar{F}}{F_{ref}}$$

Here, $\bar{F}$ is defined as:

$$\bar{F} = \min\left(F, F_{ref}\right)$$

This ensures that the applied force does not exceed the reference value, which prevents potential damage to either the object or the robotic hand. The output of the DCP model is the adapted stiffness coefficient $K$, which plays a pivotal role in impedance control. $K$ is adjusted continuously according to $R$, allowing the robotic hand to maintain adequate compliance while interacting with various objects.
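One simple reading of the reproduction step is sketched below. The saturated ratio $R = \min(F, F_{ref})/F_{ref}$ acts as the phase, and a normalized Sigmoid shape is rescaled from the initial stiffness $K_0$ to the reference stiffness $K_{ref}$. This replaces the full DCP rollout with its simplest generalization, a linear rescaling of a learned shape. It is an illustrative assumption, not the authors' implementation, and the Sigmoid slope `k` is hypothetical.

```python
import math


def contact_ratio(F: float, F_ref: float) -> float:
    """R = min(F, F_ref) / F_ref, clipped to [0, 1]."""
    return min(max(F, 0.0), F_ref) / F_ref


def _sig(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def shape(phi: float, k: float = 12.0) -> float:
    """Sigmoid shape normalized so that shape(0) = 0 and shape(1) = 1."""
    lo, hi = _sig(-k / 2.0), _sig(k / 2.0)
    return (_sig(k * (phi - 0.5)) - lo) / (hi - lo)


def adapted_stiffness(F: float, F_ref: float, K0: float, K_ref: float) -> float:
    """Stiffness moves from K0 toward K_ref as the contact force approaches F_ref."""
    return K0 + (K_ref - K0) * shape(contact_ratio(F, F_ref))


if __name__ == "__main__":
    # Hypothetical soft object: fuzzy module returned F_ref = 1.5 N, K_ref = 1.0.
    for F in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(F, adapted_stiffness(F, F_ref=1.5, K0=3.0, K_ref=1.0))
```

Because $R$ saturates at 1, the stiffness stops changing once the contact force reaches $F_{ref}$. This works whether $K_{ref}$ is above or below $K_0$.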

By integrating these components, the FL-DCP control framework performs compliant and adaptive grasping with accuracy and responsiveness, closely mirroring the capabilities of the human hand.

3. Experimental Evaluation

To verify the effectiveness of the proposed method, three hand teleoperation experiments are carried out. The first experiment validates the object stiffness identification method based on the fuzzy logic module. The second demonstrates that the adaptive impedance control method grasps more compliantly than alternative approaches. The third demonstrates that integrating this adaptive impedance control method with hand teleoperation increases the teleoperation success rate and reduces operator fatigue. In all experiments, the master-side device is a Senseglove Nova 2, which is equipped with a force feedback module and a vibrotactile feedback module. The follower-side dexterous robotic hand is an Inspire Hand, which is equipped with force and position sensors.

The teleoperation setup is as follows. On the master side, the Senseglove Nova 2 is connected to a Windows computer via Bluetooth and captures the operator's finger angles in real time. The data are processed with a sliding-window mean filter and a scale transformation, then transmitted over a local network using the TCP/IP protocol. On the follower side, the Inspire Hand is connected to a Linux computer. It receives the desired angle data via TCP/IP and sends force feedback data back in real time. When the data glove receives force data from the dexterous robotic hand, it applies this force feedback directly to the operator's hand, providing a realistic sense of touch. If the detected force exceeds 0.15 N, the data glove also activates its vibrotactile feedback module. The vibration immediately notifies the operator that the dexterous robotic hand has come into contact with the object.
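The master-side preprocessing can be sketched as a sliding-window mean filter followed by a linear scale transformation, plus the 0.15 N vibrotactile trigger. The window length and the angle and command ranges (0–90° in, 0–1000 out) are hypothetical placeholders, because the actual glove and hand ranges are not given here.

```python
from collections import deque


class AnglePreprocessor:
    """Sliding-window mean filter followed by a linear scale transformation."""

    def __init__(self, window=5, in_range=(0.0, 90.0), out_range=(0.0, 1000.0)):
        self.buf = deque(maxlen=window)  # keeps only the last `window` samples
        self.in_range = in_range
        self.out_range = out_range

    def update(self, angle: float) -> float:
        self.buf.append(angle)
        mean = sum(self.buf) / len(self.buf)
        (a0, a1), (b0, b1) = self.in_range, self.out_range
        u = min(max((mean - a0) / (a1 - a0), 0.0), 1.0)  # normalize and saturate
        return b0 + u * (b1 - b0)


def vibrotactile_on(force: float, threshold: float = 0.15) -> bool:
    """Vibration cue when the follower-side force exceeds the contact threshold."""
    return force > threshold


if __name__ == "__main__":
    pre = AnglePreprocessor(window=3)
    print([round(pre.update(a), 1) for a in (0.0, 90.0, 90.0, 90.0)])
    print(vibrotactile_on(0.2), vibrotactile_on(0.1))
```

The filter adds a lag of roughly half the window, so the window length trades smoothness of the commanded angles against responsiveness.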

3.1. Object Stiffness Identification

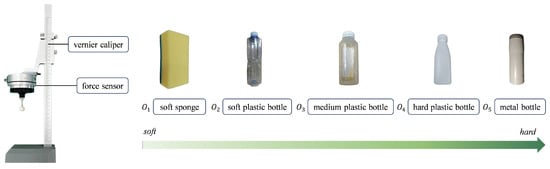

To validate the efficacy of the object stiffness identification method based on the fuzzy logic module, five common objects with different stiffness levels were chosen for the experiment. The objects, ordered from highest to lowest stiffness, are: hard metal bottle, hard plastic bottle, medium plastic bottle, soft plastic bottle, and soft sponge.

First, we used the apparatus shown in Figure 4 to measure the actual stiffness of the objects by quantifying each object's deformation under an applied force. A vernier caliper measures the deformation precisely, and a force sensor records the applied force. The measured stiffness values are shown in the scatter plot in Figure 5b, and each object was tested five times. The five objects can be categorized as very hard, relatively hard, medium, relatively soft, and very soft. Very hard objects are assumed not to deform, so their stiffness is treated as infinitely large. The very hard object is too stiff to measure precisely, so its data points are omitted from Figure 5b. This also improves the readability of the graph and the visual separation of the other objects' stiffness. In addition, objects with higher stiffness exhibit larger variance in their measured stiffness values.

Figure 4.

The experimental apparatus of the object stiffness identification.

Figure 5.

(a) The force data collected during the object stiffness identification experiment. (b) The result of the object stiffness identification experiment.
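The stiffness measurement described above can be sketched as force divided by deformation, summarized over repeated trials. The units (N and mm) and the sample readings are hypothetical. A zero deformation maps to infinite stiffness, following the assumption made for very hard objects.

```python
import math
import statistics


def stiffness_n_per_mm(force_n: float, deformation_mm: float) -> float:
    """k = F / delta; an undeformed object is treated as infinitely stiff."""
    if deformation_mm <= 0.0:
        return math.inf
    return force_n / deformation_mm


def summarize(trials):
    """Mean and sample standard deviation of stiffness over (force, deformation) trials."""
    ks = [stiffness_n_per_mm(f, d) for f, d in trials]
    return statistics.mean(ks), statistics.stdev(ks)


if __name__ == "__main__":
    # Hypothetical five repeated readings (N, mm) for one soft object.
    readings = [(2.0, 4.1), (2.0, 3.9), (2.0, 4.0), (2.0, 4.2), (2.0, 3.8)]
    print(summarize(readings))
```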

The object stiffness identification procedure is as follows. With the same finger torque setting, the dexterous robotic hand contacts objects of different stiffness levels. The contact position between the objects and the robotic hand is kept as consistent as possible. The results of the stiffness identification are then recorded and analyzed.

The force variation experienced by the index finger of the dexterous robotic hand during the contact with the object is illustrated in Figure 5a. When the force exceeds 0.15 N, it is determined that the dexterous robotic hand has initiated contact with the object, and the stiffness identification process begins. The data collection for the stiffness identification process continues for 1 s, as indicated by the red region in Figure 5a. During this period, the system gathers force data to identify the stiffness of the object. After collecting the force information, the rate of variation of the force is computed. The force and its rate of variation are then used as inputs to the fuzzy logic module.
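The data-collection step can be sketched as follows: find the first sample above 0.15 N, keep the following 1 s of force samples, and compute $\dot{F}$ by finite differences. The text does not state how the window is reduced to the two crisp fuzzy inputs. Using the peak force and the mean rate, as below, is an assumption.

```python
def identification_inputs(forces, dt, threshold=0.15, window_s=1.0):
    """Return (F, dF) for the fuzzy module, or None if contact never occurs.

    forces: force samples in newtons at a fixed sampling period dt (seconds).
    """
    start = next((i for i, f in enumerate(forces) if f > threshold), None)
    if start is None:
        return None
    n = int(round(window_s / dt))
    seg = forces[start:start + n + 1]        # samples inside the 1 s window
    if len(seg) < 2:
        return None
    rates = [(b - a) / dt for a, b in zip(seg, seg[1:])]  # finite-difference dF/dt
    return max(seg), sum(rates) / len(rates)


if __name__ == "__main__":
    # Hypothetical ramp: no contact for 0.1 s, then force rises at 1 N/s.
    samples = [0.0] * 10 + [0.2 + 0.01 * i for i in range(200)]
    print(identification_inputs(samples, dt=0.01))  # about (1.2, 1.0)
```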

The outputs of the fuzzy logic module are shown as line graphs in Figure 5b. They are the maximum reference force (blue squares) and the maximum reference grasping stiffness coefficient (purple hexagons). Note that the grasping stiffness coefficient here is the stiffness coefficient used in the impedance control law. In this case, the fuzzy logic module outputs the maximum set values of the reference force and the reference grasping stiffness coefficient. The output range is limited to 1–4 N for the maximum reference force and 1–5 for the maximum reference grasping stiffness coefficient. For very hard objects, the fuzzy logic module therefore outputs a maximum reference force of 4 N and a maximum reference grasping stiffness coefficient of 5. Both outputs are higher for stiffer objects and lower for softer objects, and their trends closely follow the measured stiffness of the objects. This result supports the effectiveness of the object stiffness identification method based on the fuzzy logic module.

3.2. Comparison of Different Control Methods for the Dexterous Robotic Hand

To validate the efficacy of the proposed adaptive impedance control, three control methods for the dexterous robotic hand were compared: position control, fixed-gain impedance control, and adaptive impedance control.

The experimental process is as follows: Three different control methods were used to teleoperate the dexterous robotic hand to grasp objects with three different levels of stiffness. The three objects are soft sponge, soft plastic bottle and hard plastic bottle. The contact force was recorded once the grasp stabilized. Each grasping test was repeated 10 times and the mean and standard deviation of contact forces were calculated for analysis.

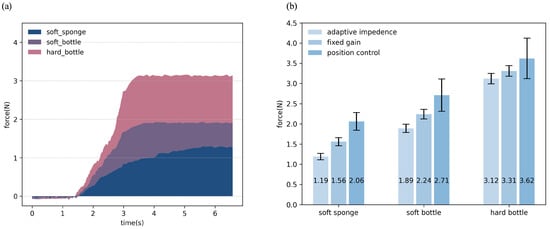

Figure 6a shows the contact force recorded in adaptive impedance mode. As the grasped object changes from soft to hard, the contact force becomes progressively larger throughout the grasp, from the initial grasping phase until it stabilizes, and it also rises faster as object stiffness increases.

The results of the different control methods are shown in Figure 6b and Table 2. For objects of the same stiffness, the contact force varies markedly with the control method. Position control produces the highest contact force, likely because it rigidly tracks the commanded finger position without accounting for the compliance of the object. Fixed-gain impedance control produces a moderate force, indicating that impedance control balances precision and compliance and allows safer interaction with the objects. Adaptive impedance control produces the lowest contact force of the three. This is a key advantage of the proposed method: because it adjusts the stiffness coefficient and force profile to the identified object stiffness, it avoids unnecessary force and handles objects more safely. In addition, the standard deviation of the contact force is much larger under position control than under either impedance mode, because under position control even small changes in the desired position cause large variations in contact force. The two impedance modes have similar standard deviations, indicating comparable stability and consistency.
These results confirm that the proposed adaptive impedance control method grasps objects of different stiffness compliantly.
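The ordering of contact forces and the larger spread under position control can be illustrated with a quasi-static toy model. This is a simplifying assumption, not the controller used in the paper: the object is treated as a linear spring of stiffness k_o, the commanded finger position lies a depth d beyond first contact, and impedance control is reduced to its stiffness term with coefficient K (damping plays no role at steady state). Under ideal position control the finger reaches the commanded depth, so F = k_o·d. Under impedance control, the equilibrium K·(d - x) = k_o·x gives x = K·d / (K + k_o) and hence F = K·k_o·d / (K + k_o), which is always below both k_o·d and K·d. The sensitivity dF/dd equals k_o under position control, so small position errors on a stiff object cause large force changes, whereas under impedance control it is bounded by K.

```python
from typing import Optional


def force_position_control(k_obj: float, depth: float) -> float:
    """Ideal position control: the finger reaches the commanded depth, so the
    object spring alone sets the steady-state contact force."""
    return k_obj * max(depth, 0.0)


def force_impedance_control(k_obj: float, k_imp: float, depth: float) -> float:
    """Quasi-static impedance control: virtual stiffness k_imp acts in series with
    the object stiffness k_obj (damping plays no role at steady state)."""
    k_eff = k_imp * k_obj / (k_imp + k_obj)
    return k_eff * max(depth, 0.0)


def force_sensitivity(k_obj: float, k_imp: Optional[float] = None) -> float:
    """dF/d(depth): k_obj under position control, the series stiffness otherwise."""
    if k_imp is None:
        return k_obj
    return k_imp * k_obj / (k_imp + k_obj)


if __name__ == "__main__":
    # Hypothetical values: a stiff object (20 N/mm), 0.5 mm commanded penetration,
    # and a low virtual stiffness of 2 N/mm.
    k_obj, depth = 20.0, 0.5
    print(force_position_control(k_obj, depth))        # 10.0 (N)
    print(force_impedance_control(k_obj, 2.0, depth))  # about 0.91 (N)
    # Force change caused by a 0.1 mm error in the commanded depth:
    print(0.1 * force_sensitivity(k_obj), 0.1 * force_sensitivity(k_obj, 2.0))
```

In this toy model, raising K for stiffer objects, as the adaptive controller does, pushes the contact force toward K·d, which is consistent with the trend in Figure 6a.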

Figure 6.

(a) Contact force recorded during experiments in adaptive impedance mode. (b) Experimental results of the different control methods for the dexterous robotic hand.

Table 2.

Experimental results of the different control methods for the dexterous robotic hand.

3.3. Robotic Arm and Hand Teleoperation Experiment

To validate the proposed compliant hand teleoperation method, we applied it to real teleoperation scenarios. The general framework for teleoperating the robotic hand and arm is shown in Figure 7. The experimental platform is the immersive humanoid dual-arm dexterous robot previously developed by our laboratory [41]. On the master side, the data glove is a Senseglove Nova 2 and the motion capture system is a Noitom Perception Neuron Pro; on the follower side, the dexterous robotic hand is an Inspire Hand and the robotic arm is a Rokae xMate ER7 Pro. Data communication between the motion capture system and the robotic arm is also carried out via the TCP/IP protocol over a local area network. The motion capture data are split into position and orientation components, which are transmitted in incremental form for motion mapping. After receiving the pose data, the robotic arm computes the corresponding joint angles by inverse kinematics using the RelaxedIK method [42], which avoids kinematic singularities, joint-space discontinuities, and self-collisions while matching the end-effector pose.
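One common way to realize incremental position and orientation mapping is to apply the operator's displacement and rotation since a reference instant to the robot's end-effector pose at that same instant, and to pass the result to the IK solver. The sketch below assumes this reading of "incremental form", assumes that the motion-capture and robot base frames have aligned axes (in practice a fixed calibration transform would be needed), and uses a hypothetical position scale factor. The function names are illustrative, and the RelaxedIK call itself is not shown.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit norm


def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_conj(q: Quat) -> Quat:
    """Conjugate, which equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def q_normalize(q: Quat) -> Quat:
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)  # type: ignore[return-value]


def map_incremental_pose(
    human_p0: Vec3, human_q0: Quat,  # operator pose at the reference instant
    human_p: Vec3, human_q: Quat,    # current operator pose
    robot_p0: Vec3, robot_q0: Quat,  # robot end-effector pose at the reference instant
    scale: float = 1.0,              # hypothetical position scaling factor
) -> Tuple[Vec3, Quat]:
    """Return the end-effector target (position, orientation) to hand to the IK solver."""
    # Position increment, expressed in the (assumed shared) world frame.
    target_p = tuple(r + scale * (h - h0) for r, h, h0 in zip(robot_p0, human_p, human_p0))
    # Orientation increment in the world frame, dq = q * q0^-1, applied to the robot pose.
    dq = q_mul(human_q, q_conj(human_q0))
    target_q = q_normalize(q_mul(dq, robot_q0))
    return target_p, target_q  # type: ignore[return-value]
```

If the operator's hand returns to its reference pose, the target returns to the robot's reference pose, so drift in the absolute motion-capture origin does not accumulate in the mapping.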

Figure 7.

General framework for teleoperated robotic hand and arm.

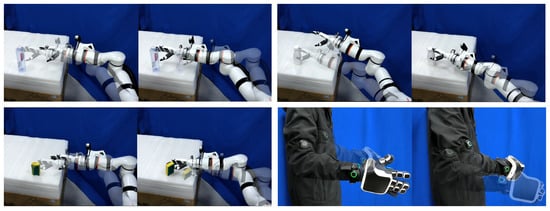

The arm and hand teleoperation scenarios are shown in Figure 8. The experiment consists of two parts. In the first part, the operator uses the teleoperated dexterous robotic hand and robotic arm to grasp an object and hold it suspended until the operator's hand becomes fatigued. The fatigue time is recorded under two conditions, with and without force and vibrotactile feedback; each condition is repeated 10 times, and the mean fatigue time and its standard deviation are calculated. In the second part, the operator teleoperates the robot to grasp objects and place them at another location, and the success rate is evaluated using the five objects of different stiffness from the previous experiment. Testing is performed under four conditions, combining feedback (with or without force and vibrotactile feedback) with control mode (position control or adaptive impedance control). Each object is grasped and placed 5 times, and the success rate is recorded. A grasp is counted as a failure if the object drops or the grasping force exceeds the maximum reference grasping force established in Section 3.1. The success rate is then averaged over all five objects.
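The failure criterion and the averaging described above can be written out as follows. The `Trial` record, its field names, and the per-object force limits are hypothetical; the limits would be the maximum reference grasping forces from Section 3.1. The four conditions are the 2 × 2 combinations of feedback (on or off) and control mode.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List

# The four test conditions: feedback on/off crossed with the two control modes.
CONDITIONS = list(product((False, True), ("position", "adaptive_impedance")))


@dataclass
class Trial:
    dropped: bool        # object fell during the grasp-and-place trial
    peak_force_n: float  # largest grasping force observed in the trial [N]


def is_success(trial: Trial, max_ref_force_n: float) -> bool:
    """A trial fails if the object drops or the force exceeds the maximum reference force."""
    return not trial.dropped and trial.peak_force_n <= max_ref_force_n


def average_success_rate(
    trials_by_object: Dict[str, List[Trial]],
    max_force_by_object: Dict[str, float],
) -> float:
    """Per-object success rate, averaged over all objects, for one condition."""
    rates = []
    for obj, trials in trials_by_object.items():
        limit = max_force_by_object[obj]
        rates.append(sum(is_success(t, limit) for t in trials) / len(trials))
    return sum(rates) / len(rates)
```

Averaging per-object rates weights every object equally; with the same number of trials per object (5 here), this equals the pooled success rate over all trials.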

Figure 8.

The scenarios of arm and hand teleoperation experiment.

The results are shown in Tables 3 and 4. With force and vibrotactile feedback, both the operator's fatigue time and the operational success rate are markedly higher than without feedback, demonstrating the importance of force and vibrotactile feedback in teleoperation. Compared with position control mode, adaptive impedance mode also improves the teleoperation success rate and makes tasks easier for the operator to perform. Among the tested conditions, adaptive impedance mode combined with force and vibrotactile feedback therefore performs best for teleoperated hand manipulation, which further supports the effectiveness of the proposed method.

Table 3.

Results of the teleoperation experiment: fatigue time.

Table 4.

Results of the teleoperation experiment: success rate.

We also measured the communication time over the TCP/IP protocol and the processing time of the control algorithm. Under optimal network conditions, the average TCP/IP transmission latency was 4.56 ms, with a maximum observed latency of 8.58 ms. The average processing time was 31.9 ms when the dexterous hand was controlled directly by position control and 54.6 ms with the proposed method. Although the proposed method takes longer to process than direct position control, the difference in operating experience is small for tasks that do not require high-speed operation. Besides the slower processing, a second limitation is that the dexterous hand responds less immediately under the proposed method than under position control.
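A rough per-command budget follows from the reported means, under the simplifying assumption that transmission and processing add serially for each command and that a new command is issued only after the previous one completes. Actuation delay and feedback rendering on the glove are not included, so these figures are only indicative.

```python
# Values reported in the text, in milliseconds.
TCP_MEAN_MS = 4.56
TCP_MAX_MS = 8.58
PROC_POSITION_MS = 31.9
PROC_PROPOSED_MS = 54.6


def command_latency_ms(transmission_ms: float, processing_ms: float) -> float:
    """Per-command latency, assuming transmission and processing happen one after the other."""
    return transmission_ms + processing_ms


def command_rate_hz(latency_ms: float) -> float:
    """Achievable command rate if each command waits for the previous one (no pipelining)."""
    return 1000.0 / latency_ms


if __name__ == "__main__":
    for name, proc in (("position control", PROC_POSITION_MS), ("proposed method", PROC_PROPOSED_MS)):
        mean_ms = command_latency_ms(TCP_MEAN_MS, proc)
        max_tx_ms = command_latency_ms(TCP_MAX_MS, proc)
        print(f"{name}: {mean_ms:.2f} ms (mean), {max_tx_ms:.2f} ms (max transmission), "
              f"~{command_rate_hz(mean_ms):.1f} Hz")
```

Under these assumptions the proposed method needs about 59 ms per command (roughly 17 Hz) versus about 36 ms (roughly 27 Hz) for direct position control; the 22.7 ms difference comes entirely from processing, since both share the same network path.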

4. Conclusions

In this study, we introduce a compliant hand teleoperation method that significantly enhances the operator's sense of immersion and achieves more compliant grasping of objects. The method integrates finger-to-finger force feedback and vibrotactile feedback based on the FL-DCP controller and leverages the decision-making capabilities of both the human operator and the robot. The dexterous robotic hand performs impedance control in torque mode, enabling precise and adaptive interaction with objects.

A key feature of our approach is the adaptive impedance control implemented by the FL-DCP controller, which identifies the stiffness of the grasped object and adjusts the hand's response accordingly. This mimics the human ability to modulate muscle stiffness when interacting with different objects, leading to more intuitive and effective manipulation during teleoperated tasks, and enables compliant grasping of objects with different stiffness. To further support the operator, real-time force information from the robotic hand is transmitted back to the operator, improving judgment and reducing fatigue during extended teleoperation. This bidirectional loop keeps the operator informed about the interaction forces, contributing to higher success rates and less fatigue in grasping tasks. The method was validated through stiffness identification and practical teleoperation experiments, in which it outperformed position control and fixed-gain impedance control. Overall, this compliant hand teleoperation method advances teleoperated grasping and offers a promising direction for improving human-robot collaboration in remote manipulation tasks.

Future work could proceed in three directions. First, tactile information from the robotic fingers could be incorporated into the system to provide more nuanced feedback, potentially enabling more compliant and sensitive grasping. Second, the impact of latency on the controller could be investigated, together with strategies to mitigate its effect on the operator's experience. Third, machine learning-based controllers could be used to model how humans vary impedance coefficients when grasping different objects, and the learned patterns could then be applied to impedance control.

Author Contributions

Conceptualization, P.H., H.L., X.H. and Z.J.; methodology, P.H.; formal analysis, P.H.; investigation, P.H. and Y.W.; validation, P.H. and Y.W.; writing—original draft preparation, P.H.; writing—review and editing, Z.J., P.H. and X.H.; supervision, Z.J., H.L. and X.H.; project administration, Z.J., H.L. and X.H.; funding acquisition, Z.J., H.L. and X.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Natural Science Foundation of China under Grant U22B2079, Grant 62103054, Grant 62273049, and Grant U2013602; in part by the Beijing Natural Science Foundation under Grant L243011, Grant 4232054, and Grant 4242050; in part by the Foundation of National Key Laboratory of Human Factors Engineering under Grant HFNKL2023WW06; and in part by the Beijing Institute of Technology Research Fund Program for Young Scholars under Grant XSQD-6120220298.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Abbink, D.A.; Carlson, T.; Mulder, M.; De Winter, J.C.; Aminravan, F.; Gibo, T.L.; Boer, E.R. A topology of shared control systems—Finding common ground in diversity. IEEE Trans. Hum.-Mach. Syst. 2018, 48, 509–525.

2. Rakita, D.; Mutlu, B.; Gleicher, M.; Hiatt, L.M. Shared control–based bimanual robot manipulation. Sci. Robot. 2019, 4, eaaw0955.

3. Marcano, M.; Díaz, S.; Pérez, J.; Irigoyen, E. A review of shared control for automated vehicles: Theory and applications. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 475–491.

4. Gottardi, A.; Tortora, S.; Tosello, E.; Menegatti, E. Shared control in robot teleoperation with improved potential fields. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 410–422.

5. Hu, H.; Shi, D.; Yang, C.; Si, W.; Li, Q. A Novel Shared Control Framework Based on Imitation Learning. In Proceedings of the 2024 IEEE International Conference on Industrial Technology (ICIT), Bristol, UK, 25–27 March 2024; pp. 1–6.

6. Luo, J.; Lin, Z.; Li, Y.; Yang, C. A teleoperation framework for mobile robots based on shared control. IEEE Robot. Autom. Lett. 2019, 5, 377–384.

7. Zhuang, K.Z.; Sommer, N.; Mendez, V.; Aryan, S.; Formento, E.; D’Anna, E.; Artoni, F.; Petrini, F.; Granata, G.; Cannaviello, G.; et al. Shared human–robot proportional control of a dexterous myoelectric prosthesis. Nat. Mach. Intell. 2019, 1, 400–411.

8. Wang, F.; Qian, Z.; Lin, Y.; Zhang, W. Design and rapid construction of a cost-effective virtual haptic device. IEEE/ASME Trans. Mechatronics 2020, 26, 66–77.

9. Latreche, A.; Kelaiaia, R.; Chemori, A.; Kerboua, A. A new home-based upper- and lower-limb telerehabilitation platform with experimental validation. Arab. J. Sci. Eng. 2023, 48, 10825–10840.

10. Qin, Y.; Su, H.; Wang, X. From one hand to multiple hands: Imitation learning for dexterous manipulation from single-camera teleoperation. IEEE Robot. Autom. Lett. 2022, 7, 10873–10881.

11. Chen, J.; Wu, Y.; Yao, C.; Huang, X. Robust motion learning for musculoskeletal robots based on a recurrent neural network and muscle synergies. IEEE Trans. Autom. Sci. Eng. 2024, 22, 2405–2420.

12. Huang, X.; Wang, X.; Zhao, Y.; Hu, J.; Li, H.; Jiang, Z. Guided Model-Based Policy Search Method for Fast Motor Learning of Robots With Learned Dynamics. IEEE Trans. Autom. Sci. Eng. 2024, 22, 453–465.

13. Darvish, K.; Penco, L.; Ramos, J.; Cisneros, R.; Pratt, J.; Yoshida, E.; Ivaldi, S.; Pucci, D. Teleoperation of humanoid robots: A survey. IEEE Trans. Robot. 2023, 39, 1706–1727.

14. Kumar, V.; Todorov, E. Mujoco haptix: A virtual reality system for hand manipulation. In Proceedings of the 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), Seoul, Republic of Korea, 3–5 November 2015; pp. 657–663.

15. Fang, B.; Sun, F.; Liu, H.; Liu, C. 3D human gesture capturing and recognition by the IMMU-based data glove. Neurocomputing 2018, 277, 198–207.

16. Kukliński, K.; Fischer, K.; Marhenke, I.; Kirstein, F.; Maria, V.; Sølvason, D.; Krüger, N.; Savarimuthu, T.R. Teleoperation for learning by demonstration: Data glove versus object manipulation for intuitive robot control. In Proceedings of the 2014 6th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), St. Petersburg, Russia, 6–8 October 2014; pp. 346–351.

17. Ficuciello, F.; Migliozzi, A.; Laudante, G.; Falco, P.; Siciliano, B. Vision-based grasp learning of an anthropomorphic hand-arm system in a synergy-based control framework. Sci. Robot. 2019, 4, eaao4900.

18. Qin, Y.; Yang, W.; Huang, B.; Van Wyk, K.; Su, H.; Wang, X.; Chao, Y.W.; Fox, D. Anyteleop: A general vision-based dexterous robot arm-hand teleoperation system. arXiv 2023, arXiv:2307.04577.

19. Handa, A.; Van Wyk, K.; Yang, W.; Liang, J.; Chao, Y.W.; Wan, Q.; Birchfield, S.; Ratliff, N.; Fox, D. Dexpilot: Vision-based teleoperation of dexterous robotic hand-arm system. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9164–9170.

20. He, R.; Zhang, B.; Bi, Z.; Zhang, W. Development of a hybrid haptic device with high degree of motion decoupling. In Proceedings of the 2022 28th International Conference on Mechatronics and Machine Vision in Practice (M2VIP), Nanjing, China, 16–18 November 2022; pp. 1–5.

21. Bong, J.H.; Choi, S.; Hong, J.; Park, S. Force feedback haptic interface for bilateral teleoperation of robot manipulation. Microsyst. Technol. 2022, 28, 2381–2392.

22. Meeker, C.; Rasmussen, T.; Ciocarlie, M. Intuitive hand teleoperation by novice operators using a continuous teleoperation subspace. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 5821–5827.

23. Shintemirov, A.; Taunyazov, T.; Omarali, B.; Nurbayeva, A.; Kim, A.; Bukeyev, A.; Rubagotti, M. An open-source 7-DOF wireless human arm motion-tracking system for use in robotics research. Sensors 2020, 20, 3082.

24. Gao, Q.; Ju, Z.; Chen, Y.; Wang, Q.; Chi, C. An efficient RGB-D hand gesture detection framework for dexterous robot hand-arm teleoperation system. IEEE Trans. Hum.-Mach. Syst. 2022, 53, 13–23.

25. Zeng, C.; Li, S.; Jiang, Y.; Li, Q.; Chen, Z.; Yang, C.; Zhang, J. Learning compliant grasping and manipulation by teleoperation with adaptive force control. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 717–724.

26. Suomalainen, M.; Calinon, S.; Pignat, E.; Kyrki, V. Improving dual-arm assembly by master-slave compliance. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8676–8682.

27. Martín Martín, R.; Lee, M.A.; Gardner, R.; Savarese, S.; Bohg, J.; Garg, A. Variable impedance control in end-effector space: An action space for reinforcement learning in contact-rich tasks. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1010–1017.

28. Roveda, L.; Maskani, J.; Franceschi, P.; Abdi, A.; Braghin, F.; Molinari Tosatti, L.; Pedrocchi, N. Model-based reinforcement learning variable impedance control for human-robot collaboration. J. Intell. Robot. Syst. 2020, 100, 417–433.

29. Li, Z.; Liu, J.; Huang, Z.; Peng, Y.; Pu, H.; Ding, L. Adaptive impedance control of human–robot cooperation using reinforcement learning. IEEE Trans. Ind. Electron. 2017, 64, 8013–8022.

30. Li, M.; Yin, H.; Tahara, K.; Billard, A. Learning object-level impedance control for robust grasping and dexterous manipulation. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 6784–6791.

31. Babuska, R.; Verbruggen, H.B. (Eds.) Fuzzy Logic Control: Advances in Applications; World Scientific: Singapore, 1999; Volume 23.

32. Duan, X.G.; Li, H.X.; Deng, H. Robustness of fuzzy PID controller due to its inherent saturation. J. Process Control 2012, 22, 470–476.

33. Precup, R.E.; Teban, T.A.; Albu, A.; Borlea, A.B.; Zamfirache, I.A.; Petriu, E.M. Evolving fuzzy models for prosthetic hand myoelectric-based control. IEEE Trans. Instrum. Meas. 2020, 69, 4625–4636.

34. Hogan, N. Impedance control: An approach to manipulation: Part I—Theory. J. Dyn. Syst. Meas. Control 1985, 107, 1–7.

35. Hogan, N. Impedance control: An approach to manipulation: Part II—Implementation. J. Dyn. Syst. Meas. Control 1985, 107, 8–16.

36. Naceri, A.; Schumacher, T.; Li, Q.; Calinon, S.; Ritter, H. Learning optimal impedance control during complex 3D arm movements. IEEE Robot. Autom. Lett. 2021, 6, 1248–1255.

37. Saveriano, M.; Abu-Dakka, F.J.; Kramberger, A.; Peternel, L. Dynamic movement primitives in robotics: A tutorial survey. Int. J. Robot. Res. 2023, 42, 1133–1184.

38. Schaal, S.; Mohajerian, P.; Ijspeert, A. Dynamics systems vs. optimal control—A unifying view. Prog. Brain Res. 2007, 165, 425–445.

39. Ijspeert, A.J.; Nakanishi, J.; Hoffmann, H.; Pastor, P.; Schaal, S. Dynamical movement primitives: Learning attractor models for motor behaviors. Neural Comput. 2013, 25, 328–373.

40. Cao, X.; Huang, X.; Zhao, Y.; Sun, Z.; Li, H.; Jiang, Z.; Ceccarelli, M. A method of human-like compliant assembly based on variable admittance control for space maintenance. Cyborg Bionic Syst. 2023, 4, 0046.

41. Jiang, Z.; Ma, Y.; Cao, X.; Shen, M.; Yin, C.; Liu, H.; Cui, J.; Sun, Z.; Huang, X.; Li, H. FC-EODR: Immersive humanoid dual-arm dexterous explosive ordnance disposal robot. Biomimetics 2023, 8, 67.

42. Rakita, D.; Mutlu, B.; Gleicher, M. RelaxedIK: Real-time Synthesis of Accurate and Feasible Robot Arm Motion. In Proceedings of the Robotics: Science and Systems, Pittsburgh, PA, USA, 26–30 June 2018; Volume 14, pp. 26–30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).