Abstract

To address the challenges of low optimization efficiency and premature convergence in existing algorithms for unmanned aerial vehicle (UAV) 3D path planning under complex operational constraints, this study proposes an enhanced honey badger algorithm (LRMHBA). First, a three-dimensional terrain model incorporating threat sources and UAV constraints is constructed to reflect the actual operational environment. Second, LRMHBA improves global search efficiency by optimizing the initial population distribution through the integration of Latin hypercube sampling and an elite population strategy. Subsequently, a stochastic perturbation mechanism is introduced to facilitate the escape from local optima. Furthermore, to adapt to the evolving exploration requirements during the optimization process, LRMHBA employs a differential mutation strategy tailored to populations with different fitness values, utilizing elite individuals from the initialization stage to guide the mutation process. This design forms a two-population cooperative mechanism that enhances the balance between exploration and exploitation, thereby improving convergence accuracy. Experimental evaluations on the CEC2017 benchmark suite demonstrate the superiority of LRMHBA over 11 comparison algorithms. In the UAV 3D path planning task, LRMHBA consistently generated the shortest average path across three obstacle simulation scenarios of varying complexity, achieving the highest rank in the Friedman test.

1. Introduction

With the widespread application of unmanned aerial vehicles (UAVs) in complex terrain exploration, disaster response, military reconnaissance, and other fields, three-dimensional path planning has emerged as a core challenge in autonomous navigation systems. The objective is to determine an optimal flight path that minimizes cost while ensuring the UAV navigates through three-dimensional space without violating operational constraints or colliding with obstacles [1,2].

Researchers have proposed many algorithms and improvements for complex constrained optimization problems such as path planning. Traditional path planning methods include the A-star algorithm [3], Artificial Potential Fields (APF) [4], and RRT* [5]. These algorithms emerged early, are relatively mature, and are simple to implement, but in complex environments they suffer from problems such as slow convergence. In recent years, swarm intelligence algorithms have become an important approach to path planning. These metaheuristics simulate information sharing and mutual learning among biological individuals in nature and offer stronger self-learning, self-adaptation, and self-organization [6,7,8,9]. Several high-performing algorithms are widely used in UAV path planning, including Particle Swarm Optimization (PSO) [10], the Artificial Bee Colony algorithm (ABC) [11], the Whale Optimization Algorithm (WOA) [12], Harris Hawks Optimization (HHO) [13], the Sparrow Search Algorithm (SSA) [14], the Dung Beetle Optimizer (DBO) [15], and the Crested Porcupine Optimizer (CPO) [16]. Li et al. [17] introduced the FP-GPSO algorithm, which uses Fermat points for grouped particle swarm optimization to address the path planning challenge of composite unmanned aerial vehicles; experimental results demonstrated its effectiveness in optimizing UAV paths. Han et al. [18] developed a multi-strategy evolutionary database to enhance the conventional artificial bee colony algorithm, leveraging a brain-inspired evolutionary learning framework to improve its cognitive abilities; simulation results showed that the algorithm produces UAV trajectories with better fuel economy and higher safety. Dai et al. [19] proposed a novel whale optimization algorithm (NWOA) that improves convergence speed and avoids local optima through adaptive techniques and virtual obstacles, and introduces improved potential field factors to enhance the dynamic obstacle avoidance ability of mobile robots. Tang et al. [20] improved the Harris Hawks algorithm with several strategies, such as dimension learning-based hunting (DLH) search, and applied it to robot path planning, with results demonstrating a clear advantage in path planning performance. Cheng et al. [21] developed an improved SSA based on the theory of uniform experimental design, applied it to UAV path planning, and obtained good experimental results. Lian et al. [22] added exponentially decreasing inertia weights and an adaptive Cauchy mutation strategy to the dung beetle optimizer to solve UAV path planning problems in complex 3D environments. Liu et al. [23] proposed a periodic retreat strategy and a visual-auditory synergistic defense mechanism to improve the Crested Porcupine Optimizer (ICPO), achieving better obstacle avoidance and lower UAV energy consumption.

The Honey Badger Algorithm (HBA) [24] is a nature-inspired optimization algorithm proposed by Hashim et al. in 2021. It is designed to identify optimal solutions by mimicking the intelligent foraging behavior of the honey badger. HBA is characterized by its strong optimization capability, robustness, and simplicity of implementation. Numerous researchers have explored and applied HBA across various fields, yielding promising experimental results, as summarized in Table 1.

Table 1.

Latest variants and applications of the HBA algorithm.

Currently, research on the application of the Honey Badger Algorithm (HBA) in UAV path planning remains limited. Hu et al. [33] proposed an improved variant of HBA, named SaCHBA_PDN, which incorporates a Bernoulli shift map, segment optimal decreasing neighborhoods, and adaptive level crossing for path planning. While the algorithm demonstrates strong performance in two-dimensional path planning, it does not address three-dimensional path planning. Therefore, this study explores the application of HBA to tackle the NP-hard problem of UAV 3D path planning [34].

According to the “No Free Lunch (NFL)” theorem [35], no single algorithm can effectively solve all optimization problems. To enhance the applicability of the Honey Badger Algorithm (HBA) for UAV path planning and address its limitations, such as slow convergence speed, an imbalance between exploration and exploitation, and susceptibility to local optima [36,37,38], this study proposes an improved variant, named LRMHBA.

First, Latin hypercube sampling combined with an elite strategy [39] is employed during initialization to ensure a more uniform population distribution and enhance global search efficiency. Second, a stochastic perturbation strategy inspired by the Whale Optimization Algorithm is integrated to improve the algorithm's ability to escape local optima. Finally, a staged dual-population co-evolutionary strategy incorporating multiple differential evolution variants is introduced. The population is divided into two subpopulations based on fitness. Considering the dynamic trade-off between exploration and exploitation during optimization, different differential evolution variants are assigned to the two subpopulations at different evolutionary stages. Additionally, the elite population obtained during initialization is used to guide the differential mutation process, improving the balance between exploration and exploitation and enhancing the algorithm's global optimization capability.

The following are this paper’s primary contributions:

- Effective integration of the random perturbation strategy of the whale optimization algorithm into the honey badger algorithm.

- A staged two-population co-evolutionary strategy that incorporates multiple differential mutation approaches.

- An improved HBA (LRMHBA) that combines Latin hypercube sampling with an elite strategy, a random perturbation strategy, and a staged two-population co-evolutionary strategy that fuses multiple differential mutation approaches.

- Comparative performance tests were conducted on the LRMHBA algorithm against various competing algorithms, including highly referenced algorithms and their variants, recently developed high-performance algorithms, the champion algorithm, as well as the original HBA and its variant, using the CEC2017 test suite, with evaluations covering both low-dimensional (30-dimensional) and high-dimensional (100-dimensional) function optimization. Statistical analyses, including the Wilcoxon rank-sum test and Friedman test, along with ablation and exploration-exploitation experiments, were performed to validate the advancements of LRMHBA.

- The UAV flight cost is defined, three UAV 3D simulation scenarios ranging from simple to complex are established, and the path planning performance of LRMHBA and the competing algorithms is compared and analyzed for each scenario. The results demonstrate the superiority of LRMHBA on the UAV path planning problem as well.

The paper is organized as follows: Section 2 describes the UAV path planning model. Section 3 describes the principles of HBA. Section 4 presents the specific improvements to HBA. Section 5 tests and analyzes LRMHBA on the CEC2017 test suite. Section 6 presents the UAV path planning simulation tests and their analysis. Section 7 summarizes the paper.

2. UAV Path Planning Modeling

2.1. Environmental Modeling

Environmental modeling refers to the process of converting physical environmental information into digital models that can be processed by computer algorithms, serving as the prerequisite and foundation for UAV path planning.

2.1.1. Base Terrain Model

We adopted a commonly used functional simulation method to generate realistic terrain patterns [40], as expressed in Equation (1):

where $z_1(x, y)$ is the height value at coordinate $(x, y)$, and the constant coefficients in Equation (1) are scaling coefficients that control the characteristics of the digital terrain.

2.1.2. Mountain Model

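Natural mountainous terrain poses the most significant threat to UAV navigation. We employ an exponential superposition function to characterize such features [41], as defined in Equation (2):

$$z_2(x, y) = \sum_{i=1}^{n} h_i \exp\left[-\left(\frac{x - x_{0i}}{x_{si}}\right)^2 - \left(\frac{y - y_{0i}}{y_{si}}\right)^2\right] \tag{2}$$

where $z_2(x, y)$ is the height value at the point $(x, y)$; $n$ is the number of mountains; $h_i$ is the peak altitude of the $i$th mountain; $(x_{0i}, y_{0i})$ are the centroid coordinates of the $i$th mountain; and $x_{si}$ and $y_{si}$ are the slope parameters in the x and y directions for the $i$th mountain.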
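The mountain model can be evaluated pointwise with a few lines of code. The sketch below is a minimal Python illustration of Equation (2), assuming each mountain is passed as a tuple `(h, x0, y0, xs, ys)`. Merging the mountains with the base terrain of Equation (1) through a pointwise maximum is an assumption (a common choice in this literature, not stated in the text), and `base` is any caller-supplied function; all names are illustrative.

```python
import math

def mountain_height(x, y, peaks):
    """Equation (2): superpose exponential peaks given as (h, x0, y0, xs, ys)."""
    z = 0.0
    for h, x0, y0, xs, ys in peaks:
        # h: peak altitude, (x0, y0): centroid, xs / ys: slope parameters
        z += h * math.exp(-((x - x0) / xs) ** 2 - ((y - y0) / ys) ** 2)
    return z

def terrain_height(x, y, base, peaks):
    """Assumed combination: the higher of the base terrain and the mountains."""
    return max(base(x, y), mountain_height(x, y, peaks))
```

At a mountain's centroid the exponential equals 1, so the height there is the peak altitude plus the (usually small) contributions of the other mountains.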

2.2. Operational Constraints

The proposed path planning model simulates UAV operations in both simple and complex hazardous environments, incorporating multiple constraint costs [42,43] to enhance the practical relevance and applicability of the study.

2.2.1. Flight Distance Cost

Limited by fuel capacity and consumption rate, UAVs operate under strict flight range constraints. The distance cost function balancing flight efficiency and obstacle avoidance is formulated as follows:

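$$F_1 = \sum_{i=1}^{n-1} \sqrt{(x_{i+1} - x_i)^2 + (y_{i+1} - y_i)^2 + (z_{i+1} - z_i)^2} \tag{3}$$

where $(x_i, y_i, z_i)$ denotes the 3D coordinates of the $i$th waypoint and $n$ is the total number of waypoints.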
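The distance cost is the total length of the polyline through the waypoints. A minimal Python sketch, assuming waypoints are given as `(x, y, z)` tuples; the function name is illustrative:

```python
import math

def distance_cost(waypoints):
    """Sum of Euclidean segment lengths along a list of (x, y, z) waypoints."""
    return sum(math.dist(p, q) for p, q in zip(waypoints, waypoints[1:]))
```

For example, `distance_cost([(0, 0, 0), (3, 4, 0), (3, 4, 12)])` returns `17.0` (a 5 km segment followed by a 12 km climb).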

2.2.2. Flight Altitude Cost

Maintaining appropriate flight altitude enhances fuel efficiency and operational safety. To ensure stealth performance, UAVs require stable low-altitude flight patterns. The altitude deviation cost is defined in Equation (4) with its mean reference in Equation (5):

where $z_i$ is the altitude at the $i$th waypoint and $\bar{z}$ is the mean flight altitude.
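The exact form of Equation (4) is not reproduced here, so the sketch below assumes one plausible reading: the root-mean-square deviation of the waypoint altitudes from their mean $\bar{z}$. It illustrates the idea of penalizing altitude fluctuation and should not be taken as the paper's formula; the function name is hypothetical.

```python
import math

def altitude_cost(waypoints):
    """Assumed form: RMS deviation of waypoint altitudes from the mean altitude."""
    zs = [p[2] for p in waypoints]
    z_mean = sum(zs) / len(zs)  # mean flight altitude (Equation (5))
    return math.sqrt(sum((z - z_mean) ** 2 for z in zs) / len(zs))
```

A path flown at constant altitude scores 0, which matches the stated goal of stable low-altitude flight.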

2.2.3. Turning Maneuver Cost

Sharp turns jeopardize UAV stability and controllability, so the turning angle should not exceed the preset maximum turning angle. The cost function for flight turning is presented as follows:

where $\theta_i$ denotes the turning angle between adjacent path segments, $\theta_{max}$ is the maximum allowable turning angle, and $\mathbf{v}_i$ is the direction vector of the $i$th path segment.
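The turning angle between consecutive segments follows from the normalized dot product of their direction vectors, $\theta_i = \arccos\big(\mathbf{v}_i \cdot \mathbf{v}_{i+1} / (\lVert\mathbf{v}_i\rVert \lVert\mathbf{v}_{i+1}\rVert)\big)$. The sketch below computes these angles in Python; the cost that sums only the excess of each turn over $\theta_{max}$ is an assumed form for illustration, and consecutive waypoints are assumed to be distinct.

```python
import math

def turning_angles(waypoints):
    """Angles (radians) between consecutive direction vectors v_i and v_{i+1}."""
    vecs = [tuple(b - a for a, b in zip(p, q)) for p, q in zip(waypoints, waypoints[1:])]
    angles = []
    for u, v in zip(vecs, vecs[1:]):
        dot = sum(a * b for a, b in zip(u, v))
        cos_t = dot / (math.hypot(*u) * math.hypot(*v))
        angles.append(math.acos(max(-1.0, min(1.0, cos_t))))  # clamp rounding error
    return angles

def turning_cost(waypoints, theta_max):
    """Assumed form: sum of how far each turn exceeds the allowed maximum."""
    return sum(max(0.0, t - theta_max) for t in turning_angles(waypoints))
```

A right-angle turn yields $\pi/2$; with $\theta_{max} = \pi/4$ the assumed cost is $\pi/4$.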

2.2.4. Terrain Clearance Constraint

To prevent terrain collisions during mission execution, planned trajectories must maintain altitude superiority over terrain with designated safety margins. The terrain clearance cost is formulated as follows:

where $H_i$ denotes the terrain elevation at the $i$th waypoint and SD denotes the mandatory safety clearance (0.2 km in this study).
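The clearance condition compares each waypoint altitude $z_i$ with the terrain elevation $H_i$ plus SD. The sketch below assumes a simple shortfall penalty, $\max(0, SD - (z_i - H_i))$ summed over waypoints; the paper's exact cost expression is not reproduced here, and `terrain` is any caller-supplied height function (for example, the terrain model above).

```python
def clearance_cost(waypoints, terrain, sd=0.2):
    """Assumed form: total shortfall below the required clearance SD (km)."""
    return sum(max(0.0, sd - (z - terrain(x, y))) for x, y, z in waypoints)
```

A waypoint 0.5 km above flat terrain contributes nothing, while one only 0.1 km above it contributes a 0.1 km shortfall.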

2.2.5. Obstacle Threat Cost

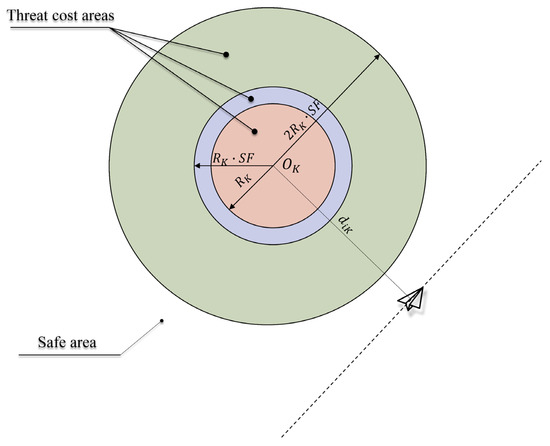

UAVs must avoid collisions with obstacles and radar threat zones modeled as vertical cylinders where flight operations are strictly prohibited at all altitudes. The threat cost function enforces safety buffers around threat sources, as defined in Equations (11)–(13):

where $(x_k, y_k)$ denotes the centroid coordinates of the $k$th threat zone, $R_k$ denotes the radius of the $k$th cylindrical threat zone, and $\lambda$ is the safety factor for threat zones (1.2 in this study).

As illustrated in Figure 1, $C_k$ denotes the centroid of the $k$th threat source. The penalty activates when the distance $d_k$ between the path segment and $C_k$ falls below $\lambda R_k$, and the threat cost increases quadratically as this distance decreases.

Figure 1.

Schematic of the threat cost model.
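Because the threat zones are vertical cylinders, only the horizontal (x-y) distance from each path segment to a cylinder axis matters. The sketch below assumes a penalty of $(\lambda R_k - d_k)^2$ whenever $d_k < \lambda R_k$, which matches the stated quadratic growth but is not necessarily the exact form of Equations (11)–(13); function names are illustrative.

```python
import math

def point_segment_distance_2d(c, p, q):
    """Horizontal distance from point c to the segment pq (z is ignored)."""
    px, py = p[0], p[1]
    dx, dy = q[0] - px, q[1] - py
    seg_len2 = dx * dx + dy * dy
    t = 0.0 if seg_len2 == 0 else max(0.0, min(1.0, ((c[0] - px) * dx + (c[1] - py) * dy) / seg_len2))
    return math.hypot(c[0] - (px + t * dx), c[1] - (py + t * dy))

def threat_cost(waypoints, threats, safety=1.2):
    """Assumed form: (safety*R - d)^2 for every segment closer than safety*R.

    threats: list of (xc, yc, R) vertical cylinders.
    """
    cost = 0.0
    for p, q in zip(waypoints, waypoints[1:]):
        for xc, yc, r in threats:
            d = point_segment_distance_2d((xc, yc), p, q)
            if d < safety * r:
                cost += (safety * r - d) ** 2
    return cost
```

A straight segment passing 1.0 km from a 1.0 km-radius threat lies inside the 1.2 km buffer and incurs a penalty of about 0.04.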

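If the proposed path is feasible, the overall cost function is the weighted sum of the individual cost functions; otherwise, it is assigned an excessively high penalty value, which is set to 10,000 in this paper:

$$F = \begin{cases} \sum_{m=1}^{5} w_m F_m, & \text{if the path is feasible} \\ 10{,}000, & \text{otherwise} \end{cases} \tag{14}$$

where $w_1, \dots, w_5$ are weighting coefficients with $\sum_{m=1}^{5} w_m = 1$.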
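The overall cost reduces to a feasibility switch around a weighted sum. A minimal Python sketch, assuming the individual costs have already been computed and that the weights are required to sum to 1; the function name is illustrative:

```python
def total_cost(costs, weights, feasible, penalty=10_000.0):
    """Weighted sum of individual costs for a feasible path, fixed penalty otherwise."""
    if not feasible:
        return penalty
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights are assumed to sum to 1")
    return sum(w * c for w, c in zip(weights, costs))
```

Using a large constant for infeasible paths keeps every candidate comparable, so the optimizer can rank infeasible paths below any feasible one without special handling.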

3. Honey Badger Algorithm (HBA)

This section outlines the principle of the Honey Badger Algorithm (HBA), in which the parameters $r_1$ to $r_7$ in the formulas are random numbers between 0 and 1.

3.1. Population Initialization

The population is randomly initialized within predefined boundaries using:

$$x_i = lb_i + r_1 \times (ub_i - lb_i) \tag{15}$$

where $x_i$ is the position vector of the $i$th individual, and $lb_i$ and $ub_i$ denote the lower and upper bounds of the search space.
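Equation (15) draws each coordinate uniformly between its bounds. A minimal Python sketch, assuming bounds are given per dimension as lists; `rng` can be the `random` module or a seeded `random.Random` instance:

```python
import random

def init_population(n, lb, ub, rng=random):
    """Equation (15): x_i = lb + r * (ub - lb) per dimension, with r ~ U(0, 1)."""
    return [[l + rng.random() * (u - l) for l, u in zip(lb, ub)] for _ in range(n)]
```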

3.2. Excavation Phase

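Honey badgers perform cardioid-shaped movements during prey excavation, mathematically modeled as:

$$x_{new} = x_{prey} + Dir \times \beta \times I_i \times x_{prey} + Dir \times r_3 \times \alpha \times d_i \times \left|\cos(2\pi r_4) \times \left[1 - \cos(2\pi r_5)\right]\right| \tag{16}$$

where $x_{new}$ denotes the updated position of the individual honey badger; $x_{prey}$ denotes the global best position; $\beta$ is the food acquisition capability factor, $\beta \ge 1$ (default 6); $I_i$ is the smell intensity of the prey (Equations (17)–(19)); α is the density factor for the exploration-exploitation transition (Equation (20)); and Dir is the search direction operator (Equation (21)).

$$I_i = r_2 \times \frac{S}{4\pi d_i^2} \tag{17}$$

$$S = (x_i - x_{i+1})^2 \tag{18}$$

$$d_i = x_{prey} - x_i \tag{19}$$

where $S$ is the source strength (concentration) and $d_i$ denotes the distance to the prey.

$$\alpha = C \times \exp\left(\frac{-t}{t_{max}}\right) \tag{20}$$

$$Dir = \begin{cases} 1, & r_6 \le 0.5 \\ -1, & \text{otherwise} \end{cases} \tag{21}$$

where $t_{max}$ is the maximum number of iterations and $C$ is a constant, $C \ge 1$ (typically $C = 2$).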

3.3. Honey Harvesting Phase

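The honey harvesting phase simulates honey badgers following honeyguide birds to beehives:

$$x_{new} = x_{prey} + Dir \times r_7 \times \alpha \times d_i \tag{22}$$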
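Putting Equations (16) to (22) together, one HBA generation can be sketched in Python as follows. This is a minimal illustration assuming the phase is chosen with probability 0.5 (as in the original HBA), that the neighbour of the last individual wraps around to the first, and that a tiny constant guards against division by zero; boundary handling and greedy selection are omitted, and the function name is illustrative.

```python
import math
import random

def hba_step(pop, x_prey, t, t_max, beta=6.0, c=2.0, rng=random):
    """One HBA generation (no bound handling or greedy selection)."""
    n = len(pop)
    alpha = c * math.exp(-t / t_max)                     # Eq. (20): density factor
    new_pop = []
    for i, x in enumerate(pop):
        nxt = pop[(i + 1) % n]                           # neighbour for source strength
        s = sum((a - b) ** 2 for a, b in zip(x, nxt)) + 1e-12  # Eq. (18)
        d = [p - a for p, a in zip(x_prey, x)]           # Eq. (19): d_i = x_prey - x_i
        d2 = sum(v * v for v in d) + 1e-12
        intensity = rng.random() * s / (4 * math.pi * d2)     # Eq. (17)
        direction = 1 if rng.random() <= 0.5 else -1          # Eq. (21): Dir
        if rng.random() < 0.5:                                # excavation phase, Eq. (16)
            r3, r4, r5 = rng.random(), rng.random(), rng.random()
            card = abs(math.cos(2 * math.pi * r4) * (1 - math.cos(2 * math.pi * r5)))
            xn = [p + direction * beta * intensity * p + direction * r3 * alpha * di * card
                  for p, di in zip(x_prey, d)]
        else:                                                 # honey harvesting, Eq. (22)
            r7 = rng.random()
            xn = [p + direction * r7 * alpha * di for p, di in zip(x_prey, d)]
        new_pop.append(xn)
    return new_pop
```

Both phases move around $x_{prey}$; the density factor α shrinks the step size over time, which is what shifts the search from exploration to exploitation.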

4. LRMHBA Algorithm

4.1. Hybrid LHS Initialization and Elite Guidance

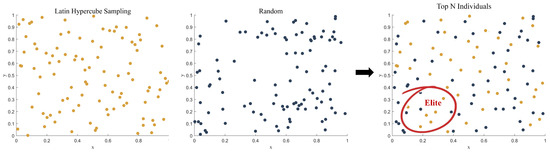

Conventional randomized initialization in the honey badger algorithm may result in suboptimal population distribution and diminished diversity, leading to increased stochastic uncertainty during optimization. To address this limitation, we propose a hybrid initialization strategy integrating Latin Hypercube Sampling (LHS), which enhances spatial uniformity and improves global exploration capabilities.

Latin Hypercube Sampling (LHS) [44,45], proposed by McKay et al. in 1979, is a stratified sampling technique for multivariate parameter distributions. Compared with random sampling, LHS demonstrates superior space-filling properties through its uniform stratification mechanism, particularly when handling limited sample sizes. This method ensures comprehensive coverage of the entire parameter space while capturing tail distribution characteristics.

To address the uneven initialization issue, we implement a hybrid initialization strategy, as shown in Figure 2:

- Generate N samples using LHS for uniform spatial coverage.

- Generate another N samples by random sampling.

- Select the top N individuals by fitness ranking from the combined pool.

- Extract the elite 20% individuals to guide the proposed dual-population framework.

Figure 2.

Schematic of hybrid LHS initialization and elite guidance.
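The four initialization steps can be sketched in Python as below. This is a minimal illustration assuming minimization, a caller-supplied `fitness` function, and an elite fraction of 20%; the basic LHS shown (one uniform draw per stratum, with strata randomly paired across dimensions) is the standard construction, and all function names are illustrative.

```python
import random

def latin_hypercube(n, lb, ub, rng=random):
    """n samples; each dimension is split into n strata with one sample per stratum."""
    dims = len(lb)
    cols = []
    for j in range(dims):
        strata = list(range(n))
        rng.shuffle(strata)  # random pairing of strata across dimensions
        cols.append([lb[j] + (k + rng.random()) / n * (ub[j] - lb[j]) for k in strata])
    return [[cols[j][i] for j in range(dims)] for i in range(n)]

def hybrid_init(n, lb, ub, fitness, elite_frac=0.2, rng=random):
    """LHS pool + random pool, keep the best n, and extract the elite fraction."""
    pool = latin_hypercube(n, lb, ub, rng)
    pool += [[l + rng.random() * (u - l) for l, u in zip(lb, ub)] for _ in range(n)]
    pool.sort(key=fitness)  # minimization: best individuals first
    pop = pool[:n]
    elites = pop[:max(1, int(elite_frac * n))]
    return pop, elites
```

With n = 10, each dimension receives exactly one sample in every tenth of its range, which is the space-filling property that plain random sampling does not guarantee.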

4.2. Stochastic Perturbation Strategy

Premature Convergence Analysis

In the original HBA method, position updates are primarily influenced by the global best individual $x_{prey}$, which can lead to premature convergence as the population tends to cluster around local optima. To enhance global search capability, we introduce a stochastic perturbation mechanism inspired by the Whale Optimization Algorithm (WOA), regulated by the adaptive coefficient $A$. When $|A| \ge 1$, the search strategy incorporates stochastic perturbation; otherwise, the honey badger's position is updated based on $x_{prey}$. The coefficient $A$ is calculated as follows:

$$A = 2a \cdot r - a \tag{23}$$

$$a = 2 - 2\,\frac{FEs}{MaxFEs} \tag{24}$$

where $r$ is a random number in [0, 1]; $a$ decreases linearly from 2 to 0; $FEs$ is the current number of function evaluations; and $MaxFEs$ is the maximum number of function evaluations.

When $|A| \ge 1$, the modified position update equation and the redefined distance metric are expressed as:

The magnitude of $A$ is bounded by $a$, which decreases linearly throughout the iteration process. In the initial stage, large values of $|A|$ activate the random perturbation strategy, preventing premature population aggregation and enhancing coverage of the search space. As $a$ decreases, the algorithm gradually transitions to precise exploitation of promising regions.
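The switching rule can be checked numerically. The Python sketch below assumes the WOA-style forms $A = 2ar - a$ and $a = 2(1 - FEs/MaxFEs)$ and the threshold $|A| \ge 1$; it only decides which update branch to take, and the perturbed position update itself is not reproduced. Function names are illustrative.

```python
import random

def perturbation_coefficient(fes, max_fes, rng=random):
    """A = 2*a*r - a, where a falls linearly from 2 to 0 over the evaluation budget."""
    a = 2.0 * (1.0 - fes / max_fes)
    return 2.0 * a * rng.random() - a

def use_random_individual(fes, max_fes, rng=random):
    """True when the perturbation branch (|A| >= 1) should be used."""
    return abs(perturbation_coefficient(fes, max_fes, rng)) >= 1.0
```

At the start ($a = 2$) the perturbation branch is taken for about half of the updates; once more than half of the budget is spent, $a < 1$ and the branch can no longer fire, so the search relies entirely on $x_{prey}$.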

4.3. The Staged Dual-Population Co-Evolutionary Strategy Integrating Multiple Differential Evolution Variants

4.3.1. Motivation and Framework

To improve the convergence efficiency and accuracy of HBA, we propose a staged dual-population co-evolutionary strategy integrating multiple differential evolution variants, which is triggered when the best fitness value has not improved for 150 consecutive function evaluations. This approach leverages the complementary characteristics of diverse differential evolution variants to balance exploration and exploitation dynamically.
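The trigger condition amounts to a stagnation counter over function evaluations. A minimal Python sketch, assuming minimization and that the counter is restarted after the DE stage has run; the class and method names are hypothetical:

```python
class StagnationMonitor:
    """Counts function evaluations since the best fitness last improved."""

    def __init__(self, patience=150):
        self.patience = patience
        self.best = float("inf")
        self.since_improvement = 0

    def record(self, fitness_value):
        """Register one evaluation; return True when the DE stage should run."""
        if fitness_value < self.best:
            self.best = fitness_value
            self.since_improvement = 0
        else:
            self.since_improvement += 1
        return self.since_improvement >= self.patience

    def reset(self):
        """Restart the count, e.g. after the DE stage has been executed."""
        self.since_improvement = 0
```

Because the DE stage only runs after prolonged stagnation, its extra cost is incurred only when the main HBA update has stopped making progress.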

4.3.2. DE Mutation Operators

The mutation operator is one of the core operators of the Differential Evolution (DE) algorithm [46]. It generates new candidate solutions (mutant vectors) that guide the population toward better regions of the solution space. The following canonical DE mutation strategies are adopted due to their proven efficacy [47,48,49,50,51,52]:

where $x_{r_k,G}$ denotes a randomly selected individual from generation $G$; $x_{best,G}$ is the global best individual; $x_{i,G}$ is the $i$th individual of generation $G$; $v_{i,G}$ is the mutant individual of generation $G$; and $F$ is the scaling factor.
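For reference, the three most common canonical DE mutations can be written in a few lines. The Python sketch below implements the textbook forms of DE/rand/1, DE/best/1, and DE/current-to-best/1 on list-based vectors; which exact canonical strategies the paper's equations list is not recoverable here, and the helper names are illustrative.

```python
import random

def _pick(n, exclude, k, rng):
    """k distinct random indices from range(n), all different from `exclude`."""
    return rng.sample([j for j in range(n) if j != exclude], k)

def de_rand_1(pop, i, f, rng=random):
    """v_i = x_r1 + F * (x_r2 - x_r3)"""
    r1, r2, r3 = _pick(len(pop), i, 3, rng)
    return [a + f * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]

def de_best_1(pop, i, best, f, rng=random):
    """v_i = x_best + F * (x_r1 - x_r2)"""
    r1, r2 = _pick(len(pop), i, 2, rng)
    return [g + f * (b - c) for g, b, c in zip(best, pop[r1], pop[r2])]

def de_current_to_best_1(pop, i, best, f, rng=random):
    """v_i = x_i + F * (x_best - x_i) + F * (x_r1 - x_r2)"""
    r1, r2 = _pick(len(pop), i, 2, rng)
    return [x + f * (g - x) + f * (b - c)
            for x, g, b, c in zip(pop[i], best, pop[r1], pop[r2])]
```

The rand-based operator explores because its base vector is random, whereas the best-based operators pull mutants toward the current optimum; this contrast is what the group-specific assignment below exploits.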

Additionally, Layeb et al. [53] (2024) proposed two further differential mutation strategies, which have been validated by extensive experiments:

where

4.3.3. Dual-Population Mutation Method with Elite Individuals

A single mutation strategy cannot meet the diverse evolutionary demands of all population members: high-fitness individuals (clustered near the current optimum) require intensified exploitation, whereas low-fitness individuals (distributed in peripheral regions) require enhanced exploration [54].

To resolve this dichotomy, we implement fitness-based bipartite grouping:

- Group A (Top 50% fitness): Focused on precision exploitation.

- Group B (Bottom 50% fitness): Dedicated to spatial exploration.

Moreover, because the population's search behavior and needs evolve over the iterations, we assign the differential evolution strategies to the two groups in two phases, configured as follows. This configuration lets the algorithm adapt its strategy dynamically during optimization, shifting the balance from global exploration toward local exploitation and ultimately improving optimization performance.

- Phase I (first 2/3 of the iterations): exploration emphasis.
  - Group A: DE/mean-current/2.
  - Group B: DE/rand/1.

Group A maintains exploitation potential, while Group B helps escape local optima through randomized search.

- Phase II (final 1/3 of the iterations): exploitation emphasis.
  - Group A: DE/current-to-best/2.
  - Group B: DE/mean-current/2.

Group A performs fine-grained search in the neighborhood of the optimum, while Group B maintains moderate exploration to avoid premature convergence.
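The phase and group assignment above can be expressed as a small lookup. The Python sketch below assumes minimization (lower fitness is better), measures progress in function evaluations rather than iterations, and returns strategy names only; the mutation formulas themselves are not reproduced, and the function name is hypothetical.

```python
def assign_strategies(fitness_values, fes, max_fes):
    """Map each individual index to a mutation strategy for the current phase.

    Group A = better half (lower fitness), Group B = worse half.
    """
    order = sorted(range(len(fitness_values)), key=lambda i: fitness_values[i])
    half = len(order) // 2
    if fes < 2 * max_fes / 3:               # Phase I: exploration emphasis
        strat_a, strat_b = "mean-current/2", "rand/1"
    else:                                    # Phase II: exploitation emphasis
        strat_a, strat_b = "current-to-best/2", "mean-current/2"
    return {idx: (strat_a if rank < half else strat_b) for rank, idx in enumerate(order)}
```

For fitness values `[3.0, 1.0, 4.0, 2.0]` early in the run, individuals 1 and 3 (the better half) receive DE/mean-current/2 and individuals 0 and 2 receive DE/rand/1.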

Throughout the process, the elite individuals selected during initialization guide the mutation in DE/mean-current/2. Consequently, Equation (36) is updated to:

where $x_{elite}$ represents an elite individual obtained during initialization.

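After mutation and crossover, the individuals are updated using a greedy strategy:

$$x_{i,G+1} = \begin{cases} u_{i,G}, & f(u_{i,G}) \le f(x_{i,G}) \\ x_{i,G}, & \text{otherwise} \end{cases}$$

where $u_{i,G}$ is the trial individual produced by crossover and $f(\cdot)$ denotes fitness evaluation.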
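Crossover and greedy selection complete each DE step. The Python sketch below shows the standard binomial crossover (one randomly chosen gene is always taken from the mutant, so the trial vector differs from its parent) and the greedy replacement rule, assuming minimization; the crossover rate `cr` and function names are illustrative.

```python
import random

def binomial_crossover(target, mutant, cr, rng=random):
    """Take each gene from the mutant with probability cr; gene j_rand always is."""
    j_rand = rng.randrange(len(target))
    return [m if (rng.random() < cr or j == j_rand) else t
            for j, (t, m) in enumerate(zip(target, mutant))]

def greedy_select(target, trial, fitness):
    """Keep the trial vector only if it is at least as good (minimization)."""
    return trial if fitness(trial) <= fitness(target) else target
```

With `cr = 0.0` exactly one gene comes from the mutant; with `cr = 1.0` the trial vector equals the mutant.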

In summary, the three improvement strategies work together to form an integrated optimization mechanism through phased coordination. Latin hypercube sampling ensures a geometrically homogeneous initialization of the population, with the top 20% of elite individuals guiding subsequent dual-population operations as potential global optima. During iterations, the stochastic perturbation strategy dynamically prevents premature convergence by adaptively adjusting the coefficient. Simultaneously, the staged dual-population co-evolutionary strategy integrates population characteristics, differential evolution mutation operator characteristics, and the evolving demands of the iterative process, gradually enhancing local exploitation while preserving exploration capabilities. This phased collaboration enables LRMHBA to maintain a balanced exploration-exploitation dynamic throughout the entire optimization process.

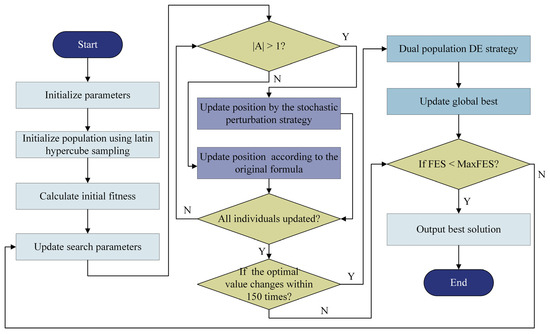

4.4. Pseudocode and Flowchart of LRMHBA

Figure 3 displays the flowchart of LRMHBA, which combines the three tactics discussed in this section. Algorithm 1 displays the pseudocode.

| Algorithm 1. Pseudocode of LRMHBA |
| --- |
| 1: Initialize population X using Latin hypercube sampling and elite strategy |
| 2: while FEs < MaxFEs do |
| 3: Calculate I, α, A and a using Equations (17), (20), (23) and (24) |
| 4: for each individual i do |
| 5: if \|A\| ≥ 1 then |
| 6: Update position using a random individual (Equations (25) and (26)) |
| 7: else |
| 8: Update position using Equations (16) and (22) |
| 9: end if |
| 10: Update the individual if a better solution is found |
| 11: end for |
| 12: if … and no improvement in the last 150 evaluations then |
| 13: if … then |
| 14: Set population ratios: 0% mean-current/2, 50% current-to-best/2, 50% rand/1 |
| 15: else |
| 16: Set population ratios: 50% mean-current/2, 50% current-to-best/2, 0% rand/1 |
| 17: end if |
| 18: Sort population by fitness and divide into groups |
| 19: for each group do |
| 20: if Group 1 (mean-current/2) then |
| 21: Apply mutation using Equation (33) |
| 22: else if Group 2 (current-to-best/2) then |
| 23: Apply mutation using Equation (32) |
| 24: else if Group 3 (rand/1) then |
| 25: Apply mutation using Equation (28) |
| 26: end if |
| 27: Apply binomial crossover with the crossover probability |
| 28: Update the individual if a better solution is found |
| 29: end for |
| 30: end if |
| 31: Update X_prey and Food_Score if a better solution is found |
| 32: end while |
| 33: Return Food_Score, X_prey |

Figure 3.

Flowchart of LRMHBA.

4.5. Time Complexity Analysis

Let $N$ denote the population size, $D$ the problem dimension, and $MaxFEs$ the maximum number of function evaluations. The original Honey Badger Algorithm (HBA) has a time complexity of $O(MaxFEs \times D)$. Relative to HBA, the extra operations in LRMHBA and their respective complexities include:

- Latin hypercube sampling during initialization:

- Elite strategy sorting in the initialization phase:

- Stochastic perturbation strategy:

- Dual-population mutation method:

Among these, the cost of Latin hypercube sampling and of the random perturbation strategy is negligible. The dual-population mutation method is triggered only after 150 function evaluations without improvement, so its computation time is also approximately negligible relative to the main loop as the problem size increases. The total time complexity of LRMHBA is therefore approximately equal to that of HBA, and LRMHBA adds essentially no extra computational cost over the baseline HBA.

5. Algorithm Performance Testing and Analysis

This section evaluates the effectiveness of the LRMHBA algorithm using the CEC2017 benchmark suite. The CEC2017 test suite contains 29 functions of various types. It provides a comprehensive evaluation of the LRMHBA algorithm’s performance across different problem types. The dimensions and search ranges of the test functions within the benchmark are detailed in Appendix A.

To analyze the LRMHBA algorithm’s performance in terms of convergence accuracy, convergence speed, and exploration-exploitation capabilities, the following four categories of algorithms were selected for comparison:

- Classical high-citation algorithms and their variants: Particle swarm optimization (PSO), Differential evolution (DE), Whale optimization algorithm (WOA), AOA (Arithmetic optimization algorithm, 2021) [55], GQPSO (Gaussian quantum-behaved particle swarm optimization, 2010) [56];

- Recently proposed algorithms and their variants (within the past two years): Parrot Optimizer (PO, 2024) [57], Dung Beetle Optimizer (DBO), and QHDBO (2024) [58];

- Champion algorithm: LSHADE [59];

- HBA algorithm and its variant: HBA, SaCHBA_PDN.

The parameters of every algorithm were set to the values suggested in the corresponding sources, as detailed in Table 2. The experimental analysis included performance testing on functions of different dimensions, ablation studies, and exploration-exploitation capability experiments.

Table 2.

Algorithm parameter settings for CEC2017 benchmark testing.

The maximum number of function evaluations (FES) was set to 100,000, and the population size of all algorithms was set to 100 to ensure a fair comparison. To reduce experimental randomness and enhance the reliability of results, each algorithm was run independently 30 times. The optimal value, mean value, and standard deviation of the test functions were calculated for both low-dimensional (30 dimensions) and high-dimensional (100 dimensions) settings. The experiments were conducted in MATLAB R2021b.

5.1. Results Analysis on CEC2017

Table 3 and Table 4 show the experimental results for dimensions 30 and 100, respectively. The best outcomes are indicated in bold for each performance metric (optimal value, mean value, and standard deviation). As shown in Table 3, when the dimension is 30, out of a total of 87 metrics (29 functions × 3 metrics), the LRMHBA algorithm outperformed other competing algorithms in 35 metrics, ranking first. Specifically, for functions CEC09, CEC11, and CEC12, LRMHBA achieved the best results across all three metrics. LSHADE ranked second with 19 best metrics, followed by HBA and SaCHBA_PDN, each with 10 best metrics.

Table 3.

Test results of LRMHBA with other algorithms on CEC2017 (dim = 30).

Table 4.

Test results of LRMHBA with other algorithms on CEC2017 (dim = 100).

From Table 4, it can be observed that the advantage of LRMHBA becomes even more pronounced when the dimension increases to 100. It ranked first in 44 metrics and achieved better average values than other competing algorithms for 24 functions. These results demonstrate that LRMHBA has a highly competitive global optimization capability and excellent stability.

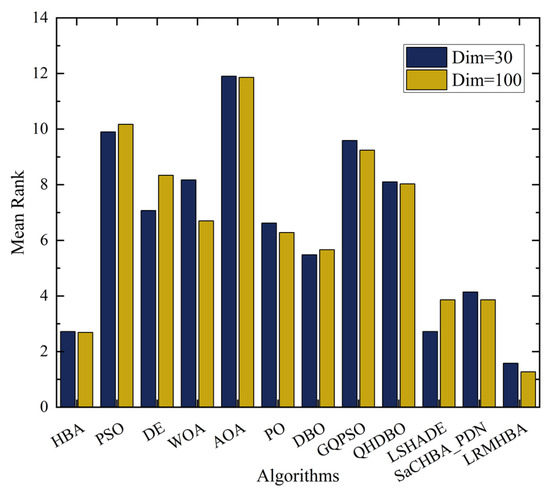

The final row for each function in Table 3 and Table 4 presents the Friedman test rankings of the algorithms for solving the respective problem. Additionally, the overall mean rank and ranking of the algorithms are provided at the end of the tables. For the 30-dimensional problems, LRMHBA ranked first in 18 functions, whereas for the 100-dimensional problems, it ranked first in 24 functions. Among all the algorithms, LRMHBA achieved the best average rankings, with a rank of 1.58 for 30-dimensional problems and 1.27 for 100-dimensional problems. These results demonstrate that LRMHBA outperforms all other algorithms on the CEC2017 benchmark, with its advantage being particularly pronounced in high-dimensional problems. The summary statistics of the average ranks from the Friedman test for all algorithms at both 30 and 100 dimensions are shown in Figure 4.

Figure 4.

Statistical results of Friedman test rankings in 30 and 100 dimensions.
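The mean ranks reported above are obtained by ranking the algorithms on each function and averaging those ranks across functions. A minimal Python sketch of that computation, assuming lower scores are better and that tied algorithms share the average of their ranks; it computes mean ranks only, not the Friedman test statistic or p-value, and the function name is illustrative.

```python
def friedman_mean_ranks(results):
    """results[p][a] = score of algorithm a on problem p (lower is better)."""
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=lambda a: row[a])
        pos = 0
        while pos < n_alg:
            end = pos
            while end + 1 < n_alg and row[order[end + 1]] == row[order[pos]]:
                end += 1                      # extend the block of tied scores
            avg_rank = (pos + end) / 2 + 1    # 1-based average rank of the tie block
            for k in range(pos, end + 1):
                totals[order[k]] += avg_rank
            pos = end + 1
    return [t / len(results) for t in totals]
```

For two problems with scores `[1.0, 2.0, 3.0]` and `[2.0, 1.0, 3.0]`, the mean ranks are `[1.5, 1.5, 3.0]`.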

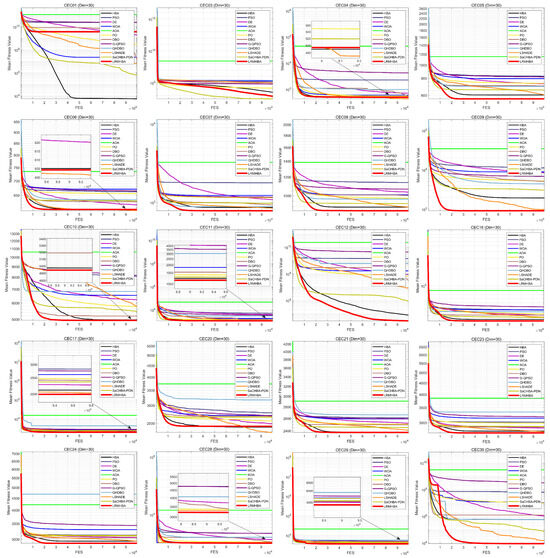

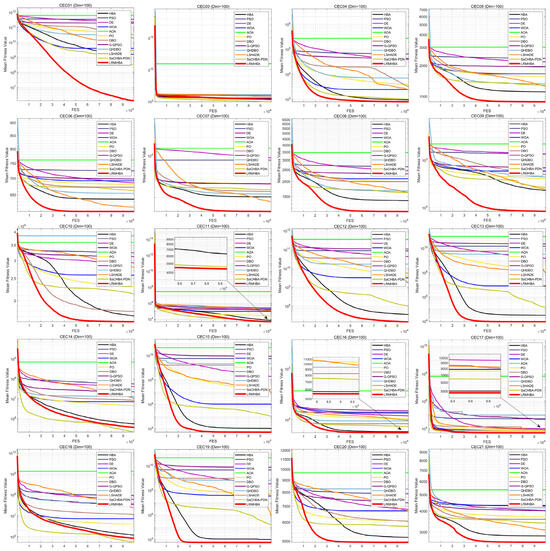

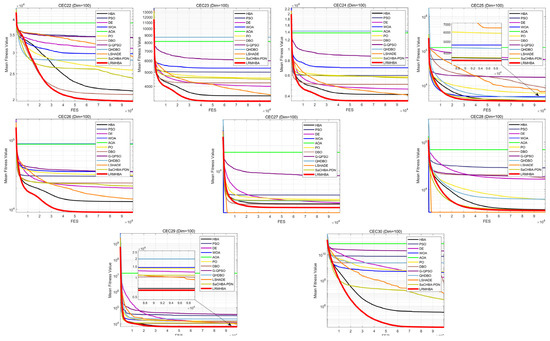

Figure 5 and Figure 6 display the convergence curves for every algorithm that was tested. Due to space limitations, only the convergence curves for 20 functions in the 30-dimensional case are presented. From the figures, it can be observed that LRMHBA achieved better average fitness values on most benchmark functions. Compared to the most competitive algorithms, LSHADE, HBA, and SaCHBA_PDN, LRMHBA generally converged faster than LSHADE and HBA. Although LRMHBA’s early-stage convergence speed was slower than SaCHBA_PDN, the latter was more prone to getting trapped in local optima. In contrast, LRMHBA demonstrated the ability to gradually converge to better values as the number of function evaluations increased, highlighting its superior global optimization capability.

Figure 5.

CEC2017 functions iteration curves (dim = 30).

Figure 6.

CEC2017 functions iteration curves (dim = 100).

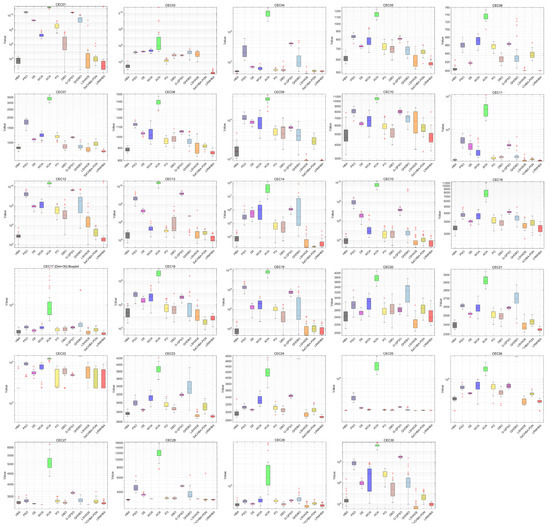

Figure 7 shows the boxplots of all algorithms on CEC2017 in 30 dimensions. Although LRMHBA has a few outliers on some test functions, its boxes are very short, and its median and mean rank first on most test functions, indicating that LRMHBA is highly stable.

Figure 7.

Boxplots of all the algorithms on CEC2017 (Dim = 30).

Table 5 shows the results of the Wilcoxon rank-sum test for the 100-dimensional problems. A p-value below 0.05 indicates a statistically significant difference between two algorithms. The symbols "+", "=", and "−" indicate that LRMHBA performs better than, equal to, or worse than the comparison algorithm, respectively. All p-values were below 0.05 except for two comparisons with LSHADE, indicating that the performance of LRMHBA differs significantly from that of the other methods.

Table 5.

Wilcoxon rank-sum test (Dim = 100).

5.2. Ablation Study

To validate the effectiveness of each improvement strategy in the LRMHBA algorithm, three modified versions of the HBA algorithm were constructed by integrating each of the proposed strategies separately. The descriptions of the three versions are as follows:

- LRMHBA1: HBA combined with Latin hypercube sampling and elite strategy.

- LRMHBA2: HBA combined with the stochastic perturbation strategy.

- LRMHBA3: HBA combined with a staged dual-population co-evolutionary strategy integrating multiple differential evolution variants.

The CEC2017 benchmark suite was used to test HBA, LRMHBA1, LRMHBA2, LRMHBA3, and LRMHBA in the 30-dimensional case. Thirty separate runs of each algorithm were conducted. Table 6 displays the outcomes of the experiment, where the best values among the five algorithms are highlighted in bold. Additionally, the mean ranks and rankings obtained from the Friedman test are provided at the bottom of the table.

Table 6.

Results of ablation experiment.

The results show that LRMHBA1, LRMHBA2, and LRMHBA3 each achieved the best values on some functions. However, LRMHBA, which integrates all three improvement strategies, demonstrated the best overall performance, obtaining the best values in 44 metrics and ranking first in the Friedman mean rank.

The experimental results indicate that each strategy contributed to the improvement of the algorithm. Latin hypercube sampling and the elite strategy ensured a more uniform population distribution and effectively guided the search process, enhancing search efficiency. The stochastic perturbation strategy prevented premature convergence of the population during the early stages of iteration. The dual-population mutation strategy, which combines multiple differential evolution approaches, adaptively selected different mutation strategies according to the evolutionary stage and population fitness. This approach effectively balanced the exploration and exploitation capabilities of the algorithm, thereby improving its global optimization ability.

5.3. Exploration and Exploitation Experiment

Exploration refers to the algorithm’s attempt to access new regions in the search space to discover potentially better solutions, while exploitation focuses resources on thoroughly searching a known promising region to find its optimal solution. Excessive exploration may lead to wasted computational resources, whereas excessive exploitation can result in premature convergence to a suboptimal solution, preventing the algorithm from escaping local optima. Consequently, a successful heuristic algorithm needs to properly balance exploration and exploitation.

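In this section, a dimension-wise diversity measurement method [60,61] is employed to evaluate how well LRMHBA balances exploration and exploitation. The corresponding formulas are as follows:

$$
Div_j = \frac{1}{N}\sum_{i=1}^{N}\left|\operatorname{median}\left(x^j\right) - x_i^j\right|, \qquad Div = \frac{1}{D}\sum_{j=1}^{D} Div_j
$$

$$
XPL\% = \frac{Div}{Div_{\max}} \times 100, \qquad XPT\% = \frac{\left|Div - Div_{\max}\right|}{Div_{\max}} \times 100
$$

where $Div$ denotes the diversity value of the population; $Div_{\max}$ is the maximum diversity value during the iteration; $N$ is the population size; $D$ is the dimensionality of the variables; $x_i^j$ is the position of the $j$th dimension of the $i$th individual; $\operatorname{median}(x^j)$ is the median of the $j$th variable across all individuals in the population; and $XPL\%$ and $XPT\%$ are the exploration and exploitation percentages.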
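The dimension-wise diversity described above, together with the usual exploration and exploitation percentages XPL% = 100·Div/Div_max and XPT% = 100·|Div − Div_max|/Div_max, can be computed from recorded populations as sketched below. This minimal sketch assumes that each population is stored as a list of position vectors and that one Div value is recorded per iteration; the function names are hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple


def dimension_diversity(pop: Sequence[Sequence[float]]) -> float:
    """Div: mean absolute deviation from the per-dimension median, averaged over D."""
    n, d = len(pop), len(pop[0])
    total = 0.0
    for j in range(d):
        column = [ind[j] for ind in pop]
        med = statistics.median(column)
        total += sum(abs(med - x) for x in column) / n  # Div_j
    return total / d


def exploration_exploitation(div_history: Sequence[float]) -> Tuple[List[float], List[float]]:
    """XPL% and XPT% per iteration, normalized by the maximum diversity observed."""
    div_max = max(div_history)
    if div_max == 0:
        raise ValueError("the population never had any diversity")
    xpl = [100.0 * div / div_max for div in div_history]
    xpt = [100.0 * abs(div - div_max) / div_max for div in div_history]
    return xpl, xpt
```

Because every recorded Div is at most Div_max, XPL% and XPT% always sum to 100 at each iteration, which is why the two curves in plots of this kind mirror each other.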

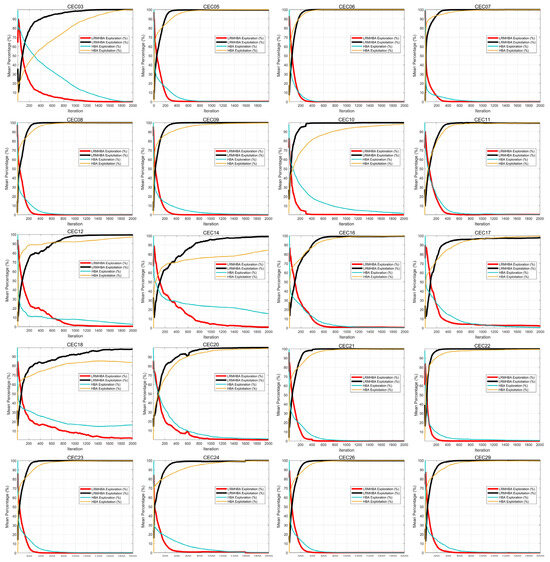

Due to space constraints, 20 functions from the CEC2017 test suite were selected for the comparison between LRMHBA and HBA: one unimodal function, six simple multimodal functions, seven hybrid functions, and six composition functions. The number of iterations was set to 2000, and each algorithm was run independently 30 times. Figure 8 displays the results.

Figure 8.

Exploration and exploitation curves of LRMHBA and HBA.

Figure 8 shows that LRMHBA exhibits strong exploration in the early iterations, allowing it to locate promising solutions across different regions of the solution space. As the iterations progress, LRMHBA gradually shifts from exploration to exploitation, refining high-quality solutions and ultimately converging near the global optimum.

In addition, LRMHBA converges faster than HBA, with its population approaching the optimal solution more quickly. This suggests that Latin hypercube sampling improves population uniformity and thereby the optimization efficiency of LRMHBA. Furthermore, the stochastic perturbation strategy and the dual-population strategy integrating multiple differential mutation methods effectively balance exploration and exploitation, improving the global optimization capability of the algorithm.

6. UAV Path Planning Simulation Experiments

In this section, we apply the proposed LRMHBA algorithm to UAV path planning to further analyze its performance.

6.1. Experimental Setup

We constructed three map scenarios of increasing complexity, each measuring 100 × 100 × 3 km. The comparison algorithms, which have performed well on this problem, are Particle Swarm Optimization (PSO), the Sparrow Search Algorithm (SSA), Harris Hawks Optimization (HHO), the Dung Beetle Optimizer (DBO), the Crested Porcupine Optimizer (CPO), the original HBA, and SaCHBA_PDN, an improved HBA variant designed for 3D UAV path planning. For every algorithm, the population size is set to 100 and the maximum number of iterations to 500. The remaining parameters of each algorithm are configured according to its original reference and are listed in Table 7. The starting point of the map is (5, 5, 0.3) and the target point is (90, 90, 0.8), with 8 intermediate waypoints. The mountain model and threat area parameters are given in Table 8. To reduce the influence of randomness, each algorithm is run independently 30 times. The evaluation metrics are the optimal value, average value, variance, and Friedman test ranking.
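The flight cost minimized in these experiments combines several terms defined with the environment model; as a small illustration, the sketch below covers only the geometric part. It assumes, hypothetically, that a candidate solution is a flat vector of the 8 intermediate waypoints in Cartesian coordinates (24 decision variables, in km). It decodes that vector into the full path from the start point (5, 5, 0.3) to the target point (90, 90, 0.8) and returns the polyline length. The encoding and the function names are illustrative assumptions, not the implementation used in this study.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

START: Point = (5.0, 5.0, 0.3)    # km, start point from the setup above
GOAL: Point = (90.0, 90.0, 0.8)   # km, target point from the setup above
N_WAYPOINTS = 8                   # intermediate waypoints from the setup above


def decode(x: Sequence[float]) -> List[Point]:
    """Assumed encoding: x = [x1, y1, z1, ..., x8, y8, z8]."""
    if len(x) != 3 * N_WAYPOINTS:
        raise ValueError(f"expected {3 * N_WAYPOINTS} values, got {len(x)}")
    waypoints = [(x[3 * k], x[3 * k + 1], x[3 * k + 2]) for k in range(N_WAYPOINTS)]
    return [START, *waypoints, GOAL]


def path_length(path: Sequence[Point]) -> float:
    """Sum of Euclidean segment lengths along the polyline."""
    return sum(math.dist(p, q) for p, q in zip(path, path[1:]))


def straight_line_vector() -> List[float]:
    """Evenly spaced waypoints on the start-goal segment (shortest possible length,
    ignoring terrain and threats)."""
    x: List[float] = []
    for k in range(1, N_WAYPOINTS + 1):
        t = k / (N_WAYPOINTS + 1)
        x.extend(s + t * (g - s) for s, g in zip(START, GOAL))
    return x
```

For example, `path_length(decode(straight_line_vector()))` equals the direct start-to-goal distance, about 120.2 km; any waypoint that leaves that segment, for instance to climb over a mountain or skirt a threat zone, makes the path longer, which is the trade-off the full cost function has to weigh.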

Table 7.

Parameters setting.

Table 8.

Mountain model parameters and threat model parameters.

6.2. Analysis of Experimental Results

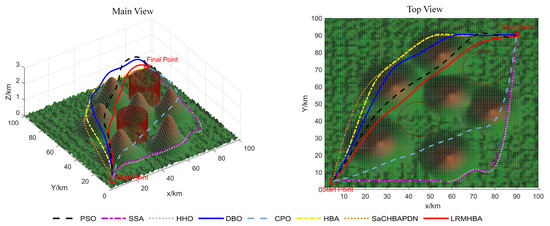

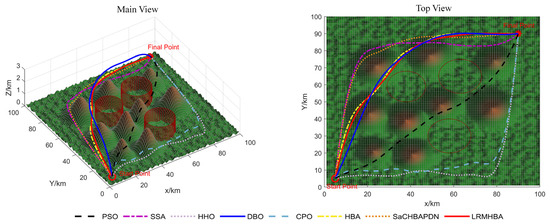

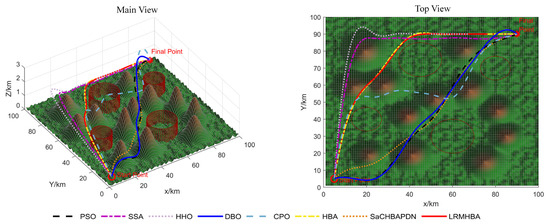

Figures 9, 10, and 11 display the optimal paths planned for the three scenarios, respectively, and Figure 12 shows the average cost convergence curves. All algorithms were able to find feasible paths. Figure 9 shows that LRMHBA found the shortest and smoothest optimal path in Scenario 1, the simplest scenario. Although it did not find the shortest path in Scenarios 2 and 3, Figure 12 shows that it achieved the lowest average path planning cost in all three scenarios, indicating good stability. Furthermore, in Scenario 1 and in Scenario 3, the most complex one, LRMHBA converged faster than the original HBA and found feasible paths within very few iterations. In Scenario 2, HBA converged slightly faster but became trapped in a local optimum.

Figure 9.

Front and top views of the optimal paths for Scenario 1.

Figure 10.

Front and top views of the optimal paths for Scenario 2.

Figure 11.

Front and top views of the optimal paths for Scenario 3.

Figure 12.

Convergence curves of the average flight cost in the three scenarios.

Table 9 summarizes the planning results for the three scenarios, with the best values shown in bold. LRMHBA finds the shortest path in Scenario 1, achieves the best average flight cost in all scenarios, and outperforms the original HBA on most metrics. These findings are consistent with the optimal trajectories and convergence curves shown above. The Sparrow Search Algorithm (SSA) shows the highest stability, consistently finding feasible paths, whereas HHO, PSO, and DBO are less robust. HHO yields very high mean values and variances in all scenarios, and both PSO and DBO perform poorly in the more complex Scenarios 2 and 3 (with very high means and variances), indicating that these algorithms frequently suffer search failures in challenging environments. Finally, LRMHBA ranks first in the Friedman test, confirming its adaptability in generating efficient, smooth flight trajectories in both simple and complex three-dimensional obstacle environments.

Table 9.

Path planning results.

7. Conclusions

This study presents an enhanced Honey Badger Algorithm (LRMHBA) for three-dimensional UAV path planning. The proposed algorithm integrates Latin hypercube sampling with an elite preservation mechanism during population initialization, mitigating the low search efficiency caused by the uneven population distribution of conventional random initialization. To strengthen global optimization capability, a stochastic perturbation mechanism derived from the whale optimization algorithm is incorporated. Furthermore, an elite-guided dual-population cooperative evolution framework is developed by adaptively combining multiple differential mutation strategies; it dynamically balances global exploration and local exploitation throughout the evolutionary process while maintaining optimization stability.
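The whale-derived perturbation can be pictured with the random-search update of the whale optimization algorithm [12], X_new = X_rand − A·|C·X_rand − X|, where A = 2a·r1 − a, C = 2·r2, r1 and r2 are uniform random numbers in [0, 1), and a decreases linearly from 2 to 0. The sketch below implements that standard WOA update with bound clipping. Whether LRMHBA uses exactly this form, and when it is triggered, is an assumption here, and the function name is hypothetical.

```python
import random
from typing import List, Sequence

Vector = List[float]


def woa_random_perturbation(x: Sequence[float], pop: Sequence[Vector], t: int, t_max: int,
                            lb: Sequence[float], ub: Sequence[float],
                            rng: random.Random) -> Vector:
    """WOA-style random-search move of x relative to a randomly chosen individual."""
    a = 2.0 * (1.0 - t / t_max)          # decreases linearly from 2 to 0
    x_rand = rng.choice(pop)
    new = []
    for j, (xj, rj) in enumerate(zip(x, x_rand)):
        A = 2.0 * a * rng.random() - a   # A in [-a, a)
        C = 2.0 * rng.random()           # C in [0, 2)
        v = rj - A * abs(C * rj - xj)
        new.append(min(max(v, lb[j]), ub[j]))  # keep the move inside the bounds
    return new
```

Early on, |A| can exceed 1, so the move may jump far from both X and X_rand, which is what lets a stagnating individual escape a local optimum; as a shrinks, the move collapses toward X_rand.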

Comprehensive evaluations on the CEC2017 benchmark suite demonstrate LRMHBA’s superior convergence speed and solution accuracy on both low- and high-dimensional problems, and LRMHBA achieved the highest Friedman ranking among the competing algorithms. Wilcoxon rank-sum tests, ablation analysis, and exploration-exploitation measurements confirm three key points: (1) its performance differs significantly from that of the other algorithms, (2) each proposed enhancement strategy is effective, and (3) it achieves a better balance between exploration and exploitation.

Three-dimensional path planning simulations in scenarios of varying complexity, including steep terrain and threat-laden airspace, show that LRMHBA consistently generates flight trajectories with the lowest average cost. The algorithm ranks first in the Friedman test, with its clearest advantage in the simplest scenario.

Future research will address dynamic real-time path replanning and cooperative multi-UAV optimization to strengthen practical engineering applications. The general structure of the algorithm also suggests possible extensions to industrial robot trajectory optimization, autonomous vehicle navigation, flexible manufacturing scheduling, and computer vision segmentation tasks.

Author Contributions

Conceptualization, X.T. and C.J.; methodology, X.T.; software, X.T. and C.J.; validation, X.T. and Z.H.; data curation, Z.H.; writing – original draft preparation, X.T.; writing – review and editing, X.T. and C.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Programme Projects in Meishan city of China (2024KJZD162).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are included in the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

CEC2017 Test Functions.


| Type | No. | Function | Optimal value |
|---|---|---|---|
| Unimodal Functions | 1 | Shifted and Rotated Bent Cigar Function | 100 |
| | 3 | Shifted and Rotated Zakharov Function | 300 |
| Simple Multimodal Functions | 4 | Shifted and Rotated Rosenbrock’s Function | 400 |
| | 5 | Shifted and Rotated Rastrigin’s Function | 500 |
| | 6 | Shifted and Rotated Expanded Scaffer’s F6 Function | 600 |
| | 7 | Shifted and Rotated Lunacek Bi_Rastrigin Function | 700 |
| | 8 | Shifted and Rotated Non-Continuous Rastrigin’s Function | 800 |
| | 9 | Shifted and Rotated Levy Function | 900 |
| | 10 | Shifted and Rotated Schwefel’s Function | 1000 |
| Hybrid Functions | 11 | Hybrid Function 1 (N = 3) | 1100 |
| | 12 | Hybrid Function 2 (N = 3) | 1200 |
| | 13 | Hybrid Function 3 (N = 3) | 1300 |
| | 14 | Hybrid Function 4 (N = 4) | 1400 |
| | 15 | Hybrid Function 5 (N = 4) | 1500 |
| | 16 | Hybrid Function 6 (N = 4) | 1600 |
| | 17 | Hybrid Function 7 (N = 5) | 1700 |
| | 18 | Hybrid Function 8 (N = 5) | 1800 |
| | 19 | Hybrid Function 9 (N = 5) | 1900 |
| | 20 | Hybrid Function 10 (N = 6) | 2000 |
| Composition Functions | 21 | Composition Function 1 (N = 3) | 2100 |
| | 22 | Composition Function 2 (N = 3) | 2200 |
| | 23 | Composition Function 3 (N = 4) | 2300 |
| | 24 | Composition Function 4 (N = 4) | 2400 |
| | 25 | Composition Function 5 (N = 5) | 2500 |
| | 26 | Composition Function 6 (N = 5) | 2600 |
| | 27 | Composition Function 7 (N = 6) | 2700 |
| | 28 | Composition Function 8 (N = 6) | 2800 |
| | 29 | Composition Function 9 (N = 3) | 2900 |
| | 30 | Composition Function 10 (N = 3) | 3000 |

References

1. Puente-Castro, A.; Rivero, D.; Pazos, A.; Fernandez-Blanco, E. A review of artificial intelligence applied to path planning in UAV swarms. Neural Comput. Appl. 2022, 34, 153–170.
2. Jones, M.; Djahel, S.; Welsh, K. Path-planning for unmanned aerial vehicles with environment complexity considerations: A survey. ACM Comput. Surv. 2023, 55, 1–39.
3. Hart, P.E.; Nilsson, N.J.; Raphael, B. A formal basis for the heuristic determination of minimum cost paths. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 100–107.
4. Khatib, O. Real-time obstacle avoidance for manipulators and mobile robots. Int. J. Robot. Res. 1986, 5, 90–98.
5. Nasir, J.; Islam, F.; Ayaz, Y. Adaptive Rapidly-Exploring-Random-Tree-Star (RRT*)-Smart: Algorithm Characteristics and Behavior Analysis in Complex Environments. Asia-Pac. J. Inf. Technol. Multimed. 2013, 2, 39–51.
6. Desale, S.; Rasool, A.; Andhale, S.; Rane, P. Heuristic and meta-heuristic algorithms and their relevance to the real world: A survey. Int. J. Comput. Eng. Res. Trends 2015, 2, 296–304.
7. Sadeghian, Z.; Akbari, E.; Nematzadeh, H.; Motameni, H. A review of feature selection methods based on meta-heuristic algorithms. J. Exp. Theor. Artif. Intell. 2025, 37, 1–51.
8. Hashim, F.A.; Mostafa, R.R.; Hussien, A.G.; Mirjalili, S.; Sallam, K.M. Fick’s Law Algorithm: A physical law-based algorithm for numerical optimization. Knowl.-Based Syst. 2023, 260, 110146.
9. Hu, G.; Cheng, M.; Houssein, E.H.; Hussien, A.G.; Abualigah, L. SDO: A novel sled dog-inspired optimizer for solving engineering problems. Adv. Eng. Inform. 2024, 62, 102783.
10. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995.
11. Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471.
12. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.
13. Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.
14. Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control Eng. 2020, 8, 22–34.
15. Xue, J.; Shen, B. Dung beetle optimizer: A new meta-heuristic algorithm for global optimization. J. Supercomput. 2023, 79, 7305–7336.
16. Abdel-Basset, M.; Mohamed, R.; Abouhawwash, M. Crested Porcupine Optimizer: A new nature-inspired metaheuristic. Knowl.-Based Syst. 2024, 284, 111257.
17. Li, Y.; Zhang, L.; Cai, B.; Liang, Y. Unified path planning for composite UAVs via Fermat point-based grouping particle swarm optimization. Aerosp. Sci. Technol. 2024, 148, 109088.
18. Han, Z.; Chen, M.; Shao, S.; Wu, Q. Improved artificial bee colony algorithm-based path planning of unmanned autonomous helicopter using multi-strategy evolutionary learning. Aerosp. Sci. Technol. 2022, 122, 107374.
19. Dai, Y.; Yu, J.; Zhang, C.; Zhan, B.; Zheng, X. A novel whale optimization algorithm of path planning strategy for mobile robots. Appl. Intell. 2023, 53, 10843–10857.
20. Tang, C.; Li, W.; Han, T.; Yu, L.; Cui, T. Multi-Strategy Improved Harris Hawk Optimization Algorithm and Its Application in Path Planning. Biomimetics 2024, 9, 552.
21. Cheng, L.; Ling, G.; Liu, F.; Ge, M. Application of uniform experimental design theory to multi-strategy improved sparrow search algorithm for UAV path planning. Expert Syst. Appl. 2024, 255, 124849.
22. Chen, Q.; Wang, Y.; Sun, Y. An improved dung beetle optimizer for UAV 3D path planning. J. Supercomput. 2024, 80, 26537–26567.
23. Liu, S.; Jin, Z.; Lin, H.; Lu, H. An improve crested porcupine algorithm for UAV delivery path planning in challenging environments. Sci. Rep. 2024, 14, 20445.
24. Hashim, F.; Houssein, E.; Hussain, K.; Mabrouk, M.; Al-Atabany, W. Honey Badger Algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 192, 84–110.
25. Abasi, A.K.; Aloqaily, M.; Guizani, M. Optimization of CNN using modified honey badger algorithm for sleep apnea detection. Expert Syst. Appl. 2023, 229, 120484.
26. Nassef, A.; Houssein, E.; Helmy, B.; Rezk, H. Modified honey badger algorithm based global MPPT for triple-junction solar photovoltaic system under partial shading condition and global optimization. Energy 2022, 254, 124363.
27. Dao, T.; Nguyen, T.; Nguyen, V. An improved honey badger algorithm for coverage optimization in wireless sensor network. J. Internet Technol. 2023, 24, 363–377.
28. Houssein, E.; Emam, M.; Singh, N.; Samee, N.; Alabdulhafith, M.; Çelik, E. An improved honey badger algorithm for global optimization and multilevel thresholding segmentation: Real case with brain tumor images. Clust. Comput. 2024, 27, 14315–14364.
29. Jain, D.K.; Ding, W.; Kotecha, K. Training fuzzy deep neural network with honey badger algorithm for intrusion detection in cloud environment. Int. J. Mach. Learn. Cybern. 2023, 14, 2221–2237.
30. Xu, Y.; Zhong, R.; Cao, Y.; Zhang, C.; Yu, J. Symbiotic mechanism-based honey badger algorithm for continuous optimization. Clust. Comput. 2025, 28, 133.
31. Düzenli, T.; Onay, F.K.; Aydemir, S.B. Improved honey badger algorithms for parameter extraction in photovoltaic models. Optik 2022, 268, 169731.
32. Bansal, B.; Sahoo, A. Enhanced honey badger algorithm for multi-view subspace clustering based on consensus representation. Soft Comput. 2024, 28, 13307–13329.
33. Hu, G.; Zhong, J.; Guo, W. SaCHBA_PDN: Modified honey badger algorithm with multi-strategy for UAV path planning. Expert Syst. Appl. 2023, 223, 119941.
34. Zhang, X.; Duan, H. An improved constrained differential evolution algorithm for unmanned aerial vehicle global route planning. Appl. Soft Comput. 2015, 26, 270–284.
35. Service, T. A No Free Lunch theorem for multi-objective optimization. Inf. Process. Lett. 2010, 110, 917–923.
36. Abasi, A.K.; Aloqaily, M.; Guizani, M. Bare-bones based honey badger algorithm of CNN for Sleep Apnea detection. Clust. Comput. 2024, 27, 6145–6165.
37. Fathy, A.; Rezk, H.; Ferahtia, S.; Ghoniem, R.; Alkanhel, R. An efficient honey badger algorithm for scheduling the microgrid energy management. Energy Rep. 2023, 9, 2058–2074.
38. Han, E.; Ghadimi, N. Model identification of proton-exchange membrane fuel cells based on a hybrid convolutional neural network and extreme learning machine optimized by improved honey badger algorithm. Sustain. Energy Technol. Assess. 2022, 52, 102005.
39. Tan, Y.; Liu, S.; Zhang, L.; Song, J.; Ren, Y. The Application of an Improved LESS Dung Beetle Optimization in the Intelligent Topological Reconfiguration of Ship Power Systems. J. Mar. Sci. Eng. 2024, 12, 1843.
40. Wang, K.; Si, P.; Chen, L.; Li, Z. 3D Path Planning of Unmanned Aerial Vehicle Based on Enhanced Sand Cat Swarm Optimization Algorithm. Acta Armamentarii 2023, 44, 3382–3393.
41. Ning, Y.; Zheng, B.; Long, Z.; Luo, J. Complex 3D Path Planning for UAVs Based on CMPSO Algorithm. Electron. Opt. Control 2024, 31, 35–42.
42. Luo, J.; Tian, Y.; Wang, Z. Research on Unmanned Aerial Vehicle Path Planning. Drones 2024, 8, 51.
43. Zhou, X.; Tang, Z.; Wang, N.; Yang, C.; Huang, T. A novel state transition algorithm with adaptive fuzzy penalty for multi-constraint UAV path planning. Expert Syst. Appl. 2024, 248, 123481.
44. McKay, M.D. Latin hypercube sampling as a tool in uncertainty analysis of computer models. In Proceedings of the 24th Conference on Winter Simulation, Arlington, VA, USA, 13–16 December 1992.
45. Stein, M. Large sample properties of simulations using Latin hypercube sampling. Technometrics 1987, 29, 143–151.
46. Storn, R. On the usage of differential evolution for function optimization. In Proceedings of the North American Fuzzy Information Processing, Berkeley, CA, USA, 19–22 June 1996.
47. Storn, R.; Price, K. Differential evolution – a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.
48. Gämperle, R.; Müller, S.D.; Koumoutsakos, P. A parameter study for differential evolution. Adv. Intell. Syst. Fuzzy Syst. Evol. Comput. 2002, 10, 293–298.
49. Yu, W.; Shen, M.; Chen, W.; Zhan, Z.; Gong, Y.; Lin, Y.; Zhang, J. Differential evolution with two-level parameter adaptation. IEEE Trans. Cybern. 2013, 44, 1080–1099.
50. Li, Y.; Wang, S.; Yang, B. An improved differential evolution algorithm with dual mutation strategies collaboration. Expert Syst. Appl. 2020, 153, 113451.
51. Zuo, M.; Guo, C. DE/current-to-better/1: A new mutation operator to keep population diversity. Intell. Syst. Appl. 2022, 14, 200063.
52. Rauf, H.; Gao, J.; Almadhor, A.; Haider, A.; Zhang, Y.; Al-Turjman, F. Multi population-based chaotic differential evolution for multi-modal and multi-objective optimization problems. Appl. Soft Comput. 2023, 132, 109909.
53. Layeb, A. Differential evolution algorithms with novel mutations, adaptive parameters, and Weibull flight operator. Soft Comput. 2024, 28, 7039–7091.
54. Price, K. Differential evolution: A fast and simple numerical optimizer. In Proceedings of the North American Fuzzy Information Processing, Berkeley, CA, USA, 19–22 June 1996.
55. Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.
56. dos Santos Coelho, L. Gaussian quantum-behaved particle swarm optimization approaches for constrained engineering design problems. Expert Syst. Appl. 2010, 37, 1676–1683.
57. Lian, J.; Hui, G.; Ma, L.; Zhu, T.; Wu, X.; Heidari, A.A.; Chen, Y.; Chen, H. Parrot optimizer: Algorithm and applications to medical problems. Comput. Biol. Med. 2024, 172, 108064.
58. Zhu, F.; Li, G.; Tang, H.; Li, Y.; Lv, X.; Wang, X. Dung beetle optimization algorithm based on quantum computing and multi-strategy fusion for solving engineering problems. Expert Syst. Appl. 2024, 236, 121219.
59. Tanabe, R.; Fukunaga, A.S. Improving the search performance of SHADE using linear population size reduction. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014.
60. Cheng, S.; Shi, Y.; Qin, Q.; Zhang, Q.; Bai, R. Population diversity maintenance in brain storm optimization algorithm. J. Artif. Intell. Soft Comput. Res. 2014, 4, 83–97.
61. Morales-Castañeda, B.; Zaldivar, D.; Cuevas, E.; Fausto, F.; Rodríguez, A. A better balance in metaheuristic algorithms: Does it exist? Swarm Evol. Comput. 2020, 54, 100671.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).