Fine-Grained Image Recognition with Bio-Inspired Gradient-Aware Attention

Abstract

1. Introduction

- We propose an efficient method for constructing gradient-aware spatial weight maps. It encodes high-frequency directional cues at low computational cost by fusing multi-directional attention in a single step.

- We propose injecting compact gradient tokens into the Transformer, which lets the model explicitly use high-frequency local information while retaining its global semantic modeling capability.

- We evaluate the proposed method on four widely used fine-grained benchmarks, CUB-200-2011, iNaturalist 2018, NABirds, and Stanford Cars, where it achieves top-1 accuracies of 92.9%, 90.5%, 93.1%, and 95.1%, respectively.
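To make the first two contributions concrete, the following is a minimal sketch and not the authors' implementation. It assumes a standard 3×3 Sobel operator with replicated borders, a gradient-magnitude map rescaled to [0, 1] as the "spatial weight map", and plain average pooling to obtain a handful of scalar "gradient tokens". The multi-directional attention fusion and the learned token embeddings of the actual model are not reproduced; all function names and the placeholder `patch_tokens` are hypothetical.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical intensity changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def correlate3x3(img, kernel):
    """Cross-correlate a 2-D list with a 3x3 kernel, replicating border pixels."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    yy = min(max(y + ky - 1, 0), h - 1)  # clamp row index
                    xx = min(max(x + kx - 1, 0), w - 1)  # clamp column index
                    acc += kernel[ky][kx] * img[yy][xx]
            out[y][x] = acc
    return out


def gradient_weight_map(img):
    """Gradient magnitude rescaled to [0, 1]; stays all-zero for a flat image."""
    gx = correlate3x3(img, SOBEL_X)
    gy = correlate3x3(img, SOBEL_Y)
    mag = [[math.hypot(a, b) for a, b in zip(rx, ry)] for rx, ry in zip(gx, gy)]
    peak = max(max(row) for row in mag)
    if peak == 0:
        return mag
    return [[v / peak for v in row] for row in mag]


def gradient_tokens(weight_map, grid):
    """Average-pool the weight map over a grid x grid layout (grid*grid scalars)."""
    h, w = len(weight_map), len(weight_map[0])
    cell_h, cell_w = h // grid, w // grid
    tokens = []
    for row in range(grid):
        for col in range(grid):
            cell = [weight_map[y][x]
                    for y in range(row * cell_h, (row + 1) * cell_h)
                    for x in range(col * cell_w, (col + 1) * cell_w)]
            tokens.append(sum(cell) / len(cell))
    return tokens


# Toy 8x8 image: dark left half, bright right half, i.e. one vertical edge.
image = [[0.0] * 4 + [1.0] * 4 for _ in range(8)]
wmap = gradient_weight_map(image)
tokens = gradient_tokens(wmap, grid=2)

# Hypothetical stand-in for image patch tokens; the gradient tokens are
# appended so that a Transformer encoder would attend over both kinds.
patch_tokens = [0.0] * 4
sequence = patch_tokens + tokens
```

On this toy image the weight map equals 1.0 on the two columns that straddle the edge and 0 elsewhere, so each of the four pooled tokens equals 0.25. In a real model the tokens would be vectors produced by a learned projection rather than scalars.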

2. Related Work

2.1. Fine-Grained Image Recognition

2.2. Attention Mechanism

3. Materials and Methods

3.1. Image Feature Tokenization

3.2. Gradient Feature Tokenization

3.2.1. Gradient Feature Extraction

3.2.2. Directional Attention Construction

3.2.3. Attention Reconstruction and Gradient Weighting

3.2.4. Gradient Token Generation

3.3. Token Collaborative Encoding Unit

4. Results and Discussion

4.1. Datasets

4.2. Experimental Details

4.3. Comparison with SOTA Methods

4.3.1. iNaturalist 2018

4.3.2. CUB-200-2011

4.3.3. NABirds

4.3.4. Stanford Cars

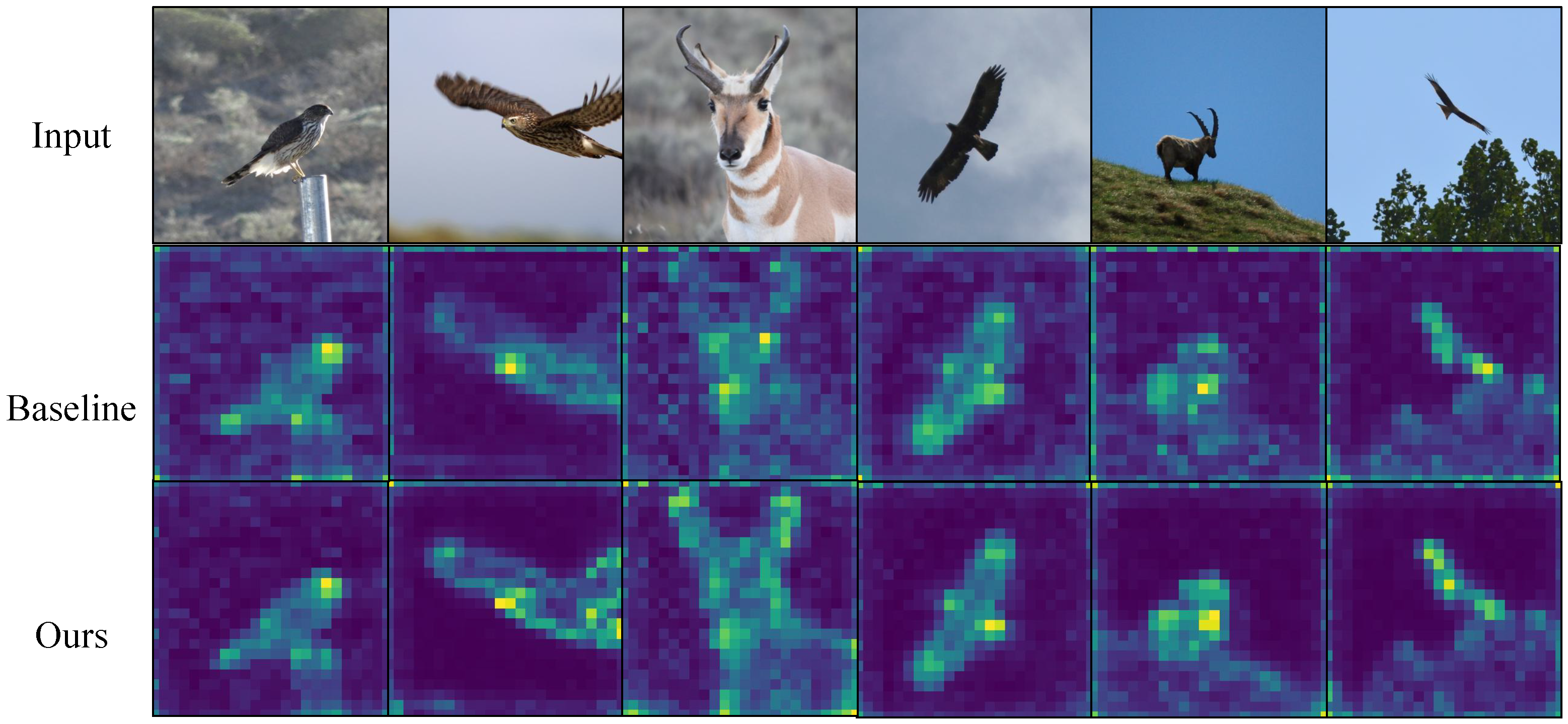

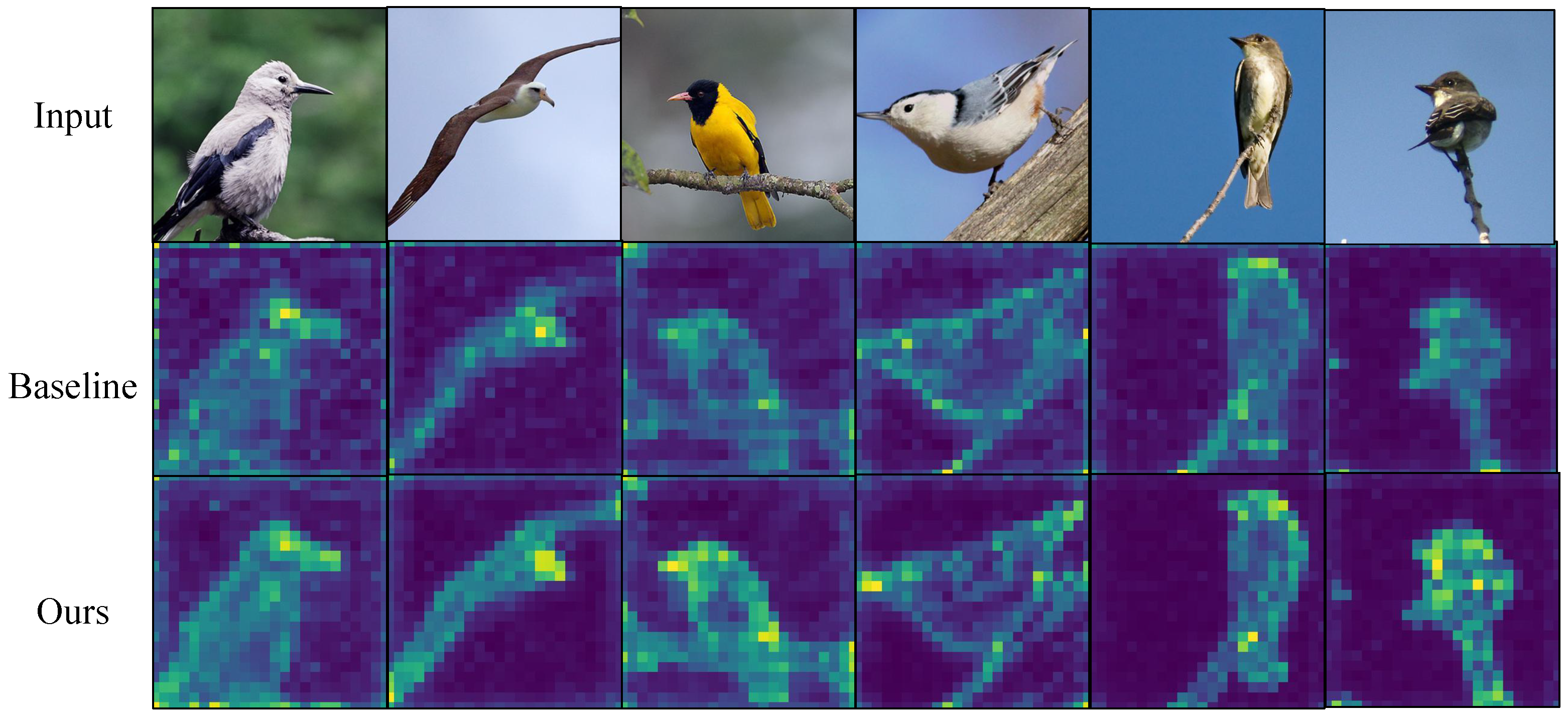

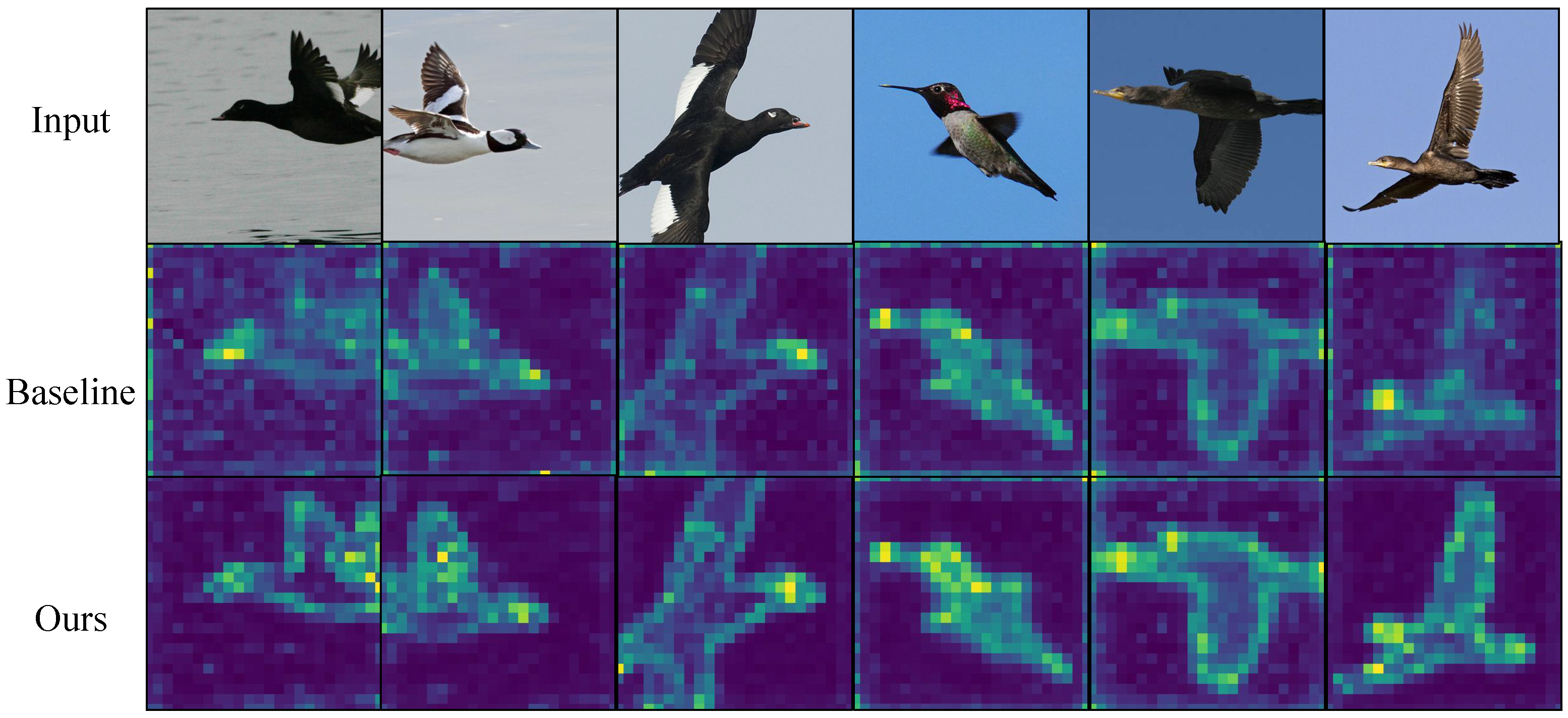

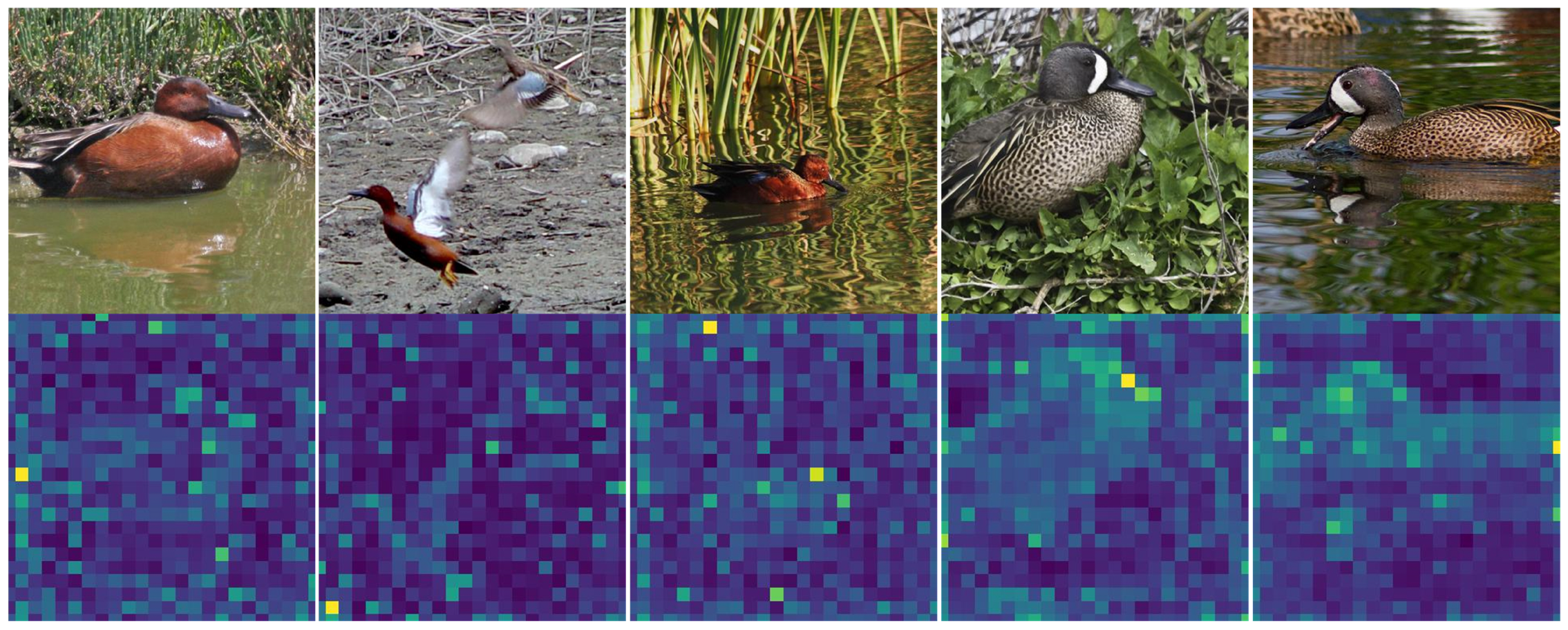

4.4. Visualization Analysis

4.5. Ablation Study

4.5.1. Effectiveness of the Proposed Module

4.5.2. Impact of the Number of Gradient Tokens

4.5.3. Analysis of Hard Cases

4.5.4. Model Complexity Analysis

4.5.5. Sensitivity Analysis of Sobel Kernel Size

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zheng, H.; Fu, J.; Zha, Z.J.; Luo, J. Learning deep bilinear transformation for fine-grained image representation. Adv. Neural Inf. Process. Syst. 2019, 32. Available online: https://arxiv.org/abs/1911.03621 (accessed on 10 December 2025).

2. He, J.; Chen, J.N.; Liu, S.; Kortylewski, A.; Yang, C.; Bai, Y.; Wang, C. Transfg: A transformer architecture for fine-grained recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 852–860.

3. Diao, Q.; Jiang, Y.; Wen, B.; Sun, J.; Yuan, Z. Metaformer: A unified meta framework for fine-grained recognition. arXiv 2022, arXiv:2203.02751.

4. Zhu, L.; Chen, T.; Yin, J.; See, S.; Liu, J. Learning gabor texture features for fine-grained recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 1621–1631.

5. Wang, J.; Xu, Q.; Jiang, B.; Luo, B.; Tang, J. Multi-Granularity Part Sampling Attention for Fine-Grained Visual Classification. IEEE Trans. Image Process. 2024, 33, 4529–4542.

6. Chou, P.Y.; Kao, Y.Y.; Lin, C.H. Fine-grained visual classification with high-temperature refinement and background suppression. arXiv 2023, arXiv:2303.06442.

7. Moran, J.; Desimone, R. Selective attention gates visual processing in the extrastriate cortex. Science 1985, 229, 782–784.

8. Treisman, A.M.; Gelade, G. A feature-integration theory of attention. Cogn. Psychol. 1980, 12, 97–136.

9. He, X.; Peng, Y. Fine-grained image classification via combining vision and language. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5994–6002.

10. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019.

11. Xu, Q.; Wang, J.; Jiang, B.; Luo, B. Fine-grained visual classification via internal ensemble learning transformer. IEEE Trans. Multimed. 2023, 25, 9015–9028.

12. Chu, G.; Potetz, B.; Wang, W.; Howard, A.; Song, Y.; Brucher, F.; Leung, T.; Adam, H. Geo-aware networks for fine-grained recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

13. Ding, Y.; Ma, Z.; Wen, S.; Xie, J.; Chang, D.; Si, Z.; Wu, M.; Ling, H. AP-CNN: Weakly supervised attention pyramid convolutional neural network for fine-grained visual classification. IEEE Trans. Image Process. 2021, 30, 2826–2836.

14. Do, T.; Tran, H.; Tjiputra, E.; Tran, Q.D.; Nguyen, A. Fine-grained visual classification using self assessment classifier. In Proceedings of the 2024 IEEE Conference on Artificial Intelligence (CAI), Singapore, 25–27 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 597–602.

15. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. Available online: https://arxiv.org/abs/1706.03762 (accessed on 10 December 2025).

16. Khan, R.; Raisa, T.F.; Debnath, R. An efficient contour based fine-grained algorithm for multi category object detection. J. Image Graph. 2018, 6, 127–136.

17. Luo, W.; Yang, X.; Mo, X.; Lu, Y.; Davis, L.S.; Li, J.; Yang, J.; Lim, S.N. Cross-x learning for fine-grained visual categorization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8242–8251.

18. Han, J.; Yao, X.; Cheng, G.; Feng, X.; Xu, D. P-CNN: Part-Based Convolutional Neural Networks for Fine-Grained Visual Categorization. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 579–590.

19. Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. In Proceedings of the International Conference on Learning Representations (ICLR), Banff, AB, Canada, 14–16 April 2014.

20. Luong, M.T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. arXiv 2015, arXiv:1508.04025.

21. Radford, A.; Narasimhan, K. Improving Language Understanding by Generative Pre-Training. arXiv 2018, in press.

22. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

23. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

24. Zhu, H.; Ke, W.; Li, D.; Liu, J.; Tian, L.; Shan, Y. Dual cross-attention learning for fine-grained visual categorization and object re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 4692–4702.

25. Tang, Y.; Han, K.; Guo, J.; Xu, C.; Li, Y.; Xu, C.; Wang, Y. An image patch is a wave: Phase-aware vision mlp. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10935–10944.

26. Keshun, Y.; Yingkui, G.; Yanghui, L.; Yajun, W. A novel physical constraint-guided quadratic neural networks for interpretable bearing fault diagnosis under zero-fault sample. Nondestruct. Test. Eval. 2025, 1–31.

27. Chen, Z.; Yang, J.; Chen, L.; Li, F.; Feng, Z.; Jia, L.; Li, P. RailVoxelDet: A Lightweight 3-D Object Detection Method for Railway Transportation Driven by Onboard LiDAR Data. IEEE Internet Things J. 2025, 12, 37175–37189.

28. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

29. Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pvt v2: Improved baselines with pyramid vision transformer. Comput. Vis. Media 2022, 8, 415–424.

30. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

31. Van Horn, G.; Mac Aodha, O.; Song, Y.; Cui, Y.; Sun, C.; Shepard, A.; Adam, H.; Perona, P.; Belongie, S. The inaturalist species classification and detection dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8769–8778.

32. Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset; Technical Report CNS-TR-2011-001; California Institute of Technology: Pasadena, CA, USA, 2011.

33. Van Horn, G.; Branson, S.; Farrell, R.; Haber, S.; Barry, J.; Ipeirotis, P.; Perona, P.; Belongie, S. Building a bird recognition app and large scale dataset with citizen scientists: The fine print in fine-grained dataset collection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 595–604.

34. Krause, J.; Stark, M.; Deng, J.; Fei-Fei, L. 3d object representations for fine-grained categorization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 1–8 December 2013; pp. 554–561.

35. Park, S.; Hong, Y.; Heo, B.; Yun, S.; Choi, J.Y. The majority can help the minority: Context-rich minority oversampling for long-tailed classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6887–6896.

36. Touvron, H.; Cord, M.; El-Nouby, A.; Verbeek, J.; Jégou, H. Three things everyone should know about vision transformers. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 497–515.

37. Cui, J.; Zhong, Z.; Tian, Z.; Liu, S.; Yu, B.; Jia, J. Generalized parametric contrastive learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 7463–7474.

38. Liu, J.; Huang, X.; Zheng, J.; Liu, Y.; Li, H. MixMAE: Mixed and masked autoencoder for efficient pretraining of hierarchical vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6252–6261.

39. Mac Aodha, O.; Cole, E.; Perona, P. Presence-only geographical priors for fine-grained image classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 9596–9606.

40. Touvron, H.; Cord, M.; Sablayrolles, A.; Synnaeve, G.; Jégou, H. Going deeper with image transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 32–42.

41. Tian, C.; Wang, W.; Zhu, X.; Dai, J.; Qiao, Y. Vl-ltr: Learning class-wise visual-linguistic representation for long-tailed visual recognition. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 73–91.

42. Kim, D.; Heo, B.; Han, D. DenseNets reloaded: Paradigm shift beyond ResNets and ViTs. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 395–415.

43. Girdhar, R.; Singh, M.; Ravi, N.; Van Der Maaten, L.; Joulin, A.; Misra, I. Omnivore: A single model for many visual modalities. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16102–16112.

44. Singh, M.; Gustafson, L.; Adcock, A.; Reis, V.d.F.; Gedik, B.; Kosaraju, R.P.; Mahajan, D.; Girshick, R.; Dollár, P.; van der Maaten, L. Revisiting weakly supervised pre-training of visual perception models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 804–814.

45. He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16000–16009.

46. Ryali, C.; Hu, Y.T.; Bolya, D.; Wei, C.; Fan, H.; Huang, P.Y.; Aggarwal, V.; Chowdhury, A.; Poursaeed, O.; Hoffman, J.; et al. Hiera: A hierarchical vision transformer without the bells-and-whistles. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; PMLR: Birmingham, UK, 2023; pp. 29441–29454.

47. Liu, H.; Zhang, C.; Deng, Y.; Xie, B.; Liu, T.; Li, Y.F. TransIFC: Invariant cues-aware feature concentration learning for efficient fine-grained bird image classification. IEEE Trans. Multimed. 2023, 27, 1677–1690.

48. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks; IEEE Computer Society: Washington, DC, USA, 2016.

49. Chang, D.; Tong, Y.; Du, R.; Hospedales, T.; Song, Y.-Z.; Ma, Z. An Erudite Fine-Grained Visual Classification Model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

50. Liu, M.; Zhang, C.; Bai, H.; Zhang, R.; Zhao, Y. Cross-part learning for fine-grained image classification. IEEE Trans. Image Process. 2021, 31, 748–758.

51. Zhao, P.; Yang, S.; Ding, W.; Liu, R.; Xin, W.; Liu, X.; Miao, Q. Learning Multi-Scale Attention Network for Fine-Grained Visual Classification. J. Inf. Intell. 2025, 3, 492–503.

52. Rao, Y.; Chen, G.; Lu, J.; Zhou, J. Counterfactual attention learning for fine-grained visual categorization and re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1025–1034.

53. Tang, Z.; Yang, H.; Chen, C.Y.C. Weakly supervised posture mining for fine-grained classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 23735–23744.

54. Kim, S.; Nam, J.; Ko, B.C. Vit-net: Interpretable vision transformers with neural tree decoder. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; PMLR: Birmingham, UK, 2022; pp. 11162–11172.

55. Jiang, X.; Tang, H.; Gao, J.; Du, X.; He, S.; Li, Z. Delving into multimodal prompting for fine-grained visual classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 2570–2578.

56. Zhang, Z.C.; Chen, Z.D.; Wang, Y.; Luo, X.; Xu, X.S. A vision transformer for fine-grained classification by reducing noise and enhancing discriminative information. Pattern Recognit. 2024, 145, 109979.

57. Bi, Q.; Zhou, B.; Ji, W.; Xia, G.S. Universal Fine-grained Visual Categorization by Concept Guided Learning. IEEE Trans. Image Process. 2025, 34, 394–409.

58. Zhao, Y.; Yan, K.; Huang, F.; Li, J. Graph-based high-order relation discovery for fine-grained recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15079–15088.

59. Song, Y.; Sebe, N.; Wang, W. On the Eigenvalues of Global Covariance Pooling for Fine-Grained Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3554–3566.

60. Du, R.; Xie, J.; Ma, Z.; Chang, D.; Song, Y.Z.; Guo, J. Progressive learning of category-consistent multi-granularity features for fine-grained visual classification. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 9521–9535.

61. Ke, X.; Cai, Y.; Chen, B.; Liu, H.; Guo, W. Granularity-aware distillation and structure modeling region proposal network for fine-grained image classification. Pattern Recognit. 2023, 137, 109305.

62. Shi, Y.; Hong, Q.; Yan, Y.; Li, J. LDH-ViT: Fine-grained visual classification through local concealment and feature selection. Pattern Recognit. 2025, 161, 111224.

63. Imran, A.; Athitsos, V. Domain adaptive transfer learning on visual attention aware data augmentation for fine-grained visual categorization. In Proceedings of the Advances in Visual Computing: 15th International Symposium, ISVC 2020, San Diego, CA, USA, 5–7 October 2020; Proceedings, Part II 15. Springer: Berlin/Heidelberg, Germany, 2020; pp. 53–65.

64. Yao, H.; Miao, Q.; Zhao, P.; Li, C.; Li, X.; Feng, G.; Liu, R. Exploration of class center for fine-grained visual classification. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 9954–9966.

65. Yu, W.; Luo, M.; Zhou, P.; Si, C.; Zhou, Y.; Wang, X.; Feng, J.; Yan, S. Metaformer is actually what you need for vision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10819–10829.

| Dataset | Classes | Train | Test | LR |
|---|---|---|---|---|
| iNaturalist 2018 [31] | 8142 | 437,513 | 24,426 | 5 |
| CUB-200-2011 [32] | 200 | 5994 | 5794 | 5 |
| NABirds [33] | 555 | 23,929 | 24,633 | 5 |
| Stanford Cars [34] | 196 | 8114 | 8041 | 5 |

| Method | Publication | Accuracy (%) |
|---|---|---|
| BS-CMO [35] | CVPR 2022 | 74.0 |
| ViT-L [36] | ECCV 2022 | 75.3 |
| GPaCo [37] | TPAMI 2023 | 75.4 |
| MixMIM-B [38] | CVPR 2023 | 77.5 |
| Presence-Only [39] | ICCV 2019 | 77.5 |
| CaiT-M-36U224 [40] | ICCV 2021 | 78.0 |
| MixMIM-L [38] | CVPR 2023 | 80.3 |
| VL-LTR [41] | ECCV 2022 | 81.0 |
| RDNet-L [42] | ECCV 2024 | 81.8 |
| OMNIVORE [43] | CVPR 2022 | 84.1 |
| SWAG [44] | CVPR 2022 | 86.0 |
| MAE [45] | CVPR 2022 | 86.8 |
| Hiera-H [46] | ICML 2023 | 87.3 |
| MetaFormer [3] | arXiv 2022 | 88.7 |
| Ours | – | 89.9 |

| Method | Publication | Accuracy (%) |
|---|---|---|
| DenseNet161 [48] | CVPR 2017 | 85.5 |
| PMG [49] | CVPR 2023 | 90.6 |
| CP-CNN [50] | TIP 2022 | 91.4 |
| LGTF [4] | ICCV 2023 | 91.5 |
| TransIFC [47] | TMM 2023 | 91.0 |
| CAMF [51] | SPL 2021 | 91.2 |
| RAMS-Trans [52] | AAAI 2021 | 91.3 |
| PMRC [53] | CVPR 2023 | 91.5 |
| ViT-Net [54] | ICML 2022 | 91.6 |
| TransFG [2] | AAAI 2022 | 91.7 |
| IELT [11] | TMM 2023 | 91.8 |
| MP-FGVR [55] | AAAI 2024 | 91.8 |
| ACC-ViT [56] | PR 2024 | 91.8 |
| DACL [24] | CVPR 2022 | 92.0 |
| MetaFormer [3] | arXiv 2022 | 92.5 |
| CGL [57] | TIP 2025 | 92.6 |
| MPSA [5] | TIP 2024 | 92.8 |
| Ours | – | 92.9 |

| Method | Publication | Accuracy (%) |
|---|---|---|
| DenseNet161 [48] | CVPR 2017 | 83.1 |
| GaRD [58] | CVPR 2021 | 88.0 |
| SEB [59] | TPAMI 2023 | 88.2 |
| PMG-V2 [60] | TPAMI 2022 | 88.4 |
| GDSPM-Net [61] | PR 2023 | 89.0 |
| LGTF [4] | ICCV 2023 | 90.4 |
| TransFG [2] | AAAI 2022 | 90.8 |
| IELT [11] | TMM 2023 | 90.8 |
| TransIFC [47] | TMM 2023 | 90.9 |
| MP-FGVR [55] | AAAI 2024 | 91.0 |
| ACC-ViT [56] | PR 2024 | 91.4 |
| CGL [57] | TIP 2025 | 91.7 |
| MPSA [5] | TIP 2024 | 92.5 |
| MetaFormer [3] | arXiv 2022 | 92.8 |
| Ours | – | 93.1 |

| Method | Publication | Accuracy (%) |
|---|---|---|
| LDH-ViT [62] | PR 2025 | 93.1 |
| DATL [63] | ISVC 2020 | 94.5 |
| ResNet50-ECC [64] | TCSVT 2024 | 94.7 |
| TransFG [2] | AAAI 2022 | 94.8 |
| ViT-Net [54] | ICML 2022 | 95.0 |
| MetaFormer [3] | arXiv 2022 | 95.0 |
| Ours | – | 95.1 |

| GFE | iNaturalist 2018 | CUB-200-2011 | NABirds | Stanford Cars |
|---|---|---|---|---|
| - | 88.70 | 92.50 | 92.80 | 94.98 |
| ✓ | 90.46 | 92.86 | 93.23 | 95.27 |
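The per-dataset gain from enabling GFE can be read off the two rows above with a few lines of arithmetic. The snippet below is only a convenience sketch; the dictionary names are illustrative and the numbers are copied from the table.

```python
# Top-1 accuracy (%) without and with GFE, copied from the ablation table.
baseline = {
    "iNaturalist 2018": 88.70,
    "CUB-200-2011": 92.50,
    "NABirds": 92.80,
    "Stanford Cars": 94.98,
}
with_gfe = {
    "iNaturalist 2018": 90.46,
    "CUB-200-2011": 92.86,
    "NABirds": 93.23,
    "Stanford Cars": 95.27,
}

# Absolute gain in percentage points, rounded to the table's precision.
gains = {name: round(with_gfe[name] - baseline[name], 2) for name in baseline}

for name, gain in gains.items():
    print(f"{name}: +{gain:.2f} points")
```

The gains are 1.76, 0.36, 0.43, and 0.29 percentage points, respectively, so the largest improvement is on iNaturalist 2018.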

| Number of gradient tokens | 0 | 4 | 8 | 12 | 16 |
|---|---|---|---|---|---|
| Accuracy (%) | 92.50 | 92.78 | 92.80 | 92.87 | 92.82 |

| Model | Params (M) | FLOPs (G) |
|---|---|---|
| TransFG [2] | 86.4 | 130.2 |
| IELT [11] | 93.5 | 73.2 |
| ACC-ViT [56] | 87.0 | 162.9 |
| Swin-Base [23] | 87.1 | 47.2 |
| ViT-Net [54] | 92.2 | 65.6 |
| MPSA [5] | 98.5 | 67.9 |
| PMRC (SwinTransformer) [53] | 89.0 | - |
| PMRC (VGG16) [53] | 139.0 | - |
| MetaFormer [65] | 81.0 | 49.7 |
| Ours | 88.1 | 52.7 |
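As a rough reading of the complexity table, the overhead of the proposed model can be expressed relative to the MetaFormer row, on the assumption that MetaFormer is the backbone being extended (its accuracies match the GFE-free ablation row). The variable names below are illustrative only.

```python
# (parameters in millions, FLOPs in G), copied from the complexity table.
metaformer = (81.0, 49.7)
ours = (88.1, 52.7)

# Absolute overhead of the proposed model over the MetaFormer row.
extra_params = round(ours[0] - metaformer[0], 1)
extra_flops = round(ours[1] - metaformer[1], 1)

# Relative overhead in percent.
rel_params = round(100 * (ours[0] - metaformer[0]) / metaformer[0], 1)
rel_flops = round(100 * (ours[1] - metaformer[1]) / metaformer[1], 1)

print(f"+{extra_params} M params ({rel_params}%), +{extra_flops} GFLOPs ({rel_flops}%)")
```

Under that assumption, the added modules cost about 7.1 M parameters (8.8%) and 3.0 GFLOPs (6.0%).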

| Sobel Kernel Size | | | |
|---|---|---|---|
| Accuracy (%) | 93.1 | 93.07 | 93.03 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, B.; Li, J.; Jin, Z.; Zhang, W.; Song, X.; Jin, B. Fine-Grained Image Recognition with Bio-Inspired Gradient-Aware Attention. Biomimetics 2025, 10, 834. https://doi.org/10.3390/biomimetics10120834