1. Introduction

An optimization algorithm seeks to identify the set of parameters that produces the optimal value (maximum or minimum) of an objective function, subject to a set of defined constraints [1]. Conventional optimization algorithms typically depend on specific mathematical models or problem structures, which enable them to efficiently find the optimal solution for low-dimensional, well-structured, or gradient-based problems such as linear, convex, and continuous optimization [2,3]. Nevertheless, practical optimization problems are highly diverse and can be classified as single-objective or multi-objective, static or dynamic, and discrete or continuous [4]. These problems are often characterized by non-convexity, nonlinear constraints, high dimensionality, and substantial computational expense, and the difficulty of finding a solution escalates as the problem dimension grows [5]. Therefore, applying traditional algorithms to such problems is often problematic because of high computational complexity, the risk of stagnation at suboptimal solutions, and an inability to achieve the precision demanded by real-world engineering problems [6].

Against this backdrop, metaheuristic algorithms have been widely adopted for various optimization problems because of their ease of implementation, their independence from gradient information, and their lower susceptibility to premature convergence [7]. Unlike traditional methods, metaheuristic algorithms search using predefined rules and stochastic operators that do not require gradient information about the search space, which enables them to solve many real-world non-convex, nonlinear, and high-dimensional optimization problems [3]. They can often find solutions at or near the global optimum even under complex constraints, which makes this class of algorithms well suited to optimization tasks with complex constraint conditions.

Building on these advantages, ongoing progress in metaheuristic optimization has produced many novel algorithms, which can be grouped into four classes according to their sources of inspiration. The first class is derived from principles of biological evolution and includes biogeography-based optimization (BBO) [8] and differential evolution (DE) [9]. The second class draws on physical laws or mathematical principles and includes the sine cosine algorithm (SCA) [10], thermal exchange optimization (TEO) [11], atom search optimization (ASO) [12], and the Kepler optimization algorithm (KOA) [13]. Human social behaviors provide the conceptual basis for the third class, which includes social group optimization (SGO) [14], the league championship algorithm (LCA) [15], and the student psychology optimization (SPO) algorithm [16]. Lastly, swarm intelligence-based methods constitute the fourth class, including beluga whale optimization (BWO) [17], the walrus optimizer (WO) [18], the hippopotamus optimization algorithm (HO) [19], and greylag goose optimization (GGO) [20].

Although the many optimization algorithms documented in prior research each have distinct merits, the no free lunch (NFL) theorem states that no single algorithm is optimal for every kind of optimization problem [21]. This theorem justifies the development of problem-specific methods, since an algorithm's effectiveness on one class of problems does not guarantee similar success on others [22]. Consequently, motivated by the NFL theorem, researchers have spent the last few decades developing numerous algorithmic variants to improve the efficacy of metaheuristic approaches.

As an illustration, Xie et al. [23] developed a sparrow search algorithm enhanced with a dynamic classification mechanism. Their improvements include (1) an elite opposition-based Chebyshev strategy for generating the initial population, which makes the starting solutions diverse and well distributed and thereby helps prevent premature convergence, and (2) a dynamic multi-subpopulation classification mechanism that stratifies the sparrow population into elite, intermediate, and inferior subgroups according to individual fitness values. The elite subgroup uses an elite guidance strategy to accelerate convergence; the intermediate subgroup applies an adaptive dynamic inertia weight strategy to balance its exploratory and exploitative behaviors; and the inferior subgroup uses a golden sine strategy to improve final convergence precision. Zhu et al. [24] modified the snake optimizer with several new strategies: (1) a multi-seed chaotic mapping mechanism for generating the initial population, which disperses the starting individuals more homogeneously and improves the quality of the initial solutions; (2) an anti-predation strategy in the exploration process, which widens the searchable area while improving the rate and precision of convergence; and (3) a bidirectional population evolution dynamics strategy that strengthens the local exploitation process, steering the algorithm away from premature convergence and improving the balance between its global and local search capabilities. Similarly, Abdel-salam et al. [25] introduced an adaptive and diversified version of the hiking optimization algorithm. Their improvements include (1) a stratified random initialization strategy that promotes greater diversity among the starting solutions, (2) an enhanced leader coordination strategy that mitigates the risk of premature convergence, (3) an adaptive perturbation strategy that improves the algorithm's ability to escape local optima, and (4) a dynamic exploration strategy that maintains a good trade-off between the exploratory and exploitative phases.

A recent addition to the swarm intelligence family, the dung beetle optimizer (DBO) [26], draws its core principles from the life habits of dung beetles. It establishes a novel search framework by simulating their ball-rolling, dancing, breeding, foraging, and stealing behaviors. Unlike other prominent swarm intelligence techniques, the DBO features a distinctive search process that balances global search and local refinement, yielding both rapid convergence and high-precision solutions [27]. As a result, researchers have applied the DBO to numerous practical problems.

For instance, the authors of [28] applied an elite-based variant of the DBO to tune the hyperparameters of an encrypted traffic classification model based on a multi-scale convolutional neural network. To increase the number and rated power of fuel cell stacks, the work in [29] used the DBO to optimize the structural parameters of a manifold, thereby improving the consistency of fuel cell performance. In [30], a composite model combining a self-attention temporal convolutional network and a bidirectional long short-term memory network was developed for ultra-short-term photovoltaic power forecasting, and its hyperparameters were tuned with the DBO to improve predictive accuracy. Furthermore, [31] developed an improved DBO variant for multi-UAV search and rescue operations in disaster environments and succeeded in finding the most efficient route in minimal time. Lastly, [32] used the DBO to optimize a long short-term memory model which, combined with variational mode decomposition, predicted methane production from deep coal seams and improved the precision of daily yield forecasts.

Although the DBO outperforms many swarm intelligence algorithms on specific optimization problems, it also has certain shortcomings. The authors of [33] identified three drawbacks. (1) The canonical DBO creates its initial population by random initialization; because this stochastic approach can cover the search space poorly, the diversity of the initial population may be compromised. (2) The foraging dung beetles lack adaptability, which impairs global exploration and increases the propensity for premature convergence. (3) The position update of the stealing dung beetles relies on the current individual best solution, which diminishes population diversity and reduces convergence precision. The authors of [34] noted that the position update rule of the ball-rolling dung beetles relies on the global worst position, which impairs global search performance and undermines the balance between exploration and exploitation. As pointed out in [35], the linear adjustment of parameter R in the reproduction strategy, while simple, increases the likelihood of premature convergence and may prevent the discovery of better solutions. In summary, there is room to further improve the DBO's performance in optimization tasks.

This paper proposes an enhanced multi-strategy variant of the standard DBO. The new algorithm aims to address key weaknesses of the DBO, namely its population initialization, its balance between exploration and exploitation, and its susceptibility to premature convergence. By incorporating an efficient initialization mechanism and a dynamic balancing strategy, the algorithm increases population diversity and strengthens the global search capability of the baseline algorithm. These enhancements help the algorithm overcome premature convergence, thereby improving its solution accuracy on complex optimization problems. The key contributions of this paper are as follows:

- An enhanced version of the DBO algorithm, named MIDBO, is proposed; it improves overall performance by integrating four new strategies into the original framework.
- The numerical optimization effectiveness of the MIDBO is validated on functions selected from the CEC2017 and CEC2022 benchmark suites in comparison with six competing algorithms. The experimental results show that the MIDBO has a clear competitive edge.
- To further test its practical utility, the MIDBO is applied to three engineering problems, where it secures the optimal solutions, demonstrating its effectiveness on complex problems.

The remainder of this paper is organized as follows. Section 2 introduces the standard DBO. Section 3 describes the new improvement mechanisms of the proposed MIDBO. Section 4 presents comparative experiments between the MIDBO and other optimization algorithms on two test suites. Section 5 applies the MIDBO to specific engineering application scenarios. Section 6 summarizes the paper and outlines potential future work.

3. Proposed Algorithm

3.1. Population Initialization Based on Chaotic Opposition-Based Learning Strategy

The efficiency of swarm intelligence optimization algorithms is influenced by population initialization, as a well-distributed initial population improves coverage of the solution space, which in turn enhances both convergence speed and precision [36]. The conventional DBO employs uniform random initialization to form its initial swarm. Although this stochasticity helps explore disparate regions of the search space and improves the prospects of discovering the global optimum, it has a notable shortcoming: there is no mechanism to ensure a homogeneous distribution, which often results in dense clusters of candidates in some regions and sparse voids in others. Such an imbalanced spread hinders the algorithm's early convergence. To improve the quality of the initial candidate solutions, this study introduces a hybrid initialization strategy that combines chaotic maps with dynamic opposition-based learning (OBL).

Compared with random initialization, chaotic maps perform better in optimization searches, especially in locating the global optimum; this advantage stems from their comprehensive traversal of the search domain and their effectiveness at evading local optima, which enable a more thorough exploration of the solution space [37]. Compared with other prevalent chaotic maps such as the Chebyshev, logistic, and tent maps, the circle map has attracted attention for its strong ergodicity, high coverage of chaotic values, and strong stability [38,39]. However, experiments revealed that the values generated by the circle map cluster heavily within the interval [0.2, 0.5], making their distribution non-uniform. To improve the uniformity of the chaotic value distribution, a modified circle map was developed. Equation (10) presents the original formulation of the circle chaotic map:

wherein,

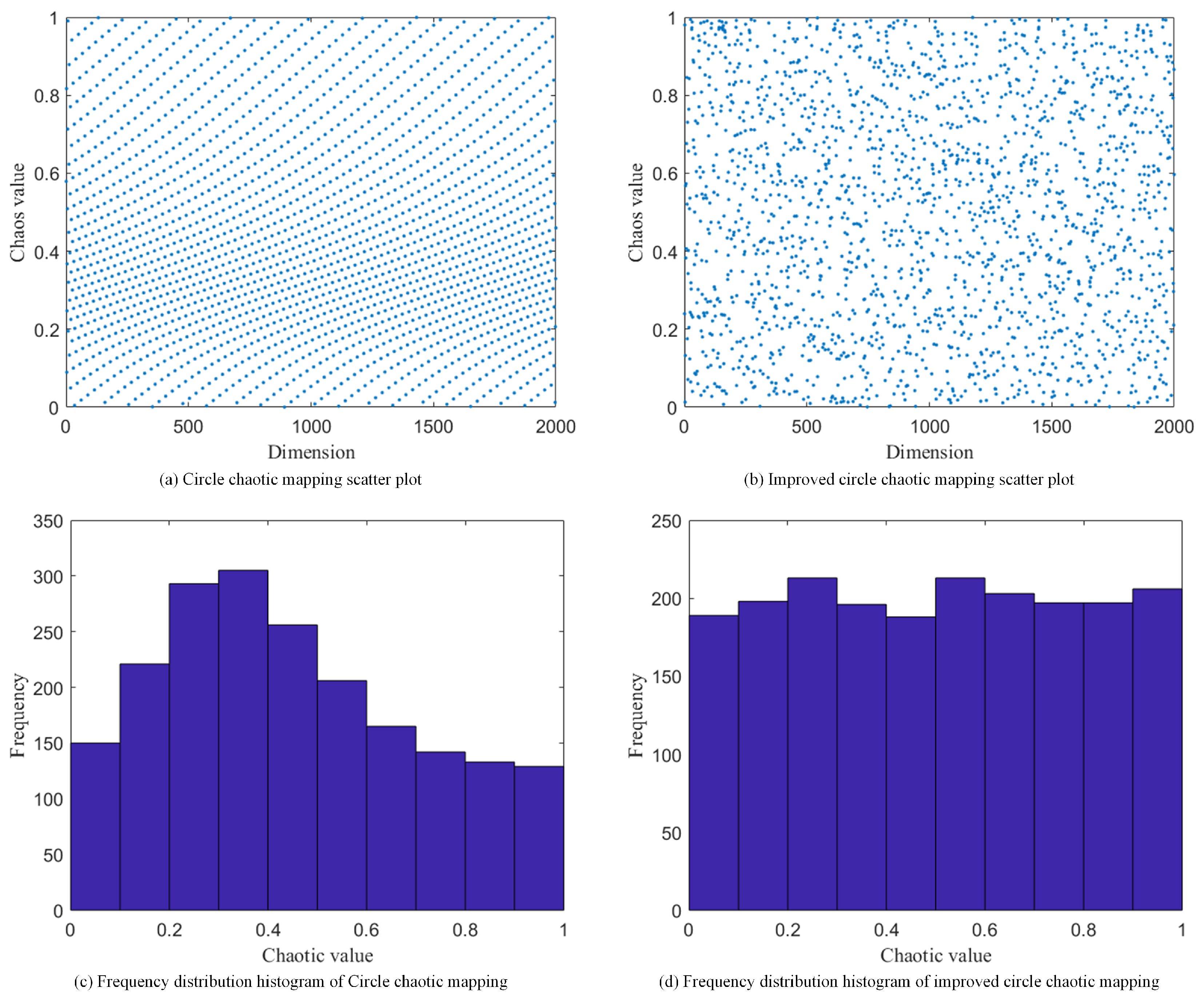

represents the nth chaotic sequence number. The scatter plot and frequency histogram of the standard circle chaotic map are shown in Figure 1a,c, respectively.

As shown in Figure 1a,c, the values generated by the standard circle chaotic map are predominantly concentrated within the interval [0.2, 0.5]. Such dense clustering of the initial candidate solutions compromises the DBO's population diversity. To address this limitation, this study proposes a modified circle chaotic map that alters the original formula in three ways: (1) the linear term is scaled by a factor of four; (2) the influence of the sine term is amplified by increasing its coefficient from 0.5 to 0.8; and (3) the constant terms are changed to 0.3 and the second value given in Equation (11). The resulting modified map has better chaotic properties than the original, and its formulation is given in Equation (11):

As shown in Figure 1b,d, the scatter plot and frequency histogram of the modified circle chaotic map show a considerably more uniform distribution than those of the original map. Consequently, initializing the DBO with this improved map yields a more diverse initial population with better spatial coverage.
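The clustering behavior of the standard circle map can be checked numerically. The sketch below assumes the form of the circle map commonly used in the metaheuristics literature, x_{k+1} = mod(x_k + 0.2 − (0.5/2π)·sin(2π·x_k), 1), which is consistent with the sine coefficient 0.5 and the constant 0.2 implied above; it is not a transcription of Equation (10). The modified map of Equation (11) is not reproduced because not all of its constants are stated in this text. The function names and the starting value are illustrative.

```python
import math


def circle_map_sequence(x0, n, a=0.5, b=0.2):
    """Iterate the standard circle map x_{k+1} = (x_k + b - a/(2*pi) * sin(2*pi*x_k)) mod 1."""
    seq = []
    x = x0
    for _ in range(n):
        x = (x + b - (a / (2 * math.pi)) * math.sin(2 * math.pi * x)) % 1.0
        seq.append(x)
    return seq


def histogram(values, bins=10):
    """Count how many values fall into each of `bins` equal-width bins on [0, 1)."""
    counts = [0] * bins
    for v in values:
        counts[min(int(v * bins), bins - 1)] += 1
    return counts


if __name__ == "__main__":
    seq = circle_map_sequence(x0=0.7, n=5000)
    for i, c in enumerate(histogram(seq)):
        print(f"[{i / 10:.1f}, {(i + 1) / 10:.1f}): {c}")
```

Printing the bin counts for a long sequence shows how the iterates are spread over [0, 1); the same `histogram` helper can be reused to compare candidate modifications of the map.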

According to [40], dynamic opposition-based learning (OBL) increases population diversity, improves initial solution quality, and accelerates algorithmic convergence. Therefore, this study incorporates dynamic OBL into the DBO's initial population generation stage, as formulated in Equation (12):

In the equation, represents the randomly generated initial population, with the variables and being random numbers sampled from a uniform distribution in the interval [0, 1].

The proposed initialization process, which integrates the improved circle chaotic map and dynamic opposition-based learning, proceeds as follows.

Step 1: Generate the starting population A by random initialization.

Step 2: Generate a chaotic population B from population A according to Equation (11), and an opposite population C from population A according to Equation (12).

Step 3: Merge populations A, B, and C, and compute the fitness of every individual in the merged set.

Step 4: Sort the merged set by fitness and select the top N individuals as the final initial population.
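Steps 1 to 4 can be sketched as a minimal illustration under several assumptions. The standard circle map stands in for the modified map of Equation (11) and is applied once per coordinate after normalizing to [0, 1). The dynamic opposite point is taken in the form X + r1·(r2·(lb + ub − X) − X) that is common in the dynamic OBL literature, with r1 and r2 drawn once per individual, rather than the exact Equation (12). The bounds are scalar, and `sphere` is a hypothetical objective.

```python
import math
import random


def sphere(x):
    """Hypothetical test objective (minimization)."""
    return sum(v * v for v in x)


def circle_step(z, a=0.5, b=0.2):
    """One step of the standard circle map on [0, 1); a stand-in for Equation (11)."""
    return (z + b - (a / (2 * math.pi)) * math.sin(2 * math.pi * z)) % 1.0


def chaotic_obl_init(fobj, n, dim, lb, ub, rng):
    span = ub - lb
    # Step 1: uniform random population A.
    pop_a = [[lb + rng.random() * span for _ in range(dim)] for _ in range(n)]
    # Step 2a: chaotic population B (normalize to [0, 1), map, rescale to [lb, ub)).
    pop_b = [[lb + circle_step((v - lb) / span) * span for v in x] for x in pop_a]
    # Step 2b: dynamic opposite population C (assumed form), clipped to the bounds.
    pop_c = []
    for x in pop_a:
        r1, r2 = rng.random(), rng.random()
        pop_c.append([min(max(v + r1 * (r2 * (lb + ub - v) - v), lb), ub) for v in x])
    # Step 3: merge A, B, and C; fitness is evaluated by the sort key below.
    merged = pop_a + pop_b + pop_c
    # Step 4: sort by fitness (ascending, minimization) and keep the best n.
    merged.sort(key=fobj)
    return merged[:n]


if __name__ == "__main__":
    pop = chaotic_obl_init(sphere, n=30, dim=10, lb=-100.0, ub=100.0, rng=random.Random(0))
    print("best initial fitness:", sphere(pop[0]))
```

Because the final population is drawn from 3N candidates, this scheme costs 3N objective evaluations at start-up instead of N.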

3.2. Oscillating Balance Factor

Swarm intelligence is fundamentally characterized by a balance between two phases: exploration, in which the algorithm scans the whole search space to discover promising regions, and exploitation, in which the search concentrates within those regions to pinpoint the optimal solution.

In the DBO framework, global exploration is primarily the responsibility of the ball-rolling dung beetles. To strengthen this capability and achieve a better trade-off between global search and local refinement, a balance factor w is developed and applied to their position update rule. The factor decreases nonlinearly over the iterations at a rate modulated by a sinusoidal function, as formulated in Equation (13):

wherein, the parameter

is defined on the interval

, while the parameter

serves to control the amplitude of oscillation.

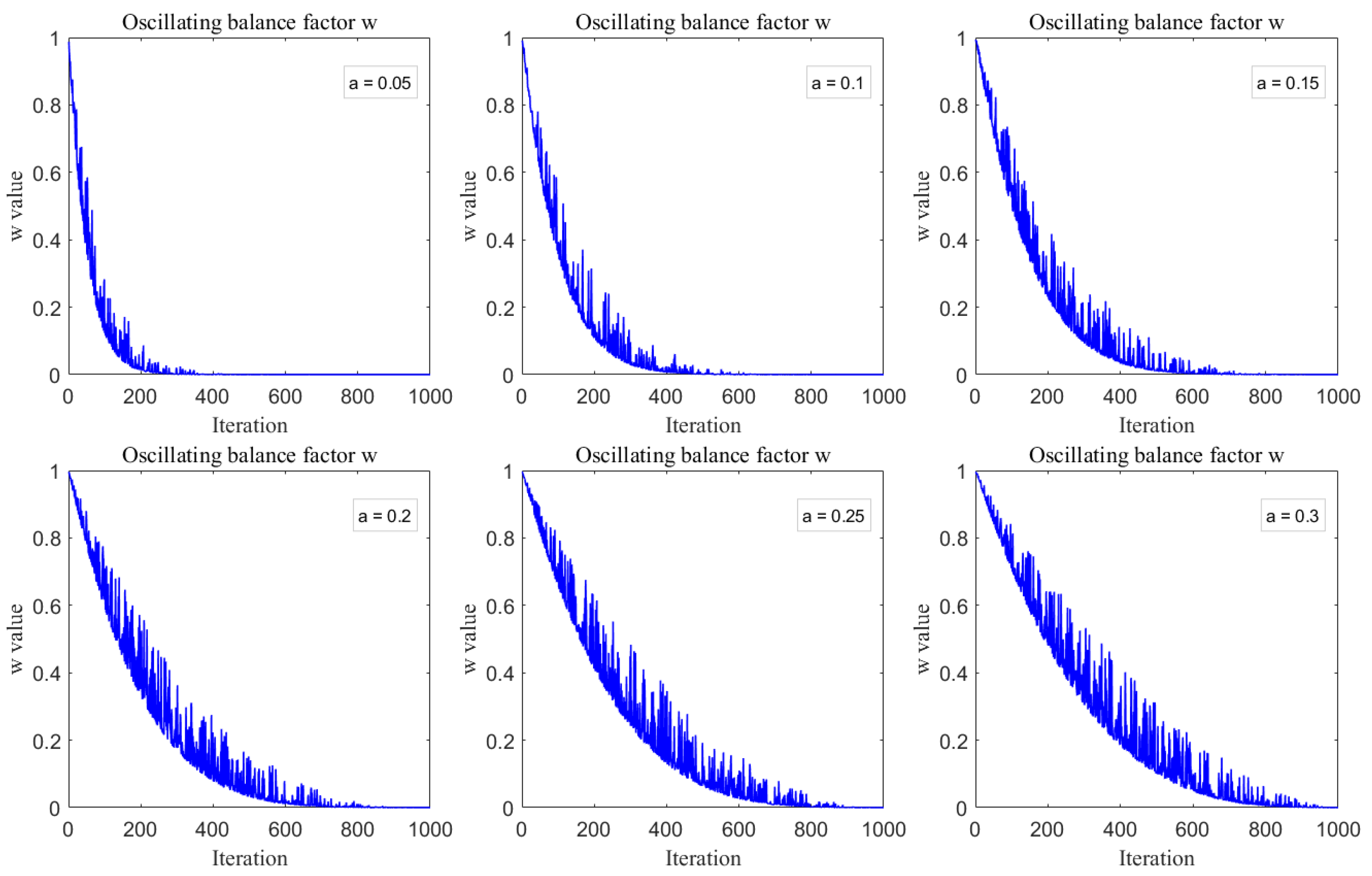

Figure 2 illustrates the curves of the oscillating balance factor w over 1000 iterations for different parameter values.

As illustrated by Equation (13) and Figure 2, the oscillating balance factor w gradually declines over the iterations, decreasing faster at the beginning. Consequently, the step size shrinks from large to small over the course of the search, which allocates effort more reasonably between global exploration and local refinement. Additionally, the sine function, which is parameterized by

, injects a degree of randomness into the search during the exploration phase. To keep the oscillating balance factor well balanced in both the initial and final stages, avoiding excessively large step sizes during exploration while using the smallest possible step sizes during exploitation, this study uses a value of 0.2 for the parameter

.
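The qualitative behavior described for w (a nonlinear decline that is steepest at the start, overlaid with a bounded sinusoidal oscillation) can be illustrated with a hypothetical schedule. The function below is not Equation (13), whose exact form is not recoverable here; the quadratic decay, the number of oscillation cycles, and the name `oscillating_factor` are assumptions chosen only to reproduce the shape, and the default amplitude of 0.2 merely echoes the value quoted above.

```python
import math


def oscillating_factor(t, t_max, amplitude=0.2, cycles=5.0):
    """Hypothetical balance factor (illustrative only, NOT Equation (13)):
    a quadratic decay that is steepest early, multiplied by a sinusoidal ripple."""
    s = t / t_max
    decay = (1.0 - s) ** 2  # nonlinear decrease from 1 to 0, fastest at the start
    ripple = 1.0 + amplitude * math.sin(2 * math.pi * cycles * s)  # stays in [1 - A, 1 + A]
    return decay * ripple


if __name__ == "__main__":
    for t in (0, 100, 250, 500, 750, 1000):
        print(t, round(oscillating_factor(t, 1000), 4))
```

With an amplitude below 1, the factor never becomes negative and reaches exactly zero at the last iteration, so large steps dominate early and vanish late.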

The position update of the ball-rolling dung beetles is then modified by incorporating the oscillating balance factor w, as formulated in Equations (14) and (15):

3.3. Improved Foraging Strategy

In the DBO's foraging phase, candidate solutions are generated under the control of two random numbers. A key limitation of this approach is that it does not use information from the current best solution, which weakens the algorithm's local refinement and slows its convergence. To address this deficiency, this paper enhances the foraging phase by integrating an optimal value guidance strategy and an adaptive t-distribution perturbation strategy into the position update mechanism.

Inspired by the social learning mechanism of particle swarm optimization (PSO), this study employs an optimal value guidance strategy to accelerate convergence. The core idea of this strategy is to use the best-so-far solution to steer subsequent candidates toward the global optimum [41]. Incorporating this strategy into the foraging stage, the position update is reformulated as Equation (16):

In the equation, is the optimal value guidance factor.

The t-distribution (Student's t-distribution) [42] is governed by its degrees-of-freedom parameter n. Its two extreme cases are the Cauchy distribution (n = 1), which provides strong global search capability, and the Gaussian distribution (n → ∞), which excels at local exploitation and improves convergence speed [43]. To exploit the complementary strengths of these two behaviors, this study introduces an adaptive t-distribution perturbation strategy, which lets the algorithm transition from wide-ranging exploration in the early phases to focused exploitation in the later phases, thereby improving the overall convergence speed. Equation (17) gives the corresponding position update formula:

wherein, the positions of the optimal solution in the

jth dimension before and after the perturbation are denoted by

and

, respectively. The term

is a random value sampled from a t-distribution where the degrees of freedom are set by the current iteration number iter.
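The adaptive t-distribution perturbation can be sketched with Python's standard library. A Student-t variate with df degrees of freedom is generated as Z/√(V/df), where Z is standard normal and V is chi-square with df degrees of freedom (sampled as a Gamma variate with shape df/2 and scale 2). The update x'_j = x_j + x_j·t(iter) is an assumption based on the form commonly used for this strategy in the literature, not a transcription of Equation (17); under this form a coordinate equal to zero is never perturbed. The function names are illustrative.

```python
import math
import random


def student_t(df, rng):
    """Draw from Student's t with `df` degrees of freedom as Z / sqrt(V / df)."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(df / 2.0, 2.0)  # chi-square(df) equals Gamma(shape=df/2, scale=2)
    return z / math.sqrt(v / df)


def t_perturb_best(best, it, rng):
    """Assumed update x'_j = x_j + x_j * t(it): heavy-tailed (Cauchy, df = 1) at the
    first iteration and increasingly Gaussian as the iteration count grows."""
    return [x + x * student_t(it, rng) for x in best]


if __name__ == "__main__":
    rng = random.Random(0)
    best = [1.5, -0.3, 2.0]
    for it in (1, 10, 1000):
        print(it, [round(v, 3) for v in t_perturb_best(best, it, rng)])
```

Tying df to the iteration counter makes the perturbation scale shrink automatically, with no extra tuning parameter.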

3.4. Multi-Population Differential Co-Evolutionary Mechanism

Although the original DBO's position update strategy is efficient, it is prone to premature convergence to local optima. Once trapped, the algorithm's search is confined to a suboptimal region of the solution space, which impedes discovery of the global optimum. The authors of [44] pointed out that this problem can be mitigated by increasing population diversity, commonly through mutation operations, among which those of differential evolution have attracted attention for their effective search mechanism. Therefore, to strengthen the algorithm's ability to escape local optima and increase population diversity, we developed a multi-population differential co-evolutionary mechanism that applies mutation operations to the dung beetle population.

However, a uniform mutation strategy is suboptimal because it does not address the differing evolutionary needs of individuals in the population. For example, individuals with high fitness are usually clustered near the current best individual and therefore benefit more from local exploitation. In contrast, individuals with poor fitness are typically far from the optimal solution and require stronger global exploration. Motivated by this principle, we partition the population by fitness into three groups (the top 20% as the elite group, the middle 50% as the intermediate group, and the remaining 30% as the inferior group) and apply a distinct differential evolution operator to each group. For example, with a population of 30, 6 individuals are assigned to the elite group, 15 to the intermediate group, and the remaining 9 to the inferior group. This method enhances the DBO's overall search effectiveness and helps it escape local optima. The specific strategies are detailed below.
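The 20/50/30 split can be sketched as below, assuming minimization (a lower objective value means better fitness) and Python's built-in `round` for the group sizes; with 30 individuals this reproduces the 6/15/9 allocation given above. The function name is hypothetical.

```python
def partition_by_fitness(fitness, elite_frac=0.2, mid_frac=0.5):
    """Split population indices into elite / intermediate / inferior groups.

    Assumes minimization: indices are sorted by ascending objective value, the
    first elite_frac go to the elite group, the next mid_frac to the intermediate
    group, and everything left over to the inferior group.
    """
    order = sorted(range(len(fitness)), key=lambda i: fitness[i])
    n = len(order)
    n_elite = round(elite_frac * n)
    n_mid = round(mid_frac * n)
    elite = order[:n_elite]
    intermediate = order[n_elite:n_elite + n_mid]
    inferior = order[n_elite + n_mid:]
    return elite, intermediate, inferior


if __name__ == "__main__":
    e, m, w = partition_by_fitness([float(30 - i) for i in range(30)])
    print(len(e), len(m), len(w))
```

Assigning the remainder to the inferior group guarantees that every individual lands in exactly one group, whatever the population size.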

3.4.1. Elite Group

Individuals in the elite group, which have the best fitness values, are presumed to be close to the global optimum. Their search should therefore focus on local exploitation, performing fine-grained searches within their immediate neighborhood to pinpoint the optimal solution. To this end, the DE/current-to-best/1 mutation operator is employed for this group; it was selected for its strong local exploitation capability, which matches the requirements of the elite group, despite its limited global exploration potential. Its formulation is given in Equation (18):

wherein, the resulting mutant vector is denoted by

, while

F serves as the scaling factor. The best individual in the current generation

t is represented by

. The terms

and

represent two separate individuals randomly selected from the population, excluding the target vector

.
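For reference, the textbook DE/current-to-best/1 mutation, v_i = x_i + F·(x_best − x_i) + F·(x_r1 − x_r2), can be sketched as follows. This standard form is shown as an illustration and is assumed, not confirmed, to match Equation (18); the function name and the list-based vectors are illustrative.

```python
import random


def current_to_best_1(pop, i, best, F, rng):
    """DE/current-to-best/1 (standard form):
    v = x_i + F * (x_best - x_i) + F * (x_r1 - x_r2), with r1, r2 distinct and != i."""
    candidates = [k for k in range(len(pop)) if k != i]
    r1, r2 = rng.sample(candidates, 2)  # two distinct random partners
    xi, x1, x2 = pop[i], pop[r1], pop[r2]
    return [a + F * (b - a) + F * (c - d) for a, b, c, d in zip(xi, best, x1, x2)]


if __name__ == "__main__":
    rng = random.Random(0)
    pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(6)]
    print(current_to_best_1(pop, 0, best=pop[1], F=0.5, rng=rng))
```

The pull toward x_best is what gives this operator its exploitative character; the single random difference term adds only a small local perturbation once the elite individuals are close together.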

Subsequently, a crossover operation derived from differential evolution is applied, as formulated in Equation (19):

wherein,

is an integer selected randomly from the interval

and

is the crossover rate parameter. Following crossover, a selection operation based on a greedy criterion is performed, as shown in Equation (20):

3.4.2. Intermediate Group

The intermediate group, whose fitness values lie between those of the elite and inferior groups, has a dual role: it can learn locally from the elite group and also explore globally, which helps the algorithm achieve a better trade-off between global search and exploitation. To fulfill this balancing role, we use the DE/mean-current/2 mutation operator, selected for its inherent ability to balance global search and local refinement. Equation (21) defines this operator:

wherein,

,

. After mutation, crossover is performed using Equation (19) and selection using Equation (20).
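The crossover and greedy selection steps shared by all three groups can be sketched with the standard DE binomial crossover, which is assumed here to correspond to Equations (19) and (20). Indices are 0-based, so j_rand is drawn from 0 to D − 1 instead of 1 to D, and the strict comparison `random() < cr` is used so that cr = 0 and cr = 1 behave exactly as limiting cases; these details and the function names are assumptions.

```python
import random


def binomial_crossover(target, mutant, cr, rng):
    """Take each gene from the mutant with probability cr; gene j_rand always comes
    from the mutant, so the trial vector differs from the target in at least one dimension."""
    j_rand = rng.randrange(len(target))
    return [m if (rng.random() < cr or j == j_rand) else t
            for j, (t, m) in enumerate(zip(target, mutant))]


def greedy_select(target, trial, fobj):
    """Keep the trial vector only if it is at least as good as the target (minimization)."""
    return trial if fobj(trial) <= fobj(target) else target


if __name__ == "__main__":
    rng = random.Random(0)
    target, mutant = [0.0] * 6, [1.0] * 6
    trial = binomial_crossover(target, mutant, cr=0.5, rng=rng)
    print(trial, greedy_select(target, trial, lambda x: sum(v * v for v in x)))
```

Because selection is greedy, a group's objective values can never get worse from one generation to the next, regardless of which mutation operator produced the trial vectors.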

3.4.3. Inferior Group

Individuals in the inferior group, which have poor fitness, are tasked with wide-ranging global exploration, which maintains population diversity and helps the algorithm escape local optima. The DE/rand/1 mutation operator is well suited to this purpose. By perturbing a randomly chosen base vector with a scaled difference of two other randomly chosen individuals, this operator searches the solution space broadly without relying on guidance from the best individual, giving it excellent global exploration properties. Its definition is given in Equation (22):

The terms

,

, and

represent three different individuals chosen randomly from the current population. After mutation, crossover and selection are performed as defined in Equations (19) and (20).
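The standard DE/rand/1 mutation used for the inferior group, v_i = x_r1 + F·(x_r2 − x_r3), can be sketched as follows. The indices r1, r2, and r3 are drawn to be mutually distinct and different from the target index i, which is the usual DE convention and an assumption with respect to Equation (22); the function name is illustrative.

```python
import random


def rand_1(pop, i, F, rng):
    """DE/rand/1: v = x_r1 + F * (x_r2 - x_r3), with r1, r2, r3 mutually distinct and != i.
    Requires at least four individuals in the population."""
    candidates = [k for k in range(len(pop)) if k != i]
    r1, r2, r3 = rng.sample(candidates, 3)
    return [a + F * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]


if __name__ == "__main__":
    rng = random.Random(0)
    pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(6)]
    print(rand_1(pop, 0, F=0.5, rng=rng))
```

Unlike DE/current-to-best/1, neither the base vector nor the difference term involves the best individual, which is why this operator keeps the inferior group spread across the search space.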

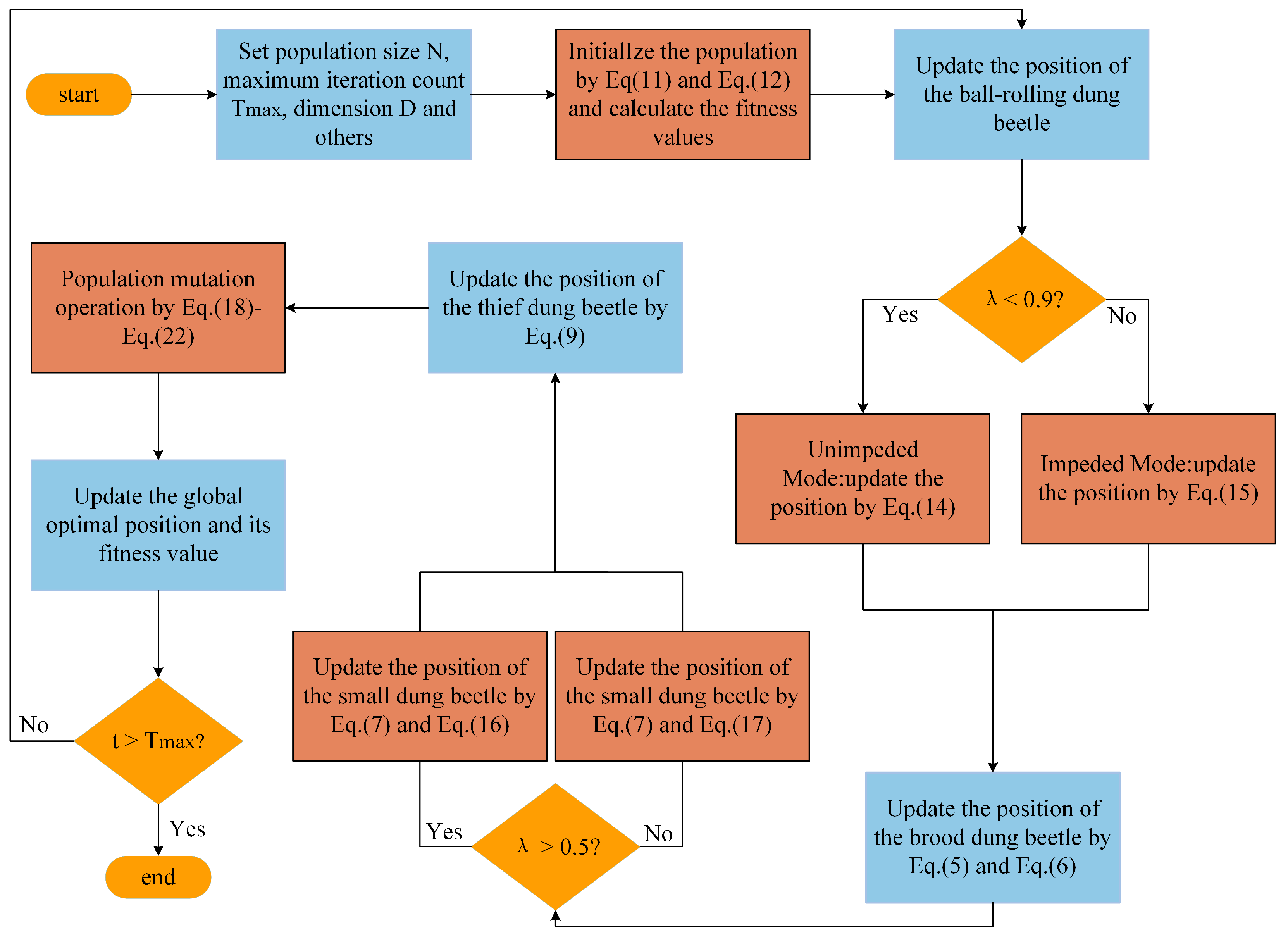

The complete operational flow of the MIDBO is shown in the flowchart in Figure 3 and in the corresponding pseudocode in Algorithm 2.

3.5. Time Complexity Analysis of MIDBO

Given a population with a size N, a solution space with a dimension D, and a maximum iteration count of M, the time complexity of the baseline DBO is . We will now analyze the computational overhead introduced by the strategies integrated into our proposed algorithm:

Population initialization with the chaotic opposition-based learning strategy: .

Oscillating balance factor: .

Improved foraging strategy: .

Multi-population differential co-evolutionary mechanism: .

Thus, the time complexity of the MIDBO can be expressed as

, indicating higher computational demands than the DBO. However, the experiments and real-world engineering cases presented in Section 4 and Section 5 confirm substantial performance gains for the MIDBO. Accordingly, this increase in complexity is considered an acceptable trade-off for the algorithm's improved efficacy.

| Algorithm 2 The framework of the MIDBO algorithm |
|---|
| Require: The maximum iteration , the population size N; obtain the initial population X of dung beetles by Equations (11) and (12) |
| Ensure: Optimal position and its fitness value |
| 1: while do |
| 2: for to Number of rolling dung beetles do |
| 3: |
| 4: if then |
| 5: Update rolling dung beetle's position by using Equation (14) |
| 6: else |
| 7: Update rolling dung beetle's position by using Equation (15) |
| 8: end if |
| 9: end for |
| 10: for to Number of breeding dung beetles do |
| 11: Update breeding dung beetle's position by using Equations (5) and (6) |
| 12: end for |
| 13: for to Number of small dung beetles do |
| 14: if then |
| 15: Update small dung beetle's position by using Equations (7) and (16) |
| 16: else |
| 17: Update small dung beetle's position by using Equations (7) and (17) |
| 18: end if |
| 19: end for |
| 20: for to Number of stealing dung beetles do |
| 21: Update stealing dung beetle's position by using Equation (9) |
| 22: end for |
| 23: Perform the population mutation operation by using Equations (18)–(22) |
| 24: |
| 25: end while |
| 26: return and its fitness value |

4. Experiments

All experiments in this section were conducted on a personal computer running MATLAB R2024a under Windows 11 (64-bit), with an Intel(R) Core(TM) i9-13900H CPU @ 2.60 GHz and 24 GB of RAM. To evaluate the performance of the proposed MIDBO on the CEC2017 [45] and CEC2022 [46] test suites, it was compared with the hiking optimization algorithm (HOA) [47], the whale optimization algorithm (WOA) [48], differential evolution (DE) [9], particle swarm optimization (PSO) [49], a dung beetle optimization algorithm based on quantum computing and a multi-strategy hybrid (QHDBO) [50], and the original DBO. Both test suites comprise unimodal, multimodal, hybrid, and composition functions, enabling a comprehensive evaluation of the strengths and weaknesses of each algorithm. Table 1 lists the parameter settings of all algorithms used in the comparison.

4.1. Performance Evaluation of Improved Strategies

Ablation experiments were performed to evaluate how the four integrated strategies, individually and in combination, affect the DBO's overall performance. The variants are abbreviated as follows:

- IDBO: DBO + chaotic opposition-based learning;
- ODBO: DBO + oscillating balance factor;
- FDBO: DBO + improved foraging strategy;
- DDBO: DBO + multi-population differential co-evolutionary mechanism;
- IOFDBO: DBO + chaotic opposition-based learning + oscillating balance factor + improved foraging strategy;
- IODDBO: DBO + chaotic opposition-based learning + oscillating balance factor + multi-population differential co-evolutionary mechanism;
- IFDDBO: DBO + chaotic opposition-based learning + improved foraging strategy + multi-population differential co-evolutionary mechanism;
- OFDDBO: DBO + oscillating balance factor + improved foraging strategy + multi-population differential co-evolutionary mechanism.

The performance of these variants was analyzed on 12 benchmark functions selected from the CEC2017 suite, covering unimodal, simple multimodal, hybrid, and composition functions. The canonical DBO served as the baseline, and the mean and ranking were used as the primary statistics.

Table 2 summarizes the findings from the ablation experiment.

The experimental results show that the four new mechanisms, especially the improved foraging strategy and the multi-population differential co-evolutionary mechanism, collectively improve the DBO's performance. Additionally, the rankings in the last row of the results show that IFDDBO, IODDBO, and OFDDBO, which ranked second, third, and fourth, respectively, achieved better overall optimization performance than the other DBO variants. This result underscores the significant contributions of three main strategies: chaotic opposition-based learning, the improved foraging strategy, and the multi-population differential co-evolutionary mechanism. The MIDBO outperformed the DBO, exhibiting better avoidance of local optima and an improved balance between exploration and exploitation. This improvement is attributed to the synergy among the four proposed strategies, which together guide the search toward the global optimum.

4.2. CEC2017 Benchmark Function Results and Analysis

To thoroughly assess the MIDBO's performance, we benchmarked it against the six competing algorithms on the CEC2017 benchmark suite (29 test functions) to validate its effectiveness and generalizability across diverse types of optimization problems. For consistency, all algorithms used identical settings for the population size (N = 30), maximum number of iterations (T = 1000), and dimensionality (D = 50). To mitigate stochastic variability, each algorithm was run independently 30 times on each test function, and performance was quantified by the mean, standard deviation, and ranking.

With an average rank of 1.1379 across the 29 CEC2017 test functions, the MIDBO achieved the best overall rank, as shown in Table 3. Notably, the MIDBO outperformed the original DBO on most test functions (significantly so on 25 of the 29, according to the Wilcoxon rank-sum test in Table 4), which supports the effectiveness of the proposed modifications. On the unimodal functions F1 and F3, the MIDBO ranked first and recorded the best mean value. It also obtained the best mean and rank on the multimodal functions F4, F5, F8, and F10. However, it did not achieve the best mean on F6 and F7, where it ranked third. On F9, its mean and rank were inferior to those of PSO, placing it second. Among the hybrid and composition functions, the MIDBO’s mean on F27 was inferior to that of the DE algorithm; nevertheless, it still ranked first on that function. On all remaining functions, it obtained the best mean and the first rank.
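The average rank is the mean of an algorithm’s per-function ranks. The sketch below shows one way to compute it from a table of mean values, with tied means sharing the average of their positions. Ranking by the mean alone is an assumption: the F27 case above (first rank without the best mean) suggests the paper’s ranking may use additional information, such as a Friedman-style ranking over individual runs. The table values in the usage example are hypothetical.

```python
def rank_row(values):
    """Rank algorithms on one function (1 = lowest mean); tied means share the average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend over a run of tied values
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of 1-based positions i+1..j+1
        i = j + 1
    return ranks

def average_ranks(mean_table):
    """mean_table[f][a]: mean result of algorithm a on function f (minimization)."""
    per_function = [rank_row(row) for row in mean_table]
    n_functions = len(per_function)
    return [sum(r[a] for r in per_function) / n_functions for a in range(len(mean_table[0]))]

# Hypothetical means: 3 functions (rows) x 3 algorithms (columns)
means = [[1.0, 2.0, 3.0], [5.0, 4.0, 6.0], [0.5, 0.5, 0.9]]
print(average_ranks(means))  # [1.5, 1.5, 3.0]
```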

Table 3 presents the results on the 50-dimensional CEC2017 problems, revealing a distinct performance hierarchy among the compared algorithms. The MIDBO ranked first, ahead of the QHDBO, DBO, and HOA, which ranked second, third, and last, respectively. These results indicate that the strategies introduced in the MIDBO substantially improve the convergence precision of the baseline DBO. The seven algorithms were ranked by performance in the following order: MIDBO > QHDBO > DBO > DE > PSO > WOA > HOA.

The results of the Wilcoxon rank-sum test are displayed in Table 4. A p value above 0.05, highlighted in bold, indicates no statistically significant difference and is denoted by “=”, whereas a p value below 0.05 indicates a significant performance difference. In such cases, superiority was assigned to the algorithm with the lower mean value, with “+” indicating that the MIDBO is superior and “-” indicating that the comparison algorithm is superior. Under this convention, the MIDBO’s win/tie/loss (+/=/-) tallies against the six competitors were 29/0/0, 21/8/0, 25/4/0, 29/0/0, 25/3/1, and 22/6/1.

Against the DBO, p > 0.05 on F7, F10, F18, and F22, and the MIDBO was superior on the remaining 25 functions. Against DE, p > 0.05 on F6, F14, F15, F23, F24, F26, F27, and F29, and the MIDBO was superior on the remaining 21 functions. Against PSO, p > 0.05 on F9, F26, and F27; PSO was superior on F6, while the MIDBO was superior on the remaining 25 functions. Against the QHDBO, p > 0.05 on F6, F10, F14, F18, F25, and F26; because the QHDBO achieved the best mean on F7, it was considered superior on that function, while the MIDBO was superior on the remaining 22 functions. Against the remaining two algorithms, the WOA and HOA, the MIDBO was significantly superior on all 29 functions. Consequently, the MIDBO exhibited superior capability in solving the 50-dimensional CEC2017 benchmark functions.
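The decision rule above (no significant difference gives “=”; otherwise the algorithm with the lower mean wins) can be sketched as follows. This is a minimal, dependency-free sketch assuming a two-sided rank-sum test with the large-sample normal approximation and no tie correction, the same statistic computed by SciPy’s `scipy.stats.ranksums`; the paper does not state which implementation it used. `rank_sum_test`, `compare`, and `tally` are hypothetical helpers, and treating a p value of exactly 0.05 as “=” is an assumption.

```python
import math

def _average_ranks(values):
    """1-based ranks of values, with ties sharing the average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum p value (normal approximation, no tie correction)."""
    n1, n2 = len(x), len(y)
    ranks = _average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of the first sample
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))

def compare(midbo_runs, other_runs, alpha=0.05):
    """'+' if the MIDBO is significantly better, '-' if worse, '=' otherwise (minimization)."""
    if rank_sum_test(midbo_runs, other_runs) >= alpha:
        return "="
    mean_m = sum(midbo_runs) / len(midbo_runs)
    mean_o = sum(other_runs) / len(other_runs)
    return "+" if mean_m < mean_o else "-"

def tally(symbols):
    """Format a win/tie/loss count such as '25/4/0'."""
    return f"{symbols.count('+')}/{symbols.count('=')}/{symbols.count('-')}"

print(compare(list(range(1, 11)), list(range(11, 21))))  # +
print(tally(["+", "+", "=", "-"]))  # 2/1/1
```

Applying `compare` to the 30 final results of each function and passing the resulting symbols to `tally` yields one win/tie/loss entry per competitor, in the format used in Table 4.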

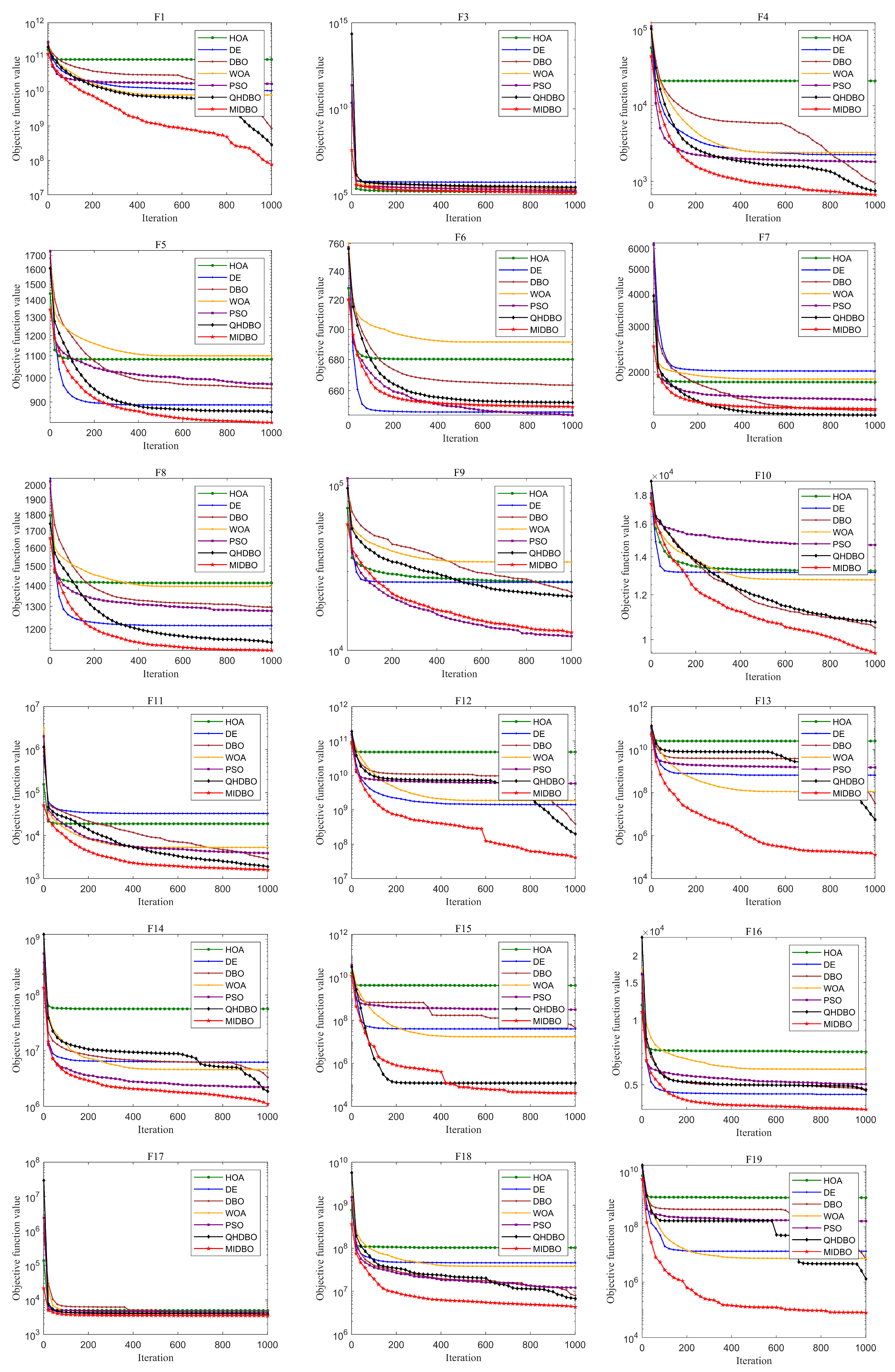

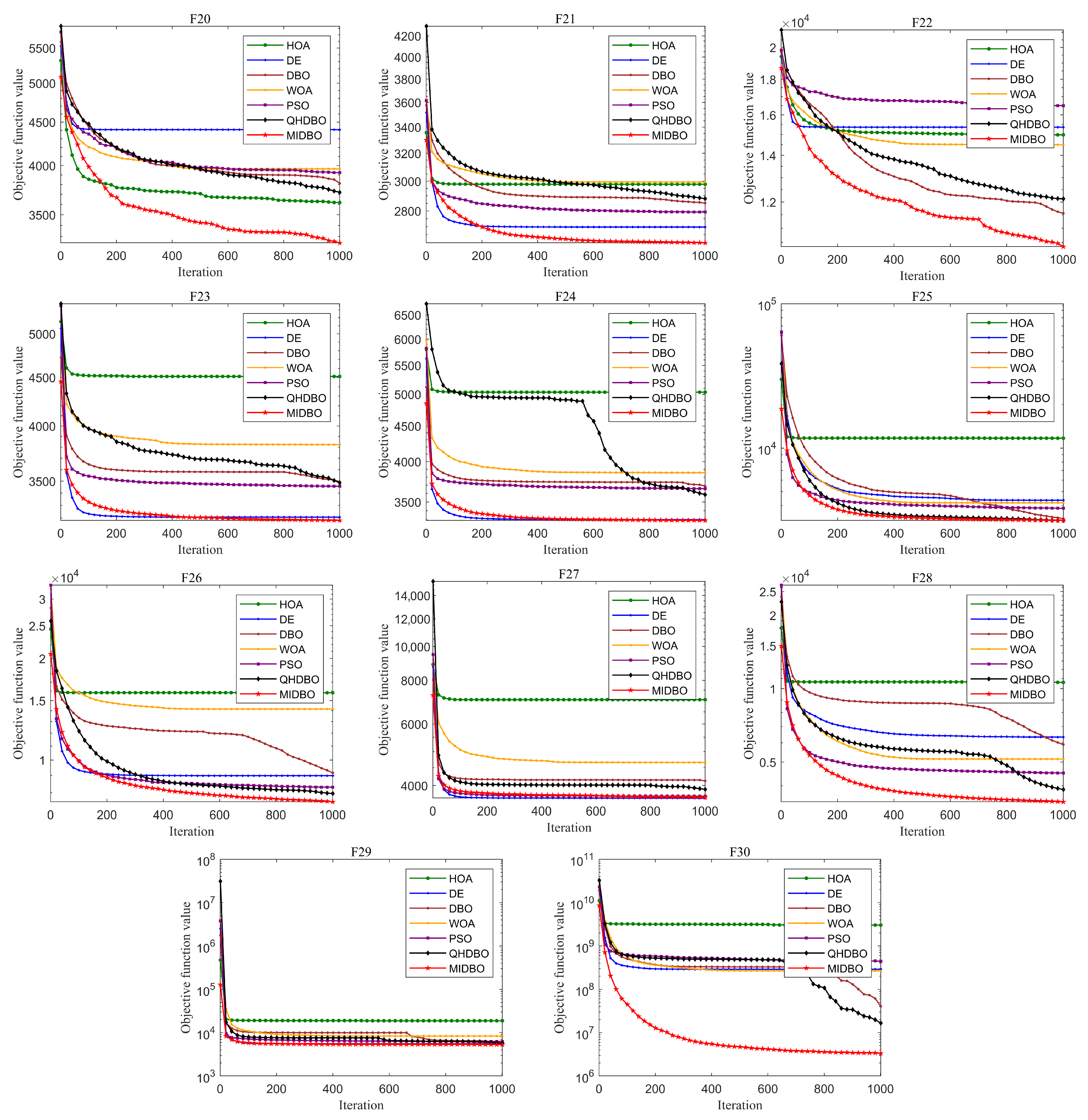

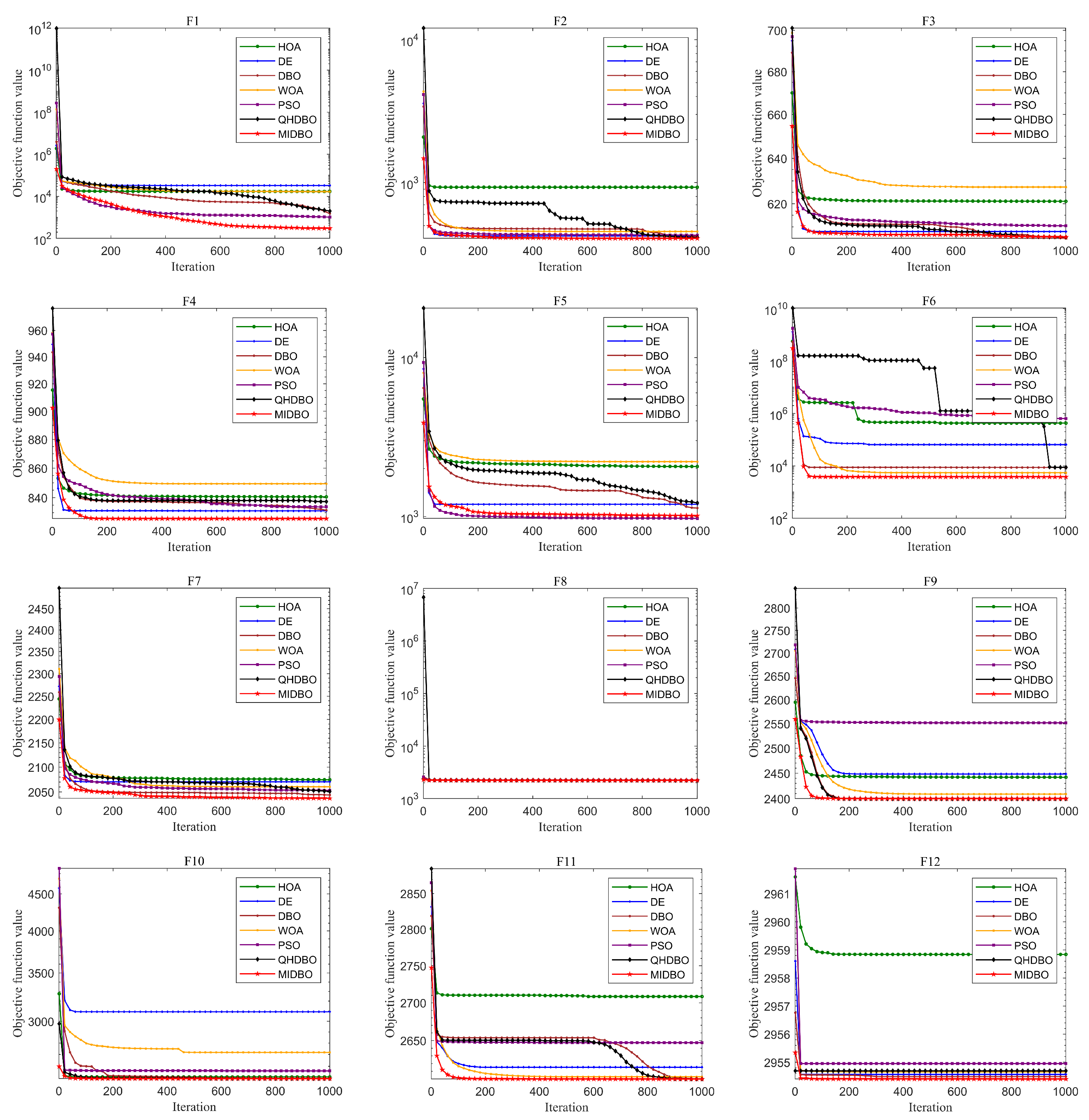

Figure 4 shows the convergence behavior of all algorithms on the CEC2017 test suite. On the unimodal functions, the MIDBO converged substantially better than the competing algorithms. On the multimodal and hybrid functions, it converged more slowly than its competitors in the early stage. However, the improved foraging strategy and the multi-population differential co-evolutionary mechanism give the algorithm a more effective means of escaping local optima. Therefore, as the iterations progressed, the MIDBO’s convergence curve kept descending after the other algorithms had stagnated at suboptimal solutions, and the MIDBO clearly outperformed its competitors in final convergence accuracy. The MIDBO performed particularly well on functions F4, F5, F10–F16, F18, and F19. Compared with the other algorithms, its optimization capability was also more prominent on the composition functions, specifically F20–F23, F26, F28, and F30.
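Convergence curves of this kind are typically built from the best objective value found so far at each iteration, averaged over independent runs. Whether Figure 4 shows such averages or a single representative run is not stated, so the sketch below is an assumption; `best_so_far` and `mean_curve` are hypothetical helpers. A flat stretch of the resulting curve corresponds to the stagnation described above.

```python
def best_so_far(fitness_per_iteration):
    """Turn the best fitness of each iteration into a monotone (non-increasing) curve."""
    curve, best = [], float("inf")
    for f in fitness_per_iteration:
        best = min(best, f)
        curve.append(best)
    return curve

def mean_curve(runs):
    """Average the best-so-far curves of several independent runs, iteration by iteration."""
    curves = [best_so_far(r) for r in runs]
    return [sum(values) / len(values) for values in zip(*curves)]

print(best_so_far([5, 3, 4, 1, 2]))    # [5, 3, 3, 1, 1]
print(mean_curve([[4, 2], [2, 4]]))    # [3.0, 2.0]
```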

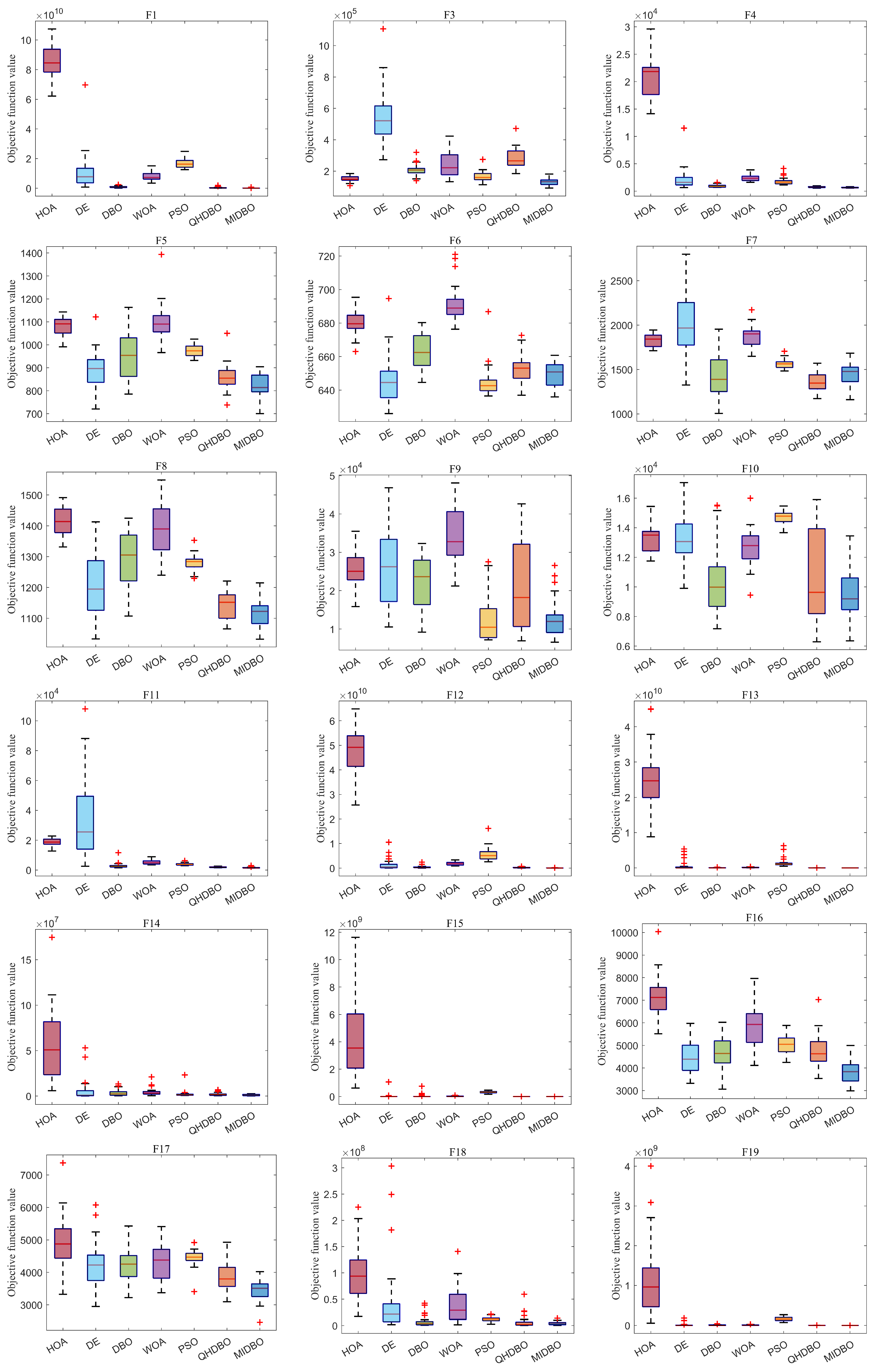

Figure 5 presents the box plot analysis of algorithm performance on the CEC2017 benchmark suite. On most functions, the MIDBO’s boxes were narrower and positioned lower than those of the competing methods, indicating both better solution quality and greater stability. This was especially evident on functions F1, F3–F4, F11–F16, F18–F19, F23–F25, and F28–F30. Overall, the box plots indicate a substantial improvement of the MIDBO over the DBO.
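In a box plot, the box spans the first to third quartiles, so a smaller box means a smaller interquartile range (IQR) across runs and a lower box means lower quartiles and median. The sketch below computes these quantities with Python’s standard `statistics.quantiles`. The `method="inclusive"` setting, which matches NumPy’s default linear-interpolation percentiles, is an assumption about how the plotted quartiles were computed, and `box_stats` is a hypothetical helper.

```python
import statistics

def box_stats(results):
    """Quartiles, median, and IQR of the final results from repeated runs."""
    q1, median, q3 = statistics.quantiles(results, n=4, method="inclusive")
    return {"q1": q1, "median": median, "q3": q3, "iqr": q3 - q1}

print(box_stats([1, 2, 3, 4, 5]))  # {'q1': 2.0, 'median': 3.0, 'q3': 4.0, 'iqr': 2.0}
```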

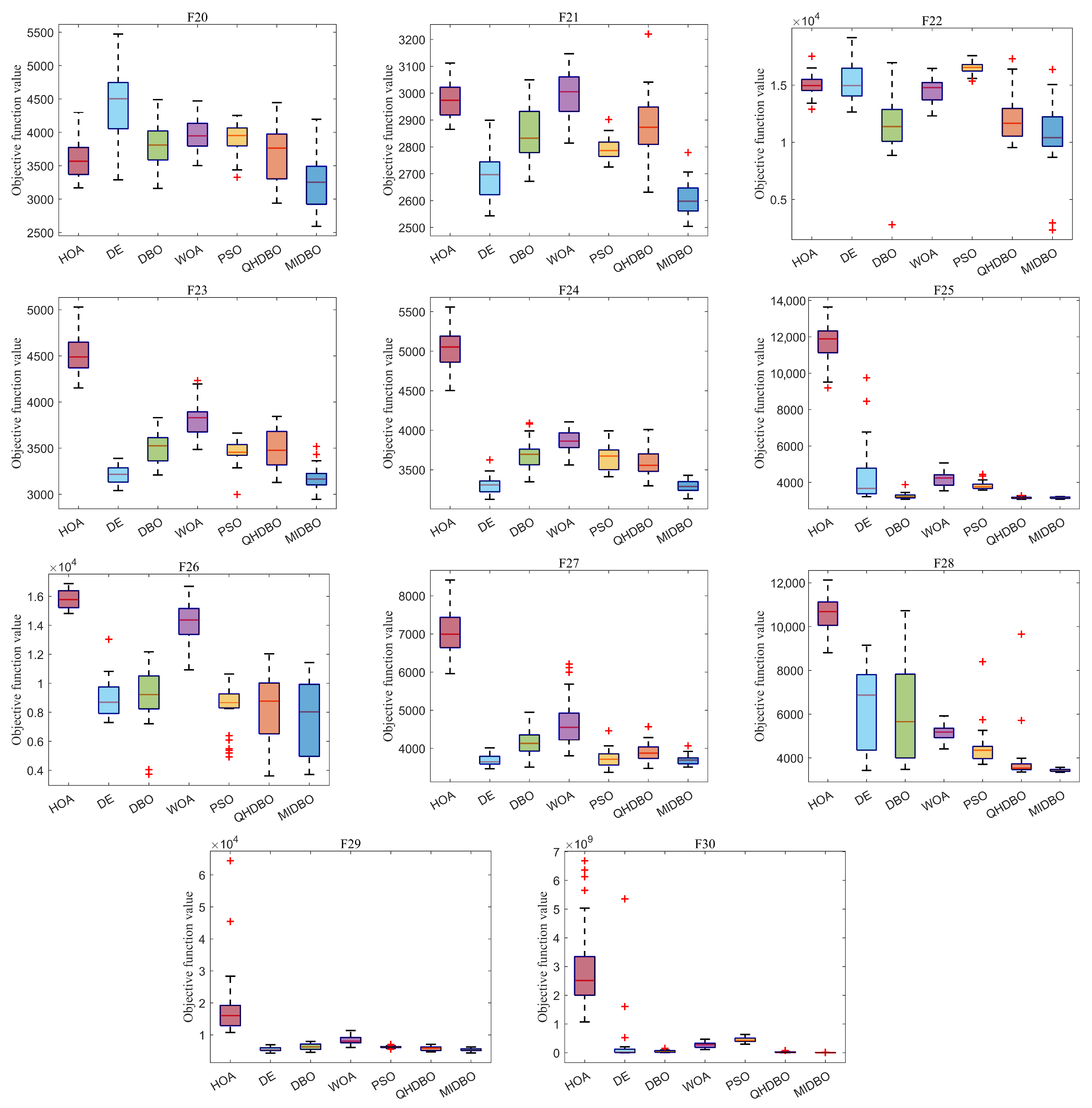

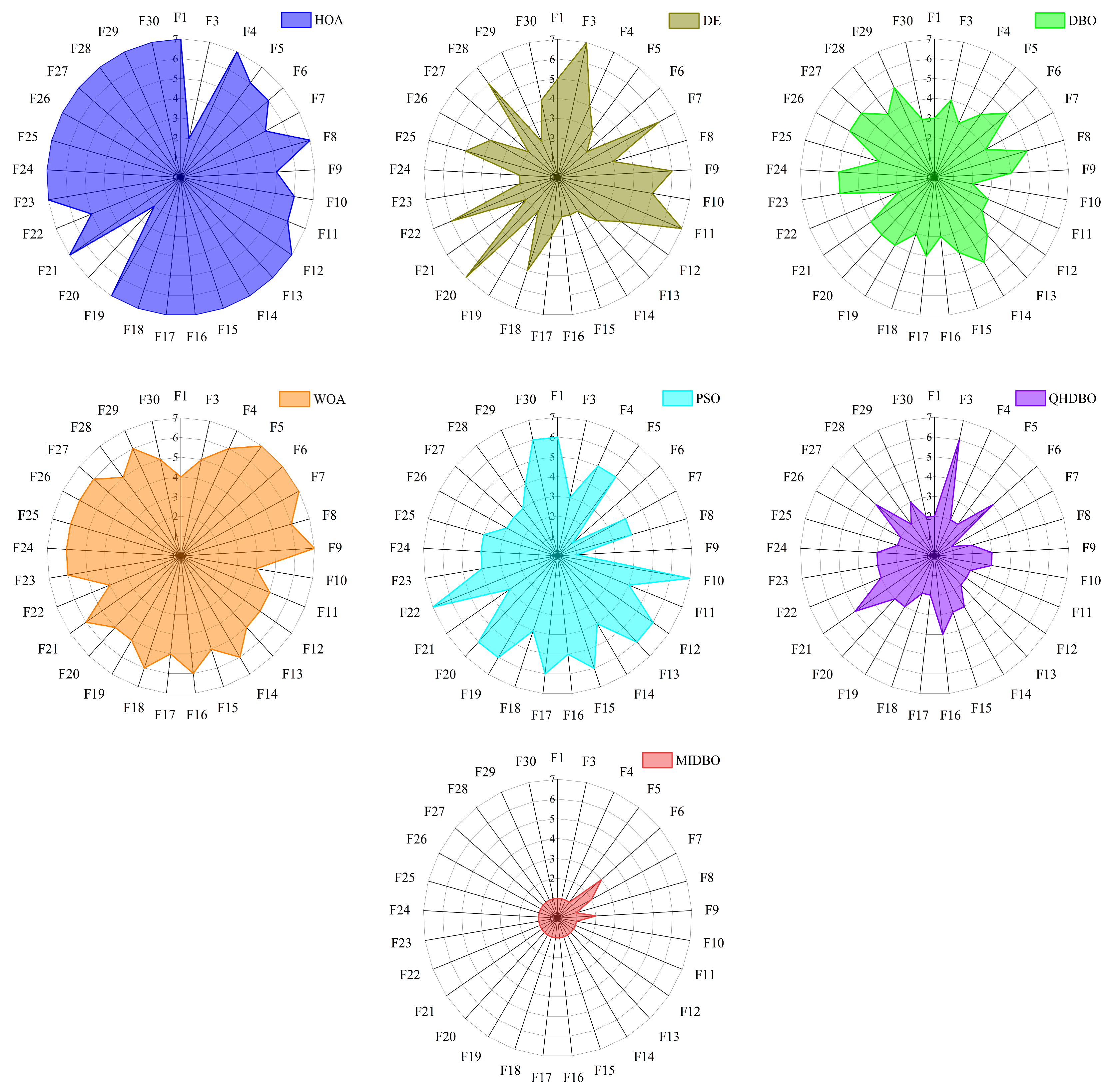

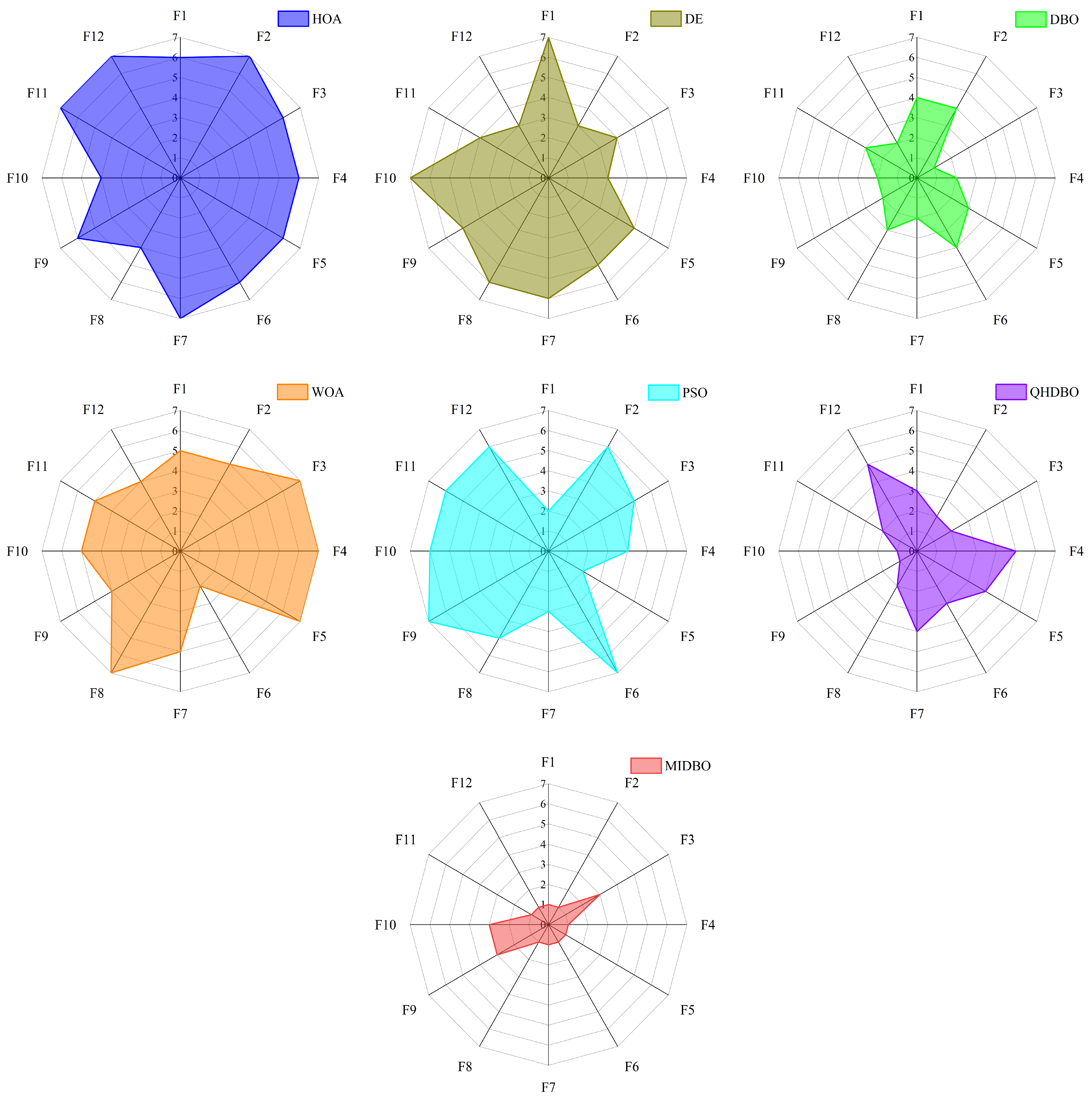

Figure 6 displays the radar chart of the seven algorithms’ rankings across all 29 functions. The MIDBO enclosed the smallest area of all algorithms, indicating the best overall performance, whereas the HOA enclosed the largest area and the DBO had the third-smallest. These results show that the enhancement strategies substantially improved the DBO’s performance and confirm the MIDBO’s overall superiority over all compared algorithms.
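If an algorithm’s rank on function i is plotted as the radius r_i on one of n equally spaced axes, the enclosed polygon consists of n triangles with apex angle 2π/n, so its area is ½·sin(2π/n)·Σ r_i·r_(i+1), with indices taken cyclically. The sketch below assumes that the radial axis starts at zero and that the radii equal the ranks; the actual plotting scale of the figure is not stated, and `radar_area` is a hypothetical helper.

```python
import math

def radar_area(ranks):
    """Area of a radar-chart polygon whose i-th vertex lies at distance ranks[i] on axis i."""
    n = len(ranks)
    theta = 2 * math.pi / n  # equal angle between adjacent axes
    # Sum of triangles formed by consecutive spokes (the last spoke wraps to the first)
    return 0.5 * math.sin(theta) * sum(ranks[i] * ranks[(i + 1) % n] for i in range(n))

print(radar_area([1, 1, 1, 1]))  # 2.0
```

Because every product term grows with the ranks, an algorithm that ranks first more often encloses a smaller area, which is why the smallest polygon indicates the best overall ranking.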

4.3. CEC2022 Benchmark Function Results and Analysis

To evaluate the MIDBO’s effectiveness on complex optimization problems more comprehensively, we conducted a systematic comparison between the MIDBO and the same competing algorithms on the CEC2022 benchmark suite. The experimental parameters were a population size of 30, a termination criterion of 1000 iterations, and 30 independent runs to ensure robust results. The statistical results in Table 5 highlight a marked advantage for the MIDBO, which ranked first among all compared algorithms with an average rank of 1.5, corroborating its strong global optimization capability. The advantage was most pronounced on specific functions: on eight of the benchmark functions (F1, F2, F4, F6–F8, F11, and F12), the MIDBO achieved the best mean convergence accuracy and ranked first, indicating that it maintains an efficient search across problems of diverse complexity. On function F10, it ranked third. On functions F3 and F9, it failed to obtain the best mean, placing third behind the DBO and QHDBO. On function F5, although its mean was marginally inferior to that of PSO, it still ranked first. Despite these minor fluctuations on a few functions, the overall results confirm the effectiveness of the MIDBO in improving convergence accuracy.
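As a consistency check, the per-function ranks reported above (first place on eight functions, first place on F5, and third place on F3, F9, and F10) reproduce the MIDBO’s average rank over the 12 CEC2022 functions:

$$
\bar{R}_{\mathrm{MIDBO}}
  = \frac{\underbrace{8 \times 1}_{\text{F1, F2, F4, F6--F8, F11, F12}}
        + \underbrace{1}_{\text{F5}}
        + \underbrace{3 \times 3}_{\text{F3, F9, F10}}}{12}
  = \frac{18}{12} = 1.5
$$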

According to Table 5, the seven algorithms’ performance on the CEC2022 benchmark can be summarized by the following ranking: MIDBO > DBO > QHDBO > DE > PSO > WOA > HOA.

A Wilcoxon rank-sum test was conducted, with the results detailed in Table 6. The win/tie/loss tallies were 12/0/0, 9/3/0, 6/5/1, 11/1/0, 11/1/0, and 8/3/1. Against the DBO, p > 0.05 on functions F3–F5, F7, and F10, and the DBO was superior on function F9; the MIDBO outperformed the DBO on the remaining six functions. Against DE, p > 0.05 on functions F2, F3, and F4, while the MIDBO was superior on the other nine functions. Against the WOA and PSO, p > 0.05 on functions F6 and F5, respectively, and the MIDBO was superior on the remaining 11 functions in each case. Against the QHDBO, p > 0.05 on functions F3, F8, and F11; the QHDBO was superior only on function F9, whereas the MIDBO held an advantage on the other eight functions. Finally, the MIDBO was superior to the HOA on all 12 functions. Overall, the MIDBO achieved the most competitive results on the CEC2022 benchmark.

Figure 7 shows the convergence behavior of all algorithms on the CEC2022 test suite. On function F1, the MIDBO initially converged more slowly than PSO; however, as the iterations progressed, PSO plateaued while the MIDBO kept descending and ultimately reached the optimal value. On function F5, the MIDBO’s initial convergence was outpaced by both DE and PSO. In the later stages, DE’s descent stagnated while the MIDBO continued to descend steadily; nevertheless, its convergence rate remained slower than that of PSO, and it ultimately did not match PSO’s final precision. On function F9, the MIDBO initially outpaced all competing algorithms, but its progress plateaued in the later stages, reaching a final accuracy comparable to those of the DBO and QHDBO. Apart from these few cases in which it was slower or did not reach the optimal value, the MIDBO generally converged faster and achieved higher solution precision. This performance supports the efficacy of the combined strategies, whose synergy drives the algorithm toward the global optimum.

Figure 8 compares the algorithms’ performance on the CEC2022 benchmark using box plots. On most functions, the MIDBO’s boxes were smaller and positioned lower, indicating superior performance. On functions F3, F4, and F7, the MIDBO’s box was larger than that of PSO but positioned lower. On F5, the MIDBO’s overall performance was inferior to that of PSO. On function F4, the MIDBO’s box was also larger than that of the HOA, but again positioned lower. In summary, the box plot analysis supports the MIDBO’s advantage over its counterparts and underscores the value of the enhancements presented in this paper.

Figure 9 displays the radar chart, which compares the ranks achieved by the seven algorithms for the 12 test functions from the CEC2022 suite. The figure reveals that the MIDBO’s enclosed shape had the smallest area. In contrast, the HOA’s area was the largest, while the DBO ranked second and the QHDBO ranked third. The radar chart’s enclosed areas confirm that the various proposed strategies notably improved the DBO’s optimization performance.

5. Engineering Optimization Problems

This section examines the effectiveness of the MIDBO in real-world scenarios. To this end, its performance was validated on three standard engineering design problems: the tension–compression spring design problem [51], the pressure vessel design problem [52], and the speed reducer design problem [53]. Because these engineering problems involve multiple constraints, this study handled the constraints with the penalty function method, owing to its simplicity and ease of application. The penalized objective function is formulated as follows:

$$F(\mathbf{x}) = f(\mathbf{x}) + r\left(\sum_{i=1}^{m}\left[\max\left(0,\, g_i(\mathbf{x})\right)\right]^{\alpha} + \sum_{j=1}^{n}\left|h_j(\mathbf{x})\right|^{\beta}\right)$$

where $F(\mathbf{x})$ denotes the modified objective function and $f(\mathbf{x})$ the original objective. The penalty coefficient $r$ was set to 10e100 in the experiments. The terms $g_i(\mathbf{x})$ and $h_j(\mathbf{x})$ are the inequality and equality constraint functions, respectively (a solution is feasible when $g_i(\mathbf{x}) \le 0$ and $h_j(\mathbf{x}) = 0$), and $\alpha$ and $\beta$ are constants, both set to two in this paper. The numbers of inequality and equality constraint functions are denoted by $m$ and $n$, respectively. When a candidate solution violates a constraint, the penalty function adds a penalty term to its objective value, thereby steering the search toward feasible and more promising regions.
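The static penalty scheme above can be sketched as a wrapper that turns a constrained problem into an unconstrained one that any of the compared optimizers can minimize. This is a minimal sketch assuming the convention g_i(x) ≤ 0 and h_j(x) = 0 for feasibility; `penalized_objective` is a hypothetical helper, and `r` defaults to the literal value 10e100 given in the text, which Python reads as 1e101.

```python
def penalized_objective(f, ineq=(), eq=(), r=10e100, alpha=2, beta=2):
    """Return F(x) = f(x) + r * (sum max(0, g_i(x))**alpha + sum |h_j(x)|**beta)."""
    def F(x):
        violation = sum(max(0.0, g(x)) ** alpha for g in ineq)  # only violated g_i count
        violation += sum(abs(h(x)) ** beta for h in eq)        # any deviation from h_j = 0
        return f(x) + r * violation
    return F

# Usage: minimize x0**2 subject to x0 >= 1, written as g(x) = 1 - x0 <= 0
F = penalized_objective(lambda x: x[0] ** 2, ineq=[lambda x: 1 - x[0]])
print(F([2.0]))         # 4.0 (feasible, no penalty)
print(F([0.5]) > 1e99)  # True (constraint violated, heavily penalized)
```

With a coefficient this large, any infeasible point scores worse than every feasible one, so the optimizer effectively compares infeasible candidates only by their degree of violation.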

For the engineering design experiments, the same comparison algorithms as in the preceding section were used. The experimental parameters were a population size of 30, a termination criterion of 500 iterations, and 20 independent runs to ensure robust results. Performance was evaluated using four statistics: the best, mean, standard deviation, and worst values.
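The four statistics reported for each algorithm can be computed from the final objective values of the 20 runs as follows. Using the sample standard deviation (n − 1 denominator) is an assumption, since the text does not say which form was used; `summarize` is a hypothetical helper.

```python
import statistics

def summarize(final_values):
    """Best, mean, standard deviation, and worst of the final objective values (minimization)."""
    return {
        "best": min(final_values),
        "mean": statistics.mean(final_values),
        # sample standard deviation (n - 1 denominator); assumed, not stated in the text
        "std": statistics.stdev(final_values),
        "worst": max(final_values),
    }

print(summarize([1.0, 2.0, 3.0]))  # {'best': 1.0, 'mean': 2.0, 'std': 1.0, 'worst': 3.0}
```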

5.1. Tension–Compression Spring Design Problem

The classic tension–compression spring design problem involves optimizing three variables: the wire diameter (d), mean coil diameter (D), and number of active coils (N). The objective is to minimize the spring’s weight subject to constraints on the minimum deflection, surge frequency, and shear stress. The governing equations for this problem are given in Equations (24)–(27).
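For reference, the sketch below encodes the formulation of this problem that is most widely used in the literature: a weight of (N + 2)·D·d², with constraints on deflection, shear stress, and surge frequency plus an outer-diameter limit. That it coincides with Equations (24)–(27) is an assumption, as are the variable bounds. Each constraint is written in the form g ≤ 0 expected by the penalty method, and `spring` is a hypothetical helper.

```python
def spring(x):
    """Tension/compression spring: returns (weight, [g1..g4]); feasible when every g <= 0."""
    d, D, N = x  # wire diameter, mean coil diameter, number of active coils
    weight = (N + 2) * D * d ** 2
    g = [
        1 - D ** 3 * N / (71785 * d ** 4),  # minimum deflection
        (4 * D ** 2 - d * D) / (12566 * (D * d ** 3 - d ** 4))
        + 1 / (5108 * d ** 2) - 1,  # shear stress
        1 - 140.45 * d / (D ** 2 * N),  # surge frequency
        (d + D) / 1.5 - 1,  # outer-diameter limit
    ]
    return weight, g

BOUNDS = [(0.05, 2.0), (0.25, 1.3), (2.0, 15.0)]  # commonly used bounds for d, D, N (assumed)

w, g = spring([0.051689, 0.356718, 11.288966])
print(round(w, 6))
```

At the design d ≈ 0.051689, D ≈ 0.356718, N ≈ 11.288966, frequently reported in the literature, this formulation gives a weight of about 0.012665, with the first two constraints almost exactly active.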

The findings, summarized in Tables 7 and 8, show that the MIDBO outperformed all other algorithms on this problem, achieving higher optimization accuracy and significantly better stability. Its high ranking underscores its competitiveness on the tension–compression spring design problem.

5.2. Pressure Vessel Design Problem

The pressure vessel design problem requires optimizing four parameters: the shell thickness, head thickness, inner radius, and the vessel’s length (excluding the heads). The main goal is to minimize the total cost of the vessel while satisfying four constraints. The mathematical model for this problem is given in Equations (28)–(31).
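The sketch below encodes the pressure vessel formulation that is widely cited in the literature, with the four variables in the order listed above. Whether it matches Equations (28)–(31) exactly is an assumption; in particular, variable bounds differ between studies, which affects the attainable minimum cost, so values from this sketch need not match those in Table 9. `pressure_vessel` is a hypothetical helper.

```python
import math

def pressure_vessel(x):
    """Pressure vessel: returns (cost, [g1..g4]); feasible when every g <= 0."""
    ts, th, r, length = x  # shell thickness, head thickness, inner radius, length without heads
    cost = (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)
    g = [
        -ts + 0.0193 * r,  # minimum shell thickness for the given radius
        -th + 0.00954 * r,  # minimum head thickness for the given radius
        -math.pi * r ** 2 * length - 4 / 3 * math.pi * r ** 3 + 1296000,  # minimum volume
        length - 240,  # maximum length
    ]
    return cost, g

cost, g = pressure_vessel([0.778168641, 0.384649163, 40.3196187, 200.0])
print(round(cost, 2))
```

At this design, often reported for the variant with the length bounded by 200, the cost is about 5885.33 and the two thickness constraints are active.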

Tables 9 and 10 report the performance of the seven algorithms on the pressure vessel design problem. The results show clear advantages for the proposed MIDBO in optimization accuracy and average performance: it outperformed all competitors on both the best and mean values, achieving a best value of 5743.021200 and a mean value of 6033.448733. PSO, however, performed better on the worst value and the standard deviation. Nonetheless, the MIDBO’s superior best and mean results confirm its overall efficacy and practical value for engineering problems with complex constraints and stringent precision requirements, setting it apart from the other compared algorithms.

5.3. Speed Reducer Design Problem

The speed reducer design problem involves optimizing seven parameters: the face width, module of the teeth, number of teeth on the pinion, lengths of the first and second shafts between bearings, and diameters of the first and second shafts. The objective is to find the combination of variables that minimizes the weight of the speed reducer while satisfying 11 engineering constraints. The governing equations for this problem are given in Equations (32)–(35).
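The sketch below encodes the speed reducer formulation as it is commonly stated in the literature, with the seven variables in the order listed above and the 11 constraints written as g ≤ 0. That it matches Equations (32)–(35) is an assumption, and `speed_reducer` is a hypothetical helper.

```python
import math

def speed_reducer(x):
    """Speed reducer: returns (weight, [g1..g11]); feasible when every g <= 0."""
    # face width, module, pinion teeth, shaft lengths 1 and 2, shaft diameters 1 and 2
    x1, x2, x3, x4, x5, x6, x7 = x
    weight = (0.7854 * x1 * x2 ** 2 * (3.3333 * x3 ** 2 + 14.9334 * x3 - 43.0934)
              - 1.508 * x1 * (x6 ** 2 + x7 ** 2)
              + 7.4777 * (x6 ** 3 + x7 ** 3)
              + 0.7854 * (x4 * x6 ** 2 + x5 * x7 ** 2))
    g = [
        27 / (x1 * x2 ** 2 * x3) - 1,  # bending stress of the gear teeth
        397.5 / (x1 * x2 ** 2 * x3 ** 2) - 1,  # surface stress
        1.93 * x4 ** 3 / (x2 * x3 * x6 ** 4) - 1,  # transverse deflection, shaft 1
        1.93 * x5 ** 3 / (x2 * x3 * x7 ** 4) - 1,  # transverse deflection, shaft 2
        math.sqrt((745 * x4 / (x2 * x3)) ** 2 + 16.9e6) / (110 * x6 ** 3) - 1,  # stress, shaft 1
        math.sqrt((745 * x5 / (x2 * x3)) ** 2 + 157.5e6) / (85 * x7 ** 3) - 1,  # stress, shaft 2
        x2 * x3 / 40 - 1,  # space limitation
        5 * x2 / x1 - 1,  # lower bound on face width / module
        x1 / (12 * x2) - 1,  # upper bound on face width / module
        (1.5 * x6 + 1.9) / x4 - 1,  # shaft 1 design rule
        (1.1 * x7 + 1.9) / x5 - 1,  # shaft 2 design rule
    ]
    return weight, g

w, g = speed_reducer([3.5, 0.7, 17, 7.3, 7.71532, 3.350215, 5.286654])
print(round(w, 2))
```

At this design, frequently reported in the literature, the weight is about 2994.47 and all 11 constraints are satisfied to within rounding.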

As the data in Tables 11 and 12 show, the algorithms differed in optimization accuracy and stability. Although the DE algorithm achieved the best value (2513.700952), its standard deviation (75.53042354) ranked only fourth among the compared algorithms, indicating relatively unstable results. In contrast, the proposed MIDBO showed a strong balance. It achieved the second-best value (2994.234252), outperforming most of the compared algorithms, including the baseline DBO, and, more importantly, the best stability, with a standard deviation of 0.000319158, far lower than those of all other algorithms. Compared with the baseline DBO, the MIDBO improved significantly on all four metrics. In conclusion, the MIDBO greatly enhanced stability while preserving high solution accuracy, making it an efficient and reliable option for such engineering optimization problems.

6. Conclusions

In this paper, we proposed an improved Dung Beetle Optimizer (MIDBO) that augments the DBO with a chaotic opposition-based learning strategy, an oscillating balance factor strategy, an improved foraging strategy, and a multi-population differential co-evolutionary mechanism. The improved algorithm was benchmarked against the original DBO and other metaheuristic algorithms on the CEC2017 and CEC2022 benchmark suites. The experimental results show that the MIDBO excelled in two key areas: convergence speed and solution precision. Furthermore, the results on three real-world engineering problems confirmed the MIDBO’s strong performance on complex optimization tasks.

Despite the MIDBO’s strong overall performance, the numerical experiments revealed relatively poor convergence accuracy on certain functions in both test suites, suggesting room for further improvement. In addition, incorporating multiple strategies has increased the MIDBO’s complexity. Future work could therefore combine the MIDBO with other swarm intelligence algorithms to form more effective hybrid algorithms and further improve its performance. To broaden the international scope of this research and drive the algorithm’s continued development, we also plan to compare the MIDBO against advanced algorithms from the wider international literature, addressing the limited range of works referenced in the current study. This will allow the MIDBO’s performance to be evaluated against internationally recognized benchmarks and provide a more robust and diverse theoretical foundation for its further refinement.

Additionally, although the MIDBO performed well on the engineering design problems, the no free lunch (NFL) theorem implies that no single algorithm is optimal for all optimization problems. The extent of the MIDBO’s advantage in more demanding engineering domains therefore warrants further investigation. Our future work will accordingly examine the applicability of the MIDBO in more sophisticated engineering contexts, such as unmanned aerial vehicle path planning and task allocation, regression prediction, feature selection, and model parameter optimization, with the goal of broadening its practical applications. We hope that these studies will contribute to the swarm intelligence field by providing more efficient methods and tools.