Abstract

Global optimization problems, prevalent across scientific and engineering disciplines, necessitate efficient algorithms for navigating complex, high-dimensional search spaces. Drawing inspiration from the resilient and adaptive growth strategies of moss colonies, the moss growth optimization (MGO) algorithm presents a promising biomimetic approach to these challenges. However, the original MGO can experience premature convergence and limited exploration capabilities. This paper introduces an enhanced bio-inspired algorithm, termed crisscross moss growth optimization (CCMGO), which incorporates a crisscross (CC) strategy and a dynamic grouping parameter, further emulating the biological mechanisms of spore dispersal and resource allocation in moss. By mimicking the interwoven growth patterns of moss, the CC strategy facilitates information exchange among population members, thereby enhancing offspring diversity and accelerating convergence. The dynamic grouping parameter, analogous to the adaptive resource allocation strategies of moss in response to environmental changes, balances exploration and exploitation for a more efficient search. Experimental evaluations on the CEC2017 benchmark suite show that CCMGO outperforms nine established metaheuristic algorithms overall, achieving the best average Friedman rank across diverse benchmark functions. Furthermore, in a real-world application to a three-channel reservoir production optimization problem, CCMGO achieves a higher mean net present value (NPV) than the benchmark algorithms. This successful application highlights CCMGO’s potential as a robust and adaptable tool for addressing complex, real-world optimization challenges, particularly those found in resource management and other nature-inspired domains.

1. Introduction

Optimization is a crucial step in diverse fields such as engineering and economics, aiming to identify the optimal solution within a vast decision space [1]. This process is essential in practical applications to enhance efficiency and minimize costs. Many real-world tasks, including structural optimization in engineering, experimental parameter tuning in scientific research, and resource scheduling in industrial operations, often translate into complex optimization challenges [2]. These problems are frequently characterized by high-dimensional, nonlinear, and multimodal landscapes, often compounded by intricate constraints and dynamic environments [3].

Traditional optimization methods, such as gradient-based algorithms, simplex methods [4], and dynamic programming [5], have been extensively utilized. Gradient-based algorithms, including steepest descent [6] and conjugate gradient methods [7], are effective for convex and differentiable problems but struggle with nonconvexity, discontinuity, or the presence of multiple local optima. Simplex methods and dynamic programming perform well for specific problem types but typically suffer from the curse of dimensionality when addressing large-scale or high-dimensional problems [8]. Consequently, traditional optimization methods often fall short in addressing the complexities of many practical scenarios [9].

Metaheuristic algorithms have emerged as powerful alternatives, gaining significant attention for their adaptability and global search capabilities [10]. Unlike traditional methods, metaheuristic algorithms are not constrained by problem-specific properties such as convexity or differentiability. They employ stochastic mechanisms and principles inspired by natural phenomena to explore solution spaces effectively, making them particularly well suited for tackling complex, multimodal, and high-dimensional optimization problems [11].

In the literature, metaheuristic algorithms are generally classified into two main categories: evolutionary algorithms (EAs) and swarm intelligence (SI) algorithms [12]. Both EAs and SI algorithms share a similar framework: they initialize a set of solutions, iteratively update this population through specific operators, and return the best solution found when a termination condition is met. EAs are inspired by the principles of natural selection and biological evolution [13]. These algorithms iteratively refine a population of candidate solutions through selection, crossover, and mutation operators. Representative EAs include genetic algorithms (GAs) [14], differential evolution algorithms (DEs) [15], and spherical evolutionary algorithms (SEs) [16]. SI algorithms, in contrast, draw inspiration from the collective behavior of social organisms such as birds, ants, and bees. These algorithms emphasize cooperation and information sharing among individuals in the population, leading to efficient exploration of the search space and rapid convergence. Prominent SI algorithms include particle swarm optimization (PSO) [17], which models the social foraging behavior of bird flocks; ant colony optimization (ACO) [18], a method widely used for combinatorial problems such as routing and scheduling; and artificial bee colony (ABC) [19], which mimics the foraging behavior of honeybees.

Despite the success of metaheuristics in addressing complex optimization problems, these algorithms face fundamental limitations, as highlighted by the no free lunch (NFL) theorem [20]. This theorem fundamentally states that no single optimization algorithm is universally superior across all possible optimization problems. Instead, it reveals that algorithm performance is inherently problem-dependent. A strategy that excels at solving one type of problem may perform poorly when applied to a different type. This means there are always trade-offs in algorithm design. Consequently, understanding specific problem characteristics and tailoring algorithm design or improvements to those characteristics becomes crucial for achieving superior practical performance.

One prominent application domain where metaheuristic algorithms have demonstrated significant potential is reservoir production optimization [21]. In oil reservoir development, establishing an effective production scheme is essential for efficient hydrocarbon recovery and sustained production [22]. Optimizing injection and production processes in oil reservoirs involves considering numerous dynamic factors, such as reservoir heterogeneity, fluid properties, and operational constraints [23]. Achieving an optimal balance in injection rates and production strategies is vital for maximizing hydrocarbon recovery and minimizing operational costs. The inherent complexities of this domain, characterized by nonlinearity, uncertainty, and dynamic behavior, present significant challenges for traditional optimization methods. These methods often struggle with the high dimensionality and multifaceted nature of reservoir management problems. Consequently, there is a growing interest in applying metaheuristic algorithms, which offer adaptability and global search capabilities, to address these challenges and provide robust solutions for reservoir production optimization.

In the academic pursuit of optimizing petroleum injection and production, a variety of methodologies have been employed, and Table 1 summarizes relevant recent studies. Much of this research has concentrated on developing surrogate models, often with differential evolution (DE) or particle swarm optimization (PSO) as the underlying optimizer. Nevertheless, the optimizer should be selected to match the particular characteristics of the problem. Such a tailored approach aligns the search with the specific demands of petroleum injection and production optimization and can thereby improve efficiency and performance in this domain.

Table 1.

Recent years’ advances in optimization methodologies for petroleum production.

The moss growth optimizer (MGO), a swarm intelligence optimization algorithm introduced by Zheng et al. [30], draws inspiration from the growth patterns of moss in natural environments. MGO determines the evolutionary direction of the population through a “definite wind direction” mechanism. It then employs spore dispersal search and dual propagation search for exploration and exploitation of the search space, respectively. Finally, a cryptobiosis mechanism replaces the conventional metaheuristic practice of directly altering individual solutions. While experimental results have demonstrated the effectiveness of MGO, several limitations remain. First, the algorithm lacks information exchange among individuals in the population, leading to suboptimal use of population knowledge. Second, the absence of dynamic adaptive strategies hinders the algorithm’s ability to search effectively across the different stages of the search process.

In this paper, we propose an improved MGO method, named CCMGO. By incorporating a crisscross strategy, CCMGO enhances information exchange among individuals in the population, promotes offspring diversity, and accelerates convergence towards the optimal solution. Furthermore, the proposed dynamic grouping parameter balances the algorithm’s exploration and exploitation capabilities, enabling effective adaptation across different optimization stages. The key contributions of this paper are threefold:

- An improved MGO algorithm, CCMGO, is proposed, which integrates a crisscross (CC) strategy and a dynamic grouping parameter to enhance search efficiency and solution quality.

- CCMGO’s performance is rigorously evaluated against nine classic metaheuristic algorithms using the CEC2017 benchmark suite [31], and the experimental results are statistically validated with the Wilcoxon signed-rank and Friedman tests.

- CCMGO is applied to the practical problem of reservoir production optimization using a three-channel reservoir simulation model. The net present value (NPV) achieved by CCMGO is compared with that of other optimization algorithms to demonstrate its efficacy in a real-world scenario.

This paper is structured into six sections. Section 1 provides an introduction, outlining the research background, motivation, and rationale for improving the MGO algorithm. Section 2 presents a detailed review of the original MGO algorithm, discussing its core principles and identifying areas for improvement. Section 3 introduces the proposed CCMGO algorithm, elaborating on the crisscross strategy and dynamic grouping parameters designed to enhance its performance. Section 4 details the experimental setup, presents the results of the comparative analysis with nine classic metaheuristic algorithms, and discusses the statistical validation of these results. Section 5 demonstrates the application of CCMGO to oil reservoir production optimization, showcasing its performance in comparison to other algorithms. Finally, Section 6 summarizes the key findings, discusses the advantages and limitations of CCMGO, and suggests potential avenues for future research.

2. The Original MGO

MGO is a metaheuristic algorithm proposed by Zheng et al. [30] in 2024 and inspired by the growth of moss in natural environments. The algorithm first establishes the evolutionary trajectory of the population through a mechanism called determination of wind direction, which partitions the population. Inspired by the asexual, sexual, and vegetative reproduction of moss, it introduces two search strategies: spore dispersal search for exploration and dual propagation search for exploitation. Lastly, the cryptobiosis mechanism replaces the traditional metaheuristic approach of directly altering individuals’ solutions, helping the algorithm avoid becoming trapped in local optima. By emulating the biological behavior of moss, the main mathematical model of MGO is structured as follows:

1. Determination of wind direction: MGO has introduced an innovative mechanism known as “Determining Wind Direction”, which establishes the evolutionary trajectory for all members of a population based on the spatial relationship between the majority of individuals and the optimal individual.

First, the population is divided dimension by dimension. The value of the current best individual in a selected dimension serves as the threshold: individuals whose value in that dimension is greater than the threshold form one set, and those whose value is smaller form the other. The set to be retained is then selected according to Equation (1).

where |·| denotes the number of elements in a given set, so that the set containing more individuals is retained. This division is performed several times in succession, and the set obtained after the final division is given by Equation (2):

where the number of divisions is fixed in advance and the same number of dimensions is selected at random. The population is first divided using the first selected dimension, the retained set is then divided using the second selected dimension, and so on until all divisions have been performed, yielding the final set. Based on this set, the wind direction vector is computed as follows:

where the wind direction vector is the best individual minus the vector of per-dimension means of the final set;
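The division procedure behind Equations (1) to (3) can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the reference implementation of [30]. The function name `wind_direction` and the list-of-lists population layout are our own choices. So are two tie-breaking rules: values equal to the threshold join the lower set, and the upper set wins when the two sets are the same size.

```python
import random

def wind_direction(population, best, num_divisions, rng=random):
    """Sketch of MGO's wind-direction step (Equations (1)-(3)).

    population: list of individuals, each a list of floats.
    best: the current best individual.
    Returns (wind vector, size of the final retained set).
    """
    dim = len(best)
    # Randomly choose the dimensions used for the successive divisions.
    dims = rng.sample(range(dim), min(num_divisions, dim))
    retained = list(population)
    for d in dims:
        threshold = best[d]
        above = [x for x in retained if x[d] > threshold]
        below = [x for x in retained if x[d] <= threshold]  # ties go to the lower set (assumption)
        # Equation (1): keep whichever set contains more individuals.
        retained = above if len(above) >= len(below) else below
    # Equation (3): best individual minus the per-dimension mean of the final set.
    n = len(retained)
    mean = [sum(x[d] for x in retained) / n for d in range(dim)]
    wind = [b - m for b, m in zip(best, mean)]
    return wind, n
```

Because the majority set always holds at least half of the current set, the retained set can never become empty. The returned size can feed any term that depends on the ratio of the final set to the population.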

2. Spore dispersal search: The exploration stage of MGO simulates the dissemination process of spores. In the presence of strong winds, the spores can travel longer distances, whereas in turbulent conditions, the dispersion distance of the spores is shorter. The modeling of this process is shown in Equation (4).

Here, the i-th individual generates a new individual, and a random number drawn uniformly from [0, 1] determines which of the two update modes is applied. The update steps of the two modes, step1 and step2, are given in Equations (5) and (6), respectively.

In these equations, one coefficient is a constant equal to 2, another factor is a random vector with entries in [0, 1], and E denotes the wind strength, given in Equation (7).

Equation (7) involves a random vector with entries in [0, 1] and a term given in Equation (8).

Equation (8) depends on the current number of function evaluations and the maximum number of function evaluations.

The spore dispersal model also uses the ratio between the size of the final divided set and the population size;
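The two-mode update of Equation (4) can be illustrated with the sketch below. Equations (5) to (8) are not reproduced in this section, so the step vectors here come from a hypothetical helper, `decaying_step`. It only mirrors the qualitative description: a constant w = 2, a random vector in [0, 1], and shrinkage as the evaluation budget is consumed. The mode threshold `d1 = 0.2` is likewise an assumed value.

```python
import random

def decaying_step(dim, fes, max_fes, scale=1.0, w=2.0, rng=random):
    """Hypothetical step vector (NOT Equations (5)-(8)):
    scale * w * (U(0,1) - 0.5) * (1 - fes / max_fes), drawn per dimension."""
    decay = 1.0 - fes / max_fes
    return [scale * w * (rng.random() - 0.5) * decay for _ in range(dim)]

def spore_dispersal(x, wind, step1, step2, d1=0.2, rng=random):
    """Sketch of Equation (4): move x along the wind direction using one of two
    step vectors; a uniform random draw compared with d1 picks the mode."""
    step = step1 if rng.random() > d1 else step2
    return [xi + si * wi for xi, si, wi in zip(x, step, wind)]
```

In use, one might call `step1 = decaying_step(dim, fes, max_fes)` and `step2 = decaying_step(dim, fes, max_fes, scale=0.1)`. The second call gives the shorter, turbulent-condition mode; the scale of 0.1 is also a hypothetical choice.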

3. Dual propagation search: The dual propagation search strategy simulates the sexual and asexual reproduction of moss. MGO applies dual propagation search with a probability of 80%, and its mathematical model is as follows:

In Equation (9), one branch updates the entire i-th individual, while the other updates only the d-th dimension of the i-th individual. A control parameter, defined in Equation (10), determines whether the corresponding term is used, and the update step is given by Equation (11).

Equation (10) involves a random vector with entries in [0, 1].

Equation (11) involves a random vector with entries in [0, 1] and the wind strength E from Equation (7);
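A sketch of the dual propagation step follows. Only the 80% application probability comes from the text. The following are assumptions made for illustration and are not Equations (9) to (11):

- the even split between the two branches;
- the single-dimension update rule;
- the random binary mask `act`, which stands in for the control parameter of Equation (10).

```python
import random

def dual_propagation(x, best, wind, step3, p_apply=0.8, rng=random):
    """Sketch of Equation (9): with probability p_apply, either update one
    dimension of x or the whole individual. Branch forms are assumptions."""
    if rng.random() >= p_apply:
        return list(x)  # dual propagation skipped this time
    if rng.random() > 0.5:
        # Single-dimension branch: move one randomly chosen dimension.
        new = list(x)
        d = rng.randrange(len(x))
        new[d] = best[d] + step3 * wind[d]
        return new
    # Whole-individual branch: a hypothetical binary mask decides, per
    # dimension, whether the best individual's value is taken.
    act = [1.0 if rng.random() < 0.5 else 0.0 for _ in x]
    return [(1 - a) * xi + a * bi for xi, a, bi in zip(x, act, best)]
```
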

4. Cryptobiosis mechanism: The cryptobiosis mechanism replaces the greedy selection component of traditional metaheuristic algorithms. It draws inspiration from cryptobiosis in mosses, an ability that enables these organisms to recover and thrive after periods of dormancy or desiccation. Unlike conventional greedy selection, this mechanism uses an archive to store updated individuals across generations. When specific conditions are satisfied, such as reaching a maximum record count, selection is triggered and the current individual is replaced with the best performer from the archive. This strategy allows each individual to restart its search from the same location multiple times, thereby enhancing the optimization performance of MGO. Interested readers are referred to [30] for a detailed exposition.
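The archive logic described above can be sketched as a small class that tracks a single individual. The class name and the `rec_num` parameter are illustrative assumptions. The choice to clear the archive after each revival is also ours; see [30] for the exact triggering conditions.

```python
class CryptobiosisArchive:
    """Sketch of the cryptobiosis idea for one individual: updated positions are
    recorded instead of being greedily accepted or rejected, and once rec_num
    records exist the best recorded position is restored (minimization)."""

    def __init__(self, rec_num):
        self.rec_num = rec_num
        self.records = []  # (fitness, position) pairs

    def record(self, position, fitness):
        self.records.append((fitness, list(position)))

    def ready(self):
        return len(self.records) >= self.rec_num

    def revive(self):
        """Return the best recorded (position, fitness) and clear the archive."""
        fitness, position = min(self.records, key=lambda r: r[0])
        self.records.clear()
        return position, fitness
```

In a full loop, each updated position would be recorded every iteration, and `revive` would be called whenever `ready()` returns true.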

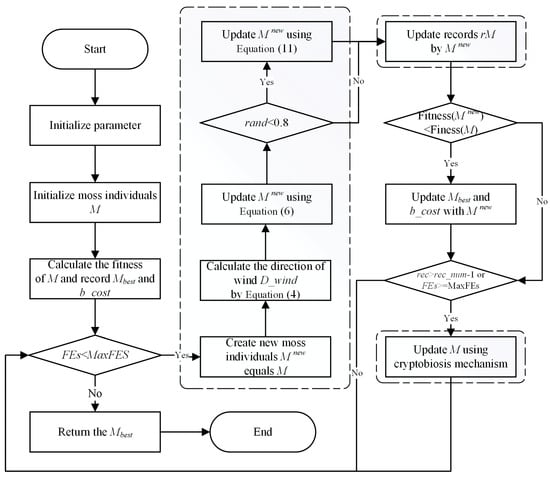

In summary, MGO starts by randomly generating a set of initial individuals, and during each iteration, it first determines the evolutionary direction of the population based on the wind direction, after which it performs spore dispersal search and dual propagation search sequentially based on the given probability and updates each individual through the cryptobiosis mechanism. This process is repeated until the maximum number of evaluations is reached and the optimal individual of the current population is returned. The flowchart of MGO is shown in Figure 1.

Figure 1.

Flowchart of the MGO.

3. Proposed CCMGO

3.1. Crisscross Strategy

The CC concept originates from the crisscross optimization (CSO) algorithm introduced in 2014 [32] and comprises two key components: horizontal crossover search (HCS) and vertical crossover search (VCS). CC embodies the principle of “The Doctrine of the Mean”, dynamically adjusting the search trajectory within the solution space. HCS exchanges information among individuals, while VCS performs crossover between different dimensions of the same individual. This dual crossover strategy, coupled with a competitive selection mechanism, enhances the algorithm’s global search capability and accelerates convergence.

In this study, we integrate the CC strategy into the original MGO algorithm to enhance information dissemination among individuals in the population, thereby helping the algorithm escape local optima more effectively. The HCS and VCS strategies incorporated in CC are detailed below.

3.1.1. Horizontal Crossover Search

The HCS operation involves randomly selecting particles from the population and pairing them to perform a crossover operation. This process leverages population information to enhance the algorithm’s exploratory capabilities. The mathematical formulation of HCS is defined in Equations (12) and (13).

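$$MS_{hc}(i,d) = r_1 \cdot X(i,d) + (1 - r_1) \cdot X(j,d) + c_1 \cdot \big(X(i,d) - X(j,d)\big) \tag{12}$$

$$MS_{hc}(j,d) = r_2 \cdot X(j,d) + (1 - r_2) \cdot X(i,d) + c_2 \cdot \big(X(j,d) - X(i,d)\big) \tag{13}$$

where $r_1$ and $r_2$ denote random numbers within the interval [0, 1]; $c_1$ and $c_2$ denote random numbers within the interval [−1, 1]; $X(i,d)$ denotes the value of the $d$-th dimension of the $i$-th particle, and $X(j,d)$ is the value of the $d$-th dimension of the $j$-th particle. $MS_{hc}(i,d)$ and $MS_{hc}(j,d)$ are the new offspring generated by HCS from the two particles. HCS then applies greedy selection, retaining the fitter of each offspring and its parent.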
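The HCS update with greedy selection can be sketched as follows. The sketch assumes minimization, a population stored as a list of lists, and random pairing through a shuffled index order. Boundary handling is omitted, and the function name `horizontal_crossover` is our own.

```python
import random

def horizontal_crossover(pop, fit, f, rng=random):
    """Sketch of HCS (Equations (12)-(13)) with greedy selection, minimizing f.
    pop: list of positions (lists of floats); fit: their fitness values.
    Both lists are updated in place and also returned."""
    n, dim = len(pop), len(pop[0])
    order = list(range(n))
    rng.shuffle(order)  # random pairing; with odd n the last individual is unpaired
    for k in range(0, n - 1, 2):
        i, j = order[k], order[k + 1]
        child_i, child_j = [], []
        for d in range(dim):
            r1, r2 = rng.random(), rng.random()              # in [0, 1)
            c1, c2 = rng.uniform(-1, 1), rng.uniform(-1, 1)  # in [-1, 1]
            xi, xj = pop[i][d], pop[j][d]
            child_i.append(r1 * xi + (1 - r1) * xj + c1 * (xi - xj))
            child_j.append(r2 * xj + (1 - r2) * xi + c2 * (xj - xi))
        # Greedy selection: each offspring replaces its own parent only if better.
        for idx, child in ((i, child_i), (j, child_j)):
            fc = f(child)
            if fc < fit[idx]:
                pop[idx], fit[idx] = child, fc
    return pop, fit
```

Both children are computed from the unmodified parents before either replacement, so the pair is treated symmetrically.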

3.1.2. Vertical Crossover Search

The VCS operation entails randomly selecting dimensions within each particle and pairing them for a crossover operation. This mechanism utilizes individual information to enhance the algorithm’s exploitative capabilities. The specific formulation of the VCS operation is given in Equation (14).

$$MS_{vc}(i,d_1) = r \cdot X(i,d_1) + (1 - r) \cdot X(i,d_2) \tag{14}$$

where $r$ denotes a random number within the interval [0, 1]; $X(i,d_1)$ and $X(i,d_2)$ denote the values of the two dimensions randomly selected for the $i$-th individual; and $MS_{vc}(i,d_1)$ denotes the offspring generated by VCS. As with HCS, VCS applies greedy selection, retaining whichever of the offspring and its parent has the better fitness.
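The following is a minimal sketch of VCS with greedy selection. It assumes minimization and that all dimensions share a common range. Problems whose dimensions have different bounds would need the values normalized before blending. The function name is illustrative.

```python
import random

def vertical_crossover(pop, fit, f, rng=random):
    """Sketch of VCS (Equation (14)) with greedy selection, minimizing f.
    For each individual, two distinct dimensions d1, d2 are drawn and
    dimension d1 of the offspring becomes r * x[d1] + (1 - r) * x[d2]."""
    dim = len(pop[0])
    if dim < 2:
        return pop, fit  # VCS needs at least two dimensions
    for i in range(len(pop)):
        x = pop[i]
        d1, d2 = rng.sample(range(dim), 2)
        r = rng.random()
        child = list(x)
        child[d1] = r * x[d1] + (1 - r) * x[d2]
        fc = f(child)
        if fc < fit[i]:
            pop[i], fit[i] = child, fc
    return pop, fit
```
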

3.2. Dynamic Population Divisions Parameter

The direction determination phase of the original MGO algorithm requires specifying the number of population divisions, which determines the final direction vector defined in Equations (2) and (3). In the original MGO, this number is fixed at a constant value, which may not suit the algorithm’s requirements across the various stages of the optimization process.

Intuitively, during the initial exploration phase, a larger proportion of the population should contribute to determining the update direction, implying fewer population divisions. Conversely, in the later exploitation phase, it is preferable for a smaller subset of individuals to influence the update direction, necessitating a larger number of population divisions. To address this, we propose a dynamic population division parameter that adjusts the number of divisions in each iteration. This ensures that the algorithm’s update direction is influenced by an appropriate number of individuals at each stage, thereby enhancing optimization efficiency. The formulation of this parameter is as follows:

where the number of divisions is computed from the current number of function evaluations, the maximum number of function evaluations, and the dimension of the problem.
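Equation (15) is not reproduced in this section, so the sketch below uses a hypothetical linear schedule. It reproduces only the qualitative behavior described above. The search starts with a single division, and the count grows to roughly a quarter of the dimension as the evaluation budget is exhausted. The upper limit `max_frac = 0.25` and the linear shape are assumptions, not the authors' formula.

```python
import math

def dynamic_divisions(fes, max_fes, dim, max_frac=0.25):
    """Hypothetical schedule (NOT Equation (15)): the number of divisions grows
    linearly from 1 at the start of the search to ceil(max_frac * dim) at the end,
    so early directions average over many individuals and late ones over few."""
    progress = min(max(fes / max_fes, 0.0), 1.0)
    upper = max(1, math.ceil(max_frac * dim))
    return 1 + math.floor(progress * (upper - 1) + 0.5)
```

For a 30-dimensional problem this yields one division at the start and eight at the end. Any monotonically increasing schedule would express the same intent.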

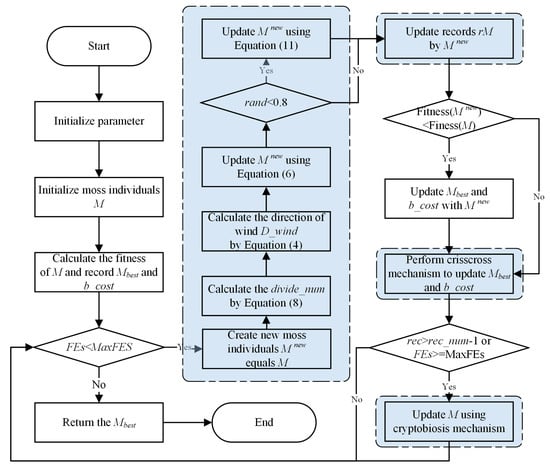

3.3. The Proposed CCMGO

This section introduces the proposed CCMGO algorithm. First, CCMGO initializes the MGO parameters and generates the initial population. The algorithm then updates the population using the original MGO framework. In each iteration, the number of divisions used to determine the wind direction vector is adjusted dynamically. Before each iteration concludes, the CC strategy is applied to generate a new population. This iterative process continues until the maximum number of function evaluations is reached. The algorithm’s workflow is illustrated in Figure 2.

Figure 2.

Flowchart of the CCMGO.

Algorithm 1 provides the pseudo-code for the CCMGO.

| Algorithm 1 Pseudo-code of the CCMGO |

Set parameters: the maximum number of function evaluations MaxFEs, the problem dimension D, and the population size N
Initialize the population X; FEs = 0
For i = 1 to N
    Evaluate the fitness value of X_i
    Find the global minimum X_best and its fitness
End For
While (FEs < MaxFEs)
    Calculate the number of divisions by Equation (15)   /* Dynamic divisions parameter */
    Calculate the wind direction by Equation (3)
    For i = 1 to N
        Create the new search agent X_new equal to X_i
        Update X_new by Equation (4)
        If (rand < 0.8)
            Update X_new by Equation (9)
        End If
        If (…)
            …
        End If
    End For
    For i = 1 to N
        Update X_i using the cryptobiosis mechanism
    End For
    For i = 1 to N   /* CC */
        Perform horizontal crossover search to update X_i
        Perform vertical crossover search to update X_i
        Update X_best
    End For
End While
Return X_best

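Four primary factors contribute to the computational complexity of CCMGO: population initialization, fitness evaluation, position updates, and the crisscross (CC) strategy. Let T denote the number of iterations, N the population size, and D the problem dimension. Initialization is performed once and costs O(N × D). Fitness evaluation costs O(T × N) evaluations, and both the position updates and the CC strategy cost O(T × N × D). The overall complexity is therefore O(CCMGO) ≈ O(N × D) + O(T × N) + O(T × N × D) + O(T × N × D), which simplifies to O(T × N × D).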

4. Experimental Results and Analysis

This section evaluates the performance of the proposed CCMGO algorithm using the 29 benchmark functions from the CEC2017 test suite. Experiments are conducted under fair conditions using these industry-standard benchmarks. The experimental setup consists of an Intel i5-13600KF (Intel Corporation, Santa Clara, CA, USA) processor with 32 GB of RAM, running the Windows 11 operating system. The algorithms are implemented in MATLAB 2024a. For the comparative analysis, all algorithms utilize a population size of 30, a problem dimension of 30, and a maximum of 300,000 function evaluations. Each function is executed 30 times independently, and the average and standard deviation of the resulting objective function values are reported.

4.1. Benchmark Functions Overview

This subsection describes the 29 benchmark functions from the 2017 IEEE Congress on Evolutionary Computation (CEC2017) test suite [31]. These functions are categorized into four groups: unimodal, multimodal, hybrid, and composition functions. This diverse set of functions allows for a comprehensive evaluation of the algorithm’s performance across various function landscapes. Table 2 provides a summary of the CEC2017 benchmark functions.

Table 2.

CEC2017 benchmark functions.

4.2. Performance Comparison with Other Algorithms

This section presents a comparative performance evaluation of the proposed CCMGO algorithm against nine widely used metaheuristic optimization algorithms using the CEC2017 benchmark functions. The comparative algorithms include MGO [30], WOA [33], GWO [34], MFO [35], SCA [36], PSO [37], SMA [38], BA [39], and FA [40]. All algorithms were implemented following the descriptions provided in their respective original papers to maintain methodological consistency. To ensure a fair comparison, the hyperparameter settings for each comparative algorithm were configured according to the recommendations in their original studies (as summarized in Table 3) and were not adjusted further. This means that we used the most commonly reported parameter values from their original papers and other relevant literature, providing an impartial setting for each method.

Table 3.

Hyperparameter settings of comparative algorithms.

Table 4 compares CCMGO with the other nine algorithms on the CEC2017 benchmark functions. For each algorithm and function, the table reports the average (Avg) and standard deviation (Std) of the fitness values over the independent runs. The average measures overall solution quality, and the standard deviation measures stability across repeated runs. The table also lists the Friedman test rank (Rank) of each algorithm on each function and its average rank (Avg) across all functions. The Friedman test was chosen because it is a non-parametric test suited to comparing multiple algorithms over multiple problems. The “+/−/=” column counts the functions on which CCMGO outperformed (+), underperformed (−), or matched (=) each competitor, showing specifically where the proposed approach improves on the other algorithms.
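The average Friedman ranks of the kind reported in Table 4 can be computed as sketched below in plain Python. The sketch assumes that lower fitness is better and that tied algorithms share the mean of the positions they occupy. The `win_tie_loss` helper shows one common way to fill a “+/−/=” column. It counts a win or a loss only when a pairwise test, such as the Wilcoxon signed-rank test, gives p < 0.05. Whether Table 4 uses exactly this rule is an assumption.

```python
def friedman_average_ranks(results):
    """results[f][a]: mean error of algorithm a on function f (lower is better).
    Returns each algorithm's rank averaged over all functions; ties share the
    mean of the positions they occupy."""
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=lambda a: row[a])
        k = 0
        while k < n_alg:
            m = k
            while m + 1 < n_alg and row[order[m + 1]] == row[order[k]]:
                m += 1
            shared = (k + m) / 2 + 1  # 1-based mean position of the tie group
            for p in range(k, m + 1):
                totals[order[p]] += shared
            k = m + 1
    return [t / len(results) for t in totals]

def win_tie_loss(ref_means, other_means, p_values, alpha=0.05):
    """Tally (+, -, =) for a reference algorithm versus one competitor:
    a win or loss is counted only when the pairwise test is significant."""
    wins = losses = ties = 0
    for ref, other, p in zip(ref_means, other_means, p_values):
        if p < alpha and ref < other:
            wins += 1
        elif p < alpha and ref > other:
            losses += 1
        else:
            ties += 1
    return wins, losses, ties
```
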

Table 4.

Results of the CCMGO and the other algorithms on CEC2017.

The results in Table 4 show that CCMGO achieves the best overall performance, attaining the lowest average rank (1.6207) of all algorithms on the CEC2017 benchmark suite. Compared with MGO, the second-best algorithm with an average rank of 2.6552, CCMGO performs better on 18 of the 29 functions. These results indicate that the proposed modifications improve the reliability and effectiveness of MGO on this suite. They also suggest that CCMGO may offer improvements in other problem domains.

Table 5 further supports these findings. The Wilcoxon signed-rank test results reveal statistically significant differences (p < 0.05) between CCMGO and the other algorithms on most of the benchmark functions. This indicates that CCMGO’s performance advantage over the compared algorithms is statistically significant on most of these functions, reinforcing its reliability and effectiveness for challenging optimization tasks.

Table 5.

The p-values of the CCMGO versus the other algorithms on CEC2017.

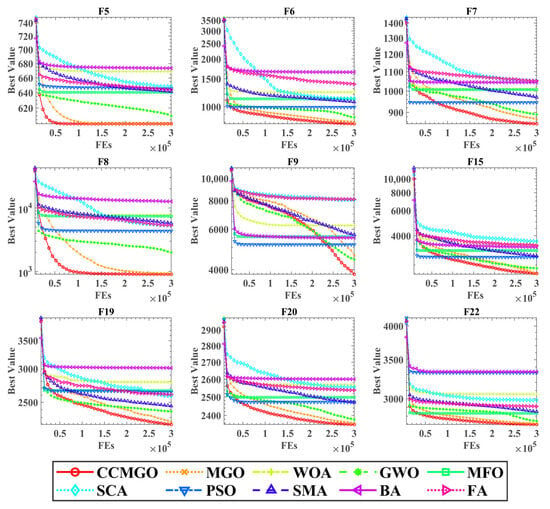

Figure 3 illustrates the convergence curves of CCMGO compared to the other nine algorithms on the benchmark functions. The horizontal axis represents the number of function evaluations, while the vertical axis represents the best fitness value achieved.

Figure 3.

Convergence curves of the CCMGO on benchmarks with other algorithms.

The convergence curves show that CCMGO generally reaches lower fitness values than the other algorithms across the benchmark functions. It also requires fewer function evaluations to reach comparable or better fitness levels. This behavior indicates effective exploration and exploitation of the search space and an ability to avoid premature convergence to local optima. In summary, incorporating the crisscross strategy and the dynamic division parameter substantially enhances the search performance of MGO, enabling it to outperform the competing algorithms on these benchmark functions.

5. Application to Production Optimization

The objective of reservoir production optimization is to determine the optimal control settings for each well to maximize NPV. However, the large number of wells and production cycles leads to a combinatorial explosion of possible solutions, resulting in a high-dimensional, NP-hard optimization problem. This complexity makes evolutionary algorithms well suited for tackling such challenges. In this study, we use the Eclipse reservoir simulator to apply CCMGO to a three-channel reservoir model and compare its performance against several widely used metaheuristic algorithms. For this study, nonlinear constraints associated with oilfield production are disregarded, and the NPV, as defined in Equation (16), serves as the objective function.

Here, the objective depends on the set of decision variables to be optimized and on the state parameters of the model, and cash flows are accumulated over the total simulation time. The oil production, water production, and water injection rates are weighted by the oil revenue, the water treatment cost, and the water injection cost, respectively. Each cash flow is discounted at the annual interest rate according to the number of years that have elapsed.

5.1. Three-Channel Model

The three-channel reservoir model is a typical heterogeneous two-dimensional reservoir with four injection wells and nine production wells arranged in a five-spot pattern. The model is discretized into 625 grid blocks, each 100 ft on a side and 20 ft thick, and all grid blocks have a porosity of 0.2, as shown in Table 6. These are standard values for this benchmark model. To keep our results comparable with other studies in the literature, we used the same values as in reference [24].

Table 6.

Properties of the three-channel model.

The optimization variables in this production optimization problem are the water injection rate of each injection well and the fluid production rate of each production well. The water injection rate ranges from 0 to 500 STB/day, and the fluid production rate of the production wells ranges from 0 to 200 STB/day. The production period is 1800 days with a decision time step of 360 days, giving 1800/360 = 5 control steps. As a result, the decision vector has (4 + 9) × 5 = 65 dimensions.
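The decision-vector layout can be made concrete with a short sketch. The ordering, control step by control step with injectors before producers, is a hypothetical choice. Only the bounds, the well counts, and the time steps come from the text.

```python
N_INJ, N_PROD = 4, 9                  # injection and production wells
HORIZON_DAYS, STEP_DAYS = 1800, 360
N_STEPS = HORIZON_DAYS // STEP_DAYS   # 5 control steps
PER_STEP = N_INJ + N_PROD             # 13 controls per step

def bounds():
    """Lower and upper bounds (STB/day) of the flattened decision vector.
    Hypothetical layout: step by step, the 4 injectors then the 9 producers."""
    lower, upper = [], []
    for _ in range(N_STEPS):
        lower += [0.0] * PER_STEP
        upper += [500.0] * N_INJ + [200.0] * N_PROD
    return lower, upper

def unpack(x):
    """Split a flat decision vector into (injection rates, production rates) per step."""
    return [
        (x[s * PER_STEP : s * PER_STEP + N_INJ], x[s * PER_STEP + N_INJ : (s + 1) * PER_STEP])
        for s in range(N_STEPS)
    ]
```
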

NPV is the fitness function for this optimization problem and is determined by various parameters, including the oil price (80.0 USD/STB), the water injection cost (5.0 USD/STB), and the water treatment cost (5.0 USD/STB). To simplify the model, it is assumed that the interest rate per annum is 0%.
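Given field-level rates from the simulator, the NPV can be evaluated as in the sketch below. The sketch assumes the standard discounted-cash-flow form used in production optimization. Each step's oil revenue, minus water treatment and injection costs, is multiplied by the step length and divided by (1 + interest rate) raised to the elapsed years. The exact form of Equation (16) may differ in detail. Running Eclipse to obtain the rates is outside the scope of the sketch.

```python
def npv(oil_rates, water_rates, inj_rates, dt_days,
        oil_price=80.0, water_cost=5.0, inj_cost=5.0, annual_rate=0.0):
    """Discounted NPV (USD) from per-step field rates in STB/day.
    Assumed standard form: sum over steps of
    (oil_price*q_o - water_cost*q_w - inj_cost*q_wi) * dt / (1 + annual_rate) ** years,
    where years is the elapsed time at the end of the step."""
    total, elapsed_days = 0.0, 0.0
    for q_o, q_w, q_wi, dt in zip(oil_rates, water_rates, inj_rates, dt_days):
        elapsed_days += dt
        cash_flow = (oil_price * q_o - water_cost * q_w - inj_cost * q_wi) * dt
        total += cash_flow / (1.0 + annual_rate) ** (elapsed_days / 365.0)
    return total
```

For example, consider a single 360-day step with 100 STB/day of oil, 50 STB/day of produced water, and 200 STB/day of injected water. At a 0% interest rate this gives (80 × 100 − 5 × 50 − 5 × 200) × 360 = 2,430,000 USD.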

5.2. Analysis and Discussion of the Experimental Results

This subsection analyzes the experimental results for optimizing the three-channel reservoir production model. The performance of CCMGO is compared with six other optimization algorithms: MGO, MFO, GWO, PSO, SMA, and WOA. Each algorithm was run five times independently, and the mean, standard deviation (Std), best, and worst values of the objective function are reported and discussed.

Table 7 summarizes the experimental results of each algorithm, including the mean, standard deviation, best, and worst values. CCMGO achieves the highest mean NPV (9.4969 × 10⁷ USD) among all algorithms, indicating that it consistently delivers high-quality solutions. Moreover, its standard deviation (1.7636 × 10⁶) is relatively low compared with those of MFO (3.2705 × 10⁶) and GWO (3.8653 × 10⁶), indicating greater stability across runs. These results demonstrate CCMGO’s effectiveness in exploring and exploiting the search space and its consistent performance over repeated runs.

Table 7.

The results of CCMGO and the other algorithms on the oil reservoir production optimization.

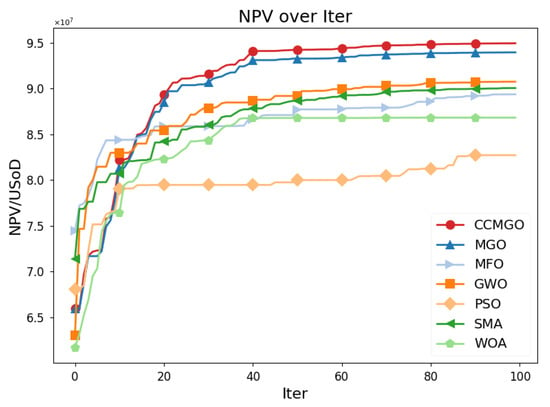

Figure 4 shows the NPV progression of CCMGO and six other algorithms over 100 iterations, with the horizontal axis representing the iteration count and the vertical axis showing the corresponding NPV values.

Figure 4.

Convergence of NPV values for the different algorithms over iterations.

CCMGO converges fastest and attains the highest NPV of all algorithms. It approaches its peak within the first 30 iterations and keeps a clear lead for the rest of the optimization process. MGO, in contrast, converges more slowly and ends with a slightly lower NPV than CCMGO. The remaining algorithms (MFO, GWO, SMA, PSO, and WOA) perform less well to varying degrees. MFO, GWO, and SMA achieve moderate results, whereas PSO and WOA converge markedly more slowly and reach lower final NPV values.
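Curves such as those in Figure 4 are commonly built from the best NPV found so far at each iteration. A statement such as "approaches its peak within the first 30 iterations" can be made precise by finding the first iteration at which that curve reaches a chosen fraction of its final value. The sketch below assumes a per-iteration record of the population's best NPV. The function names and the 95% default threshold are illustrative choices, not values taken from the study.

```python
from itertools import accumulate


def best_so_far(npv_per_iteration):
    """Running maximum of per-iteration best NPV (maximization)."""
    return list(accumulate(npv_per_iteration, max))


def iterations_to_fraction(curve, fraction=0.95):
    """First 1-based iteration at which a non-negative best-so-far curve
    reaches `fraction` of its final value; None if the curve is empty."""
    if not curve:
        return None
    target = fraction * curve[-1]
    for i, value in enumerate(curve, start=1):
        if value >= target:
            return i
    return None
```

Averaging `best_so_far` curves over the five runs, iteration by iteration, gives a mean convergence curve. The threshold metric can be applied to each run separately or to that mean curve.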

In summary, the results demonstrate the effectiveness of CCMGO in optimizing the oil reservoir production model: it attains the highest NPV and converges faster than the other algorithms.

6. Conclusions

This study introduced CCMGO, an enhanced MGO algorithm that incorporates a CC strategy and a dynamic grouping parameter. The CC strategy promotes information exchange among individuals in the population, increasing offspring diversity and accelerating convergence toward optimal solutions. The dynamic grouping parameter balances exploration and exploitation, making the search process more efficient.
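The CC strategy originates from the crisscross optimization algorithm of Meng et al. That algorithm alternates two operators, each followed by greedy selection:

- a horizontal crossover between randomly paired individuals;
- a vertical crossover between two dimensions of the same individual.

The sketch below follows that general form for a maximization objective such as NPV. It is not the CCMGO implementation: it does not reproduce how the operators are scheduled inside MGO, their probabilities, or the dynamic grouping parameter. The vertical-crossover probability `pv=0.5` and all function names are illustrative assumptions.

```python
import random


def _clip(x, lo, hi):
    """Clamp each coordinate of x into [lo[d], hi[d]]."""
    return [min(max(v, low), high) for v, low, high in zip(x, lo, hi)]


def horizontal_crossover(pop, fit, objective, lo, hi, rng):
    """Crisscross-style horizontal crossover between random pairs.

    For a pair (a, b), each dimension d of a child is
        r * a[d] + (1 - r) * b[d] + c * (a[d] - b[d])
    with r ~ U(0, 1) and c ~ U(-1, 1); the second child swaps a and b.
    Both children are built before any replacement, and a child replaces
    its parent only if its objective value is higher (greedy selection).
    """
    order = list(range(len(pop)))
    rng.shuffle(order)
    for k in range(0, len(order) - 1, 2):
        i, j = order[k], order[k + 1]
        children = []
        for a, b in ((pop[i], pop[j]), (pop[j], pop[i])):
            child = []
            for ad, bd in zip(a, b):
                r, c = rng.random(), rng.uniform(-1.0, 1.0)
                child.append(r * ad + (1 - r) * bd + c * (ad - bd))
            children.append(_clip(child, lo, hi))
        for idx, child in zip((i, j), children):
            f = objective(child)
            if f > fit[idx]:
                pop[idx], fit[idx] = child, f


def vertical_crossover(pop, fit, objective, lo, hi, rng, pv=0.5):
    """Crisscross-style vertical crossover between two dimensions.

    Dimensions are normalized to [0, 1] using the bounds, so variables on
    different scales (e.g. 0-500 vs 0-200 STB/day) can be blended.
    Only dimension d1 changes; greedy selection keeps the better vector.
    """
    dim = len(lo)
    if dim < 2:
        return
    for i in range(len(pop)):
        if rng.random() >= pv:
            continue
        d1, d2 = rng.sample(range(dim), 2)
        n1 = (pop[i][d1] - lo[d1]) / (hi[d1] - lo[d1])
        n2 = (pop[i][d2] - lo[d2]) / (hi[d2] - lo[d2])
        r = rng.random()
        child = list(pop[i])
        child[d1] = lo[d1] + (r * n1 + (1 - r) * n2) * (hi[d1] - lo[d1])
        child = _clip(child, lo, hi)  # guard against floating-point overshoot
        f = objective(child)
        if f > fit[i]:
            pop[i], fit[i] = child, f
```

For a minimization benchmark such as CEC2017, the same operators can be used by passing the negated objective. Greedy selection means no individual's fitness ever decreases. The crossover steps supply the diversity, and selection supplies the convergence pressure.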

The performance of CCMGO was evaluated on the CEC2017 benchmark suite and compared against nine prominent metaheuristic optimization algorithms. CCMGO consistently outperformed the other algorithms across a variety of benchmark functions, achieving an average rank of 1.6207 compared with 2.6552 for MGO, and it outperformed MGO on 18 of the 29 functions. When applied to a real-world reservoir production optimization problem, CCMGO achieved the highest mean NPV of all compared algorithms (9.4969 × 10⁷) while maintaining a relatively low standard deviation (1.7636 × 10⁶), demonstrating superior performance and stability. This practical application illustrates CCMGO's potential for solving complex, real-world optimization challenges.
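Average ranks of this kind are typically obtained by ranking all algorithms on each function and averaging those ranks over the functions. With 29 functions, the reported values are consistent with rank sums of 47 (1.6207 × 29 ≈ 47) and 77 (2.6552 × 29 ≈ 77). The sketch below makes three assumptions:

- there is one summary score per algorithm and function (for example, mean error, where lower is better);
- tied algorithms share their average rank;
- the 18/29 comparison is a plain pairwise win count.

This section does not state the paper's tie handling or the statistical test behind the 18/29 figure. All names are hypothetical.

```python
def average_ranks(scores, lower_is_better=True):
    """Mean rank of each algorithm over benchmark functions.

    scores[f][a] is the summary result of algorithm a on function f.
    Rank 1 is best; tied algorithms share the average of their ranks.
    """
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        key = row if lower_is_better else [-v for v in row]
        order = sorted(range(n_alg), key=lambda a: key[a])
        pos = 0
        while pos < n_alg:
            end = pos
            while end + 1 < n_alg and key[order[end + 1]] == key[order[pos]]:
                end += 1
            shared = (pos + end) / 2 + 1  # average of ranks pos+1 .. end+1
            for k in range(pos, end + 1):
                totals[order[k]] += shared
            pos = end + 1
    return [t / len(scores) for t in totals]


def wins(scores, a, b, lower_is_better=True):
    """Number of functions on which algorithm a strictly beats algorithm b."""
    return sum((r[a] < r[b]) if lower_is_better else (r[a] > r[b]) for r in scores)
```

A plain win count ignores run-to-run variance. Studies usually back it with a per-function statistical test, such as the Wilcoxon signed-rank test, before calling a difference significant.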

Future research will focus on enhancing the scalability of CCMGO for high-dimensional problems and extending its capabilities to address multi-objective optimization tasks. We also plan to explore the application of CCMGO in a wider range of real-world optimization problems to further demonstrate its adaptability and effectiveness.

Author Contributions

T.Y.: Conceptualization, Software, Data Curation, Investigation, Writing – Original Draft, Project Administration; T.L.: Methodology, Writing – Original Draft, Writing – Review and Editing, Validation, Formal Analysis, Supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Gao, K.; Cao, Z.; Zhang, L.; Chen, Z.; Han, Y.; Pan, Q. A Review on Swarm Intelligence and Evolutionary Algorithms for Solving Flexible Job Shop Scheduling Problems. IEEE/CAA J. Autom. Sin. 2019, 6, 904–916.

2. Shan, W.; Hu, H.; Cai, Z.; Chen, H.; Liu, H.; Wang, M.; Teng, Y. Multi-Strategies Boosted Mutative Crow Search Algorithm for Global Tasks: Cases of Continuous and Discrete Optimization. J. Bionic Eng. 2022, 19, 1830–1849.

3. Huang, X.; Hu, H.; Wang, J.; Yuan, B.; Dai, C.; Ablameyko, S.V. Dynamic Strongly Convex Sparse Operator with Learning Mechanism for Sparse Large-Scale Multi-Objective Optimization. In Proceedings of the 2024 6th International Conference on Data-driven Optimization of Complex Systems (DOCS), Hangzhou, China, 16–18 August 2024; pp. 121–127.

4. Nelder, J.A.; Mead, R. A Simplex Method for Function Minimization. Comput. J. 1965, 7, 308–313.

5. Bellman, R. Dynamic Programming. Science 1966, 153, 34–37.

6. Meza, J.C. Steepest Descent. WIREs Comput. Stat. 2010, 2, 719–722.

7. Polyak, B.T. The Conjugate Gradient Method in Extremal Problems. USSR Comput. Math. Math. Phys. 1969, 9, 94–112.

8. Bottou, L.; Curtis, F.E.; Nocedal, J. Optimization Methods for Large-Scale Machine Learning. SIAM Rev. 2018, 60, 223–311.

9. Garg, T.; Kaur, G.; Rana, P.S.; Cheng, X. Enhancing Road Traffic Prediction Using Data Preprocessing Optimization. J. Circuits Syst. Comput. 2024, 2550045.

10. Hu, H.; Shan, W.; Tang, Y.; Heidari, A.A.; Chen, H.; Liu, H.; Wang, M.; Escorcia-Gutierrez, J.; Mansour, R.F.; Chen, J. Horizontal and Vertical Crossover of Sine Cosine Algorithm with Quick Moves for Optimization and Feature Selection. J. Comput. Des. Eng. 2022, 9, 2524–2555.

11. Dréo, J.; Pétrowski, A.; Siarry, P.; Taillard, E. Metaheuristics for Hard Optimization: Methods and Case Studies; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006; ISBN 978-3-540-30966-6.

12. Janga Reddy, M.; Nagesh Kumar, D. Evolutionary Algorithms, Swarm Intelligence Methods, and Their Applications in Water Resources Engineering: A State-of-the-Art Review. H2Open J. 2021, 3, 135–188.

13. Hu, H.; Shan, W.; Chen, J.; Xing, L.; Heidari, A.A.; Chen, H.; He, X.; Wang, M. Dynamic Individual Selection and Crossover Boosted Forensic-Based Investigation Algorithm for Global Optimization and Feature Selection. J. Bionic Eng. 2023, 20, 2416–2442.

14. Holland, J.H. Genetic Algorithms. Sci. Am. 1992, 267, 66–73.

15. Price, K.; Storn, R.M.; Lampinen, J.A. Differential Evolution: A Practical Approach to Global Optimization; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006.

16. Tang, D. Spherical Evolution for Solving Continuous Optimization Problems. Appl. Soft Comput. 2019, 81, 105499.

17. Poli, R.; Kennedy, J.; Blackwell, T. Particle Swarm Optimization. Swarm Intell. 2007, 1, 33–57.

18. Dorigo, M.; Birattari, M.; Stützle, T. Ant Colony Optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

19. Akay, B.; Karaboga, D. Artificial Bee Colony Algorithm for Large-Scale Problems and Engineering Design Optimization. J. Intell. Manuf. 2012, 23, 1001–1014.

20. Wolpert, D.H.; Macready, W.G. No Free Lunch Theorems for Optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

21. Hu, H.; Wang, J.; Huang, X.; Ablameyko, S.V. An Integrated Online-Offline Hybrid Particle Swarm Optimization Framework for Medium Scale Expensive Problems. In Proceedings of the 2024 6th International Conference on Data-driven Optimization of Complex Systems (DOCS), Hangzhou, China, 16–18 August 2024; pp. 25–32.

22. Foroud, T.; Baradaran, A.; Seifi, A. A Comparative Evaluation of Global Search Algorithms in Black Box Optimization of Oil Production: A Case Study on Brugge Field. J. Pet. Sci. Eng. 2018, 167, 131–151.

23. Yu, J.; Jafarpour, B. Active Learning for Well Control Optimization with Surrogate Models. SPE J. 2022, 27, 2668–2688.

24. Chen, G.; Zhang, K.; Xue, X.; Zhang, L.; Yao, J.; Sun, H.; Fan, L.; Yang, Y. Surrogate-Assisted Evolutionary Algorithm with Dimensionality Reduction Method for Water Flooding Production Optimization. J. Pet. Sci. Eng. 2020, 185, 106633.

25. Chen, G.; Zhang, K.; Zhang, L.; Xue, X.; Ji, D.; Yao, C.; Yao, J.; Yang, Y. Global and Local Surrogate-Model-Assisted Differential Evolution for Waterflooding Production Optimization. SPE J. 2020, 25, 105–118.

26. Cuadros Bohorquez, J.F.; Tovar, L.P.; Wolf Maciel, M.R.; Melo, D.C.; Maciel Filho, R. Surrogate-Model-Based, Particle Swarm Optimization, and Genetic Algorithm Techniques Applied to the Multiobjective Operational Problem of the Fluid Catalytic Cracking Process. Chem. Eng. Commun. 2020, 207, 612–631.

27. de Oliveira, L.C.; Afonso, S.M.B.; Horowitz, B. Global/Local Optimization Strategies Combined for Waterflooding Problems. J. Braz. Soc. Mech. Sci. Eng. 2016, 38, 2051–2062.

28. Zhang, K.; Zhao, X.; Chen, G.; Zhao, M.; Wang, J.; Yao, C.; Sun, H.; Yao, J.; Wang, W.; Zhang, G. A Double-Model Differential Evolution for Constrained Waterflooding Production Optimization. J. Pet. Sci. Eng. 2021, 207, 109059.

29. Zhao, Z.; Luo, S. A Crisscross-Strategy-Boosted Water Flow Optimizer for Global Optimization and Oil Reservoir Production. Biomimetics 2024, 9, 20.

30. Zheng, B.; Chen, Y.; Wang, C.; Heidari, A.A.; Liu, L.; Chen, H. The Moss Growth Optimization (MGO): Concepts and Performance. J. Comput. Des. Eng. 2024, 11, 184–221.

31. Wu, G.; Mallipeddi, R.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2017 Competition on Constrained Real-Parameter Optimization; Technical Report; National University of Defense Technology: Changsha, China; Kyungpook National University: Daegu, Republic of Korea; Nanyang Technological University: Singapore, 2017.

32. Meng, A.; Chen, Y.; Yin, H.; Chen, S. Crisscross Optimization Algorithm and Its Application. Knowl.-Based Syst. 2014, 67, 218–229.

33. Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

34. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

35. Mirjalili, S. Moth-Flame Optimization Algorithm: A Novel Nature-Inspired Heuristic Paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

36. Mirjalili, S. SCA: A Sine Cosine Algorithm for Solving Optimization Problems. Knowl.-Based Syst. 2016, 96, 120–133.

37. Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

38. Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime Mould Algorithm: A New Method for Stochastic Optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

39. Yang, X.-S.; He, X. Bat Algorithm: Literature Review and Applications. Int. J. Bio-Inspired Comput. 2013, 5, 141–149.

40. Yang, X.-S.; He, X. Firefly Algorithm: Recent Advances and Applications. Int. J. Swarm Intell. 2013, 1, 36–50.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).