Abstract

Despite numerous advances in classroom and in-vehicle driver training and education over the last quarter-century (including Graduated Driver Licensing programs), traffic accidents remain a leading cause of mortality for young adults, particularly those between the ages of 16 and 19, and therefore a critical public safety and public health concern. As advanced vehicle technologies continue to evolve, so too does the unintended potential for mechanical, visual, and/or cognitive driver distraction and adverse safety events on national highways. For these reasons, physics-based modeling and high-fidelity simulation have great potential to serve as a critical supplementary component of a near-future teen-driver training framework. Here, a case study is presented that examines the specification, development, and deployment of a “blueprint” for a simulation framework intended to increase driver training safety in North America. A multi-measure assessment of simulated driver performance was developed and instituted, including quantitative (e.g., simulator-measured), qualitative (e.g., evaluator-observed), and self-report metrics. Preliminary findings are presented, along with a summary of novel contributions through the deployment of the training framework, as well as planned improvements and suggestions for future directions.

1. Introduction

Traffic accidents continue to be a primary safety and public health concern and are a leading cause of death for young, inexperienced, and newly licensed drivers (aged 16–19) [1,2]. Per mile driven, young drivers are almost 300% more likely than drivers in older age groups to be involved in a fatal driving accident [2]. Statistics from the same source indicate a gender effect: the crash fatality rate for young males is more than 200% higher than that for young females. Furthermore, crash risk has long been measured to be particularly high just after licensure [3,4]; per mile driven, crash rates are 150% higher for just-licensed 16-year-olds than for slightly older drivers who have a mere 2–3 years of experience.

Recent scientific evaluations [5] have demonstrated that current driver education programs (e.g., classroom, in-vehicle, Graduated Driver Licensing programs [6]) do not necessarily produce safer drivers. While some training programs have been found to be effective for procedural skill acquisition and others improve driver perception of dangers and hazards, conventional driver training does not provide teenagers with sufficient hands-on, seat-time exposure to the varied challenges of driving, which can be visual, manual, and cognitive [7] in nature. Novice drivers in training need to safely learn essential skills (e.g., basic vehicle mechanical operation, steering, turning, and traffic management) without the complexity of the normal driving environment. Historically, driver licensing in the United States has served mostly as a formality: if students do not flagrantly violate the rules of the road during their brief on-road evaluation, they pass the driving exam [8]. In various countries in Europe (e.g., the Czech Republic, Finland, France, the Netherlands, and Slovakia), driver training requirements are much more rigorous and often include, in addition to classroom and in-vehicle training assessments, a significant simulator-based training (SBT) component [9]. A relevant example from the literature is the TRAINER project [10], which proposes a framework for SBT (in Europe, including implementations based in Spain, Belgium, Sweden, and Greece) and compares training results across various classes of simulators and simulator fidelities.

In recent times, SBT has been implemented for supplementary teen driver education and performance assessment [11,12,13,14,15] with numerous key advantages. For example, training simulators have been implemented to improve driver understanding and detection of hazards and are useful for enhancing the situational awareness of novice drivers [16,17]. Furthermore, training in the controlled environment of a simulator provides critical exposure to many traffic situations (and some that are rarely encountered while driving a physical vehicle), trial-and-error (i.e., freedom-to-fail), safety/repeatability, and digital, real-time metrics of driving performance [18]. Training in a simulator can be extremely engaging—especially so for young trainees—and provides young learners with critical additional seat-time to common driving situations in an educational setting that is active and experiential [19,20]. Likewise, other recent studies [21] have investigated correlations between simulation performance (e.g., violations, lapses, errors, driving confidence) and natural tendencies observed when driving a physical vehicle (on-road).

Despite these advantages and advances, SBT also has some noteworthy drawbacks. A simulator, by definition, is an imitation of reality, and for some trainees, it is difficult to overcome this perception to provide training that is meaningful. This inherent absence of both “presence” (i.e., a sense of “being there”, that is natural, immediate, and direct) [22] and “immersion” (i.e., the objective sensory notion of being in a real-world situation or setting) [23] in the simulator can result in negative training [24] and acquired skills might not be appropriately applied when transferred to the “real world”. Accordingly, prior to implementation, a simulator must be properly verified and validated (i.e., designed and implemented such that the simulator model serves as an accurate representation of the “real world”) both (a) to promote positive training for participants and (b) to ensure that the human behavior data collected are accurate and realistic [25,26,27].

Another primary concern with many simulator implementations is environment maladaptation, more commonly referred to as “simulator sickness” [28]. This side effect is often caused by “postural instability” [29]—a sensory conflict between what is observed and what is felt when interacting within the artificial environment that commonly manifests itself through a number of symptoms, including sweating, dizziness, headaches, and nausea. Fortunately, past studies have demonstrated that simulator sickness is less frequent and less severe when experimental duration is modulated, especially so among young individuals [30]. Finally, simulator environment affordability is a major consideration. The fidelity of the simulator (e.g., field-of-view, user controls, cabin, motion capabilities, and various others) should be sufficient to promote positive training while remaining within a price domain (i.e., acquisition, maintenance, operation, upgrades) that is achievable for a typical training classroom.

Ultimately, researchers are still trying to determine (and objectively measure) how simulator exposure compares to actual, real-world driving exposure. Establishing the efficacy of supplementary simulator exposure in young driver training requires evidence-based empirical studies; this very notion stands as the primary emphasis of this paper. Implementing a targeted sequence of SBT exercises and employing a companion holistic multi-measure assessment, the impact of the training framework was investigated on various aspects of observed young driver performance. Metrics included: (a) quantitative measures (e.g., speed and compliance behavior at intersections), as monitored and calculated by the physics-based vehicle dynamics mathematical model that underlies the driving simulator engine; (b) evaluator qualitative measures, as a critical “human-in-the-loop” component to augment numerical assessment; (c) module questionnaires and self-report, as a means to monitor transfer knowledge (e.g., pre- and post-module and pre- and post- program); and (d) exit surveys/anecdotal evidence, as a means for teen trainees (and their parents) to comment on the advantages and disadvantages of the SBT framework.

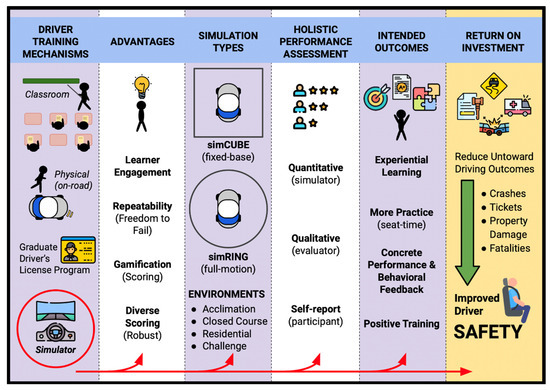

In Figure 1, a “theory of change” (i.e., a logic model) illustrates both the overall workflow and the primary novelties of the SBT mechanisms that have been developed and deployed for this work. In Research Methodology, the design and development of the training environment (including two implemented simulator types) are detailed, the suite of SBT modules (environments) is described and justified, and the experimental cohort of teenagers, recruited over a 2+ year timeframe, is described. In Results and Discussion, preliminary findings from the holistic performance assessment are presented, the intended outcomes of which could have profound training benefits. In Broader Impacts of our Methodology and Implementation, the numerous advantages of using games and modeling and simulation (M&S) in training are discussed. The paper culminates with Conclusions and Future Work. The primary goal is that the overarching return on investment for this work will be a template for reducing untoward driving outcomes, which could lead to improved national driver safety in the long term.

Figure 1. Teen driving framework: a blueprint theory of change (with simulation).

2. Research Methodology

As a primary component of the training framework, a simulator training environment was specified and composed that would allow for presentation of training content at suitable fidelity (i.e., both physical and psychological) while simultaneously enabling measurement and observation of human performance alongside a holistic assessment of participant outcomes. The training environment was designed to allow for much-needed training “seat time” while enabling experiential reinforcement of basic driving skills and also enabling a trainee’s freedom to fail. In other words, the environment promoted repetition and was such that the learner could take risks (i.e., where real-world consequences were greatly reduced). This allowed failure to be reframed as a necessary component of learning and made students more resilient in the face of obstacles and challenges (e.g., [31,32]). This study was approved on 21 October 2015 by the University at Buffalo Institutional Review Board (code 030-431144).

2.1. Training Environment Design

The novel implementation offered two simulator types of varying features and physical fidelity. The fixed-base simulator comprises a racing frame/chair, a high-fidelity spring-resisted steering wheel (with a 240° range of motion), game-grade foot pedals (gas, brake, and clutch), and a PC-grade sound system. Its visual system consists of a four-wall arrangement (each wall 6′ high × 8′ wide) that provides drivers with forward, left, right, and rearward views at Wide Super eXtended Graphics Array Plus (WSXGA+) resolution (1680 × 1050), arranged at 90° to one another (see Figure 2). The motion-based simulator comprises a 6-Degrees of Freedom (DOF) full-motion electric hexapod platform, a two-seat sedan passenger cabin, a moderate-fidelity steering wheel (with force-feedback capability and a 900° range of motion), high-fidelity foot control pedals (with spring-resisted gas and clutch pedals and pressure modulation on the brake pedal), and a 2.1 sound system. Its visual system consists of a 16′ diameter theater arrangement with a 6′ vertical viewing capability, which uses six Full High Definition (FHD) resolution (1920 × 1080) projectors to fully envelop the simulator participant (see Figure 3).

Figure 2. Fixed-base simulator.

Figure 3. Motion-based simulator.

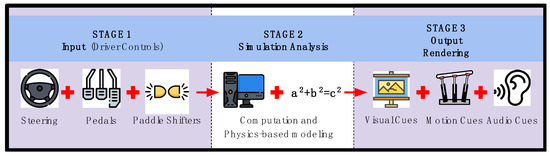

The accompanying simulation software environment was developed in-house (using C++) so that its design could be tailored to exact training needs. While driving the simulator, the participant operates the on-board Human Interface Device (HID) controls (Stage 1), and these inputs are delivered to a Windows-based workstation where a simulation analysis is performed (Stage 2). Changes in the physics-based model state then drive updates to three forms of output rendering: (i) the scene graphics state (Stage 3a), (ii) the motion platform state (Stage 3b), and (iii) the audio state (Stage 3c) of the simulation. This signal path is illustrated notionally in Figure 4; its design and implementation have been described elsewhere [33]. Each of these primary I/O stages is briefly described below.

Figure 4. I/O signal pathway for training framework.

2.1.1. Stage 1: Input—Driver Controls

User input commands are obtained with DirectInput (Microsoft), a subcomponent of DirectX, which allows the programmer to specify a human-interface device (e.g., steering wheel, gas pedal, brake pedal). The state of the input device (e.g., rotational position of the steering wheel, translational position of the pedals) can be polled with minimal latency in near real-time.

2.1.2. Stage 2: Simulation Analysis

The simulation computer uses the control inputs to perform a numerical analysis whose outputs are used to render the primary output components of the simulation (i.e., visual, haptic, aural). Vehicle dynamics are calculated using the classical Bicycle Model of the automobile [34,35]. This simplified physics-based model has two primary degrees of freedom (DOF): yaw rate (i.e., rotational velocity about the vertical axis) and vehicle sideslip angle (i.e., the angle between the direction in which the vehicle is pointing and the direction in which it is actually traveling). Forward velocity is added to the baseline model as a third primary DOF for the present implementation. Primary model inputs are the steering wheel (rotation) angle and the longitudinal forces of the tires, and primary model outputs include vehicle velocities, accelerations, and tire forces. We then employ basic numerical integration (i.e., Euler’s method) to advance the equations of motion and obtain the current state of the vehicle (e.g., longitudinal/lateral velocity, yaw angle and yaw rate, and longitudinal/lateral position). Updates to the state equations have to occur rapidly, so the state is updated at a rate of 60 Hz (i.e., with a step size of 1/60 s between the previous and updated state). The artificial vehicles that populate the virtual driving environment alongside the human-driven vehicle are independently represented by a physics-based traffic model [36] that attempts to emulate actual human behavior (i.e., including driving errors and lapses).
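To make the integration scheme concrete, the following is a minimal sketch of a linear bicycle model advanced with Euler's method at 60 Hz. It is not the authors' C++ implementation: the vehicle parameters, the linear tire model, the `VehicleState` container, and the function names are all assumptions chosen for illustration. Lateral velocity is used as the state in place of sideslip angle (for small angles, sideslip is approximately lateral velocity divided by forward velocity).

```python
import math
from dataclasses import dataclass


@dataclass
class VehicleState:
    x: float = 0.0     # global longitudinal position [m]
    y: float = 0.0     # global lateral position [m]
    yaw: float = 0.0   # heading angle [rad]
    vx: float = 20.0   # forward velocity in the body frame [m/s]
    vy: float = 0.0    # lateral velocity in the body frame [m/s]
    r: float = 0.0     # yaw rate [rad/s]


# Hypothetical mid-size sedan parameters (assumed, not taken from the paper).
MASS = 1500.0      # vehicle mass [kg]
IZ = 2500.0        # yaw moment of inertia [kg m^2]
A = 1.2            # center of gravity to front axle [m]
B = 1.4            # center of gravity to rear axle [m]
CF = 80000.0       # front cornering stiffness [N/rad]
CR = 80000.0       # rear cornering stiffness [N/rad]
DT = 1.0 / 60.0    # step size for a 60 Hz update rate [s]


def euler_step(s, steer, fx, dt=DT):
    """Advance the state by one explicit Euler step.

    steer: road-wheel steering angle [rad]; fx: total longitudinal tire force [N].
    """
    vx = max(s.vx, 0.5)  # guard against division by zero near standstill
    # Linear tire model: lateral force proportional to tire slip angle.
    alpha_f = steer - (s.vy + A * s.r) / vx
    alpha_r = -(s.vy - B * s.r) / vx
    fyf = CF * alpha_f
    fyr = CR * alpha_r
    # Body-frame equations of motion (small steering angle assumed).
    vx_dot = fx / MASS + s.vy * s.r
    vy_dot = (fyf + fyr) / MASS - s.vx * s.r
    r_dot = (A * fyf - B * fyr) / IZ
    # Kinematics: rotate body-frame velocities into the global frame.
    x_dot = s.vx * math.cos(s.yaw) - s.vy * math.sin(s.yaw)
    y_dot = s.vx * math.sin(s.yaw) + s.vy * math.cos(s.yaw)
    # Euler update: every derivative is evaluated at the previous state.
    return VehicleState(
        x=s.x + dt * x_dot,
        y=s.y + dt * y_dot,
        yaw=s.yaw + dt * s.r,
        vx=s.vx + dt * vx_dot,
        vy=s.vy + dt * vy_dot,
        r=s.r + dt * r_dot,
    )


def simulate(state, steer, fx, seconds, dt=DT):
    """Hold the inputs constant and integrate for the given duration."""
    for _ in range(round(seconds / dt)):
        state = euler_step(state, steer, fx, dt)
    return state
```

For example, `simulate(VehicleState(), steer=0.02, fx=0.0, seconds=5.0)` holds a small constant steering angle and returns a state with a positive yaw rate and a leftward-curving path. Explicit Euler is the simplest choice and is adequate here because the step size is small relative to the time constants of the assumed vehicle; a stiffer model or a lower update rate would call for a more robust integrator.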

2.1.3. Stage 3: Outputs—Visual, Haptic and Audio

Once calculated, vehicle output states are converted into the six motion DOFs for haptic cueing: heave, surge, sway, roll, pitch, and yaw. Due to the finite stroke length of the electrical actuators on the motion platform, this conversion requires limiting, scaling, and tilt coordination (i.e., a commonly employed procedure that helps to sustain longitudinal and lateral acceleration cues by using the gravity vector through pitch and roll, respectively [37]) by way of the washout filtering technique [38,39]. Throughout the simulation, the updated DOFs are sent to the motion platform computer via User Datagram Protocol (UDP) packets. Packet delivery is accomplished using Win64 POSIX multithreads; in this manner, the update rate of the motion process is independent of that of the graphics process, which avoids Central Processing Unit (CPU) / Graphics Processing Unit (GPU) bottlenecks. Simulation graphics have been developed using OpenGL, an industry-standard 3D graphics Application Programming Interface (API). The simulation framework employs OpenAL for sound events that are commonplace in a ground vehicle scenario: ignition/shutdown, engine tone, and cues for tire-surface behaviors and hazards.
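The scaling, limiting, and washout steps can be illustrated for a single translational channel. The sketch below is a simplified assumption, not the filter used in the cited work: it applies a gain and a symmetric limit to the commanded acceleration and then passes it through a first-order discrete high-pass filter, so that a sustained input fades out and the platform can drift back toward neutral. Tilt coordination is omitted, and the class name, gain, limit, and time constant are hypothetical.

```python
def clamp(value, limit):
    """Restrict value to the symmetric range [-limit, +limit]."""
    return max(-limit, min(limit, value))


class HighPassWashout:
    """First-order high-pass washout for one motion channel (illustrative)."""

    def __init__(self, time_constant, dt, gain=1.0, limit=1.0):
        # Discrete high-pass coefficient for the given time constant and step.
        self.alpha = time_constant / (time_constant + dt)
        self.gain = gain      # scaling applied to the vehicle acceleration
        self.limit = limit    # cap that respects the finite actuator stroke
        self.prev_in = 0.0
        self.prev_out = 0.0

    def update(self, accel):
        """Return the washed-out acceleration cue for the current frame."""
        scaled = clamp(self.gain * accel, self.limit)
        out = self.alpha * (self.prev_out + scaled - self.prev_in)
        self.prev_in = scaled
        self.prev_out = out
        return out
```

Feeding a constant acceleration into `update` at 60 Hz produces an initial onset cue that decays geometrically by a factor of `alpha` per frame; in a full motion-cueing algorithm, the sustained part of the cue would typically be handed to tilt coordination instead.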

2.2. Training Modules

The training modules that were designed for this framework allow driving participants to engage with training content in a progressive manner. In this way, training exposure begins simple and gradually increases in complexity while additional tasks and driving challenges are introduced. These simulation-based driving modules were specified and designed to complement existing driver training methodologies (e.g., in-class and in-vehicle components, primarily) while each targets a specific skillset (i.e., mechanical, visual, cognitive, or multiple) widely acknowledged for safe driving. The training modules can be decomposed into three primary classifications: (a) closed course (CC) “proving ground” tracks (without traffic); (b) standard residential driving (both with/without accompanying traffic); and (c) challenge scenarios (always with traffic). These classifications are described and illustrated next.

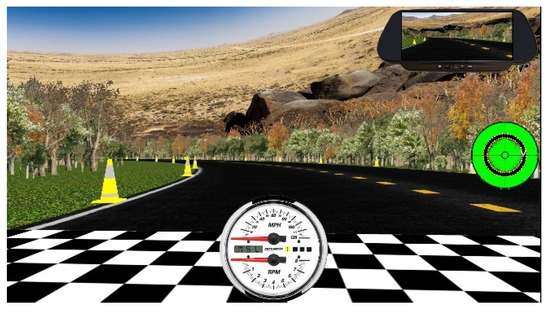

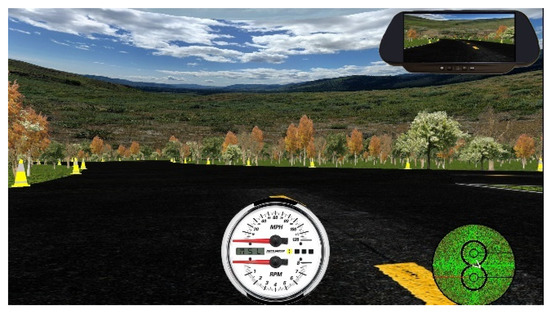

2.2.1. Closed Course Driving

The closed-course training environments allow us to evaluate human performance during specialized maneuvers without traffic. The skid pad [40] training course (Figure 5) is a conventional automotive proving ground environment that is invaluable to help students better understand the complex relationship between speed and heading (i.e., steering angle). The tri-radial speedway (Figure 6) integrates straightaways with curves and necessitates speed adjustments at the segment transitions (i.e., careful interplay between acceleration, braking, and turning). The figure-8 test track (Figure 7) allows learners to experience both clockwise and counterclockwise turns in immediate succession. Note that performance data were not collected during these closed course scenarios. Regardless, they served as an invaluable mechanism for providing novice drivers with additional and repeatable hands-on practice within the simulator among varied geometric conditions. This critical experiential exposure and “seat time” served as an advantageous supplement to the drives where driver performance was quantified.

Figure 5. The skid pad scenario (CC).

Figure 6. The tri-radial speedway scenario (CC).

Figure 7. The figure-8 scenario (CC).

2.2.2. Residential Driving

The primary SBT environment is based upon a five-square-mile training area inspired by an actual residential region located in suburban Western New York State (i.e., the Buffalo-Niagara region) and adjacent to the North campus of the University at Buffalo (where this research was performed). The residential environment includes actual suburban streets, including 2- and 4-lane roads and a 1-mile linear segment of the New York State (290) Thruway. The residential simulation construct also includes road signs, lane markings, and traffic control devices and allows the trainee to practice basic safe driving (e.g., speed maintenance, traffic sign and signal management) within a controlled, measurable, and familiar environment inspired by “real world” locations. Figure 8 presents a notional depiction of the residential training environment, which can be driven either with accompanying traffic (as shown) or without it.

Figure 8. Residential driving scenario.

2.2.3. Challenging Scenarios

Finally, within the residential driving environment, driving challenges were presented that entailed additional vigilance and attentiveness on the part of the trainee through multitasking. To practice these skillsets, which are less common on the roadway (and therefore, less frequently encountered during in-vehicle training), a series of special hazard (challenge) scenarios were devised, during which to observe and measure trainee response and driving performance. These challenge scenarios included the following:

- A construction zone with roadway cones, narrow lanes, and large vehicles

- An aggressive driver (tailgater) segment in a narrowed section of roadway

- Speed modulation segments, including a section with speed bumps (see Figure 9)

Figure 9. Speed modulation (challenge).

- A complex highway merge with large vehicles and inclement weather

- An animal crossing within a quiet cul-de-sac (see Figure 10)

Figure 10. Animal crossing (challenge).


According to previously published statistics [41], young and inexperienced adult drivers are at elevated risk of being involved in a vehicle accident, tend to underestimate hazardous situations, and drive more frequently in risky situations than more experienced drivers. Directly related to these driving deficiencies, the leading causes of fatal crashes among teens include: (i) speeding, (ii) driving too fast for road conditions, (iii) inexperience, (iv) distractions, and (v) driving while drowsy or impaired. In the specification and design of the training modules (and accompanying evaluation protocols), it was critical to explicitly address many of these prevailing weaknesses within the supplemental training framework. Accordingly, thoughtful consideration was given to the layout of each of the 120-min instructional sessions that make up the 10-h simulation course. Of the five documented leading causes of fatal crashes, the training modules explicitly address the first four by: (i) influencing the driver to be mindful of posted speed limits, (ii) making sure the trainee monitors speed in varied driving conditions, (iii) exposing new drivers to driving situations they have never seen before and may only rarely encounter, and (iv) presenting challenge scenarios that cause external distraction and demand multitasking through cognitive/mechanical/visual workload. A specific driving module to address the fifth (i.e., impairment) has been designed and incorporated for another research project [42,43] but was not explicitly included within the current work scope. This training prospect is discussed further in Future Work.

2.3. Instructional Sessions and Performance Measures

In advance of each instructional session, a five-minute acclimation drive is offered, primarily as a warm-up procedure for drivers to become familiar with the controls and environment of the simulator [44]. Each of the five training sessions incorporates new elements (e.g., traffic behavior) and training content (e.g., challenge scenarios) that build upon the previous sessions and scaffold driving knowledge in a progressive manner. Finally, at the conclusion of the fifth training module, each participant is given a culminating “final exam” in the form of a virtual road test. This road test serves as a cumulative mechanism to examine specific training outcomes that were encountered explicitly within each of the previous five instructional sessions (and the training modules used for each). Refer to Table 1 for a summary of the instructional sessions, an overview of the training module(s) deployed for each session, and a brief description of the primary driving features of each instructional session.

Table 1. Overview of Training Modules for each Instructional Session.

Table 2 summarizes the experimental protocol for each of the instructional simulation modules. Eligible participants came for their first visit accompanied by their legal guardian. At the beginning of this first session, parents and teens provided their informed consent and assent, respectively, to participate in the study. Each session began with a number of pre-surveys and self-report questionnaires issued in advance of the simulator exercises. This was followed by a video briefing detailing driving rules and regulations pertinent to that session’s specific content and a brief acclimation drive. Teens experienced the actual simulator exercises in pairs (i.e., one driver and one passenger). Brief breaks were placed between drives, both to allow the driver and passenger to load/unload and to assist with mitigating simulator sickness. The total duration of each of the five sessions was approximately two hours.

Table 2. Experimental Protocol for Each Instructional Session.

To provide a more complete understanding of the effect of simulation-based training on driving performance, the holistic performance assessment consisted of three primary subcomponents:

2.3.1. Quantitative Measures

A printed simulator score report collected driver performance data for the entire duration of each training module for each instructional session. The score report rated performance on basic driving skills including: (a) travel speed on every street, (b) travel speed at every stop sign, and (c) excursion speed and traffic light state (i.e., red/yellow/green) while traversing both into and out of every stoplight zone. Penalties were assigned when drivers were traveling above the speed limit or when they failed to fully stop at controlled intersections. Cumulatively, these penalties resulted in a final percentage score for each category (i.e., travel speed, stop signs, stop lights), which were then combined to create an aggregated total (0–100) score. Note that the simulator also compiled an (X/Y) trace of lane position in a separate data file. These data were plotted and any obvious/noteworthy trends were observed by the trainee post-experiment, but trainee compliance was not (currently) monitored as a component of the score report cumulative score.
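As a rough illustration of how such a score report could be assembled, the sketch below turns per-category violations into percentage sub-scores and combines them into a 0–100 total. The paper does not state the actual penalty weights or the aggregation rule, so the "share of compliant observations" scoring, the equal-weight average, the stop tolerance, and all function and parameter names are assumptions.

```python
def category_score(violations, opportunities):
    """Percentage of observations in a category that were compliant."""
    if opportunities == 0:
        return 100.0  # nothing to penalize in this category
    return 100.0 * (1.0 - violations / opportunities)


def score_report(street_speeds, stop_sign_speeds, stoplight_passes,
                 stop_tolerance_mph=0.5):
    """Build sub-scores and an aggregated total for one training module.

    street_speeds:     list of (observed_mph, limit_mph) pairs, one per street
    stop_sign_speeds:  list of minimum speeds (mph) recorded at each stop sign
    stoplight_passes:  list of (light_state, speed_mph) pairs on zone entry
    stop_tolerance_mph is a hypothetical threshold for a "full stop".
    """
    speeding = sum(1 for observed, limit in street_speeds if observed > limit)
    rolled_signs = sum(1 for v in stop_sign_speeds if v > stop_tolerance_mph)
    ran_reds = sum(1 for state, v in stoplight_passes
                   if state == "red" and v > stop_tolerance_mph)

    report = {
        "travel_speed": category_score(speeding, len(street_speeds)),
        "stop_signs": category_score(rolled_signs, len(stop_sign_speeds)),
        "stop_lights": category_score(ran_reds, len(stoplight_passes)),
    }
    # Assumed aggregation: unweighted mean of the three category percentages.
    report["total"] = sum(report.values()) / 3.0
    return report
```

A weighted average, or penalties that grow with how far the limit was exceeded, would be equally plausible designs; the unweighted mean is used here only because it is the simplest rule consistent with the description.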

2.3.2. Qualitative Measures

The simulator training evaluator completed an evaluation checklist to subjectively rate the trainee’s performance (in real-time) on various driving skills (e.g., lane maintenance, use of turn indicators, and speed fluctuations while turning) on a 4-point Likert scale. Training evaluators also completed handwritten instructor (HI) comments, later coded to create scores for the number of positive and negative comments (positive HI; negative HI) issued per instructional session, per driver. These tabulations likewise resulted in a difference metric (difference HI), which quantified the difference between the positive and negative comments issued per instructional session, per driver.
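The handwritten-instructor tabulation reduces to simple counting once each comment has been coded. The sketch below assumes that comments are coded with the labels `"positive"` and `"negative"` and that the difference metric is positive minus negative; the labels, the sign convention, and the function name are assumptions, as the paper does not specify them.

```python
from collections import Counter


def hi_scores(coded_comments):
    """Tabulate coded instructor comments for one driver in one session."""
    counts = Counter(coded_comments)
    positive = counts["positive"]
    negative = counts["negative"]
    return {
        "positive_hi": positive,
        "negative_hi": negative,
        # Assumed sign convention: more praise than criticism gives a value > 0.
        "difference_hi": positive - negative,
    }
```

Under this convention, a session with three positive comments and one negative comment yields a difference HI of 2.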

2.3.3. Self-Report Measures

A session-based questionnaire (SBQ) measured pre- and post-session knowledge of basic driving facts, focusing specifically on the driving and roadway details pertinent to each of the five instructional sessions and the corresponding simulator training module. The simulator exercises were intended to reinforce driving skills introduced during the pre-SBQ (and an accompanying video briefing), information that might serve as first-time exposure for many novice teen drivers. Subsequently, during the simulator exercise, drivers directly practiced these skills hands-on, such that the session-targeted subject matter should be better understood by the time the post-SBQ was completed at the conclusion of each training module.

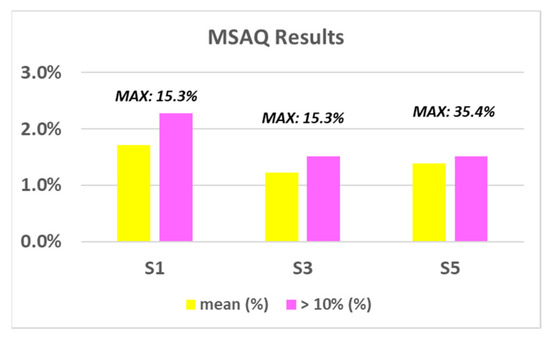

Likewise, a Motion Sickness Assessment Questionnaire (MSAQ) [45] was employed to query how each driver felt before and after they drove the simulator to assess if, and to what degree, simulation had a negative impact on physiological response (e.g., nausea, dizziness, headache). In the full version of the questionnaire, there were 16 questions total, each rated on a 10-point (0–9) Likert scale, with each question addressing one of four possible symptom types (i.e., gastrointestinal; central; peripheral; sopite-related). The MSAQ resulted in sub-scores for each of these four categories, as well as an “overall” sickness score (i.e., ranging from 0 to 144). The MSAQ was administered post-experiment, for modules 1, 3, and 5.
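The scoring arithmetic described above (16 items rated 0–9, four sub-scores, and an overall score between 0 and 144) can be sketched as follows. The number of items per category follows the commonly published MSAQ structure as we understand it (4 gastrointestinal, 5 central, 3 peripheral, 4 sopite-related), but the assignment of item positions to categories and the use of raw sums for the sub-scores are assumptions made for illustration.

```python
# Hypothetical item positions (0-based) for each symptom category.
MSAQ_CATEGORIES = {
    "gastrointestinal": [0, 1, 2, 3],
    "central": [4, 5, 6, 7, 8],
    "peripheral": [9, 10, 11],
    "sopite_related": [12, 13, 14, 15],
}


def score_msaq(responses):
    """Return raw-sum sub-scores and the overall score for one questionnaire."""
    if len(responses) != 16:
        raise ValueError("expected 16 item responses")
    if any(not 0 <= r <= 9 for r in responses):
        raise ValueError("each response must be between 0 and 9")
    scores = {
        name: sum(responses[i] for i in items)
        for name, items in MSAQ_CATEGORIES.items()
    }
    scores["overall"] = sum(responses)  # ranges from 0 to 16 * 9 = 144
    return scores
```

Because every item belongs to exactly one category, the four sub-scores always add up to the overall score.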

Finally, a participant satisfaction form was issued upon program exit to solicit an overall program rating, comments, and suggestions. Separate forms were given to the drivers (students) and to their parents. The student form asked a series of questions (10-point Likert scale) and also contained three open-ended questions asking students for different types of feedback on the program. The parent form assessed what parents thought of the program as a whole and was emailed to parents after their child had completed the entire 10-h program. Open-ended feedback comments (from students as well as parents) were later tabulated and coded to provide trend metrics.

2.4. Experimental Cohort

The study cohort size was N = 132 (66 male, 66 female). For deployment, only teenagers (ages 14–17, permitted in New York State, but unlicensed) who had little or no actual driving experience were recruited, as verified by our advance screening procedures. The intention was to recruit only participants who would be unbiased by pre-existing knowledge of actual on-road driving. This would enable us to promote positive training on the simulator with a “clean slate” of truly novice/green drivers. Other eligibility criteria were strictly health-related: candidate participants could not be susceptible to seizures, claustrophobic, afraid of dark environments, or prone to extreme motion sickness (e.g., car, plane, sea). Primary subject recruitment sources included e-mailings to all regional high schools within a 60-min drive of campus (where the study was conducted), printed fliers posted about the campus, word-of-mouth referrals through families who had already completed the program (as well as related programs on the driving simulator), and printed advertisements within a popular community newspaper. The Institutional Review Board (IRB) at the University at Buffalo reviewed and approved our study protocol in advance of its deployment. Subjects were minimally compensated for their time (a $50 gift card) after completing all five of their two-hour SBT modules.

3. Results and Discussion

Previously published guidelines [46] have summarized general requirements for the successful implementation of a motion simulator intended for driver evaluation. These requirements include: (a) concerns about measurable driver performance criteria, (b) performance with respect to simulator fidelity, and (c) any demerits associated with a simulator implementation related to maladaptation and simulator sickness. During deployment and the subsequent collection and analysis of data, we attempted to address these guidelines comprehensively through a variety of data forms. Accordingly, our results are decomposed into three primary categories of performance measurement: (i) quantitative (i.e., as measured by the simulator), (ii) qualitative (i.e., as observed by the training expert who monitored each instructional session), and (iii) surveys and questionnaires (i.e., as self-reported by driving participants and their parents, as appropriate). As a result, the overall analysis represents a truly multi-measure assessment that enabled a thorough and holistic evaluation of simulator-based driving performance. Results were tabulated, analyzed, and reported using a combination of Microsoft Excel and the R statistical software package.

3.1. Quantitative Measures

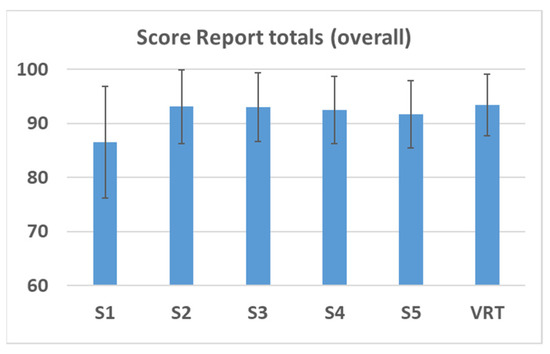

Frequency histograms were composed to illustrate score report total scores as collected and calculated by the computational engine of the driving simulator. These data are representative of core safe driving performance skills as programmed and monitored in real-time by the simulator (e.g., travel speed and behavior at intersections). Refer to Figure 11, which plots the average cohort total score for each of the five training sessions (S1–S5), as well as for the virtual road test (VRT). The figure demonstrates general overall compliance with safe driving habits within the simulator; however, it indicates that there may be a ceiling effect beyond the first session. For the remainder of the sessions, ratings from the score report were biased toward the upper threshold of the rating scale, which is an indicator of the expected improvement of cohort core driving skills (with continued practice/exposure). This trend culminated with the virtual road test, where observed (mean) cohort scores were higher than for any of the training modules offered within the simulator training sessions. Embedded within each series are the corresponding standard deviations for these score report total scores. As expected, the standard deviation was widest for the first session; these deviations then slowly and steadily decreased as training progressed, culminating with the smallest standard deviation for the virtual road test. This is a likely indicator that positive training was maintained and actually improved as the program progressed, as scores tended to increase more uniformly (i.e., with less variation) for the entire training cohort.

Figure 11.

Score report totals.
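The per-session means and standard deviations summarized for Figure 11 can be sketched as follows. This is a minimal illustration only: the study reports using Excel and R, so the Python function `summarize_sessions` and the example scores are hypothetical and are not study data.

```python
from statistics import mean, stdev


def summarize_sessions(scores_by_session):
    """Return {session: (mean, sample standard deviation)} of total scores."""
    return {
        name: (mean(values), stdev(values))
        for name, values in scores_by_session.items()
    }


# Hypothetical score report totals for three trainees (NOT study data).
example = {
    "S1": [60.0, 80.0, 100.0],
    "VRT": [90.0, 95.0, 100.0],
}
summary = summarize_sessions(example)
for session, (avg, sd) in summary.items():
    print(f"{session}: mean={avg:.1f}, sd={sd:.1f}")
```

A narrowing standard deviation alongside a rising mean, as in this toy input, is the pattern the text interprets as more uniform improvement across the cohort.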

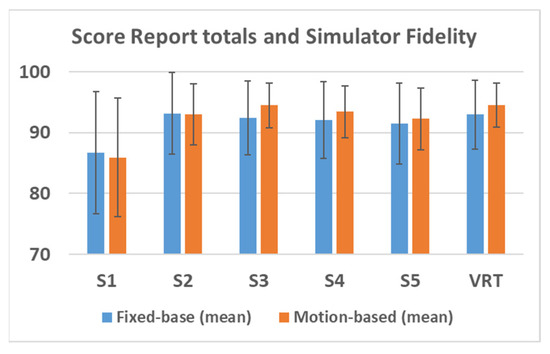

In Figure 12, observed results are compared across simulator fidelities among members of the cohort. Note that all recruited participants were assigned to a cohort (i.e., fixed-base or motion-based) for all training sessions. Although it may have been ideal to have all participants operate both simulator types, enabling a comparison of fidelities within each participant, the overall cohort size (N = 132) provided a sufficient basis for comparison of human performance across simulator-fidelities. For the first session, cohort results were higher on the fixed-base simulator (but with much wider result variance); for the second session, observed results were nearly equal across simulator types, and for the third, fourth, and fifth sessions (as well as the virtual road test), observed results were slightly higher for the motion-based simulator. The general hypothesis for these observations is that for the first session, the fixed-base simulator was easier to learn and operate for a novice trainee, and the second session served as an inflection point. However, once the cohort became suitably acclimated to and comfortable with the companion simulator training environments, the very presence of motion cues to accompany the simulation visuals, as well as the greater induced sense of both presence and immersion within the motion-based simulator display system, provided a higher-quality (i.e., more authentic) learning environment and therefore served to better promote positive training overall.

Figure 12.

Simulator fidelity comparison.

Finally, individual components of the score report were investigated for noteworthy trends. Refer to Table 3, which presents a summary decomposed by simulator fidelity. In general, trainees showed stronger compliance to posted speed limits on the fixed-base simulator. As shown in the second and third columns of the Table, trainees drove almost twice as fast over the speed limit on the higher-fidelity motion simulator (2.6 vs. 1.5 mph over the speed limit, on average), resulting in slightly lower speed sub-scores. This result is somewhat surprising, as one would think that a higher-fidelity environment (both in terms of display system and motion capabilities) would result in more authentic driving behaviors and stronger vigilance to posted speed limits. These trends were actually observed for the latter two score report sub-scores, where stronger compliance was observed toward intersection regulation (stop signs and streetlights) on the motion-based simulator relative to similar observations with the fixed-base simulator (i.e., reflected by the final two columns of the table).

Table 3.

Score Report Metrics by Simulator Type (Entire Cohort).

3.2. Qualitative Measures

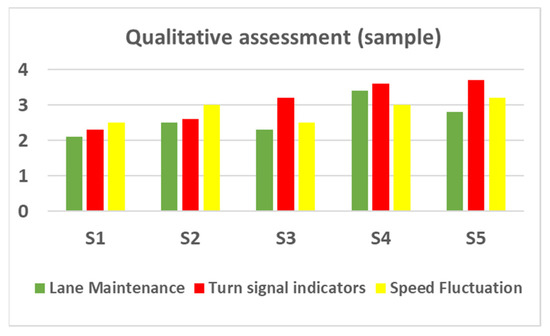

Although quantitative measures served as the primary mechanism for trainee performance assessment, training evaluators also performed a more subjective qualitative assessment (of pairs of trainees) during each instructional session. In this way, certain categories of trainee performance could be monitored and measured that would be challenging to program a simulator to quantify with any meaningful accuracy. Doing so also kept a “human-in-the-loop” as a critical constituent of the overall human performance assessment. To this end, Figure 13 presents an example of the qualitative assessment for a single participant, in the form of an instructor evaluation checklist (IEC). The IEC covered three qualitative performance categories, each rated on a 0–4 Likert scale and observed over the five primary instructional sessions. The categories represent an assessment of (a) overall lateral lane stability, (b) vigilance in using turn signals to indicate turns and lane changes, and (c) maintaining appropriate speed/distance specifically amidst the “challenge” segments of the training modules. For this sample trainee, qualitative ratings generally improved across the five training sessions, particularly compliance with “turn signal indicators”, which demonstrated steady improvement across all five sessions.

Figure 13.

Sample instructor evaluation (qualitative).

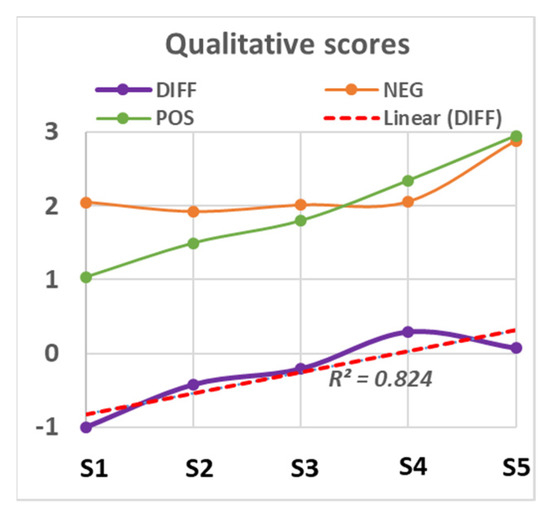

To aggregate these qualitative observations across the entire cohort, project evaluators simultaneously completed handwritten instructor (HI) comments, which were later coded to create scores for the number of positive and negative qualitative performance feedback comments issued per trainee, per session. These tabulations resulted in a difference metric, per training module. To this end, Figure 14 illustrates the positive (POS) and negative (NEG) scores, as well as the difference score (DIFF), averaged across the entire training cohort and issued per training module for each of the five primary instructional sessions. The general observed tendency is that the difference metric is positively correlated with additional simulator exposure, as shown in the plot. As the program progressed, students progressively demonstrated additional positive driving habits and, through experiential exposure with the simulation, practiced gradual performance improvement amidst varied and more complex driving situations. Note that although the positive/negative evaluation differential increased as intended, the overall quantity of negative comments issued was also observed to steadily increase across the five training sessions, as illustrated. One likely reason for this observation is the gradual addition of more challenging driving scenarios as sessions proceeded. Facing a new situation for the first time creates a new potential for young driver error, and this may have resulted in additional demerits from the human evaluator, even as the student continued to master core driving skills from previous exposure. Likewise, as training progressed across training modules and instructional scenarios, the evaluator likely exhibited increased expectations as students began to master baseline driving skills and perhaps felt an increased responsibility to steadily reinforce more advanced driving behaviors.

Figure 14.

Evaluation summary. DIFF, difference score; POS, positive; NEG, negative.
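As a rough illustration of how the POS, NEG, and DIFF series in Figure 14 might be tabulated, the sketch below averages coded comment counts across trainees for one training module. It assumes that DIFF is the mean positive count minus the mean negative count; the text does not state the formula explicitly, and the function name `comment_scores` and the input layout are hypothetical.

```python
from statistics import mean


def comment_scores(coded_comments):
    """Average coded comment counts for one training module.

    coded_comments: list of (positive_count, negative_count), one per trainee.
    Assumption: DIFF = mean(POS) - mean(NEG); the paper does not give the formula.
    """
    if not coded_comments:
        raise ValueError("need at least one trainee")
    pos = mean(p for p, _ in coded_comments)
    neg = mean(n for _, n in coded_comments)
    return {"POS": pos, "NEG": neg, "DIFF": pos - neg}


# Hypothetical counts for two trainees (NOT study data).
scores = comment_scores([(3, 1), (5, 2)])
print(scores)
```

Under this definition, DIFF can rise even while NEG rises, provided POS grows faster, which matches the pattern described in the text.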

3.3. Self-Report Measures

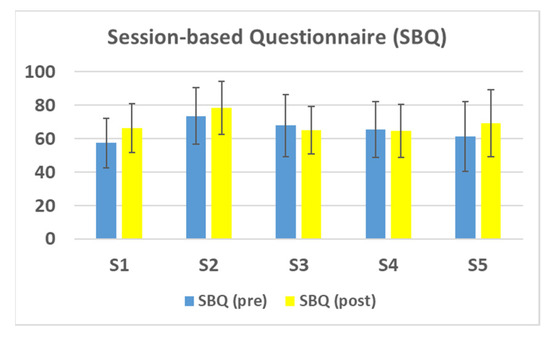

As a tertiary mechanism for the holistic driver performance evaluation, quantitative and qualitative measures were supplemented with a series of self-report metrics. An overall data comparison found that post-SBQ scores were improved (with significance) as compared to the pre-SBQ scores (t(132) = +3.64, p = 5.19 × 10⁻⁹). Figure 15 plots the results (pre vs. post) of the session-based questionnaire (SBQ) cohort average across each of the five instructional sessions. When individual sessions were compared, the post-SBQ scores were higher (with significance) than pre-SBQ scores for three of the five instructional sessions: Session 1, t(132) = +8.89, p = 3.18 × 10⁻¹¹; Session 2, t(132) = +5.00, p = 6.02 × 10⁻⁵; and Session 5, t(132) = +7.92, p = 2.24 × 10⁻⁷. The lack of observed improvement for the other two individual sessions (i.e., Sessions 3 and 4) could indicate that the content of the questionnaires did not correspond closely enough to the training content embedded within the simulator exercises designed as the focal point for those sessions.

Figure 15.

SBQ (pre/post).
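For readers who want to see how a pre/post comparison of this kind is computed, the following sketch calculates a t statistic from matched questionnaire scores. It assumes a paired (dependent-samples) t-test, which the text does not explicitly name, and it uses hypothetical data. For n matched pairs, this statistic has n − 1 degrees of freedom.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t statistic, degrees of freedom) for matched pre/post scores.

    Assumption: a paired t-test; the source does not state which variant was used.
    Raises ZeroDivisionError if every participant's difference is identical.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    t_stat = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t_stat, n - 1


# Hypothetical questionnaire scores for four participants (NOT study data).
t_stat, dof = paired_t(pre=[1, 2, 3, 4], post=[2, 4, 5, 5])
print(f"t({dof}) = {t_stat:+.2f}")
```

Converting the statistic to a p-value requires the Student t distribution, which a statistics package (such as the R software the authors report using) provides.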

The Motion Sickness Assessment Questionnaire (MSAQ) was issued post-simulator in Sessions 1, 3, and 5. An overall evaluation found that, as expected, simulator sickness was not a primary concern for the simulator experiments designed and deployed for this young driving cohort. Figure 16 displays the cohort mean score (reported as a percentage of the overall MSAQ score: 144) and the number of participants who reported cumulative scores of greater than 14 (15) of the total MSAQ scale (also reported as a percentage of the total N = 132 cohort). Standard deviations for the three sessions (1, 3, and 5) were 2.56%, 2.55%, and 4.44%, respectively; these are large relative to the reported means, which indicates a comparatively wide dispersion of results. To this point, note that Figure 16 also displays a maximum (MAX) MSAQ score across the cohort for each of the three sessions in which this metric was collected and analyzed. While the extremes are noteworthy outliers, the overall trends of the data are favorable. The low MSAQ scores across the cohort are attributed to the youthful age demographic (14–17), who are rarely susceptible to anything more than “mild” simulator maladaptation. The experimental design (see Table 2) successfully imparted simulation best-practices and intentionally balanced the 2 h training sessions with breaks, timely transitions between driver/passenger activities, video training, survey activities, and, perhaps most critically, simulator experiment durations that fell below a 15 min maximum.

Figure 16.

Motion Sickness Assessment Questionnaire (MSAQ) summary.
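The three quantities plotted in Figure 16 (cohort mean as a percentage of the 144-point scale, the share of participants scoring above 14, and the cohort maximum) can be sketched as below. The scale total and the threshold are taken from the text; the function name `msaq_summary` and the sample scores are hypothetical.

```python
MSAQ_MAX = 144  # overall MSAQ scale total, as stated in the text


def msaq_summary(raw_totals, threshold=14):
    """Return (mean % of scale, % of participants above threshold, max raw score)."""
    if not raw_totals:
        raise ValueError("need at least one participant score")
    n = len(raw_totals)
    mean_pct = 100.0 * sum(raw_totals) / (n * MSAQ_MAX)
    over_pct = 100.0 * sum(1 for score in raw_totals if score > threshold) / n
    return mean_pct, over_pct, max(raw_totals)


# Hypothetical cumulative MSAQ scores for four participants (NOT study data).
mean_pct, over_pct, max_raw = msaq_summary([16, 14, 20, 22])
print(mean_pct, over_pct, max_raw)
```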

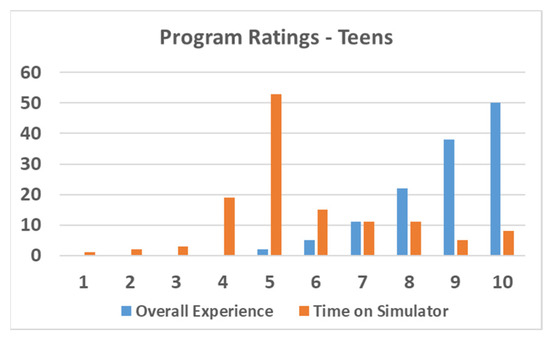

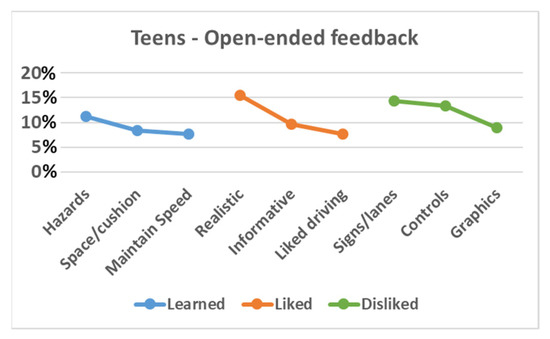

Finally, exit surveys were issued to both the teen trainees and their parents, and these data yielded further valuable information about the training program. For the teen trainees, two questions were administered on a 1 to 10 Likert scale. The first asked, “Overall, rate your experience in the training program”, and the second asked, “Overall, rate the amount of time that was spent on the driving simulator”. Refer to Figure 17, which co-analyzes both series. Overall “experience” ratings were highly favorable, with approximately 110 of the N = 132 cohort (83%) rating their overall experience as an 8/10 or better. In contrast, “time on simulator” ratings were considerably lower, with a weighted cohort average of just 5.7/10. This was likely because not enough session time was spent allowing teens to drive the simulator (performing simulator exercises), as opposed to other activities (e.g., watching videos, taking surveys, watching a partner drive, experiment debrief). Likewise, teens were afforded the opportunity to answer three open-ended questions related to their training experiences: “What did you learn about safe driving?” (375 total comments received), “What did you like most about your simulator training program?” (330 total comments received), and “What did you dislike about the driving simulation training?” (112 total comments received). The three most common responses (reported as percentages) for each question are summarized in Figure 18. Most prominently, trainees mentioned learning about hazard management and the importance of maintaining an appropriate following distance and speed (relative to conditions). Similarly, trainees reported most liking the realism of the simulator, the informative nature of the training modules, and the hands-on engagement of driving a simulator for training. By way of criticism, trainees suggested that the lane markers and signs were difficult to see; that, despite acclimation, the steering wheel and pedals were difficult to adjust to (i.e., a common complaint of all driving simulators); and some suggested that the graphics were not realistic.

Figure 17.

Teen Assessment Experience/Time on Driving Simulator.
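The “weighted cohort average” quoted for the 1 to 10 ratings is a frequency-weighted mean of the response histogram. A minimal sketch follows, using hypothetical response counts rather than the study's distribution; the function name `weighted_likert_mean` is illustrative.

```python
def weighted_likert_mean(counts):
    """Frequency-weighted mean rating.

    counts: mapping of rating value -> number of respondents giving that rating.
    """
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses")
    return sum(rating * n for rating, n in counts.items()) / total


# Hypothetical response histogram (NOT study data).
avg = weighted_likert_mean({5: 2, 6: 1, 8: 1})
print(avg)
```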

Figure 18.

Teen Assessment Open-ended Summary.

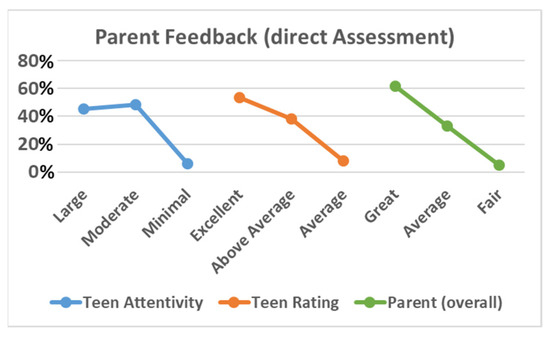

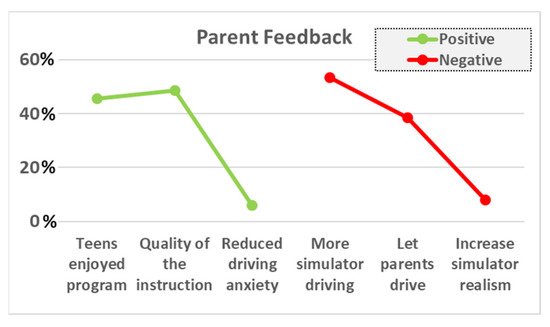

In a similar manner, parents were issued a series of questions when their teen completed the training program. Overall ratings were extremely positive, as parents nearly unanimously suggested that (a) simulator training should constitute a mandatory first step in driver training, (b) they would recommend the course to other parents, and (c) the program successfully taught their child basic driving skills. Parents were also asked three questions about their own perceptions of their teen’s training experiences (each on a 3-point Likert scale): “As a result of their training, did you see your child paying more attention to road signs, road hazards, and general road compliance?” (i.e., translation of training experiences from simulator to real-world), “How much do you think your child enjoyed being part of the SBT program?”, and “Overall, how would you rate this program?” The Likert-style results (reported as response percentages) for each question are summarized (favorably) in Figure 19. As with their teens, parents were afforded the opportunity to answer open-ended questions (positive and negative) related to their child’s training experiences (126 total comments received). For each category, the three most common responses (reported as percentages) are summarized in Figure 20. Parents opined that the primary benefits were that teens enjoyed driving the simulator and that the instructors were of high quality; the third most frequent response (far less common) was that teens seemed to have reduced anxiety while driving as a benefit of their simulator exposure. On the negative side, parents noted that they would have liked to have seen more simulator time, and many also noted the desire to attempt the simulator themselves; the third most frequent response (far less common) was that parents wished for higher visual realism for the simulator.

Figure 19.

Parent (direct) Assessment Likert-style feedback.

Figure 20.

Parent Assessment Open-ended Summary.

4. Broader Impacts of Our Methodology and Implementation

The current research aimed to demonstrate the effectiveness of physics-based M&S and its potential to improve roadway safety and public health within North American young driver training. Such a demonstration could result in a more widespread deployment of these advanced technologies and augmentation of similar training programs across the nation. Agencies that might be interested in such technology include high schools, driver training agencies, and law enforcement. The successful implementation of SBT frameworks could, over time, result in improved driving practices for the teenage demographic, where a disproportionate number of negative driving outcomes (e.g., crashes, injuries) continue to occur [1,2]. Ultimately, this could lead to various other benefits to society, including an increased level of safety on the roadways for all drivers, resulting in a reduction in accidents, injuries, and loss of life. In turn, this could result in reduced insurance rates for all drivers, particularly those in the highest-risk pool (i.e., young males).

Likewise, by leveraging the groundwork established in this paper, similar simulator training programs could be developed for other civilian vehicle types, including large equipment and commercial trucks. Recent research [47,48,49] indicates that skills acquired within a large-vehicle simulator (e.g., trucks, aviation) can directly transfer to the skills required to operate an actual truck/aircraft, and are learned as quickly as (and sometimes faster than) is the case with live/physical training. Within this context, simulators, when employed in addition to (but not as a replacement for) hands-on training, can be particularly useful for instruction of gear-shifting and linear and angular maneuvers of increasing complexity. Likewise, in the military, it remains an ongoing challenge to determine efficient and effective mechanisms for simulator-based training (SBT). It has been emphasized that what matters most for maximizing SBT effectiveness is an environment that fosters psychological fidelity (e.g., cognitive skills) by applying established learning principles (e.g., feedback, measurement, guided practice, and scenario design) [50,51]. Too often, the evaluation of training outcomes focuses on how the simulator itself functions, instead of on how humans perform and behave within the simulator [49,51]. The design and development of a training framework that demonstrates sufficient physical fidelity, while focusing on the analysis of human factors and objective metrics for performance within the training environment, remains a point of emphasis for the current work.

5. Conclusions and Future Work

Traffic accidents remain a primary safety concern and are a leading cause of death for young, inexperienced, and newly licensed drivers [1,2,3,4]. For young driver education, it therefore remains an utmost public health priority to determine how technology can be better employed to increase the overall quality and effectiveness of current driver training methods. Supplemental training afforded by realistic physics-based modeling and hands-on simulation provides much-needed “seat time”, that is, practice and exposure within an engaging and authentic setting that promotes experiential learning. Concrete behavioral and performance feedback can be implemented to enhance and promote positive training, which could help to reduce negative driving outcomes (e.g., crashes, tickets, property damage, and fatalities) and thereby improve young driver safety over the long term.

In this paper, a detailed case study was described that examined the specification, development, and deployment of a “blueprint” for a simulation-centric training framework intended to increase driver training safety in North America (see Figure 1). Points of focus in this paper have included an overview of the numerous advantages of expanding the use of advanced simulation and gaming elements in current and future driver training; consideration toward simulator fidelity (e.g., including motion capabilities and field-of-view); and simulator-based driving environments to enhance young driver training (e.g., including closed-course, residential, and challenge scenarios to accompany baseline environment acclimation).

A multi-measure (holistic) assessment of simulated driver performance was developed and demonstrated, including quantitative, qualitative, and self-report rubrics, from which the following trends were observed:

- Cohort score report total scores exhibited a general upward trend across the entire program, and these trends were moderately enhanced with the presence of motion cues (quantitative).

- Both negative and positive feedback evaluator comments increased as the training modules proceeded. The overarching hypothesis is that the increase in positive feedback was counteracted by the driving session evaluator’s tendency for increased “bias” in the form of growing expectations as the program proceeded (qualitative).

- Post-SBQ scores were higher than pre-SBQ scores (owing to module-specific knowledge gained through simulator exposure), as expected. Motion sickness (by way of MSAQ) was found to be insignificant, which can be credited to the experimental design (see Table 2) and to the young demographics of this specific cohort (self-report).

- Teens rated their experience on the simulator favorably but suggested that more of the overall session time should have been spent on the simulator itself rather than on related activities associated with the training program. They recognized the simulator as an effective way to learn but disliked some of the visual elements and control aspects of the simulator (self-report).

- Almost all parents agreed that the simulator program should be a first step toward future driver training. Furthermore, per their own observations of subsequent teen driving performance, favorable feedback was issued for specific driving skillsets gained, including improved recognition of road signs and road hazards (self-report).

This paper concludes with numerous suggestions and recommendations that would enhance future implementations of simulator training to further improve teen driver safety.

5.1. Improved Metrics for Quantifying Simulator Performance

The simulator score report served as a primary measure for quantifying driver performance and behavior during the driving simulator exercises. The robustness of this score report toward comprehensively quantifying simulator performance stands to be improved from various perspectives. Future implementations would attempt to quantify driver compliance with lateral lane position, primarily during turn and lane-change maneuvers, as well as measuring the ability to maintain lane-centric behavior during hazard events and multitasking; would devise enhanced scoring metrics that are specific to each of the three course environments to monitor overall progress and compliance across the five instructional sessions; and would incorporate more explicit metrics for each of the specialized hazard challenges.
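One way such a lateral lane position measure could be quantified is sketched below. The metric choice is an assumption for illustration, not the authors' planned method: it computes the standard deviation of lateral position (a measure commonly used in driving research) and the fraction of samples that stay within a tolerance band around the lane center. The function name, the 0.5 m tolerance, and the sample offsets are all hypothetical.

```python
from statistics import pstdev


def lane_keeping_metrics(offsets_m, tolerance_m=0.5):
    """Return (standard deviation of lateral position, fraction of samples in band).

    offsets_m: signed lateral offsets from lane center in meters, one per sample.
    tolerance_m: hypothetical half-width of the acceptable band around center.
    """
    if not offsets_m:
        raise ValueError("need at least one sample")
    sdlp = pstdev(offsets_m)  # population standard deviation of the offsets
    in_band = sum(1 for x in offsets_m if abs(x) <= tolerance_m) / len(offsets_m)
    return sdlp, in_band


# Hypothetical lane offsets sampled during one maneuver (NOT study data).
sdlp, in_band = lane_keeping_metrics([-0.2, 0.2, -0.2, 0.2])
print(f"SDLP = {sdlp:.2f} m, in-band fraction = {in_band:.2f}")
```

Computing these values separately for turn, lane-change, and hazard segments would give the per-maneuver view that the text proposes.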

5.2. Improvements to Experimental Training Environment

As a primary segment of teen (and parent) surveys, attempts were made to better understand elements of the current simulator framework that demand improvement in future implementations. Many teens suggested that signs and lane markings were difficult to see; although every attempt was made to ensure clarity and visibility of simulation environment objects, improvements are still required. Many teens suggested that the simulator controls (steering/pedals) were difficult to operate; this remains a common complaint across all driving simulators, particularly with the feel of the brake pedal [52]. It is never feasible to make a simulator “feel” like everyone’s own vehicle, both physically (e.g., the resistive force on the brake pedal) and visually (e.g., the reduced sensation of longitudinal motion, or vection [53], while braking within a virtual environment). Nonetheless, ongoing improvements including additional acclimation and device “tuning” to each driver should be considered. Finally, various teens (and parents) suggested the need for improved simulator graphics/realism toward a modern-day “gaming” standard.

5.3. Impairment Training Modules

The current implementation of SBT content was designed explicitly to promote positive training (i.e., enhancement of knowledge, skills, behaviors [24]) to improve roadway safety. This was attempted by explicitly addressing the top five (documented) causes for teen driving accidents and fatalities in New York State [41], with “impairment” being one of those five. It is well known that teen drivers frequently speak and text-message on their cell phones while driving [54]. Core simulator exercises are in development that compare graphical representations of the teen’s driving to provide concrete performance and behavioral feedback. Related studies have investigated internal distractions (mind-wandering) while driving [33,55], a topic that is well suited to a simulator-based deployment. Finally, according to data published over the last decade, nearly one-fifth of teenagers killed in automobile accidents were under the influence (i.e., illicit or prescription drugs, alcohol), and young drivers are 17 times more likely to be involved in a fatal accident when they have a blood alcohol concentration of 0.08% (i.e., the legal limit in New York State) than when they have not been drinking [56]. Simulator-based exercises have been designed and installed [42] that enable teen drivers to perform “routine” driving tasks with/without a simulated state of driver impairment, and these exercises similarly include concrete driver performance feedback.

5.4. Driving Exercises that Promote Reverse Egress

One major deficiency in the present deployment is its current inability to perform a reverse maneuver within the simulator. Particularly within a driver education context, this feature could be extremely useful and effective toward improving young driver safety. For example, reverse maneuvers within a crowded parking area, 2-point turns (e.g., on a one-way street), 3-point turns (e.g., into a driveway), and the classic parallel-parking maneuver all require an accurate simulation model for reverse vehicle motion. All of these maneuvers are encountered during everyday driving, and many are requirements on a standard New York State road test examination [57].

5.5. Driving Exercises for Driver Training on Rural Roads

Previous research [58] has verified that fatality risk is higher on rural roads than on urban roads for all drivers, and particularly for teen drivers [59]. Per mile traveled, there have been estimated to be 30–40% more crashes and deaths in rural areas than in urban areas [59]. Likely causes of these untoward events include: remote areas with which young drivers are less familiar; low-lighting conditions during nighttime driving; increased propensity for elevated speeds on roads that are less traveled [60]; and, as a result of these factors, an elevated likelihood of induced cognitive distraction (e.g., mindlessness, task-unrelated thought [33,55]) during the driving task. As such, these are all critical factors for which to provide exposure, and subsequently examine human performance behaviors, in future teen driving SBT research.

5.6. Longitudinal Data to Measure Efficacy of SBT

To determine the long-term efficacy of employing simulation for teen drivers on a broader scale, it would be beneficial to observe the impact of such training exposure upon lifetime driving statistics. In New York State, the MV-15 report [61] provides vital information regarding traffic accidents, moving violations, and other negative driving outcomes of program participants. In future implementations of similar programs, it would be useful to obtain consent/assent to collect and analyze reports culled from participants enrolled in simulation-based training programs and to compare them with state and national averages. If favorable, such empirical data, presently unavailable in the literature, could influence future policy and safety standards related to SBT courseware.

Author Contributions

Conceptualization, K.E.L. and K.F.H.; methodology, K.F.H.; software, G.A.F. and K.F.H.; validation, K.F.H.; formal analysis, K.F.H., M.B., H.D. and N.H.; investigation, R.S.A.L., M.B., N.H., H.D., B.S., J.-A.D., R.C., I.D.J., A.A., J.D. and N.B.; data curation, M.B. and K.F.H.; writing—original draft preparation, K.F.H. and R.S.A.L.; writing—review and editing, R.S.A.L. and K.F.H.; visualization, K.F.H. and R.S.A.L.; supervision, K.F.H.; project administration, N.H., H.D., M.B. and K.F.H.; funding acquisition, K.E.L. and K.F.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science Foundation (NSF), grant number 1229861, the Industry/University Cooperative Research Center (I/UCRC) for e-Design. This research effort served as a timely contribution to its “Visualization and Virtual Prototyping” track.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of the University at Buffalo (protocol code 030-431144 on 21 October 2015).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to participant privacy and confidentiality concerns related to the terms of the study IRB.

Acknowledgments

Toward this extensive, multi-year endeavor, the authors wish to gratefully acknowledge the financial and technical support provided by Moog, Inc. (East Aurora, NY) that further enabled the novel application of M&S to improve STEM, training engagement, and young driver safety. Lastly, authors Hulme and Lim are grateful for the ongoing support of the University at Buffalo Stephen Still Institute for Sustainable Transportation and Logistics (SSISTL).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Centers for Disease Control and Prevention (CDC). WISQARS (Web-Based Injury Statistics Query and Reporting System). US Department of Health and Human Services. September 2019. Available online: https://www.cdc.gov/injury/wisqars/index.html (accessed on 12 November 2020).

- Insurance Institute for Highway Safety (IIHS). Fatality Facts 2017: Teenagers. Highway Loss Data Institute. December 2018. Available online: https://www.iihs.org/topics/fatality-statistics/detail/teenagers (accessed on 12 November 2020).

- Mayhew, D.R.; Simpson, H.M.; Pak, A. Changes in collision rates among novice drivers during the first months of driving. Accid. Anal. Prev. 2003, 35, 683–691.

- McCartt, A.T.; Shabanova, V.I.; Leaf, W.A. Driving experiences, crashes, and teenage beginning drivers. Accid. Anal. Prev. 2003, 35, 311–320.

- Thomas, F.D., III; Blomberg, R.D.; Fisher, D.L. A Fresh Look at Driver Education in America; Report No. DOT HS 811 543; National Highway Traffic Safety Administration: Washington, DC, USA, 2012.

- Vaa, T.; Høye, A.; Almqvist, R. Graduated driver licensing: Searching for the best composition of components. Lat. Am. J. Manag. Sustain. Dev. 2015, 2, 160.

- Strayer, D.L.; Cooper, J.M.; Goethe, R.M.; McCarty, M.M.; Getty, D.J.; Biondi, F. Visual and Cognitive Demands of Using In-Vehicle Information Systems; AAA Foundation for Traffic Safety: Washington, DC, USA, 2017.

- Rechtin, M. Dumb and Dumber: America’s Driver Education is Failing Us All—Reference Mark. motortrend.com (Online Article), Published 20 June 2017. Available online: https://www.motortrend.com/news/dumb-dumber-americas-driver-education-failing-us-reference-mark/ (accessed on 26 November 2020).

- Helman, S.V.; Fildes, W.; Oxley, B.; Fernández-Medina, J.; Weekley, K. Study on Driver Training, Testing and Medical Fitness—Final Report; European Commission, Directorate-General Mobility and Transport (DG MOVE): Brussels, Belgium, 2017; ISBN 978-92-79-66623-0. [Google Scholar]

- Nalmpantis, D.; Naniopoulos, A.; Bekiaris, E.; Panou, M.; Gregersen, N.P.; Falkmer, T.; Baten, G.; Dols, J.F. “TRAINER” project: Pilot applications for the evaluation of new driver training technologies. In Traffic & Transport Psychology: Theory and Application; Underwood, G., Ed.; Elsevier: Oxford, UK, 2005; pp. 141–156. ISBN 9780080443799. [Google Scholar]

- Imtiaz, A.; Mueller, J.; Stanley, L. Driving Behavior Differences among Early Licensed Teen, Novice Teen, and Experienced Drivers in Simulator and Real World Potential Hazards. In Proceedings of the IIE Annual Conference and Expo 2014, Montreal, QC, Canada, 31 May–3 June 2014. [Google Scholar]

- Mirman, J.H.; Curry, A.E.; Winston, F.K.; Wang, W.; Elliott, M.R.; Schultheis, M.T.; Thiel, M.C.F.; Durbin, D.R. Effect of the teen driving plan on the driving performance of teenagers before licensure: A randomized clinical trial. JAMA Pediatrics 2014, 168, 764–771. [Google Scholar] [CrossRef] [PubMed]

- Milleville-Pennel, I.; Marquez, S. Comparison between elderly and young drivers’ performances on a driving simulator and self-assessment of their driving attitudes and mastery. Accid. Anal. Prev. 2020, 135, 105317. [Google Scholar] [CrossRef] [PubMed]

- Martín-delosReyes, L.M.; Jiménez-Mejías, E.; Martínez-Ruiz, V.; Moreno-Roldán, E.; Molina-Soberanes, D.; Lardelli-Claret, P. Efficacy of training with driving simulators in improving safety in young novice or learner drivers: A systematic review. Transp. Res. Part F Traffic Psychol. Behav. 2019, 62, 58–65. [Google Scholar] [CrossRef]

- Campbell, B.T.; Borrup, K.; Derbyshire, M.; Rogers, S.; Lapidus, G. Efficacy of Driving Simulator Training for Novice Teen Drivers. Conn. Med. 2016, 80, 291–296. [Google Scholar] [PubMed]

- De Groot, S.; De Winter, J.C.F.; Mulder, M.; Wieringa, P.A. Didactics in simulator based driver training: Current state of affairs and future potential. In Proceedings of the Driving Simulation Conference North America, Iowa City, IA, USA, 12–14 September 2007.

- McDonald, C.C.; Goodwin, A.H.; Pradhan, A.K.; Romoser, M.R.E.; Williams, A.F. A Review of Hazard Anticipation Training Programs for Young Drivers. J. Adolesc. Health 2015, 57 (Suppl. 1), S15–S23.

- Fuller, R. Driver training and assessment: Implications of the task-difficulty homeostasis model. In Driver Behaviour and Training; Dorn, L., Ed.; Ashgate: Dublin, Ireland, 2007; Volume 3, pp. 337–348.

- Hulme, K.F.; Kasprzak, E.; English, K.; Moore-Russo, D.; Lewis, K. Experiential Learning in Vehicle Dynamics Education via Motion Simulation and Interactive Gaming. Int. J. Comput. Games Technol. 2009, 2009, 952524.

- Hulme, K.F.; Lewis, K.E.; Kasprzak, E.M.; Russo, D.-M.; Singla, P.; Fuglewicz, D.P. Game-based Experiential Learning in Dynamics Education Using Motion Simulation. In Proceedings of the Interservice/Industry Training, Simulation and Education Conference (I/ITSEC), Orlando, FL, USA, 29 November–2 December 2010.

- Wickens, C.; Toplak, M.; Wiesenthal, D. Cognitive failures as predictors of driving errors, lapses, and violations. Accid. Anal. Prev. 2008, 40, 1223–1233.

- Chiu-Shui, C.; Chien-Hui, W. How Real is the Sense of Presence in a Virtual Environment?: Applying Protocol Analysis for Data Collection. In Proceedings of the CAADRIA 2005: 10th International Conference on Computer Aided Design Research in Asia, New Delhi, India, 28–30 April 2005.

- Berkman, M.I.; Akan, E. Presence and Immersion in Virtual Reality. In Encyclopedia of Computer Graphics and Games; Lee, N., Ed.; Springer: Berlin/Heidelberg, Germany, 2019.

- Groeger, J.A.; Banks, A.P. Anticipating the content and circumstances of skill transfer: Unrealistic expectations of driver training and graduated licensing? Ergonomics 2007, 50, 1250–1263.

- Kaptein, N.; Theeuwes, J.; van der Horst, R. Driving Simulator Validity: Some Considerations. Transp. Res. Rec. 1996, 1550, 30–36.

- Godley, S.T.; Triggs, T.J.; Fildes, B.N. Driving simulator validation for speed research. Accid. Anal. Prev. 2002, 34, 589–600.

- Bella, F. Driving simulator for speed research on two-lane rural roads. Accid. Anal. Prev. 2008, 40, 1078–1087.

- Stoner, H.A.; Fisher, D.; Mollenhauer, M., Jr. Simulator and scenario factors influencing simulator sickness. In Handbook of Driving Simulation for Engineering, Medicine, and Psychology; CRC Press: Boca Raton, FL, USA, 2011.

- Pettijohn, K.; Pistone, D.; Warner, A.; Roush, G.; Biggs, A. Postural Instability and Seasickness in a Motion-Based Shooting Simulation. Aerosp. Med. Hum. Perform. 2020, 91, 703–709.

- Keshavarz, B.; Ramkhalawansingh, R.; Haycock, B.; Shahab, S.; Campos, J. Comparing simulator sickness in younger and older adults during simulated driving under different multisensory conditions. Transp. Res. Part F Traffic Psychol. Behav. 2018, 54, 47–62.

- Gee, J.P. What Games Have to Teach Us about Learning and Literacy; Palgrave Macmillan: New York, NY, USA, 2007.

- Lee, J.; Hammer, J. Gamification in Education: What, How, Why Bother? Acad. Exch. Q. 2011, 15, 146.

- Hulme, K.F.; Androutselis, T.; Eker, U.; Anastasopoulos, P. A Game-based Modeling and Simulation Environment to Examine the Dangers of Task-Unrelated Thought While Driving. In Proceedings of the MODSIM World Conference, Virginia Beach, VA, USA, 25–27 April 2016.

- Milliken, W.F.; Milliken, D.L. Race Car Vehicle Dynamics; Society of Automotive Engineers: Warrendale, PA, USA, 1995.

- Rajamani, R. Vehicle Dynamics and Control; Springer: New York, NY, USA, 2006; ISBN 0-387-26396-9.

- Raghuwanshi, V.; Salunke, S.; Hou, Y.; Hulme, K.F. Development of a Microscopic Artificially Intelligent Traffic Model for Simulation. In Proceedings of the Interservice/Industry Training, Simulation and Education Conference (I/ITSEC), Orlando, FL, USA, 1–4 December 2014.

- Romano, R. Non-linear Optimal Tilt Coordination for Washout Algorithms. In Proceedings of the AIAA Modeling and Simulation Technologies Conference and Exhibit, AIAA 2003, Austin, TX, USA, 11–14 August 2003; p. 5681.

- Bowles, R.L.; Parrish, R.V.; Dieudonne, J.E. Coordinated Adaptive Washout for Motion Simulators. J. Aircr. 1975, 12, 44–50.

- Sung-Hua, C.; Li-Chen, F. An optimal washout filter design for a motion platform with senseless and angular scaling maneuvers. In Proceedings of the 2010 American Control Conference, ACC 2010, Baltimore, MD, USA, 30 June–2 July 2010; pp. 4295–4300.

- Akutagawa, K.; Wakao, Y. Stabilization of Vehicle Dynamics by Tire Digital Control—Tire Disturbance Control Algorithm for an Electric Motor Drive System. World Electr. Veh. J. 2019, 10, 25.

- New York State Department of Health (NYSDOH). Car Safety—Teen Driving Safety (Online Statistics Portal). 2020. Available online: https://www.health.ny.gov/prevention/injury_prevention/teens.htm (accessed on 16 November 2020).

- Fabiano, G.A.; Hulme, K.; Linke, S.M.; Nelson-Tuttle, C.; Pariseau, M.E.; Gangloff, B.; Lewis, K.; Pelham, W.E.; Waschbusch, D.A.; Waxmonsky, J.; et al. The Supporting a Teen’s Effective Entry to the Roadway (STEER) Program: Feasibility and Preliminary Support for a Psychosocial Intervention for Teenage Drivers with ADHD. Cogn. Behav. Pract. 2011, 18, 267–280.

- Fabiano, G.; Schatz, N.K.; Hulme, K.F.; Morris, K.L.; Vujnovic, R.K.; Willoughby, M.T.; Hennessy, D.; Lewis, K.E.; Owens, J.; Pelham, W.E. Positive Bias in Teenage Drivers with ADHD within a Simulated Driving Task. J. Atten. Disord. 2015, 22, 1150–1157.

- Overton, R.A. Driving Simulator Transportation Safety: Proper Warm-up Testing Procedures, Distracted Rural Teens, and Gap Acceptance Intersection Sight Distance Design. Ph.D. Thesis, University of Tennessee, Knoxville, TN, USA, 2012. Available online: https://trace.tennessee.edu/utk_graddiss/1462 (accessed on 2 December 2020).

- Gianaros, P.; Muth, E.; Mordkoff, J.; Levine, M.; Stern, R. A Questionnaire for the Assessment of the Multiple Dimensions of Motion Sickness. Aviat. Space Environ. Med. 2001, 72, 115–119.

- De Winter, J.C.; de Groot, S.; Mulder, M.; Wieringa, P.A.; Dankelman, J.; Mulder, J.A. Relationships between driving simulator performance and driving test results. Ergonomics 2009, 52, 137–153.

- Hirsch, P.; Bellavance, F.; Choukou, M.-A. Transfer of training in basic control skills from a truck simulator to a real truck. J. Transp. Res. Board 2017, 2637, 67–73.

- Clinewood. Are Driving Simulators Beneficial for Truck Driving Training? 2018. Available online: https://clinewood.com/2018/02/19/driving-simulators-beneficial-truck-driving-training/ (accessed on 22 November 2020).

- Salas, E.; Bowers, C.A.; Rhodenizer, L. It is not how much you have but how you use it: Toward a rational use of simulation to support aviation training. Int. J. Aviat. Psychol. 1998, 8, 197–208.

- Straus, S.G.; Matthew, W.L.; Kathryn, C.; Rick, E.; Matthew, E.B.; Timothy, M.; Christopher, M.C.; Geoffrey, E.G.; Heather, S. Collective Simulation-Based Training in the U.S. Army: User Interface Fidelity, Costs, and Training Effectiveness; RAND Corporation: Santa Monica, CA, USA, 2019. Available online: https://www.rand.org/pubs/research_reports/RR2250.html (accessed on 18 November 2020).

- Hays, R.T.; Michael, J.S. Simulation Fidelity in Training System Design: Bridging the Gap between Reality and Training; Springer: New York, NY, USA, 1989.

- Wu, Y.; Boyle, L.; McGehee, D.; Roe, C.; Ebe, K.; Foley, J. Modeling Types of Pedal Applications Using a Driving Simulator. Hum. Factors 2015, 57, 1276–1288.

- Kemeny, A.; Panerai, F. Evaluating perception in driving simulation experiments. Trends Cogn. Sci. 2003, 7, 31–37.

- Delgado, M.K.; Wanner, K.; McDonald, C. Adolescent Cellphone Use While Driving: An Overview of the Literature and Promising Future Directions for Prevention. Media Commun. 2016, 4, 79.

- Hulme, K.F.; Morris, K.L.; Anastasopoulos, P.; Fabiano, G.A.; Frank, M.; Houston, R. Multi-measure Assessment of Internal Distractions on Driver/Pilot Performance. In Proceedings of the Interservice/Industry Training, Simulation and Education Conference (I/ITSEC), Orlando, FL, USA, 30 November–4 December 2015.

- Centers for Disease Control and Prevention (CDC). Vital Signs, Teen Drinking and Driving. 2020. Available online: https://www.cdc.gov/vitalsigns/teendrinkinganddriving/index.html (accessed on 13 October 2020).

- New York State Department of Motor Vehicles (NYSDMV). Pre-Licensing Course Student’s Manual (Form MV-277.1 PDF Download). 2020. Available online: https://dmv.ny.gov/forms/mv277.1.pdf (accessed on 13 October 2020).

- Senserrick, T.; Brown, T.; Quistberg, D.A.; Marshall, D.; Winston, F. Validation of Simulated Assessment of Teen Driver Speed Management on Rural Roads. Annu. Proc. Assoc. Adv. Automot. Med. 2007, 51, 525–536.

- Insurance Institute for Highway Safety (IIHS). Fatality Facts 2018—Urban/Rural Comparison. 2018. Available online: https://www.iihs.org/topics/fatality-statistics/detail/urban-rural-comparison (accessed on 2 November 2020).

- Henning-Smith, C.; Kozhimannil, K.B. Rural-Urban Differences in Risk Factors for Motor Vehicle Fatalities. Health Equity 2018, 2, 260–263.

- New York State Department of Motor Vehicles (NYSDMV). Request for Certified DMV Records (Form MV-15 PDF Download). 2019. Available online: https://dmv.ny.gov/forms/mv15.pdf (accessed on 2 November 2020).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).