3.1. Outbreak of Fire during Cooking with an IH Cooker

A fire broke out in April 2008 due to the misuse of an IH cooker (from Encyclopedia of Accidents II (Nikkei BP)). A woman was preparing a deep-fried dish, and the fire started while she was briefly away from the kitchen. She attempted to fight the fire but suffered burns on her hand, and the cooking range hood was partly damaged. A user must take the following three precautionary measures when using an IH cooker, and all three were violated in this case.

- (1) When preparing a deep-fried dish, the user must select the “deep-fried dish” mode. An IH cooker heats a cooking pot by inducing an eddy current inside the pot with a magnetic coil. Using the “deep-fried dish” mode and setting the oil temperature prevents overheating of the pot to some extent. The woman, however, selected the “manual” mode.

- (2) When using an IH cooker, the user must use the pot supplied exclusively for the cooker. The IH cooker has a built-in temperature sensor at the location corresponding to the center of the dedicated pot; the sensor measures the temperature of the pot and thus prevents the oil from overheating. However, the woman used a different, commercially available cooking pot.

- (3) When using an IH cooker, the user must not prepare a deep-fried dish with less than 500 g of oil. With less oil, the temperature can rise abruptly, which makes it impossible to measure the oil temperature accurately. The woman cooked with less than 500 g of oil.
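The three measures above can be read as a simple checklist. The following is a minimal sketch of such a check, not part of the original analysis; the names `FryingSetup` and `violated_measures` and the example oil amounts are hypothetical, and only the 500 g threshold, the mode names and the dedicated-pot requirement come from the text.

```python
from dataclasses import dataclass

# Threshold stated in measure (3); everything else below is illustrative.
MIN_OIL_GRAMS = 500


@dataclass
class FryingSetup:
    """Hypothetical description of how the cooker is being used."""
    mode: str                 # e.g. "deep-fried dish" or "manual"
    uses_dedicated_pot: bool  # True if the pot supplied with the cooker is used
    oil_grams: float          # amount of oil in the pot


def violated_measures(setup: FryingSetup) -> list[int]:
    """Return the numbers of the precautionary measures that are violated."""
    violations = []
    if setup.mode != "deep-fried dish":
        violations.append(1)  # measure (1): wrong cooking mode
    if not setup.uses_dedicated_pot:
        violations.append(2)  # measure (2): pot other than the dedicated one
    if setup.oil_grams < MIN_OIL_GRAMS:
        violations.append(3)  # measure (3): too little oil
    return violations


# A setup resembling the incident (400 g is an assumed value below 500 g).
incident = FryingSetup(mode="manual", uses_dedicated_pot=False, oil_grams=400.0)
print(violated_measures(incident))
```

In the incident described here, all three checks would fail at once, which is the situation the cognitive-bias analysis in this section tries to explain.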

An experiment conducted by the National Institute of Technology and Evaluation (NITE), Japan, to reproduce this incident yielded the following results. When the dedicated cooking pot was used with 600 mL of oil, a fire did not break out. In contrast, when a commercial pot with a concave center was used, combustion occurred in approximately 6 min.

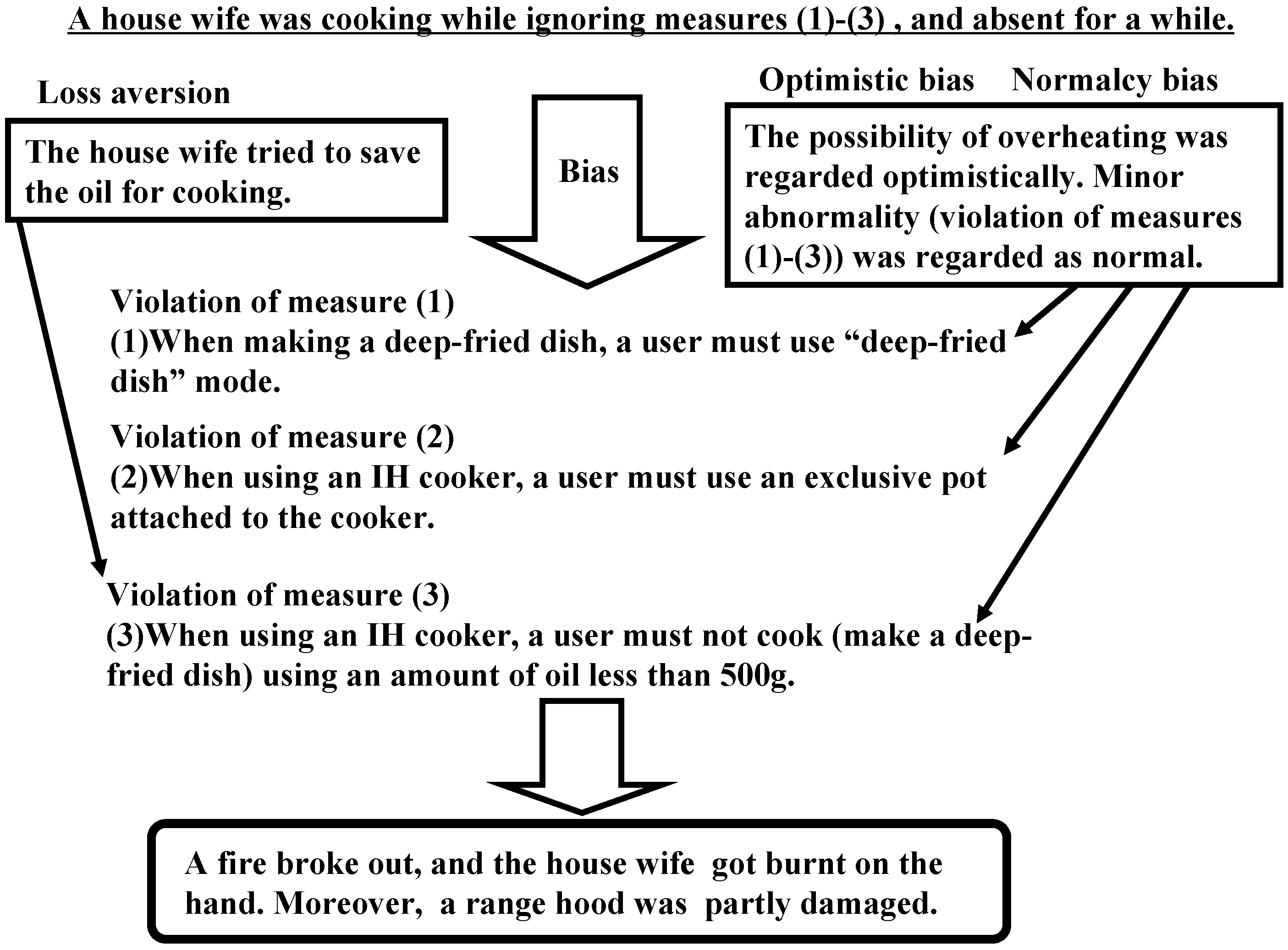

We now consider the cause of the incident from the perspective of cognitive biases. Optimistic bias is the tendency to overestimate how rosy our future will be (the occurrence of desirable events) and to avoid facing inconvenient events. This may be because imagining a favorable future feels good. We tend to believe that we are less at risk of experiencing a negative event than others and that we exaggerate less than others.

Normalcy bias is our propensity to regard minor abnormalities as normal. Through it, we keep ourselves from reacting excessively to various changes or new events and becoming worn out. In excess, normalcy bias becomes hazardous, because we do not take a warning seriously and may delay escaping from a disaster, such as a tsunami or landslide. Normalcy bias is in fact a coping mechanism that we adopt while attempting to register and deal with stressful events or impending disasters. Because we fear change, we tend to resist it, and, in turn, the brain tries to simulate a normal environment. This resistance to change is a common and normal response and can occur even during the first phase of stressful events or disasters. However, risk can stem from this bias, as we become accustomed to normal situations or states and thereby tend to assume too optimistically that the situations surrounding us will continue to be normal.

In summary, the violation of precautionary measures (1) and (2) probably stemmed from optimistic and normalcy biases. The possibility of overheating was regarded optimistically, and, owing to the normalcy bias, the minor abnormality (violation of Measures (1) and (2)) was probably regarded as normal. Concerning Measure (3), along with optimistic and normalcy biases, loss aversion might have played a role, because the woman tried to save cooking oil. Thus, cognitive biases were the main contributors to the occurrence of the incident. The analysis of this incident is summarized in Figure 3.

Figure 3. Summary of the analysis of an IH cooker incident.

3.2. KLM Flight 4805 Crash

In March 1977, KLM Flight 4805, a Boeing 747, left Amsterdam bound for Las Palmas Airport in the Canary Islands. A terrorist bomb exploded at a flower shop in Las Palmas Airport, and so the flight, along with a few others, was diverted to Tenerife Airport. After landing there, the flight waited for take-off clearance from ATC (air traffic control), but the clearance was delayed because fog had reduced visibility at the airport. The captain, however, decided to take off without permission from ATC and advanced the throttles to full power on the foggy runway. Unfortunately, a Pan Am 747 was still on the runway as KLM Flight 4805 approached it at take-off speed. Although the captain attempted to avoid a collision by lifting off as early as possible, the underside of the KLM plane’s fuselage ripped through the Pan Am plane, and the KLM plane burst into a fiery explosion. All crew and passengers of the KLM plane and many passengers of the Pan Am plane lost their lives.

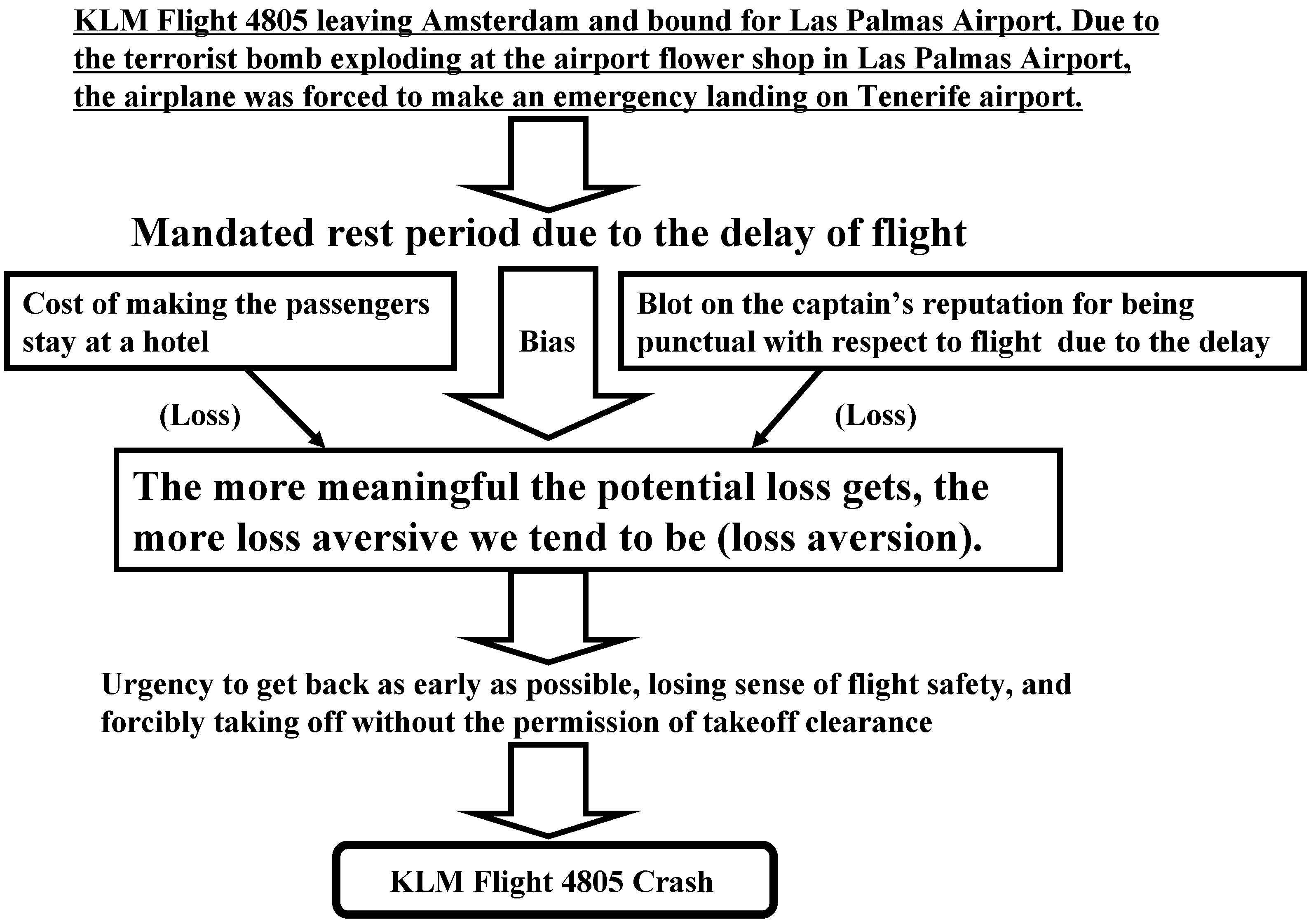

Brafman and Brafman [19] pointed out that loss aversion strongly contributed to the KLM Flight 4805 crash. In this case, the losses for the captain of the flight included a reduced mandated rest period due to the flight delay, the cost of accommodating the passengers at a hotel until the situation improved and a series of consequences of the flight delay, such as stress imposed on the captain and a blot on the captain’s reputation for being punctual. The complicated interaction of these factors probably triggered and escalated the captain’s feeling of loss aversion. The more significance we attach to a potential loss, the more loss averse we tend to be. The captain must have been preoccupied with the urge to reach the destination as early as possible and must have lost his sense of safety, resulting in his decision to take off without clearance from ATC. For no apparent logical reason, we tend to get trapped in such a cognitive bias. Loss aversion apparently affected the decision making of even a seasoned flight captain and caused the critical crash. The analysis of the crash is summarized in Figure 4.

Figure 4. Summary of the analysis of the KLM Flight 4805 crash.

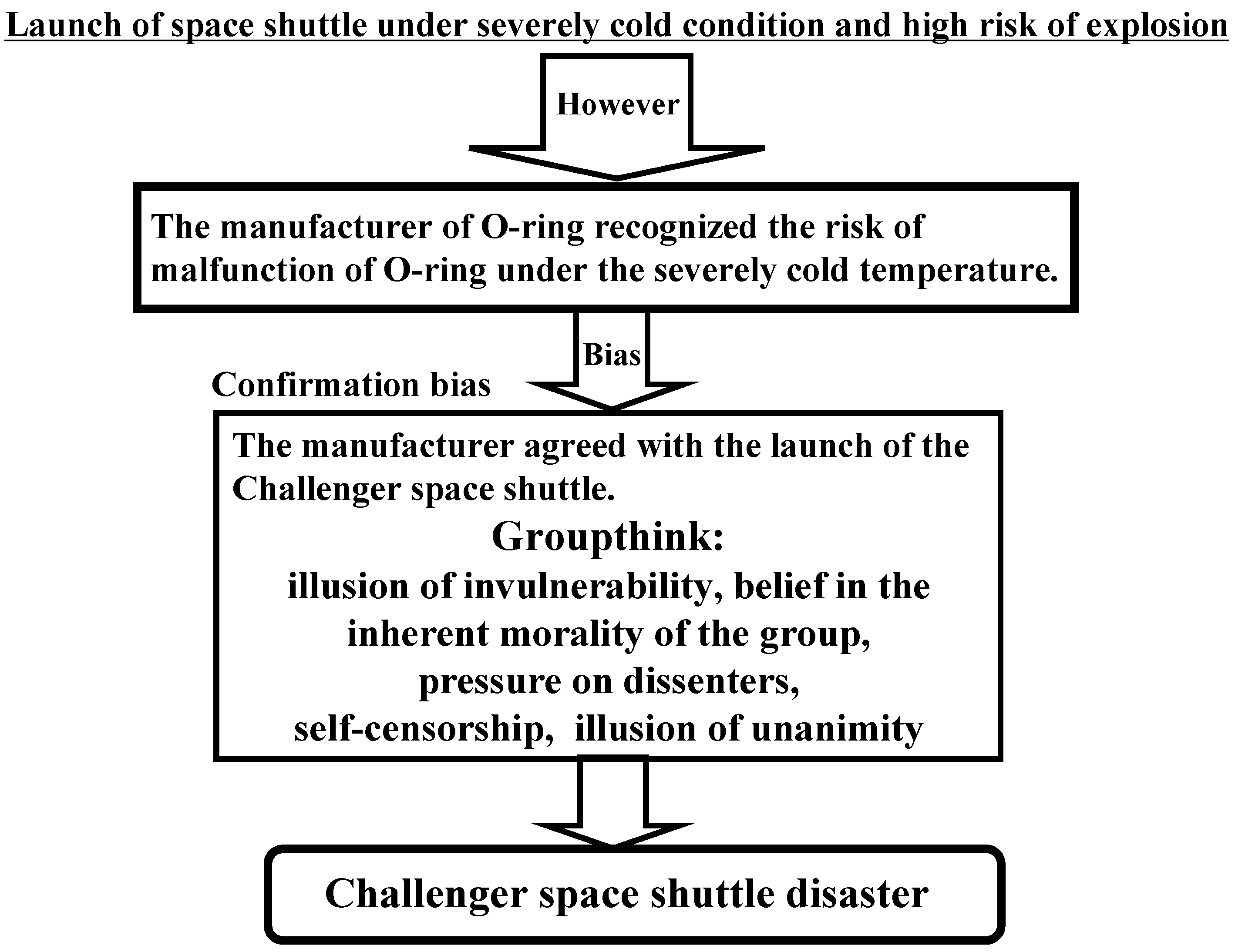

3.3. Challenger Space Shuttle Disaster

One of the major causes of the Challenger space shuttle disaster, in January 1986, is considered to be the phenomenon of groupthink, especially the illusion of unanimity (e.g., Reason [10] and Vaughan [11]). The illusion of unanimity implies that the group decision conforms to the majority view. When such a cognitive bias occurs, the majority view and individual judgments are assumed to be unanimous. As shown in Figure 2, groupthink stems from the confirmation heuristic. As stated by Janis [12,13,14], groupthink is explained by the following three properties: overestimation of the group, closed-mindedness and pressure toward conformity. These properties can distort the group’s decision in the wrong direction.

Although the manufacturer of the O-ring (a component of the space shuttle) recognized the risk of a malfunction of the O-ring under severely cold temperatures, the manufacturer agreed to the launch of the Challenger space shuttle because of groupthink. Specifically, the factors that contributed to this irrational behavior include direct pressure on dissenters (group members are under social pressure not to oppose the group consensus), self-censorship (doubts and deviations from the perceived group consensus are not expressed) and the illusion of unanimity. Turner et al. [20,21] also showed that groupthink is most likely to occur when a group experiences antecedent conditions, such as high cohesion, insulation from experts and limited methodological search and appraisal procedures, and that it leads to symptoms, such as the illusion of invulnerability, the belief in the inherent morality of the group, pressure on dissenters, self-censorship and the illusion of unanimity. More concretely, the symptoms of groupthink, i.e., (1) incomplete analysis of the alternatives, (2) incomplete analysis of the objectives and (3) failure to examine the risks of the preferred choice, were observed in the Challenger space shuttle disaster. The group, as a whole, did not consider the opinion of the manufacturer that the O-ring might not work properly in a severely cold environment and did not carry out a complete analysis of this opinion. This eventually led to the critical disaster. The analysis of this incident is summarized in Figure 5.

Figure 5. Summary of the analysis of the Challenger space shuttle disaster.

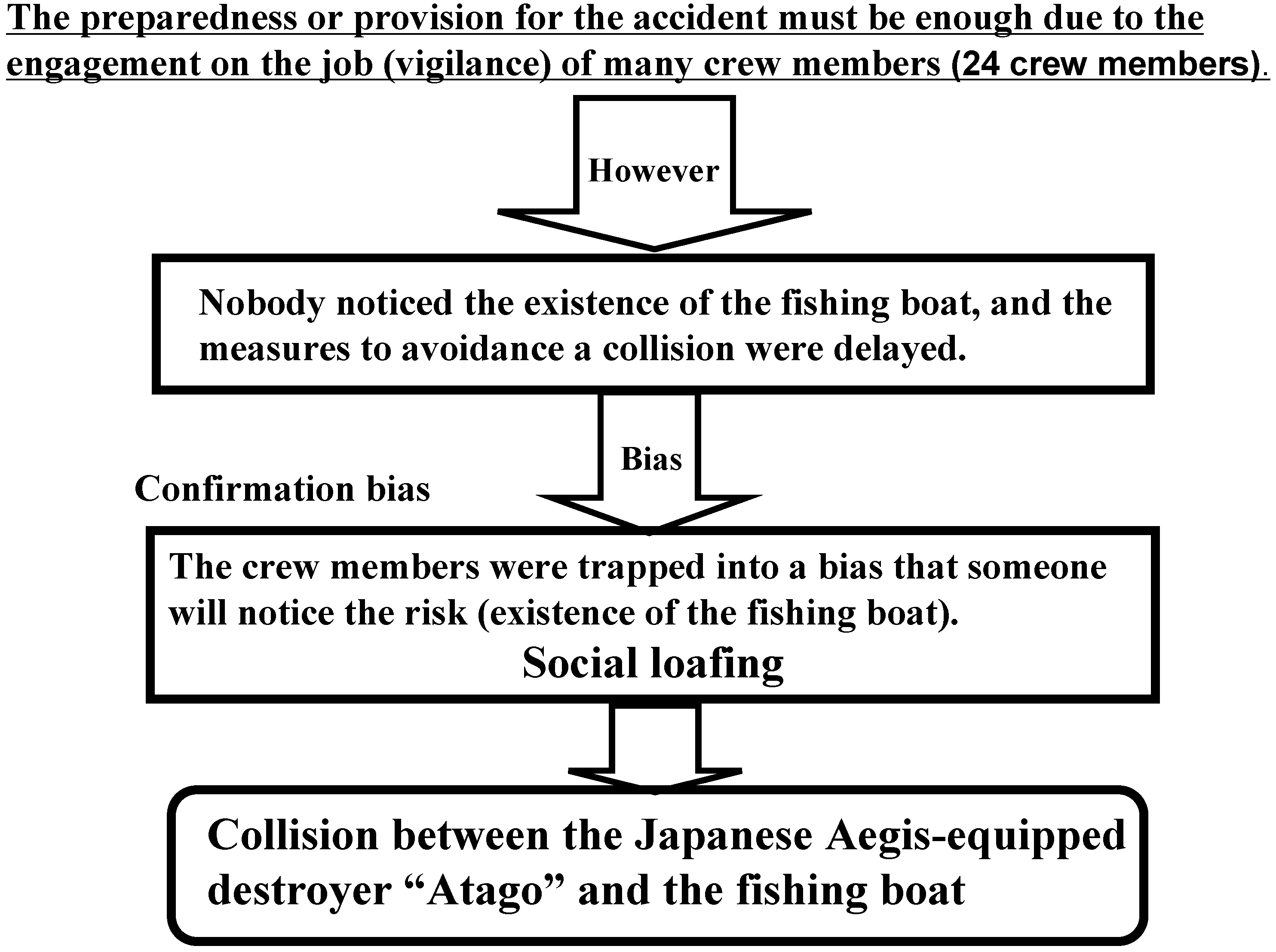

3.4. Collision between the Japanese Aegis-Equipped Destroyer “Atago” and a Fishing Boat

At dawn on 19 February 2008, the Japanese Aegis-equipped destroyer “Atago,” which belonged to the Japanese Ministry of Defense, collided with the fishing boat “Seitokumaru.” As a result, two crew members of the fishing boat went missing and were later declared dead.

Social loafing, which stems from confirmation bias or optimistic bias, possibly led to this critical incident. Social loafing [22,23] corresponds to a defective behavior in a social dilemma situation. This phenomenon can also lead to an undesirable event when a group works cooperatively. Therefore, organizational managers must take appropriate measures to prevent social loafing from leading to undesirable outcomes. Latané et al. [22] showed that social loafing is the tendency of an individual to exert less effort when carrying out a job in a group than when working individually. Murata [24] showed that, due to social loafing, the performance of a secondary vigilance (monitoring) task tends to decrease as the number of group members increases. Moreover, he presented insights into the prevention of social loafing and demonstrated that feedback on each individual member’s performance and on the group’s performance of both primary and secondary tasks is effective for restraining social loafing.

One of the main causes of the collision between the Japanese Aegis-equipped destroyer and the fishing boat is as follows. According to the announcement of the committee that investigated the collision, although twenty-four crew members were working on the destroyer at the time of the collision, nobody properly noticed the fishing boat, and thus, no one could take proper countermeasures against the collision. Every crew member must have optimistically reckoned that someone else would notice an abnormal situation. The crew members may have failed to take into account the poor visibility due to the cold weather conditions when estimating the likelihood that some crew member would detect an anomaly or an obstacle. This corresponds to the social loafing phenomenon [22,23,24], which stems from confirmation or optimistic bias. In other words, social loafing caused a lack of motivation to detect the likelihood of a collision. The analysis of this collision is summarized in Figure 6.

Figure 6. Summary of the analysis of the collision between the Japanese Aegis-equipped destroyer “Atago” and a fishing boat.

3.5. Three Mile Island Nuclear Power Plant Disaster

The main cause of the nuclear meltdown that happened at the Three Mile Island nuclear power plant in March 1979 is that the operators forgot to open the valve of an auxiliary (secondary) water feeding pump after maintenance and did not notice this error for some time [25,26,27]. This incident is possibly related to confirmation bias (see Figure 2), which made the operators believe that such a subtle error would not be the cause of any critical disaster. Confirmation bias makes us seek information that confirms our expectations (in this case, the expectation that a subtle error would not be the cause of a critical disaster), even though information that contradicts the expectation is available. Although the ECCS (Emergency Core Cooling System) operated automatically, the operators did not notice the malfunction of the nuclear reactor because of the availability heuristic (i.e., the halo effect), which biases our judgments by transferring our feelings or beliefs about one attribute of something to other unrelated attributes. For example, a good-looking person is likely to be perceived as a competent salesperson, even though there is no logical reason to believe that a person’s appearance is related to his/her sales achievements. The operators’ thinking process could have been biased, such that their belief about the normally operating plant was transferred even to the malfunctioning plant. Due to confirmation and availability biases, the plant operators could not identify the root cause of the meltdown. They must also have optimistically believed that such a minor lapse as not opening the valve of the auxiliary water feeding pump would not lead to a crucial disaster (optimistic bias stems from overconfidence).

On the basis of past reports on malfunctions of the pilot-operated relief valve, it could have been expected that the valve might fail to close in emergency situations. The confirmation bias that led the operators to believe that such trouble would not occur at the Three Mile Island nuclear power plant also prevented them from noticing and identifying the malfunction of the pilot-operated relief valve.

It took a long time to identify the cause of the rapid and abrupt increase in the reactor core temperature, which led to the meltdown of the reactor core. This was probably because of the framing effect (bias), which generally keeps operators from analyzing and identifying the cause of a disaster from multiple perspectives, especially in emergency situations. The operators adhered to the narrow frame that they usually used and could not apply another frame to solve the problem (identification of the cause).

Figure 7. Summary of the analysis of Three Mile Island nuclear power plant disaster.

The design of the central control room must also have been a main cause of the criticality of the disaster. The display system of the central control room was not designed in such a way that the states of the nuclear power plant components, such as the pilot-operated relief valve, the emergency feedwater pump and the drain tank, could be easily monitored. As a result, the cause of the rapid and abrupt increase in the reactor core temperature could not be identified until the meltdown of the reactor core occurred. The optimistic bias, the fallacy of plan and the fallacy of control (see Figure 2 for the details) were the main causes of the disaster. The designers of the control room and the plant operators must have optimistically predicted that any situation could be noticed and recognized with the system as it was. Therefore, owing to the fallacies of plan and control, they did not consider the worst situations in designing the central control room. The analysis of this disaster is summarized in Figure 7.

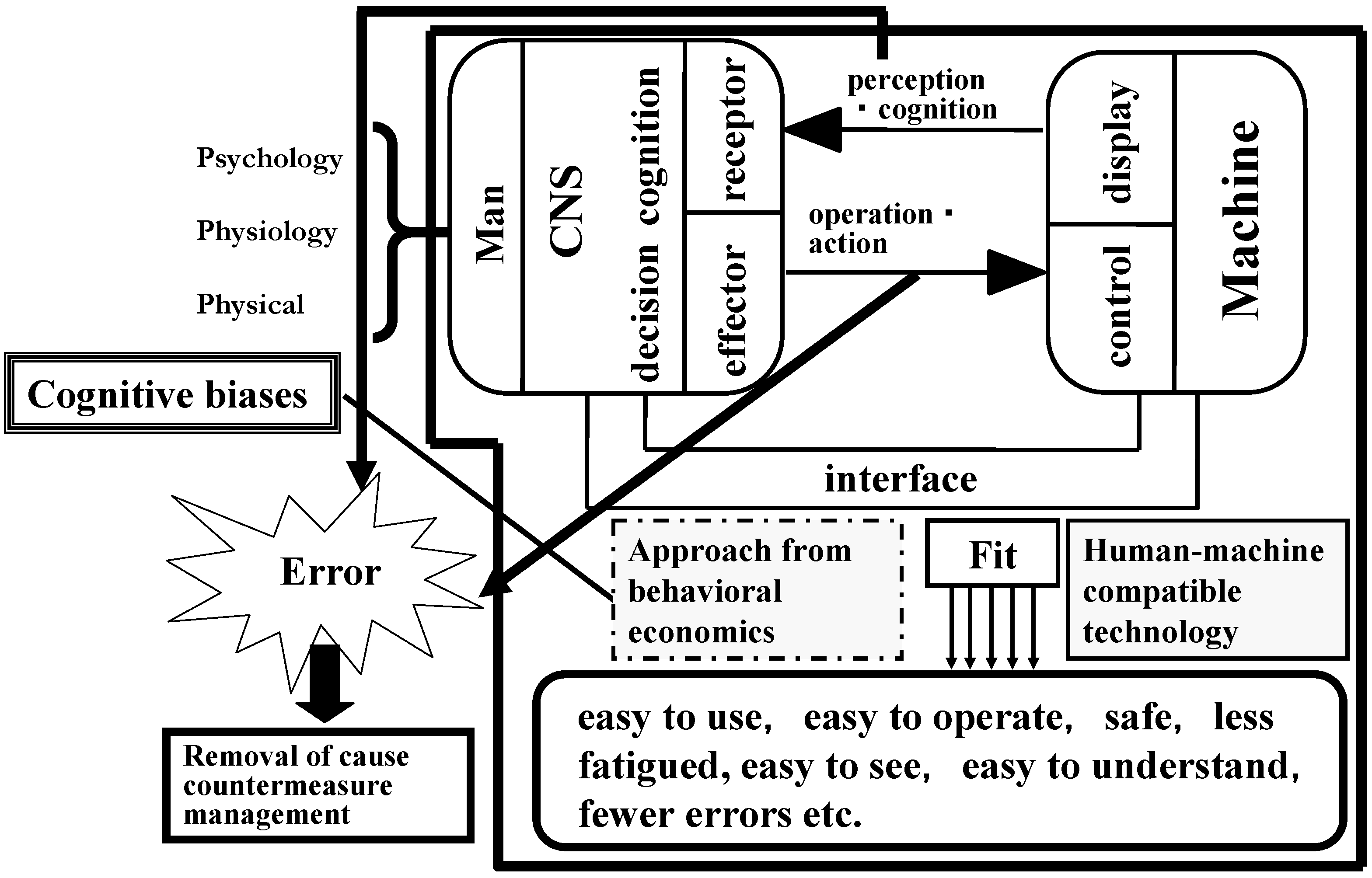

3.6. General Discussion

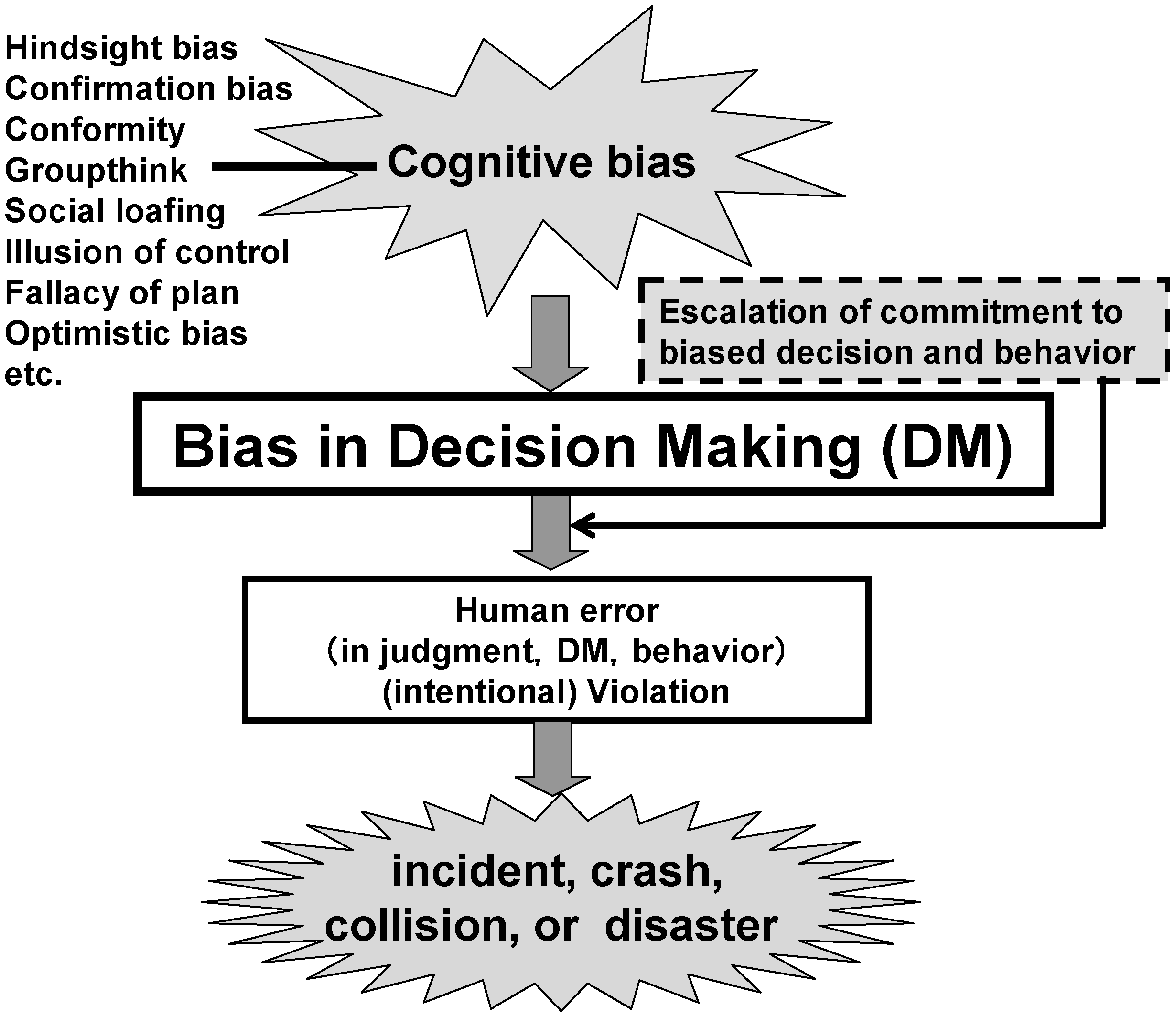

The framework of the traditional analysis of unexpected incidents or events does not focus on how the distortion of decision making due to cognitive biases is related to critical human errors and, eventually, to crashes, collisions or disasters. Although Reason [10] describes judgmental heuristics and biases, irrationality and cognitive “backlash,” he has not demonstrated systematically how such biases and cognitive backlash are related to distorted decision making, which eventually triggers crashes, collisions or disasters. Therefore, we presented a systematic approach based on the human cognitive characteristic that we frequently tend to behave irrationally and are, in most cases, unaware of how and to what extent these irrational behaviors influence the decisions we make (Figure 2). In addition, we assumed that such irrational behaviors definitely distort our decisions and, in the worst cases, lead to crashes, collisions or disasters, as shown in Figure 1.

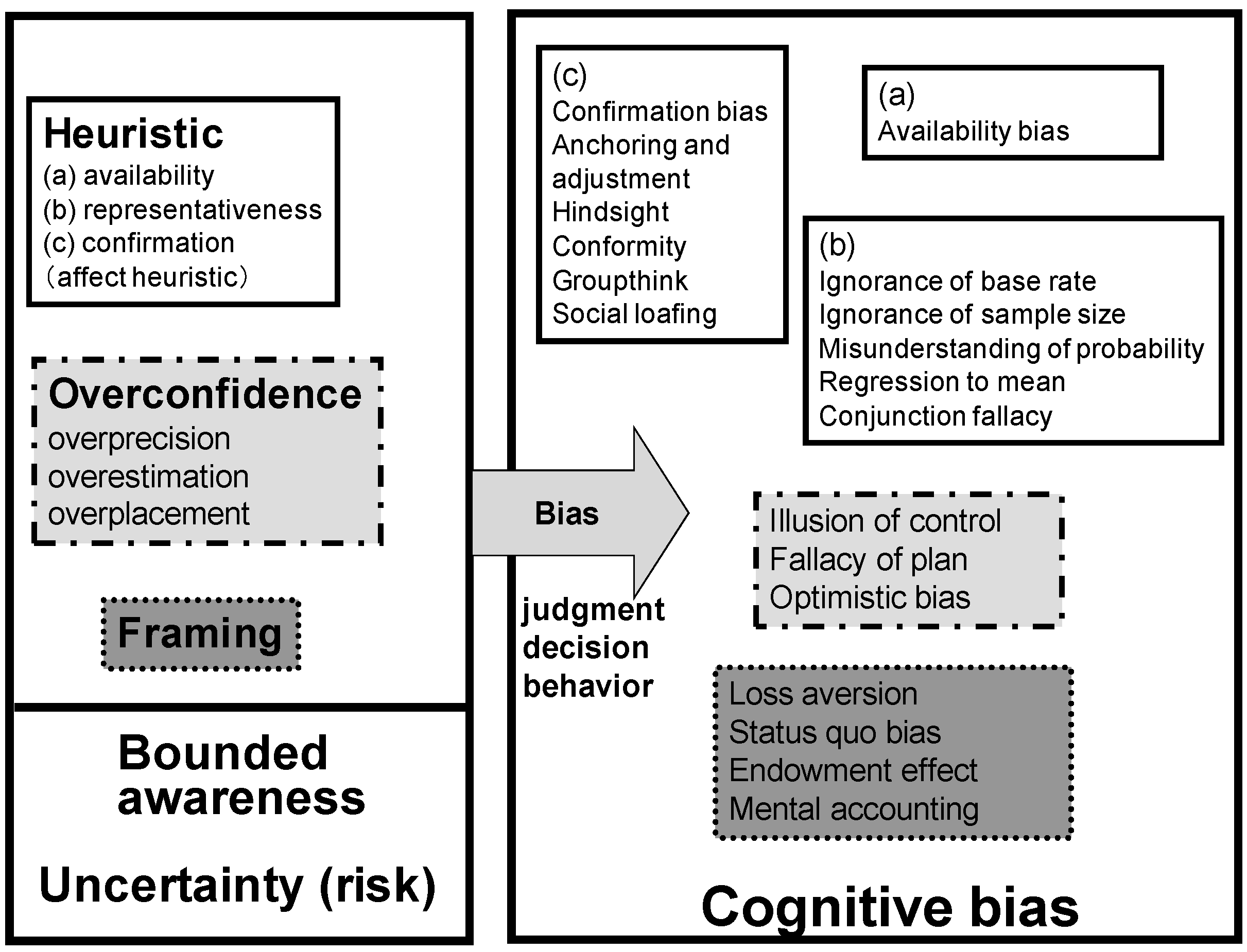

From the analyses of the five case studies presented in Section 3.1, Section 3.2, Section 3.3, Section 3.4 and Section 3.5, we note that three major types of cognitive biases (Figure 2) manifest themselves as causal factors of crashes, collisions or disasters. In the discussion of the cases presented in Section 3.2 and Section 3.5, framing is shown to contribute to the occurrence of the crash or disaster. Overconfidence and, in particular, optimistic bias lead to the errors or mistakes we discussed in the cases presented in Section 3.1 and Section 3.5. Heuristics, that is, groupthink and social loafing, are found to be causal factors in the cases presented in Section 3.3 and Section 3.4, respectively. This suggests that we must identify where and under what conditions these heuristic-, overconfidence- and framing-based cognitive biases are likely to come into play within specific man-machine interactions. In this way, we can introduce appropriate safety interventions to prevent these biases from becoming causal factors of crashes, collisions or disasters.
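The correspondence between case studies and bias types described in this paragraph can be written down as a small lookup table. The sketch below is only an illustration of that summary; the dictionary labels and the helper `cases_by_category` are hypothetical names, and the table records only the assignments stated in this paragraph, not every bias discussed in the individual sections.

```python
# Bias types named in this paragraph for each case study (Sections 3.1-3.5).
BIAS_TYPES_BY_CASE = {
    "3.1 IH cooker fire": {"overconfidence"},
    "3.2 KLM Flight 4805 crash": {"framing"},
    "3.3 Challenger disaster": {"heuristics"},
    "3.4 Atago collision": {"heuristics"},
    "3.5 Three Mile Island": {"framing", "overconfidence"},
}


def cases_by_category(mapping: dict[str, set[str]]) -> dict[str, list[str]]:
    """Invert the table: for each bias type, list the cases that involve it."""
    inverted: dict[str, list[str]] = {}
    for case, bias_types in mapping.items():
        for bias_type in bias_types:
            inverted.setdefault(bias_type, []).append(case)
    # Sort the case lists so that the result does not depend on set ordering.
    return {bias_type: sorted(cases) for bias_type, cases in inverted.items()}


for bias_type, cases in sorted(cases_by_category(BIAS_TYPES_BY_CASE).items()):
    print(f"{bias_type}: {', '.join(cases)}")
```

Grouping the cases by bias type in this way makes it easier to see which kind of safety intervention would have to be considered for which class of man-machine interaction.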

Through our analysis of the five case studies, we have demonstrated how cognitive biases can be the main cause of incidents, crashes, collisions or disasters. The observations emphasize the significance and criticality of systematically building the problem of cognitive biases into the framework of a man-machine system, as shown in Figure 8. Moreover, our findings suggest that human errors can be addressed, and incidents, crashes, collisions or disasters prevented, more effectively by considering cognitive biases.

If designers or experts of man-machine (man-society, man-economy or man-politics) systems do not understand the fallibility of humans, the limitations of human cognitive ability and the effect of emotion on behavior, the design of the systems could pose incompatibility issues, which, in turn, will induce crucial errors or serious failures. To avoid such incompatibility, we must focus on when, why and how cognitive biases overpower our way of thinking, distort it and lead us to make irrational decisions, from the perspective of behavioral economics [1,6,7,8], as well as the traditional ergonomics and human factors approach. The design of man-machine (society, economy or politics) compatibility (compatible technology) must be the key perspective for a preventive approach to human error-driven incidents, crashes, collisions or disasters.

As mentioned in Section 3.3, it is reasonable to think that a cognitive bias (in this case, groupthink) triggered the NASA Challenger disaster. On the other hand, referring to the concept of normal accidents proposed by Perrow [28], Gladwell [29] pointed out that there were many other shuttle components that NASA deemed as risky as the O-ring. Given this view, the case also seems to be the result of an optimistic bias that stems from overconfidence, that is, one thinks optimistically that a malfunction seldom occurs in spite of recognizing defects in numerous components, such as the O-ring. Gladwell [29] warned that a disaster such as the NASA Challenger disaster is unavoidable as long as we continue developing large-scale, high-risk systems for the benefit of humans. Such a situation corresponds to a vicious circle (repeated occurrences of similar critical incidents), as pointed out by Dekker [15].

Cognitive biases leave such situations unresolved and, thus, hinder the progress of safety management and technology. A promising way to address this problem might be to take into consideration and steadily eliminate the cognitive biases that unexpectedly and unconsciously interfere with the functioning of large-scale systems, so that man-machine compatible systems can be established and maintained. Introducing appropriate safety interventions that keep cognitive biases from manifesting themselves as causal factors of incidents, crashes, collisions or disasters would make it possible to address cognitive-bias-related safety problems appropriately and to develop a man-machine compatible system.

Figure 8. Proposal for a preventive approach to incidents, crashes, collisions or disasters and human error by human-machine compatible technology that takes cognitive biases into account.