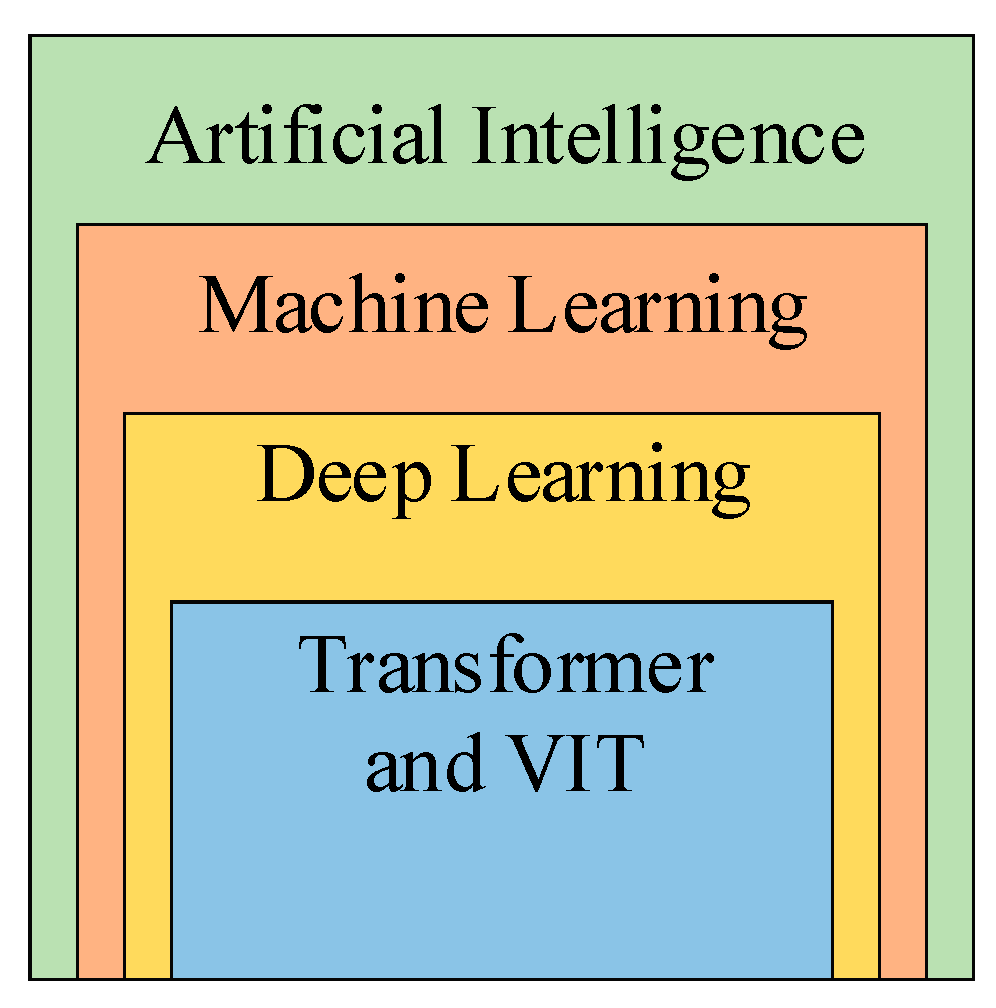

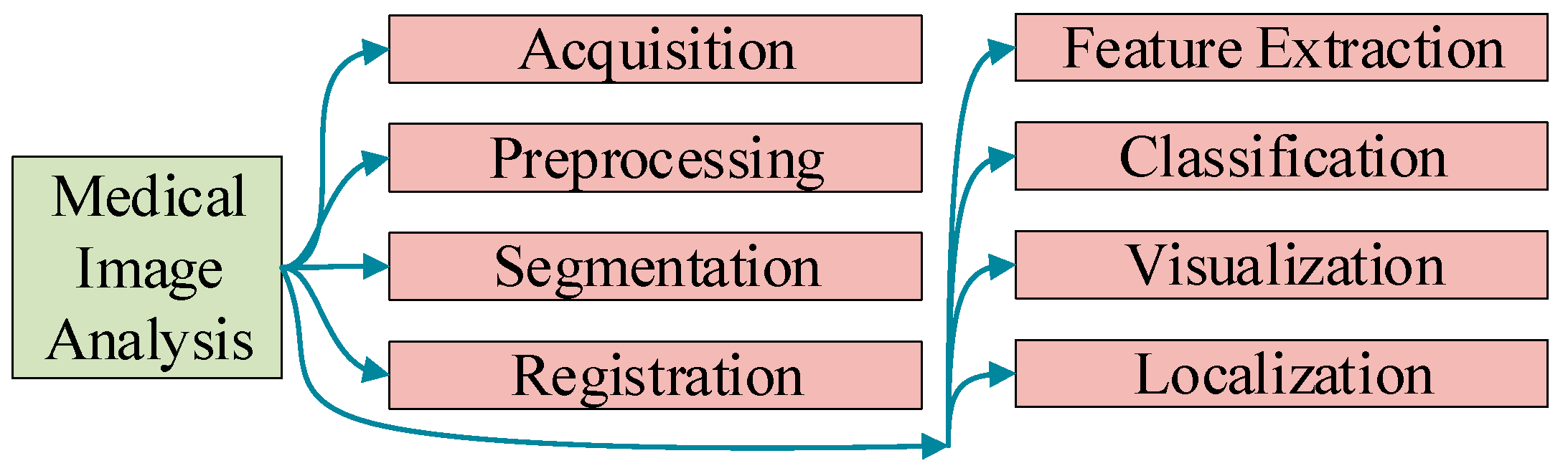

Deep Learning and Vision Transformer for Medical Image Analysis

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Wang, J.; Gorriz, J.M.; Wang, S. Deep Learning and Vision Transformer for Medical Image Analysis. J. Imaging 2023, 9, 147. https://doi.org/10.3390/jimaging9070147

Zhang Y, Wang J, Gorriz JM, Wang S. Deep Learning and Vision Transformer for Medical Image Analysis. Journal of Imaging. 2023; 9(7):147. https://doi.org/10.3390/jimaging9070147

Chicago/Turabian Style: Zhang, Yudong, Jiaji Wang, Juan Manuel Gorriz, and Shuihua Wang. 2023. "Deep Learning and Vision Transformer for Medical Image Analysis." Journal of Imaging 9, no. 7: 147. https://doi.org/10.3390/jimaging9070147

APA Style: Zhang, Y., Wang, J., Gorriz, J. M., & Wang, S. (2023). Deep Learning and Vision Transformer for Medical Image Analysis. Journal of Imaging, 9(7), 147. https://doi.org/10.3390/jimaging9070147