Augmenting Performance: A Systematic Review of Optical See-Through Head-Mounted Displays in Surgery

Abstract

1. Introduction

1.1. Medical Augmented Reality

2. Background

2.1. Handheld and Spatial Displays

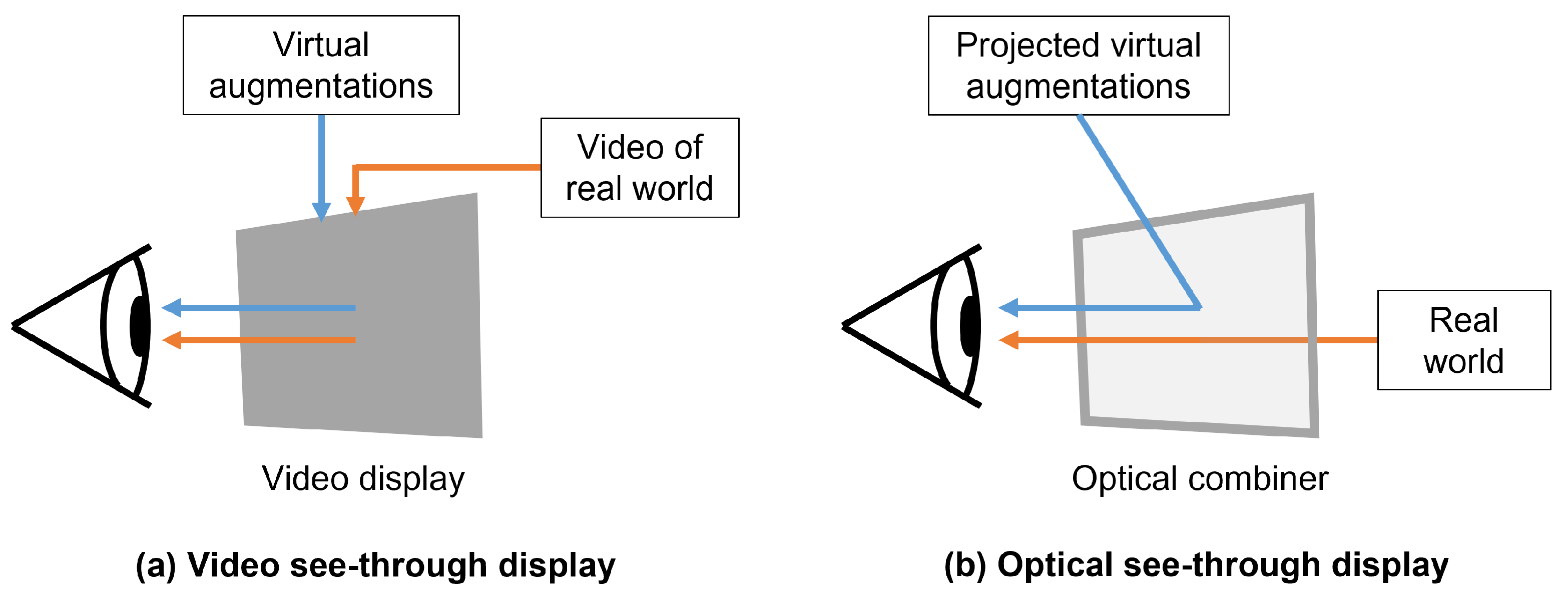

2.2. See-Through Head-Mounted Displays

Optical See-Through Head-Mounted Displays

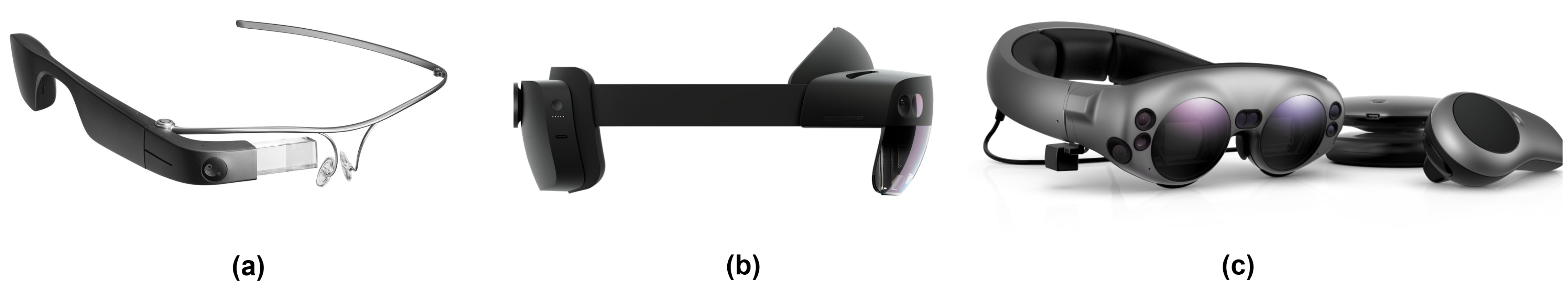

2.3. Overview of Commercially Available Optical See-Through Head-Mounted Displays

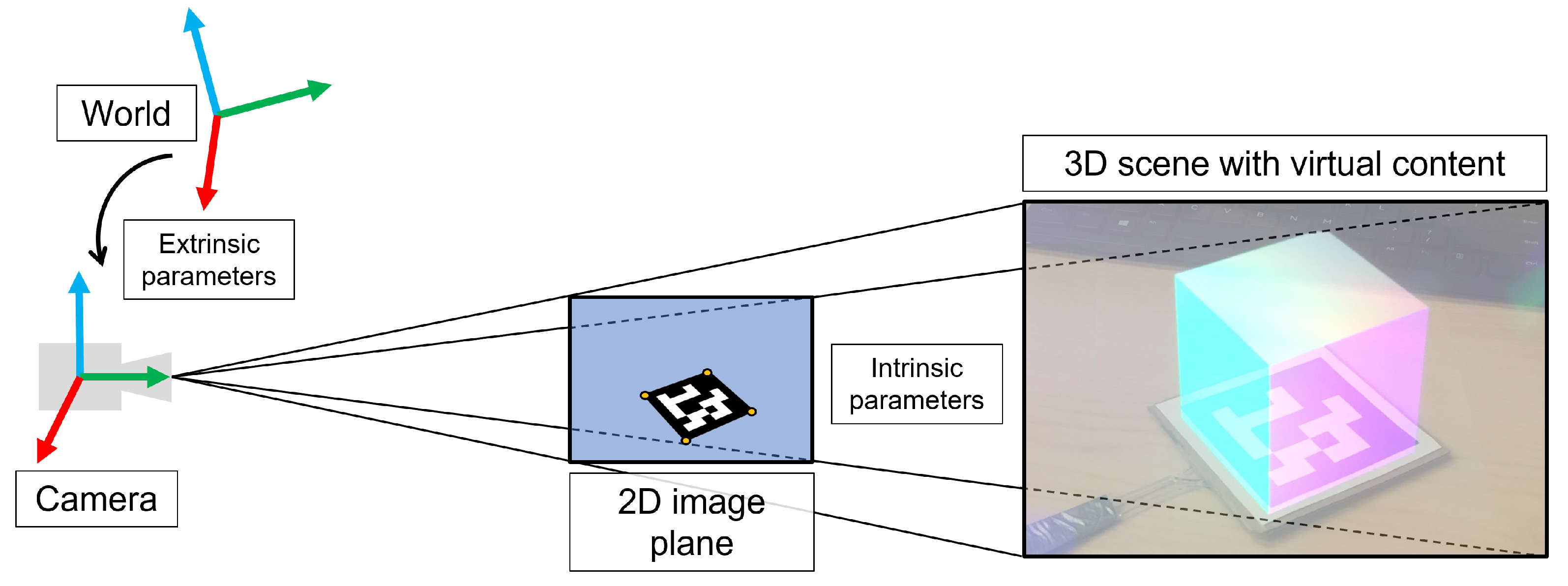

Virtual Model Alignment

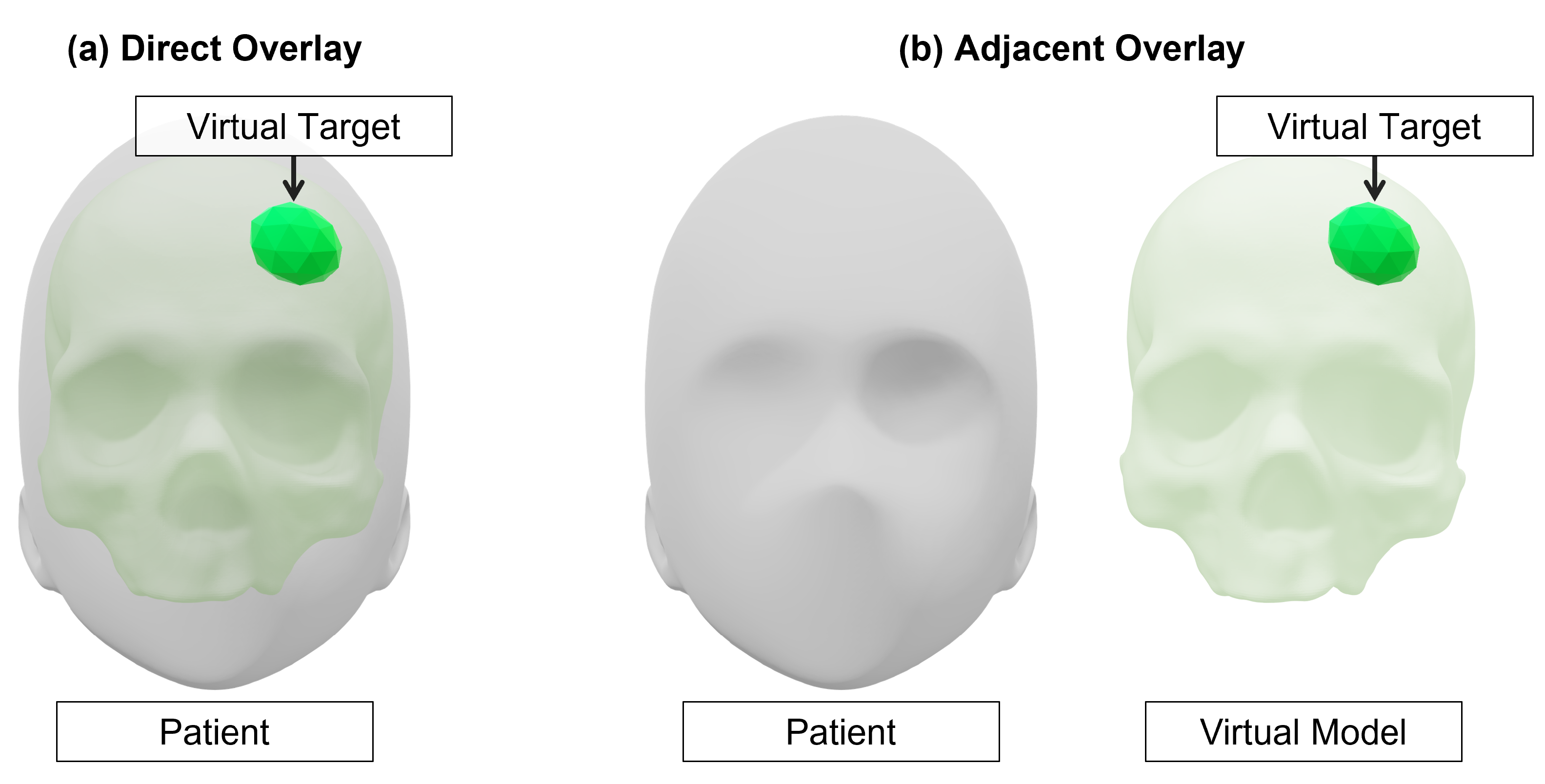

2.4. Augmented Reality Perception

2.4.1. Depth Perception and Depth Cues

2.4.2. Interpupillary Distance

2.4.3. Vergence-Accommodation Conflict

3. Methods

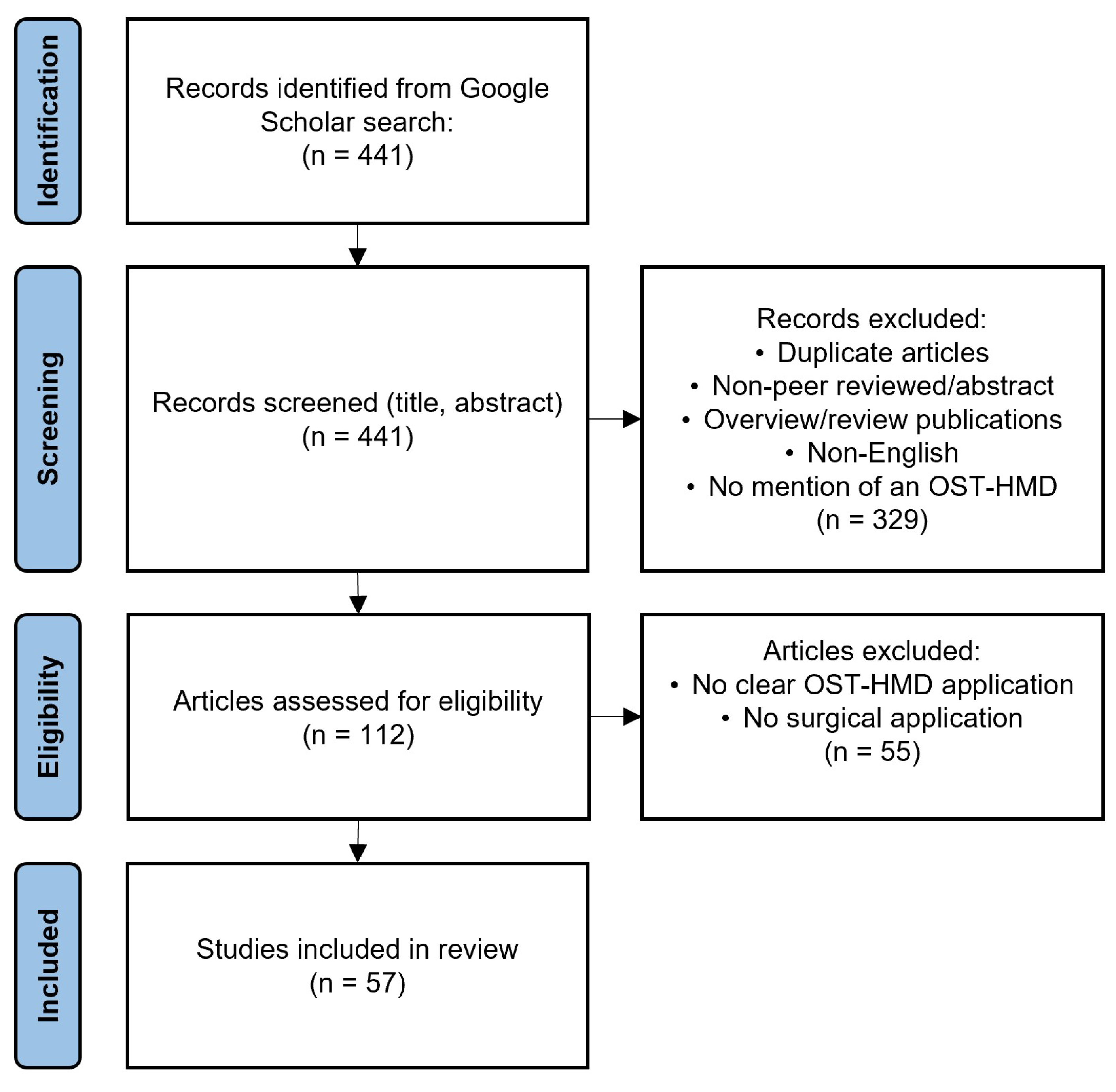

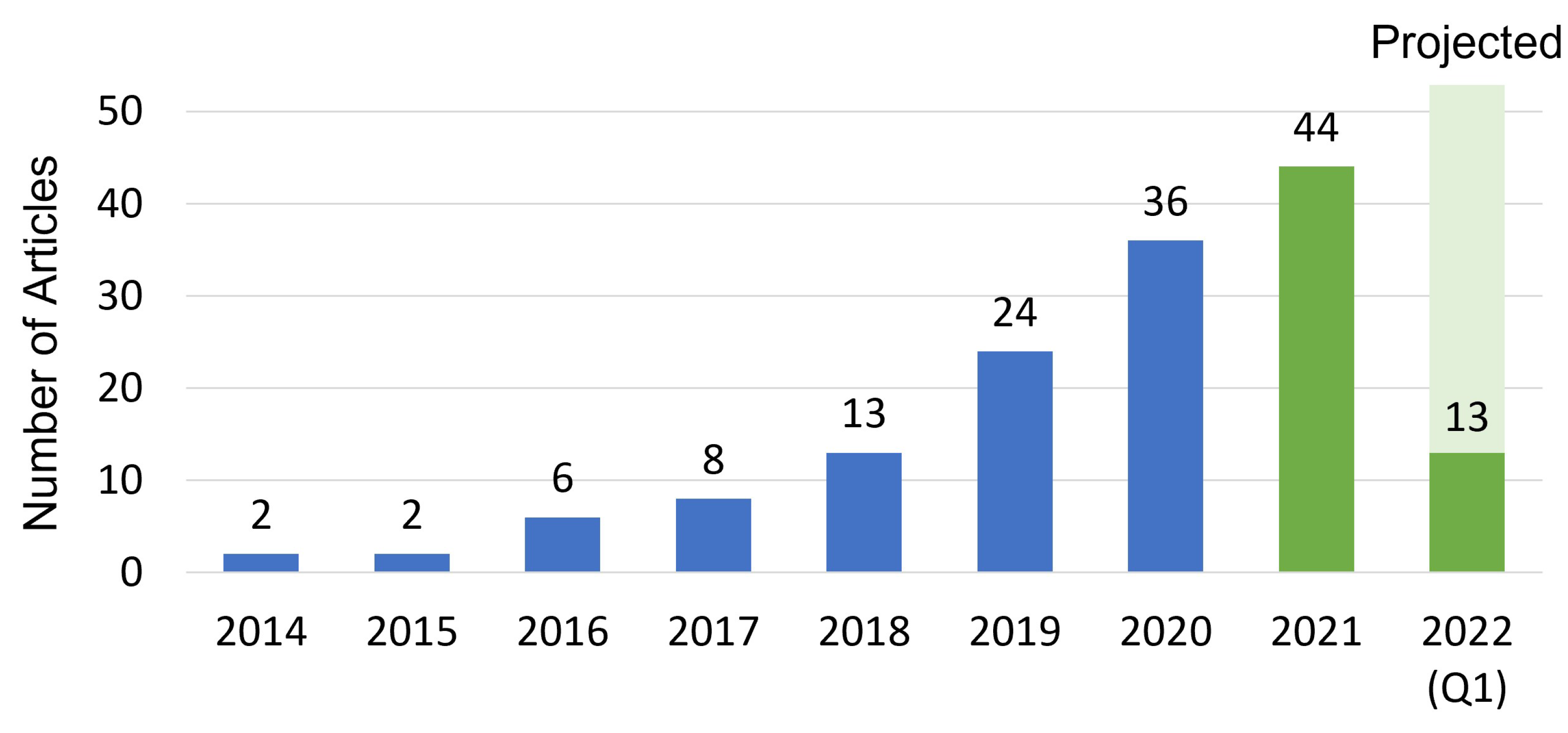

3.1. Literature Search Strategy

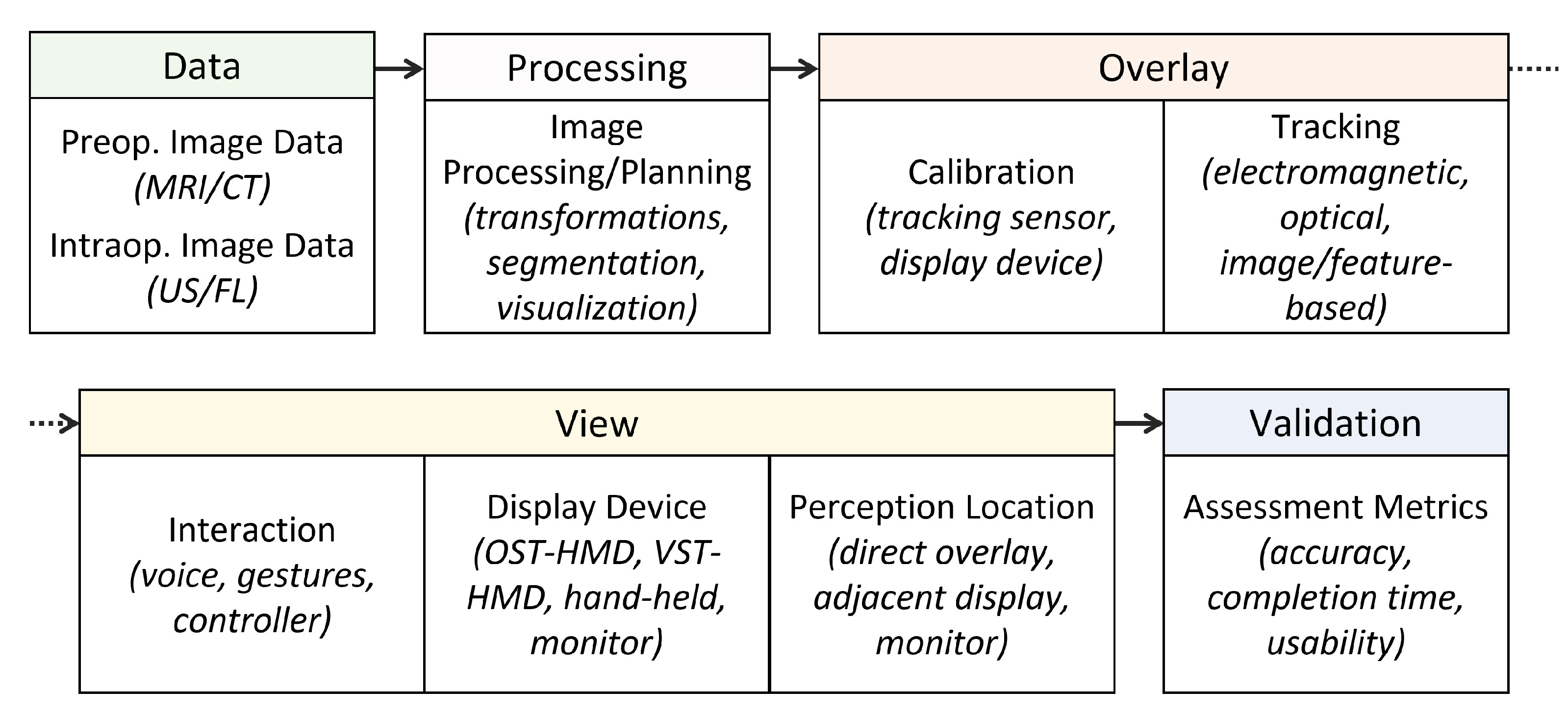

3.2. Review Strategy and Taxonomy

4. Results and Discussion

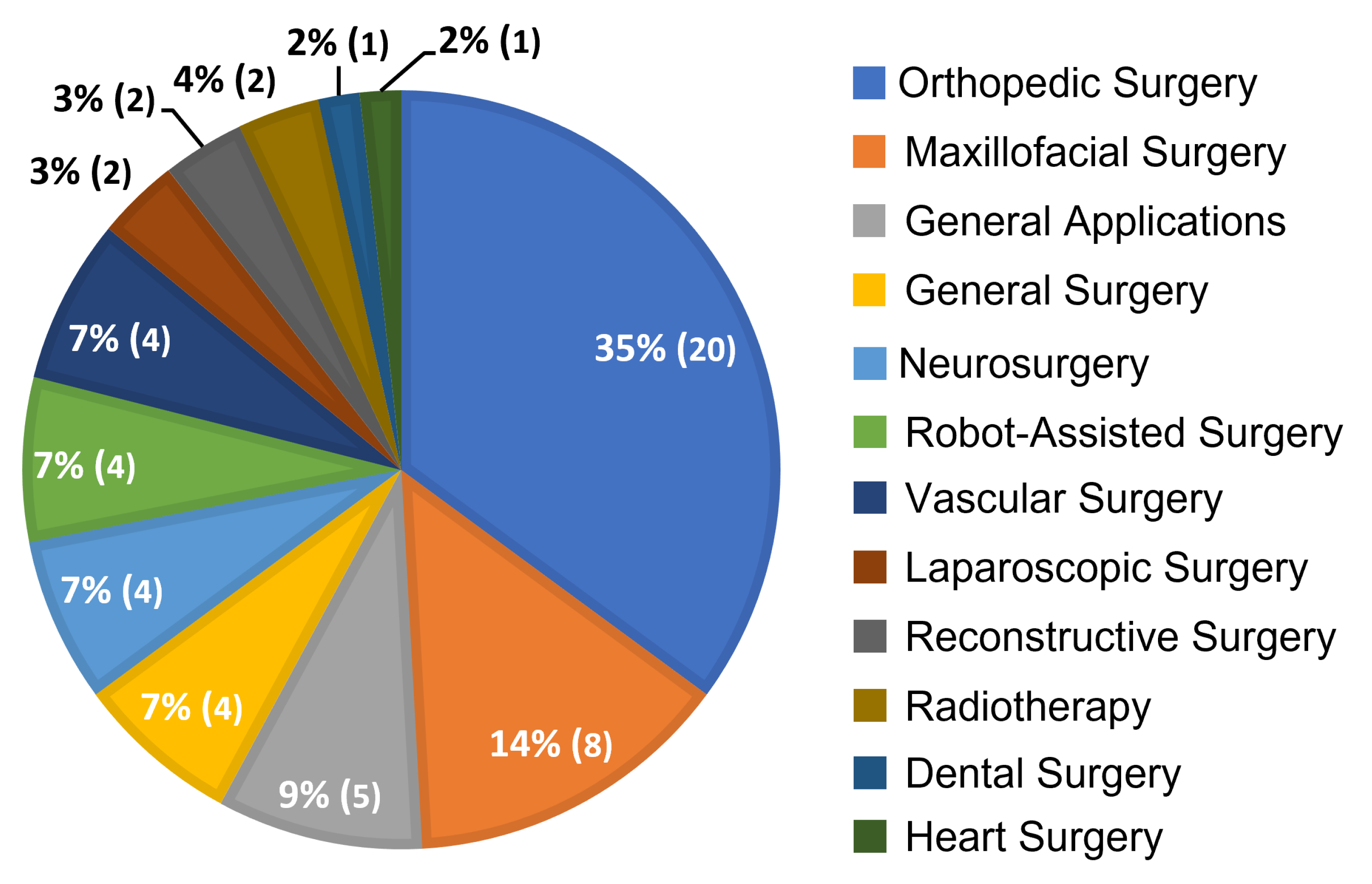

4.1. Distribution of Relevant Articles by Surgical Application

4.2. Data

4.2.1. Preoperative Image Data

4.2.2. Intraoperative Image Data

4.3. Processing

Image Data Processing

4.4. Overlay

Tracking Strategies

4.5. View

4.5.1. Interaction Paradigms

4.5.2. Display Devices

4.5.3. Perception Location

4.6. Validation

4.6.1. System Evaluation

4.6.2. System Accuracy

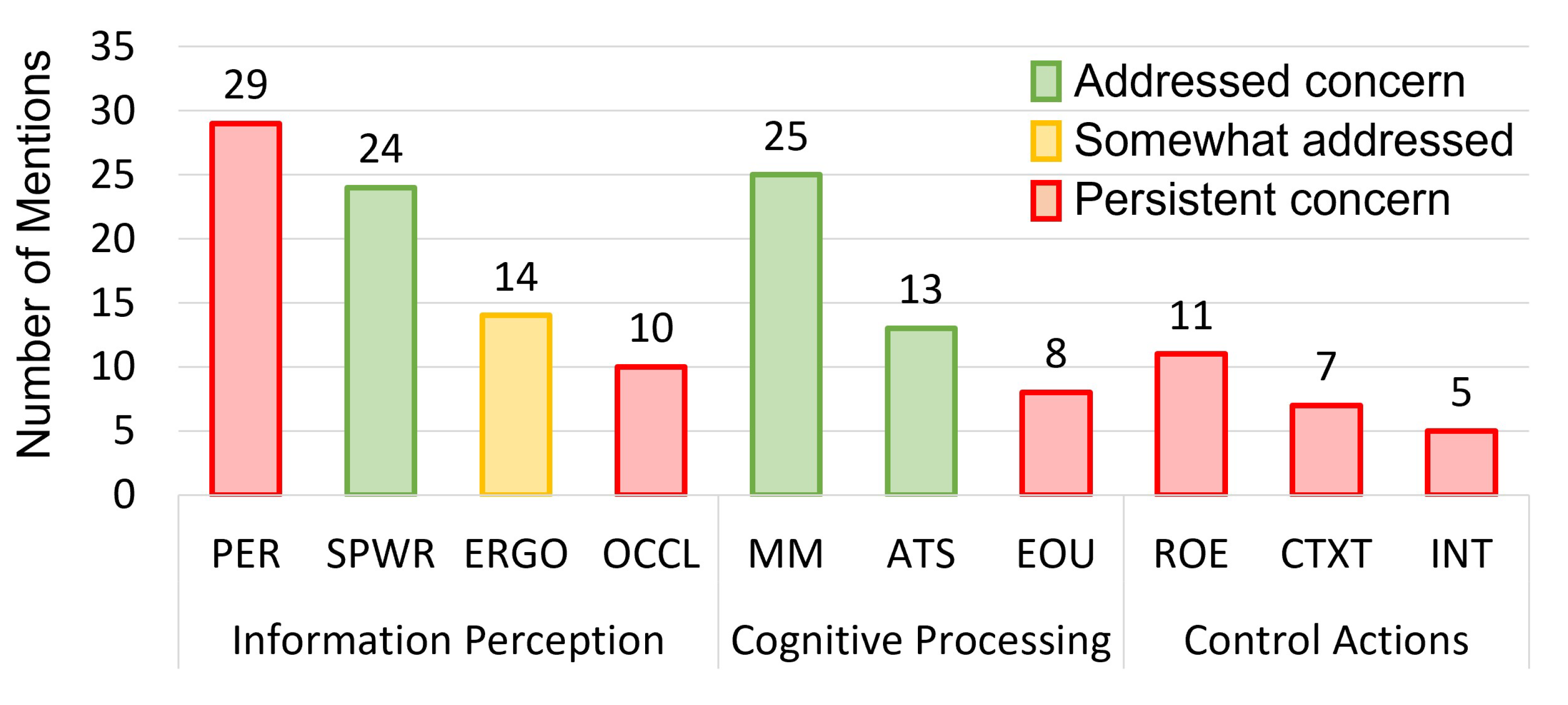

4.6.3. Human Factors, System Usability, and Technical Challenges

4.7. Recommendations for Future Work

4.7.1. Marker-Less Tracking for Surgical Guidance

4.7.2. Context-Relevant Augmented Reality for Intelligent Guidance

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kishino, F.; Milgram, P. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

- Kelly, P.J.; Alker, G.J.; Goerss, S. Computer-assisted stereotactic microsurgery for the treatment of intracranial neoplasms. Neurosurgery 1982, 10, 324–331.

- Peters, T.M.; Linte, C.A.; Yaniv, Z.; Williams, J. Mixed and Augmented Reality in Medicine; CRC Press: Boca Raton, FL, USA, 2018.

- Cleary, K.; Peters, T.M. Image-guided interventions: Technology review and clinical applications. Annu. Rev. Biomed. Eng. 2010, 12, 119–142.

- Eckert, M.; Volmerg, J.S.; Friedrich, C.M. Augmented reality in medicine: Systematic and bibliographic review. JMIR mHealth uHealth 2019, 7, e10967.

- Birlo, M.; Edwards, P.J.E.; Clarkson, M.; Stoyanov, D. Utility of Optical See-Through Head Mounted Displays in Augmented Reality-Assisted Surgery: A systematic review. Med. Image Anal. 2022, 77, 102361.

- Bernhardt, S.; Nicolau, S.A.; Soler, L.; Doignon, C. The status of augmented reality in laparoscopic surgery as of 2016. Med. Image Anal. 2017, 37, 66–90.

- Sielhorst, T.; Feuerstein, M.; Navab, N. Advanced medical displays: A literature review of augmented reality. J. Disp. Technol. 2008, 4, 451–467.

- Kramida, G. Resolving the Vergence-Accommodation Conflict in Head-Mounted Displays. IEEE Trans. Vis. Comput. Graph. 2015, 22, 1912–1931.

- Condino, S.; Carbone, M.; Piazza, R.; Ferrari, M.; Ferrari, V. Perceptual Limits of Optical See-Through Visors for Augmented Reality Guidance of Manual Tasks. IEEE Trans. Biomed. Eng. 2020, 67, 411–419.

- Navab, N.; Traub, J.; Sielhorst, T.; Feuerstein, M.; Bichlmeier, C. Action- and workflow-driven augmented reality for computer-aided medical procedures. IEEE Comput. Graph. Appl. 2007, 27, 10–14.

- Katić, D.; Spengler, P.; Bodenstedt, S.; Castrillon-Oberndorfer, G.; Seeberger, R.; Hoffmann, J.; Dillmann, R.; Speidel, S. A system for context-aware intraoperative augmented reality in dental implant surgery. Int. J. Comput. Assist. Radiol. Surg. 2015, 10, 101–108.

- Qian, L.; Azimi, E.; Kazanzides, P.; Navab, N. Comprehensive Tracker Based Display Calibration for Holographic Optical See-Through Head-Mounted Display. arXiv 2017, arXiv:1703.05834.

- Qian, L.; Song, T.; Unberath, M.; Kazanzides, P. AR-Loupe: Magnified Augmented Reality by Combining an Optical See-Through Head-Mounted Display and a Loupe. IEEE Trans. Vis. Comput. Graph. 2022, 28, 2550–2562.

- Van Krevelen, D.; Poelman, R. A survey of augmented reality technologies, applications and limitations. Int. J. Virtual Real. 2010, 9, 1–20.

- Rolland, J.P.; Fuchs, H. Optical Versus Video See-Through Head-Mounted Displays in Medical Visualization. Presence 2000, 9, 287–309.

- Gervautz, M.; Schmalstieg, D. Anywhere Interfaces Using Handheld Augmented Reality. Computer 2012, 45, 26–31.

- Bimber, O.; Raskar, R. Spatial Augmented Reality: Merging Real and Virtual Worlds; Peters, A.K., Ed.; CRC Press: Boca Raton, FL, USA, 2005.

- Hong, J.; Kim, Y.; Choi, H.J.; Hahn, J.; Park, J.H.; Kim, H.; Min, S.W.; Chen, N.; Lee, B. Three-dimensional display technologies of recent interest: Principles, status, and issues [Invited]. Appl. Opt. 2011, 50, H87–H115.

- Cutolo, F.; Fontana, U.; Ferrari, V. Perspective preserving solution for quasi-orthoscopic video see-through HMDs. Technologies 2018, 6, 9.

- Cattari, N.; Cutolo, F.; D’Amato, R.; Fontana, U.; Ferrari, V. Toed-in vs parallel displays in video see-through head-mounted displays for close-up view. IEEE Access 2019, 7, 159698–159711.

- Grubert, J.; Itoh, Y.; Moser, K.; Swan, J.E. A survey of calibration methods for optical see-through head-mounted displays. IEEE Trans. Vis. Comput. Graph. 2017, 24, 2649–2662.

- Rolland, J.P.; Gibson, W.; Ariely, D. Towards Quantifying Depth and Size Perception in Virtual Environments. Presence Teleoperators Virtual Environ. 1995, 4, 24–49.

- Cakmakci, O.; Rolland, J. Head-Worn Displays: A Review. J. Disp. Technol. 2006, 2, 199–216.

- Guo, N.; Wang, T.; Yang, B.; Hu, L.; Liu, H.; Wang, Y. An online calibration method for Microsoft HoloLens. IEEE Access 2019, 7, 101795–101803.

- Sutherland, I.E. A head-mounted three dimensional display. In Proceedings of the 9–11 December 1968, Fall Joint Computer Conference, Part I, San Francisco, CA, USA, 9–11 December 1968; pp. 757–764.

- Benton, S. Selected Papers on Three-Dimensional Displays; SPIE Optical Engineering Press: Bellingham, WA, USA, 2001; Volume 4, pp. 446–458.

- Holliman, N.S.; Dodgson, N.A.; Favalora, G.E.; Pockett, L. Three-Dimensional Displays: A Review and Applications Analysis. IEEE Trans. Broadcast. 2011, 57, 362–371.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer Science & Business Media: Berlin, Germany, 2010.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.; Marín-Jiménez, M. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit. 2014, 47, 2280–2292.

- Prince, S.J. Computer Vision: Models, Learning, and Inference; Cambridge University Press: Cambridge, UK, 2012.

- Doughty, M.; Ghugre, N.R. Head-Mounted Display-Based Augmented Reality for Image-Guided Media Delivery to the Heart: A Preliminary Investigation of Perceptual Accuracy. J. Imaging 2022, 8, 33.

- Viglialoro, R.M.; Condino, S.; Turini, G.; Carbone, M.; Ferrari, V.; Gesi, M. Review of the augmented reality systems for shoulder rehabilitation. Information 2019, 10, 154.

- Singh, G.; Ellis, S.R.; Swan, J.E. The Effect of Focal Distance, Age, and Brightness on Near-Field Augmented Reality Depth Matching. IEEE Trans. Vis. Comput. Graph. 2020, 26, 1385–1398.

- Hua, H. Enabling Focus Cues in Head-Mounted Displays. Proc. IEEE 2017, 105, 805–824.

- Koulieris, G.A.; Bui, B.; Banks, M.S.; Drettakis, G. Accommodation and comfort in head-mounted displays. ACM Trans. Graph. 2017, 36, 1–11.

- Cutting, J.E.; Vishton, P.M. Chapter 3—Perceiving Layout and Knowing Distances: The Integration, Relative Potency, and Contextual Use of Different Information about Depth. In Perception of Space and Motion; Epstein, W., Rogers, S., Eds.; Handbook of Perception and Cognition; Academic Press: Cambridge, MA, USA, 1995; pp. 69–117.

- Teittinen, M. Depth Cues in the Human Visual System. The Encyclopedia of Virtual Environments. 1993, Volume 1. Available online: http://www.hitl.washington.edu/projects/knowledge_base/virtual-worlds/EVE/III.A.1.c.DepthCues.html (accessed on 23 March 2022).

- Lee, S.; Hua, H. Effects of Configuration of Optical Combiner on Near-Field Depth Perception in Optical See-Through Head-Mounted Displays. IEEE Trans. Vis. Comput. Graph. 2016, 22, 1432–1441.

- Patterson, P.D.; Earl, R. Human Factors of Stereoscopic 3D Displays; Springer: Berlin/Heidelberg, Germany, 2015.

- Kress, B.C.; Cummings, W.J. 11-1: Invited Paper: Towards the Ultimate Mixed Reality Experience: HoloLens Display Architecture Choices. SID Symp. Dig. Tech. Pap. 2017, 48, 127–131.

- Ungureanu, D.; Bogo, F.; Galliani, S.; Sama, P.; Duan, X.; Meekhof, C.; Stühmer, J.; Cashman, T.J.; Tekin, B.; Schönberger, J.L.; et al. HoloLens 2 Research Mode as a Tool for Computer Vision Research. arXiv 2020, arXiv:2008.11239.

- Kersten-Oertel, M.; Jannin, P.; Collins, D.L. DVV: A Taxonomy for Mixed Reality Visualization in Image Guided Surgery. IEEE Trans. Vis. Comput. Graph. 2012, 18, 332–352.

- Ackermann, J.; Liebmann, F.; Hoch, A.; Snedeker, J.G.; Farshad, M.; Rahm, S.; Zingg, P.O.; Fürnstahl, P. Augmented Reality Based Surgical Navigation of Complex Pelvic Osteotomies—A Feasibility Study on Cadavers. Appl. Sci. 2021, 11, 1228.

- Dennler, C.; Bauer, D.E.; Scheibler, A.G.; Spirig, J.; Götschi, T.; Fürnstahl, P.; Farshad, M. Augmented reality in the operating room: A clinical feasibility study. BMC Musculoskelet. Disord. 2021, 22, 451.

- Farshad, M.; Fürnstahl, P.; Spirig, J.M. First in man in-situ augmented reality pedicle screw navigation. N. Am. Spine Soc. J. (NASSJ) 2021, 6, 100065.

- Gu, W.; Shah, K.; Knopf, J.; Navab, N.; Unberath, M. Feasibility of image-based augmented reality guidance of total shoulder arthroplasty using Microsoft HoloLens 1. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2021, 9, 261–270.

- Teatini, A.; Kumar, R.P.; Elle, O.J.; Wiig, O. Mixed reality as a novel tool for diagnostic and surgical navigation in orthopaedics. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 407–414.

- Spirig, J.M.; Roner, S.; Liebmann, F.; Fürnstahl, P.; Farshad, M. Augmented reality-navigated pedicle screw placement: A cadaveric pilot study. Eur. Spine J. 2021, 30, 3731–3737.

- Schlueter-Brust, K.; Henckel, J.; Katinakis, F.; Buken, C.; Opt-Eynde, J.; Pofahl, T.; Rodriguez y Baena, F.; Tatti, F. Augmented-Reality-Assisted K-Wire Placement for Glenoid Component Positioning in Reversed Shoulder Arthroplasty: A Proof-of-Concept Study. J. Pers. Med. 2021, 11, 777.

- Tang, Z.N.; Hu, L.H.; Soh, H.Y.; Yu, Y.; Zhang, W.B.; Peng, X. Accuracy of Mixed Reality Combined With Surgical Navigation Assisted Oral and Maxillofacial Tumor Resection. Front. Oncol. 2021, 11, 715484.

- Yang, R.; Li, C.; Tu, P.; Ahmed, A.; Ji, T.; Chen, X. Development and Application of Digital Maxillofacial Surgery System Based on Mixed Reality Technology. Front. Surg. 2022, 8, 719985.

- Gao, Y.; Liu, K.; Lin, L.; Wang, X.; Xie, L. Use of augmented reality navigation to optimise the surgical management of craniofacial fibrous dysplasia. Br. J. Oral Maxillofac. Surg. 2022, 60, 162–167.

- Iqbal, H.; Tatti, F.; y Baena, F.R. Augmented reality in robotic assisted orthopaedic surgery: A pilot study. J. Biomed. Informat. 2021, 120, 103841.

- Stewart, C.L.; Fong, A.; Payyavula, G.; DiMaio, S.; Lafaro, K.; Tallmon, K.; Wren, S.; Sorger, J.; Fong, Y. Study on augmented reality for robotic surgery bedside assistants. J. Robot. Surg. 2021, 1–8.

- Lin, Z.; Gao, A.; Ai, X.; Gao, H.; Fu, Y.; Chen, W.; Yang, G.Z. ARei: Augmented-Reality-Assisted Touchless Teleoperated Robot for Endoluminal Intervention. IEEE/ASME Trans. Mechatronics 2021, 1–11.

- Gasques, D.; Johnson, J.G.; Sharkey, T.; Feng, Y.; Wang, R.; Xu, Z.R.; Zavala, E.; Zhang, Y.; Xie, W.; Zhang, X.; et al. ARTEMIS: A Collaborative Mixed-Reality System for Immersive Surgical Telementoring. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, CHI ’21, Yokohama, Japan, 8–13 May 2021; pp. 1–14.

- Rai, A.T.; Deib, G.; Smith, D.; Boo, S. Teleproctoring for Neurovascular Procedures: Demonstration of Concept Using Optical See-Through Head-Mounted Display, Interactive Mixed Reality, and Virtual Space Sharing—A Critical Need Highlighted by the COVID-19 Pandemic. Am. J. Neuroradiol. 2021, 42, 1109–1115.

- Gsaxner, C.; Li, J.; Pepe, A.; Schmalstieg, D.; Egger, J. Inside-Out Instrument Tracking for Surgical Navigation in Augmented Reality. In Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology. Association for Computing Machinery, VRST ’21, Osaka, Japan, 8–10 December 2021; pp. 1–11.

- Zhang, F.; Zhang, S.; Sun, L.; Zhan, W.; Sun, L. Research on registration and navigation technology of augmented reality for ex-vivo hepatectomy. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 147–155.

- Ivan, M.E.; Eichberg, D.G.; Di, L.; Shah, A.H.; Luther, E.M.; Lu, V.M.; Komotar, R.J.; Urakov, T.M. Augmented reality head-mounted display–based incision planning in cranial neurosurgery: A prospective pilot study. Neurosurg. Focus 2021, 51, E3.

- Fick, T.; van Doormaal, J.; Hoving, E.; Regli, L.; van Doormaal, T. Holographic patient tracking after bed movement for augmented reality neuronavigation using a head-mounted display. Acta Neurochir. 2021, 163, 879–884.

- Kunz, C.; Hlaváč, M.; Schneider, M.; Pala, A.; Henrich, P.; Jickeli, B.; Wörn, H.; Hein, B.; Wirtz, R.; Mathis-Ullrich, F. Autonomous Planning and Intraoperative Augmented Reality Navigation for Neurosurgery. IEEE Trans. Med. Robot. Bionics 2021, 3, 738–749.

- Qi, Z.; Li, Y.; Xu, X.; Zhang, J.; Li, F.; Gan, Z.; Xiong, R.; Wang, Q.; Zhang, S.; Chen, X. Holographic mixed-reality neuronavigation with a head-mounted device: Technical feasibility and clinical application. Neurosurg. Focus 2021, 51, E22.

- Condino, S.; Montemurro, N.; Cattari, N.; D’Amato, R.; Thomale, U.; Ferrari, V.; Cutolo, F. Evaluation of a Wearable AR Platform for Guiding Complex Craniotomies in Neurosurgery. Ann. Biomed. Eng. 2021, 49, 2590–2605.

- Uhl, C.; Hatzl, J.; Meisenbacher, K.; Zimmer, L.; Hartmann, N.; Böckler, D. Mixed-Reality-Assisted Puncture of the Common Femoral Artery in a Phantom Model. J. Imaging 2022, 8, 47.

- Li, R.; Tong, Y.; Yang, T.; Guo, J.; Si, W.; Zhang, Y.; Klein, R.; Heng, P.A. Towards quantitative and intuitive percutaneous tumor puncture via augmented virtual reality. Comput. Med. Imaging Graph. 2021, 90, 101905.

- Liu, Y.; Azimi, E.; Davé, N.; Qiu, C.; Yang, R.; Kazanzides, P. Augmented Reality Assisted Orbital Floor Reconstruction. In Proceedings of the 2021 IEEE International Conference on Intelligent Reality (ICIR), Piscataway, NJ, USA, 12–13 May 2021; pp. 25–30.

- Tarutani, K.; Takaki, H.; Igeta, M.; Fujiwara, M.; Okamura, A.; Horio, F.; Toudou, Y.; Nakajima, S.; Kagawa, K.; Tanooka, M.; et al. Development and Accuracy Evaluation of Augmented Reality-based Patient Positioning System in Radiotherapy: A Phantom Study. In Vivo 2021, 35, 2081–2087.

- Johnson, P.B.; Jackson, A.; Saki, M.; Feldman, E.; Bradley, J. Patient posture correction and alignment using mixed reality visualization and the HoloLens 2. Med. Phys. 2022, 49, 15–22.

- Kitagawa, M.; Sugimoto, M.; Haruta, H.; Umezawa, A.; Kurokawa, Y. Intraoperative holography navigation using a mixed-reality wearable computer during laparoscopic cholecystectomy. Surgery 2021, 171, 1006–1013.

- Heinrich, F.; Huettl, F.; Schmidt, G.; Paschold, M.; Kneist, W.; Huber, T.; Hansen, C. HoloPointer: A virtual augmented reality pointer for laparoscopic surgery training. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 161–168.

- Ivanov, V.M.; Krivtsov, A.M.; Strelkov, S.V.; Kalakutskiy, N.V.; Yaremenko, A.I.; Petropavlovskaya, M.Y.; Portnova, M.N.; Lukina, O.V.; Litvinov, A.P. Intraoperative Use of Mixed Reality Technology in Median Neck and Branchial Cyst Excision. Future Internet 2021, 13, 214.

- Gsaxner, C.; Pepe, A.; Li, J.; Ibrahimpasic, U.; Wallner, J.; Schmalstieg, D.; Egger, J. Augmented Reality for Head and Neck Carcinoma Imaging: Description and Feasibility of an Instant Calibration, Markerless Approach. Comput. Methods Programs Biomed. 2021, 200, 105854.

- von Atzigen, M.; Liebmann, F.; Hoch, A.; Miguel Spirig, J.; Farshad, M.; Snedeker, J.; Fürnstahl, P. Marker-free surgical navigation of rod bending using a stereo neural network and augmented reality in spinal fusion. Med. Image Anal. 2022, 77, 102365.

- Hu, X.; Baena, F.R.y.; Cutolo, F. Head-Mounted Augmented Reality Platform for Markerless Orthopaedic Navigation. IEEE J. Biomed. Health Informat. 2022, 26, 910–921.

- Velazco-Garcia, J.D.; Navkar, N.V.; Balakrishnan, S.; Younes, G.; Abi-Nahed, J.; Al-Rumaihi, K.; Darweesh, A.; Elakkad, M.S.M.; Al-Ansari, A.; Christoforou, E.G.; et al. Evaluation of how users interface with holographic augmented reality surgical scenes: Interactive planning MR-Guided prostate biopsies. Int. J. Med. Robot. Comput. Assist. Surg. 2021, 17, e2290.

- Nousiainen, K.; Mäkelä, T. Measuring geometric accuracy in magnetic resonance imaging with 3D-printed phantom and nonrigid image registration. Magn. Reson. Mater. Phys. Biol. Med. 2020, 33, 401–410.

- Lim, S.; Ha, J.; Yoon, S.; Tae Sohn, Y.; Seo, J.; Chul Koh, J.; Lee, D. Augmented Reality Assisted Surgical Navigation System for Epidural Needle Intervention. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine Biology Society (EMBC), Mexico City, Mexico, 1–5 November 2021; pp. 4705–4708, ISSN 2694-0604.

- Johnson, A.A.; Reidler, J.S.; Speier, W.; Fuerst, B.; Wang, J.; Osgood, G.M. Visualization of Fluoroscopic Imaging in Orthopedic Surgery: Head-Mounted Display vs Conventional Monitor. Surg. Innov. 2021, 29, 353–359.

- Tu, P.; Gao, Y.; Lungu, A.J.; Li, D.; Wang, H.; Chen, X. Augmented reality based navigation for distal interlocking of intramedullary nails utilizing Microsoft HoloLens 2. Comput. Biol. Med. 2021, 133, 104402.

- Cattari, N.; Condino, S.; Cutolo, F.; Ferrari, M.; Ferrari, V. In Situ Visualization for 3D Ultrasound-Guided Interventions with Augmented Reality Headset. Bioengineering 2021, 8, 131.

- Frisk, H.; Lindqvist, E.; Persson, O.; Weinzierl, J.; Bruetzel, L.K.; Cewe, P.; Burström, G.; Edström, E.; Elmi-Terander, A. Feasibility and Accuracy of Thoracolumbar Pedicle Screw Placement Using an Augmented Reality Head Mounted Device. Sensors 2022, 22, 522.

- Puladi, B.; Ooms, M.; Bellgardt, M.; Cesov, M.; Lipprandt, M.; Raith, S.; Peters, F.; Möhlhenrich, S.C.; Prescher, A.; Hölzle, F.; et al. Augmented Reality-Based Surgery on the Human Cadaver Using a New Generation of Optical Head-Mounted Displays: Development and Feasibility Study. JMIR Serious Games 2022, 10, e34781.

- Kimmel, S.; Cobus, V.; Heuten, W. opticARe—Augmented Reality Mobile Patient Monitoring in Intensive Care Units. In Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology, Osaka, Japan, 8–10 December 2021; ACM: New York, NY, USA, 2021; pp. 1–11.

- Lee, S.; Jung, H.; Lee, E.; Jung, Y.; Kim, S.T. A Preliminary Work: Mixed Reality-Integrated Computer-Aided Surgical Navigation System for Paranasal Sinus Surgery Using Microsoft HoloLens 2. In Computer Graphics International Conference; Magnenat-Thalmann, N., Interrante, V., Thalmann, D., Papagiannakis, G., Sheng, B., Kim, J., Gavrilova, M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Manhattan, NYC, USA, 2021; pp. 633–641.

- Kriechling, P.; Loucas, R.; Loucas, M.; Casari, F.; Fürnstahl, P.; Wieser, K. Augmented reality through head-mounted display for navigation of baseplate component placement in reverse total shoulder arthroplasty: A cadaveric study. Arch. Orthop. Trauma Surg. 2021, 1–7.

- Fischer, M.; Leuze, C.; Perkins, S.; Rosenberg, J.; Daniel, B.; Martin-Gomez, A. Evaluation of Different Visualization Techniques for Perception-Based Alignment in Medical AR. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Recife, Brazil, 9–13 November 2020; pp. 45–50.

- Carbone, M.; Cutolo, F.; Condino, S.; Cercenelli, L.; D’Amato, R.; Badiali, G.; Ferrari, V. Architecture of a Hybrid Video/Optical See-through Head-Mounted Display-Based Augmented Reality Surgical Navigation Platform. Information 2022, 13, 81.

- Ma, X.; Song, C.; Qian, L.; Liu, W.; Chiu, P.W.; Li, Z. Augmented Reality Assisted Autonomous View Adjustment of a 6-DOF Robotic Stereo Flexible Endoscope. IEEE Trans. Med. Robot. Bionics 2022, 4, 356–367.

- Nguyen, T.; Plishker, W.; Matisoff, A.; Sharma, K.; Shekhar, R. HoloUS: Augmented reality visualization of live ultrasound images using HoloLens for ultrasound-guided procedures. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 385–391.

- Tu, P.; Qin, C.; Guo, Y.; Li, D.; Lungu, A.J.; Wang, H.; Chen, X. Ultrasound image guided and mixed reality-based surgical system with real-time soft tissue deformation computing for robotic cervical pedicle screw placement. IEEE Trans. Biomed. Eng. 2022.

- Olson, E. AprilTag: A robust and flexible visual fiducial system. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3400–3407.

- Yanni, D.S.; Ozgur, B.M.; Louis, R.G.; Shekhtman, Y.; Iyer, R.R.; Boddapati, V.; Iyer, A.; Patel, P.D.; Jani, R.; Cummock, M.; et al. Real-time navigation guidance with intraoperative CT imaging for pedicle screw placement using an augmented reality head-mounted display: A proof-of-concept study. Neurosurg. Focus 2021, 51, E11.

- Condino, S.; Cutolo, F.; Cattari, N.; Colangeli, S.; Parchi, P.D.; Piazza, R.; Ruinato, A.D.; Capanna, R.; Ferrari, V. Hybrid simulation and planning platform for cryosurgery with Microsoft HoloLens. Sensors 2021, 21, 4450.

- Majak, M.; Żuk, M.; Świątek Najwer, E.; Popek, M.; Pietruski, P. Augmented reality visualization for aiding biopsy procedure according to computed tomography based virtual plan. Acta Bioeng. Biomech. 2021, 23, 81–89.

- Zhou, Z.; Jiang, S.; Yang, Z.; Xu, B.; Jiang, B. Surgical navigation system for brachytherapy based on mixed reality using a novel stereo registration method. Virtual Real. 2021, 25, 975–984.

- Gu, W.; Shah, K.; Knopf, J.; Josewski, C.; Unberath, M. A calibration-free workflow for image-based mixed reality navigation of total shoulder arthroplasty. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2021, 10, 243–251.

- Dennler, C.; Safa, N.A.; Bauer, D.E.; Wanivenhaus, F.; Liebmann, F.; Götschi, T.; Farshad, M. Augmented Reality Navigated Sacral-Alar-Iliac Screw Insertion. Int. J. Spine Surg. 2021, 15, 161–168.

- Kriechling, P.; Roner, S.; Liebmann, F.; Casari, F.; Fürnstahl, P.; Wieser, K. Augmented reality for base plate component placement in reverse total shoulder arthroplasty: A feasibility study. Arch. Orthop. Trauma Surg. 2021, 141, 1447–1453.

- Liu, K.; Gao, Y.; Abdelrehem, A.; Zhang, L.; Chen, X.; Xie, L.; Wang, X. Augmented reality navigation method for recontouring surgery of craniofacial fibrous dysplasia. Sci. Rep. 2021, 11, 10043.

- Liu, X.; Sun, J.; Zheng, M.; Cui, X. Application of Mixed Reality Using Optical See-Through Head-Mounted Displays in Transforaminal Percutaneous Endoscopic Lumbar Discectomy. Biomed Res. Int. 2021, 2021, e9717184.

- Rae, E.; Lasso, A.; Holden, M.S.; Morin, E.; Levy, R.; Fichtinger, G. Neurosurgical burr hole placement using the Microsoft HoloLens. In Proceedings of the Medical Imaging 2018: Image-Guided Procedures, Robotic Interventions, and Modeling, Houston, TX, USA, 10–15 February 2018; International Society for Optics and Photonics: Bellingham, WA, USA, 2018; Volume 10576, p. 105760T.

- Center for Devices and Radiological Health. Applying Human Factors and Usability Engineering to Medical Devices; FDA: Silver Spring, MD, USA, 2016.

- Fitzpatrick, J.M. The role of registration in accurate surgical guidance. Proc. Inst. Mech. Eng. Part J. Eng. Med. 2010, 224, 607–622.

- Sielhorst, T.; Bichlmeier, C.; Heining, S.M.; Navab, N. Depth perception—A major issue in medical AR: Evaluation study by twenty surgeons. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2006; pp. 364–372.

- Yeo, C.T.; MacDonald, A.; Ungi, T.; Lasso, A.; Jalink, D.; Zevin, B.; Fichtinger, G.; Nanji, S. Utility of 3D reconstruction of 2D liver computed tomography/magnetic resonance images as a surgical planning tool for residents in liver resection surgery. J. Surg. Educ. 2018, 75, 792–797.

- Pelanis, E.; Kumar, R.P.; Aghayan, D.L.; Palomar, R.; Fretland, Å.A.; Brun, H.; Elle, O.J.; Edwin, B. Use of mixed reality for improved spatial understanding of liver anatomy. Minim. Invasive Ther. Allied Technol. 2020, 29, 154–160.

- Shibata, T.; Kim, J.; Hoffman, D.M.; Banks, M.S. The zone of comfort: Predicting visual discomfort with stereo displays. J. Vis. 2011, 11, 11.

- Hasson, Y.; Tekin, B.; Bogo, F.; Laptev, I.; Pollefeys, M.; Schmid, C. Leveraging photometric consistency over time for sparsely supervised hand-object reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 571–580.

- Hein, J.; Seibold, M.; Bogo, F.; Farshad, M.; Pollefeys, M.; Fürnstahl, P.; Navab, N. Towards markerless surgical tool and hand pose estimation. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 799–808.

- Doughty, M.; Ghugre, N.R. HMD-EgoPose: Head-mounted display-based egocentric marker-less tool and hand pose estimation for augmented surgical guidance. Int. J. Comput. Assist. Radiol. Surg. 2022.

- Twinanda, A.P.; Shehata, S.; Mutter, D.; Marescaux, J.; De Mathelin, M.; Padoy, N. EndoNet: A deep architecture for recognition tasks on laparoscopic videos. IEEE Trans. Med. Imaging 2016, 36, 86–97.

- Doughty, M.; Singh, K.; Ghugre, N.R. SurgeonAssist-Net: Towards Context-Aware Head-Mounted Display-Based Augmented Reality for Surgical Guidance. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 667–677.

| Specifications | Google Glass 2 | HoloLens 1 | HoloLens 2 | Magic Leap 1 | Magic Leap 2 |
|---|---|---|---|---|---|
| Optics | Beam Splitter | Waveguide | Waveguide | Waveguide | Waveguide |
| Resolution | px | px | px | px | px |
| Field of View | diagonal | | | | |
| Focal Planes | Single Fixed | Single Fixed | Single Fixed | Two Fixed | Single Fixed |
| Computing | On-board | On-board | On-board | External pad | External pad |
| SLAM | 6DoF | 6DoF | 6DoF | 6DoF | 6DoF |
| Eye Tracking | No | No | Yes | Yes | Yes |
| Weight | 46 g | 579 g | 566 g | 345 g | 260 g |
| Design | Glasses-like | Hat-like | Hat-like | Glasses-like | Glasses-like |
| Interaction | Touchpad | Head, hand, voice | Hand, eye, voice | Controller | Eye, controller |
| Release Date | 2019 | 2016 | 2019 | 2018 | 2022 |
| Price | $999 | $3000 | $3500 | $2295 | $3299 |
| Status | Available | Discontinued | Available | Available | Upcoming |

| Data: Preoperative or Intraoperative | Number of Articles |
|---|---|
| Preoperative | |
| Computed Tomography (CT) | 34 |
| Magnetic Resonance Imaging (MRI) | 7 |
| CT and/or MRI | 6 |
| Prerecorded Videos | 1 |
| Intraoperative | |
| Fluoroscopy | 4 |
| Ultrasound | 3 |
| Telestrations/Virtual Arrows and Annotations | 3 |
| Cone Beam CT | 3 |
| Endoscope Video | 2 |
| Patient Sensors/Monitoring Equipment | 1 |
| Simulated Intraoperative Data | 1 |

| Processing | Number of Articles |
|---|---|
| Three-Dimensional | |
| Surface Models | 39 |
| Planning Information | 8 |
| Raw Data | 5 |
| Volume Models | 4 |
| Printed Models | 3 |
| Two-Dimensional | |
| Telestrations | 4 |
| Raw Data | 3 |

| Overlay | Count | Tracking Marker | Count |

|---|---|---|---|

| External Tracker | External Markers | ||

| Northern Digital Inc. Polaris | 7 | Retroreflective Spheres | 11 |

| Northern Digital Inc. EM/Aurora | 3 | Electromagnetic | 3 |

| ClaroNav MicronTracker | 2 | Visible | 2 |

| Optitrack | 1 | ||

| Medtronic SteathStation | 1 | ||

| OST-HMD Camera (RGB/Infrared) | Optical Markers | ||

| HoloLens 1 | 19 | Vuforia | 10 |

| HoloLens 2 | 10 | ArUco | 9 |

| Custom | 3 | Custom | 4 |

| Magic Leap 1 | 1 | Retroreflective Spheres | 2 |

| OST-HMD Display Calibration | QR-Code | 2 | |

| SPAAM/similar | 2 | Marker-Less | 2 |

| | | AprilTag | 1 |

| Manual Placement | |||

| Surgeon | 8 | ||

| Other | 3 | ||

| View | Interaction | Display Device | Perception Location |

|---|---|---|---|

| Ackermann et al., 2021 [45] | N/A | HL1 | DO |

| Cattari et al., 2021 [83] | N/A | Custom | DO |

| Condino et al., 2021 [66] | N/A | Custom | DO |

| Condino et al., 2021 [96] | VO, GE | HL1 | DO |

| Dennler et al., 2021 [46] | VO, GE | HL1 | AO |

| Dennler et al., 2021 [100] | N/A | HL1 | DO |

| Farshad et al., 2021 [47] | VO, GE | HL2 | DO |

| Fick et al., 2021 [63] | VO, GE | HL1 | DO |

| Gao et al., 2021 [54] | VO | HL1 | DO |

| Gasques et al., 2021 [58] | VO, GE, PO | HL1 | DO |

| Gsaxner et al., 2021 [60] | N/A | HL2 | DO |

| Gsaxner et al., 2021 [75] | GA, GE | HL2 | DO |

| Gu et al., 2021 [99] | GA, GE | HL2 | DO |

| Gu et al., 2021 [48] | GE | HL1 | DO |

| Heinrich et al., 2021 [73] | VO, GE | HL1 | DO |

| Iqbal et al., 2021 [55] | N/A | HL1 | AO |

| Ivan et al., 2021 [62] | GE | HL1 | DO |

| Ivanov et al., 2021 [74] | GE | HL2 | DO |

| Johnson et al., 2021 [81] | N/A | ODG R-6 | AO |

| Kimmel et al., 2021 [86] | VO, GE | HL1 | AO |

| Kitagawa et al., 2021 [72] | N/A | HL2 | AO |

| Kriechling et al., 2021 [88] | VO, GE | HL1 | DO |

| Kriechling et al., 2021 [101] | VO, GE | HL1 | DO |

| Kunz et al., 2021 [64] | GE | HL1 | DO |

| Lee et al., 2021 [87] | N/A | HL2 | DO |

| Li et al., 2021 [68] | N/A | HL1 | DO |

| Lim et al., 2021 [80] | GE | HL2 | DO |

| Lin et al., 2021 [57] | GE | ML1 | DO |

| Liu et al., 2021 [102] | VO | HL1 | AO |

| Liu et al., 2021 [103] | N/A | HL2 | DO |

| Liu et al., 2021 [69] | N/A | HL1 | DO |

| Majak et al., 2021 [97] | N/A | Moverio BT-200 | DO |

| Qi et al., 2021 [65] | GE | HL2 | DO |

| Rai et al., 2021 [59] | CNT | ML1 | DO |

| Schlueter-Brust et al., 2021 [51] | GE | HL2 | DO |

| Spirig et al., 2021 [50] | VO, GE | HL1 | DO |

| Stewart et al., 2021 [56] | VO, GE | HL1 | AO |

| Tang et al., 2021 [52] | GE | HL1 | AO |

| Tarutani et al., 2021 [70] | GE | HL2 | AO |

| Teatini et al., 2021 [49] | GE | HL1 | DO |

| Tu et al., 2021 [82] | VO, GE | HL2 | DO |

| Velazco-Garcia et al., 2021 [78] | VO, GE | HL1 | AO |

| Yanni et al., 2021 [95] | CNT | ML1 | DO |

| Zhou et al., 2021 [98] | VO, GE | HL1 | DO |

| Carbone et al., 2022 [90] | N/A | Custom | DO |

| Doughty et al., 2022 [33] | GE | HL2 | DO |

| Frisk et al., 2022 [84] | CNT | ML1 | DO |

| Hu et al., 2022 [77] | KB | HL1 | DO |

| Johnson et al., 2022 [71] | VC | HL2 | DO |

| Ma et al., 2022 [91] | HP | HL1 | DO |

| Nguyen et al., 2022 [92] | VO | HL1 | DO |

| Puladi et al., 2022 [85] | GE | HL1 | DO |

| Tu et al., 2022 [93] | GE | HL2 | DO |

| Uhl et al., 2022 [67] | CNT | ML1 | DO |

| Von Atzigen et al., 2022 [76] | VO | HL1 | DO |

| Yang et al., 2022 [53] | VO, GE | HL1 | DO |

| Zhang et al., 2022 [61] | N/A | HL1 | DO |

| Validation | Evaluation | Accuracy | Human Factors |

|---|---|---|---|

| Ackermann et al., 2021 [45] | CAD | mm RMS, | ROE, SPWR, EOU |

| Cattari et al., 2021 [83] | PHA | mm | PER, ERGO, ATS, MM, CTXT |

| Condino et al., 2021 [66] | PHA | mm | ATS, PER, SPWR, CTXT, INT |

| Condino et al., 2021 [96] | PHA, PAT | N/A | SPWR, MM, PER |

| Dennler et al., 2021 [46] | PAT | N/A | ERGO, SPWR, ATS, PER |

| Dennler et al., 2021 [100] | PHA | mm entry, | ATS, ROE |

| Farshad et al., 2021 [47] | PAT | mm entry, | ATS, ROE, ERGO, PER |

| Fick et al., 2021 [63] | PAT | mm | MM, ATS, SPWR |

| Gao et al., 2021 [54] | PHA | | SPWR, MM, PER |

| Gasques et al., 2021 [58] | PHA, CAD | N/A | ROE, PER, CTXT |

| Gsaxner et al., 2021 [60] | PHA | mm RMS | PER, INT, CTXT, SPWR |

| Gsaxner et al., 2021 [75] | PHA | N/A | EOU, PER, MM |

| Gu et al., 2021 [99] | PHA | mm, | PER, MM, SPWR, OCCL, CTXT |

| Gu et al., 2021 [48] | PHA | mm, | OCCL, PER |

| Heinrich et al., 2021 [73] | PHA | N/A | PER, HE |

| Iqbal et al., 2021 [55] | PAT | Surface Roughness | EOU, ERGO, PER, MM, SPWR |

| Ivan et al., 2021 [62] | PAT | Trace Overlap | ERGO, SPWR |

| Ivanov et al., 2021 [74] | PAT | mm | MM, PER |

| Johnson et al., 2021 [81] | PHA | N/A | ERGO, EOU |

| Kimmel et al., 2021 [86] | PAT | N/A | CTXT |

| Kitagawa et al., 2021 [72] | PAT | N/A | SPWR, EOU |

| Kriechling et al., 2021 [88] | CAD | mm | N/A |

| Kriechling et al., 2021 [101] | CAD | mm | N/A |

| Kunz et al., 2021 [64] | PHA | mm | CTXT, PER, ERGO |

| Lee et al., 2021 [87] | PHA | N/A | PER, INT, SPWR |

| Li et al., 2021 [68] | PHA, ANI | mm | PER, INT, SPWR |

| Lim et al., 2021 [80] | PHA | N/A | N/A |

| Lin et al., 2021 [57] | PHA | mm | CTXT |

| Liu et al., 2021 [102] | PAT | mm | SPWR, MM |

| Liu et al., 2021 [103] | PAT | Radiation Exposure | ERGO, EOU |

| Liu et al., 2021 [69] | PHA | mm | CTXT, PER |

| Majak et al., 2021 [97] | PHA | mm | MM, ATS, SPWR |

| Qi et al., 2021 [65] | PAT | mm | MM, SPWR, ROE |

| Rai et al., 2021 [59] | PAT | N/A | SPWR, EOU |

| Schlueter-Brust et al., 2021 [51] | PHA | 3 mm | OCCL, PER |

| Spirig et al., 2021 [50] | CAD | mm | MM, ATS, SPWR |

| Stewart et al., 2021 [56] | PHA | N/A | ATS, ERGO |

| Tang et al., 2021 [52] | PAT | N/A | HE, SPWR, MM, PER |

| Tarutani et al., 2021 [70] | PHA | mm | ROE, SPWR |

| Teatini et al., 2021 [49] | PHA | mm | SPWR, MM, PER, HE, ROE |

| Tu et al., 2021 [82] | PHA, CAD | mm | MM, OCCL, HE, PER, ERGO |

| Velazco-Garcia et al., 2021 [78] | PHA | N/A | MM, CTXT, SPWR |

| Yanni et al., 2021 [95] | PHA | N/A | ERGO, MM, PER |

| Zhou et al., 2021 [98] | PHA, ANI | mm | OCCL, INT, ROE |

| Carbone et al., 2022 [90] | PHA, PAT | mm | ROE, OCCL, PER, ERGO |

| Doughty et al., 2022 [33] | PHA, ANI | mm | PER, MM, OCCL, CTXT, ATS |

| Frisk et al., 2022 [84] | PHA | mm | MM, ATS |

| Hu et al., 2022 [77] | PHA | mm | OCCL, PER |

| Johnson et al., 2022 [71] | PHA | mm | PER, MM, ERGO |

| Ma et al., 2022 [91] | PHA | N/A | OCCL, ERGO, EOU |

| Nguyen et al., 2022 [92] | PHA | N/A | HE, MM |

| Puladi et al., 2022 [85] | CAD | mm | MM, SPWR, PER, OCCL |

| Tu et al., 2022 [93] | PHA | mm | MM, HE, PER, ROE |

| Uhl et al., 2022 [67] | PHA | mm | MM, ATS |

| Von Atzigen et al., 2022 [76] | PHA | mm | ATS |

| Yang et al., 2022 [53] | PAT | mm | SPWR, MM |

| Zhang et al., 2022 [61] | CAD | mm | MM, ROE |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Doughty, M.; Ghugre, N.R.; Wright, G.A. Augmenting Performance: A Systematic Review of Optical See-Through Head-Mounted Displays in Surgery. J. Imaging 2022, 8, 203. https://doi.org/10.3390/jimaging8070203
