Abstract

With the development of digital imaging techniques, image quality assessment methods are receiving more attention in the literature. Since distortion-free versions of camera images are not available in many practical, everyday applications, the need for effective no-reference image quality assessment (NR-IQA) algorithms is growing. Therefore, this paper introduces a novel NR-IQA algorithm for the objective evaluation of authentically distorted images. Specifically, we apply a broad spectrum of local and global feature vectors to characterize the variety of authentic distortions. Among the employed local features, the statistics of popular local feature descriptors, such as SURF, FAST, BRISK, or KAZE, are proposed for NR-IQA; other features are also introduced to boost the performance of the local features. The proposed method was compared to 12 other state-of-the-art algorithms on popular and accepted benchmark datasets containing RGB images with authentic distortions (CLIVE, KonIQ-10k, and SPAQ). The introduced algorithm significantly outperforms the state-of-the-art in terms of correlation with human perceptual quality ratings.

1. Introduction

With the considerable advancements in digital imaging technology and the easy availability of cheap image-capturing devices, a large number of digital images are captured by non-technical users every day. As a consequence, people upload huge amounts of images and videos to the internet and extensively use streaming applications. In addition, visual information represents 85% of the information that is usable for human beings. Therefore, the quality assessment of digital images is of great importance and an active research topic with several practical applications, such as benchmarking computer vision algorithms [1], monitoring the quality of network visual communication applications [2], fingerprint image evaluation [3], medical imaging applications [4], and evaluating image compression [5] or denoising [6] algorithms. Images may suffer from various degradations, such as noise, blurring, fading, or blocking artifacts. Further, these degradations can be introduced in all phases of the imaging process, such as acquisition, compression, transmission, decompression, storage, and display.

Traditionally, image quality assessment (IQA) algorithms are divided in the literature into three classes according to the availability of the reference (distortion-free) images [7], i.e., no-reference (NR), full-reference (FR), and reduced-reference (RR). As the names indicate, NR-IQA algorithms have no access to the reference images, FR-IQA methods have full access to them, while RR-IQA approaches have only partial information about them. In the literature, NR-IQA is recognized as a more difficult research task than the other two classes due to the complete lack of reference images [8].

In this paper, we introduce a novel NR-IQA model that relies on the fusion of local and global image features. Many NR-IQA methods [9,10,11,12] characterize digital images with global features that are computed over the whole image. This approach is effective for artificial distortions (such as JPEG or JPEG2000 compression noise), since they are distributed uniformly in the image. However, authentic (natural) distortions often affect only parts of an image. To improve the perceptual quality estimation of authentically distorted images, we combined novel local and global feature vectors built from the statistics of local feature descriptors and some powerful perceptual features. Specifically, 93 features were employed, 80 of which were introduced for NR-IQA in this study. We demonstrate empirically that the statistics of local feature descriptors are quality-aware features (since they interpret local image regions from the viewpoint of the human visual system) and that combining them with global features results in higher performance than the current state-of-the-art. Our proposed method is code-named FLG-IQA, referring to the fact that it is based on the fusion of local and global features.

The rest of this study is organized as follows. In Section 2, related work is reviewed. Section 3 provides an overview of the benchmark databases and evaluation metrics used and describes the proposed approach in detail. Section 4 presents the experimental results, including an ablation study that explains the effects of the individual features and a comparison to 12 other state-of-the-art methods. Finally, this study is concluded in Section 5.

2. Literature Review

In this section, a review of the existing NR-IQA algorithms is given. For more comprehensive summaries about this topic, interested readers can refer to the studies by Zhai et al. [7], Mohammadi et al. [13], Yang et al. [14], and the book by Xu et al. [15].

NR-IQA methods can be further divided into training-free and machine learning-based classes. Our proposed method falls into the machine learning-based class; thus, this review mainly focuses on this category. As the terminology indicates, machine learning-based algorithms incorporate some kind of machine learning algorithm to estimate the perceptual quality of a digital image, while training-free methods involve no training on distorted images. For example, Venkatanath et al. [16] proposed the perception-based image quality evaluator (PIQE), which estimates the quality of distorted images using mean subtracted contrast normalized coefficients calculated at all pixel locations. In contrast, the naturalness image quality evaluator (NIQE) proposed by Mittal et al. [17] quantifies perceptual image quality as the distance between naturalness features extracted from the distorted image and features obtained beforehand from distortion-free, pristine images. NIQE was further developed in [18,19]. Zhang et al. [18] improved NIQE by using a Bhattacharyya-like distance [20] between the multivariate Gaussian model learned from pristine images and that of the distorted image. In contrast, Wu et al. [19] boosted NIQE with more complex features to increase prediction performance. Recently, Leonardi et al. [21] utilized deep features extracted from a pre-trained convolutional neural network to construct an opinion-unaware method based on the correlations between feature maps, captured by Gramian matrices.

In the literature, various types of machine learning-based NR-IQA methods can be found. Many of them rely on natural scene statistics (NSS), a powerful tool for characterizing image distortions. The main assumption of NSS is that high-quality digital images follow a certain statistical regularity, and distorted images deviate significantly from this pattern [22]. For instance, Saad et al. [23] examined the statistics of discrete cosine transform (DCT) coefficients. Specifically, a generalized Gaussian distribution (GGD) model was fitted to the DCT coefficients, and its parameters were utilized as quality-aware features and mapped onto a perceptual quality score with the help of a support vector regressor (SVR). Another line of work focused on the wavelet transform to extract quality-aware features. For example, Moorthy and Bovik [24] carried out a wavelet transform over three scales and three orientations; similar to [23], GGDs were fitted to the subband coefficients and their parameters were used as quality-aware features. This method was further improved in [25], where the correlations across scales, subbands, and orientations were also used as quality-aware features. In contrast, Tang et al. [26] extracted quality-aware features from complex pyramid wavelet coefficients. In [27], the authors exploited color statistics as NSS. Specifically, mean subtracted contrast normalized (MSCN) coefficients were computed from the color channels of different color spaces. Next, a GGD was fitted to these coefficients and, similar to the previously mentioned methods, its parameters were mapped onto quality scores. Similar to [27], Mittal et al. [9] applied MSCN coefficients to characterize perceptual quality, but extracted them from the spatial domain. Other models [28,29,30] utilized the statistics of local binary patterns [31] to characterize the degradation of image texture in the presence of noise and distortions.
In [32], Freitas et al. presented a performance comparison of a wide range of local binary pattern types and variants for NR-IQA. Ye and Doermann [33,34] were the first to apply visual codebooks to NR-IQA. More specifically, Gabor filters were applied for feature extraction and visual codebook creation. Subsequently, the perceptual quality of an image was expressed as the weighted average of the codeword quality scores. In [35], an unsupervised feature learning framework was introduced, where an unlabeled codebook was compiled from raw image patches using k-means clustering.

With the popularity of deep learning, many deep learning-based methods have also been proposed in the literature. For instance, Kang et al. [36] trained a convolutional neural network (CNN) with one convolutional layer (using both max and min pooling) and two fully-connected layers. Specifically, the CNN was trained on image patches, and the predicted scores of the patches were averaged to obtain the estimated quality of the whole input image. In contrast, Bianco et al. [37] introduced the DeepBIQ architecture, which extracts deep features from multiple image patches with a pre-trained CNN and maps the resulting feature vectors onto perceptual quality with an SVR. Similarly, Gao et al. [38] relied on a pre-trained CNN, but extracted multi-level feature vectors through global average pooling layers. Sheng et al. [39] exploited the fact that visual saliency is correlated with image quality [40] and trained a saliency-guided deep CNN for NR-IQA from scratch. In contrast, Liu et al. [41] applied the learning-to-rank framework [42] to NR-IQA, implementing it with a Siamese CNN. Li et al. [43] introduced a novel loss function (very similar to Pearson's linear correlation coefficient) that provides the CNN with faster convergence and better perceptual quality estimation. Celona and Schettini [44] proposed a novel network that processes input images at multiple scales and is trained jointly for regression, classification, and pairwise ranking.

3. Materials and Methods

3.1. Materials

In this subsection, the applied IQA benchmark databases are described. Further, the applied evaluation methodology and implementation environment are given.

3.1.1. Applied IQA Databases

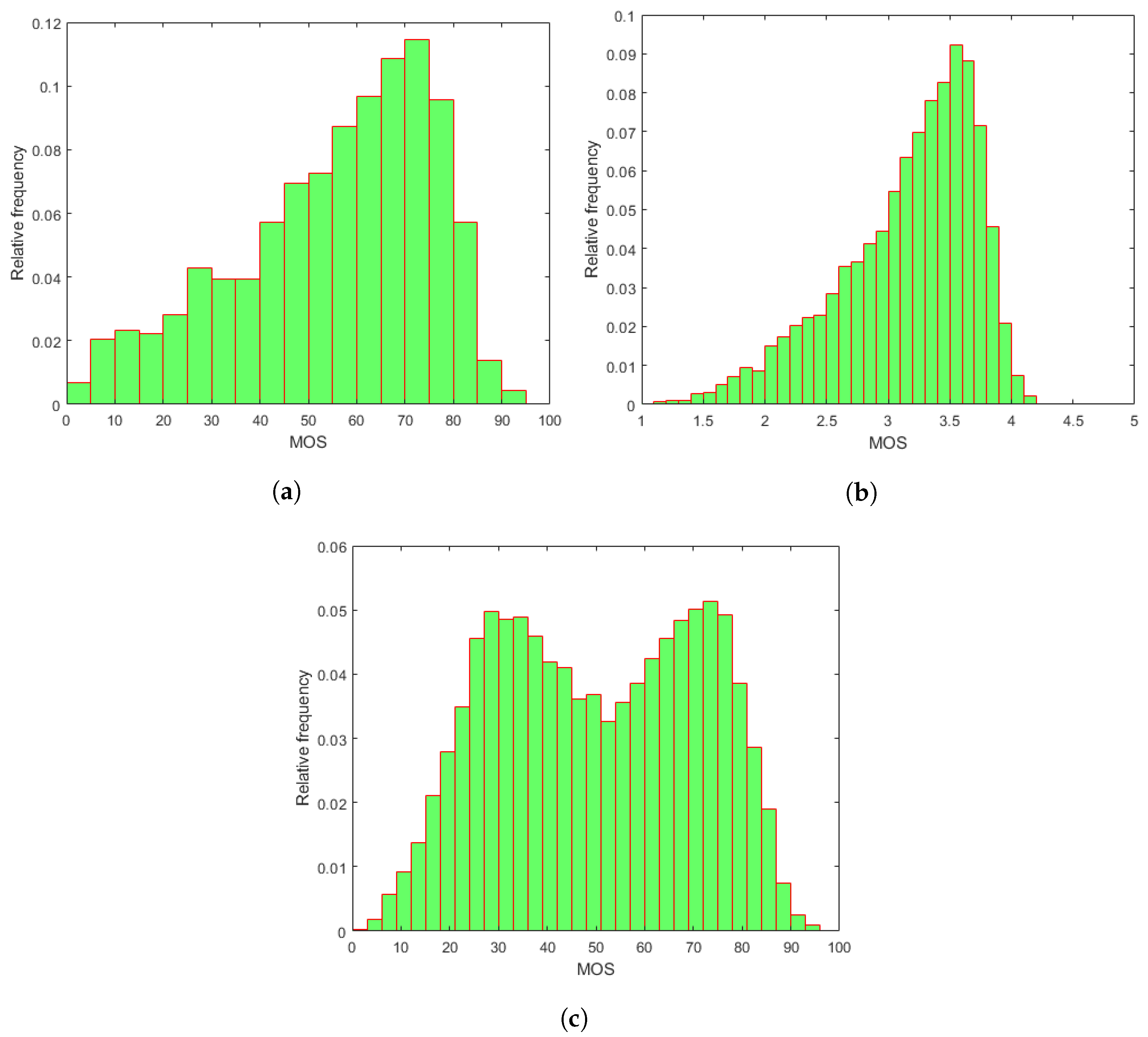

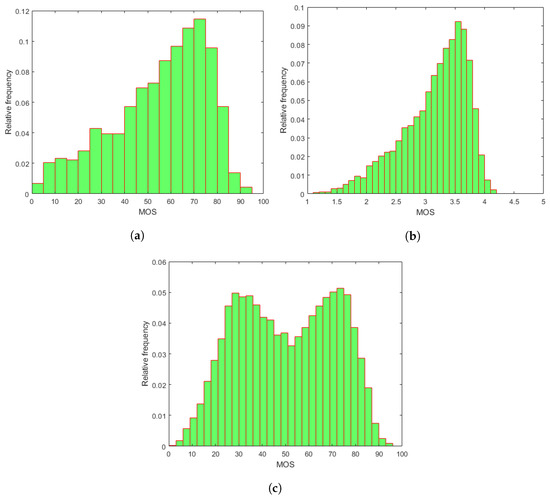

To evaluate our proposed method and compare it against the state-of-the-art, three publicly available databases containing RGB images with authentic distortions were utilized: CLIVE [45], KonIQ-10k [46], and SPAQ [47]. The main properties of these databases are given in Table 1, and their empirical mean opinion score (MOS) distributions are depicted in Figure 1. In the field of IQA, MOS corresponds to the arithmetic mean of the collected individual quality ratings. Distortions are considered authentic if they were introduced into the images during the everyday (usually non-expert) use of imaging devices, for example, overexposure, underexposure, camera jitter, motion blur, or noise from camera vibration [48].

Table 1.

Summary about the applied benchmark IQA databases with authentic distortions. DSLR: digital single-lens reflex camera. DSC: digital still camera. SPHN: smartphone.

Figure 1.

Empirical MOS distributions in the applied IQA databases: (a) CLIVE [45], (b) KonIQ-10k [46], and (c) SPAQ [47].

3.1.2. Evaluation

The evaluation of NR-IQA algorithms relies on measuring the correlation between predicted and ground truth perceptual quality scores. In the literature, Pearson's linear correlation coefficient (PLCC), Spearman's rank-order correlation coefficient (SROCC), and Kendall's rank-order correlation coefficient (KROCC) are widely applied and accepted for this purpose [49]. As recommended by Sheikh et al. [50], a non-linear mapping was carried out between the predicted and the ground truth scores before the computation of PLCC, using a logistic function with five parameters,

$$Q = \beta_1\left(\frac{1}{2} - \frac{1}{1 + e^{\beta_2 (Q_p - \beta_3)}}\right) + \beta_4 Q_p + \beta_5,$$

where $Q_p$ and $Q$ indicate the predicted and mapped scores, respectively. Further, $\beta_i$, $i = 1, \ldots, 5$, denote the fitting parameters.
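To make the mapping concrete, here is a minimal Python sketch of the five-parameter logistic function above (Python is our choice; the experiments themselves were run in MATLAB). The name `logistic5` and the parameter values in the example are purely illustrative, and the fitting of β1 to β5 is not shown.

```python
import numpy as np

def logistic5(q_pred, b1, b2, b3, b4, b5):
    """Map predicted scores Q_p onto the MOS scale with the 5-parameter logistic."""
    q_pred = np.asarray(q_pred, dtype=float)
    logistic_part = 0.5 - 1.0 / (1.0 + np.exp(b2 * (q_pred - b3)))
    return b1 * logistic_part + b4 * q_pred + b5

# At Q_p = b3 the logistic part vanishes and only the linear term b4 * b3 + b5 remains.
print(logistic5(2.0, 10.0, 1.5, 2.0, 0.5, 1.0))  # 2.0
```

In practice, the β parameters would be estimated by nonlinear least squares on the (predicted, MOS) pairs, for example with `scipy.optimize.curve_fit` in Python, before PLCC is computed on the mapped scores.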

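As is common in machine learning, and in NR-IQA in particular, 80% of the images in a database were used for training and the remaining 20% for testing. Median PLCC, SROCC, and KROCC values measured over 100 random train–test splits are reported in this study. PLCC, SROCC, and KROCC between vectors $x$ and $y$ can be defined as

$$\mathrm{PLCC}(x, y) = \frac{\sum_{i=1}^{N} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{N} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{N} (y_i - \bar{y})^2}},$$

$$\mathrm{SROCC}(x, y) = 1 - \frac{6 \sum_{i=1}^{N} d_i^2}{N (N^2 - 1)},$$

$$\mathrm{KROCC}(x, y) = \frac{N_c - N_d}{\frac{1}{2} N (N - 1)},$$

where $d_i$ is the difference between the ranks of $x_i$ and $y_i$ (the SROCC expression assumes no ties), $N$ stands for the length of the vectors, $\bar{x}$ and $\bar{y}$ are the means of the vectors, and $N_c$ and $N_d$ indicate the number of concordant and discordant pairs between $x$ and $y$, respectively.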
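The three correlation coefficients can be sketched in a few lines of NumPy. This is an illustrative implementation under our own naming (`plcc`, `srocc`, `krocc`), not the code used in the experiments: SROCC is computed as the PLCC of average ranks, which coincides with the $d_i$ formula when there are no ties, and KROCC is the tau-a variant that matches the $N_c - N_d$ formula above.

```python
import numpy as np

def average_ranks(v):
    """Ranks 1..N; tied values share the average of their ranks."""
    v = np.asarray(v, dtype=float)
    ranks = np.empty(len(v))
    ranks[np.argsort(v, kind="mergesort")] = np.arange(1, len(v) + 1)
    for value in np.unique(v):          # average ranks within each group of ties
        tied = v == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def plcc(x, y):
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.sum(xc * yc) / np.sqrt(np.sum(xc**2) * np.sum(yc**2)))

def srocc(x, y):
    # PLCC of the ranks; equals 1 - 6*sum(d_i^2) / (N(N^2 - 1)) when there are no ties.
    return plcc(average_ranks(x), average_ranks(y))

def krocc(x, y):
    # Kendall's tau-a: (N_c - N_d) / (N(N - 1)/2) over all pairs i < j.
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    n, n_c, n_d = len(x), 0, 0
    for i in range(n - 1):
        s = np.sign(x[i + 1:] - x[i]) * np.sign(y[i + 1:] - y[i])
        n_c += int(np.sum(s > 0))
        n_d += int(np.sum(s < 0))
    return (n_c - n_d) / (n * (n - 1) / 2)

mos = [1, 2, 3, 4]
pred = [1, 3, 2, 4]
print(srocc(mos, pred), krocc(mos, pred))  # 0.8 0.6666666666666666
```

The explicit pair loop in `krocc` is O(N²), which is acceptable for test sets of a few thousand images; library routines such as `scipy.stats.kendalltau` are faster but default to the tie-corrected tau-b.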

Our experiments were carried out in MATLAB R2021a, mainly employing functions of the Image Processing Toolbox and the Statistics and Machine Learning Toolbox. The main characteristics of the computer configuration used in our experiments are outlined in Table 2.

Table 2.

The applied computer configuration of the experiments.

3.2. Methods

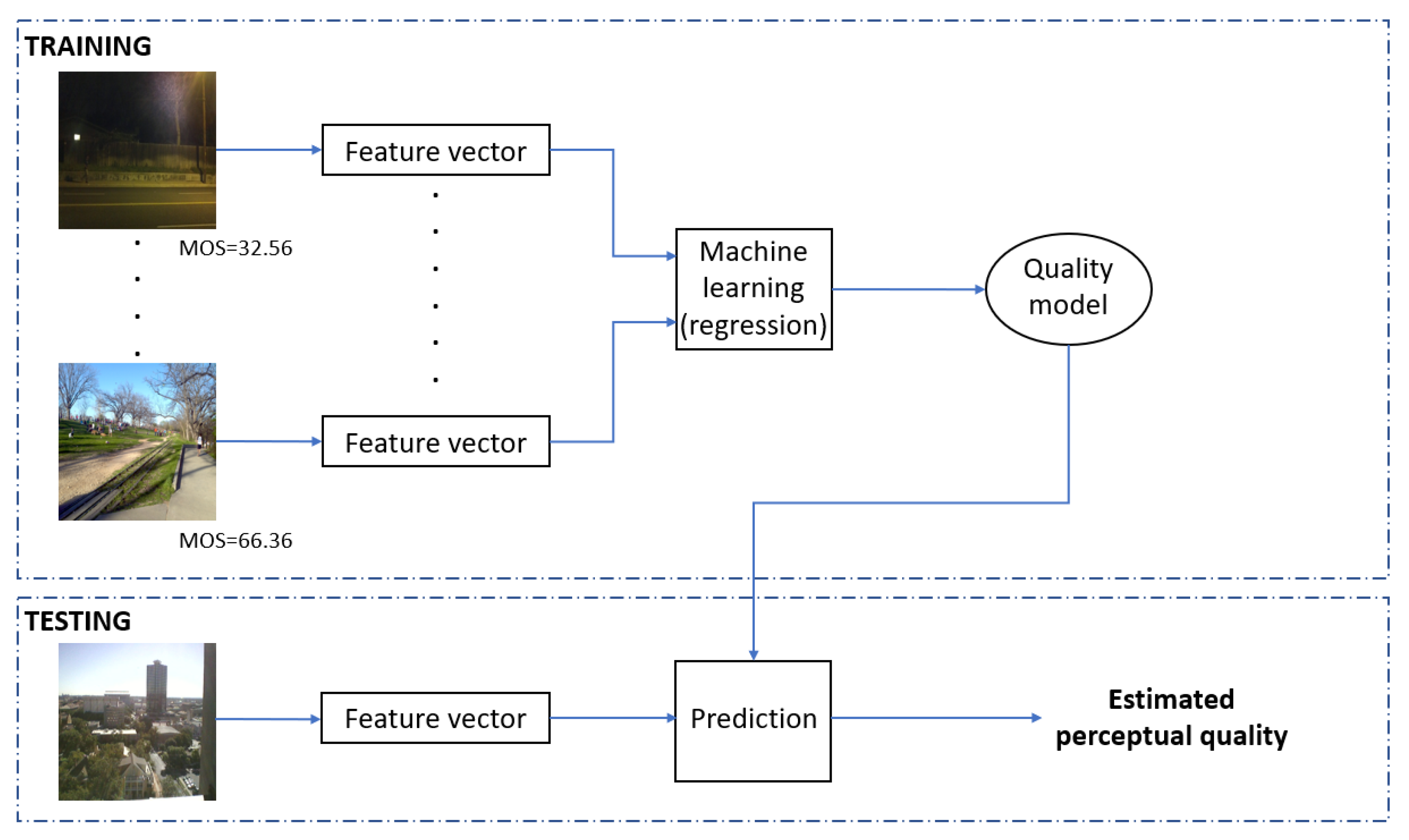

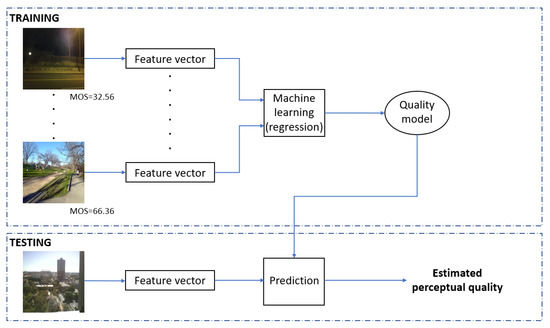

A high-level summary of the proposed method is depicted in Figure 2, while Table 3 sums up the introduced and applied quality-aware features. As Figure 2 shows, feature vectors are extracted from the set of training images to obtain a quality model with the help of a machine learning algorithm. Formally, our quality model is defined by $q = G(\mathbf{f})$, where $q$ is the estimated quality score, $\mathbf{f} \in \mathbb{R}^{93}$ is the feature vector of the image, and $G$ is a regression model, either Gaussian process regression (GPR) or a support vector regressor (SVR). In the training phase, the model is optimized to minimize the distance between the estimated and ground truth quality scores. In the testing phase, the obtained quality model $G$ is applied to estimate the perceptual quality of previously unseen test images. As described in the previous subsection, the quality model is evaluated by measuring the correlation strength between the predicted and ground truth quality scores, and median PLCC, SROCC, and KROCC values over 100 random train–test splits are reported.
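The train/test protocol can be sketched as follows. This is a hedged illustration, not the authors' MATLAB code: `ridge_fit_predict` is a hypothetical linear stand-in for $G$ (the paper uses GPR or SVR), the logistic mapping before PLCC is omitted for brevity, and all helper names are our own.

```python
import numpy as np

def simple_ranks(v):
    """Ranks 0..N-1; ties are broken arbitrarily (acceptable for continuous scores)."""
    r = np.empty(len(v))
    r[np.argsort(v)] = np.arange(len(v))
    return r

def ridge_fit_predict(X_tr, y_tr, X_te, lam=1e-3):
    """Hypothetical stand-in for the regressor G: standardized linear ridge regression."""
    mu, sd = X_tr.mean(axis=0), X_tr.std(axis=0) + 1e-12
    A = np.hstack([(X_tr - mu) / sd, np.ones((len(X_tr), 1))])   # bias column
    B = np.hstack([(X_te - mu) / sd, np.ones((len(X_te), 1))])
    w = np.linalg.solve(A.T @ A + lam * np.eye(A.shape[1]), A.T @ y_tr)
    return B @ w

def evaluate(X, mos, fit_predict=ridge_fit_predict, n_splits=100, train_frac=0.8, seed=0):
    """Median PLCC and SROCC over random 80/20 train-test splits (no logistic mapping)."""
    rng = np.random.default_rng(seed)
    n = len(mos)
    n_train = int(round(train_frac * n))
    plccs, sroccs = [], []
    for _ in range(n_splits):
        perm = rng.permutation(n)
        tr, te = perm[:n_train], perm[n_train:]
        pred = fit_predict(X[tr], mos[tr], X[te])
        plccs.append(np.corrcoef(pred, mos[te])[0, 1])
        sroccs.append(np.corrcoef(simple_ranks(pred), simple_ranks(mos[te]))[0, 1])
    return float(np.median(plccs)), float(np.median(sroccs))
```

Passing the regressor as a function keeps the protocol independent of the model, so a GPR or SVR implementation can be swapped in without touching the split logic.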

Figure 2.

Workflow of the proposed NR-IQA algorithm.

The main contribution of the proposed method lies in the quality-aware features outlined in Table 3. To tackle the wide variety of authentic distortions, a broad spectrum of statistical local and global features was applied. Artificial distortions (such as JPEG or JPEG2000 compression) are usually distributed uniformly in an image and can therefore be characterized well by global, homogeneous features [51]. However, authentic distortions often appear locally in a digital image and are better captured by local, non-homogeneous image features. Authentic distortions are mainly introduced into images during the everyday (non-expert) use of imaging devices, for example, overexposure, underexposure, camera jitter, motion blur, or noise from camera vibration [48]. In this study, the statistics of local feature descriptors, supplemented by global features, were used to construct a novel image quality model. Local feature descriptors were designed to characterize images from the viewpoint of the human visual system [52]. For instance, FAST [53] and Harris [54] are suitable for finding small, single-scale keypoints, whereas other local feature descriptors, such as SURF [55], find multi-scale keypoints. Although they are designed to exhibit some invariance to noise and illumination changes, none of them is perfectly robust; this is why their statistics may help characterize local image quality. Moreover, images with authentic distortions may suffer from overexposure or underexposure, which influence image content globally. The main goal of this study was to demonstrate empirically that the fusion of local and global features can effectively estimate the perceptual quality of digital images with authentic distortions. In total, 93 features were applied in this study, 80 of which were introduced for NR-IQA.
The applied features can be divided into four groups: the statistics of local feature descriptors measured on the grayscale image (f1-f35 in Table 3) and on the Prewitt-filtered image (f36-f70), Hu invariant moments computed from the binarized Sobel edge map of the input image (f71-f77), perceptual features (f78-f87), and the relative Grünwald–Letnikov derivative and gradient statistics (f88-f93). In the following subsections, each group of features is introduced in detail.

Table 3.

Summary of the applied features. Quality-aware features proposed by this paper are in bold.


| Feature Number | Input | Feature | Number of Features |
|---|---|---|---|
| f1-f5 | SURF [55], grayscale image | **mean, median, std, skewness, kurtosis** | 5 |
| f6-f10 | FAST [53], grayscale image | **mean, median, std, skewness, kurtosis** | 5 |
| f11-f15 | BRISK [56], grayscale image | **mean, median, std, skewness, kurtosis** | 5 |
| f16-f20 | KAZE [57], grayscale image | **mean, median, std, skewness, kurtosis** | 5 |
| f21-f25 | ORB [58], grayscale image | **mean, median, std, skewness, kurtosis** | 5 |
| f26-f30 | Harris [54], grayscale image | **mean, median, std, skewness, kurtosis** | 5 |
| f31-f35 | Minimum eigenvalue [59], grayscale image | **mean, median, std, skewness, kurtosis** | 5 |
| f36-f40 | SURF [55], Prewitt-filtered image | **mean, median, std, skewness, kurtosis** | 5 |
| f41-f45 | FAST [53], Prewitt-filtered image | **mean, median, std, skewness, kurtosis** | 5 |
| f46-f50 | BRISK [56], Prewitt-filtered image | **mean, median, std, skewness, kurtosis** | 5 |
| f51-f55 | KAZE [57], Prewitt-filtered image | **mean, median, std, skewness, kurtosis** | 5 |
| f56-f60 | ORB [58], Prewitt-filtered image | **mean, median, std, skewness, kurtosis** | 5 |
| f61-f65 | Harris [54], Prewitt-filtered image | **mean, median, std, skewness, kurtosis** | 5 |
| f66-f70 | Minimum eigenvalue [59], Prewitt-filtered image | **mean, median, std, skewness, kurtosis** | 5 |
| f71-f77 | Binary Sobel edge map | **Hu invariant moments [60]** | 7 |
| f78-f87 | RGB image | Perceptual features | 10 |
| f88 | GL-GM map | **histogram variance** | 1 |
| f89 | GL-GM map | **histogram variance** | 1 |
| f90 | GL-GM map | **histogram variance** | 1 |
| f91 | GM map [61] | histogram variance | 1 |
| f92 | RO map [61] | histogram variance | 1 |
| f93 | RM map [61] | histogram variance | 1 |

3.3. Statistics of Local Feature Descriptors

In contrast to artificially distorted images, where distortions are uniformly distributed, natural or authentic distortions are often present locally in images. This is why the statistics of local feature descriptors are proposed as quality-aware features in this study. We calculated the statistics of the following local feature detectors: SURF [55], FAST [53], BRISK [56], KAZE [57], ORB [58], Harris [54], and minimum eigenvalue [59]. Specifically, the 250 strongest interest points were detected separately with each of the above-mentioned local feature detectors, and the corresponding feature vectors were determined. The following statistics of the obtained feature vectors were considered as local features: mean, median, standard deviation, skewness, and kurtosis. The skewness of a vector $x$ containing $n$ elements is calculated as

$$s(x) = \frac{\frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^3}{\left(\sqrt{\frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^2}\right)^3},$$

while its kurtosis can be given as

$$k(x) = \frac{\frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^4}{\left(\frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^2\right)^2},$$

where $\bar{x}$ stands for the arithmetic mean of $x$. Specifically, these statistics were calculated for the feature vector of each interest point, and their arithmetic means over the interest points were taken as quality-aware features. Moreover, to increase the distinctiveness of the local feature detectors [62], the same statistics were also extracted from the version of the input image filtered with Prewitt operators [63].
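A minimal sketch of how the five statistics could be computed and averaged over interest points is shown below. It assumes that the descriptors of one detector have already been extracted into a K×D NumPy array (for example, with MATLAB's `extractFeatures` or OpenCV; that step is not shown). The function names, the `ddof=1` choice for the standard deviation, and returning 0 for constant vectors are our assumptions, not details confirmed by the text.

```python
import numpy as np

def five_stats(v):
    """mean, median, std, skewness, kurtosis of a 1-D vector.

    Skewness and kurtosis use the 1/n (biased) moments of the formulas above;
    the std uses ddof=1 (an assumption, mirroring MATLAB's default std).
    """
    v = np.asarray(v, dtype=float)
    c = v - v.mean()
    m2, m3, m4 = np.mean(c**2), np.mean(c**3), np.mean(c**4)
    skew = m3 / m2**1.5 if m2 > 0 else 0.0   # 0 for constant vectors (assumption)
    kurt = m4 / m2**2 if m2 > 0 else 0.0
    return np.array([v.mean(), np.median(v), v.std(ddof=1), skew, kurt])

def descriptor_statistics(descriptors):
    """descriptors: (K, D) array with one descriptor per interest point (K <= 250).

    Computes the five statistics per descriptor, then averages them over the K points.
    """
    D = np.atleast_2d(np.asarray(descriptors, dtype=float))
    per_point = np.array([five_stats(row) for row in D])   # shape (K, 5)
    return per_point.mean(axis=0)
```

Applying `descriptor_statistics` once per detector on the grayscale image and once on the Prewitt-filtered image would yield 7 × 5 × 2 = 70 values, matching f1-f70 in Table 3.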

3.4. Hu Invariant Moments

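The moment $m_{pq}$ is a projection of a function (in image processing, an image $f(x, y)$) onto the polynomial basis $x^p y^q$. Formally, it can be written as

$$m_{pq} = \sum_{x=1}^{N} \sum_{y=1}^{M} x^p y^q f(x, y),$$

where $N$ and $M$ are the width and the height of image $f(x, y)$, respectively. The order of the moment is defined as $p + q$. It is easy to see that $m_{00}$ is the mass of the image. Further, $\bar{x} = m_{10}/m_{00}$ and $\bar{y} = m_{01}/m_{00}$ are the coordinates of the center of gravity of the image. The central moment is defined as

$$\mu_{pq} = \sum_{x=1}^{N} \sum_{y=1}^{M} (x - \bar{x})^p (y - \bar{y})^q f(x, y).$$

It is evident that $\mu_{00} = m_{00}$. Next, the normalized central moment is given as

$$\eta_{pq} = \frac{\mu_{pq}}{\mu_{00}^{\gamma}},$$

where

$$\gamma = \frac{p + q}{2} + 1, \quad p + q \geq 2.$$

Hu [60] proposed seven invariant moments, which are defined using the normalized central moments as follows:

$$\begin{aligned}
\phi_1 &= \eta_{20} + \eta_{02},\\
\phi_2 &= (\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2,\\
\phi_3 &= (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2,\\
\phi_4 &= (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2,\\
\phi_5 &= (\eta_{30} - 3\eta_{12})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right]\\
&\quad + (3\eta_{21} - \eta_{03})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right],\\
\phi_6 &= (\eta_{20} - \eta_{02})\left[(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] + 4\eta_{11}(\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03}),\\
\phi_7 &= (3\eta_{21} - \eta_{03})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right]\\
&\quad - (\eta_{30} - 3\eta_{12})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right].
\end{aligned}$$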
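The moment definitions above translate directly into NumPy. The sketch below (the function name `hu_moments` is ours) computes the seven invariants of a 2-D array using 1-based coordinates as in the formulas; OpenCV's `cv2.HuMoments` is a library alternative.

```python
import numpy as np

def hu_moments(img):
    """Seven Hu invariant moments of a 2-D (e.g. binary) image f(x, y)."""
    f = np.asarray(img, dtype=float)
    M, N = f.shape                       # rows = height M, columns = width N
    y, x = np.mgrid[1:M + 1, 1:N + 1]    # 1-based pixel coordinates
    m00 = f.sum()                        # mass of the image
    xb, yb = (x * f).sum() / m00, (y * f).sum() / m00   # center of gravity

    def eta(p, q):
        mu = ((x - xb) ** p * (y - yb) ** q * f).sum()  # central moment mu_pq
        return mu / m00 ** ((p + q) / 2 + 1)            # normalized, mu_00 = m_00

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03
    return np.array([
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        a ** 2 + b ** 2,
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2) + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2) - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2),
    ])
```

Because the moments are centered and normalized, shifting or rotating the shape by 90 degrees leaves all seven values unchanged, which is a quick way to sanity-check an implementation.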

The human visual system is very sensitive to edge and contour information, since this information provides reliable cues about the structure of an image [64,65]. To characterize the contour information of an image, the Hu invariant moments [60] of the image's binary Sobel edge map were used as quality-aware features. The horizontal and vertical derivative approximations of the input image $I$ were determined as

$$G_x = \begin{bmatrix} -1 & 0 & +1 \\ -2 & 0 & +2 \\ -1 & 0 & +1 \end{bmatrix} * I, \qquad G_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ +1 & +2 & +1 \end{bmatrix} * I,$$

where $*$ is the convolution operator. Next, the gradient magnitude was computed as

$$G = \sqrt{G_x^2 + G_y^2}.$$

The binary Sobel edge map was obtained from the gradient magnitude $G$ by applying a cutoff threshold equal to four times the mean of $G$.
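The following sketch builds the binary Sobel edge map with the threshold described above (four times the mean gradient magnitude). Replicate padding at the borders and the helper names are our assumptions, and the sketch does not reproduce every detail of MATLAB's `edge` function, such as edge thinning.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T   # [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def filter3x3(img, kernel):
    """3x3 correlation with replicate padding; it differs from convolution only
    by a sign flip of the kernel, which does not change the gradient magnitude."""
    p = np.pad(img, 1, mode="edge")
    H, W = img.shape
    out = np.zeros((H, W))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * p[i:i + H, j:j + W]
    return out

def sobel_edge_map(gray, factor=4.0):
    """Binary edge map: pixels whose gradient magnitude exceeds factor * mean(G)."""
    gray = np.asarray(gray, dtype=float)
    gx, gy = filter3x3(gray, SOBEL_X), filter3x3(gray, SOBEL_Y)
    g = np.sqrt(gx**2 + gy**2)
    return g > factor * g.mean()
```

The resulting boolean array can be passed directly to a Hu moment routine, giving the seven values f71-f77.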

3.5. Perceptual Features

Some perceptual features, which have been shown to be consistent with human quality judgments [66], were also built into our model. In the following, an RGB color image is denoted by $I$, and $I_c$, $c \in \{R, G, B\}$, is a color channel of the input image $I$. Moreover, $x$ stands for the pixel coordinate, and we assume that $I$ has $N$ pixels.

- Blur: It is probably the most dominant source of perceptual image quality deterioration in digital imaging [67]. To quantify the emergence of the blur effect, the blur metric of Crété-Roffet et al. [68], which is based on the measurements of intensity variations between neighboring pixels, was implemented due to its low computational costs.

- Colorfulness: In [69], Choi et al. pointed out that colorfulness is a critical component of human image quality judgment. We determined colorfulness using the following formula proposed by Hasler and Suesstrunk [70]:

  $$\mathrm{CF} = \sqrt{\sigma_{rg}^2 + \sigma_{yb}^2} + 0.3\sqrt{\mu_{rg}^2 + \mu_{yb}^2},$$

  where $\sigma$ and $\mu$ stand for the standard deviation and the mean of the matrices denoted in the subscripts, respectively. These matrices are given as

  $$rg = R - G, \qquad yb = \frac{1}{2}(R + G) - B,$$

  where $R$, $G$, and $B$ are the red, green, and blue color channels, respectively.

- Chroma: It is one of the relevant image features among a series of color metrics in the CIELAB color space. Moreover, chroma is significantly correlated with haze, blur, or motion blur in the image [70]. It is defined as

  $$C(x) = \sqrt{a(x)^2 + b(x)^2},$$

  where $a$ and $b$ are the corresponding color channels of the CIELAB color space. The arithmetic mean of $C$ was used as a perceptual feature in our model.

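- Color gradient: The estimated color gradient magnitude (CGM) map is defined as

  $$\mathrm{CGM}(x) = \sqrt{\sum_{c \in \{R, G, B\}} \left[\left(\partial_x I_c(x)\right)^2 + \left(\partial_y I_c(x)\right)^2\right]},$$

  where $\partial_x I_c$ and $\partial_y I_c$ stand for the approximate directional derivatives of $I_c$ in the horizontal $x$ and vertical $y$ directions, respectively. In our study, the mean and the standard deviation of $\mathrm{CGM}$ are utilized as quality-aware features.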

- Dark channel feature (DCF): In the literature, Tang et al. [71] proposed the DCF [72] for image quality assessment, since it can effectively identify haze effects in images. A dark channel is defined as

  $$I^{\mathrm{dark}}(x) = \min_{y \in \Omega(x)} \left( \min_{c \in \{R, G, B\}} I_c(y) \right),$$

  where $\Omega(x)$ denotes the image patch around the pixel location $x$. In our implementation, an image patch corresponds to a square. Next, the DCF is defined as

  $$\mathrm{DCF} = \frac{1}{|I|} \sum_{x} I^{\mathrm{dark}}(x),$$

  where $|I|$ is the size of image $I$.

- Michelson contrast: Contrast is one of the most fundamental characteristics of an image, since it influences the ability to distinguish objects from each other in an image [73]. Thus, contrast information is built into our NR-IQA model. The Michelson contrast measures the difference between the maximum and minimum values of an image [74] and is defined as

  $$C_M = \frac{I_{\max} - I_{\min}}{I_{\max} + I_{\min}},$$

  where $I_{\max}$ and $I_{\min}$ denote the maximum and minimum pixel values of $I$, respectively.

- Root mean square (RMS) contrast is defined as

  $$C_{\mathrm{RMS}} = \sqrt{\frac{1}{N} \sum_{x} \left(I(x) - \bar{I}\right)^2},$$

  where $\bar{I}$ denotes the mean luminance of $I$.

- Global contrast factor (GCF): In contrast to the Michelson and RMS contrasts, GCF considers multiple resolution levels of an image to estimate human contrast perception [75]. It is defined as

  $$\mathrm{GCF} = \sum_{i=1}^{9} w_i C_i, \tag{31}$$

  where the $C_i$'s are the average local contrasts and the $w_i$'s are the weighting factors. The authors examined nine different resolution levels, which is why the number of weighting factors is nine; the $w_i$'s are defined as

  $$w_i = \left(-0.406385 \cdot \frac{i}{9} + 0.334573\right) \cdot \frac{i}{9} + 0.0877526, \quad i \in \{1, \ldots, 9\},$$

  which is the result of an optimal approximation obtained by best fitting [75]. The local contrasts are defined as follows. First, the image of size $w \times h$ is rearranged into a one-dimensional vector using row-wise ordering. Next, the local contrast at pixel location $j$ is defined as

  $$lc_j = \frac{|L_j - L_{j-1}| + |L_j - L_{j+1}| + |L_j - L_{j-w}| + |L_j - L_{j+w}|}{4},$$

  where $L_j$ denotes the pixel value at location $j$ after gamma correction. Finally, the average local contrast at resolution $i$ (denoted by $C_i$ in Equation (31)) is determined as the average of all $lc_j$'s over the entire image.

- Entropy: It is a quantitative measure of the information carried by an image [76]. Typically, an image of better quality is able to transmit more information, which is why entropy was chosen as a quality-aware feature. The entropy of a grayscale image is defined as

  $$E = -\sum_{i} p_i \log_2 p_i,$$

  where $p$ consists of the normalized histogram counts of the grayscale image.
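Several of the global perceptual features listed above are short enough to sketch directly. The code below is an illustrative NumPy version of colorfulness, Michelson and RMS contrast, entropy, the dark channel feature, and GCF, assuming 8-bit inputs. The 15×15 dark-channel patch, γ = 2.2, and the 2×2 block-averaging pyramid used for the GCF resolution levels are hypothetical choices that the text does not fix, and the blur, chroma, and color gradient features are omitted.

```python
import numpy as np

def colorfulness(rgb):
    """Hasler-Suesstrunk colorfulness of an (H, W, 3) RGB array."""
    R, G, B = (rgb[..., k].astype(float) for k in range(3))
    rg, yb = R - G, 0.5 * (R + G) - B
    return float(np.hypot(rg.std(), yb.std()) + 0.3 * np.hypot(rg.mean(), yb.mean()))

def michelson_contrast(gray):
    lo, hi = float(np.min(gray)), float(np.max(gray))
    return (hi - lo) / (hi + lo) if hi + lo > 0 else 0.0

def rms_contrast(gray):
    g = np.asarray(gray, dtype=float)
    return float(np.sqrt(np.mean((g - g.mean()) ** 2)))

def entropy(gray):
    """Shannon entropy (bits) of an 8-bit grayscale image, 256 bins."""
    counts, _ = np.histogram(gray, bins=256, range=(0, 256))
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def dark_channel_feature(rgb, patch=15):
    """Mean of the dark channel; patch=15 is a hypothetical patch size."""
    m = np.asarray(rgb, dtype=float).min(axis=2)      # min over color channels
    r = patch // 2
    p = np.pad(m, r, mode="edge")
    H, W = m.shape
    dark = np.full((H, W), np.inf)
    for i in range(2 * r + 1):                        # brute-force min filter over the patch
        for j in range(2 * r + 1):
            dark = np.minimum(dark, p[i:i + H, j:j + W])
    return float(dark.mean())                         # (1/|I|) * sum of the dark channel

def global_contrast_factor(gray, gamma=2.2, levels=9):
    """GCF sketch; gamma=2.2 and 2x2 block averaging between levels are assumptions."""
    L = (np.asarray(gray, dtype=float) / 255.0) ** gamma
    gcf = 0.0
    for i in range(1, levels + 1):
        p = np.pad(L, 1, mode="edge")                 # replicate borders
        c = p[1:-1, 1:-1]
        lc = (np.abs(c - p[1:-1, :-2]) + np.abs(c - p[1:-1, 2:])
              + np.abs(c - p[:-2, 1:-1]) + np.abs(c - p[2:, 1:-1])) / 4.0
        w = (-0.406385 * i / 9 + 0.334573) * i / 9 + 0.0877526
        gcf += w * lc.mean()                          # w_i * C_i
        H, W = L.shape
        if H < 2 or W < 2:
            break
        L = L[:H - H % 2, :W - W % 2].reshape(H // 2, 2, W // 2, 2).mean(axis=(1, 3))
    return float(gcf)
```

The 2-D neighbor formulation of the local contrast does not wrap around row ends the way the row-wise 1-D indexing in the definition would; for a sketch this border difference is negligible.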

Table 4 shows the average values of the perceptual features in five equal MOS intervals of CLIVE [45], from very low to very high image quality. These numerical results show that the mean values of the applied perceptual features are roughly proportional to the perceptual quality class. For instance, the mean values of several perceptual features (the mean and the standard deviation of the color gradient, Michelson contrast, RMS contrast, GCF, and entropy) increase monotonically with the quality class. Similarly, Table 5 shows the standard deviations of the perceptual features in the same five MOS intervals of CLIVE [45]. The standard deviation values are also roughly proportional to the perceptual quality classes; for instance, those of several perceptual features (color gradient mean, DCF, Michelson contrast, RMS contrast, and entropy) exhibit a remarkable proportionality with the perceptual quality classes.
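The per-interval analysis behind Tables 4 and 5 can be sketched as below. The sketch assumes that the five equal-width intervals span the observed MOS range of the database; the function name and that range choice are ours.

```python
import numpy as np

def per_interval_stats(mos, features, n_bins=5):
    """Mean and std of each feature inside n_bins equal-width MOS intervals."""
    mos = np.asarray(mos, dtype=float)
    F = np.asarray(features, dtype=float).reshape(len(mos), -1)
    edges = np.linspace(mos.min(), mos.max(), n_bins + 1)
    # Digitizing against the interior edges gives bin indices 0..n_bins-1;
    # the maximum MOS falls into the last bin.
    idx = np.digitize(mos, edges[1:-1])
    means = np.full((n_bins, F.shape[1]), np.nan)
    stds = np.full_like(means, np.nan)
    for b in range(n_bins):
        sel = idx == b
        if sel.any():
            means[b] = F[sel].mean(axis=0)
            stds[b] = F[sel].std(axis=0)
    return means, stds
```

Each row of `means` and `stds` then corresponds to one quality class, from very low to very high, and each column to one perceptual feature.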

Table 4.

Mean values of perceptual features in CLIVE [45] with respect to five equal MOS intervals.

Table 5.

Standard deviation values of perceptual features in CLIVE [45] with respect to five equal MOS intervals.

3.6. Relative Grünwald–Letnikov Derivative and Gradient Statistics

In image processing, the image gradient is one of the most widely used features [77,78,79,80] and a strong predictor of image quality [61,81]. To characterize gradient degradation in the presence of image noise, on the one hand, the gradient magnitude (GM), relative gradient orientation (RO), and relative gradient magnitude (RM) maps of [61] were applied. On the other hand, the idea of GM maps was generalized using the Grünwald–Letnikov (GL) derivative [82]. Once the GM, RO, and RM maps had been computed following the recommendations of [61], their histogram variances were used as quality-aware features. Given a normalized histogram $h$, the histogram variance is defined as

$$\operatorname{var}(h) = \frac{1}{n} \sum_{i=1}^{n} \left(h_i - \bar{h}\right)^2,$$

where $n$ is the length of $h$ and $\bar{h}$ is the mean of $h$.

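The Grünwald–Letnikov derivative, introduced by Anton Karl Grünwald and Aleksey Vasilievich Letnikov, makes it possible to differentiate a function a non-integer number of times [83]. Generally, a one-dimensional function $f$ can be differentiated $n$ times for any $n \in \mathbb{N}$ using the following formula:

$$f^{(n)}(x) = \lim_{h \to 0} \frac{1}{h^n} \sum_{m=0}^{n} (-1)^m \binom{n}{m} f(x - mh),$$

where

$$\binom{n}{m} = \frac{n!}{m!\,(n-m)!}.$$

Grünwald and Letnikov generalized this approach to take the derivative of a function an arbitrary, non-integer $\alpha$ number of times. Formally, it can be written as

$${}_{a}D_{x}^{\alpha} f(x) = \lim_{h \to 0} \frac{1}{h^{\alpha}} \sum_{m=0}^{\lfloor (x-a)/h \rfloor} (-1)^m \frac{\Gamma(\alpha+1)}{\Gamma(m+1)\,\Gamma(\alpha-m+1)} f(x - mh),$$

where $\Gamma(\cdot)$ is the Gamma function, ${}_{a}D_{x}^{\alpha}$ is the $\alpha$th-order Grünwald–Letnikov derivative, and $x$ and $a$ stand for the upper and lower bounds, respectively. For a discrete two-dimensional signal (in the context of image processing, a digital image) $f(x, y)$, the GL derivative in the $x$-direction can be defined as

$$D_{x}^{\alpha} f(x, y) = \sum_{m=0}^{x-1} (-1)^m \frac{\Gamma(\alpha+1)}{\Gamma(m+1)\,\Gamma(\alpha-m+1)} f(x - m, y). \tag{39}$$

Similarly, in the $y$-direction,

$$D_{y}^{\alpha} f(x, y) = \sum_{m=0}^{y-1} (-1)^m \frac{\Gamma(\alpha+1)}{\Gamma(m+1)\,\Gamma(\alpha-m+1)} f(x, y - m). \tag{40}$$

Similar to the traditional definition, the GL gradient magnitude (GL-GM) can be given as

$$\mathrm{GL}\text{-}\mathrm{GM}(x, y) = \sqrt{\left(D_{x}^{\alpha} f(x, y)\right)^2 + \left(D_{y}^{\alpha} f(x, y)\right)^2}. \tag{41}$$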

Although the physical meaning of the GL fractional derivative is not entirely intuitive, it is important to notice that, in contrast to integer-order derivatives, GL derivatives are not local operators [84]: their value at a point depends on the function over the entire interval between the lower and upper bounds. In previous work [85], combining the global and local variations of an image, captured by GL derivatives (global) and image gradients (local), proved beneficial for FR-IQA. Note that the full-reference (FR) setting aims to evaluate an image using its distortion-free reference. In this study, in order to characterize the global variations of images for no-reference (NR) IQA, the computation of the GM [61] map was modified by using the GL derivative, as in Equations (39)–(41). More specifically, three GL-GM maps were computed with three different values of $\alpha$, and their histogram variances were taken as quality-aware features.
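The discrete GL derivative can be sketched in NumPy by generating the coefficients $(-1)^m \Gamma(\alpha+1)/(\Gamma(m+1)\Gamma(\alpha-m+1))$ with the equivalent recursion $w_m = w_{m-1}\,(1 - (\alpha+1)/m)$, which avoids evaluating Gamma functions at large arguments or at their poles. The unit step size $h = 1$ and the function names are assumptions of this sketch, not the authors' implementation.

```python
import numpy as np

def gl_coefficients(alpha, n):
    """w_m = (-1)^m Γ(α+1) / (Γ(m+1) Γ(α-m+1)) for m = 0..n-1, via recursion."""
    w = np.empty(n)
    w[0] = 1.0
    for m in range(1, n):
        w[m] = w[m - 1] * (1.0 - (alpha + 1.0) / m)
    return w

def gl_derivative(f, alpha, axis):
    """Discrete GL derivative with h = 1: sum over m = 0..x-1 of w_m * f(x - m) (1-based x)."""
    f = np.asarray(f, dtype=float)
    n = f.shape[axis]
    w = gl_coefficients(alpha, n)
    # The full convolution truncated to n samples gives sum_{m <= j} w_m * v[j - m].
    return np.apply_along_axis(lambda v: np.convolve(v, w)[:n], axis, f)

def gl_gradient_magnitude(f, alpha):
    dx = gl_derivative(f, alpha, axis=1)   # x: horizontal (columns)
    dy = gl_derivative(f, alpha, axis=0)   # y: vertical (rows)
    return np.sqrt(dx**2 + dy**2)
```

For α = 1 the coefficients reduce to [1, −1, 0, …], i.e., an ordinary backward difference, while for non-integer α every preceding pixel in the row or column contributes, which is exactly the non-local behavior described above.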

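The GM map can be given very similarly to Equation (41):

$$\mathrm{GM}(x, y) = \sqrt{I_x(x, y)^2 + I_y(x, y)^2},$$

where $I_x$ and $I_y$ stand for the approximate directional derivatives of $I$ in the horizontal $x$ and vertical $y$ directions, respectively.

The definition of the RO map is as follows [61]. First, the gradient orientation needs to be defined:

$$\theta(x, y) = \arctan\left(\frac{I_y(x, y)}{I_x(x, y)}\right).$$

The RO map can be given as

$$\mathrm{RO}(x, y) = \theta(x, y) - \theta_{\mathrm{AVE}}(x, y),$$

where $\theta_{\mathrm{AVE}}$ is the local average orientation, which the authors define as

$$\theta_{\mathrm{AVE}}(x, y) = \arctan\left(\frac{I_{y,\mathrm{AVE}}(x, y)}{I_{x,\mathrm{AVE}}(x, y)}\right),$$

where the average directional derivatives are defined as

$$I_{x,\mathrm{AVE}}(x, y) = \frac{1}{|W|} \sum_{(m, n) \in W} I_x(x - m, y - n)$$

and

$$I_{y,\mathrm{AVE}}(x, y) = \frac{1}{|W|} \sum_{(m, n) \in W} I_y(x - m, y - n),$$

where $W$ stands for the local neighborhood over which the values are computed and $|W|$ is the number of its pixels. Similar to the RO map, the RM map can be given as

$$\mathrm{RM}(x, y) = \sqrt{\left(I_x(x, y) - I_{x,\mathrm{AVE}}(x, y)\right)^2 + \left(I_y(x, y) - I_{y,\mathrm{AVE}}(x, y)\right)^2}.$$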
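A compact sketch of the GM, RO, and RM maps and of the histogram variance follows. It uses `np.gradient` (central differences) for $I_x$ and $I_y$, a 3×3 box for the neighborhood $W$, `np.arctan2` instead of $\arctan(I_y/I_x)$ to avoid division by zero, and 10 histogram bins; all of these are our assumptions rather than the settings of [61].

```python
import numpy as np

def box_mean(a, r=1):
    """Average over a (2r+1)x(2r+1) neighborhood W with replicate padding."""
    p = np.pad(a, r, mode="edge")
    H, W = a.shape
    k = 2 * r + 1
    s = np.zeros((H, W))
    for i in range(k):
        for j in range(k):
            s += p[i:i + H, j:j + W]
    return s / (k * k)

def gradient_maps(gray, r=1):
    I = np.asarray(gray, dtype=float)
    Iy, Ix = np.gradient(I)                  # rows = y, columns = x
    gm = np.sqrt(Ix**2 + Iy**2)
    Ix_ave, Iy_ave = box_mean(Ix, r), box_mean(Iy, r)
    ro = np.arctan2(Iy, Ix) - np.arctan2(Iy_ave, Ix_ave)   # relative orientation
    rm = np.sqrt((Ix - Ix_ave)**2 + (Iy - Iy_ave)**2)      # relative magnitude
    return gm, ro, rm

def histogram_variance(values, bins=10):
    h, _ = np.histogram(values, bins=bins)
    h = h / h.sum()                          # normalized histogram
    return float(np.mean((h - h.mean())**2)) # (1/n) * sum of (h_i - mean)^2
```

Note that the plain difference in `ro` is not wrapped back into (−π, π]; whether to wrap it is a further design choice this sketch leaves open.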

4. Results

In this section, our results are presented. Specifically, Section 4.1 consists of an ablation study to analyze the performance of the proposed, individual quality-aware features. Next, Section 4.2 describes the results of a performance comparison to other state-of-the-art NR-IQA methods.

4.1. Ablation Study

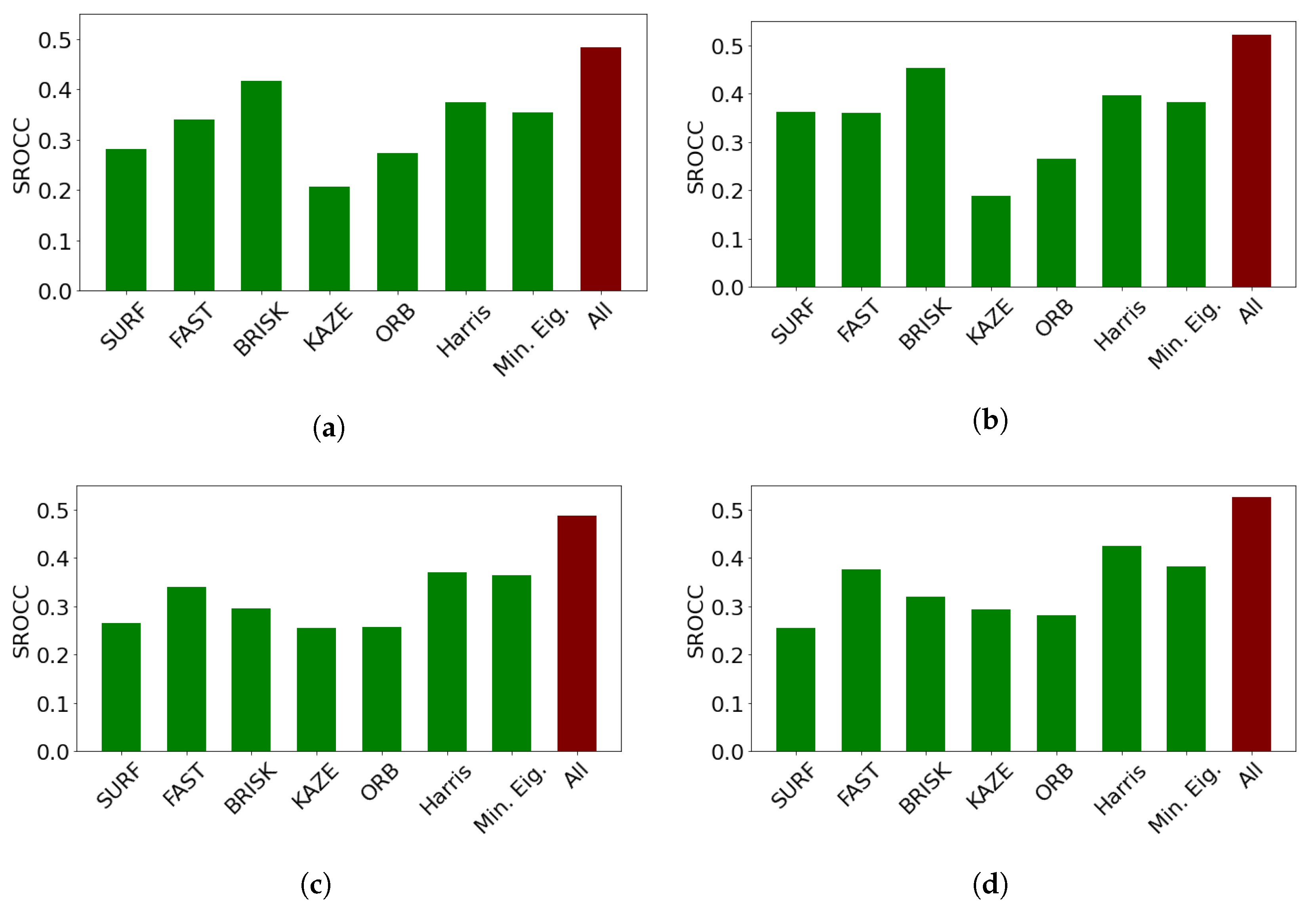

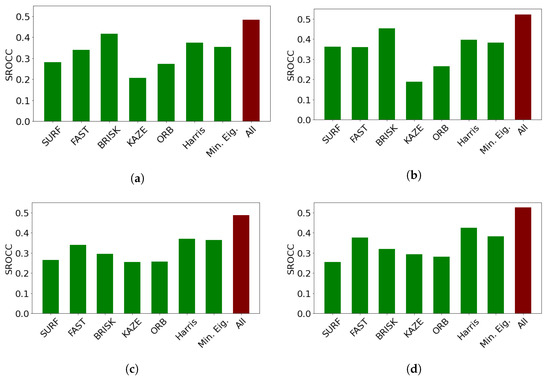

In this subsection, an ablation study on CLIVE [45] is presented to justify the design choices and demonstrate the individual performance of the proposed quality-aware features. The results are summarized in Table 6. They show that the statistics of local feature descriptors are quality-aware features that deliver a rather strong performance. However, the set of applied perceptual features delivers the strongest performance. Combining the statistics of local feature descriptors with global features further improves performance. Moreover, GPR with the rational quadratic kernel function outperforms SVR with the Gaussian kernel function as a regressor for all of the proposed quality-aware features. Figure 3 provides detailed results for all local feature descriptors. On their own, the individual local feature descriptors provide only weak or mediocre performance, whereas their concatenation provides a rather strong performance. We attribute this result to the ability of the local feature descriptors to characterize local image distortions in diverse ways. Based on these observations, we used GPR with the rational quadratic kernel function as the regressor in the proposed method, which is code-named FLG-IQA, referring to the fact that it is based on the fusion of local and global features.
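For readers who want to see what the chosen regressor computes, the sketch below implements the posterior mean of Gaussian process regression with a rational quadratic kernel, $k(r) = \sigma_f^2\left(1 + r^2/(2\alpha\ell^2)\right)^{-\alpha}$, in plain NumPy. Unlike a full GPR implementation (e.g., MATLAB's `fitrgp`), it keeps the hyperparameters fixed instead of optimizing them; the default values are illustrative assumptions.

```python
import numpy as np

def rq_kernel(A, B, length_scale=1.0, alpha=1.0, sigma_f=1.0):
    """Rational quadratic kernel: sigma_f^2 * (1 + r^2 / (2 * alpha * l^2))^(-alpha)."""
    d2 = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    d2 = np.maximum(d2, 0.0)   # guard against tiny negative values from round-off
    return sigma_f**2 * (1.0 + d2 / (2.0 * alpha * length_scale**2)) ** (-alpha)

def gpr_predict(X_tr, y_tr, X_te, noise_var=1e-2, **kernel_args):
    """Posterior mean of a zero-mean GP fitted to centered targets (fixed hyperparameters)."""
    y_mean = y_tr.mean()
    K = rq_kernel(X_tr, X_tr, **kernel_args) + noise_var * np.eye(len(X_tr))
    L = np.linalg.cholesky(K)                                    # K = L L^T
    coef = np.linalg.solve(L.T, np.linalg.solve(L, y_tr - y_mean))
    return rq_kernel(X_te, X_tr, **kernel_args) @ coef + y_mean
```

With feature vectors as rows of `X_tr` and MOS values as `y_tr`, `gpr_predict` returns quality estimates for the rows of `X_te`. The α parameter lets the kernel mix length scales, which is one plausible reason it can adapt better than a single Gaussian kernel to heterogeneous features.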

Table 6.

Ablation study on CLIVE [45] database. Median PLCC, SROCC, and KROCC values were measured over 100 random train–test splits.

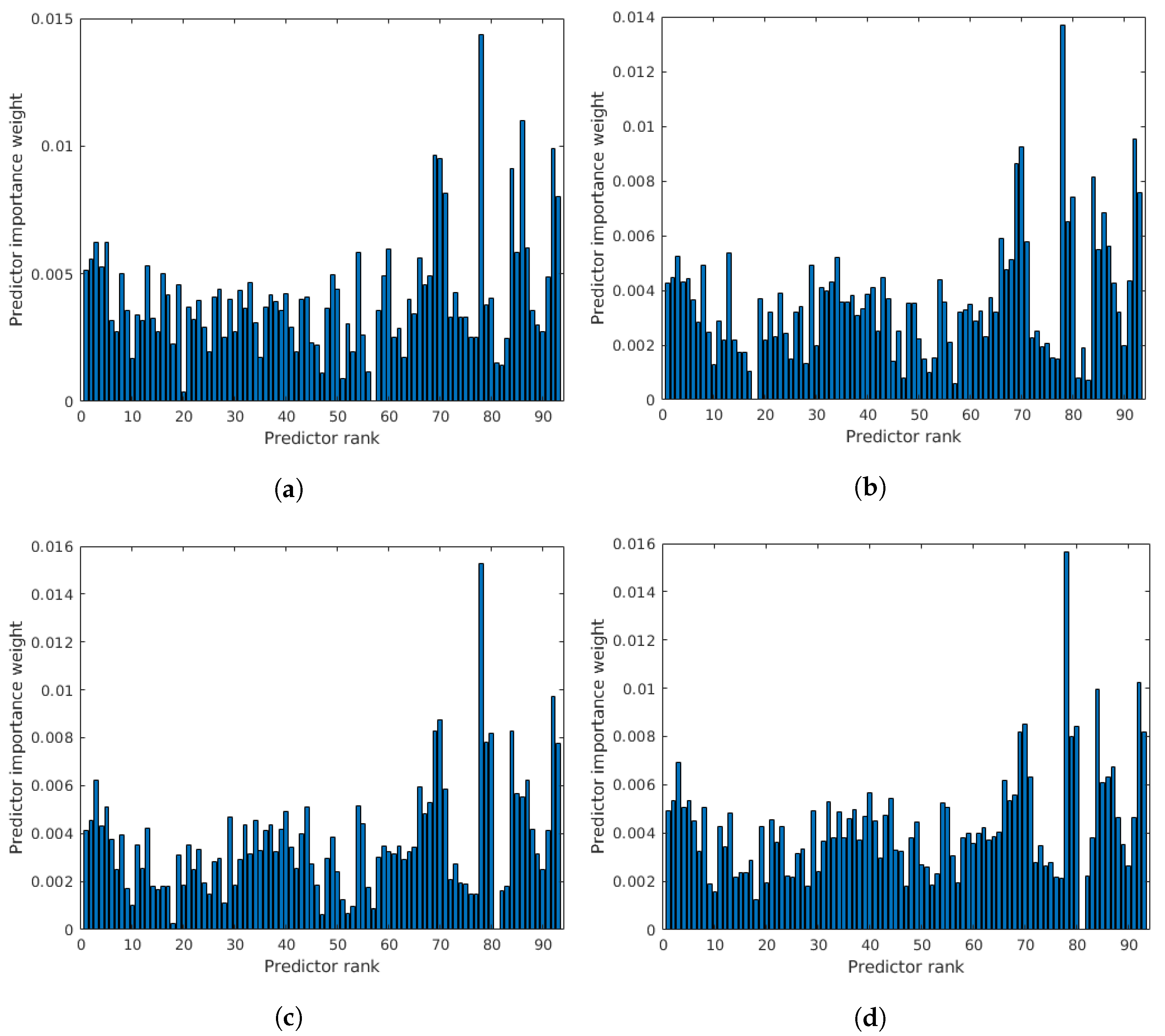

Figure 3.

Comparison of the statistics of local feature descriptors as quality-aware features in CLIVE [45]. Median SROCC values were measured over 100 random train–test splits. (a) RGB image, SVR, (b) RGB image, GPR, (c) filtered image, SVR, (d) filtered image, GPR.

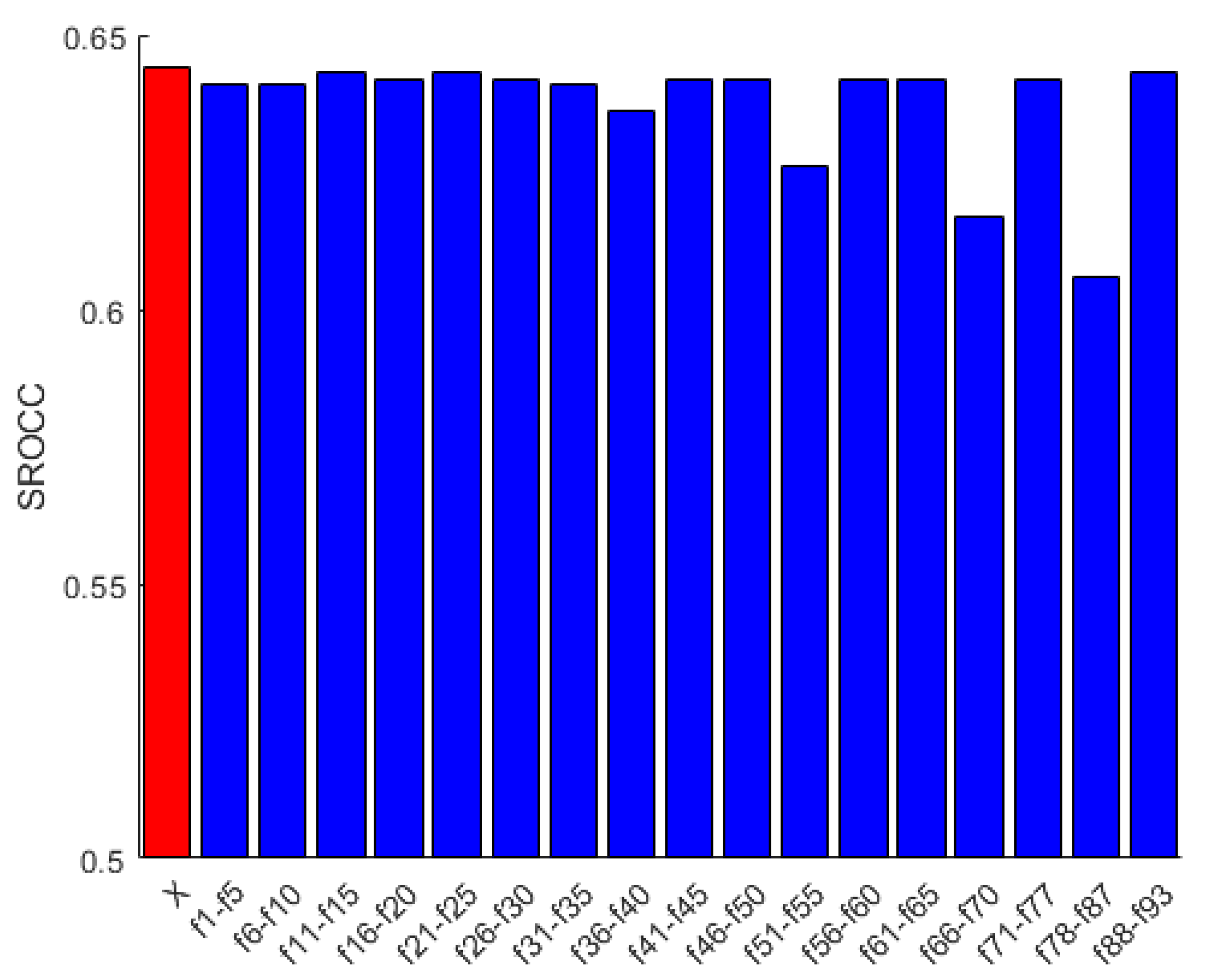

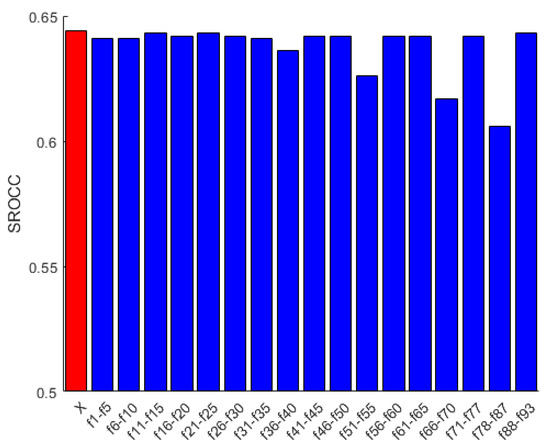

To demonstrate that all features are relevant in FLG-IQA, we present an experiment on the CLIVE [45] database, based on the evaluation protocol described in Section 3.1.2, in which a given feature is removed from the entire feature vector. As Figure 4 demonstrates, all features are important: removing any one of them degrades the performance of FLG-IQA. Among the statistics of local feature descriptors, those of the SURF, KAZE, and minimum eigenvalue descriptors computed on the Prewitt-filtered image are the most decisive for the performance of FLG-IQA. Moreover, the perceptual features contribute most to the performance of the proposed method. Contrasting the results in Figure 4 with those in Figure 3d and Table 6 reveals an interesting fact: features that strongly affect the performance of FLG-IQA do not always perform best individually. For instance, removing the statistics of the minimum eigenvalue local feature descriptor on the Prewitt-filtered image causes a significant performance drop, yet their individual performance lags behind that of the Harris statistics on the filtered image. Conversely, the Harris statistics perform rather strongly on their own but are not among the most decisive features. This indicates that the statistics of local feature descriptors are quality-aware and complement each other in NR-IQA. Furthermore, the perceptual features perform strongly individually, and removing them from the feature vector degrades performance, indicating that they are very strong predictors of perceptual image quality.
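The feature-removal experiment can be sketched as a loop that drops one feature group at a time and re-scores the reduced feature vector. In this sketch, `groups` maps hypothetical group names (for example, the statistics of one descriptor on one image version) to column indices, and `score_fn` is any callable returning a performance value such as the median SROCC over random splits. Both are assumptions for illustration, not the author's code.

```python
import numpy as np


def leave_one_group_out(X, y, groups, score_fn):
    """Score the full feature vector and every variant with one feature group removed.

    groups   -- dict mapping a group name to the list of its column indices in X
    score_fn -- callable (X_subset, y) -> float, e.g. median SROCC over random splits
    """
    X = np.asarray(X)
    scores = {"all features": score_fn(X, y)}  # reference value, marked 'X' in Figure 4
    for name, cols in groups.items():
        keep = np.setdiff1d(np.arange(X.shape[1]), cols)  # columns that survive removal
        scores[f"without {name}"] = score_fn(X[:, keep], y)
    return scores
```

A large gap between `scores["all features"]` and a `"without ..."` entry marks that group as decisive, independently of how well the group performs on its own.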

Figure 4.

Performance in terms of SROCC of the proposed FLG-IQA in cases when a given feature is removed from the proposed feature vector. The performance of the entire feature vector is indicated by ‘X’. Median SROCC values were measured on CLIVE [45] after 100 random train–test splits.

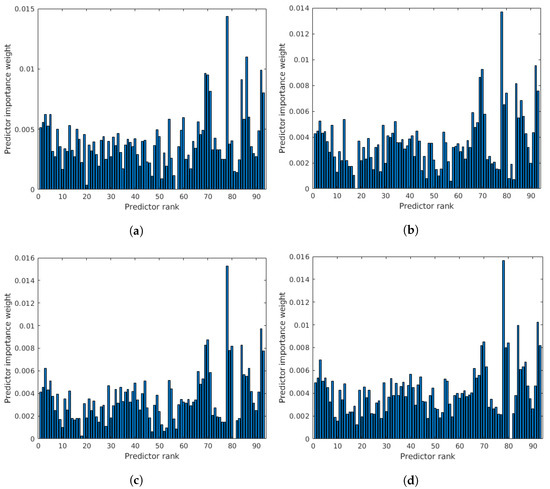

To further verify that all entries of the proposed feature vector are important, the importance of all predictors was investigated using the RReliefF algorithm [86]. The main idea behind RReliefF, inherited from the original Relief algorithm [87], is to estimate the discriminative power of features by how well they differentiate between instances that lie near each other in the feature space. To this end, RReliefF penalizes predictors that take different values for neighbors with the same response values, and it rewards predictors that take different values for neighbors with different response values. The number of examined nearest neighbors is an input parameter of RReliefF. For full details, we refer to the paper by Robnik-Šikonja and Kononenko [88]. The results of the RReliefF algorithm (using and 7 nearest neighbors) on the features extracted from the images of CLIVE [45] are depicted in Figure 5. These results show that all entries of the proposed feature vector are important, since their importance weights are non-negative in all cases.
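For readers who want to reproduce this kind of analysis, the NumPy sketch below follows the RReliefF weight estimate of Robnik-Šikonja and Kononenko. It uses every instance as a reference point, range-normalized absolute differences, Manhattan distance for the neighbor search, and rank-based neighbor influence exp(-(rank/sigma)^2). The function name `rrelieff` and the defaults `k=10` and `sigma=50` are assumptions for illustration; the implementation behind Figure 5 may differ in these details.

```python
import numpy as np


def rrelieff(X, y, k=10, sigma=50.0):
    """Return one RReliefF importance weight per feature (column) of X."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    k = min(k, n - 1)
    # Range-normalise features and response so that every diff() lies in [0, 1].
    x_range = X.max(axis=0) - X.min(axis=0)
    x_range[x_range == 0] = 1.0
    Xn = (X - X.min(axis=0)) / x_range
    yn = (y - y.min()) / ((y.max() - y.min()) or 1.0)
    # Influence of the j-th nearest neighbour decays with its rank; weights sum to 1.
    d1 = np.exp(-(np.arange(1, k + 1) / sigma) ** 2)
    d = d1 / d1.sum()
    n_dc, n_da, n_dcda = 0.0, np.zeros(p), np.zeros(p)
    for i in range(n):
        dist = np.abs(Xn - Xn[i]).sum(axis=1)  # Manhattan distance to instance i
        dist[i] = np.inf                        # exclude the instance itself
        nn = np.argsort(dist)[:k]               # k nearest neighbours, closest first
        diff_c = np.abs(yn[nn] - yn[i])         # response differences, shape (k,)
        diff_a = np.abs(Xn[nn] - Xn[i])         # feature differences, shape (k, p)
        n_dc += np.sum(diff_c * d)
        n_da += d @ diff_a
        n_dcda += (diff_c * d) @ diff_a
    # Reward differences that co-occur with response differences, penalise the rest.
    # (A constant response gives n_dc = 0, for which the weights are undefined.)
    return n_dcda / n_dc - (n_da - n_dcda) / (n - n_dc)
```

Algebraically, each weight is proportional to the covariance between a feature's neighbor differences and the response's neighbor differences, which is why an irrelevant feature tends to receive a weight near zero or below.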

Figure 5.

Results of the RReliefF algorithm on the features extracted from the images of CLIVE [45]. (a) nearest neighbours, (b) nearest neighbours, (c) nearest neighbours, (d) nearest neighbours.

4.2. Comparison to the State-of-the-Art

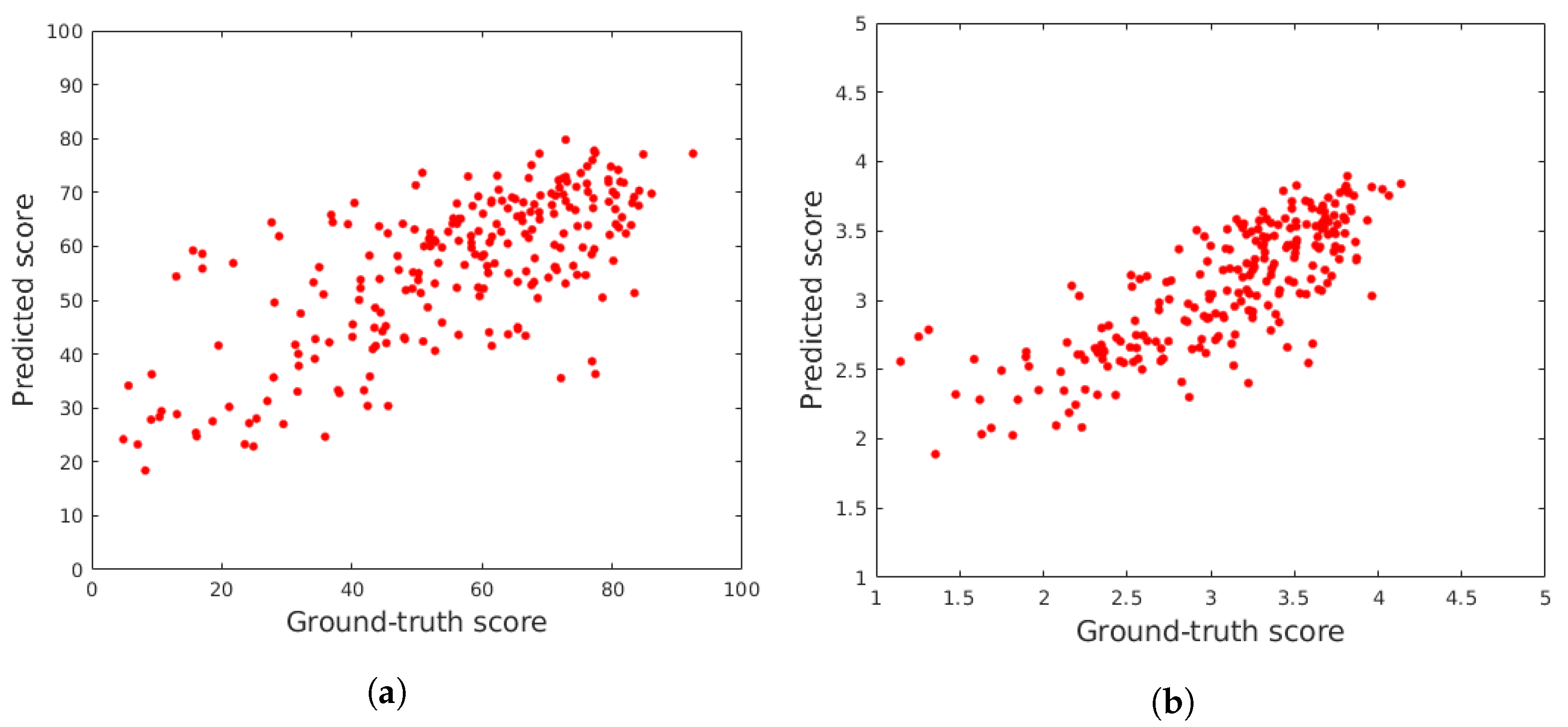

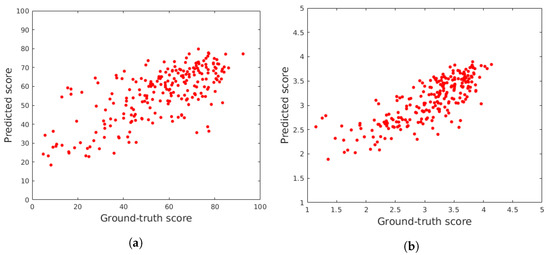

In this subsection, the proposed FLG-IQA algorithm is compared to several state-of-the-art methods, namely BLIINDS-II [23], BMPRI [12], BRISQUE [9], CurveletQA [10], DIIVINE [25], ENIQA [89], GRAD-LOG-CP [11], GWH-GLBP [90], NBIQA [91], OG-IQA [61], PIQE [16], and SSEQ [92], whose original MATLAB source code is publicly available. These algorithms were evaluated in the same environment and with the same evaluation protocol as ours. Since PIQE [16] is a training-free method that does not use machine learning, it was evaluated directly on the full datasets. The results are summarized in Tables 7 and 8, which show that the proposed FLG-IQA outperforms all other considered methods on three large IQA databases with authentic distortions, i.e., CLIVE [45], KonIQ-10k [46], and SPAQ [47]. Table 9 reports the direct and weighted averages of the performance values obtained on these benchmark databases. In this comparison, the proposed FLG-IQA outperforms all other examined state-of-the-art algorithms by a large margin. Specifically, FLG-IQA surpasses the second-best methods by approximately 0.05 in terms of PLCC, SROCC, and KROCC, for both the direct and the weighted averages. In general, all methods achieved higher weighted than direct averages, which implies that the examined methods tend to perform better on larger databases. As an illustration of the results, Figure 6 depicts ground-truth versus predicted scores on the CLIVE [45] and KonIQ-10k [46] test sets.
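The two kinds of averages in Table 9 can be illustrated with a short sketch. It assumes that the weights are the numbers of images in each database (1,162 in CLIVE, 10,073 in KonIQ-10k, and 11,125 in SPAQ). The SROCC values in the usage line are hypothetical placeholders, not results from this paper.

```python
import numpy as np

# Number of images per database, used here as the (assumed) averaging weights.
DATABASE_SIZES = {"CLIVE": 1162, "KonIQ-10k": 10073, "SPAQ": 11125}


def direct_and_weighted_average(scores, sizes=DATABASE_SIZES):
    """Return (direct mean, size-weighted mean) of per-database performance values."""
    names = list(scores)
    values = np.array([scores[name] for name in names], dtype=float)
    weights = np.array([sizes[name] for name in names], dtype=float)
    return float(values.mean()), float(np.sum(weights * values) / np.sum(weights))


# Hypothetical SROCC values, for illustration only.
direct, weighted = direct_and_weighted_average({"CLIVE": 0.70, "KonIQ-10k": 0.80, "SPAQ": 0.90})
```

Because CLIVE is an order of magnitude smaller than the other two databases, the weighted average is dominated by KonIQ-10k and SPAQ. It therefore exceeds the direct average whenever a method scores higher on those larger databases.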

Table 7.

Comparison to the state-of-the-art in CLIVE [45] and KonIQ-10k [46] databases. Median PLCC, SROCC, and KROCC values were measured over 100 random train–test splits. The best results are in bold and the second-best results are underlined.

Table 8.

Comparison to the state-of-the-art in the SPAQ [47] database. Median PLCC, SROCC, and KROCC values were measured over 100 random train–test splits. The best results are in bold and the second-best results are underlined.

Table 9.

Comparison to the state-of-the-art. Direct and weighted average PLCC, SROCC, and KROCC are reported based on the results measured in CLIVE [45], KonIQ-10k [46], and SPAQ [47]. The best results are in bold and the second-best results are underlined.

Figure 6.

Ground truth scores versus predicted scores in (a) CLIVE [45] and (b) KonIQ-10k [46] test sets.

To verify that the performance differences from the state-of-the-art on CLIVE [45], KonIQ-10k [46], and SPAQ [47] are significant, significance tests were also carried out. Specifically, one-sided t-tests were applied between the 100 SROCC values produced by the proposed FLG-IQA method and those of each other examined state-of-the-art algorithm. The null hypothesis was that the mean SROCC values of the two sets were equal to each other at a confidence level of . The results of the significance tests are summarized in Table 10, where symbol denotes that the proposed FLG-IQA is significantly better (worse) than the algorithm represented in the row of the table on the IQA benchmark database represented in the column. These results show that FLG-IQA is significantly better than the state-of-the-art on the utilized IQA benchmark databases containing authentic distortions.
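The sketch below shows how such a one-sided comparison could be run with SciPy's `ttest_ind`, whose `alternative` argument requires SciPy 1.6 or later. The significance level `alpha=0.05`, the use of the standard equal-variance two-sample test, and the +1/−1/0 encoding of the outcome are assumptions made for illustration; `compare_srocc` is a hypothetical helper.

```python
import numpy as np
from scipy import stats


def compare_srocc(srocc_proposed, srocc_other, alpha=0.05):
    """One-sided t-tests on two sets of per-split SROCC values.

    Returns +1 if the proposed method is significantly better, -1 if it is
    significantly worse, and 0 if neither one-sided test rejects the null
    hypothesis of equal means at level alpha (alpha is an assumed value).
    """
    a = np.asarray(srocc_proposed, dtype=float)
    b = np.asarray(srocc_other, dtype=float)
    if stats.ttest_ind(a, b, alternative="greater").pvalue < alpha:
        return 1
    if stats.ttest_ind(a, b, alternative="less").pvalue < alpha:
        return -1
    return 0
```

Filling Table 10 would then amount to calling `compare_srocc` once per (competing method, database) pair on the 100 per-split SROCC values.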

Table 10.

Results of the significance tests. Symbol denotes that the proposed FLG-IQA algorithm is significantly ( confidence interval) better (worse) than the NR-IQA algorithm in the row on the IQA benchmark database in the column.

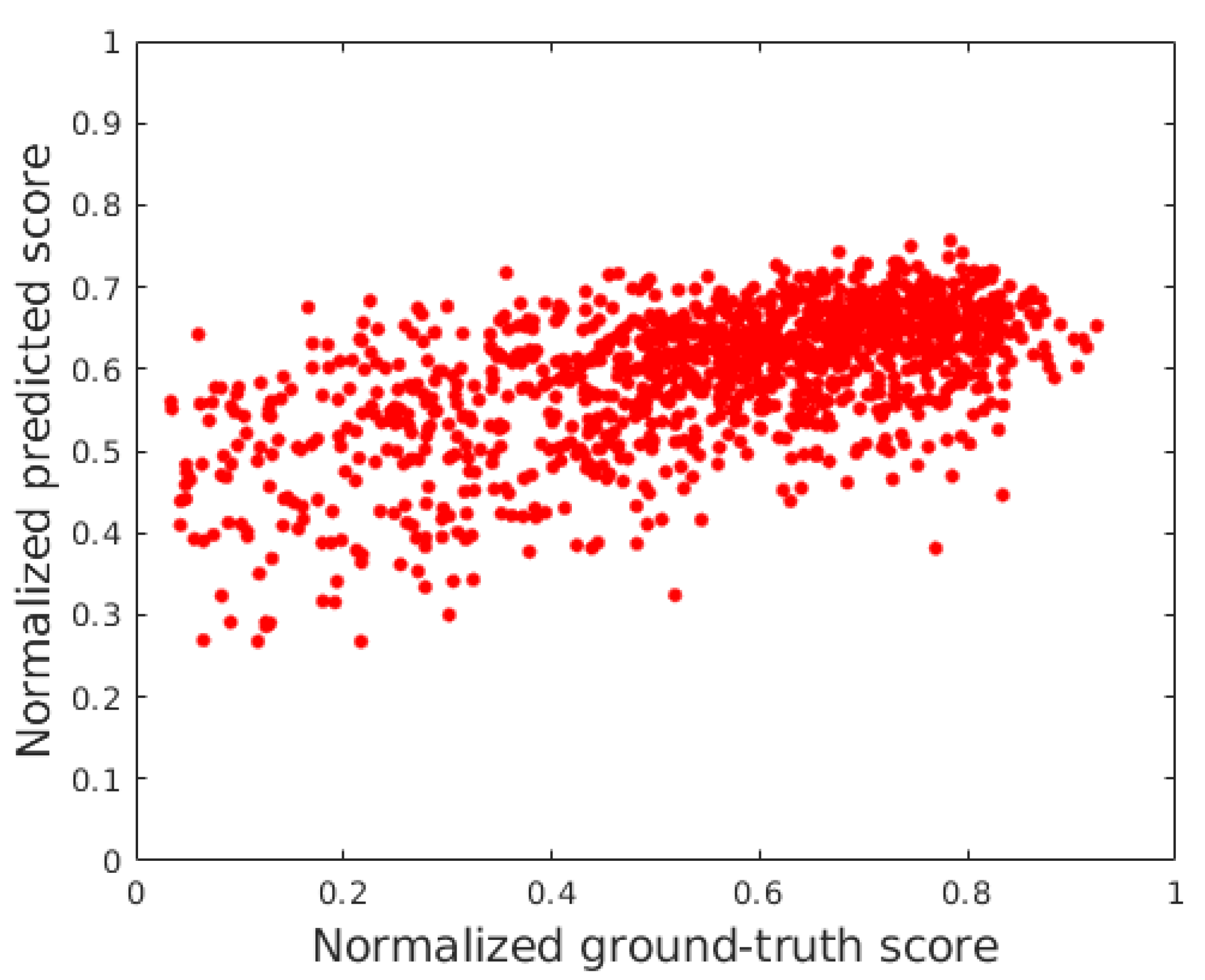

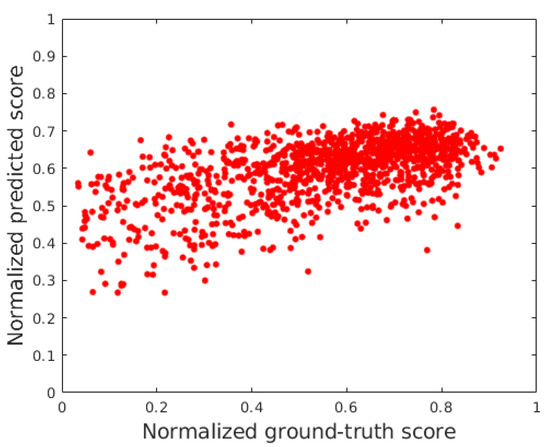

The effectiveness of the proposed FLG-IQA was further demonstrated in a cross-database test, in which the examined state-of-the-art algorithms and the proposed method were trained on the large KonIQ-10k [46] database and tested on CLIVE [45]. The results of this test are summarized in Table 11. These numerical results show that the proposed method performs considerably better than the other methods. Specifically, FLG-IQA outperforms the second-best method by approximately 0.11 in terms of PLCC and 0.07 in terms of SROCC. Figure 7 shows a scatter plot of normalized ground-truth scores versus normalized predicted scores for FLG-IQA in the cross-database test.
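In the cross-database setting, the predicted scores follow the score range of the training database, whereas the ground truth follows that of CLIVE, so both axes of Figure 7 are normalized. The normalization method is not stated in this section; the sketch below assumes min–max scaling to [0, 1], and `min_max_normalize` as well as the score values are hypothetical. Because this is a positive affine transform, it leaves PLCC, SROCC, and KROCC unchanged and only affects the visualization.

```python
import numpy as np


def min_max_normalize(scores):
    """Linearly map scores to [0, 1] (assumed normalization for the scatter plot)."""
    s = np.asarray(scores, dtype=float)
    span = s.max() - s.min()
    if span == 0:
        return np.zeros_like(s)  # constant input: map everything to 0
    return (s - s.min()) / span


# Hypothetical scores: ground truth on one scale, predictions on another.
mos = np.array([35.0, 52.5, 61.0, 78.2, 90.4])
pred = np.array([2.1, 2.9, 3.0, 3.8, 4.2])
gt_norm, pred_norm = min_max_normalize(mos), min_max_normalize(pred)
```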

Table 11.

Results of the cross-database test. The examined and the proposed methods were trained on KonIQ-10k [46] and tested on CLIVE [45]. The best results are in bold and the second-best results are underlined.

Figure 7.

Normalized ground truth scores versus normalized predicted score scatter plot of FLG-IQA in the cross-database test.

5. Conclusions

In this paper, a novel NR-IQA method for authentically distorted images was introduced. Specifically, a diverse set of local and global quality-aware features was proposed and applied with a GPR with the rational quadratic kernel function to obtain a perceptual quality estimator. The main idea behind the usage of local feature descriptor statistics was that these feature descriptors interpret local image regions from the human visual system’s viewpoint. The features were studied by taking into consideration their effects on the performance of perceptual quality estimation. The numerical comparison to 12 other state-of-the-art methods on three popular benchmark databases (CLIVE [45], KonIQ-10k [46], and SPAQ [47]) proved the superior performance of the proposed method. Our future work will involve boosting the quality-aware properties of the local feature descriptors by applying bio-inspired filters.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

In this paper, the following publicly available benchmark databases were used: 1. CLIVE: https://live.ece.utexas.edu/research/ChallengeDB/index.html (accessed on 16 April 2022); 2. KonIQ-10k: http://database.mmsp-kn.de/koniq-10k-database.html (accessed on 16 April 2022); 3. SPAQ: https://github.com/h4nwei/SPAQ (accessed on 16 April 2022). The source code of the proposed FLG-IQA method is available at: https://github.com/Skythianos/FLG-IQA (accessed on 12 June 2022).

Acknowledgments

We thank the academic editor and the anonymous reviewers for their careful reading of our manuscript and their many insightful comments and suggestions.

Conflicts of Interest

The author declares no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| BRIEF | binary robust independent elementary features |
| BRISK | binary robust invariant scalable keypoints |
| CGM | color gradient magnitude |
| CNN | convolutional neural network |
| CPU | central processing unit |
| DCF | dark channel feature |
| DCT | discrete cosine transform |
| DF | decision fusion |
| DSC | digital still camera |
| DSLR | digital single-lens reflex camera |
| FAST | features from accelerated segment test |
| FR | full-reference |
| GGD | generalized Gaussian distribution |
| GL | Grünwald–Letnikov |
| GM | gradient magnitude |
| GPR | Gaussian process regression |
| GPU | graphics processing unit |
| IQA | image quality assessment |
| KROCC | Kendall’s rank-order correlation coefficient |
| MOS | mean opinion score |
| MSCN | mean subtracted contrast normalized |
| NIQE | naturalness image quality evaluator |
| NR | no-reference |
| NSS | natural scene statistics |
| ORB | oriented FAST and rotated BRIEF |
| PIQE | perception-based image quality evaluator |
| PLCC | Pearson’s linear correlation coefficient |
| RBF | radial basis function |
| RM | relative gradient magnitude |
| RMS | root mean square |
| RO | relative gradient orientation |
| RR | reduced-reference |
| SPHN | smartphone |
| SROCC | Spearman’s rank-order correlation coefficient |
| SVR | support vector regressor |

References

1. Torr, P.H.; Zisserman, A. Performance characterization of fundamental matrix estimation under image degradation. Mach. Vis. Appl. 1997, 9, 321–333.
2. Zhao, Q. The Application of Augmented Reality Visual Communication in Network Teaching. Int. J. Emerg. Technol. Learn. 2018, 13, 57.
3. Shen, T.W.; Li, C.C.; Lin, W.F.; Tseng, Y.H.; Wu, W.F.; Wu, S.; Tseng, Z.L.; Hsu, M.H. Improving Image Quality Assessment Based on the Combination of the Power Spectrum of Fingerprint Images and Prewitt Filter. Appl. Sci. 2022, 12, 3320.
4. Esteban, O.; Birman, D.; Schaer, M.; Koyejo, O.O.; Poldrack, R.A.; Gorgolewski, K.J. MRIQC: Advancing the automatic prediction of image quality in MRI from unseen sites. PLoS ONE 2017, 12, e0184661.
5. Ma, K.; Yeganeh, H.; Zeng, K.; Wang, Z. High dynamic range image compression by optimizing tone mapped image quality index. IEEE Trans. Image Process. 2015, 24, 3086–3097.
6. Goyal, B.; Gupta, A.; Dogra, A.; Koundal, D. An adaptive bitonic filtering based edge fusion algorithm for Gaussian denoising. Int. J. Cogn. Comput. Eng. 2022, 3, 90–97.
7. Zhai, G.; Min, X. Perceptual image quality assessment: A survey. Sci. China Inf. Sci. 2020, 63, 1–52.
8. Kamble, V.; Bhurchandi, K. No-reference image quality assessment algorithms: A survey. Optik 2015, 126, 1090–1097.
9. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.
10. Liu, L.; Dong, H.; Huang, H.; Bovik, A.C. No-reference image quality assessment in curvelet domain. Signal Process. Image Commun. 2014, 29, 494–505.
11. Xue, W.; Mou, X.; Zhang, L.; Bovik, A.C.; Feng, X. Blind image quality assessment using joint statistics of gradient magnitude and Laplacian features. IEEE Trans. Image Process. 2014, 23, 4850–4862.
12. Min, X.; Zhai, G.; Gu, K.; Liu, Y.; Yang, X. Blind image quality estimation via distortion aggravation. IEEE Trans. Broadcast. 2018, 64, 508–517.
13. Mohammadi, P.; Ebrahimi-Moghadam, A.; Shirani, S. Subjective and objective quality assessment of image: A survey. arXiv 2014, arXiv:1406.7799.
14. Yang, X.; Li, F.; Liu, H. A survey of DNN methods for blind image quality assessment. IEEE Access 2019, 7, 123788–123806.
15. Xu, L.; Lin, W.; Kuo, C.C.J. Visual Quality Assessment by Machine Learning; Springer: Berlin/Heidelberg, Germany, 2015.
16. Venkatanath, N.; Praneeth, D.; Bh, M.C.; Channappayya, S.S.; Medasani, S.S. Blind image quality evaluation using perception based features. In Proceedings of the 2015 Twenty First National Conference on Communications (NCC), Bombay, India, 27 February–1 March 2015; pp. 1–6.
17. Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.
18. Zhang, L.; Zhang, L.; Bovik, A.C. A feature-enriched completely blind image quality evaluator. IEEE Trans. Image Process. 2015, 24, 2579–2591.
19. Wu, L.; Zhang, X.; Chen, H.; Wang, D.; Deng, J. VP-NIQE: An opinion-unaware visual perception natural image quality evaluator. Neurocomputing 2021, 463, 17–28.
20. Bhattacharyya, A. On a measure of divergence between two statistical populations defined by their probability distributions. Bull. Calcutta Math. Soc. 1943, 35, 99–109.
21. Leonardi, M.; Napoletano, P.; Schettini, R.; Rozza, A. No Reference, Opinion Unaware Image Quality Assessment by Anomaly Detection. Sensors 2021, 21, 994.
22. Reinagel, P.; Zador, A.M. Natural scene statistics at the centre of gaze. Netw. Comput. Neural Syst. 1999, 10, 341.
23. Saad, M.A.; Bovik, A.C.; Charrier, C. Blind image quality assessment: A natural scene statistics approach in the DCT domain. IEEE Trans. Image Process. 2012, 21, 3339–3352.
24. Moorthy, A.K.; Bovik, A.C. A two-step framework for constructing blind image quality indices. IEEE Signal Process. Lett. 2010, 17, 513–516.
25. Moorthy, A.K.; Bovik, A.C. Blind image quality assessment: From natural scene statistics to perceptual quality. IEEE Trans. Image Process. 2011, 20, 3350–3364.
26. Tang, H.; Joshi, N.; Kapoor, A. Learning a blind measure of perceptual image quality. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 305–312.
27. Wang, Q.; Chu, J.; Xu, L.; Chen, Q. A new blind image quality framework based on natural color statistic. Neurocomputing 2016, 173, 1798–1810.
28. Wu, J.; Lin, W.; Shi, G. Image quality assessment with degradation on spatial structure. IEEE Signal Process. Lett. 2014, 21, 437–440.
29. Freitas, P.G.; Akamine, W.Y.; Farias, M.C. No-reference image quality assessment based on statistics of local ternary pattern. In Proceedings of the 2016 Eighth International Conference on Quality of Multimedia Experience (QoMEX), Lisbon, Portugal, 6–8 June 2016; pp. 1–6.
30. Freitas, P.G.; Akamine, W.Y.; Farias, M.C. No-reference image quality assessment using orthogonal color planes patterns. IEEE Trans. Multimed. 2018, 20, 3353–3360.
31. Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 1996, 29, 51–59.
32. Garcia Freitas, P.; Da Eira, L.P.; Santos, S.S.; Farias, M.C.Q.d. On the Application LBP Texture Descriptors and Its Variants for No-Reference Image Quality Assessment. J. Imaging 2018, 4, 114.
33. Ye, P.; Doermann, D. No-reference image quality assessment based on visual codebook. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 3089–3092.
34. Ye, P.; Doermann, D. No-reference image quality assessment using visual codebooks. IEEE Trans. Image Process. 2012, 21, 3129–3138.
35. Ye, P.; Kumar, J.; Kang, L.; Doermann, D. Unsupervised feature learning framework for no-reference image quality assessment. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1098–1105.
36. Kang, L.; Ye, P.; Li, Y.; Doermann, D. Convolutional neural networks for no-reference image quality assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1733–1740.
37. Bianco, S.; Celona, L.; Napoletano, P.; Schettini, R. On the use of deep learning for blind image quality assessment. Signal Image Video Process. 2018, 12, 355–362.
38. Gao, F.; Yu, J.; Zhu, S.; Huang, Q.; Tian, Q. Blind image quality prediction by exploiting multi-level deep representations. Pattern Recognit. 2018, 81, 432–442.
39. Yang, S.; Jiang, Q.; Lin, W.; Wang, Y. Sgdnet: An end-to-end saliency-guided deep neural network for no-reference image quality assessment. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1383–1391.
40. Zhang, L.; Shen, Y.; Li, H. VSI: A visual saliency-induced index for perceptual image quality assessment. IEEE Trans. Image Process. 2014, 23, 4270–4281.
41. Liu, X.; Van De Weijer, J.; Bagdanov, A.D. Rankiqa: Learning from rankings for no-reference image quality assessment. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1040–1049.
42. Burges, C.; Shaked, T.; Renshaw, E.; Lazier, A.; Deeds, M.; Hamilton, N.; Hullender, G. Learning to rank using gradient descent. In Proceedings of the 22nd International Conference on Machine Learning, Bonn, Germany, 7–11 August 2005; pp. 89–96.
43. Li, D.; Jiang, T.; Jiang, M. Norm-in-norm loss with faster convergence and better performance for image quality assessment. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 789–797.
44. Celona, L.; Schettini, R. Blind quality assessment of authentically distorted images. JOSA A 2022, 39, B1–B10.
45. Ghadiyaram, D.; Bovik, A.C. Massive online crowdsourced study of subjective and objective picture quality. IEEE Trans. Image Process. 2015, 25, 372–387.
46. Lin, H.; Hosu, V.; Saupe, D. KonIQ-10K: Towards an ecologically valid and large-scale IQA database. arXiv 2018, arXiv:1803.08489.
47. Fang, Y.; Zhu, H.; Zeng, Y.; Ma, K.; Wang, Z. Perceptual quality assessment of smartphone photography. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3677–3686.
48. Guan, X.; Li, F.; He, L. Quality Assessment on Authentically Distorted Images by Expanding Proxy Labels. Electronics 2020, 9, 252.
49. Ding, Y. Visual Quality Assessment for Natural and Medical Image; Springer: Berlin/Heidelberg, Germany, 2018.
50. Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.
51. Varga, D. No-Reference Image Quality Assessment with Global Statistical Features. J. Imaging 2021, 7, 29.
52. Krig, S. Computer Vision Metrics; Springer: Berlin/Heidelberg, Germany, 2016.
53. Rosten, E.; Drummond, T. Fusing points and lines for high performance tracking. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05) Volume 1, Beijing, China, 17–21 October 2005; Volume 2, pp. 1508–1515.
54. Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; Volume 15, pp. 147–151.
55. Bay, H.; Tuytelaars, T.; Gool, L.V. Surf: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.
56. Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary robust invariant scalable keypoints. In Proceedings of the 2011 International Conference on Computer Vision, Las Vegas, NV, USA, 18–21 July 2011; pp. 2548–2555.
57. Alcantarilla, P.F.; Bartoli, A.; Davison, A.J. KAZE features. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 214–227.
58. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Las Vegas, NV, USA, 18–21 July 2011; pp. 2564–2571.
59. Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.
60. Hu, M.K. Visual pattern recognition by moment invariants. IRE Trans. Inf. Theory 1962, 8, 179–187.
61. Liu, L.; Hua, Y.; Zhao, Q.; Huang, H.; Bovik, A.C. Blind image quality assessment by relative gradient statistics and adaboosting neural network. Signal Process. Image Commun. 2016, 40, 1–15.
62. Moreno, P.; Bernardino, A.; Santos-Victor, J. Improving the SIFT descriptor with smooth derivative filters. Pattern Recognit. Lett. 2009, 30, 18–26.
63. Prewitt, J.M. Object enhancement and extraction. Pict. Process. Psychopictorics 1970, 10, 15–19.
64. Marr, D.; Hildreth, E. Theory of edge detection. Proc. R. Soc. London Ser. B. Biol. Sci. 1980, 207, 187–217.
65. Huang, J.S.; Tseng, D.H. Statistical theory of edge detection. Comput. Vis. Graph. Image Process. 1988, 43, 337–346.
66. Jenadeleh, M. Blind Image and Video Quality Assessment. Ph.D. Thesis, University of Konstanz, Konstanz, Germany, 2018.
67. Kurimo, E.; Lepistö, L.; Nikkanen, J.; Grén, J.; Kunttu, I.; Laaksonen, J. The effect of motion blur and signal noise on image quality in low light imaging. In Proceedings of the Scandinavian Conference on Image Analysis, Oslo, Norway, 15–18 June; pp. 81–90.
68. Crété-Roffet, F.; Dolmiere, T.; Ladret, P.; Nicolas, M. The blur effect: Perception and estimation with a new no-reference perceptual blur metric. In Proceedings of the SPIE Electronic Imaging Symposium Conf Human Vision and Electronic Imaging, San Jose, CA, USA, 29 January–1 February 2007.
69. Choi, S.Y.; Luo, M.R.; Pointer, M.R.; Rhodes, P.A. Predicting perceived colorfulness, contrast, naturalness and quality for color images reproduced on a large display. In Proceedings of the Color and Imaging Conference, Society for Imaging Science and Technology, Portland, OR, USA, 10–14 November 2008; Volume 2008, pp. 158–164.
70. Hasler, D.; Suesstrunk, S.E. Measuring colorfulness in natural images. In Proceedings of the Human Vision and Electronic Imaging VIII: International Society for Optics and Photonics, Santa Clara, CA, USA, 21–24 January 2003; Volume 5007, pp. 87–95.
71. Tang, X.; Luo, W.; Wang, X. Content-based photo quality assessment. IEEE Trans. Multimed. 2013, 15, 1930–1943.
72. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.
73. Kukkonen, H.; Rovamo, J.; Tiippana, K.; Näsänen, R. Michelson contrast, RMS contrast and energy of various spatial stimuli at threshold. Vis. Res. 1993, 33, 1431–1436.
74. Michelson, A.A. Studies in Optics; Courier Corporation: Chelmsford, MA, USA, 1995.
75. Matkovic, K.; Neumann, L.; Neumann, A.; Psik, T.; Purgathofer, W. Global contrast factor-a new approach to image contrast. In Proceedings of the Eurographics Workshop on Computational Aesthetics in Graphics, Visualization and Imaging 2005, Girona, Spain, 18–20 May 2005; pp. 159–167.
76. Tsai, D.Y.; Lee, Y.; Matsuyama, E. Information entropy measure for evaluation of image quality. J. Digit. Imaging 2008, 21, 338–347.
77. Petrovic, V.S.; Xydeas, C.S. Gradient-based multiresolution image fusion. IEEE Trans. Image Process. 2004, 13, 228–237.
78. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.
79. Kobayashi, T.; Otsu, N. Image feature extraction using gradient local auto-correlations. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; pp. 346–358.
80. Chiberre, P.; Perot, E.; Sironi, A.; Lepetit, V. Detecting Stable Keypoints from Events through Image Gradient Prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1387–1394.
81. Liu, A.; Lin, W.; Narwaria, M. Image quality assessment based on gradient similarity. IEEE Trans. Image Process. 2011, 21, 1500–1512.
82. Gorenflo, R.; Mainardi, F. Fractional calculus. In Fractals and Fractional Calculus in Continuum Mechanics; Springer: Berlin/Heidelberg, Germany, 1997; pp. 223–276.
83. Sabatier, J.; Agrawal, O.P.; Machado, J.T. Advances in Fractional Calculus; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4.
84. Motłoch, S.; Sarwas, G.; Dzieliński, A. Fractional Derivatives Application to Image Fusion Problems. Sensors 2022, 22, 1049.
85. Varga, D. Full-Reference Image Quality Assessment Based on Grünwald–Letnikov Derivative, Image Gradients, and Visual Saliency. Electronics 2022, 11, 559.
86. Robnik-Šikonja, M.; Kononenko, I. Theoretical and empirical analysis of ReliefF and RReliefF. Mach. Learn. 2003, 53, 23–69.
87. Kira, K.; Rendell, L.A. A practical approach to feature selection. In Machine Learning Proceedings 1992; Elsevier: Amsterdam, The Netherlands, 1992; pp. 249–256.
88. Robnik-Šikonja, M.; Kononenko, I. An adaptation of Relief for attribute estimation in regression. In Proceedings of the Fourteenth International Conference on Machine Learning (ICML’97), San Francisco, CA, USA, 8–12 July 1997; Volume 5, pp. 296–304.
89. Chen, X.; Zhang, Q.; Lin, M.; Yang, G.; He, C. No-reference color image quality assessment: From entropy to perceptual quality. EURASIP J. Image Video Process. 2019, 2019, 77.
90. Li, Q.; Lin, W.; Fang, Y. No-reference quality assessment for multiply-distorted images in gradient domain. IEEE Signal Process. Lett. 2016, 23, 541–545.
91. Ou, F.Z.; Wang, Y.G.; Zhu, G. A novel blind image quality assessment method based on refined natural scene statistics. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–29 September 2019; pp. 1004–1008.
92. Liu, L.; Liu, B.; Huang, H.; Bovik, A.C. No-reference image quality assessment based on spatial and spectral entropies. Signal Process. Image Commun. 2014, 29, 856–863.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).