Robust Visibility Surface Determination in Object Space via Plücker Coordinates

Abstract

1. Introduction

2. Related Works

3. Problem Formulation

3.1. Representation of 3D Objects

- vertex: a vector representing the position in 3D space along with further information such as colour, normal vector and texture coordinates;

- edge: the segment between two vertices;

- face: a closed set of edges, i.e., a triangle.
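A minimal sketch of this representation, assuming an indexed triangle mesh stored as NumPy arrays. The array layout and the helper name `unique_edges` are illustrative choices, not taken from the paper: vertices hold positions, faces hold vertex indices, and edges are derived from the faces.

```python
import numpy as np


def unique_edges(faces):
    """Return the undirected edges of a triangle mesh as sorted index pairs."""
    faces = np.asarray(faces)
    # Each face (a, b, c) contributes the edges (a, b), (b, c) and (c, a).
    pairs = np.concatenate([faces[:, [0, 1]], faces[:, [1, 2]], faces[:, [2, 0]]])
    # Sort each pair so that (a, b) and (b, a) count as the same edge.
    pairs = np.sort(pairs, axis=1)
    return np.unique(pairs, axis=0)


# A tetrahedron: 4 vertices (positions only) and 4 triangular faces.
vertices = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
faces = np.array([[0, 2, 1], [0, 1, 3], [1, 2, 3], [0, 3, 2]])
edges = unique_edges(faces)
```

Per-vertex attributes such as colour, normal vector and texture coordinates could be kept in further arrays with one row per vertex.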

3.2. Visibility Problem

- Image space: a 2D space for the visual representation of a scene. The rasterization process of converting a 3D scene into a 2D image works in this space. For this reason, the most common methods to solve the visibility problem perform their operations in 2D projection planes.

- Object space: a 3D space in which the scene is defined and the objects lie. Methods developed within this space are computationally onerous and are usually used to build data structures that speed up subsequent algorithms. These acceleration structures are crucial for real-time computer graphics applications such as video games.

- Line space: the space of all the possible lines that could be traced in a scene. Methods developed within this space try to partition the line space according to the geometry that a given line intersects. Indeed, as stated at the beginning of this section, the notion of visibility can be naturally expressed in relation to those elements.

- Viewpoint space: the space of all the possible views of an object. Theoretically, it can be partitioned into regions separated by visual events. A visual event is a change in the topological appearance of the object. For example, while rotating a coin vertically, at a certain point one of its faces becomes visible while the other becomes hidden. This process generates a structure referred to in the literature as the aspect graph [19]. The latter is of theoretical interest only, since its complexity can reach $O(n^9)$ for general non-convex polyhedral objects in a 3D viewpoint space, where n is the number of object faces. Nevertheless, a few visibility problems are defined and addressed in this space, such as viewpoint optimization for object tracking.

3.3. Problem Statement

4. Proposed Approach: Ambient Occlusion, Visibility Index and Plücker Coordinates

4.1. Ambient Occlusion

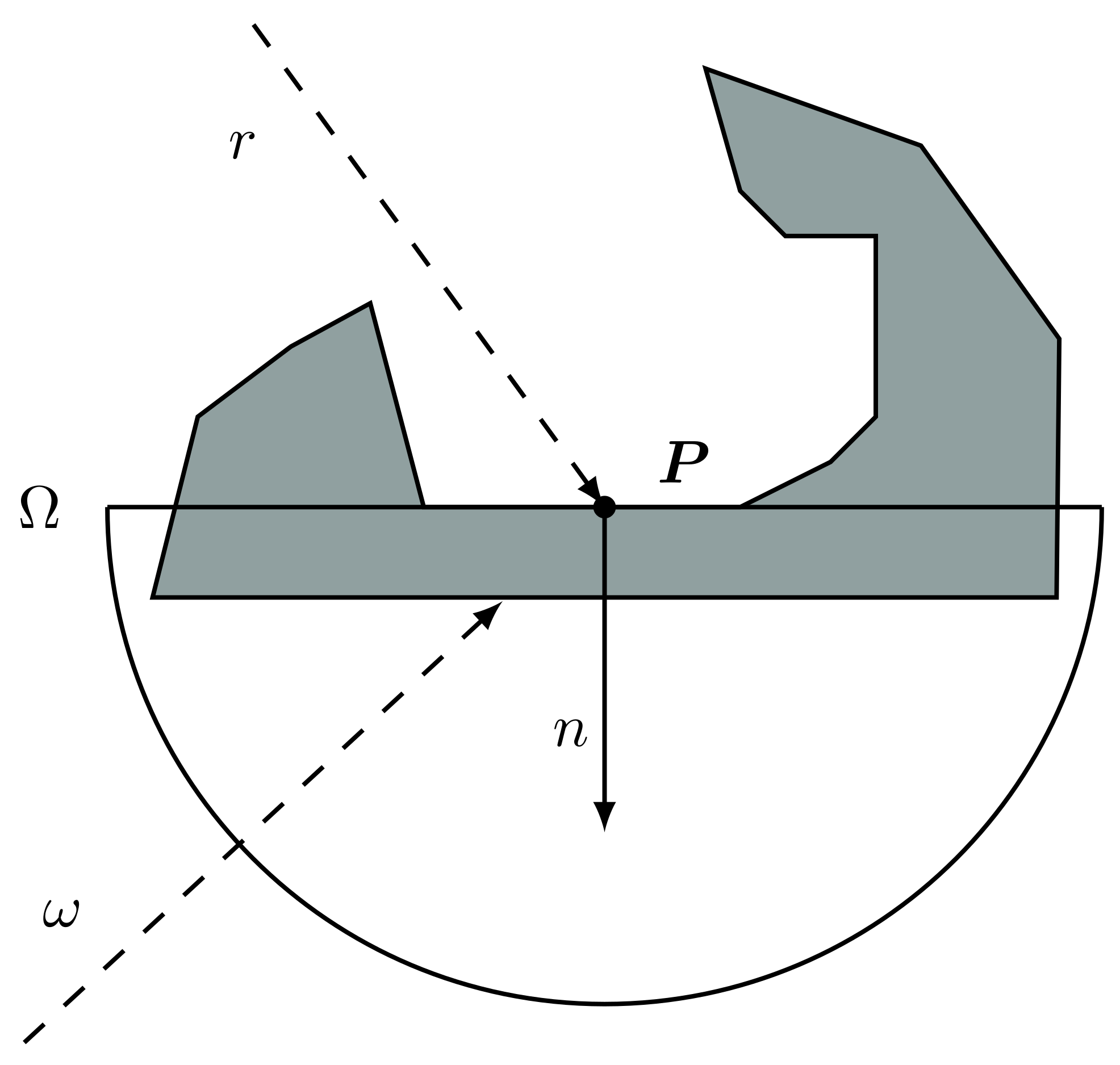

- P is the surface point;

- $\Omega$ is the upper hemisphere generated by cutting a sphere centred in P with the plane on which the surface lies;

- $\omega$ is a point of the hemisphere and identifies the incoming light direction (with a slight abuse of language, in the following we will sometimes refer to $\omega$ as the ray direction);

- $V(P, \omega)$ is a function with value 1 if there is incoming ambient light from direction $\omega$ and 0 if not;

- $1/\pi$ is a normalization factor;

- $\theta$ is the angle between direction $\omega$ and the surface normal n (note also that $\cos\theta = \omega \cdot n$).
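The quantities listed above can be combined into a simple Monte Carlo estimate. The sketch below assumes the usual cosine-weighted hemisphere integral with normalization $1/\pi$; the function names and the `is_unoccluded` callback are hypothetical, and uniform hemisphere sampling is only one possible choice of sampling scheme.

```python
import numpy as np


def sample_hemisphere(normal, count, rng):
    """Draw `count` unit directions uniformly over the hemisphere around `normal`."""
    dirs = rng.normal(size=(count, 3))
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
    # Flip the directions that point below the surface.
    dirs[dirs @ normal < 0.0] *= -1.0
    return dirs


def ambient_occlusion(point, normal, is_unoccluded, count=1024, rng=None):
    """Estimate (1/pi) * integral over the hemisphere of V * cos(theta)."""
    rng = np.random.default_rng() if rng is None else rng
    normal = np.asarray(normal, dtype=float)
    normal = normal / np.linalg.norm(normal)
    dirs = sample_hemisphere(normal, count, rng)
    cos_theta = dirs @ normal
    # Hypothetical callback: True if ambient light reaches `point` from `d`.
    visible = np.array([1.0 if is_unoccluded(point, d) else 0.0 for d in dirs])
    # The uniform hemisphere pdf is 1/(2*pi); combined with the 1/pi
    # normalization, the estimator reduces to 2 * mean(V * cos(theta)).
    return 2.0 * float(np.mean(visible * cos_theta))


# An unobstructed point receives light from every direction (value close to 1).
ao_open = ambient_occlusion(np.zeros(3), [0.0, 0.0, 1.0], lambda p, d: True,
                            count=4096, rng=np.random.default_rng(0))
```

In a full pipeline the callback would cast a ray from the point and test it against the scene geometry.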

4.2. Visibility Index

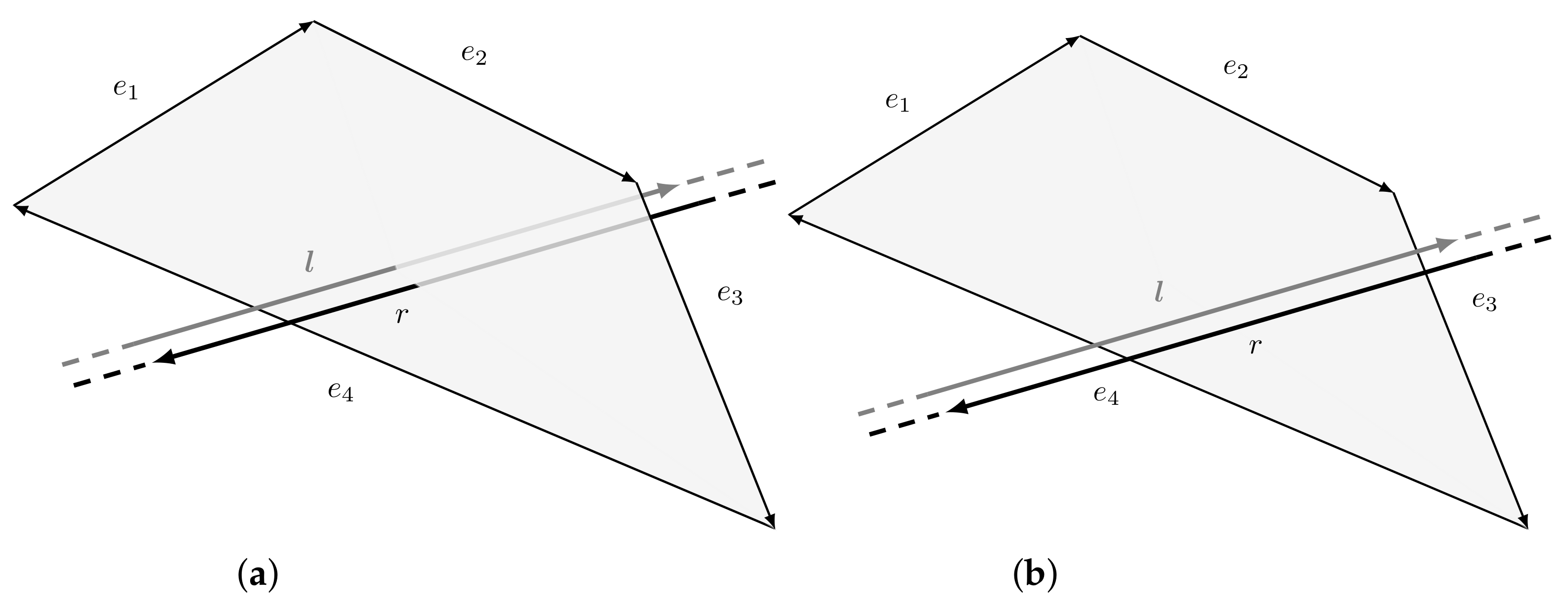

4.3. Plücker Coordinates

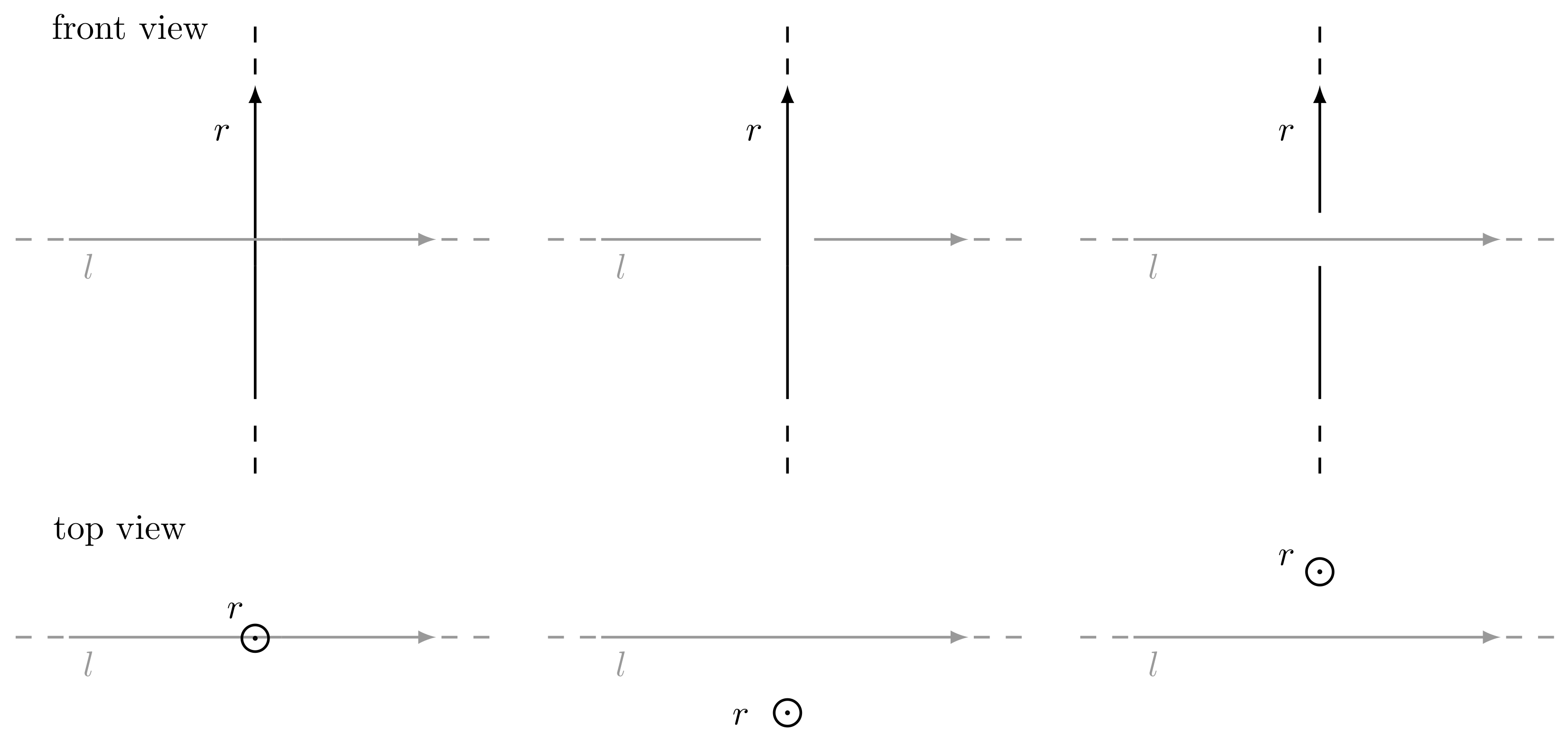

- if l intersects r, then $\mathrm{side}(l, r) = 0$;

- if l goes clockwise around r, then $\mathrm{side}(l, r) > 0$;

- if l goes counter-clockwise around r, then $\mathrm{side}(l, r) < 0$.
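The three cases above can be checked numerically. The sketch below assumes the common construction in which a line through points p and q has Plücker coordinates (q − p, p × q) and the relative orientation of two lines is given by the permuted inner product; the function names are illustrative, and which sign corresponds to "clockwise" depends on the handedness convention adopted.

```python
import numpy as np


def plucker(p, q):
    """Plücker coordinates (direction, moment) of the oriented line from p to q."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return q - p, np.cross(p, q)


def side(l, r):
    """Permuted inner product of two lines given in Plücker coordinates."""
    (ul, vl), (ur, vr) = l, r
    return float(np.dot(ul, vr) + np.dot(ur, vl))


l = plucker([0, 0, 0], [1, 0, 0])          # the x axis
r_hit = plucker([0, -1, 0], [0, 1, 0])     # crosses l at the origin
r_above = plucker([0, -1, 1], [0, 1, 1])   # same line shifted to z = +1
r_below = plucker([0, -1, -1], [0, 1, -1]) # same line shifted to z = -1

values = [side(l, r_hit), side(l, r_above), side(l, r_below)]
```

The first value is zero because the lines meet, while the two shifted lines give values of opposite sign because they pass l on opposite sides.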

5. Visibility Algorithm Based on Plücker Coordinates

5.1. Sampling Points on Triangle

5.2. Rays Generation through Sphere Sampling

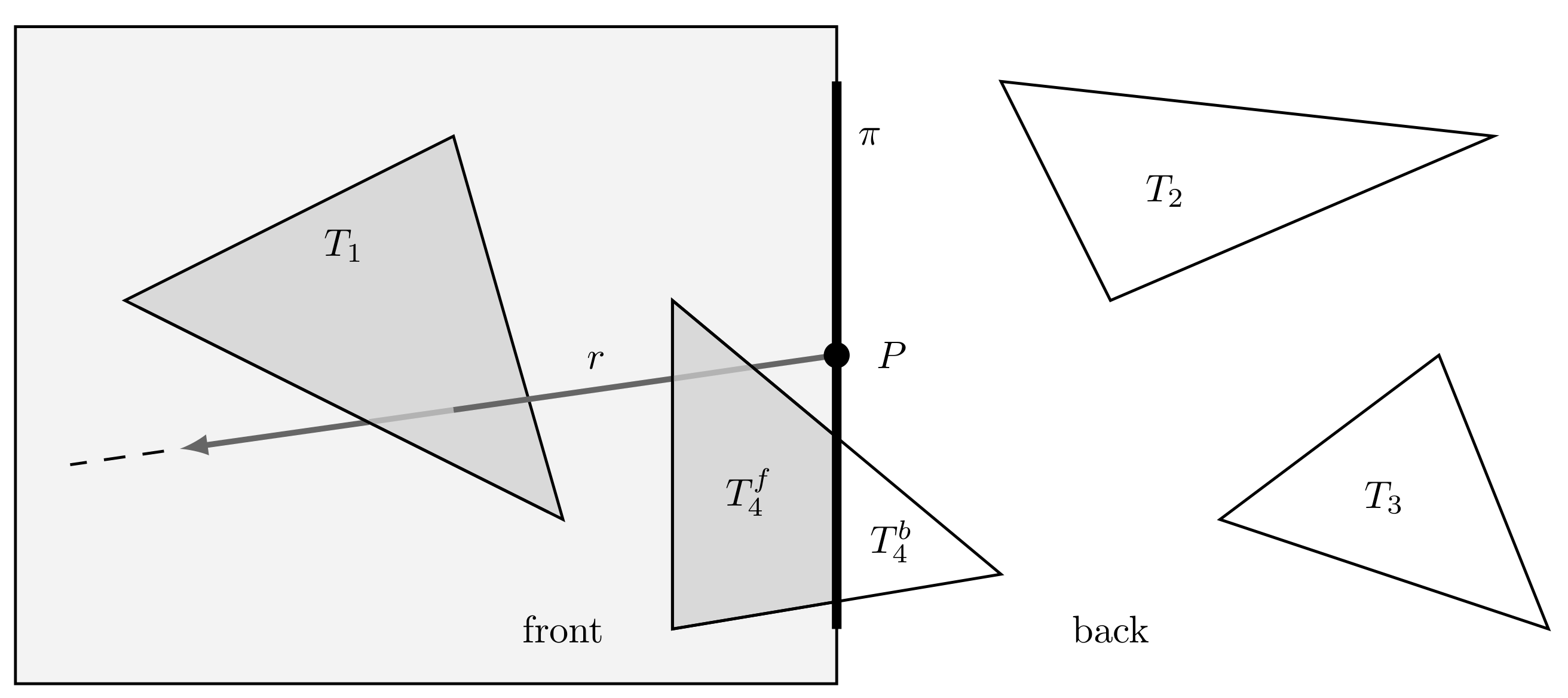

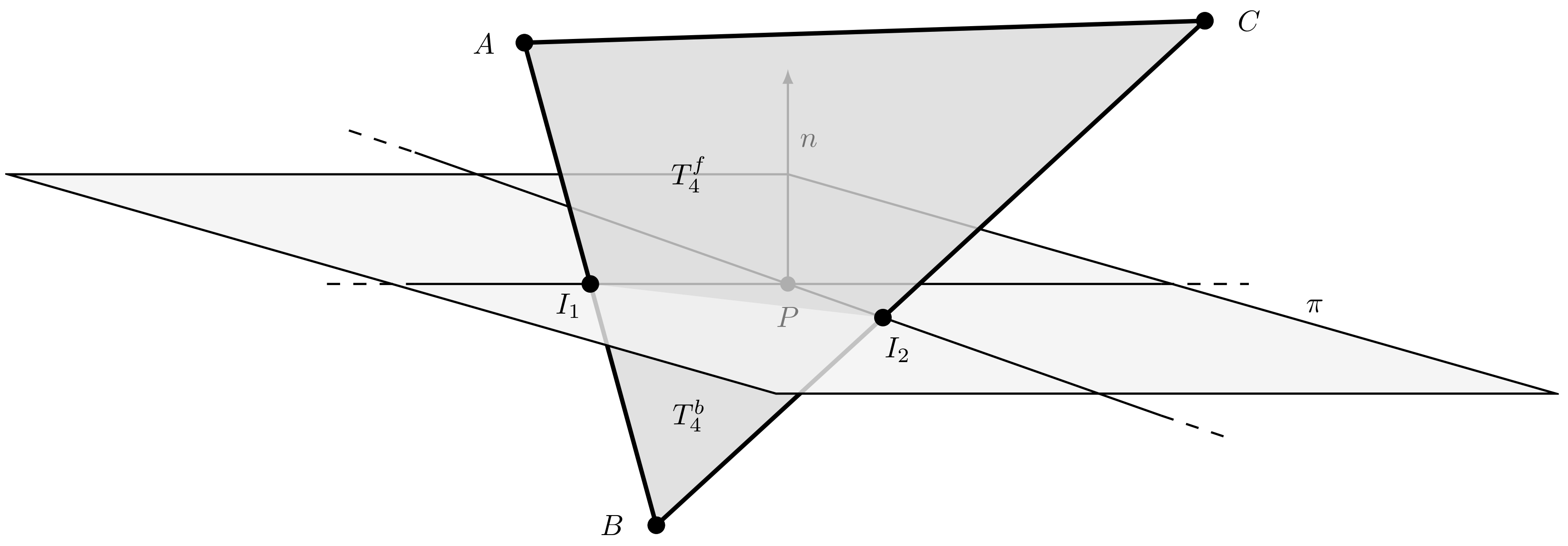

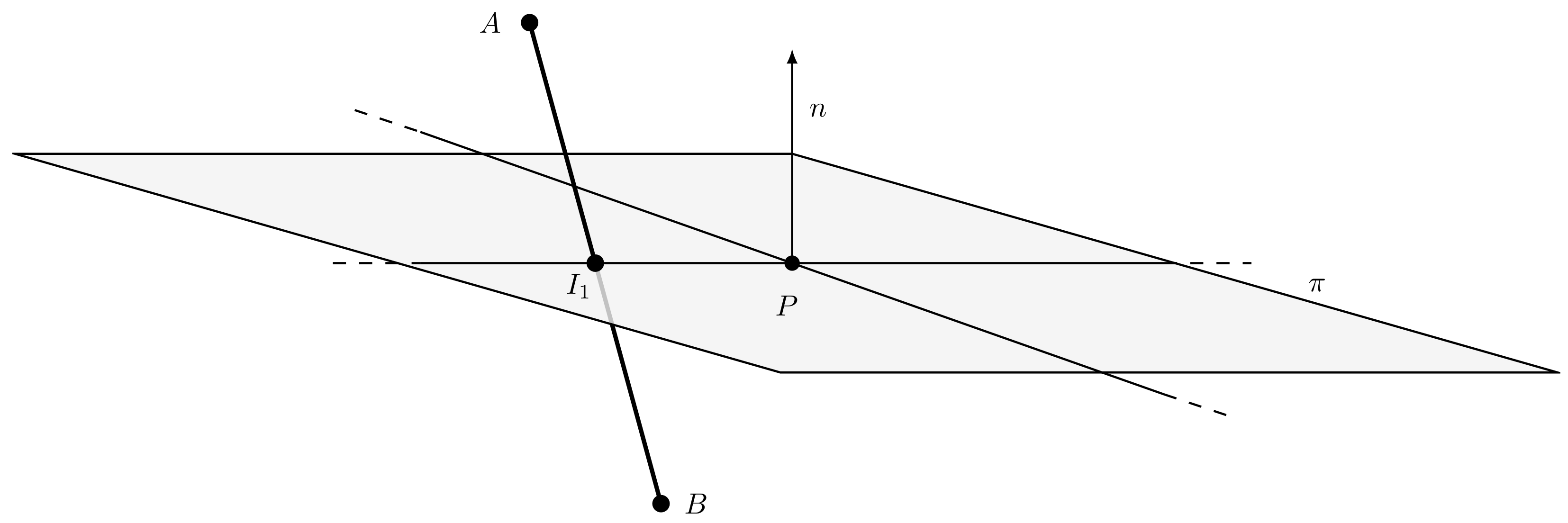

5.3. Ray Intersection Algorithm via Plücker Coordinates

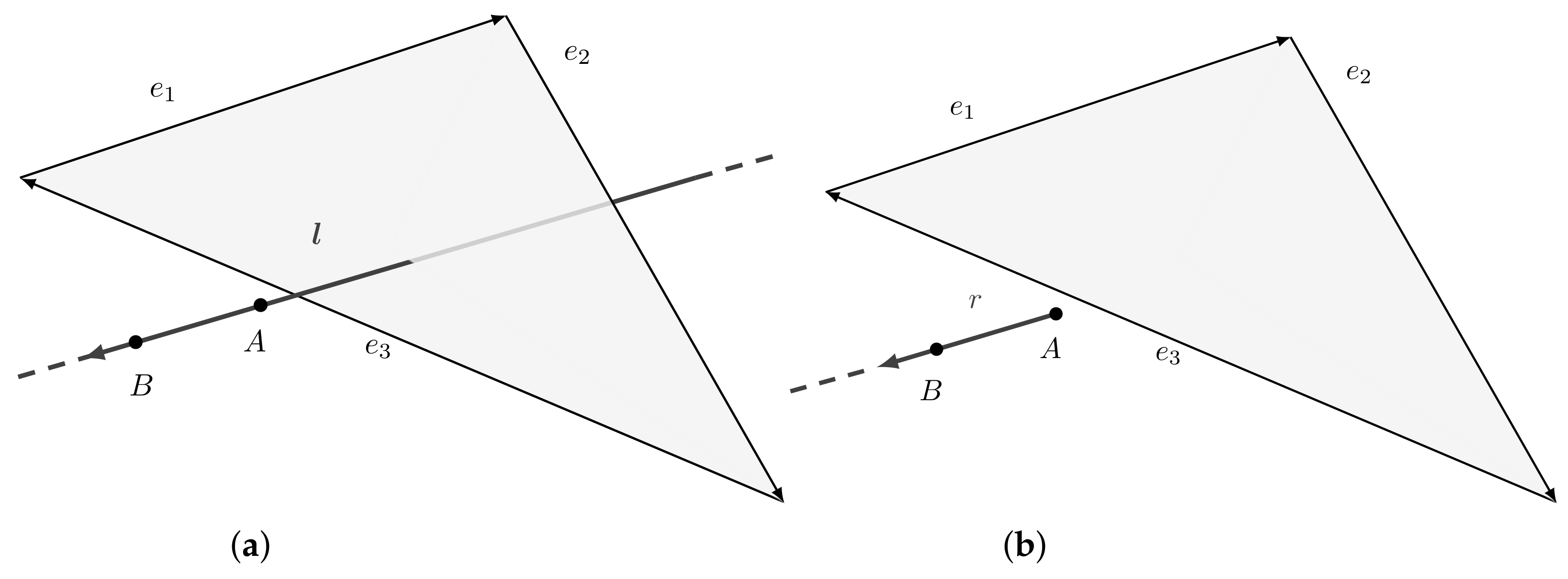

5.3.1. Clipping

- Compute the intersection points of the two triangle edges that cross the plane, using the procedure described above.

- Generate two sub-polygons: a triangle and a trapezoid.
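The two clipping steps can be sketched as follows. This is an illustration under stated assumptions: the intersection points are found here with signed distances to the plane rather than with the Plücker-based procedure of the paper, and the function name and argument layout are hypothetical.

```python
import numpy as np


def clip_triangle(tri, plane_point, plane_normal):
    """Split a triangle crossing a plane into a triangle and a quadrilateral.

    Returns None when all vertices lie on the same side of the plane.
    """
    tri = np.asarray(tri, dtype=float)
    dist = (tri - np.asarray(plane_point, float)) @ np.asarray(plane_normal, float)
    positive = dist > 0.0
    if positive.all() or (~positive).all():
        return None
    # The lone vertex is the one that sits alone on its side of the plane.
    if positive.sum() == 1:
        lone = int(np.flatnonzero(positive)[0])
    else:
        lone = int(np.flatnonzero(~positive)[0])
    nxt, prv = (lone + 1) % 3, (lone + 2) % 3
    a, b, c = tri[lone], tri[nxt], tri[prv]
    da, db, dc = dist[lone], dist[nxt], dist[prv]
    # Intersection points of edges a-b and a-c with the plane.
    i_ab = a + (da / (da - db)) * (b - a)
    i_ac = a + (da / (da - dc)) * (c - a)
    small_triangle = np.array([a, i_ab, i_ac])
    quad = np.array([i_ab, b, c, i_ac])
    return small_triangle, quad


# Triangle crossing the plane z = 0 with one vertex above and two below.
small, quad = clip_triangle([[0, 0, 1], [-1, 0, -1], [1, 0, -1]],
                            plane_point=[0, 0, 0], plane_normal=[0, 0, 1])
```

Both sub-polygons keep the vertex ordering of the input triangle, so their orientation is preserved.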

5.4. Proposed Approach Implementation and Its Convergence Properties

| Algorithm 1 VSD based on Plücker coordinates |

| Input: 3D mesh Implementation: For each , the following actions are performed in order:

Classification: For , triangle is classified as visible if , otherwise as non-visible. |

- the M points in step (1) are selected either uniformly at random or by adopting the minimum distance approach; and

- the K rays in step (2) are generated uniformly at random.

- to be the region obtained by intersecting with ; and

- to be the region obtained by intersecting with and containing .

- the M points in step (1) are selected either uniformly at random or by adopting the minimum distance approach; and

- the K rays in step (2) are generated according to the Fibonacci lattice distribution.
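The sampling choices named in these assumptions can be sketched as below. The function names are hypothetical, only the uniformly random variant of the point selection is shown (the minimum distance approach is not), and the Fibonacci lattice uses one common parametrisation based on the golden angle.

```python
import numpy as np


def sample_triangle(a, b, c, count, rng):
    """Draw `count` points uniformly at random inside triangle (a, b, c)."""
    r1 = np.sqrt(rng.random(count))[:, None]
    r2 = rng.random(count)[:, None]
    # The square root keeps the density uniform over the triangle area.
    return (1.0 - r1) * a + r1 * (1.0 - r2) * b + r1 * r2 * c


def random_directions(count, rng):
    """Draw `count` unit vectors uniformly at random on the sphere."""
    d = rng.normal(size=(count, 3))
    return d / np.linalg.norm(d, axis=1, keepdims=True)


def fibonacci_directions(count):
    """Return `count` unit vectors arranged on a spherical Fibonacci lattice."""
    i = np.arange(count)
    z = 1.0 - (2.0 * i + 1.0) / count          # evenly spaced heights
    phi = i * np.pi * (3.0 - np.sqrt(5.0))     # golden-angle increments
    rho = np.sqrt(1.0 - z * z)
    return np.stack([rho * np.cos(phi), rho * np.sin(phi), z], axis=1)


rng = np.random.default_rng(0)
a, b, c = np.array([0.0, 0, 0]), np.array([1.0, 0, 0]), np.array([0.0, 1, 0])
points = sample_triangle(a, b, c, 8, rng)   # M = 8 sample points
rays_random = random_directions(16, rng)    # K = 16 random rays
rays_lattice = fibonacci_directions(16)     # K = 16 lattice rays
```

The lattice is deterministic, so repeated runs produce the same set of ray directions, whereas the random variant changes with the seed.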

6. Results

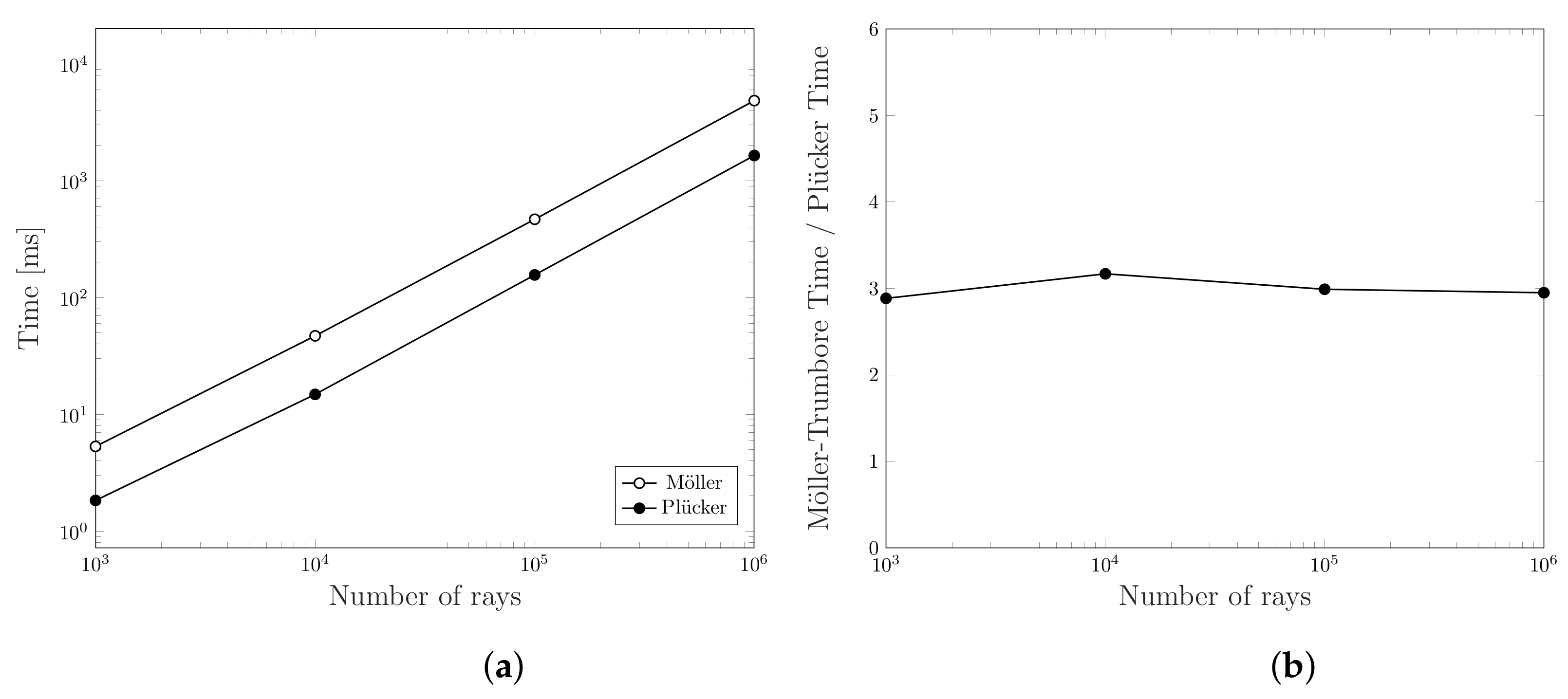

6.1. Intersection Algorithms Comparison

- Generate a set of triangles randomly within a cubic volume of .

- Create a set of points by sampling the surface of a sphere, with radius , centred in the cubic volume, using the Fibonacci distribution.

- For each sampled point S, generate a ray starting from the volume centre and passing through S, thus generating a set of rays.

- For each generated ray, check whether it is occluded by at least one of the generated triangles.
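The occlusion check in the last step can be sketched with a Plücker-based ray-triangle test. This is an illustration under assumptions, not the benchmarked implementation: the function names are hypothetical, rays that graze an edge or a vertex are counted as misses, and the ray directions are passed in so that any sampling scheme can be used.

```python
import numpy as np


def plucker(p, q):
    """Plücker coordinates (direction, moment) of the oriented line from p to q."""
    return q - p, np.cross(p, q)


def side(l, r):
    """Permuted inner product of two lines in Plücker coordinates."""
    return np.dot(l[0], r[1]) + np.dot(l[1], r[0])


def ray_hits_triangle(origin, direction, tri):
    """True if the ray from `origin` along `direction` crosses the triangle."""
    v0, v1, v2 = tri
    ray = plucker(origin, origin + direction)
    s = np.array([side(ray, plucker(v0, v1)),
                  side(ray, plucker(v1, v2)),
                  side(ray, plucker(v2, v0))])
    # The supporting line crosses the triangle interior only if it passes
    # all three edges with the same orientation.
    if not (np.all(s > 0.0) or np.all(s < 0.0)):
        return False
    # The three values sum to direction . normal, so the denominator
    # below is non-zero whenever the sign test succeeds.
    normal = np.cross(v1 - v0, v2 - v0)
    t = np.dot(v0 - origin, normal) / np.dot(direction, normal)
    return bool(t > 0.0)  # keep only hits in front of the ray origin


def occluded(origin, directions, triangles):
    """For each direction, report whether at least one triangle blocks the ray."""
    return np.array([any(ray_hits_triangle(origin, d, t) for t in triangles)
                     for d in directions])


centre = np.zeros(3)
triangles = np.array([[[-1.0, -1, 1], [1, -1, 1], [0, 1, 1]]])  # one triangle at z = 1
directions = np.array([[0.0, 0, 1], [0, 0, -1], [1, 0, 0]])
mask = occluded(centre, directions, triangles)
```

Only the first ray is blocked: the second points away from the triangle and the third runs parallel to its plane.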

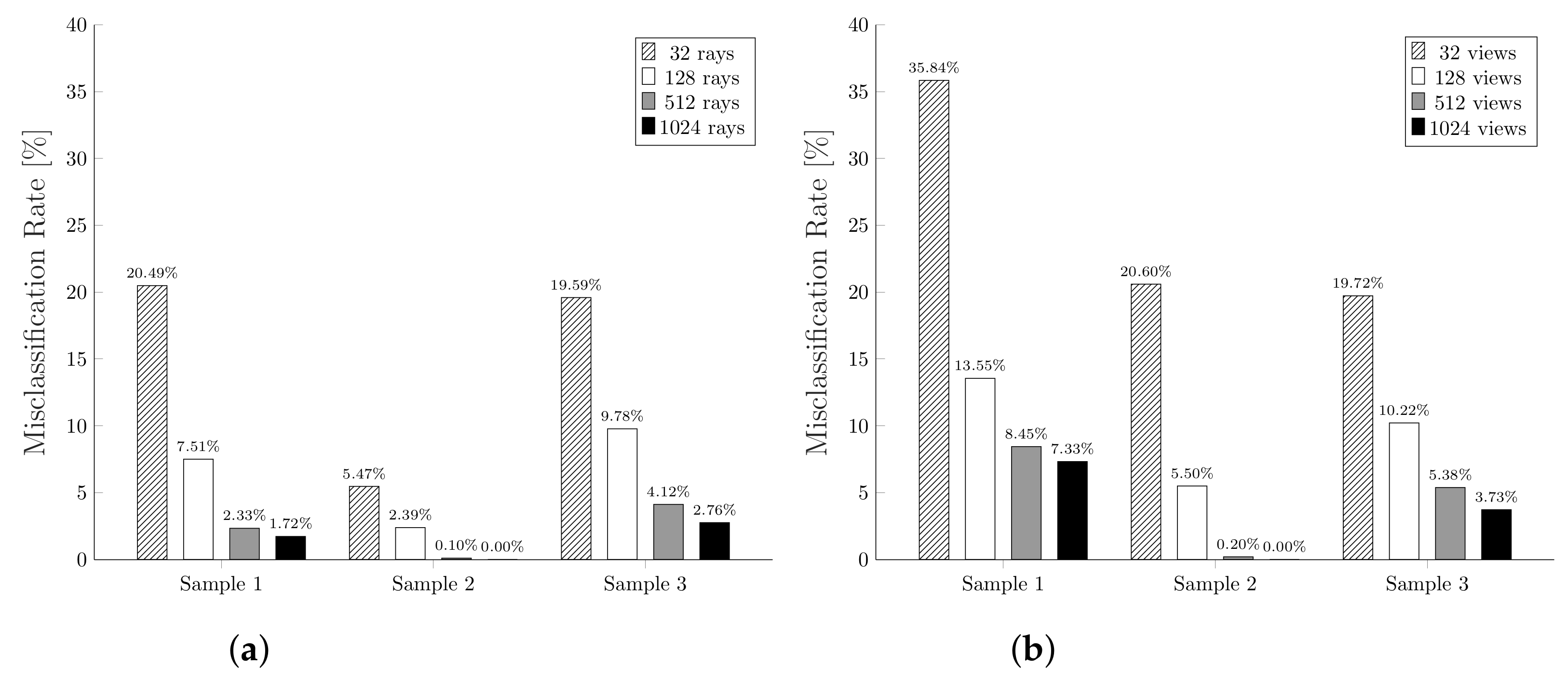

6.2. Comparison with a State-of-the-Art Method

- Given a number of views V, V points are sampled, using the Fibonacci distribution, on the surface of a sphere that fully encloses the triangular mesh. The set of camera directions contains all the rays starting from each sampled point and directed to the sphere centre.

- For each direction r, the depth map of the corresponding camera view is computed. Using this map, the ambient occlusion term is computed for each pixel. Each value is then added to the corresponding projected triangle vertex.

- For each vertex, the partial results obtained for each direction r, i.e., for each view, are averaged to obtain a unique global value.

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Chen, S.; Li, Y.; Kwok, N.M. Active vision in robotic systems: A survey of recent developments. Int. J. Robot. Res. 2011, 30, 1343–1377.

- Posada, J.; Toro, C.; Barandiaran, I.; Oyarzun, D.; Stricker, D.; de Amicis, R.; Pinto, E.B.; Eisert, P.; Döllner, J.; Vallarino, I. Visual Computing as a Key Enabling Technology for Industrie 4.0 and Industrial Internet. IEEE Comput. Graph. Appl. 2015, 35, 26–40.

- Rossi, A.; Barbiero, M.; Carli, R. Vostok: 3D scanner simulation for industrial robot environments. ELCVIA Electron. Lett. Comput. Vis. Image Anal. 2020, 19, 71–84.

- Okino, N.; Kakazu, Y.; Morimoto, M. Extended Depth-Buffer Algorithms for Hidden-Surface Visualization. IEEE Comput. Graph. Appl. 1984, 4, 79–88.

- Uysal, M.; Sen, B.; Celik, C. Hidden surface removal using BSP tree with CUDA. Glob. J. Technol. 3rd World Conf. Inf. Technol. WCIT-2012 2013, 3, 238–243.

- Warnock, J.E. A Hidden Surface Algorithm for Computer Generated Halftone Pictures. Ph.D. Thesis, Utah University Salt Lake City Department of Computer Science, Salt Lake City, UT, USA, 1969.

- Sutherland, I.E.; Sproull, R.F.; Schumacker, A. A characterization of ten hidden-surface algorithms. ACM Comput. Surv. (CSUR) 1974, 6, 1–55.

- Vaněček, G., Jr. Back-face culling applied to collision detection of polyhedra. J. Vis. Comput. Animat. 1994, 5, 55–63. Available online: https://onlinelibrary.wiley.com/doi/pdf/10.1002/vis.4340050105 (accessed on 1 June 2021).

- Durand, F.; Drettakis, G.; Puech, C. The 3D visibility complex: A new approach to the problems of accurate visibility. In Rendering Techniques ’96; Pueyo, X., Schröder, P., Eds.; Springer: Vienna, Austria, 1996; pp. 245–256.

- Cignoni, P.; Callieri, M.; Corsini, M.; Dellepiane, M.; Ganovelli, F.; Ranzuglia, G. MeshLab: An Open-Source Mesh Processing Tool. In Proceedings of the Eurographics Italian Chapter Conference, Salerno, Italy, 2–4 July 2008; Scarano, V., Chiara, R.D., Erra, U., Eds.; The Eurographics Association: Salerno, Italy, 2008.

- Möller, T.; Trumbore, B. Fast, Minimum Storage Ray-Triangle Intersection. J. Graph. Tools 1997, 2, 21–28.

- Nirenstein, S.; Blake, E.; Gain, J. Exact From-Region Visibility Culling. In Proceedings of the Eurographics Workshop on Rendering, Pisa, Italy, 26–28 June 2002; Debevec, P., Gibson, S., Eds.; The Eurographics Association: Pisa, Italy, 2002.

- Durand, F. 3D Visibility: Analytical Study and Applications. Ph.D. Thesis, Université Joseph Fourier, Saint-Martin-d’Hères, France, 1999.

- Bittner, J.; Wonka, P. Visibility in Computer Graphics. Environ. Plan. B Plan. Des. 2003, 30, 729–755.

- Jiménez, J.J.; Ogáyar, C.J.; Noguera, J.M.; Paulano, F. Performance analysis for GPU-based ray-triangle algorithms. In Proceedings of the 2014 International Conference on Computer Graphics Theory and Applications (GRAPP), Lisbon, Portugal, 5–8 January 2014; pp. 1–8.

- Havel, J.; Herout, A. Yet Faster Ray-Triangle Intersection (Using SSE4). IEEE Trans. Vis. Comput. Graph. 2010, 16, 434–438.

- Altin, N.; Yazgan, E. RCS prediction using fast ray tracing in Plücker coordinates. In Proceedings of the 2013 7th European Conference on Antennas and Propagation (EuCAP), Gothenburg, Sweden, 8–12 April 2013; pp. 284–288.

- Kobbelt, L.; Vorsatz, J.; Seidel, H.P. Multiresolution Hierarchies on Unstructured Triangle Meshes. Comput. Geom. J. Theory Appl. 1999, 14, 5–24.

- Plantinga, H.; Dyer, C.R. Visibility, occlusion, and the aspect graph. Int. J. Comput. Vis. 1990, 5, 137–160.

- Zhukov, S.; Iones, A.; Kronin, G. An ambient light illumination model. In Rendering Techniques ’98; Drettakis, G., Max, N., Eds.; Springer: Vienna, Austria, 1998; pp. 45–55.

- Méndez-Feliu, À.; Sbert, M. From obscurances to ambient occlusion: A survey. Vis. Comput. 2009, 25, 181–196.

- Pellegrini, M. Ray Shooting and Lines in Space. In Handbook of Discrete and Computational Geometry; Chapman and Hall/CRC: Boca Raton, FL, USA, 2004; Chapter 41.

- Arvo, J. State of the Art in Monte Carlo Ray Tracing for Realistic Image Synthesis: Stratified sampling of 2-manifolds. Siggraph 2001 Course 29 2001, 29, 41–64.

- Bridson, R. Fast Poisson disk sampling in arbitrary dimensions. In Proceedings of the SIGGRAPH Sketches, San Diego, CA, USA, 9 August 2007; p. 22.

- Muller, M.E. A Note on a Method for Generating Points Uniformly on N-Dimensional Spheres. Commun. ACM 1959, 2, 19–20.

- González, Á. Measurement of areas on a sphere using Fibonacci and latitude–longitude lattices. Math. Geosci. 2010, 42, 49.

- Keinert, B.; Innmann, M.; Sänger, M.; Stamminger, M. Spherical Fibonacci Mapping. ACM Trans. Graph. 2015, 34, 1–7.

- Březina, J.; Exner, P. Fast algorithms for intersection of non-matching grids using Plücker coordinates. Comput. Math. Appl. 2017.

- Jones, R. Intersecting a Ray and a Triangle with Plücker Coordinates. RTNews 2000, 13, 67.

- Shoemake, K. Plücker Coordinate Tutorial. RTNews 1998, 11, 1.

- Bavoil, L.; Sainz, M. Screen Space Ambient Occlusion. NVIDIA Developer Information. 2008. Available online: http://developer.nvidia.com (accessed on 1 June 2021).

- MeshLab GitHub Repository. Available online: https://github.com/cnr-isti-vclab/meshlab (accessed on 1 June 2021).

- Bittner, J.; Havran, V.; Slavík, P. Hierarchical visibility culling with occlusion trees. In Proceedings of the Computer Graphics International (Cat. No.98EX149), Hannover, Germany, 26 June 1998; pp. 207–219.

| Rays / Views | MeshLab VSD Time [s] | MeshLab VSD M. E. Rate [%] | Plücker VSD Time [s] | Plücker VSD M. E. Rate [%] |
|---|---|---|---|---|
| 1000 | 0.58 | 6.39 | 2.29 | 1.93 |
| 2500 | 1.27 | 4.56 | 5.70 | 0.61 |
| 5000 | 2.41 | 3.75 | 11.39 | 0.61 |
| 7500 | 3.67 | 2.84 | 16.99 | 0.30 |
| 10,000 | 4.77 | 1.72 | 22.66 | 0.00 |
| 25,000 | 11.74 | 1.72 | 44.31 | 0.00 |
| 50,000 | 24.23 | 1.22 | 114.20 | 0.00 |
| 75,000 | 34.79 | 1.22 | 170.50 | 0.00 |
| 100,000 | 48.51 | 1.12 | 226.92 | 0.00 |
| 200,000 | 91.08 | 1.12 | 456.20 | 0.00 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rossi, A.; Barbiero, M.; Scremin, P.; Carli, R. Robust Visibility Surface Determination in Object Space via Plücker Coordinates. J. Imaging 2021, 7, 96. https://doi.org/10.3390/jimaging7060096
