Feature Extraction for Finger-Vein-Based Identity Recognition

Abstract

1. Introduction

2. Related Work

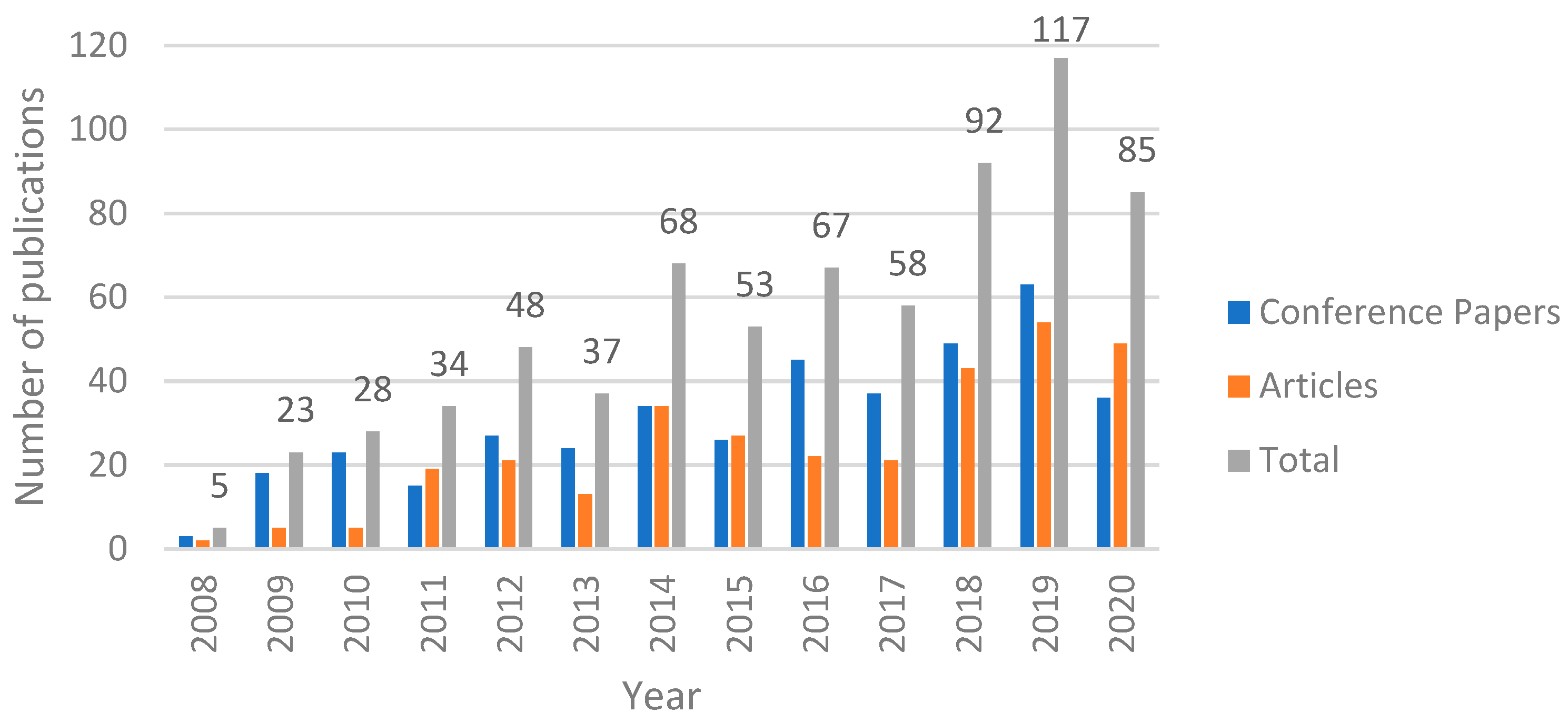

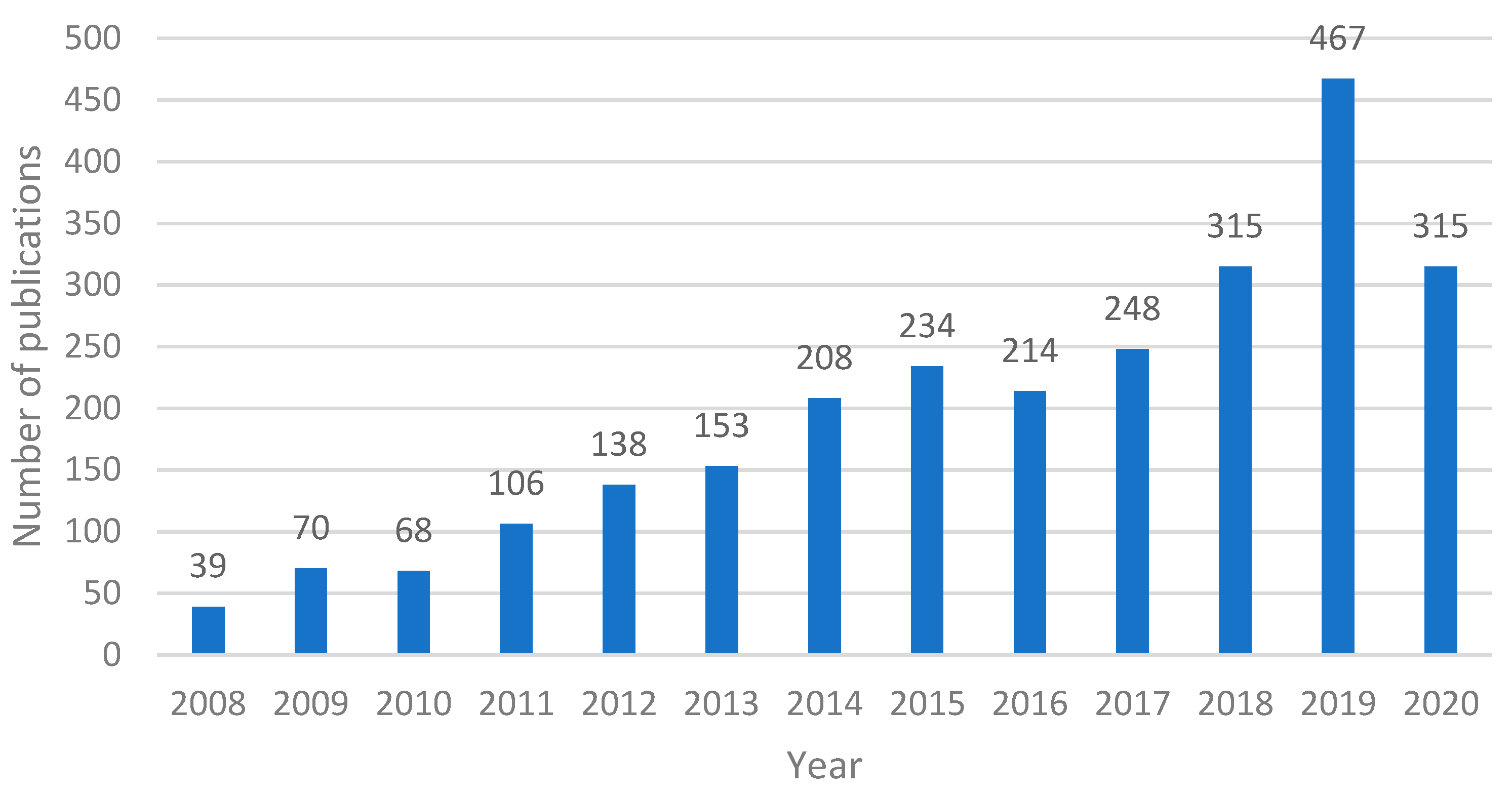

3. Literature Analysis

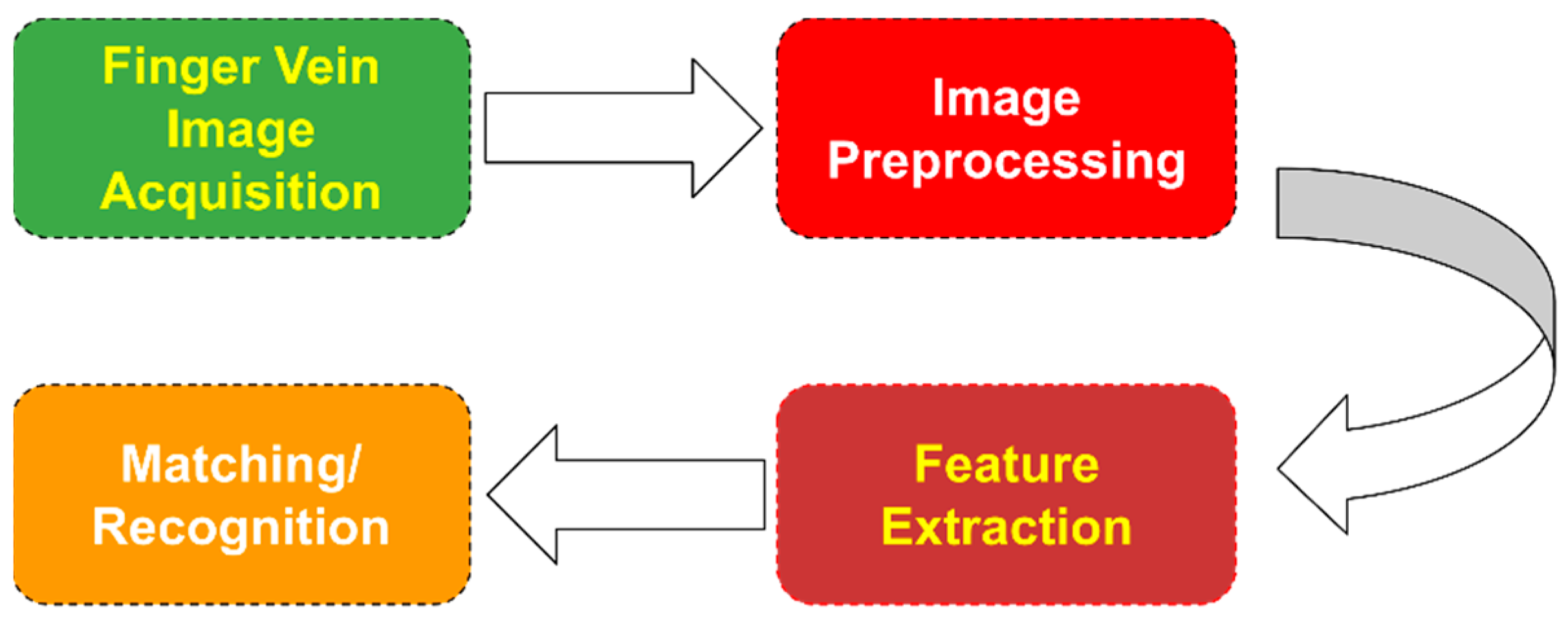

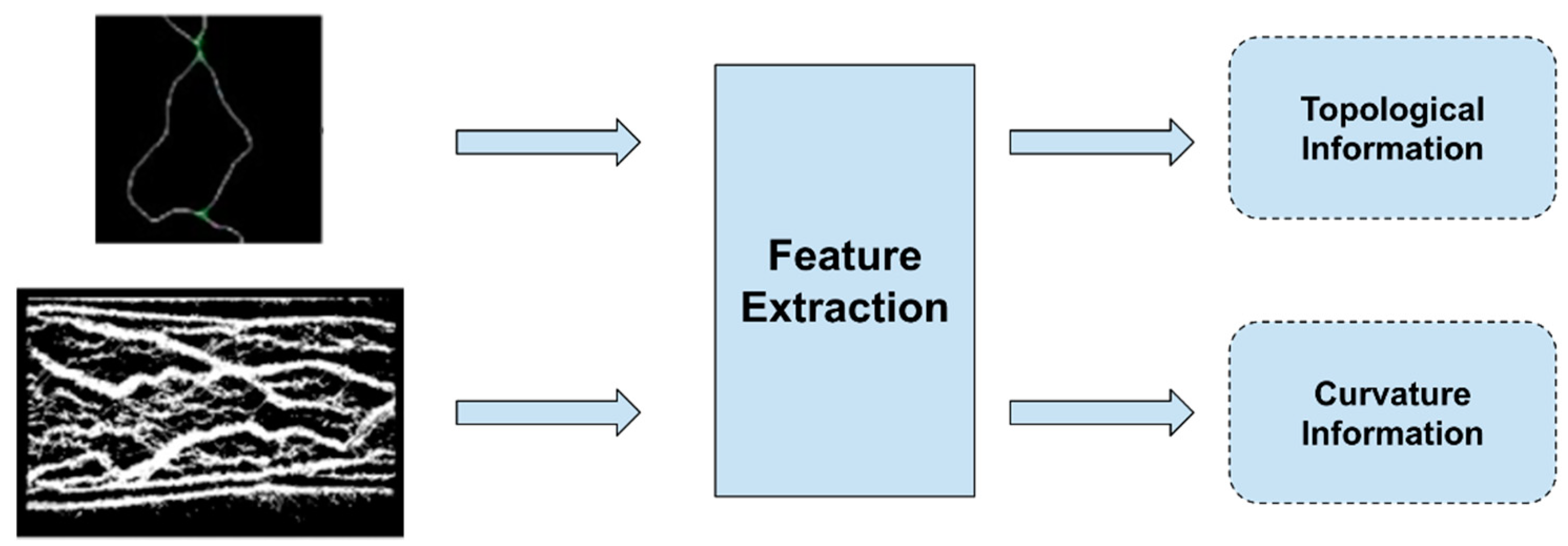

4. Finger Vein Feature Extraction

4.1. Feature Extraction Based on Vein Patterns

4.2. Feature Extraction Based on Dimensionality Reduction

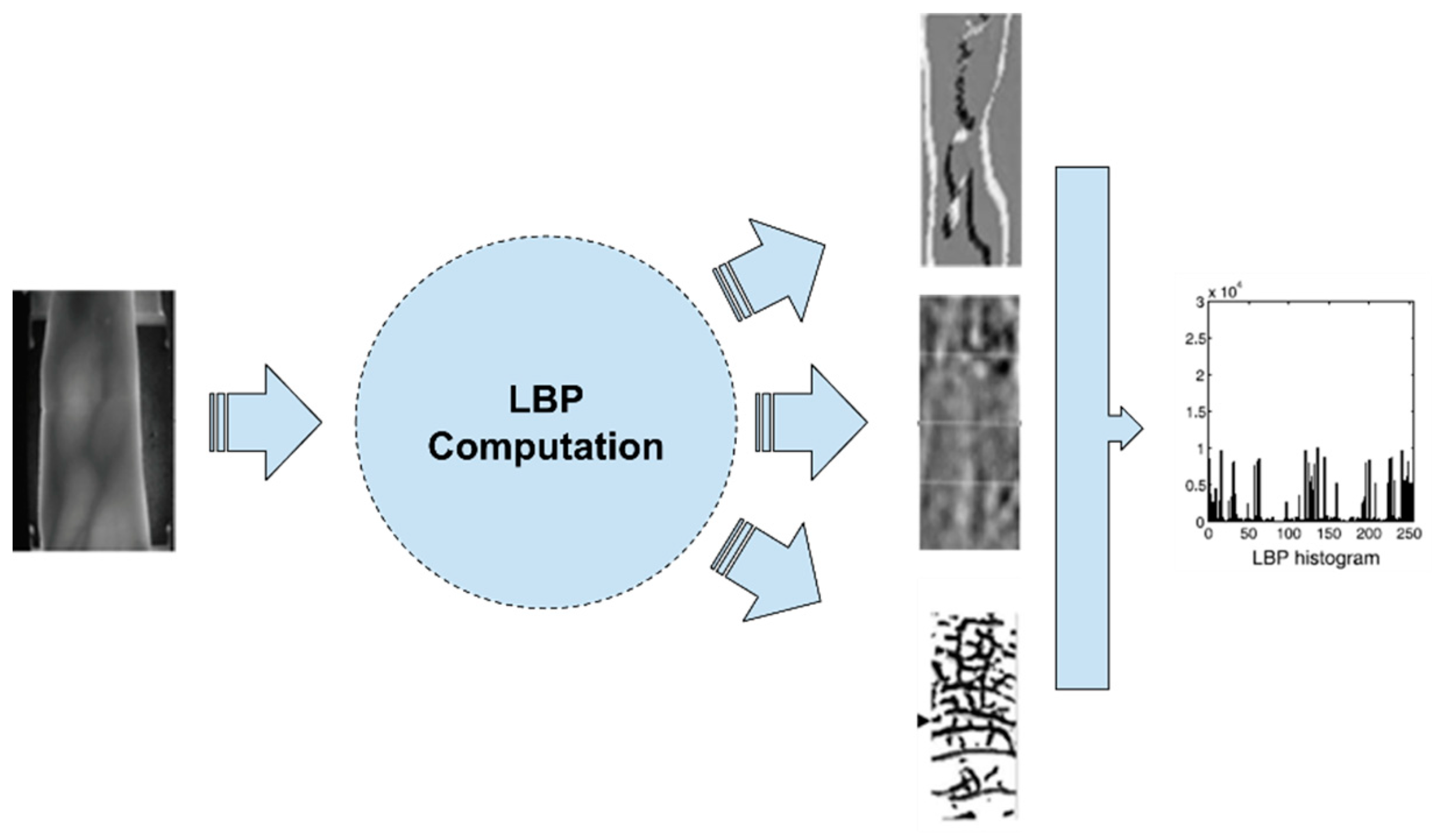

4.3. Feature Extraction Based on Local Binary Patterns

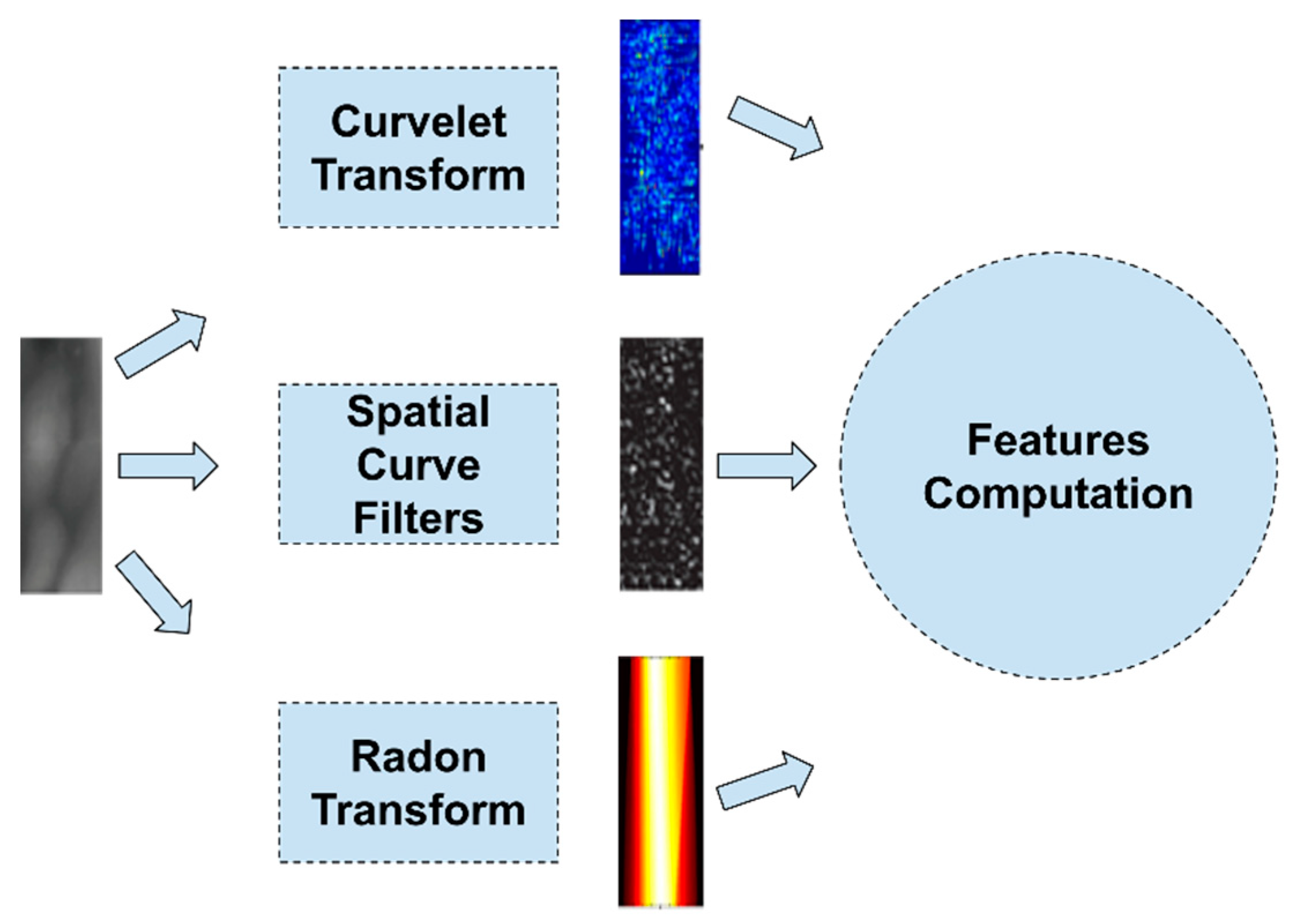

4.4. Feature Extraction Based on Image Transformations

4.5. Other Feature Extraction Methods

5. Feature Extraction vs Feature Learning

6. Implementation Aspects

6.1. Benchmark Datasets

6.2. Software Frameworks/Libraries

6.3. Hardware Topologies/Configuration

7. Conclusions and Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Jain, A.K.; Ross, A.; Prabhakar, S. An Introduction to Biometric Recognition. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 4–20. [Google Scholar] [CrossRef]

- Yang, L.; Yang, G.; Yin, Y.; Zhou, L. A Survey of Finger Vein Recognition. In Proceedings of the Chinese Conference on Biometric Recognition, Shenyang, China, 7–9 November 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 234–243. [Google Scholar]

- Syazana-Itqan, K.; Syafeeza, A.R.; Saad, N.M.; Hamid, N.A.; Bin Mohd Saad, W.H. A Review of Finger-Vein Biometrics Identification Approaches. Indian J. Sci. Technol. 2016, 9. [Google Scholar] [CrossRef]

- Dev, R.; Khanam, R. Review on Finger Vein Feature Extraction Methods. In Proceedings of the IEEE International Conference on Computing, Communication and Automation, ICCCA 2017, Greater Noida, India, 5–6 May 2017; pp. 1209–1213. [Google Scholar] [CrossRef]

- Shaheed, K.; Liu, H.; Yang, G.; Qureshi, I.; Gou, J.; Yin, Y. A Systematic Review of Finger Vein Recognition Techniques. Information 2018, 9, 213. [Google Scholar] [CrossRef]

- Mohsin, A.H.; Jalood, N.S.; Baqer, M.J.; Alamoodi, A.H.; Almahdi, E.M.; Albahri, A.S.; Alsalem, M.A.; Mohammed, K.I.; Ameen, H.A.; Garfan, S.; et al. Finger Vein Biometrics: Taxonomy Analysis, Open Challenges, Future Directions, and Recommended Solution for Decentralised Network Architectures. IEEE Access 2020, 8, 9821–9845. [Google Scholar] [CrossRef]

- Elahee, F.; Mim, F.; Naquib, F.B.; Tabassom, S.; Hossain, T.; Kalpoma, K.A. Comparative Study of Deep Learning Based Finger Vein Biometric Authentication Systems. In Proceedings of the 2020 2nd International Conference on Advanced Information and Communication Technology (ICAICT), Dhaka, Bangladesh, 28–29 November 2020; pp. 444–448. [Google Scholar]

- The Largest Database of Peer-Reviewed Literature-Scopus | Elsevier Solutions. Available online: https://www.elsevier.com/solutions/scopus (accessed on 17 April 2021).

- Google Scholar. Available online: https://scholar.google.com/ (accessed on 17 April 2021).

- Miura, N.; Nagasaka, A.; Miyatake, T. Feature Extraction of Finger-Vein Patterns Based on Repeated Line Tracking and Its Application to Personal Identification. Mach. Vis. Appl. 2004, 15, 194–203. [Google Scholar] [CrossRef]

- Miura, N.; Nagasaka, A.; Miyatake, T. Extraction of Finger-Vein Patterns Using Maximum Curvature Points in Image Profiles. IEICE Trans. Inf. Syst. 2007, E90-D, 1185–1194. [Google Scholar] [CrossRef]

- Choi, J.H.; Song, W.; Kim, T.; Lee, S.-R.; Kim, H.C. Finger Vein Extraction Using Gradient Normalization and Principal Curvature. Image Process. Mach. Vis. Appl. II 2009, 7251, 725111. [Google Scholar] [CrossRef]

- Yu, C.B.; Qin, H.F.; Cui, Y.Z.; Hu, X.Q. Finger-Vein Image Recognition Combining Modified Hausdorff Distance with Minutiae Feature Matching. Interdiscip. Sci. Comput. Life Sci. 2009, 1, 280–289. [Google Scholar] [CrossRef] [PubMed]

- Yang, J.; Shi, Y.; Yang, J.; Jiang, L. A Novel Finger-Vein Recognition Method with Feature Combination. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 2709–2712. [Google Scholar] [CrossRef]

- Yang, J.; Shi, Y.; Yang, J. Finger-Vein Recognition Based on a Bank of Gabor Filters. In Proceedings of the Asian Conference on Computer Vision, Xi’an, China, 23–27 September 2009; pp. 374–383. [Google Scholar]

- Qian, X.; Guo, S.; Li, X.; Zhong, F.; Shao, X. Finger-Vein Recognition Based on the Score Level Moment Invariants Fusion. In Proceedings of the 2009 International Conference on Computational Intelligence and Software Engineering, CiSE 2009, Wuhan, China, 11–13 December 2009; pp. 3–6. [Google Scholar] [CrossRef]

- Wang, K.; Liu, J.; Popoola, O.P.; Feng, W. Finger Vein Identification Based on 2-D Gabor Filter. In Proceedings of the ICIMA 2010: 2010 2nd International Conference on Industrial Mechatronics and Automation, Wuhan, China, 30–31 May 2010; Volume 2, pp. 10–13. [Google Scholar] [CrossRef]

- Song, W.; Kim, T.; Kim, H.C.; Choi, J.H.; Kong, H.J.; Lee, S.R. A Finger-Vein Verification System Using Mean Curvature. Pattern Recognit. Lett. 2011, 32, 1541–1547. [Google Scholar] [CrossRef]

- Yang, J.; Shi, Y.; Wu, R. Finger-Vein Recognition Based on Gabor Features. Biom. Syst. Des. Appl. 2011. [Google Scholar] [CrossRef]

- Xie, S.J.; Yoon, S.; Yang, J.C.; Yu, L.; Park, D.S. Guided Gabor Filter for Finger Vein Pattern Extraction. In Proceedings of the 8th International Conference on Signal Image Technology and Internet Based Systems, SITIS 2012, Sorrento, Italy, 25–29 November 2012; pp. 118–123. [Google Scholar] [CrossRef]

- Venckauskas, A.; Nanevicius, P. Cryptographic Key Generation from Finger Vein. Int. Res. J. Eng. Technol. (IRJET) 2013, 2, 733–738. [Google Scholar]

- Prabhakar, P.; Thomas, T. Finger Vein Identification Based on Minutiae Feature Extraction with Spurious Minutiae Removal. In Proceedings of the 2013 Third International Conference on Advances in Computing and Communications, Cochin, India, 29–31 August 2013; pp. 196–199. [Google Scholar]

- Nivas, S.; Prakash, P. Real-Time Finger-Vein Recognition System. Int. J. Eng. Res. Gen. Sci. 2014, 2, 580–591. [Google Scholar]

- Mohammed, F.E.; Aldaidamony, E.M.; Raid, A.M. Multi Modal Biometric Identification System: Finger Vein and Iris. Int. J. Soft Comput. Eng. 2014, 4, 50–55. [Google Scholar]

- Liu, F.; Yang, G.; Yin, Y.; Wang, S. Singular Value Decomposition Based Minutiae Matching Method for Finger Vein Recognition. Neurocomputing 2014, 145, 75–89. [Google Scholar] [CrossRef]

- Mantrao, N.; Sukhpreet, K. An Efficient Minutiae Matching Method for Finger Vein Recognition. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2015, 5, 657–660. [Google Scholar]

- Prasath, N.; Sivakumar, M. A Comprehensive Approach for Multi Biometric Recognition Using Sclera Vein and Finger Vein Fusion. Int. J. Trends Eng. Technol. 2015, 5, 195–198. [Google Scholar]

- Gupta, P.; Gupta, P. An Accurate Finger Vein Based Verification System. Digit. Signal Process. 2015, 38, 43–52. [Google Scholar] [CrossRef]

- Kaur, K.B.S. Finger Vein Recognition Using Minutiae Extraction and Curve Analysis. Int. J. Sci. Res. (IJSR) 2015, 4, 2402–2405. [Google Scholar]

- Liu, T.; Xie, J.B.; Yan, W.; Li, P.Q.; Lu, H.Z. An Algorithm for Finger-Vein Segmentation Based on Modified Repeated Line Tracking. Imaging Sci. J. 2013, 61, 491–502. [Google Scholar] [CrossRef]

- Kalaimathi, P.; Ganesan, V. Extraction and Authentication of Biometric Finger Vein Using Gradient Boosted Feature Algorithm. In Proceedings of the 2016 International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 6–8 April 2016; pp. 723–726. [Google Scholar]

- Matsuda, Y.; Miura, N.; Nagasaka, A.; Kiyomizu, H.; Miyatake, T. Finger-Vein Authentication Based on Deformation-Tolerant Feature-Point Matching. Mach. Vis. Appl. 2016, 27, 237–250. [Google Scholar] [CrossRef]

- Zou, H.; Zhang, B.; Tao, Z.; Wang, X. A Finger Vein Identification Method Based on Template Matching. J. Phys. Conf. Ser. 2016, 680. [Google Scholar] [CrossRef]

- Brindha, S. Finger Vein Recognition. Int. Res. J. Eng. Technol. (IRJET) 2017, 4, 1298–1300. [Google Scholar]

- Babu, G.S.; Bobby, N.D.; Bennet, M.A.; Shalini, B.; Srilakshmi, K. Multistage Feature Extraction of Finger Vein Patterns Using Gabor Filters. IIOAB J. 2017, 8, 84–91. [Google Scholar]

- Prommegger, B.; Kauba, C.; Uhl, A. Multi-Perspective Finger-Vein Biometrics. In Proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems (BTAS), Redondo Beach, CA, USA, 22–25 October 2018. [Google Scholar]

- Yang, L.; Yang, G.; Yin, Y.; Xi, X. Finger Vein Recognition with Anatomy Structure Analysis. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 1892–1905. [Google Scholar] [CrossRef]

- Yang, L.; Yang, G.; Xi, X.; Su, K.; Chen, Q.; Yin, Y. Finger Vein Code: From Indexing to Matching. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1210–1223. [Google Scholar] [CrossRef]

- Sarala, R.; Yoghalakshmi, E.; Ishwarya, V. Finger Vein Biometric Based Secure Access Control in Smart Home Automation. Int. J. Eng. Adv. Technol. 2019, 8, 851–855. [Google Scholar] [CrossRef]

- Ali, R.W.; Kassim, J.M.; Abdullah, S.N.H.S. Finger Vein Recognition Using Straight Line Approximation Based on Ensemble Learning. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 153–159. [Google Scholar] [CrossRef]

- Ilankumaran, S.; Deisy, C. Multi-Biometric Authentication System Using Finger Vein and Iris in Cloud Computing. Clust. Comput. 2019, 22, 103–117. [Google Scholar] [CrossRef]

- Yang, W.; Ji, W.; Xue, J.H.; Ren, Y.; Liao, Q. A Hybrid Finger Identification Pattern Using Polarized Depth-Weighted Binary Direction Coding. Neurocomputing 2019, 325, 260–268. [Google Scholar] [CrossRef]

- Yong, Y. Research on Technology of Finger Vein Pattern Recognition Based on FPGA. J. Phys. Conf. Ser. 2020, 1453, 012037. [Google Scholar] [CrossRef]

- Vasquez-Villar, Z.J.; Choquehuanca-Zevallos, J.J.; Ludena-Choez, J.; Mayhua-Lopez, E. Finger Vein Segmentation from Infrared Images Using Spectral Clustering: An Approach for User Indentification. In Proceedings of the 2020 IEEE 10th International Conference on System Engineering and Technology (ICSET), Shah Alam, Malaysia, 9 November 2020; pp. 245–249. [Google Scholar]

- Beng, T.S.; Rosdi, B.A. Finger-Vein Identification Using Pattern Map and Principal Component Analysis. In Proceedings of the 2011 IEEE International Conference on Signal and Image Processing Applications (ICSIPA 2011), Kuala Lumpur, Malaysia, 16–18 November 2011; pp. 530–534. [Google Scholar] [CrossRef]

- Liu, Z.; Yin, Y.; Wang, H.; Song, S.; Li, Q. Finger Vein Recognition with Manifold Learning. J. Netw. Comput. Appl. 2010, 33, 275–282. [Google Scholar] [CrossRef]

- Guan, F.; Wang, K.; Wu, Q. Bi-Directional Weighted Modular B2DPCA for Finger Vein Recognition. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010; pp. 93–97. [Google Scholar] [CrossRef]

- Ushapriya, A.; Subramani, M. Highly Secure and Reliable User Identification Based on Finger Vein Patterns; Global Journals Inc.: Framingham, MA, USA, 2011; Volume 11. [Google Scholar]

- Wu, J.D.; Liu, C.T. Finger-Vein Pattern Identification Using SVM and Neural Network Technique. Expert Syst. Appl. 2011, 38, 14284–14289. [Google Scholar] [CrossRef]

- Yang, G.; Xi, X.; Yin, Y. Finger Vein Recognition Based on (2D)²PCA and Metric Learning. J. Biomed. Biotechnol. 2012. [Google Scholar] [CrossRef] [PubMed]

- Damavandinejadmonfared, S.; Mobarakeh, A.K.; Pashna, M.; Gou, J.; Rizi, S.M.; Nazari, S.; Khaniabadi, S.M.; Bagheri, M.A. Finger Vein Recognition Using PCA-Based Methods. World Acad. Sci. Eng. Technol. 2012, 66. [Google Scholar] [CrossRef]

- Hajian, A.; Damavandinejadmonfared, S. Optimal Feature Extraction Dimension in Finger Vein Recognition Using Kernel Principal Component Analysis. World Acad. Sci. Eng. Technol. 2014, 8, 1637–1640. [Google Scholar]

- You, L.; Wang, J.; Li, H.; Li, X. Finger Vein Recognition Based on 2DPCA and KMMC. Int. J. Signal Process. Image Process. Pattern Recognit. 2015, 8, 163–170. [Google Scholar] [CrossRef]

- Van, H.T.; Thai, T.T.; Le, T.H. Robust Finger Vein Identification Base on Discriminant Orientation Feature. In Proceedings of the 2015 IEEE International Conference on Knowledge and Systems Engineering, KSE 2015, Ho Chi Minh City, Vietnam, 8–10 October 2015; pp. 348–353. [Google Scholar] [CrossRef]

- Qiu, S.; Liu, Y.; Zhou, Y.; Huang, J.; Nie, Y. Finger-Vein Recognition Based on Dual-Sliding Window Localization and Pseudo-Elliptical Transformer. Expert Syst. Appl. 2016, 64, 618–632. [Google Scholar] [CrossRef]

- Xi, X.; Yang, L.; Yin, Y. Learning Discriminative Binary Codes for Finger Vein Recognition. Pattern Recognit. 2017, 66, 26–33. [Google Scholar] [CrossRef]

- Yang, W.; Wang, S.; Hu, J.; Zheng, G.; Valli, C. A Fingerprint and Finger-Vein Based Cancelable Multi-Biometric System. Pattern Recognit. 2018, 78, 242–251. [Google Scholar] [CrossRef]

- Hu, N.; Ma, H.; Zhan, T. Finger Vein Biometric Verification Using Block Multi-Scale Uniform Local Binary Pattern Features and Block Two-Directional Two-Dimension Principal Component Analysis. Optik 2020, 208, 163664. [Google Scholar] [CrossRef]

- Zhongbo, Z.; Siliang, M.; Xiao, H. Multiscale Feature Extraction of Finger-Vein Patterns Based on Curvelets and Local Interconnection Structure Neural Network. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; pp. 145–148. [Google Scholar] [CrossRef]

- Lee, E.C.; Lee, H.C.; Park, K.R. Finger Vein Recognition Using Minutia-Based Alignment and Local Binary Pattern-Based Feature Extraction. Int. J. Imaging Syst. Technol. 2009, 19, 179–186. [Google Scholar] [CrossRef]

- Lee, H.C.; Kang, B.J.; Lee, E.C.; Park, K.R. Finger Vein Recognition Using Weighted Local Binary Pattern Code Based on a Support Vector Machine. J. Zhejiang Univ. Sci. C 2010, 11, 514–524. [Google Scholar] [CrossRef]

- Park, K.R. Finger Vein Recognition by Combining Global and Local Features Based on SVM. Comput. Inform. 2011, 30, 295–309. [Google Scholar]

- Lee, E.C.; Jung, H.; Kim, D. New Finger Biometric Method Using near Infrared Imaging. Sensors 2011, 11, 2319–2333. [Google Scholar] [CrossRef]

- Yang, Y.; Yang, G.; Wang, S. Finger Vein Recognition Based on Multi-Instance. Int. J. Digit. Content Technol. Its Appl. 2012, 6, 86–94. [Google Scholar] [CrossRef]

- Yang, G.; Xi, X.; Yin, Y. Finger Vein Recognition Based on a Personalized Best Bit Map. Sensors 2012, 12, 1738–1757. [Google Scholar] [CrossRef] [PubMed]

- Lu, Y.; Yoon, S.; Xie, S.J.; Yang, J.; Wang, Z.; Park, D.S. Finger Vein Recognition Using Generalized Local Line Binary Pattern. KSII Trans. Internet Inf. Syst. 2014, 8, 1766–1784. [Google Scholar] [CrossRef]

- Dong, S.; Yang, J.; Chen, Y.; Wang, C.; Zhang, X.; Park, D.S. Finger Vein Recognition Based on Multi-Orientation Weighted Symmetric Local Graph Structure. KSII Trans. Internet Inf. Syst. 2015, 9, 4126–4142. [Google Scholar] [CrossRef]

- William, A.; Ong, T.S.; Tee, C.; Goh, M.K.O. Multi-Instance Finger Vein Recognition Using Local Hybrid Binary Gradient Contour. In Proceedings of the 2015 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, APSIPA ASC 2015, Hong Kong, China, 16–19 December 2015; pp. 1226–1231. [Google Scholar] [CrossRef]

- Khusnuliawati, H.; Fatichah, C.; Soelaiman, R. A Comparative Study of Finger Vein Recognition by Using Learning Vector Quantization. IPTEK J. Proc. Ser. 2017, 136. [Google Scholar] [CrossRef]

- Dong, S.; Yang, J.; Wang, C.; Chen, Y.; Sun, D. A New Finger Vein Recognition Method Based on the Difference Symmetric Local Graph Structure (DSLGS). Int. J. Signal Process. Image Process. Pattern Recognit. 2015, 8, 71–80. [Google Scholar] [CrossRef]

- Yang, W.; Qin, C.; Wang, X.; Liao, Q. Cross Section Binary Coding for Fusion of Finger Vein and Finger Dorsal Texture. In Proceedings of the IEEE International Conference on Industrial Technology, Taipei, Taiwan, 14–17 March 2016; pp. 742–745. [Google Scholar] [CrossRef]

- Liu, H.; Yang, G.; Yang, L.; Su, K.; Yin, Y. Anchor-Based Manifold Binary Pattern for Finger Vein Recognition. Sci. China Inf. Sci. 2019, 62, 1–16. [Google Scholar] [CrossRef]

- Liu, H.; Yang, G.; Yang, L.; Yin, Y. Learning Personalized Binary Codes for Finger Vein Recognition. Neurocomputing 2019, 365, 62–70. [Google Scholar] [CrossRef]

- Su, K.; Yang, G.; Wu, B.; Yang, L.; Li, D.; Su, P.; Yin, Y. Human Identification Using Finger Vein and ECG Signals. Neurocomputing 2019, 332, 111–118. [Google Scholar] [CrossRef]

- Lv, G.-L.; Shen, L.; Yao, Y.-D.; Wang, H.-X.; Zhao, G.-D. Feature-Level Fusion of Finger Vein and Fingerprint Based on a Single Finger Image: The Use of Incompletely Closed Near-Infrared Equipment. Symmetry 2020, 12, 709. [Google Scholar] [CrossRef]

- Zhang, Z.B.; Wu, D.Y.; Ma, S.L.; Ma, J. Multiscale Feature Extraction of Finger-Vein Patterns Based on Wavelet and Local Interconnection Structure Neural Network. In Proceedings of the 2005 International Conference on Neural Networks and Brain, Beijing, China, 13–15 October 2005; Volume 2, pp. 1081–1084. [Google Scholar]

- Wu, J.D.; Ye, S.H. Driver Identification Using Finger-Vein Patterns with Radon Transform and Neural Network. Expert Syst. Appl. 2009, 36, 5793–5799. [Google Scholar] [CrossRef]

- Rattey, P.; Lindgren, A. Sampling the 2-D Radon Transform. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 994–1002. [Google Scholar] [CrossRef]

- Li, J.; Pan, Q.; Zhang, H.; Cui, P. Image Recognition Using Radon Transform. In Proceedings of the 2003 IEEE International Conference on Intelligent Transportation Systems, Shanghai, China, 12–15 October 2003; pp. 741–744. [Google Scholar]

- Ramya, V.; Vijaya Kumar, P.; Palaniappan, B. A Novel Design of Finger Vein Recognition for Personal Authentication and Vehicle Security. J. Theor. Appl. Inf. Technol. 2014, 65, 67–75. [Google Scholar]

- Sreekala; Jagadeesh, B. Simulation of Real-Time Embedded Security System for ATM Using Enhanced Finger-Vein Recognition Technique. Int. J. Eng. Technol. Manag. (IJETM) 2014, 2, 38–41. [Google Scholar]

- Gholami, A.; Hassanpour, H. Common Spatial Pattern for Human Identification Based on Finger Vein Images in Radon Space. J. Adv. Comput. Res. Q. 2014, 5, 31–42. [Google Scholar]

- Shrikhande, S.P.; Fadewar, H.S. Finger Vein Recognition Using Discrete Wavelet Packet Transform Based Features. In Proceedings of the 2015 International Conference on Advances in Computing, Communications and Informatics, ICACCI 2015, Kochi, India, 10–13 August 2015; pp. 1646–1651. [Google Scholar] [CrossRef]

- Wang, K.; Yang, X.; Tian, Z.; Du, T. The Finger Vein Recognition Based on Curvelet. In Proceedings of the 33rd Chinese Control Conference (CCC 2014), Nanjing, China, 28–30 July 2014; pp. 4706–4711. [Google Scholar] [CrossRef]

- Shareef, A.Q.; George, L.E.; Fadel, R.E. Finger Vein Recognition Using Haar Wavelet Transform. Int. J. Comput. Sci. Mob. Comput. 2015, 4, 1–7. [Google Scholar]

- Qin, H.; He, X.; Yao, X.; Li, H. Finger-Vein Verification Based on the Curvature in Radon Space. Expert Syst. Appl. 2017, 82, 151–161. [Google Scholar] [CrossRef]

- Yang, J.; Shi, Y.; Jia, G. Finger-Vein Image Matching Based on Adaptive Curve Transformation. Pattern Recognit. 2017, 66, 34–43. [Google Scholar] [CrossRef]

- Janney, J.B.; Divakaran, S.; Shankar, G.U. Finger Vein Recognition System for Authentication of Patient Data in Hospital. Int. J. Pharma Bio Sci. 2017, 8, 5–10. [Google Scholar] [CrossRef]

- Subramaniam, B.; Radhakrishnan, S. Multiple Features and Classifiers for Vein Based Biometric Recognition. Biomed. Res. 2018, 8–13. [Google Scholar] [CrossRef]

- Mei, C.L.; Xiao, X.; Liu, G.H.; Chen, Y.; Li, Q.A. Feature Extraction of Finger-Vein Image Based on Morphologic Algorithm. In Proceedings of the 6th International Conference on Fuzzy Systems and Knowledge Discovery, FSKD 2009, Tianjin, China, 14–16 August 2009; Volume 3, pp. 407–411. [Google Scholar]

- Chen, L.; Zheng, H. Finger Vein Image Recognition Based on Tri-Value Template Fuzzy Matching. Wuhan Daxue Xuebao/Geomat. Inf. Sci. Wuhan Univ. 2011, 36, 157–162. [Google Scholar]

- Mahri, N.; Azmin, S.; Suandi, S.; Rosdi, B.A. Finger Vein Recognition Algorithm Using Phase Only Correlation. Electron. Eng. 2012, 2–7. [Google Scholar] [CrossRef]

- Xiong, X.; Chen, J.; Yang, S.; Cheng, D. Study of Human Finger Vein Features Extraction Algorithm Based on DM6437. In Proceedings of the ISPACS 2010: 2010 International Symposium on Intelligent Signal Processing and Communication Systems, Chengdu, China, 6–8 December 2010; pp. 6–9. [Google Scholar]

- Tang, D.; Huang, B.; Li, R.; Li, W.; Li, X. Finger Vein Verification Using Occurrence Probability Matrix (OPM). In Proceedings of the 2012 International Joint Conference on Neural Networks (IJCNN), Brisbane, QLD, Australia, 10–15 June 2012; pp. 21–26. [Google Scholar] [CrossRef]

- Xi, X.; Yang, G.; Yin, Y.; Meng, X. Finger Vein Recognition with Personalized Feature Selection. Sensors 2013, 13, 11243–11259. [Google Scholar] [CrossRef] [PubMed]

- Cao, D.; Yang, J.; Shi, Y.; Xu, C. Structure Feature Extraction for Finger-Vein Recognition. In Proceedings of the 2nd IAPR Asian Conference on Pattern Recognition (ACPR 2013), Naha, Japan, 5–8 November 2013; Volume 1, pp. 567–571. [Google Scholar]

- Rajan, R.; Indu, M.G. A Novel Finger Vein Feature Extraction Technique for Authentication. In Proceedings of the 2014 Annual International Conference on Emerging Research Areas: Magnetics, Machines and Drives, Kerala, India, 24–26 July 2014. [Google Scholar]

- Liu, F.; Yin, Y.; Yang, G.; Dong, L.; Xi, X. Finger Vein Recognition with Superpixel-Based Features. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–8. [Google Scholar]

- Soundarya, M.; Rohini, J.; Nithya, V.P.V.R.S. Advanced Security System Using Finger Vein Recognition. Int. J. Eng. Comput. Sci. 2015, 4, 10804–10809. [Google Scholar]

- Jadhav, M.; Nerkar, P.M. FPGA-Based Finger Vein Recognition System for Personal Verification. Int. J. Eng. Res. Gen. Sci. 2015, 3, 382–388. [Google Scholar]

- You, L.; Li, H.; Wang, J. Finger-Vein Recognition Algorithm Based on Potential Energy Theory. In Proceedings of the 2015 IEEE 16th International Conference on Communication Technology, Hangzhou, China, 18–20 October 2015. [Google Scholar]

- Chandra Bai, P.S.; Prabu, A.J. A Biometric Recognition System for Human Identification Using Finger Vein Patterns. Int. J. Emerg. Trends Eng. Dev. 2017, 7, 62–79. [Google Scholar] [CrossRef]

- Banerjee, A.; Basu, S.; Basu, S.; Nasipuri, M. ARTeM: A New System for Human Authentication Using Finger Vein Images. Multimed. Tools Appl. 2018, 77, 5857–5884. [Google Scholar] [CrossRef]

- Kovač, I.; Marák, P. Openfinger: Towards a Combination of Discriminative Power of Fingerprints and Finger Vein Patterns in Multimodal Biometric System. Tatra Mt. Math. Publ. 2020, 77, 109–138. [Google Scholar] [CrossRef]

- Meng, X.; Xi, X.; Li, Z.; Zhang, Q. Finger Vein Recognition Based on Fusion of Deformation Information. IEEE Access 2020, 8, 50519–50530. [Google Scholar] [CrossRef]

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Ahmad Radzi, S.; Khalil-Hani, M.; Bakhteri, R. Finger-Vein Biometric Identification Using Convolutional Neural Network. Turk. J. Electr. Eng. Comput. Sci. 2016, 24, 1863–1878. [Google Scholar] [CrossRef]

- Itqan, K.S.; Syafeeza, A.R.; Gong, F.G.; Mustafa, N.; Wong, Y.C.; Ibrahim, M.M. User Identification System Based on Finger-Vein Patterns Using Convolutional Neural Network. ARPN J. Eng. Appl. Sci. 2016, 11, 3316–3319. [Google Scholar]

- Hong, H.G.; Lee, M.B.; Park, K.R. Convolutional Neural Network-Based Finger-Vein Recognition Using NIR Image Sensors. Sensors 2017, 17, 1297. [Google Scholar] [CrossRef] [PubMed]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Pham, T.; Park, Y.; Nguyen, D.; Kwon, S.; Park, K. Nonintrusive Finger-Vein Recognition System Using NIR Image Sensor and Accuracy Analyses According to Various Factors. Sensors 2015, 15, 16866–16894. [Google Scholar] [CrossRef]

- Yang, J.; Sun, W.; Liu, N.; Chen, Y.; Wang, Y.; Han, S. A Novel Multimodal Biometrics Recognition Model Based on Stacked ELM and CCA Methods. Symmetry 2018, 10, 96. [Google Scholar] [CrossRef]

- Hotelling, H. Relations between Two Sets of Variates. Biometrika 1936, 28, 321. [Google Scholar] [CrossRef]

- Kim, W.; Song, J.M.; Park, K.R. Multimodal Biometric Recognition Based on Convolutional Neural Network by the Fusion of Finger-Vein and Finger Shape Using near-Infrared (NIR) Camera Sensor. Sensors 2018, 18, 2296. [Google Scholar] [CrossRef] [PubMed]

- Hu, H.; Kang, W.; Lu, Y.; Fang, Y.; Liu, H.; Zhao, J.; Deng, F. FV-Net: Learning a Finger-Vein Feature Representation Based on a CNN. In Proceedings of the International Conference on Pattern Recognition, Beijing, China, 20–24 August 2018; pp. 3489–3494. [Google Scholar] [CrossRef]

- Parkhi, O.M.; Vedaldi, A.; Zisserman, A. Deep Face Recognition. In Proceedings of the British Machine Vision Conference 2015, Swansea, UK, 7–10 September 2015; British Machine Vision Association: Swansea, UK, 2015; pp. 41.1–41.12. [Google Scholar]

- Fairuz, S.; Habaebi, M.H.; Elsheikh, E.M.A.; Chebil, A.J. Convolutional Neural Network-Based Finger Vein Recognition Using Near Infrared Images. In Proceedings of the 2018 7th International Conference on Computer and Communication Engineering, ICCCE 2018, Kuala Lumpur, Malaysia, 19–20 September 2018; pp. 453–458. [Google Scholar] [CrossRef]

- Das, R.; Piciucco, E.; Maiorana, E.; Campisi, P. Convolutional Neural Network for Finger-Vein-Based Biometric Identification. IEEE Trans. Inf. Forensics Secur. 2018, 14, 360–373. [Google Scholar] [CrossRef]

- Xie, C.; Kumar, A. Finger Vein Identification Using Convolutional Neural Network and Supervised Discrete Hashing. Pattern Recognit. Lett. 2019, 119, 148–156. [Google Scholar] [CrossRef]

- Shen, F.; Shen, C.; Liu, W.; Shen, H.T. Supervised Discrete Hashing. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 37–45. [Google Scholar]

- Lu, Y.; Xie, S.; Wu, S. Exploring Competitive Features Using Deep Convolutional Neural Network for Finger Vein Recognition. IEEE Access 2019, 7, 35113–35123. [Google Scholar] [CrossRef]

- Song, J.M.; Kim, W.; Park, K.R. Finger-Vein Recognition Based on Deep DenseNet Using Composite Image. IEEE Access 2019, 7, 66845–66863. [Google Scholar] [CrossRef]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Li, J.; Fang, P. FVGNN: A Novel GNN to Finger Vein Recognition from Limited Training Data. In Proceedings of the 2019 IEEE 8th Joint International Information Technology and Artificial Intelligence Conference (ITAIC 2019), Chongqing, China, 24–26 May 2019; pp. 144–148. [Google Scholar]

- Kuzu, R.S.; Piciucco, E.; Maiorana, E.; Campisi, P. On-the-Fly Finger-Vein-Based Biometric Recognition Using Deep Neural Networks. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2641–2654. [Google Scholar] [CrossRef]

- Noh, K.J.; Choi, J.; Hong, J.S.; Park, K.R. Finger-Vein Recognition Based on Densely Connected Convolutional Network Using Score-Level Fusion with Shape and Texture Images. IEEE Access 2020, 8, 96748–96766. [Google Scholar] [CrossRef]

- Cherrat, E.; Alaoui, R.; Bouzahir, H. Convolutional Neural Networks Approach for Multimodal Biometric Identification System Using the Fusion of Fingerprint, Finger-Vein and Face Images. PeerJ Comput. Sci. 2020, 6, e248. [Google Scholar] [CrossRef] [PubMed]

- Zhao, D.; Ma, H.; Yang, Z.; Li, J.; Tian, W. Finger Vein Recognition Based on Lightweight CNN Combining Center Loss and Dynamic Regularization. Infrared Phys. Technol. 2020, 105, 103221. [Google Scholar] [CrossRef]

- Hao, Z.; Fang, P.; Yang, H. Finger Vein Recognition Based on Multi-Task Learning. In Proceedings of the 2020 5th International Conference on Mathematics and Artificial Intelligence, Chengdu, China, 10–13 April 2020; pp. 133–140. [Google Scholar]

- Kuzu, R.S.; Maiorana, E.; Campisi, P. Vein-Based Biometric Verification Using Transfer Learning. In Proceedings of the 2020 43rd International Conference on Telecommunications and Signal Processing (TSP), Milan, Italy, 7–9 July 2020; pp. 403–409. [Google Scholar]

- Yin, Y.; Liu, L.; Sun, X. SDUMLA-HMT: A Multimodal Biometric Database. In Biometric Recognition; Sun, Z., Lai, J., Chen, X., Tan, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 7098, pp. 260–268. ISBN 978-3-642-25448-2. [Google Scholar]

- Ton, B.T.; Veldhuis, R.N.J. A High Quality Finger Vascular Pattern Dataset Collected Using a Custom Designed Capturing Device. In Proceedings of the 2013 International Conference on Biometrics (ICB), Madrid, Spain, 4–7 June 2013; pp. 1–5. [Google Scholar]

- Lu, Y.; Xie, S.J.; Yoon, S.; Wang, Z.; Park, D.S. An Available Database for the Research of Finger Vein Recognition. In Proceedings of the 2013 6th International Congress on Image and Signal Processing (CISP), Hangzhou, China, 16–18 December 2013; pp. 410–415. [Google Scholar]

- Tsinghua University Finger Vein and Finger Dorsal Texture Database (THU-FVFDT). Available online: https://www.sigs.tsinghua.edu.cn/labs/vipl/thu-fvfdt.html (accessed on 17 April 2021).

- Salzburg University. PLUSVein-FV3 LED-Laser Dorsal-Palmar Finger Vein Database; Salzburg University: Salzburg, Austria, 2018. [Google Scholar]

- Vanoni, M.; Tome, P.; El Shafey, L.; Marcel, S. Cross-Database Evaluation with an Open Finger Vein Sensor. In Proceedings of the IEEE Workshop on Biometric Measurements and Systems for Security and Medical Applications (BioMS), Rome, Italy, 17 October 2014. [Google Scholar]

- Mohd Asaari, M.S.; Suandi, S.A.; Rosdi, B.A. Fusion of Band Limited Phase Only Correlation and Width Centroid Contour Distance for Finger Based Biometrics. Expert Syst. Appl. 2014, 41, 3367–3382. [Google Scholar] [CrossRef]

- The Hong Kong Polytechnic University. Synthetic Finger-Vein Images Database Generator. Available online: https://www4.comp.polyu.edu.hk/~csajaykr/fvgen.htm (accessed on 17 April 2021).

- Kauba, C.; Uhl, A. An Available Open-Source Vein Recognition Framework. In Handbook of Vascular Biometrics; Uhl, A., Busch, C., Marcel, S., Veldhuis, R., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 113–142. ISBN 978-3-030-27730-7. [Google Scholar]

- Tome, P.; Vanoni, M.; Marcel, S. On the Vulnerability of Finger Vein Recognition to Spoofing. In Proceedings of the 2014 International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 10–12 September 2014; pp. 1–10. [Google Scholar]

- Yang, L.; Yang, G.; Xi, X.; Meng, X.; Zhang, C.; Yin, Y. Tri-Branch Vein Structure Assisted Finger Vein Recognition. IEEE Access 2017, 5, 21020–21028. [Google Scholar] [CrossRef]

| Ref. | Key Features | Advantages | Disadvantages |
|---|---|---|---|
| [10] | Application of line tracking | Robust against dark images, fast with a low EER (0.14%) | Mismatch increases when veins become unclear |
| [11] | Application of local maximum curvatures | Not affected by fluctuations in width and brightness, low EER (0.0009%) | Evaluated only with one dataset of 638 images |
| [12] | Combination of gradient normalization, principal curvature, and binarization | Not affected by vein thickness or brightness, EER of 0.36% | High EER |
| [13] | Extraction of minutiae with bifurcation and ending points | Used as a geometric representation of a vein, low EER (0.76%) | Tested on a small dataset |
| [14] | Extraction of local moments, topological structure, and statistics | A Dempster–Shafer fusion scheme is applied | Low accuracy (98.50%) |
| [15] | Application of Gabor filter banks | Takes into account local and global features, performs well in person identification | Low accuracy (98.86%) |
| [16] | Application of maximum curvature | Overcomes low contrast and intensity inhomogeneity | High EER (8.93%) |
| [17] | Extraction of phase and direction texture features | Does not require preprocessing, has a low storage requirement | Robustness in the presence of noise is not studied |
| [18] | Application of the mean curvature method | Extracts patterns from images with unclear veins, fast with a low EER (0.25%) | Small dataset |
| [19] | Application of multi-scale oriented Gabor filters | Takes into account local and global features | Low RR (97.60%) |
| [20] | Application of guided Gabor filters | Does not require segmentation, good against low contrast, illumination, and noise | High EER (2.24%) |
| [21] | Cryptographic key generation from a contour-tracing algorithm | Small probability of error when the image is altered and robust against minor changes in direction or position | No recognition results presented |
| [22] | Maximum curvature method, Gabor filter, minutiae extraction | Elimination of false minutiae points | Performance analysis is not reported |
| [23] | Combination of SURF with lacunarity | Shows real-time performance | Experimental information is missing |
| [25] | Application of SVDMM | Performs better than similar works | High EER (2.45%) |
| [26] | Combination of minutiae extraction and false pair removal | Eliminates false minutiae matching | Low accuracy (91.67%) |
| [27] | Application of repeated line tracking | Simplicity | Part of a multi-modal system, no results presented |
| [28] | Combination of multi-scale matched filtering and line tracking | Extracts local and global features | High EER (4.47%) |
| [29] | Combination of minutiae extraction and curve analysis | Low EER (0.50%) | Low accuracy (92.00%) |
| [30] | Application of modified repeated line tracking | More robust and efficient than the original line tracking method, fast | Depends heavily on the segmentation result |
| [31] | Application of gradient boost | Fast, is not affected by roughness or dryness of skin | No results presented |
| [32] | Curvature through image intensity | Robust against irregular shading and deformation of vein patterns, fast with a low EER | Requires capturing of finger outlines |
| [33] | Overlaying of segmented vein images for feature generation | Generation of optimal quality templates | Low accuracy (97.14%), small dataset |
| [34] | Application of neighborhood elimination to minutiae point extraction | Takes into account intersection points, reduced feature vector size | No RR or EER results provided |
| [35] | Application of Gabor filters | Captures both local orientation and frequency information | No results presented |
| [36] | Application of different feature extraction methods (maximum and principal curvature, Gabor filters, and SIFT) | Low EER (0.08%) | Fusion of different perspectives needs improvement |
| [37] | Application of orientation map-guided curvature and anatomy structure analysis | Easy vein pattern extraction, fast, overcomes noise and breakpoints, low EER (0.78%) and high RR (99.00%) | The width of the vein pattern is not used |
| [38] | Application of an elliptical direction map for vein code generation | High accuracy (99.04%) | Results depend on parameters |
| [39] | Combination of K-means segmentation with Canny edge detection | Low EER (0.015%) | Small dataset |
| [40] | Application of SLA | Ensemble learning is applied | Low accuracy (87.00%) |
| [41] | Application of C2 code | Takes into account orientation and magnitude information, low EER (0.40%) | Dataset information is missing |
| [42] | Application of PWBDC | Low storage requirement and effective with a low EER | Low accuracy (98.9%), high EER (2.20%) |
| [43] | Application of principal curvature using a Hessian matrix | Suitable for FPGA | No results presented |
| [44] | Application of spectral clustering | Takes into account useful vein patterns, a low EER (0.037%) | Selection of an appropriate seed parameter value |
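Many of the entries above are compared through the equal error rate (EER), the operating point at which the false acceptance rate equals the false rejection rate. The following is a minimal sketch of how an EER can be approximated from two sets of matching scores. It is not taken from any of the cited works: the function name `equal_error_rate` is hypothetical, and the sketch assumes similarity scores in which a higher value means a better match.

```python
import numpy as np

def equal_error_rate(genuine, impostor):
    """Approximate the EER from similarity scores (higher = more similar).

    genuine:  scores of comparisons between samples of the same finger
    impostor: scores of comparisons between samples of different fingers
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    # Every observed score is a candidate decision threshold.
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    best_gap, eer = np.inf, 1.0
    for t in thresholds:
        far = np.mean(impostor >= t)  # impostor comparisons wrongly accepted
        frr = np.mean(genuine < t)    # genuine comparisons wrongly rejected
        gap = abs(far - frr)
        if gap < best_gap:
            # Keep the threshold where the two error rates are closest.
            best_gap, eer = gap, (far + frr) / 2.0
    return eer
```

With only a handful of scores the two error curves rarely cross exactly, so the sketch reports the midpoint of the two rates at the closest threshold; published EER values may use interpolation instead.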

| Ref. | Key Features | Advantages | Disadvantages |
|---|---|---|---|
| [45] | Application of pattern map images with PCA | Fast and a high identification rate (100%) | High number of feature vectors (40 features), results depend on parameters |
| [46] | Application of manifold learning | Robust against pose variation, a low EER (0.80%) | Low RR (97.80%) |
| [47] | Combination of B2DPCA with eigenvalue normalization | Improves upon the original 2DPCA method and other methods | Low RR (97.73%) |
| [48] | Combination of Radon transformation and PCA | Low FAR (0.008) and FRR (0) | An in-house dataset is used instead of a benchmark one |
| [49] | Application of linear discriminant analysis with PCA | Very fast and retains the main feature vector | Low accuracy (98.00%) |
| [50] | Application of (2D)²PCA | High RR (99.17%) | Sample increment with SMOTE |
| [51] | Comparison of multiple PCA algorithms | Can reach an accuracy of up to 100% | Requires a large training set |
| [52] | Application of KPCA | High accuracy (up to 100%) | Accuracy depends on the kernel, feature output, and training size |
| [53] | Combination of KMMC and 2DPCA | Improves upon the recognition time of just KMMC | Very slow recognition time |
| [54] | Combination of MFRAT and GridPCA | Fast and robust against vein structures, variations in illumination and rotation | Low RR (95.67%) |
| [55] | Application of pseudo-elliptical sampling model with PCA | Retains the spatial distribution of vein patterns, reduces redundant information and differences | High EER (1.59%) and low RR (97.61%) |
| [56] | Application of Discriminative Binary Codes | Fast extraction and matching with a low EER (0.0144%) | Requires the construction of a relation graph |
| [57] | Combination of Gabor filters and LDA | Low EER (0.12%) | Part of a multi-modal system |
| [58] | Application of multi-scale uniform LMP with block (2D)²PCA | Preserves local features with a high RR (99.32%) | Does not retain global features and the EER varies per dataset (high to low) |
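The PCA-based entries above share a common core: vein images are flattened into vectors, centred, and projected onto a small number of principal directions. A minimal NumPy sketch of that baseline step is given below. It illustrates plain PCA only, not 2DPCA, KPCA, or any other variant listed, and the names `fit_pca` and `project` are hypothetical.

```python
import numpy as np

def fit_pca(images, n_components):
    """Learn a PCA basis from a stack of equally sized grayscale images."""
    X = np.asarray(images, dtype=float).reshape(len(images), -1)
    mean = X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]

def project(images, mean, components):
    """Map images to low-dimensional feature vectors in the PCA basis."""
    X = np.asarray(images, dtype=float).reshape(len(images), -1)
    return (X - mean) @ components.T
```

The resulting feature vectors can then be compared with any distance measure, for example the Euclidean distance; the choice of `n_components` is a tuning parameter and is left open here.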

| Ref. | Key Features | Advantages | Disadvantages |
|---|---|---|---|
| [59] | Usage of NN for local feature extraction | Very fast and robust against obscure images | High EER (0.13%) |
| [60] | Alignment using extracted minutiae points | Fast with a low EER (0.081%) | An in-house dataset is used instead of a benchmark one |
| [61] | Extraction of holistic codes through weighted LBP | Reduced processing time and a low EER (0.049%) | Requires setting of weights |
| [62] | Combination of LBP and Wavelet transformation | Low EER (0.011%), fast, and robust against irregular shading and saturation | Tested on a small dataset |
| [63] | Combination of a modified Gaussian high-pass filter with LBP and LDP | Improvement compared with using vein pattern features, a faster processing time, an EER of 0.89% | Not reported |
| [64] | LBP image fusion based on multiple instances | Simple with low computational complexity and improves the RR on low-quality images | High EER (1.42%) |
| [65] | Application of PBBM | Removes noisy bits, personalized features, and highly robust and reliable with a low EER (0.47%) | A small in-house dataset is used instead of a benchmark one |
| [66] | Application of GLLBP | Performs better than other conventional methods on the collected dataset, an EER of 0.58% | Not reported |
| [67] | Application of MOW-SLGS | Takes into account location and direction information | Low RR (96.00%) |
| [68] | Application of enhanced BGC (LHBGC) | Fast, a low EER (0.0038%) when using multiple fingers, and robust against noise | Low EER only in cases with multiple fingers |
| [69] | Application of LEBP | Low FPR (0.0129%) and TPR (0.90%) | Low accuracy (97.45%) |
| [70] | Application of DSLGS | More stable features with better performance than the original | High EER (3.28%) |
| [71] | Application of CSBC | High accuracy (99.84%) and a low EER (0.16%) | Multi-modal application |
| [72] | Application of PDVs and AMBP | Solves out-of-sample problems, robust against local changes, and fast with a low EER (0.29%) and a high RR (100%) | Accuracy depends on parameters |
| [73] | Application of multi-directional PDVs | Outperforms state-of-the-art algorithms with a low EER (0.30%) | Complexity analysis is not reported |
| [74] | Fusion of vein images with an ECG signal through DCA | Better than two individual unimodal systems, a low EER (0.1443%) | Multi-modal application |
| [75] | Application of ADLBP | Better describes texture than LBP | Low RR (96.93%), multi-modal application |
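Most of the methods above build on the basic local binary pattern (LBP), which encodes each pixel by thresholding its eight neighbours against the centre value. As a rough illustration, the sketch below computes the basic 3 × 3 LBP code image and its normalized histogram with NumPy. It does not implement any of the listed variants (weighted LBP, PBBM, LEBP, and so on); the function names and the bit ordering of the neighbours are assumptions made for this example.

```python
import numpy as np

def lbp_3x3(image):
    """Basic 8-neighbour LBP codes for the interior pixels of a grayscale image."""
    img = np.asarray(image, dtype=np.int32)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # Neighbour offsets, clockwise from the top-left; the bit order is an
    # arbitrary but fixed choice for this sketch.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set the bit when the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes.astype(np.uint8)

def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes, usable as a feature vector."""
    codes = lbp_3x3(image)
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()
```

In practice the histogram is often computed per image block and the block histograms are concatenated, so that some spatial layout of the vein texture is retained.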

| Ref. | Key Features | Advantages | Disadvantages |
|---|---|---|---|
| [76] | Multi-scale self-adaptive enhancement transformation | Very fast, a low EER (0.13%) | Timing performance is not reported |
| [77] | Usage of the Radon transformation for driver identification | High accuracy rate (99.2%) for personal identification | Tested upon a small dataset |
| [80] | Embedded system using the Haar classifier | Fast recognition time and low computational complexity | Accuracy analysis is not reported |
| [81] | Second generation of wavelet transformation | Fast, a low EER (0.07%) | Dataset and experimental information are missing |
| [82] | Combination of the Radon transformation and common spatial patterns | Fast, a high RR (100%) | Small dataset |
| [83] | Usage of Discrete Wavelet Packet Transform decomposition at every sub-band | Improves upon Discrete Wavelet Transform and the original DWPT | Low RR (92.33%) |
| [84] | Variable-scale USSFT coefficients | High reliability against blurred images | Low RR (91.89%) |
| [85] | Usage of the Haar Wavelet Transformation | High accuracy (99.80%) | Accuracy highly depends on parameters |
| [86] | Feature enhancement and extraction using the Radon transformation | Improvement in accuracy in contacted and contactless databases | High EER (1.03%) |
| [87] | Usage of adaptive vector field estimation using spatial curve filters through effective curve length field estimation | Low EER (0.20%), improves recognition performance compared with other methods | Performance analysis is missing |
| [88] | Usage of Discrete Wavelet Transform | A hardware device is proposed | Small dataset |
| [89] | Fusion of the Hilbert–Huang, Radon, and Dual-Tree wavelet transformations | Low EER (0.014%) and improves upon other methods | Three vein images from different parts |
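Several of the entries above rely on wavelet decompositions. As a rough illustration of the idea, the sketch below performs a single-level 2D Haar decomposition with NumPy and summarizes each sub-band by its mean energy. It is a generic textbook construction, not the pipeline of any cited work, and the names `haar_dwt2` and `subband_energies` are hypothetical.

```python
import numpy as np

def haar_dwt2(image):
    """Single-level orthonormal 2D Haar decomposition of a grayscale image."""
    img = np.asarray(image, dtype=float)
    # Crop to even dimensions so the image splits into 2 x 2 blocks.
    img = img[:img.shape[0] // 2 * 2, :img.shape[1] // 2 * 2]
    a = img[0::2, 0::2]  # top-left pixel of each block
    b = img[0::2, 1::2]  # top-right
    c = img[1::2, 0::2]  # bottom-left
    d = img[1::2, 1::2]  # bottom-right
    approx = (a + b + c + d) / 2.0    # local average (low-pass)
    row_diff = (a + b - c - d) / 2.0  # top minus bottom: horizontal edges
    col_diff = (a - b + c - d) / 2.0  # left minus right: vertical edges
    diag = (a - b - c + d) / 2.0      # diagonal detail
    return approx, row_diff, col_diff, diag

def subband_energies(image):
    """Mean squared coefficient of each sub-band, as a tiny feature vector."""
    return np.array([np.mean(band ** 2) for band in haar_dwt2(image)])
```

Applying `haar_dwt2` again to the approximation band gives a multi-level decomposition; how many levels and which sub-bands to keep is a design choice that the table does not specify.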

| Ref. | Key Features | Advantages | Disadvantages |
|---|---|---|---|
| [90] | Combination of morphological peak and valley detection | Precise details, better continuity compared with others, fast, and robust against noise | Low RR |
| [91] | Application of tri-value template fuzzy matching | Robust against fuzzy edges and tips, does not need correspondence among points, and has a low EER (0.54%) | A set of parameters needs optimization |
| [92] | Application of BLPOC | Simple preprocessing, fast with a low EER (0.98%) | A set of parameters needs optimization |
| [93] | Extraction of profile curve valley-shaped features | Fast, easy to implement, and satisfactory results | No classification results provided |
| [94] | Application of OPM | Enhances the similarity between samples in the same class | High EER (3.10%) |
| [95] | Application of PHGTOG | Reflects the global spatial layout and local gray, texture, and shape details and fast with a low EER (0.22%) | Personalized weights for each subject, a low RR (98.90%) |
| [96] | Feature code generation from a modified angle chain | Fast with a low EER (0.0582%) | Small dataset |
| [97] | Combination of a Frangi filter with the FAST and FREAK descriptors | Reliable structure and point-of-interest extraction | No classification results provided |
| [98] | Utilization of superpixel features | Extraction of high-level features | Requires setting of weights for the matching process, a high EER (1.47%) |
| [99] | Application of the Mandelbrot fractal model | Fast, a low EER (0.07%) | Dataset information is missing |
| [100] | Application of Canny edge detection | Fast | Slow recognition time and a low RR |
| [101] | Application of Potential Energy Eigenvectors for recognition | Fast and higher accuracy compared with minutiae matching, a low EER (0.97%) | Not reported |
| [102] | Feature extraction using an SVM classifier | Consistent | Low accuracy rate (98.59%) |
| [103] | Feature contrast enhancement and affine transformation registration | Improved preprocessing, can reach an RR of 100% and an EER of 0% | Results vary highly |
| [104] | Combination of the SIFT and SURF keypoint descriptors | Robust to finger displacement and rotation | High EER (6.10%) and a low RR (93.9%) |
| [105] | Takes into account deformation via pixel-based 2D displacements | Low EER (0.40%) | Low timing performance |

| Ref. | Key Features | Advantages | Disadvantages |
|---|---|---|---|
| [107] | Application of a reduced complexity CNN with convolutional subsampling | Fast with very high accuracy (99.27%), does not require segmentation or noise filtering | More testing is required |
| [108] | Application of the smaller LeNet-5 | Not reported | Small dataset, low accuracy (96.00%) |
| [109] | Usage of a difference image as input to VGG-16 | Robust to environmental changes, a low EER (0.396%) | Performance heavily depends on image quality |
| [112] | Application of stacked ELMs and CCA | Does not require iterative fine tuning, efficient, and flexible | Slow with low accuracy (95.58%) |
| [114] | Application of an ensemble model of ResNet50 and ResNet101 | Better performance than other CNN-based models, a low EER (0.80%) | Performance depends on correct ROI extraction |
| [115] | Application of FV-Net | Extracts spatial information, a low EER (0.04%) | Performance varies per dataset |
| [117] | Application of a customized CNN | Very high accuracy (99.17%) | Performance depends on training/testing set size, more testing is required |
| [118] | Application of a customized CNN | Evaluated in four popular datasets | Low accuracy (95.00%), illumination and lighting affect performance |
| [119] | Application of a Siamese network with supervised discrete hashing | Smaller template size | A larger dataset is needed, a high EER (8.00%) |
| [121] | Application of CNN-CO | Exploits discriminative features, does not require a large-scale dataset, a low EER (0.93%) | Performance varies per dataset |
| [122] | Stacking of ROI images into a three-channel image as input to a modified DenseNet-161 | Robust against noisy images, a low EER (0.44%) | Depends heavily on correct alignment and clear capturing |
| [124] | Application of FVGNN | Does not require parameter tuning or preprocessing, very high accuracy (99.98%) | More testing is required |
| [125] | Combination of a V-CNN and LSTM | Ad hoc image acquisition, high accuracy (99.13%) | High complexity |
| [126] | Stacking of both texture and vein images, application of CNNs to extract matching scores | Robust to noise, a low EER (0.76%) | Model is heavy, long processing time |
| [127] | Combination of a CNN, Softmax, and RF | High accuracy (99.73%) | Small dataset |
| [128] | Application of a lightweight CNN with a center loss function and dynamic regularization | Robust against a bad-quality sensor, faster convergence, and a low EER (0.50%) | The customized CNN needs improvement |
| [129] | Application of a multi-task CNCN for ROI and feature extraction | Efficient, interpretable results | Performance varies per dataset |
| [130] | Transfer learning on a modified DenseNet-161 | Low EER (0.006%), does not require building a network from scratch | Performance varies per dataset |

| Database Name | Number of Classes | Number of Fingers | Samples per Finger | Total Size | Image Size | Link (accessed on 17 April 2021) |
|---|---|---|---|---|---|---|
| SDUMLA-HMT [131] | 106 | 6 | 6 | 3816 | 320 × 240 | http://www.wavelab.at/sources/Prommegger19c/ |
| UTFV [132] | 60 | 6 | 4 | 1440 | 200 × 100 | https://pythonhosted.org/bob.db.utfvp/ |
| MMCBNU_6000 [133] | 100 | 6 | 10 | 6000 | 640 × 480 | http://wavelab.at/sources/Drozdowski20a/ |
| THU-FVFD [134] | 220 | 1 | 1 | 440 | 720 × 576 | https://www.sigs.tsinghua.edu.cn/labs/vipl/thu-fvfdt.html |
| PLUSVein-FV3 [135] | 60 | 6 | 5 | 1800 | 736 × 192 | http://wavelab.at/sources/PLUSVein-FV3/ |
| VERA [136] | 110 | 2 | 2 | 440 | 665 × 250 | https://www.idiap.ch/dataset/vera-fingervein |
| FV-USM [137] | 123 | 8 | 6 | 5904 | 640 × 480 | http://drfendi.com/fv_usm_database/ |

| Ref. | Implementation Link (accessed on 17 April 2021) | Programming Language |
|---|---|---|
| [139] | https://gitlab.cosy.sbg.ac.at/ckauba/openvein-toolkit | Python |
| [130] | https://github.com/ridvansalihkuzu/vein-biometrics | Python |
| [140] | https://pypi.org/project/xbob.fingervein/ | Python |
| [65] | https://github.com/sohamidha/PBBM | MATLAB |
| [11] | https://github.com/dohnto/Max-Curvature | C++ |
| [10] | https://github.com/dohnto/Repeated-Line-Tracking | C++ |
| [141] | https://github.com/sandeepkapri/Tri-Branch-Vein-Structure-Assisted-Finger-Vein-Recognition | MATLAB |

| Topology | Camera Type | NIR LED Wavelength (nm) | Additional Hardware |
|---|---|---|---|
| Top-down NIR LED, camera on the opposite side, with the finger in the middle | Common CCD | 700–1000 | NIR filter on camera lens (in some cases) |
| Top-down NIR LED, camera on the opposite side, with the finger in the middle | Common CCD or CMOS camera | 760–850 | Additional LEDs on opposite sides or an angled hot mirror for extra contrast |
| Top-down NIR LED array, array of cameras on the bottom | CMOS NIR | 860 | Diffusing glass on NIR LEDs, a 700 nm long pass NIR filter on the camera array |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sidiropoulos, G.K.; Kiratsa, P.; Chatzipetrou, P.; Papakostas, G.A. Feature Extraction for Finger-Vein-Based Identity Recognition. J. Imaging 2021, 7, 89. https://doi.org/10.3390/jimaging7050089