A Versatile Machine Vision Algorithm for Real-Time Counting Manually Assembled Pieces

Abstract

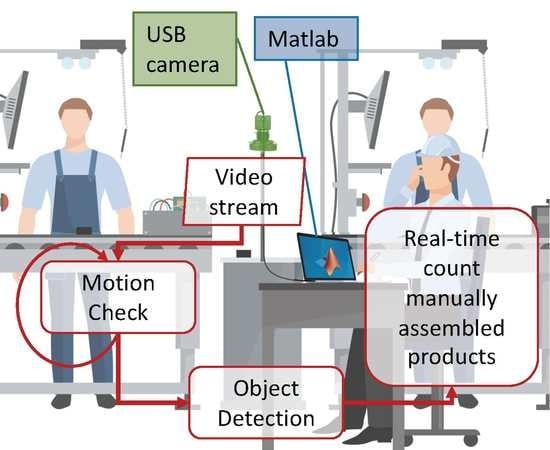

1. Introduction

2. Materials and Methods

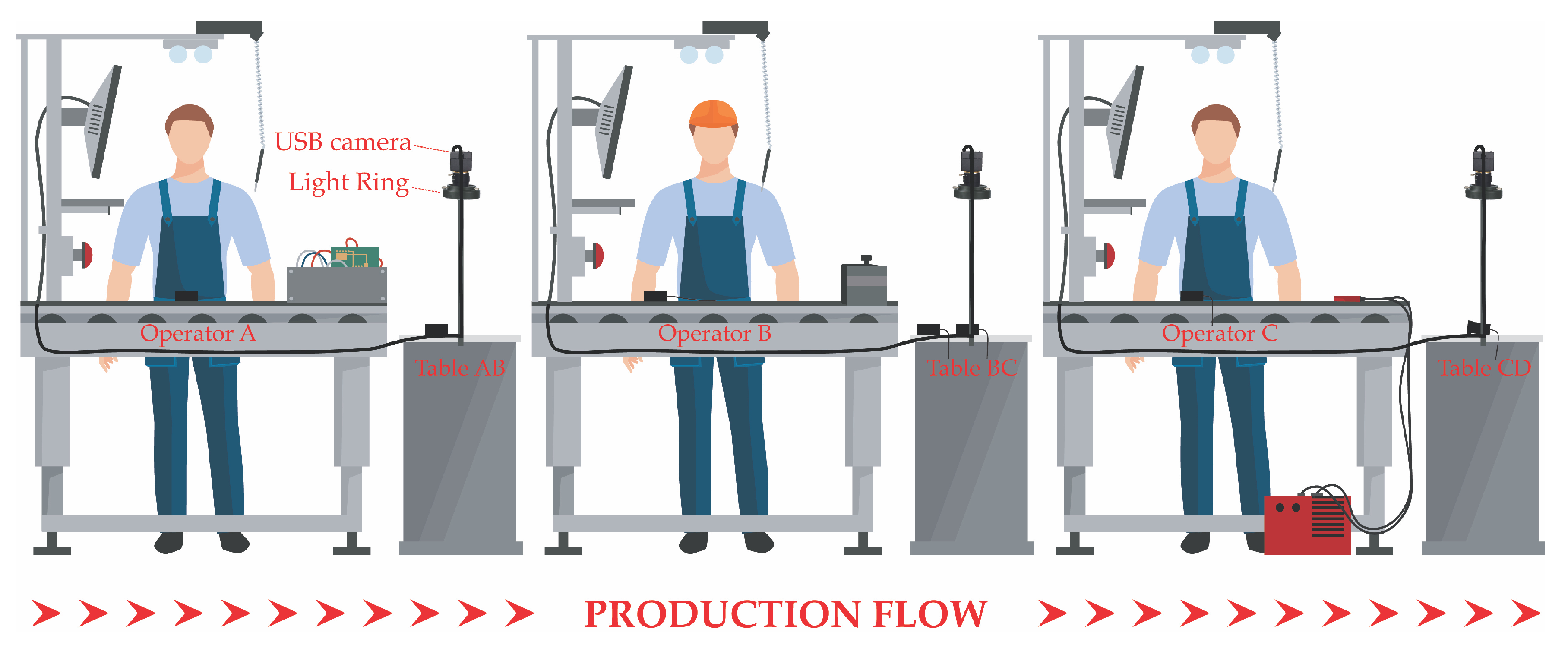

2.1. System Setup: Hardware Architecture

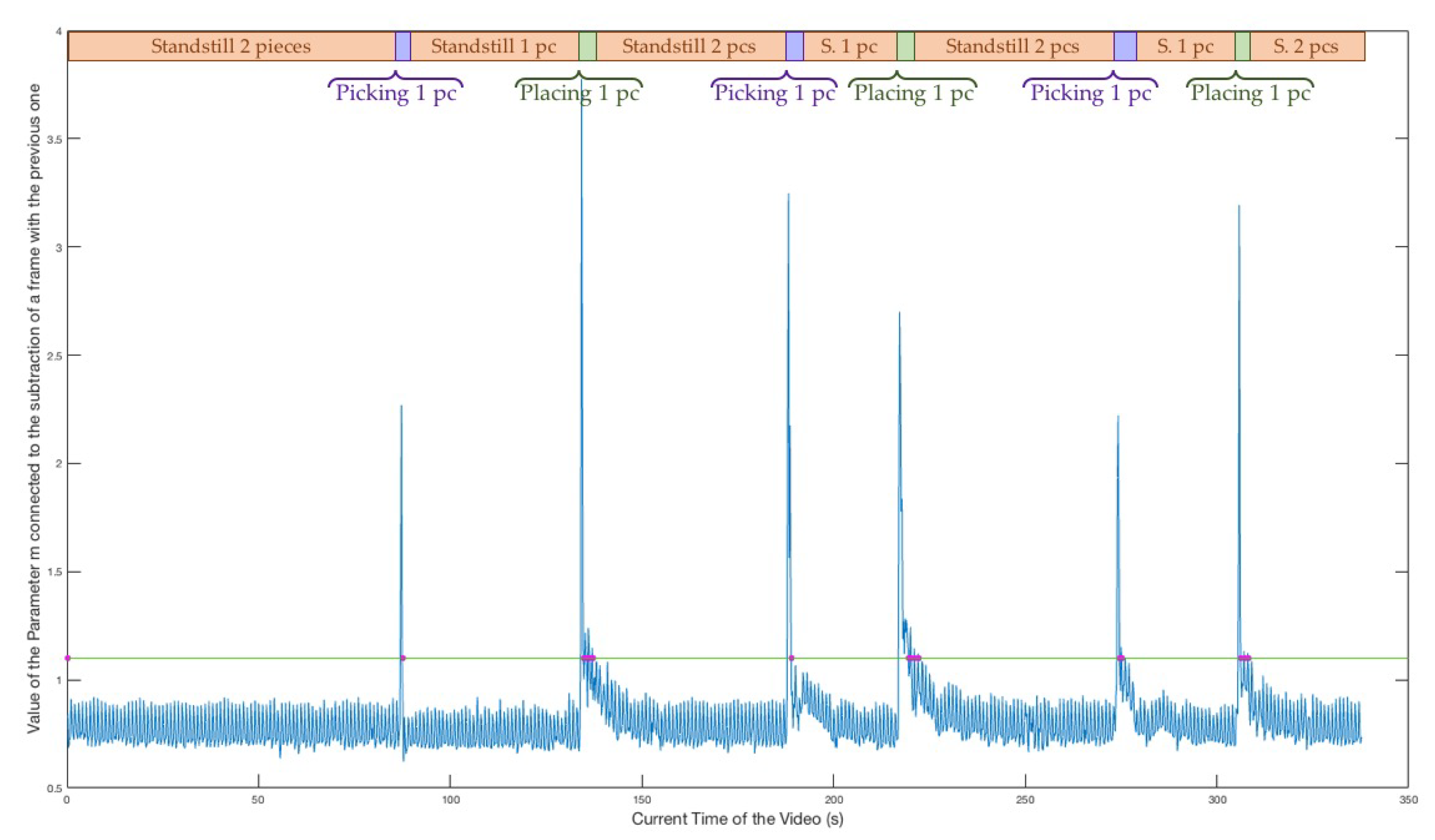

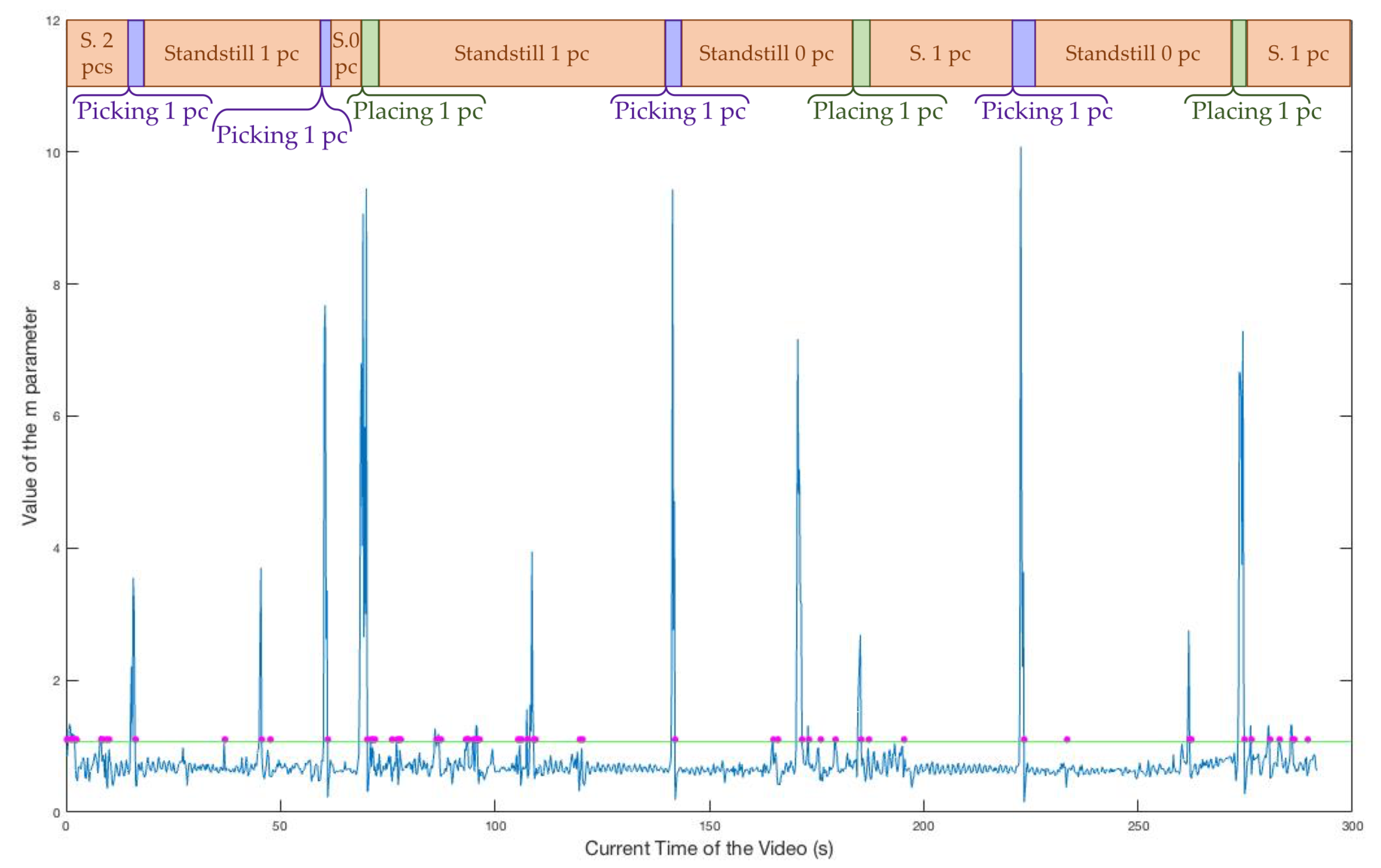

2.2. Context Description and Analysis

- the table is empty (Standstill interval);

- the hand of operator A holds a piece, places it on the table, and then leaves the camera’s ROI (Motion interval);

- the placed piece is alone on the table (Standstill interval); and

- the hand of operator B enters the ROI and picks up the piece, removing it from the field of view (Motion interval).
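The alternation of Standstill and Motion intervals described above can be recovered from a per-frame motion flag by grouping consecutive frames. The following is a minimal sketch under stated assumptions. The boolean input `moving` and the function `segment_intervals` are hypothetical. This document does not show how such a flag would be computed from the video.

```python
from itertools import groupby
from typing import List, Sequence, Tuple

# (label, first_frame, last_frame), frame indices inclusive
Interval = Tuple[str, int, int]


def segment_intervals(moving: Sequence[bool]) -> List[Interval]:
    """Split a per-frame motion flag into Motion / Standstill intervals.

    `moving` is a hypothetical input: True when the frame shows motion
    inside the ROI, False when the scene is still.
    """
    intervals: List[Interval] = []
    start = 0
    for is_moving, run in groupby(moving):
        length = sum(1 for _ in run)  # number of consecutive equal flags
        label = "Motion" if is_moving else "Standstill"
        intervals.append((label, start, start + length - 1))
        start += length
    return intervals


# One illustrative cycle: empty table, operator A places a piece,
# piece alone on the table, operator B removes it, empty table again.
flags = [False, False, True, True, True, False, False, True, True, False]
for interval in segment_intervals(flags):
    print(interval)
```

Segmentation alone cannot tell which Standstill interval should produce a count (the one after operator A places a piece, not the one after operator B removes it). That decision is left to the counting algorithms.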

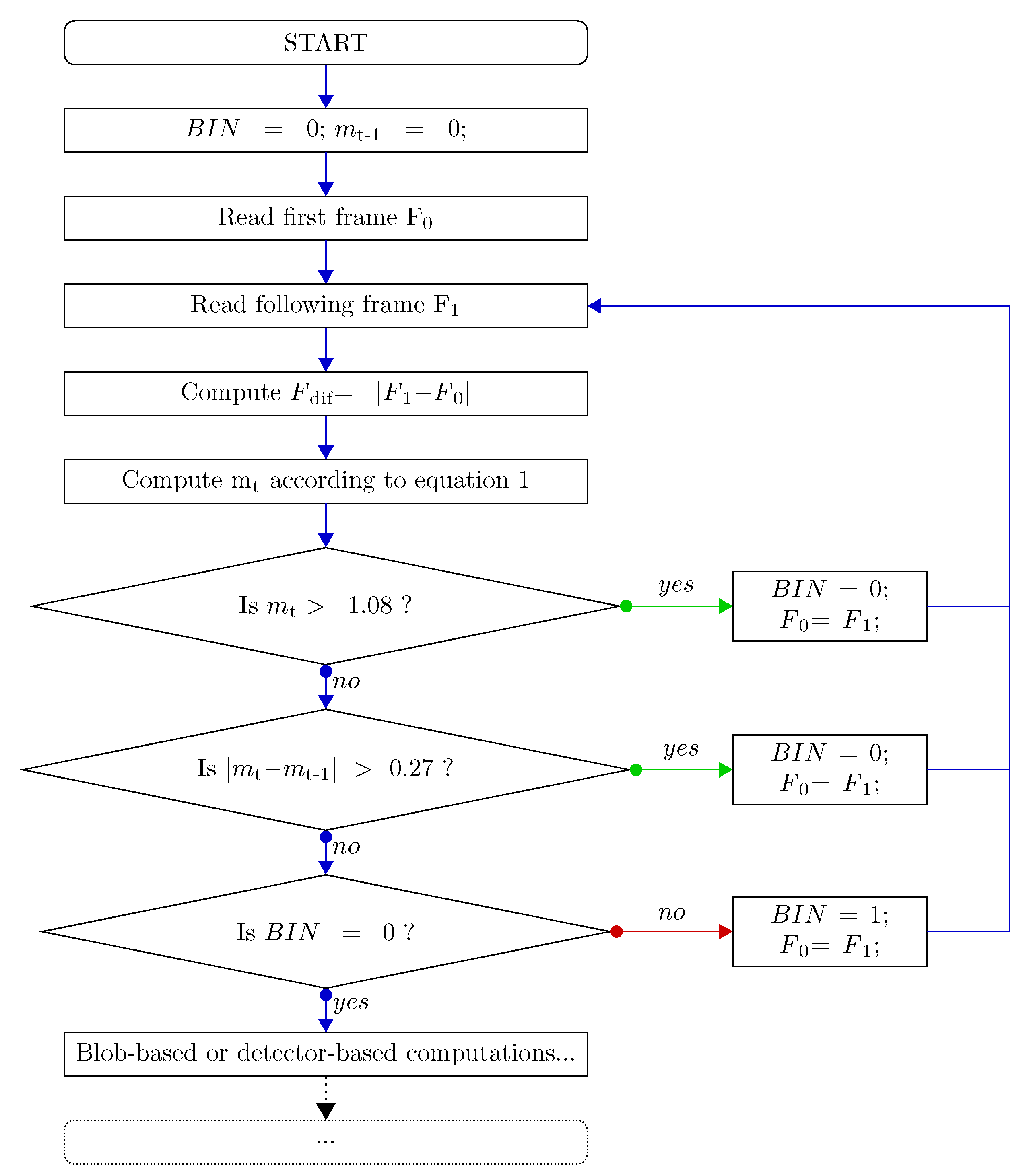

2.3. Improved Algorithms

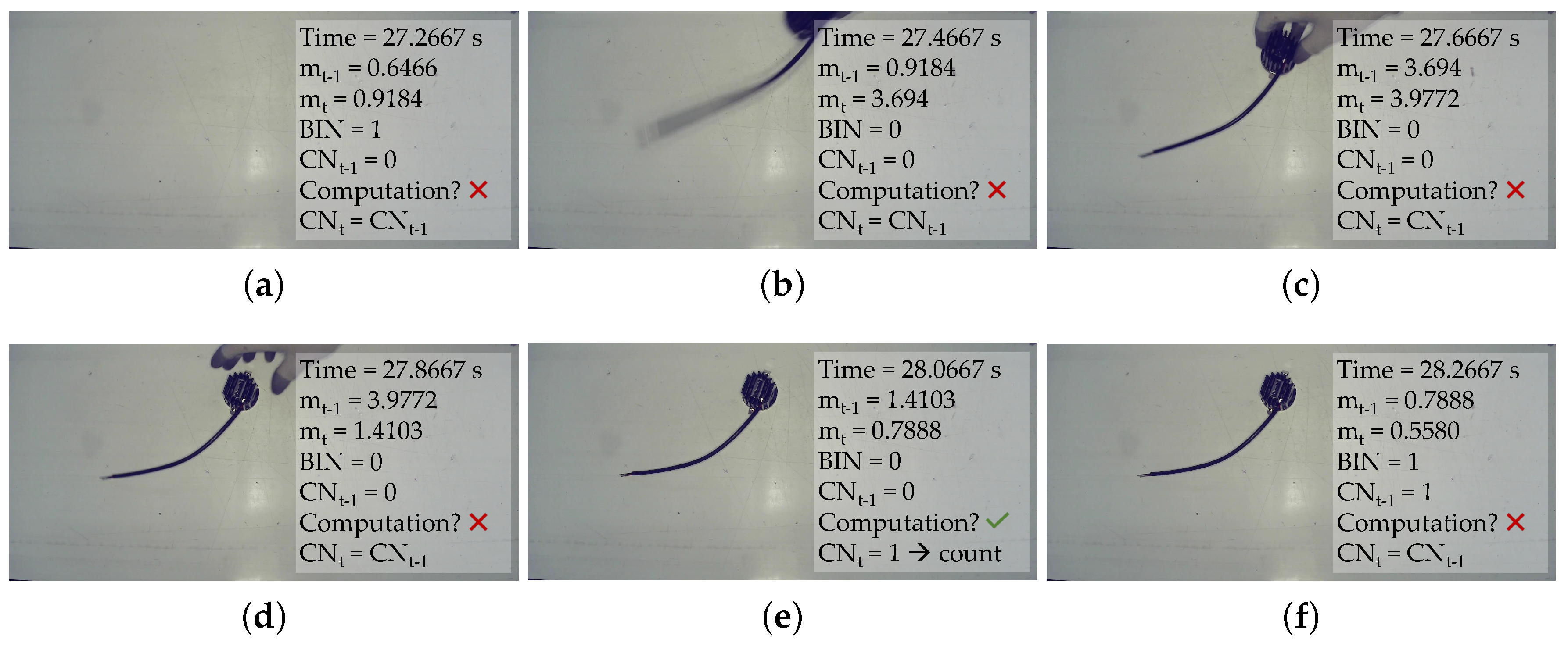

2.3.1. Motion Check

- In the first frame of a standstill interval, immediately after the end of a motion phase, where $BIN = 0$ and $m_t < 1.08$; and

- In the frame immediately following two consecutive frames within a standstill phase whose $m$ values ($m_t$ and $m_{t-1}$, respectively) differ by more than 0.27.
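The two triggering conditions above can be sketched as a scan over per-frame values. Only the thresholds 1.08 and 0.27 come from the text. The sketch rests on these assumptions:

- `bin_flags[t]` is taken to be 1 for a motion frame and 0 for a standstill frame.
- `m[t]` is treated as a generic scalar per-frame measure.
- The difference in the second condition is taken in absolute value.

The function name `counting_triggers` is hypothetical.

```python
from typing import List, Sequence

# Thresholds quoted in Section 2.3.1; all other names are hypothetical.
M_STILL_MAX = 1.08   # m_t must be below this in the first standstill frame
M_JUMP_MIN = 0.27    # |m_t - m_{t-1}| above this inside a standstill phase


def counting_triggers(bin_flags: Sequence[int], m: Sequence[float]) -> List[int]:
    """Return indices of frames on which the counting algorithm would run.

    Assumptions: bin_flags[t] is 1 for motion and 0 for standstill, and
    m[t] is a scalar per-frame measure; the jump is taken in absolute value.
    """
    if len(bin_flags) != len(m):
        raise ValueError("bin_flags and m must have the same length")
    triggers = set()
    for t in range(1, len(m)):
        # Case 1: first standstill frame right after a motion phase.
        if bin_flags[t - 1] == 1 and bin_flags[t] == 0 and m[t] < M_STILL_MAX:
            triggers.add(t)
        # Case 2: two consecutive standstill frames whose m values differ by
        # more than M_JUMP_MIN; the frame just after them is analysed.
        if (bin_flags[t - 1] == 0 and bin_flags[t] == 0
                and abs(m[t] - m[t - 1]) > M_JUMP_MIN and t + 1 < len(m)):
            triggers.add(t + 1)
    return sorted(triggers)


bin_flags = [1, 1, 0, 0, 0, 0]
m_values = [2.0, 1.5, 0.9, 0.95, 1.3, 1.3]
print(counting_triggers(bin_flags, m_values))  # → [2, 5]
```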

Motion Check Parameters and Thresholds

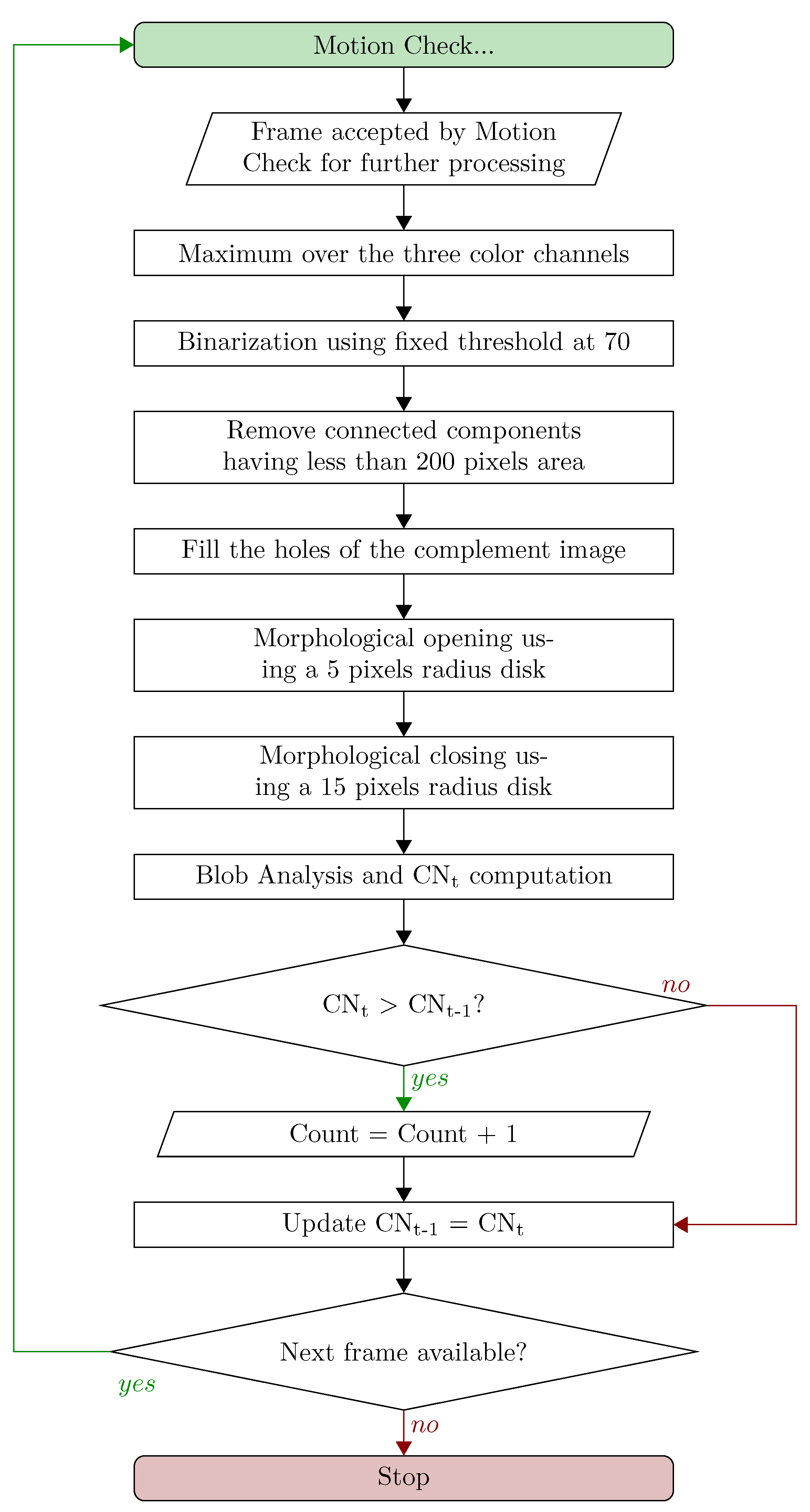

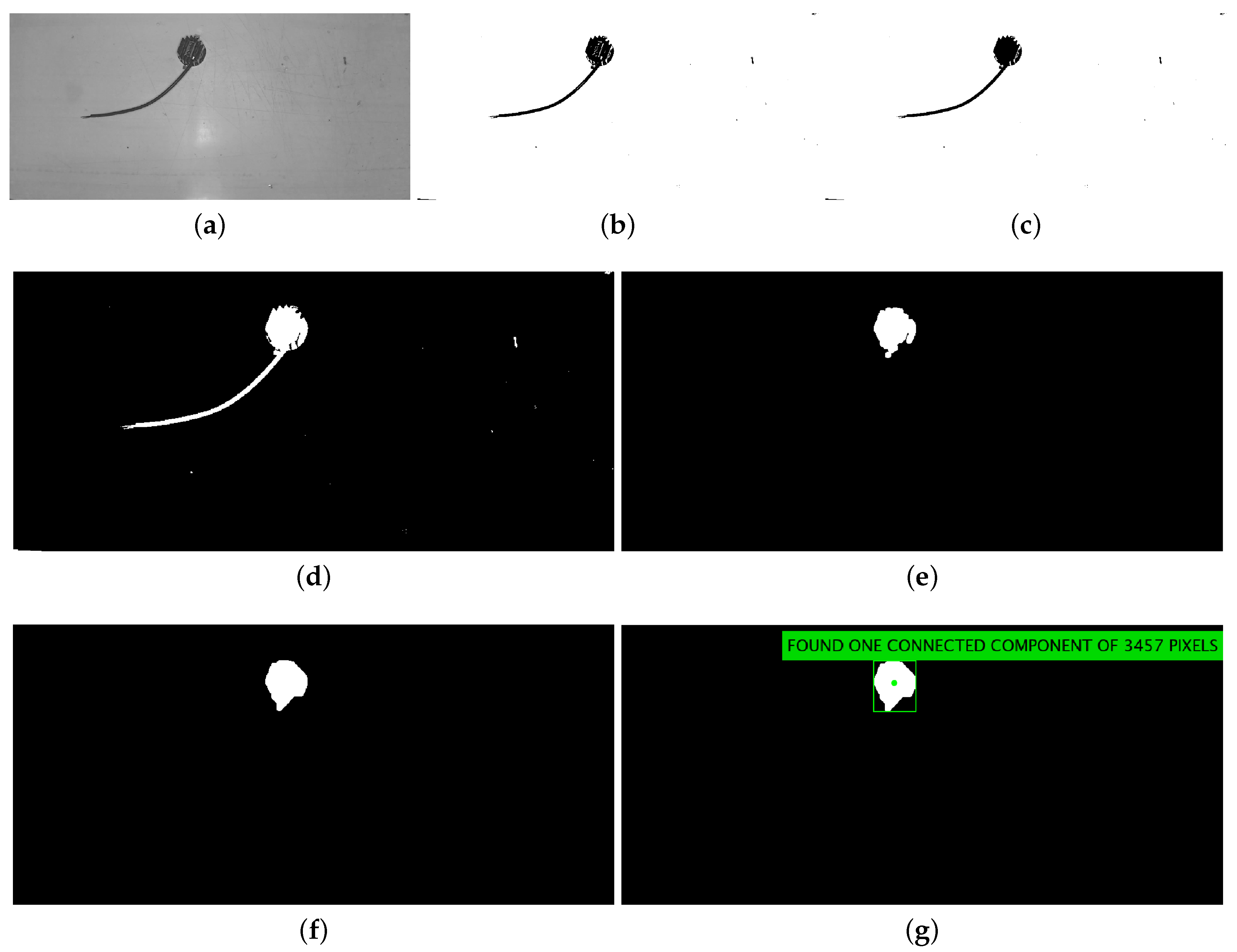

2.3.2. Blob-Based Counting Algorithm

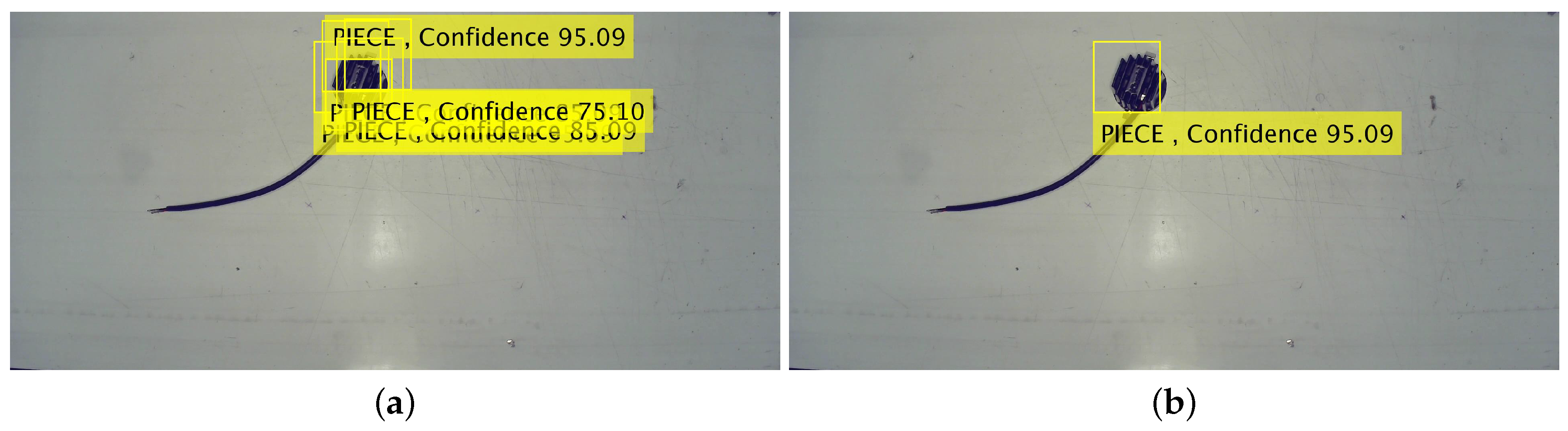

2.3.3. Aggregated Channel Features Detector-Based Counting Algorithm

- (i) Apply the detector to the frame and obtain the scores and the bounding-box coordinates;

- (ii) When more than one object has been detected, check whether any of the separate bounding boxes overlap (in which case they correspond to the same object);

- (iii) Compare the number of objects present in the current frame ($CN_t$) with the recorded number of objects already present ($CN_{t-1}$):

  - COUNT if $CN_t > CN_{t-1}$;

  - DO NOT COUNT if $CN_t \le CN_{t-1}$;

- (iv) Set $CN_{t-1}$ to $CN_t$ and return to the Motion Check procedure with the following frame.
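Steps (ii) to (iv) of the ACF-based procedure can be sketched as follows. The detector itself (step (i)) is not reproduced. The sketch rests on these assumptions:

- Detections arrive as `(x, y, width, height)` tuples; this format is hypothetical.
- Boxes that overlap, directly or through a chain of overlaps, are merged into one object using a union-find structure.
- Step (iii) counts only when the number of objects increases.

All function names are illustrative.

```python
from typing import List, Sequence, Tuple

# Hypothetical box format: (x, y, width, height) in pixels.
Box = Tuple[float, float, float, float]


def boxes_overlap(a: Box, b: Box) -> bool:
    """True if two axis-aligned boxes share a region of positive area."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def count_objects(boxes: Sequence[Box]) -> int:
    """Step (ii): overlapping boxes are merged (transitively) into one object."""
    parent: List[int] = list(range(len(boxes)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if boxes_overlap(boxes[i], boxes[j]):
                parent[find(i)] = find(j)
    return len({find(i) for i in range(len(boxes))})


def acf_count_step(boxes: Sequence[Box], cn_prev: int) -> Tuple[bool, int]:
    """Steps (iii)-(iv): count only if the number of objects increased.

    Returns (counted, cn_t); the caller stores cn_t as the new CN_{t-1}.
    """
    cn_t = count_objects(boxes)
    return cn_t > cn_prev, cn_t


# Boxes as they might come out of step (i); values are illustrative only.
detections = [(0, 0, 10, 10), (5, 5, 10, 10), (50, 50, 10, 10)]
counted, cn_prev = acf_count_step(detections, cn_prev=1)
print(counted, cn_prev)
```

Merging overlaps transitively keeps the object count stable when the detector fires several partially overlapping boxes on the same piece. This is one possible reading of step (ii), not necessarily the authors’ exact rule.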

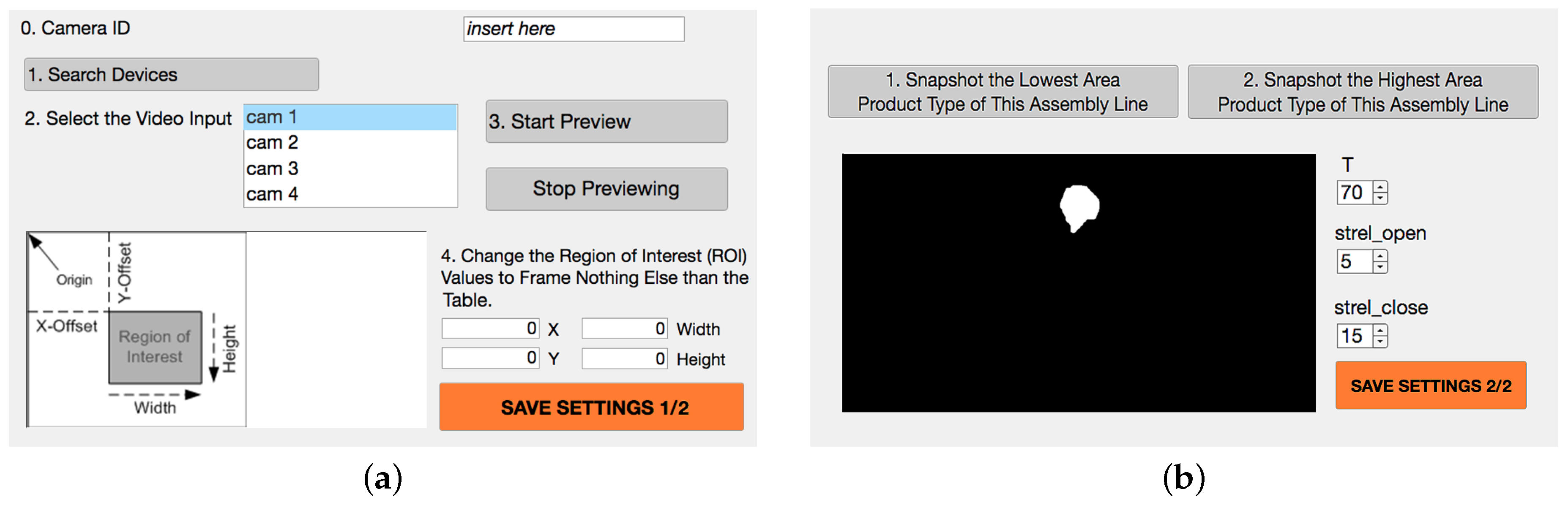

2.4. Prototypical Implementation

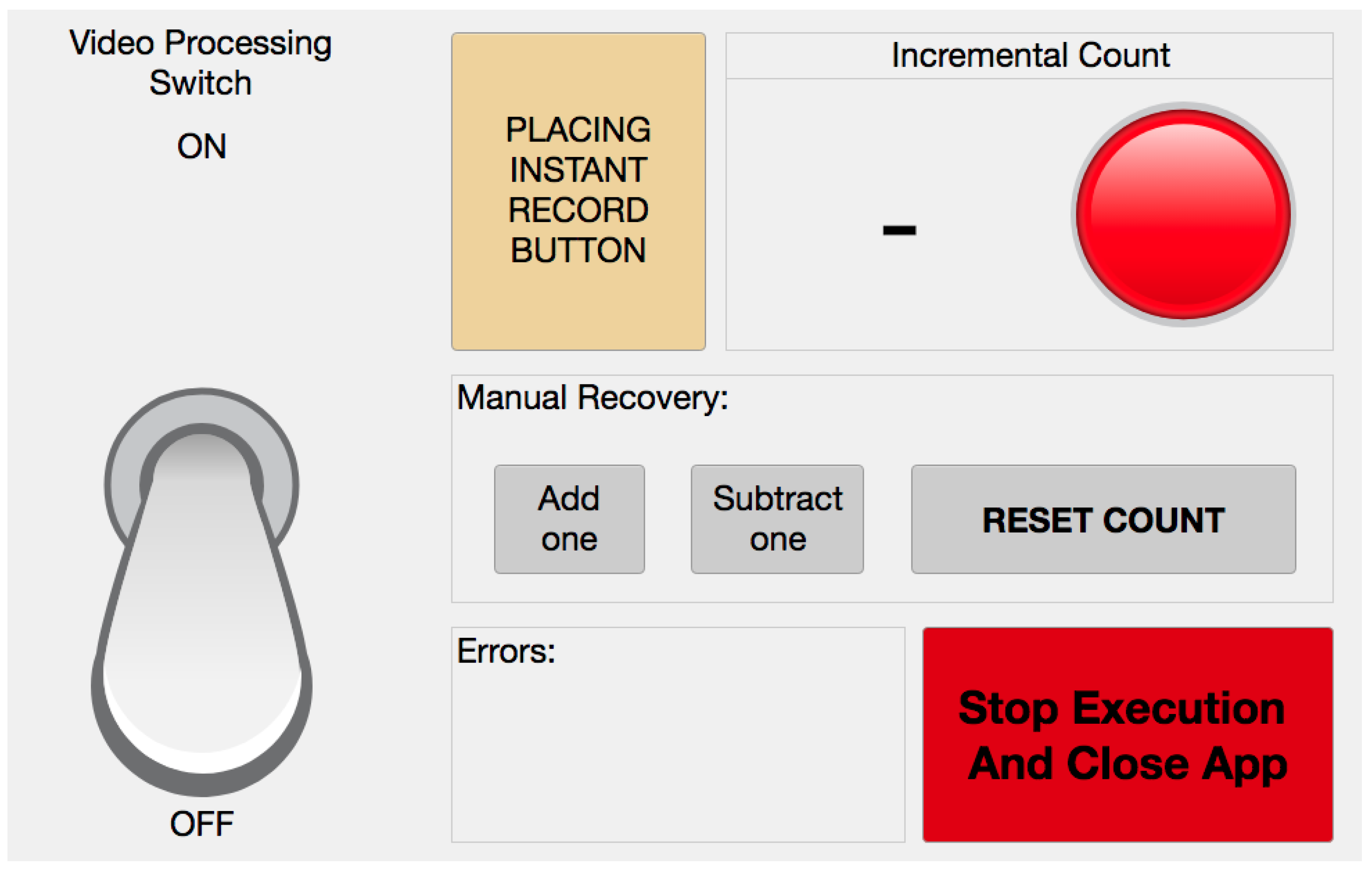

2.4.1. Real-Time Counting Application

2.4.2. Parameter Setting Tool

3. Results

- (a) Count every time an assembled piece is placed on the table;

- (b) Do not count whenever a piece is picked up from the table;

- (c) More generally, do not count whenever a piece is not placed on the table, since operators sometimes enter the camera’s ROI without placing or picking up a piece;

- (d) Analyse the live video stream over long periods without missing any interval;

- (e) Count in a timely manner; and

- (f) Be adaptable to all the different assembly lines on the company’s shop floor.

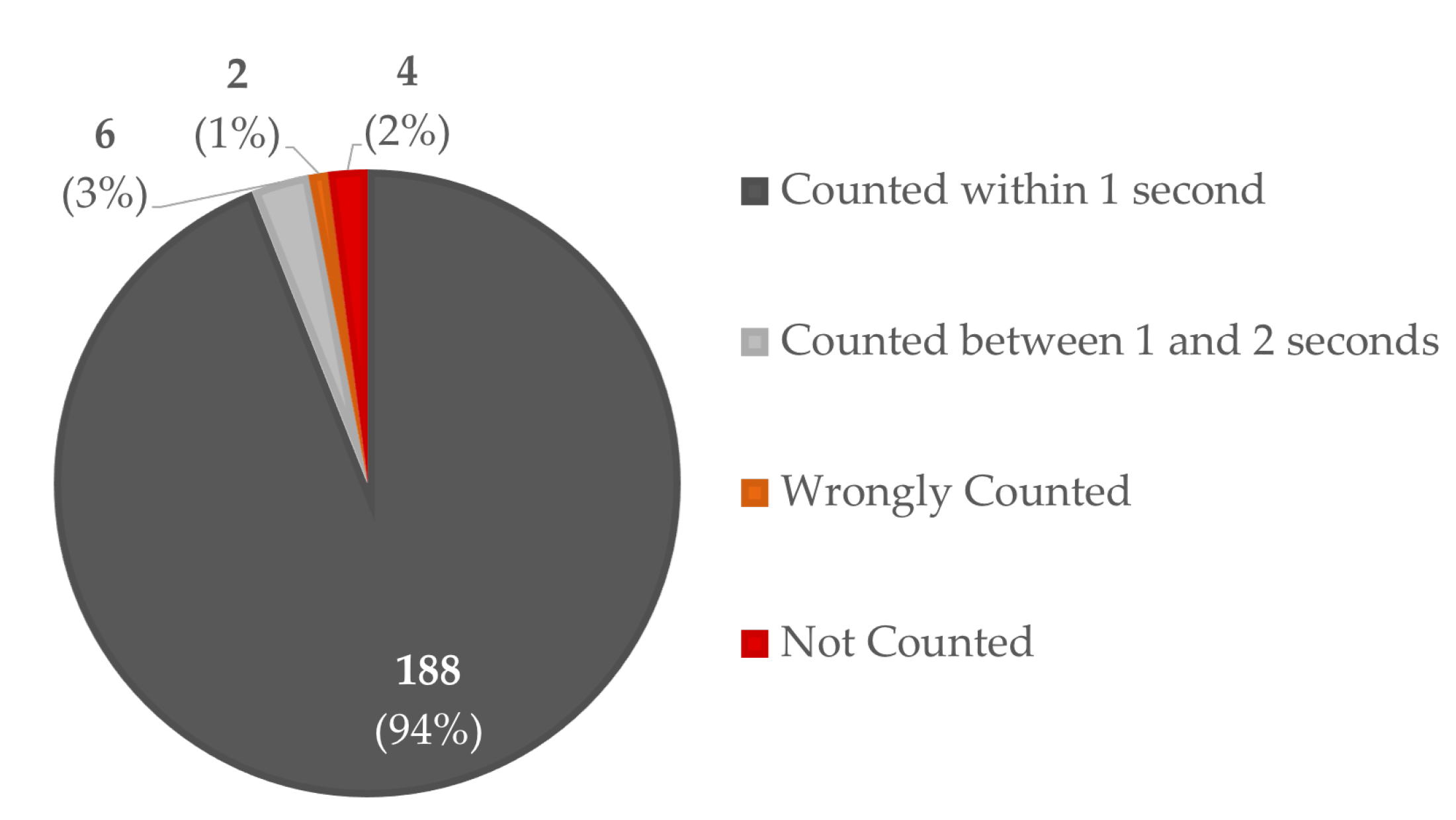

3.1. Testing Counting Capability

- correctly count one piece when the operator places an assembled piece on the intermediate table (True Positive, TP);

- wrongly count when no piece has been added to the table (False Positive, FP);

- correctly not count when no new piece has been placed on the table (True Negative, TN); or

- wrongly not count when an assembled piece has been placed on the table (False Negative, FN).
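The four outcomes above depend only on two facts per test event: whether a piece was actually placed, and whether the system counted. A minimal tally sketch follows. The `(piece_placed, system_counted)` event format and the function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple


def classify(placed: bool, counted: bool) -> str:
    """Map one test event to TP, FP, TN or FN."""
    if counted:
        return "TP" if placed else "FP"
    return "FN" if placed else "TN"


def tally(events: Iterable[Tuple[bool, bool]]) -> Counter:
    """events: (piece_placed, system_counted) pairs; the format is hypothetical."""
    return Counter(classify(placed, counted) for placed, counted in events)


log = [(True, True), (True, False), (False, False), (False, True), (True, True)]
print(tally(log))
```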

- Sensitivity, computed as the number of TP divided by the sum of TP and FN, measures the solution’s ability to correctly identify placed pieces and count them;

- Specificity, computed as the number of TN divided by the sum of TN and FP, measures the solution’s ability to correctly identify picked-up pieces without counting them; and

- Accuracy, computed as the sum of TP and TN divided by the sum of TP, FP, TN, and FN, measures the solution’s overall ability to behave correctly.
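The three metrics above reduce to simple ratios of the outcome counts. The sketch below applies them to the Original Blob counts reported in the results table (TP = 90, FP = 13, TN = 75, FN = 0). It reproduces the reported 100%, 85.2% and 92.7%. The function name `metrics` is hypothetical. Returning NaN for an empty denominator is a choice made here, not something the text specifies.

```python
import math


def metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Sensitivity, specificity and accuracy as defined in Section 3.1."""
    def ratio(num: int, den: int) -> float:
        return num / den if den else math.nan  # undefined without samples

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
    }


for name, value in metrics(tp=90, fp=13, tn=75, fn=0).items():
    print(f"{name}: {value:.1%}")
```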

3.2. Testing Real-Time Capability

3.3. Testing Other Requirements

4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CV | Computer Vision |
| MV | Machine Vision |
| ROI | Region of Interest |
| ACF | Aggregated Channel Features |
| GUI | Graphical User Interface |


| Algorithm | True Positive | False Positive | True Negative | False Negative |
|---|---|---|---|---|
| Original Blob | 90 | 13 | 75 | 0 |
| Original ACF | 80 | 3 | 85 | 10 |
| Improved Blob | 88 | 2 | 86 | 2 |
| Improved ACF | 87 | 3 | 85 | 3 |

| | Original Blob | Original ACF | Improved Blob | Improved ACF |
|---|---|---|---|---|
| Sensitivity | 100% | 89% | 96.7% | 97.8% |
| Specificity | 85.2% | 96.6% | 96.6% | 97.7% |
| Accuracy | 92.7% | 92.7% | 96.6% | 97.8% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pierleoni, P.; Belli, A.; Palma, L.; Sabbatini, L. A Versatile Machine Vision Algorithm for Real-Time Counting Manually Assembled Pieces. J. Imaging 2020, 6, 48. https://doi.org/10.3390/jimaging6060048
