Machine Vision Systems in Precision Agriculture for Crop Farming

Abstract

1. Introduction

- Plant and fruit detection approaches

- Harvesting support approaches, including fruit grading, ripeness detection, fruit counting, and yield prediction

- Plant and fruit health protection and disease detection approaches, including weed, insect, disease, and deficiency detection

- Camera types used for machine vision in agriculture

- Vision-based vehicle guidance systems (navigation) for agricultural applications

- Vision-based autonomous mobile agricultural robots

2. Plant and Fruit Detection Approaches

3. Harvest Support Approaches

3.1. Fruit Grading and Ripeness Detection

3.2. Fruit Counting and Yield Prediction

4. Plant/Fruit Health Protection and Disease Detection Approaches

4.1. Weed Detection

4.2. Insect Detection

4.3. Disease and Deficiencies Detection

5. Camera Types Used for Machine Vision in Agriculture

6. Vision-Based Vehicle Guidance Systems for Agricultural Applications

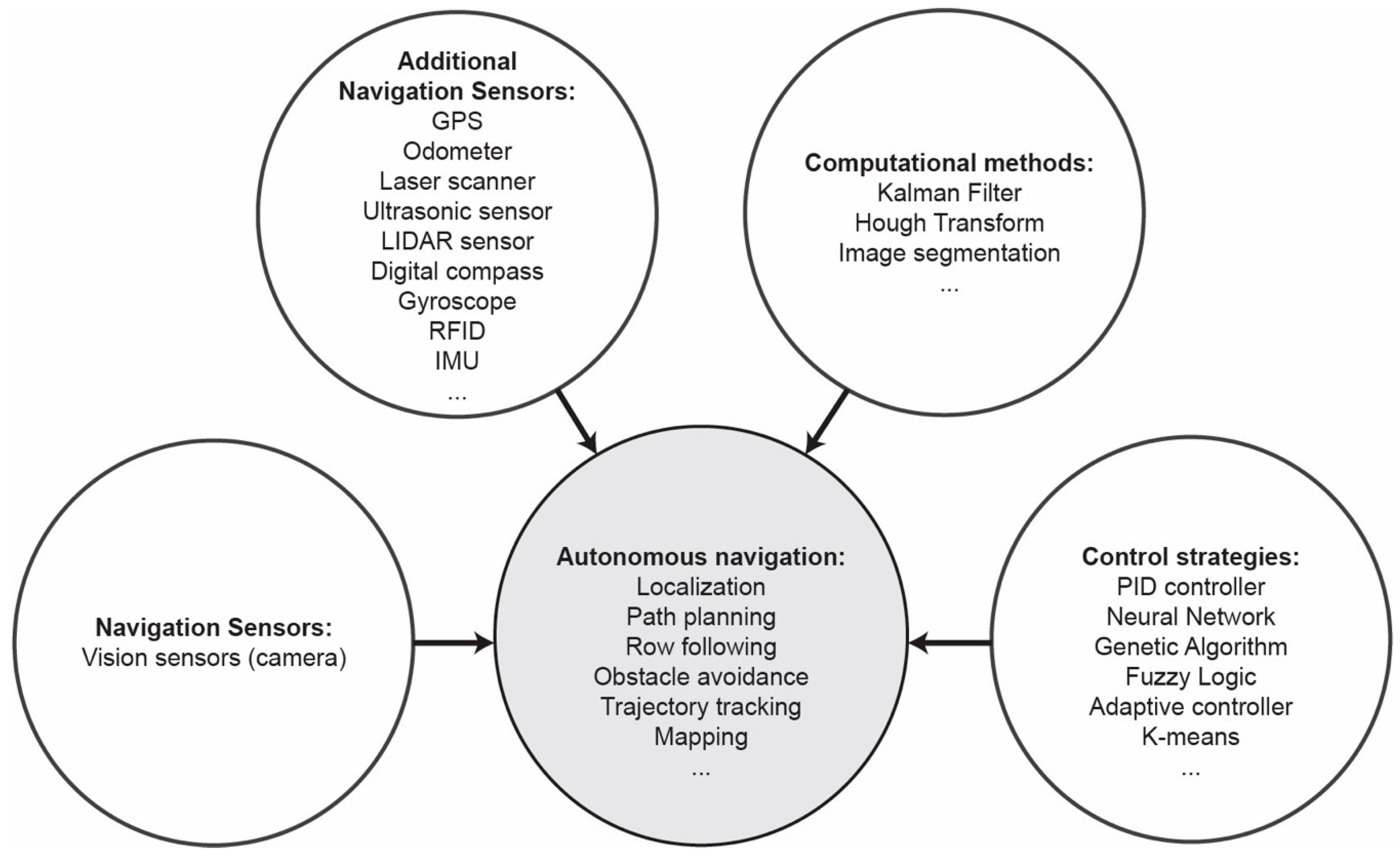

7. Vision-Based AgroBots

8. Discussion

9. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Pereira, C.S.; Morais, R.; Reis, M.J.C.S. Recent advances in image processing techniques for automated harvesting purposes: A review. In Proceedings of the 2017 Intelligent Systems Conference (IntelliSys), London, UK, 7–8 September 2017; pp. 566–575.

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Iqbal, Z.; Khan, M.A.; Sharif, M.; Shah, J.H.; ur Rehman, M.H.; Javed, K. An automated detection and classification of citrus plant diseases using image processing techniques: A review. Comput. Electron. Agric. 2018, 153, 12–32.

- Patrício, D.I.; Rieder, R. Computer vision and artificial intelligence in precision agriculture for grain crops: A systematic review. Comput. Electron. Agric. 2018, 153, 69–81.

- Pajares, G.; García-Santillán, I.; Campos, Y.; Montalvo, M.; Guerrero, J.; Emmi, L.; Romeo, J.; Guijarro, M.; Gonzalez-de-Santos, P. Machine-Vision Systems Selection for Agricultural Vehicles: A Guide. J. Imaging 2016, 2, 34.

- Reid, J.F.; Zhang, Q.; Noguchi, N.; Dickson, M. Agricultural automatic guidance research in North America. Comput. Electron. Agric. 2000, 25, 155–167.

- Bechar, A.; Vigneault, C. Agricultural robots for field operations: Concepts and components. Biosyst. Eng. 2016, 149, 94–111.

- Shalal, N.; Low, T.; McCarthy, C.; Hancock, N. A Review of Autonomous Navigation Systems in Agricultural Environments. In Proceedings of the 2013 Society for Engineering in Agriculture Conference: Innovative Agricultural Technologies for a Sustainable Future, Barton, Australia, 22–25 September 2013.

- Yaghoubi, S.; Akbarzadeh, N.A.; Bazargani, S.S.; Bazargani, S.S.; Bamizan, M.; Asl, M.I. Autonomous robots for agricultural tasks and farm assignment and future trends in agro robots. Int. J. Mech. Mechatronics Eng. 2013, 13, 1–6.

- Torii, T. Research in autonomous agriculture vehicles in Japan. Comput. Electron. Agric. 2000, 25, 133–153.

- Ji, B.; Zhu, W.; Liu, B.; Ma, C.; Li, X. Review of Recent Machine-Vision Technologies in Agriculture. In Proceedings of the 2009 Second International Symposium on Knowledge Acquisition and Modeling, Wuhan, China, 30 November–1 December 2009; pp. 330–334.

- Kitchenham, B. Procedures for Performing Systematic Reviews; Joint Technical Report; Computer Science Department, Keele University (TR/SE0401) and National ICT Australia Ltd. (0400011T.1): Sydney, Australia, 2004; ISSN 1353-7776.

- Benitti, F.B.V. Exploring the educational potential of robotics in schools: A systematic review. Comput. Educ. 2012, 58, 978–988.

- Badeka, E.; Kalabokas, T.; Tziridis, K.; Nicolaou, A.; Vrochidou, E.; Mavridou, E.; Papakostas, G.A.; Pachidis, T. Grapes Visual Segmentation for Harvesting Robots Using Local Texture Descriptors. In Computer Vision Systems (ICVS 2019); Springer: Thessaloniki, Greece, 2019; pp. 98–109.

- Behroozi-Khazaei, N.; Maleki, M.R. A robust algorithm based on color features for grape cluster segmentation. Comput. Electron. Agric. 2017, 142, 41–49.

- Li, B.; Long, Y.; Song, H. Detection of green apples in natural scenes based on saliency theory and Gaussian curve fitting. Int. J. Agric. Biol. Eng. 2018, 11, 192–198.

- Dias, P.A.; Tabb, A.; Medeiros, H. Apple flower detection using deep convolutional networks. Comput. Ind. 2018, 99, 17–28.

- Li, D.; Zhao, H.; Zhao, X.; Gao, Q.; Xu, L. Cucumber Detection Based on Texture and Color in Greenhouse. Int. J. Pattern Recognit. Artif. Intell. 2017, 31, 1754016.

- Prasetyo, E.; Adityo, R.D.; Suciati, N.; Fatichah, C. Mango leaf image segmentation on HSV and YCbCr color spaces using Otsu thresholding. In Proceedings of the 2017 3rd International Conference on Science and Technology-Computer (ICST), Yogyakarta, Indonesia, 11–12 July 2017; pp. 99–103.

- Sabzi, S.; Abbaspour-Gilandeh, Y.; Javadikia, H. Machine vision system for the automatic segmentation of plants under different lighting conditions. Biosyst. Eng. 2017, 161, 157–173.

- Sabanci, K.; Kayabasi, A.; Toktas, A. Computer vision-based method for classification of wheat grains using artificial neural network. J. Sci. Food Agric. 2017, 97, 2588–2593.

- Yahata, S.; Onishi, T.; Yamaguchi, K.; Ozawa, S.; Kitazono, J.; Ohkawa, T.; Yoshida, T.; Murakami, N.; Tsuji, H. A hybrid machine learning approach to automatic plant phenotyping for smart agriculture. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 1787–1793.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

- Rosten, E.; Porter, R.; Drummond, T. Faster and Better: A Machine Learning Approach to Corner Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 105–119.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Viola, P.; Jones, M.J. Robust Real-Time Face Detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Zuiderveld, K. Contrast Limited Adaptive Histogram Equalization. In Graphics Gems; Elsevier: Amsterdam, The Netherlands, 1994; pp. 474–485. ISBN 0123361559.

- Bishop, C.M. Pattern Recognition and Machine Learning (Information Science and Statistics), 1st ed.; 2006. corr. 2nd Printing ed.; Springer: New York, NY, USA, 2006.

- Xiong, X.; Duan, L.; Liu, L.; Tu, H.; Yang, P.; Wu, D.; Chen, G.; Xiong, L.; Yang, W.; Liu, Q. Panicle-SEG: A robust image segmentation method for rice panicles in the field based on deep learning and superpixel optimization. Plant Methods 2017, 13, 104.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Liu, M.-Y.; Tuzel, O.; Ramalingam, S.; Chellappa, R. Entropy rate superpixel segmentation. In Proceedings of the CVPR 2011, Providence, RI, USA, 20–25 June 2011; pp. 2097–2104.

- Yang, W.; Tang, W.; Li, M.; Zhang, D.; Zhang, Y. Corn tassel detection based on image processing. In Proceedings of the 2012 International Workshop on Image Processing and Optical Engineering, SPIE, Harbin, China, 9–10 January 2011.

- Duan, L.; Huang, C.; Chen, G.; Xiong, L.; Liu, Q.; Yang, W. Determination of rice panicle numbers during heading by multi-angle imaging. Crop J. 2015, 3, 211–219.

- Lu, H.; Cao, Z.; Xiao, Y.; Li, Y.; Zhu, Y. Region-based colour modelling for joint crop and maize tassel segmentation. Biosyst. Eng. 2016, 147, 139–150.

- Zhang, J.; Kong, F.; Zhai, Z.; Wu, J.; Han, S. Robust Image Segmentation Method for Cotton Leaf Under Natural Conditions Based on Immune Algorithm and PCNN Algorithm. Int. J. Pattern Recognit. Artif. Intell. 2018, 32, 1854011.

- Wang, Z.; Wang, K.; Yang, F.; Pan, S.; Han, Y. Image segmentation of overlapping leaves based on Chan–Vese model and Sobel operator. Inf. Process. Agric. 2018, 5, 1–10.

- Oppenheim, D.; Edan, Y.; Shani, G. Detecting Tomato Flowers in Greenhouses Using Computer Vision. Int. J. Comput. Electr. Autom. Control Inf. Eng. 2017, 11, 104–109.

- Momin, M.A.; Rahman, M.T.; Sultana, M.S.; Igathinathane, C.; Ziauddin, A.T.M.; Grift, T.E. Geometry-based mass grading of mango fruits using image processing. Inf. Process. Agric. 2017, 4, 150–160.

- Wang, Z.; Walsh, K.; Verma, B. On-Tree Mango Fruit Size Estimation Using RGB-D Images. Sensors 2017, 17, 2738.

- Ponce, J.M.; Aquino, A.; Millán, B.; Andújar, J.M. Olive-Fruit Mass and Size Estimation Using Image Analysis and Feature Modeling. Sensors 2018, 18, 2930.

- Wan, P.; Toudeshki, A.; Tan, H.; Ehsani, R. A methodology for fresh tomato maturity detection using computer vision. Comput. Electron. Agric. 2018, 146, 43–50.

- Abdulhamid, U.; Aminu, M.; Daniel, S. Detection of Soya Beans Ripeness Using Image Processing Techniques and Artificial Neural Network. Asian J. Phys. Chem. Sci. 2018, 5, 1–9.

- Choi, D.; Lee, W.S.; Schueller, J.K.; Ehsani, R.; Roka, F.; Diamond, J. A performance comparison of RGB, NIR, and depth images in immature citrus detection using deep learning algorithms for yield prediction. In Proceedings of the 2017 Spokane, Washington, DC, USA, 16–19 July 2017.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Sethy, P.K.; Routray, B.; Behera, S.K. Detection and Counting of Marigold Flower Using Image Processing Technique. In Lecture Notes in Networks and Systems; Springer: Singapore, 2019; pp. 87–93.

- Chen, S.W.; Shivakumar, S.S.; Dcunha, S.; Das, J.; Okon, E.; Qu, C.; Taylor, C.J.; Kumar, V. Counting Apples and Oranges With Deep Learning: A Data-Driven Approach. IEEE Robot. Autom. Lett. 2017, 2, 781–788.

- Dorj, U.-O.; Lee, M.; Yun, S. An yield estimation in citrus orchards via fruit detection and counting using image processing. Comput. Electron. Agric. 2017, 140, 103–112.

- Cheng, H.; Damerow, L.; Sun, Y.; Blanke, M. Early Yield Prediction Using Image Analysis of Apple Fruit and Tree Canopy Features with Neural Networks. J. Imaging 2017, 3, 6.

- Sethy, P.K.; Routray, B.; Behera, S.K. Advances in Computer, Communication and Control. In Lecture Notes in Networks and Systems; Biswas, U., Banerjee, A., Pal, S., Biswas, A., Sarkar, D., Haldar, S., Eds.; Springer Singapore: Singapore, 2019; Volume 41, ISBN 978-981-13-3121-3.

- Knoll, F.J.; Czymmek, V.; Poczihoski, S.; Holtorf, T.; Hussmann, S. Improving efficiency of organic farming by using a deep learning classification approach. Comput. Electron. Agric. 2018, 153, 347–356.

- Bakhshipour, A.; Jafari, A.; Nassiri, S.M.; Zare, D. Weed segmentation using texture features extracted from wavelet sub-images. Biosyst. Eng. 2017, 157, 1–12.

- Mallat, S.G. A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693.

- Kounalakis, T.; Triantafyllidis, G.A.; Nalpantidis, L. Image-based recognition framework for robotic weed control systems. Multimed. Tools Appl. 2018, 77, 9567–9594.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Lottes, P.; Behley, J.; Milioto, A.; Stachniss, C. Fully Convolutional Networks With Sequential Information for Robust Crop and Weed Detection in Precision Farming. IEEE Robot. Autom. Lett. 2018, 3, 2870–2877.

- Lottes, P.; Stachniss, C. Semi-supervised online visual crop and weed classification in precision farming exploiting plant arrangement. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 5155–5161.

- Milioto, A.; Lottes, P.; Stachniss, C. Real-Time Semantic Segmentation of Crop and Weed for Precision Agriculture Robots Leveraging Background Knowledge in CNNs. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 2229–2235.

- Bosilj, P.; Duckett, T.; Cielniak, G. Connected attribute morphology for unified vegetation segmentation and classification in precision agriculture. Comput. Ind. 2018, 98, 226–240.

- Salembier, P.; Oliveras, A.; Garrido, L. Antiextensive connected operators for image and sequence processing. IEEE Trans. Image Process. 1998, 7, 555–570.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms; IEEE: Piscataway, NJ, USA, 1979.

- Hamuda, E.; Mc Ginley, B.; Glavin, M.; Jones, E. Automatic crop detection under field conditions using the HSV colour space and morphological operations. Comput. Electron. Agric. 2017, 133, 97–107.

- García, J.; Pope, C.; Altimiras, F. A Distributed Means Segmentation Algorithm Applied to Lobesia botrana Recognition. Complexity 2017, 2017, 1–14.

- Pérez, D.S.; Bromberg, F.; Diaz, C.A. Image classification for detection of winter grapevine buds in natural conditions using scale-invariant features transform, bag of features and support vector machines. Comput. Electron. Agric. 2017, 135, 81–95.

- Ebrahimi, M.A.; Khoshtaghaza, M.H.; Minaei, S.; Jamshidi, B. Vision-based pest detection based on SVM classification method. Comput. Electron. Agric. 2017, 137, 52–58.

- Vassallo-Barco, M.; Vives-Garnique, L.; Tuesta-Monteza, V.; Mejía-Cabrera, H.I.; Toledo, R.Y. Automatic detection of nutritional deficiencies in coffee tree leaves through shape and texture descriptors. J. Digit. Inf. Manag. 2017, 15, 7–18.

- Ali, H.; Lali, M.I.; Nawaz, M.Z.; Sharif, M.; Saleem, B.A. Symptom based automated detection of citrus diseases using color histogram and textural descriptors. Comput. Electron. Agric. 2017, 138, 92–104.

- Zhang, C.; Zhang, S.; Yang, J.; Shi, Y.; Chen, J. Apple leaf disease identification using genetic algorithm and correlation based feature selection method. Int. J. Agric. Biol. Eng. 2017, 10.

- Hlaing, C.S.; Zaw, S.M.M. Plant diseases recognition for smart farming using model-based statistical features. In Proceedings of the 2017 IEEE 6th Global Conference on Consumer Electronics (GCCE), Nagoya, Japan, 24–27 October 2017; pp. 1–4.

- Chan, T.F.; Vese, L.A. Active contours without edges. IEEE Trans. Image Process. 2001, 10, 266–277.

- Hu, Q.; Tian, J.; He, D. Wheat leaf lesion color image segmentation with improved multichannel selection based on the Chan–Vese model. Comput. Electron. Agric. 2017, 135, 260–268.

- Patil, J.K.; Kumar, R. Analysis of content based image retrieval for plant leaf diseases using color, shape and texture features. Eng. Agric. Environ. Food 2017, 10, 69–78.

- Islam, M.; Dinh, A.; Wahid, K.; Bhowmik, P. Detection of potato diseases using image segmentation and multiclass support vector machine. In Proceedings of the 2017 IEEE 30th Canadian Conference on Electrical and Computer Engineering (CCECE), Windsor, ON, Canada, 30 April–3 May 2017; pp. 1–4.

- Ma, J.; Du, K.; Zhang, L.; Zheng, F.; Chu, J.; Sun, Z. A segmentation method for greenhouse vegetable foliar disease spots images using color information and region growing. Comput. Electron. Agric. 2017, 142, 110–117.

- Meyer, G.E.; Hindman, T.W.; Laksmi, K. Machine Vision Detection Parameters for Plant Species Identification. In Proceedings of the SPIE, Washington, DC, USA, 14 January 1999; pp. 327–335.

- Zhou, R.; Kaneko, S.; Tanaka, F.; Kayamori, M.; Shimizu, M. Disease detection of Cercospora Leaf Spot in sugar beet by robust template matching. Comput. Electron. Agric. 2014, 108, 58–70.

- Prakash, R.M.; Saraswathy, G.P.; Ramalakshmi, G.; Mangaleswari, K.H.; Kaviya, T. Detection of leaf diseases and classification using digital image processing. In Proceedings of the 2017 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 17–18 March 2017; pp. 1–4.

- Sharif, M.; Khan, M.A.; Iqbal, Z.; Azam, M.F.; Lali, M.I.U.; Javed, M.Y. Detection and classification of citrus diseases in agriculture based on optimized weighted segmentation and feature selection. Comput. Electron. Agric. 2018, 150, 220–234.

- Zhang, S.; Wu, X.; You, Z.; Zhang, L. Leaf image based cucumber disease recognition using sparse representation classification. Comput. Electron. Agric. 2017, 134, 135–141.

- Zhang, S.; Wang, H.; Huang, W.; You, Z. Plant diseased leaf segmentation and recognition by fusion of superpixel, K-means and PHOG. Optik 2018, 157, 866–872.

- Zhang, H.; Sha, Z. Product Classification based on SVM and PHOG Descriptor. Int. J. Comput. Sci. Netw. Secur. 2013, 13, 9.

- Garcia-Lamont, F.; Cervantes, J.; López, A.; Rodriguez, L. Segmentation of images by color features: A survey. Neurocomputing 2018, 292, 1–27.

- McCarthy, C.L.; Hancock, N.H.; Raine, S.R. Applied machine vision of plants: A review with implications for field deployment in automated farming operations. Intell. Serv. Robot. 2010, 3, 209–217.

- Liu, H.; Lee, S.-H.; Chahl, J.S. An evaluation of the contribution of ultraviolet in fused multispectral images for invertebrate detection on green leaves. Precis. Agric. 2017, 18, 667–683.

- Radcliffe, J.; Cox, J.; Bulanon, D.M. Machine vision for orchard navigation. Comput. Ind. 2018, 98, 165–171.

- Warner, M.G.R.; Harries, G.O. An ultrasonic guidance system for driverless tractors. J. Agric. Eng. Res. 1972, 17, 1–9.

- Yukumoto, O.; Matsuo, Y.; Noguchi, N. Robotization of agricultural vehicles (part 1)-Component technologies and navigation systems. Jpn. Agric. Res. Q. 2000, 34, 99–105.

- Bell, T. Automatic tractor guidance using carrier-phase differential GPS. Comput. Electron. Agric. 2000, 25, 53–66.

- Han, S.; Zhang, Q.; Ni, B.; Reid, J. A guidance directrix approach to vision-based vehicle guidance systems. Comput. Electron. Agric. 2004, 43, 179–195.

- Wilson, J. Guidance of agricultural vehicles—A historical perspective. Comput. Electron. Agric. 2000, 25, 3–9.

- Marchant, J.A. Tracking of row structure in three crops using image analysis. Comput. Electron. Agric. 1996, 15, 161–179.

- Zhang, Q.; Reid, J.J.F.; Noguchi, N. Agricultural Vehicle Navigation Using Multiple Guidance Sensors. 1999. Available online: https://www.researchgate.net/profile/John_Reid10/publication/245235458_Agricultural_Vehicle_Navigation_Using_Multiple_Guidance_Sensors/links/543bce7c0cf2d6698be335dd/Agricultural-Vehicle-Navigation-Using-Multiple-Guidance-Sensors.pdf (accessed on 9 December 2019).

- Bakker, T.; Wouters, H.; van Asselt, K.; Bontsema, J.; Tang, L.; Müller, J.; van Straten, G. A vision based row detection system for sugar beet. Comput. Electron. Agric. 2008, 60, 87–95.

- Jiang, G.-Q.; Zhao, C.-J.; Si, Y.-S. A machine vision based crop rows detection for agricultural robots. In Proceedings of the 2010 International Conference on Wavelet Analysis and Pattern Recognition, Qingdao, China, 11–14 July 2010; pp. 114–118.

- Jiang, G.; Zhao, C. A vision system based crop rows for agricultural mobile robot. In Proceedings of the 2010 International Conference on Computer Application and System Modeling (ICCASM 2010), Taiyuan, China, 22–24 October 2010; pp. V11:142–V11:145.

- Torres-Sospedra, J.; Nebot, P. A New Approach to Visual-Based Sensory System for Navigation into Orange Groves. Sensors 2011, 11, 4086–4103.

- Sharifi, M.; Chen, X. A novel vision based row guidance approach for navigation of agricultural mobile robots in orchards. In Proceedings of the 2015 6th International Conference on Automation, Robotics and Applications (ICARA), Queenstown, New Zealand, 17–19 October 2015; pp. 251–255.

- Bakker, T.; van Asselt, K.; Bontsema, J.; Müller, J.; van Straten, G. Autonomous navigation using a robot platform in a sugar beet field. Biosyst. Eng. 2011, 109, 357–368.

- Xue, J.; Xu, L. Autonomous Agricultural Robot and its Row Guidance. In Proceedings of the 2010 International Conference on Measuring Technology and Mechatronics Automation, Changsha, China, 13–14 March 2010; pp. 725–729.

- Scarfe, A.J.; Flemmer, R.C.; Bakker, H.H.; Flemmer, C.L. Development of an autonomous kiwifruit picking robot. In Proceedings of the 2009 4th International Conference on Autonomous Robots and Agents, Wellington, New Zealand, 10–12 February 2009; pp. 380–384.

- Hiremath, S.; van Evert, F.K.; ter Braak, C.; Stein, A.; van der Heijden, G. Image-based particle filtering for navigation in a semi-structured agricultural environment. Biosyst. Eng. 2014, 121, 85–95.

- Subramanian, V.; Burks, T.F.; Arroyo, A.A. Development of machine vision and laser radar based autonomous vehicle guidance systems for citrus grove navigation. Comput. Electron. Agric. 2006, 53, 130–143.

- Wang, Q.; Zhang, Q.; Rovira-Más, F.; Tian, L. Stereovision-based lateral offset measurement for vehicle navigation in cultivated stubble fields. Biosyst. Eng. 2011, 109, 258–265.

- Xue, J.; Zhang, L.; Grift, T.E. Variable field-of-view machine vision based row guidance of an agricultural robot. Comput. Electron. Agric. 2012, 84, 85–91.

- Jiang, H.; Xiao, Y.; Zhang, Y.; Wang, X.; Tai, H. Curve path detection of unstructured roads for the outdoor robot navigation. Math. Comput. Model. 2013, 58, 536–544.

- Meng, Q.; Qiu, R.; He, J.; Zhang, M.; Ma, X.; Liu, G. Development of agricultural implement system based on machine vision and fuzzy control. Comput. Electron. Agric. 2015, 112, 128–138.

- Muscato, G.; Prestifilippo, M.; Abbate, N.; Rizzuto, I. A prototype of an orange picking robot: Past history, the new robot and experimental results. Ind. Robot-An Int. J. 2005, 32, 128–138.

- Ortiz, J.M.; Olivares, M. A Vision Based Navigation System for an Agricultural Field Robot. In Proceedings of the 2006 IEEE 3rd Latin American Robotics Symposium, Santiago, Chile, 26–27 October 2006; pp. 106–114.

- Søgaard, H.T.; Lund, I. Application Accuracy of a Machine Vision-controlled Robotic Micro-dosing System. Biosyst. Eng. 2007, 96, 315–322.

- He, B.; Liu, G.; Ji, Y.; Si, Y.; Gao, R. Auto Recognition of Navigation Path for Harvest Robot Based on Machine Vision. In IFIP Advances in Information and Communication Technology; Springer: Berlin/Heidelberg, Germany, 2011; pp. 138–148. ISBN 9783642183324.

| Ref. | Fruit/Plant Type | Task | Feature Type | Method | Performance Indices |
|---|---|---|---|---|---|
| [16] | Green apples | Detection of green apples in natural scenes | Color (V-component of the YUV color space) | Saliency map and Gaussian curve fitting | Accuracy = 91.84% |
| [17] | Apples | Apple flower detection | Color and spatial proximity | SLIC + CNN + SVM + PCA | AUC-PR and F-measure = 93.40% on the Apple A dataset; F-measure = 80.20% on Apple B, 82.20% on Apple C, and 79.90% on Peach |
| [15] | Grapes | Grape identification | Color (HSV and L\*a\*b\* color spaces) | ANN + GA | Accuracy = 99.40% |
| [19] | Mango | Mango leaf image segmentation | Color (HSV and YCbCr color spaces) | Otsu | Precision = 99.50%; Recall = 97.10%; F-measure = 98.30% |
| [20] | Plants of six different types (e.g., potato, mallow) | Segmentation of plants at different growth stages, under different daylight conditions and one controlled condition, and in different imaging situations | Color (five features selected by a hybrid ANN from 126 features extracted from the RGB, CMY, HSI, HSV, YIQ, and YCbCr color spaces) | ANN-HS | Accuracy = 99.69% |
| [21] | Grains | Classification of wheat grains by species | Size, color, and texture | Otsu + MLP | Accuracy = 99.92% |
| [22] | Flowers and seedpods of soybean plants | Flower and seedpod detection in a crowd of soybean plants | Color (hue of the HSV color space) for flower detection; Haar-like features for seedpod detection | SLIC + CNN (flowers); Viola-Jones detector + CLAHE + CNN (seedpods) | Precision = 90.00%; Recall = 82.60%; F-measure = 84.00% |
| [29] | Rice | Image segmentation of rice panicles | Color (L, a, b values of the CIELAB color space) | SLIC + CNN + ERS | F-measure = 76.73% |
| [35] | Cotton | Cotton leaf segmentation | Color (three anti-light color components selected by histogram statistics with mean gray value) | PCNN + immune algorithm | Accuracy = 93.50% |
| [36] | Overlapping leaves (tested on cucumber leaves) | Image segmentation of overlapping leaves | Color and shape | Sobel + C-V model | Accuracy = 95.72% |
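
Several rows above report precision, recall, and F-measure together. As a quick consistency check, the F-measure can be recomputed from the other two. The sketch below assumes the balanced (β = 1) harmonic-mean form, which the table does not state explicitly; `f_measure` is a hypothetical helper name, not something taken from the cited works.

```python
def f_measure(precision: float, recall: float, beta: float = 1.0) -> float:
    """F-beta score from precision and recall given as fractions in [0, 1]."""
    if precision <= 0.0 and recall <= 0.0:
        return 0.0  # avoid division by zero when both inputs are zero
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Precision and recall reported for mango leaf segmentation [19].
f1 = f_measure(0.995, 0.971)
print(f"F-measure = {100 * f1:.1f}%")
```

Under that assumption, the recomputed value for [19] rounds to the 98.30% listed in the table.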

| Ref. | Fruit Type | Task | Feature Type | Method | Performance Indices |
|---|---|---|---|---|---|
| [38] | Mango | Grading based on size (classifying mangos into one of three mass grades: large, medium, or small) | Color, geometrical, and shape | Region-based global thresholding color binarization, combined with median filter and morphological analysis | Accuracy = 97.00% |
| [39] | Mango | Size estimation | Histogram of Oriented Gradients (HOG), color (CIE L\*a\*b\* color space) | Cascade classifier, Otsu thresholding, and ellipse fitting | Precision = 100% for fruit detection; R² = 0.96 and RMSE = 4.9 mm for fruit length; R² = 0.95 and RMSE = 4.3 mm for fruit width |
| [40] | Olive | Estimation of the maximum/minimum (polar/equatorial) diameter and mass of olive fruits | HSV color space (value and saturation channels) and morphological features | Mathematical morphology and automated thresholding based on statistical bimodal analysis | Relative mean errors below 2.50% for all tested cases |
| [41] | Tomato | Ripeness detection | Color (HSI color model) | BPNN | Accuracy = 99.31% |
| [43] | Citrus | Ripeness detection | Color and shape | Circular Hough Transform + CHOI’s Circle Estimation (‘CHOICE’) + AlexNet | Recall = 91.60%, 96.00%, and 90.60% for RGB, NIR, and depth images, respectively |

| Ref. | Fruit/Plant Type | Task | Feature Type | Method | Performance Indices |
|---|---|---|---|---|---|
| [49] | Marigold flowers | Detection and counting | Color (HSV color space) | HSV color transform and circular Hough transform (CHT) | Average error of 5% |
| [37] | Tomato flowers | Detection and counting | Color (HSV) and morphological (size and location) | Adaptive global threshold, segmentation over the HSV color space, and morphological cues | Precision = 80.00%; Recall = 80.00% |
| [46] | Apples and oranges | Fruit detection and counting | Pixel based | CNN and linear regression | Mean error (in the form of ratio of total fruit counted and standard deviation of the errors) of 13.8 on the oranges and 10.5 on the apples |
| [48] | Apples | Early yield prediction | Color (RGB and HSI) and tree canopy features | Otsu + BPNN | R² = 0.82 and 0.80 |
| [47] | Citrus | Detection and counting | Color (HSV) | Histogram thresholding + watershed segmentation | Mean absolute error = 5.76% |

| Ref. | Fruit/Plant Type | Task | Feature Type | Method | Performance Indices |
|---|---|---|---|---|---|
| [61] | Weed in cauliflower fields | Weed detection | Color (HSV color space) and morphological | Morphological image analysis | Recall = 98.91%; Precision = 99.04% |
| [50] | Weed in carrot fields | Weed detection | Color (HSV color space) | CNN | Precision = 99.10%; F-measure = 99.60%; Accuracy = 99.30% |
| [53] | Broad-leaved dock weed | Weed detection | Speeded-Up Robust Features (SURF) [54] | SURF + GMM | Accuracy = 89.09%; false-positive rate = 4.38% |
| [57] | Weed in crop fields | Weed detection | Color (RGB) and plant features (vegetation indices) | Deep encoder-decoder CNN | Results on three datasets: Precision = 98.16%, Recall = 93.35%; Precision = 67.91%, Recall = 63.33%; Precision = 87.87%, Recall = 64.66% |
| [51] | Weed in sugarbeet fields | Weed detection | Wavelet texture features | PCA + ANN | Accuracy = 96.00% |
| [55] | Weed in sugarbeet fields | Weed detection | Spatial information | CNN + encoder-decoder structure | Results on two datasets: Precision = 97.90%, Recall = 87.80%; Precision = 72.70%, Recall = 95.30% |
| [58] | Weed in sugarbeet fields | Weed detection | Attribute morphology based | Selection of regions based on max-tree hierarchy [59] and classification with SVM | F-measure = 85.00% (onions); F-measure = 76.00% (sugarbeet) |

| Ref. | Fruit/Plant Type | Task | Feature Type | Method | Performance Indices |
|---|---|---|---|---|---|
| [62] | Grapes | L. botrana recognition | Color (gray scale values and gradient) | Clustering | Specificity = 95.10% |
| [63] | Grapes | Grapevine bud detection | SIFT | SVM | Precision = 86.00% |
| [64] | Strawberry plants | Pest detection in strawberry plants | Color (HSI color space) and morphological (ratio of major diameter to minor diameter in region) | SVM | MSE = 0.471 |

| Ref. | Fruit/Plant Type | Task | Feature Type | Method | Performance Indices |
|---|---|---|---|---|---|
| [65] | Coffee leaves | Identification of boron (B), calcium (Ca), iron (Fe), and potassium (K) deficiencies | Color, shape, and texture | Otsu + BSM + GLCM | F-measure = 64.90% |
| [68] | Plants | Plant disease detection | SIFT + statistical features | SVM | Accuracy = 78.70% |
| [70] | Wheat | Detection of lesions on wheat leaves | Color | C-V model + PCA + K-means | Accuracy = 84.17% |
| [71] | Soybean leaves | Retrieval of images of diseased soybean leaves | HSV color + SIFT + LGGP | Distance between feature vectors, retrieving the image with the smallest distance | Average retrieval efficiency of 80.00% (top 5 retrieval) and 72.00% (top 10 retrieval) |
| [72] | Potato leaves | Disease detection | Color, texture, and statistical | Hard thresholding (L\*, a\*, and b\* channels) + SVM | Accuracy = 93.70% |
| [73] | Greenhouse vegetables | Detection of foliar disease spots | CCF + ExR | Interactive region growing method based on the CCF map | Precision = 97.29% |
| [66] | Citrus plants | Disease detection | Color (RGB, HSV color histogram) and texture (LBP) | Delta E (DE) + bagged tree ensemble classifier | Accuracy = 99.90% |
| [76] | Citrus leaves | Disease detection and classification | GLCM | K-means + SVM | Accuracy = 90.00% |
| [77] | Citrus leaves | Disease detection and classification | Color, texture, and geometric | PCA + multi-class SVM | Accuracy = 97.00%, 89.00%, and 90.40% on three datasets |
| [78] | Cucumber leaves | Disease detection and classification | Shape and color | K-means + SR | Accuracy = 85.70% |
| [79] | Cucumber and apple leaves | Disease detection and classification | PHOG [80] | K-means + context-aware SVM | Accuracy = 85.64% (apples); Accuracy = 87.55% (cucumber) |
| [67] | Apple leaves | Disease identification | Color, texture, and shape features | SVM | Accuracy = 94.22% |

| Ref. | Reported Advantages | Reported Disadvantages | Main Technique |
|---|---|---|---|
| [90] | Integrates information over a number of crop rows, making the technique tolerant to missing plants and weeds. | Confined to situations where plants are established in rows. | Hough transform |
| [91] | Points in various directrix classes were determined by unsupervised classification. The sensor fusion-based navigation could provide satisfactory agricultural vehicle guidance even while losing individual navigation signals, such as the image of the path or the GPS signal, for short periods of time. | When the steering input frequency was higher than 4 Hz, the steering system could not respond to the steering command. | A heuristic method and Hough transform |
| [88] | Good accuracy from two test data sets: a set of soybean images and a set of corn images. The procedure is considered acceptable for real-time vision guidance applications in terms of its accuracy. | K-means algorithm in the procedure limits the processing speed, which is acceptable for real-time applications if the controller output rate is faster and is independent of the image update rate. The performance of the image processing procedure degrades significantly under adverse environmental conditions such as weeds. The fusion of vision sensor with other navigation sensors, such as GPS, is needed in order to provide a more robust guidance directrix. | K-means clustering algorithm, a moment algorithm, and a cost function |
| [92] | A considerable improvement of the speed of image processing. The algorithm is able to find the row at various growth stages. | Inaccuracies exist because of a limited number and size of crop plants, overexposure of the camera, and the presence of green algae due to the use of a greenhouse. Inaccuracies accounted for by footprints indicate that linear structures in the soil surface might create problems. High image acquisition speed results in images that overlap each other for a large part; thus, the total number of images is too large. | Grey-scale Hough transform |
| [93] | In pre-processing, the binarized image was divided into several row segments, which created fewer data points while still preserving the information of the crop rows. Less complex data helped the Hough transform meet the real-time requirements. | Rows and inter-row spaces could not be segmented clearly. As the wheat grew, the rows overlapped. Narrow inter-row spaces of the field made it difficult to discriminate all of the rows in view. A great number of weeds between the crop rows disturbed the row detection algorithm. | Vertical projection method and Hough transform |
| [94] | The system is not restricted to a specific crop and has been tested on several green crops. After examination in a laboratory and in-field, results show that the method can attain navigation parameters in real time and guide the mobile robot effectively. | Camera calibration gives good results if the robot moves in a smooth field. When the field is uneven, the calibration result is unstable. Rows and inter-row spaces must be segmented clearly so that the quasi navigation baseline can be detected easily. If the field has narrow inter-row spaces, rows overlap, which makes them difficult to separate from the background. | Grey-level transformation, Otsu binarization, and Hough transform |
| [95] | Achieves good classification accuracy. The desired path is properly calculated in different lighting conditions. | Classification based on NN requires training, validation and testing of a number of samples, which is time-consuming. NN classification is a supervised technique. | A multilayer feedforward neural network and Hough transform |
| [96] | The proposed technique is unsupervised. It can be used as a complementary system to the other navigational systems such as LiDAR to provide a robust and reliable navigation system for mobile robots in orchards. | For orchards that are not well structured, a filtering process is required to extract the path. Not tested in different lighting conditions. | Mean-shift algorithm, graph partitioning theory, and Hough transform |
| [97] | The method limits required a priori information regarding the field. Paths are generated in real-time. The architecture of the software is transparent, and the software is easily extendible. | Very slow. Further improvements in the accuracy of path following of a straight path are not to be expected due to technical reasons. | Grey-scale Hough transform |
| [98] | A local small window of interest is determined as the ladder structure to improve the real-time detection algorithm, and to minimize the effect of the useless information and noises on detection and identification. | Error of the camera position would result in errors of the provided data. Therefore, the camera requires efficient calibration. The plants in the field were selected artificially with similar size and height and planted in a perfect row. Easily affected in real field conditions, e.g., uneven field, human measurement error, wheel sideslip due to soft/moist soil etc. | Edge detection, image binarization, and least square methods |
| [99] | The robot has demonstrated capability to use artificial vision for navigation without artificial visual markers. The vision software has enough intelligence to perceive obstacles. | When the vehicle is under the canopy, it relies on the cameras to find the way, but when it is not under the kiwifruit canopy the system relies on GPS and a compass to navigate. Because the camera lenses are short, provision must be made to handle fisheye in the stereopsis. | Hough transform |
| [84] | Inconsistent lighting, shadows, and color similarities in features are eliminated by using a sky-based approach where the image was reduced to canopy plus sky, thus simplifying the segmentation process. This produces a more sensitive control system. The cropped image contains less data needing to be processed, resulting in faster processing time and more rapid response of the ground vehicle platform. | There are large deviations from the center of the row when there are sections with a break in the canopy due to either a missing tree or a tree with limited leaf growth. The proposed approach is effective only when the trees have fully developed canopies. Inadequate for canopies year-round such as citrus or fruit trees that lose their leaves in the winter. It only tackles the straight-line motion down the row but not the end of the row conditions. | Thresholding approach, filtering, and determination of centroid of the path |
| [100] | The proposed model does not extract features from the image and thus does not suffer from errors related to feature extraction process. Efficient for different row patterns, varying plant sizes and diverse lighting conditions. The robot navigates through the corridor without touching the plant stems, detects the end of the rows and traverses the headland. | Lack of robustness to uncertainties of the environment. The estimation of the state vector is less accurate during headland compared to row following. When the robot is in the headland, only a small part of the rows is visible, making the estimation of the orientation of the robot heading less precise. Incorrect estimates of row width and row distance result in inaccuracies. | Particle filter algorithm |
| [101] | Alternative navigation method where the tree canopy frequently blocks the satellite signals to the GPS receiver. The performance is better at lower speeds of the vehicle. | No obstacle detection capability. Low performance at high speed of the vehicle. | Segmentation algorithm |
| [102] | Successfully tested on both straight and curved paths; navigation is based only on stereoscopic vision. | Reduced accuracy of lateral offset estimation when the vehicle navigates on curved paths. The algorithm is developed based on the assumption of no camera rotations while travelling in the field. Not implemented in real-time. | Stereo image processing algorithm |
| [103] | Acceptable accuracy and stability, tested without damaging the crop. | Uneven cornfield caused by residual roots and hard soil clods results in a poorer performance. | Segmentation and Max–Min fuzzy inference algorithm |
| [104] | High speed and remarkable precision. The navigation parameters in the algorithm can control the robot movement well. The algorithm displays better overall robustness and global optimization for detecting the navigation path compared to Hough transform, especially in curved paths. | When using a straight line to detect navigation directrix, the algorithm does not perform well. | Image segmentation and least-squares curve fitting method |
| [105] | Resolves the problem of illumination interference for image segmentation, adapts to changes in natural light and has good dynamic performance at all speeds. | No obstacle avoidance. | Threshold algorithm, linear scanning, and a fuzzy controller |
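Most of the guidance approaches listed above use the Hough transform to extract a crop-row line from a segmented image. The idea can be sketched as follows, under stated assumptions: the input is already a binary vegetation mask, a single dominant row is sought, and the function name, the accumulator resolution (1 pixel, 1 degree) and the synthetic test image are illustrative choices, not the implementation of any cited system. Each foreground pixel votes for every line rho = x·cos(theta) + y·sin(theta) that passes through it, and the most-voted (rho, theta) cell is taken as the row.

```python
import numpy as np


def hough_strongest_line(mask: np.ndarray, n_theta: int = 180):
    """Return (rho, theta_degrees) of the most-voted line in a binary mask."""
    ys, xs = np.nonzero(mask)                      # foreground pixel coordinates
    thetas = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    diag = int(np.ceil(np.hypot(*mask.shape)))     # bound on |rho|

    # rho for every (pixel, angle) pair, rounded to the nearest pixel.
    rhos = np.round(
        xs[:, None] * np.cos(thetas) + ys[:, None] * np.sin(thetas)
    ).astype(int)

    # Accumulator rows are rho shifted by +diag so that negative rho fits.
    accumulator = np.zeros((2 * diag + 1, n_theta), dtype=int)
    theta_idx = np.broadcast_to(np.arange(n_theta), rhos.shape)
    np.add.at(accumulator, (rhos + diag, theta_idx), 1)

    rho_i, theta_i = np.unravel_index(np.argmax(accumulator), accumulator.shape)
    return int(rho_i - diag), float(np.rad2deg(thetas[theta_i]))


# Synthetic mask: one vertical "crop row" at x = 40 plus a few stray "weed" pixels.
mask = np.zeros((100, 100), dtype=bool)
mask[:, 40] = True
mask[10, 5] = mask[55, 80] = mask[90, 20] = True

rho, theta_deg = hough_strongest_line(mask)
```

Because every pixel in a row votes for the same accumulator cell, isolated weeds or missing plants change the peak only slightly, which is consistent with the tolerance reported for [90]. The cost grows with the number of foreground pixels times the number of angles, which is one reason several of the listed works reduce the data before applying the transform.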

| Ref. | Description | Application (Year) | Photo |
|---|---|---|---|
| [90] | Visual sensing for an autonomous crop protection robot. Image analysis to derive guidance information and to differentiate between plants, weed and soil. | Tested in cauliflowers, sugar beet and widely spaced double rows of wheat (1996) | Image from [90] |
| [106] | A commercial agricultural manipulator for fruit picking and handling without human intervention. Machine vision takes place with segmentation of a frame during the picking phase. | Semi-autonomous orange picking robot (2005) | Image from [106] |
| [107] | Use of a vision system that retrieves a map of the plantation rows, within which the robot must navigate. After obtaining the map of the rows, the robot traces the route to follow (the center line between the rows) and follows it using a navigation system. | Tested in a simulated plantation and in a real one at the “Field robot Event 2006” competition (2006) | Image from [107] |
| [108] | Precise application of herbicides in a seed line. A machine vision system recognizes objects to be sprayed and a micro-dosing system targets very small doses of liquid at the detected objects, while the autonomous vehicle takes care of the navigation. | Experiments carried out under controlled indoor conditions (2007) | Image from [108] |
| [99] | The vision system identifies fruit hanging from the canopy, discriminating size and defects. Fruits are placed optimally in a bin and when it is full, the vehicle puts the bin down, takes an empty bin, picks it up and resumes from its last position. | Autonomous kiwifruit-picking robot (2009) | Image from [99] |
| [98] | The offset and heading angle of the robot platform are detected in real time to guide the platform on the basis of recognition of a crop row using machine vision. | Robot platform that navigates independently. Tested in a small vegetable field (2010) | Image from [98] |
| [97] | Development of an RTK-DGPS-based autonomous field navigation system including automated headland turns and of a method for crop row mapping combining machine vision and RTK-DGPS. | Autonomous weed control in a sugar beet field (2011) | Image from [97] |
| [109] | An algorithm of generating navigation paths for harvesting robots based on machine vision and GPS navigation. | Tested in an orchard for apple picking (2011) | Image from [109] |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mavridou, E.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Machine Vision Systems in Precision Agriculture for Crop Farming. J. Imaging 2019, 5, 89. https://doi.org/10.3390/jimaging5120089
