1. Introduction

TV-based optical flow can be cast into the following form:

$$\min_{w} \; D(w) + \alpha \, \|\nabla w\|_{1},$$

where $w$ is the optical flow, $D$ is a data energy function, $\|\cdot\|_1$ is the $\ell_1$ norm, and $\alpha > 0$ is a regularization parameter weighting the relative importance of the data and smoothing terms. Although the TV semi-norm has been useful for performing edge-preserving regularization [1,2,3,4,5,6,7,8,9], it is known to be numerically difficult to handle. Despite its convexity, it is neither linear nor quadratic, nor even everywhere differentiable. This non-smoothness prevents a straightforward application of gradient-based optimization methods.

There are two remedies for the non-differentiability of the $\ell_1$ norm function $x \mapsto \|x\|_1 = \sum_{i=1}^{n} |x_i|$. First, we can split $x = y - z$, where $y_i = \max(x_i, 0)$ and $z_i = \max(-x_i, 0)$ for $i = 1, \dots, n$. The $\ell_1$ norm of $x$ is then equal to $\sum_{i=1}^{n} (y_i + z_i)$. This removes the non-differentiability at zero. Unfortunately, the problem dimension is doubled and the optimization becomes constrained, since $y$ and $z$ must be nonnegative. The second remedy, which we investigate in this paper, is to replace the norm by a smooth approximation.
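The splitting remedy can be illustrated with a short NumPy sketch; the helper name `split_positive_negative` is hypothetical. For a given vector $x$, the positive and negative parts reproduce $x = y - z$, and the $\ell_1$ norm becomes the linear expression $\sum_i (y_i + z_i)$. In an actual optimization, $y$ and $z$ would be extra unknowns subject to $y, z \ge 0$, which is where the doubled dimension and the constraints come from.

```python
import numpy as np

def split_positive_negative(x):
    """Return nonnegative y, z with x = y - z (hypothetical helper)."""
    y = np.maximum(x, 0.0)   # positive part of each entry
    z = np.maximum(-x, 0.0)  # negative part of each entry
    return y, z

x = np.array([-1.5, 0.0, 2.0, -0.25])
y, z = split_positive_negative(x)
assert np.allclose(y - z, x)  # the split reproduces x
# The l1 norm is now linear in (y, z), at the price of 2n unknowns and y, z >= 0.
print(np.sum(y + z), np.linalg.norm(x, 1))  # both equal 3.75
```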

Any smooth TV regularization should address two issues: (1) it should remove the singularities caused by the use of TV regularization; (2) it should preserve motion boundaries. Several smooth approximations of the $\ell_1$ norm have been established in the literature for the regularization of a wide variety of problems [10,11,12,13,14,15,16,17], as well as for the optical flow problem [2,3,4,5].

To our knowledge, there is no theory that establishes the optimality of any of these approximations; the best choice is application dependent. For instance, Nikolova and Ng [17] have considered different smooth TV approximations in the context of the restoration and reconstruction of images and signals using half-quadratic minimization. Our objective in this paper is to investigate three known smooth TV approximations, namely the Charbonnier, Huber and Green functions, for optical flow computation.

The outline of this paper is as follows. Section 2 describes the variational formulation of the optical flow problem; in particular, we present the TV regularization model for dense optical flow estimation. In Section 3, we consider three smooth approximations of the TV regularization term and discuss their maximum theoretical approximation errors. Section 4 evaluates the performance of the three approximations on the Middlebury datasets, in terms of both the quality of the estimated optical flow and the speed of convergence. Finally, we conclude our work in Section 5.

2. Variational Formulation

Let us consider a sequence of gray level images $I(x, t)$, $t \in [0, T]$, $x \in \Omega$, where $[0, T]$ is the temporal domain and $\Omega \subset \mathbb{R}^2$ denotes the image spatial domain. We will use both continuous and discrete representations. The images are discretized in time at frame numbers $t = 0, 1, \dots, T$ and in space at pixel coordinates $p = (m, n)$, where $m$ (respectively $n$) corresponds to the discrete column (respectively row) of the image, with the coordinate origin in the top-left corner of the image. With this notation, $I(p, t)$ denotes a discrete representation of $I(x, t)$. We will also use both continuous and quantized image intensities, and the same symbol $I$ will be used for both of them.

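Assuming that the gray level of a point does not change over time, we may write the constraint

$$I(x(t), t) = I(x(0), 0), \tag{1}$$

where $t \mapsto x(t)$ is the apparent trajectory of the point $x(0)$. Taking the derivative with respect to $t$ and denoting $w = \frac{dx}{dt}$, we obtain the linear optical flow constraint

$$I_t + w \cdot \nabla I = 0. \tag{2}$$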

The vector field $w = (u, v)$ is called optical flow. Here $I_t$ and $\nabla I$ denote the temporal and spatial partial derivatives of $I$, which are computed using high-pass gradient filters for discrete images. Clearly, the single constraint (2) is not sufficient to uniquely compute the two components $u$ and $v$ of the optical flow (this is called the aperture problem). It only gives the component of the flow along the image gradient, i.e., normal to the level lines of the image. As usual, some prior knowledge about the flow must be added in order to recover a unique flow field. We therefore assume that the optical flow varies smoothly in space or, better, that it is piecewise smooth in $\Omega$. This can be achieved by including a smoothness term of the form

$$R(w) = \int_{\Omega} \phi(\nabla w) \, dx, \tag{3}$$

where $\phi$ is a suitable function.

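Both the data attachment term (1) and the regularization term (3) can be combined into a single energy functional

$$E(w) = D(w) + \alpha R(w), \tag{4}$$

where the data functional $D$ is either equal to the linear term

$$D(w) = \int_{\Omega} \big(I_t + w \cdot \nabla I\big)^2 \, dx \tag{5}$$

or the nonlinear term

$$D(w) = \int_{\Omega} \big(I(x + w(x), t + 1) - I(x, t)\big)^2 \, dx, \tag{6}$$

and $\alpha > 0$ is a regularization parameter.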
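A minimal NumPy sketch of a discrete version of the linear data term (5) is given below, to make constraint (2) concrete. The following choices are assumptions, not taken from the paper:

- `np.gradient` (central differences) stands in for the high-pass gradient filters.
- $I_t$ is the forward frame difference.
- The integral becomes a sum over pixels.

The function name `linear_data_term` is hypothetical.

```python
import numpy as np

def linear_data_term(I0, I1, u, v):
    """Discrete sketch of the linear data term (5) for two frames I0, I1.

    Assumptions: np.gradient replaces the high-pass gradient filters,
    I_t is the frame difference I1 - I0, and the integral is a pixel sum.
    """
    Iy, Ix = np.gradient(I0)          # derivatives along rows (y) and columns (x)
    It = I1 - I0                      # temporal derivative, unit frame spacing
    residual = It + u * Ix + v * Iy   # left-hand side of constraint (2)
    return np.sum(residual ** 2), residual

# Linear test image I(x, y) = x + 2y (x = column, y = row), translated by (u, v).
rows, cols = np.mgrid[0:8, 0:10].astype(float)
I0 = cols + 2.0 * rows
u, v = 1.0, 0.5
I1 = (cols - u) + 2.0 * (rows - v)    # I1(x) = I0(x - w): the pattern moves by w
energy, residual = linear_data_term(I0, I1, np.full_like(I0, u), np.full_like(I0, v))
print(energy)  # the constraint holds exactly for this linear example
```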

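When the linear data term (5) is used, the case $\phi(\nabla w) = |\nabla u|^2 + |\nabla v|^2$ corresponds to the Horn-Schunck model [18]. The case $\phi(\nabla w) = \nabla u^{\top} P(\nabla I)\, \nabla u + \nabla v^{\top} P(\nabla I)\, \nabla v$, where $P(\nabla I)$ is a regularized projection matrix onto the direction orthogonal to $\nabla I$, corresponds to the Nagel-Enkelmann model [19]. TV regularization [1], on the other hand, has become widely used in image processing because it preserves discontinuities. In this case, for $w \in L^1(\Omega; \mathbb{R}^2)$, we have

$$R(w) = TV(w) = \sup\Big\{ \int_{\Omega} w \cdot \operatorname{div}\psi \, dx \;:\; \psi \in C_c^{\infty}(\Omega; \mathbb{R}^{2\times 2}),\ \|\psi\|_{\infty} \le 1 \Big\},$$

where $C_c^{\infty}(\Omega; \mathbb{R}^{2\times 2})$ is the space of infinitely differentiable $\mathbb{R}^{2\times 2}$-valued functions with compact support. Note that when $w \in BV(\Omega; \mathbb{R}^2)$, the distributional derivative $Dw$ of $w$ is a vector-valued Radon measure with total variation $TV(w) = |Dw|(\Omega)$. When $w \in W^{1,1}(\Omega; \mathbb{R}^2)$, the TV semi-norm reduces to the $L^1$-norm of the gradient,

$$TV(w) = \int_{\Omega} |\nabla w| \, dx,$$

so that $\phi(\nabla w) = |\nabla w|$. Here we can take either $|\nabla w| = \sqrt{|\nabla u|^2 + |\nabla v|^2}$ or $|\nabla w| = |\nabla u| + |\nabla v|$. We have chosen the first one because the Euclidean norm is rotationally invariant.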

For a full account of regularization techniques for the optical flow problem and the associated taxonomy, we refer to [20,21].

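In this paper, we will consider a TV regularization model which is written in discrete form as follows:

$$R(w) = \sum_{p} \sum_{q \in \mathcal{N}_p} |w_q - w_p|, \tag{7}$$

where $w_p = w(p)$ and $\mathcal{N}_p$ is a set of neighbors of the pixel $p$. We will also combine the TV regularization with the nonlinear data term (6), used with a robust function $\rho$ in order to reduce the influence of outliers:

$$D(w) = \sum_{p} \rho\big(I(p + w_p, t + 1) - I(p, t)\big). \tag{8}$$

The robust function $\rho$ used in this paper depends on a given threshold $\tau$.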
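The discrete TV term (7) can be evaluated in a few lines. This reads (7) as a sum of Euclidean norms of flow differences over neighbouring pixel pairs. Two further choices are hypothetical and made only for illustration: the neighbourhood $\mathcal{N}_p$ is taken as the right and lower neighbours of $p$ (so $K = 2$), and the function name is `discrete_tv`.

```python
import numpy as np

def discrete_tv(w):
    """R(w) = sum_p sum_{q in N_p} |w_q - w_p| for a flow w of shape (H, W, 2).

    Hypothetical stencil: N_p = {right neighbour, lower neighbour}; pixels on
    the last column/row have fewer neighbours. |.| is the Euclidean norm of
    the 2-vector w_q - w_p.
    """
    dx = w[:, 1:, :] - w[:, :-1, :]   # differences with right neighbours
    dy = w[1:, :, :] - w[:-1, :, :]   # differences with lower neighbours
    return np.linalg.norm(dx, axis=-1).sum() + np.linalg.norm(dy, axis=-1).sum()

# Two motion regions separated by a vertical edge: w = (0, 0) on the left,
# w = (3, 4) on the right, so each of the 4 rows crosses one jump of size 5.
w = np.zeros((4, 6, 2))
w[:, 3:, :] = (3.0, 4.0)
print(discrete_tv(w))  # 20.0
```

The jump contributes its full size regardless of how sharp it is, which is the edge-preserving behaviour that the smooth approximations below try to keep.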

3. Smooth TV Regularization

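In this section, we focus on approximating the non-smooth TV semi-norm (7) by a smooth function:

$$R_\varepsilon(w) = \sum_{p} \sum_{q \in \mathcal{N}_p} \varphi_\varepsilon\big(|w_q - w_p|\big), \tag{9}$$

where $\varphi_\varepsilon$ is a smooth approximation of the absolute value function and $\varepsilon > 0$ is a small parameter adjusting the accuracy of this approximation. In this paper, we consider various choices of $\varphi_\varepsilon$, as illustrated in Table 1.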

Notice that the regularization term in (9), with a function $\varphi_\varepsilon$ from Table 1, is a hybrid between the TV regularization (7) and the standard quadratic regularization [18]. It is quadratic or nearly quadratic for small values of the optical flow gradient and becomes linear or sublinear for large values. This smooth regularization therefore retains the fast Laplacian diffusion inside homogeneous motion regions. Near motion boundaries its effect is substantially reduced, which helps preserve these boundaries. We should also note that the smaller the parameter $\varepsilon$ is, the better the function $\varphi_\varepsilon$ approximates the absolute value function, and hence the better the smooth regularization (9) approximates the TV regularization (7). In practice, a very small parameter $\varepsilon$ might cause numerical instabilities. Such a choice is not really needed, however, since the quadratic regularization is preferred inside homogeneous regions.
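The quadratic-to-linear transition can be checked through the diffusivity $\varphi_\varepsilon'(s)/s$. This is the weight that multiplies the gradient in the Euler-Lagrange equations of (9), so a constant diffusivity means Laplacian-like smoothing. The NumPy sketch below uses the forms assumed for (11)-(13):

- Charbonnier: $\sqrt{s^2+\varepsilon^2}$.
- Huber: $s^2/(2\varepsilon)$ for $|s| \le \varepsilon$, and $|s| - \varepsilon/2$ otherwise.
- Green: $\varepsilon\log(2\cosh(s/\varepsilon))$.

The function names are illustrative.

```python
import numpy as np

# Smooth approximations phi_eps of |s| (forms as assumed above).
def charbonnier(s, eps):
    return np.sqrt(s**2 + eps**2)

def huber(s, eps):
    a = np.abs(s)
    return np.where(a <= eps, s**2 / (2 * eps), a - eps / 2)

def green(s, eps):
    return eps * np.logaddexp(s / eps, -s / eps)  # eps*log(2*cosh(s/eps))

# Diffusivities phi_eps'(s)/s: about 1/eps near s = 0 (quadratic regime) and
# about 1/|s| for |s| >> eps (linear, TV-like regime).
def charbonnier_diffusivity(s, eps):
    return 1.0 / np.sqrt(s**2 + eps**2)

def huber_diffusivity(s, eps):
    return 1.0 / np.maximum(np.abs(s), eps)

def green_diffusivity(s, eps):
    # phi'(s) = tanh(s/eps); use the limit tanh(x)/x -> 1 at x = 0.
    x = np.asarray(s, dtype=float) / eps
    safe_x = np.where(x == 0, 1.0, x)
    return np.where(x == 0, 1.0, np.tanh(safe_x) / safe_x) / eps

eps = 0.1
for s in (1e-3, 10.0):
    print(s, charbonnier_diffusivity(s, eps), huber_diffusivity(s, eps),
          green_diffusivity(s, eps))
```

For $s = 10^{-3}$, all three diffusivities are close to $1/\varepsilon = 10$. For $s = 10$, all three are close to $1/s = 0.1$.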

According to the discussion above, any approximation $\varphi_\varepsilon$ should satisfy some minimal conditions (see [10]):

By simple calculus, it can be shown that the approximations considered in this paper, which are given in Table 1, satisfy the conditions in (10). Notice also that all these approximations are suitable for first-order convex optimization algorithms, since they are all convex and differentiable. Second-order numerical optimization algorithms, however, require twice differentiable functions. The Charbonnier and Green functions are twice differentiable, but the Huber function is not. In this case, the latter approximation is normally replaced by a twice differentiable function called the pseudo-Huber approximation [12], which is the same as the Charbonnier function except for a vertical translation by $\varepsilon$.
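The relation between the pseudo-Huber and Charbonnier functions can be checked numerically, as can the missing second derivative of the Huber function. This NumPy sketch assumes the pseudo-Huber normalisation $\varepsilon\big(\sqrt{1 + (s/\varepsilon)^2} - 1\big)$. Other scalings are in use, and with this one the vertical translation is exactly $\varepsilon$. The helper `huber_second_derivative` is illustrative.

```python
import numpy as np

eps = 0.5
s = np.linspace(-3, 3, 601)

charbonnier = np.sqrt(s**2 + eps**2)
# Pseudo-Huber, in the (assumed) normalisation eps*(sqrt(1 + (s/eps)^2) - 1).
pseudo_huber = eps * (np.sqrt(1 + (s / eps) ** 2) - 1)
assert np.allclose(charbonnier - pseudo_huber, eps)  # vertical shift by eps

# Second derivative of the Huber function: 1/eps inside (-eps, eps), 0 outside.
# It jumps at |s| = eps, so the Huber function is not twice differentiable there.
def huber_second_derivative(s, eps):
    return np.where(np.abs(s) < eps, 1.0 / eps, 0.0)

print(huber_second_derivative(eps - 1e-9, eps), huber_second_derivative(eps + 1e-9, eps))
```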

3.1. Charbonnier Approximation

The first approximation

$$\varphi_\varepsilon(s) = \sqrt{s^2 + \varepsilon^2} \tag{11}$$

is referred to as the Charbonnier penalty function [11]. It was first used for optical flow in [5]. This function is clearly strictly convex and infinitely differentiable. Moreover, we can easily prove that it approximates the absolute value function with an error of at most $\varepsilon$.

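Lemma 1. For all $s \in \mathbb{R}$, $0 < \varphi_\varepsilon(s) - |s| \le \varepsilon$, where $\varphi_\varepsilon$ is the Charbonnier function (11).

Proof. Since $\varphi_\varepsilon(s) - |s| = \dfrac{\varepsilon^2}{\sqrt{s^2 + \varepsilon^2} + |s|}$, the difference is positive, decreases as $|s|$ grows, and equals $\varepsilon$ at $s = 0$. ☐

The Charbonnier TV regularization is therefore an approximation of the TV regularization of order $O(\varepsilon)$. Let $N$ be the total number of image pixels, and let $K$ be the fixed size of each neighborhood used for the finite difference approximation of the optical flow gradient.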

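Proposition 1. For any flow field $w$, $0 < R_\varepsilon(w) - R(w) \le K N \varepsilon$, where $\varphi_\varepsilon$ in $R_\varepsilon$ is the Charbonnier function (11).

Proof. Using the previous lemma, we get

$$0 < R_\varepsilon(w) - R(w) = \sum_{p} \sum_{q \in \mathcal{N}_p} \Big(\varphi_\varepsilon\big(|w_q - w_p|\big) - |w_q - w_p|\Big) \le \sum_{p} \sum_{q \in \mathcal{N}_p} \varepsilon = K N \varepsilon.$$

☐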
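Lemma 1 and Proposition 1 can be sanity-checked numerically, although a grid check is not a proof. The sketch below samples the Charbonnier error on a grid and evaluates $R_\varepsilon(w) - R(w)$ for a random flow field. It assumes the pairwise form of (7) and (9) with a hypothetical right/lower neighbourhood ($K = 2$).

```python
import numpy as np

def charbonnier(s, eps):
    return np.sqrt(s**2 + eps**2)

eps = 1e-2
s = np.linspace(-5, 5, 10001)
err = charbonnier(s, eps) - np.abs(s)     # Lemma 1: 0 < err <= eps
print(err.max())                          # largest near s = 0

# Proposition 1 on a random flow, with the hypothetical right/lower stencil.
rng = np.random.default_rng(0)
H, W = 16, 20
w = rng.normal(size=(H, W, 2))
diffs = [w[:, 1:] - w[:, :-1], w[1:] - w[:-1]]
mags = np.concatenate([np.linalg.norm(d, axis=-1).ravel() for d in diffs])
gap = charbonnier(mags, eps).sum() - mags.sum()   # R_eps(w) - R(w)
K, N = 2, H * W
print(gap, K * N * eps)                   # the gap stays below the bound K*N*eps
```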

3.2. Huber Approximation

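The Huber function

$$\varphi_\varepsilon(s) = \begin{cases} \dfrac{s^2}{2\varepsilon}, & |s| \le \varepsilon, \\[4pt] |s| - \dfrac{\varepsilon}{2}, & |s| > \varepsilon, \end{cases} \tag{12}$$

was initially used by Huber (see [13]) as an M-estimator in the field of robust statistics. Its use for optical flow computation was first discussed in [2]. Later, it was used as a smooth approximation of the $\ell_1$ norm, as in [16]. The Huber function is clearly convex and continuously differentiable.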

We want to relate the Huber regularization in (9) to the TV and quadratic regularizations. First, the following lemma shows that the Huber function approximates the absolute value function with an error of order $O(\varepsilon)$.

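Lemma 2. For all $s \in \mathbb{R}$, $0 \le |s| - \varphi_\varepsilon(s) \le \frac{\varepsilon}{2}$, where $\varphi_\varepsilon$ is the Huber function (12).

Proof. Let $g(s) = |s| - \varphi_\varepsilon(s)$. Suppose first that $|s| \le \varepsilon$. Hence

$$g(s) = |s| - \frac{s^2}{2\varepsilon} = \frac{\varepsilon}{2} - \frac{(\varepsilon - |s|)^2}{2\varepsilon} \in \Big[0, \frac{\varepsilon}{2}\Big].$$

Now, if $|s| > \varepsilon$, then $g(s) = |s| - \big(|s| - \frac{\varepsilon}{2}\big) = \frac{\varepsilon}{2}$. ☐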

This shows that the maximum theoretical error of the Huber TV regularization is half that of the Charbonnier TV regularization.
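The factor of two between the two maximum errors can be illustrated by a grid check of Lemma 2 next to the Charbonnier error. This is not a proof. The Huber form is the one in (12), and the Charbonnier form is $\sqrt{s^2+\varepsilon^2}$.

```python
import numpy as np

def huber(s, eps):
    """Huber function (12): s^2/(2 eps) for |s| <= eps, |s| - eps/2 otherwise."""
    a = np.abs(s)
    return np.where(a <= eps, s**2 / (2 * eps), a - eps / 2)

eps = 1e-2
s = np.linspace(-5, 5, 100001)
huber_gap = np.abs(s) - huber(s, eps)                 # Lemma 2: in [0, eps/2]
charbonnier_gap = np.sqrt(s**2 + eps**2) - np.abs(s)  # Lemma 1: in (0, eps]
print(huber_gap.max(), charbonnier_gap.max())         # about eps/2 and eps
```

Note that the two errors have opposite signs. The Huber function lies below $|s|$, while the Charbonnier function lies above it.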

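Proposition 2. For any flow field $w$, $0 \le R(w) - R_\varepsilon(w) \le \frac{K N \varepsilon}{2}$, where $\varphi_\varepsilon$ in $R_\varepsilon$ is the Huber function (12).

3.3. Green Approximation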

The Green penalty function

$$\varphi_\varepsilon(s) = \varepsilon \log\Big(2\cosh\Big(\frac{s}{\varepsilon}\Big)\Big) \tag{13}$$

was originally used in [14] for maximum likelihood reconstruction from emission tomography data, as a convex extension of the Geman and McClure function [15]. This penalty function was introduced for optical flow computation in [3,4]. Again, this function is strictly convex and infinitely differentiable, inheriting these properties from the log cosh function. Notice that we have translated the original Green function $\varepsilon \log\cosh(s/\varepsilon)$ by $\varepsilon \log 2$. This is the maximum approximation error, as shown by the following lemma.

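Lemma 3. For all $s \in \mathbb{R}$, $0 < \varphi_\varepsilon(s) - |s| \le \varepsilon \log 2$, where $\varphi_\varepsilon$ is the Green function (13).

Proof. Let $g(s) = \varphi_\varepsilon(s) - |s|$. First, we have

$$\varphi_\varepsilon(s) = \varepsilon \log\big(e^{s/\varepsilon} + e^{-s/\varepsilon}\big).$$

Hence, for $s \ge 0$, we have

$$g(s) = \varepsilon \log\Big(e^{s/\varepsilon}\big(1 + e^{-2s/\varepsilon}\big)\Big) - s = \varepsilon \log\big(1 + e^{-2s/\varepsilon}\big).$$

Now, if $s < 0$, then

$$g(s) = \varepsilon \log\Big(e^{-s/\varepsilon}\big(1 + e^{2s/\varepsilon}\big)\Big) + s = \varepsilon \log\big(1 + e^{2s/\varepsilon}\big).$$

Therefore, whatever the sign of $s$, we get

$$g(s) = \varepsilon \log\big(1 + e^{-2|s|/\varepsilon}\big) \in (0, \varepsilon \log 2].$$

☐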

The Green TV regularization also approximates the TV regularization with order $O(\varepsilon)$, but its maximum error is slightly greater than that of the Huber TV regularization.
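Lemma 3 can also be sanity-checked on a grid. This sketch assumes the Green form $\varepsilon\log(2\cosh(s/\varepsilon))$ and evaluates it through the identity $2\cosh x = e^{x} + e^{-x}$ with `np.logaddexp`, which avoids overflow for large $s/\varepsilon$. The rounding tolerances in the checks account for floating-point error only.

```python
import numpy as np

def green(s, eps):
    """Green function (13), eps*log(2*cosh(s/eps)), computed without overflow."""
    return eps * np.logaddexp(s / eps, -s / eps)

eps = 1e-2
s = np.linspace(-5, 5, 100001)
gap = green(s, eps) - np.abs(s)   # Lemma 3: in (0, eps*log 2], up to rounding
bound = eps * np.log(2.0)
print(gap.max(), bound)           # the maximum is reached at s = 0
```

The bound $\varepsilon\log 2 \approx 0.69\,\varepsilon$ sits between the Huber bound $\varepsilon/2$ and the Charbonnier bound $\varepsilon$.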

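Proposition 3. For any flow field $w$, $0 < R_\varepsilon(w) - R(w) \le K N \varepsilon \log 2$, where $\varphi_\varepsilon$ in $R_\varepsilon$ is the Green function (13).

4. Experimental Results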

We want to minimize the energy functional (4), where $D$ is given by (8), $R$ is given by (9) and $\varphi_\varepsilon$ is one of the smooth approximations presented in the previous section. We adopt the discretize-optimize approach, applying a numerical optimization algorithm to this discrete version of the optical flow minimization problem. The problem is large-scale, so we solve it using the multiresolution line search truncated Newton method developed in [22,23]. The method first builds a pyramid of images at different levels of resolution. It then starts at the coarsest level with a zero flow field and applies a number of iterations of the line search truncated Newton (LSTN) algorithm. Afterwards, the coarse estimate is transferred to the next finer level by bilinear interpolation. This process is repeated until the finest level is reached. At that point a good initial estimate of the optical flow is available, and the LSTN algorithm refines it until convergence.
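The coarse-to-fine control flow described above can be sketched in NumPy. This is not the authors' implementation, and every detail below is an assumption:

- The pyramid uses 2×2 block averaging.
- Bilinear interpolation maps corner pixels to corner pixels.
- Displacements are rescaled by the resolution ratio when moving to a finer level.
- The `refine` callback is a hypothetical stand-in for the LSTN iterations, which are not reproduced here.

```python
import numpy as np

def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (odd borders are cropped)."""
    H, W = (img.shape[0] // 2) * 2, (img.shape[1] // 2) * 2
    img = img[:H, :W]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])

def upsample_bilinear(a, shape):
    """Bilinearly interpolate a 2-D array onto a grid of the given shape."""
    H, W = a.shape
    r = np.linspace(0, H - 1, shape[0])
    c = np.linspace(0, W - 1, shape[1])
    tmp = np.array([np.interp(c, np.arange(W), row) for row in a])        # along x
    return np.array([np.interp(r, np.arange(H), col) for col in tmp.T]).T  # along y

def coarse_to_fine(I0, I1, levels, refine):
    """Multiresolution driver; refine(J0, J1, u, v) is a hypothetical stand-in
    for a few LSTN iterations at one level and returns the updated (u, v)."""
    pyramid = [(I0, I1)]
    for _ in range(levels - 1):
        J0, J1 = pyramid[-1]
        pyramid.append((downsample(J0), downsample(J1)))
    u = np.zeros_like(pyramid[-1][0])    # zero flow at the coarsest level
    v = np.zeros_like(u)
    for J0, J1 in reversed(pyramid):
        if u.shape != J0.shape:          # transfer to the next finer level
            sy, sx = J0.shape[0] / u.shape[0], J0.shape[1] / u.shape[1]
            u = sx * upsample_bilinear(u, J0.shape)  # displacements scale with resolution
            v = sy * upsample_bilinear(v, J0.shape)
        u, v = refine(J0, J1, u, v)
    return u, v

# Dummy refinement that adds one pixel of horizontal motion per level.
I = np.random.default_rng(0).random((64, 64))
u, v = coarse_to_fine(I, I, levels=3, refine=lambda J0, J1, u, v: (u + 1.0, v))
print(u.shape, u.min(), u.max())   # constant field: ((1 * 2) + 1) * 2 + 1 = 7
```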

The parameter $\tau$ appears in the data term and is shared by the three functionals. It was fixed to a value between ten and twenty depending on the nature of the image sequence. To ensure a fair comparison, however, the parameters $\alpha$ and $\varepsilon$ of the regularization term, which differs from one functional to another, are tuned separately for each functional to obtain the best results. The experiments showed that the functionals with the Charbonnier and Huber approximations generally share the same set of optimal parameters. As expected, the set of optimal parameters for the Green function is different, especially the value of $\varepsilon$, since this function has a different transition level between its quadratic and linear parts.

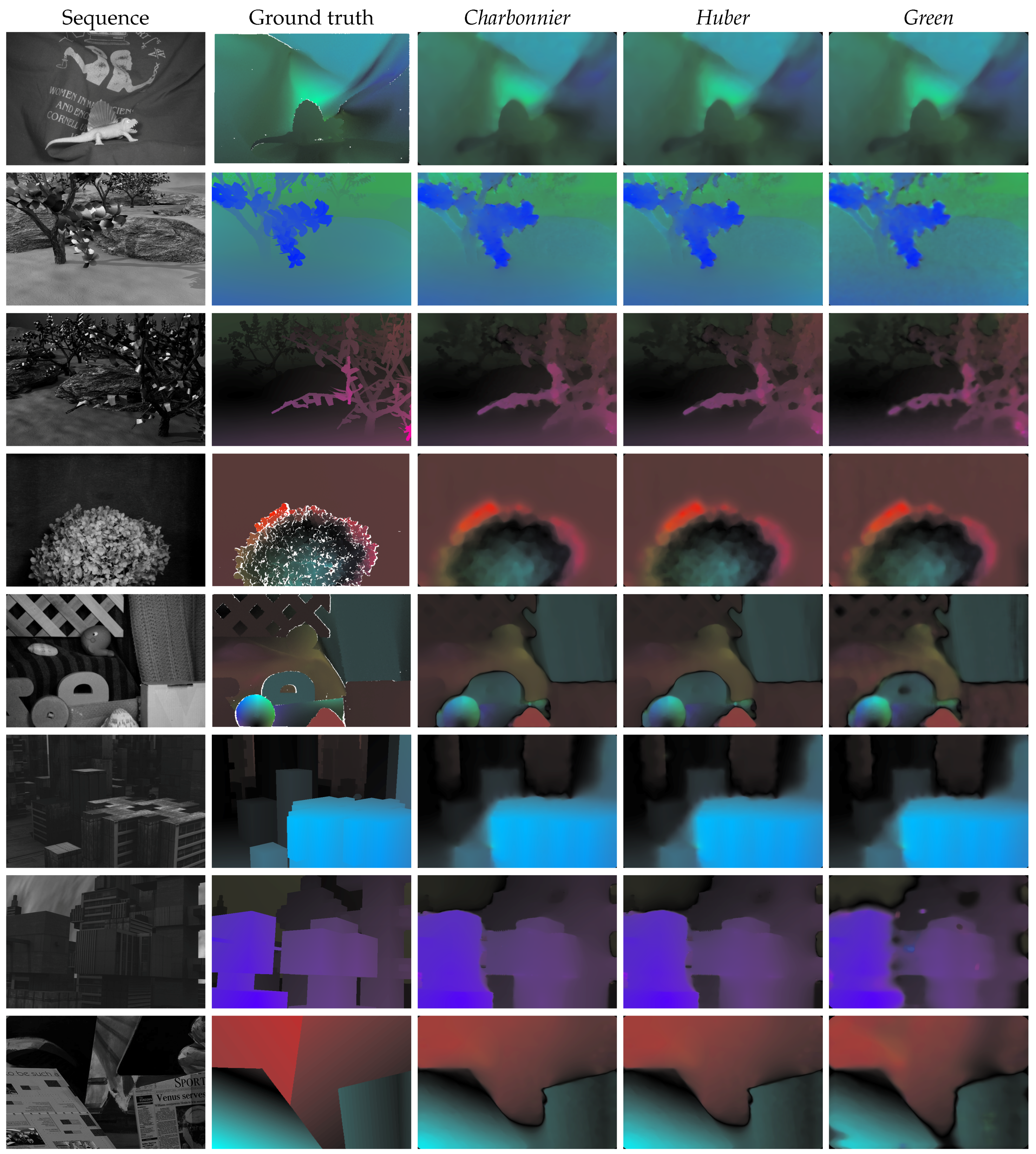

In

Figure 1, we show the colored based representation of the ground truth and the best optical flow estimates obtained using

Charbonnier,

Huber and

Green smooth TV regularizations for the Middlebury training benchmark [

24] using the best parameters. Notice first how the motion boundaries are preserved for all images in

Figure 1. This is indeed a famous property of the TV regularization that has been inherited by its three smooth approximations. In

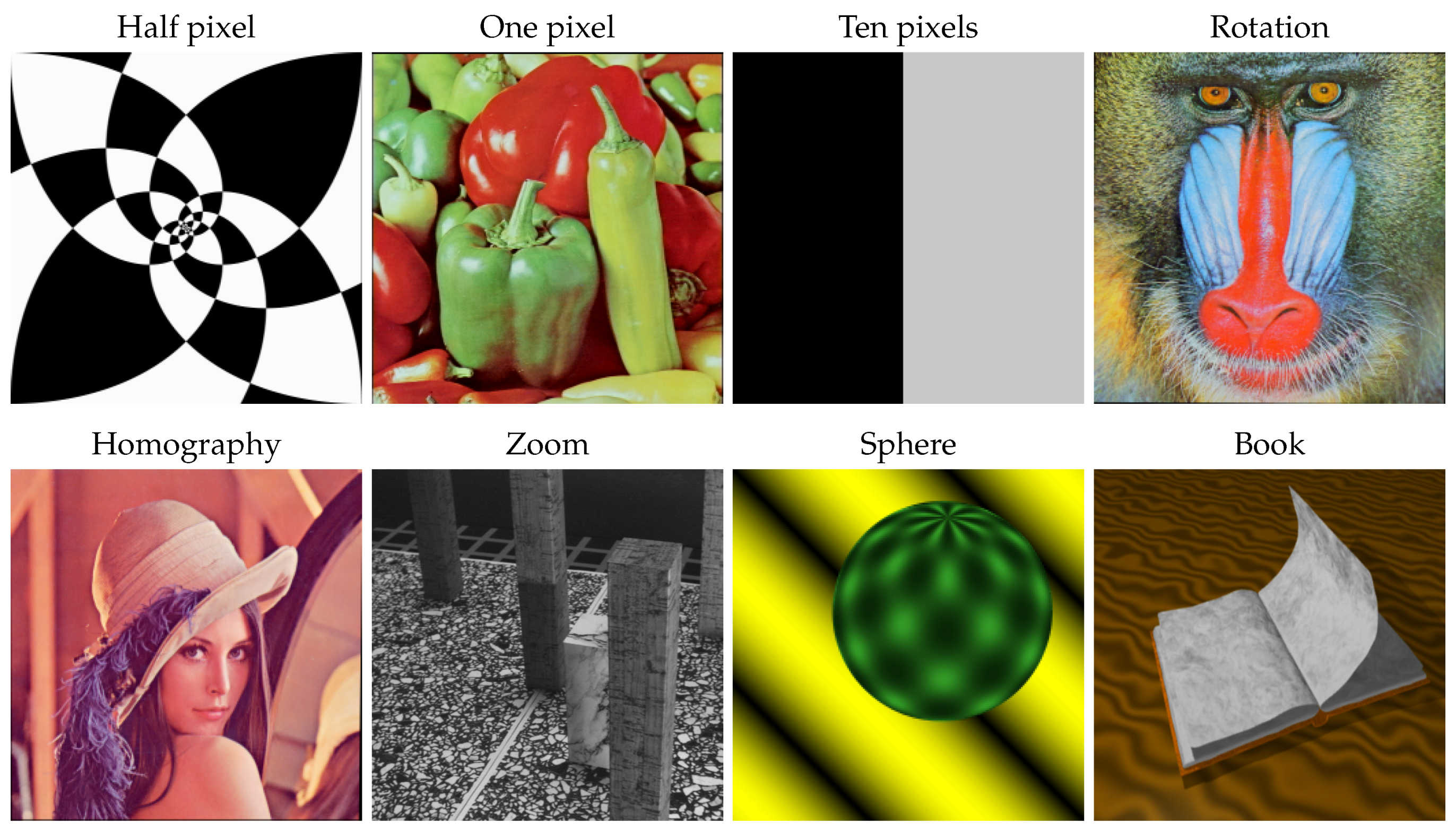

Figure 2, we present other tested image sequences that have different types of movement. The first three images have a translation of different sizes: half, one and ten for the spiral, peppers and band sequences, respectively. The baboon sequence has a rotation movement and the Lena sequence has a homography mapping. These five images are standard test images in image processing that have been used as the first frames and the second frames have been generated by applying the movements described above. The Marble blocks sequence, which has a zoom transformation, was obtained from the Image Sequence Server, Institut für Algorithmen und Kognitive Systeme, (Group Prof. Dr. H.-H. Nagel), University of Karlsruhe, Germany and was first used in [

25]. Finally, the rotating sphere sequence was generated by the Computer Vision Research Group at the University of Otago, New Zealandand the book sequence by the Computer Laboratory at Cambridge University.

Table 2 and Table 3 compare the three approximations in terms of the quality of the optical flow estimation, measured by the average angular error (AAE) and the average endpoint error (AEE). In Table 4, we also give the interpolation error measured by the displaced frame difference (DFD), which corresponds to the data term in the energy functional (4). Then, in Table 5, we compare the speed of convergence, given by the number of gradient evaluations (Ng).
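For reference, the error measures can be sketched in NumPy. The paper does not spell out formulas, so this assumes the usual definitions:

- AAE is the mean angle, in degrees, between the space-time vectors $(u, v, 1)$ and $(u_{gt}, v_{gt}, 1)$.
- AEE is the mean Euclidean distance between the estimated and true flow vectors.
- DFD is taken here as the root mean square of $I_1(x + w(x)) - I_0(x)$, with bilinear warping and clamped borders. This normalisation is an assumption.

All function names are illustrative.

```python
import numpy as np

def average_angular_error(u, v, u_gt, v_gt):
    """AAE in degrees between the space-time vectors (u, v, 1) and (u_gt, v_gt, 1)."""
    num = u * u_gt + v * v_gt + 1.0
    den = np.sqrt((u**2 + v**2 + 1.0) * (u_gt**2 + v_gt**2 + 1.0))
    return np.degrees(np.arccos(np.clip(num / den, -1.0, 1.0))).mean()

def average_endpoint_error(u, v, u_gt, v_gt):
    """AEE: mean Euclidean distance between estimated and true flow vectors."""
    return np.hypot(u - u_gt, v - v_gt).mean()

def warp_bilinear(I, u, v):
    """Sample I at (x + u, y + v) with bilinear interpolation (borders clamped)."""
    H, W = I.shape
    rows, cols = np.mgrid[0:H, 0:W].astype(float)
    x = np.clip(cols + u, 0, W - 1)
    y = np.clip(rows + v, 0, H - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, W - 1)
    y1 = np.minimum(y0 + 1, H - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * I[y0, x0] + ax * I[y0, x1]
    bottom = (1 - ax) * I[y1, x0] + ax * I[y1, x1]
    return (1 - ay) * top + ay * bottom

def displaced_frame_difference(I0, I1, u, v):
    """RMS of I1(x + w(x)) - I0(x); the RMS normalisation is an assumption."""
    return np.sqrt(np.mean((warp_bilinear(I1, u, v) - I0) ** 2))

zero, one = np.zeros((5, 5)), np.ones((5, 5))
print(average_endpoint_error(one, zero, zero, zero))  # unit error everywhere
print(average_angular_error(one, zero, zero, zero))   # about 45 degrees
```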

First, we remark that the Charbonnier and Huber approximations lead to similar results, with a slight advantage for the latter. As shown in Table 6, these two approximations globally perform better than the Green TV regularization in terms of the average angular error, the average endpoint error and the speed of convergence. Over sixteen image sequences, the Huber method performed best on half of them in terms of AAE, with an average AAE of 3.836 per image sequence. It also achieved the best AEE on nine sequences, with an average AEE of 0.468. The method needs an average of 466 gradient evaluations to reach the estimated solution. This is slightly better than the Charbonnier method, which has averages of 3.849, 0.469 and 473 for AAE, AEE and Ng, respectively. Nevertheless, the Green approximation performs best with respect to the interpolation error. It was best on thirteen of the sixteen image sequences, with an average DFD of 0.580 per sequence, while the Charbonnier and Huber approximations have average DFDs of 0.670 and 0.678, respectively. The Green method also has the best AAE on three sequences, the best AEE on two sequences, and the best Ng on four sequences.

We also noticed that the Green method is very sensitive to the parameter $\varepsilon$, owing to the sensitivity of the hyperbolic cosine function cosh to roundoff errors. The Charbonnier and Huber approximations suffer less from this problem, which might explain their wide use as smooth TV approximations in image processing. Figure 3 shows how the estimated solution depends on the parameter $\varepsilon$ for these two approximations, using the Yosemite sequence, which was created by Lynn Quam at SRI and first used for optical flow in [26]. The dependence is shown in terms of AAE, AEE and Ng. For the Yosemite sequence with clouds, the Charbonnier and Huber TV approximations give similar results over most of the tested range of $\varepsilon$. For some values of $\varepsilon$ the Charbonnier approximation is slightly better, and for others the Huber approximation is. Otherwise, the two approximations perform almost the same, except for the largest values of $\varepsilon$. There, the Charbonnier approximation gives a slightly better AEE but the Huber approximation has a better AAE. Figure 4 and Table 7 show the results obtained with the best parameters.
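One concrete way in which cosh causes numerical trouble is overflow. Evaluating the Green function literally overflows once $s/\varepsilon$ exceeds roughly 710, and this happens quickly for small $\varepsilon$. The NumPy sketch below assumes the form $\varepsilon\log(2\cosh(s/\varepsilon))$ and compares the naive formula with the algebraically identical `np.logaddexp` form. It is an assumption that overflow is the mechanism behind the sensitivity reported above, since the authors' implementation is not described.

```python
import numpy as np

eps = 1e-3
s = np.array([0.5, 1.0])  # s / eps = 500 and 1000

# Literal formula: cosh(1000) exceeds the largest double and becomes inf.
with np.errstate(over="ignore"):
    naive = eps * np.log(2.0 * np.cosh(s / eps))

# Same function written as eps * log(exp(s/eps) + exp(-s/eps)), evaluated stably.
stable = eps * np.logaddexp(s / eps, -s / eps)

print(naive)   # first entry finite, second entry inf
print(stable)  # approximately [0.5, 1.0]
```

The Charbonnier and Huber functions only involve squares, square roots and absolute values, so they have no comparable overflow regime for moderate inputs.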