Abstract

Precision agriculture is a farm management technology that involves sensing and then responding to the observed variability in the field. Remote sensing is one of the tools of precision agriculture. The emergence of small unmanned aerial vehicles (sUAV) has paved the way to accessible remote sensing tools for farmers. This paper describes the development of an image processing approach to compare two popular off-the-shelf sUAVs: the 3DR Iris+ and the DJI Phantom 2. Both units are equipped with a camera gimbal carrying a GoPro camera. The comparison of the two sUAVs involved a hovering test and a rectilinear motion test. In the hovering test, the sUAV hovered over a known object while images were taken every quarter of a second for two minutes. The position of the object in the images was then measured and used to assess the stability of the sUAV while hovering. In the rectilinear motion test, the sUAV followed a straight path while acquiring images of a lined track, and the positions of the lines in the images were measured to determine how accurately the sUAV followed the path. In the hovering test, the 3DR Iris+ had a maximum position deviation of 0.64 m (root mean square (RMS) displacement of 0.126 m), while the DJI Phantom 2 had a maximum deviation of 0.79 m (RMS displacement of 0.150 m). In the rectilinear motion test, the maximum displacements for the 3DR Iris+ and the DJI Phantom 2 were 0.85 m (RMS displacement of 0.314 m) and 0.73 m (RMS displacement of 0.372 m), respectively. These results indicate that both sUAVs performed well in the hovering and rectilinear motion tests and can therefore be used for civilian applications such as agricultural monitoring. The results also show that the developed image processing approach can be used to evaluate the performance of a sUAV and could potentially serve as an additional feedback parameter for autonomous navigation.

1. Introduction

In the past decade, there has been an increased use of small unmanned aerial vehicles (sUAV) in the United States. The advent of sUAVs was made possible by advances in miniaturized sensor systems, microprocessors, control systems, power supply technologies, and global positioning systems (GPS). The recent release of the operational rules for the commercial use of sUAVs by the Federal Aviation Administration [1] will open the door to integrating sUAVs into the national airspace. Civilian applications of sUAVs include real estate photography, fire scouting, payload delivery, and agriculture.

Agriculture is one of the areas that will be most affected by the use of sUAVs. For a farmer, knowing the health and current state of the year’s crop is essential for effective crop management. Precision agriculture, a spatially based technology, is used by farmers to monitor and manage their crop production [2,3]. Precision agriculture not only improves crop productivity but also enables the efficient use of resources (e.g., water, fertilizer, and pesticides).

One of the tools of precision agriculture is remote sensing, an approach in which information is obtained without requiring a person to be physically present to collect the data. Combined with Geographic Information Systems (GIS) and Global Positioning Systems (GPS), remote sensing provides farmers with the technologies needed to maximize the output of their crops [4,5,6]. Satellite imaging has been used to remotely monitor crops [7]; however, its high cost, low image resolution, and low sampling frequency are significant disadvantages. Manned aircraft have also been used for remote sensing in agriculture [8], but, as with satellite imaging, cost and sampling frequency are limiting factors. Furthermore, a comparative study between sUAV and manned aircraft imaging of citrus tree conditions showed that the sUAV produced higher spatial resolution images [9]. One of the most useful yet affordable remote sensing systems can now be obtained by purchasing a small quadcopter or drone [10,11], which can then be flown over a farmer’s fields. The drone can image the crop through a variety of camera filters, providing the farmer with images in multiple spectral bands. A sUAV not only provides current aerial images of the entire crop but also enables image processing and analysis, which can reveal more about crop health and identify areas of the field that require specific attention. For example, Bulanon et al. [12] combined a sUAV with image processing to evaluate different irrigation systems in an apple orchard. Small drones can be flown and maintained with little training, making them a good option for farmers who want to bring remote sensing technology into their operations.

With remote sensing sUAVs becoming much more affordable, and thus a realistic option for today’s farmers, the challenge is selecting the sUAV that is suitable for a specific application. Chao et al. [13] surveyed the off-the-shelf autopilot packages for small unmanned aerial vehicles available on the market. Their review compared autopilot systems and their sensor packages, which include GPS receivers and inertial sensors to estimate 3-D position and attitude. They also suggested additional sensors, such as infrared and vision sensors, to improve basic autonomous navigation tasks. One example is a vision-guided flight stability and autonomy system developed to control micro air vehicles [14], in which a forward-looking, vision-based horizon detection algorithm controlled a fixed-wing unmanned aerial vehicle; the system appeared to produce more stable flights than those remotely controlled by a human pilot. Carnie et al. [15] investigated the feasibility of using computer vision to provide a level of situational awareness suitable for the sense-and-avoid capability of a UAV, comparing two morphological filtering approaches for target detection on image streams featuring real collision-course aircraft against a variety of daytime backgrounds. These studies demonstrate the potential of vision systems and image processing to add another level to the control hierarchy of UAV systems. In this paper, the vision systems of two popular off-the-shelf sUAVs were used to compare their performance in multiple aerial competence tests, and a customized image processing algorithm was developed to analyze the acquired images and evaluate the performance of the sUAVs. The results of this study could help users choose an off-the-shelf sUAV for a particular application such as agricultural monitoring.
The objectives of this paper are:

- To compare the flight performance of two off-the-shelf sUAVs: the 3DR Iris+ and the DJI Phantom 2.

- To develop image processing algorithms to evaluate the performance of the two sUAVs.

2. Materials and Methods

2.1. Small Unmanned Aerial Vehicles

Unmanned aerial vehicles (UAV) can be classified according to size into micro, small, medium, and large UAVs [16]. Micro UAVs are extremely small, ranging from about the size of an insect to 30–50 cm long. Small UAVs (sUAV) have dimensions greater than 50 cm and less than 2 m. The Federal Aviation Administration defines a sUAV as an aircraft that weighs more than 0.25 kg but less than 25 kg [17]. Medium UAVs have dimensions ranging from 5 to 10 m and can carry payloads of up to 200 kg, while large UAVs are used mainly for combat operations by the military. This paper focuses on sUAVs and their application to agriculture. Although users can build their own sUAV from do-it-yourself (DIY) kits, off-the-shelf ready-to-fly sUAVs are also available. The advantages of off-the-shelf sUAVs are that they are ready to fly and require little tuning compared with DIY kits. In addition, they come with camera gimbals that can easily be used for agricultural surveying. Two of the most popular sUAVs on the market were used in this study: (1) the 3DR Iris+ [18] and (2) the DJI Phantom 2 [19]. Specifications of the two drones are provided in Table 1. The 3DR Iris+, shown in Figure 1a, is noticeably wider than the DJI Phantom 2, shown in Figure 1b. Because of its greater width, the distance between the front and back propellers of the Iris+ is less than the distance between its left and right propellers. In contrast, the propellers of the DJI Phantom 2 are placed symmetrically at the corners of a square around the center of the drone. Both drones use functionally similar gimbals to hold a GoPro camera for in-flight imaging.

Table 1.

Iris+ and Phantom 2 Specifications.

Figure 1.

3DR Iris+ and DJI Phantom 2 quadcopter drones. (a) 3DR Iris+; (b) DJI Phantom 2.

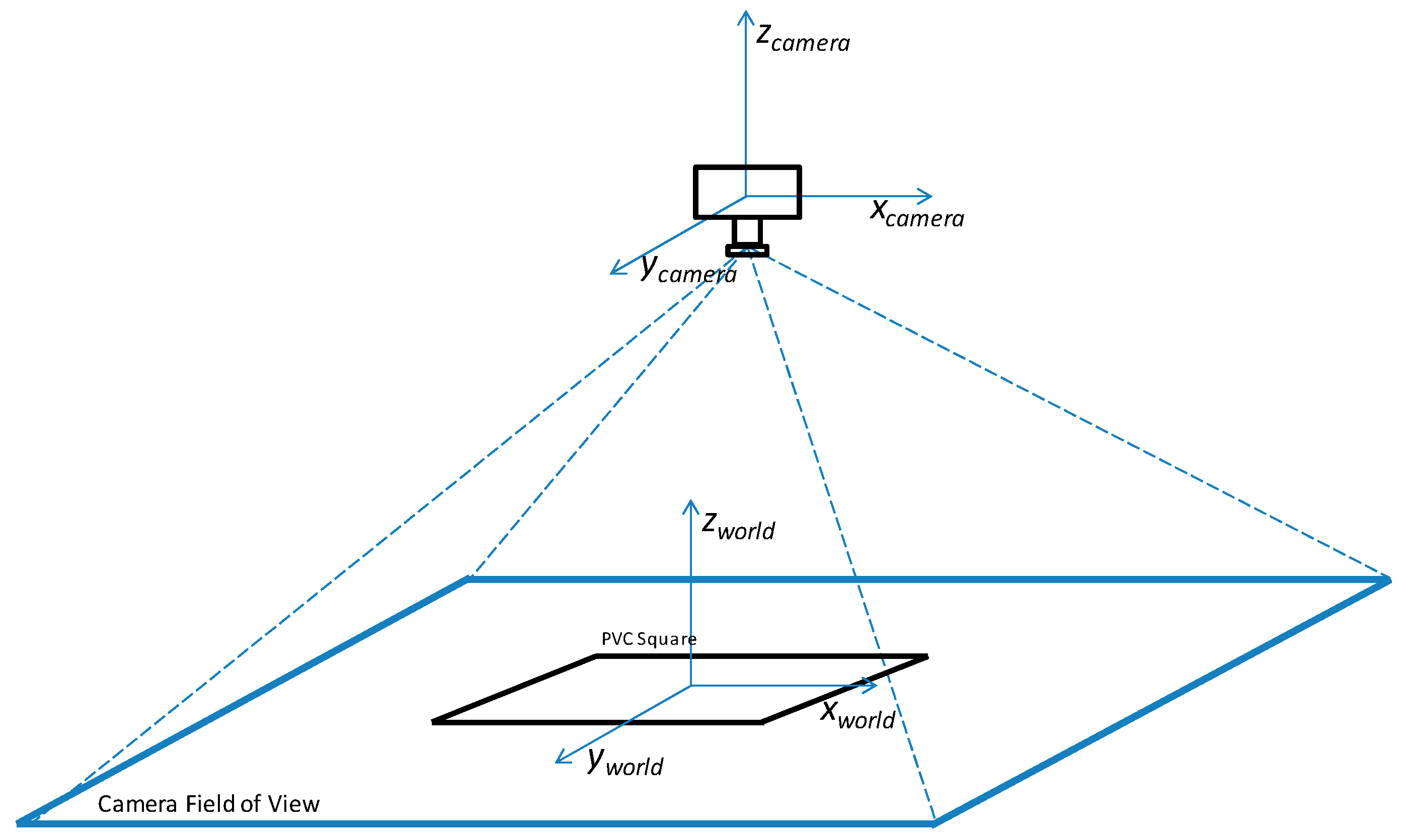

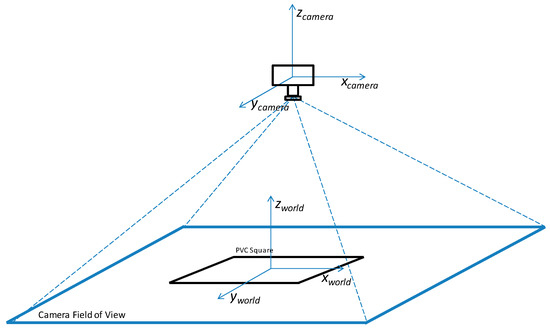

Figure 2.

sUAV camera frame and world frame relationship.

2.2. Image Acquisition for Stability Evaluation

The image acquisition system used for both sUAVs was a GoPro Hero 3 camera, attached to the sUAV with a customized camera gimbal. Although the GoPro Hero 3 can record both video and still pictures, only still images were acquired in this study. The camera was set to capture images at 11-megapixel resolution with the white balance set to 5500 K.

To acquire the images for stability evaluation, the focal plane of the camera was set parallel to the ground using the camera gimbal (Figure 2). The field of view in real-world coordinates, with planar dimensions (x_world, y_world), was mapped onto the camera’s discrete sensor elements (x_camera, y_camera). Ground control points, which included a PVC square and lined tracks, were used to calculate the spatial resolution (Δx_world, Δy_world). The spatial resolution is determined by the number of pixels in the sensor array and the field of view of the camera [20].
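As a minimal sketch of how a ground control point of known size yields the spatial resolution, the Python functions below divide a measured pixel length by the corresponding real-world length, separately for each image axis. The function names and pixel measurements are hypothetical, and the PVC square is assumed to be 2 m on a side.

```python
def spatial_resolution(pixel_length: float, known_length_m: float) -> float:
    """Return the image resolution in pixels per metre, given the length in
    pixels of a ground control point whose real length (metres) is known."""
    if known_length_m <= 0:
        raise ValueError("known_length_m must be positive")
    return pixel_length / known_length_m


def axis_resolutions(width_px, height_px, width_m, height_m):
    """Resolution along the image x and y axes (pixels per metre)."""
    return (spatial_resolution(width_px, width_m),
            spatial_resolution(height_px, height_m))


# Hypothetical measurement: the 2 m x 2 m square spans 232 x 230 pixels.
print(axis_resolutions(232, 230, 2.0, 2.0))  # (116.0, 115.0)
```

Computing the two axes separately is a cheap check that the camera is close to parallel with the ground: a strongly tilted camera would give noticeably different values along x and y.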

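Since the camera was set parallel to the ground, the relationship between the two-dimensional image and the three-dimensional scene [21,22] can be expressed using a simple perspective transformation:

$$x_{\text{camera}} = \frac{f_{\text{camera}}}{z_{\text{world}}}\,x_{\text{world}}, \qquad y_{\text{camera}} = \frac{f_{\text{camera}}}{z_{\text{world}}}\,y_{\text{world}}$$

where f_camera is the focal length of the camera and z_world is the distance from the camera to the ground plane.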
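The perspective relationship for a downward-looking camera can be sketched in Python as below. This is a minimal pinhole model under stated assumptions: the focal length is expressed in pixels, the optical axis is perpendicular to flat ground at distance z_world, and lens distortion (significant for wide-angle action cameras) is ignored. The function names and the 1000-pixel focal length are hypothetical.

```python
def world_to_image(x_world, y_world, z_world, f_camera):
    """Project a ground point (metres) onto the image plane (pixels)."""
    return f_camera * x_world / z_world, f_camera * y_world / z_world


def image_to_world(x_camera, y_camera, z_world, f_camera):
    """Back-project an image point (pixels) onto the ground plane (metres)."""
    return x_camera * z_world / f_camera, y_camera * z_world / f_camera


f_px = 1000.0  # hypothetical focal length in pixels
print(world_to_image(1.0, -0.5, 5.0, f_px))      # (200.0, -100.0)
print(image_to_world(200.0, -100.0, 5.0, f_px))  # (1.0, -0.5)
# Under this model the resolution is f_camera / z_world pixels per metre,
# so doubling the altitude halves the resolution.
print(f_px / 5.0, f_px / 10.0)                   # 200.0 100.0
```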

2.3. Performance Evaluation Tests

Civilian applications of sUAVs such as agricultural surveying and real estate photography involve taking a single picture at a certain altitude or taking multiple pictures along a waypoint path generated by the user. Based on these applications, two performance evaluation tests were conducted to compare the two off-the-shelf sUAVs: a hovering test and a rectilinear motion test. In both tests, a GoPro camera attached to the sUAV camera gimbal acquired images, which were then used to evaluate the flight performance of the sUAV through image processing and analysis. Xiang and Tian [23] evaluated the hovering performance of an autonomous helicopter using its onboard sensors.

2.3.1. Hovering Test

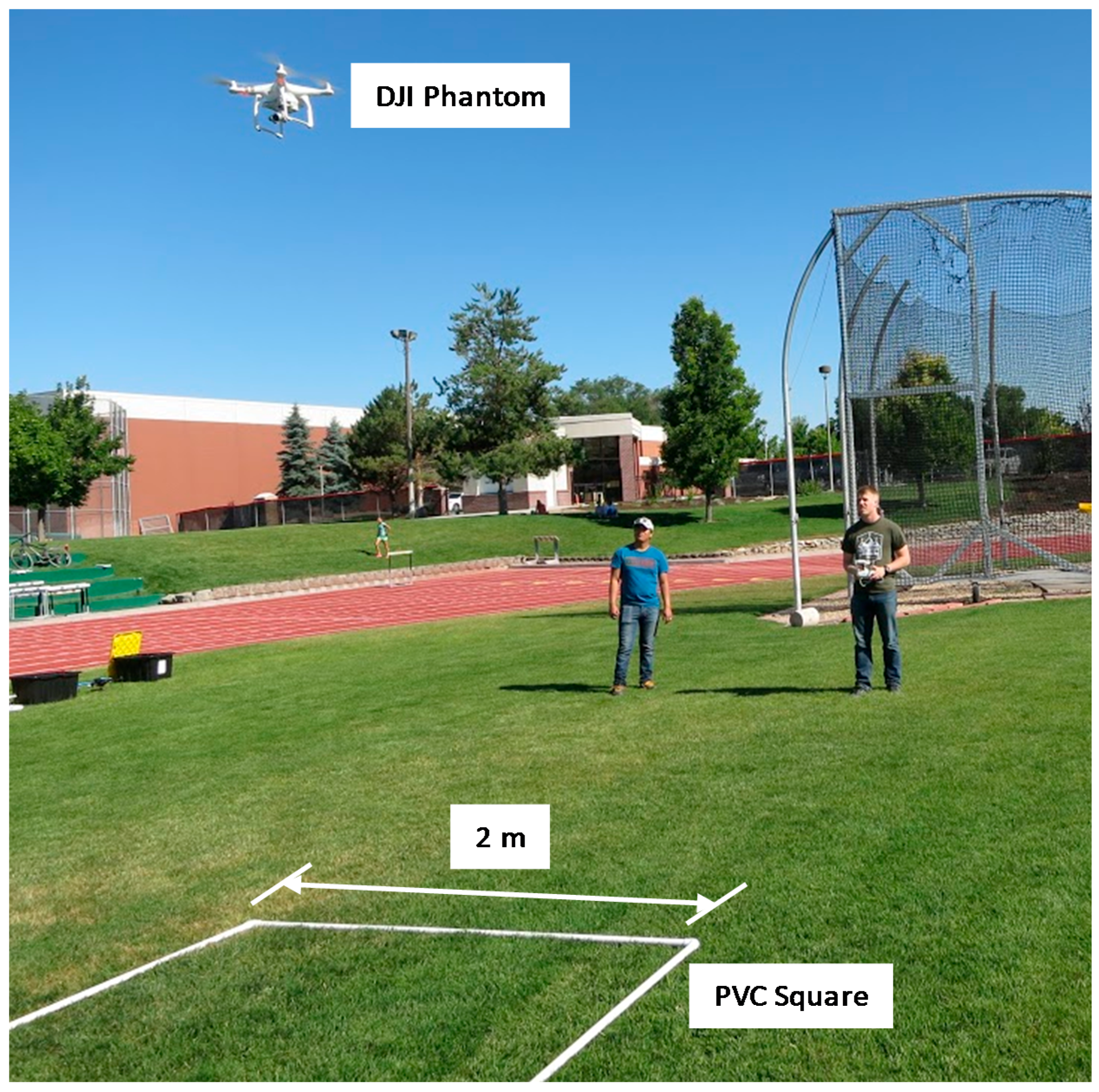

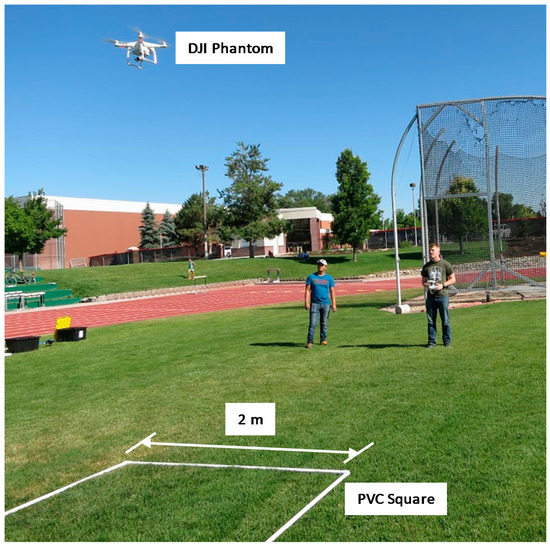

In the hovering test, the sUAV was flown over a 2-m square made of PVC pipe at three altitudes: 5, 15, and 25 m. At each altitude, the sUAV hovered for two minutes while images were taken every 0.5 s. The pixel resolutions at the three altitudes were 116 pixels/m (5 m), 71 pixels/m (15 m), and 51 pixels/m (25 m). The two sUAVs were tested on the same day with a wind speed of 2 miles per hour (ESE). The time-lapse images of the PVC square were used to measure the stability of the sUAV while hovering. Figure 3 shows one of the hovering tests for the DJI Phantom 2.
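Because displacements are measured in pixels, they must be divided by the pixel resolution at each altitude to obtain metres. The sketch below uses the three resolutions reported for the hovering test; the function name is hypothetical. It also prints the ground size of one pixel, which bounds how finely a displacement can be resolved at each altitude.

```python
# Pixel resolutions reported for the hovering test (pixels per metre).
PIXELS_PER_M = {5: 116.0, 15: 71.0, 25: 51.0}


def pixels_to_metres(displacement_px: float, altitude_m: int) -> float:
    """Convert an image-space displacement to metres on the ground."""
    return displacement_px / PIXELS_PER_M[altitude_m]


for altitude, ppm in PIXELS_PER_M.items():
    # One pixel is the smallest displacement the measurement can resolve.
    print(f"{altitude} m: 1 pixel = {1000.0 / ppm:.1f} mm")
# 5 m: 1 pixel = 8.6 mm
# 15 m: 1 pixel = 14.1 mm
# 25 m: 1 pixel = 19.6 mm
```

For example, `pixels_to_metres(58, 5)` returns 0.5, i.e., a 58-pixel shift at 5 m corresponds to 0.5 m on the ground.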

Figure 3.

Hovering test for the DJI Phantom.

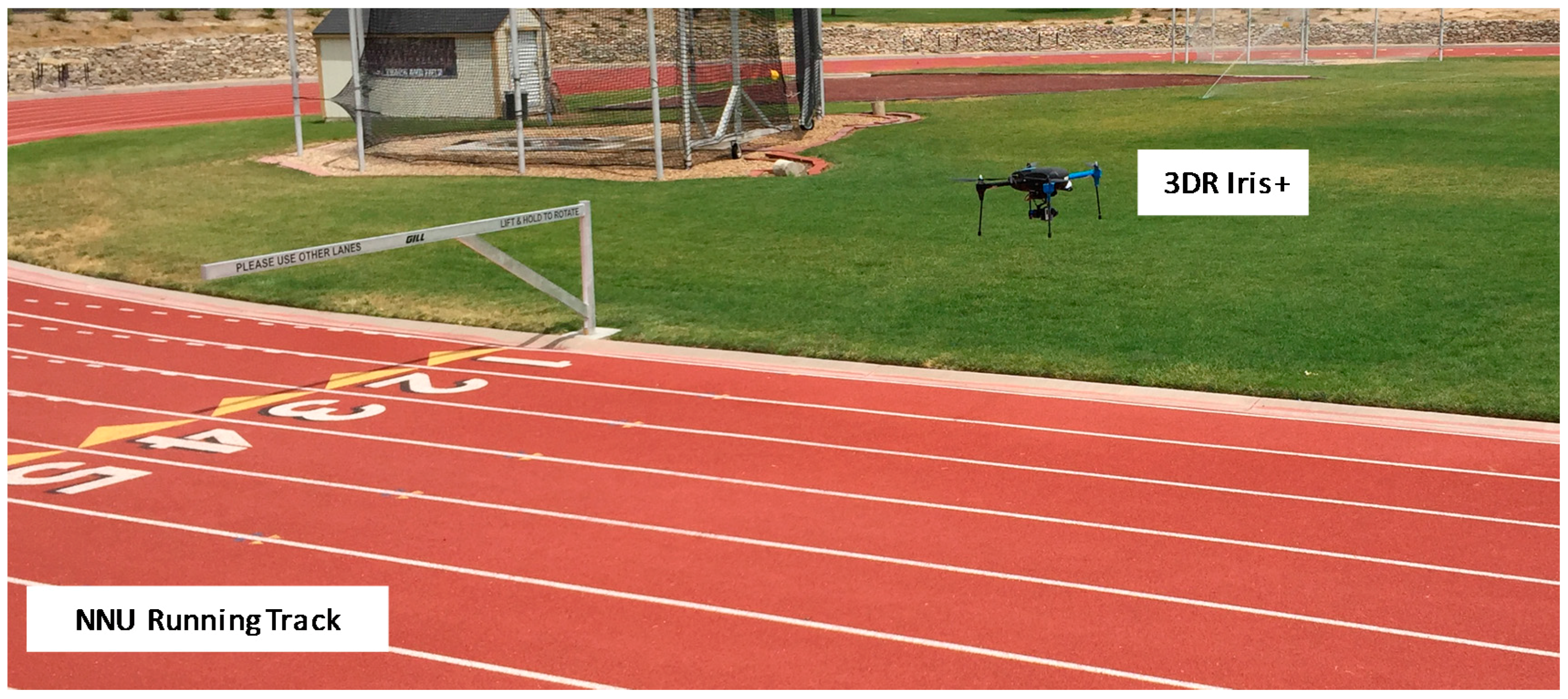

2.3.2. Rectilinear Motion Test

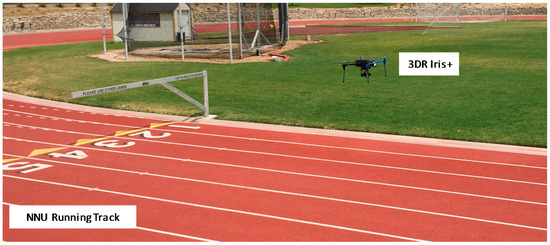

In the rectilinear motion test, the sUAV was programmed to fly a straight path over the running track at Northwest Nazarene University (NNU). The waypoint path of the sUAV was based on the straight line markings of the running track, which served as the reference for the straight-line motion of the sUAV. The wind speed during this test was 4 miles per hour (ESE). As in the hovering test, the camera was programmed to acquire an image every 0.5 s as the sUAV moved over the track. The acquired images were then used to measure the stability of the straight-line motion. Figure 4 shows one of the tests for the 3DR Iris+.

Figure 4.

Rectilinear motion test for the 3DR Iris+.

2.4. Image Processing for Performance Evaluation

2.4.1. Hovering Test

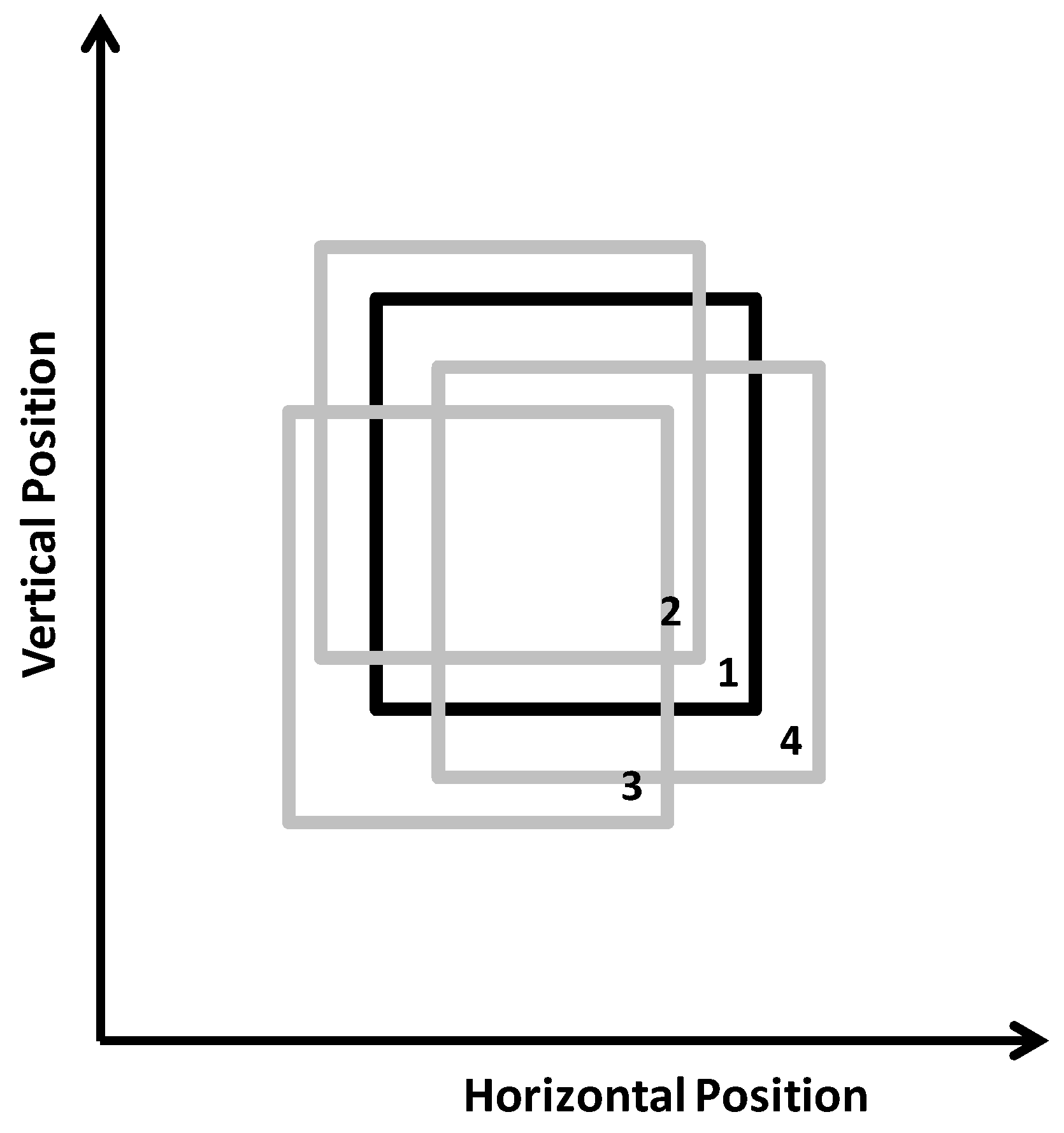

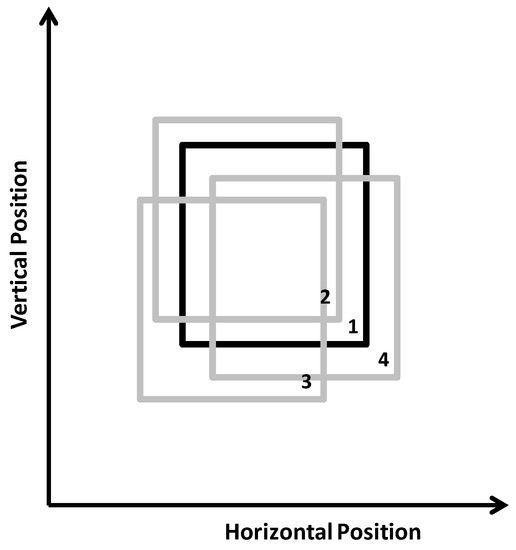

To evaluate the hovering test using image processing [24], the center of area (centroid) of the PVC square in the image was used as the stability parameter, and stability was measured from changes in the centroid position. In other words, the image processing tracked the position of the center of the PVC square, as illustrated in Figure 5. The solid black line is the PVC square segmented from the first acquired image, which was used as the set point. The gray lines are the PVC square segmented from subsequent images. The centroid position in each subsequent image was compared with that of the set point image, and the resulting position displacement was calculated for each image and used to evaluate stability in the hovering test.
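A minimal sketch of the centroid-tracking idea is shown below, assuming each image has already been segmented into a binary mask of the PVC square (stored as nested lists to keep the example dependency-free). For a symmetric outline such as the square frame, the centroid of the outline pixels coincides with the center of the enclosed area. The function names and masks are hypothetical; note also that a stationary object shifts in the image opposite to the sUAV's motion, which does not affect the displacement magnitude.

```python
from math import hypot


def centroid(mask):
    """Return the centroid (x, y), in pixels, of the non-zero pixels of a
    binary mask given as a list of rows."""
    count = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        raise ValueError("mask contains no foreground pixels")
    return sum_x / count, sum_y / count


def displacement_from_set_point(set_point_mask, mask, pixels_per_m):
    """Return (dx, dy, distance) in metres between the object centroid in
    `mask` and the object centroid in the set point image."""
    x0, y0 = centroid(set_point_mask)
    x1, y1 = centroid(mask)
    dx = (x1 - x0) / pixels_per_m
    dy = (y1 - y0) / pixels_per_m
    return dx, dy, hypot(dx, dy)


set_point = [[0, 0, 0, 0],
             [0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
later = [[0, 0, 0, 0],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 0, 0]]
print(displacement_from_set_point(set_point, later, pixels_per_m=2.0))
# (0.5, 0.0, 0.5)
```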

Figure 5.

Concept of the hovering test evaluation using image processing.

2.4.2. Rectilinear Motion Test

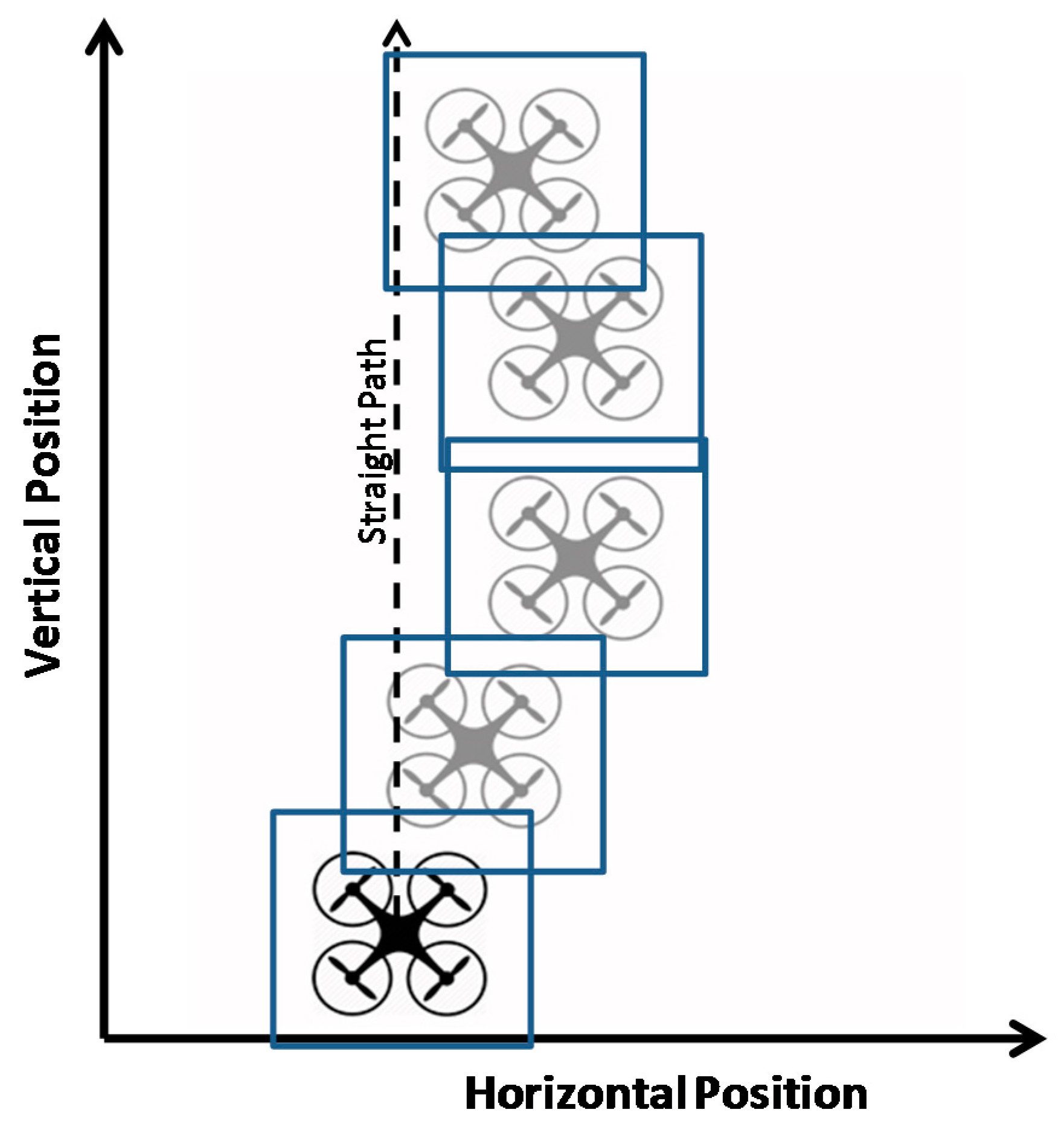

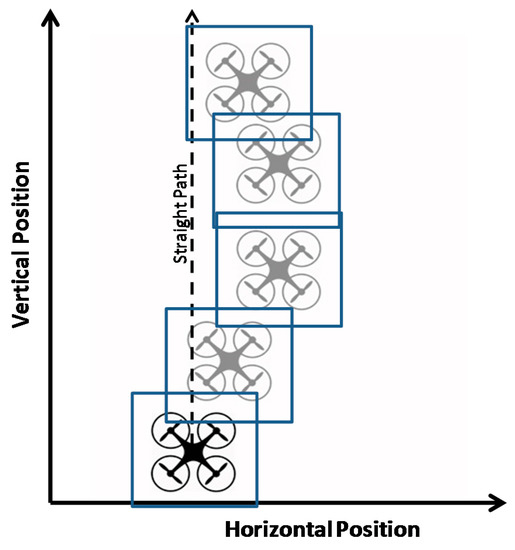

Figure 6 shows the concept of the rectilinear motion test evaluation. The black sUAV marks the start position, and the square enclosing it represents the field of view (FOV) of the camera. As the sUAV follows the programmed straight path, its actual position deviates from the programmed path, as shown by the gray sUAVs with their respective FOVs. The deviation from the programmed straight path was measured by comparing the positions of the running track features in each image with their positions in the image acquired at the start position. As in the hovering test, the position displacement of the line was calculated for each image.
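The cross-track deviation can be sketched as follows, assuming the flight direction is aligned with the image's vertical axis so that only the horizontal (column) position of the tracked feature carries the deviation. The inputs are hypothetical column positions of the feature, one per image, starting with the start-position image; the sign convention (a feature shifting right in the image means the sUAV drifted left) depends on how the camera is mounted and is an assumption.

```python
def lateral_deviation(feature_x_px, pixels_per_m):
    """Lateral deviation (metres) of the sUAV from the programmed path for
    each image, relative to the image acquired at the start position."""
    x_start = feature_x_px[0]
    # The scene shifts opposite to the camera, so the sign is reversed.
    return [(x_start - x) / pixels_per_m for x in feature_x_px]


path = lateral_deviation([400, 420, 440, 410, 370], pixels_per_m=50.0)
print(path)                         # [0.0, -0.4, -0.8, -0.2, 0.6]
print(max(abs(v) for v in path))   # 0.8
```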

Figure 6.

Concept of rectilinear motion test evaluation using image processing.

3. Results and Discussion

3.1. Hovering Test

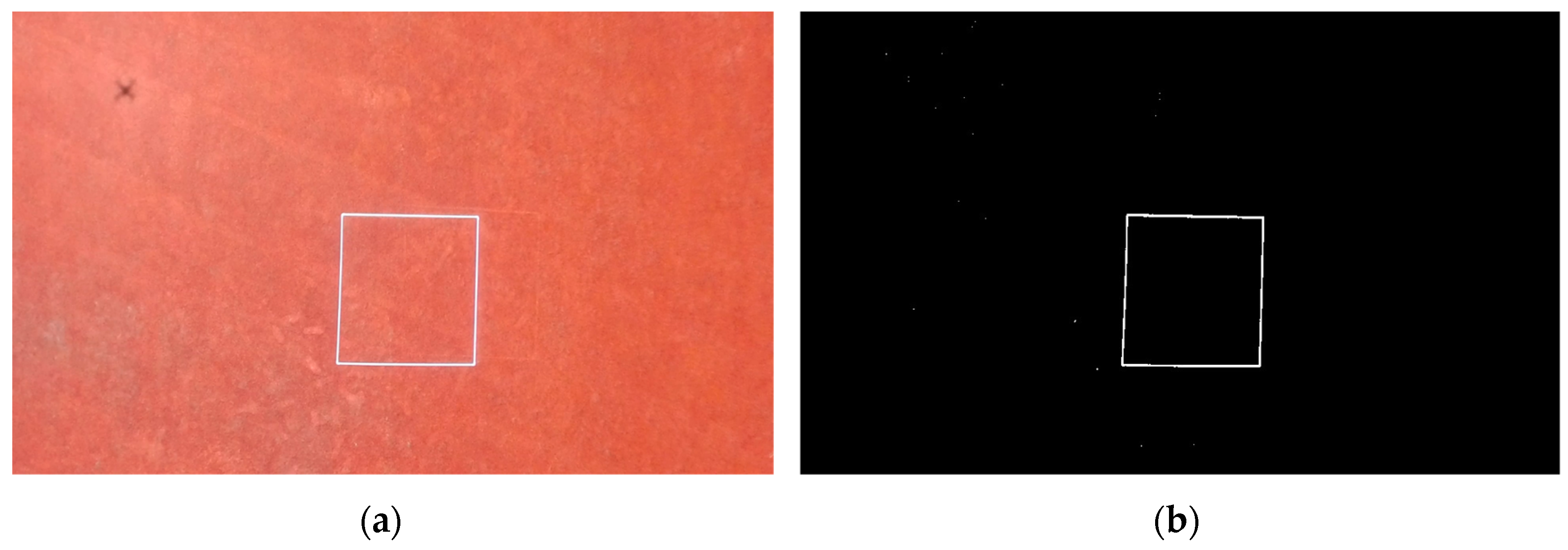

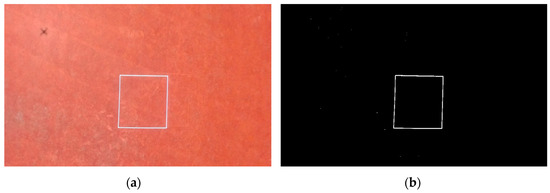

Figure 7 shows the original image of the PVC square and the segmented image. The high contrast between the PVC and the grass made it straightforward to segment the PVC from the background, so a simple thresholding operation was used. After thresholding, object features such as the centroid position and the box length were calculated. This image and its features served as the set point: the position of the PVC square in each subsequent image was compared with it, and the position displacement was calculated.
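The thresholding and feature extraction step can be sketched as below, assuming a grayscale image stored as nested lists in which the white PVC is brighter than the grass. The threshold of 128, the tiny test image, and the function names are hypothetical; in practice the threshold would be chosen from the image histogram.

```python
def threshold(gray, level):
    """Return a binary mask: 1 where intensity is above `level`, else 0."""
    return [[1 if value > level else 0 for value in row] for row in gray]


def bounding_box(mask):
    """Return (x_min, y_min, x_max, y_max) of the foreground pixels."""
    xs = [x for row in mask for x, value in enumerate(row) if value]
    ys = [y for y, row in enumerate(mask) for value in row if value]
    if not xs:
        raise ValueError("mask contains no foreground pixels")
    return min(xs), min(ys), max(xs), max(ys)


gray = [[12, 15, 10, 11],
        [14, 230, 225, 13],
        [10, 228, 232, 12],
        [11, 13, 14, 10]]
mask = threshold(gray, 128)
x_min, y_min, x_max, y_max = bounding_box(mask)
box_length_px = x_max - x_min + 1  # side length of the box in pixels
print(mask[1], (x_min, y_min, x_max, y_max), box_length_px)
# [0, 1, 1, 0] (1, 1, 2, 2) 2
```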

Figure 7.

Set point image for the hovering test. (a) Original image for hovering test; (b) Segmented image of PVC square.

Figure 8 shows the comparison between the set point image and a subsequent image. The overlaid images show that the position of the PVC square changed, which means that the sUAV moved even though it was in hover mode. The overlay also suggests that a simple image processing approach, such as image subtraction, could be used to evaluate the stability of the sUAV in hover mode.
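Image subtraction on segmented masks can be sketched as follows; the masks and function names are hypothetical. A changed fraction of zero means the object did not move between the two images, and larger values indicate larger shifts, although this single number gives neither the direction nor the distance of the motion.

```python
def subtract(mask_a, mask_b):
    """Absolute difference of two equally sized binary masks: 1 where the
    object is present in exactly one of the two images."""
    return [[abs(a - b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(mask_a, mask_b)]


def changed_fraction(mask_a, mask_b):
    """Fraction of pixels that differ between the two masks."""
    diff = subtract(mask_a, mask_b)
    total = sum(len(row) for row in diff)
    return sum(sum(row) for row in diff) / total


set_point = [[0, 0, 0, 0],
             [0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
later = [[0, 0, 0, 0],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 0, 0]]
print(changed_fraction(set_point, later))  # 0.25
```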

Figure 8.

Comparison between the set point image and subsequent image. (a) Overlay of image 1 and image 2; (b) Image subtraction of images 1 and 2.

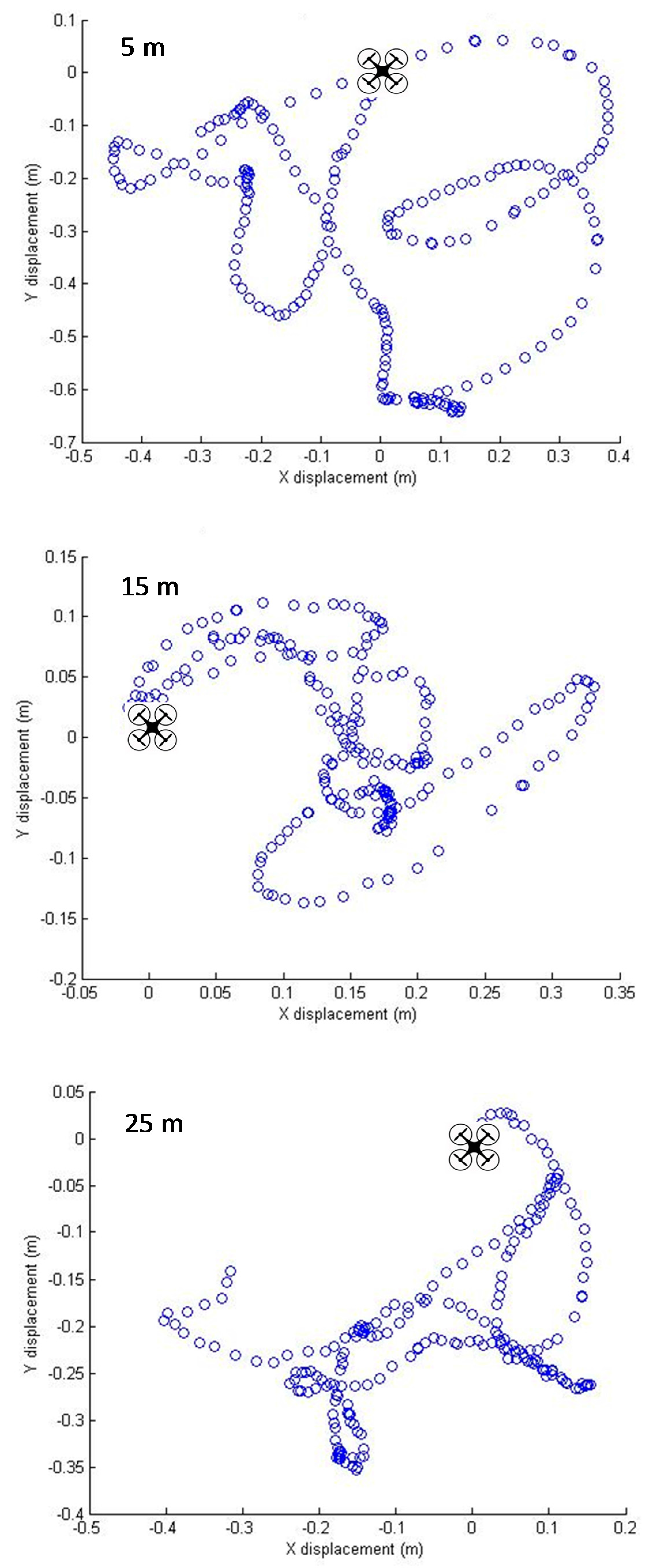

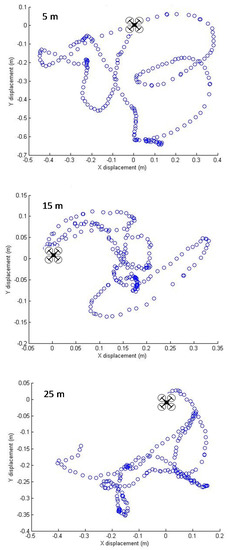

Figure 9 shows the position displacement of the 3DR Iris+ from its starting position while in hover mode at the three altitudes. The position displacement is the difference between the centers of the PVC square in the set point image and in each subsequent image, along both the x and y axes. The 3DR Iris+ had a maximum position displacement of 0.64 m while hovering at the lowest height of 5 m and of 0.34 m while hovering at 25 m. At the lowest altitude, the mean position deviation was 0.29 m (RMS displacement of 0.126 m), and at the highest altitude it was 0.14 m (RMS displacement of 0.052 m). The DJI Phantom 2 showed a similar trend (Figure 10), with a maximum displacement of 0.79 m while hovering at 5 m and 0.34 m while hovering at 25 m. Its mean position displacements at 5 and 25 m were 0.36 m (RMS displacement of 0.15 m) and 0.11 m (RMS displacement of 0.09 m), respectively. For both sUAVs, the lowest altitude showed the highest position deviation. Note that the pixel resolution decreased as the altitude increased, which could increase the uncertainty of the displacement measurement at higher altitudes. Even in hover mode, both sUAVs were expected to deviate from their set position. This displacement has several causes. First, the GPS receivers in most off-the-shelf sUAVs have a hover accuracy of about ±2 m, and the deviations observed in the hover images are consistent with this. Second, wind disturbs the sUAV. Third, the feedback control system that holds the sUAV’s position shapes its response: the proportional-integral-derivative (PID) controller is the most common controller in off-the-shelf sUAVs, and its gains determine how the sUAV responds to disturbances.
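The summary statistics quoted above can be computed from the per-image displacements as in the sketch below. The text does not state exactly how the RMS displacement was defined, so the sketch reports both the RMS about zero and the RMS about the mean (the population standard deviation); which one corresponds to the reported values is an assumption the reader should check. The example inputs are made up.

```python
from math import hypot, sqrt
from statistics import mean, pstdev


def displacement_stats(dx, dy):
    """Summary statistics of the planar displacement magnitude, given
    per-image x and y displacements in metres."""
    d = [hypot(a, b) for a, b in zip(dx, dy)]
    return {
        "max": max(d),
        "mean": mean(d),
        # RMS about zero: sqrt(mean(d^2)); never smaller than the mean.
        "rms_about_zero": sqrt(mean([v * v for v in d])),
        # RMS about the mean, i.e. the population standard deviation.
        "rms_about_mean": pstdev(d),
    }


stats = displacement_stats([3.0, 0.0, 6.0], [4.0, 0.0, 8.0])
print(stats["max"], stats["mean"])  # 10.0 5.0
```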
The results of this hovering test will be useful for image mosaicking, the process of stitching images together to form an image with a much larger field of view. Based on these results, the flight altitude should be taken into account when configuring image overlaps during mission planning. As noted by Xiang and Tian [23], because the sUAV continuously varies around the hover point, this variation affects the coverage of a single image as well as the amount of overlap required between images during the flight; they recommended investigating such variations to determine their effects on the overlaps. Hunt et al. [25] described the different overlaps used at varying heights for an unmanned aircraft monitoring winter wheat fields.
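To illustrate how hover drift reduces image overlap, the sketch below uses a simple worst-case model, which is an assumption and not a method from this paper or from [23,25]: two consecutive images can each be displaced by up to the maximum drift in opposite directions, so the overlapping strip can shrink by twice the drift. The function names, the 3000-pixel image width, and the 0.7 target overlap are hypothetical; the 0.64 m drift and the 116 pixels/m resolution are the 5 m hovering results reported for the 3DR Iris+.

```python
def footprint_m(image_width_px, pixels_per_m):
    """Ground width (metres) covered by one image."""
    return image_width_px / pixels_per_m


def effective_overlap(planned_overlap, footprint, drift_m):
    """Worst-case overlap fraction when each image may be displaced by up
    to drift_m, in opposite directions, from its planned position."""
    return max(0.0, planned_overlap - 2.0 * drift_m / footprint)


def required_overlap(target_overlap, footprint, drift_m):
    """Planned overlap needed so the worst case still meets the target."""
    return min(1.0, target_overlap + 2.0 * drift_m / footprint)


width = footprint_m(3000, 116.0)  # about 25.9 m at 5 m altitude
print(round(effective_overlap(0.7, width, 0.64), 3))  # 0.651
print(round(required_overlap(0.7, width, 0.64), 3))   # 0.749
```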

Figure 9.

3DR Iris+ position displacement during hovering test using image processing.

Figure 10.

DJI Phantom 2 deviation during the hovering test using image processing.

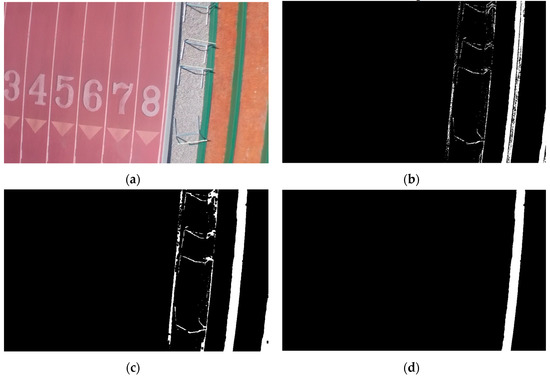

3.2. Rectilinear Motion Test

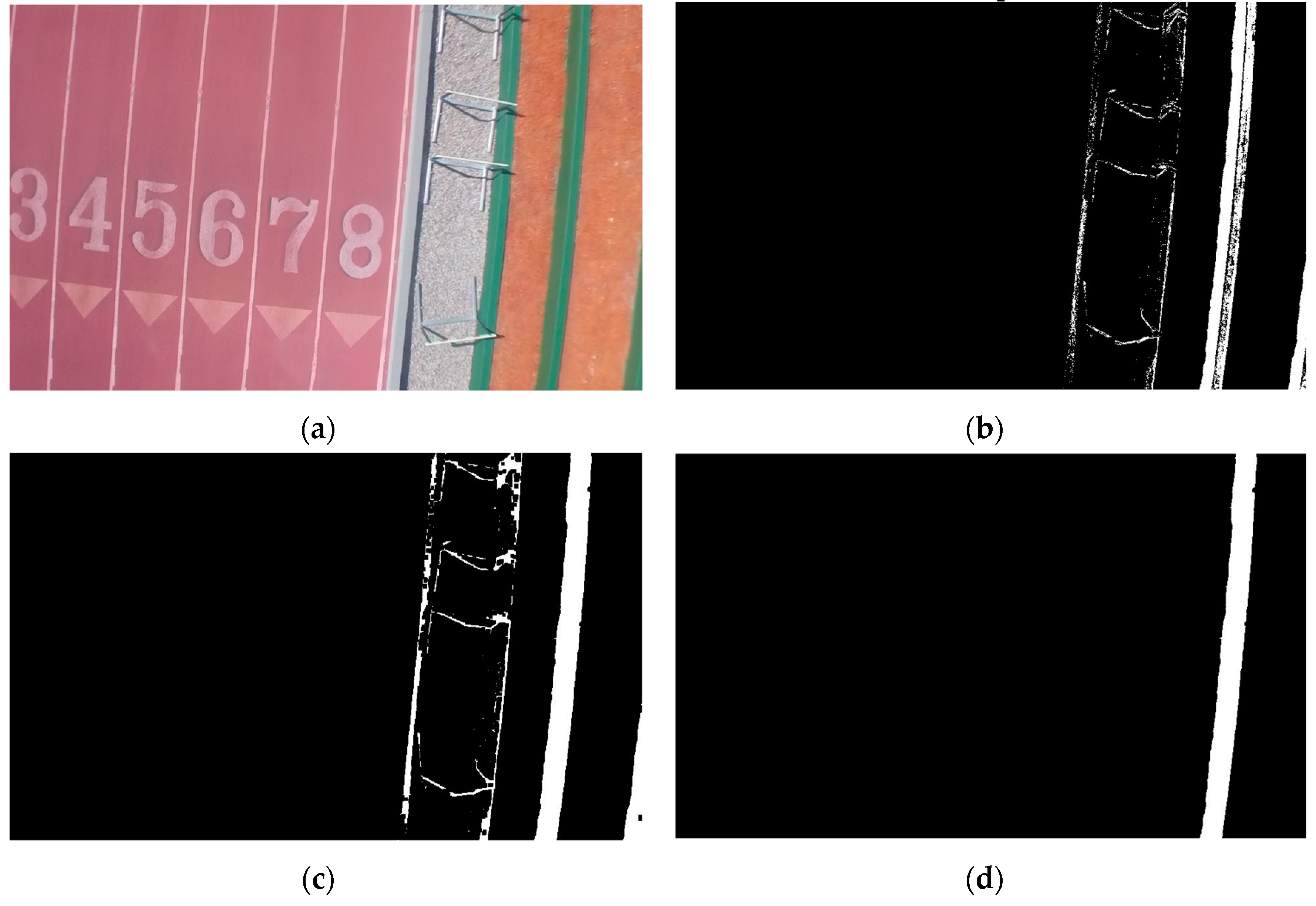

Figure 11 shows an example of the image processing used to evaluate the rectilinear motion test. The first step is to segment the feature used for evaluation, in this case the green bleacher. A color-based segmentation method was used because of the high color contrast between the bleacher and the rest of the image. After segmentation, a size filter was applied to remove salt-and-pepper noise, followed by an operation to fill holes and extract the largest object in the image, which was the bleacher. The position of the bleacher was then used to measure the deviation of the sUAV from the start position.
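The four steps (color segmentation, size filtering, hole filling, and extraction of the largest object) can be sketched in dependency-free Python as below. This is an illustrative reimplementation, not the authors' code: the "green" rule (G exceeding both R and B by a margin), the margin of 30, the minimum object size, and 4-connectivity are all assumptions, and the image is a nested list of (R, G, B) tuples.

```python
from collections import deque


def green_mask(rgb, margin=30):
    """Assumed colour rule: G must exceed both R and B by `margin`."""
    return [[1 if g - r >= margin and g - b >= margin else 0
             for (r, g, b) in row] for row in rgb]


def components(mask, value=1):
    """4-connected components of pixels equal to `value` (BFS labelling)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    comps = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] != value or seen[y][x]:
                continue
            comp, queue = [], deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                comp.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and mask[ny][nx] == value):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            comps.append(comp)
    return comps


def size_filter(mask, min_size):
    """Remove foreground objects smaller than `min_size` pixels."""
    out = [[0] * len(mask[0]) for _ in mask]
    for comp in components(mask):
        if len(comp) >= min_size:
            for y, x in comp:
                out[y][x] = 1
    return out


def fill_holes(mask):
    """Fill background regions that do not touch the image border."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    for comp in components(mask, value=0):
        if not any(y in (0, h - 1) or x in (0, w - 1) for y, x in comp):
            for y, x in comp:
                out[y][x] = 1
    return out


def largest_object(mask):
    """Keep only the largest foreground object."""
    out = [[0] * len(mask[0]) for _ in mask]
    comps = components(mask)
    if comps:
        for y, x in max(comps, key=len):
            out[y][x] = 1
    return out


def segment_feature(rgb, min_size=5):
    return largest_object(fill_holes(size_filter(green_mask(rgb), min_size)))
```

Applied to an image containing a hollow green rectangle and a few isolated green pixels, `segment_feature` returns a mask of the filled rectangle only.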

Figure 11.

Example image processing for the rectilinear motion evaluation. (a) Original image of running track; (b) Segmented image; (c) Filling hole operation; (d) Extraction of line.

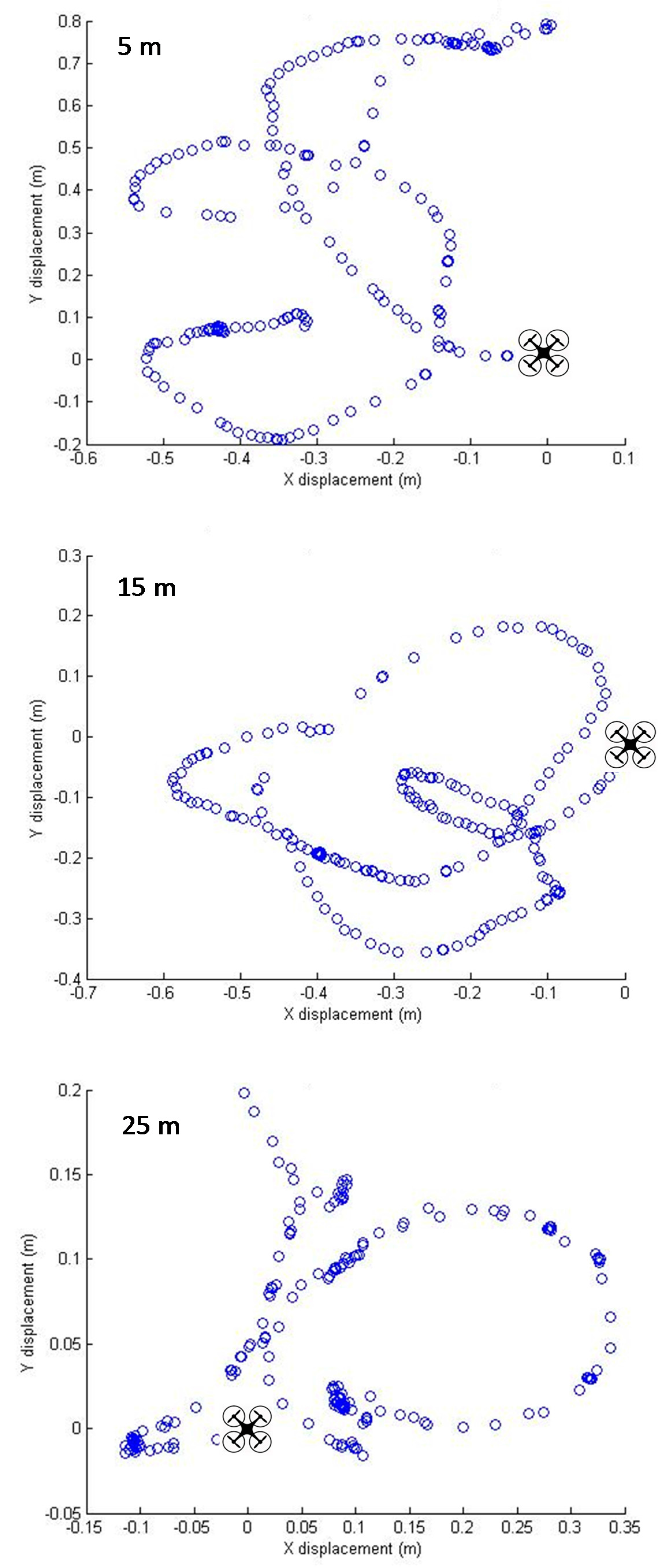

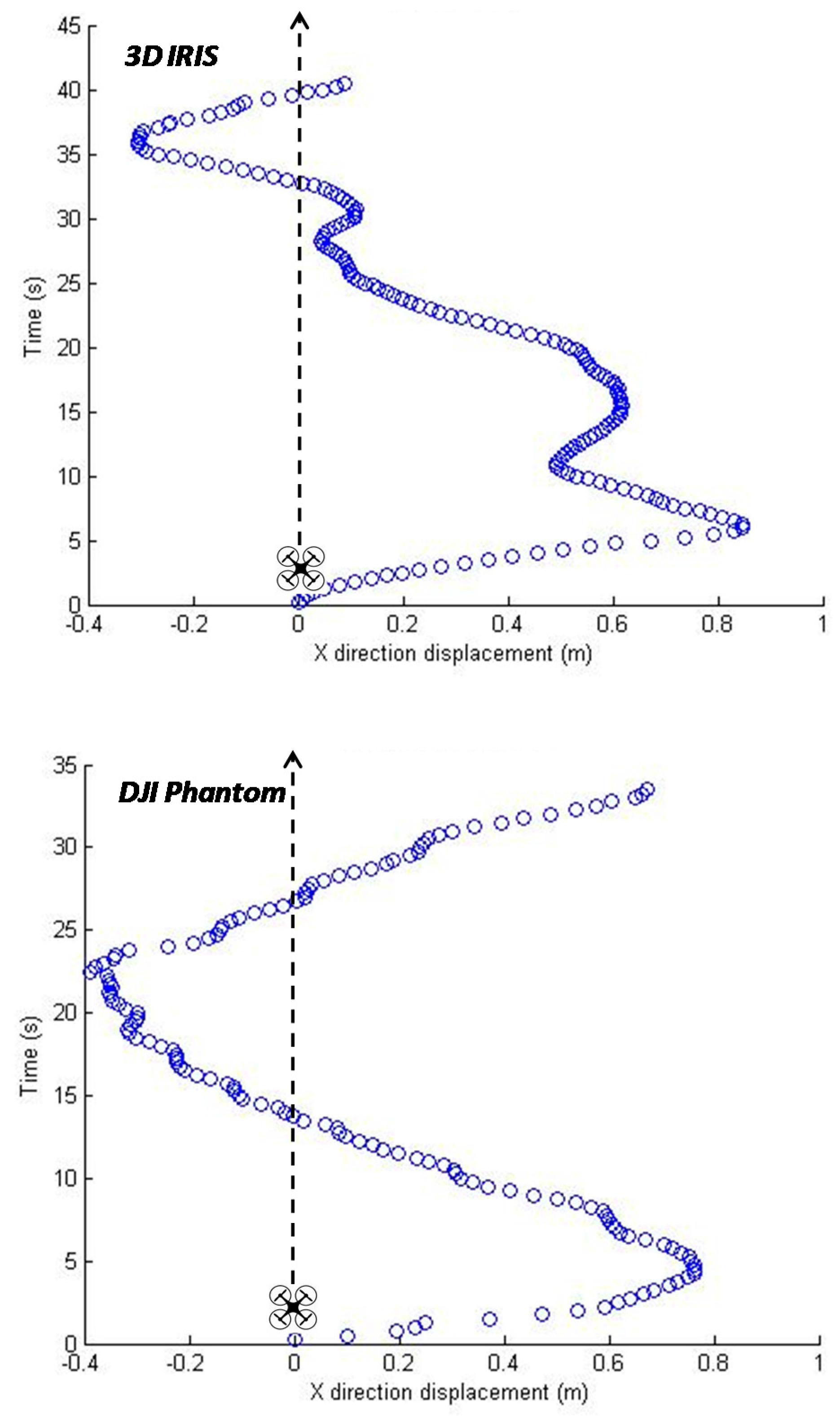

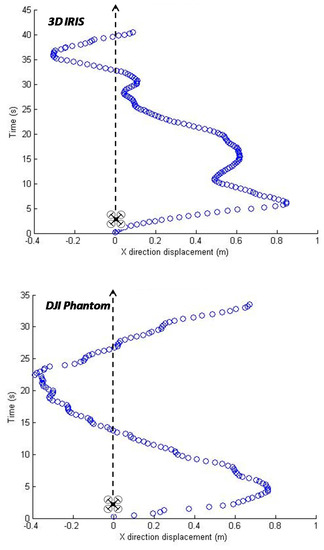

Figure 12 shows the path of each sUAV calculated using image processing. During the tests, the maximum position deviation from the center line was less than 1 m. Both sUAVs deviated from the center line and moved in a sinusoidal pattern, which is typical of a position control system correcting itself. The 3DR Iris+ had a maximum position displacement of 0.85 m (RMS displacement of 0.314 m), while the DJI Phantom 2 had a maximum displacement of 0.73 m (RMS displacement of 0.372 m). As in the hover test, this deviation is attributed to the hover accuracy of the GPS receiver and the PID gains of the controller. The segmentation of the image features also affected the calculated position deviation: because the sUAV was moving forward, the line features on the ground were captured at different positions in each image, and because images were acquired every 0.5 s, the overall image intensity varied from image to image, which affected segmentation performance. The mean position deviations of the two sUAVs were within the reported accuracy of their GPS receivers. These results reflect the current state of the art of off-the-shelf sUAV control systems and demonstrate their capability for surveying tasks in civilian applications.

Figure 12.

Estimated path of the sUAVs for the rectilinear motion test using image processing.

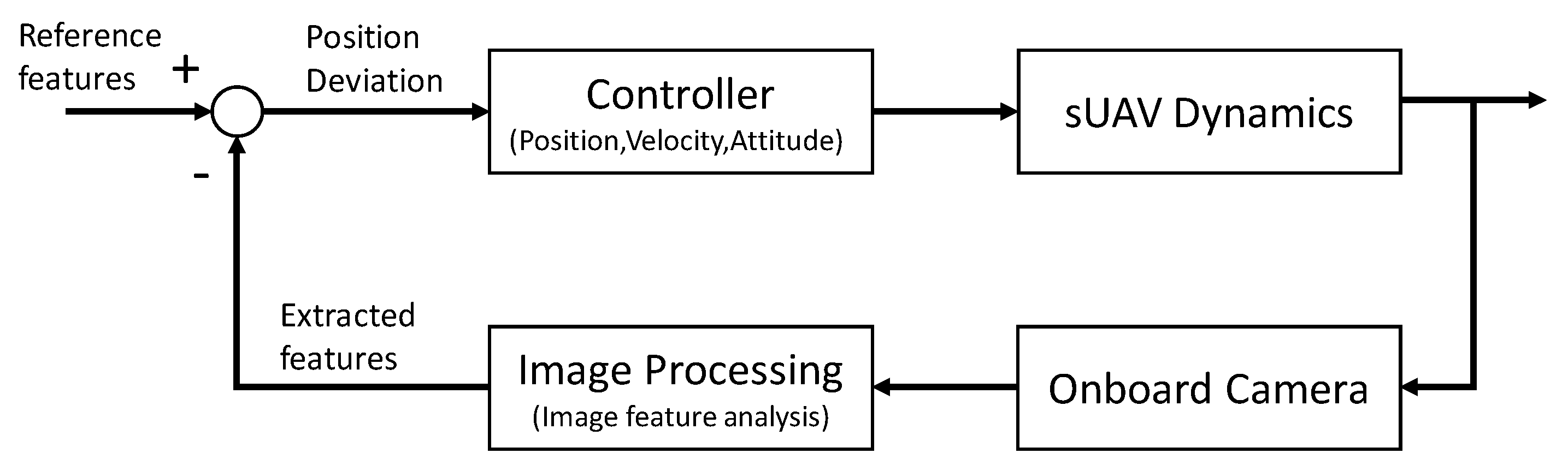

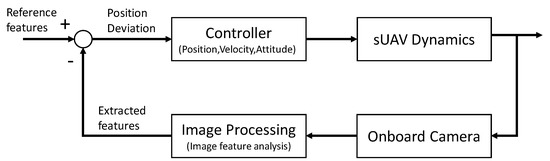

Although the image processing algorithm in this study was developed to evaluate and compare the basic performance of two popular off-the-shelf sUAVs, it could be extended to control the sUAV, similar to the “sense and avoid” algorithm developed by Carnie et al. [15]. In addition to the other sensors on the sUAV, a vision sensor could be used to estimate the sUAV attitude in combination with inertial measurements and GPS. In this case, features of the captured image would be used to estimate the relative position of the sUAV, which would then serve as feedback to regulate its position, for example while hovering or moving in a straight line (Figure 13). Future work will extend the developed image processing algorithm and use it as an additional sensor for the sUAV.
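The vision-feedback concept can be illustrated with a toy one-dimensional simulation, sketched below under loudly stated assumptions: the sUAV is modeled as a point mass whose acceleration is commanded directly, the wind is a constant acceleration, the hypothetical "vision sensor" reports the true offset quantized to whole pixels at 116 pixels/m, and the PID gains are made up rather than taken from either sUAV. It shows only that an image-derived position measurement can close a position-hold loop that rejects a steady wind.

```python
def simulate_hover(kp=2.0, ki=0.5, kd=2.0, wind_accel=0.2,
                   pixels_per_m=116.0, dt=0.05, steps=2000):
    """Toy 1-D position hold using a vision-derived position measurement.

    Returns the true position (metres from the set point) at every step.
    """
    position, velocity, integral = 0.5, 0.0, 0.0  # start 0.5 m off target
    previous_error = None
    history = []
    for _ in range(steps):
        # Hypothetical vision sensor: offset quantised to whole pixels.
        measured = round(position * pixels_per_m) / pixels_per_m
        error = 0.0 - measured  # the set point is 0 m
        integral += error * dt
        derivative = 0.0 if previous_error is None else (error - previous_error) / dt
        previous_error = error
        command = kp * error + ki * integral + kd * derivative
        # Semi-implicit Euler integration of a point mass pushed by wind.
        velocity += (command + wind_accel) * dt
        position += velocity * dt
        history.append(position)
    return history


path = simulate_hover()
print(f"final offset: {path[-1]:.3f} m")
```

The integral term is what cancels the steady wind; with only proportional and derivative terms, the simulated sUAV would settle at a constant offset downwind of the set point.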

Figure 13.

Concept of vision system as a feedback sensor for controlling the sUAV.

4. Conclusions

Two popular sUAVs on the market, the 3DR Iris+ and the DJI Phantom 2, were compared and evaluated using image processing in a hovering test and a rectilinear motion test. In the hovering test, the sUAV took images of an object, and the position displacement of the object in the images was used to evaluate the stability of the sUAV. The rectilinear motion test evaluated the performance of the sUAVs as they followed a straight-line path. Image processing algorithms were developed to evaluate both tests. In the hovering test, the 3DR Iris+ had a maximum position deviation of 0.64 m (RMS displacement of 0.126 m), while the DJI Phantom 2 had a maximum deviation of 0.79 m (RMS displacement of 0.15 m). In the rectilinear motion test, the maximum displacements for the 3DR Iris+ and the DJI Phantom 2 were 0.85 m (RMS displacement of 0.314 m) and 0.73 m (RMS displacement of 0.372 m), respectively. These results show that both sUAVs are capable of surveying applications such as agricultural field monitoring.

Acknowledgments

This research was supported by the Idaho State Department of Agriculture (Idaho Specialty Crop Block Grant 2014) and Northwest Nazarene University.

Author Contributions

Duke M. Bulanon conceived the study, conducted the literature review, designed the experiment, processed the data, interpreted the results, and wrote the paper. Esteban Cano, Ryan Horton, and Chase Liljegren acquired the images, developed the image processing algorithm, and processed the data.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| DIY | Do it yourself |
| GIS | Geographical Information System |
| GPS | Global Positioning System |
| NGB | Near-infrared, Green, Blue |
| NNU | Northwest Nazarene University |
| PVC | Polyvinyl Chloride |
| RMS | Root Mean Square |
| RGB | Red, Green, Blue |
| sUAV | Small Unmanned Aerial Vehicle |
| UAV | Unmanned Aerial Vehicle |
| VI | Vegetation Index |

References

- Federal Aviation Administration. Summary of Small Unmanned Aircraft Rule. 2016. Available online: https://www.faa.gov/uas/media/Part_107_Summary.pdf (accessed on 6 January 2017).

- Robert, P.C. Precision agriculture: A challenge for crop nutrition management. Plant Soil 2002, 247, 143–149.

- Lee, W.S.; Alchanatis, V.; Yang, C.; Hirafuji, M.; Moshou, D.; Li, C. Sensing technologies for precision specialty crop production. Comput. Electron. Agric. 2010, 74, 2–33.

- Koch, B.; Khosla, R. The role of precision agriculture in cropping systems. J. Crop Prod. 2003, 9, 361–381.

- Seelan, S.K.; Laguette, S.; Casady, G.M.; Seielstad, G.A. Remote sensing applications for precision agriculture: A learning community approach. Remote Sens. Environ. 2003, 88, 157–169.

- Sugiura, R.; Noguchi, N.; Ishii, K. Remote-sensing technology for vegetation monitoring using an unmanned helicopter. Biosyst. Eng. 2005, 90, 369–379.

- Albergel, C.; De Rosnay, P.; Gruhier, C.; Munoz-Sabater, J.; Hasenauer, S.; Isaken, L.; Kerr, Y.; Wagner, W. Evaluation of remotely sensed and modelled soil moisture products using global ground-based in situ observations. Remote Sens. Environ. 2012, 118, 215–226.

- Lan, Y.; Thomson, S.J.; Huang, Y.; Hoffmann, W.C.; Zhang, H. Current status and future directions of precision aerial application for site-specific crop management in the USA. Comput. Electron. Agric. 2010, 74, 34–38.

- Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, W.S.; Rasmussen, J.; Ehsani, R. Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 2013, 91, 106–115.

- Pajares, G. Overview and current status of remote sensing applications based on Unmanned Aerial Vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–329.

- Thomasson, J.A.; Valasek, J. Small UAS in agricultural remote-sensing research at Texas A&M. ASABE Resour. Mag. 2016, 23, 19–21.

- Bulanon, D.M.; Lonai, J.; Skovgard, H.; Fallahi, E. Evaluation of different irrigation methods for an apple orchard using an aerial imaging system. ISPRS Int. J. Geo-Inf. 2016, 5, 79.

- Chao, H.Y.; Cao, Y.C.; Chen, Y.Q. Autopilots for small unmanned aerial vehicles: A survey. Int. J. Control Autom. Syst. 2010, 8, 36–44.

- Ettinger, S.; Nechyba, M.; Ifju, P.; Waszak, M. Vision-guided flight stability and control for microair vehicles. Adv. Robot. 2003, 17, 617–640.

- Carnie, R.; Walker, R.; Corke, P. Image Processing Algorithms for UAV “Sense and Avoid”. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 2828–2853.

- Classification of the Unmanned Aerial Systems. Available online: https://www.e-education.psu.edu/geog892/node/5 (accessed on 29 June 2016).

- Federal Aviation Administration. Available online: https://registermyuas.faa.gov (accessed on 29 June 2016).

- 3D Robotics (3DR). Available online: https://store.3dr.com/products/IRIS+ (accessed on 29 June 2016).

- Da-Jiang Innovations (DJI). Available online: http://www.dji.com/product/phantom-2 (accessed on 29 June 2016).

- Cetinkunt, S. Mechatronics with Experiments, 2nd ed.; Wiley & Sons Ltd.: West Sussex, UK, 2015.

- Pajares, G.; García-Santillán, I.; Campos, Y.; Montalvo, M.; Guerrero, J.M.; Emmi, L.; Romeo, J.; Guijarro, M.; Gonzalez-de-Santos, P. Machine-vision systems selection for agricultural vehicles: A guide. J. Imaging 2016, 2, 34.

- Stadler, W. Analytical Robotics and Mechatronics; McGraw-Hill: New York, NY, USA, 1995.

- Xiang, H.; Tian, L. Development of a low-cost agricultural remote sensing system based on an autonomous unmanned aerial vehicle (UAV). Biosyst. Eng. 2011, 108, 174–190.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Pearson: New York, NY, USA, 2007.

- Hunt, E.R., Jr.; Hively, W.D.; Fujikawa, S.J.; Linden, D.S.; Daughtry, C.S.T.; McCarty, G.W. Acquisition of NIR-Green-Blue digital photographs from unmanned aircraft for crop monitoring. Remote Sens. 2010, 2, 290–305.

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).