Research Progress on Color Image Quality Assessment

Abstract

1. Introduction

2. Methodology

2.1. Search Databases and Time Range

2.2. Search Keywords and Logical Formulas

2.3. Inclusion Criteria

- (i) Focus on color image quality;

- (ii) Include subjective quality experiments or objective metrics related to color perception;

- (iii) Primarily peer-reviewed journals or mainstream conference papers, supplemented by high-impact preprints where necessary;

- (iv) Cover full-reference (FR), reduced-reference (RR), and no-reference (NR) methods, with a focus on their application and evaluation in color image scenarios.

2.4. Screening and Duplicate Removal Process

2.5. Data Extraction and Quality Control

- (i) Method category and whether explicit color or cross-channel modeling was included;

- (ii) Training/test datasets used and subjective experimental design;

- (iii) Evaluation metrics and statistical methods employed.

2.6. Number of Publications and Statistical Results

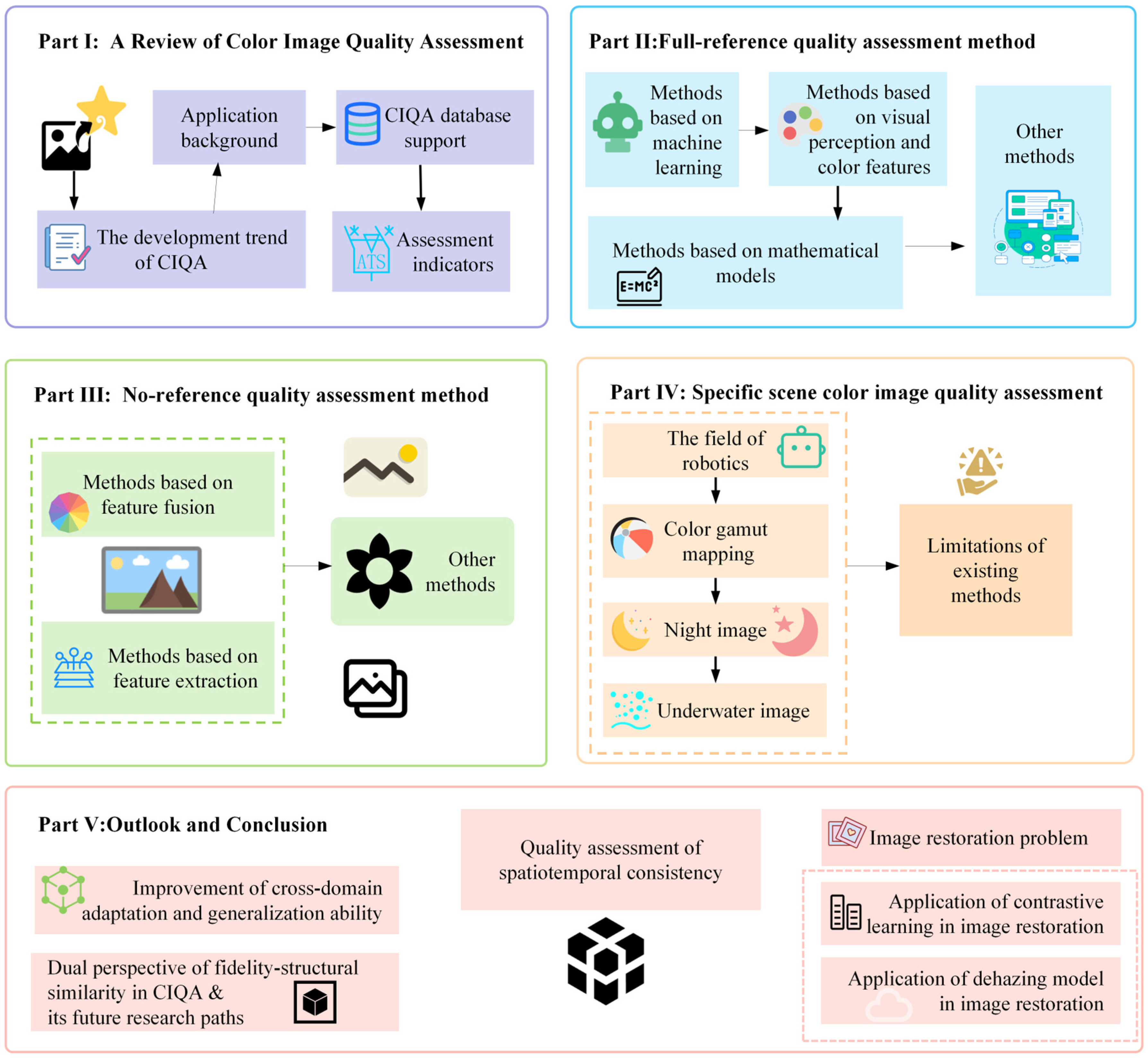

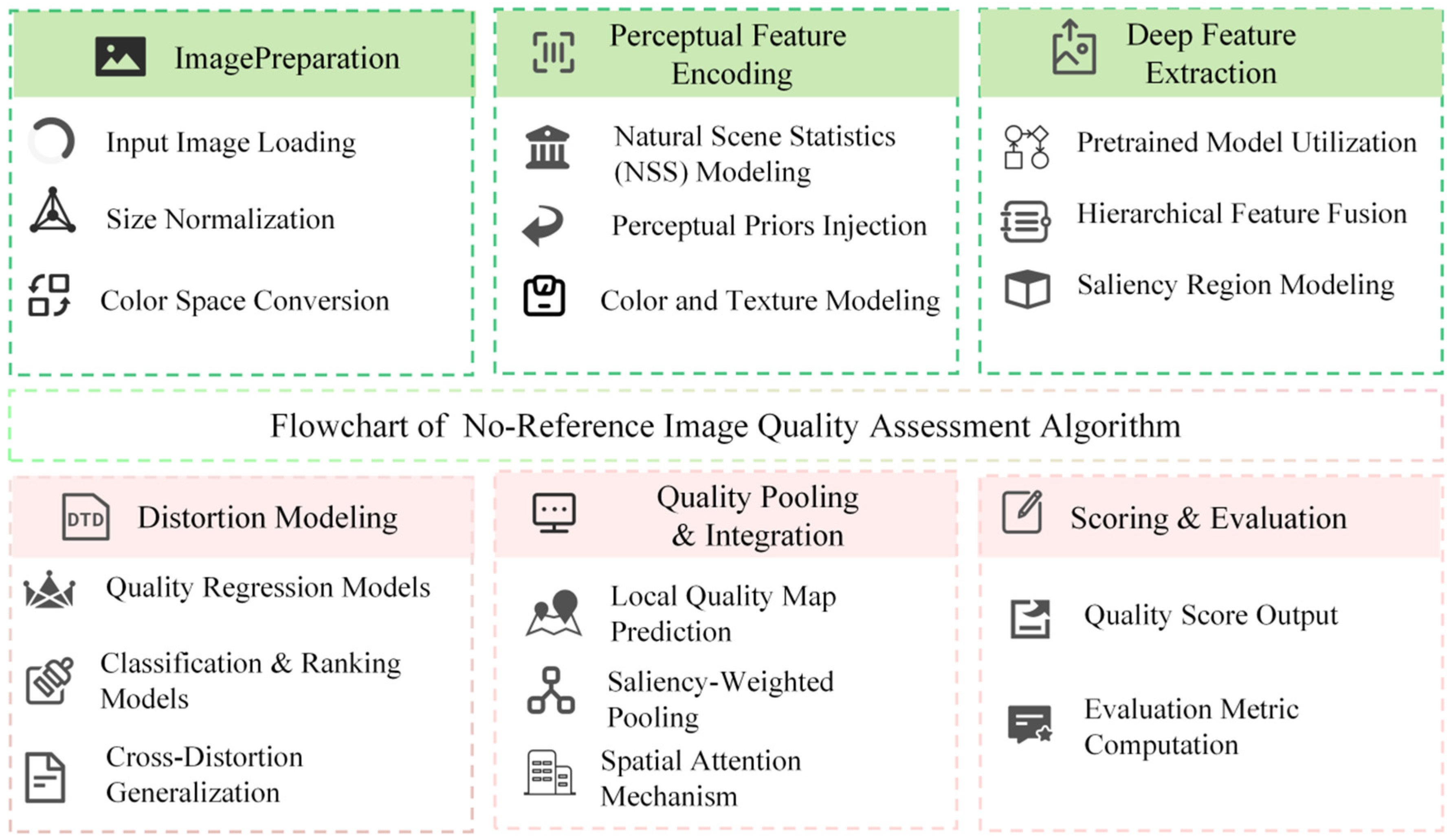

2.7. Research Framework

3. A Review of Color Image Quality Assessment

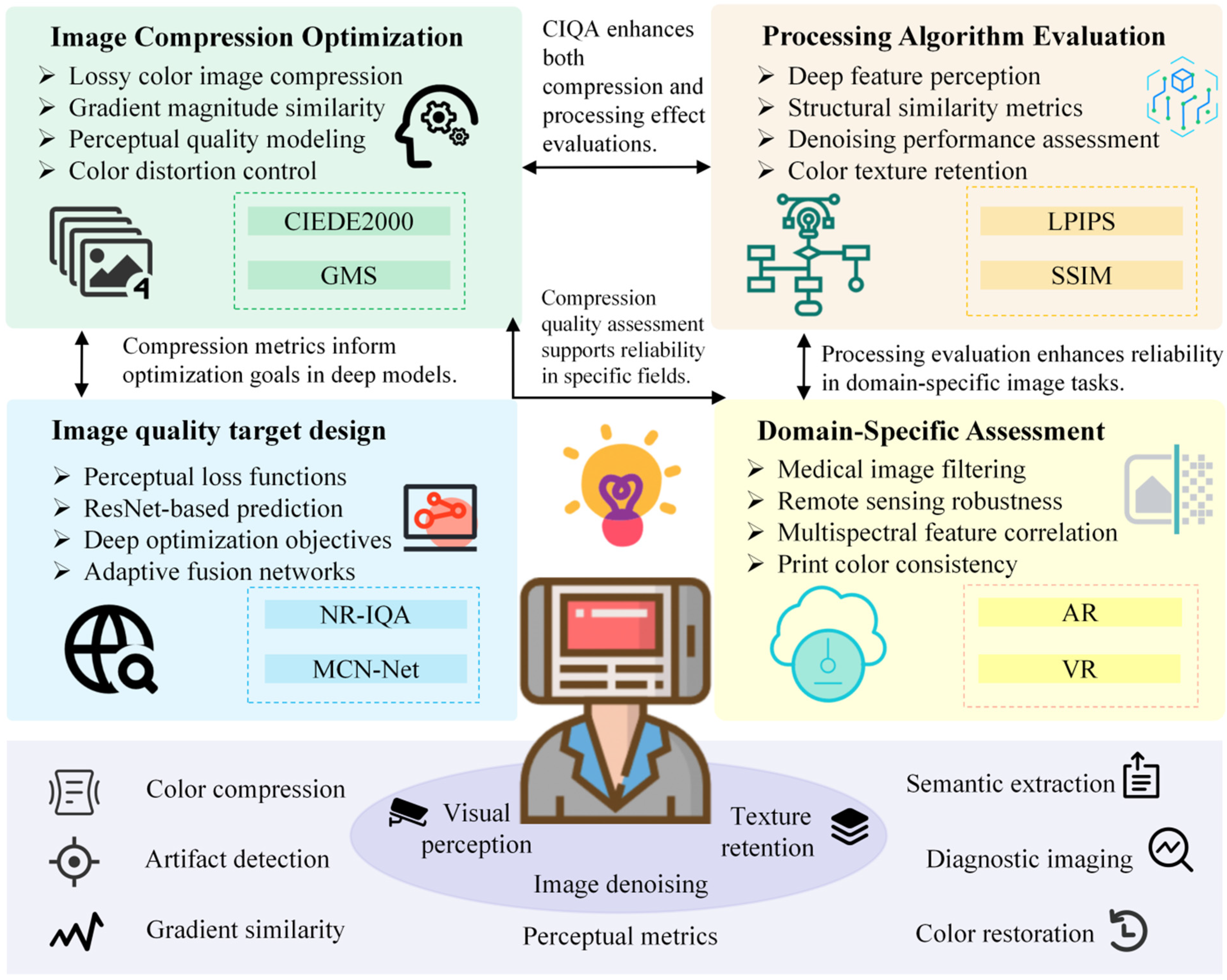

3.1. Current Application of Color Image Quality Assessment

- (i) Used for image compression and coding optimization

- (ii) Performance assessment of image processing and enhancement algorithms

- (iii) Design of image quality optimization targets

- (iv) Quality assessment for specific fields

3.2. Color Image Database

- (i) Quality Assessment of Compressed SCIs (QACS)

- (ii) Contrast-Changed Image Database (CCID2014)

- (iii) Contrast enhancement assessment database (CEED2016)

- (iv) 4K Resolution Enhancement Artifact Database

- (v) Underwater Image Quality Database (UIQD)

3.3. Assessment Metrics

3.3.1. Classification of Metrics

- (i) Correlation-based metrics, which measure the statistical association between objective prediction scores and subjective scores. Common metrics include the Pearson linear correlation coefficient r (PLCC), the Spearman rank-order correlation coefficient ρ (SROCC), and Kendall’s tau (τ). PLCC primarily assesses linear consistency, while SROCC and Kendall’s tau measure the strength and direction of monotonic relationships from ranks, making them well suited to ordinal or non-continuous variables. SROCC evaluates the consistency of relative rankings, whereas Kendall’s tau assesses agreement in pairwise orderings, so the two provide complementary views of monotonic association.

- (ii) Error-based metrics, which quantify the numerical deviation between the predicted scores and the subjective ground-truth scores. Common metrics include mean absolute error (MAE) and root mean square error (RMSE); their values depend on the rating scale of each database, so they are not directly comparable across databases.
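The five metrics named above can be sketched in a few lines of standard-library Python. This is an illustrative sketch, not the evaluation code of any reviewed method: the function names and the sample scores are hypothetical, Kendall's tau is computed in its simplest tau-a form (no tie correction), and the nonlinear (logistic) mapping that is often fitted before PLCC and RMSE are reported is omitted.

```python
import math


def plcc(x, y):
    """Pearson linear correlation coefficient between two score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def average_ranks(values):
    """1-based ranks; tied values receive the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def srocc(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(average_ranks(x), average_ranks(y))


def kendall_tau_a(x, y):
    """Kendall's tau-a: (concordant - discordant) / number of pairs."""
    n = len(x)
    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            s = (x[i] - x[j]) * (y[i] - y[j])
            if s > 0:
                concordant += 1
            elif s < 0:
                discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)


def mae(pred, subj):
    """Mean absolute error between predicted and subjective scores."""
    return sum(abs(p - s) for p, s in zip(pred, subj)) / len(pred)


def rmse(pred, subj):
    """Root mean square error between predicted and subjective scores."""
    return math.sqrt(sum((p - s) ** 2 for p, s in zip(pred, subj)) / len(pred))


# Hypothetical objective predictions and subjective scores for five images.
predicted = [0.2, 0.4, 0.5, 0.7, 0.9]
subjective = [1.0, 2.5, 2.0, 4.0, 4.5]
print(plcc(predicted, subjective))
print(srocc(predicted, subjective))
print(kendall_tau_a(predicted, subjective))
```

Because the hypothetical predictions and subjective scores are on different scales, MAE and RMSE would only be meaningful here after the predictions are mapped onto the subjective scale, whereas the three correlation coefficients can be computed directly.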

3.3.2. Experimental Comparison Across Databases

3.3.3. Metric Analysis and Advantages and Disadvantages

- (i) PLCC

- (ii) SROCC

- (iii) Kendall’s τ

- (iv) MAE

- (v) RMSE

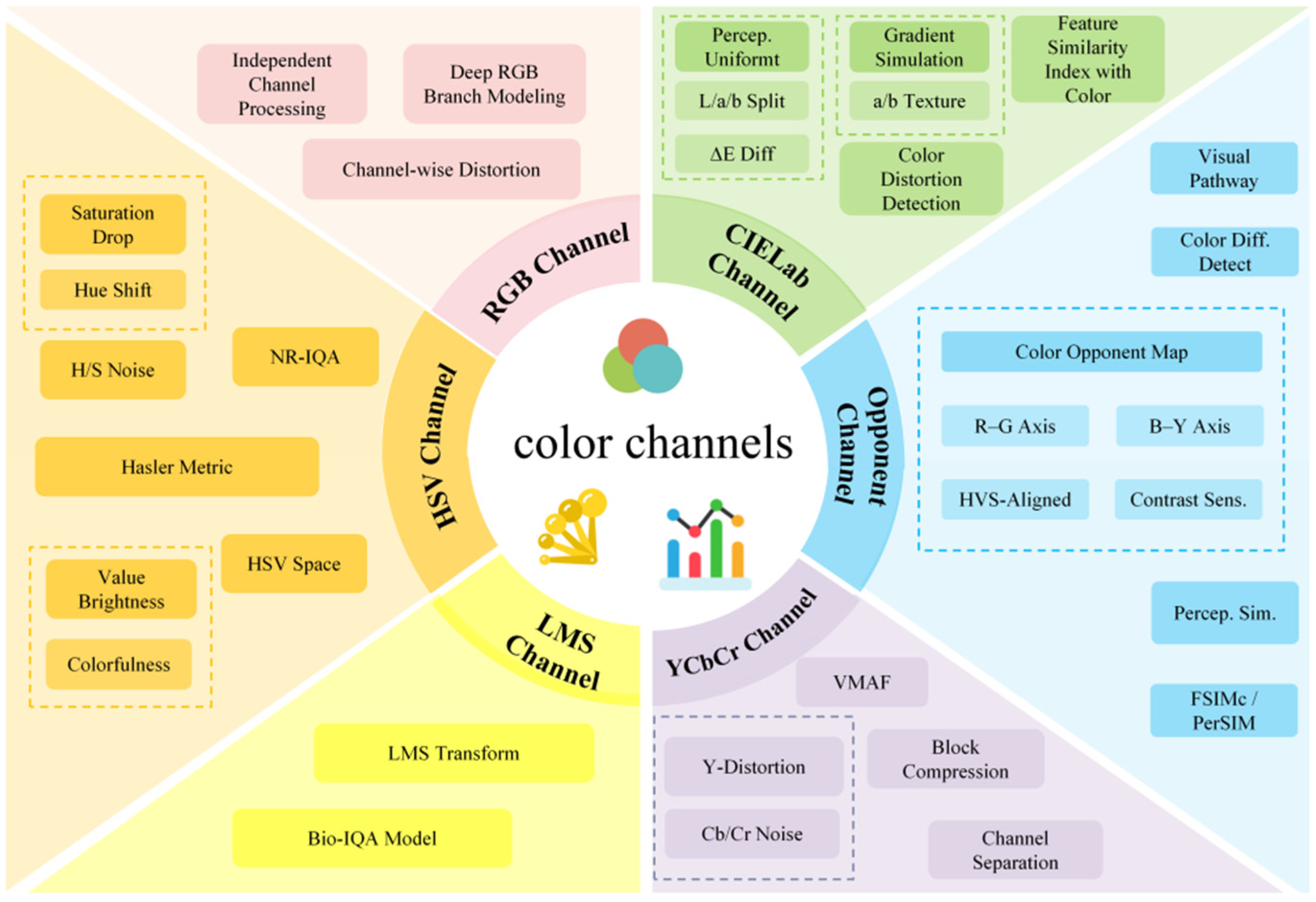

4. Overview of Color Image Quality Assessment Algorithms

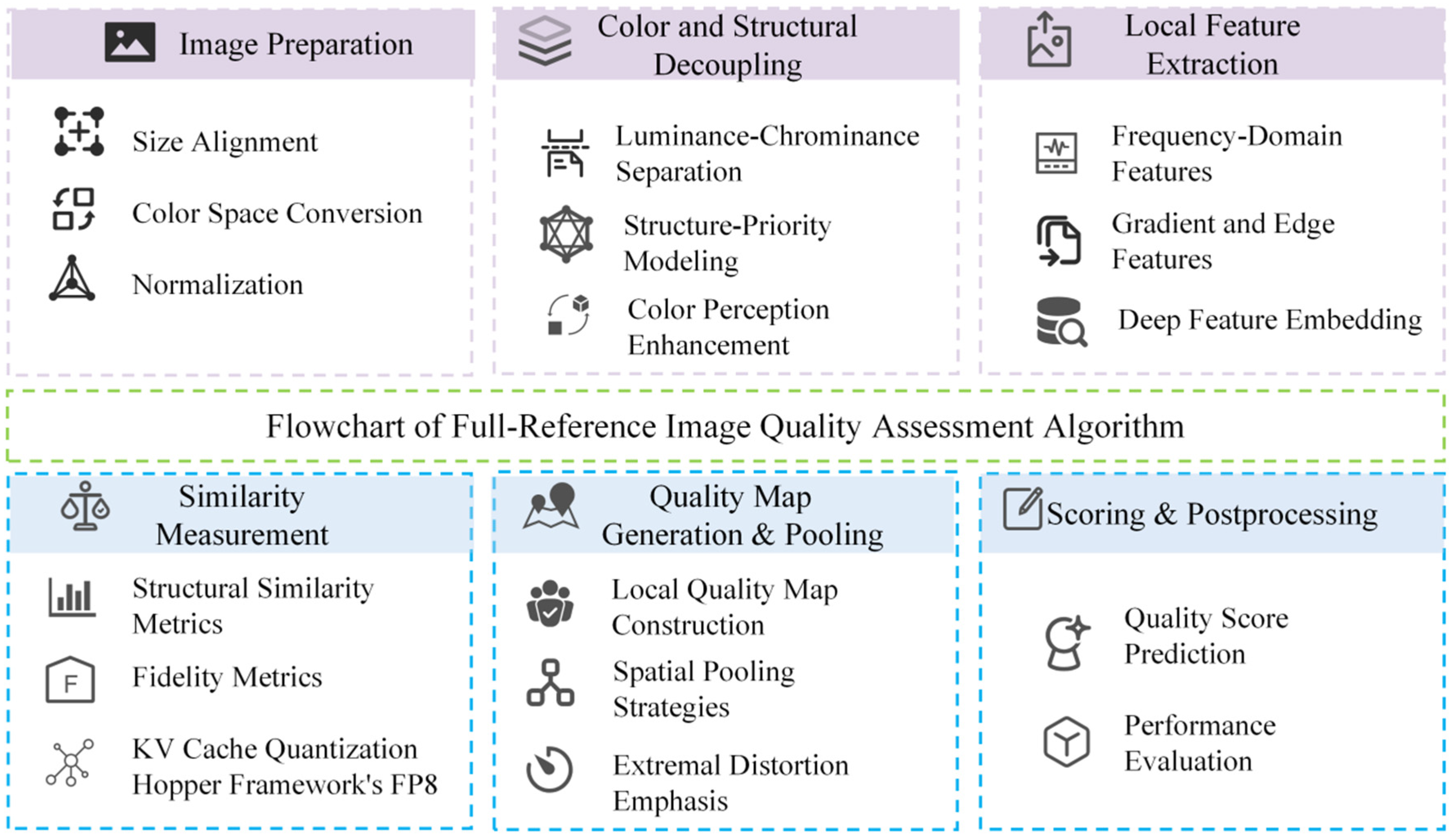

4.1. Full-Reference Quality Assessment Method

4.1.1. Machine Learning-Based Methods

4.1.2. Methods Based on Color Visual Quality and Color Characteristics

4.1.3. Methods Based on Mathematical Models

4.1.4. Other Methods

4.2. Reduced-Reference Quality Assessment Method

4.3. No-Reference Quality Assessment Method

4.3.1. Methods Based on Feature Fusion

4.3.2. Methods Based on Feature Extraction

4.3.3. Other Methods

4.4. Specific Scene Color Image Quality Assessment

5. Discussion

5.1. Limitations of Existing CIQA Methods

- (i) Limitations of machine learning-based methods

- (ii) Limitations of methods based on color visual quality and color characteristics

- (iii) Limitations of mathematical model-based methods

- (iv) Limitations of feature fusion-based methods

- (v) Limitations of feature extraction-based methods

5.2. Future Research Directions

5.2.1. Improvement of Cross-Domain Adaptation and Generalization Ability

5.2.2. A Dual Perspective on Fidelity and Structural Similarity

5.2.3. Quality Assessment of Spatiotemporal Consistency

5.2.4. Image Restoration Problem

- (i) Application of Contrastive Learning in Image Restoration

- (ii) Application of Dehazing Model in Image Restoration

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wang, J.; Wang, S.; Zhang, Y. Deep learning on medical image analysis. CAAI Trans. Intell. Technol. 2025, 10, 1–35. [Google Scholar] [CrossRef]

- Tian, W.Z.; Sanchez-Azofeifa, A.; Kan, Z.; Zhao, Q.Z.; Zhang, G.S.; Wu, Y.Z.; Jiang, K. NR-IQA for UAV hyperspectral image based on distortion constructing, feature screening, and machine learning. Int. J. Appl. Earth Obs. Geoinf. 2024, 133, 104130–104144. [Google Scholar] [CrossRef]

- Ha, C. No-Reference Image Quality Assessment with Moving Spectrum and Laplacian Filter for Autonomous Driving Environment. Vehicles 2025, 7, 8. [Google Scholar] [CrossRef]

- Zheng, L.C.; Wang, X.M.; Li, F.; Mao, Z.B.; Tian, Z.; Peng, Y.H.; Yuan, F.J.; Yuan, C.H. A Mean-Field-Game-Integrated MPC-QP Framework for Collision-Free Multi-Vehicle Control. Drones 2025, 9, 375. [Google Scholar] [CrossRef]

- Poreddy, A.K.R.; Appina, B.; Kokil, P. FFVRIQE: A Feature Fused Omnidirectional Virtual Reality Image Quality Estimator. IEEE Trans. Instrum. Meas. 2024, 73, 2522811–2822822. [Google Scholar] [CrossRef]

- Chen, Z.K.; He, Z.Y.; Luo, T.; Jin, C.C.; Song, Y. Luminance decomposition and Transformer based no-reference tone-mapped image quality assessment. Displays 2024, 85, 102881–102894. [Google Scholar] [CrossRef]

- Du, B.; Xu, H.; Chen, Q. No-reference underwater image quality assessment based on Multi-Scale and mutual information analysis. Displays 2025, 86, 102900–102913. [Google Scholar] [CrossRef]

- Chandler, D.M. Seven challenges in image quality assessment: Past, present, and future research. Int. Sch. Res. Not. 2013, 2013, 1–53. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef]

- Ahmed, M.N.; Yamany, S.M.; Mohamed, N.; Farag, A.A.; Moriarty, T. A modified fuzzy C-means algorithm for bias field estimation and segmentation of MRI data. IEEE Trans. Med. Imaging 2002, 21, 193–199. [Google Scholar] [CrossRef]

- Chandler, D.M.; Hemami, S.S. VSNR: A Wavelet-Based Visual Signal- to- Noise Ratio for Natural Images. IEEE Trans. Image Process. 2007, 16, 2284–2298. [Google Scholar] [CrossRef] [PubMed]

- Zhang, L.; Zhang, L.; Mou, X.Q.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386. [Google Scholar] [CrossRef]

- Damera-Venkata, N.; Kite, T.D.; Geisler, W.S.; Evans, B.L.; Bovik, A.C. Image quality assessment based on a degradation model. IEEE Trans. Image Process. 2000, 9, 636–650. [Google Scholar] [CrossRef]

- Yeganeh, H.; Wang, Z. Objective quality assessment of tone-mapped images. IEEE Trans. Image Process. 2012, 22, 657–667. [Google Scholar] [CrossRef]

- Zhang, L.; Shen, Y.; Li, H. VSI: A visual saliency-induced index for perceptual image quality assessment. IEEE Trans. Image Process. 2014, 23, 4270–4281. [Google Scholar] [CrossRef]

- Temel, D.; AlRegib, G. BLeSS: Bio-inspired low-level spatiochromatic similarity assisted image quality assessment. In Proceedings of the 2016 IEEE International Conference on Multimedia and Expo (ICME), Seattle, WA, USA, 11–15 July 2016; IEEE: New York, NY, USA, 2016; pp. 1–6. [Google Scholar]

- Kang, L.; Ye, P.; Li, Y.; Doermann, D. Convolutional neural networks for no-reference image quality assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1733–1740. [Google Scholar]

- Bosse, S.; Maniry, D.; Müller, K.-R.; Wiegand, T.; Samek, W. Deep neural networks for no-reference and full-reference image quality assessment. IEEE Trans. Image Process. 2017, 27, 206–219. [Google Scholar] [CrossRef]

- Ding, K.; Ma, K.D.; Wang, S.Q.; Simoncelli, E.P. Image quality assessment: Unifying structure and texture similarity. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2567–2581. [Google Scholar] [CrossRef]

- Ke, J.J.; Wang, Q.F.; Wang, Y.L.; Milanfar, P.; Yang, F. Musiq: Multi-scale image quality transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 5148–5157. [Google Scholar]

- Gu, S.Y.; Bao, J.M.; Chen, D.; Wen, F. Giqa: Generated image quality assessment. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 369–385. [Google Scholar]

- Wang, J.; Chan, K.C.K.; Loy, C.C. Exploring clip for assessing the look and feel of images. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2555–2563. [Google Scholar]

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774. [Google Scholar] [CrossRef]

- Li, J.N.; Li, D.X.; Savarese, S.; Hoi, S.C.H. Blip-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; PMLR: New York, NY, USA, 2023; pp. 19730–19742. [Google Scholar]

- Cappello, F.; Acosta, M.; Agullo, E.; Anzt, H.; Calhoun, J.; Di, S.; Giraud, L.; Grützmacher, T.; Jin, S.; Sano, K.; et al. Multifacets of lossy compression for scientific data in the Joint-Laboratory of Extreme Scale Computing. Future Gener. Comput. Syst. 2025, 163, 107323–107350. [Google Scholar] [CrossRef]

- Ma, L.; Li, S.; Ngan, K.N. Reduced-Reference Video Quality Assessment of Compressed Video Sequences. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1441–1456. [Google Scholar] [CrossRef]

- Xue, W.F.; Zhang, L.; Mou, X.Q.; Bovik, A.C. Gradient Magnitude Similarity Deviation: A Highly Efficient Perceptual Image Quality Index. IEEE Trans. Image Process. 2014, 23, 684–695. [Google Scholar] [CrossRef]

- Kuo, T.Y.; Wei, Y.J.; Wan, K.H. Color image quality assessment based on VIF. In Proceedings of the 2019 3rd International Conference on Imaging, Signal Processing and Communication (ICISPC), Singapore, 27–29 July 2019; IEEE: New York, NY, USA, 2019; pp. 96–100. [Google Scholar]

- Islam, M.T.; Samantaray, A.K.; Gorre, P.; Jatoth, D.N.; Kumar, S.; Al-Shidaifat, A.; Song, H.J. FPGA Implementation of an Effective Image Enhancement Algorithm Based on a Novel Daubechies Wavelet Filter Bank. Circuits Syst. Signal Process. 2025, 44, 365–386. [Google Scholar] [CrossRef]

- Jiang, B.; Li, J.X.; Lu, Y.; Cai, Q.; Song, H.B.; Lu, G.M. Eficient image denoising using deep learning: A brief survey. Inf. Fusion. 2025, 118, 103013–103031. [Google Scholar] [CrossRef]

- Liu, D.H.; Zhong, L.; Wu, H.Y.; Li, S.Y.; Li, Y.D. Remote sensing image Super-resolution reconstruction by fusing multi-scale receptive fields and hybrid transformer. Sci. Rep. 2025, 15, 2140–2154. [Google Scholar] [CrossRef]

- Li, Y.W.; An, H.; Zhang, T.; Chen, X.X.; Jiang, B.; Pan, J.S. Omni-Deblurring: Capturing Omni-Range Context for Image Deblurring. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 7543–7553. [Google Scholar] [CrossRef]

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thrity-Seventh Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 9–12 November 2003; IEEE: New York, NY, USA, 2003; pp. 1398–1402. [Google Scholar]

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595. [Google Scholar]

- Tian, X.; Tang, X. Effect of color palette and text encoding on infrared thermal imaging perception with various screen resolution accuracy. Displays 2025, 87, 102939–102952. [Google Scholar] [CrossRef]

- Shang, W.Q.; Liu, G.J.; Wang, Y.Z.; Wang, J.J.; Ma, Y.M. A non-convex low-rank image decomposition model via unsupervised network. Signal Process. 2024, 223, 109572–109588. [Google Scholar] [CrossRef]

- Pan, Z.Q.; Yuan, F.; Wang, X.; Xu, L.; Shao, X.; Kwong, S. No-reference image quality assessment via multibranch convolutional neural networks. IEEE Trans. Artif. Intell. 2022, 4, 148–160. [Google Scholar] [CrossRef]

- Ma, J.; He, Y.T.; Li, F.F.; Han, L.; You, C.Y.; Wang, B. Segment anything in medical images. Nat. Commun. 2024, 15, 654–663. [Google Scholar] [CrossRef] [PubMed]

- Yue, H.G.; Qing, L.B.; Zhang, Z.X.; Wang, Z.Y.; Guo, L.; Peng, Y.H. MSE-Net: A novel master–slave encoding network for remote sensing scene classification. Eng. Appl. Artif. Intell. 2024, 132, 107909–107925. [Google Scholar] [CrossRef]

- Wang, Y.Z.; Liu, G.J.; Yang, L.X.; Liu, J.M.; Wei, L.L. An Attention-Based Feature Processing Method for Cross-Domain Hyperspectral Image Classification. Signal Process. Lett. IEEE 2025, 32, 196–200. [Google Scholar] [CrossRef]

- Niaz, A.; Iqbal, E.; Memon, A.A.; Munir, A.; Kim, J.; Choi, K.N. Edge-based local and global energy active contour model driven by signed pressure force for image segmentation. IEEE Trans. Instrum. Meas. 2023, 72, 1–14. [Google Scholar] [CrossRef]

- Sheikh, H.R.; Wang, Z.; Bovik, A.C. Image and Video Quality Assessment Research at LIVE. 2020. Available online: http://live.ece.utexas.edu/research/quality (accessed on 10 September 2024).

- Larson, E.C.; Chandler, D.M. Categorical Subjective Image Quality Database. 2020. Available online: https://qualinet.github.io/databases/databases/ (accessed on 10 September 2024).

- Ponomarenko, N.; Jin, L.; Ieremeiev, O.; Lukin, V.; Egiazarian, K.; Astola, J.; Vozel, B.; Chehdi, K.; Carli, M.; Battisti, F.; et al. Image data⁃base TID2013: Peculiarities, results and perspectives. Signal Process. Image Commun. 2015, 30, 57–77. [Google Scholar] [CrossRef]

- Lin, H.; Hosu, V.; Saupe, D. KADID-10k: A large-scale artificially distorted IQA database. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; IEEE: New York, NY, USA, 2019; pp. 1–3. [Google Scholar]

- Hosu, V.; Lin, H.; Sziranyi, T.; Saupe, D. KonIQ-10k: An Ecologically Valid Database for Deep Learning of Blind Image Quality Assessment. IEEE Trans. Image Process. 2020, 29, 4041–4056. [Google Scholar] [CrossRef]

- Shi, S.; Zhang, X.; Wang, S.Q.; Xiong, R.Q.; Ma, S.W. Study on subjective quality assessment of Screen Content Images. In Proceedings of the Picture Coding Symposium, Cairns, Australia, 31 May–3 June 2015; IEEE: New York, NY, USA, 2015; pp. 75–79. [Google Scholar]

- Gu, K.; Zhai, G.T.; Lin, W.S.; Liu, M. The Analysis of Image Contrast: From Quality Assessment to Automatic Enhancement. IEEE Trans. Cybern. 2016, 46, 284–297. [Google Scholar] [CrossRef] [PubMed]

- Qureshi, M.A.; Beghdadi, A.; Deriche, M. Towards the design of a consistent image contrast enhancement assessment measure. Signal Process. Image Commun. 2017, 58, 212–227. [Google Scholar] [CrossRef]

- Zheng, R.D.; Jiang, X.H.; Ma, Y.; Wang, L. A Comparison of Quality Assessment Metrics on Image Resolution Enhancement Artifacts. In Proceedings of the 2022 International Conference on Culture-Oriented Science and Technology (CoST), Lanzhou, China, 18–21 August 2022; IEEE: New York, NY, USA, 2022; pp. 200–204. [Google Scholar]

- Liu, Y.T.; Zhang, B.C.; Hu, R.Z.; Gu, K.; Zhai, G.T.; Dong, J.Y. Underwater image quality assessment: Benchmark database and objective method. IEEE Trans. Multimed. 2024, 26, 7734–7748. [Google Scholar] [CrossRef]

- Zhang, Z.C.; Li, C.Y.; Sun, W.; Liu, X.H.; Min, X.K.; Zhai, G.T. A perceptual quality assessment exploration for aigc images. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Brisbane, Australia, 10–14 July 2023; IEEE: New York, NY, USA, 2023; pp. 440–445. [Google Scholar]

- Li, C.Y.; Zhang, Z.C.; Wu, H.N.; Sun, W.; Min, X.K.; Liu, X.H.; Zhai, G.T.; Lin, W.S. Agiqa-3k: An open database for ai-generated image quality assessment. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 6833–6846. [Google Scholar] [CrossRef]

- Wang, J.R.; Duan, H.Y.; Liu, J.; Chen, S.; Min, X.K.; Zhai, G.T. Aigciqa2023: A large-scale image quality assessment database for ai generated images: From the perspectives of quality, authenticity and correspondence. In Proceedings of the CAAI International Conference on Artificial Intelligence, Fuzhou, China, 22–23 July 2023; Springer Nature: Singapore, 2023; pp. 46–57. [Google Scholar]

- Yuan, J.Q.; Cao, X.Y.; Li, C.J.; Yang, F.Y.; Lin, J.L.; Cao, X.X. Pku-i2iqa: An image-to-image quality assessment database for ai generated images. arXiv 2023, arXiv:2311.15556. [Google Scholar]

- Gao, Y.X.; Jiang, Y.; Peng, Y.H.; Yuan, F.J.; Zhang, X.Y.; Wang, J.F. Medical Image Segmentation: A Comprehensive Review of Deep Learning-Based Methods. Tomography 2025, 11, 52. [Google Scholar] [CrossRef]

- Charrier, C.; Lézoray, O.; Lebrun, G. Machine learning to design full-reference image quality assessment algorithm. Signal Process. Image Commun. 2012, 27, 209–219. [Google Scholar] [CrossRef]

- Kent, M.G.; Schiavon, S. Predicting window view preferences using the environmental information criteria. Leukos 2023, 19, 190–209. [Google Scholar] [CrossRef]

- Ding, K.Y.; Ma, K.D.; Wang, S.Q.; Simoncelli, E.P. Comparison of full-reference image quality models for optimization of image processing systems. Int. J. Comput. Vis. 2021, 129, 1258–1281. [Google Scholar] [CrossRef]

- Cao, Y.; Wan, Z.L.; Ren, D.W.; Yan, Z.F.; Zuo, W.M. Incorporating semi-supervised and positive-unlabeled learning for boosting full reference image quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5851–5861. [Google Scholar]

- Lang, S.J.; Liu, X.; Zhou, M.L.; Luo, J.; Pu, H.Y.; Zhuang, X.; Wang, J.; Wei, X.K.; Zhang, T.P.; Feng, Y.; et al. A full-reference image quality assessment method via deep meta-learning and conformer. IEEE Trans. Broadcast. 2023, 70, 316–324. [Google Scholar] [CrossRef]

- Reddy, S.S.; Rao, V.V.M.; Sravani, K.; Nrusimhadri, S. Image quality assessment: Assessment of the image quality of actual images by using machine learning models. Bull. Electr. Eng. Inform. 2024, 13, 1172–1182. [Google Scholar] [CrossRef]

- Lan, X.T.; Jia, F.; Zhuang, X.; Wei, X.K.; Luo, J.; Zhou, M.L.; Kwong, S. Hierarchical degradation-aware network for full-reference image quality assessment. Inf. Sci. 2025, 690, 121557–121571. [Google Scholar] [CrossRef]

- Thakur, N.; Devi, S. A new method for color image quality assessment. Int. J. Comput. Appl. 2011, 15, 10–17. [Google Scholar] [CrossRef]

- Niu, Y.Z.; Zhang, H.F.; Guo, W.Z.; Ji, R.R. Image quality assessment for color correction based on color contrast similarity and color value difference. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 849–862. [Google Scholar] [CrossRef]

- Alsmadi, M.K. Content-based image retrieval using color, shape and texture descriptors and features. Arab. J. Sci. Eng. 2020, 45, 3317–3330. [Google Scholar] [CrossRef]

- Cheon, M.R.; Yoon, S.-J.; Kang, B.Y.; Lee, J.W. Perceptual image quality assessment with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 433–442. [Google Scholar]

- Shi, C.; Lin, Y. Image quality assessment based on three features fusion in three fusion steps. Symmetry 2022, 14, 773. [Google Scholar] [CrossRef]

- Li, L.D.; Xia, W.H.; Fang, Y.M.; Gu, K.; Wu, J.J.; Lin, W.S.; Qian, J.S. Color image quality assessment based on sparse representation and reconstruction residual. J. Vis. Commun. Image Represent. 2016, 38, 550–560. [Google Scholar] [CrossRef]

- Sun, W.; Liao, Q.M.; Xue, J.-H.; Zhou, F. SPSIM: A superpixel-based similarity index for full-reference image quality assessment. IEEE Trans. Image Process. 2018, 27, 4232–4244. [Google Scholar] [CrossRef]

- Shi, C.; Lin, Y. Full reference image quality assessment based on visual salience with color appearance and gradient similarity. IEEE Access 2020, 8, 97310–97320. [Google Scholar] [CrossRef]

- Sun, Y.; Zhao, Z.C.; Jiang, D.; Tong, X.L.; Tao, B.; Jiang, G.Z.; Kong, J.Y.; Yun, J.T.; Liu, Y.; Liu, X.; et al. Low-illumination image enhancement algorithm based on improved multi-scale Retinex and ABC algorithm optimization. Front. Bioeng. Biotechnol. 2022, 10, 865820. [Google Scholar] [CrossRef]

- Varga, D. Full-reference image quality assessment based on Grünwald–Letnikov derivative, image gradients, and visual saliency. Electronics 2022, 11, 559. [Google Scholar] [CrossRef]

- Yang, Y.; Liu, C.; Wu, H.; Yu, D.G. A Distorted-Image Quality Assessment Algorithm Based on a Sparse Structure and Subjective Perception. Mathematics 2024, 12, 2531. [Google Scholar] [CrossRef]

- Bezerra, S.A.C.; Júnior, S.A.C.B.; Pio, J.L.S.; de Carvalho, J.R.H.; Fonseca, K.V.O. Perceptual Error Logarithm: An Efficient and Effective Analytical Method for Full-Reference Image Quality Assessment. IEEE Access 2025, 13, 68587–68606. [Google Scholar] [CrossRef]

- Temel, D.; AlRegib, G. PerSIM: Multi-resolution image quality assessment in the perceptually uniform color do-main. In Proceedings of the 2015 IEEE International Conference on Image Processing, Quebec City, QC, Canada, 27–30 September 2015; ICIP: Quebec City, QC, Canada, 2015; pp. 1682–1686. [Google Scholar]

- Liu, D.; Li, F.; Song, H. Image quality assessment using regularity of color distribution. IEEE Access 2016, 4, 4478–4483. [Google Scholar] [CrossRef]

- Temel, D.; AlRegib, G. Perceptual image quality assessment through spectral analysis of error representations. Signal Process. Image Commun. 2019, 70, 37–46. [Google Scholar] [CrossRef]

- Athar, S.; Wang, Z. A comprehensive performance assessment of image quality assessment algorithms. IEEE Access 2019, 7, 140030–140070. [Google Scholar] [CrossRef]

- Popovic, E.; Zeger, I.; Grgic, M.; Grgic, S. Assessment of Color Saturation and Hue Effects on Image Quality. In Proceedings of the 2023 International Symposium ELMAR, Zadar, Croatia, 11–13 September 2023; IEEE: New York, NY, USA, 2023; pp. 7–12. [Google Scholar]

- Watanabe, R.; Konno, T.; Sankoh, H.; Tanaka, B.; Kobayashi, T. Full-Reference Point Cloud Quality Assessment with Multimodal Large Language Models. In Proceedings of the ICASSP 2025—2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5. [Google Scholar]

- Soundararajan, R.; Bovik, A.C. RRED indices: Reduced reference entropic differencing for image quality assessment. IEEE Trans. Image Process. 2011, 21, 517–526. [Google Scholar] [CrossRef]

- Rehman, A.; Wang, Z. Reduced-reference image quality assessment by structural similarity estimation. IEEE Trans. Image Process. 2012, 21, 3378–3389. [Google Scholar] [CrossRef]

- Wang, S.Q.; Gu, K.; Zhang, X.F.; Lin, W.S.; Ma, S.W.; Gao, W. Reduced-reference quality assessment of screen content images. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 1–14. [Google Scholar] [CrossRef]

- Yu, M.Z.; Tang, Z.J.; Zhang, X.Q.; Zhong, B.N.; Zhang, X.P. Perceptual hashing with complementary color wavelet transform and compressed sensing for reduced-reference image quality assessment. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7559–7574. [Google Scholar] [CrossRef]

- Mao, Q.Y.; Liu, S.; Li, Q.L.; Jeon, G.; Kim, H.; Camacho, D. No-Reference Image Quality Assessment: Past, Present, and Future. Expert. Syst. 2025, 42, e13842–e13855. [Google Scholar] [CrossRef]

- Chen, X.Q.; Zhang, Q.Y.; Lin, M.H.; Yang, G.Y.; He, C. No-reference color image quality assessment: From entropy to perceptual quality. EURASIP J. Image Video Process. 2019, 2019, 77–89. [Google Scholar] [CrossRef]

- Si, J.W.; Huang, B.X.; Yang, H.; Lin, W.S.; Pan, Z.K. A no-reference stereoscopic image quality assessment network based on binocular interaction and fusion mechanisms. IEEE Trans. Image Process. 2022, 31, 3066–3080. [Google Scholar] [CrossRef]

- Lan, X.T.; Zhou, M.L.; Xu, X.Y.; Wei, X.K.; Liao, X.R.; Pu, H.Y.; Luo, J.; Xiang, T.; Fang, B.; Shang, Z.W. Multilevel feature fusion for end-to-end blind image quality assessment. IEEE Trans. Broadcast. 2023, 69, 801–811. [Google Scholar] [CrossRef]

- Lyu, H.; Elangovan, H.; Rosin, P.L.; Lai, Y.-K. SCD: Statistical color distribution-based objective image colorization quality assessment. In Proceedings of the Computer Graphics International Conference, Geneva, Switzerland, 1–5 July 2024; Springer Nature: Cham, Switzerland, 2024; pp. 54–67. [Google Scholar]

- Yang, J.F.; Wang, Z.Y.; Huang, B.J.; Ai, J.X.; Yang, Y.H.; Xiong, Z.X. Joint distortion restoration and quality feature learning for no-reference image quality assessment. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 1–20. [Google Scholar] [CrossRef]

- Zhao, W.Q.; Li, M.W.; Xu, L.J.; Sun, Y.; Zhao, Z.B.; Zhai, Y.J. A multibranch network with multilayer feature fusion for no-reference image quality assessment. IEEE Trans. Instrum. Meas. 2024, 73, 1–11. [Google Scholar] [CrossRef]

- Sheng, D.; Jin, W.Q.; Wang, X.; Li, L. No-Reference Quality Assessment of Infrared Image Colorization with Color–Spatial Features. Electronics 2025, 14, 1126. [Google Scholar] [CrossRef]

- Karen, P.; Chen, G.; Sos, A. No reference color image contrast and quality measures. IEEE Trans. Consum. Electron. 2013, 59, 643–651. [Google Scholar] [CrossRef]

- Tian, D.; Khan, M.U.; Luo, M.R. The Development of Three Image Quality assessment Metrics Based on a Comprehensive Dataset. In Proceedings of the Color and Imaging Conference, Online, 1–4 November 2021; Society for Imaging Science and Technology: Springfield, VA, USA; Volume 29, pp. 77–82. [Google Scholar]

- Zhang, Z.C.; Sun, W.; Min, X.K.; Wang, T.; Lu, W.; Zhai, G.T. No-reference quality assessment for 3D colored point cloud and mesh models. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7618–7631. [Google Scholar] [CrossRef]

- Golestaneh, S.A.; Dadsetan, S.; Kitani, K.M. No-reference image quality assessment via transformers, relative ranking, and self-consistency. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1220–1230. [Google Scholar]

- Shi, C.; Lin, Y.; Cao, X. No reference image sharpness assessment based on global color difference variation. Chin. J. Electron. 2024, 33, 293–302. [Google Scholar] [CrossRef]

- He, Q.H.; Yu, W.; Guo, Z.L.; Yuan, L.H.; Liu, Y.Y. No-reference Quality assessment Algorithm for Color Gamut Mapped Images Based on Double-Order Color Information. Infrared Technol. 2025, 47, 316–325. [Google Scholar]

- Maalouf, A.; Larabi, M.C. A no reference objective color image sharpness metric. In Proceedings of the 2010 18th European Signal Processing Conference, Aalborg, Denmark, 23–27 August 2010; IEEE: New York, NY, USA, 2010; pp. 1019–1022. [Google Scholar]

- Panetta, K.; Samani, A.; Agaian, S. A robust no-reference, no-parameter, transform domain image quality metric for evaluating the quality of color images. IEEE Access 2018, 6, 10979–10985. [Google Scholar] [CrossRef]

- García-Lamont, F.; Cervantes, J.; López-Chau, A.; Ruiz-Castilla, S. Color image segmentation using saturated RGB colors and decoupling the intensity from the hue. Multimed. Tools Appl. 2020, 79, 1555–1584. [Google Scholar] [CrossRef]

- Liu, H.; Li, C.; Zhang, D.; Zhou, Y.N.; Du, S.Y. Enhanced image no-reference quality assessment based on colour space distribution. IET Image Process. 2020, 14, 807–817. [Google Scholar] [CrossRef]

- Chen, J.; Li, S.; Lin, L. A no-reference blurred colourful image quality assessment method based on dual maximum local information. IET Signal Process. 2021, 15, 597–611. [Google Scholar] [CrossRef]

- Xu, Z.R.; Yang, Y.; Zhang, Z.X.; Zhang, W.M. No Reference Quality Assessment for Screen Content Images Based on Entire and High-Influence Regions. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5. [Google Scholar]

- Pérez-Delgado, M.L.; Celebi, M.E. A comparative study of color quantization methods using various image quality assessment indices. Multimed. Syst. 2024, 30, 40–64. [Google Scholar] [CrossRef]

- Ibork, Z.; Nouri, A.; Lézoray, O.; Charrier, C.; Touahni, R. A No Reference Deep Quality Assessment Index for 3D Colored Meshes. In Proceedings of the 2024 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Kuching, Malaysia, 6–10 October 2024; IEEE: New York, NY, USA, 2024; pp. 3305–3311. [Google Scholar]

- Miyata, T. ZEN-IQA: Zero-Shot Explainable and No-Reference Image Quality Assessment With Vision Language Model. IEEE Access 2024, 12, 70973–70983. [Google Scholar] [CrossRef]

- Zhou, M.L.; Shen, W.H.; Wei, X.K.; Luo, J.; Jia, F.; Zhuang, X.; Jia, W.J. Blind Image Quality Assessment: Exploring Content Fidelity Perceptibility via Quality Adversarial Learning. Int. J. Comput. Vis. 2025, 133, 3242–3258. [Google Scholar] [CrossRef]

- Ran, Y.; Zhang, A.X.; Li, M.J.; Tang, W.X.; Wang, Y.G. Black-box adversarial attacks against image quality assessment models. Expert. Syst. Appl. 2025, 260, 125415–125426. [Google Scholar] [CrossRef]

- Yang, Y.; Li, W. Deep Learning-Based Non-Reference Image Quality Assessment Using Vision Transformer with Multiscale Dual Branch Fusion. Informatica 2025, 49, 43–54. [Google Scholar] [CrossRef]

- Gao, C.; Panetta, K.; Agaian, S. A new color contrast enhancement algorithm for robotic applications. In Proceedings of the 2012 IEEE International Conference on Technologies for Practical Robot Applications (TePRA), Woburn, MA, USA, 23–24 April 2012; IEEE: New York, NY, USA, 2012; pp. 42–47. [Google Scholar]

- Preiss, J.; Fernandes, F.; Urban, P. Color image quality assessment: From prediction to optimization. IEEE Trans. Image Process. 2014, 23, 1366–1378. [Google Scholar] [CrossRef]

- Wang, M.; Huang, Y.; Zhang, J. Blind quality assessment of night-time images via weak illumination analysis. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; IEEE: New York, NY, USA, 2021; pp. 1–6. [Google Scholar]

- Song, C.Y.; Hou, C.P.; Yue, G.H.; Wang, Z.P. No-reference quality assessment of night-time images via the analysis of local and global features. In Proceedings of the 2021 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shenzhen, China, 5–9 July 2021; IEEE: New York, NY, USA, 2021; pp. 1–6. [Google Scholar]

- Li, Z.; Li, X.E.; Shi, J.L.; Shao, F. Perceptually-calibrated synergy network for night-time image quality assessment with enhancement booster and knowledge cross-sharing. Displays 2025, 86, 102877–102889. [Google Scholar] [CrossRef]

- Liu, B.; Gan, J.H.; Wen, B.; LiuFu, Y.P.; Gao, W. An automatic coloring method for ethnic costume sketches based on generative adversarial networks. Appl. Soft Comput. 2021, 98, 106786–106796. [Google Scholar] [CrossRef]

- Yang, M.; Sowmya, A. An Underwater Color Image Quality assessment Metric. IEEE Trans. Image Process. 2015, 24, 6062–6071. [Google Scholar] [CrossRef]

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

- Wang, Y.; Li, N.; Li, Z.Y.; Gu, Z.R.; Zheng, H.Y.; Zheng, B.; Sun, M.N. An imaging-inspired no-reference underwater color image quality assessment metric. Comput. Electr. Eng. 2018, 70, 904–913.

- Chen, T.H.; Yang, X.C.; Li, N.X.; Wang, T.S.; Ji, G.L. Underwater image quality assessment method based on color space multi-feature fusion. Sci. Rep. 2023, 13, 16838–16854.

- Dhivya, R.M.; Nagasai, V.; Masilamani, V. Fusion of Traditional and Deep Learning Models for Underwater Image Quality Assessment. In Proceedings of the 2024 IEEE 8th International Conference on Information and Communication Technology (CICT), Prayagraj, India, 6–8 December 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Jiang, Q.P.; Gu, Y.S.; Wu, Z.W.; Li, C.Y.; Xiong, H.; Shao, F.; Wang, Z.H. Deep Underwater Image Quality Assessment with Explicit Degradation Awareness Embedding. IEEE Trans. Image Process. 2025, 34, 1297–1311.

- Li, B.; Shi, Y.; Yu, Q.; Wang, J.Y. Unsupervised cross-domain image retrieval via prototypical optimal transport. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 3009–3017.

- Conde, M.V.; Geigle, G.; Timofte, R. InstructIR: High-quality image restoration following human instructions. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 1–21.

| Dataset Name | Release Year | Number of Reference Images | Number of Distorted Images | Subjective Rating Range |

|---|---|---|---|---|

| LIVE | 2006 | 29 | 779 | DMOS [0, 100] |

| CSIQ | 2010 | 30 | 866 | DMOS [0, 1] |

| TID2013 | 2015 | 25 | 3000 | MOS [0, 9] |

| QACS | 2016 | 24 | 492 | MOS [1, 10] |

| CCID2014 | 2016 | 15 | 655 | MOS [1, 5] |

| CEED2016 | 2017 | 30 | 180 | MOS [1, 5] |

| KonIQ-10k | 2017 | - | 10,073 | MOS [0, 5] |

| KADID-10k | 2019 | 81 | 10,125 | MOS [0, 5] |

| 4K Resolution Enhancement Artifact Database | 2022 | 24 | 1152 | Subjective ratings provided by 20,000 observers |
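The databases in the table above report subjective scores on different ranges, and LIVE and CSIQ use DMOS (higher means worse) while the others use MOS (higher means better). The following is a minimal sketch of one way to bring such scores onto a common [0, 1] "higher is better" scale before pooling results across databases. The `SCALES` dictionary and the `to_unit_quality` helper are illustrative names, not part of any database toolkit, and the ranges are simply copied from the table. Linear rescaling is an assumption made for illustration; it does not make scores from different subjective experiments perceptually equivalent.

```python
import numpy as np

# Rating ranges copied from the dataset table above. "dmos" marks scales on
# which a larger value means lower perceived quality.
SCALES = {
    "LIVE": (0.0, 100.0, "dmos"),
    "CSIQ": (0.0, 1.0, "dmos"),
    "TID2013": (0.0, 9.0, "mos"),
    "QACS": (1.0, 10.0, "mos"),
    "CCID2014": (1.0, 5.0, "mos"),
    "CEED2016": (1.0, 5.0, "mos"),
    "KonIQ-10k": (0.0, 5.0, "mos"),
    "KADID-10k": (0.0, 5.0, "mos"),
}


def to_unit_quality(scores, dataset):
    """Linearly map raw subjective scores to [0, 1], with 1 = best quality."""
    low, high, kind = SCALES[dataset]
    unit = (np.asarray(scores, dtype=float) - low) / (high - low)
    # DMOS grows with distortion, so flip it to match the MOS direction.
    return 1.0 - unit if kind == "dmos" else unit


if __name__ == "__main__":
    print(to_unit_quality([0, 50, 100], "LIVE"))
    print(to_unit_quality([1, 3, 5], "CCID2014"))
```

Rank-based metrics such as SROCC and Kendall's tau are unaffected by this rescaling apart from the sign flip for DMOS, whereas RMSE and MAE change with the scale.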

| Database | PLCC | SROCC | Kendall τ | RMSE | MAE |

|---|---|---|---|---|---|

| LIVE (DMOS 0–100) | 0.8736 | 0.8781 | 0.6973 | 13.16 | 10.23 |

| CSIQ (DMOS 0–1) | 0.7921 | 0.8052 | 0.6080 | 0.1572 | 0.1254 |

| TID2013 (MOS 0–9) | 0.6213 | 0.6542 | 0.4852 | 1.213 | 0.9796 |
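The five columns of the table above can be computed from paired objective predictions and subjective scores. The sketch below uses only NumPy and is meant as an illustration under stated assumptions, not as the evaluation code behind the reported numbers: function names are hypothetical, Kendall's tau is computed in its tau-b form with an O(n²) pairwise comparison, and the nonlinear (logistic) mapping that many IQA studies fit before computing PLCC and RMSE is omitted. Because RMSE and MAE depend on the rating range, a small helper also divides an error by the width of the scale so that rows from different databases can be compared.

```python
import numpy as np


def average_ranks(values):
    """Return 1-based ranks; tied values share their average rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    ranks = np.empty(values.size, dtype=float)
    ranks[order] = np.arange(1, values.size + 1)
    for v in np.unique(values):
        tied = values == v
        ranks[tied] = ranks[tied].mean()
    return ranks


def plcc(pred, mos):
    """Pearson linear correlation coefficient."""
    return float(np.corrcoef(pred, mos)[0, 1])


def srocc(pred, mos):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return plcc(average_ranks(pred), average_ranks(mos))


def kendall_tau_b(pred, mos):
    """Kendall's tau-b from the signs of all pairwise differences."""
    pred = np.asarray(pred, dtype=float)
    mos = np.asarray(mos, dtype=float)
    upper = np.triu_indices(pred.size, k=1)
    sign_pred = np.sign(pred[:, None] - pred[None, :])[upper]
    sign_mos = np.sign(mos[:, None] - mos[None, :])[upper]
    # Sum of sign products equals concordant minus discordant pairs.
    numerator = np.sum(sign_pred * sign_mos)
    untied_pred = np.sum(sign_pred != 0)
    untied_mos = np.sum(sign_mos != 0)
    return float(numerator / np.sqrt(untied_pred * untied_mos))


def evaluate(pred, mos):
    """Collect the five metrics reported in the table."""
    diff = np.asarray(pred, dtype=float) - np.asarray(mos, dtype=float)
    return {
        "PLCC": plcc(pred, mos),
        "SROCC": srocc(pred, mos),
        "Kendall": kendall_tau_b(pred, mos),
        "RMSE": float(np.sqrt(np.mean(diff ** 2))),
        "MAE": float(np.mean(np.abs(diff))),
    }


def range_normalized_error(error, low, high):
    """Express an RMSE or MAE as a fraction of the rating range."""
    return error / (high - low)


if __name__ == "__main__":
    # Toy data, not taken from any database.
    print(evaluate([1, 2, 3, 4, 5], [1, 3, 2, 5, 4]))
    print(range_normalized_error(13.16, 0, 100))
```

Applied to the RMSE column, the range normalization gives roughly 0.132 for LIVE, 0.157 for CSIQ, and 0.135 for TID2013, so the raw RMSE values are much closer across databases than their different magnitudes suggest.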

| Year | Author | Method/Model | Enabling Technologies | Major Contribution |

|---|---|---|---|---|

| pre-2019 | Charrier et al. [57] | SVM-based FR-IQA | Multi-SVM classification; regression for quality scoring | Combined classification and regression to align better with human subjective perception |

| pre-2019 | Ding et al. [19] | DISTS | CNN-based overcomplete representation; structural and texture similarity | Unified structure and texture similarity; robust to geometric distortions |

| 2021 | Ding et al. [59] | FR-IQA Optimization Study | Comparative evaluation of 11 FR-IQA models; DNN training for image enhancement | Evaluated perceptual performance of IQA models in low-level vision tasks |

| 2022 | Cao et al. [60] | Semi-supervised PU-Learning | Semi-supervised + PU learning; dual-branch network; spatial attention; local sliced Wasserstein distance | Achieved high performance on benchmarks; solved misalignment in pseudo-MOS generation |

| 2023 | Lang et al. [61] | Conformer + Meta-learning | Conformer; twin network; meta-learning; global–local feature extraction | Enhanced generalization; competitive performance on standard datasets |

| 2024 | Reddy et al. [62] | ML-based IQA | Multi-feature descriptors; KNN matching; inlier ratio | Practical model using feature-based quality evaluation |

| 2025 | Lan et al. [63] | Hierarchical Degradation-Aware Net | Multi-level degradation simulation; similarity matrix; feature fusion + regression | High-precision quality scoring with improved adaptability to complex distortions |

| Year | Author | Method/Model | Enabling Technologies | Major Contribution |

|---|---|---|---|---|

| pre-2019 | Thakur et al. [64] | Qnew | HVS theory, brightness/structure/edge/color similarity | Overcame limitations of existing indices; suitable for diverse image processing tasks |

| pre-2019 | Niu et al. [65] | Color Correction IQA Method | Color contrast similarity, color value difference | Improved accuracy of color consistency evaluation |

| 2019 | Kuo et al. [28] | Enhanced S-VIF | Integration of chroma channel into S-VIF | Improved performance by combining grayscale and color information |

| 2020 | Alsmadi et al. [66] | CBIR System | Color/shape/texture features, clustering, Canny, GA + SA | Achieved efficient and accurate image retrieval |

| 2021 | Cheon et al. [67] | Image Quality Transformer (IQT) | Transformer, CNN (Inception-ResNet-v2), multi-head attention | First Transformer in FR-IQA; modeled global distortion features |

| 2022 | Shi et al. [68] | FFS (Feature Fusion Similarity Index) | Three-feature fusion, symmetric calculation, bias pooling strategy | High consistency with subjective scores and efficient computation |

| Year | Author | Method/Model | Enabling Technologies | Major Contribution |

|---|---|---|---|---|

| pre-2019 | Li et al. [69] | Sparse Representation + Residual | Overcomplete color dictionary, reconstruction residual, brightness similarity | Quantified structural, color, contrast distortions for accurate CIQA |

| pre-2019 | Sun et al. [70] | SPSIM | Superpixel-based brightness/chromaticity/gradient similarity, texture complexity-based pooling | Achieved high subjective consistency by gradient-aware feature adjustment |

| 2020 | Shi et al. [71] | VCGS | Visual saliency, color appearance, gradient similarity, feature pooling strategy | Constructed a saliency-aware CIQA system with good consistency and moderate complexity |

| 2022 | Sun et al. [72] | Low-Light Enhancement + IQA | Multi-scale Retinex, ABC algorithm, histogram equalization, gamma correction | Enhanced low-light images with effective detail/noise balance via optimized fusion |

| 2022 | Varga et al. [73] | FR-IQA with GL Derivatives | Grünwald–Letnikov derivatives, image gradients, visual saliency weighting | Fused global/local changes for improved CVQ-consistent quality prediction |

| 2024 | Yang et al. [74] | IQA-SSSP | Sparse structural similarity, low-complexity computation, large-scale data processing | Integrated structure and perceptual similarity for scalable and efficient CIQA |

| 2025 | Bezerra et al. [75] | GL-Based IQA Method | Grünwald–Letnikov derivatives, image gradients, saliency-based perceptual weighting | Improved accuracy and consistency by combining global/local and perceptual features |

| Year | Author | Method/Model | Enabling Technologies | Major Contribution |

|---|---|---|---|---|

| pre-2019 | Temel et al. [76] | Multi-Resolution IQA Method | LAB space, LoG, color similarity, retinal ganglion perception simulation | Simulated HVS perception by combining structural and color features |

| pre-2019 | Liu et al. [77] | Color Distribution Model for Compression | Bright/median/dark channels, fractal dimension analysis | Used color distribution rules and fractal features to predict image quality |

| 2019 | Temel et al. [78] | SUMMER | Spectral error representation, multi-scale/multi-channel features, color-aware spectral analysis | Enhanced color IQA by overcoming limitations of grayscale spectral analysis |

| 2019 | Athar et al. [79] | IQA Performance Assessment | Comparative benchmarking on 43 FR, 7 fusion FR, 14 NR methods across nine datasets | Provided comprehensive performance benchmarking of IQA methods |

| 2023 | Popovic et al. [80] | Saturation and Hue IQA Study | Subjective quality assessment, saturation/hue manipulation, correlation metrics | Built a dedicated dataset and validated subjective–objective correlation in scene perception |

| 2025 | Watanabe et al. [81] | FR-MLLM | Multimodal large language models, SVR fusion with traditional indicators | Proposed MLLM-based FR IQA for point clouds, enhancing accuracy through multimodal understanding |

| Year | Author | Method/Model | Enabling Technologies | Major Contribution |

|---|---|---|---|---|

| pre-2019 | Soundararajan et al. [82] | RRED | Wavelet decomposition, entropy difference, error pooling | Entropy-based RR-IQA with reduced reference data and improved accuracy |

| pre-2019 | Rehman et al. [83] | SSIM-RR-IQA | SSIM theory, DNT-based statistical features | Reduced-reference IQA with strong correlation to SSIM and subjective scores |

| pre-2019 | Wang et al. [84] | RR-IQA for SCIs | Visual perception modeling, texture/edge metrics, attention mechanism | Efficient SCI quality prediction, effective for compression and visualization tasks |

| 2022 | Yu et al. [85] | CCWT-CS Perceptual Hashing | CCWT, compressed sensing, block-based feature extraction | Robust and compact perceptual hashing with superior IQA and classification performance |

| Year | Author | Method/Model | Enabling Technologies | Major Contribution |

|---|---|---|---|---|

| 2019 | Chen et al. [87] | ENIQA | Spatial-frequency feature fusion, Log–Gabor filtering, TE and MI, visual saliency, SVC + SVR | Integrated spatial and frequency features using entropy and saliency; two-stage regression for NR-IQA |

| 2022 | Si et al. [88] | StereoIF-Net | Binocular interaction modules (BIM), binocular fusion (BFM), cross-convolution, local pooling | Modeled binocular vision mechanisms for stereo images; improved robustness to asymmetric distortions |

| 2023 | Lan et al. [89] | GAN + EfficientNet + BiFPN | GAN restoration, multi-level features in Spatial-CIELAB, BiFPN fusion, semantic and detail features | Combined GAN-repaired and distorted images for feature fusion; enhanced brightness, chroma, hue quality assessment |

| 2024 | Lyu et al. [90] | SCD-based Index | Statistical chromaticity distribution, semantic segmentation, smoothing, correction | Eliminated visual insensitivity and frequency anomaly interference |

| 2024 | Yang et al. [91] | RQFL-IQA | Joint restoration and quality learning, multimodal label fusion, perceptual reweighting | Unified distortion repair and quality assessment; simulated brain mechanism for enhanced perceptual consistency |

| 2024 | Zhao et al. [92] | MFFNet | MSFE module, MLFF module, superpixel-based sub-branch | Fused multi-layer and multi-branch features; improved local visual detail extraction despite computational cost |

| 2025 | Sheng et al. [93] | LDA Network | LDA-based color feature learning, multi-channel spatial attention | Applied LDA to infrared image colorization; enhanced fidelity and detail preservation in NR-IQA |

| Year | Author | Method/Model | Enabling Technologies | Major Contribution |

|---|---|---|---|---|

| pre-2019 | Panetta et al. [94] | CQE Measure | NR-RME contrast, color chromaticity, RGB 3D contrast, linear fusion | Proposed NR color contrast metric aligned with human perception; introduced CQE measure combining color, clarity, contrast |

| 2021 | Tian et al. [95] | IQEMs (CS, NN, IS) | Color science, image statistics, neural networks, large-scale subjective experiment | Developed three IQA metrics; NN model achieved highest accuracy (R = 0.87); validated on color-modified dataset |

| 2022 | Zhang et al. [96] | NR-3D-IQA | LAB color space, 3D-NSS, entropy, geometry features (e.g., curvature, angle), SVR | Assessed color and geometric distortions in 3D models using color and structural features with SVR training |

| 2022 | Golestaneh et al. [97] | TReS | CNN + Transformer fusion, relative ranking, self-consistency | Captured local/non-local features; improved generalization; limited specificity and higher computation cost |

| 2024 | Shi et al. [98] | HSV + Log–Gabor-based Model | HSV color moment, color gradient, Log–Gabor multi-layer texture extraction | Extracted effective color and texture features; improved NR-IQA accuracy for diverse distortions |

| 2024 | Qiuhong et al. [99] | Two-Order Color NR-IQA | Zero- and first-order color features, color derivative dynamics, regression model | Quantified texture and color naturalness loss in gamut mapping; addressed incomplete color representation |

| Year | Author | Method/Model | Enabling Technologies | Major Contribution |

|---|---|---|---|---|

| pre-2019 | Maalouf et al. [100] | Multi-scale structure tensor clarity measurement | Wavelet transform and multi-scale image structure analysis | Improved sensitivity to image clarity by analyzing structure at multiple scales |

| pre-2019 | Panetta et al. [101] | TDMEC (Transform Domain Image Quality Measurement) | Reference-free, parameter-free transform domain method | Reference-free IQA for color images without parameter tuning |

| 2020 | García-Lamont et al. [102] | Color image segmentation method | Direct RGB space processing, HVS-inspired chrominance, and intensity separation | Simulates HVS color perception without color space conversion |

| 2020 | Liu et al. [103] | Enhanced NR-IQA based on color space distribution | GIST for target image selection, color transfer, FSIM, and absolute color difference | Effective enhanced quality assessment for challenging images; noted limitations in target selection and generalization |

| 2021 | Chen et al. [104] | DMLI (Dual Maximum Local Information) NR-IQA method | Extraction of maximum local difference and local entropy features | Reference-free quality evaluation of blurred color images combining two local information features |

| 2023 | Xu et al. [105] | Non-re-equalization IQA method | Phase-consistency structural features, weighted high-impact area scoring | Improved accuracy by weighting high-impact areas in image quality score |

| 2024 | Pérez-Delgado et al. [106] | Comparative evaluation of CQ methods | 10 color quantization methods, 8 IQA indicators including MSE, SSIM | Recommended combining multiple indicators to evaluate CQ |

| 2024 | Ibork et al. [107] | CMVQA (Colored Mesh Visual Quality Assessment) | Combination of geometric, color, and spatial domain mesh features | Reference-free 3D color mesh visual quality assessment integrating multiple feature types |

| 2024 | Miyata et al. [108] | ZEN-IQA zero-shot interpretable NR-IQA | Pre-trained visual language model, antonym prompt pairs/triplets | Provides interpretable IQA with overall and intermediate descriptive scores |

| 2025 | Zhou et al. [109] | NR-IQA based on quality adversarial learning | Adversarial sample generation, content fidelity optimization | Improved model robustness and content fidelity perception via adversarial learning |

| 2025 | Ran et al. [110] | Black-box adversarial attack on NR-IQA models | Bidirectional loss function, black-box attack algorithm | Demonstrated vulnerability of NR-IQA models to adversarial attacks, proposing efficient attack method |

| 2025 | Yang et al. [111] | Multi-scale dual-branch fusion NR-IQA | Vision Transformer (ViT) with self-attention and multi-scale fusion | Enhanced accuracy and efficiency in NR-IQA, limited by dataset size and diversity |

| Year | Author | Method/Model | Enabling Technologies | Major Contribution |

|---|---|---|---|---|

| pre-2019 | Gao et al. [112] | Spatial domain color contrast enhancement | Alpha-weighted quadratic nonlinear filter, Global logAMEE metric | Enhances contrast and color in noisy images; suitable for real-time robotics |

| pre-2019 | Preiss et al. [113] | Color gamut mapping optimization | Color image difference (CID), improved iCID metric | Avoids visual artifacts; improves contrast, color fidelity, and prediction performance |

| 2021 | Wang et al. [114] | Night image NR-IQA method | Local brightness segmentation, support vector regression (SVR) | Models night image quality by analyzing local brightness impacts on color and structure |

| 2021 | Song et al. [115] | BNTI (Blind Night-Time Image QA) | Local/global brightness features, saliency, exposure, edge map entropy, SVR | Accurate night image quality prediction combining multidimensional features |

| 2025 | Li et al. [116] | PCSNet (Perceptually Calibrated Synergy Net) | Multi-task learning with shared shallow networks, cross-sharing modules | Joint night image quality prediction and enhancement through feature calibration and collaboration |

| 2021 | Liu et al. [117] | Reference-free automatic colorization | GAN with six-layer U-Net generator, five-layer CNN discriminator, edge detection | Automates ethnic costume sketch colorization with high detail retention |

| pre-2019 | Yang et al. [118] | UCIQE (Underwater Color Image Quality Evaluation) | CIELab color space, extraction of color contrast, saturation, chroma features | Objective underwater image quality assessment addressing color degradation |

| pre-2019 | Panetta et al. [119] | UIQM (Underwater Image Quality Measures) | Color, clarity, contrast metrics inspired by HVS | Comprehensive reference-free underwater image quality evaluation across major degradation dimensions |

| pre-2019 | Wang et al. [120] | CCF (Underwater CIQA index) | Feature weighting via multivariate linear regression; color richness, contrast, haze indices | Quantifies underwater image degradation caused by absorption, scattering, and haze |

| 2023 | Chen et al. [121] | UIQA based on multi-feature fusion | CIELab conversion; histogram, morphology, moment features; SVR | Addresses underwater image complexity via multi-feature fusion and quality score prediction |

| 2024 | Dhivya et al. [122] | Hybrid Underwater IQA | Traditional model fusion; deep learning high-order feature representation | Built multi-dimensional underwater image quality assessment framework |

| 2024 | Liu et al. [51] | Baseline UIQA metric and UIQD database | Channel/spatial attention, transformer modules, multi-layer perceptron fusion | Large-scale underwater image database; integrated local–global feature-based quality assessment |

| 2025 | Jiang et al. [123] | Degradation-aware embedding network for UIQA | Residual map estimation, degradation information subnetwork, feature embedding | Improves underwater image quality prediction by modeling local degradation explicitly |
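As a concrete instance of the hand-crafted underwater metrics in the table above, UCIQE [118] is usually quoted as a linear combination of three CIELab statistics. The expression below is given for orientation only: the symbols are the ones commonly used when the metric is cited, and the coefficient values are those generally reported from the original publication, where they were fitted to a specific set of subjectively rated underwater images. They should therefore be read as dataset-specific weights rather than universal constants.

```latex
\mathrm{UCIQE} = c_1\,\sigma_c + c_2\,\mathrm{con}_l + c_3\,\mu_s,
\qquad (c_1,\ c_2,\ c_3) = (0.4680,\ 0.2745,\ 0.2576)
```

Here $\sigma_c$ is the standard deviation of chroma, $\mathrm{con}_l$ is the contrast of luminance, and $\mu_s$ is the mean saturation, which correspond to the chroma, contrast, and saturation features listed in the table row.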

| Method Type | Mechanism | Advantages | Limitations |

|---|---|---|---|

| Machine Learning | Uses CNNs, meta-learning, transfer learning with regression/classification | Handles complex distortions; supports weak supervision; enhances generalization | Requires large data; twin networks lose global info; semantic gaps exist |

| Color Visual Quality and Color | Inspired by the human visual system (HVS); extracts features such as color difference, structural similarity, and saliency | Good perceptual alignment; handles structure and color variation | Fails under complex lighting; ignores high-order distortions; poor reference registration |

| Mathematical Models | Uses sparse coding, Fourier analysis, gradient similarity, statistical modeling | Interpretable; suitable for low-level structure modeling | Weak for fine texture; frequency models lack spatial sensitivity |

| Feature Fusion | Fuses spatial–frequency, color–structure, subjective–physical features | Combines diverse cues; improves robustness and cross-domain performance | Manual fusion design; modal conflict risk; loss balancing difficult |

| Feature Extraction | Extracts texture, color, statistical features for quality modeling | Simple and interpretable; good for lightweight applications | Lacks adaptivity; weak for high-order distortions; may incur high cost |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, M.; Song, C.; Zhang, Q.; Zhang, X.; Li, Y.; Yuan, F. Research Progress on Color Image Quality Assessment. J. Imaging 2025, 11, 307. https://doi.org/10.3390/jimaging11090307
