E-CMCA and LSTM-Enhanced Framework for Cross-Modal MRI-TRUS Registration in Prostate Cancer

Abstract

1. Introduction

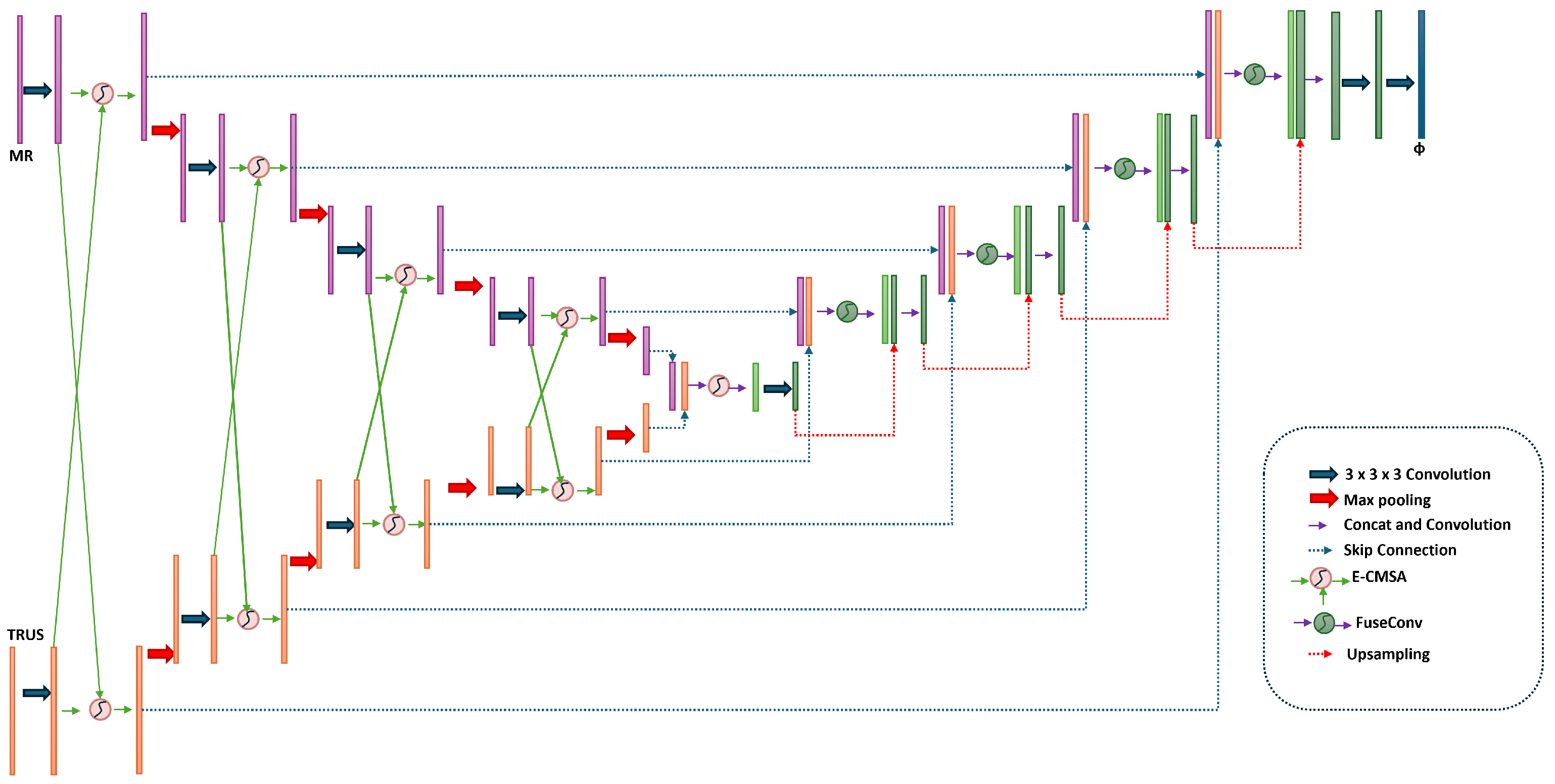

- A dual-encoder extracting modality-specific features;

- An Enhanced Cross-Modality Spatial Attention (E-CMCA) module that strengthens semantic alignment [6];

- A VecInt module ensuring smooth, diffeomorphic transformations [4];

- An LSTM-enhanced module modeling temporal dynamics for 4D tasks [7];

- A FuseConv layer integrating multi-level features.
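To illustrate the idea behind the VecInt component listed above: in VoxelMorph-style frameworks [4], such a layer integrates a stationary velocity field by scaling and squaring, which yields a smooth, invertible deformation. The sketch below is a deliberately simplified 1D version in plain Python; the function names, the linear interpolation, the boundary clamping, and the default of 7 steps are assumptions made for illustration, not details taken from this paper.

```python
import math

def interp_linear(values, x):
    """Linearly interpolate `values` at fractional index x, clamping to the grid."""
    x = min(max(x, 0.0), len(values) - 1.0)
    i0 = int(math.floor(x))
    i1 = min(i0 + 1, len(values) - 1)
    t = x - i0
    return (1.0 - t) * values[i0] + t * values[i1]

def integrate_velocity(velocity, steps=7):
    """Scaling and squaring for a 1D stationary velocity field (illustrative).

    Returns the displacement u such that the deformation is phi(x) = x + u(x).
    """
    # Scaling: start from a very small displacement v / 2**steps.
    disp = [v / (2 ** steps) for v in velocity]
    # Squaring: compose the map with itself, u <- u + u(x + u(x)), `steps` times.
    for _ in range(steps):
        disp = [u + interp_linear(disp, i + u) for i, u in enumerate(disp)]
    return disp

# Hypothetical smooth velocity field on a 16-point grid.
velocity = [math.sin(2 * math.pi * i / 16) for i in range(16)]
displacement = integrate_velocity(velocity)
# The mapped positions stay strictly ordered, i.e. the 1D map does not fold.
mapped = [i + u for i, u in enumerate(displacement)]
print(all(b > a for a, b in zip(mapped, mapped[1:])))
```

In 3D the same recursion is applied per voxel with trilinear interpolation; the non-folding property checked here is the 1D analogue of a positive Jacobian determinant.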

- A novel dual-encoder framework for non-rigid MRI-TRUS registration, addressing cross-modal and dynamic challenges.

- Integration of E-CMCA, VecInt, LSTM, and FuseConv modules for enhanced feature alignment, deformation smoothness, and temporal coherence.

- Superior performance on the µ-RegPro dataset, with a DSC of 0.868 and TRE of 2.260 mm, validated for clinical applications.

2. Related Work

3. Methods

3.1. Problem Specification

3.2. Pre-Processing Module

3.3. Network Architecture

3.3.1. Dual-Encoder Structure

3.3.2. E-CMCA Module

3.3.3. Feature Fusion and Bottleneck

3.3.4. Decoder and Flow Field Generation

3.3.5. Temporal Modeling with SpatialTransformerWithLSTM

- Task Priority Calculation: Global average pooling extracts feature vectors, which are fed into fully connected layers to output the task weights. Applying Softmax to these weights generates the mask;

- Subnet Iterative Training: The loss function is weighted by the mask matrix, so that training alternately optimizes registration accuracy and deformation smoothness.
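The two steps above can be sketched in a few lines. This is a minimal illustration only: it assumes one scalar score per task, a single fully connected layer, and a total loss formed as the mask-weighted sum of per-task losses. The exact formulation used in the paper may differ, and every name and number below is hypothetical.

```python
import math

def global_average_pool(feature_maps):
    """Reduce each channel (a flat list of activations) to its mean."""
    return [sum(channel) / len(channel) for channel in feature_maps]

def linear(vector, weights, bias):
    """One fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, bias)]

def softmax(scores):
    """Numerically stable softmax over the task scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def masked_loss(mask, task_losses):
    """Assumed form of the weighted loss: sum_i mask_i * loss_i."""
    return sum(m * l for m, l in zip(mask, task_losses))

# Hypothetical toy inputs: 2 channels with 2 activations each, and two tasks
# (registration accuracy, deformation smoothness).
features = [[0.2, 0.4], [1.0, 3.0]]
pooled = global_average_pool(features)
scores = linear(pooled, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
mask = softmax(scores)
total = masked_loss(mask, [0.40, 0.05])
print(mask, total)
```

Because the mask sums to one, raising the priority of one task necessarily lowers the weight of the other, which is one way to obtain the alternating emphasis described above.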

3.4. Loss Functions

4. Experiments and Results

4.1. Data Description

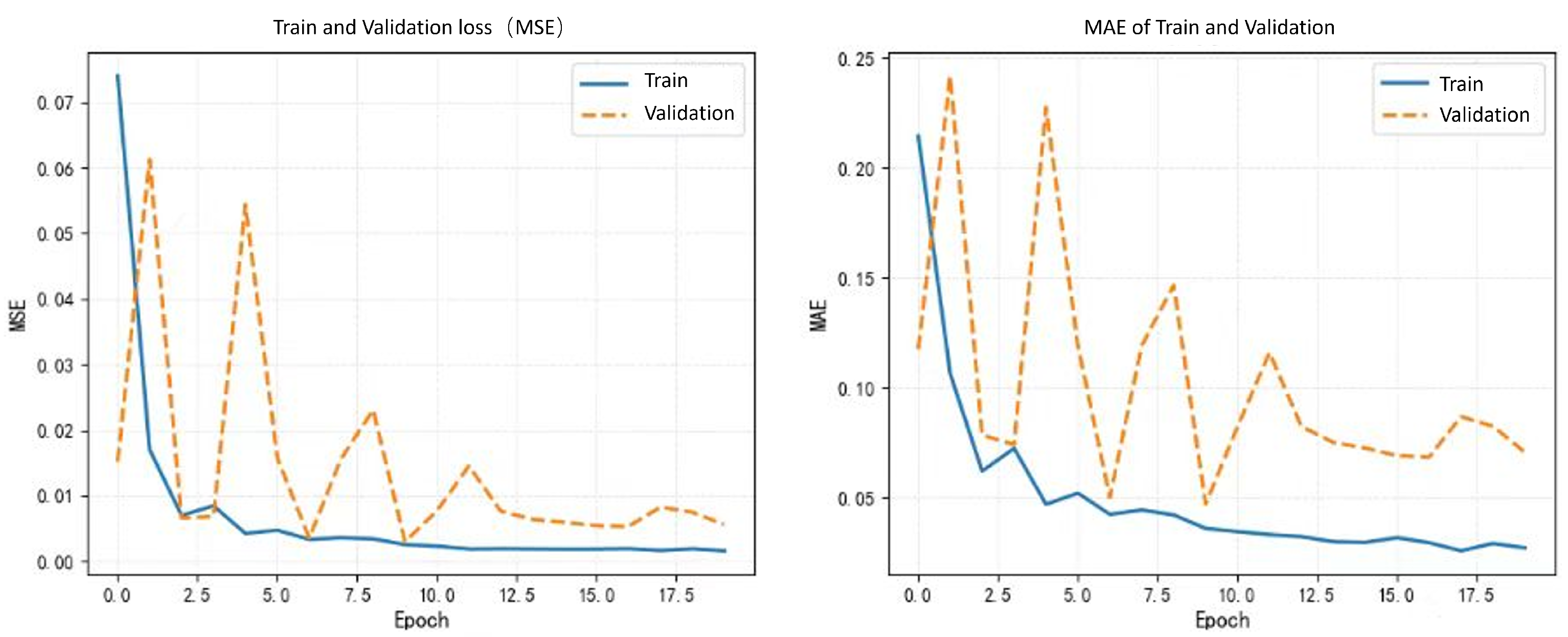

4.2. Implementation Details

4.3. Comparison Methods and Ablation Study

- Salient Region Matching model: A fully automated MR-TRUS registration framework that integrates prostate segmentation, rigid alignment, and deformable registration. It employs dual-stream encoders with cross-modal attention and a salient region matching loss to enhance multi-modality feature learning. It represents a recent state-of-the-art MR-TRUS approach.

- UNet ROI: A segmentation-guided method that uses UNet for ROI extraction and the ANTs toolkit for rigid and deformable alignment. It serves as the segmentation-guided benchmark and represents a hybrid classical-deep learning approach widely used in medical imaging.

- Two Stage UNet: A staged strategy that first performs coarse alignment through an affine transformation and then performs deformable registration based on ROI segmentation. It exemplifies multi-phase methods that improve coarse-to-fine alignment.

- Padding+ModeTV2: A registration method that uses boundary filling and total variation regularization to address deformation artifacts in TRUS-MRI fusion. It reflects recent advances in regularization techniques for better robustness.

- LocalNet+Focal Tversky Loss: A local feature network trained with a focal Tversky loss tailored to imbalanced classes in prostate datasets. It highlights how loss function design can improve convergence in scenarios where the baseline converges only partially.

- LocalNet: A baseline local feature network, evaluated here in its not fully converged version. It represents foundational learning-based registration models and allows direct comparison of our enhancements in convergence and accuracy.

- VoxelMorph: A classic end-to-end deformable registration framework, widely adopted in medical image analysis and evaluated here in its partially converged version. It serves as a standard unsupervised benchmark for demonstrating our method’s superiority in handling modality discrepancies.
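For readers unfamiliar with the focal Tversky loss used by one of the baselines above, the following sketch shows its general form as introduced in [25]. It assumes flattened soft masks with values in [0, 1], for example a warped moving-label mask compared against a fixed-label mask. The default values of `alpha`, `beta`, and `gamma` are common choices and are assumptions here, not settings reported for the compared model.

```python
def focal_tversky_loss(pred, target, alpha=0.7, beta=0.3, gamma=4.0 / 3.0, eps=1e-7):
    """Focal Tversky loss for two flattened masks of equal length (illustrative).

    alpha weights false negatives, beta weights false positives, and gamma
    controls how strongly poorly overlapping cases are emphasized.
    """
    tp = sum(p * t for p, t in zip(pred, target))          # true positives
    fn = sum((1.0 - p) * t for p, t in zip(pred, target))  # false negatives
    fp = sum(p * (1.0 - t) for p, t in zip(pred, target))  # false positives
    tversky = (tp + eps) / (tp + alpha * fn + beta * fp + eps)
    return (1.0 - tversky) ** (1.0 / gamma)

# Hypothetical toy masks: the prediction misses one foreground voxel.
target = [1.0, 1.0, 0.0, 0.0]
pred = [1.0, 0.0, 0.0, 0.0]
print(focal_tversky_loss(pred, target))
```

With `alpha` larger than `beta`, missed foreground voxels cost more than spurious ones, which is why this loss is often chosen when the structure of interest occupies a small fraction of the volume.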

4.4. Evaluation Metrics
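As a brief illustration of the two headline metrics reported in this paper, the sketch below computes the Dice similarity coefficient (DSC) between two binary masks and the target registration error (TRE) as the mean Euclidean distance between paired landmarks. It uses the standard definitions; the function names and the toy inputs are hypothetical, and the robust variants (RDSC, RTRE) are not covered.

```python
import math

def dice_coefficient(mask_a, mask_b):
    """DSC = 2|A ∩ B| / (|A| + |B|) for two flattened binary masks."""
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    size = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    # Two empty masks are treated as a perfect match by convention.
    return 1.0 if size == 0 else 2.0 * intersection / size

def target_registration_error(points_fixed, points_warped):
    """Mean Euclidean distance between paired landmarks, in the input units (e.g. mm)."""
    distances = [math.dist(p, q) for p, q in zip(points_fixed, points_warped)]
    return sum(distances) / len(distances)

# Hypothetical toy inputs.
dsc = dice_coefficient([1, 1, 0, 0], [1, 0, 0, 0])               # 2*1 / (2+1)
tre = target_registration_error([(0, 0, 0)], [(3, 4, 0)])        # 5.0
print(round(dsc, 3), tre)
```

Higher DSC indicates better overlap of the warped and fixed prostate masks, whereas lower TRE indicates better landmark alignment.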

4.5. Experimental Results

4.5.1. Comparative Experimental Results

4.5.2. Ablation Experimental Results

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hu, Y.; Modat, M.; Gibson, E.; Li, W.; Ghavami, N.; Bonmati, E.; Wang, G.; Bandula, S.; Moore, C.M.; Emberton, M.; et al. Weakly-supervised convolutional neural networks for multimodal image registration. Med. Image Anal. 2018, 49, 1–13.

2. Darzi, F.; Bocklitz, T. A Review of Medical Image Registration for Different Modalities. Bioengineering 2024, 11, 786.

3. Fu, Y.; Lei, Y.; Wang, T.; Patel, P.; Jani, A.B.; Mao, H.; Curran, W.J.; Liu, T.; Yang, X. Biomechanically constrained non-rigid MR-TRUS prostate registration using deep learning based 3D point cloud matching. Med. Image Anal. 2021, 67, 101845.

4. Balakrishnan, G.; Zhao, A.; Sabuncu, M.R.; Guttag, J.; Dalca, A.V. VoxelMorph: A learning framework for deformable medical image registration. IEEE Trans. Med. Imaging 2019, 38, 1788–1800.

5. Fu, Y.; Lei, Y.; Wang, T.; Curran, W.J.; Liu, T.; Yang, X. Deep learning in medical image registration: A review. Phys. Med. Biol. 2020, 65, 20TR01.

6. Feng, Z.; Ni, D.; Wang, Y. Salient region matching for fully automated MR-TRUS registration. arXiv 2025, arXiv:2501.03510v1.

7. Wright, R.; Khanal, B.; Gomez, A.; Skelton, E.; Matthew, J.; Hajnal, J.V.; Rueckert, D.; Schnabel, J.A. LSTM-based rigid transformation for MR-US fetal brain registration. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Granada, Spain, 16–20 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 1–8.

8. Song, X.; Chao, H.; Xu, X.; Guo, H.; Xu, S.; Turkbey, B.; Wood, B.J.; Sanford, T.; Wang, G.; Yan, P. Cross-modal attention for multi-modal image registration. Med. Image Anal. 2022, 82, 102612.

9. Fu, Y.; Lei, Y.; Wang, T.; Patel, P.; Jani, A.B.; Mao, H.; Curran, W.J.; Liu, T.; Yang, X. Biomechanically constrained non-rigid MR-US prostate image registration by finite element analysis. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Granada, Spain, 16–20 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 344–352.

10. Karnik, V.V.; Fenster, A.; Bax, J.; Cool, D.W.; Gardi, L.; Gyacskov, I.; Romagnoli, C.; Ward, A.D. Assessment of image registration accuracy in three-dimensional transrectal ultrasound guided prostate biopsy. Med. Phys. 2010, 37, 802–813.

11. Wang, H.; Wu, H.; Wang, Z.; Yue, P.; Ni, D.; Heng, P.-A.; Wang, Y. A Narrative Review of Image Processing Techniques Related to Prostate Ultrasound. Ultrasound Med. Biol. 2024, 51, 189–209.

12. Wu, M.; He, X.; Li, F.; Zhu, J.; Wang, S.; Burstein, P.D. Weakly supervised volumetric prostate registration for MRI-TRUS image driven by signed distance map. Comput. Biol. Med. 2023, 163, 107150.

13. De Silva, S.; Prost, A.E.; Hawkes, D.J.; Barratt, D.C. Deep learning for non-rigid MR to ultrasound registration. IEEE Trans. Med. Imaging 2019, 38, 1234–1245.

14. Chen, J.; Liu, Y.; Wei, S.; Bian, Z.; Subramanian, S.; Carass, A.; Prince, J.L.; Du, Y. A survey on deep learning in medical image registration: New technologies, uncertainty, evaluation metrics, and beyond. arXiv 2024, arXiv:2307.15615.

15. Baum, Z.M.C.; Hu, Y.; Barratt, D.C. Real-time multimodal image registration with partial intraoperative point-set data. Med. Image Anal. 2021, 74, 102231.

16. De Vos, B.D.; Berendsen, F.F.; Viergever, M.A.; Sokooti, H.; Staring, M.; Išgum, I. A deep learning framework for unsupervised affine and deformable image registration. Med. Image Anal. 2019, 52, 128–143.

17. Lei, Y.; Matkovic, L.A.; Roper, J.; Wang, T.; Zhou, J.; Ghavidel, B.; McDonald, M.; Patel, P.; Yang, X. Diffeomorphic transformer-based abdomen MRI-CT deformable image registration. Med. Phys. 2024, 51, 6176–6184.

18. Ramadan, H.; El Bourakadi, D.; Yahyaouy, A.; Tairi, H. Medical image registration in the era of Transformers: A recent review. Inform. Med. Unlocked 2024, 49, 101540.

19. Chen, J.; Frey, E.C.; He, Y.; Du, R. TransMorph: Transformer for unsupervised medical image registration. Med. Image Anal. 2022, 82, 102615.

20. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

21. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

22. Baum, Z.; Saeed, S.; Min, Z.; Hu, Y.; Barratt, D. MR to ultrasound registration for prostate challenge - Dataset. In Proceedings of the MICCAI 2023, Vancouver, BC, Canada, 8–12 October 2023.

23. Avants, B.B.; Tustison, N.J.; Stauffer, M.; Song, G.; Wu, B.; Gee, J.C. The Insight ToolKit image registration framework. Front. Neuroinformatics 2014, 8, 44.

24. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

25. Abraham, N.; Khan, N.M. A Novel Focal Tversky Loss Function with Improved Attention U-Net for Lesion Segmentation. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 943–947.

| Method | DSC | RDSC | TRE (mm) | RTRE (mm) | References |
|---|---|---|---|---|---|
| Our Model | 0.865 | 0.898 | 2.278 | 1.293 | N/A |
| Salient Region Matching model | 0.859 | N/A | 4.650 | N/A | [6] |
| UNet ROI (Segmentation Affine+Deformable ANTs) | 0.862 | 0.885 | 2.450 | 1.667 | [23,24] |
| Two Stage UNet (Affine+ROI Seg→Deformable) | 0.830 | 0.879 | 1.857 | 0.667 | [23,24] |
| Padding+ModeTV2 | 0.777 | 0.828 | 4.030 | 3.005 | [16] |
| LocalNet+Focal Tversky Loss | 0.702 | 0.751 | 2.370 | 1.853 | [1,25] |
| LocalNet (Not Fully Converged) | 0.553 | 0.632 | 7.654 | 5.805 | [25] |
| VoxelMorph (Not Fully Converged) | 0.352 | 0.431 | 10.727 | 8.898 | [4] |

| Configuration | DSC | RDSC | TRE (mm) | RTRE (mm) |
|---|---|---|---|---|
| Our Model (Full) | 0.865 | 0.898 | 2.278 | 1.293 |
| Our Model (w/o WeightStitching) | 0.852 | 0.881 | 2.415 | 1.427 |
| CMCA Model | 0.856 | 0.892 | 2.240 | 1.250 |
| Our Model (w/o LSTM) | 0.864 | 0.896 | 2.260 | 1.290 |
| U-Net framework Only (Not Fully Converged) | 0.553 | 0.632 | 7.654 | 5.805 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Shao, C.; Xue, R.; Gu, L. E-CMCA and LSTM-Enhanced Framework for Cross-Modal MRI-TRUS Registration in Prostate Cancer. J. Imaging 2025, 11, 292. https://doi.org/10.3390/jimaging11090292
