Contrastive Learning-Driven Image Dehazing with Multi-Scale Feature Fusion and Hybrid Attention Mechanism

Abstract

1. Introduction

- We introduce contrastive learning into image dehazing, enabling the model to preserve scene structure and texture while removing haze and thereby producing more accurate and visually consistent results.

- We propose a multi-scale dynamic feature fusion (MF) module and a hybrid attention (HA) mechanism, integrated into the Transformer-enhanced architecture as the MF-HA block. Together they help the model focus on critical regions and preserve fine-grained details across scales, improving the restoration of both local and global features.
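The contributions above do not spell out the contrastive objective. One widely used formulation in dehazing is the ratio-form contrastive regularization of Wu et al. [25]: in a fixed feature space, the dehazed output (anchor) is pulled toward the haze-free image (positive) and pushed away from the hazy input (negative). The NumPy sketch below illustrates only that formulation, not necessarily the loss used in this paper. It assumes per-layer features (for example, from a frozen pretrained encoder) are already computed; the function names and layer weights are hypothetical.

```python
import numpy as np


def l1(a, b):
    """Mean absolute difference between two feature arrays."""
    return float(np.mean(np.abs(a - b)))


def contrastive_regularization(anchor, positive, negative, weights, eps=1e-7):
    """Ratio-form contrastive regularization (after Wu et al. [25]).

    anchor:   per-layer features of the dehazed output
    positive: per-layer features of the haze-free ground truth
    negative: per-layer features of the hazy input
    weights:  per-layer weights (hypothetical values)
    """
    loss = 0.0
    for w, a, p, n in zip(weights, anchor, positive, negative):
        # Small numerator: output close to the clear image.
        # Large denominator: output far from the hazy input.
        loss += w * l1(a, p) / (l1(a, n) + eps)
    return loss


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clear = [rng.normal(size=(8, 4, 4)), rng.normal(size=(16, 2, 2))]
    hazy = [f + 1.0 for f in clear]        # toy "hazy" features
    near_clear = [f + 0.1 for f in clear]  # a good restoration
    near_hazy = [f + 0.9 for f in clear]   # a poor restoration
    w = [1.0, 1.0]
    print(contrastive_regularization(near_clear, clear, hazy, w))  # about 0.22
    print(contrastive_regularization(near_hazy, clear, hazy, w))   # about 18.0
```

Because the negative sits in the denominator, the loss grows sharply as the restoration drifts back toward the hazy input, which is what discourages residual haze.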

2. Related Work

3. The Proposed Method

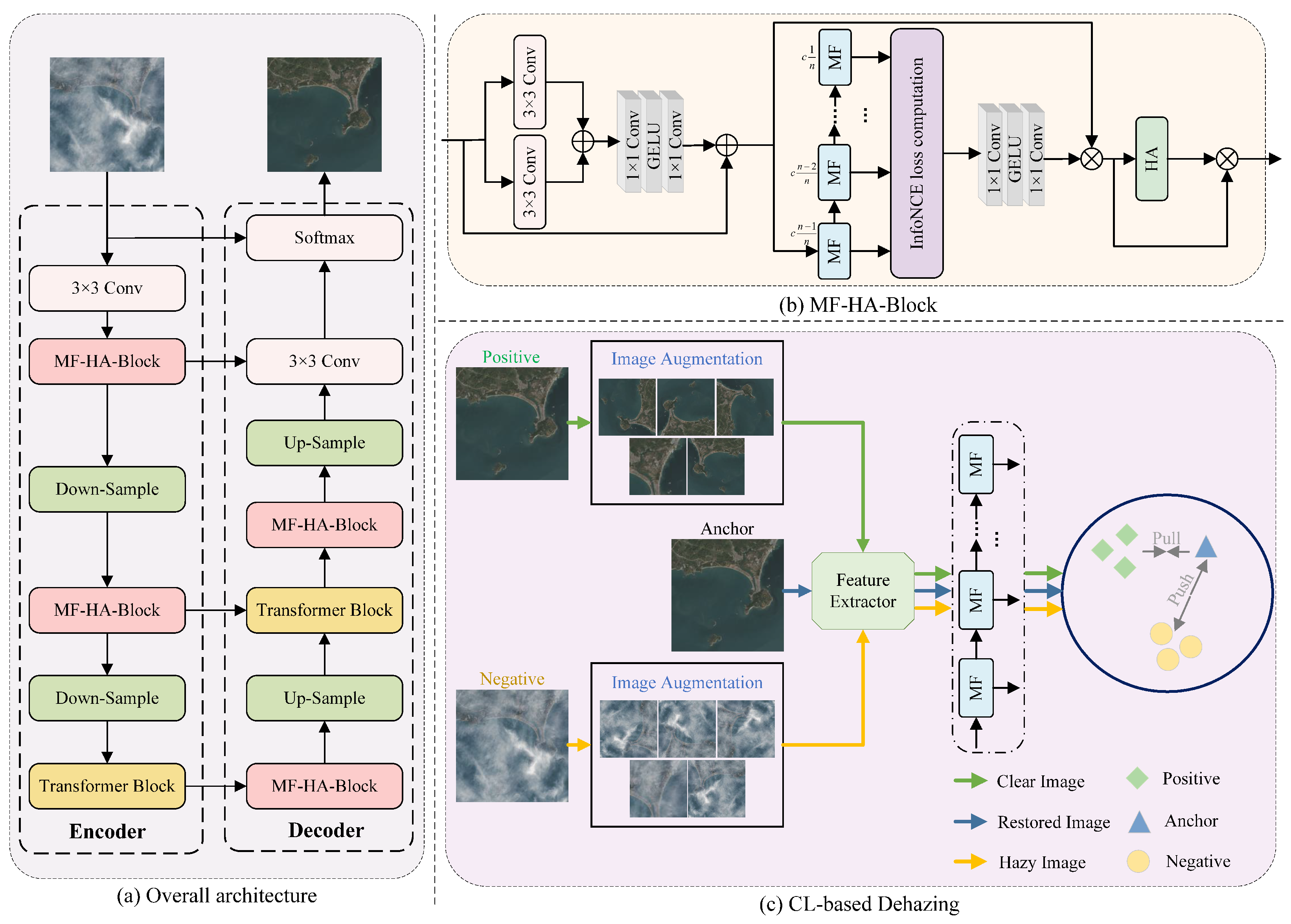

3.1. Overall Architecture

3.2. Contrastive Learning-Based Dehazing

3.3. Multi-Scale Dynamic Feature Fusion and Hybrid Attention Block

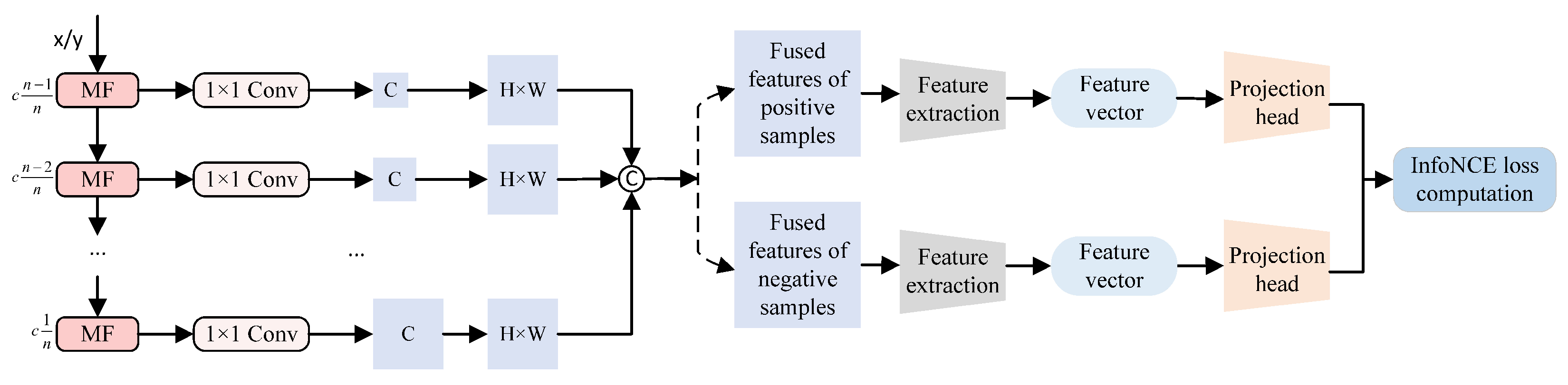
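The internal design of the MF-HA block is not described in the text here, so the following is a hypothetical NumPy sketch of the general idea rather than the authors' module. Feature maps are average-pooled at several scales, upsampled back, and fused with softmax weights computed from the input itself (the "dynamic" part). Channel attention in the squeeze-and-excitation style and spatial attention in the CBAM style are then applied in sequence, with a residual connection. All parameter names (`score_w`, `w1`, `w2`, `spatial_w`) are illustrative assumptions. For brevity, the spatial branch mixes the per-pixel mean and max maps with two scalars instead of using a convolution.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()


def avg_pool(x, s):
    """Average-pool a (C, H, W) map by integer factor s (H and W divisible by s)."""
    c, h, w = x.shape
    return x.reshape(c, h // s, s, w // s, s).mean(axis=(2, 4))


def upsample(x, s):
    """Nearest-neighbour upsampling of a (C, H, W) map by integer factor s."""
    return x.repeat(s, axis=1).repeat(s, axis=2)


def multiscale_fusion(x, score_w, scales=(1, 2, 4)):
    """Fuse multi-scale branches with input-dependent softmax weights."""
    branches = [upsample(avg_pool(x, s), s) for s in scales]
    # One score per branch from its global-average-pooled descriptor,
    # so the fusion weights change with the input ("dynamic" fusion).
    scores = np.array([score_w @ b.mean(axis=(1, 2)) for b in branches])
    alpha = softmax(scores)
    fused = sum(a * b for a, b in zip(alpha, branches))
    return fused, alpha


def hybrid_attention(x, w1, w2, spatial_w):
    """Channel attention (SE-style) followed by spatial attention (CBAM-style)."""
    desc = x.mean(axis=(1, 2))                             # (C,) channel descriptor
    ca = sigmoid(w2 @ np.maximum(w1 @ desc, 0.0))          # (C,) channel weights
    x = x * ca[:, None, None]
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])     # (2, H, W)
    sa = sigmoid(np.tensordot(spatial_w, pooled, axes=1))  # (H, W) spatial weights
    return x * sa[None, :, :]


def make_params(c, rng, reduction=4):
    """Random illustrative parameters; a trained model would learn these."""
    return {
        "score_w": 0.1 * rng.normal(size=c),
        "w1": 0.1 * rng.normal(size=(c // reduction, c)),
        "w2": 0.1 * rng.normal(size=(c, c // reduction)),
        "spatial_w": np.array([0.5, 0.5]),
    }


def mf_ha_block(x, params):
    """MF then HA with a residual connection; returns output and fusion weights."""
    fused, alpha = multiscale_fusion(x, params["score_w"])
    out = x + hybrid_attention(fused, params["w1"], params["w2"], params["spatial_w"])
    return out, alpha


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(16, 32, 32))
    y, alpha = mf_ha_block(x, make_params(16, rng))
    print(y.shape, alpha.round(3))
```

The softmax over branch scores keeps the fusion a convex combination of scales, so the block can lean toward coarse context in dense haze or fine detail elsewhere without changing the feature magnitude.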

3.3.1. Multi-Scale Dynamic Feature Fusion Module (MF)

3.3.2. Hybrid Attention Mechanism (HA)

3.4. Overall Training

4. Experiment

4.1. Experimental Settings

4.1.1. Implementation Details

4.1.2. Datasets

4.1.3. Evaluation Metrics

4.2. Quantitative Comparison

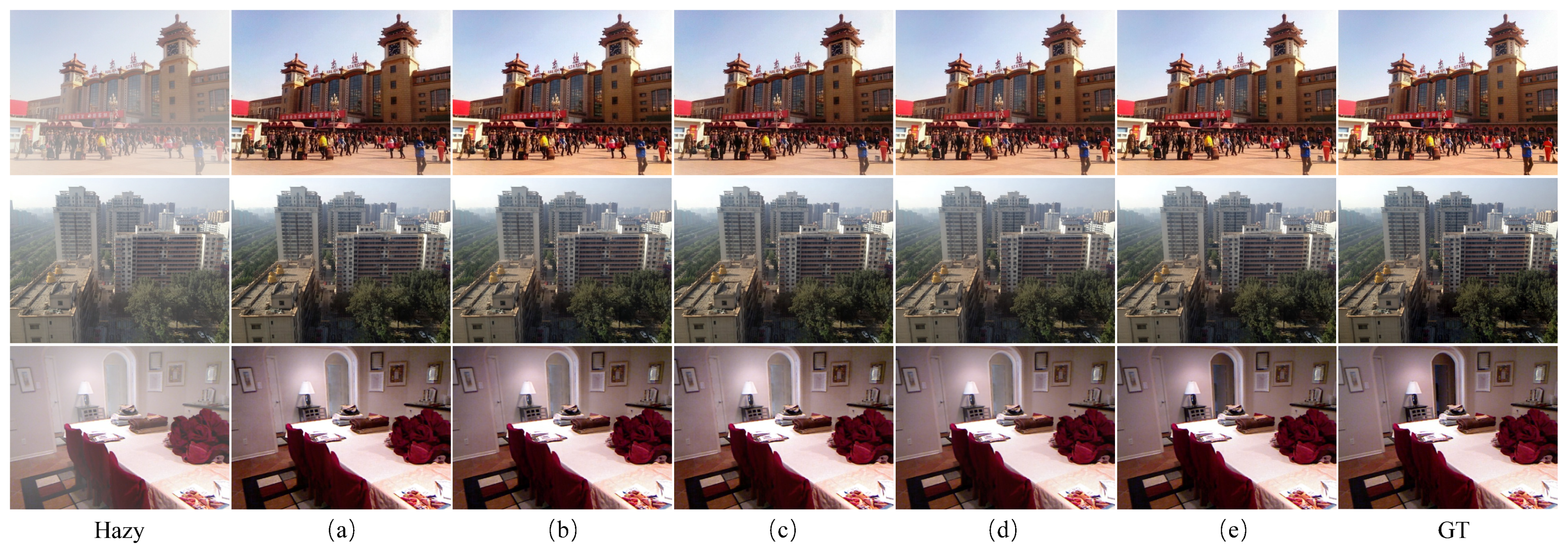

4.3. Visual Comparison

4.4. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CL-MFHA | Contrastive Learning-driven image dehazing with Multi-scale feature Fusion and Hybrid Attention mechanism |
| CL-based | Contrastive Learning-based |
| MF-HA-Block | Multi-scale dynamic feature Fusion and Hybrid Attention Block |
| MF | Multi-scale dynamic feature Fusion module |
| HA | Hybrid Attention mechanism |

References

1. Zhao, X.; Xu, F.; Liu, Z. TransDehaze: Transformer-enhanced texture attention for end-to-end single image dehaze. Vis. Comput. 2025, 41, 1621–1635.

2. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

3. Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

4. Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

5. Narasimhan, S.G.; Nayar, S.K. Chromatic framework for vision in bad weather. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2000), Hilton Head, SC, USA, 15 June 2000; IEEE: Piscataway, NJ, USA, 2000; Volume 1, pp. 598–605.

6. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25.

7. Oord, A.v.d.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

8. Kayalvizhi, S.; Karthikeyan, B.; Sathvik, C.; Gautham, C. Dehazing of Multispectral Images Using Contrastive Learning In CycleGAN. In Proceedings of the International Conference on Data & Information Sciences, Kyoto, Japan, 21–23 July 2023; Springer: Singapore, 2023; pp. 407–419.

9. Ancuti, C.O.; Ancuti, C. Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 2013, 22, 3271–3282.

10. Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

11. Qiu, D.; Cheng, Y.; Wang, X. End-to-end residual attention mechanism for cataractous retinal image dehazing. Comput. Methods Programs Biomed. 2022, 219, 106779.

12. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

13. Song, Y.; He, Z.; Qian, H.; Du, X. Vision transformers for single image dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941.

14. Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

15. Ancuti, C.O.; Ancuti, C.; Timofte, R. NH-HAZE: An image dehazing benchmark with non-homogeneous hazy and haze-free images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 444–445.

16. Zheng, C.; Ying, W.; Hu, Q. Comparative analysis of dehazing algorithms on real-world hazy images. Sci. Rep. 2025, 15, 10822.

17. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Volume 27.

18. Ren, W.; Ma, L.; Zhang, J.; Pan, J.; Cao, X.; Liu, W.; Yang, M.H. Gated fusion network for single image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3253–3261.

19. Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778.

20. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

21. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part IV; Springer: Berlin/Heidelberg, Germany, 2016; pp. 630–645.

22. Liu, X.; Ma, Y.; Shi, Z.; Chen, J. GridDehazeNet: Attention-based multi-scale network for image dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7314–7323.

23. Kuanar, S.; Mahapatra, D.; Bilas, M.; Rao, K. Multi-path dilated convolution network for haze and glow removal in nighttime images. Vis. Comput. 2022, 38, 1121–1134.

24. Wang, Y.; Yan, X.; Wang, F.L.; Xie, H.; Yang, W.; Zhang, X.P.; Qin, J.; Wei, M. UCL-Dehaze: Toward real-world image dehazing via unsupervised contrastive learning. IEEE Trans. Image Process. 2024, 33, 1361–1374.

25. Wu, H.; Qu, Y.; Lin, S.; Zhou, J.; Qiao, R.; Zhang, Z.; Xie, Y.; Ma, L. Contrastive learning for compact single image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 10551–10560.

26. Li, J.; Zhou, P.; Xiong, C.; Hoi, S.C. Prototypical contrastive learning of unsupervised representations. arXiv 2020, arXiv:2005.04966.

27. Zhou, H.; Chen, Z.; Li, Q.; Tao, T. Dehaze-UNet: A Lightweight Network Based on UNet for Single-Image Dehazing. Electronics 2024, 13, 2082.

28. Wang, Z.; Zhao, H.; Peng, J.; Yao, L.; Zhao, K. ODCR: Orthogonal Decoupling Contrastive regularization for unpaired image Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 25479–25489.

29. Wang, Y.; He, B. CasDyF-Net: Image dehazing via cascaded dynamic filters. arXiv 2024, arXiv:2409.08510.

30. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

31. Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated context aggregation network for image dehazing and deraining. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1375–1383.

32. Luo, P.; Xiao, G.; Gao, X.; Wu, S. LKD-Net: Large kernel convolution network for single image dehazing. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1601–1606.

33. Cui, Y.; Ren, W.; Cao, X.; Knoll, A. Revitalizing convolutional network for image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9423–9438.

34. Guo, C.L.; Yan, Q.; Anwar, S.; Cong, R.; Ren, W.; Li, C. Image dehazing transformer with transmission-aware 3D position embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 5812–5820.

35. Chen, Z.; He, Z.; Lu, Z.M. DEA-Net: Single image dehazing based on detail-enhanced convolution and content-guided attention. IEEE Trans. Image Process. 2024, 33, 1002–1015.

36. Cui, Y.; Ren, W.; Knoll, A. Omni-kernel network for image restoration. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 1426–1434.

| Methods | RESIDE-6K PSNR (dB) ↑ | RESIDE-6K SSIM ↑ | RESIDE-6K LPIPS ↓ | RS-Haze PSNR (dB) ↑ | RS-Haze SSIM ↑ | RS-Haze LPIPS ↓ |
|---|---|---|---|---|---|---|
| GCANet [31] | 26.58 | 0.945 | 0.048 | 34.41 | 0.949 | 0.074 |
| GridDehazeNet [22] | 25.06 | 0.938 | 0.051 | 34.58 | 0.947 | 0.072 |
| FFA-Net [10] | 28.32 | 0.953 | 0.032 | 37.40 | 0.955 | 0.060 |
| DehazeFormer-S [13] | 30.62 | 0.976 | 0.016 | 39.57 | 0.970 | 0.068 |
| LKD-Net-B [32] | 30.67 | 0.976 | 0.022 | 37.98 | 0.965 | 0.063 |
| ConvIR-B [33] | 30.96 | 0.966 | 0.015 | 39.47 | 0.963 | 0.062 |
| Ours | 31.85 | 0.980 | 0.014 | 39.76 | 0.971 | 0.059 |
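PSNR, as reported in the table above, is 10·log10(MAX²/MSE) between the restored and ground-truth images. Because it is logarithmic in the MSE, a PSNR gain corresponds to a multiplicative error reduction: the 0.89 dB margin over ConvIR-B on RESIDE-6K (31.85 vs. 30.96 dB) works out to roughly 18.5% lower MSE. Strictly, that conversion holds per image, since the table averages PSNR over the test set. A minimal NumPy sketch, assuming images scaled to [0, 1] (the helper names are ours, not from the paper):

```python
import numpy as np


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val**2 / MSE)."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


def mse_ratio_from_psnr_gain(gain_db):
    """MSE_new / MSE_old implied by a PSNR improvement of gain_db (same max_val)."""
    return 10.0 ** (-gain_db / 10.0)


if __name__ == "__main__":
    print(psnr(np.full((4, 4), 0.1), np.zeros((4, 4))))  # MSE = 0.01, so about 20 dB
    ratio = mse_ratio_from_psnr_gain(31.85 - 30.96)      # RESIDE-6K, Ours vs. ConvIR-B
    print(f"{ratio:.3f}")                               # 0.815
```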

| Methods | PSNR (dB) | SSIM |
|---|---|---|
| GridDehazeNet [22] | 18.33 | 0.667 |
| FFA-Net [10] | 19.87 | 0.692 |
| DeHamer [34] | 20.66 | 0.684 |
| DehazeFormer [13] | 20.31 | 0.761 |
| DEA-Net [35] | 20.84 | 0.801 |
| OKNet [36] | 20.29 | 0.800 |
| Ours | 20.98 | 0.803 |

| Net | MF | HA | CL-Based Dehazing | PSNR (dB) | SSIM |

|---|---|---|---|---|---|

| (a) | 28.43 | 0.966 | |||

| (b) | ✓ | 30.53 | 0.973 | ||

| (c) | ✓ | 29.02 | 0.968 | ||

| (d) | ✓ | ✓ | 31.34 | 0.976 | |

| (e) | ✓ | ✓ | ✓ | 31.85 | 0.980 |

| Augmentation Method | Cropping | Geometric | Photometric | PSNR (dB) | SSIM |

|---|---|---|---|---|---|

| (f) | 29.93 | 0.973 | |||

| (g) | ✓ | 30.42 | 0.974 | ||

| (h) | ✓ | ✓ | 30.94 | 0.976 | |

| (i) | ✓ | ✓ | ✓ | 31.85 | 0.980 |
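The augmentation ablation above toggles cropping, geometric, and photometric transforms. The exact settings are not given here; the NumPy sketch below shows one plausible way to apply them to paired training data, under the assumption that every random transform is applied identically to the hazy input and its haze-free target so the pair stays pixel-aligned. The crop size (256), the flip/90-degree-rotation set, the brightness range (0.8 to 1.2), and the function name `augment_pair` are hypothetical choices, and images are assumed to be at least `crop` pixels on each side when cropping is enabled.

```python
import numpy as np


def augment_pair(hazy, clear, rng, crop=256, use_crop=True,
                 use_geometric=True, use_photometric=True):
    """Apply the same random transforms to a hazy/clear pair of (H, W, 3) arrays in [0, 1]."""
    if use_crop:
        h, w, _ = hazy.shape
        top = int(rng.integers(0, h - crop + 1))
        left = int(rng.integers(0, w - crop + 1))
        hazy = hazy[top:top + crop, left:left + crop]
        clear = clear[top:top + crop, left:left + crop]
    if use_geometric:
        k = int(rng.integers(0, 4))  # number of 90-degree rotations
        hazy, clear = np.rot90(hazy, k), np.rot90(clear, k)
        if rng.random() < 0.5:       # horizontal flip
            hazy, clear = hazy[:, ::-1], clear[:, ::-1]
    if use_photometric:
        gain = rng.uniform(0.8, 1.2)  # hypothetical brightness range
        hazy = np.clip(hazy * gain, 0.0, 1.0)
        clear = np.clip(clear * gain, 0.0, 1.0)
    return np.ascontiguousarray(hazy), np.ascontiguousarray(clear)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((300, 320, 3))
    a, b = augment_pair(img, img.copy(), rng)
    print(a.shape, np.array_equal(a, b))
```

Sharing one random draw per transform between the two images is the key design choice: an independent crop or flip on either side would turn the supervised target into a misaligned image.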

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, H.; Wang, J.; Tu, X.; Niu, Z.; Wang, Y. Contrastive Learning-Driven Image Dehazing with Multi-Scale Feature Fusion and Hybrid Attention Mechanism. J. Imaging 2025, 11, 290. https://doi.org/10.3390/jimaging11090290
