2. Related Works

In this section, we review methods aimed at preserving naturalness for both dichromats and normal trichromats, and their modifications.

The method presented in [13] can be regarded as a method that aims to maintain naturalness for both dichromats and normal trichromats. Within the framework of this method, first, an image simulating the loss of contrast experienced by dichromats is generated. This image is then daltonized, aiming to preserve naturalness for dichromats. Only at the final step, to achieve naturalness for trichromats, is a component of the original image that is indistinguishable to dichromats added to the processed image. The authors indicate that this former operation fails to achieve its intended goal.

In the study by Chen et al. [28], the authors proposed a neural network-based daltonization method termed CVD-Swin. This approach utilizes a loss function defined as a weighted sum of two components: one aimed at preserving image naturalness and the other at restoring contrast typically lost in dichromatic vision. However, the study did not establish the optimal weighting between these two objectives.

In the following, methods that manipulate only the achromatic component of the image are considered.

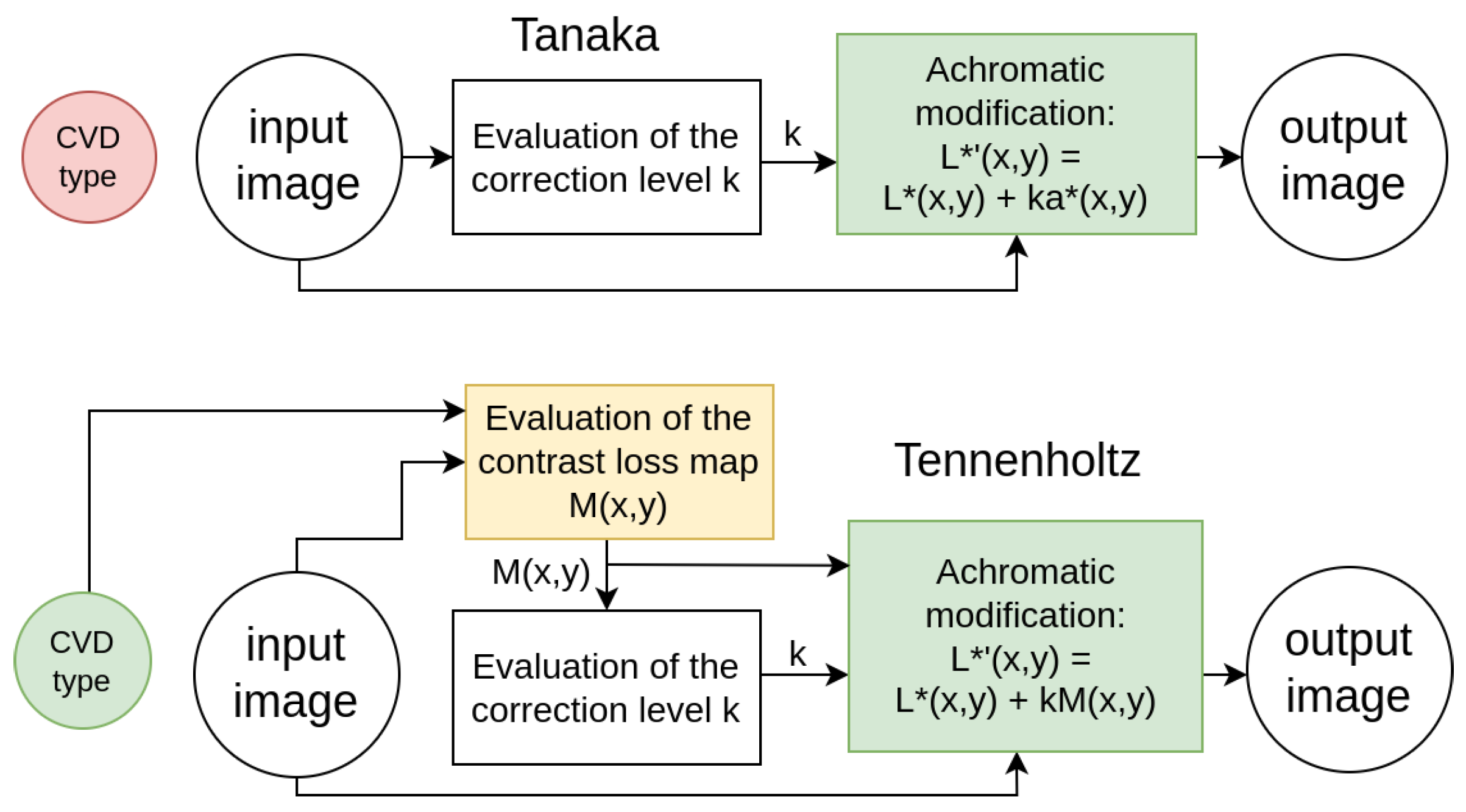

Tanaka’s method [21] augments each pixel’s achromatic component (the $L^*$ component of the CIELAB image) with its chromatic component ($a^*$ and $b^*$ of the CIELAB image) using a single coefficient optimized for each input image (Figure 1). The main disadvantage of Tanaka’s method is that it does not take into account the type of CVD, which leads to highly inaccurate restoration of lost details.

Tennenholtz’s method [20] computes a mean contrast loss map using each pixel’s neighborhood and modifies the achromatic component based on this map (Figure 1). In this method, as highlighted by Meng and Tanaka [21], the visibility of elements within the image is enhanced by emphasizing the contours of the objects. Restoring object contours instead of the objects themselves allows partial recovery of the informational content of the original image; however, it alters the image’s appearance.

To restore not the contours of objects but the objects themselves, several methods utilize the concept proposed by Socolinsky and Wolff [29]. In this concept, local contrast is determined by the Di Zenzo structural tensor, from which a target vector field of original contrast is derived. The resulting image is adjusted as a whole to make its gradient field as close as possible to the target. We consider the approach proposed by Socolinsky and Wolff to be the most promising, and the methods based on it will be examined further.

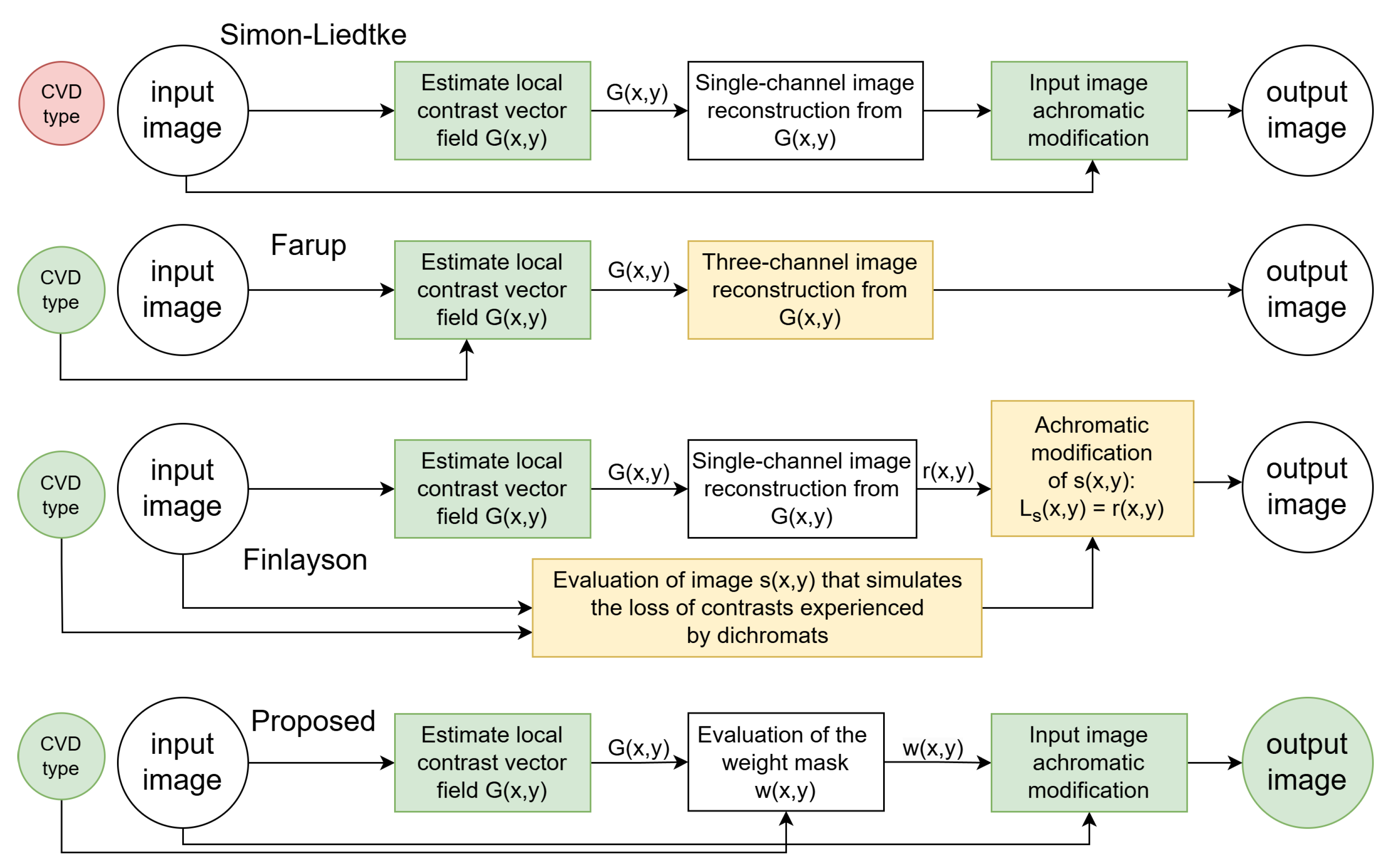

Simon-Liedtke and Farup [19] introduced such a method, which ensures naturalness by processing only the achromatic component. However, a drawback of the method proposed in [19] is that its contrast preservation does not account for the CVD type, since it relies on a contrast-preserving RGB-to-grayscale transformation (Figure 2).

The subsequent research by the Farup group took a different direction [23,30]. In his 2020 work [23], Farup presented an anisotropic daltonization method aimed at maintaining local contrast specifically lost in observers with CVD (Figure 2). However, this method does not ensure naturalness preservation as it involves modifications beyond the achromatic component.

Finlayson’s group also discussed a daltonization method based on Socolinsky and Wolff’s approach, employing the POP image fusion technique to simplify the original method [31]. The paper suggests compressing the original RGB channels into one using POP and substituting the achromatic component of the image. In Finlayson’s method, an image is constructed that simulates the loss of contrast experienced by dichromats, with modifications applied to the achromatic component of this image (Figure 2). The resulting image is unnatural for trichromats.

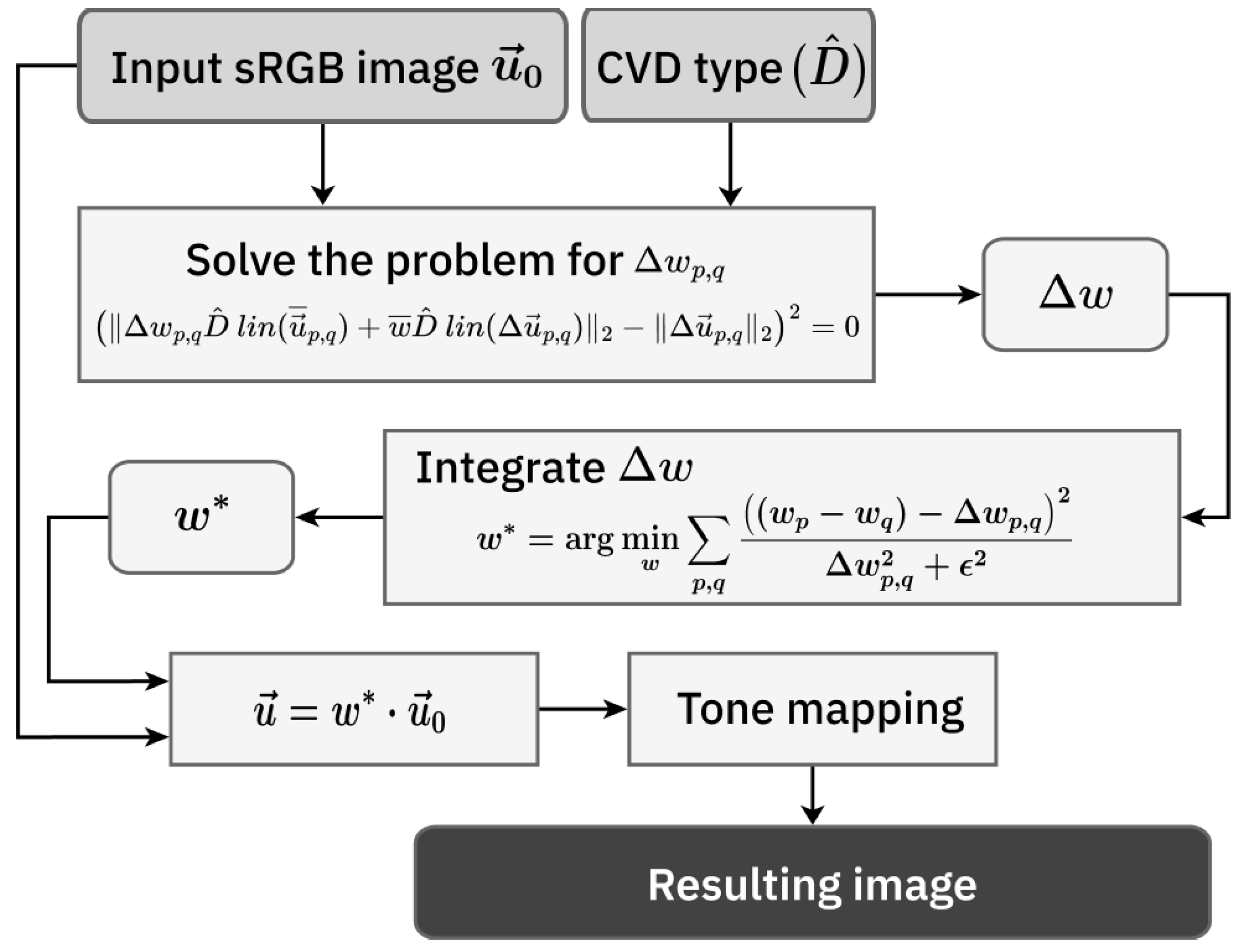

To address the identified shortcomings of the related methods, we propose a novel daltonization technique. Our method maintains naturalness by modifying only the achromatic component of the image and formulates an optimization problem similar to Socolinsky and Wolff’s approach [29] to retain local contrast (Figure 2). Preliminary results, without a detailed description and comprehensive testing, were reported in [32].

3. Simulation Method

To develop a daltonization algorithm, it is crucial to model color images as perceived by individuals with CVD. This is achieved through simulating color perception for dichromats. In this study, we employed the dichromat simulation algorithm proposed by Viénot et al. [33]. We did not use any other algorithm to model anomalous trichromacy.

The following basic requirement is imposed on dichromat color perception simulators: each set of colors indistinguishable to a dichromat must be displayed by the simulator as one color from this set, although which one is not prescribed. Clearly, there can be an infinite variety of approaches to simulating dichromatic vision. They differ in how the “blind” channel is represented in the simulated image, that is, the channel that corresponds to the type of missing cones (L for protanopia, M for deuteranopia). Typically, the specific color assigned to represent a set of indistinguishable colors is chosen to satisfy some additional requirement, such as preserving colors that are assumed to appear the same to dichromatic and normal observers. The most straightforward mathematically are simulators employing linear transformations of color vectors: the signal of the “blind” channel becomes a linear combination of the signals from the other two channels. Note that the well-known Brettel’s simulator is only piecewise linear since it uses two half-planes as a model of a dichromat color space [34].

Viénot’s simulation retains blue, yellow, and grayscale in the simulation image—colors distinguishable by protanopes and deuteranopes. Furthermore, it utilizes almost the entire display’s color range.

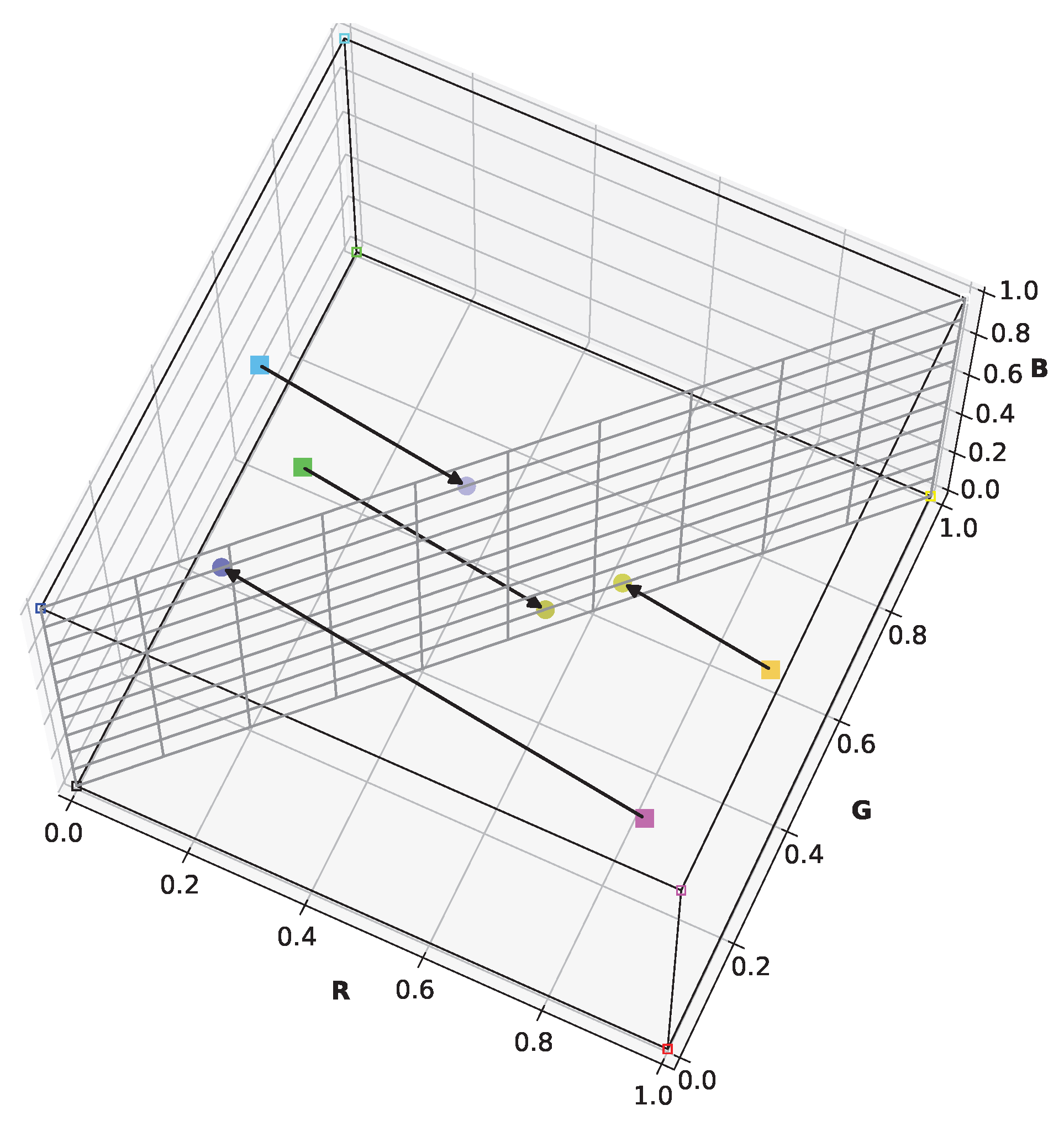

Figure 3 illustrates the simulation of certain arbitrary colors for a protanope. It can be seen that the plane representing the dichromat color space contains the black, white, blue, and yellow points of the RGB cube. Unlike Brettel’s algorithm, this linear approach streamlines its integration into the daltonization algorithm. These aspects are crucial for visually evaluating contrast loss in dichromats and achieving contrast restoration through daltonization.

Since Viénot et al. [33] developed their simulations for a display with the same primaries and reference white as the sRGB standard uses, we were able to use the matrix for converting linear RGB values to trichromat cone responses (LMS) from that paper without change. When applying the algorithm to displays with different color gamuts, recalculating the matrix is straightforward using the authors’ proposed methodology. We also utilized the authors’ simulation matrices for protanope and deuteranope perception without making any alterations.

Because both linear RGB-to-LMS conversion and dichromat simulation in the LMS color space are linear operations, we can replace the individual sequential matrix–vector multiplications with a single multiplication of the product of the corresponding matrices by the color vector. Following the notation of Viénot et al. [33], we can write the following for a protanope:

$$\mathbf{c}_p = T^{-1} P\, T\, \mathbf{c},$$

where $\mathbf{c}_p$ is the protanope simulation of the color vector $\mathbf{c}$, $T$ is the matrix converting linear RGB values to LMS values, and $P$ is the protanope simulation matrix in the LMS color space. A similar equation can be written for the deuteranope simulation.

Thus, the simulation is a linear transformation described by the following matrix equation:

$$\mathbf{c}' = M_{\mathrm{sim}}\, \mathbf{c},$$

where $\mathbf{c}$ represents the original color vector, $\mathbf{c}'$ is the color vector in the simulated image, and $M_{\mathrm{sim}}$ is the simulation matrix. The following matrices were used for simulating the perception of protanopes and deuteranopes:

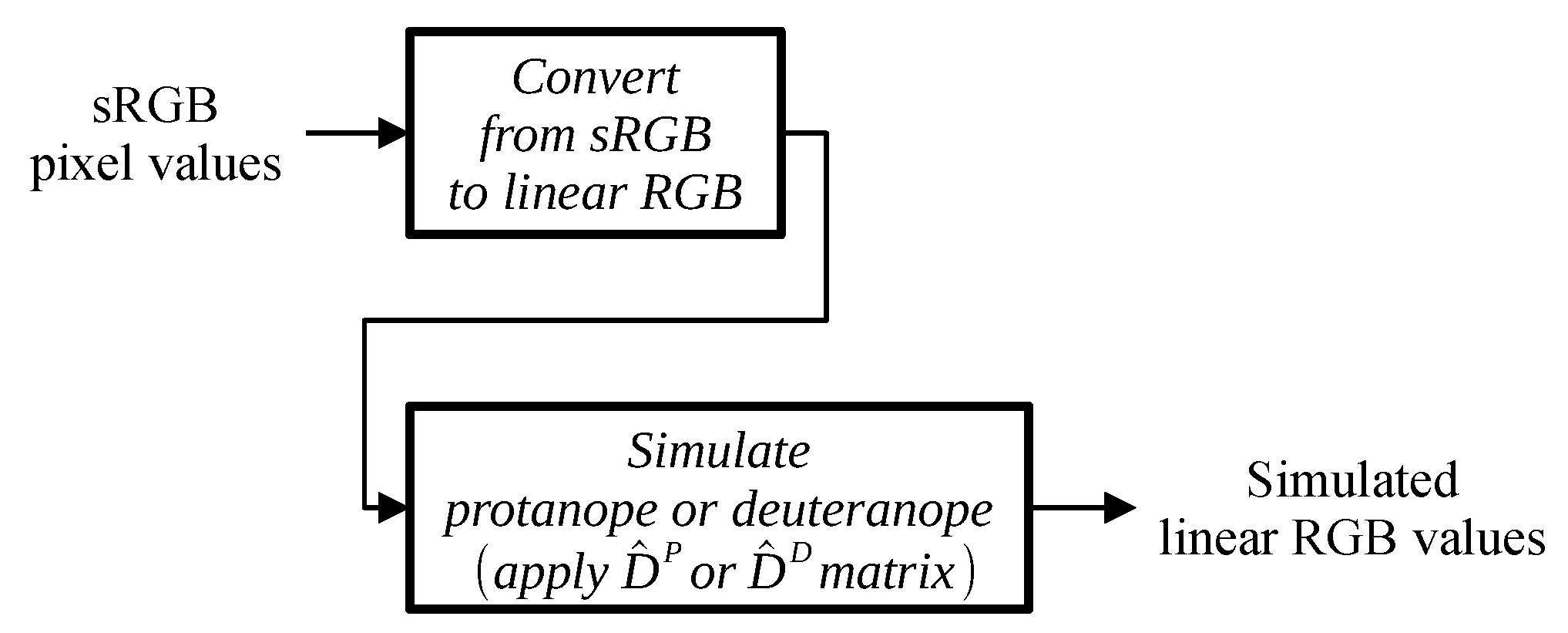

Conversion of the image pixel values to the simulated linear RGB values for a protanope or deuteranope is illustrated in Figure 4.
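The folding of the three linear steps into one matrix can be sketched as follows. This is a minimal illustration, not the authors' code: the numeric matrices are the values commonly quoted from Viénot et al. and are treated here as assumptions to be checked against the paper, and the names `simulation_matrix` and `simulate` are hypothetical.

```python
import numpy as np

# Linear RGB -> LMS matrix and LMS-space dichromat projections, as commonly
# quoted from Vienot et al.; assumed values, verify against the paper.
RGB_TO_LMS = np.array([
    [17.8824, 43.5161, 4.11935],
    [3.45565, 27.1554, 3.86714],
    [0.0299566, 0.184309, 1.46709],
])
PROTAN_LMS = np.array([
    [0.0, 2.02344, -2.52581],   # L is rebuilt from M and S
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])
DEUTAN_LMS = np.array([
    [1.0, 0.0, 0.0],
    [0.494207, 0.0, 1.24827],   # M is rebuilt from L and S
    [0.0, 0.0, 1.0],
])


def simulation_matrix(dichromat_lms):
    """Fold RGB->LMS, the LMS projection, and LMS->RGB into one 3x3 matrix."""
    return np.linalg.inv(RGB_TO_LMS) @ dichromat_lms @ RGB_TO_LMS


def simulate(linear_rgb, matrix):
    """Apply a 3x3 simulation matrix to an (..., 3) array of linear RGB values."""
    return np.asarray(linear_rgb, dtype=float) @ matrix.T


M_PROTAN = simulation_matrix(PROTAN_LMS)
M_DEUTAN = simulation_matrix(DEUTAN_LMS)
```

With these values, white and blue are mapped (almost exactly) to themselves, and applying the simulation twice gives the same result as applying it once, as expected of a projection.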

4. Achromatic Contrast-Preserving Daltonization

As mentioned in Section 2, daltonization methods aim to preserve local contrast. For a single-channel image, local contrast is formalized as the gradient. For multi-channel images, a pseudo-gradient was introduced by Socolinsky and Wolff [29]. Following them, we define a multi-channel image as a rectangle $\Omega \subset \mathbb{R}^2$ together with a map $f: \Omega \to \Phi^n$, where $\Phi^n$ denotes $n$-dimensional photometric space. The pseudo-gradient is based on the structural tensor $S$ defined by Di Zenzo [35],

$$S(x, y) = J^{\top}(x, y)\, J(x, y),$$

where $J(x, y)$ denotes the Jacobian matrix of image $f$ at point $(x, y)$:

$$J = \begin{pmatrix} \partial f_1 / \partial x & \partial f_1 / \partial y \\ \vdots & \vdots \\ \partial f_n / \partial x & \partial f_n / \partial y \end{pmatrix}.$$

The pseudo-gradient is represented by the primary eigenvalue $\lambda$ along with the associated eigenvector $\mathbf{e}$ of the structural tensor $S$:

$$\pm\sqrt{\lambda}\, \mathbf{e}. \tag{6}$$

For selecting the sign of the pseudo-gradient in Equation (6), several solutions exist [36,37,38]. One straightforward but effective method involves choosing directions along the gradients of the integral image formed by summing the channels of the original image at each pixel [29]. The pseudo-gradient with the selected sign is denoted as $V_f$.
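The construction of the signed pseudo-gradient can be sketched with NumPy as follows. This is a minimal illustration under assumptions: derivatives are approximated with `numpy.gradient`, the magnitude is taken as the square root of the primary eigenvalue (as in Socolinsky and Wolff's formulation), and the function name `pseudo_gradient` is hypothetical.

```python
import numpy as np


def pseudo_gradient(img):
    """Signed Di Zenzo pseudo-gradient of an H x W x C image.

    Returns an H x W x 2 array holding the (x, y) components per pixel.
    """
    img = np.asarray(img, dtype=float)
    # Per-channel finite-difference derivatives along rows (y) and columns (x).
    gy, gx = np.gradient(img, axis=(0, 1))
    # Entries of the 2x2 structural tensor S = J^T J, summed over channels.
    sxx = (gx * gx).sum(axis=-1)
    sxy = (gx * gy).sum(axis=-1)
    syy = (gy * gy).sum(axis=-1)
    tensor = np.stack(
        [np.stack([sxx, sxy], axis=-1), np.stack([sxy, syy], axis=-1)],
        axis=-2,
    )
    # eigh returns eigenvalues in ascending order; the last one is primary.
    evals, evecs = np.linalg.eigh(tensor)
    magnitude = np.sqrt(np.clip(evals[..., -1], 0.0, None))
    field = magnitude[..., None] * evecs[..., :, -1]
    # Sign selection: align with the gradient of the channel-sum image.
    ty, tx = np.gradient(img.sum(axis=-1))
    guide = np.stack([tx, ty], axis=-1)
    sign = np.where((field * guide).sum(axis=-1) < 0.0, -1.0, 1.0)
    return field * sign[..., None]
```

Where the guiding gradient is zero the sign remains arbitrary in this sketch; the alternatives cited above address such cases differently.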

Initially, the local contrast-preserving paradigm was proposed for the multi-channel image visualization problem, where the number of input channels must be reduced from two or more to one while preserving information important for the human visual system [29,39]. We first briefly discuss the contrast-preserving visualization approach and then use it for daltonization. The key aspect of the Socolinsky and Wolff contrast-preserving paradigm is image reconstruction from known contrast: the resulting grayscale image $\hat{u}$ is estimated by optimization so that its gradient $\nabla u$ matches the pseudo-gradient $V_f$ as closely as possible:

$$\hat{u} = \arg\min_{u} \int_{\Omega} \left\| \nabla u - V_f \right\|^2 \, dx\, dy, \tag{7}$$

where $f$ is the input image defined on the domain $\Omega$ and $\|\cdot\|$ indicates the Euclidean norm. The optimization criterion in Formula (7) is commonly referred to as linear [37,40].
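Reconstruction of an image from known contrast under the linear criterion can be illustrated on a small grid. The sketch below is an assumption-laden toy: it uses forward differences between 4-connected neighbors, a dense least-squares solve (`numpy.linalg.lstsq`) instead of a scalable solver, and one extra equation that fixes the otherwise undetermined mean; the function name is hypothetical.

```python
import numpy as np


def reconstruct_from_differences(dx, dy, mean_value=0.0):
    """Least-squares image whose neighbor differences match dx and dy.

    dx: H x (W-1) targets for u[y, x+1] - u[y, x]
    dy: (H-1) x W targets for u[y+1, x] - u[y, x]
    """
    dx = np.asarray(dx, dtype=float)
    dy = np.asarray(dy, dtype=float)
    h, w = dy.shape[0] + 1, dx.shape[1] + 1
    n = h * w
    idx = np.arange(n).reshape(h, w)
    left, right = idx[:, :-1].ravel(), idx[:, 1:].ravel()
    top, bottom = idx[:-1, :].ravel(), idx[1:, :].ravel()
    m = left.size + top.size
    a = np.zeros((m + 1, n))
    rows_h = np.arange(left.size)
    a[rows_h, right] = 1.0
    a[rows_h, left] = -1.0
    rows_v = left.size + np.arange(top.size)
    a[rows_v, bottom] = 1.0
    a[rows_v, top] = -1.0
    # Differences do not determine the mean; pin it with one extra equation.
    a[m, :] = 1.0 / n
    b = np.concatenate([dx.ravel(), dy.ravel(), [mean_value]])
    u, *_ = np.linalg.lstsq(a, b, rcond=None)
    return u.reshape(h, w)
```

When the target differences come from an actual image, the reconstruction recovers it exactly; for an inconsistent target field, such as a pseudo-gradient, the result is the least-squares compromise.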

According to the Weber–Fechner law, the psychophysical significance lies in the relative values of stimulus intensities. In the current context, this law becomes apparent as gradient errors are clearly distinguishable in monotonic areas of the image, while in non-monotonic areas, the same absolute errors remain unnoticed. The linear criterion (7) does not consider this perceptual effect, potentially resulting in the emergence of unwanted gradients (halo) in the final image [41]. To address this, Sokolov et al. proposed to replace the linear criterion with a non-linear alternative [37,40]:

where

is the regularization constraint. It has been shown that contrast-preserving visualization with the Sokolov criterion outperforms alternative approaches [42]. To date, we are not aware of any other non-linear criteria. Now let us return to the daltonization, taking functional (8) as a starting point.

Similar to the visualization methods, daltonization methods should be based on channel number reduction: to improve visibility of image elements for individuals with CVD, one needs to model the contrast loss they experience. This is achieved using the simulation method, which for a given CVD type reduces one channel that corresponds to the type of missing cones. Unlike the visualization approach, daltonization methods return an image with the same number of channels as the input image. In our daltonization algorithm, we use the described visualization approach with several modifications.

First, we replace the resulting single-channel image

u with a three-channel daltonized image

. The gradient of a single-channel image

is replaced with the pseudo-gradient of a three-channel image

. Second, we note that in the denominator of the non-linear Sokolov criterion, the contribution of the contrast of the resulting image

and the contrast of the original image

are equal. However, in the visualization, as well as in the daltonization, this is not the case. The input image contains reference contrasts to which the output image should be as close as possible, and not vice versa. If

while

, the criterion value should tend to infinity, which is not the case for criterion (8).

Combining the above, we propose the following non-linear criterion for daltonization:

where

is the simulation matrix. This criterion, on the one hand, retains the advantage of the Sokolov criterion, that is, it prevents the appearance of a halo; on the other hand, it is asymmetrical in favor of the reference image.

The problem statement (9) is focused on preserving local contrast, but so far does not take into account the preservation of naturalness for both dichromats and trichromats. To highlight boundaries invisible to dichromats, we modulate the achromatic component of the pixels by multiplying the values of

by a certain weight

w, calculated for each pixel:

where

denotes the original image,

w is the weight mask, and

is the resulting daltonized image.
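The effect of this per-pixel modulation can be illustrated with a short sketch, assuming the weight scales all three linear RGB channels of a pixel; the helper names `modulate` and `chromaticity` are hypothetical. Because every channel of a pixel is scaled by the same factor, the channel proportions are unchanged, which is the sense in which only the achromatic component is modified.

```python
import numpy as np


def modulate(image, weights):
    """Scale each pixel's color vector (H x W x 3) by its own weight (H x W)."""
    image = np.asarray(image, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return image * weights[..., None]


def chromaticity(image, eps=1e-12):
    """Channel shares that sum to one; independent of overall intensity."""
    image = np.asarray(image, dtype=float)
    return image / (image.sum(axis=-1, keepdims=True) + eps)
```

For example, a pixel (0.2, 0.4, 0.1) with weight 1.5 becomes (0.3, 0.6, 0.15), while its chromaticity stays the same.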

We replace the pseudo-gradient with the Euclidean norm of the difference of two color vectors in pixels with coordinates p and q. In the current study we consider p and q as close neighbors: for each

the pair

and

is considered. Potentially, it might be useful to increase the size of the neighborhood as human vision perceives local contrast at different scales, depending on the angular resolution of the observed image [43]. Finally, the discretized problem (10) takes the following form:

The Euclidean norm, unlike the pseudo-gradient, allows for an increased neighborhood without any modifications in the problem statement.

Functional (11) is non-convex and thus difficult to optimize. In this regard, we make several simplifications. First, we focus solely on the numerator of the original functional (11):

Second, we employ variable substitutions of pairs

,

and

,

by their difference and mean values:

and assume that the mean mask value between adjacent pixels remains constant:

. By substituting the original variables with new ones and plugging them into (12), we obtain a quadratic equation for a

:

Equation (14) is solved independently for each pair of pixels p and q, where each pixel of the image is alternately selected as

and generates two pairs: with

and with

.

To choose between the two solutions of (14), the guiding differences are used. The guiding differences are calculated using the integral image formed by summing the channels of the original image, as in [29]. If for a given pair

the guiding difference is greater than zero, then the larger of the two solutions is chosen; if less, then the smaller.
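The root selection can be sketched under explicit assumptions. The sketch assumes that the simplified problem asks the simulated difference of the weighted pair to have the same Euclidean norm as the original color difference, that the two weights are written as a fixed mean plus or minus half of their difference $d$, and that pairs without a real root fall back to the value of $d$ closest to a solution. The function name, the fallbacks, and the exact form of the quadratic are hypothetical and may differ from the authors' Equation (14).

```python
import numpy as np


def weight_difference(c_p, c_q, sim, guide, mean_weight=1.0):
    """Pick the weight difference d = w_p - w_q for one pixel pair.

    Solves ||sim @ (w_p * c_p - w_q * c_q)|| = ||c_p - c_q|| with
    w_p = mean_weight + d / 2 and w_q = mean_weight - d / 2.
    """
    c_p = np.asarray(c_p, dtype=float)
    c_q = np.asarray(c_q, dtype=float)
    a = mean_weight * (sim @ (c_p - c_q))   # part independent of d
    b = 0.5 * (sim @ (c_p + c_q))           # part multiplied by d
    target_sq = float(np.dot(c_p - c_q, c_p - c_q))
    # ||a + d * b||^2 = target_sq  ->  qa * d^2 + qb * d + qc = 0
    qa = float(b @ b)
    qb = 2.0 * float(a @ b)
    qc = float(a @ a) - target_sq
    if qa == 0.0:
        return 0.0                          # degenerate pair (assumed fallback)
    disc = qb * qb - 4.0 * qa * qc
    if disc < 0.0:
        return -qb / (2.0 * qa)             # no real root (assumed fallback)
    root = np.sqrt(disc)
    smaller = (-qb - root) / (2.0 * qa)
    larger = (-qb + root) / (2.0 * qa)
    # Positive guiding difference selects the larger root, otherwise the smaller.
    return larger if guide > 0 else smaller
```

In this reading, the guiding difference only decides which pixel of the pair becomes relatively brighter; the magnitude of the change is fixed by the contrast that the simulation removes.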

Due to the variable substitutions, the solution of the simplified problem (14) is not the pixel weight

values but their differences

. This brings us back to the problem of image reconstruction from known contrast. For this purpose, we use the following non-linear criterion with the denominator modification proposed above (as in criterion (9)):

This problem is solved using the Adam optimizer from PyTorch v2.6.0.

Multiplying by the weights obtained from (15) may cause the daltonized image

values to exceed the displayable RGB range, so that they cannot be reproduced correctly on standard displays. Mapping such values back into the displayable range is known as tone mapping and is studied in the field of high-dynamic-range (HDR) imaging, where the HDR signal must be compressed for visualization on low-dynamic-range output devices such as regular displays [44,45]. In this study, we employ a tone-mapping technique utilizing autocontrast with a threshold set at the 0.98 quantile [46].
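One plausible reading of quantile-based autocontrast is sketched below. It is an assumption, not the procedure of [46]: the white point is taken as the 0.98 quantile over all channel values, images that already fit the display range are left unchanged, a single scale is applied to all channels, and the remaining outliers are clipped. The function name is hypothetical.

```python
import numpy as np


def autocontrast_tonemap(image, quantile=0.98):
    """Compress an H x W x 3 linear RGB image into the [0, 1] range."""
    image = np.asarray(image, dtype=float)
    white = np.quantile(image, quantile)
    # Never brighten: only rescale when the quantile exceeds the display maximum.
    scale = max(float(white), 1.0)
    return np.clip(image / scale, 0.0, 1.0)
```

Using one scale for all three channels keeps the chromaticity of every pixel that is not clipped, which matches the goal of changing only the achromatic component.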

6. Subjective Evaluation

Relying solely on quantitative metrics for assessments does not offer insight into how individuals with CVD perceive the results produced by the proposed tools [7]. Therefore, we conducted human studies involving individuals with CVD to validate our method. The same dataset was utilized for both subjective and objective evaluations.

6.1. Diagnosis of Color Vision Deficiency

Prior to the human studies, all participants underwent color vision assessment using the Color Assessment and Diagnosis (CAD) test [25]. The CAD system with an Eizo monitor CS2420 was calibrated and supplied by City Occupational LTD (London, UK); for calibration details, see [58]. CAD allows reliable and accurate identification of protans and deutans.

Additional separation into pure dichromacy and anomalous trichromacy (e.g., separation of protans into protanope and protanomalous subjects) requires additional analysis beyond the standard functionality of the system. To identify pure protanopes and deuteranopes, we inspected threshold plots retrieved from the CAD system. On the plot, we checked whether the observer’s threshold endpoints fully reached the edges of the monitor gamut. Subjects whose threshold endpoints lay on the monitor gamut boundaries were classified as putative dichromats (protanopes or deuteranopes). This method is based on the observation described in [25]. The authors of the review state: “subjects who hit the phosphor limits in the CAD test tend to also accept ‘any’ RG mixture as a match to the monochromatic yellow field in the anomaloscope test.”

6.2. Participants

A total of 17 individuals participated in our studies, comprising 3 normal trichromats, 6 individuals with protan deficiency (3 protanopes and 3 protanomalous trichromats), and 8 individuals with deutan deficiency (1 deuteranope and 7 deuteranomalous trichromats). The relatively small number of subjects is typical of daltonization studies and is due to the difficulty of finding and diagnosing people with CVD: 30 participants in [30], 12 participants in [13], 10 participants in [57], and 10 participants in [12]. In our study, the age of participants with CVD ranged from 21 to 63, averaging 35 years, with 50% of the group aged between 21 and 26.

Volunteers with CVD were recruited based on self-reported color perception issues in daily life to partake in diagnostics and experiments. All participants were male. As per the CAD test, their RG thresholds varied from 13 to 30 CAD units, categorizing them as having severe RG color deficiency or CV5 [25], comprising candidates with RG threshold values > 12 CAD units and YB threshold values within the age-related norm.

Additionally, two individuals exhibited both deutan/protan and mild tritan CVD (YB thresholds 2.25–2.57 CAD units), suggesting a possible acquired deficiency. However, only their deutan/protan CVD was considered for the results.

Except for one deuteranomalous trichromat, all participants underwent the CVD express-test to determine the type of CVD, and the corresponding results are presented in Supplementary Table S1.

6.3. Subjective Evaluation Methodology for Daltonization Method

We compared our proposed method with Farup’s anisotropic daltonization technique [23], which has publicly available source code. The participants were presented with two questions regarding contrast and naturalness for each of the 10 images in the test set. In the instructions, the contrast was referred to as “distinguishability of objects”. The definition of naturalness given in the introduction implies a subjective assessment from memory. However, a methodology based on such a definition requires complex instructions and is not suitable for images of objects that may have different colors not only after daltonization but also in reality (e.g., flowers). Therefore, instead of providing complex instructions regarding memory, participants were asked to evaluate the processed image in terms of its similarity to the explicit reference image.

When evaluating the naturalness of daltonization, only degradation is possible, and the task is to assess the degree of this degradation. Therefore, it is reasonable to use the triple-stimulus method with explicit reference, in which three images are simultaneously displayed on the screen: a reference image and two images being evaluated. This methodology involves direct comparison of two methods and provides easily interpretable results. When evaluating contrast, it is possible to observe not only degradation but also improvement relative to the reference. To not only compare algorithms but also accurately position them relative to the reference, the triple-stimulus methodology is unsuitable. For contrast evaluation, it is necessary to use the double-stimulus method with explicit reference, in which two images are displayed on the screen: the reference image and the image being evaluated. This methodology allows algorithms to be assessed independently, which also enables the comparison of results from different experiments conducted with various algorithms on the same dataset.

Combining all the considerations mentioned above, the following instructions were formulated:

“Which of the two images is more similar to the reference image?” Three images were displayed: a reference image and two test images (processed by both our method and the anisotropic daltonization technique). Answer options included choosing one of the images or selecting “Not sure”.

“Evaluate the distinguishability of objects compared to the reference. Disregard colors.” Two images were shown: a reference image and a single test image. Participants assessed the test image among four options: “Distinguishability on the test image is better than on the reference one”, “Distinguishability on the test image is worse than on the reference one”, “Approximately the same”, or “Not sure”.

No additional instructions were given. There were no restrictions on response time. Participants selected their answers using a computer mouse. Images were shown on a computer display with a resolution of 1920 by 1080 and a diagonal of 27 inches. The distance between the monitor and the observer was approximately 60 cm. Thus, the average angular size of the images was 8 degrees vertically, 12 degrees horizontally. During the assessment of both daltonization methods, participants with CVD viewed the test images, processed based on their CVD type. Participants with NCV (normal trichromats) were presented randomly with test images processed for protanopes or deuteranopes. Informed consent was obtained from all participants in the study.
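The viewing geometry above can be checked with a few lines of arithmetic. The sketch assumes square pixels and a flat 16:9 panel viewed head-on; the function names are hypothetical.

```python
import math


def pixel_pitch_cm(diagonal_inch, width_px, height_px):
    """Physical size of one (square) pixel, from the screen diagonal."""
    return diagonal_inch * 2.54 / math.hypot(width_px, height_px)


def visual_angle_deg(size_cm, distance_cm):
    """Angle subtended by an object of the given size viewed head-on."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def size_px_for_angle(angle_deg, distance_cm, pitch_cm):
    """On-screen extent in pixels that subtends the given visual angle."""
    size_cm = 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)
    return size_cm / pitch_cm


pitch = pixel_pitch_cm(27, 1920, 1080)
height_px = size_px_for_angle(8, 60, pitch)
width_px = size_px_for_angle(12, 60, pitch)
```

Under these assumptions, an image subtending 8 by 12 degrees at 60 cm occupies roughly 270 by 405 pixels on the described display.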

6.4. CVD Express-Test

The contrast loss experienced by individuals with CVD while viewing an image depends on their specific type of CVD, causing distinct details to vanish for individuals with protan or deutan deficiencies. Thus, calibration of the daltonization algorithm to the user’s CVD type, identified through the CAD method, is essential. For user convenience, implementing an autonomous CVD test to customize the algorithm according to the user’s condition would be advantageous.

Identifying CVD may be easier than specifying the precise disorder type (protan/deutan). Although Ishihara pseudoisochromatic plates rapidly detect color vision issues, they do not conclusively differentiate between protan and deutan deficiencies. Toufeeq addressed this concern in [59], but the suggested test is complex for home use. To our knowledge, there is no straightforward test in the English literature that accurately determines the type of CVD.

Thus, we implemented a test outlined by Maximov et al. [27]. It involves presenting three images: the original and simulations depicting how individuals with protanopia and deuteranopia perceive the image. These simulations are generated using Viénot’s algorithm [33]. Participants choose the image that varies the most in perceived color from the other two. Additionally, a “Not sure” option is available for ambiguous cases, accompanied by a text box to explain the decision.

Hypothetically, normal trichromats would choose the original image as significantly different, whereas dichromats would select a simulation representing the opposite type of CVD. For instance, protanopes would pick a simulation of deuteranopes’ perception, and vice versa. Subjective evaluation allowed this hypothesis to be tested, comparing the CVD express-test with the CAD test for dichromats, and revealing anomalous trichromat responses in the CVD express-test.
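The hypothesized decision rule can be written down as a small sketch. The choice labels, the majority threshold `min_share`, and the "undetermined" outcome are illustrative assumptions and are not part of the test as described.

```python
from collections import Counter

# Which observer type is expected to single out each image as "most different".
PRESUMED = {
    "original": "normal trichromat",
    "protan_sim": "deutan",    # deutans single out the protanope simulation
    "deutan_sim": "protan",    # protans single out the deuteranope simulation
}


def presumed_diagnosis(choices, min_share=0.5):
    """Map a participant's selections to a presumed diagnosis by majority."""
    counted = Counter(c for c in choices if c != "not_sure")
    if not counted:
        return "undetermined"
    label, votes = counted.most_common(1)[0]
    # Require the winning choice to exceed min_share of all trials.
    if votes / len(choices) <= min_share:
        return "undetermined"
    return PRESUMED[label]
```

For instance, a participant who picks the deuteranope simulation in 12 of 14 trials would be presumed protan under this rule.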

To simplify pairwise comparisons, three images (the original and two simulations) were presented on the monitor screen at the corners of a triangle in a randomized sequence. Each participant made 14 selections, with a new triplet of images each time. The test involved 13 participants with varying degrees of CVD (refer to Supplementary Table S1), along with 3 normal trichromats.

6.5. CVD Express-Test Results

Supplementary Table S1 displays the outcomes of the CVD express-test and its agreement with the CAD test among CVD observers. Each participant’s red–green color discrimination thresholds from the CAD setup and their image selections in the CVD express-test are presented. The final column indicates the presumed diagnosis derived from the CVD express-test. The results for normal trichromats in the CVD express-test are excluded as they consistently and confidently distinguished the original full-color image from both simulations, as expected.

The table illustrates that individuals with CVD (both dichromats and anomalous trichromats) predominantly selected an opposing type of CVD simulation as the most distinct image, validating the diagnosis obtained via the CAD method. Protanopic dichromats exhibited a 100% match between the CVD express-test and the CAD test.

Unlike normal trichromats, anomalous trichromats rarely found the original (full-color) image to be the most distinct, often perceiving a simulation opposite to their CVD type as the most different. However, individuals with mild CVD had reduced accuracy in determining dichromacy type, as they often perceived the full-color image as distinct from both simulations.

Hence, the CVD express-test trials suggest its potential as an autonomous diagnostic tool for determining dichromacy type in individuals with CVD.

7. Results

The objective evaluation results for the proposed method, the anisotropic daltonization method [23], and the CVD-Swin method [28] are presented in Table 1 and Table 2. CVD-Swin is a recently introduced state-of-the-art neural network-based daltonization approach. Although pretrained models were not provided by the authors, the training framework is publicly available. Using this infrastructure, we trained two separate neural network models: one for protanopia and one for deuteranopia. A critical hyperparameter in the CVD-Swin framework is

, which controls the trade-off between naturalness preservation and contrast enhancement in the recolored images. While the original study explored values of

,

, and

, our experiments showed that at

, the model yields negligible contrast improvement. Therefore, we report and analyze results for models trained with

and

.

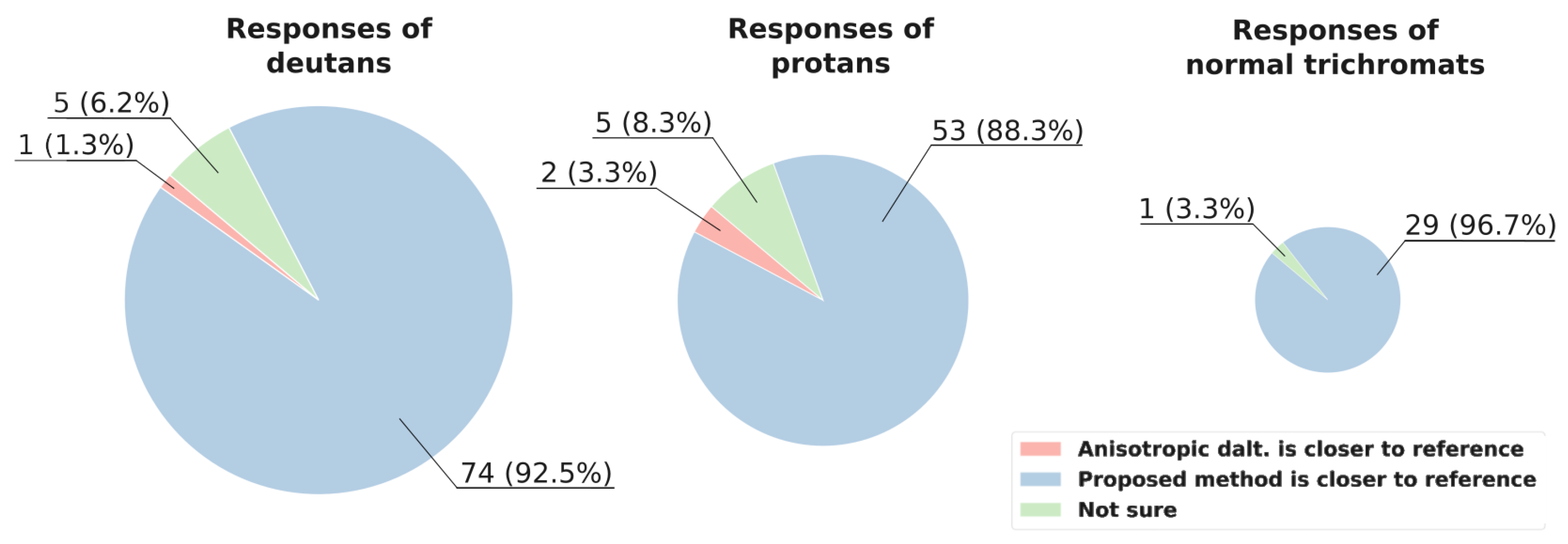

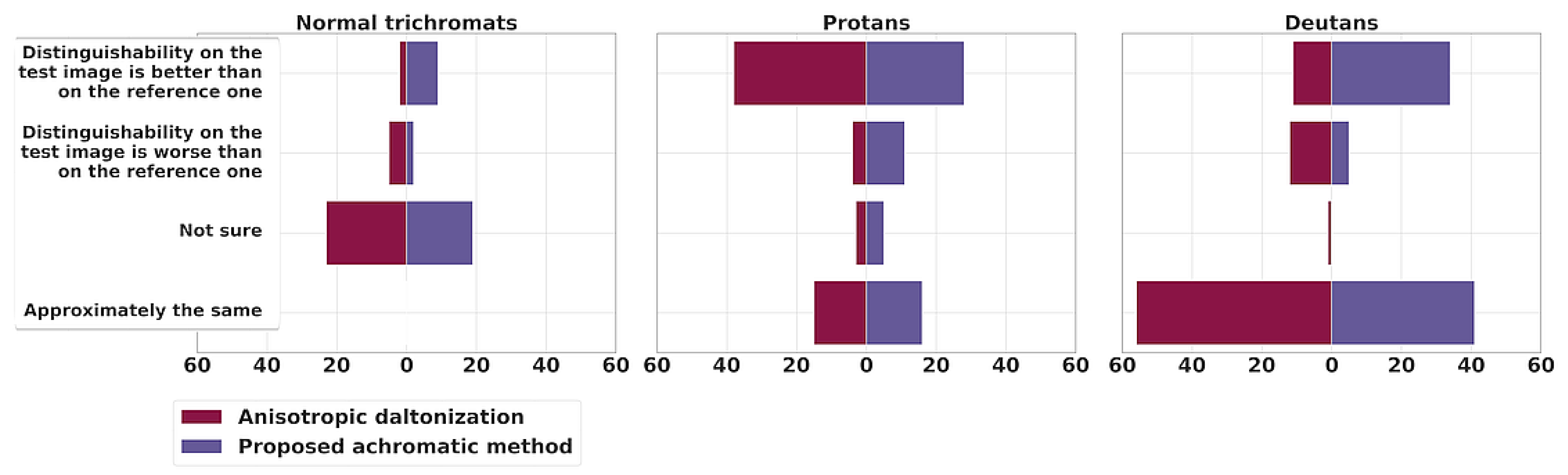

The subjective evaluation results of the proposed method and anisotropic daltonization method are presented in Figure 8 and Figure 9.

7.1. Qualitative and Objective Evaluation Results

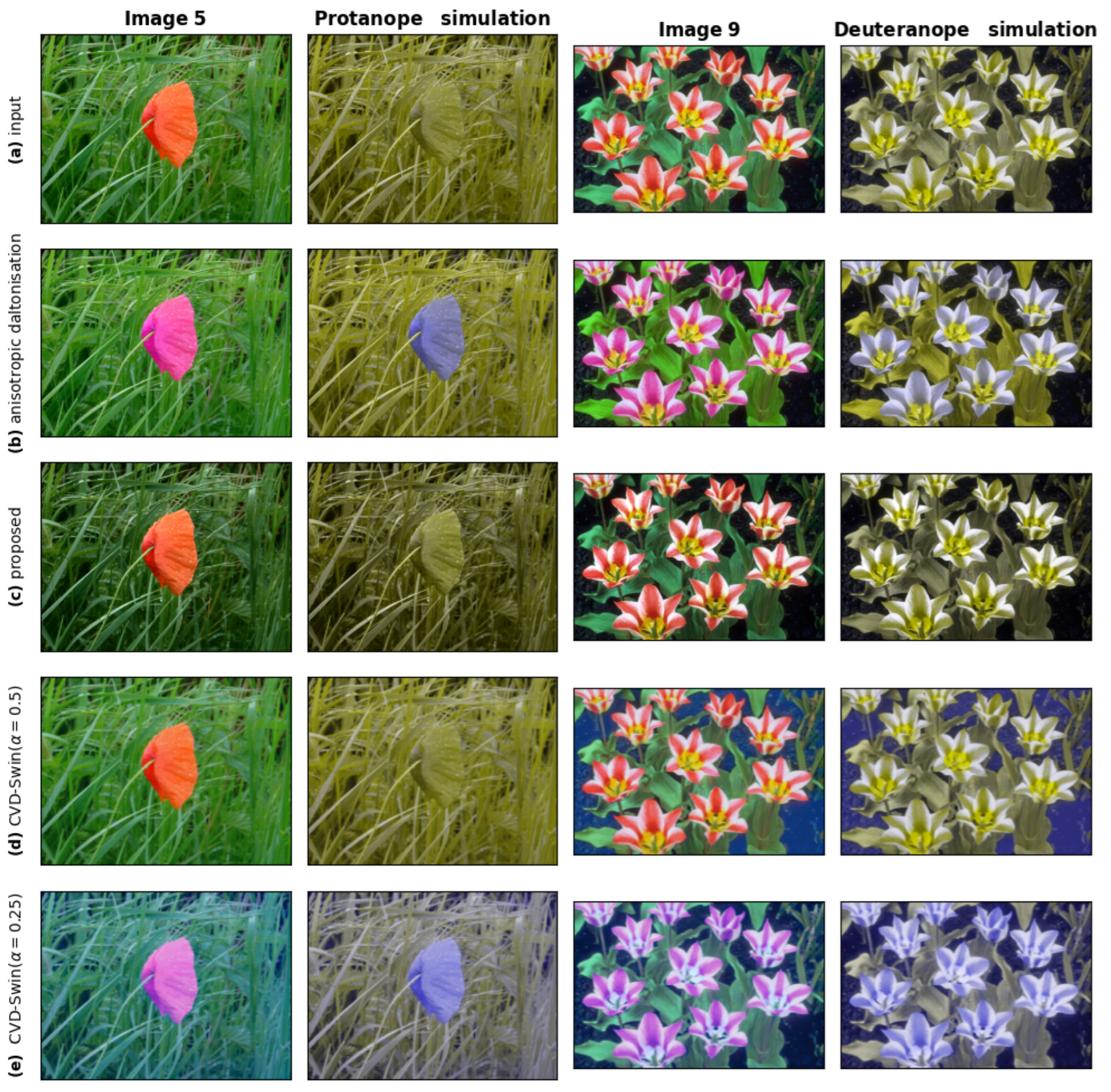

Figure 10 displays images processed for protanopia and deuteranopia. Additional figures are presented in Supplementary Figure S2. With both methods, these figures show substantial improvements in distinguishing flowers and fungi from their background, which is initially challenging for individuals with CVD. However, in Supplementary Figure S2 (image 7), where the sun on the simulation image is nearly imperceptible without processing, only the proposed method fully restores its distinguishability for individuals with CVD. The anisotropic daltonization method, despite employing chromaticity distortion, fails to improve contrast in this specific area.

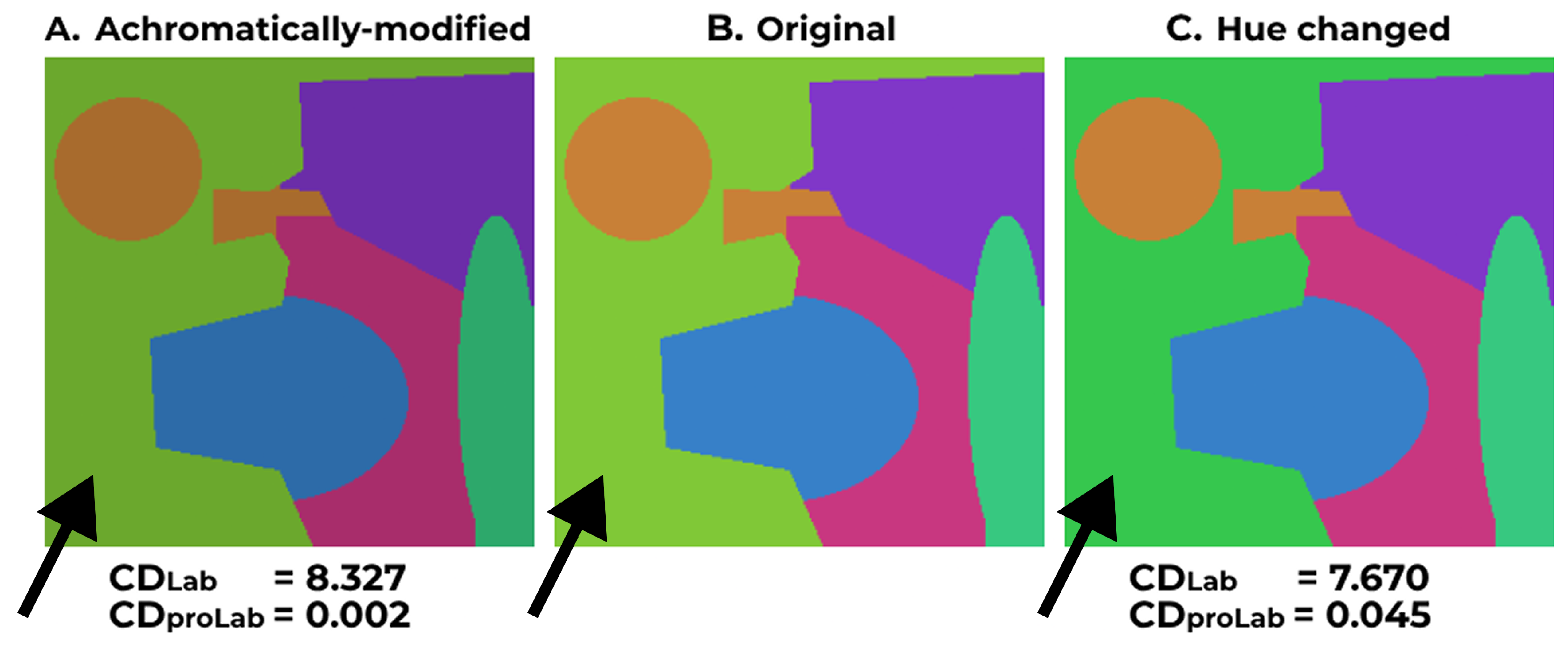

Table 1 displays the averaged naturalness preservation metrics (

(Formula (

17)) and

(Formula (

21)) calculated using the specified dataset (see Figure 7). The assessment included both cases for normal trichromats (shown in columns under “Mean original”) and for individuals with CVD (shown in columns under “Mean simulated”). The former utilizes the original image as the reference and the daltonized trichromatic image as the test image, whereas the latter employs simulations of both the original and daltonized images.

The proposed method consistently demonstrates lower CD values, highlighted in bold font, indicating closer chromaticity resemblance to the original image in all cases compared to the anisotropic method and CVD-Swin. Both metrics consistently favor our method across all instances, except for image 5 (Figure 10): the

metric implies that the anisotropic daltonization method produces less chromatically distinct results from the original compared to our algorithm (9.42 vs. 13.68 for deutans and 10.13 vs. 11.57 for protans). This contradicts visual perception, as shown in Figure 10. However, the

metric exhibits the opposite trend (0.0553 vs. 0.0159 for deutans and 0.0599 vs. 0.0125 for protans), aligning with the visual assessment.

Table 2 demonstrates contrast loss and restoration evaluations using the RMS metric. Unlike assessing naturalness, where the reference image changes depending on the observer’s CVD status, the original image consistently serves as the reference for contrast evaluation. The table includes a row labeled “No dalt.”, indicating contrast loss levels for protan and deutan simulations of the original image without any daltonization. On average, the anisotropic daltonization method surpasses the proposed method and CVD-Swin in restoring the original contrast for both types of CVD, according to the RMS metric. However, on average, the proposed achromatic method enhances contrast compared to the non-daltonized image.

To assess the influence of the selected optimization criterion and tone-mapping approach in the proposed method, an ablation study was conducted. The results indicate that the proposed configuration achieves the highest levels of both contrast and naturalness across most conditions. An exception is observed for the

metric in the case of deuteranopes, where the performance is comparable for both linear and non-linear optimization criteria (see Supplementary Table S2).

7.2. Subjective Evaluation Results

In Figure 8, the response statistics for naturalness are shown. Overall, the proposed achromatic method notably outperforms the anisotropic daltonization method in preserving naturalness.

Remarkably, in image 5 (Figure 10), where the

metric favored the anisotropic daltonization method, eight deutans and six protans unanimously selected our method’s output for higher naturalness, which corresponds to the results of

.

When responding to the question about naturalness, participants selected “Not sure” for only two images: six participants (two deutans, three protans, and one trichromat) encountered difficulties with image 7 (represented in Figure 7), and six participants (three deutans, two protans, and one trichromat) had challenges with image 8 (represented in Figure 7). These two images, along with daltonizations for both CVD cases, are displayed in Supplementary Figure S3.

Let us move on to the question of the distinguishability of objects (Figure 9). The anisotropic daltonization method shows slightly more instances of distortion than improvement for deutans (11 cases of contrast improvement versus 12 cases of contrast loss). In 57 cases (“the same” in 56 cases and “not sure” in 1 case), the results were similar to the original contrast (“No dalt.”). Conversely, the achromatic algorithm performs better for deutans: enhancements were observed 34 times; in 5 instances, the images appeared worse after processing for deutans; and in 41 cases, participants noticed no change in comparison to the original contrast.

For protans, the anisotropic daltonization method performs notably better: in 38 instances, participants favored the processed images, whereas only in 4 cases did the contrast worsen compared to the original image. In 18 cases, no noticeable change in contrast was reported—15 responses indicated “the same”, and 3 were marked as “not sure”. In comparison, for the proposed method, 28 responses indicated enhanced contrast, 11 reported a reduction in contrast, 16 responses indicated “the same”, and 5 were “not sure”.

Thus, concerning contrast preservation in our dataset, the achromatic method yields better results than no processing, both for protans and deutans. In the deutans’ responses, the achromatic method also outperforms the anisotropic daltonization method. For protans, the anisotropic method improves contrast more often, but our method still yields results not worse than no processing in 82% of responses, while surpassing the naturalness of the anisotropic daltonization method in 88% of responses.
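The 82% figure for protans can be reproduced from the response tallies quoted above. The following is a minimal sketch; the dictionary keys and the function name are illustrative choices, not part of the original study's materials.

```python
# Protan responses for the proposed (achromatic) method, as quoted in the text:
# 28 "better", 11 "worse", 16 "the same", 5 "not sure" (6 subjects x 10 images).
protan_responses = {"better": 28, "worse": 11, "same": 16, "not_sure": 5}


def not_worse_share(responses):
    """Fraction of responses in which the processed image was not judged worse."""
    total = sum(responses.values())
    return (total - responses["worse"]) / total


share = not_worse_share(protan_responses)
print(f"{share:.1%}")  # 49 of 60 responses, i.e. about 82%
```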

Supplementary Figure S4 showcases the least successful outcomes of the proposed algorithm for protans, where some participants observed contrast loss for initially discernible objects.

It should be noted that the improvement in our method for normal trichromats is not unequivocal. Participants with normal color vision generally experienced difficulties in assessing contrast differences between processed and original images, regardless of the method used, and often perceived the processed image as barely distinguishable from the original.

To evaluate contrast improvement, we applied the sign test, grouping participants according to their type of CVD. Responses from normal trichromats were excluded from the analysis. Each participant evaluated 10 image pairs, resulting in a total of 80 responses from deutan participants (8 subjects × 10 images) and 60 from protan participants (6 subjects × 10 images). Responses were binarized: “Distinguishability on the test image is better than on the reference one” was coded as 1, and “Distinguishability on the test image is worse than on the reference one” as 0. Responses such as “Approximately the same” and “Not sure” were excluded from the analysis. The null hypothesis assumed a 50% chance of improved distinguishability (i.e., no effect). A two-tailed sign test was conducted at a significance level of

. The null hypothesis was rejected when the

p-value fell outside the interval

, indicating a statistically significant change in distinguishability. Additionally, we report the frequency of contrast improvement and deterioration, defined as the proportion of images for which participants indicated increased or decreased distinguishability, respectively, relative to the total number of images evaluated (including “Approximately the same” and “Not sure” responses). The results are summarized in Table 3. For the anisotropic daltonization method, deutan participants did not show a statistically significant improvement. Our method improved distinguishability in 49% of images and worsened it in only 6% for deutans. For protan participants, both methods showed similar rates of improvement.
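As an illustration of the procedure described above, the sketch below computes an exact two-sided sign test p-value from the numbers of “better” and “worse” responses, with ties already excluded. The function name is hypothetical, and the counts in the usage lines are the tallies quoted in the text of this section, which need not coincide exactly with the values in Table 3. The authors' own implementation is not shown in the paper, so this is only one standard way to carry out the test.

```python
from math import comb


def sign_test_p_value(n_better: int, n_worse: int) -> float:
    """Exact two-sided sign test under H0: P(better) = P(worse) = 0.5.

    Ties ("Approximately the same", "Not sure") must be removed beforehand.
    """
    n = n_better + n_worse
    if n == 0:
        return 1.0  # no informative responses, nothing to test
    k = min(n_better, n_worse)
    # Probability of a result at least as extreme in one tail of Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    # Double the tail for a two-sided test, capped at 1.
    return min(1.0, 2.0 * tail)


# (better, worse) counts quoted in the text; labels are illustrative.
tallies = {
    "deutan / anisotropic": (11, 12),
    "deutan / proposed": (34, 5),
    "protan / anisotropic": (38, 4),
    "protan / proposed": (28, 11),
}
for label, (better, worse) in tallies.items():
    print(f"{label}: p = {sign_test_p_value(better, worse):.3g}")
```

Each resulting p-value would then be compared with the chosen significance level.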

8. Discussion

In this study, a novel daltonization method is introduced, preserving image naturalness for individuals with CVD and normal trichromats. The method achieves naturalness preservation by modifying solely the achromatic component of the image. Moreover, it enhances the distinguishability of image elements for dichromats by multiplying the input image by a coefficient map obtained through optimization aimed at preserving local contrast uniquely for each input image.
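To make the idea of a coefficient map concrete, the sketch below scales every pixel of an image by its own scalar coefficient. It assumes a linear RGB representation stored as nested lists of tuples, and it takes the coefficient map as given. The optimization that produces the map, and the color space the authors actually operate in, are not reproduced here, and the function name is hypothetical.

```python
def apply_coefficient_map(image, coeffs):
    """Multiply each pixel's (R, G, B) triple by that pixel's scalar coefficient.

    image:  rows of (r, g, b) tuples, assumed to be linear RGB values
    coeffs: rows of scalars with the same height and width as `image`
    """
    return [
        [tuple(k * channel for channel in pixel) for pixel, k in zip(row, k_row)]
        for row, k_row in zip(image, coeffs)
    ]


# A 1 x 2 image: the first pixel is brightened, the second darkened.
image = [[(0.2, 0.4, 0.1), (0.6, 0.3, 0.3)]]
coeffs = [[1.5, 0.5]]
result = apply_coefficient_map(image, coeffs)
```

Because all three channels of a pixel are scaled by the same factor, the ratios between them are unchanged, and only the intensity varies. Values may leave the displayable range after scaling, which is one reason a tone-mapping step is needed.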

For the experimental investigation of the proposed method, a dataset of images was collected and published. Objective and subjective comparisons were conducted between our proposed method and the anisotropic daltonization method [23], since the latter also aims to preserve local contrast and has publicly available source code.

The subjective evaluation showed that the proposed method significantly outperforms the anisotropic daltonization in preserving naturalness for individuals with CVD and normal trichromats. The objective assessment employed both the established CD metric in the Lab coordinates and the proposed proLab modification of the CD metric. The modified metric better aligns with the participants’ responses. On average, both metrics indicated that the proposed method better preserves naturalness compared to the anisotropic daltonization.

Our findings corroborate the conclusions of the study in [7], which indicate that traditional recoloring methods render colors unfamiliar and unattractive to individuals with CVD. Consequently, these tools are used far less often than their creators intended. According to the majority of participants in the survey [7], recoloring tools should minimize color alterations so that the result stays closer to the colors that individuals with CVD are accustomed to perceiving.

Despite the fact that the proposed method can only modify the achromatic component, it turns out that in terms of contrast, it is superior to the anisotropic daltonization method for the surveyed deuteranopes. For protans, the proposed method is generally preferred over non-daltonized images and is planned to be further improved in future work.

The computational complexity of the proposed daltonization method, as well as that of the anisotropic daltonization method, is O(nM), where n denotes the number of pixels in the image and M represents the number of optimization iterations. In our experiments, the proposed method with M = 10,000 achieves a per-image processing time of 13.35 s. In comparison, the anisotropic daltonization method requires 21.05 s for the same image. Both evaluations were conducted on a machine equipped with an Intel Core i7-11700F CPU at 2.50 GHz.

The main drawback of the proposed method is an insufficient level of contrast restoration in specific images: the human studies revealed instances where the achromatic daltonization did not sufficiently differentiate objects compared to the non-daltonized image. We attribute this to two factors. First, contrast assessment relies only on adjacent pixels, potentially causing ridge-like artifacts. However, as shown in Supplementary Figure S5, increasing the distance between points p and q in Equation (9) introduces visible artifacts into the output image. To mitigate this issue, future work will explore incorporating a broader pixel neighborhood in the contrast evaluation process. Second, some images show significant background darkening, which could be addressed using local tone mapping. We would like to emphasize that the aforementioned issues do not constitute fundamental limitations of the new approach, of which the method proposed in this paper is the first representative. The achromatic contrast-preserving approach, which maintains the image naturalness both for individuals with CVD and normal trichromats, warrants further development.

Additionally, we have validated a CVD express-test that allows for the determination of the type of dichromacy without the need for special equipment.