1. Introduction

Instance segmentation, which combines object detection and semantic segmentation to delineate individual objects at the pixel level, is fundamental to computer vision applications such as autonomous driving and video surveillance [1]. In maritime scenarios using remote sensing imagery, instance segmentation enables accurate and efficient separation of individual ships from backgrounds such as ports, water surfaces, and coastal infrastructure, and it supports precise ship localization in both nearshore and offshore environments. This capability is essential for intelligent navigation systems, maritime traffic monitoring, and 3D maritime scene reconstruction, and it thereby significantly enhances the safety and autonomy of modern maritime systems [2]. Compared with object detection, instance segmentation is more challenging because it requires both precise object localization and pixel-level classification. Most existing approaches are built on object detection frameworks.

Recent research on ship instance segmentation has explored multiple imaging modalities [3]. Among these, Synthetic Aperture Radar (SAR) imagery provides all-weather, day-and-night imaging and can penetrate clouds and haze, which makes it widely used in maritime surveillance. Public SAR datasets such as SSDD [4] and HRSID [5] have been established to support ship detection and segmentation. However, SAR imagery is inherently affected by speckle noise, complex backscattering mechanisms, and sparse texture, all of which make fine-grained instance segmentation difficult. Consequently, various SAR-based approaches, including Context-Aware Net [6], LFG-Net [7], and the method proposed by Wang et al. [8], have been introduced to mitigate these modality-specific issues. In contrast, optical remote sensing imagery offers high spatial resolution, rich texture and color information, and spectral consistency with human vision [3,9], making it particularly suitable for precise instance-level segmentation. Nevertheless, optical sensors are susceptible to illumination variations and atmospheric interference such as clouds, haze, and aerosols [10]. Furthermore, publicly available optical ship segmentation datasets remain limited. To address this gap, we developed VLRSSD, a manually annotated visible light remote sensing dataset of maritime scenes designed specifically for ship instance segmentation. This dataset enriches the training resources for deep learning models and promotes further research in maritime remote sensing applications.

Ship instance segmentation is crucial for precise localization and boundary delineation in maritime scenarios. However, this task remains challenging in the context of optical remote sensing due to several inherent factors. First, ships in nearshore port areas are often densely packed, making it difficult to separate individual instances. Second, ships in remote sensing images exhibit considerable variability. Differences in imaging altitudes can lead to large variations in ship sizes, while diverse ship categories—such as fishing boats, warships, and cargo vessels—display pronounced differences in shape and structure. The existing YOLOv8 framework primarily relies on convolution-based C2f modules, conventional convolutional blocks, and CIoU loss, which are limited in their ability to capture complex local–global interactions and generate precise mask boundaries.

To address these challenges, we propose CDSANet, a novel single-stage instance segmentation framework that integrates convolutional neural networks (CNNs), Vision Transformers [10], attention mechanisms, and an enhanced loss function. CDSANet is built upon the YOLOv8 framework [11], which is known for its high accuracy and flexibility in both detection and segmentation tasks. Inspired by the lightweight design of MobileViTv3 [12], we introduce the CVTA module, which combines the efficiency of convolutions with the global modeling capability of Transformers. This module addresses the limited feature extraction capacity of the original C2f module when handling diverse ship types. Additionally, we design a new dynamic convolution module, DOWConv, and investigate its synergy with CVTA to enhance the model’s representational power and training efficiency. Together, these components improve ship instance segmentation performance in remote sensing images. CDSANet is primarily intended for ship instance segmentation in nearshore ports and harbors under clear weather conditions, where ships are more densely packed than in open-sea environments. The main contributions of this study are summarized as follows:

- (1) A novel CVTA module that integrates CNN and Vision Transformer architectures to enhance global–local feature representation, together with the CBAM [13] attention mechanism, which is incorporated into the backbone to further improve feature extraction.

- (2) DOWConv, a dynamic convolution module used in the network neck that generates spatially and channel-wise adaptive kernels. This design better captures fine-grained features such as edges and shape variations while remaining computationally efficient.

- (3) An upgraded bounding box regression loss based on SIoU [14], which enables more precise instance localization and boundary refinement.

- (4) A new dataset, VLRSSD, together with a MixUp-based [15] image-level augmentation strategy that enhances segmentation performance.

The remainder of this paper is organized as follows. Section 2 reviews related work on instance segmentation, ship instance segmentation, and CNN–Transformer-based methods. Section 3 introduces the proposed CDSANet framework and describes the ship datasets employed in this study. Section 4 presents the experimental setup and results, while Section 5 discusses the limitations of the current work and provides further analysis. Finally, Section 6 concludes the paper and outlines directions for future research.

3. Method and Ship Data

3.1. Overall Structure

Figure 1 illustrates the structure of CDSANet, which consists of three main components: the backbone for feature extraction, the neck for feature refinement, and the detection heads for final predictions. The input image is first uniformly resized and normalized. The backbone extracts multi-scale features by integrating standard convolutional blocks, C2f modules, SPPF modules, CBAM, and the proposed CVTA module, which allows the network to capture both rich semantic information and fine-grained details. The hierarchical feature maps produced by the backbone are then processed by the neck, where two of the standard convolution modules are replaced with DOWConv modules to improve the model’s ability to capture fine-grained features such as edges and shape variations. The neck outputs feature maps at three different scales, which are fed into their respective detection heads. In the segmentation detection heads, the bounding box regression loss uses SIoU instead of CIoU. Overall, the proposed framework achieves more accurate instance segmentation while maintaining computational efficiency and a compact model size. The core components are described in detail in the following sections.
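To make the resize-then-multi-scale flow concrete, the sketch below computes an aspect-preserving (letterbox-style) resize and the grid sizes of the three head inputs. It assumes a 640 × 640 network input, output strides of 8, 16, and 32 (the P3 to P5 levels used by YOLOv8), and normalization by dividing 8-bit pixel values by 255. None of these values are stated in the text above, and the function names are hypothetical.

```python
def letterbox_params(height, width, target=640):
    """Scale factor, resized shape, and (top, bottom, left, right) padding
    for an aspect-preserving resize into a target x target canvas."""
    r = min(target / height, target / width)
    new_h, new_w = round(height * r), round(width * r)
    pad_h, pad_w = target - new_h, target - new_w
    # Split the padding as evenly as possible between opposite sides.
    padding = (pad_h // 2, pad_h - pad_h // 2, pad_w // 2, pad_w - pad_w // 2)
    return r, (new_h, new_w), padding


def normalize_pixel(value):
    """Map an 8-bit pixel value to [0, 1]."""
    return value / 255.0


def head_grid_sizes(target=640, strides=(8, 16, 32)):
    """Spatial size of the feature map fed to each of the three detection heads."""
    return [(target // s, target // s) for s in strides]


if __name__ == "__main__":
    # A 1080 x 1920 image is scaled by 1/3 to 360 x 640 and padded 140 px top and bottom.
    print(letterbox_params(1080, 1920))
    print(head_grid_sizes())  # [(80, 80), (40, 40), (20, 20)]
```

Under these assumptions, each cell of the 80 × 80, 40 × 40, and 20 × 20 grids covers 8, 16, and 32 input pixels, respectively. This is why the finest head is the one responsible for small ships.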

3.2. CVTA

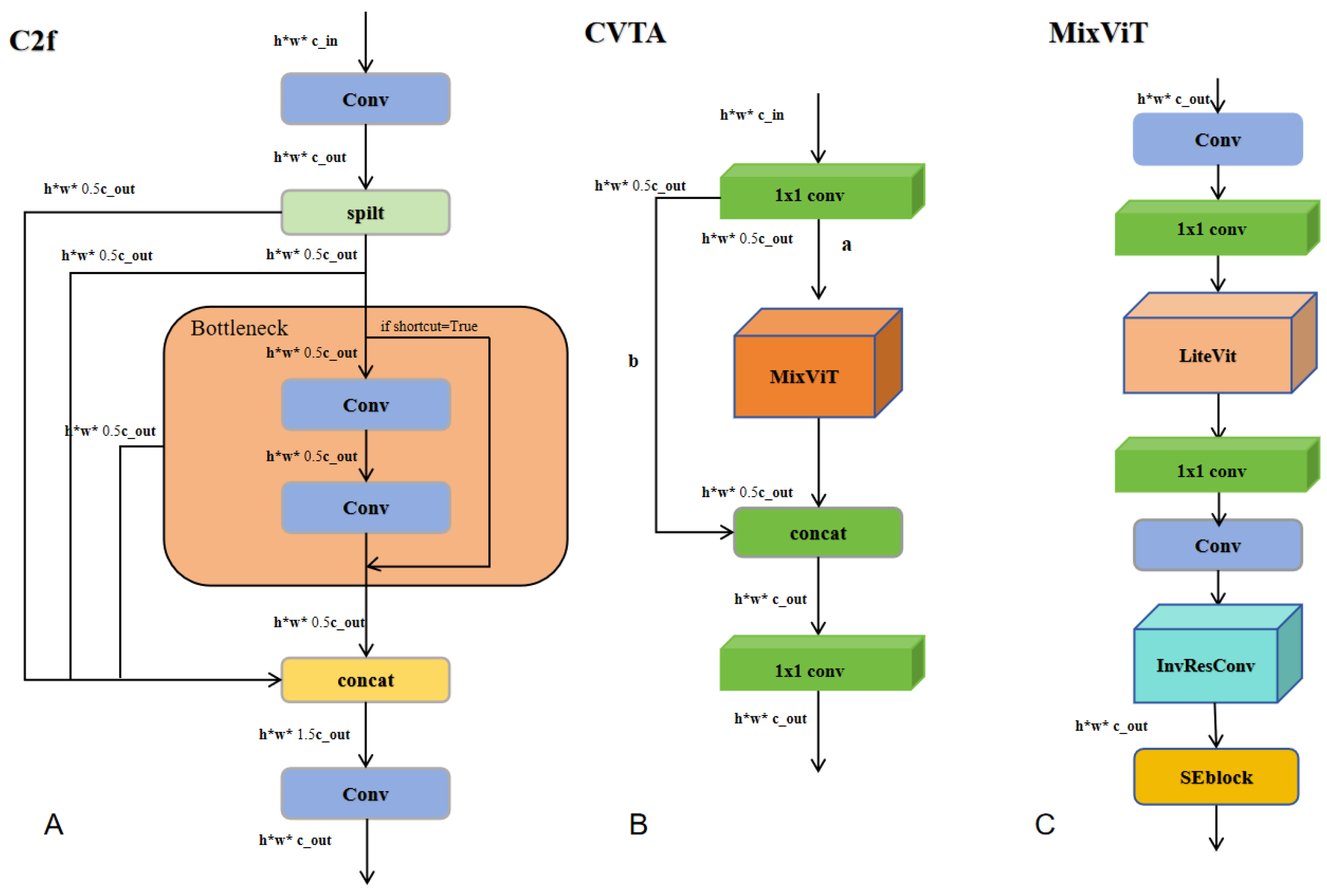

The C2f module in YOLOv8 (Figure 2A) effectively captures local details but struggles to model global contextual information in port and coastal scenes containing multiple ships of varying shapes. To enhance its feature extraction capability, we introduce a novel hybrid module called CVTA (Figure 2B). Inspired by MobileViTv3 [12], which combines convolutional inductive biases with the global modeling capabilities of Transformers, CVTA employs a structured multi-branch design to explicitly partition and fuse features. In CVTA, convolution operations capture local spatial details such as edges and contours, which are critical for ship targets, while Transformer units encode long-range contextual dependencies. Residual connections are strengthened to facilitate gradient propagation. This dual-path aggregation of local and global features improves feature robustness and instance awareness, particularly for densely packed or morphologically diverse ships. By explicitly modeling both local details and global context, CVTA overcomes the limited ability of conventional C2f modules to capture long-range dependencies while preserving fine-grained spatial information, producing richer and more discriminative representations for ship segmentation.

Inspired by the lightweight Vision Transformer architecture in MobileViTv3 [12] and the standard Transformer design [38], the proposed MixViT module integrates convolutional operations with a lightweight Transformer block, LiteVit, to balance local feature extraction and global context modeling. The convolutional layers in MixViT preserve fine-grained textures and structural details of ships, while LiteVit captures long-range dependencies across the image. This design enables effective representation of both densely packed and morphologically diverse ships, enhancing segmentation robustness and accuracy.

LiteVit follows the efficient Transformer blocks used in MobileViTv3, incorporating multi-head self-attention and feed-forward layers with normalization to balance representational capacity and computational cost. MixViT extends this design by integrating convolutional layers, such as InvResConv, with LiteVit, enabling more effective fusion of local and global features.

MixViT (Figure 2C) serves as the core component of CVTA, integrating standard convolutional, pointwise (1 × 1) convolutional, and Vision Transformer–based mechanisms. It begins by performing channel transformation and fusion through a combination of standard and pointwise convolutional layers. Additional convolutional operations and the LiteVit module are then applied to strengthen feature learning and enhance training stability. At the tail of the CVTA module, the InvResConv block and an SE attention mechanism are employed. The InvResConv block is built mainly from pointwise and depthwise convolutions, and its multi-path feature fusion design enriches the representational diversity of the extracted features. As a key feature extraction module in the backbone of CDSANet, the CVTA module with MixViT replaces several C2f modules to extract multi-scale and multi-level features. It provides more discriminative representations for the subsequent attention modules and segmentation heads, thereby improving the overall performance and robustness of the model.

LiteVit (Figure 3A) is a lightweight Vision Transformer encoder module designed to efficiently extract high-level semantic features for downstream tasks. It consists of two sequential layers, each composed of a pre-normalized multi-head self-attention mechanism and a pre-normalized feed-forward network. Layer normalization is applied before each operation to stabilize training, while residual connections preserve feature integrity and facilitate smooth gradient propagation. By modeling long-range dependencies and enabling expressive nonlinear transformations, LiteVit effectively enhances contextual feature representation.
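The pre-norm layer structure described above can be sketched in NumPy as follows. The embedding width, the four attention heads, the FFN expansion ratio of 2, the SiLU activation, the random weights, and the omission of biases and LayerNorm affine parameters are all illustrative assumptions, not the configuration used in CDSANet. All function names are hypothetical.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    """Per-token LayerNorm without learnable affine parameters (simplification)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def silu(x):
    return x / (1.0 + np.exp(-x))


def multi_head_self_attention(x, p, heads):
    """x: (N, d) tokens -> (N, d)."""
    n, d = x.shape
    dh = d // heads

    def split(t):  # (N, d) -> (heads, N, dh)
        return t.reshape(n, heads, dh).transpose(1, 0, 2)

    q, k, v = split(x @ p["wq"]), split(x @ p["wk"]), split(x @ p["wv"])
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))  # (heads, N, N)
    out = (attn @ v).transpose(1, 0, 2).reshape(n, d)
    return out @ p["wo"]


def lite_vit(x, layers, heads=4):
    """Pre-norm encoder layers: x + MHSA(LN(x)), then x + FFN(LN(x))."""
    for p in layers:
        x = x + multi_head_self_attention(layer_norm(x), p, heads)
        x = x + silu(layer_norm(x) @ p["w1"]) @ p["w2"]
    return x


def init_layers(d, ffn_ratio=2, num_layers=2, seed=0):
    """Random weights for two encoder layers (illustration only)."""
    rng = np.random.default_rng(seed)

    def w(fan_in, fan_out):
        return rng.normal(0.0, 1.0 / np.sqrt(fan_in), size=(fan_in, fan_out))

    return [
        {"wq": w(d, d), "wk": w(d, d), "wv": w(d, d), "wo": w(d, d),
         "w1": w(d, ffn_ratio * d), "w2": w(ffn_ratio * d, d)}
        for _ in range(num_layers)
    ]
```

A (C, H, W) feature map can be fed to this sketch by flattening its spatial positions into tokens, for example `fmap.reshape(C, -1).T`. Because no positional encoding is used here, permuting the input tokens permutes the outputs in the same way. This gives a quick check that normalization and the FFN act per token.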

InvResConv (Figure 3B) is inspired by the inverted residual block introduced in MobileNetV2 [53] and subsequently adopted in the MobileViT series, including MobileViTv3. This structure is particularly suitable for lightweight networks because its expansion–projection bottleneck design and depthwise separable convolutions significantly reduce the additional computational overhead introduced by the Transformer. In our framework, InvResConv is integrated into the MixViT module to strengthen feature representation while maintaining computational efficiency. Compared with conventional residual blocks, InvResConv alleviates representational bottlenecks during channel expansion and reduction, making it more appropriate for resource-constrained scenarios such as real-time ship instance segmentation.

Specifically, the module first applies a pointwise convolution for channel expansion, followed by a depthwise convolution that processes spatial information independently within each channel, thereby minimizing computational cost. A final pointwise convolution projects the features back to the original number of channels. When the stride equals 1 and the input and output channels are identical, a residual shortcut is applied.
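A minimal NumPy sketch of this expand, filter, and project sequence follows. It assumes a 3 × 3 depthwise kernel, SiLU activations after the first two steps, a linear (activation-free) projection as in MobileNetV2, and no batch normalization. These choices, the expansion factor used in the usage note, and all function names are illustrative assumptions, not the exact InvResConv configuration.

```python
import numpy as np


def silu(x):
    return x / (1.0 + np.exp(-x))


def pointwise_conv(x, w):
    """1x1 convolution. x: (C_in, H, W), w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def depthwise_conv3x3(x, k, stride=1):
    """Per-channel 3x3 cross-correlation, zero padding 1. x: (C, H, W), k: (C, 3, 3)."""
    c, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out_h = (h - 1) // stride + 1
    out_w = (w - 1) // stride + 1
    out = np.zeros((c, out_h, out_w))
    for i in range(3):
        for j in range(3):
            patch = xp[:, i:i + stride * (out_h - 1) + 1:stride,
                       j:j + stride * (out_w - 1) + 1:stride]
            out += patch * k[:, i, j][:, None, None]
    return out


def inv_res_conv(x, w_expand, k_dw, w_project, stride=1):
    """Expand (1x1) -> depthwise 3x3 -> linear projection (1x1), plus optional shortcut."""
    y = silu(pointwise_conv(x, w_expand))          # channel expansion
    y = silu(depthwise_conv3x3(y, k_dw, stride))   # spatial filtering within each channel
    y = pointwise_conv(y, w_project)               # projection, no activation
    if stride == 1 and y.shape[0] == x.shape[0]:
        y = y + x                                  # residual shortcut
    return y
```

With an assumed expansion factor t = 4 on C input channels, a 3 × 3 filter over the expanded tC channels costs 9·tC multiply-accumulates per pixel in depthwise form, versus 9·(tC)² for a standard 3 × 3 convolution with the same input and output width. This gap is where the block saves computation.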

To further illustrate the effectiveness of the proposed CVTA module, we present a visual comparison of heatmaps before and after replacing the original C2f with CVTA. As shown in Figure 4, CVTA not only suppresses background clutter but also captures ship regions and boundaries more effectively. These heatmaps are generated using the Grad-CAM [54] visualization method, in which brighter areas indicate the regions that contribute most strongly to the model’s prediction.
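Grad-CAM weights each channel of a chosen feature map by the spatial average of the gradient of the target score with respect to that channel. It then sums the weighted channels and applies a ReLU. The sketch below assumes the activations and gradients have already been extracted for one image and one target score, for example with framework hooks. The layer and target score used for Figure 4 are not specified here, the function name is hypothetical, and upsampling the map to the input resolution is omitted.

```python
import numpy as np


def grad_cam(activations, gradients):
    """activations, gradients: (K, H, W) arrays for one image and one target score.

    Returns an (H, W) heatmap scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: spatially pooled gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A^k
    cam = np.maximum(cam, 0.0)                        # keep only positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The ReLU discards regions whose features lower the target score. This is why, in such maps, bright areas indicate positive contributions to the prediction rather than any strong activation.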

3.3. DOWConv

Inspired by DOConv [

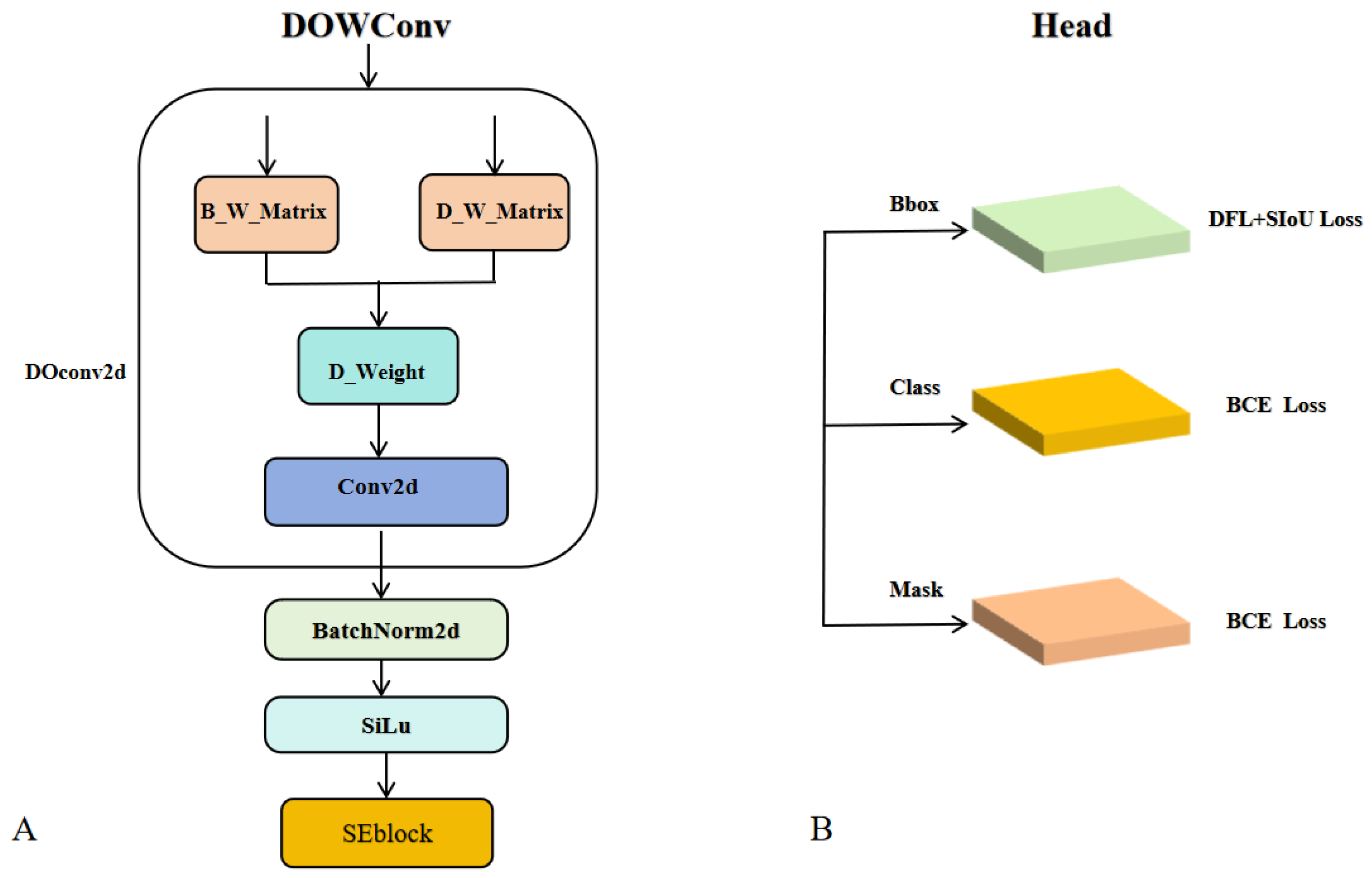

55], we propose a novel convolutional module called Dynamic Orthogonal Weight Convolution (DOWConv), which replaces two standard convolutional layers in the neck to enhance feature representation. Traditional convolutional layers rely on static kernels that struggle to adapt to objects with varying shapes, scales, and orientations. To address this limitation, DOWConv dynamically generates spatially adaptive kernels that can better capture fine-grained structural features while maintaining high computational efficiency. As illustrated in

Figure 5A, the DOWConv module consists of a DOConv2d layer, batch normalization, an activation function, and a squeeze-and-excitation (SE) block. The DOConv2d layer includes two learnable weight matrices: (1) a base weight matrix (B_W_Matrix), initialized using Kaiming initialization to provide the kernel’s foundational parameters; and (2) a dynamic weight matrix (D_W_Matrix), which incorporates a diagonal matrix D

diag to ensure stable and adaptive behavior during initialization.

Then, the interaction between the base and dynamic weight matrices produces the final dynamic weights (D_Weight) used in the convolution operation, followed by a standard convolution computation. This process can be summarized in three steps: (1) generating the initial base weights; (2) constructing the dynamic weights modulated by Ddiag; and (3) combining both to compute the spatially adaptive kernels. Unlike traditional convolutional layers with fixed kernel weights, DOWConv generates dynamic, spatially and channel-wise adaptive kernels, enabling the network to more effectively capture fine-grained features such as object edges, shape variations, and scale changes. This dynamic mechanism allows each convolutional kernel to adapt to different spatial locations and feature channels, thereby improving the network’s sensitivity to subtle differences in ship shapes, sizes, and orientations.
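Steps (1) to (3) can be illustrated after the published DO-Conv formulation [55], which is assumed here. In that formulation, the depthwise weight is the sum of a learnable matrix initialized to zero and a fixed identity D_diag, so the composed kernel equals the base kernel at initialization. This sketch covers only kernel composition followed by a plain stride-1 convolution. It does not model the spatial and channel-wise adaptivity described for DOWConv, whose exact mechanism is not specified in this section. Shapes, function names, and the He-uniform bound are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def init_doconv(c_in, c_out, k=3, seed=0):
    """Step 1: base weights; step 2: zero-initialized depthwise part plus fixed D_diag."""
    rng = np.random.default_rng(seed)
    d_mul = k * k
    bound = np.sqrt(6.0 / (c_in * d_mul))                          # He (Kaiming) uniform bound
    base_w = rng.uniform(-bound, bound, size=(c_out, c_in, d_mul))  # base weight matrix
    dyn_w = np.zeros((c_in, k * k, d_mul))                         # learnable depthwise part
    d_diag = np.tile(np.eye(k * k), (c_in, 1, 1))                  # fixed identity D_diag
    return base_w, dyn_w, d_diag


def compose_kernel(base_w, dyn_w, d_diag, k=3):
    """Step 3: combine both into a (C_out, C_in, k, k) kernel."""
    d = dyn_w + d_diag
    kernel = np.einsum("ims,ois->oim", d, base_w)
    return kernel.reshape(base_w.shape[0], base_w.shape[1], k, k)


def conv2d_same(x, kernel):
    """Stride-1 cross-correlation with zero 'same' padding. x: (C_in, H, W)."""
    k = kernel.shape[-1]
    xp = np.pad(x, ((0, 0), (k // 2, k // 2), (k // 2, k // 2)))
    windows = sliding_window_view(xp, (k, k), axis=(1, 2))         # (C_in, H, W, k, k)
    return np.einsum("oimn,ihwmn->ohw", kernel, windows)
```

In DO-Conv the composed kernel depends only on the weights. It can therefore be computed once after training and folded into an ordinary convolution, and at initialization the layer behaves exactly like a conventional convolution with the base weights.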

3.4. SIoU and Attention Mechanism

We integrate the SIoU loss and attention mechanisms to enable the model to capture subtle shape variations, precise boundaries, and spatial relationships more effectively. Specifically, this study replaces the standard Complete IoU (CIoU) loss with the SIoU (SCYLLA-IoU) loss function [14] for bounding box regression. SIoU extends the conventional Intersection-over-Union metric by incorporating additional geometric properties of the bounding boxes, namely the distance between their centers, the angle of the vector connecting the centers, and the box shape (width and height). This joint optimization of spatial alignment and shape consistency enhances the model’s ability to distinguish densely packed ships and improves localization accuracy as well as boundary quality in instance segmentation tasks. The SIoU loss function is defined as

$$L_{\mathrm{SIoU}} = 1 - \mathrm{IoU} + \frac{\Delta + \Omega}{2},$$

where IoU denotes the standard Intersection-over-Union ratio, and $\Delta$ is a distance-based term that measures the offset between the centers of the predicted and ground-truth bounding boxes, normalized by the size of their smallest enclosing box. Through an angle cost, $\Delta$ also accounts for the orientation of this center offset relative to the horizontal and vertical axes, which encourages accurate localization by penalizing misalignment in both directions. Moreover, $\Omega$ is a shape-based term that quantifies the differences in width and height between the predicted and ground-truth boxes. It increases the loss when the predicted box’s scale or aspect ratio diverges from the ground truth, thereby improving the model’s robustness to objects with diverse sizes and shapes. This component helps ensure that large and small, as well as wide and narrow, objects are accurately represented.
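The following scalar Python sketch implements the loss for boxes in (x1, y1, x2, y2) format, following the angle, distance, and shape costs of the SIoU formulation [14]. The shape exponent θ = 4 and the small ε are assumed values, and `siou_loss` is a hypothetical helper. Training code would use a batched tensor version instead.

```python
import math


def siou_loss(pred, gt, theta=4.0, eps=1e-7):
    """SIoU loss for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # IoU term
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Width and height of the smallest enclosing box
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)

    # Offset between ground-truth and predicted centers
    dx = (gx1 + gx2) / 2 - (px1 + px2) / 2
    dy = (gy1 + gy2) / 2 - (py1 + py2) / 2
    sigma = math.hypot(dx, dy) + eps

    # Angle cost: Lambda = 1 - 2 * sin^2(arcsin(|dy| / sigma) - pi / 4)
    sin_alpha = min(abs(dy) / sigma, 1.0)
    angle_cost = 1 - 2 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2

    # Distance cost Delta, modulated by gamma = 2 - Lambda
    gamma = 2 - angle_cost
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    delta = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost Omega
    omega_w = abs(pw - gw) / max(pw, gw, eps)
    omega_h = abs(ph - gh) / max(ph, gh, eps)
    omega = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + (delta + omega) / 2
```

For a center offset purely along one axis, Λ = 0 and γ = 2. For a 45° offset, Λ = 1 and γ = 1. For identical boxes, all three costs vanish and the loss is approximately 0.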

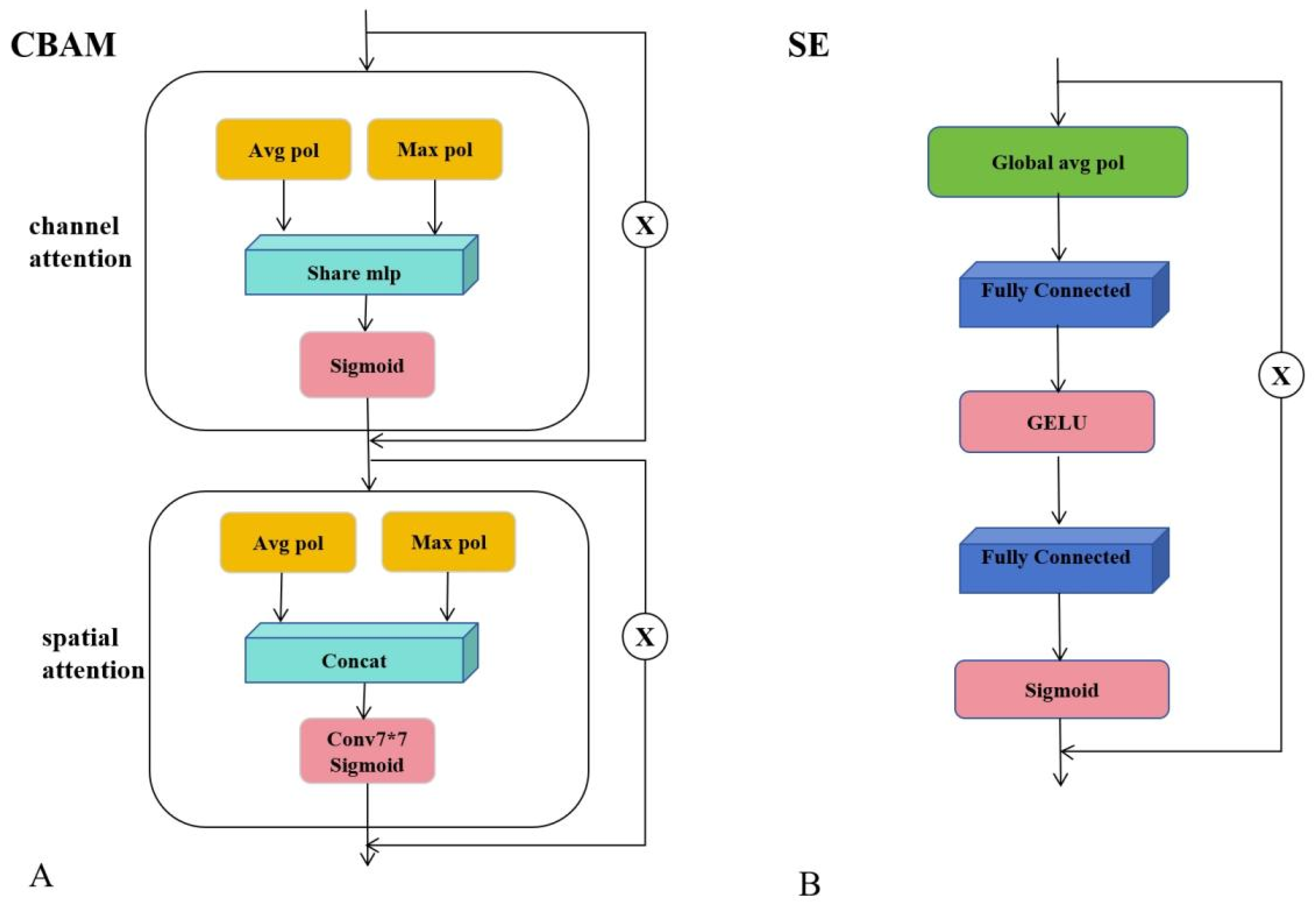

Meanwhile, the CBAM and SE attention modules dynamically emphasize distinctive ship features while suppressing irrelevant background information, thereby enhancing segmentation robustness across diverse ship morphologies. CBAM sequentially applies channel and spatial attention mechanisms, enabling adaptive focus on salient regions while attenuating uninformative features. It is particularly effective in capturing fine-grained object boundaries and positional cues. Based on extensive empirical evaluations, CBAM is applied three times before the SPPF module to enhance spatially aware feature refinement and further improve segmentation accuracy.
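The channel-then-spatial ordering of CBAM is illustrated below for a single (C, H, W) feature map. The sketch follows the original CBAM design: a shared two-layer MLP over average- and max-pooled channel descriptors, followed by a 7 × 7 convolution over channel-wise average and max maps. The reduction ratio, random weights, and omission of biases are assumptions, and the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv_to_single_map(x, k):
    """'Same'-padded cross-correlation summed over input channels.
    x: (C_in, H, W), k: (C_in, kh, kw) -> (H, W)."""
    _, kh, kw = k.shape
    xp = np.pad(x, ((0, 0), (kh // 2, kh // 2), (kw // 2, kw // 2)))
    windows = sliding_window_view(xp, (kh, kw), axis=(1, 2))  # (C_in, H, W, kh, kw)
    return np.einsum("chwij,cij->hw", windows, k)


def cbam(f, w1, w2, k_spatial):
    """f: (C, H, W); w1: (C/r, C); w2: (C, C/r); k_spatial: (2, 7, 7)."""
    # Channel attention: shared MLP on average- and max-pooled descriptors.
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)

    m_c = sigmoid(mlp(f.mean(axis=(1, 2))) + mlp(f.max(axis=(1, 2))))
    f1 = f * m_c[:, None, None]
    # Spatial attention: 7x7 conv over stacked channel-wise average and max maps.
    pooled = np.stack([f1.mean(axis=0), f1.max(axis=0)])
    m_s = sigmoid(conv_to_single_map(pooled, k_spatial))
    return f1 * m_s[None, :, :]
```

Both attention maps lie in (0, 1), so CBAM can only rescale features downward. Salient channels and positions are kept close to their original magnitude, while background responses are attenuated.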

In contrast, the SE [56] module performs channel-wise feature recalibration and exhibits strong stability and generalization, particularly when integrated into lightweight architectures such as MobileViT. Consequently, SE blocks are embedded within both the CVTA and DOWConv modules to strengthen their representational capacity without introducing significant computational overhead. The overall network architecture is illustrated in Figure 6.
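The low overhead of SE follows from its structure: a global average pool, two small fully connected layers, and a per-channel sigmoid gate. The sketch below assumes a reduction ratio r = 16, the default in the original SE paper, and omits biases. The ratio used inside CVTA and DOWConv is not stated here, and the function names are hypothetical.

```python
import numpy as np


def squeeze_excite(x, w1, w2):
    """x: (C, H, W); w1: (C/r, C); w2: (C, C/r). Biases omitted."""
    z = x.mean(axis=(1, 2))                                      # squeeze: global average pooling
    s = 1.0 / (1.0 + np.exp(-(w2 @ np.maximum(w1 @ z, 0.0))))   # excitation: FC-ReLU-FC-sigmoid
    return x * s[:, None, None]                                  # channel-wise rescaling


def se_param_count(channels, reduction=16):
    """Weights in the two FC layers (biases excluded)."""
    return 2 * channels * (channels // reduction)
```

For C = 256 and r = 16, the gate adds 2 · 256 · 16 = 8192 weights. By comparison, a single 3 × 3 convolution with 256 input and 256 output channels has 3 · 3 · 256 · 256 = 589,824 weights.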

3.5. Ship Data

3.5.1. VLRSSD Dataset

Due to potential geopolitical and military sensitivities, publicly available visible light remote sensing ship datasets for instance segmentation remain scarce. To address this limitation, we constructed and manually annotated a new dataset termed VLRSSD (Visible Light Remote Sensing Ship Dataset). The annotation format and labeling scheme follow the MS COCO standard, supporting polygon-based instance segmentation.

The dataset comprises 2600 high-resolution visible light images of ships collected from a wide range of geographical regions and acquisition sources. Specifically, 1047 images were obtained from the HRSC2016 dataset, while the remaining 1553 images were manually extracted from Google Earth imagery covering major international ports across Asia, Europe, and North America. The selected ports and adjacent offshore areas include Shanghai, Zhoushan, Tianjin, Qingdao, Shenzhen, Hong Kong, Singapore, Dubai, Pearl Harbor, Busan, Tokyo, Kaohsiung, Amsterdam, and San Francisco, ensuring diverse maritime and geographical representation. The VLRSSD dataset incorporates imagery captured by multiple satellite sensors, including both publicly available and high-resolution commercial satellites, which leads to natural variations in spatial resolution and imaging perspective. Most images were collected under clear-sky conditions, while a small subset includes cloudy, foggy, or overcast scenes that add some atmospheric diversity. Specifically, 13, 12, and 156 images correspond to cloudy, foggy, and open-sea conditions, respectively. The majority of images show coastal and port regions, with a smaller subset representing offshore vessels in open waters.

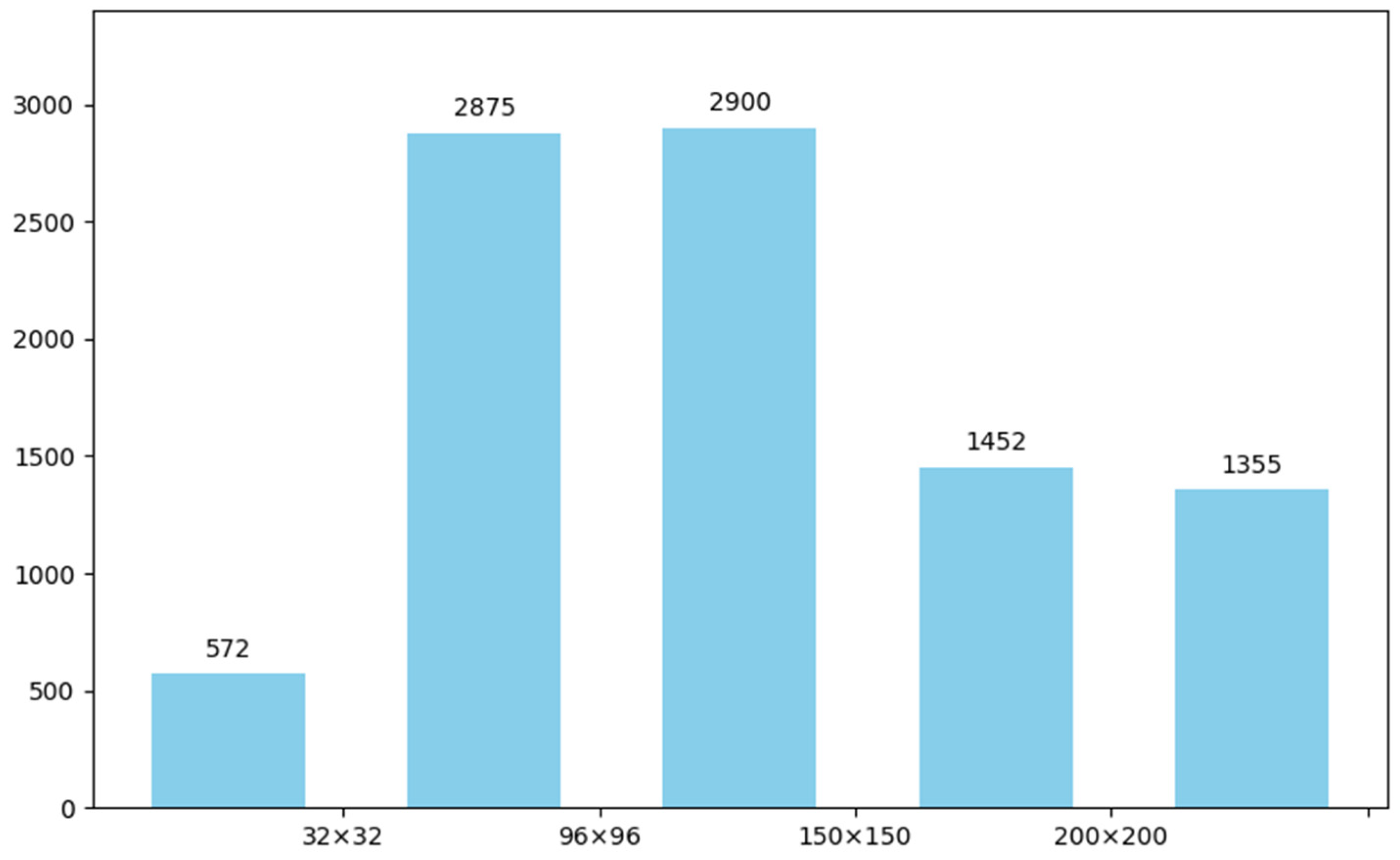

The dataset is divided into training and validation subsets with an 8:2 ratio, containing 2080 images for training and 520 for validation. A total of 9154 ship instances are annotated with fine-grained polygonal masks, averaging 3.52 ships per image. To enhance generalization across diverse maritime environments, all ships are labeled as a single category without distinguishing between vessel types. Summary statistics of the dataset are provided in Table 1, and the distribution of ship instance sizes, measured by pixel area, is illustrated in Figure 7, where the x-axis represents the instance area and the y-axis the number of ships. Representative annotation examples are shown in Figure 8.
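The split sizes and the per-image instance average reported above can be checked with simple arithmetic. The text does not state how images were assigned to the two subsets, so the seeded, image-level random split below is an assumption, and `split_dataset` is a hypothetical helper. Splitting by image keeps all instances of an image in the same subset.

```python
import random


def split_dataset(image_ids, train_ratio=0.8, seed=0):
    """Seeded image-level split into (train, val) lists."""
    ids = sorted(image_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * train_ratio)
    return ids[:n_train], ids[n_train:]


if __name__ == "__main__":
    train_ids, val_ids = split_dataset(range(2600))
    print(len(train_ids), len(val_ids))  # 2080 520
    print(round(9154 / 2600, 2))         # 3.52
```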

All images in the VLRSSD dataset were manually annotated using LabelMe and subsequently reviewed by at least two annotators. Polygon masks were refined with particular care in regions featuring complex backgrounds, such as wakes, piers, and coastal structures. Common sources of annotation uncertainty include wakes occasionally misidentified as part of the ship, clutter from nearby piers or buildings, partially visible vessels, and small or irregularly shaped ships. These challenges may lead to segmentation errors or reduced precision in distinguishing ships from visually similar surroundings. We explicitly report these potential issues to assist readers in interpreting model performance.
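Because the images were annotated in LabelMe while the dataset follows the MS COCO format, converting LabelMe's JSON output into COCO annotations is a typical intermediate step. The sketch below converts the polygon shapes of one LabelMe record into COCO-style annotation dictionaries with a single ship category. It assumes LabelMe's usual `shapes`, `points`, and `shape_type` keys, and it computes `area` with the shoelace formula, which only approximates the mask-based area used by COCO tooling. The actual conversion script for VLRSSD is not described, and these function names are hypothetical.

```python
def polygon_area(points):
    """Shoelace formula for a simple polygon given as [[x, y], ...]."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def labelme_to_coco(record, image_id, first_ann_id=1, category_id=1):
    """Convert the polygon shapes of one LabelMe JSON dict into COCO annotation dicts."""
    annotations = []
    for shape in record.get("shapes", []):
        if shape.get("shape_type", "polygon") != "polygon":
            continue  # this sketch skips rectangles, points, and other shape types
        pts = [list(p) for p in shape["points"]]
        xs, ys = [p[0] for p in pts], [p[1] for p in pts]
        x0, y0 = min(xs), min(ys)
        annotations.append({
            "id": first_ann_id + len(annotations),
            "image_id": image_id,
            "category_id": category_id,                     # single "ship" category
            "segmentation": [[c for p in pts for c in p]],  # flattened x1, y1, x2, y2, ...
            "area": polygon_area(pts),
            "bbox": [x0, y0, max(xs) - x0, max(ys) - y0],   # COCO [x, y, width, height]
            "iscrowd": 0,
        })
    return annotations
```

In practice, each record would be loaded with `json.load`, and the returned annotations would be collected together with matching `images` and `categories` entries into a single COCO JSON file per subset.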

3.5.2. MariShipInsSeg Dataset

The MarShipInsSeg dataset [9] consists of optical images annotated in the COCO format. Most images were collected from web sources and supplemented by ship samples from the Sea Ship, COCO, and VOC datasets. It encompasses a wide range of ship categories, including cargo ships, cruise liners, warships, fishing vessels, and coastal ships. In total, the dataset contains 4001 images and 8413 annotated ship instances, an average of approximately 2.1 ships per image. Although MarShipInsSeg is an optical (visible light) ship dataset rather than a remote sensing dataset, it was adopted in this study because public optical remote sensing ship datasets are scarce; moreover, its texture and color characteristics are highly consistent with those of optical remote sensing imagery. It therefore serves as a suitable benchmark for further evaluating the generalization and robustness of the proposed model, and this evaluation also indirectly demonstrates that the model maintains strong performance on standard optical images. As an optical image dataset designed for visual scene analysis, MarShipInsSeg provides high-quality imagery with diverse ship categories, varying instance densities, and broad size distributions. Additional details are summarized in Table 1.

3.6. Improving Generalization via MixUp

To mitigate the limitation of insufficient training data and enhance the generalization capability of our network, we adopt MixUp [15], an image-level data augmentation strategy that generates new samples by linearly interpolating pairs of existing training samples. This method introduces additional variation into the training distribution while preserving structural coherence, which is crucial for instance segmentation.

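Given two randomly selected samples $(x_i, y_i)$ and $(x_j, y_j)$ from the training set (typically within the same batch), MixUp generates a synthetic training sample by linearly combining their images and labels. Specifically, the new synthetic sample $(\tilde{x}, \tilde{y})$ is obtained as

$$\tilde{x} = \lambda x_i + (1 - \lambda)\, x_j, \qquad \tilde{y} = \lambda y_i + (1 - \lambda)\, y_j,$$

where $x$ denotes the image and $y$ represents the corresponding label. The mixing coefficient $\lambda \in [0, 1]$ is sampled from a Beta distribution:

$$\lambda \sim \mathrm{Beta}(\alpha, \alpha).$$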

Here, α is the shape parameter of the Beta distribution, which controls the degree of interpolation between the two samples. In our implementation, α is set to 32.0, which yields a symmetric λ distribution tightly concentrated around 0.5 (for Beta(α, α), the standard deviation is 1/√(4(2α + 1)), or about 0.062 at α = 32). Empirical observations indicate that MixUp augmentation is particularly effective in enhancing segmentation performance for small ships. By moderately blending image–label pairs, MixUp produces diverse yet structurally consistent training samples, thereby mitigating overfitting in cluttered maritime scenes and exposing the network to richer contextual variations while preserving object-level semantics. Previous studies on MixUp [15] and CutMix [57] have similarly demonstrated that moderate sample blending improves dense prediction tasks by maintaining structural integrity and enhancing model generalization.
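The interpolation can be sketched in NumPy as follows. For instance segmentation, there is no single label vector to interpolate, so a common adaptation, assumed here, blends only the pixels with weight λ and keeps the instance annotations of both images. The soft-label form of the equation is included for comparison. The value α = 32.0 comes from the text. The function names and the list-of-instances label format are hypothetical, and both images are assumed to have the same shape.

```python
import numpy as np


def mixup_images(img_a, img_b, lam):
    """Pixel-wise convex combination: lam * x_i + (1 - lam) * x_j (same-shape inputs)."""
    return lam * img_a.astype(np.float32) + (1.0 - lam) * img_b.astype(np.float32)


def mixup_soft_labels(y_a, y_b, lam):
    """Label interpolation from the equation, for one-hot or soft label vectors."""
    return lam * np.asarray(y_a, dtype=np.float32) + (1.0 - lam) * np.asarray(y_b, dtype=np.float32)


def mixup_instances(img_a, inst_a, img_b, inst_b, alpha=32.0, rng=None):
    """Instance-segmentation variant: blend pixels, keep all instances of both images."""
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)  # lambda ~ Beta(alpha, alpha)
    return mixup_images(img_a, img_b, lam), list(inst_a) + list(inst_b), lam
```

With Beta(32, 32), λ rarely strays far from 0.5, so both source images remain clearly visible in nearly every mixed sample.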

5. Limitations and Discussion

The VLRSSD dataset was collected from Google Earth, which inherently sources imagery from multiple sensors. Consequently, it can be considered a multi-source remote sensing dataset. However, Google Earth does not provide explicit annotations linking images or regions to specific sensor sources, making precise categorization by sensor type impossible. Additionally, most images depict nearshore ships under clear-sky conditions, while scenarios such as cloudy, overcast, or foggy weather, open-sea environments, and scenes containing ship wakes or waves are relatively scarce. These characteristics indicate limited scene diversity; strictly speaking, the dataset does not fully represent complex maritime environments. Furthermore, the high cost of annotation constrains the dataset size, which in turn limits the model’s generalization ability across diverse scenarios.

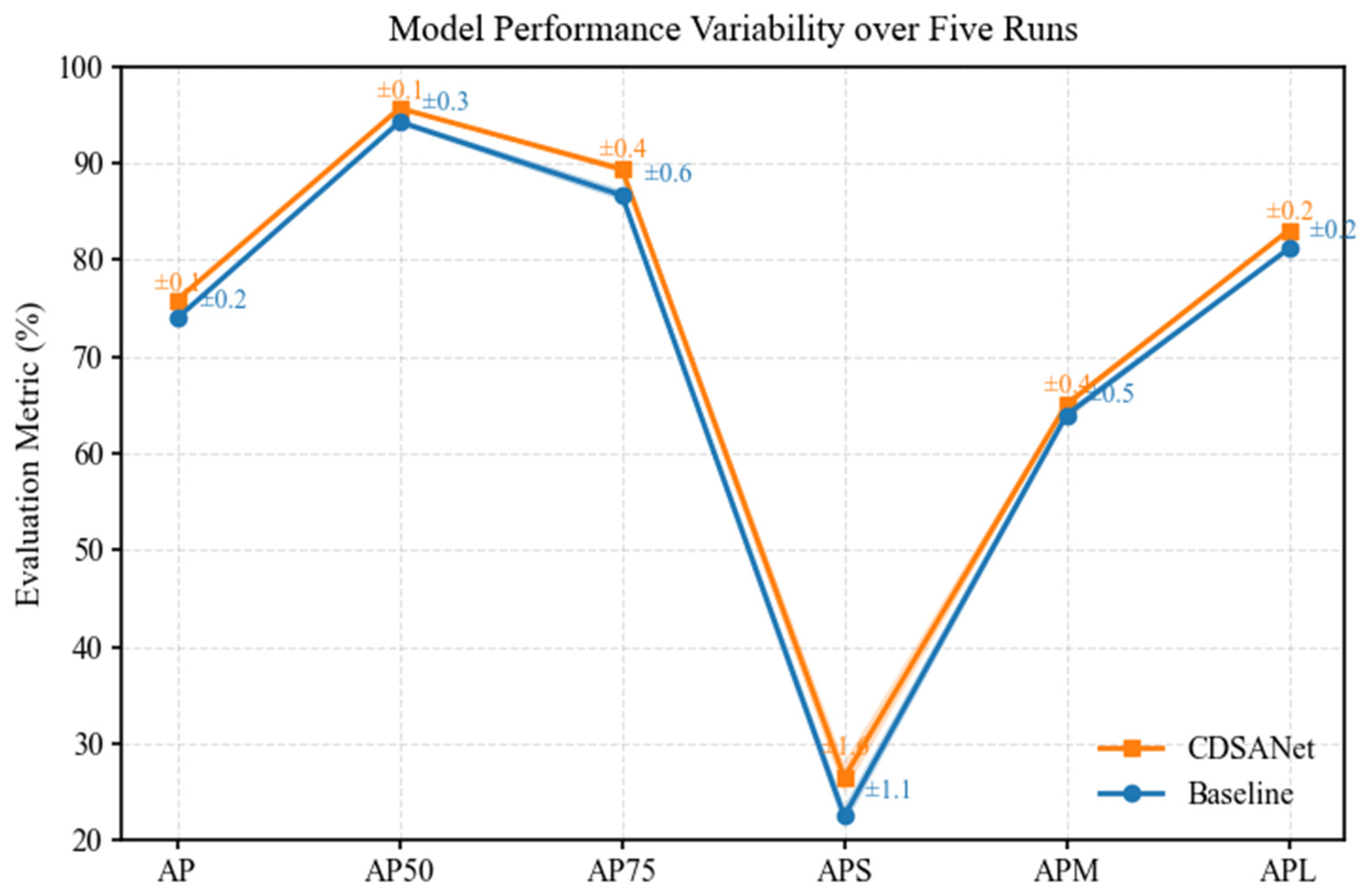

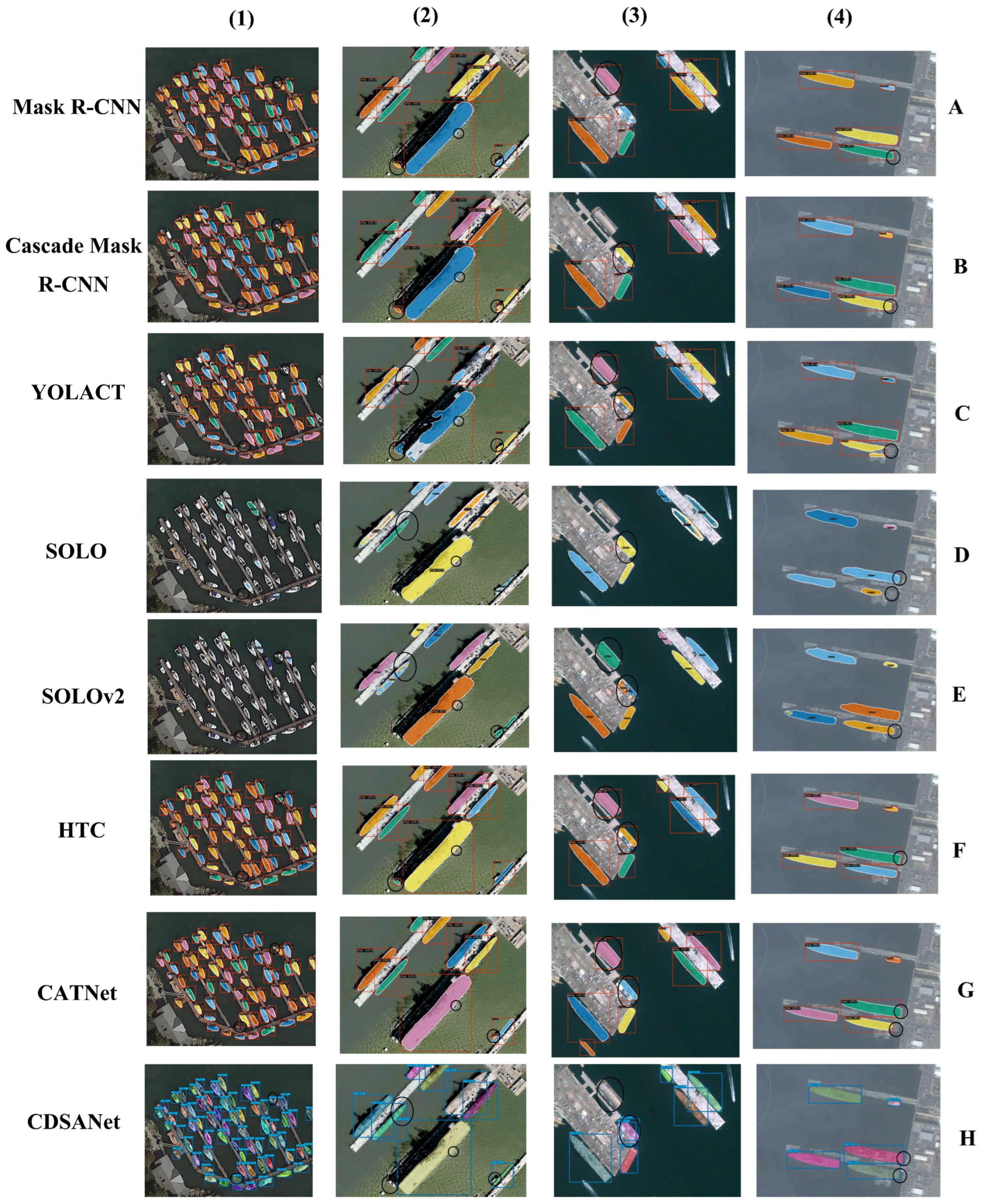

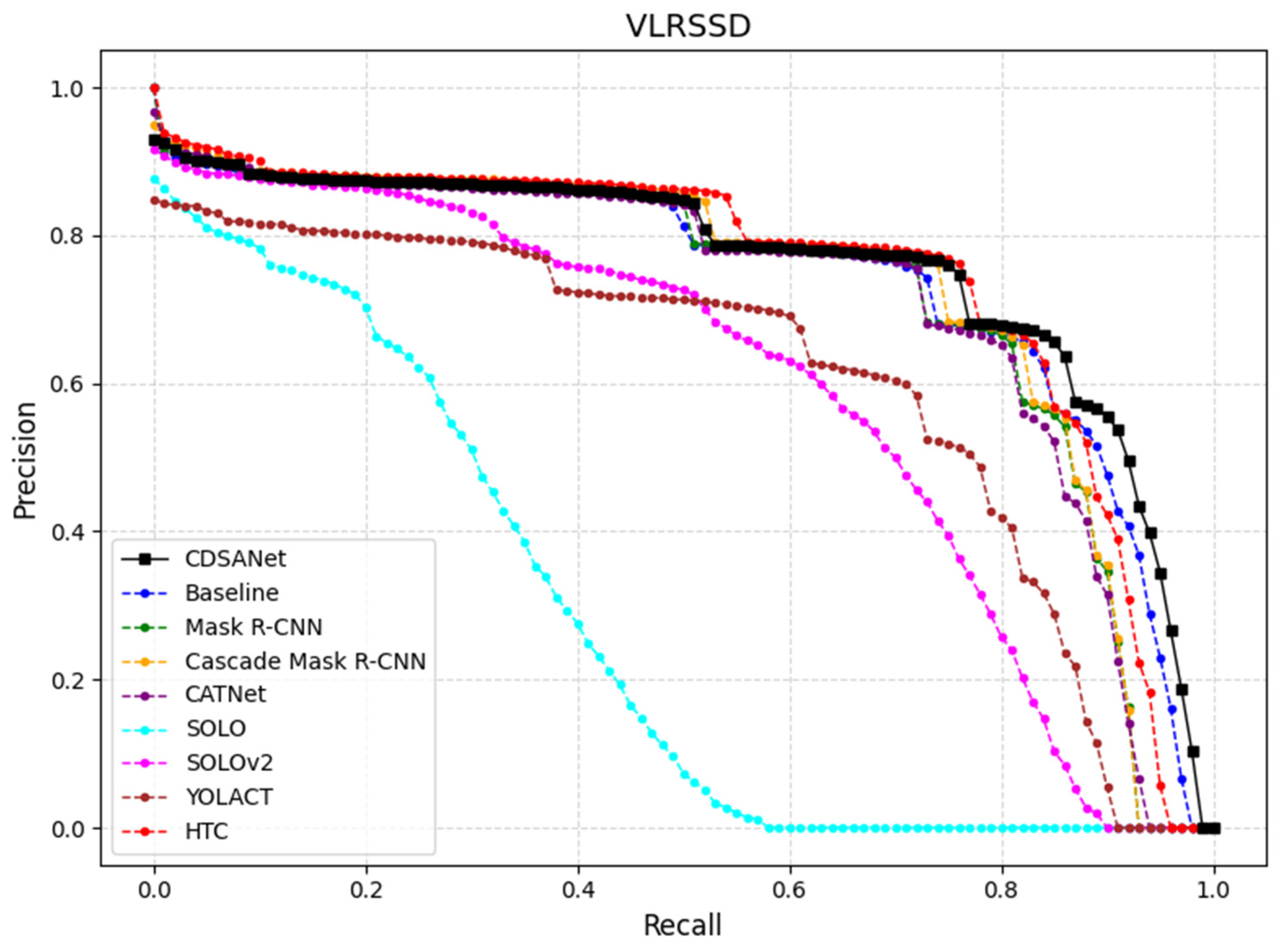

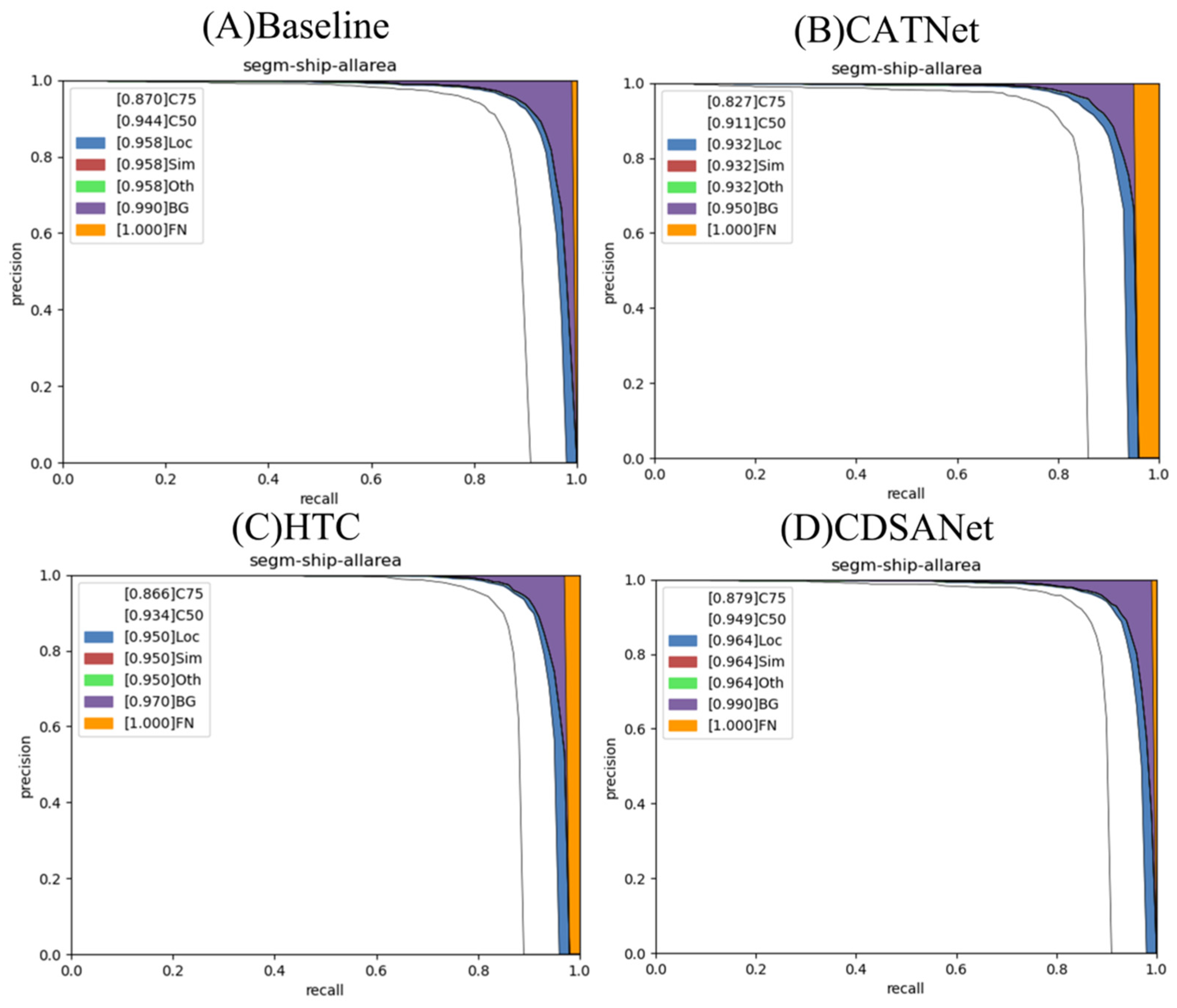

Due to the scarcity of ship datasets covering complex maritime environments, further validation is needed to assess CDSANet’s performance in such challenging scenarios. Compared with widely adopted frameworks such as Mask R-CNN and Cascade Mask R-CNN, CDSANet converges more slowly and requires additional training epochs to reach optimal accuracy. As shown in Table 9, CDSANet achieves a favorable balance among model size, parameter count, FLOPs, and inference speed compared with state-of-the-art segmentation models. Nevertheless, it still lags behind the lightweight YOLOv8 baseline on these efficiency metrics. Additionally, CDSANet is currently implemented on an early version of YOLOv8 and may not fully exploit the architectural and optimization improvements introduced in later versions such as YOLOv11. Although YOLOv11 offers certain advantages, it still relies primarily on conventional CNN backbones and CIoU-based loss functions, which limit global feature modeling and boundary localization. On our dataset, YOLOv11, three versions ahead of YOLOv8, achieves only modest performance improvements.

6. Conclusions and Future Work

In this study, we propose CDSANet, a novel ship instance segmentation model that integrates CNNs, vision transformers, dynamic convolution, attention mechanisms, the SIoU loss function, and a MixUp-based data augmentation strategy. CDSANet effectively addresses several limitations of YOLOv8, including its relatively weak capabilities in global context modeling, local–global feature interaction, feature extraction, and precise mask localization. As a result, CDSANet achieves superior segmentation accuracy and mask integrity, particularly for dense ship targets and objects with significant shape variations in optical remote sensing images, mainly in nearshore port and harbor areas under clear weather conditions. In addition, this work contributes a visible light remote sensing ship image dataset, providing a valuable resource for advancing research on nearshore ship instance segmentation. Despite the promising performance of the proposed model, its applicability is constrained by the limited diversity of available datasets. Future work could focus on enhancing domain generalization through domain adaptation techniques to mitigate performance degradation when transferring across different sensors or imaging environments. Moreover, integrating multimodal data, such as SAR and optical imagery, may provide complementary spatial–spectral cues, further improving segmentation accuracy and robustness.

Looking ahead, emerging architectures such as Mamba networks are attracting increasing attention as alternatives to Transformers because of their efficiency in modeling long sequences, lower memory usage, and reduced computational cost. Therefore, future research will explore integrating Mamba [65] networks with more advanced YOLO series models to further improve segmentation efficiency and accuracy. We also recognize the robustness and efficiency of the MMDetection framework in instance segmentation tasks. Accordingly, we plan to strengthen research on model compression and efficient architectures within this framework, while also investigating how to incorporate the innovative concepts of Mamba networks into MMDetection.

It should be noted that achieving accurate ship segmentation in complex maritime environments remains a highly challenging task. The term “complex maritime environments” encompasses a wide range of conditions, and developing a comprehensive algorithm capable of handling all such scenarios is undoubtedly difficult. Therefore, future work will focus on a representative scenario within complex maritime conditions: ship segmentation under foggy and hazy weather. This scenario is both scientifically interesting and practically important, as fog and haze frequently occur at sea and can significantly impact maritime traffic and the safety of maritime trade. Fortunately, mature fog and haze scene image simulation methods can help alleviate the scarcity of such images under real-world conditions. We believe that combining the advantages of Mamba networks with ship instance segmentation in foggy and hazy remote sensing images can open new research opportunities and yield promising results.