1. Introduction

Unmanned transport includes various types of autonomous vehicles such as unmanned cars, unmanned aerial vehicles (UAVs), autonomous ships, and trains, which are capable of operating without human involvement [1]. The use of video data obtained from unmanned vehicles (UVs) significantly enhances the capabilities of monitoring and analysis systems across various fields. UVs are equipped with high-quality cameras and sensors that can continuously and in real time capture video streams, ensuring accuracy and timeliness of information collection [2]. Each type of transport serves its specific purpose: unmanned cars are used for passenger and cargo transportation in urban environments, drones for goods delivery, aerial photography, and monitoring, autonomous ships for cargo transport and ocean research, and trains for efficient passenger and freight transport on rails [3]. All of these systems are aimed at improving safety, reducing costs, and enhancing logistics in various sectors [4].

Distortions occurring during the registration of video and photo data by ground-based unmanned vehicles reduce the quality of images, which play a key role in object recognition and environmental analysis. This can lead to errors in assessing the traffic situation, misidentification of pedestrians, vehicles, and traffic signs, thus increasing the risk of accidents [5]. This issue becomes especially critical under low-lighting conditions or in adverse weather. Additionally, digital noise can hinder the performance of machine vision algorithms and neural networks, slowing down the system’s response and reducing the accuracy of decision-making [6].

Digital noise in video recorded by ground-based unmanned vehicles represents visual distortions that occur due to insufficient lighting, high speeds, especially during sharp turns or changes in lighting conditions, and technical limitations of the camera, as well as interference from electromagnetic fields, which can further impact the recording quality [7]. This noise manifests as graininess or color artifacts. Filtering and post-processing algorithms are used to minimize digital noise, along with high-resolution cameras and enhanced light sensitivity. In real-time conditions, high-quality video with minimal noise is critically important for the proper functioning of navigation systems and ensuring the safety of unmanned vehicles.

Real-time denoising is a resource-intensive task, and there is no need to correct frames that are not distorted. Detecting the frames affected by noise addresses both issues. This paper proposes a method in which distorted frames are identified using the Structural Similarity Index Measure (SSIM). Similarity is determined between consecutive frames. It is assumed that with a sufficiently high frame rate, the difference between frames will be minimal. A divergence between two consecutive frames indicates the presence of distortions in the image, the operation of built-in digital filters, or high dynamics in the video. To exclude high dynamics in the video or other factors that do not affect image quality but still show high divergence between consecutive frames, the SSIM aspects of brightness, contrast, and structure were considered separately. The contrast aspect demonstrated the highest similarity between frames under natural conditions, without the presence of noise in the video. The decision on whether a frame is distorted is made by comparing the measure to a threshold value. The proposed method is deliberately designed as a simple yet effective solution for embedded drone systems where computational resources are strictly limited. Unlike complex neural network approaches, our method provides comparable accuracy with lower computational power requirements.

Our contribution is as follows:

A method based on a similarity measure for assessing the resemblance of video frames for detecting distorted frames is proposed.

A comparison of the proposed method with known image quality assessment methods has been conducted.

An additional sensitivity measure for detecting distorted frames has been developed.

It has been demonstrated that the method based on SSIM can identify the presence of noise in video data from unmanned vehicles.

The scientific novelty of the proposed method consists of adapting the classic SSIM to the task of detecting distorted frames in video from unmanned vehicles by using the contrast aspect, which is the most sensitive component. Unlike existing methods, the proposed approach does not require a reference image, has low computational costs, and enables real-time detection. This makes it especially valuable for integration into energy-limited systems, i.e., ground or aerial unmanned vehicles, where the use of resource-intensive algorithms is unacceptable. The method also offers a simple threshold scheme for frame classification.

The paper is organized as follows:

Section 2 provides an overview of existing image similarity comparison methods.

Section 3 discusses the proposed method for detecting distorted video frames.

Section 4 presents a comparison of the proposed distorted image detection method with the other similarity assessment methods.

Section 5 discusses the obtained results.

Section 6 concludes the paper.

3. Proposed Method for Detecting Distorted Video Frames

High-quality sensors are used for video recording on unmanned vehicles, ensuring filming in dynamic scenes. Such recording has to cope with changes in lighting, the movement of the device itself, and the movement of surrounding objects. Cameras typically have automatic systems for adjusting exposure, focus, and contrast, which help maintain high image quality for unmanned vehicles [55].

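Let two consecutive frames of the video be obtained, where $i$ is the current frame of the video, and $(i-1)$ is the previous frame of the video. The SSIM, as a similarity measure between video frames, indicates changes in brightness, contrast, and structure that occurred during the time interval between the frames and is calculated using the following formula:

$$\mathrm{SSIM}(i-1,i) = \left[l(i-1,i)\right]^{\alpha}\left[c(i-1,i)\right]^{\beta}\left[s(i-1,i)\right]^{\gamma}, \tag{1}$$

where

$$l(i-1,i) = \frac{2\mu_{i-1}\mu_{i} + C_1}{\mu_{i-1}^{2} + \mu_{i}^{2} + C_1}, \tag{2}$$

$$c(i-1,i) = \frac{2\sigma_{i-1}\sigma_{i} + C_2}{\sigma_{i-1}^{2} + \sigma_{i}^{2} + C_2}, \tag{3}$$

$$s(i-1,i) = \frac{\sigma_{i-1,i} + C_3}{\sigma_{i-1}\sigma_{i} + C_3}, \tag{4}$$

where $\mu_{i-1}$, $\mu_{i}$ are the local mean values; $\sigma_{i-1}$, $\sigma_{i}$ are the standard deviations; $\sigma_{i-1,i}$ is the cross-covariance for frames $i-1$ and $i$; $l$, $c$, $s$ refer to the similarity in brightness, contrast, and structure, respectively; $\alpha$, $\beta$, $\gamma$ are the coefficients of influence of $l$, $c$, $s$, respectively; $C_1$, $C_2$, $C_3$ are constants.

Typically, a simplified SSIM formula is used, where the condition $\alpha = \beta = \gamma = 1$ and $C_3 = C_2/2$ holds. The simplified SSIM formula is as follows:

$$\mathrm{SSIM}(i-1,i) = \frac{\left(2\mu_{i-1}\mu_{i} + C_1\right)\left(2\sigma_{i-1,i} + C_2\right)}{\left(\mu_{i-1}^{2} + \mu_{i}^{2} + C_1\right)\left(\sigma_{i-1}^{2} + \sigma_{i}^{2} + C_2\right)}. \tag{5}$$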
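The brightness, contrast, and structure components described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: it uses whole-frame statistics instead of local windows, and the constants `C1`, `C2`, `C3` are assumed to take the values commonly used for 8-bit images. The function names are hypothetical.

```python
import numpy as np

# Assumed constants (common SSIM defaults for 8-bit images, not stated in the paper).
C1 = (0.01 * 255) ** 2
C2 = (0.03 * 255) ** 2
C3 = C2 / 2


def ssim_components(prev, curr):
    """Return (l, c, s) for two grayscale frames using whole-frame statistics."""
    x = prev.astype(np.float64)
    y = curr.astype(np.float64)
    mu_x, mu_y = x.mean(), y.mean()
    sd_x, sd_y = x.std(), y.std()
    cov = ((x - mu_x) * (y - mu_y)).mean()  # cross-covariance of the two frames
    l = (2 * mu_x * mu_y + C1) / (mu_x**2 + mu_y**2 + C1)  # brightness
    c = (2 * sd_x * sd_y + C2) / (sd_x**2 + sd_y**2 + C2)  # contrast
    s = (cov + C3) / (sd_x * sd_y + C3)                    # structure
    return l, c, s


def ssim_global(prev, curr):
    """Product of the three components with all exponents equal to 1."""
    l, c, s = ssim_components(prev, curr)
    return l * c * s
```

Keeping the three components separate makes it possible to inspect how each one reacts to a given change between frames, which is the comparison the method relies on.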

When analyzing the changes in the video under certain conditions, the optimal detection strategy is to isolate the factor that induces the most severe deviation in the SSIM metric. On the other hand, the difference between the frames can be described by four influences, D, N, F, and O,

where

D is the influence of the difference between frames caused by object movement, the movement of the recording device, changes in lighting, and other natural factors affecting pixel mismatches (this characteristic depends on the speed of the unmanned vehicle as well as the frame rate of the video);

N is the influence of noise occurring in the images;

F is the influence of digital noise reduction filters or other embedded algorithms;

O is the influence of various control elements or information overlaid on the recorded video frames, such as the OSD (On-Screen Display) technology. The OSD can be static, such as a display of current settings, or dynamic, such as prompts that appear depending on user actions.

Example 1. Consider an experimental video recorded with a MiDriveD01 dashcam. The video contains 650 frames with a resolution of 1920 × 1080, a frame rate of 30 fps, and MP4 format; the video frames contain OSD information. The first, last, and an example frame affected by N are shown in Figure 1. Figure 2 presents a video fragment indicating the influences of D, N, F, and O using SSIM. The video is subject to periodic influence from F, which is caused by features of the recorder’s algorithms. Every hundred frames, the influence of N is clearly visible; these distortions are artificially introduced. The figure also shows a clear example of the strong influence of D; the influence of O on this video is not significant, which is due to the high resolution of the video frames. At a sufficiently high frame rate, the difference between consecutive frames will be low, and the SSIM(i − 1, i) values will be high. To separate the influence of N from that of D, F, and O on SSIM(i − 1, i), it is necessary to introduce a threshold value T. Using T, it can be concluded whether N has caused low similarity between the images.

Standard classification metrics, such as precision, recall, F1-score, and overall accuracy, were used to quantitatively evaluate the efficacy of the chosen threshold T for detecting distorted frames. The calculation of these metrics is based on the analysis of the confusion matrix, which takes into account True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN) detections.

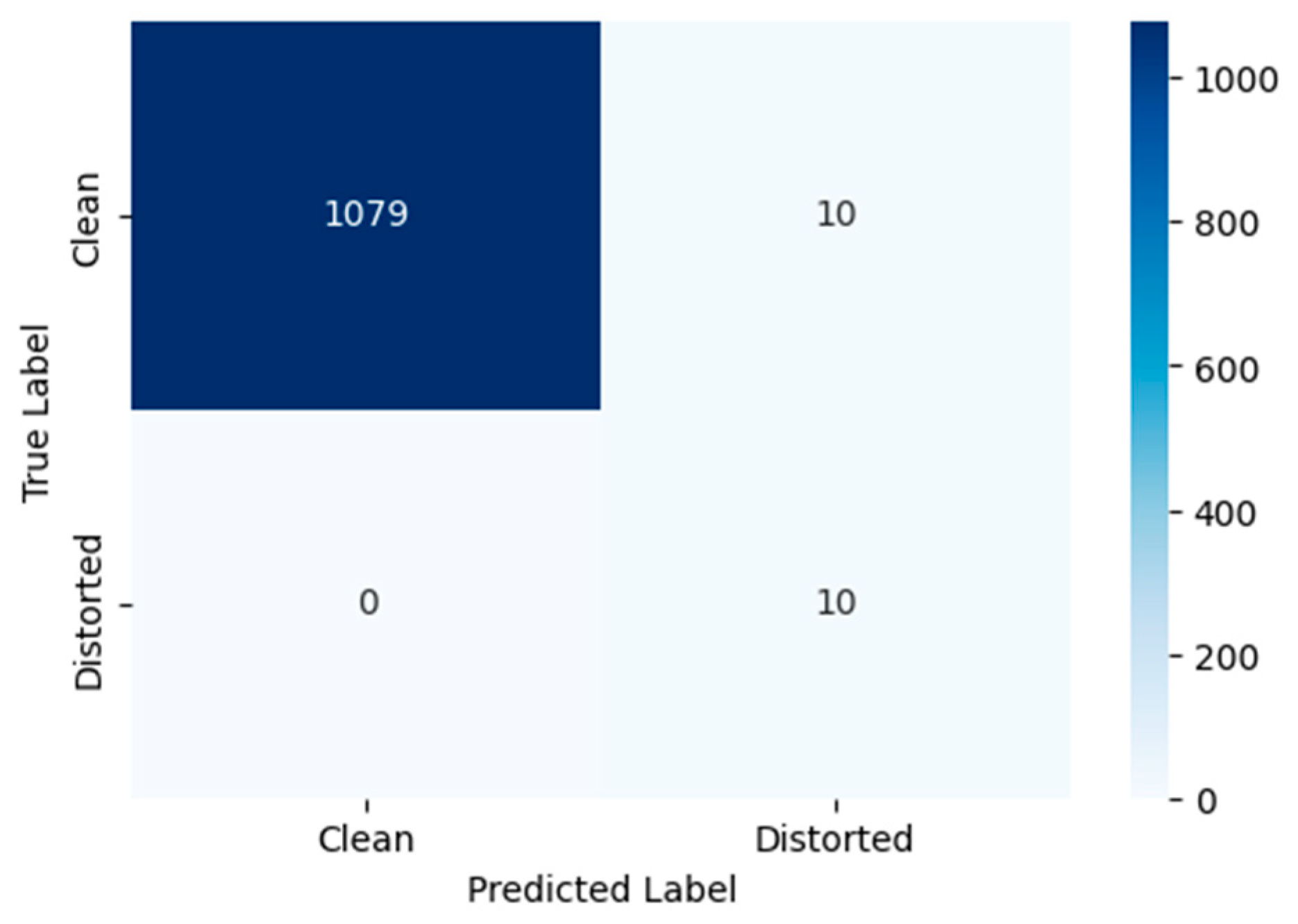

Figure 3 presents the confusion matrix for the binary classification of video frames as “distorted” or “clean”, using the proposed method with a threshold of T = 0.9. The values of the metrics Accuracy, Precision, Recall, and F1-Score are presented in Table 1.

The threshold T = 0.9 was chosen to ensure maximum detection recall (Recall = 1.0), which guarantees the identification of all distorted frames, a critical requirement for subsequent analysis in unmanned vehicle systems. This configuration achieved an overall classification accuracy of 99.1%. The Precision value of 0.5 arises because the frame immediately following a distorted one differs significantly from it and is consequently also classified as distorted by the proposed method. This observation is supported by the confusion matrix, where FP = TP. The resulting F1-score of 0.667 is consistent with this conservative detection strategy, which favors recall over precision. The performance metrics are identical for Video 1, Video 2, and Video 3.
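The relationship between the confusion matrix and the reported metrics can be checked with a short sketch. The counts below are hypothetical: TP = FP = 10 and FN = 0 follow the description above, while TN = 1079 is an assumption made here so that the total matches a 1099-frame video.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1


# Hypothetical counts: every distorted frame is found (FN = 0) and each one
# produces one false alarm on the following frame (FP = TP).
acc, prec, rec, f1 = classification_metrics(tp=10, fp=10, tn=1079, fn=0)
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} f1={f1:.3f}")
# accuracy=0.991 precision=0.500 recall=1.000 f1=0.667
```

Under these assumed counts, the sketch reproduces the values quoted in the text, which shows that the low precision comes entirely from the one false alarm per distorted frame.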

Figure 4 shows the Receiver Operating Characteristic (ROC) curve for the proposed distortion detection method, with the Area Under the Curve (AUC) value of 0.9954. This near-perfect AUC score demonstrates the exceptional capability of the proposed contrast-based measure S to discriminate between distorted and clean frames across all possible classification thresholds. The ROC curve’s strong performance, hugging the top-left corner of the plot, indicates that the method maintains high true positive rates while keeping false positive rates low throughout the operating range. This outstanding separation capability confirms that the contrast component of SSIM serves as a highly effective feature for detecting noise-induced distortions in UAV video sequences, providing robust performance regardless of the specific threshold choice.

When determining frame distortion in the video, the most important factor is the influence of N. Therefore, it is necessary to select a measure where the influence of N is the most significant.

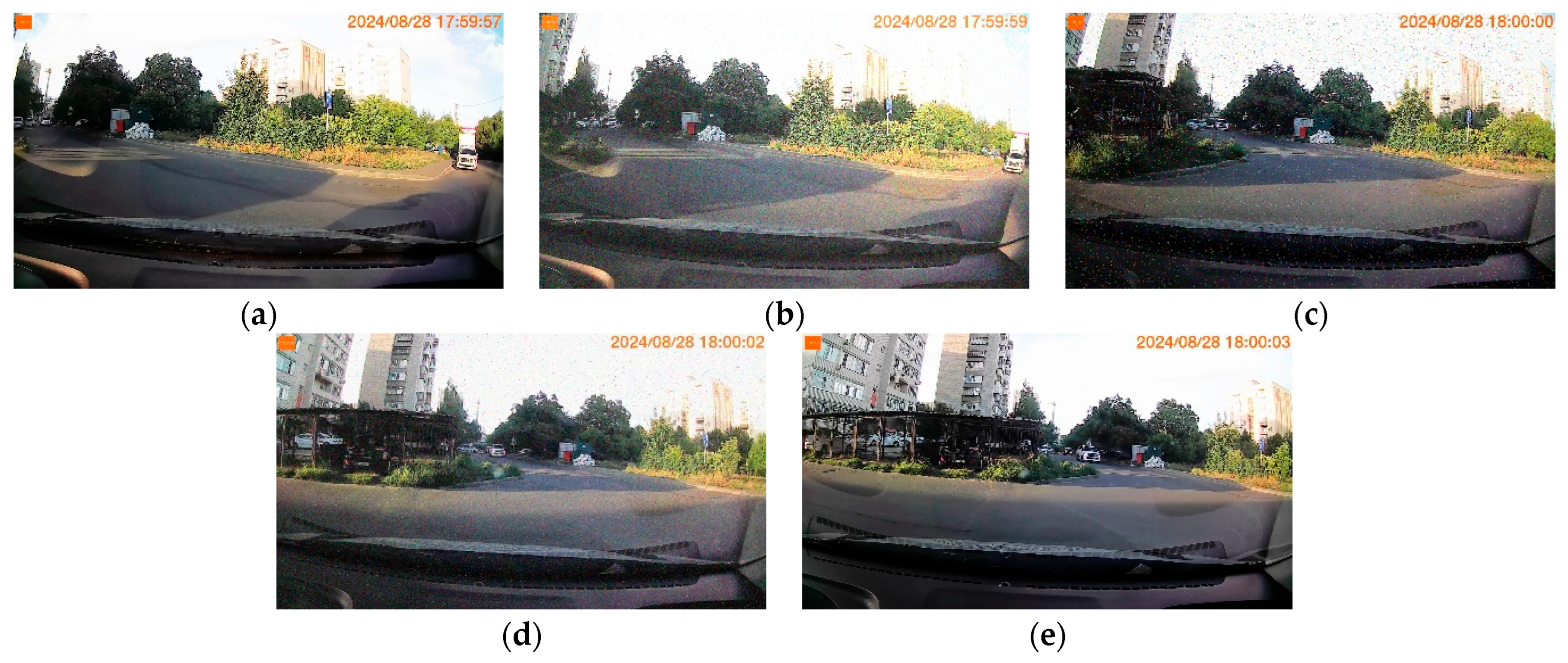

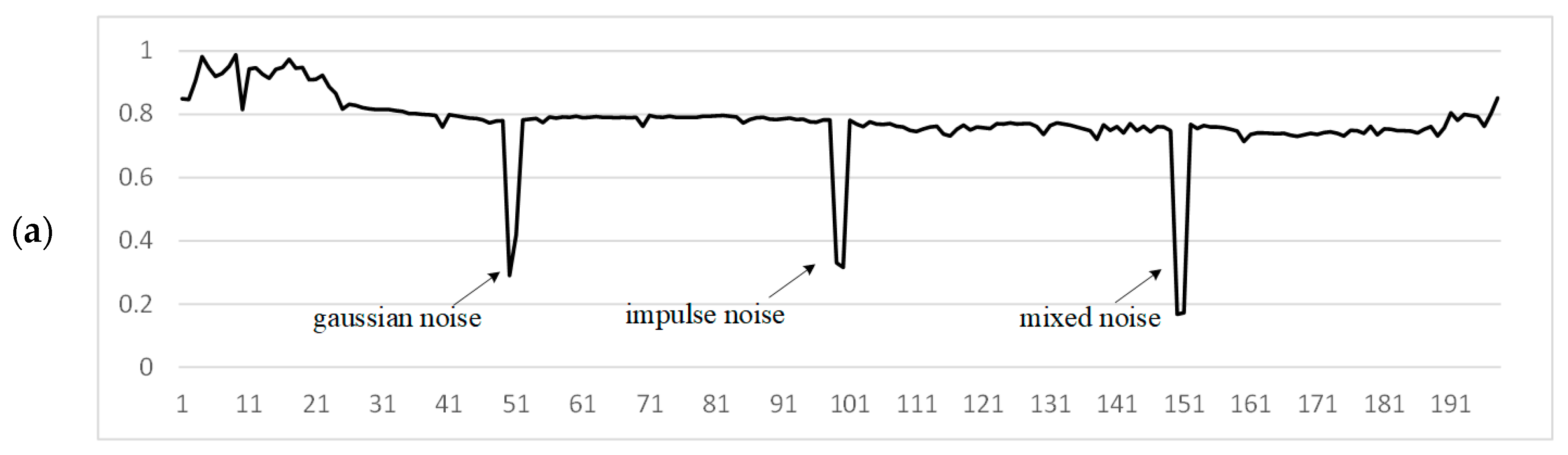

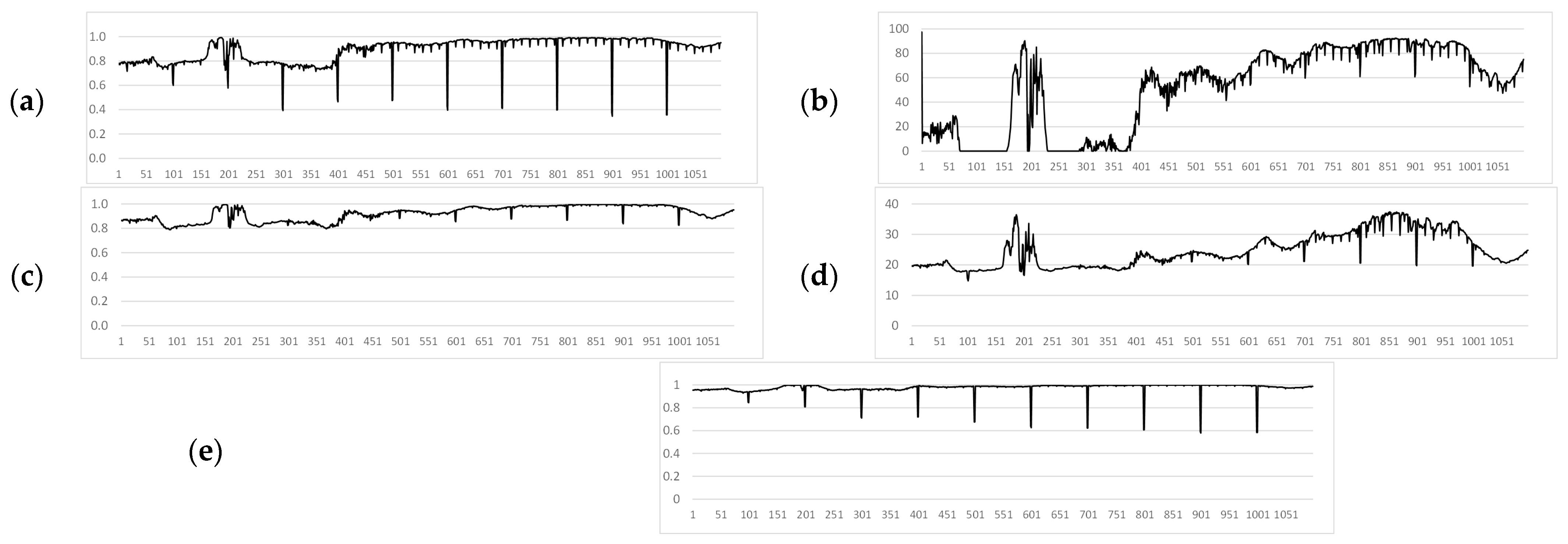

Example 2. Figure 5 shows some frames of a video fragment containing 200 frames, where frame 50 is distorted by Gaussian noise with a density of 0.15; frame 100 is distorted by impulse noise with a noise density of 0.03; frame 150 is distorted by impulse noise with a density of 0.01 and is further distorted by Gaussian noise with a density of 0.05. The graphs of these video fragments are shown in Figure 6. Considering the brightness factor (Figure 6c), the similarity between video frames is higher than in other aspects, but the presence of a distorted frame is less pronounced. In the structural aspect (Figure 6d), it is more difficult to determine which specific frame is distorted by noise. Based on the values of the SSIM aspects between video frames, the manifestation of additive noise is more pronounced in the contrast aspect (Figure 6b). The original SSIM (Figure 6a) also shows a high result, but a high influence from the differences in other parameters is also observed.

We use the contrast aspect of the SSIM in the proposed approach. To properly use one aspect of SSIM instead of three, the contrast value, calculated by Formula (3), needs to be cubed. The proposed method for evaluating the similarity of video frames will look as follows:

$$S(i-1,i) = \left(\frac{2\sigma_{i-1}\sigma_{i} + C_2}{\sigma_{i-1}^{2} + \sigma_{i}^{2} + C_2}\right)^{3}, \tag{7}$$

where $\sigma_{i-1}$, $\sigma_{i}$ are the standard deviations for frames $i-1$ and $i$; $C_2$ is a constant, as in the SSIM formulas above.

The values of S between consecutive video frames on unmanned vehicles lie in the range [0.9, 1], where 1 indicates complete similarity in the contrast aspect. It is assumed that a value of S < 0.9 indicates the presence of noise in the video frame.

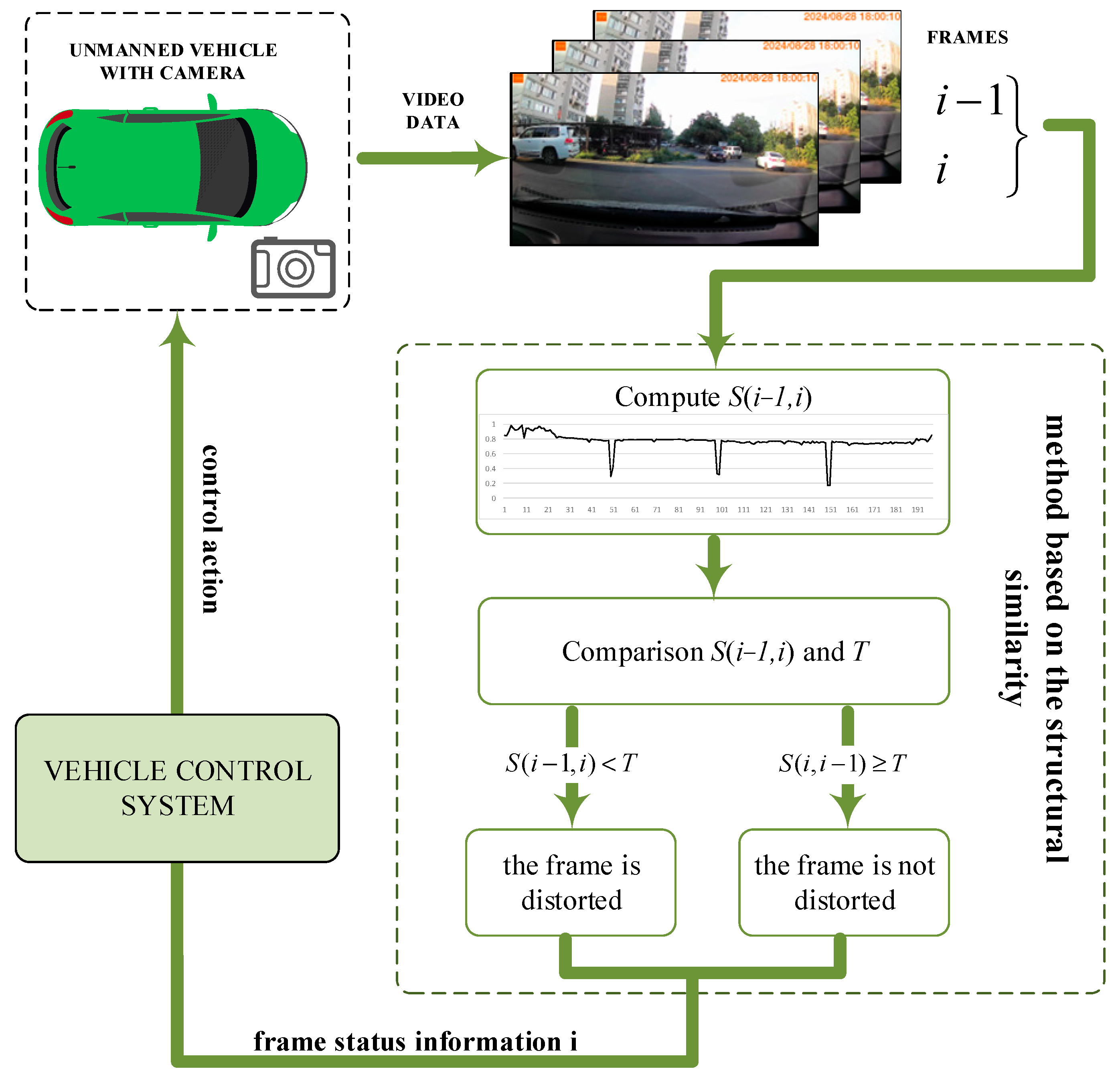

Figure 7 shows a scheme of the method for detecting distorted frames in video. For a sequence of video frames obtained from the camera of an unmanned vehicle, the proposed measure S is calculated based on the contrast aspect of the structural similarity index. The value for each frame i of the video is compared with the threshold. Information about the frame state is transmitted to the vehicle control system. Then, the vehicle control system transmits a control action to the unmanned vehicle.
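The detection scheme described above can be sketched in Python as follows. This is an illustrative sketch rather than the authors' code: it uses whole-frame standard deviations instead of local windows, assumes the common 8-bit SSIM value for the constant `C2`, and the function names and synthetic frames are hypothetical.

```python
import numpy as np

C2 = (0.03 * 255) ** 2  # assumed SSIM default for 8-bit images


def contrast_similarity(prev, curr, c2=C2):
    """Cubed SSIM contrast term between two grayscale frames (global statistics)."""
    sd_prev = float(np.std(prev.astype(np.float64)))
    sd_curr = float(np.std(curr.astype(np.float64)))
    c = (2.0 * sd_prev * sd_curr + c2) / (sd_prev**2 + sd_curr**2 + c2)
    return c**3


def detect_distorted(frames, threshold=0.9):
    """Return indices i whose similarity to frame i - 1 falls below the threshold."""
    return [i for i in range(1, len(frames))
            if contrast_similarity(frames[i - 1], frames[i]) < threshold]


# Synthetic example: a low-contrast scene with one noisy frame at index 2.
rng = np.random.default_rng(0)
base = np.tile(np.linspace(100.0, 140.0, 64), (48, 1))
noisy = np.clip(base + rng.normal(0.0, 30.0, base.shape), 0, 255)
frames = [base, np.roll(base, 1, axis=1), noisy, np.roll(base, 2, axis=1), base]
print(detect_distorted(frames))  # [2, 3]
```

In this synthetic example, the frame that follows the noisy one is also flagged, because it is compared against the noisy frame.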

The key requirement for the method is its efficient implementation on onboard UAV processors. The simplicity of the proposed approach (using only the SSIM contrast component) allows achieving low processing time on typical embedded processors. The proposed method, based on the SSIM contrast aspect, allows us to accurately detect the difference between video frames associated with the effect of noise on the image, which makes it promising for solving the problem of detecting distorted video frames. To test its effectiveness and compare it with existing approaches, experiments were conducted on standard datasets. The results of the experiments are given in the next section.

4. Materials and Methods

An experimental video was prepared [56] to validate the effectiveness of the proposed method and compare it with other image quality assessment measures for evaluating the similarity of video frames. The video was recorded using the MiDriveD01 dashcam model and contains 1099 frames with a resolution of 1920 × 1080, a frame rate of 30 fps, in MP4 format. The video frames include OSD information. Every hundredth frame of the experimental video was artificially corrupted. The original video (bitrate 50 Mbps, H.264) was first decoded to a sequence of PNG frames, and controlled noise was then added to the selected frames. The simulations and noise processing were performed using MATLAB R2021b. Three copies of the experimental video were created in this way, each differing in the type of noise applied to the corrupted frames. The noise density in the corrupted frames increases every hundred frames with a specified step.

Every hundredth frame in Video 1 is corrupted by random impulse noise, with a density ranging from 0.01 to 0.1, increasing in steps of 0.01.

Every hundredth frame in Video 2 is corrupted by Gaussian noise, with a density ranging from 0.05 to 0.5, increasing in steps of 0.05.

Every hundredth frame in Video 3 is corrupted by mixed noise, consisting of a combination of Gaussian noise and random impulse noise.

In the context of digital images, random impulse noise is a kind of noise that can degrade image quality. It appears as sharp, short-lived brightness spikes at random positions, so that the affected pixels take on arbitrary values. Gaussian noise, on the other hand, is characterized by a normal distribution of amplitude values. It often occurs in digital images and can be caused by various factors, such as insufficient lighting, electronic interference, or errors in the image capture process. Gaussian noise is random and unpredictable, making it difficult to remove without losing image details. Since both individual types of noise and combinations of different noise types can occur in real-world conditions, a study was also conducted on mixed noise.
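The two noise models can be sketched in Python. This is a hedged illustration, not the MATLAB procedure used in the study: the function names are hypothetical, and treating the Gaussian "density" as a variance on a [0, 1] intensity scale is an assumption made here.

```python
import numpy as np


def add_impulse_noise(frame, density, rng):
    """Replace a `density` fraction of pixels with random 8-bit values."""
    noisy = frame.copy()
    mask = rng.random(frame.shape) < density
    noisy[mask] = rng.integers(0, 256, size=int(mask.sum()), dtype=np.uint8)
    return noisy


def add_gaussian_noise(frame, variance, rng):
    """Add zero-mean Gaussian noise; `variance` is on a [0, 1] intensity scale."""
    scaled = frame.astype(np.float64) / 255.0
    scaled += rng.normal(0.0, np.sqrt(variance), size=frame.shape)
    return (np.clip(scaled, 0.0, 1.0) * 255.0).round().astype(np.uint8)


def add_mixed_noise(frame, density, variance, rng):
    """Impulse noise first, then Gaussian noise on top of it."""
    return add_gaussian_noise(add_impulse_noise(frame, density, rng), variance, rng)
```

Passing an explicit generator such as `np.random.default_rng(0)` keeps the corrupted frames reproducible between runs.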

Figure 8 shows frame 600 from the original undistorted experimental video, Video 1, Video 2, and Video 3. Frame 600 of Video 1 is corrupted by random impulse noise with an intensity of 0.06. Frame 600 of Video 2 is corrupted by Gaussian noise with an intensity of 0.3. Frame 600 of Video 3 is initially corrupted by random impulse noise with an intensity of 0.06, and further corrupted by Gaussian noise with an intensity of 0.3.

The proposed method was compared with modern approaches for measuring frame similarity to detect inconsistencies. In the experimental videos, the values of the SSIM [42], VMAF [44], CORR [11], PSNR [40] and S (proposed) were calculated.

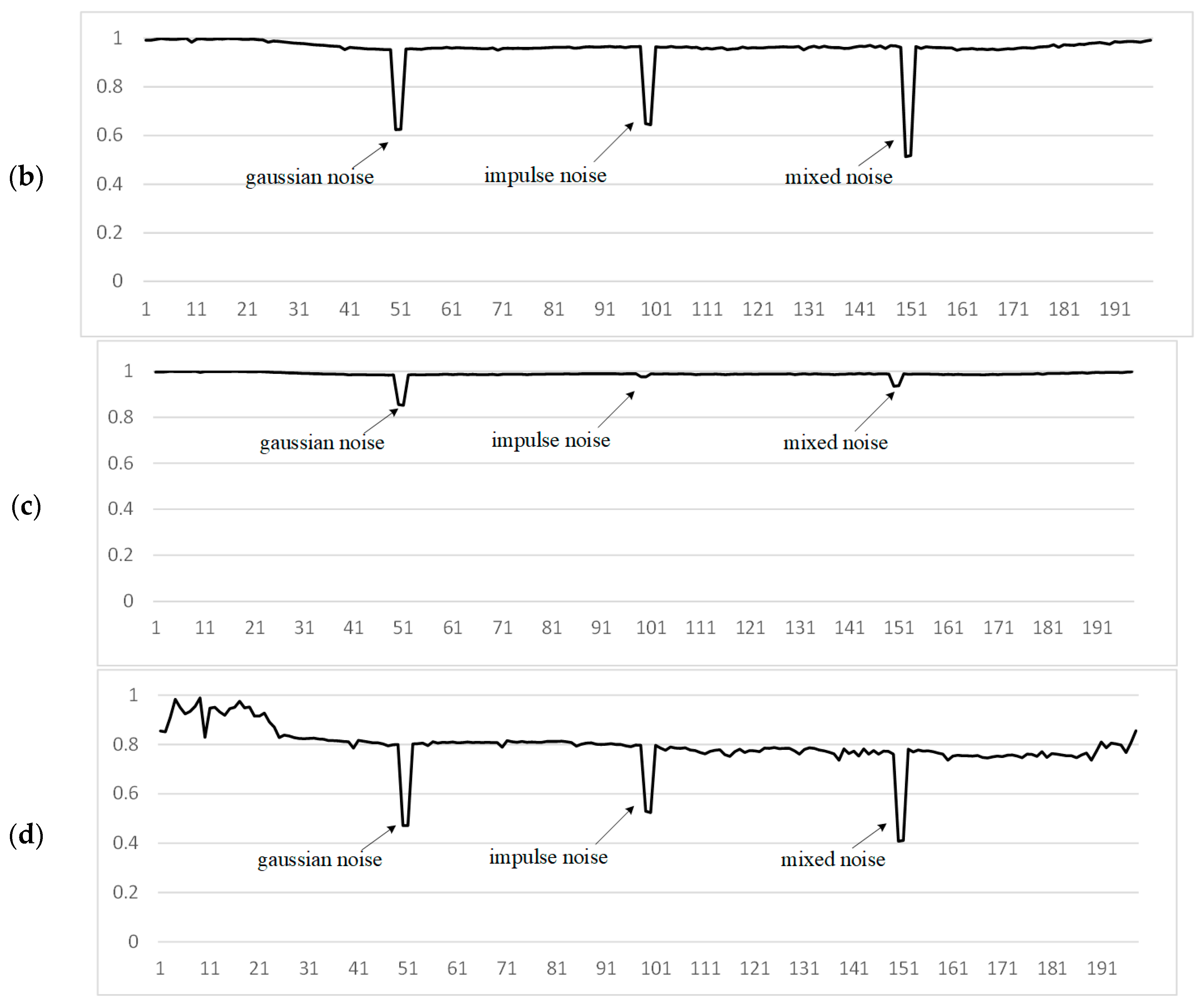

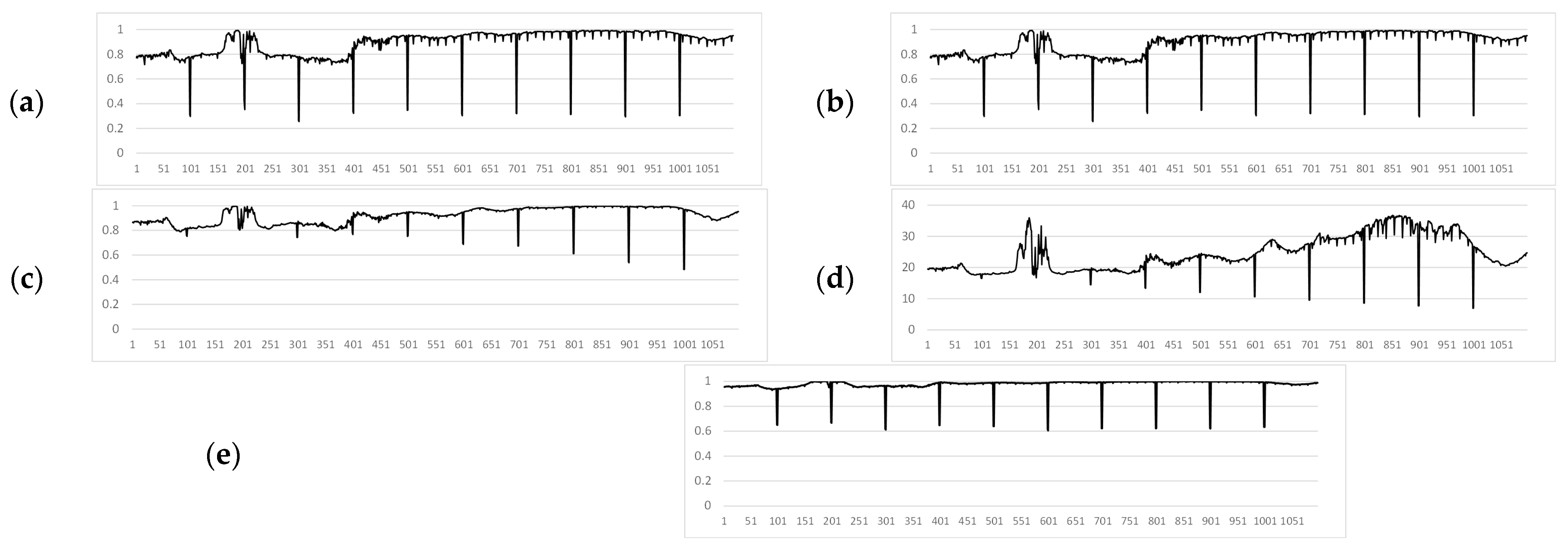

Figure 9, Figure 10 and Figure 11 show the graphs of the values of these measures for Video 1, Video 2, and Video 3, respectively. The measures were evaluated on the 1099-frame video with artificial noise injected every 100 frames.

A comparative analysis of the similarity measures, as visually summarized in Figure 9, Figure 10 and Figure 11, leads to several key conclusions. The proposed measure S (in Figure 9e, Figure 10e and Figure 11e) consistently demonstrates the most pronounced and clear responses to artificially introduced distortions across all noise types (impulse, Gaussian, mixed), with similarity values for corrupted frames dropping sharply below the threshold while remaining stable for uncorrupted segments. In contrast, while SSIM (in Figure 9a, Figure 10a and Figure 11a) shows good detection capability, its response is more susceptible to interference from natural scene dynamics and internal processing artifacts (F). VMAF (in Figure 9b, Figure 10b and Figure 11b) exhibits high computational instability, particularly in the initial frames, and fails to provide consistent baseline readings. CORR (in Figure 9c, Figure 10c and Figure 11c), despite its low computational cost, proves ineffective for this task, as its values for distorted frames often do not surpass the variations caused by normal scene changes. Finally, PSNR (in Figure 9d, Figure 10d and Figure 11d) shows poor sensitivity at lower noise levels, making it unreliable for detecting subtle distortions. Thus, the visual evidence from these figures strongly supports the superiority of the proposed contrast-based measure S for the specific task of noise detection in UAV video streams.

An additional measure was introduced to compare image quality methods for determining frame similarity in videos. This measure also allows for an objective assessment of the research results. The measure calculates the arithmetic mean of the absolute differences between the distorted and preceding frames, and is defined as follows.
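One way to write this definition, using notation assumed here rather than taken from the paper, is

$$\mathrm{Sens} = \frac{1}{n}\sum_{k=1}^{n}\left| M(d_k) - M(d_k - 1) \right|,$$

where $M(j)$ denotes the value of the chosen similarity measure (SSIM, VMAF, CORR, PSNR, or $S$) computed for frame $j$, $d_k$ is the index of the $k$-th distorted frame, and $n$ is the number of distorted frames. A larger value means that the measure drops more sharply at distorted frames relative to its value just before them.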

The VMAF takes values in the range [0, 100], and PSNR takes values in the range $[0, \infty)$. To compare the Sens values with other measures, it is necessary to normalize these values to the range [0, 1]. PSNR can be normalized to the range [0, 1] by dividing the value by 40, since 40 dB corresponds to high image quality where distortion is visually imperceptible [45]. This linear normalization approach is widely adopted in image quality assessment literature to provide an intuitive scaling where values near 1 represent almost distortion-free content, while lower values indicate progressively more severe degradation. The threshold of 40 dB represents the point where distortions typically become imperceptible to human observers under normal viewing conditions, making it appropriate for establishing an upper bound for quality assessment in our video analysis context. The Sens allows determining the sensitivity of detecting a distorted frame using the corresponding method (SSIM, VMAF, CORR, PSNR, S). The Sens was calculated for Video 1, Video 2, and Video 3.
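The normalization and the sensitivity measure can be sketched in Python. The function names are hypothetical. Two steps are assumptions made here and are not stated in the text: clipping values above 1 (for example, PSNR above 40 dB) and dividing VMAF by 100.

```python
import numpy as np


def normalize(values, measure):
    """Scale a measure series to [0, 1]; VMAF by 100, PSNR by 40 dB (clipped)."""
    v = np.asarray(values, dtype=np.float64)
    if measure == "VMAF":
        v = v / 100.0
    elif measure == "PSNR":
        v = v / 40.0
    return np.clip(v, 0.0, 1.0)


def sens(values, distorted_idx):
    """Mean absolute difference between each distorted frame's value and the previous one."""
    v = np.asarray(values, dtype=np.float64)
    idx = np.asarray(distorted_idx)
    return float(np.mean(np.abs(v[idx] - v[idx - 1])))


# Toy series with distorted frames at indices 2 and 5 (illustrative values only).
series = [0.98, 0.97, 0.40, 0.60, 0.98, 0.30]
print(sens(series, [2, 5]))
```

Because every series is brought to the same [0, 1] scale first, the resulting Sens values can be compared across measures.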

The SSIM demonstrated high effectiveness in detecting distorted frames in the video. However, the graph reveals a significant influence of F, which is attributed to the periodic activation of internal algorithms of the video recorder. The computation of the VMAF demands greater computational resources. Additionally, the VMAF exhibits instability during the first 400 frames of the video due to its use of machine learning methods. This instability may be associated with the training datasets used in the VMAF model. A key advantage of the CORR is its low computational demand and energy efficiency. However, in all experimental videos, this method showed low effectiveness, with the CORR values under strong N influences not exceeding the effect of D. Consequently, it is not possible to reliably determine whether a frame is distorted. At low noise levels, PSNR fails to detect distorted frames by comparing successive frames, as can be seen from Table 2, which lists the sensitivity values calculated using Equation (8) (the mean absolute difference between distorted and preceding frames). For this reason, PSNR showed detection results similar to VMAF.

The proposed method accurately detects all 10 artificially corrupted frames despite the different types of distortions. In Video 1, as the intensity of the random impulse noise increases, the detection of distorted frames becomes more effective. In Video 2 and Video 3, by contrast, all distorted frames are evident, but the detection efficiency does not improve as the noise intensity increases. It is also clear that, for the proposed measure S, the similarity between uncorrupted frames does not fall below the threshold T throughout the entire video.

The obtained Sens values confirm the conclusions drawn from the plots in Figure 9, Figure 10 and Figure 11 and demonstrate the superiority of the proposed method across all experimental videos. The highest Sens values, achieved by the proposed method, indicate that it responds most strongly to distortions in the video frames. Therefore, the proposed method effectively detects distorted frames in videos captured by unmanned vehicles.

One of the limitations of the proposed method, which is based solely on the contrast component of the SSIM index, is its inability to detect frame distortions caused by sensor overexposure, such as glare from direct sunlight. To model this scenario, a simulation was conducted in which synthetic “glare” distortions were introduced into a video sequence by artificially increasing pixel brightness values. Specifically, every 100th frame was modified by increasing pixel intensity: by +10 for frame 100, +20 for frame 200, and so on, up to +100 for frame 1000. Since the developed similarity measure excludes the luminance and structural components of SSIM, the method fails to detect frames affected by overexposure. As a result, despite noticeable brightness changes, such frames are not identified as distorted because the metric relies solely on contrast. This experiment highlights a key limitation: the method is not effective in scenarios where brightness is the dominant form of distortion, as it relies exclusively on contrast variation. The results of this simulation are presented in Figure 12.
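The reason for this limitation can be illustrated with a small Python sketch: adding a constant to every pixel leaves the standard deviation unchanged, so the contrast term stays at 1 while the luminance term drops. The sketch uses whole-frame statistics, assumed 8-bit SSIM constants, and a synthetic frame whose values do not saturate. With saturation at 255 the standard deviation would change, and that case is not covered here.

```python
import numpy as np

C1 = (0.01 * 255) ** 2  # assumed SSIM defaults for 8-bit images
C2 = (0.03 * 255) ** 2


def contrast_term(x, y):
    """SSIM contrast component from whole-frame standard deviations."""
    sx, sy = np.std(x), np.std(y)
    return (2 * sx * sy + C2) / (sx**2 + sy**2 + C2)


def luminance_term(x, y):
    """SSIM brightness component from whole-frame means."""
    mx, my = np.mean(x), np.mean(y)
    return (2 * mx * my + C1) / (mx**2 + my**2 + C1)


rng = np.random.default_rng(0)
frame = rng.integers(40, 120, size=(48, 64)).astype(np.float64)
glare = frame + 100.0  # uniform brightness offset; float data, so nothing saturates

print(contrast_term(frame, glare) ** 3)  # stays at 1 up to rounding
print(luminance_term(frame, glare))      # noticeably below 1
```

A detector that also monitored the luminance term would register this offset, at the cost of reacting to ordinary lighting changes as well.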

Another important scenario to consider is when distorted frames appear consecutively rather than being isolated. Table 3 presents the values of the proposed contrast-based similarity measure in cases where adjacent frames are corrupted by Gaussian and random impulse noise. Frames 100, 101 and 102 have the same density of random impulse and Gaussian noise. The results indicate that the method is capable of detecting such frames, as the similarity values remain below the threshold. However, this observation does not imply that the proposed approach is universally effective for all types of noise when distortions occur in sequence. Nevertheless, it can be reliably stated that the proposed method will consistently detect the first distorted frame in a sequence, as its contrast with the preceding undistorted frame is always significant. It is also worth noting that the frame following the distorted one will have low similarity values, since it is very different from the distorted frame. In this case, frame 103 is not distorted, but has low S and SSIM values.

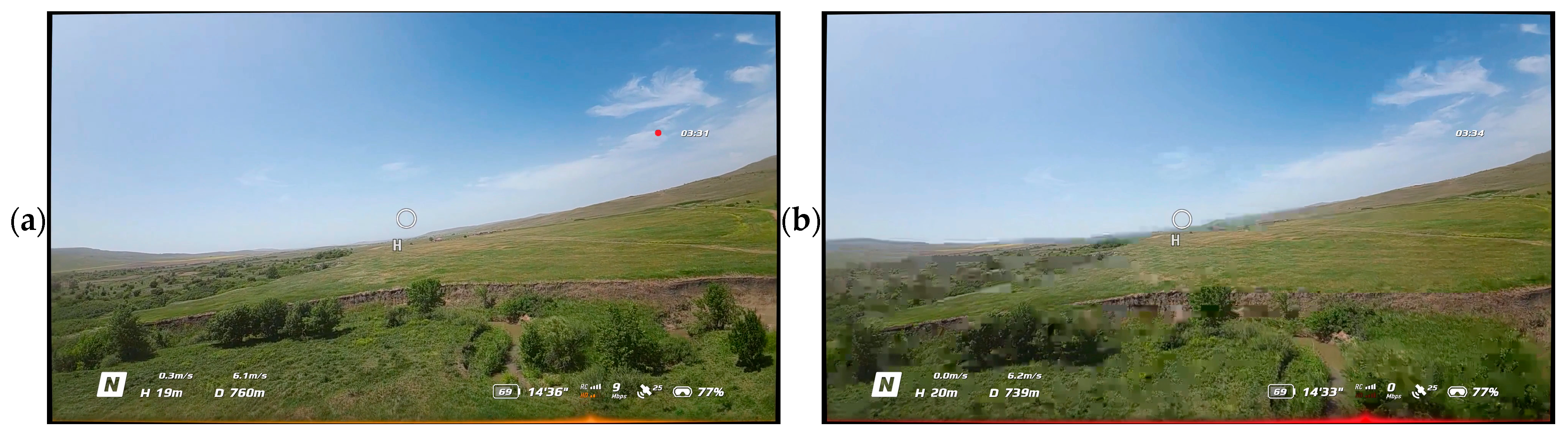

To test the robustness of the proposed method under conditions as close as possible to real UAV operation, an additional experiment was conducted. The test data consisted of video recorded from a DJI Avata UAV, which exhibited characteristic artifacts caused by signal loss: compression artifacts (blocking effect) and time delays (frame dependency and duplication). These distortions were not artificially simulated but occurred during a real flight, making them valuable for assessing the practical applicability of the method. Examples of video frames are shown in Figure 13.

Figure 14 presents the confusion matrix for the binary classification of video frames as “distorted” or “clean” using the proposed method with a threshold of T = 0.9. The values of the metrics Accuracy, Precision, Recall, and F1-Score are presented in Table 4.

Figure 15 shows the ROC curve for the proposed distortion detection method, with an AUC of 0.5106.

The application of the proposed method to real-world UAV footage exhibiting compression artifacts and lag revealed a fundamental limitation of the contrast-based approach. The classification results on this dataset (Accuracy = 0.47, Precision = 0.63, Recall = 0.07) demonstrate that the method is not effective for this class of distortions (Figure 14). The critically low Recall value of 0.07 indicates that the model fails to detect the vast majority of actual distorted frames, while the moderate Precision of 0.63 shows that even the few detections made are unreliable. This conclusion is further reinforced by the ROC-AUC value of 0.5106 (Figure 15), which is virtually equivalent to random guessing (AUC = 0.5). This statistically confirms that the proposed metric S possesses no meaningful discriminative power for this specific distortion type and cannot reliably separate corrupted and clean frames under compression artifacts. This outcome is analytically consistent: compression artifacts primarily degrade structural information and cause global frame shifts during lag, to which the contrast component of SSIM is inherently less sensitive compared to additive noise. Therefore, these results serve not to discredit the method but to precisely define its operational domain. They conclusively show that the proposed technique is a specialized tool for detecting noise corruption in high-integrity video streams, and is not suited for diagnosing bandwidth-related artifacts like compression or lag. This finding is of significant practical importance for system architects, as it clarifies that different distortion types in UAV video pipelines require distinct, specialized detection mechanisms.

5. Discussion

SSIM and VMAF were originally developed for other tasks, but their adaptation to noise detection yields good results. Although these metrics were created to assess visual quality from a human perspective, the underlying criteria they rely on, such as structural similarity, visibility, and spatial cues, are essentially objective representations of the data. SSIM analyzes local features of the pixel brightness distribution and their relationships; these are mathematical properties of the image, not abstract phenomena. Similarly, VMAF captures not only human perceptual patterns but also low-level features such as contrast and edge preservation. These parameters remain informative even when the “consumer” of the frames is an algorithm rather than a human observer.

The approach presented in [1], which employs correlation for detecting edits in videos by comparing frames, does not require high computational resources but has not demonstrated satisfactory results. The detection of distorted video frames using the method described in [11] is feasible only under conditions of very low influence of D in the video, making it practically ineffective. The VMAF [49] is capable of detecting distorted frames. However, because it is based on machine learning methods, certain segments of the video produce low measure values that do not reflect reality.

Among the methods considered in Section 4, the proposed method and SSIM [47] demonstrated the best performance, as evidenced by the numerical results in Table 1. The superiority of the proposed method is attributed to its focus on the contrast aspect, where the influence of N is most pronounced, as illustrated in Figure 2. Videos captured by unmanned vehicles typically do not include edits or superimposed graphics. Contrast in such videos generally falls within a narrow range of values, unlike brightness and structural elements. To apply the method, the proposed measure S (Formula (7)) is calculated for the video received from an unmanned vehicle, and each frame is judged distorted or not by comparing the obtained value with the threshold.

Although three types of noise are considered in the work, including random impulse, Gaussian and mixed, there are other types of distortions that can significantly affect the video received from unmanned vehicles. For example, compression artifacts, blurry images, as well as glare from light sources can decrease the quality of the video. These distortions are not considered in this article. Presumably, any type of distortion affects the structural similarity of images, including in the aspect of contrast. In the future, it is advisable to investigate the stability of the proposed method to such distortions and the ability to expand the method for a wider noise spectrum.

It should also be noted that the values of the proposed measure can be affected by the periodic activation of the built-in DVR algorithms (F). This indicates a possible sensitivity of the method to the specific equipment and firmware used in the system. At the same time, Figure 2 shows that such fluctuations are insignificant and in practice cannot be mistaken for noise. It is important to take into account that the results may vary depending on the DVR model. The level of influence of F is much less than the influence of even 1% of noise. This means that in practice, the influence of F can be neglected.

The fixed threshold of S < 0.9 was empirically determined through a comprehensive analysis of ROC curves and precision-recall trade-offs across our experimental datasets. This value demonstrated an optimal balance between detection sensitivity and false positive rates, achieving maximal F1-scores while maintaining practical applicability for real-time systems. However, the generalizability of this specific threshold value across different camera models and environmental conditions requires careful consideration. Variations in sensor characteristics, lens properties, automatic exposure adjustments, and native image processing pipelines between camera systems may systematically affect the absolute values of the contrast-based similarity measure. Similarly, environmental factors such as lighting conditions, weather, and scene dynamics could influence the baseline similarity between consecutive frames. Therefore, while the methodological approach remains universally applicable, the optimal threshold value may benefit from camera-specific calibration or adaptive adjustment based on operational conditions. Future work should explore adaptive thresholding strategies that dynamically adjust to changing environmental contexts and camera-specific characteristics to ensure robust performance across diverse UAV platforms and mission profiles.

While the present study does not include a detailed runtime analysis, the computational efficiency of the proposed method follows directly from its algorithmic simplicity. Using only the contrast component of SSIM eliminates the need to compute the luminance and structural components, reducing the number of required mathematical operations by approximately three times compared to the full SSIM version. This approach is particularly important for resource-constrained systems, where even a minor reduction in computational load can be critical for enabling real-time operation. A promising direction for future research is the precise quantitative evaluation of the method’s performance on various hardware platforms used in unmanned systems.

The proposed method may seem simple, but its value lies in adapting the classical SSIM to the specific task of noise detection in videos from unmanned vehicles. This allows achieving high accuracy at low computational cost, which is critical for embedded systems. The approaches and methods discussed in this paper are applicable to cameras used in unmanned ground, water, and aerial vehicles. Different types of unmanned vehicles have varying constraints on energy consumption and computational resources. The approaches from [12, 47] and the proposed method are suitable for unmanned vehicles with limited device dimensions. In contrast, employing the VMAF [48] in unmanned aerial vehicles would result in rapid battery depletion. Future research could focus on adaptive thresholding techniques that refine distortion detection, making it more effective for real-time applications in unmanned systems.