1. Introduction

Swallowing is a vital yet complex physiological function essential for safe nutrition and airway protection. It requires the tightly coordinated action of numerous muscles and neural pathways. Swallowing difficulties—collectively termed dysphagia—can arise from a range of causes, including age-related functional decline [

1], neurological disorders [

2], head and neck cancers and their treatments [

3], structural abnormalities, and iatrogenic complications following surgery. If left untreated, dysphagia can lead to serious consequences such as aspiration pneumonia, malnutrition, dehydration, and diminished quality of life [

4]. As such, early detection and objective assessment of swallowing impairments are critical for guiding appropriate clinical intervention.

When patients present with symptoms suggestive of dysphagia, they are typically referred to a speech-language pathologist for clinical evaluation. This usually begins with a case history and sensorimotor examination, followed by instrumental diagnostic tests. Several objective modalities are employed in clinical settings, including fiber-optic endoscopic evaluation of swallowing [

5], manometry [

6], and surface electromyography [

7]. However, the videofluoroscopic swallowing study (VFSS) remains the most widely adopted gold standard [

8]. In VFSS, patients swallow various barium-labeled boluses while X-ray imaging captures a dynamic video of the swallowing process. The resulting fluoroscopic frames enable clinicians to assess the movement and coordination of anatomical structures involved in swallowing and to identify potential abnormalities.

One of the critical aspects of VFSS analysis is bolus tracking, which enables clinicians to assess the safety and efficiency of swallowing [

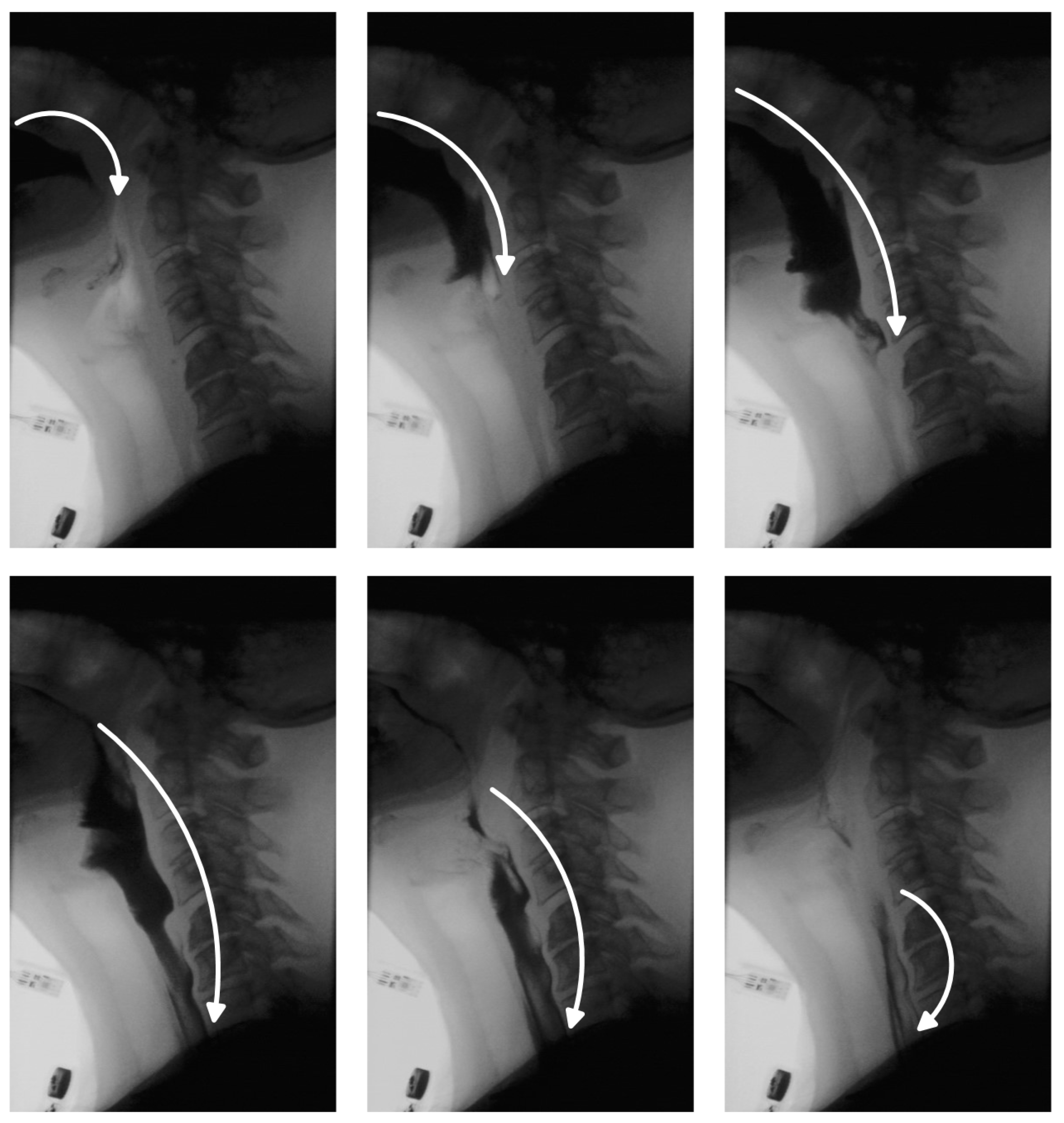

9]. In a healthy swallow, the bolus moves smoothly and continuously from the oral cavity to the esophagus and into the stomach (

Figure 1). In individuals with swallowing disorders, however, this movement may deviate from the normal trajectory, leading to complications. The bolus may be misdirected into the nasal cavity, fragment into smaller portions swallowed sequentially, or enter the pharynx while the airway is unprotected—raising the risk of aspiration pneumonia. In other cases, part of the bolus may remain in the oral or pharyngeal region, resulting in residue that can cause postprandial choking. While residue detection is as clinically important as bolus tracking, it has received comparatively less attention in research due to the inherent challenges in identifying small, low-contrast regions within complex anatomical backgrounds. Traditionally, clinicians manually review VFSS recordings, examining key frames to track bolus movement and identify residue. This process is time-consuming, susceptible to observer fatigue and variability, and requires specialized expertise, limiting scalability. Automated bolus tracking systems offer a promising alternative, providing more standardized and objective assessments. In addition to reducing manual workload, they facilitate quantification of important swallowing parameters, such as bolus transit time, penetration, and aspiration events.

In this study, we propose a machine learning-based approach for bolus segmentation and residue detection using a convolutional autoencoder trained exclusively on videofluoroscopy frames that do not contain bolus material. This design allows the model to learn the underlying anatomical background, enabling deviations—such as the presence of bolus—to be detected as anomalies during inference. By employing a customized loss function and incorporating a sufficient number of no-bolus frames during training, the model learns to differentiate bolus presence from other physiological movements occurring during swallowing, such as head motion or hyoid bone displacement. Consequently, the network selectively fails to reconstruct bolus regions during testing, while preserving reconstruction fidelity for normal anatomical features—an effect further reinforced through targeted postprocessing. Importantly, this approach requires only unlabeled no-bolus frames from diverse participants, promoting robust generalization across unseen subjects and eliminating the need for pixel-level annotations required by supervised methods.

To enhance segmentation quality, our model employs positional encoding as a strategy to capture global contextual information, addressing a fundamental limitation of convolutional neural networks, which primarily focus on local features [

10]. While transformer-based architectures are capable of capturing such global dependencies, they often demand extensive training data and incur high computational costs. By contrast, our approach leverages fixed sinusoidal encodings that inject spatial location into the input representation without requiring additional learnable parameters. Each pixel is augmented with its corresponding encoding, allowing the model to distinguish between visually similar features appearing in different parts of the image. This spatial sensitivity is especially beneficial in anomaly detection, where deviations from expected visual patterns are not solely based on appearance but also on spatial context. Comparative results demonstrate that the use of positional encoding improves spatial awareness in the reconstruction process, leading to fewer false positives in bolus segmentation.

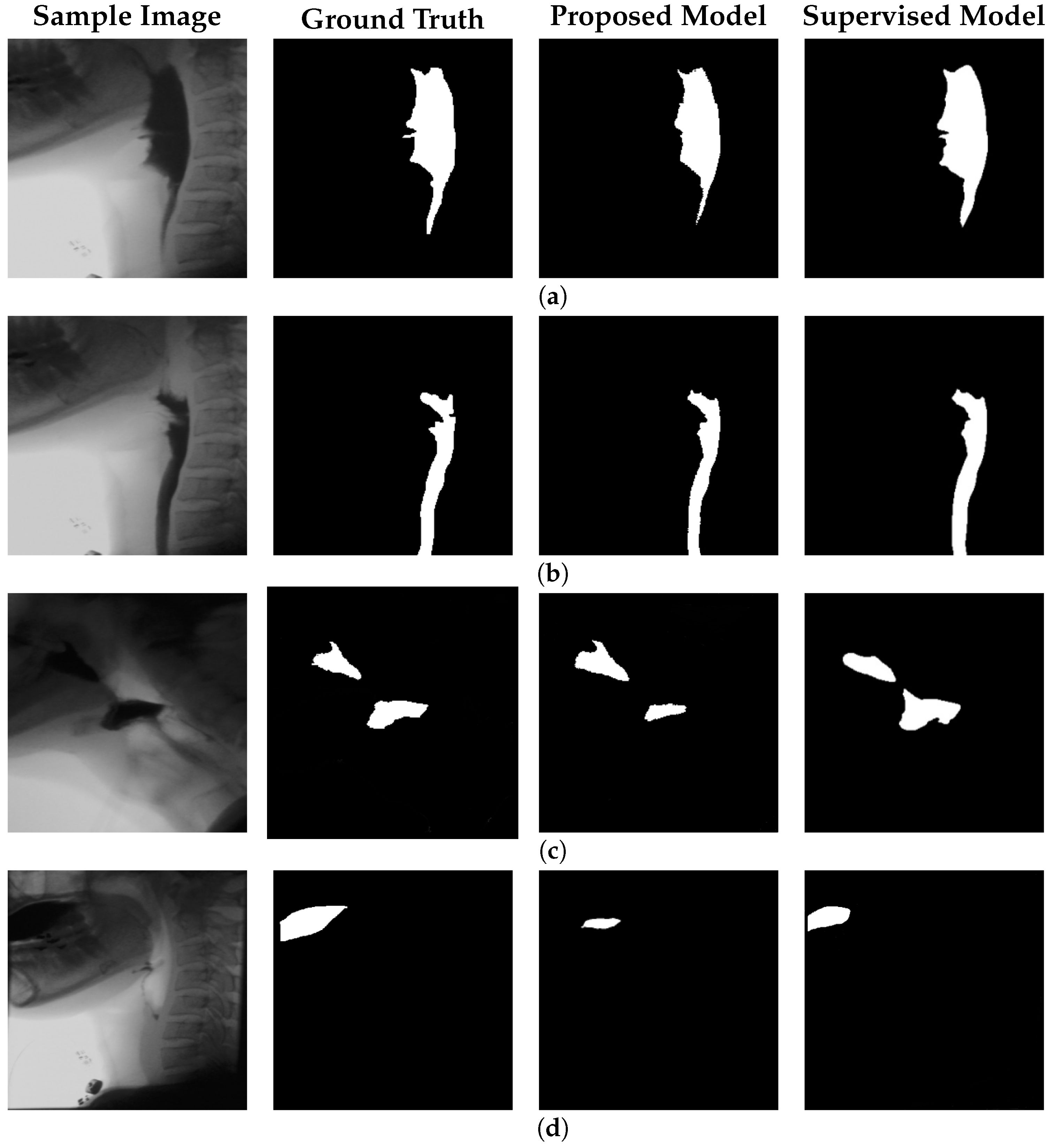

To evaluate model performance, we obtained a set of manually annotated images from speech-language pathologists indicating the locations of the bolus and bolus residue. The model’s output was quantitatively assessed using Intersection over Union (IoU) and Dice Similarity Coefficient (DSC), and benchmarked against both a fully supervised baseline and an identical unsupervised approach lacking global context integration. For bolus segmentation, our model achieves an IoU of 61% and a DSC of 72%, highlighting its ability to perform effectively with minimal annotation requirements. To ensure practical feasibility, we also report computational metrics, including inference speed and parameter count.

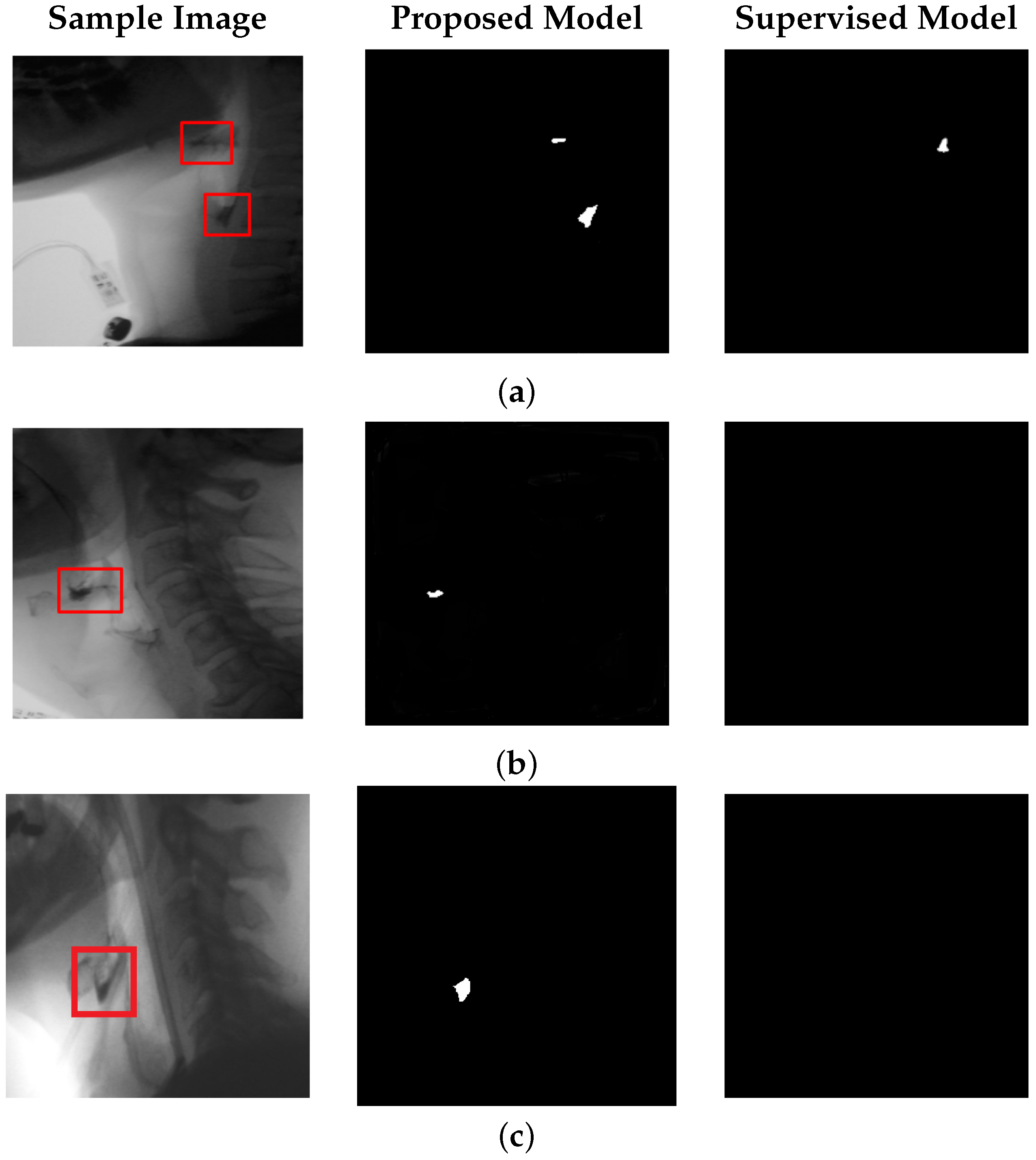

Beyond bolus localization, our model is also effective in detecting bolus residue. This is one of the first features assessed by speech-language pathologists during VFSS interpretation, as it may indicate impaired bolus propulsion, reduced pharyngeal contraction, or compromised airway protection mechanisms. To the best of our knowledge, no prior study in this area has reported quantitative results for automated residue segmentation on VFSS data. Residue regions are typically smaller in size and exhibit lower contrast relative to surrounding anatomy, making them difficult to detect. Despite these challenges, our model achieves an IoU of 52% and a DSC of 66% for residue segmentation, demonstrating its potential to fill this critical gap and support more efficient VFSS interpretation.

The main contributions of this work are as follows:

We introduce an unsupervised autoencoder-based framework for bolus segmentation and residue detection in VFSS, removing the need for pixel-level annotations.

We enhance reconstruction with positional encoding, which improves global contextual awareness and reduces false positives.

We provide the first quantitative evaluations of automated residue segmentation in VFSS, addressing a clinically important but underexplored task.

We benchmark against supervised and unsupervised baselines while also reporting computational efficiency, demonstrating both accuracy and practicality for clinical use.

2. Related Work

The application of machine learning to medical imaging has significantly advanced automated analysis by improving accuracy, efficiency, and reproducibility [

11,

12]. In the context of swallowing assessment, several works have explored VFSS analysis for bolus tracking and segmentation.

Caliskan et al. [

13] employed Mask R-CNN with a pretrained ResNet101 backbone, achieving a mean average precision of 0.49 and an IoU of 0.71 for bolus segmentation. Similarly, Li et al. [

14] evaluated U-Net [

15] and U-Net++ [

16] architectures, incorporating diverse encoder–decoder backbones such as MobileNetV2 [

17], VGGNet [

18], ResNet [

19], DenseNet [

20], Inception-ResNet [

21], and EfficientNet [

22]. These models were benchmarked on segmentation accuracy, computational complexity, and inference time. While these supervised methods achieved promising performance, they require extensive pixel-level annotations, which are labor-intensive and prone to inter-rater variability. Annotating segmentation masks is particularly challenging in VFSS due to low-contrast regions and ambiguous anatomical boundaries [

23].

To reduce annotation demands, Bandini et al. [

24] proposed a weakly supervised method for localizing the bolus during the pharyngeal phase using Grad-CAM with multiple pretrained backbones, including VGG16, InceptionV3, and ResNet50. The resulting activation maps were refined using thresholding and morphological operations. Although effective, this method still depends on labeled data and does not address bolus residue detection, which remains comparatively underexplored.

Beyond swallowing-specific applications, recent advances in image segmentation have been driven by transformer- and diffusion-based architectures. Transformer-based medical object detection has become one of the hot spots in this field [

25]; however, we did not find studies that applied transformers in an unsupervised way for segmentation. Diffusion models have also gained significant popularity, with several works exploring their application to medical image segmentation and reporting promising results [

26,

27,

28]. The main limitation of diffusion-based approaches is their requirement for very large training datasets, which poses a challenge in medical domains such as swallowing assessment where publicly available datasets are scarce and access to sufficiently large collections is difficult.

3. Materials and Methods

3.1. Dataset

The dataset used in this study comprises videofluoroscopic swallowing sequences collected from human participants, including both healthy individuals and those with swallowing difficulties. Each subject was instructed to swallow a specified bolus in a single attempt; however, some performed two to three swallows within the same recording, separated by short intervals. No postprocessing (e.g., denoising or frame interpolation) was applied. Instead, each sequence was decomposed into individual frames to enable frame-level analysis.

For model training, 10,000 bolus-free frames were extracted from 37 participants (mean age: 62.8 ± 10.8 years; 15 males, 22 females). To evaluate generalizability, an independent set of 305 frames from ten previously unseen participants (mean age: 31.6 ± 7.1 years; 4 males, 6 females) was used for validation and testing. This subject-wise split was designed to assess the model’s performance across anatomically diverse cases and reduce the risk of overfitting. The test set included both bolus-present and bolus-absent frames to ensure the model detects bolus regions accurately while avoiding false-positive predictions in bolus-free frames. The study protocol was approved by the University of Pittsburgh Institutional Review Board (IRB #12080498), and written informed consent was obtained from all participants.

3.2. Methodology

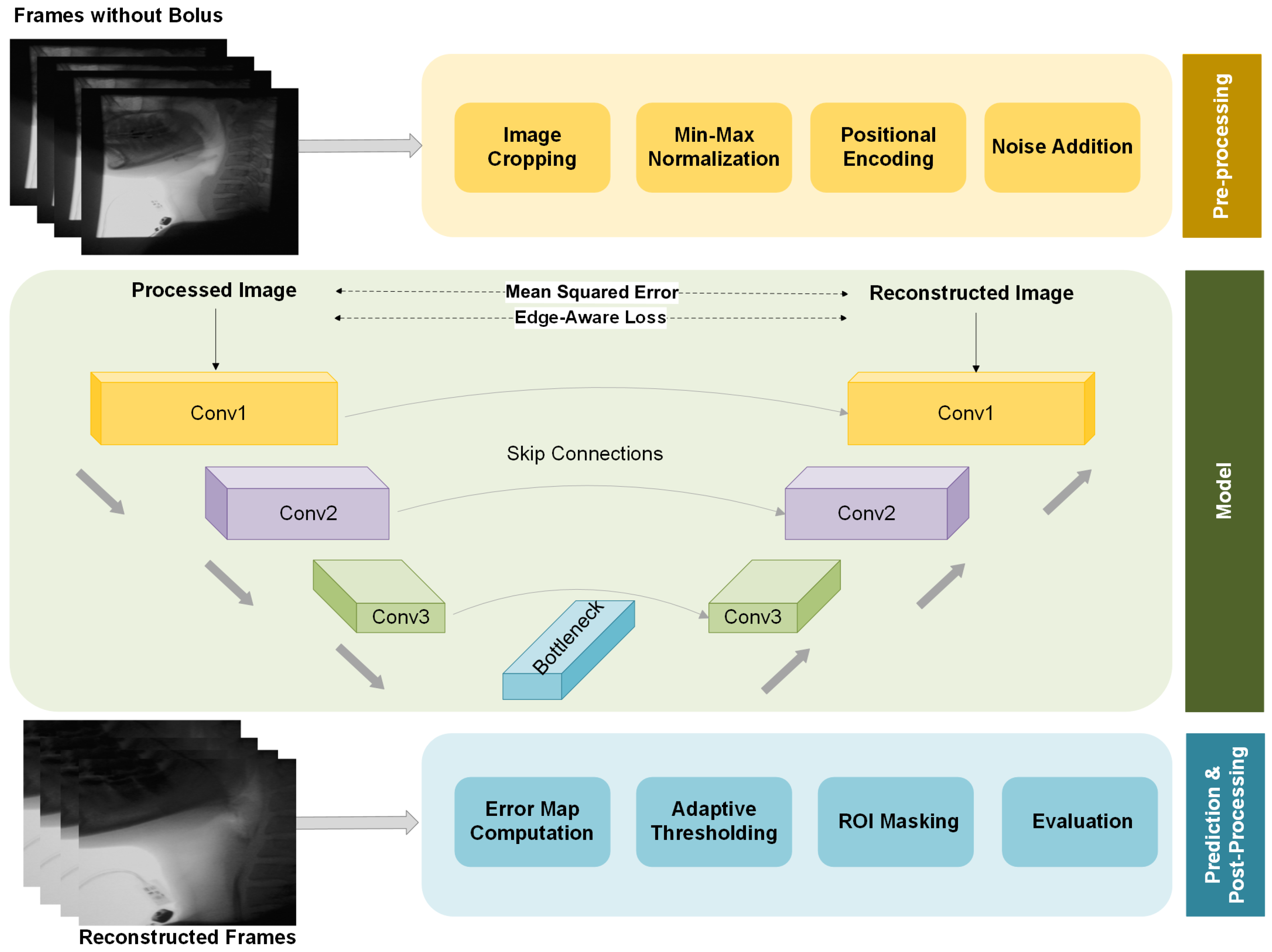

The overall structure of our proposed framework, including preprocessing, model architecture, and postprocessing, is illustrated in

Figure 2. We provide a more detailed explanation of each component in the following sections.

3.3. Preprocessing

Prior to training, the images undergo several preprocessing steps. First, black boundary regions are cropped to remove irrelevant areas and ensure that the model focuses on anatomical structures. This step also helps eliminate any private information, such as participant names or identifying details that might be present in the frame borders, addressing privacy concerns. Additionally, it prevents the model from learning unintended priors from extraneous information. The images are then resized to 256 × 256 pixels for consistency across the dataset. To minimize variations caused by differences in imaging conditions, min-max normalization is applied, scaling pixel intensities to a consistent range across frames. This helps stabilize brightness and contrast, enhancing the model’s robustness to variations in imaging quality.

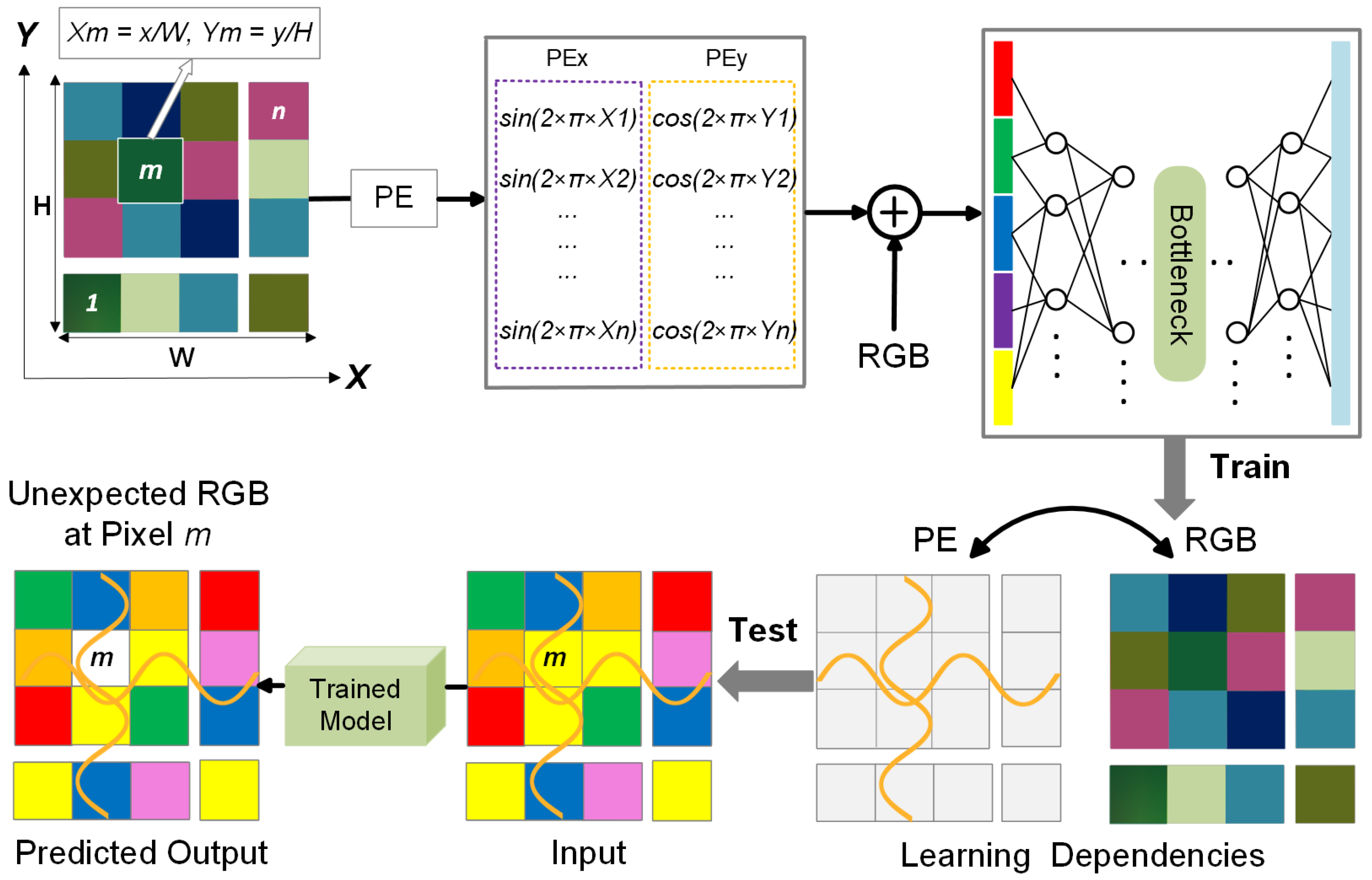

A key enhancement in our preprocessing strategy is the integration of positional encoding, which provides global spatial context to the model. While our approach is built upon a CNN-based autoencoder, standard CNN architectures primarily focus on local features, limiting their ability to distinguish between spatially similar but contextually different regions. During preliminary experiments without positional encoding, we observed that the model was partially able to reconstruct the bolus, even though bolus-containing frames were not used during training. This unintended reconstruction likely stemmed from similarities in color, texture, or other visual features between the bolus and certain anatomical regions in the training set. As a result, the model did not consistently treat the bolus as an anomaly during inference. To address this, we incorporated positional encoding into the input representation, allowing the model to learn location-dependent feature distributions. This addition enhances spatial awareness, helping the model more accurately identify the bolus as a spatially inconsistent structure and reducing false negatives in segmentation.

Positional encoding is computed using sinusoidal and cosine functions over the spatial dimensions:

where

H and

W denote the image height and width, respectively. The resulting encoding values are concatenated as additional input channels alongside the grayscale fluoroscopic image, enabling the model to better capture spatial relationships and location-dependent patterns. A schematic visualization is provided in

Figure 3, illustrating how positional encoding assists in identifying anomalies.

When training an autoencoder for reconstruction-based anomaly detection on VFSS frames, the input to the encoder is one of the following:

RGB only: ;

RGB + positional encoding (PE): , where

We compare two models:

Each model is trained to minimize the reconstruction loss over the dataset

, defined as follows:

where

denotes the trainable parameters of the model, and

represents the original RGB intensity at pixel location

.

We model the true RGB distribution at a given pixel location

as a random variable:

Here, denotes the expected RGB value at location , and represents the covariance of RGB values observed across the training dataset at that location. These parameters characterize the spatially localized appearance statistics of the background anatomy.

We consider two autoencoder models, each parameterized by , and trained to minimize the reconstruction loss:

Model A (No PE):

, which learns a

global distribution:

where

is the global mean RGB vector computed by averaging across all spatial locations in the training set. Since no spatial information is provided, the model attempts to reconstruct based solely on global appearance statistics.

Model B (With PE):

, which learns a

location-conditioned distribution:

where

is the expected RGB value at position

, estimated from the training data. By incorporating positional encoding, this model can learn spatially dependent RGB priors, enabling it to distinguish between anatomically similar features appearing in different locations.

At inference time, we assume a test frame contains an anomaly—such as bolus material—at a specific spatial location

, where such a pattern was never observed during training. We model this as follows:

Here, represents a significant deviation from the learned mean appearance at that location, indicating a potential anomaly.

For clarity, we express the reconstruction error using the mean squared error (MSE) formulation, though the underlying reasoning generalizes to other reconstruction losses, including the hybrid objective used in practice.

We evaluate how each model responds to this perturbation:

The model expects at this specific location, and reconstruction fails proportionally to the deviation .

Since the model lacks spatial information, it learns to reconstruct based on a global average , which aggregates feature statistics across the entire training set. If resembles values seen elsewhere in the training data, the model may still reconstruct it with low error—failing to recognize it as anomalous. As a result, the reconstruction error becomes inconsistent and depends on how much the local anomaly deviates from the global distribution.

Thus, only the PE-enabled model is able to assign a high reconstruction error to spatially inconsistent input, enabling more reliable anomaly detection at test time.

3.4. Autoencoder Architecture

The proposed model is a convolutional autoencoder designed to reconstruct fluoroscopic images while capturing their underlying structural patterns. It adopts an encoder–decoder architecture, where the encoder extracts a compressed representation of the input, and the decoder reconstructs the original image. The reconstruction quality serves as an indicator of structural deviation, enabling the identification of unseen elements such as bolus or residue that differ from the learned background.

The encoder consists of three convolutional blocks: the first applies a 2D convolution with 64 filters of size , followed by batch normalization, ReLU activation, and a dropout layer (rate = 0.1). Subsequent layers use 128 and 256 filters (kernel size ) with batch normalization and ReLU activation. Each block is followed by max-pooling to reduce spatial resolution and promote hierarchical feature abstraction.

The decoder mirrors this design, employing transpose convolutions for upsampling. Skip connections are included between encoder and decoder layers at matching resolutions, ensuring that fine-grained spatial details are preserved during reconstruction. Each decoded feature map undergoes batch normalization and ReLU activation to enhance stability and nonlinearity. Finally, a convolution with 3 output channels and sigmoid activation generates the reconstructed frame.

This architecture balances efficiency and accuracy: max-pooling layers capture high-level abstractions, skip connections mitigate information loss, and dropout enhances generalization. Together, these design choices ensure that reconstructed boundaries remain sharp and clinically relevant rather than overly smoothed.

3.5. Training Strategy

To effectively train the model, we use a dataset of 10,000 fluoroscopic frames. All selected frames exclude the bolus, enabling the model to learn the natural background structure of the swallowing anatomy. To ensure the model captures an accurate representation of the background while preserving critical anatomical details, we define a hybrid loss function that combines two components:

To promote generalization, training is conducted on fluoroscopic frames collected from a diverse cohort of participants, allowing the model to capture anatomical and imaging variability. To further improve robustness, we apply light Gaussian noise augmentation during training. This encourages reliance on structural and spatial cues rather than memorization of fine-grained intensity patterns. By perturbing local pixel details without altering global structure, this augmentation discourages over-reliance on specific image regions and fosters a more comprehensive understanding of background characteristics.

Model training was performed using the Adam optimizer with an initial learning rate of 0.01. This relatively large starting value was chosen empirically to enable rapid convergence in the early training stages, given the small size of the dataset and the lightweight nature of the network. To prevent instability, a learning rate scheduler (ReduceLROnPlateau) was applied: if the validation loss did not improve for 10 consecutive epochs, the learning rate was automatically reduced by a factor of 0.5. This adaptive adjustment allowed fine-grained updates in later epochs, ensuring continued convergence without premature stagnation. Training was conducted with a batch size of 16 for 100 epochs. No weight decay was applied, and the Adam momentum parameters were left at their default values (, ). Early stopping was not enforced; instead, the final model was selected based on the lowest loss observed during training.

Once trained, the model learns to reconstruct only the background features encountered during training. At inference, the presence of unseen structures—such as the bolus or residue—produces reconstruction errors, which are leveraged for segmentation. These deviations are quantified using a pixel-wise error map, computed as the absolute difference between the input frame and its reconstruction. To highlight anomalous regions, an adaptive thresholding strategy is applied, wherein the threshold is dynamically determined based on the distribution of reconstruction errors. In practice, we validated several thresholding strategies and found that using the mean reconstruction error, identified through a 10% validation subset of the test data, provided the most consistent performance. This yields a binary segmentation map in which high-error regions—corresponding to unrecognized structures—are emphasized while well-reconstructed areas are suppressed. Finally, a region-of-interest (ROI) mask is applied to restrict analysis to anatomically relevant areas, defined based on anatomical landmarks and guided by speech-language pathology expertise in swallowing physiology. Specifically, the left, right, and upper borders of each frame were excluded, as VFSS protocols introduce gaps between these borders and the participant’s head and neck.

3.6. Model Evaluation

To evaluate the performance of the proposed framework, we used a dataset comprising 305 VFSS frames, including 250 frames containing bolus and 55 frames containing bolus residue, collected from individuals representing both healthy and dysphagic subjects. The model detects both bolus and residue using the same reconstruction-based mechanism, without requiring architectural modifications or task-specific tuning. Ground truth segmentation masks, validated by certified speech-language pathologists, were compared against the binary segmentation outputs produced by the model. Performance was quantitatively assessed using several evaluation metrics, including accuracy, sensitivity, specificity, IoU, and DSC:

Accuracy measures the overall correctness of the segmentation by computing the proportion of correctly classified pixels:

Sensitivity quantifies the model’s ability to correctly identify bolus regions:

Specificity evaluates how well the model distinguishes non-bolus regions from the background:

IoU measures the overlap between the predicted bolus region and the ground truth:

DSC provides a balanced measure of segmentation performance by emphasizing both precision and recall:

While accuracy offers a general measure of correctness, it can be misleading in the presence of class imbalance, particularly due to the predominance of background (black) pixels in the segmentation masks. In such cases, IoU and DSC provide more informative metrics, as they offer a balanced evaluation of both foreground and background regions.

In addition to segmentation accuracy, we also assess the computational efficiency of the proposed framework. A lightweight model with a reduced parameter count and lower computational complexity is desirable for clinical settings, enabling fast and cost-effective inference without reliance on high-performance hardware. To evaluate the feasibility of real-time deployment, we report key efficiency metrics, including the number of trainable parameters, floating point operations (FLOPs), and average inference time.

6. Discussion

This study demonstrates that segmentation of both bolus and bolus residue in VFSS recordings can be effectively achieved through an unsupervised learning approach, without relying on pixel-level annotations. By leveraging anomaly detection via a CNN-based autoencoder trained exclusively on non-bolus frames, the model learns to reconstruct normative swallowing anatomy while treating unobserved structures, such as the bolus, as spatial anomalies during inference. This enables effective localization using reconstruction error maps, eliminating the need for manual mask annotations while maintaining strong segmentation performance.

A notable strength of this framework is its clinical scalability: it was trained using diverse frames drawn from participants with varying swallowing profiles, and required no segmentation labels—making it easier to extend to larger or more heterogeneous datasets. However, a key challenge in this type of anomaly detection is ensuring that typical anatomical movements, such as hyoid excursion or epiglottic inversion, are not falsely flagged as anomalies. To mitigate this risk, we incorporated a diverse training distribution and applied an ROI mask during postprocessing to constrain the model’s focus to the pharyngeal area, thereby reducing false positives caused by background artifacts or unrelated motion.

To further enhance spatial sensitivity, positional encoding was incorporated as an additional input channel. This mechanism provides a consistent spatial reference for the model, complementing the local feature extraction capabilities of the CNN architecture. While this addition improves detection fidelity by anchoring features to specific regions, it introduces sensitivity to geometric transformations, such as rotation or flipping. However, because the training data was sufficiently diverse, we observed that moderate variations—such as changes in head tilt, or swallowing posture-did not adversely affect performance.

In practice, the potential limitations introduced by positional encoding can be analyzed in two scenarios. In the first case, the trained model is applied to data collected within the same medical center or any center with similar imaging protocols, field of view, and patient positioning. Here, the positional encoding serves as a reliable spatial guide, and the model can generalize well to new patients in all these medical centers. It is worth noting that anatomical framing and acquisition protocols are generally similar across centers, though not always identical. In the second scenario, the model’s generalizability will be affected if deployment occurs in a center with significantly different imaging setups or acquisition protocols. Nevertheless, the same unsupervised training procedure can be repeated locally to adapt the model. Since our method does not rely on manual annotations and employs a lightweight architecture, retraining is both efficient and practical. Therefore, while positional encoding may initially appear to limit generalizability, it can in fact be easily recalibrated, enabling the approach to adapt flexibly across diverse clinical environments.

Our proposed model achieves performance in bolus segmentation that is comparable to a well-established supervised approach, but it drastically outperforms the supervised method in bolus residue detection. This improved residue detection suggests that learning from negative space—rather than relying on sparse or potentially biased residue annotations—may provide a more generalizable and robust pathway for detecting underrepresented clinical phenomena. This insight highlights the potential of label-free, anomaly-based frameworks for detecting subtle diagnostic markers in VFSS and may extend to other medical imaging domains where annotated data are limited or unreliable.

One notable advantage of the proposed model is its computational efficiency. With only 1.71 million parameters and 0.0034 GFLOPs, it is substantially smaller and faster than conventional supervised models. Its short inference time supports real-time deployment, making it suitable for integration into existing imaging systems, including portable platforms and clinical infrastructure. This efficiency reduces computational overhead, streamlines workflow, and improves patient throughput—key advantages in high-demand settings such as radiology and swallowing disorder clinics.

Several avenues exist for future research. The current segmentation output could be extended to derive additional clinically relevant metrics, such as bolus transition duration and speed, which may assist in detecting abnormal transit patterns indicative of neuromuscular disorders, esophageal motility issues, or neurodegenerative conditions. Future work could also address aspiration events, including segmentation of aspirated bolus and estimation of its volume to support risk assessment and severity grading. Beyond bolus segmentation, this anomaly-based framework can potentially be generalized to other medical image segmentation tasks by redefining the target structure as an anomaly relative to normative anatomy. By integrating unsupervised learning principles, spatial encoding, and domain-specific ROI constraints, this approach offers a promising direction for achieving reliable segmentation performance without the need for extensive manual annotation. With appropriate modifications, the core methodology may also be adapted to non-medical applications, broadening its potential impact.