Surgical Instrument Segmentation via Segment-Then-Classify Framework with Instance-Level Spatiotemporal Consistency Modeling

Abstract

1. Introduction

- (1) We propose a segment-then-classify framework that decouples segmentation and classification, improving the spatial completeness and temporal stability of surgical instrument segmentation.

- (2) We introduce a bounding box-guided temporal modeling strategy that combines spatial priors with semantic region features to enhance instance-level classification consistency.

- (3) We achieve state-of-the-art performance on the EndoVis 2017 and 2018 datasets, demonstrating the effectiveness and interpretability of the proposed framework.

2. Related Work

2.1. Surgical Instrument Segmentation

2.2. Temporal Modeling and Segment-Then-Classify Frameworks

3. Materials and Methods

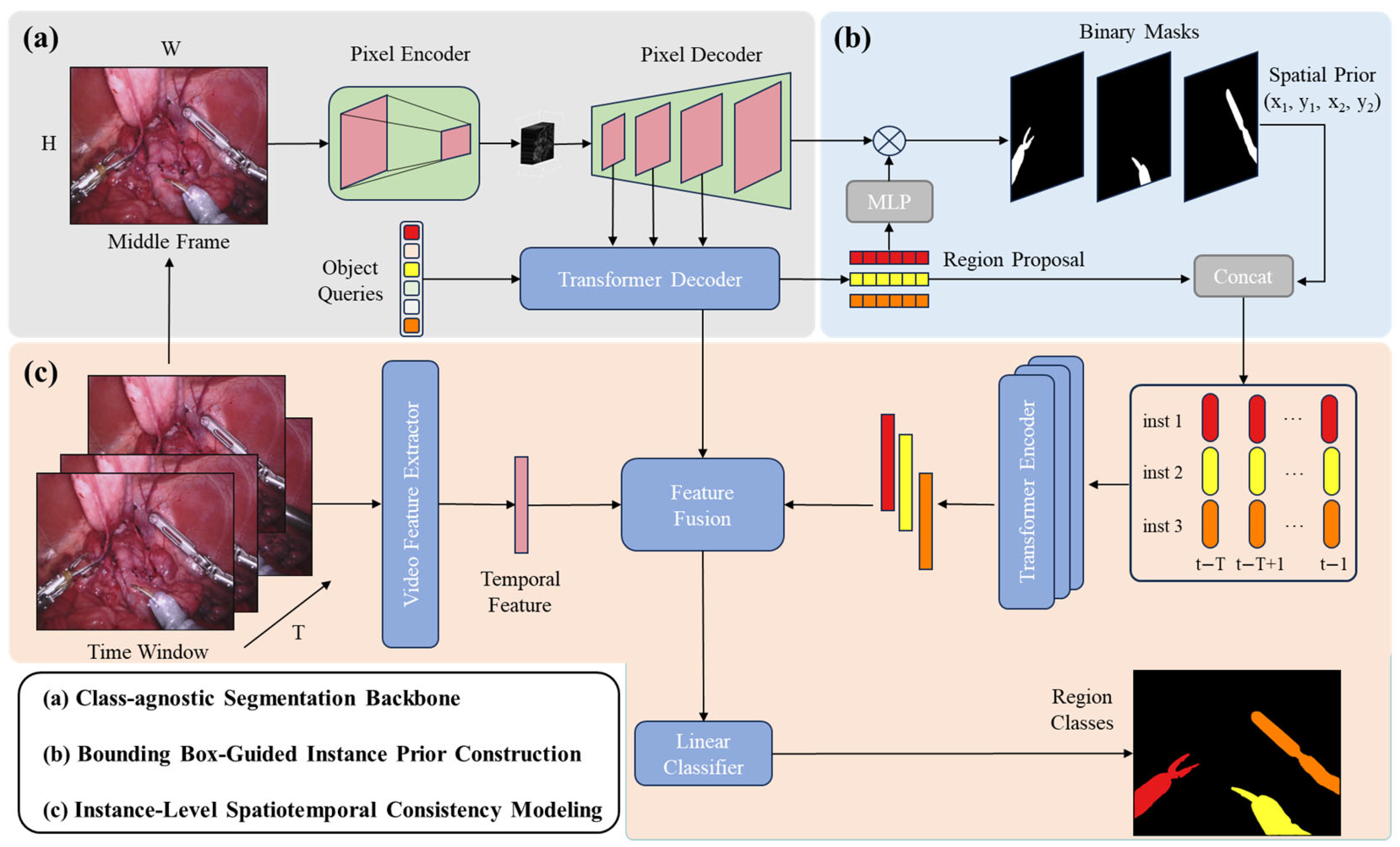

3.1. Overview

- (1) a class-agnostic segmentation backbone based on Mask2Former to generate instance masks and corresponding region proposal features;

- (2) a bounding box-guided instance prior construction module to combine spatial priors and semantic region features;

- (3) an instance-level spatiotemporal consistency modeling module that employs a transformer encoder to capture temporal relationships among instances across consecutive frames.
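How modules (2) and (3) fit together can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the authors' implementation. It assumes that instance masks are binary grids, that region proposal features are plain float vectors, that the spatial prior is the tight bounding box normalized by image size, and that fusion is simple concatenation. The temporal module is reduced to a single head of scaled dot-product self-attention with identity projections; a real transformer encoder would add learned query/key/value projections, multiple heads, feed-forward layers, residual connections, and normalization. The names `mask_to_box`, `instance_token`, and `self_attention` are illustrative only.

```python
import math
from typing import List, Sequence, Tuple

Mask = List[List[int]]  # binary instance mask: rows of 0/1 pixels


def mask_to_box(mask: Mask) -> Tuple[float, float, float, float]:
    """Tight box (x_min, y_min, x_max, y_max) around the mask, normalized to [0, 1]."""
    h, w = len(mask), len(mask[0])
    coords = [(x, y) for y in range(h) for x in range(w) if mask[y][x]]
    if not coords:
        return (0.0, 0.0, 0.0, 0.0)  # empty mask: no spatial prior available
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    # +1 on the far edges so that a single pixel still has a non-zero extent
    return (min(xs) / w, min(ys) / h, (max(xs) + 1) / w, (max(ys) + 1) / h)


def instance_token(mask: Mask, region_feature: Sequence[float]) -> List[float]:
    """Hypothetical fusion: concatenate the box prior with the region feature."""
    return list(mask_to_box(mask)) + list(region_feature)


def self_attention(tokens: List[List[float]]) -> List[List[float]]:
    """Single-head scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]  # numerically stable softmax
        total = sum(exps)
        weights = [e / total for e in exps]
        # each output token is an attention-weighted mix of all instance tokens
        out.append([sum(wt * v[i] for wt, v in zip(weights, tokens)) for i in range(d)])
    return out


# Two consecutive frames with one instrument instance each (toy 4x4 masks).
frame_t = [[0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
frame_t1 = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 0, 0], [0, 0, 0, 0]]
tokens = [instance_token(frame_t, [0.5, 0.1]), instance_token(frame_t1, [0.4, 0.2])]
fused = self_attention(tokens)  # temporally contextualized instance tokens
```

Because every instance token attends to the instance tokens of all frames in the window, a token whose own appearance is ambiguous can draw on similar tokens from neighboring frames; a per-instance classifier (not shown) would then predict the category from `fused`.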

3.2. Class-Agnostic Segmentation Backbone

3.3. Bounding Box-Guided Instance Prior Construction

3.4. Instance-Level Spatiotemporal Consistency Modeling

3.5. Implementation Details

4. Results

4.1. Datasets

4.2. Main Results

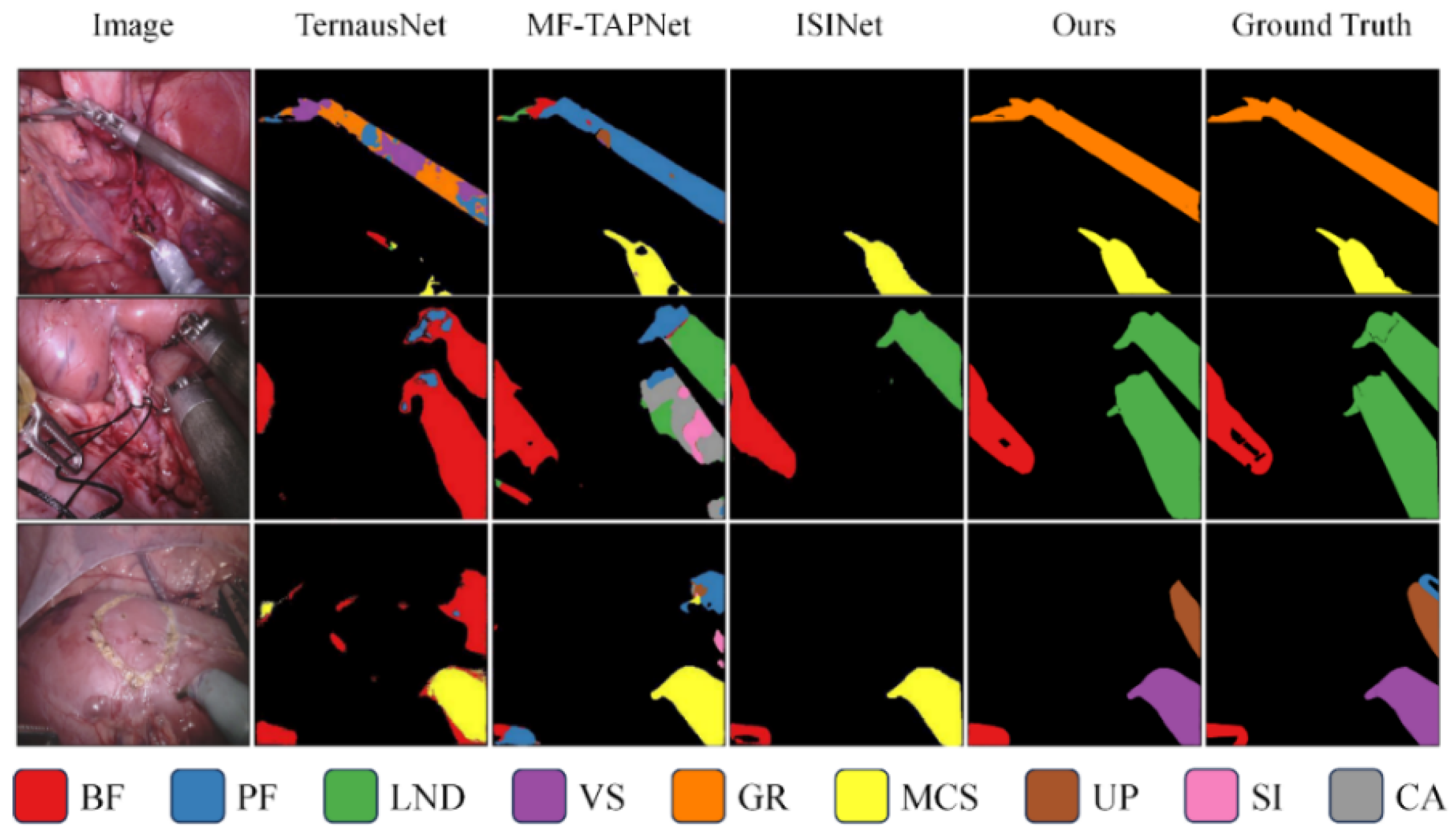

4.3. Qualitative Results

4.4. Ablation Experiment

5. Discussion

Practical Managerial Significance

6. Open Research Questions and Future Directions

- (1) Long-term temporal modeling: How can transformer-based structures efficiently capture dependencies over entire surgical procedures without excessive computation?

- (2) Generalization to unseen tools: Can the model adapt to novel instruments or surgical scenes without retraining, possibly through domain adaptation or few-shot learning?

- (3) Multi-modal integration: How can visual segmentation be combined with kinematic or force-sensing data to improve scene understanding?

- (4) Real-time deployment: What architectural or hardware-level optimizations are required to deploy the framework in real robotic surgery environments?

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Biondi, A.; Di Stefano, C.; Ferrara, F.; Bellia, A.; Vacante, M.; Piazza, L. Laparoscopic versus open appendectomy: A retrospective cohort study assessing outcomes and cost-effectiveness. World J. Emerg. Surg. 2016, 11, 44.

2. Antoniou, S.A.; Antoniou, G.A.; Koch, O.O.; Pointner, R.; Granderath, F.A. Meta-analysis of laparoscopic vs open cholecystectomy in elderly patients. World J. Gastroenterol. WJG 2014, 20, 17626.

3. Chen, Q.; Merath, K.; Bagante, F.; Akgul, O.; Dillhoff, M.; Cloyd, J.; Pawlik, T.M. A comparison of open and minimally invasive surgery for hepatic and pancreatic resections among the medicare population. J. Gastrointest. Surg. 2018, 22, 2088–2096.

4. Haidegger, T.; Speidel, S.; Stoyanov, D.; Satava, R.M. Robot-assisted minimally invasive surgery—Surgical robotics in the data age. Proc. IEEE 2022, 110, 835–846.

5. Maier-Hein, L.; Eisenmann, M.; Sarikaya, D.; März, K.; Collins, T.; Malpani, A.; Fallert, J.; Feussner, H.; Giannarou, S.; Mascagni, P.; et al. Surgical data science–from concepts toward clinical translation. Med. Image Anal. 2022, 76, 102306.

6. Zia, A.; Essa, I. Automated surgical skill assessment in RMIS training. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 731–739.

7. Twinanda, A.P.; Shehata, S.; Mutter, D.; Marescaux, J.; de Mathelin, M.; Padoy, N. EndoNet: A deep architecture for recognition tasks on laparoscopic videos. IEEE Trans. Med. Imaging 2016, 36, 86–97.

8. Long, Y.; Li, Z.; Yee, C.H.; Ng, C.F.; Taylor, R.H.; Unberath, M.; Dou, Q. E-DSSR: Efficient dynamic surgical scene reconstruction with transformer-based stereoscopic depth perception. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part IV 24. Springer International Publishing: Cham, Switzerland, 2021; pp. 415–425.

9. Casella, A.; Moccia, S.; Paladini, D.; Frontoni, E.; De Momi, E.; Mattos, L.S. A shape-constraint adversarial framework with instance-normalized spatio-temporal features for inter-fetal membrane segmentation. Med. Image Anal. 2021, 70, 102008.

10. Bano, S.; Vasconcelos, F.; Tella-Amo, M.; Dwyer, G.; Gruijthuijsen, C.; Poorten, E.V.; Vercauteren, T.; Ourselin, S.; Deprest, J.; Stoyanov, D. Deep learning-based fetoscopic mosaicking for field-of-view expansion. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1807–1816.

11. Bouget, D.; Benenson, R.; Omran, M.; Riffaud, L.; Schiele, B.; Jannin, P. Detecting surgical tools by modelling local appearance and global shape. IEEE Trans. Med. Imaging 2015, 34, 2603–2617.

12. Rieke, N.; Tan, D.J.; di San Filippo, C.A.; Tombari, F.; Alsheakhali, M.; Belagiannis, V.; Eslami, A.; Navab, N. Real-time localization of articulated surgical instruments in retinal microsurgery. Med. Image Anal. 2016, 34, 82–100.

13. Garcia-Peraza-Herrera, L.C.; Li, W.; Fidon, L.; Gruijthuijsen, C.; Devreker, A.; Attilakos, G.; Deprest, J.; Poorten, E.V.; Stoyanov, D.; Vercauteren, T.; et al. ToolNet: Holistically-nested real-time segmentation of robotic surgical tools. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; IEEE: New York, NY, USA, 2017; pp. 5717–5722.

14. Laina, I.; Rieke, N.; Rupprecht, C.; Vizcaíno, J.P.; Eslami, A.; Tombari, F.; Navab, N. Concurrent segmentation and localization for tracking of surgical instruments. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2017: 20th International Conference, Quebec City, QC, Canada, 11–13 September 2017; Proceedings, Part II 20. Springer International Publishing: Cham, Switzerland, 2017; pp. 664–672.

15. Milletari, F.; Rieke, N.; Baust, M.; Esposito, M.; Navab, N. CFCM: Segmentation via coarse to fine context memory. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2018: 21st International Conference, Granada, Spain, 16–20 September 2018; Proceedings, Part IV 11. Springer International Publishing: Cham, Switzerland, 2018; pp. 667–674.

16. Ayobi, N.; Pérez-Rondón, A.; Rodríguez, S.; Arbeláez, P. MATIS: Masked-attention transformers for surgical instrument segmentation. In Proceedings of the 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI), Cartagena, Colombia, 18–21 April 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

17. Fan, H.; Xiong, B.; Mangalam, K.; Li, Y.; Yan, Z.; Malik, J.; Feichtenhofer, C. Multiscale vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 6824–6835.

18. Shvets, A.A.; Rakhlin, A.; Kalinin, A.A.; Iglovikov, V.I. Automatic Instrument Segmentation in Robot-Assisted Surgery Using Deep Learning. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications, Orlando, FL, USA, 17–20 December 2018.

19. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In MICCAI; Springer International Publishing: Cham, Switzerland, 2015.

20. González, C.; Bravo-Sánchez, L.; Arbelaez, P. ISINet: An instance-based approach for surgical instrument segmentation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; Proceedings, Part III; Springer International Publishing: Cham, Switzerland, 2020; pp. 595–605.

21. Song, M.; Zhai, C.; Yang, L.; Liu, Y.; Bian, G. An attention-guided multi-scale fusion network for surgical instrument segmentation. Biomed. Signal Process. Control 2025, 102, 107296.

22. Matasyoh, N.M.; Mathis-Ullrich, F.; Zeineldin, R.A. Samsurg: Surgical Instrument Segmentation in Robotic Surgeries Using Vision Foundation Model; IEEE Access: Cambridge, MA, USA, 2024.

23. Jin, Y.; Cheng, K.; Dou, Q.; Heng, P.A. Incorporating temporal prior from motion flow for instrument segmentation in minimally invasive surgery video. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2019: 22nd International Conference, Shenzhen, China, 13–17 October 2019; Proceedings, Part V 22. Springer International Publishing: Cham, Switzerland, 2019; pp. 440–448.

24. Zhao, Z.; Jin, Y.; Heng, P.-A. TraSeTr: Track-to-segment transformer with contrastive query for instance-level instrument segmentation in robotic surgery. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022.

25. Allan, M.; Kondo, S.; Bodenstedt, S.; Leger, S.; Kadkhodamohammadi, R.; Luengo, I.; Fuentes, F.; Flouty, E.; Mohammed, A.; Pedersen, M.; et al. 2018 robotic scene segmentation challenge. arXiv 2020, arXiv:2001.11190.

26. Kurmann, T.; Márquez-Neila, P.; Allan, M.; Wolf, S.; Sznitman, R. Mask then classify: Multi-instance segmentation for surgical instruments. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1227–1236.

| Dataset | Method | mIoU | IoU | mcIoU | BF | PF | LND | VS/SI | GR/CA | MCS | UP |
|---|---|---|---|---|---|---|---|---|---|---|---|
| EndoVis 2017 | TernausNet | 35.27 | 12.67 | 10.17 | 13.45 | 12.39 | 20.51 | 5.97 | 1.08 | 1.00 | 16.76 |
| | MF-TAPNet | 37.25 | 13.49 | 10.77 | 16.39 | 14.11 | 19.01 | 8.11 | 0.31 | 4.09 | 13.40 |
| | Dual-MF | 45.80 | - | 26.40 | 34.40 | 21.50 | 64.30 | 24.10 | 0.8 | 17.90 | 21.80 |
| | ISINet | 55.62 | 52.20 | 28.96 | 38.70 | 38.50 | 50.09 | 27.43 | 2.1 | 28.72 | 12.56 |
| | TraSeTr | 60.40 | - | 32.56 | 45.20 | 56.70 | 55.8 | 38.90 | 11.40 | 31.30 | 18.20 |
| | Kurmann et al. [26] | 65.70 | - | - | - | - | - | - | - | - | - |
| | MATIS | 66.73 | 61.79 | 34.99 | 67.17 | 50.36 | 46.53 | 31.49 | 11.08 | 13.57 | 24.79 |
| | Ours | 69.79 | 64.78 | 36.66 | 61.03 | 52.28 | 45.69 | 34.66 | 15.00 | 20.81 | 27.14 |
| EndoVis 2018 | TernausNet | 46.22 | 39.87 | 14.19 | 44.20 | 4.67 | 0.00 | 0.00 | 0.00 | 50.44 | 0.00 |
| | MF-TAPNet | 67.87 | 39.14 | 24.68 | 69.23 | 6.10 | 11.68 | 14.00 | 0.91 | 70.24 | 0.57 |
| | ISINet | 73.03 | 70.94 | 40.21 | 73.83 | 48.61 | 30.98 | 37.68 | 0.00 | 88.16 | 2.16 |
| | TraSeTr | 76.20 | - | 47.71 | 76.30 | 53.30 | 46.50 | 40.60 | 13.90 | 86.20 | 17.15 |
| | MATIS | 82.31 | 77.01 | 48.57 | 83.55 | 38.65 | 40.48 | 92.56 | 70.38 | 0 | 14.39 |
| | Ours | 84.67 | 79.86 | 54.63 | 83.95 | 41.47 | 66.57 | 92.74 | 74.20 | 0 | 23.50 |

The columns BF to UP report results per instrument category: Bipolar Forceps (BF), Prograsp Forceps (PF), Large Needle Driver (LND), Vessel Sealer/Suction Instrument (VS/SI), Grasping Retractor/Clip Applier (GR/CA), Monopolar Curved Scissors (MCS), and Ultrasound Probe (UP). In the paired columns, the first category applies to EndoVis 2017 and the second to EndoVis 2018.
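The table reports three overlap metrics. As a hedged sketch of how per-class IoU and a class-averaged score such as mcIoU are commonly computed, the code below accumulates true positives, false positives, and false negatives per instrument class over all frames and then averages the per-class IoUs. It assumes flattened per-frame label maps with 0 as background and instrument classes numbered 1 to `num_classes` (7 for the categories above). The exact EndoVis protocols behind the mIoU and IoU columns (for example, how frames without a given class are handled) are not described in this section and are not reproduced here; the function names are hypothetical.

```python
from typing import Dict, List, Sequence


def per_class_iou(
    preds: Sequence[Sequence[int]], gts: Sequence[Sequence[int]], num_classes: int
) -> Dict[int, float]:
    """IoU = TP / (TP + FP + FN) per class, accumulated over all frames."""
    tp: List[int] = [0] * (num_classes + 1)
    fp: List[int] = [0] * (num_classes + 1)
    fn: List[int] = [0] * (num_classes + 1)
    for pred, gt in zip(preds, gts):  # one flattened label map per frame
        for p, g in zip(pred, gt):
            if p == g:
                tp[p] += 1
            else:
                fp[p] += 1  # pixel wrongly assigned to class p
                fn[g] += 1  # pixel of class g that was missed
    ious = {}
    for c in range(1, num_classes + 1):  # label 0 is background and is skipped
        denom = tp[c] + fp[c] + fn[c]
        if denom > 0:  # ignore classes absent from both prediction and ground truth
            ious[c] = tp[c] / denom
    return ious


def mean_class_iou(ious: Dict[int, float]) -> float:
    """mcIoU-style average: every instrument class counts equally."""
    return sum(ious.values()) / len(ious) if ious else 0.0


# Toy example: two frames of four pixels, two instrument classes.
preds = [[0, 1, 1, 2], [0, 2, 2, 0]]
gts = [[0, 1, 2, 2], [0, 2, 2, 2]]
ious = per_class_iou(preds, gts, num_classes=2)
print(ious, mean_class_iou(ious))
```

Because every class counts equally in the class average, a few rarely seen or poorly segmented categories pull it down sharply, which is consistent with mcIoU sitting well below mIoU for every method in the table that reports both.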

| Position Prior | Region Proposal | mIoU | IoU | mcIoU |
|---|---|---|---|---|
| - | - | 66.73 | 61.79 | 34.99 |
| ✓ | - | 68.91 (+2.18) | 63.86 (+2.07) | 35.31 (+0.32) |
| - | ✓ | 67.02 (+0.29) | 62.10 (+0.31) | 35.28 (+0.29) |
| ✓ | ✓ | 69.79 (+3.06) | 64.78 (+2.99) | 36.66 (+1.67) |
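The parenthesized gains in the ablation table are absolute differences, in percentage points, from the first row, which uses neither component and matches the MATIS entry for EndoVis 2017 (66.73 / 61.79 / 34.99). The short check below recomputes them from the table values; the dictionary layout and the `gains` helper are illustrative only.

```python
from typing import Dict, Tuple

Scores = Tuple[float, float, float]  # (mIoU, IoU, mcIoU)

BASELINE: Scores = (66.73, 61.79, 34.99)  # neither position prior nor region proposal

ABLATION: Dict[str, Scores] = {
    "position prior only": (68.91, 63.86, 35.31),
    "region proposal only": (67.02, 62.10, 35.28),
    "both": (69.79, 64.78, 36.66),
}


def gains(row: Scores, baseline: Scores = BASELINE) -> Scores:
    """Absolute gain over the baseline, rounded to two decimals as in the table."""
    return tuple(round(v - b, 2) for v, b in zip(row, baseline))


for name, row in ABLATION.items():
    print(name, gains(row))
```

On all three metrics the combined gain exceeds the sum of the two single-component gains (for mIoU, +3.06 versus 2.18 + 0.29 = 2.47), which suggests that the position prior and the region proposal features are complementary rather than redundant.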

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, T.; Yuan, X.; Xu, H. Surgical Instrument Segmentation via Segment-Then-Classify Framework with Instance-Level Spatiotemporal Consistency Modeling. J. Imaging 2025, 11, 364. https://doi.org/10.3390/jimaging11100364
