Abstract

The surface material of an object is a key factor that affects non-line-of-sight (NLOS) imaging. In this paper, we introduce the bidirectional reflectance distribution function (BRDF) into NLOS imaging to study how the target surface material influences the quality of NLOS images. First, the BRDF of two surface materials (aluminized insulation material and white paint board) was modeled using deep neural networks and compared with a five-parameter empirical model to validate the method’s accuracy. The method was then applied to fit BRDF data for different common materials. Finally, NLOS target simulations with varying surface materials were reconstructed using the confocal diffusion tomography algorithm. The reconstructed NLOS images were classified via a convolutional neural network to assess how different surface materials impacted imaging quality. The results show that image clarity improves when decreasing the specular reflection and increasing the diffuse reflection, with the best results obtained for surfaces exhibiting a high diffuse reflection and no specular reflection.

1. Introduction

Non-line-of-sight (NLOS) imaging is a technique used to capture scenes or objects that are out of sight and not directly observable. Depending on whether a controlled light source is used, NLOS imaging techniques can be classified into two types: One is active NLOS imaging, which captures the shape of a hidden object by actively emitting a light ray that eventually captures the shape of the hidden object through scattering and diffuse reflection information after interacting with the occluder and the hidden object. The other is passive NLOS imaging, which does not rely on a controlled light source but, rather, utilizes ambient light or the hidden object’s luminescence to capture the shape of a hidden object by capturing the hidden object on the occluder object via capturing the projection information formed by the object on the occluding object to reconstruct the object [1]. In the field of NLOS imaging research, A. Velten et al. [2] utilized time-of-flight imaging to detect the 3D shape of an object beyond the line of sight. However, this method faces the problems of large, complex data processing, expensive equipment, and time consumption. T. Maeda et al. [3] realized passive NLOS imaging using infrared light, but the quality of the imaging was not satisfactory due to the difficulty of light focusing. The introduction of the Light-Cone Transform algorithm [4], which allows the simplification of the optical path transmission model through the confocal mode and assumes the target to be a Lambertian body, improves the imaging efficiency and quality. D.B. Lindell et al. [5] proposed a confocal diffuse tomography (CDT) imaging technique to overcome the limitations of scattering on the optical system, which can recover the 3D shape of the hidden object and achieve imaging in complex environments. However, achieving high resolution is difficult, and the reflection characteristics of the target material are single. Ye et al. [6] reduced the number of scanning points by using the compressed perception method, which, in turn, saves the time required for the data acquisition and accelerates the speed of imaging. In China, some attempts have been made to improve the algorithm of NLOS imaging technology. Pei et al. [7] utilized SPAD arrays to provide the NLOS imaging system the ability of “single transmitter and multiple receivers”, which improves the imaging speed. Sun et al. [8] utilized a novel learning-based solution to improve the NLOS imaging problem, which can be applied to scenarios with low signal-to-noise ratios. The methodology comprises two key designs: learnable path compensation (LPC) and the adaptive phasor field (APF). However, NLOS simulation studies usually set the target as a Lambertian body, which leads to a single reflection characteristic and hinders the true reflection of a complex environment. This simplification limits the practical application and development of NLOS. Hence, the bidirectional reflection distribution function (BRDF) is introduced into NLOS imaging in this paper.

BRDF, which is mainly determined by the roughness, dielectric constant, surface texture, polarization, and incident wavelength of the target surface, characterizes the distribution of the reflected energy of incident light in hemispherical space [9]. In practice, the measurement of BRDF is limited by its inability to obtain accurate values under arbitrary incidence and observation conditions [10,11,12]. The BRDF models are categorized into empirical, physical, and data-driven models. Empirical models can effectively capture the reflective behavior of common materials, such as the Lambertian model, Phong model [13], five-parameter model [14,15], and Minnaert model [16], but their applications are limited, and describing the reflective properties of complex materials is difficult. Physical models are based on the laws of physics, such as the Torrance–Sparrow model [17] and the Maxwell–Baird model [18]. Fu et al. [19]. proposed an improved pBRDF model, which improved the relative error in the polarization characteristics of the target surface by means of parameter inversion. Ma et al. [20]. measured the spectral polarization BRDF of copper materials in the visible light band with a self-designed device, and they established an exponential model to describe these properties. However, the lack of measurement of bidirectional reflection distribution functions for other different materials limits the generalization ability of the model. Although these models are highly accurate, they involve high computational complexity and cumbersome parameter settings. By contrast, data-driven models learn the reflective properties of materials from a large amount of measurement data via methods such as machine learning [21]. The data-driven model does not require an in-depth understanding of the microstructure and physical properties of the material, which reduces the threshold of model construction.

In summary, this paper adopts a deep learning method to model the target BRDF. Then, the BRDF is introduced into the NLOS imaging scene, and the CDT imaging algorithm in NLOS imaging is simulated based on the principles of the diffusion equation, confocal setup, and imaging model. Next, 3D reconstruction is performed through seven typical BRDF types of NLOS targets and three representative examples of NLOS targets under the deep learning approach. Finally, the effects of different target surface materials on the NLOS imaging quality are analyzed by constructing a convolutional neural network to classify the reconstruction results of different BRDF targets.

2. Materials and Methods

2.1. BRDF Definition and Principle

BRDF is defined as the relative magnitude of the radiant luminance along the direction of ,, and to the radiant illuminance, , incident on the surface of the object along the direction of (, ), with the following expression:

where λ is the wavelength, is the incident zenith angle, is the scattered zenith angle, is the incident azimuth angle, is the scattered azimuth angle, and is the BRDF of the surface element.

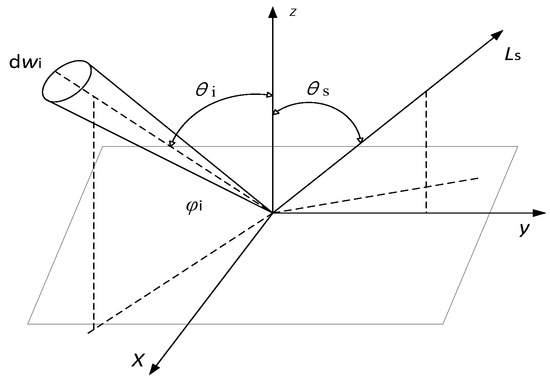

BRDF depends on factors such as the dielectric constant, radiation wavelength, and surface roughness, and its geometry is shown in Figure 1:

Figure 1.

Geometric diagram of BRDF.

According to the defining equation of BRDF, the BRDF data to be measured include the incident zenith angle, , the incident azimuth angle, , the scattered zenith angle, , the scattered azimuth angle, , and the value of BRDF.

2.2. Diffusion Equation

According to the Boltzmann radiation transport equation, the flux rate, , of the diffused photons satisfies the diffusion equation when the collimated pulsed beam is incident on the surface of a semi-infinite homogeneous tissue plate or a finite homogeneous tissue plate with the following expression:

where is the velocity of light in the tissue, is the time, is the diffusion coefficient, and is the photon source; and are the linear absorption and scattering coefficients, respectively, and g is the average cosine of the scattering angle.

A common approximation uses an extrapolated boundary condition for which the diffusive intensity is assumed to be zero on a flat surface in an extrapolation distance, , away from either side of the slab. For a slab of thickness , this condition states that the diffusive intensity is zero at and , where the value of depends on the amount of internal reflection for the diffusive intensity due to the refractive index mismatch at the medium–air interface.

Hence, the extrapolated boundary condition can be satisfied by placing a positive and negative dipole source about such that the total diffusion intensity at the extrapolated distance is zero. However, a single dipole source does not satisfy the boundary condition at . Instead, an infinite number of dipole sources are needed, for among which the dipole at the near interface () is mirrored by the dipole at the far interface and then again by the dipole at the far interface, and so on, as illustrated with the small squares in Figure 2.

Figure 2.

Model of CDT imaging.

The locations of the positive and negative sources are as follows:

Finally, the resulting solution to the diffusion equation is as follows:

where Φ is the power transmitted through the slab per unit area, is the position illuminated via the laser and imaged via the detector, and is a spatial position on the far side of the scattering medium.

2.3. Imaging Model

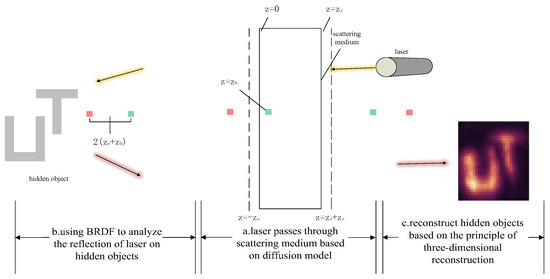

The complete CDT imaging model consists of three parts: laser diffusion through the scatterer, free-space propagation hitting the hidden object, and reflection via the hidden object and passing through the scatterer again.

The measurement model is as follows:

The measured value is an integral of three parts: the first part is the diffusion of light from to in the scattering medium, the second part is the free space propagation of the light from to the point x on the hidden object and back to another , and the third part is the diffusion of the light from to through the scattering medium.

In this paper, the confocal setting as a scheme, namely, , is used to simplify the imaging model. The expression is as follows:

where the function f is the albedo from a point on the hidden object to a point on the boundary of the scattering medium, and the function of the constraint time is .

For the inverse process of the above model, because the real imaging model contains noise terms, it needs to be deconvolved through Wiener filtering, which leads to a closed-form solution with the following expression:

where the superscript represents the complex conjugate, and RSN is the signal signal-to-noise ratio. Combining Equations (11) and (12) derives the frequency domain representation of , and then its inverse Fourier transform estimates the value of I. The expression is as follows:

Thus, the albedo of the hidden object is obtained, and finally, the geometry of the hidden object is reconstructed.

3. Results

3.1. Deep Neural Network Target BRDF Model Construction

Deep learning is a machine learning method based on artificial neural networks for data analysis and pattern recognition. Artificial neural networks mimic the structure and function of neurons in the human brain and contain an input layer, a hidden layer, and an output layer. The input layer receives the data, the hidden layer processes the data, and the output layer generates the final result.

(1) Input and output of the model

In this paper, the input layers are incident zenith angle , incident azimuth angle i, scattering zenith angle , and scattering azimuth angle , and the output layer is the simulation value f of BRDF.

(2) Model network structure selection

Due to the numerous factors that determine the distribution of BRDF, the premise of modeling BRDF is to extract relevant features adequately from experimental data. Deep neural networks connect nodes through layers, the output of the previous hidden layer becomes the input of the next hidden layer, and the cycle repeats. In this process, the effectiveness of feature extraction increases as the number of hidden layers increases. However, extremely many layers weaken the learning ability of the hidden layer and affect the accurate extraction of features. Hence, in this paper, a deep neural network containing five hidden layers is constructed, and the numbers of neurons in these hidden layers are 20, 30, 20, 10, and 10, in that order.

(3) Activation function

In this paper, the fully connected neural network is used as a deep neural network, and each layer of the fully connected neural network can be calculated by multiplying the weight matrix, W, with the neuron vector, x, and then adding it to the bias term, b, as shown below:

Because the modeling outputs result in BRDF simulation values, the value of the activation function must be greater than 0. If its value is less than 0, the network is in a state of inhibitory activity, so the activation function is selected as the ReLU activation function.

(4) Loss function and optimizer selection

The loss function is the only measure of the accuracy of the model. If the value of the loss function is smaller, then the degree of deviation is smaller. The loss function expression is as follows:

where E is the difference value between the simulation result and the measurement result, is the simulation result, and is the actual measurement result.

To avoid falling into a local optimum when debugging parameters, unsupervised learning is used as the training method for a deep neural network, and the DropOut optimization algorithm is introduced.

(5) Regularization method

Regularization techniques can reduce the complexity of deep neural network models and prevent model overfitting. In this paper, the L1 regularization method with the following expression is used:

where L denotes regularization, and is the regular term.

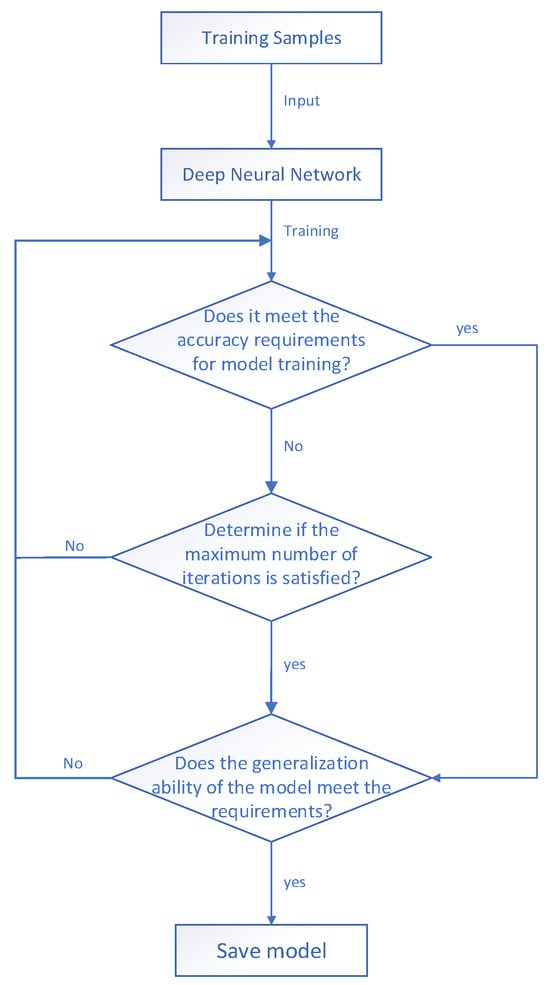

Based on the above, the target BRDF modeling derived from a deep neural network can be demonstrated in a flowchart, as shown in Figure 3.

Figure 3.

Modeling of BRDF based on deep neural network.

The surface BRDF data of different common objects are measured at incident zenith angles of 30°, 45°, and 60°, and the amount of data collected for each object is 1000. For the surface BRDF experimental data of a certain object, the scheme adopted is to select it randomly first, take part of the experimental data as a training sample and the rest as a test sample, and then take the training samples as inputs to construct the BRDF model.

3.2. Target Imaging Classification Based on Deep Learning

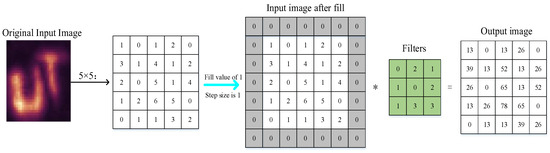

The reconstructed images of hidden objects with different target object materials are different. The data set for target classification can be obtained after numerous simulations. Combined with the features of the reconstruction results, the images can be classified into three, namely, clear, clearer, and fuzzy, and they are labeled in order as 1, 2, and 3, respectively. When classifying the target through the use of deep learning convolutional neural networks, the width of the input layer is generally H, the height is generally W, and the number of channels is D. In the case of RGB images, the value of D is taken as 3. The convolutional layer performs convolutional operations on different images and then extracts the image features and finally merges all the different image features collected.

With the 5 × 5 raw input image and the 3 × 3 filter taken as an example, and the fill value and step size are set to 1, the convolution operation is shown in Figure 4.

Figure 4.

Schematic diagram of convolution operation.

The activation function used in this paper at the fully connected layer is the same as the activation function used to build the target BRDF model with deep learning, which is also the ReLU function. In this manner, not only can the nonlinear component of the convolutional neural network be increased but also the gradient can disappear when the input data is large or small; that is, the problem of a gradient value close to 0 can be avoided.

The output layer treats the normalization function as the result of the classification output with the following expression:

The method of calculating probability is to map the output values of many inputs to the interval of 0–1, and the classification corresponding to the largest probability value is the final result.

The specific expression of the loss function used in this paper is cross-entropy because the loss function in the form of cross-entropy can measure the difference between the simulation result and the actual label, which often appears in the problem of target classification. The specific formula for the cross-entropy loss function is as follows:

where L is the loss function, and is the true value corresponding to a particular image. takes values in the range of 0–1, and the sign in front of the cumulative sign indicates that, when the probability of the simulation result, , is larger, the value of the loss function is lower.

4. Analysis of Results

4.1. BRDF Model Validation Analysis

To verify the validity and accuracy of the BRDF model, the five-parameter BRDF model simulation modeling was used, and then its modeling results were analyzed and compared with those of the deep neural network BRDF model.

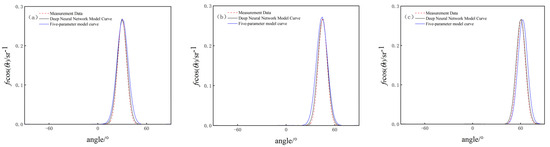

Aluminized insulation material performs stronger specular reflection, while white paint board involves stronger diffuse reflection, according to the experimental data of their surface BRDF, in which the incident zenith angles are taken as 30°, 45°, and 60°, and the relative azimuthal angles are all taken as 0°. The results of the surface BRDF of the two materials are obtained through the deep neural network modeling and the five-parameter empirical modeling methods, as shown in Figure 5 and Figure 6.

Figure 5.

Comparison of BRDF data for aluminized insulation material under two models. (a) = 30°; (b) = 45°; (c) = 60°.

Figure 6.

Comparison of BRDF data for white paint board under two models. (a) = 30°; (b) = 45°; (c) = 60°.

(1) Comparison of BRDF data of aluminized insulation material

The training error is 0.0457, the test error is 0.0339, the average agreement between the deep neural network model and the measured data is 95%, and the average agreement between the five-parameter model and the measured data is 84%.

According to the comparison results of the BRDF measurement data with the model data under the deep neural network model and the five-parameter model for the aluminized insulation material, when the incident zenith angle is different, the BRDF value of the target object surface simulated via the deep neural network model is more compatible with the measured value, and the fitting effect of this model is better than that of the five-parameter model, which indicates that, for the object with strong specular reflection, the target BRDF model based on deep learning is more compatible with the measurement data. The learning target BRDF model has a better simulation ability for objects with strong specular reflection.

(2) Comparison of BRDF data of white paint board

The training error is 0.0372, the test error is 0.0429, the average agreement between the deep neural network model and the measurement data is 92%, and the average agreement between the five-parameter model and the measurement data is 83%.

According to the comparison results of the BRDF measurement data with the model data under the deep neural network model and the five-parameter model for the white paint board, for the objects with strong diffuse reflections, the fitting effect of the deep neural network model is also better than that of the five-parameter model, which indicates that, for the objects with strong diffuse reflections, the simulation capability of the target BRDF model based on deep learning is still better than that of the five-parameter model.

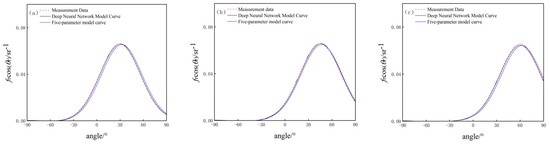

Then, by using the deep neural network BRDF model, the BRDFs of seven target objects are simulated and fitted, and the results are shown in Figure 7. In Figure 7a, the fitted curves of the seven different target objects represent four types of typical BRDFs: The fitted curves of the gold-plated polyester film and the painted steel plate have narrow shapes but high peaks, representing the type with a strong specular reflection part. The fitted curves of the quartz glass and the aluminum alloy samples have wide shapes and high peaks, representing the type with a strong specular and diffuse reflection part. The fitted curves of the black anode plate and aluminum diffuse reflection plate have wide shapes but low peaks, which represent the class with strong diffuse reflection part. The fitted curves of the standard white plate are straight lines, which represent the class with no specular reflection part, when the target object is a Lambertian body.

Figure 7.

BRDF fitting results for different target objects. (a) BRDF fitting results for seven target objects; (b) BRDF for three instances.

To verify the above analysis, the BRDF data plots of glass fiber with a strong specular reflection part, fuchsia lacquer board with a strong specular reflection part and a strong diffuse reflection part, and cement board with a strong diffuse reflection part, which are obtained from the BRDF measurement data of the three examples, are given, as in Figure 7b, where the specular reflection part of the glass fiber is stronger than that of the two other materials, and the peak value of their BRDF curves is the highest. A large part of the reason for this is that the refractive index of glass fiber is smaller, and the smaller the refractive index is, the smaller the absolute value of the dielectric constant is, resulting in a larger echo energy on the surface of the object, which in turn leads to a higher peak value.

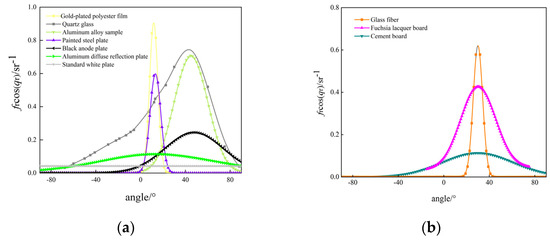

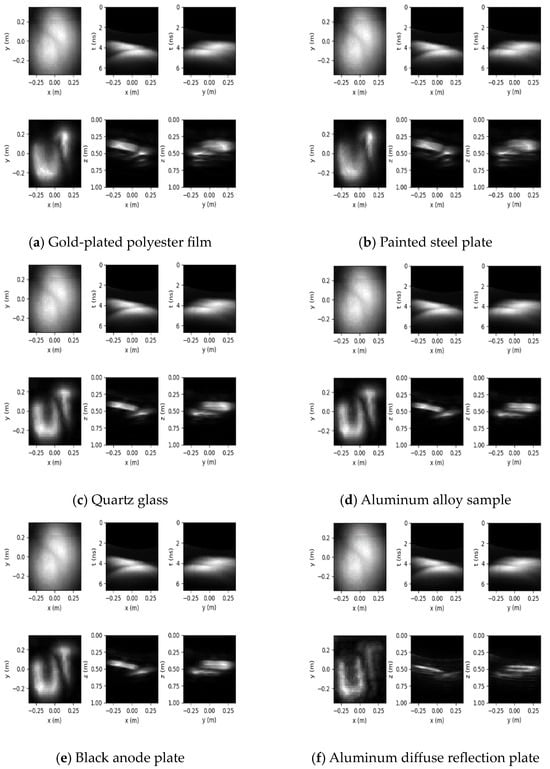

4.2. Target CDT Imaging Reconstruction

The hidden scene consists of the letters “UT” located about 50 cm behind the scatterer, the thickness of the scattering medium is 2.54 cm, the refractive index is 1.12, and other important simulation parameters are set as shown in Table 1.

Table 1.

Other important simulation parameters of non-line-of-sight imaging systems.

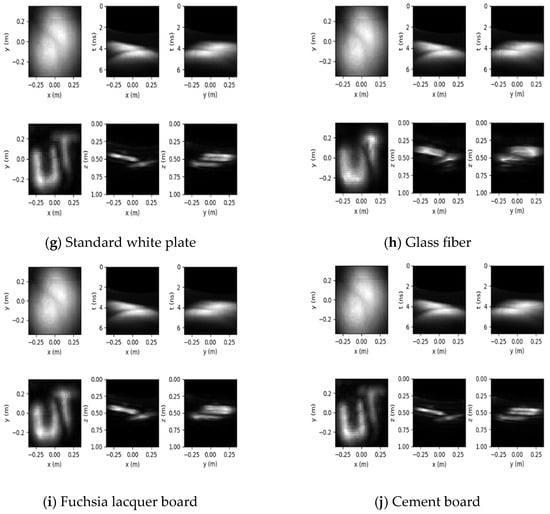

Based on the principle of 3D reconstruction, the Python 3.6 software was used to reconstruct the hidden objects of seven kinds of objects representing four types of typical BRDFs and three instance objects, and the reconstruction results are shown in Figure 8.

Figure 8.

Reconstruction results of different objects.

The 3D reconstruction results for the different hidden objects are presented in Figure 8, where the first row of each subplot shows the measurements and their x–t and y–t slices, and the second row shows the reconstruction results and their x–z and y–z slices.

4.3. Imaging Classification of Different BRDF Surface Targets

The data set constructed in this paper contains 1000 images, of which 200 and 800 were used for validation and training, respectively. Fast Fourier Transform is a frequently used tool in problems related to frequency-domain analysis to transform an image from the spatial to the frequency domain. In the frequency domain, the high frequencies correspond to the detailed parts of the image, and the low frequencies correspond to the general shape of the image.

Because the detail part implies a large amount of edge information, its higher variance indicates a higher degree of deviation, which in turn means a higher intensity of information and a clearer image. Hence, in this paper, Fast Fourier Transform is performed on 1000 images in the data set, and the variance of the edge information in the high frequency range is calculated to determine the clarity of the image. After computational evaluation, in these 1000 images, 193, 514, and 293 images are labeled 1, 2, and 3, respectively.

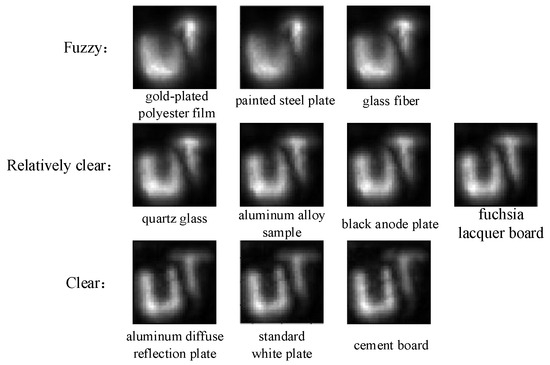

In this paper, PyTorch is selected as the framework for deep learning, the number of training times is set to 10, and the cross-entropy loss function is taken as the optimization object. After the training is completed, the reconstructed images Figure 8a–j of the above 10 objects are input into the classification network, one object corresponds to one image, 10 images are not labeled, and finally, the classification results of different BRDF surface targets are obtained, as shown in Figure 9.

Figure 9.

Classification results of different BRDF surface targets.

Figure 9 shows 10 types of objects divided into three using convolutional neural networks: Gold-plated polyester film, painted steel plate, and glass fiber belong to the category labeled as 3, which is fuzzy. Quartz glass, aluminum alloy sample, black anode plate, and fuchsia lacquer board belong to the category labeled as 2, which is relatively clear. aluminum diffuse reflection board, standard white plate, and cement board belong to the category with a label of 1, which is clear.

5. Conclusions

In this paper, a target BRDF model suitable for the scattering characteristics of object materials based on deep learning was constructed, and this method was used to model the BRDF of two surface materials (aluminized insulation material and white paint board). The results indicate that deep learning models can effectively simulate the BRDF of the target object surface, whether it is an object with strong specular reflection or an object with strong diffuse reflection. The deep learning model outperforms the five-parameter model in fitting performance, more accurately describes the target scattering characteristics, and verifies the accuracy and effectiveness of this method. Then, this method was used to fit the surface BRDF data of seven materials (gold-plated polyester film, quartz glass, an aluminum alloy sample, painted steel plate, black anode plate, aluminum diffuse reflection plate, and standard white plate). The BRDF data were analyzed and compared with three examples (glass fiber, fuchsia lacquer board, and cement board). Finally, the confocal diffusion tomography algorithm was used to simulate and reconstruct NLOS targets of these 10 different surface materials. CDT imaging reconstruction and classification analysis revealed that, as the proportion of specular reflection in the object material decreased, the effect of NLOS reconstruction remarkably improved. The BRDF distribution map shows that, when the incident angle is the same, the stronger the specular reflection of the object, the larger the highlight area generated on the surface and the greater the effect on intrinsic texture and other information, leading to a decrease in reconstruction quality. Therefore, for target objects with different surface materials, the smaller the proportion of their specular reflection, the better the reconstruction effect.

Author Contributions

Conceptualization, Y.Y.; writing—original draft, K.Y.; methodology, A.Z.; All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (62101174) and the Natural Science Foundation of Hebei Province (F2021402005).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article, further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Faccio, D.; Velten, A.; Wetzstein, G. Non-line-of-sight imaging. Nature 2020, 2, 318–327. [Google Scholar] [CrossRef]

- Velten, A.; Willwacher, T.; Gupta, O.; Veeraraghavan, A.; Bawendi, M.G.; Raskar, R. Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat. Commun. 2012, 3, 745. [Google Scholar] [CrossRef] [PubMed]

- Maeda, T.; Satat, G.; Swedish, T.; Sinha, L.; Raskar, R. Recent advances in imaging around corners. arXiv 2019, arXiv:1910.05613. [Google Scholar]

- Toole, O.M.; Lindell, D.B.; Wetzstein, G. Confocal non-line-of-sight imaging based on the light-cone transform. Nature 2018, 555, 338. [Google Scholar] [CrossRef]

- Lindell, D.B.; Wetzstein, G. Three-dimensional imaging through scattering media based on confocal diffuse tomography. Nat. Commun. 2020, 11, 4517. [Google Scholar] [CrossRef] [PubMed]

- Ye, J.-T.; Huang, X.; Li, Z.-P.; Xu, F. Compressed sensing for active non-line-of-sight imaging. Opt. Express 2021, 29, 1749–1763. [Google Scholar] [CrossRef] [PubMed]

- Pei, C.; Zhang, A.; Deng, Y.; Xu, F.; Wu, J.; Li, D.U.-L.; Qiao, H.; Fang, L.; Dai, Q. Dynamic non-line-of-sight imaging system based on the optimization of point spread functions. Opt. Express 2021, 29, 32349. [Google Scholar] [CrossRef] [PubMed]

- Sun, S.; Li, Y.; Zhang, Y.; Xiong, Z. Generalizable Non-Line-of-Sight Imaging with Learnable Physical Priors. arXiv 2024, arXiv:2409.14011. [Google Scholar] [CrossRef]

- Zhensen, W.; Suomin, C. Bistatic scattering by arbitrarily shaped objects with rough surface at optical and infrared frequencies. Int. J. Infrared Millim. Waves 1992, 13, 537–549. [Google Scholar] [CrossRef]

- Warren, S.G.; Grenfell, T.C.; Mullen, P.C. Effect of Surface Roughness on Remote Sensing of Snow Albedo. Ann. Glaciol. 1987, 9, 242–243. [Google Scholar] [CrossRef]

- Jia, H.; Li, F.T. Bidirectional Reflectance Distribution Function of Aluminium Diffuser at UV Spectral Band. Acta Opt. Sin. 2004, 24, 230. [Google Scholar]

- Yang, Y.F. Reserch on spectral scattering characteristics of complex target. Electron. Meas. Technol. 2018, 41, 99–102. [Google Scholar]

- Phong, B.T. Illumination for computer generated pictures. Commun. ACM 1975, 18, 311–317. [Google Scholar] [CrossRef]

- Wu, Z.S.; Xie, D.H. Modeling Reflectance Function from Rough Surface and Algorithms. Acta Opt. Sin. 2002, 22, 897. [Google Scholar]

- Yang, Y.F.; Wu, Z.S.; Cao, Y. Practical Six-Parameter Bidirectional Reflectance Distribution Function Model for Rough Surface. Acta Opt. Sin. 2012, 32, 0229001. [Google Scholar] [CrossRef]

- Minnaert, M. The reciprocity principle in lunar photometry. Astrophy J. 1941, 93, 403–410. [Google Scholar] [CrossRef]

- Torrance, K.E.; Sparrow, E.M. Off-specular peaks in the directional distribution of reflected thermal radiation. J. Heat Transfer. 1966, 11, 5–9. [Google Scholar] [CrossRef]

- Maxwell, J.R.; Beard, J.; Weiner, S.; Ladd, D.; Ladd, S. Bidirectional Reflectance Model Validation and Utilization; Defense Technical Information Center: Fort Belvoir, VA, USA, 1973. [Google Scholar]

- Fu, Q.; Liu, X.; Yang, D.; Zhan, J.; Liu, Q.; Zhang, S.; Wang, F.; Duan, J.; Li, Y.; Jiang, H. Improvement of pBRDF Model for Target Surface Based on Diffraction and Transmission Effects. Remote Sens. 2023, 15, 3481. [Google Scholar] [CrossRef]

- Ma, W.; Liu, Y.; Liu, Y. The measurement and modeling investigation on the spectral polarized BRDF of brass. Opt. Rev. 2020, 27, 380–390. [Google Scholar] [CrossRef]

- Liu, C.; Li, Z.; Xu, C.; Tian, Q. BRDF Model for Commonly Used Materials of Space Targets Based on Deep Neural Network. Acta Opt. Sin. 2017, 37, 1129001. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).