Abstract

The rapid growth of electric vehicles (EVs) and renewable energy systems has placed lithium-ion batteries at the center of the clean energy transition. Achieving the best battery performance, safety, and sustainability under diverse and changing operating conditions still requires major innovations in Battery Management Systems (BMS). This review explores how artificial intelligence (AI) and digital twin (DT) technologies can be integrated to enable the intelligent BMS of the future. It examines how data-driven approaches, such as deep learning, ensemble methods, and physics-informed models, improve the accuracy of state of charge (SOC), state of health (SOH), and remaining useful life (RUL) prediction. The paper also reviews progress in AI-enabled thermal management, fast charging, fault detection, and explainable AI models. Combining cloud and edge computing with DTs enables better diagnostics, predictive maintenance, and improved management across EVs, stationary energy storage, and recycling. The review highlights recent successes in AI-driven materials research, sustainable battery manufacturing, and second-life strategies, along with emerging challenges in cybersecurity, data integration, and large-scale deployment. Following a careful analysis of up-to-date approaches and systems, we identify key research themes, open problems, and future challenges. Uniting physical modeling with AI-based analytics on cloud-edge-DT platforms supports the development of robust, intelligent, and environmentally responsible batteries aligned with future mobility and the wider use of renewable energy.

1. Introduction

The electrification of transportation has emerged as a pivotal strategy in the global effort to reduce greenhouse gas emissions, accelerate the transition to sustainable energy systems, and achieve ambitious climate goals [1,2]. At the heart of this transformation lie lithium-ion batteries, which power the majority of electric vehicles (EVs) owing to their high energy density, long cycle life, and high efficiency. However, the growing complexity of battery systems has introduced significant challenges across multiple domains, including safety, reliability, cost, and end-of-life sustainability. To ensure optimal battery performance and longevity under varying operating conditions, battery management systems (BMSs) play a central role by enabling real-time monitoring, control, and protection of battery packs. Despite their importance, conventional BMS implementations often rely on resource-constrained microcontrollers that limit the integration of advanced algorithms and real-time analytics [3,4].

In response to these limitations, recent research has advanced both model-based and data-driven techniques for BMS optimization. Equivalent circuit models (ECMs), electrochemical models, and hybrid frameworks have been widely employed to represent and control battery behavior, while machine learning approaches such as support vector regression, random forests, and deep learning have shown considerable promise in improving state estimation accuracy, particularly for state of charge (SOC), state of health (SOH), and remaining useful life (RUL) prediction [5,6,7]. Hybrid approaches combining signal decomposition with optimized neural networks have achieved high prediction fidelity even under complex degradation dynamics [8]. Physics-informed neural networks (PINNs) have demonstrated the potential to unify mechanistic insights with data-driven learning for generalizable battery aging models [9]. Simultaneously, significant progress has been made in battery thermal management systems (BTMSs), where innovative combinations of phase change materials (PCMs), porous copper structures, and liquid cooling strategies have led to notable reductions in temperature gradients and enhanced energy density without compromising safety [10,11,12].
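To make the ECM idea concrete, the following is a minimal sketch of a first-order (Thevenin) equivalent circuit: an ohmic resistance R0 in series with one RC pair, with SOC tracked by coulomb counting. The linear OCV curve, the class name `TheveninECM`, and all parameter values are illustrative assumptions, not values fitted to any cell discussed in this review.

```python
import math
from dataclasses import dataclass


@dataclass
class TheveninECM:
    """First-order Thevenin ECM: V = OCV(SOC) - I*R0 - V_RC (illustrative parameters)."""
    capacity_ah: float = 2.5   # assumed nominal capacity [Ah]
    r0: float = 0.015          # assumed ohmic resistance [ohm]
    r1: float = 0.010          # assumed polarization resistance [ohm]
    c1: float = 2000.0         # assumed polarization capacitance [F]
    soc: float = 1.0           # state of charge, 0..1
    v_rc: float = 0.0          # voltage across the RC pair [V]

    def ocv(self, soc: float) -> float:
        # Hypothetical linear OCV curve: 3.0 V when empty, 4.2 V when full
        return 3.0 + 1.2 * soc

    def step(self, current_a: float, dt_s: float) -> float:
        """Advance one time step (positive current = discharge); return terminal voltage."""
        # Coulomb counting: remove charge I*dt from the available capacity
        self.soc -= current_a * dt_s / (self.capacity_ah * 3600.0)
        self.soc = min(max(self.soc, 0.0), 1.0)
        # Exact discretization of the RC branch, assuming constant current over dt
        alpha = math.exp(-dt_s / (self.r1 * self.c1))
        self.v_rc = alpha * self.v_rc + self.r1 * (1.0 - alpha) * current_a
        return self.ocv(self.soc) - current_a * self.r0 - self.v_rc
```

For example, a 1C discharge (2.5 A) for 360 s with 1 s steps removes 10% of the capacity, so SOC falls from 1.0 to 0.9 and the terminal voltage settles near 4.08 − 0.0375 − 0.025 ≈ 4.018 V. Real ECMs use a measured, nonlinear OCV curve and temperature- and SOC-dependent parameters.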

Fast charging, an essential enabler for the mass adoption of EVs, imposes additional thermal and electrochemical stress on batteries. AI-enabled charging strategies incorporating digital twins (DTs), reinforcement learning, and physics-based control architectures have emerged as viable solutions to mitigate degradation, improve adaptability, and enhance safety during high-rate charging. Among these, digital twin technology has gained particular momentum as a next-generation framework that integrates physical modeling, real-time sensing, internet-of-things (IoT) connectivity, and cloud/edge computing to create dynamic, bidirectional virtual representations of battery systems. DTs enable predictive diagnostics, adaptive control, and intelligent lifecycle management, with applications spanning battery design, manufacturing, repurposing, and recycling [13,14].

The integration of DTs with AI also opens new opportunities for sustainability and circular economy practices. Advanced data analytics and intelligent decision-making frameworks are now being employed to optimize recycling processes for ternary cathode materials containing nickel, cobalt, and manganese (NCM), addressing critical issues of resource scarcity and environmental impact [15]. Nevertheless, the full realization of intelligent and sustainable battery systems requires overcoming several persistent barriers, including cybersecurity concerns, the lack of standardization in DT architecture, limited dataset availability, and the need for interpretable, scalable AI models. Addressing these gaps is essential to developing future-proof BMS architectures that are resilient, adaptive, and capable of supporting the evolving demands of electrified transportation.

2. Battery Management Systems

A lithium-ion BMS monitors all key signals, including cell voltage, current, temperature, and SOC, to ensure safe, efficient, and reliable battery operation. Its main functions include fault protection, thermal management, cell balancing (keeping all cells at the same state of charge), and health monitoring. Modern BMSs increasingly use AI for prediction, digital twins for simulation, and cloud connectivity for remote, real-time analysis. Such systems are found in electric vehicles, e-bikes, grid storage, and portable electronics, where they help batteries last longer, operate more safely, and perform better.
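Cell balancing is the easiest of these functions to illustrate. The sketch below shows passive (dissipative) balancing under stated assumptions: any cell whose voltage exceeds the lowest cell by more than a threshold switches on a bleed resistor. The 10 mV threshold, the fixed voltage drop per bleed step, and the function names are hypothetical simplifications; a production BMS works on estimated SOC and real bleed currents.

```python
from typing import List


def passive_balance_commands(cell_voltages: List[float],
                             threshold_v: float = 0.010) -> List[bool]:
    """Return, per cell, whether its bleed resistor should be switched on.

    A cell is bled when it sits more than threshold_v above the lowest cell
    (the 10 mV default is an illustrative assumption).
    """
    if not cell_voltages:
        return []
    v_min = min(cell_voltages)
    return [v - v_min > threshold_v for v in cell_voltages]


def simulate_balancing(cell_voltages: List[float], bleed_step_v: float = 0.002,
                       threshold_v: float = 0.010, max_iters: int = 1000) -> List[float]:
    """Toy simulation: each bleed step lowers a bled cell's voltage by bleed_step_v."""
    v = list(cell_voltages)
    for _ in range(max_iters):
        cmds = passive_balance_commands(v, threshold_v)
        if not any(cmds):
            break  # pack is within the balancing window
        v = [x - bleed_step_v if on else x for x, on in zip(v, cmds)]
    return v
```

Passive balancing wastes the excess energy as heat; active balancing, which shuttles charge between cells, is more efficient but requires additional converter hardware.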

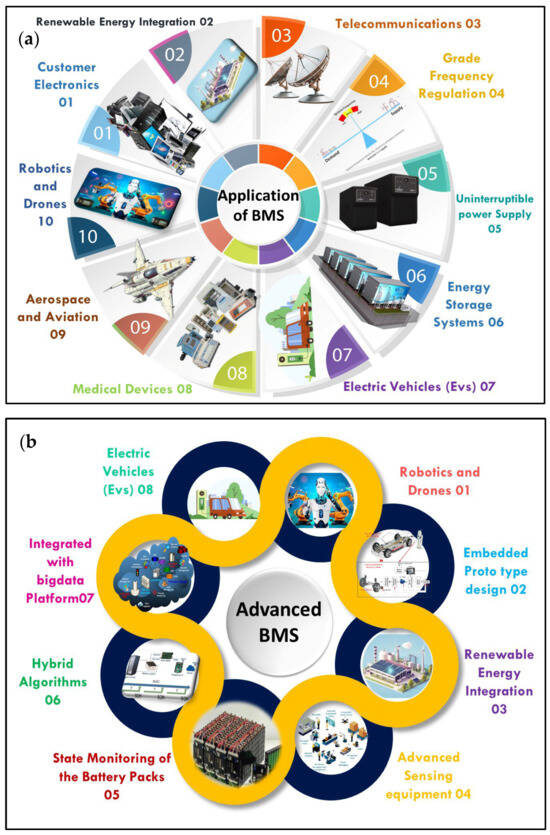

Figure 1 illustrates the roles a BMS plays and how it is evolving with new technology. As highlighted in Figure 1a, BMSs are used across industries such as electric vehicles, telecommunications, aerospace, and robotics to maintain safety, improve efficiency, and ensure reliability. Figure 1b shows how big data platforms, hybrid algorithms, and improved sensor technologies are advancing battery monitoring and control in future BMSs. Taken together, these panels illustrate the importance of the BMS in current energy and transportation systems and its trajectory toward data-driven, intelligent operation.

Figure 1.

Applications and technological enablers of Battery Management Systems. (a) General BMS applications across various industries such as consumer electronics, electric vehicles, and energy storage. (b) Key components and advancements in modern BMS, including hybrid algorithms, state monitoring, and integration with big data platforms.

3. AI Applications in Lithium-Ion Batteries

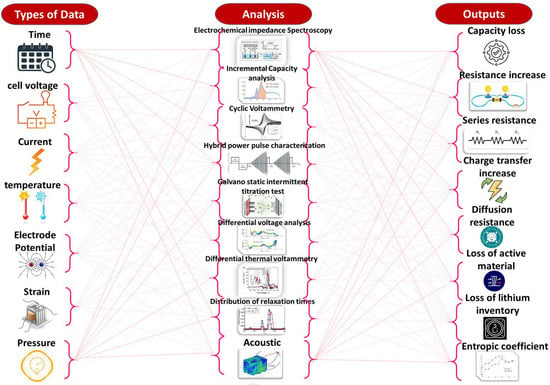

As Figure 2 illustrates, different kinds of data from lithium-ion batteries, such as voltage, current, temperature, and pressure, are analyzed with several methods to identify the battery’s state. These methods reveal degradation such as capacity fade, resistance growth, or material failure. These data become particularly valuable in AI-based battery systems: using the resulting health indicators, machine learning models can learn a battery’s aging pattern and forecast its future performance. AI-supported data analysis thus enables smart BMSs for electric vehicles, energy storage, and other applications that detect issues early, extend battery life, and keep operation safe and efficient.

Figure 2.

Use of on-board battery data, analysis techniques, and output information to support AI-driven diagnostics and health estimation in lithium-ion batteries.

3.1. AI-Powered Lithium-Ion Battery Management Systems

Artificial intelligence is playing an increasingly vital role in enhancing the functionality, safety, and longevity of lithium-ion batteries within EV applications. One of the most comprehensive assessments in this domain was conducted by Lipu et al. [16], who statistically analyzed 78 publications spanning from 2014 to 2023. Their work identified key research trends, leading contributors, and dominant algorithmic strategies, while outlining the strengths and limitations of various AI techniques applied in BMSs. Their analysis underscores the transformative potential of AI in boosting electric vehicle performance and battery lifecycle, thus aligning with broader goals of decarbonization and sustainable energy systems.

Yavas et al. [17] explored the capabilities of AI-based approaches for enhancing real-time monitoring of state of charge (SOC) and state of health (SOH), as well as for predicting faults and managing thermal events. Their findings demonstrate that AI-enabled BMS outperforms traditional systems by dynamically adapting charging protocols and reducing operational risks. Similarly, Pooyandeh et al. [18] proposed an AI-empowered digital twin framework that leverages a time-series generative adversarial network (TS-GAN) to synthesize accurate SOC profiles, showing improvements in energy efficiency, operational safety, and battery lifespan. Palanichamy et al. [19] introduced an AI-based BMS architecture incorporating X-ray computed tomography and electrochemical impedance spectroscopy to enable model-free predictions of SOC, SOH, and fault dynamics, enhancing fault detection sensitivity by 40% and reducing thermal risk.

Farman et al. [20] emphasized the importance of dynamic SOH and RUL estimation under real-world operating conditions. By integrating cloud connectivity and IoT-based sensing, their framework supports robust thermal management and fault diagnostics, promoting battery reliability and long-term sustainability. Ahwiadi and Wang [21] further advanced the prognostic capabilities of BMS through an AI-driven particle filter (AI-PF) algorithm that employs dynamic degeneracy detection and adaptive mutation to maintain particle diversity. Their method achieved up to a 33% reduction in root mean square error (RMSE) and RUL prediction errors as low as 4.94%, with improved computational efficiency suitable for real-time applications.
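For readers unfamiliar with particle-filter prognostics, the sketch below shows a standard sampling-importance-resampling (SIR) particle filter for RUL. It is not the AI-PF of Ahwiadi and Wang: the effective-sample-size check and the random-walk jitter are generic stand-ins for their degeneracy detection and adaptive mutation, and the single-exponential fade model C_k = c0·exp(−b·k), the prior range, and the noise levels are assumptions chosen for illustration.

```python
import numpy as np


def particle_filter_rul(capacity_obs, c0, eol_capacity, n_particles=2000,
                        meas_std=0.01, jitter_std=1e-5, seed=0):
    """SIR particle filter over the fade rate b in C_k = c0 * exp(-b * k).

    Returns (weighted-mean RUL in cycles, particles, weights).
    """
    rng = np.random.default_rng(seed)
    # Prior over the unknown fade rate (illustrative range)
    b = rng.uniform(1e-4, 5e-3, n_particles)
    weights = np.full(n_particles, 1.0 / n_particles)
    for k, c_meas in enumerate(capacity_obs, start=1):
        # Propagate: small random-walk jitter keeps particles diverse
        b = np.clip(b + rng.normal(0.0, jitter_std, n_particles), 1e-6, None)
        # Update: Gaussian likelihood of the measured capacity
        c_pred = c0 * np.exp(-b * k)
        weights *= np.exp(-0.5 * ((c_meas - c_pred) / meas_std) ** 2)
        weights += 1e-300          # guard against all weights underflowing
        weights /= weights.sum()
        # Degeneracy check via effective sample size; resample when it drops
        ess = 1.0 / np.sum(weights ** 2)
        if ess < n_particles / 2:
            idx = rng.choice(n_particles, size=n_particles, p=weights)
            b = b[idx]
            weights = np.full(n_particles, 1.0 / n_particles)
    k_now = len(capacity_obs)
    # Cycle at which each particle's trajectory reaches the EOL capacity
    k_eol = np.log(c0 / eol_capacity) / b
    rul = np.maximum(k_eol - k_now, 0.0)
    return float(np.average(rul, weights=weights)), b, weights
```

Because each particle yields its own EOL crossing, the weighted particle set also gives an RUL distribution, from which uncertainty bounds can be reported alongside the point estimate.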

Devendra et al. [22] explored machine learning regressors such as XGBoost, LightGBM, and SVM, highlighting the predictive power of ensemble models under variable load profiles. Reinforcement learning strategies have also gained traction; Suanpang and Jamjuntr [23] implemented a Q-learning-based BMS optimization protocol that improved energy efficiency by 15% and extended battery lifespan by 20%.
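The core of Q-learning-based optimization can be shown on a toy charging task. The environment below is entirely hypothetical and is not the protocol of Suanpang and Jamjuntr: the state is a discretized SOC level, action 0 charges slowly (+1 level), and action 1 charges fast (+2 levels) at the cost of an assumed degradation penalty. The agent learns, via the standard tabular update Q(s,a) ← Q(s,a) + α[r + γ·max Q(s′,·) − Q(s,a)], whether fast charging is worth its degradation cost.

```python
import random


def train_q_learning(n_levels=6, degradation_penalty=0.5, episodes=2000,
                     alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical charging task.

    State: SOC level 0..n_levels (n_levels = full, terminal).
    Actions: 0 = low current (+1 level), 1 = high current (+2 levels).
    Reward: -1 per step, minus degradation_penalty for high current.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_levels + 1)]
    for _ in range(episodes):
        s = 0
        while s < n_levels:
            # Epsilon-greedy action selection
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] >= q[s][1] else 1
            s_next = min(s + (2 if a == 1 else 1), n_levels)
            r = -1.0 - (degradation_penalty if a == 1 else 0.0)
            # Temporal-difference target (no bootstrap from the terminal state)
            target = r if s_next == n_levels else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
    return q


def greedy_policy(q):
    """Greedy action for every non-terminal SOC level."""
    return [0 if row[0] >= row[1] else 1 for row in q[:-1]]
```

With a small penalty the learned policy starts with fast charging, while with a large penalty it prefers slow charging; practical RL-based BMS controllers replace this table with function approximation and physically grounded rewards (e.g., temperature and lithium-plating constraints).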

In the broader context of energy systems, Razmjoo et al. [24] underscored the critical role of energy storage systems (ESS) in enabling large-scale renewable energy integration, highlighting the synergy between battery storage, pumped hydro, and AI optimization for grid flexibility and emission reduction. Their study emphasized that widespread ESS adoption hinges not only on technological innovation but also on supportive regulatory frameworks, strategic financial incentives, and collaborative policy actions.

Miraftabzadeh et al. [25] reviewed hybrid AI models, including artificial neural networks (ANNs), fuzzy logic, and optimization algorithms that deliver high-accuracy estimations of battery health and power quality. Deep learning and reinforcement learning were identified as underutilized yet promising approaches for advancing adaptive control and facilitating renewable integration within electric mobility ecosystems.

Badran and Toha [26] provided a broader perspective by analyzing supervised machine learning techniques across classification and regression tasks in BMS. They emphasized the increasing relevance of IoT, cloud computing, and edge control modules for real-time system optimization, while pointing to persistent challenges such as inadequate modeling of environmental influences and the need for balanced cloud-onboard computation strategies.

Finally, Acharya et al. [27] examined how AI and machine learning are transforming the battery value chain from material discovery to end-of-life recycling. They illustrated how AI tools are accelerating the identification of high-energy-density electrode materials and electrolyte formulations, while enabling sophisticated SOH analysis and imaging-based diagnostics. Furthermore, their work explored AI-driven automation in manufacturing and blockchain-enabled traceability, contributing to enhanced sustainability in battery recycling.

3.2. Artificial Intelligence for Lithium-Ion Battery Optimization

Artificial intelligence has emerged as a pivotal tool in optimizing lithium-ion battery performance, with diverse applications spanning state estimation, material discovery, system control, and real-time prediction. Among recent developments, Oyucu et al. [28] demonstrated the efficacy of machine learning models, particularly LightGBM, in predicting discharge capacity with high precision (MAE: 0.103, MSE: 0.019, R²: 0.887). Their integration of explainable AI (XAI) using SHAP analysis identified temperature as the most influential factor, directly correlating elevated thermal conditions with capacity loss. These insights offer valuable direction for improving charge cycles, pre-empting failure, and extending battery lifespan, though real-time deployment continues to face hurdles in computational efficiency and system integration.
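Because the studies in this section report accuracy using MAE, MSE, RMSE, and R², a short reference implementation of these standard definitions may help when comparing results. The sketch below uses plain Python; the function name is our own.

```python
import math
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Standard regression error metrics used to compare battery models."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n          # mean absolute error
    mse = sum(e * e for e in errors) / n           # mean squared error
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    ss_res = sum(e * e for e in errors)
    # Coefficient of determination: 1 - residual / total sum of squares
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    return {"MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse), "R2": r2}
```

Note that MAE and RMSE carry the units of the target (e.g., Ah or %), so values are only comparable across studies that predict the same quantity on the same scale, whereas R² is dimensionless but depends on the variance of the test set.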

Expanding AI’s influence beyond operational diagnostics, its application in materials optimization presents a transformative path forward. Feng [29] demonstrated a data-driven workflow for rapidly screening electrode material candidates and predicting optimal compositions, illustrating how such methods can bypass manual trial-and-error processes and systematically guide material selection. Similarly, Alzamer et al. [30] highlighted AI’s contribution to electrolyte design, combining machine learning with automated text mining to map composition–structure–property relationships in electrolytes; this approach addresses data scarcity while enabling the exploration of novel candidates through automated knowledge extraction. While these studies do not report quantitative benchmarks, they exemplify how AI frameworks can make materials discovery more systematic and efficient, supporting sustainable energy storage innovations and broader advancements in materials science [31].

Advancements in time-series modeling have also enhanced the accuracy of SOH predictions. Wang et al. [32] proposed an optimized Bi-directional LSTM (BiLSTM) model trained using an adaptive convergence-factor-enhanced Gold Rush Optimizer (GRO), achieving superior SOH and capacity fade predictions compared to standard feedforward, LSTM, and other metaheuristic models. These improvements are attributed to enhanced global-local balance in parameter optimization, enabling high-fidelity forecasting of battery degradation behavior.

In terms of real-time applicability, Shahriar et al. [33] developed a hybrid CNN-GRU-LSTM model that achieved SOC estimation errors as low as 0.41% across varying temperatures, with minimal memory requirements and fast processing times (0.000113 s/sample). By combining the long-term dependency modeling of LSTM with the efficiency of GRU and the feature extraction capability of CNNs, their system delivers high accuracy with computational efficiency suitable for onboard BMS integration. Additionally, Mumtaz et al. [34] validated the effectiveness of unscented Kalman filters (UKF) for SOC and SOH estimation under dynamic EV operating conditions, demonstrating SOC error reductions to below 1% while maintaining cloud-integrated IoT connectivity for robust tracking and adaptive control.
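To show how a UKF fuses coulomb counting with voltage measurements, the following is a minimal scalar sketch, not the implementation of Mumtaz et al. It assumes a one-state model (SOC only, no RC polarization state), a hypothetical monotonic OCV curve, a known ohmic resistance, and illustrative noise variances; sigma-point weights use α = 1, β = 0, κ = 2.

```python
import math


def ocv(soc: float) -> float:
    # Hypothetical monotonic, nonlinear OCV curve [V]
    return 3.0 + 1.2 * soc - 0.2 * (1.0 - soc) ** 3


def ukf_soc(currents, voltages, dt_s, capacity_ah, r0,
            soc0=0.5, p0=0.1, q=1e-7, r=1e-4):
    """Scalar unscented Kalman filter for SOC (sketch).

    Process model: coulomb counting. Measurement model: V = OCV(SOC) - I*R0.
    Positive current = discharge. Returns the SOC estimate after each sample.
    """
    lam = 2.0                                   # n = 1, alpha = 1, kappa = 2
    wm = [lam / (1 + lam), 0.5 / (1 + lam), 0.5 / (1 + lam)]
    wc = wm                                     # beta = 0, so covariance weights equal mean weights
    x, p = soc0, p0
    estimates = []
    for i_a, v_meas in zip(currents, voltages):
        # Predict: propagate sigma points through coulomb counting
        s = math.sqrt((1 + lam) * p)
        sig = [xi - i_a * dt_s / (capacity_ah * 3600.0) for xi in (x, x + s, x - s)]
        x_pred = sum(w * xi for w, xi in zip(wm, sig))
        p_pred = sum(w * (xi - x_pred) ** 2 for w, xi in zip(wc, sig)) + q
        # Redraw sigma points and pass them through the measurement model
        s = math.sqrt((1 + lam) * p_pred)
        sig = [x_pred, x_pred + s, x_pred - s]
        z_sig = [ocv(xi) - i_a * r0 for xi in sig]
        z_pred = sum(w * zi for w, zi in zip(wm, z_sig))
        p_zz = sum(w * (zi - z_pred) ** 2 for w, zi in zip(wc, z_sig)) + r
        p_xz = sum(w * (xi - x_pred) * (zi - z_pred) for w, xi, zi in zip(wc, sig, z_sig))
        # Update with the Kalman gain
        k_gain = p_xz / p_zz
        x = x_pred + k_gain * (v_meas - z_pred)
        p = p_pred - k_gain * p_zz * k_gain
        estimates.append(x)
    return estimates
```

Starting from a deliberately wrong initial SOC, the voltage updates pull the estimate toward the true value within a few samples, after which coulomb counting dominates; practical filters add RC states and temperature-dependent parameters.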

At the system level, Arévalo et al. [35] conducted a systematic review of AI-integrated energy management systems (EMS) in EVs, showing improvements in predictive maintenance, adaptive route planning, and range extension (by 12–18%) through real-time optimization. However, they emphasized the need for improved cybersecurity, interoperability, and smart grid integration to ensure safe and scalable deployment. Ghazali et al. [36] echoed these concerns, identifying gaps in machine learning (ML) integration, lightweight algorithm design, and sensor cost reduction for real-time safety functions such as state of power (SOP) and state of energy (SOE) monitoring. Their review calls for interdisciplinary efforts to enhance algorithm adaptability across diverse environmental and user-driven conditions.

Finally, Challoob et al. [37] explored the convergence of lithium-ion batteries and supercapacitors through bidirectional converters as a promising hybrid powertrain configuration. Despite marked progress in thermal stability, internal resistance estimation, and battery longevity, persistent limitations remain in system-level modeling, solar charging integration, and efficient control strategies. Addressing these challenges will be key to realizing the full potential of AI-enhanced BMS in enabling cost-effective, safe, and environmentally sustainable electric mobility solutions.
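One common way to coordinate a battery and a supercapacitor in such a hybrid powertrain is a filter-based power split: the low-frequency part of the demand goes to the battery and the fast transients go to the supercapacitor. The sketch below illustrates this heuristic under stated assumptions; it is not a method from [37], the time constant is hypothetical, and converter losses and state limits are ignored.

```python
from typing import List, Tuple


def split_power(demand_w: List[float], dt_s: float,
                tau_s: float) -> Tuple[List[float], List[float]]:
    """Split a power demand with a first-order low-pass filter.

    Battery: smoothed (low-frequency) component.
    Supercapacitor: remaining high-frequency component.
    """
    alpha = dt_s / (tau_s + dt_s)            # discrete low-pass coefficient
    battery, supercap = [], []
    p_lp = demand_w[0] if demand_w else 0.0  # start the filter at the first sample
    for p in demand_w:
        p_lp += alpha * (p - p_lp)           # slow component -> battery
        battery.append(p_lp)
        supercap.append(p - p_lp)            # fast residual -> supercapacitor
    return battery, supercap
```

For a step in demand from 1 kW to 5 kW with a 4 s time constant and 1 s sampling, the battery initially picks up only 800 W of the increase while the supercapacitor supplies the remaining 3.2 kW, which shields the battery from current spikes.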

3.3. AI in Lithium-Ion Battery Life Prediction

Accurately predicting the RUL of lithium-ion batteries is critical for optimizing performance, safety, and cost-efficiency in EV applications. Recent advances in artificial intelligence, particularly in deep learning and ensemble-based regression, have significantly enhanced battery life forecasting capabilities. Zhang et al. [38] explored the use of deep transfer learning, employing a VGG16-based feature extraction framework to model capacity degradation. Their study revealed that the variant they denote Method II, which incorporates comprehensive voltage and capacity data, most effectively captured aging dynamics across varying operational conditions. However, challenges such as temperature sensitivity and classification ambiguities remain, reinforcing the need for more robust feature integration techniques, including differential voltage and electrochemical impedance spectroscopy data.
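Whatever model produces the capacity forecast, RUL is usually read off that forecast as the number of cycles until SOH first crosses an end-of-life (EOL) threshold. The sketch below assumes the common capacity-based definition SOH = C/C_nominal and an 80% EOL threshold; both are conventions chosen for illustration, not values taken from the studies above.

```python
from typing import Optional, Sequence


def soh(capacity_ah: float, nominal_ah: float) -> float:
    """Capacity-based state of health: current capacity as a fraction of nominal."""
    return capacity_ah / nominal_ah


def rul_from_trajectory(predicted_capacity: Sequence[float], current_cycle: int,
                        nominal_ah: float, eol_soh: float = 0.8) -> Optional[int]:
    """Cycles remaining until the forecast SOH first falls to eol_soh.

    predicted_capacity[i] is the (forecast) capacity at cycle i.
    Returns None if EOL is not reached within the forecast horizon.
    """
    for cycle in range(current_cycle, len(predicted_capacity)):
        if soh(predicted_capacity[cycle], nominal_ah) <= eol_soh:
            return cycle - current_cycle
    return None
```

For a linear fade of 3 mAh per cycle on a 2.0 Ah cell, SOH first drops to 80% at cycle 134, so at cycle 50 the remaining useful life is 84 cycles.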

In parallel, Sravanthi and Chandra Sekhar [39] performed a comparative analysis of machine learning regressors for RUL prediction using real-world datasets. Their findings showed that the Bagging Regressor outperformed other methods such as Gradient Boosting, K-Nearest Neighbors, and Extra Trees, achieving a near-perfect R² (0.999) with minimal errors (MSE: 14.307, RMSE: 3.782, MAE: 2.099). These results underscore the practical viability of ensemble models for efficient and precise RUL estimation in battery health management systems, with hybrid learning approaches identified as a promising avenue for further refinement.
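Bagging (bootstrap aggregating) trains many base learners on bootstrap resamples of the training set and averages their predictions, which reduces variance. Bagging regressors typically use decision trees as base learners; in the sketch below, low-degree polynomial fits stand in for trees purely to keep the example short. The class name and defaults are hypothetical, and this is not the configuration used in [39].

```python
import numpy as np


class BaggedPolyRegressor:
    """Bagging with polynomial base learners (a simplified stand-in for trees)."""

    def __init__(self, n_estimators=25, degree=2, seed=0):
        self.n_estimators = n_estimators
        self.degree = degree
        self.rng = np.random.default_rng(seed)
        self.models = []

    def fit(self, x, y):
        x, y = np.asarray(x, float), np.asarray(y, float)
        self.models = []
        for _ in range(self.n_estimators):
            # Bootstrap sample: draw len(x) indices with replacement
            idx = self.rng.integers(0, len(x), len(x))
            self.models.append(np.polyfit(x[idx], y[idx], self.degree))
        return self

    def predict(self, x):
        x = np.asarray(x, float)
        # Aggregate by averaging all base learners' predictions
        return np.mean([np.polyval(c, x) for c in self.models], axis=0)
```

In a capacity-versus-cycle setting, x would be the cycle index (or engineered health features) and y the measured capacity; the spread of the individual base-learner predictions also gives a rough indication of model uncertainty.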

Pushing the boundaries of sequence modeling, Liu et al. [40] introduced the PatchFormer architecture, an advanced patch-based Transformer framework featuring dual attention mechanisms. The Dual Patch-wise Attention Network (DPAN) captures global degradation trends, while the Feature-wise Attention Network (FAN) extracts temporal correlations and short-term anomalies, including capacity regeneration. The model demonstrated superior accuracy across public datasets, offering a scalable, open-source solution for high-fidelity battery life forecasting in real-world deployments.
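Patch-based Transformers operate on short subsequences ("patches") of the input rather than on individual time steps. The sketch below shows only the generic ingredients, patch tokenization and single-head scaled dot-product attention across patches, using identity query, key, and value projections as a simplifying assumption. It does not reproduce the DPAN and FAN modules of PatchFormer; the function names and patch settings are hypothetical.

```python
import numpy as np


def make_patches(series, patch_len, stride):
    """Split a 1-D series (e.g., per-cycle capacity) into patches of length
    patch_len taken every `stride` steps; each patch becomes one token."""
    x = np.asarray(series, dtype=float)
    if len(x) < patch_len:
        raise ValueError("series shorter than one patch")
    n_patches = (len(x) - patch_len) // stride + 1
    return np.stack([x[i * stride: i * stride + patch_len] for i in range(n_patches)])


def patch_self_attention(patches):
    """Single-head scaled dot-product attention across patches.

    Identity Q/K/V projections are a simplification; real models learn them.
    Returns (attended patches, attention weights).
    """
    d = patches.shape[1]
    scores = patches @ patches.T / np.sqrt(d)
    scores -= scores.max(axis=1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)  # softmax over patches
    return weights @ patches, weights
```

Patching shortens the attention sequence by roughly a factor of the stride, which reduces computation and lets each token summarize a local degradation segment, while attention relates segments across the whole history.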

Complementing these algorithmic innovations, Shaik et al. [41] provided a comprehensive review of advanced BMS frameworks, highlighting their importance in achieving sustainable development goals through intelligent control over charge/discharge cycles, thermal regulation, and SOC estimation. The review identified key technical barriers in current systems and emphasized the necessity for integrated AI methods to bridge the gap between high-performance forecasting and practical deployment.

Khawaja et al. [42] further examined a range of machine learning algorithms for SOC and SOH estimation. Their study found that random forest regression consistently outperformed traditional models, including linear regression, support vector machines, and gradient boosting, achieving R² values of 0.9999 and low error metrics (MAE: 0.0035, RMSE: 0.0097). While the method proved effective for smaller datasets, the authors recommended future integration of deep learning or federated learning to accommodate larger-scale applications and complex battery behaviors. These findings reaffirm the importance of AI-driven approaches in advancing predictive maintenance and safety strategies for next-generation battery management systems.

3.4. Lithium-Ion Battery Energy Management with Machine Learning

Machine learning is playing an increasingly transformative role in the optimization of lithium-ion battery energy systems, with applications extending across design, manufacturing, real-time control, and lifecycle prediction. Valizadeh and Amirhosseini [43] provided a comprehensive overview of ML’s potential in enhancing battery research and development through efficient exploration of chemical and operational parameters. Their work emphasized key challenges such as data sparsity, computational complexity, and model interpretability, while proposing hybrid strategies, transfer learning, and self-improving algorithms as potential solutions. By bridging first-principle modeling with data-driven approaches, their framework offers a roadmap for scalable, cost-effective, and environmentally sustainable battery innovations.

Complementing this broader perspective, Ardeshiri et al. [44] reviewed the integration of ML into BMS and highlighted its impact on improving state estimation, fault detection, and operational monitoring in electric vehicle and grid applications. Their analysis revealed that various ML techniques, including supervised learning, neural networks, and ensemble models, exhibit different strengths depending on operational requirements. The authors underscored the need to balance accuracy, computational cost, and implementation complexity to achieve effective, adaptable battery control under real-world constraints.

The importance of explainability in battery ML models is increasingly recognized, especially as these models become central to safety-critical systems. Niri et al. [45] explored the application of explainable machine learning (XML) across the battery value chain, from manufacturing to state estimation and performance monitoring. Although feature importance analysis dominates current XML use cases, the study identified a lack of adoption for advanced methods such as Shapley values and counterfactual explanations and their limited use in neural network-based models. These insights underscore the urgent need to develop battery-specific XML frameworks and benchmark datasets to ensure trust, transparency, and informed decision-making in increasingly complex battery systems.
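Shapley values attribute a model's prediction to its input features by averaging each feature's marginal contribution over all coalitions of the other features. The sketch below computes them exactly by enumeration, which is feasible only for a handful of features; SHAP-style tools approximate the same quantity for larger models. Filling absent features with a baseline value is one common convention assumed here, and the example models in the test are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values by enumerating all feature coalitions.

    Features outside a coalition take their baseline value. Cost grows as 2^n.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi
```

The attributions always sum to model(x) − model(baseline) (the efficiency property), so, for instance, a capacity-fade model driven by temperature, C-rate, and cycle count can be decomposed into per-feature contributions that add up exactly to the predicted fade.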

Focusing on RUL estimation, Jin et al. [46] reported that deep neural networks (DNNs) have emerged as the most effective ML architecture for modeling nonlinear degradation behavior and predicting battery life with high generalization accuracy. Their review noted the growing role of RUL prediction in enabling predictive maintenance and optimizing battery utilization across EV and grid storage platforms, while also highlighting challenges in real-world implementation due to model complexity and data variability.

Together, these studies reveal that the synergy between advanced ML techniques, explainability tools, and robust BMS design is central to improving energy efficiency, operational safety, and lifecycle sustainability in lithium-ion battery systems. Continued research into tailored ML frameworks, particularly those capable of handling multi-scale, time-series, and hybrid data, will be essential in driving forward the next generation of smart, adaptive energy storage technologies.

3.5. Intelligent Systems for Lithium-Ion Batteries

The development of intelligent systems for LIBs is vital for enhancing safety, reliability, and digital adaptability across electric mobility and stationary energy storage applications. As thermal events remain one of the most critical barriers to widespread LIB adoption, Li et al. [47] proposed a multi-layered safety framework that integrates early detection, thermal management, and fire suppression strategies. Their study emphasized the synergistic effect of combining real-time monitoring technologies such as optical fiber sensors and ultrasonic imaging with advanced thermal management systems. Water-based fire suppression agents were identified as particularly effective for LIB incidents, and the proposed strategy encompasses both passive and active safety mechanisms, including thermal runaway characterization, intelligent detection, and adaptive cooling [48]. This framework forms a foundational guideline for designing safer battery systems capable of preventing catastrophic failures while ensuring performance continuity.
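Early detection layers in such frameworks often start from simple rules on temperature and its rate of rise before more advanced sensing is applied. The sketch below shows such a rule-based alarm under stated assumptions: the 60 °C limit, the 1 °C/s rate limit, and the five-sample window are illustrative values, not thresholds from [47].

```python
from typing import List, Optional


def thermal_alarm(temps_c: List[float], dt_s: float,
                  max_temp_c: float = 60.0, max_rate_c_per_s: float = 1.0,
                  window: int = 5) -> Optional[int]:
    """Return the first sample index that triggers an alarm, or None.

    Alarm if the temperature reaches max_temp_c, or if the average heating
    rate over the last `window` samples reaches max_rate_c_per_s.
    Thresholds are illustrative assumptions.
    """
    for k, t in enumerate(temps_c):
        if t >= max_temp_c:
            return k
        if k >= window:
            rate = (t - temps_c[k - window]) / (window * dt_s)
            if rate >= max_rate_c_per_s:
                return k
    return None
```

Monitoring the heating rate lets the alarm fire while the absolute temperature is still moderate; in a trace that is steady at 25 °C and then rises by 2 °C/s, the rate rule triggers at about 31 °C, well before the absolute limit.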

In parallel, the convergence of electrochemical modeling and artificial intelligence is redefining the landscape of smart battery systems. Amiri et al. [49] reviewed the integration of physics-based models with machine learning algorithms to develop hybrid modeling architectures that combine the mechanistic accuracy of fundamental battery equations with the adaptability and speed of data-driven techniques. These hybrid models demonstrate significant promise across the battery lifecycle, including design optimization, performance estimation, fault diagnosis, and operational control. By merging physics-informed simulations with machine learning’s pattern recognition capabilities, such frameworks address the limitations of standalone approaches, offering both explainability and computational efficiency. Their application in digital twin environments paves the way for real-time, intelligent battery systems capable of continuous monitoring, predictive maintenance, and lifecycle optimization.
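One widely used hybridization pattern is residual learning: a physics-based model provides the baseline prediction, and a data-driven model learns only the discrepancy between that baseline and the measurements. The sketch below illustrates this pattern with a simplified ECM voltage baseline and a linear least-squares residual model on hypothetical features (current, temperature, and their product). It is one of several possible architectures and not the specific approach reviewed by Amiri et al.

```python
import numpy as np


def physics_voltage(soc, current, r0=0.015):
    """Simplified physics/ECM baseline: linear OCV minus ohmic drop (assumed)."""
    return 3.0 + 1.2 * np.asarray(soc, float) - np.asarray(current, float) * r0


class ResidualHybridModel:
    """Physics baseline plus a data-driven residual correction (linear least squares)."""

    @staticmethod
    def _features(current, temp):
        current, temp = np.asarray(current, float), np.asarray(temp, float)
        # Hypothetical residual features: bias, current, temperature, interaction
        return np.column_stack([np.ones_like(current), current, temp, current * temp])

    def fit(self, soc, current, temp, v_measured):
        # Learn only what the physics model fails to explain
        residual = np.asarray(v_measured, float) - physics_voltage(soc, current)
        self.coef_, *_ = np.linalg.lstsq(self._features(current, temp), residual, rcond=None)
        return self

    def predict(self, soc, current, temp):
        return physics_voltage(soc, current) + self._features(current, temp) @ self.coef_
```

Because the physics baseline already captures the dominant behavior, the data-driven part can stay small and interpretable, and predictions degrade gracefully toward the physics model when the learned correction is unreliable.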

Together, these intelligent approaches, ranging from safety-oriented system design to hybrid digital architectures, illustrate the emerging sophistication of next-generation LIB technologies. As battery systems grow more complex and their applications more critical, AI-enhanced safety and hybrid modeling frameworks will be central to enabling smart, resilient, and scalable energy storage solutions.

3.6. AI-Based Predictive Analytics for Lithium-Ion Battery Systems

Artificial intelligence is reshaping the landscape of EV technology through its application in predictive analytics, enabling more intelligent, adaptive, and efficient battery systems. Cavus et al. [50] emphasized that AI-driven approaches leveraging machine learning, deep neural networks, and reinforcement learning outperform conventional methods in predicting critical battery states such as SOC and SOH, as well as in managing thermal performance. These systems enable dynamic energy optimization, enhance regenerative braking efficiency, and improve overall vehicle control across varying operational environments. Furthermore, the integration of IoT connectivity and big data analytics expands these capabilities to encompass fleet-level optimization and predictive maintenance. Nonetheless, challenges persist regarding real-time processing limitations, interpretability of AI models in safety-critical applications, and the imperative for robust cybersecurity measures. Addressing these constraints will be essential for deploying scalable, reliable AI architectures across heterogeneous EV platforms.

Zhao and Burke [51] extended this perspective by examining how AI enhances battery diagnostics through improved precision in SOH assessments and RUL predictions. Their study highlighted advanced machine learning strategies, including end-to-end architectures, federated learning, and multimodal time-series analysis that facilitate decentralized, privacy-preserving diagnostics while adapting to changing usage patterns. These developments contribute not only to BMS accuracy and responsiveness but also support environmental sustainability by enabling battery lifespan extension and efficient second-life deployment. Moving forward, future research should focus on self-learning algorithms capable of real-time model updating, the incorporation of diverse operational datasets, and enhanced integration of multimodal sensor data to ensure robust generalization across varying battery chemistries and driving conditions.
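Federated learning keeps raw battery data on each client (e.g., a vehicle or a storage site) and shares only model parameters. The sketch below shows federated averaging (FedAvg) on a linear model trained by local gradient descent; the linear model, learning rate, epoch and round counts, and the synthetic client data in the test are illustrative assumptions rather than the strategies surveyed by Zhao and Burke.

```python
import numpy as np


def local_update(w, x, y, lr=0.1, epochs=50):
    """Client-side gradient descent on a linear model with squared loss."""
    w = w.copy()
    for _ in range(epochs):
        grad = 2.0 * x.T @ (x @ w - y) / len(y)
        w -= lr * grad
    return w


def fed_avg(clients, n_features, rounds=20):
    """Federated averaging over (features, targets) pairs held by each client.

    Each round, every client trains locally starting from the global weights;
    the server averages the returned weights, weighted by client sample count.
    Only weights are exchanged; raw data never leave the clients.
    """
    w_global = np.zeros(n_features)
    total = sum(len(y) for _, y in clients)
    for _ in range(rounds):
        updates = [local_update(w_global, x, y) * (len(y) / total) for x, y in clients]
        w_global = np.sum(updates, axis=0)
    return w_global
```

In practice, clients differ in chemistry, climate, and usage, so their data are not identically distributed; this heterogeneity is a central difficulty for federated battery diagnostics and motivates personalized or clustered variants of FedAvg.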

Collectively, these predictive analytics innovations signal a transformative shift in battery intelligence, where AI not only improves system efficiency and safety but also serves as a catalyst for broader transportation electrification. The convergence of real-time diagnostics, adaptive control, and lifecycle management positions AI as a central enabler of next-generation battery technologies.

3.7. AI in Renewable Energy Storage and Lithium-Ion Batteries

Artificial intelligence is emerging as a powerful enabler for enhancing sustainability across the LIB lifecycle, from environmental impact assessment to end-of-life recovery and recycling. Chen et al. [52] proposed an AI-driven framework for life cycle assessment (LCA) of LIBs, demonstrating how machine learning and pattern recognition techniques can significantly improve the speed, scalability, and granularity of sustainability evaluations. Their SWOT analysis emphasized the strengths of AI in automating data processing and predictive modeling while identifying critical challenges such as data standardization, transparency, and methodological robustness. The study highlights that interdisciplinary collaboration between battery experts and AI specialists is essential to develop unified protocols that ensure accurate and interpretable results. These efforts are crucial for aligning battery production and deployment with global environmental targets, particularly as electric mobility and renewable energy storage systems expand.

End-of-life management remains a pressing concern for LIB sustainability, particularly in the context of ternary cathode recovery. Ren et al. [15] provided a comprehensive analysis of recycling technologies, including mechanical, pyrometallurgical, hydrometallurgical, biotechnological, and direct recovery methods. Despite recent improvements in material purity and process efficiency, challenges such as complex cell architectures and energy-intensive processes persist. The integration of AI into recycling workflows offers promising solutions ranging from process optimization and real-time monitoring to intelligent sorting and predictive control, enabling more efficient and scalable recycling systems. Moreover, AI-guided design-for-recyclability strategies and automated disassembly systems could further advance circular economy initiatives by improving material recovery rates and reducing environmental impact.

Together, these developments underscore the transformative potential of AI in driving sustainable innovation across the LIB lifecycle. From upstream production to downstream recycling, intelligent systems will be pivotal in shaping environmentally responsible energy storage technologies and supporting long-term resource security in the electrification era.

Artificial intelligence is playing a pivotal role in transforming BMS for electric vehicles by enabling precise and adaptive control over energy storage performance. Mamidi et al. [53] highlighted that AI-enhanced BMS platforms significantly advance the real-time estimation of key battery metrics, namely SOC and SOH, as well as fault diagnostics, through intelligent analysis of charging behavior, current flow dynamics, and degradation signatures. These systems not only improve the accuracy of internal state predictions but also enhance thermal management and early fault detection capabilities, reducing safety risks and prolonging battery service life.

The integration of AI into BMS architecture marks a critical evolution in addressing both technical and environmental challenges associated with lithium-ion battery systems. By continuously optimizing battery usage through learning-based models, these intelligent systems support sustainable electric mobility while aligning with broader objectives in energy efficiency and environmental impact mitigation. As the demand for high-performance, scalable, and environmentally responsible energy storage continues to grow, AI-driven energy management frameworks are becoming indispensable components of next-generation EV platforms.

3.8. AI Techniques for Lithium-Ion Battery System Modeling

Artificial intelligence techniques have become essential for modeling lithium-ion battery behavior, particularly for predicting aging and estimating SOC under real-world conditions. Mayemba et al. [54] demonstrated that machine learning models, including extreme learning machine-inspired networks and encoder-coupled architectures, achieve high predictive accuracy for capacity fade, with RMSEs as low as 1.3% and 2.7% across multiple datasets. These models incorporate battery science fundamentals into the input space and have been validated against data reflecting stressors such as temperature fluctuation, calendar aging, and dynamic load profiles. Enabled by unified stress-factor zoning and temporal integration techniques, their ability to generalize across heterogeneous aging scenarios surpasses that of commercial empirical models, and the approach offers a versatile basis for extending prediction to more nuanced degradation indicators such as internal resistance growth and electrode material loss.
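To make the extreme learning machine (ELM) idea concrete, the following is a minimal sketch, assuming a single-hidden-layer ELM in plain Python: the hidden weights are drawn at random and frozen, and only the output layer is solved by ridge-regularized least squares. The class name `ELMRegressor`, the hidden-layer size, the ridge value, and the synthetic fade curve are illustrative assumptions, not the architecture or data used by Mayemba et al. [54].

```python
import math
import random


def solve(a, b):
    """Solve the linear system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


class ELMRegressor:
    """Extreme learning machine: random frozen hidden layer, least-squares output layer."""

    def __init__(self, n_inputs, n_hidden=10, ridge=1e-6, seed=0):
        rng = random.Random(seed)
        self.w = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.ridge = ridge
        self.beta = None

    def _hidden(self, x):
        # tanh activations plus a constant 1.0 feature that acts as the output bias
        acts = [math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b) for w, b in zip(self.w, self.b)]
        return acts + [1.0]

    def fit(self, xs, ys):
        hs = [self._hidden(x) for x in xs]
        k = len(hs[0])
        # Ridge-regularized normal equations: (H^T H + lambda * I) beta = H^T y
        hth = [[sum(h[i] * h[j] for h in hs) + (self.ridge if i == j else 0.0)
                for j in range(k)] for i in range(k)]
        hty = [sum(h[i] * y for h, y in zip(hs, ys)) for i in range(k)]
        self.beta = solve(hth, hty)
        return self

    def predict(self, x):
        return sum(bi * hi for bi, hi in zip(self.beta, self._hidden(x)))


def rmse(pred, true):
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))


# Synthetic (hypothetical) example: normalized capacity versus normalized cycle index
cycles = [i / 50 for i in range(51)]
capacity = [1.0 - 0.15 * c - 0.05 * c * c for c in cycles]
model = ELMRegressor(n_inputs=1).fit([[c] for c in cycles], capacity)
preds = [model.predict([c]) for c in cycles]
print(f"training RMSE: {100 * rmse(preds, capacity):.3f}% of nominal capacity")
```

Because only the output weights are trained, fitting reduces to one small linear solve, which is what makes ELM-style models attractive when retraining must be cheap.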

Further enhancing battery model adaptability, Wang et al. [55] proposed a temperature-adaptive neural network framework for SOC estimation that dynamically selects between multi-layer neural networks (MNN), long short-term memory (LSTM), and gated recurrent unit (GRU) architectures based on real-time thermal conditions. Their GRU-based model achieved the lowest mean absolute error (2.15%), outperforming traditional approaches by over 50% during validation across simulated driving profiles and four distinct temperature ranges. By integrating temperature-dependent voltage, current, and temporal features, the model addresses critical challenges in battery state estimation, particularly under thermal stress. Moreover, the planned deployment of the system on edge computing platforms such as NVIDIA Jetson points to its potential for real-time SOC monitoring in applications ranging from electric vehicles to unmanned aerial systems.
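The temperature-based model selection described above can be sketched as a simple dispatcher, assuming fixed temperature bands. The band boundaries, the assignment of model families to bands, and the stand-in models are all hypothetical; the study does not specify them here, and in practice each entry would be a trained network.

```python
from typing import Callable, Dict, Sequence

# Hypothetical temperature bands (deg C). The study selects among MNN, LSTM and
# GRU models from real-time thermal conditions; these boundaries and the
# band-to-model assignment are illustrative only.
BANDS = [(-20.0, 0.0, "GRU"), (0.0, 25.0, "LSTM"), (25.0, 50.0, "MNN")]


def select_model(temp_c: float) -> str:
    """Return the model family assigned to the band containing temp_c."""
    for lo, hi, name in BANDS:
        if lo <= temp_c < hi:
            return name
    raise ValueError(f"temperature {temp_c} degC is outside the supported range")


def estimate_soc(window: Sequence[float], temp_c: float,
                 models: Dict[str, Callable[[Sequence[float]], float]]) -> float:
    """Dispatch a feature window (voltage, current, time features) to the selected model."""
    return models[select_model(temp_c)](window)


def mae(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean absolute error, the metric used to compare the candidate models."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


# Stand-in models returning constants; real deployments would load trained networks
models = {"GRU": lambda w: 0.60, "LSTM": lambda w: 0.62, "MNN": lambda w: 0.58}
print(estimate_soc([3.7, -1.2, 0.0], temp_c=-5.0, models=models))
```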

Together, these developments underscore the growing effectiveness of AI-driven modeling frameworks in capturing the complex, time-dependent behaviors of lithium-ion batteries. By integrating battery physics with intelligent architectures, these models contribute to safer, more efficient, and more adaptive energy storage systems suitable for a wide range of operational environments.

3.9. Intelligent Lithium-Ion Battery Management for Enhanced Performance

Expanding the role of intelligent energy management beyond traditional electric vehicle platforms, recent advancements demonstrate the potential of hybrid power systems that integrate lithium-ion batteries with alternative energy sources for improved dynamic performance. Elkerdany et al. [56] investigated a hybrid propulsion system for electric unmanned aerial vehicles (UAVs) combining polymer membrane fuel cells with Li-ion batteries, managed through an intelligent control architecture employing Fuzzy Logic Control (FLC) and Adaptive Neuro-Fuzzy Inference System (ANFIS) algorithms. Through detailed simulations across multiple flight modes, the study revealed that both control methods effectively regulated energy distribution between power sources while exhibiting distinct dynamic response profiles under varying operational demands.
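As an illustration of how a fuzzy controller can split power between a fuel cell and a battery, the following is a minimal zero-order Sugeno sketch, assuming battery SOC as the only input and three hypothetical rules; the membership breakpoints and rule outputs are illustrative, not those of Elkerdany et al. [56]. An ANFIS controller would instead learn the membership parameters and rule consequents from data, and practical controllers usually also take the power demand as an input.

```python
def mu_low(soc: float) -> float:
    """Membership of 'low SOC': 1 below 0.2, falling linearly to 0 at 0.5."""
    return 1.0 if soc <= 0.2 else max(0.0, (0.5 - soc) / 0.3)


def mu_medium(soc: float) -> float:
    """Triangular membership of 'medium SOC' peaking at 0.5."""
    return max(0.0, 1.0 - abs(soc - 0.5) / 0.3)


def mu_high(soc: float) -> float:
    """Membership of 'high SOC': 0 below 0.5, rising linearly to 1 at 0.8."""
    return 1.0 if soc >= 0.8 else max(0.0, (soc - 0.5) / 0.3)


# Hypothetical rule base: IF SOC is <set> THEN fuel-cell share of demand is <constant>
RULES = [(mu_low, 0.9), (mu_medium, 0.6), (mu_high, 0.2)]


def fuel_cell_share(soc: float) -> float:
    """Zero-order Sugeno inference: firing-strength-weighted average of rule outputs."""
    fired = [(mf(soc), out) for mf, out in RULES]
    total = sum(w for w, _ in fired)
    return sum(w * out for w, out in fired) / total


def split_power(demand_kw: float, soc: float) -> tuple:
    """Return (fuel_cell_kw, battery_kw) for a given propulsion power demand."""
    fc_kw = fuel_cell_share(soc) * demand_kw
    return fc_kw, demand_kw - fc_kw


print(split_power(demand_kw=2.0, soc=0.35))
```

The design choice here is that a depleted battery shifts load to the fuel cell, while a well-charged battery absorbs more of the transient demand.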

3.10. Machine Learning Models for Lithium-Ion Battery Optimization

Zou [57] introduced a Bi-LSTM model with dual (temporal and spatial) attention mechanisms that delivers highly accurate lithium-ion battery SOH estimation, with low error margins (RMSE: 0.4%, MAE: 0.3%). The model uses Differential Thermal Voltammetry (DTV) to link micro-phase transitions with observable battery behavior, enabling more effective feature weighting across time and space. This dual-attention strategy addresses limitations of conventional single-attention models and improves estimation accuracy by around 10% over non-attention-based approaches. Its consistent performance across different cycle starting points (error fluctuation <0.1%) and its compatibility with cloud-integrated BMS underscore its practical utility in cyber-physical environments. By combining deep learning with explainable insights into battery degradation processes, the model represents a notable step forward in SOH prediction.
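The two attention mechanisms can be illustrated generically with the sketch below, assuming dot-product attention over time steps and a feature-wise softmax reweighting as the "spatial" branch. The query vector and feature scores would be learned parameters in a real model; here they are hypothetical constants, and neither the DTV feature extraction nor the exact formulation used by Zou [57] is reproduced.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(hidden: Sequence[Sequence[float]],
                       query: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Dot-product attention over time steps of (Bi-)LSTM outputs.

    Returns the context vector (attention-weighted sum of hidden states) and the weights.
    """
    scores = [sum(h_i * q_i for h_i, q_i in zip(h, query)) for h in hidden]
    weights = softmax(scores)
    dim = len(hidden[0])
    context = [sum(w * h[d] for w, h in zip(weights, hidden)) for d in range(dim)]
    return context, weights


def feature_attention(features: Sequence[float],
                      scores: Sequence[float]) -> List[float]:
    """Feature-wise ('spatial') attention: rescale each input feature by a softmax weight."""
    return [w * f for w, f in zip(softmax(scores), features)]


# Three time steps of 2-D hidden states and a hypothetical learned query vector
hidden = [[0.1, 0.4], [0.3, 0.2], [0.9, 0.8]]
context, weights = temporal_attention(hidden, query=[1.0, 1.0])
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

A zero query gives uniform weights and reduces the context to the plain mean of the hidden states, which is the non-attention baseline that attention is meant to improve on.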

3.11. AI and Digital Twin Technologies for Fast Charging Optimization in Electric Vehicles

Recent studies highlight the integration of artificial intelligence and digital twin technologies for advanced lithium-ion battery management. Zhang et al. [58] developed a fast-charging optimization method using an enhanced DDPG algorithm, improving efficiency and lifespan but lacking real-world validation. Issa et al. [59] proposed a cloud-based digital twin using ensemble learning and external APIs for highly accurate SOC estimation (NRMSE = 0.00047), with future potential for deep learning integration. Iyer et al. [60] introduced ENDEAVOR, a heuristic algorithm combining digital twins and UKF for SOC estimation and EV charging node optimization, reducing grid load and user wait time. Yang et al. [61] presented a reliability-focused digital twin using stochastic modeling and Bayesian evolution, achieving <5% error in life prediction and over 50% cost savings. Together, these approaches demonstrate complementary advances in fast charging, real-time estimation, and lifecycle management, though further validation and system-level integration are needed. Table 1 summarizes foundational models and architectures developed for Li-ion battery state estimation and control.

Table 1.

Summary of AI Models and Applications in Lithium-Ion Battery Systems.

4. Digital Twin Modeling for Lithium-Ion Battery Management

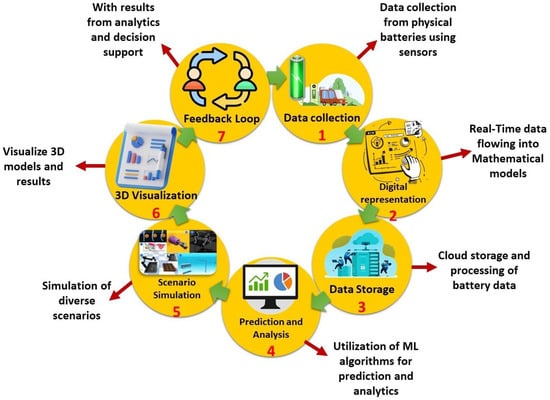

A typical approach for implementing battery digital twins, illustrated in Figure 3, comprises seven stages. First, sensors on the physical battery collect operating data (Step 1), which are integrated into a digital model that represents the system (Step 2). These data are then stored and processed by cloud services (Step 3), which enables machine learning (Step 4) to produce analyses and predictions. Simulation scenarios are configured to evaluate different operating conditions (Step 5), and 3D visualization tools render dynamic battery models to help interpret the results (Step 6). Finally, a feedback loop (Step 7) applies the analytics results to the physical system, supporting better decisions and continuous improvement. Together, these stages illustrate how a layered, interactive digital twin supports battery management.

Figure 3.

Standardized workflow for developing battery digital twins. The figure illustrates an end-to-end integration of data collection, digital representation, storage, predictive analytics, simulation, visualization, and feedback mechanisms for optimized battery management.

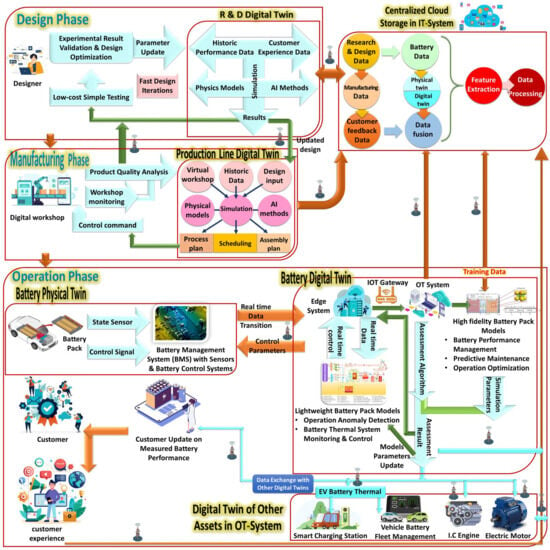

Figure 4 shows how digital twin modeling supports the management of lithium-ion batteries across the entire product lifecycle. During design, digital twins combine physics-based models, AI, and existing test results to identify the best structure and efficiency for the battery before any prototypes are built. During manufacturing, because the production line is closely mirrored by its digital counterpart, deviations are detected quickly and processes can be adjusted as needed. In the operations phase, the physical battery pack is linked to its digital twin through sensors that measure voltage, current, and temperature. AI models analyze these data to monitor battery performance, flag abnormal behavior, and predict impending failures. As usage patterns and battery condition change, these models update automatically, allowing the system to adapt its control strategies and extend battery life. In this way, digital twins improve the safety and reliability of lithium-ion batteries and enable more intelligent energy management for electric vehicles, smart charging stations, and fleet systems.
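The operations-phase idea of comparing measurements against the twin can be illustrated with a minimal residual check, assuming a zero-order equivalent-circuit twin (a hypothetical linear OCV curve plus an ohmic resistance) advanced by Coulomb counting. The class `CellTwin`, the 50 mV threshold, and the injected fault are illustrative assumptions; a production twin would use a calibrated model and statistically derived thresholds.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class CellTwin:
    capacity_ah: float
    r0_ohm: float
    soc: float

    def ocv(self) -> float:
        # Hypothetical linear open-circuit-voltage curve (V)
        return 3.0 + 1.2 * self.soc

    def step(self, current_a: float, dt_s: float) -> float:
        """Advance by Coulomb counting (discharge current positive); return predicted terminal voltage."""
        self.soc -= current_a * dt_s / (3600.0 * self.capacity_ah)
        self.soc = min(1.0, max(0.0, self.soc))
        return self.ocv() - current_a * self.r0_ohm


def flag_anomalies(twin: CellTwin, samples: Sequence[Tuple[float, float]],
                   dt_s: float, threshold_v: float = 0.05) -> List[int]:
    """Return indices where |measured - twin-predicted| voltage exceeds the threshold."""
    flagged = []
    for k, (current_a, v_meas) in enumerate(samples):
        if abs(v_meas - twin.step(current_a, dt_s)) > threshold_v:
            flagged.append(k)
    return flagged


# Generate "measurements" from a reference twin and inject a 0.2 V sag at sample 5
reference = CellTwin(capacity_ah=2.5, r0_ohm=0.05, soc=0.9)
samples = [(2.0, reference.step(2.0, 1.0)) for _ in range(10)]
samples[5] = (samples[5][0], samples[5][1] - 0.2)
monitor = CellTwin(capacity_ah=2.5, r0_ohm=0.05, soc=0.9)
print(flag_anomalies(monitor, samples, dt_s=1.0))  # [5]
```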

Figure 4.

Digital twin modeling framework for lithium-ion battery management across the design, manufacturing, and operational phases, enabling intelligent control, predictive maintenance, and lifecycle optimization.

Digital twin technology offers a transformative paradigm for managing lithium-ion battery systems by creating high-fidelity virtual replicas that integrate multi-scale modeling, real-time sensing, and intelligent analytics. Naseri et al. [14] outlined a layered digital twin architecture composed of connectivity, twin, and service modules, which leverage artificial intelligence, IoT integration, and simulation and cloud platforms such as ANSYS and Microsoft Azure. These systems provide actionable insights across the battery lifecycle, enabling functions such as SOC estimation, predictive maintenance, and lifecycle optimization. Reported outcomes include up to 60% reductions in maintenance costs and 15% improvements in battery lifespan through optimized charging strategies. However, technical challenges persist, including the need for standardized protocols, computational efficiency, and justifiable deployment costs. Emerging applications such as battery passports and second-life planning further extend the value of digital twins, supporting multi-stakeholder decision-making despite ongoing concerns related to data processing, interoperability, and model scalability.

Enhancing the practical application of digital twin frameworks, Wang and Li [62] demonstrated an integrated system combining extended Kalman filtering (EKF) for SOC estimation and particle swarm optimization (PSO) for SOH prediction. Their approach, validated through MATLAB/Simulink simulations and experimental setups, effectively addressed the challenges of Gaussian white noise in real-time environments. By fusing model-based estimators with data-driven optimization within an embedded platform, this implementation exemplified the real-world viability of digital twin architectures in monitoring and prognostics. The ability to bridge theoretical models with operational robustness marks a significant advancement in battery digitalization, providing a comprehensive foundation for intelligent performance assessment and long-term system reliability.
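The EKF half of such a pipeline can be sketched as follows, assuming a scalar SOC state, a zero-order equivalent-circuit cell (OCV minus an ohmic drop), and a hypothetical quadratic OCV curve. The class name `SocEKF`, the noise variances, and the cell parameters are illustrative assumptions rather than the model of Wang and Li [62], and the PSO-based SOH step is not shown.

```python
import random


def ocv(soc: float) -> float:
    # Hypothetical smooth OCV curve (V); real cells need a measured OCV-SOC table
    return 3.2 + 0.7 * soc + 0.2 * soc ** 2


def docv_dsoc(soc: float) -> float:
    return 0.7 + 0.4 * soc


class SocEKF:
    """Scalar extended Kalman filter for SOC with a zero-order (R0-only) cell model."""

    def __init__(self, capacity_ah, r0_ohm, soc0, p0=0.1, q=1e-7, r=1e-4):
        self.cap, self.r0 = capacity_ah, r0_ohm
        self.soc, self.p, self.q, self.r = soc0, p0, q, r

    def step(self, current_a: float, v_meas: float, dt_s: float) -> float:
        # Predict: Coulomb counting (discharge current positive); state Jacobian is 1
        self.soc -= current_a * dt_s / (3600.0 * self.cap)
        self.p += self.q
        # Update: linearize the terminal-voltage model around the predicted SOC
        h = docv_dsoc(self.soc)
        innovation = v_meas - (ocv(self.soc) - current_a * self.r0)
        k = self.p * h / (h * self.p * h + self.r)
        self.soc += k * innovation
        self.p *= 1.0 - k * h
        return self.soc


# Simulated 2 A discharge with 10 mV voltage noise: true SOC starts at 0.8,
# while the filter is deliberately initialized at 0.5
rng = random.Random(1)
true_soc, ekf = 0.8, SocEKF(capacity_ah=2.5, r0_ohm=0.05, soc0=0.5)
for _ in range(300):
    true_soc -= 2.0 * 1.0 / (3600.0 * 2.5)
    v = ocv(true_soc) - 2.0 * 0.05 + rng.gauss(0.0, 0.01)
    estimate = ekf.step(2.0, v, dt_s=1.0)
print(f"true SOC {true_soc:.4f}, EKF estimate {estimate:.4f}")
```

The example shows the property that makes EKF attractive in a twin: a wrong initial SOC is corrected within a few voltage updates, while Coulomb counting alone would carry the initial error indefinitely.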

4.1. AI-Enabled Digital Twin Solutions for Lithium-Ion Battery Management

Artificial intelligence-enabled digital twin frameworks are emerging as powerful tools for optimizing lithium-ion battery management, enabling enhanced state estimation, real-time diagnostics, and intelligent control through the fusion of virtual modeling and physical sensor data. Kang et al. [63] demonstrated how digital twins, supported by mathematical modeling and three-dimensional visualization, enable real-time synchronization between physical and virtual systems. Their study of hybrid powertrains combining lithium-ion batteries and fuel cells revealed the utility of this approach for dynamic energy control and simulation accuracy, providing a scalable framework applicable to broader energy systems and transportation platforms.

Focusing on state estimation, Tang et al. [64] proposed a DT-driven system utilizing a hybrid HIF-PF algorithm, achieving an average SOC estimation error of just 0.14% under dynamic stress tests. This architecture addressed critical limitations in traditional battery management systems, such as data storage constraints, initial value sensitivity, and computational inefficiencies. Real-time monitoring was facilitated via an interactive visualization interface, laying the groundwork for future closed-loop DT systems capable of autonomously optimizing physical battery parameters.
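The particle-filter component of such a hybrid estimator can be sketched as below, assuming a zero-order cell model with a hypothetical OCV curve and a bootstrap filter with systematic resampling. The H-infinity part of the HIF-PF algorithm in Tang et al. [64] (assuming HIF denotes an H-infinity filter) is not reproduced, and all parameter values are assumptions.

```python
import math
import random
from typing import List, Sequence


def ocv(soc: float) -> float:
    return 3.2 + 0.7 * soc + 0.2 * soc ** 2  # hypothetical OCV curve (V)


def particle_filter_soc(currents: Sequence[float], voltages: Sequence[float],
                        capacity_ah: float, r0_ohm: float, dt_s: float,
                        n: int = 500, q_std: float = 1e-3, r_std: float = 0.01,
                        seed: int = 0) -> List[float]:
    """Bootstrap particle filter for SOC with systematic resampling."""
    rng = random.Random(seed)
    particles = [rng.uniform(0.0, 1.0) for _ in range(n)]  # uninformative initial SOC
    estimates = []
    for i_a, v in zip(currents, voltages):
        # Propagate: Coulomb counting plus process noise
        particles = [p - i_a * dt_s / (3600.0 * capacity_ah) + rng.gauss(0.0, q_std)
                     for p in particles]
        # Weight: Gaussian likelihood of the measured terminal voltage
        weights = [math.exp(-0.5 * ((v - (ocv(p) - i_a * r0_ohm)) / r_std) ** 2)
                   for p in particles]
        total = sum(weights)
        weights = [w / total for w in weights]
        estimates.append(sum(w * p for w, p in zip(weights, particles)))
        # Systematic resampling: one random offset, n evenly spaced pointers
        step = 1.0 / n
        u = rng.uniform(0.0, step)
        cumulative, j, resampled = weights[0], 0, []
        for k in range(n):
            target = u + k * step
            while target > cumulative and j < n - 1:
                j += 1
                cumulative += weights[j]
            resampled.append(particles[j])
        particles = resampled
    return estimates


# Simulated 1.5 A discharge starting from a true SOC of 0.7
noise = random.Random(42)
true_soc, currents, voltages = 0.7, [], []
for _ in range(100):
    true_soc -= 1.5 * 1.0 / (3600.0 * 2.5)
    currents.append(1.5)
    voltages.append(ocv(true_soc) - 1.5 * 0.05 + noise.gauss(0.0, 0.01))
est = particle_filter_soc(currents, voltages, capacity_ah=2.5, r0_ohm=0.05, dt_s=1.0)
print(f"true SOC {true_soc:.4f}, PF estimate {est[-1]:.4f}")
```

Starting from a uniform particle cloud is one way to reduce the initial-value sensitivity mentioned above, at the cost of evaluating the model once per particle at every step.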

In support of model robustness under thermal and nonlinear dynamics, Song et al. [65] introduced a hybrid CNN-LSTM network for SOC estimation that achieved mean absolute errors below 1.5% and robust performance across various ambient temperatures. The architecture leverages CNNs for spatial feature extraction and LSTMs for capturing temporal dependencies. A periodic parameter update mechanism further enhanced model adaptability in response to battery aging, suggesting practical deployment for real-world applications with minimal recalibration.

Khalid and Sarwat [66] proposed a unified ML framework combining minimized Akaike Information Criterion (m-AIC)-optimized ARIMA with NARX and MLP neural networks. Their model achieved RMSE values as low as 0.1323% and maintained high accuracy under low C-rate conditions. While current implementations are offline, their results support future integration into adaptive observers for real-time SOC forecasting in aging-aware, multi-pack systems.
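The order-selection idea behind the m-AIC step can be sketched as follows, simplified to pure autoregressive AR(p) models (ARIMA without differencing or moving-average terms) fitted by least squares. The NARX and MLP components are not shown, and the synthetic series and the maximum order of 4 are assumptions. The order with the minimum AIC, n ln(RSS/n) + 2k, is selected, trading residual error against the number of parameters k.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple


def solve(a, b):
    """Gauss-Jordan elimination with partial pivoting for a small dense system a @ x = b."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_ar(series: Sequence[float], p: int, start: int) -> Tuple[List[float], float, int]:
    """Least-squares AR(p) fit (no intercept) on targets series[start:].

    Using a common start index for every order keeps the AIC values comparable.
    """
    rows = [list(series[t - p:t])[::-1] for t in range(start, len(series))]
    ys = list(series[start:])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    aty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(p)]
    coeffs = solve(ata, aty)
    rss = sum((y - sum(c * x for c, x in zip(coeffs, r))) ** 2 for r, y in zip(rows, ys))
    return coeffs, rss, len(ys)


def aic(rss: float, n_obs: int, k: int) -> float:
    """Gaussian AIC up to an additive constant: n ln(RSS / n) + 2k."""
    return n_obs * math.log(rss / n_obs) + 2 * k


def select_order(series: Sequence[float], max_p: int = 4) -> Tuple[int, Dict[int, float]]:
    """Fit AR(1)..AR(max_p) and return the order with minimum AIC, plus all scores."""
    scores = {}
    for p in range(1, max_p + 1):
        _, rss, n_obs = fit_ar(series, p, start=max_p)
        scores[p] = aic(rss, n_obs, p)
    return min(scores, key=scores.get), scores


# Synthetic AR(1) series with coefficient 0.9 (illustrative data only)
rng = random.Random(7)
series = [0.0]
for _ in range(499):
    series.append(0.9 * series[-1] + rng.gauss(0.0, 0.1))
best_p, scores = select_order(series)
phi = fit_ar(series, 1, start=1)[0][0]
print(best_p, round(phi, 3))
```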

Digital twins are also playing a transformative role in broader energy and smart grid applications. Das et al. [67] reviewed DT integration with machine learning in power systems, identifying key use cases including battery health prognosis, renewable energy integration, and cost projections for photovoltaic and wind systems. This study critically synthesizes how such integrated frameworks enable robust predictive analytics and optimized decision-making across diverse energy management scenarios, from component-level health to system-wide renewable utilization. Similarly, Kang [68] emphasized DT’s role in edge-cloud architectures and AutoML for software-defined vehicles, supporting real-time EV data analytics and traffic forecasting in resource-constrained environments. Kang’s work highlights the practical utility of these architectures in enhancing the intelligence and responsiveness of future vehicle systems, demonstrating efficient deployment of complex data processing and AI capabilities under computational limitations, which is vital for autonomous driving and smart mobility.

From a systems engineering perspective, Chai et al. [69] discussed DT deployment in intelligent connected vehicles (ICVs), emphasizing its role in lifecycle optimization, data handling, and modular environmental perception in urban traffic. Their study highlighted the benefits of partitioning tasks between edge and cloud infrastructure to improve scalability and processing efficiency. Wang et al. [70] further detailed the broader impact of digital twin frameworks on energy system operations, calling attention to real-time simulation, predictive analytics, and system-wide optimization for smart energy ecosystems.

Focusing specifically on lithium-ion battery applications, Zhao et al. [71] introduced a digital twin architecture integrating LSTM neural networks with EKF for SOC estimation. This hybrid system combines accurate initial SOC estimation from LSTM with EKF’s online correction capability, delivering enhanced robustness, lower error margins, and improved adaptability. The proposed rolling-learning architecture also supports future hierarchical DT models capable of simultaneously addressing SOC and RUL prediction, thereby advancing battery lifecycle management within renewable energy storage systems.

The reviewed studies underscore the critical role of DTs in modern energy and smart grid systems, particularly within ICVs. These works collectively demonstrate how DTs, integrating AI and edge-cloud computing, enable precise battery health prognosis, optimized renewable energy management, and real-time EV data analytics, crucial for enhanced system intelligence and responsiveness. A key insight from this body of work is the demonstrated capability of hybrid DT architectures to provide robust and adaptable battery state estimation (SOC/RUL), significantly advancing lifecycle management for Li-ion batteries in diverse applications, including renewable energy storage.

4.2. Lithium-Ion Battery Lifecycle Management with Digital Twins

Digital twin technology is emerging as a pivotal solution for next-generation battery lifecycle management, offering a scalable and intelligent framework for real-time performance monitoring, predictive maintenance, and system optimization. Elkerdany et al. [13] proposed a DT-based architecture that enhances BMS capabilities by integrating high-fidelity virtual representations with AI-powered analytics. Their study highlighted the potential of DTs to address key limitations in traditional BMS, including fragmented data streams and a lack of predictive functionality. By enabling synchronized physical-virtual monitoring, the framework supports cost-effective implementation across a range of lithium-ion battery applications. However, challenges remain in ensuring accurate data synchronization, maintaining model fidelity under dynamic operating conditions, and reducing computational overhead, requiring further research for full deployment at scale.

Building on these advancements, Lakshmi and Sarma [72] introduced a digital twin framework that fuses artificial intelligence and IoT technologies for electric vehicle energy storage systems. Their approach achieved high predictive accuracy, with a mean absolute error of 0.042 and a root mean square error of 0.055, alongside low system latency (0.12 s) and fast feedback responsiveness (0.45 s). Notably, the system improved energy efficiency by 15%, minimized capacity fade to 0.0025% per cycle, and demonstrated strong anomaly detection performance (95% precision, 76% detection rate). These results validate the effectiveness of digital twins in enhancing battery health and longevity while supporting advanced control and diagnostics. The seamless integration of AI, IoT, and DTs sets a new benchmark for intelligent battery lifecycle management in electric vehicles, offering a robust pathway toward more resilient and adaptive energy storage ecosystems.
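The two anomaly-detection figures measure different things. Assuming "detection rate" denotes recall, precision is the fraction of raised alarms that are real anomalies, while the detection rate is the fraction of real anomalies that are caught; a detector can therefore combine high precision with a lower detection rate when it raises few false alarms but misses some anomalies. The minimal sketch below computes both from boolean labels; the example labels are hypothetical and do not reproduce the study's data.

```python
from typing import Sequence, Tuple


def precision_and_detection_rate(predicted: Sequence[bool],
                                 actual: Sequence[bool]) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); detection rate (recall) = TP / (TP + FN)."""
    tp = sum(1 for p, a in zip(predicted, actual) if p and a)
    fp = sum(1 for p, a in zip(predicted, actual) if p and not a)
    fn = sum(1 for p, a in zip(predicted, actual) if not p and a)
    precision = tp / (tp + fp) if tp + fp else 0.0
    detection_rate = tp / (tp + fn) if tp + fn else 0.0
    return precision, detection_rate


# Illustrative labels: 4 true anomalies, detector raises 3 alarms (2 correct)
actual = [True, True, True, True, False, False, False, False]
predicted = [True, True, False, False, True, False, False, False]
print(precision_and_detection_rate(predicted, actual))  # (0.6666666666666666, 0.5)
```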

4.3. Real-Time Digital Twin Systems for Lithium-Ion Batteries

A novel digital twin-based BMS architecture has recently been proposed, marking a significant advancement in real-time monitoring of lithium-ion batteries for electric vehicle applications. This dual-model framework integrates onboard tracking with cloud-based analytics to enable comprehensive and adaptive SOH estimation throughout a battery’s operational life [73]. Central to the system’s innovation is the application of advanced feature engineering and dynamic model retraining, which collectively address longstanding challenges in conventional data-driven approaches, particularly the inability to accurately capture battery degradation during partial charge cycles, a common but underrepresented condition in real-world usage. Through validation across multiple datasets, the proposed architecture has demonstrated robust performance, maintaining accurate predictions of both SOC and state-of-energy (SOE) while accommodating the evolving degradation patterns of lithium-ion batteries. This fusion of real-time data with long-term predictive analytics establishes a new standard for intelligent BMS design, offering a resilient solution that accounts for the inherent variability in battery behavior under diverse driving conditions. Ultimately, such architectures not only enhance operational reliability and performance but also contribute to broader goals in electric mobility and climate change mitigation by optimizing energy efficiency and lifecycle sustainability [74].
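One reason partial charge cycles can still carry SOH information is that capacity follows from charge throughput divided by the SOC window it spans. The sketch below illustrates this generic idea, assuming the SOC at both ends of the segment is known independently (for example from rested open-circuit voltage); it is not the feature-engineering method of [73], and the segment values are hypothetical.

```python
from typing import Sequence


def capacity_from_partial_charge(times_s: Sequence[float], currents_a: Sequence[float],
                                 soc_start: float, soc_end: float) -> float:
    """Estimate usable capacity (Ah) from a partial charge segment.

    Integrates the charge current with the trapezoidal rule and divides the
    throughput by the SOC window spanned, assuming the end-point SOC values are
    known independently (for example from rested open-circuit voltage).
    """
    if soc_end <= soc_start:
        raise ValueError("a charge segment must increase SOC")
    charge_as = sum(0.5 * (currents_a[k] + currents_a[k - 1]) * (times_s[k] - times_s[k - 1])
                    for k in range(1, len(times_s)))
    return charge_as / 3600.0 / (soc_end - soc_start)


def state_of_health(capacity_ah: float, nominal_ah: float) -> float:
    return capacity_ah / nominal_ah


# One hour at 1 A moving the cell from 30% to 70% SOC implies about 2.5 Ah of usable capacity
times = [0, 600, 1200, 1800, 2400, 3000, 3600]
currents = [1.0] * len(times)
cap = capacity_from_partial_charge(times, currents, soc_start=0.3, soc_end=0.7)
print(round(cap, 3), round(state_of_health(cap, nominal_ah=3.0), 3))  # 2.5 0.833
```

Narrow SOC windows amplify the effect of end-point SOC errors, which is one reason the cited architecture pairs such features with retrained data-driven models rather than relying on this ratio alone.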

4.4. Integration of AI and Digital Twins in Lithium-Ion EV Batteries

Recent advancements in lithium-ion battery performance prediction have been realized through the integration of artificial intelligence and digital twin frameworks, particularly those combining metaheuristic optimization with ensemble learning techniques. One such approach couples an improved gray wolf optimization algorithm with AdaBoost to enhance the predictive accuracy of discharge capacity within digital twin environments. Validated through ten-fold cross-validation on the NASA battery aging dataset, this hybrid model achieved mean absolute and root mean square errors as low as 0.01, substantially outperforming both standard and stacked LSTM architectures [75]. This improved predictive fidelity represents a notable advance in addressing persistent challenges related to energy storage reliability and operational efficiency. Furthermore, its applicability extends beyond electric vehicles to broader renewable energy storage domains, enabling refined battery design, predictive maintenance, and improved integration of intermittent renewable resources. These benefits collectively contribute to the development of resilient, low-carbon energy infrastructures through smarter battery management technologies. As emphasized in related studies, however, widespread deployment of such digital twin systems must contend with several practical constraints, including the need for higher-fidelity data streams, greater computational scalability, robust cybersecurity protections, and harmonized communication protocols across diverse BMS architectures [76]. Despite these challenges, the potential of AI-integrated digital twins to transform battery lifecycle management remains evident. Their ability to facilitate real-time monitoring, dynamic state estimation, and long-term degradation tracking positions them as foundational components of next-generation battery systems, particularly in electric vehicles and smart energy grids [13].
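The role of gray wolf optimization in such a hybrid is to search a model's hyperparameter space. The sketch below shows the standard grey wolf optimizer, assuming box-bounded continuous parameters; the "improved" variant and the AdaBoost model from [75] are not reproduced, and a hypothetical quadratic surface stands in for the ten-fold cross-validation error that would be minimized in practice.

```python
import random
from typing import Callable, List, Sequence, Tuple


def grey_wolf_optimize(cost: Callable[[List[float]], float],
                       bounds: Sequence[Tuple[float, float]],
                       n_wolves: int = 20, n_iter: int = 200,
                       seed: int = 0) -> Tuple[List[float], float]:
    """Standard grey wolf optimizer (minimization) with box constraints."""
    rng = random.Random(seed)
    dim = len(bounds)
    wolves = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_wolves)]
    best, best_cost = None, float("inf")
    for it in range(n_iter):
        # The three best wolves (alpha, beta, delta) lead the pack
        ranked = sorted(wolves, key=cost)
        alpha, beta, delta = ranked[0], ranked[1], ranked[2]
        if cost(alpha) < best_cost:
            best, best_cost = alpha[:], cost(alpha)
        a = 2.0 - 2.0 * it / n_iter  # decreases linearly from 2 toward 0
        new_wolves = []
        for wolf in wolves:
            position = []
            for d in range(dim):
                x = 0.0
                for leader in (alpha, beta, delta):
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    x += leader[d] - A * abs(C * leader[d] - wolf[d])
                lo, hi = bounds[d]
                position.append(min(hi, max(lo, x / 3.0)))  # average of the three pulls
            new_wolves.append(position)
        wolves = new_wolves
    final = min(wolves, key=cost)
    if cost(final) < best_cost:
        best, best_cost = final[:], cost(final)
    return best, best_cost


# Hypothetical stand-in for a cross-validation error surface over two hyperparameters
def cv_error(params: List[float]) -> float:
    return (params[0] - 0.3) ** 2 + (params[1] - 2.0) ** 2


best, err = grey_wolf_optimize(cv_error, bounds=[(0.0, 1.0), (0.0, 5.0)])
print([round(v, 4) for v in best], err)
```

Large values of `a` early on let wolves explore away from the leaders, and shrinking `a` gradually turns the search into exploitation around the best solutions found.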

4.5. Digital Twins for Predictive Maintenance in Lithium-Ion BMS

Recent developments in predictive maintenance for lithium-ion BMS have leveraged digital twin frameworks to achieve greater accuracy in RUL estimation and improved maintenance strategies. One such approach integrates stochastic degradation modeling with Bayesian-based adaptive evolution to dynamically capture the stochastic and nonlinear degradation behaviors of batteries throughout their lifecycle. This method demonstrated high prediction accuracy with error margins maintained around 5%, while enabling cost reductions in predictive maintenance operations by up to 62%, highlighting its practicality for real-world deployment [61]. Further extending these capabilities, Zhao et al. introduced a Hierarchical and Self-Evolving Digital Twin (HSE-DT) model that combines Transformer and convolutional neural network (CNN) architectures with transfer learning to enable dynamic adaptation under diverse operating conditions. The system achieved root mean square error values below 0.9% for SOC and 0.8% for SOH, emphasizing both its precision and real-time responsiveness [77]. In parallel, Jafari and Byun proposed a hybrid digital twin framework combining Extreme Gradient Boosting (XGBoost) with the EKF, offering a balance between nonlinear learning and real-time state estimation. This integrated model demonstrated enhanced robustness in tracking battery aging phenomena, particularly through online model updates and adaptive filtering strategies [78].
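The combination of stochastic degradation modeling and Bayesian updating can be illustrated with a linear Wiener process, X(t) = mu t + sigma B(t), whose drift mu receives a conjugate normal prior that is updated as degradation increments arrive. This is a minimal sketch under those assumptions, not the adaptive-evolution scheme of [61]; the prior, the noise level, the 20% capacity-loss end-of-life threshold, and the plug-in mean RUL (which ignores drift uncertainty) are all illustrative.

```python
import random
from typing import Sequence, Tuple


def update_drift(prior_mean: float, prior_var: float, increments: Sequence[float],
                 dts: Sequence[float], sigma: float) -> Tuple[float, float]:
    """Conjugate normal update for the drift mu of X(t) = mu * t + sigma * B(t).

    Each increment dX ~ N(mu * dt, sigma^2 * dt) adds dt / sigma^2 to the
    posterior precision and dX / sigma^2 to the precision-weighted mean.
    """
    precision = 1.0 / prior_var + sum(dts) / sigma ** 2
    mean = (prior_mean / prior_var + sum(increments) / sigma ** 2) / precision
    return mean, 1.0 / precision


def mean_rul(x_now: float, threshold: float, drift: float) -> float:
    """Mean first-passage time to the threshold for a Wiener process with positive drift."""
    if drift <= 0.0:
        return float("inf")
    return max(0.0, threshold - x_now) / drift


# Synthetic capacity-loss path: 200 cycles, true drift 2e-4 per cycle
rng = random.Random(3)
true_mu, sigma = 2e-4, 2e-4
dts = [1.0] * 200
increments = [true_mu * dt + sigma * dt ** 0.5 * rng.gauss(0.0, 1.0) for dt in dts]
mu_post, var_post = update_drift(1e-4, 1e-8, increments, dts, sigma)
rul = mean_rul(sum(increments), threshold=0.2, drift=mu_post)  # 20% capacity loss as end of life
print(f"posterior drift {mu_post:.2e}, mean RUL {rul:.0f} cycles")
```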

Despite these advances, concerns surrounding cybersecurity remain a critical barrier to deployment. Pooyandeh and Sohn identified vulnerabilities in digital twin-based SOC estimation systems to timestamp attacks, where malicious disruptions in data chronology compromised the reliability of real-time monitoring. This highlights the need for robust defense protocols to protect battery systems from covert data manipulations and ensure secure operation in connected electric vehicle networks [79]. Additionally, large-scale implementation of ML-driven digital twins introduces further complexities, including the challenges of managing massive data volumes, establishing reliable communication protocols, and securing data privacy. Kaleem et al. emphasized that while ML-enhanced digital twin models employing deep learning, neural networks, and support vector machines offer remarkable improvements in battery performance and longevity, their successful application demands scalable infrastructure and cloud-based systems to support continuous learning and remote diagnostics at fleet-wide scales [80]. Collectively, these innovations underscore the evolving potential of digital twins in predictive maintenance, while pointing toward necessary improvements in system integration, model generalization, and cybersecurity to ensure dependable long-term deployment.
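A first line of defense against crude timestamp manipulation is a plausibility check on sample timing. The sketch below, assuming a known nominal sampling interval and a hypothetical tolerance, flags samples whose timestamps go backwards, repeat, or jump; it is not the defense proposed in [79], and stealthy attacks that stay within the tolerance would require stronger measures such as authenticated timestamps.

```python
from typing import List, Sequence


def check_timestamps(timestamps: Sequence[float], nominal_dt: float,
                     tolerance: float = 0.5) -> List[int]:
    """Flag samples whose timestamp goes backwards, repeats, or deviates from the
    nominal sampling interval by more than tolerance * nominal_dt."""
    suspicious = []
    for k in range(1, len(timestamps)):
        dt = timestamps[k] - timestamps[k - 1]
        if dt <= 0.0 or abs(dt - nominal_dt) > tolerance * nominal_dt:
            suspicious.append(k)
    return suspicious


# A sample whose timestamp was shifted disturbs two consecutive intervals
print(check_timestamps([0.0, 1.0, 2.0, 3.0, 2.5, 5.0, 6.0], nominal_dt=1.0))  # [4, 5]
```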

4.6. Digital Twin Simulations for Lithium-Ion Battery Behavior Prediction

Emerging digital twin frameworks are increasingly employing advanced simulations and data-driven methods to enhance the predictive capabilities of lithium-ion battery behavior under varying operational conditions. A notable implementation based on the Industrial Internet-of-Things (IIoT) paradigm utilized Microsoft Azure cloud infrastructure to fuse real-time sensor data with historical driving patterns using three distinct APIs. Through a supervised voting ensemble machine learning algorithm, this architecture achieved high-fidelity estimation of SOC, yielding normalized root mean square errors of 1.1446 in simulation and 0.02385 during experimental validation. By systematically coordinating data acquisition, synchronization, and visualization processes, the model demonstrated robust adaptability to dynamic driving scenarios, offering practical insights for electric vehicle range prediction and predictive maintenance planning [59].
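A voting ensemble for regression simply averages the predictions of its base models, so their individual biases can partly cancel. The sketch below shows this together with one common NRMSE convention (RMSE divided by the range of the reference values); the base models are hypothetical stand-ins. Because NRMSE may instead be normalized by the mean or standard deviation, or reported in percent, NRMSE values from different studies are comparable only when the same convention is used.

```python
import math
from typing import Callable, Optional, Sequence


def voting_predict(models: Sequence[Callable[[float], float]], x: float,
                   weights: Optional[Sequence[float]] = None) -> float:
    """Voting regressor: (weighted) average of the base models' predictions."""
    preds = [m(x) for m in models]
    weights = list(weights) if weights is not None else [1.0] * len(models)
    return sum(w * p for w, p in zip(weights, preds)) / sum(weights)


def nrmse(pred: Sequence[float], true: Sequence[float]) -> float:
    """RMSE normalized by the range of the reference values (one common convention)."""
    rmse = math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))
    return rmse / (max(true) - min(true))


# Two hypothetical base SOC models with opposite biases partly cancel when averaged
models = [lambda x: x + 0.02, lambda x: x - 0.01]
true_soc = [0.2, 0.5, 0.8]
ensemble = [voting_predict(models, s) for s in true_soc]
print(round(nrmse(ensemble, true_soc), 4))
```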

Further enhancing simulation fidelity, Bandara and Halgamuge developed a hybrid digital twin architecture that merges conventional mathematical modeling with an adaptive LSTM network, fine-tuned using Adam optimization and early stopping regularization techniques. Trained on real-world NASA battery cycling data, the model exhibited a 68.42% reduction in capacity estimation error relative to conventional digital twin approaches. Its ability to track subtle patterns of degradation across diverse usage profiles underscores the value of integrating physical and data-driven methodologies for lifecycle-aware battery management [81]. Complementing these efforts, Hang et al. [82] presented a digital twin approach that reduces experimental load by up to 99% through numerical electrochemical simulations used to synthetically augment limited experimental datasets. This hybrid model achieved capacity prediction errors below 2%, showcasing the potential of combining domain-informed simulations with machine learning to overcome data scarcity. However, widespread adoption is contingent on automating the calibration of electrochemical models, which currently requires expert intervention, thus pointing to future directions for model generalization and deployment scalability. Collectively, these hybrid digital twin approaches establish a promising foundation for predictive modeling that synergistically harnesses both simulation and real-world data to support intelligent battery diagnostics, optimized maintenance, and extended operational life. Table 2 summarizes AI-enabled digital twin models for lithium-ion battery management.
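Early stopping, one of the regularization techniques named above, halts training once validation loss stops improving. The sketch below shows the generic patience-based rule, assuming a hypothetical validation-loss history and patience value; the LSTM itself and the Adam optimizer are not shown, and in practice the weights from the best epoch would be restored after stopping.

```python
from typing import Sequence


class EarlyStopping:
    """Stop when validation loss fails to improve by min_delta for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience, self.min_delta = patience, min_delta
        self.best, self.best_epoch, self.wait = float("inf"), -1, 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.wait = val_loss, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience


def run(val_losses: Sequence[float], patience: int) -> tuple:
    """Replay a validation-loss history; return (stop_epoch, best_epoch)."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if stopper.step(epoch, loss):
            return epoch, stopper.best_epoch
    return len(val_losses) - 1, stopper.best_epoch


# Validation loss bottoms out at epoch 3, then drifts upward
print(run([1.0, 0.8, 0.6, 0.55, 0.56, 0.57, 0.58, 0.59], patience=3))  # (6, 3)
```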

Table 2.

AI-Enabled Digital Twin Models for Lithium-Ion Battery Management.

5. Cloud-Based Lithium-Ion Battery Management Systems

Figure 5 presents a digital twin framework designed to improve the performance of lithium-ion BMS. At the base of the framework, physical equipment monitors battery operation in real time, including temperature, voltage, current, and state of charge. These data are first processed locally by edge computing and then stored for later use. Using the stored data, the BMS runs simulations of battery performance and predicts future problems such as aging or failures. Because these models are continually refined, the BMS can make progressively better decisions. The resulting outputs, including the visualizations presented to users, can be shared with other systems for use in homes, vehicles, or factories. As data travel securely through the layers from the battery to the cloud, battery monitoring becomes more precise, practical, and intelligent.

Figure 5.

Digital twin-based architecture for Battery Management Systems (BMS), illustrating how real-time battery data flow through multiple layers for monitoring, analysis, and optimization.

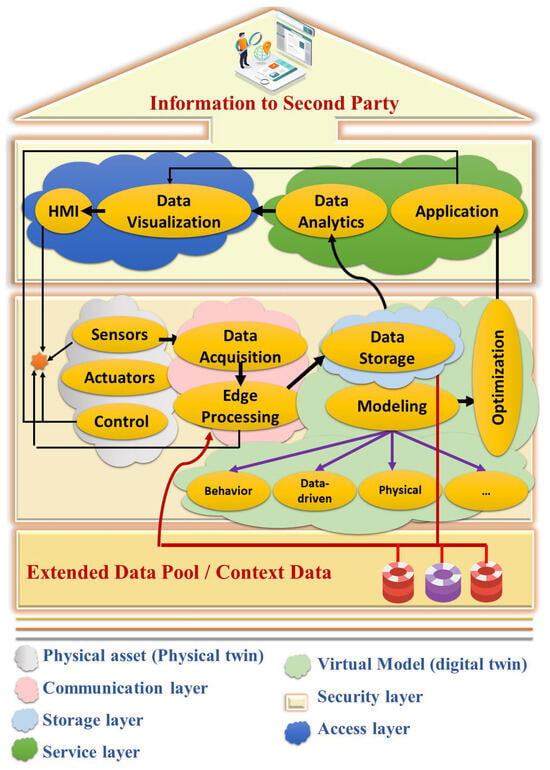

As shown in Figure 6, smart battery systems can be monitored and controlled within an advanced BMS by combining edge and cloud computing. In the first part of the figure, sensors continuously monitor battery temperature, voltage, current, and SOH and send this information to nearby edge devices for immediate processing. When a task is too complex for the edge devices, the unprocessed data are forwarded to a controller and then to the cloud for further analysis, model improvement, and prediction. The second part of the figure covers digital twins, in which data from the physical batteries are used to build virtual models that replicate battery behavior based on measured data and physical principles. With these models, the BMS can refine its control strategies, detect malfunctions promptly, and extend battery service life. Separate physical, communication, storage, access, and service layers keep data secure and efficiently handled. By combining cloud computing with digital twins in this way, intelligent battery management becomes possible across homes, factories, and vehicles.
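The edge-or-cloud decision described for Figure 6 can be reduced to a budget check. This is a deliberately minimal sketch, assuming each task carries an estimated edge run time and the edge device has a fixed compute budget; the task names and timings are hypothetical, and real systems would also weigh network latency, bandwidth, and energy use.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    est_edge_ms: float  # estimated run time on the edge device (hypothetical figures)


def route(task: Task, edge_budget_ms: float) -> str:
    """Run on the edge when the task fits the edge compute budget; otherwise forward to the cloud."""
    return "edge" if task.est_edge_ms <= edge_budget_ms else "cloud"


tasks = [Task("overvoltage check", 0.2), Task("SOC update", 5.0),
         Task("SOH model retraining", 600_000.0)]
print({t.name: route(t, edge_budget_ms=50.0) for t in tasks})
```

Safety-critical checks stay local because they are cheap and latency-sensitive, while model retraining, which needs fleet data and heavy computation, goes to the cloud.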

Figure 6.

Edge-cloud and digital twin-based architecture for Battery Management Systems (BMS). The system enables real-time battery monitoring, analysis, and control through local processing, cloud computing, and virtual modeling for smart energy applications.

The convergence of cloud computing and BMS has emerged as a pivotal enabler for advancing EV performance, scalability, and reliability. Cloud-based BMS architectures offer substantial advantages by overcoming onboard computational constraints, enabling remote diagnostics, real-time data analytics, and scalable software updates. These systems significantly expand the capabilities of conventional BMS by supporting predictive maintenance, fleet-level energy optimization, and enhanced visualization of operational data. However, key technical challenges remain in the implementation of robust online learning algorithms, ensuring secure connectivity, and fully exploiting fleet-scale data streams for adaptive control [83]. Foundational work in BMS design has emphasized the importance of accurate performance modeling, efficient state estimation, and adaptive control strategies, particularly for SOC, SOH, and state-of-function (SOF) tracking to address safety, durability, and cost issues that remain obstacles to widespread EV adoption [84].

Progress toward operationalizing digital twin frameworks within cloud-based systems has been marked by implementations that integrate advanced filtering algorithms and optimization techniques. A notable example is the use of adaptive extended H-infinity filters for SOC estimation and particle swarm optimization to simultaneously track capacity and power fade. This hybrid architecture demonstrated robust accuracy across lithium-ion and lead-acid chemistries under varying initialization and dynamic loading conditions, representing a critical milestone in translating digital twin theory into real-world applications. The system’s successful deployment in both stationary and mobile environments underscores its potential for scalable, cloud-integrated diagnostics and lays the groundwork for future incorporation of machine learning models to enhance lifetime prediction and system optimization [85]. Complementing this, cloud-based condition monitoring platforms utilizing IoT modules and distributed computing, such as those implemented on Raspberry Pi and Google Cloud, have demonstrated cost-effective and accurate health assessments across large-scale lithium-ion battery systems. These platforms successfully overcome traditional scalability limitations and establish a centralized framework for data-driven battery energy storage management, setting a precedent for future industrial-scale deployments [86]. Together, these developments highlight the transformative potential of cloud-BMS ecosystems, where distributed intelligence and centralized analytics enable more resilient, efficient, and intelligent battery system control.
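The use of particle swarm optimization for tracking capacity fade can be illustrated with a one-parameter fit. The sketch below is a minimal inertia-weight PSO, assuming hypothetical segments of charge throughput paired with SOC changes observed at rest, and searching for the capacity that best explains them; the coefficients (inertia 0.7, acceleration 1.5) are common textbook choices, power-fade tracking is not shown, and nothing here reproduces the implementation in [85].

```python
import random
from typing import Callable, Tuple


def pso_minimize(cost: Callable[[float], float], lo: float, hi: float,
                 n_particles: int = 20, n_iter: int = 100, w: float = 0.7,
                 c1: float = 1.5, c2: float = 1.5, seed: int = 0) -> Tuple[float, float]:
    """Minimal 1-D particle swarm optimizer (inertia-weight variant) with box bounds."""
    rng = random.Random(seed)
    pos = [rng.uniform(lo, hi) for _ in range(n_particles)]
    vel = [0.0] * n_particles
    pbest, pbest_cost = pos[:], [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g], pbest_cost[g]
    for _ in range(n_iter):
        for i in range(n_particles):
            r1, r2 = rng.random(), rng.random()
            # Inertia + pull toward the particle's own best + pull toward the swarm best
            vel[i] = w * vel[i] + c1 * r1 * (pbest[i] - pos[i]) + c2 * r2 * (gbest - pos[i])
            pos[i] = min(hi, max(lo, pos[i] + vel[i]))
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i], c
    return gbest, gbest_cost


# Hypothetical segments: (charge throughput in Ah, observed SOC change from rested OCV)
segments = [(0.5, 0.2), (1.0, 0.4), (0.75, 0.3)]


def capacity_cost(capacity_ah: float) -> float:
    """Squared mismatch between observed SOC changes and those implied by a capacity guess."""
    return sum((dsoc - ah / capacity_ah) ** 2 for ah, dsoc in segments)


cap, err = pso_minimize(capacity_cost, lo=1.0, hi=5.0)
print(f"estimated capacity {cap:.3f} Ah (residual {err:.2e})")
```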

5.1. AI-Powered Cloud Solutions for Lithium-Ion Battery Management

The integration of artificial intelligence within cloud-based BMS represents a transformative shift for electric vehicle and smart grid applications. By combining scalable cloud computing with data-driven machine learning algorithms, such frameworks address the limitations of traditional onboard systems, enabling more accurate estimation of the state of charge, state of health, and state of safety, along with improved thermal management. The combined use of physical battery models and AI techniques allows for more accurate, multi-timescale predictions, supporting proactive control strategies. By leveraging cloud infrastructure, the system efficiently processes extensive operational data streams to deliver real-time insights without costly hardware upgrades, thereby improving both battery reliability and safety in large-scale energy storage applications [87].
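One common way to combine a physical model with a learned component is a grey-box residual model: the physics model gives a baseline prediction, and a data-driven model learns only what the physics misses. The sketch below assumes a hypothetical linearized OCV curve, a single ohmic resistance, and a temperature-dependent voltage error. A one-variable least-squares fit stands in for the machine-learning part, so this illustrates the structure and is not the cited framework's model.

```python
def ocv_linear(soc):
    """Hypothetical linearized open-circuit voltage curve in volts."""
    return 3.2 + 0.9 * soc

def physics_voltage(soc, current_a, r0_ohm=0.05):
    """Equivalent-circuit baseline: OCV minus ohmic drop (discharge positive)."""
    return ocv_linear(soc) - current_a * r0_ohm

def fit_residual_model(temps_c, residuals_v):
    """Ordinary least-squares fit of residual = a * (T - 25) + b."""
    xs = [t - 25.0 for t in temps_c]
    n = len(xs)
    mx, my = sum(xs) / n, sum(residuals_v) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, residuals_v))
    a = sxy / sxx
    return a, my - a * mx

def hybrid_voltage(soc, current_a, temp_c, a, b):
    """Physics prediction plus the learned temperature correction."""
    return physics_voltage(soc, current_a) + a * (temp_c - 25.0) + b

# Synthetic "measurements" containing a temperature effect the physics model lacks
data = [(0.9, 2.0, 10.0), (0.7, 1.0, 20.0), (0.5, 3.0, 30.0), (0.3, 0.5, 40.0)]
measured = [physics_voltage(s, i) + 0.004 * (t - 25.0) + 0.002 for s, i, t in data]
residuals = [m - physics_voltage(s, i) for m, (s, i, _) in zip(measured, data)]
a, b = fit_residual_model([t for _, _, t in data], residuals)
```

Keeping the physics model as the backbone means the learned part only has to explain a small residual, which typically needs less data and extrapolates more gracefully than a purely black-box model.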

5.2. Edge AI for Real-Time Lithium-Ion Battery Monitoring

Edge artificial intelligence is increasingly emerging as a viable solution for real-time lithium-ion battery monitoring, particularly in scenarios where onboard computational resources are constrained. A notable advancement in this domain involves the deployment of a compact neural network architecture capable of performing simultaneous SOC and SOH estimation using electrochemical impedance spectroscopy (EIS) and temperature data. By systematically optimizing network structure and applying quantization techniques, this framework significantly reduces both memory usage and processing demands, enabling its deployment on edge devices without compromising predictive accuracy. Such innovations effectively bridge the gap between advanced diagnostic capabilities and the physical limitations of embedded platforms, making them particularly suitable for predictive maintenance applications in portable and distributed battery-driven technologies [88].
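As an illustration of how quantization shrinks a model for edge deployment, the sketch below applies symmetric per-tensor post-training quantization to a list of hypothetical layer weights, mapping 32-bit floats to signed 8-bit integers plus one shared scale factor. The cited framework's exact quantization scheme is not specified here; this is a generic example of the technique.

```python
from array import array

def quantize_int8(weights):
    """Symmetric per-tensor post-training quantization to signed 8-bit."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the symmetric int8 range
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.88, -0.5]  # hypothetical layer weights
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)

# Storage cost of the same tensor as float32 versus int8
fp32_bytes = len(array("f", weights)) * array("f").itemsize
int8_bytes = len(array("b", q)) * array("b").itemsize
```

Each weight is reconstructed to within half a quantization step, while storage drops by a factor of four. Whether that rounding error is acceptable for SOC and SOH accuracy has to be checked on validation data, which is the trade-off the cited work optimizes.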

Expanding this concept, the Intelligent Battery Management System (IBMS) integrates end-edge-cloud connectivity, digital twin modeling, and blockchain security into a multilayered, reconfigurable framework designed to optimize performance, safety, and system-level adaptability. Through dynamic battery reconfiguration, real-time simulations, and secure vehicle-to-grid and vehicle-to-infrastructure data exchange, this architecture facilitates predictive analytics, enhances operational efficiency, and supports next-generation mobility solutions. The inclusion of cybersecurity measures and adaptive learning further reinforces the system’s robustness, positioning it as a comprehensive solution for modern energy and transportation networks [89]. More broadly, the convergence of AI with battery prognostics and health management (PHM) marks a paradigm shift in how multi-scale battery dynamics are understood and controlled. By leveraging big data, IoT, and deep learning techniques, AI-centric PHM frameworks enable predictive modeling that accounts for variability in battery materials, usage conditions, and manufacturing inconsistencies, thus improving accuracy, safety, and system longevity. This evolution reflects the growing influence of Industry 4.0 technologies in transforming battery system intelligence from reactive control toward proactive optimization [90].
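The tamper-evidence property that blockchain brings to such frameworks can be illustrated with a minimal hash chain, in which each record stores the SHA-256 hash of its predecessor so that altering any past entry invalidates every later link. This is only a sketch: it omits the distributed consensus, digital signatures, and peer replication of a real blockchain, and the record fields are hypothetical rather than taken from the cited IBMS.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first block

def make_block(record, prev_hash):
    """Bundle a record with its predecessor's hash and hash the pair."""
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return {"record": record, "prev": prev_hash,
            "hash": hashlib.sha256(payload.encode()).hexdigest()}

def build_chain(records):
    chain, prev = [], GENESIS
    for rec in records:
        block = make_block(rec, prev)
        chain.append(block)
        prev = block["hash"]
    return chain

def verify_chain(chain):
    """Recompute every hash; any edited record or broken link fails."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block["hash"] != make_block(block["record"], prev)["hash"]:
            return False
        prev = block["hash"]
    return True

# Hypothetical battery telemetry records (time in s, SOC and SOH as fractions)
records = [{"t": 0, "soc": 0.81, "soh": 0.95},
           {"t": 60, "soc": 0.79, "soh": 0.95},
           {"t": 120, "soc": 0.77, "soh": 0.95}]
chain = build_chain(records)
```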

5.3. Cloud and Edge Computing for Lithium-Ion EV Batteries

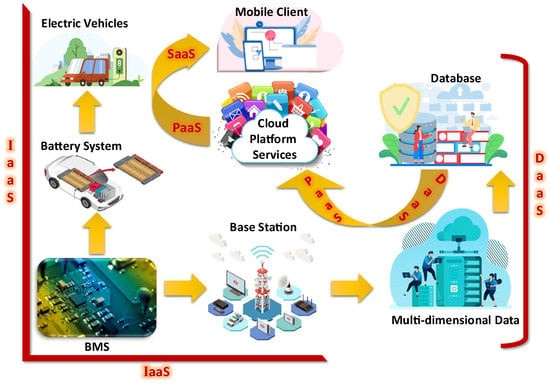

Figure 7 illustrates a cloud-based architecture that supports both intelligent battery monitoring and control. At the Infrastructure-as-a-Service (IaaS) level, battery data are transmitted over edge networks to cloud servers. In the cloud, the Data-as-a-Service (DaaS) and Platform-as-a-Service (PaaS) layers process these readings with advanced algorithms to estimate and maintain the battery state. The resulting analytics are delivered to users over the internet through Software-as-a-Service (SaaS), enabling real-time decision-making that makes EV battery systems better performing, safer, and more reliable.

Figure 7.

Cloud-based BMS architecture integrating IaaS, PaaS, SaaS, and DaaS for real-time battery monitoring, analytics, and user interaction in electric vehicles.

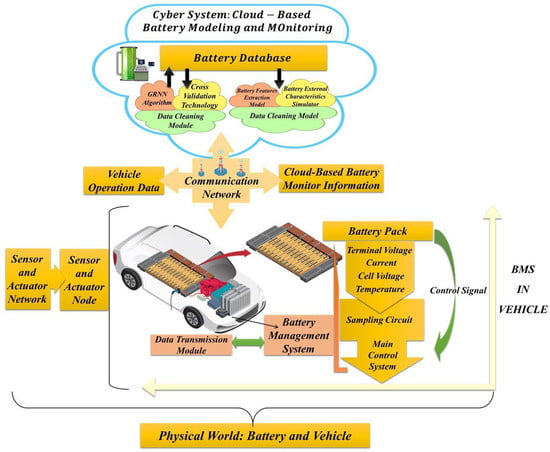

The Cyber-Physical Battery Management System (CP-BMS) framework, shown in Figure 8, links the physical battery system in electric vehicles with computing and data management in a cloud setting through communication channels. In the physical layer, the BMS continuously tracks cell voltages, currents, and temperatures and transmits these data over the communication network. In the cyber layer, aggregated data from multiple vehicles are processed by data-cleaning, cross-validation, and deep learning modules, and the resulting validated battery models are stored in a centralized database. Operational data flow from the vehicles to the cloud, while battery model information and control signals are returned to the vehicles, improving the reliability, scalability, and intelligence of battery monitoring and management.
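The cleaning and cross-validation steps of the cyber layer can be sketched as follows. Readings outside plausible sensor ranges are discarded, and validation folds are grouped by vehicle so that a model is always tested on a vehicle it has never seen, which avoids leakage between training and validation data. The `Sample` fields, limit values, and vehicle identifiers are hypothetical, and the cited framework may use different cleaning rules.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    vehicle_id: str
    voltage_v: float
    current_a: float
    temp_c: float

# Hypothetical plausibility limits for per-cell telemetry
LIMITS = {"voltage_v": (2.0, 4.4), "current_a": (-300.0, 300.0), "temp_c": (-40.0, 85.0)}

def is_plausible(s):
    """Reject readings outside physically plausible sensor ranges."""
    return all(lo <= getattr(s, name) <= hi for name, (lo, hi) in LIMITS.items())

def clean(samples):
    return [s for s in samples if is_plausible(s)]

def leave_one_vehicle_out(samples):
    """Yield (held-out vehicle, train, validation) splits grouped by vehicle."""
    for vid in sorted({s.vehicle_id for s in samples}):
        train = [s for s in samples if s.vehicle_id != vid]
        val = [s for s in samples if s.vehicle_id == vid]
        yield vid, train, val

raw = [
    Sample("ev-a", 3.71, 12.0, 25.0),
    Sample("ev-a", 9.99, 12.0, 25.0),   # sensor glitch: implausible voltage
    Sample("ev-b", 3.65, -8.0, 31.0),
    Sample("ev-c", 3.80, 40.0, 28.0),
    Sample("ev-c", 3.78, 35.0, 150.0),  # implausible temperature
]
cleaned = clean(raw)
splits = list(leave_one_vehicle_out(cleaned))
```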

Figure 8.

Architecture of the Cyber-Physical Battery Management System (CP-BMS).

The integration of cloud and edge computing technologies has significantly redefined the architecture of lithium-ion BMS, offering a transformative approach to real-time monitoring, predictive diagnostics, and lifecycle optimization. Cloud-based digital twin frameworks, in particular, enable the creation of virtual replicas of battery systems by fusing real-time sensor streams with historical usage profiles. This fusion supports high-fidelity predictions of SOC, SOH, and degradation trajectories, thereby improving operational reliability, reducing maintenance costs, and extending battery longevity. Such models are especially impactful in industrial applications like electric two- and three-wheeler manufacturing, where early failure prediction and dynamic simulation can mitigate operational risks, although technical implementation hurdles remain [91].
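A simple way to picture how a digital twin fuses a model with incoming sensor data is a scalar Kalman filter. In the sketch below, Coulomb counting propagates the twin's SOC, and an SOC measurement (assumed here to come from an OCV lookup) corrects it in proportion to the relative uncertainty of the two. The linear model, noise variances, and synthetic data are illustrative assumptions; practical twins usually rely on nonlinear variants such as the extended Kalman filter.

```python
def kalman_soc(soc0, p0, currents_a, soc_meas, dt_s, capacity_ah, q=1e-6, r=1e-3):
    """Scalar Kalman filter: Coulomb-counting prediction + SOC measurement update."""
    soc, p, estimates = soc0, p0, []
    for i_a, z in zip(currents_a, soc_meas):
        # Predict: Coulomb counting (discharge current positive), inflate the variance
        soc -= i_a * dt_s / (capacity_ah * 3600.0)
        p += q
        # Update: blend in the measurement according to the Kalman gain
        k = p / (p + r)
        soc += k * (z - soc)
        p *= 1.0 - k
        estimates.append(soc)
    return estimates

# Synthetic run: true SOC starts at 0.9, but the twin is initialised at 0.6
currents = [2.0] * 100                       # 2 A discharge, 10 s steps, 2.5 Ah cell
truth, s = [], 0.9
for i_a in currents:
    s -= i_a * 10.0 / (2.5 * 3600.0)
    truth.append(s)
noisy = [t + (0.01 if k % 2 else -0.01) for k, t in enumerate(truth)]  # deterministic "noise"
est = kalman_soc(0.6, 0.1, currents, noisy, 10.0, 2.5)
```

The large initial variance `p0` lets the first measurements quickly pull the badly initialised state toward the truth, after which the gain shrinks and the filter mostly trusts the model.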

Expanding upon this paradigm, smart cloud-enabled BMS architectures offer a scalable alternative to traditional systems, effectively circumventing limitations related to computational overhead and data storage. These systems enhance fault diagnosis, performance optimization, and condition monitoring while remaining adaptable to emerging storage chemistries such as lithium-sulfur and solid-state batteries. However, challenges including connectivity dependence, cost control, and outage resilience continue to limit their large-scale adoption, necessitating further real-world validation of battery models and algorithms [92]. A complementary direction is the development of cloud-end collaborative BMS frameworks, which leverage cloud infrastructure in conjunction with embedded intelligence. One such architecture integrates gated recurrent unit (GRU) neural networks with transfer learning to improve SOC estimation across heterogeneous battery platforms, achieving both computational efficiency and robust scalability for renewable and electric vehicle applications [93].
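The transfer-learning pattern can be sketched in plain Python. A GRU cell encodes a window of (current, voltage) samples into a hidden state, the encoder is frozen, and only a small linear output head is re-fitted on data from a new battery. In the sketch, random weights stand in for an encoder pretrained on a source battery, biases are omitted for brevity, and the data are synthetic, so it shows the structure of the approach, not the cited architecture or its results.

```python
import math
import random

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class GRUCell:
    """Minimal GRU cell (no biases); weights stand in for a pretrained encoder."""

    def __init__(self, n_in, n_hidden, rng):
        def mat(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
        self.n_hidden = n_hidden
        self.Wz, self.Uz = mat(n_hidden, n_in), mat(n_hidden, n_hidden)
        self.Wr, self.Ur = mat(n_hidden, n_in), mat(n_hidden, n_hidden)
        self.Wh, self.Uh = mat(n_hidden, n_in), mat(n_hidden, n_hidden)

    def step(self, x, h):
        z = [sigmoid(a + b) for a, b in zip(matvec(self.Wz, x), matvec(self.Uz, h))]  # update gate
        r = [sigmoid(a + b) for a, b in zip(matvec(self.Wr, x), matvec(self.Ur, h))]  # reset gate
        rh = [ri * hi for ri, hi in zip(r, h)]
        cand = [math.tanh(a + b) for a, b in zip(matvec(self.Wh, x), matvec(self.Uh, rh))]
        return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]

    def encode(self, seq):
        h = [0.0] * self.n_hidden
        for x in seq:
            h = self.step(x, h)
        return h

def mse(features, targets, w, b):
    preds = [sum(wi * fi for wi, fi in zip(w, f)) + b for f in features]
    return sum((p - y) ** 2 for p, y in zip(preds, targets)) / len(targets)

def fine_tune_head(features, targets, lr=0.05, epochs=200):
    """Full-batch gradient descent on the linear head only; encoder stays frozen."""
    w, b, n = [0.0] * len(features[0]), 0.0, len(features)
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for f, y in zip(features, targets):
            err = sum(wi * fi for wi, fi in zip(w, f)) + b - y
            gw = [g + 2 * err * fj / n for g, fj in zip(gw, f)]
            gb += 2 * err / n
        w = [wi - lr * gi for wi, gi in zip(w, gw)]
        b -= lr * gb
    return w, b

rng = random.Random(0)
encoder = GRUCell(n_in=2, n_hidden=4, rng=rng)

# Hypothetical target-battery windows of (current A, voltage V) pairs, labelled with SOC
windows, labels = [], []
for _ in range(30):
    soc = rng.uniform(0.2, 0.9)
    windows.append([[rng.uniform(0.5, 2.0), 3.3 + 0.8 * soc + rng.uniform(-0.01, 0.01)]
                    for _ in range(8)])
    labels.append(soc)

features = [encoder.encode(seq) for seq in windows]  # frozen encoder
loss_before = mse(features, labels, [0.0] * 4, 0.0)
w, b = fine_tune_head(features, labels)
loss_after = mse(features, labels, w, b)
```

Re-fitting only the head needs far less target-battery data and computation than retraining the recurrent layers, which is what makes this pattern attractive for cloud-end collaboration.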

More broadly, the convergence of cloud computing, artificial intelligence, digital twins, and edge computing technologies is establishing cloud-based BMS (CBMS) as a next-generation solution for energy storage system intelligence. These systems enable real-time analytics and adaptive decision-making while addressing the need for scalable, secure, and efficient data processing pipelines. Nevertheless, key challenges remain in ensuring data integrity, algorithmic robustness, and cyber-resilience, indicating the need for continued innovation in data harmonization, model refinement, and system-level security to fully harness the potential of CBMS technologies [94].
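Data integrity between vehicles and a CBMS is often enforced with message authentication codes. The sketch below signs a telemetry packet with HMAC-SHA256 from Python's standard `hmac` module and rejects any packet whose contents or key do not match. The packet fields and the hard-coded key are hypothetical; a deployed system would provision keys from secure hardware storage and would typically also encrypt the channel.

```python
import hashlib
import hmac
import json

def _canonical(packet):
    """Serialize deterministically so sender and receiver hash identical bytes."""
    return json.dumps(packet, sort_keys=True, separators=(",", ":")).encode()

def sign_packet(packet, key):
    tag = hmac.new(key, _canonical(packet), hashlib.sha256).hexdigest()
    return {"body": packet, "tag": tag}

def verify_packet(signed, key):
    expected = hmac.new(key, _canonical(signed["body"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed["tag"])  # constant-time comparison

key = b"demo-key-provisioned-per-vehicle"  # hypothetical; never hard-code real keys
packet = {"vehicle_id": "ev-042", "seq": 17, "soc": 0.64, "max_cell_temp_c": 31.5}
signed = sign_packet(packet, key)
```

The `seq` field is included so that a receiver could additionally reject replayed packets with non-increasing sequence numbers; that check is not shown here.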

5.4. Distributed AI in Lithium-Ion Battery Systems

Distributed and decentralized architectures are increasingly gaining prominence as viable alternatives to conventional centralized BMS, particularly in applications requiring modular scalability, enhanced reliability, and real-time monitoring capabilities. A distributed BMS based on STM32 microcontrollers exemplifies this trend by reducing computational burdens across the system while retaining high measurement accuracy and performance stability. Experimental validation on lithium iron phosphate battery packs confirmed its efficacy in maintaining precise voltage and temperature readings, as well as achieving effective cell balancing and safety protection. This system demonstrates the practical benefits of decentralized processing in managing energy distribution, monitoring system states, and optimizing battery pack reliability [95].
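The balancing and protection logic that a distributed slave controller might run locally can be sketched in a few lines. Cells that sit more than a threshold above the lowest cell are switched to a bleed resistor (passive balancing), and simple limit checks raise protection faults. The voltage and temperature limits below are assumed LFP-style values chosen for illustration, not the settings of the cited STM32 system; real limits come from the cell datasheet.

```python
# Assumed LFP-style limits for illustration only
V_MIN, V_MAX, T_MAX_C = 2.5, 3.65, 55.0

def balance_commands(cell_v, threshold_v=0.01, min_balance_v=3.4):
    """Passive balancing: bleed cells sitting above the lowest cell by > threshold.

    Balancing is only enabled above min_balance_v, where the flat LFP voltage
    curve becomes steep enough for voltage differences to reflect SOC differences.
    """
    v_min = min(cell_v)
    return [(v - v_min) > threshold_v and v > min_balance_v for v in cell_v]

def protection_faults(cell_v, temps_c):
    """Return readable faults for over/under-voltage and over-temperature."""
    faults = []
    for i, v in enumerate(cell_v):
        if v > V_MAX:
            faults.append(f"cell {i}: overvoltage {v:.3f} V")
        elif v < V_MIN:
            faults.append(f"cell {i}: undervoltage {v:.3f} V")
    for j, t in enumerate(temps_c):
        if t > T_MAX_C:
            faults.append(f"sensor {j}: overtemperature {t:.1f} C")
    return faults
```

For example, `balance_commands([3.495, 3.530, 3.490, 3.560])` enables bleeding only on the second and fourth cells.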

Building upon this, decentralized smart BMS frameworks have evolved further by integrating cloud-connected controllers, charge regulation systems, and networked sensors capable of continuously assessing key battery parameters, including SOC, SOH, current, voltage, and thermal conditions. Leveraging Petri Net modeling, this architecture enables intelligent energy optimization, predictive diagnostics, and remote-control functions. Its successful deployment in off-grid environments underscores its potential to improve battery lifespan, enhance safety, and support renewable energy utilization by dynamically adapting to fluctuating operational conditions [96]. At the core of these advancements lies the increasing role of AI and machine learning in reshaping battery research and development. These techniques allow for rapid interpretation of complex, high-dimensional datasets, identifying critical material, fabrication, and performance patterns that would otherwise remain inaccessible via traditional methods. While ML has demonstrated promise in areas such as cycle life prediction, degradation diagnostics, and safety forecasting, its full adoption will require interdisciplinary collaboration among experimentalists, data scientists, and domain experts to ensure data quality, model interpretability, and real-world applicability. Nevertheless, AI-driven discovery frameworks are poised to accelerate progress in battery innovation, offering a pathway to address some of the most persistent challenges in energy storage science [97].
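To show what Petri net modeling of charge regulation involves, the sketch below implements a basic place/transition net. A transition is enabled only when each of its input places holds enough tokens, and firing it moves tokens from input to output places. The places and transitions form a hypothetical off-grid charging cycle; the structure of the net used in the cited work is not reproduced here.

```python
class PetriNet:
    """Place/transition net with integer token counts and arc weights."""

    def __init__(self, marking, transitions):
        self.marking = dict(marking)
        self.transitions = transitions  # name -> (input arcs, output arcs)

    def enabled(self, name):
        inputs, _ = self.transitions[name]
        return all(self.marking.get(p, 0) >= w for p, w in inputs.items())

    def fire(self, name):
        if not self.enabled(name):
            raise ValueError(f"transition {name!r} is not enabled")
        inputs, outputs = self.transitions[name]
        for p, w in inputs.items():
            self.marking[p] -= w
        for p, w in outputs.items():
            self.marking[p] = self.marking.get(p, 0) + w

# Hypothetical charge-regulation net for an off-grid battery
net = PetriNet(
    marking={"idle": 1, "pv_surplus": 0},
    transitions={
        "start_charge": ({"idle": 1, "pv_surplus": 1}, {"charging": 1}),
        "charge_done": ({"charging": 1}, {"full": 1}),
        "discharge": ({"full": 1}, {"idle": 1}),
    },
)

blocked_initially = not net.enabled("start_charge")  # no solar surplus yet
net.marking["pv_surplus"] = 1                        # e.g., a sensor reports surplus PV power
for t in ("start_charge", "charge_done", "discharge"):
    net.fire(t)
```

Because enabling conditions are explicit, properties such as "charging never starts without surplus power" can be checked by exploring reachable markings rather than by testing code paths.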

5.5. Cloud Platforms for Lithium-Ion Battery Health Prediction

The convergence of cloud computing and AI in BMS represents a pivotal advancement in the predictive modeling and real-time supervision of lithium-ion batteries. By offloading computational workloads to cloud platforms, BMS architectures can process extensive operational datasets, enabling more accurate state estimation, degradation tracking, and predictive maintenance. This cloud-enabled framework supports scalable and efficient management strategies across both electric vehicle fleets and stationary energy storage systems. A key innovation lies in the integration of data-driven models with physics-informed machine learning, which enhances the system’s ability to capture complex nonlinear battery behaviors and long-range interdependencies often missed by traditional methods. Such hybrid approaches offer improved diagnostic fidelity and responsiveness, though their effectiveness depends on the continued refinement of learning algorithms and seamless communication between edge devices and centralized cloud infrastructure. As this technology matures, AI-enhanced cloud platforms not only address longstanding limitations in safety and performance forecasting but also facilitate the transition of academic research into practical, real-world energy storage solutions [87].
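A physics-informed training objective typically adds a penalty for violating known physics to the usual data-fitting loss. The sketch below computes such a loss for a predicted SOC trajectory: a mean-squared error on the (possibly sparse) labelled steps plus a weighted residual of the discretized Coulomb-counting equation dSOC/dt = -I/Q. The weighting `lam`, the sparse labels, and the synthetic trajectories are assumptions; a learning model would minimize this quantity during training rather than simply evaluate it.

```python
def physics_informed_loss(soc_pred, soc_obs, currents_a, dt_s, capacity_ah, lam=10.0):
    """Data MSE on observed steps plus a Coulomb-counting residual penalty."""
    # Data term: only steps with an observation (None marks a missing label)
    pairs = [(p, o) for p, o in zip(soc_pred, soc_obs) if o is not None]
    data_term = sum((p - o) ** 2 for p, o in pairs) / len(pairs)
    # Physics term: dSOC/dt = -I / Q, discretized between consecutive steps
    residuals = [
        soc_pred[k + 1] - soc_pred[k] + currents_a[k] * dt_s / (capacity_ah * 3600.0)
        for k in range(len(soc_pred) - 1)
    ]
    physics_term = sum(r * r for r in residuals) / len(residuals)
    return data_term + lam * physics_term, data_term, physics_term

currents = [1.0] * 5                            # 1 A for five 36 s steps on a 1 Ah cell
consistent = [1.0 - 0.01 * k for k in range(6)]
observed = [1.0, None, None, 0.97, None, None]  # sparse labels
_, data_ok, phys_ok = physics_informed_loss(consistent, observed, currents, 36.0, 1.0)
bumped = consistent[:3] + [consistent[3] + 0.05] + consistent[4:]
_, _, phys_bad = physics_informed_loss(bumped, observed, currents, 36.0, 1.0)
```

The physics term constrains the prediction at unlabelled steps, which is how such hybrid losses can capture long-range behaviour with fewer labels than a purely data-driven fit.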

5.6. Scalable Cloud Solutions for Lithium-Ion Battery Optimization

Scalable cloud-based architectures are playing an increasingly critical role in advancing lithium-ion battery optimization by integrating real-time monitoring with long-term performance analytics. A recent framework exemplifies this trend by combining automotive-grade hardware with efficient Controller Area Network (CAN) data decoding and visualization capabilities, enabling precise tracking of essential battery parameters such as cell voltage and temperature. This system not only facilitates the early identification of weak cells, thereby enhancing operational safety and performance, but also balances data resolution with computational efficiency, a critical trade-off in cloud-connected BMS implementations. Experimental validation confirms the framework’s robustness, revealing how variations in sampling rate and server location influence latency and resource utilization. These insights are vital for the deployment of digital twin models and real-time analytics in electric vehicle applications, where responsiveness and reliability are paramount. Moreover, the architecture’s capacity for long-term data retention supports second-life battery applications, offering a unified solution that bridges real-time diagnostics with retrospective performance evaluation essential for enabling predictive maintenance, extended lifecycle management, and future reuse strategies in electric mobility [98].
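The decoding and weak-cell screening steps can be illustrated with Python's standard `struct` module. The 8-byte frame layout used here (four little-endian unsigned 16-bit cell voltages in millivolts) is a hypothetical example; in practice the signal layout is defined by the pack vendor's CAN database (DBC) file. Cells sagging more than a chosen margin below the pack median are flagged as weak.

```python
import struct
from statistics import median

def decode_cell_voltages(payload):
    """Decode a hypothetical 8-byte frame: four little-endian uint16 voltages in mV."""
    if len(payload) != 8:
        raise ValueError("expected an 8-byte CAN payload")
    return [mv / 1000.0 for mv in struct.unpack("<4H", payload)]

def weak_cells(voltages, max_dev_v=0.03):
    """Indices of cells more than max_dev_v below the median cell voltage."""
    med = median(voltages)
    return [i for i, v in enumerate(voltages) if med - v > max_dev_v]

frame = struct.pack("<4H", 3650, 3648, 3580, 3652)  # simulated frame payload
volts = decode_cell_voltages(frame)
flagged = weak_cells(volts)
```

Comparing against the median rather than the mean keeps a single failing cell from dragging the reference down. Raising the sampling rate makes such deviations visible sooner but increases the data volume the cloud must ingest, which is the resolution-versus-efficiency trade-off discussed above.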

5.7. Low-Latency Edge Computing in Lithium-Ion BMS