IP-YOLOv8: A Multi-Scale Pest Detection Algorithm for Field-Scale Applications

Abstract

1. Introduction

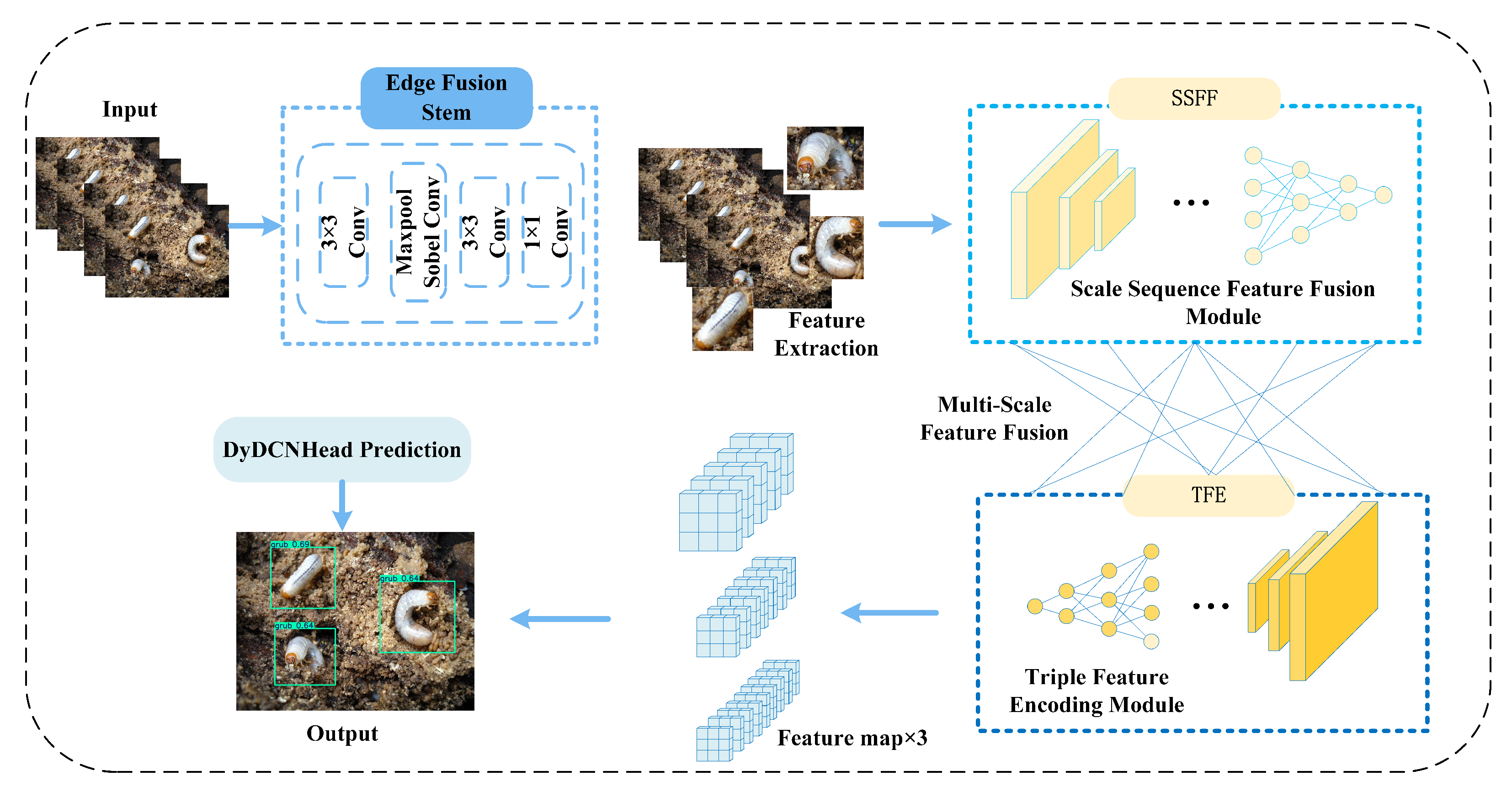

- A multi-scale feature fusion architecture is introduced, consisting of the Scale Sequence Feature Fusion (SSFF) module and the Triple Feature Encoding (TFE) module, which leverages the high-resolution information in shallow feature maps to enhance the model’s multi-scale feature fusion capability.

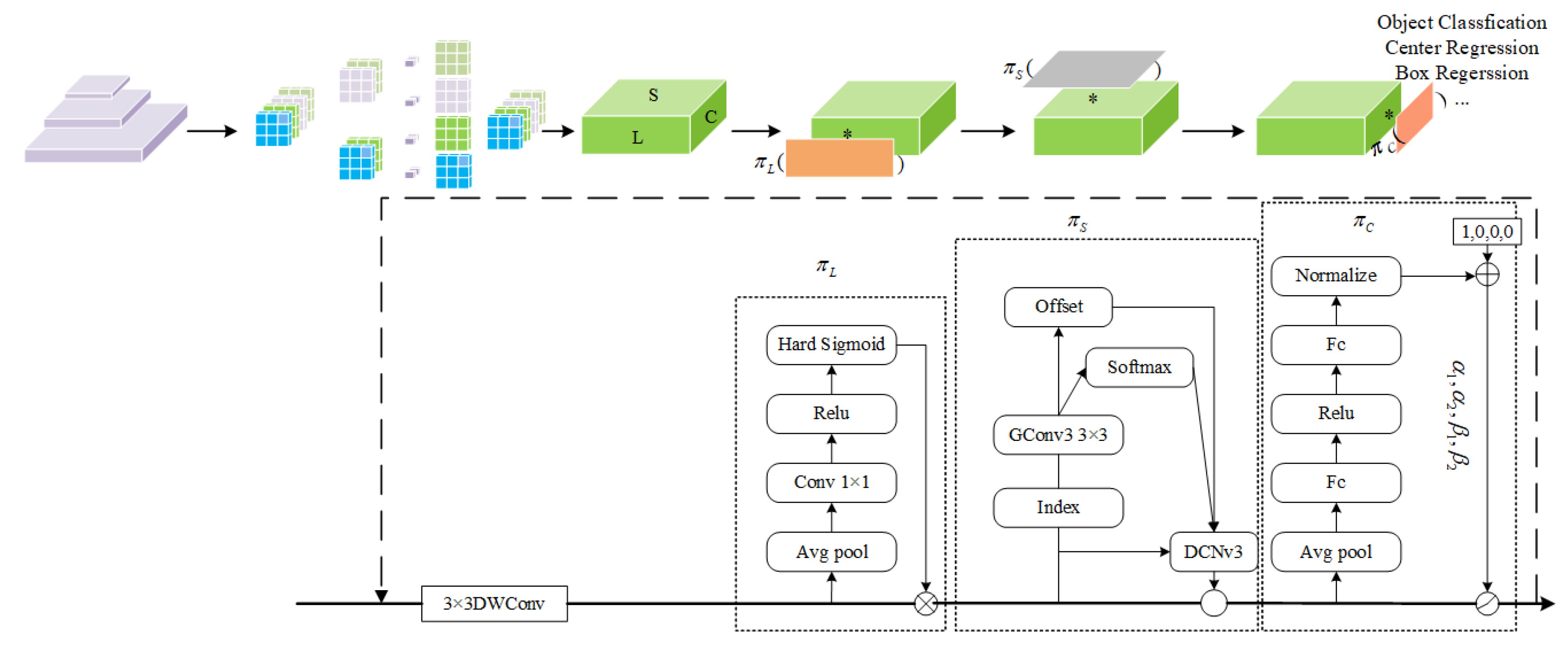

- A detection head for pest detection, DyDCNHead, is proposed. This head uses learnable dynamic sampling points, which enable it to adapt more efficiently to large-scale variations and diverse pest morphologies, thereby improving detection accuracy and robustness.

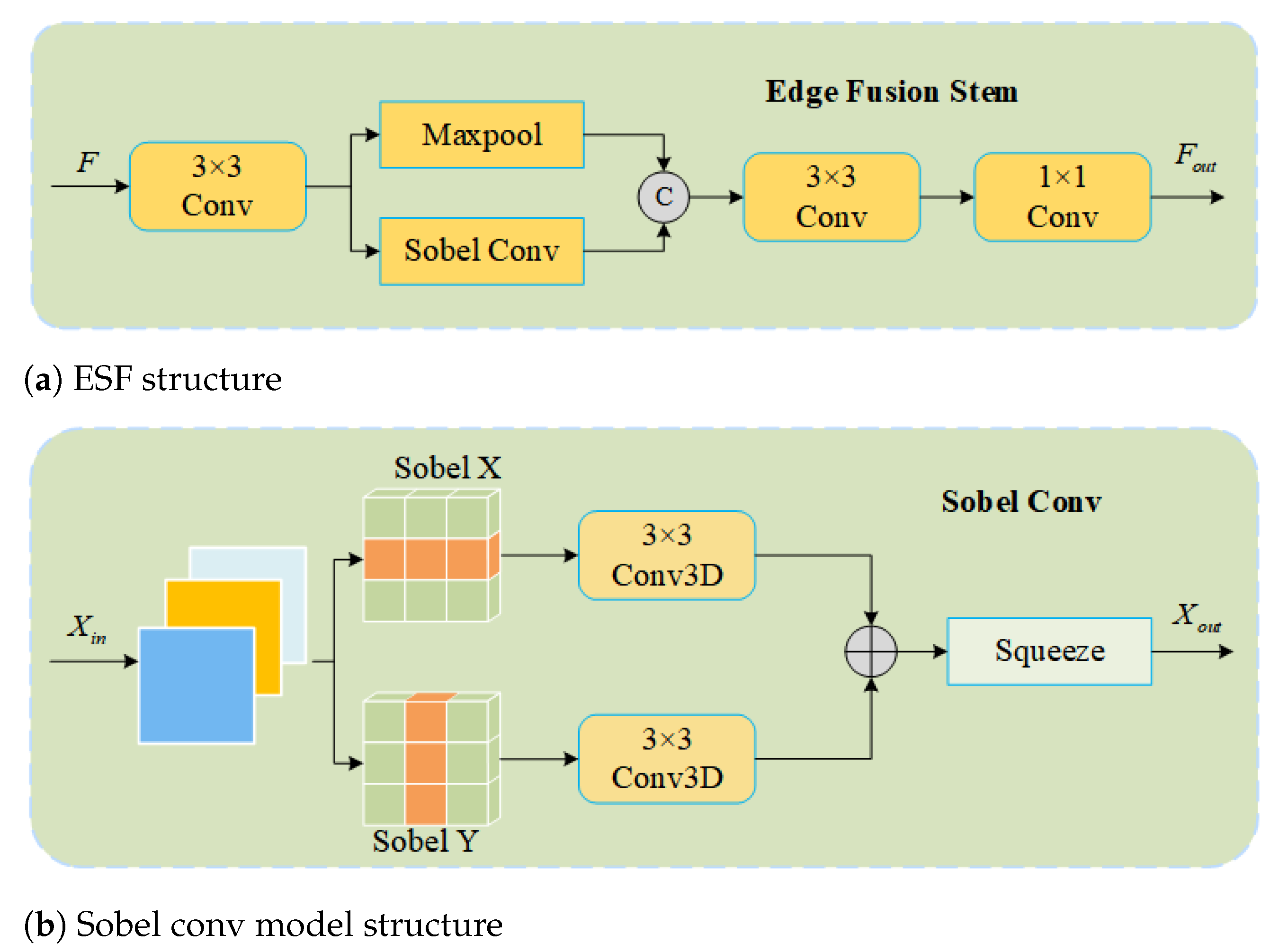

- An edge feature fusion module (Edge Fusion Stem) is designed to enhance fine-grained edge information, which enables the model to distinguish edge features from background information more accurately, thereby improving detection performance.
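The "learnable dynamic sampling points" in the DyDCNHead contribution can be pictured with a minimal, pure-Python sketch of deformable sampling on a single-channel feature map. It is an illustration under stated assumptions, not the authors' implementation: the function names `bilinear_sample` and `deformable_response` are hypothetical, one group and one channel are used, and the offsets and modulation scalars are set by hand, whereas a deformable convolution layer would predict them from the input features.

```python
import math


def bilinear_sample(feature, y, x):
    """Read a 2D feature map (list of rows) at a fractional (y, x) position.

    Positions outside the map contribute 0.0 (zero padding, an assumption).
    """
    h, w = len(feature), len(feature[0])
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0

    def at(r, c):
        return feature[r][c] if 0 <= r < h and 0 <= c < w else 0.0

    top = (1 - dx) * at(y0, x0) + dx * at(y0, x0 + 1)
    bottom = (1 - dx) * at(y0 + 1, x0) + dx * at(y0 + 1, x0 + 1)
    return (1 - dy) * top + dy * bottom


def deformable_response(feature, center, weights, offsets, modulation):
    """Weighted sum over a 3x3 grid whose taps are shifted by per-tap offsets.

    weights:    9 kernel weights (fixed here, learned in a real layer)
    offsets:    9 (dy, dx) shifts, hand-set here (hypothetical values)
    modulation: 9 per-tap scalars that rescale each sampled value
    """
    cy, cx = center
    grid = [(gy, gx) for gy in (-1, 0, 1) for gx in (-1, 0, 1)]
    total = 0.0
    for (gy, gx), w, (oy, ox), m in zip(grid, weights, offsets, modulation):
        total += w * m * bilinear_sample(feature, cy + gy + oy, cx + gx + ox)
    return total


# Toy 4x4 feature map whose value grows linearly: feature[r][c] = 4*r + c.
feature = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
weights = [1.0 / 9] * 9   # averaging kernel
ones = [1.0] * 9          # no modulation

# Zero offsets reduce to an ordinary 3x3 convolution tap pattern.
plain = deformable_response(feature, (1, 1), weights, [(0.0, 0.0)] * 9, ones)
# Shifting every tap half a pixel to the right moves the receptive field.
shifted = deformable_response(feature, (1, 1), weights, [(0.0, 0.5)] * 9, ones)
```

With zero offsets the response is the plain 3x3 average around the centre; non-zero offsets let the same kernel read from displaced locations, which is the mechanism that allows the sampling pattern to follow differently sized or shaped targets.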

| Model | Classes | mAP50 | GFLOPs | Param |
|---|---|---|---|---|
| GLU-YOLOv8 [19] | 102 | 58.7% | - | - |
| Maize-YOLO [20] | 13 | 76.3% | 38.9 G | 33.4 M |
| PestLite [21] | 102 | 57.1% | 16.3 G | 6.34 M |
| Yolo-Pest [22] | 102 | 57.1% | - | 5.8 M |
| C3M-YOLO [23] | 102 | 57.2% | 16.1 G | 7.1 M |
| CSWin + FRC + RPSA [24] | 102 | 57.3% | 261.2 G | 41.4 M |
| YOLOv8-SCS [25] | 10 | 87.9% | 16.8 G | 6.2 M |
| SAW-YOLO [26] | 13 | 90.3% | - | 4.58 M |

2. Materials and Methods

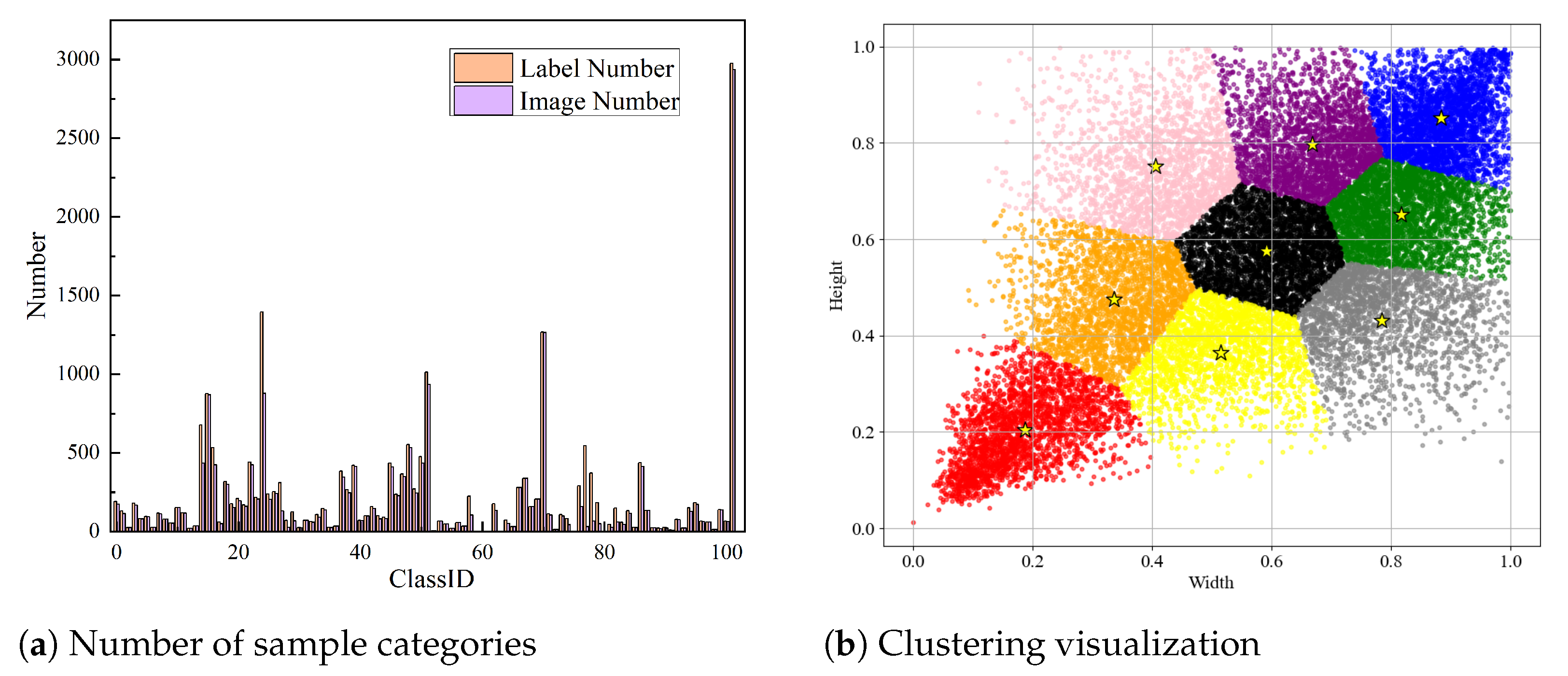

2.1. IP102 Dataset

2.2. Data Analysis

2.3. YOLOv8 Object Detection Algorithm

2.4. Model Improvement

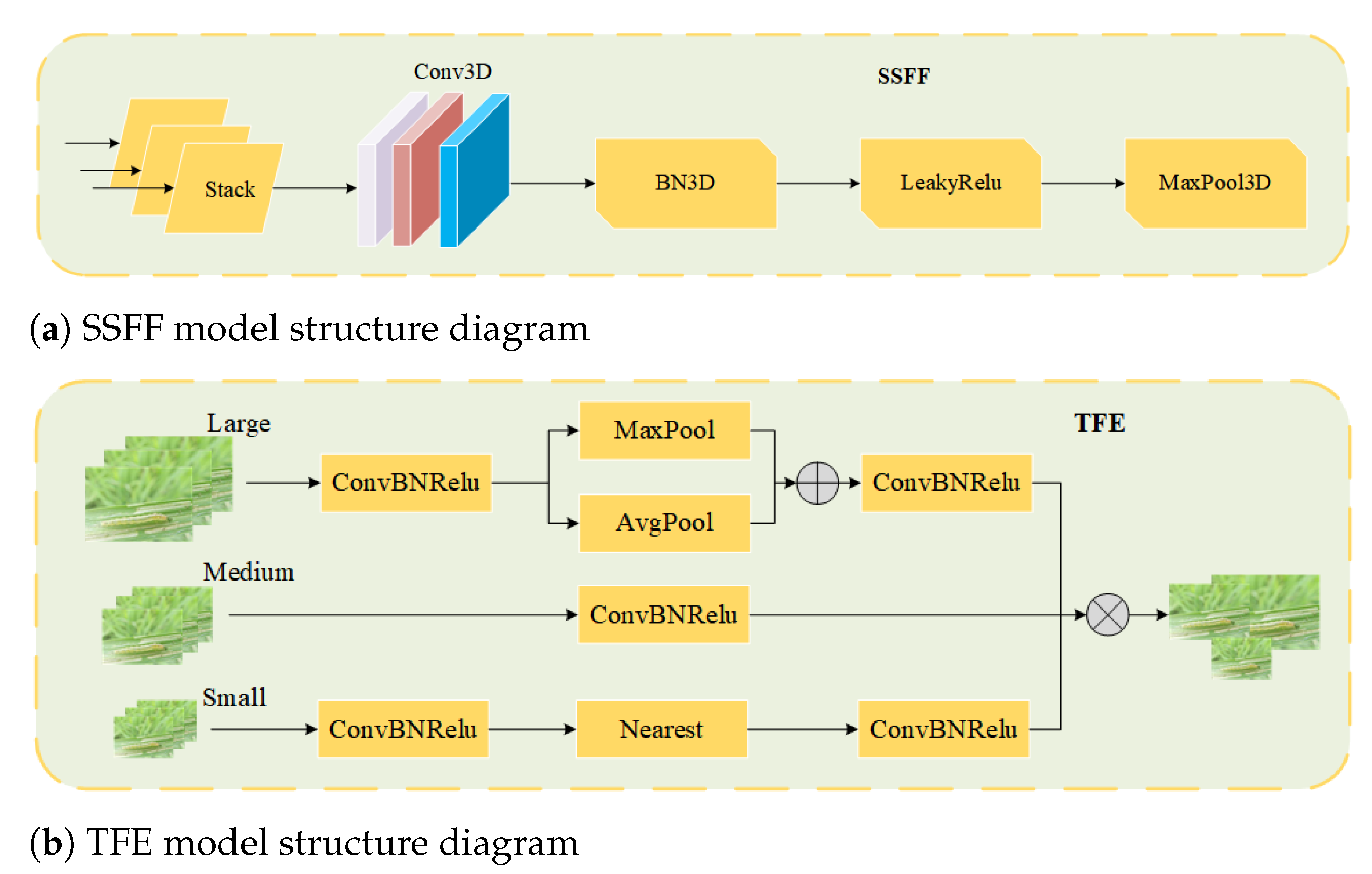

2.4.1. Multi-Scale Feature Fusion

2.4.2. Dynamic Detection Head Based on Deformable Convolution v3

2.4.3. Edge Fusion Stem

3. Experiments and Analysis

3.1. Experimental Environment

3.2. Metrics

3.3. Ablation Study

3.4. Model Comparison Experiment

3.5. Comparison with Current Methods

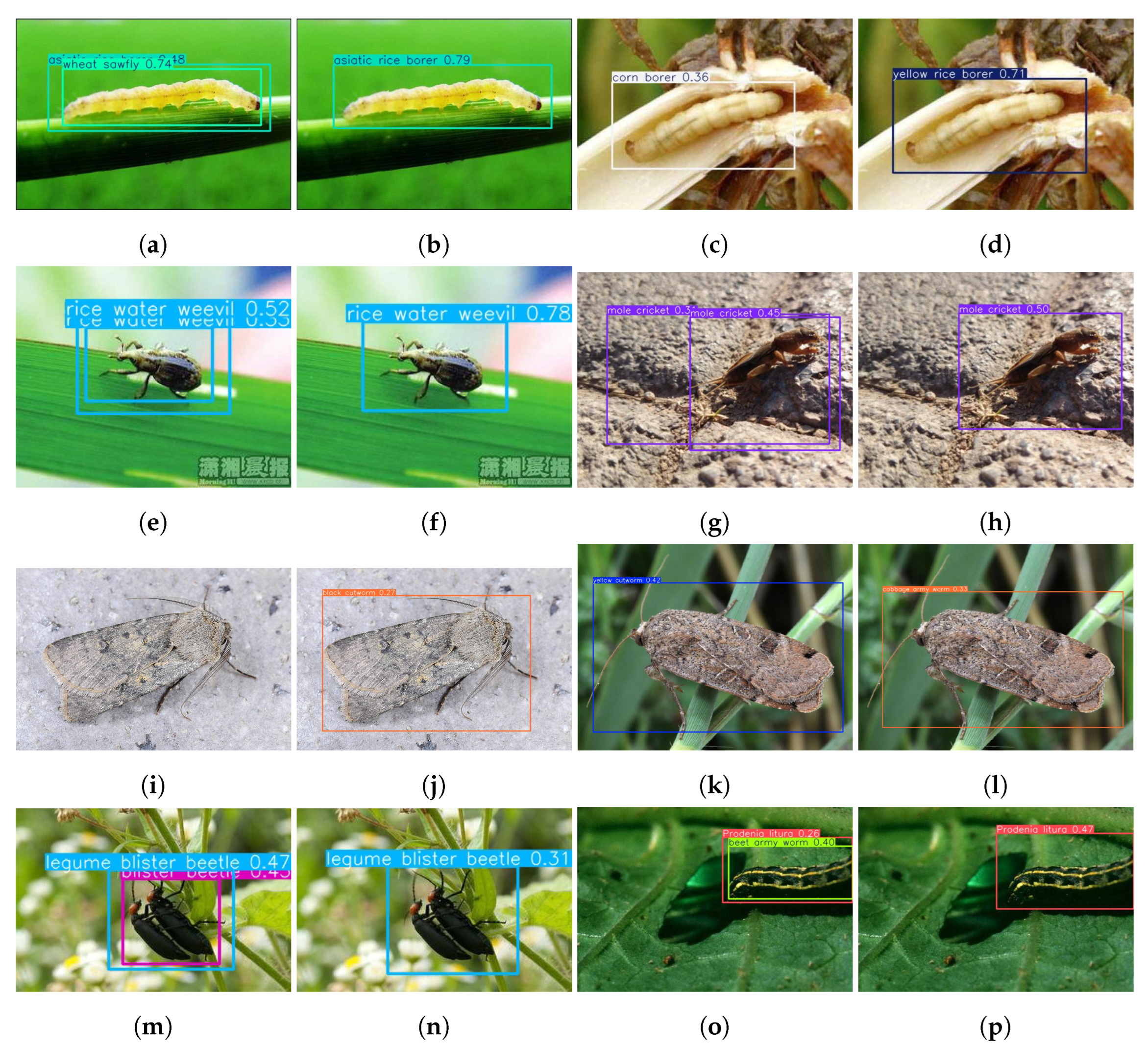

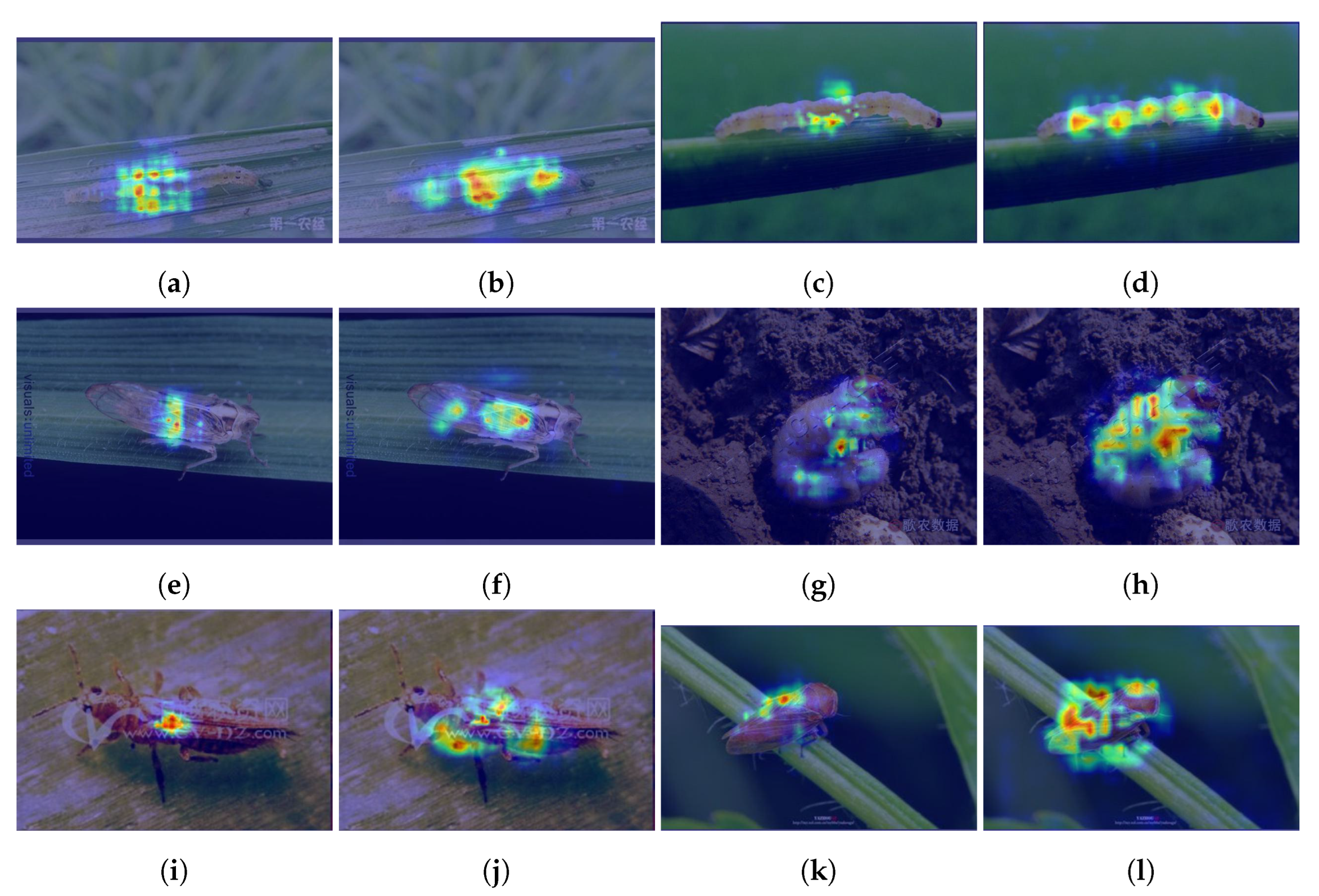

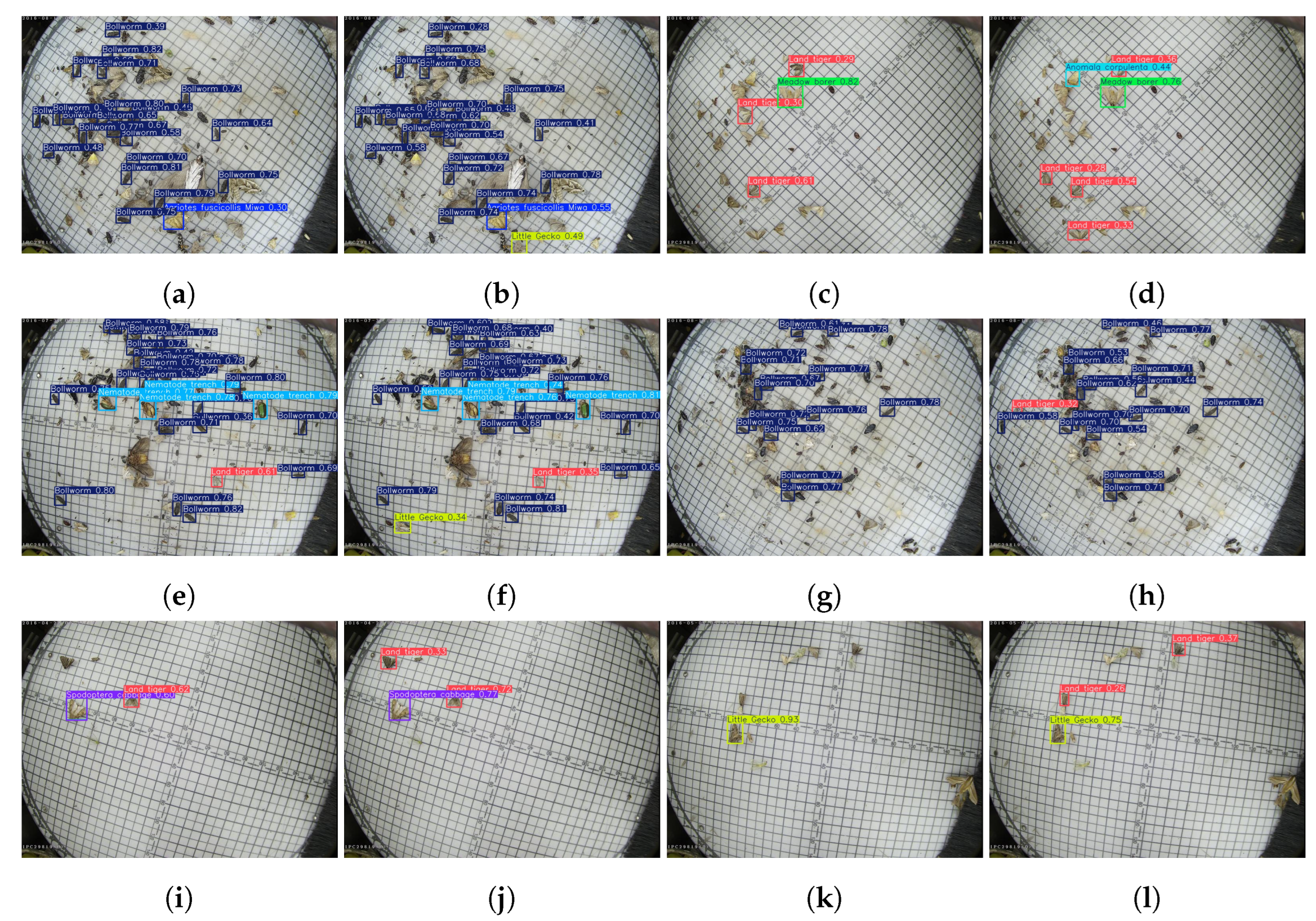

3.6. Visualization Analysis

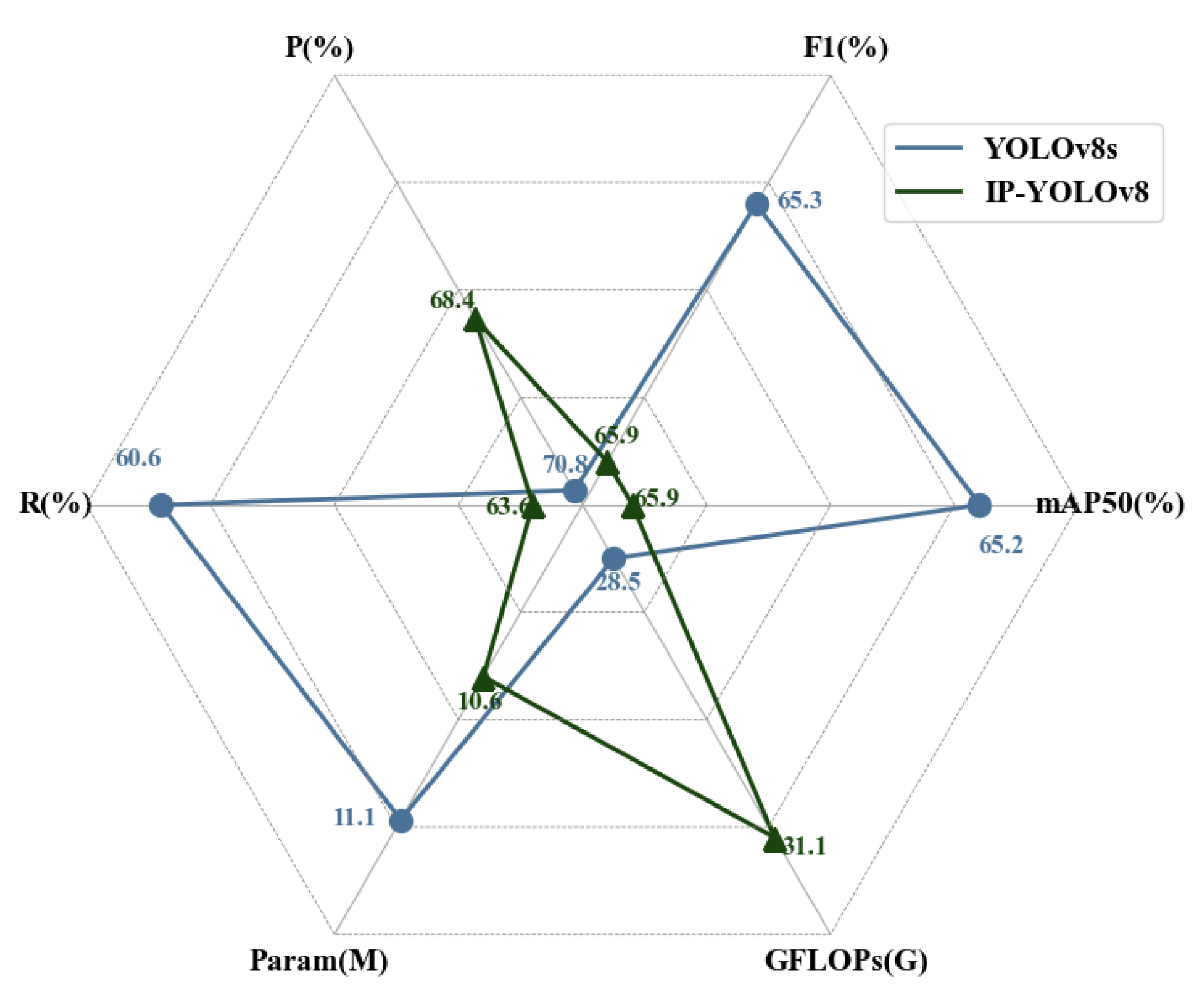

3.7. Comparison Experiments on the Pest24 Dataset

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Tian, Y.; Wang, S.; Li, E.; Yang, G.; Liang, Z.; Tan, M. MD-YOLO: Multi-scale Dense YOLO for small target pest detection. Comput. Electron. Agric. 2023, 213, 108233.
2. Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.
3. Yao, Q.; Feng, J.; Tang, J.; Xu, W.-G.; Zhu, X.-H.; Yang, B.-J.; Lü, J.; Xie, Y.-Z.; Yao, B.; Wu, S.-Z.; et al. Development of an automatic monitoring system for rice light-trap pests based on machine vision. J. Integr. Agric. 2020, 19, 2500–2513.
4. Huang, R.; Yao, T.; Zhan, C.; Zhang, G.; Zheng, Y. A motor-driven and computer vision-based intelligent e-trap for monitoring citrus flies. Agriculture 2021, 11, 460.
5. Ramalingam, B.; Mohan, R.E.; Pookkuttath, S.; Gómez, B.F.; Sairam Borusu, C.S.C.; Wee Teng, T.; Tamilselvam, Y.K. Remote insects trap monitoring system using deep learning framework and IoT. Sensors 2020, 20, 5280.
6. Gao, Y.; Xue, X.; Qin, G.; Li, K.; Liu, J.; Zhang, Y.; Li, X. Application of machine learning in automatic image identification of insects-a review. Ecol. Inform. 2024, 80, 102539.
7. Pattnaik, G.; Parvathy, K. Machine learning-based approaches for tomato pest classification. TELKOMNIKA Telecommun. Comput. Electron. Control. 2022, 20, 321–328.
8. Qing, Y.; Chen, G.T.; Zheng, W.; Zhang, C.; Yang, B.J.; Jian, T. Automated detection and identification of white-backed planthoppers in paddy fields using image processing. J. Integr. Agric. 2017, 16, 1547–1557.
9. Ebrahimi, M.; Khoshtaghaza, M.H.; Minaei, S.; Jamshidi, B. Vision-based pest detection based on SVM classification method. Comput. Electron. Agric. 2017, 137, 52–58.
10. Shoaib, M.; Sadeghi-Niaraki, A.; Ali, F.; Hussain, I.; Khalid, S. Leveraging deep learning for plant disease and pest detection: A comprehensive review and future directions. Front. Plant Sci. 2025, 16, 1538163.
11. Leite, D.; Brito, A.; Faccioli, G. Advancements and outlooks in utilizing Convolutional Neural Networks for plant disease severity assessment: A comprehensive review. Smart Agric. Technol. 2024, 9, 100573.
12. Chen, J.; Chen, W.; Nanehkaran, Y.A.; Suzauddola, M. MAM-IncNet: An end-to-end deep learning detector for Camellia pest recognition. Multimed. Tools Appl. 2024, 83, 31379–31394.
13. Li, C.; Chen, S.; Ma, Y.; Song, M.; Tian, X.; Cui, H. Wheat Pest Identification Based on Deep Learning Techniques. In Proceedings of the 2024 IEEE 7th International Conference on Big Data and Artificial Intelligence (BDAI), Beijing, China, 5–7 July 2024; pp. 87–91.
14. Chen, Y.; Chen, M.; Guo, M.; Wang, J.; Zheng, N. Pest recognition based on multi-image feature localization and adaptive filtering fusion. Front. Plant Sci. 2023, 14, 1282212.
15. Dong, S.; Du, J.; Jiao, L.; Wang, F.; Liu, K.; Teng, Y.; Wang, R. Automatic crop pest detection oriented multiscale feature fusion approach. Insects 2022, 13, 554.
16. Chakrabarty, S.; Shashank, P.R.; Deb, C.K.; Haque, M.A.; Thakur, P.; Kamil, D.; Marwaha, S.; Dhillon, M.K. Deep learning-based accurate detection of insects and damage in cruciferous crops using YOLOv5. Smart Agric. Technol. 2024, 9, 100663.
17. Chen, H.; Wen, C.; Zhang, L.; Ma, Z.; Liu, T.; Wang, G.; Yu, H.; Yang, C.; Yuan, X.; Ren, J. Pest-PVT: A model for multi-class and dense pest detection and counting in field-scale environments. Comput. Electron. Agric. 2025, 230, 109864.
18. Wu, X.; Zhan, C.; Lai, Y.K.; Cheng, M.M.; Yang, J. Ip102: A large-scale benchmark dataset for insect pest recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 8787–8796.
19. Yue, G.; Liu, Y.; Niu, T.; Liu, L.; An, L.; Wang, Z.; Duan, M. Glu-yolov8: An improved pest and disease target detection algorithm based on yolov8. Forests 2024, 15, 1486.
20. Yang, S.; Xing, Z.; Wang, H.; Dong, X.; Gao, X.; Liu, Z.; Zhang, X.; Li, S.; Zhao, Y. Maize-YOLO: A new high-precision and real-time method for maize pest detection. Insects 2023, 14, 278.
21. Dong, Q.; Sun, L.; Han, T.; Cai, M.; Gao, C. PestLite: A novel YOLO-based deep learning technique for crop pest detection. Agriculture 2024, 14, 228.
22. Xiang, Q.; Huang, X.; Huang, Z.; Chen, X.; Cheng, J.; Tang, X. YOLO-pest: An insect pest object detection algorithm via CAC3 module. Sensors 2023, 23, 3221.
23. Zhang, L.; Zhao, C.; Feng, Y.; Li, D. Pests identification of ip102 by yolov5 embedded with the novel lightweight module. Agronomy 2023, 13, 1583.
24. Liu, H.; Zhan, Y.; Sun, J.; Mao, Q.; Wu, T. A transformer-based model with feature compensation and local information enhancement for end-to-end pest detection. Comput. Electron. Agric. 2025, 231, 109920.
25. Song, H.; Yan, Y.; Deng, S.; Jian, C.; Xiong, J. Innovative lightweight deep learning architecture for enhanced rice pest identification. Phys. Scr. 2024, 99, 096007.
26. Wu, X.; Liang, J.; Yang, Y.; Li, Z.; Jia, X.; Pu, H.; Zhu, P. SAW-YOLO: A Multi-Scale YOLO for Small Target Citrus Pests Detection. Agronomy 2024, 14, 1571.
27. Kecen, L.; Xiaoqiang, W.; Hao, L.; Leixiao, L.; Yanyan, Y.; Chuang, M.; Jing, G. Survey of one-stage small object detection methods in deep learning. J. Front. Comput. Sci. Technol. 2022, 16, 41.
28. Staff, A.C. The two-stage placental model of preeclampsia: An update. J. Reprod. Immunol. 2019, 134, 1–10.
29. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.
30. Lin, T. Focal Loss for Dense Object Detection. arXiv 2017, arXiv:1708.02002.
31. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.
32. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.
33. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.
34. Ren, S. Faster r-cnn: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.
35. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.
36. Dai, J.; Li, Y.; He, K.; Sun, J. R-fcn: Object detection via region-based fully convolutional networks. In Proceedings of the 30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 5–10 December 2016. Volume 29.
37. Kang, M.; Ting, C.M.; Ting, F.F.; Phan, R.C.W. ASF-YOLO: A novel YOLO model with attentional scale sequence fusion for cell instance segmentation. Image Vis. Comput. 2024, 147, 105057.
38. Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic head: Unifying object detection heads with attentions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 7373–7382.
39. Wang, W.; Dai, J.; Chen, Z.; Huang, Z.; Li, Z.; Zhu, X.; Hu, X.; Lu, T.; Lu, L.; Li, H.; et al. Internimage: Exploring large-scale vision foundation models with deformable convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 14408–14419.
40. Kang, C.; Jiao, L.; Liu, K.; Liu, Z.; Wang, R. Precise Crop Pest Detection Based on Co-Ordinate-Attention-Based Feature Pyramid Module. Insects 2025, 16, 103.
41. Zhang, L.; Ding, G.; Li, C.; Li, D. DCF-Yolov8: An improved algorithm for aggregating low-level features to detect agricultural pests and diseases. Agronomy 2023, 13, 2012.
42. Zhang, L.; Cui, H.; Sun, J.; Li, Z.; Wang, H.; Li, D. CLT-YOLOX: Improved YOLOX based on cross-layer transformer for object detection method regarding insect pest. Agronomy 2023, 13, 2091.
43. Wang, Q.J.; Zhang, S.Y.; Dong, S.F.; Zhang, G.C.; Yang, J.; Li, R.; Wang, H.Q. Pest24: A large-scale very small object data set of agricultural pests for multi-target detection. Comput. Electron. Agric. 2020, 175, 105585.

| Parameter | Value |
|---|---|
| epoch | 300 |
| lr0 | 0.01 |
| momentum | 0.937 |
| weight_decay | 0.0005 |
| batch_size | 8 |
| optimizer | SGD |
| Image size | 640 |
| Close_mosaic | 0 |
| Learning Rate Scheduling Strategy | Cosine Annealing |
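The training settings in the table can be written down as a configuration, and the cosine annealing schedule can be evaluated per epoch. The sketch below is a hedged illustration: the key names follow the Ultralytics training arguments as an assumed mapping (the authors' training script is not shown), the final learning-rate fraction `LRF` is an assumed value that the table does not report, and `cosine_lr` is a hypothetical helper implementing the textbook cosine annealing formula.

```python
import math

# Values copied from the hyperparameter table; key names are an assumed
# mapping onto Ultralytics-style training arguments.
train_args = {
    "epochs": 300,
    "lr0": 0.01,
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "batch": 8,
    "optimizer": "SGD",
    "imgsz": 640,
    "close_mosaic": 0,
    "cos_lr": True,
}

# ASSUMPTION: final LR as a fraction of lr0. Not reported in the table.
LRF = 0.01


def cosine_lr(epoch, epochs=train_args["epochs"], lr0=train_args["lr0"], lrf=LRF):
    """Cosine annealing from lr0 at epoch 0 down to lr0 * lrf at the last epoch."""
    lr_min = lr0 * lrf
    return lr_min + 0.5 * (lr0 - lr_min) * (1 + math.cos(math.pi * epoch / epochs))


# Learning rate at the start, middle, and end of training.
schedule = {e: cosine_lr(e) for e in (0, 150, 300)}
```

The schedule starts at `lr0`, passes through the midpoint between `lr0` and the floor halfway through training, and ends at the floor; changing `LRF` moves only the floor.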

| ASF | Head | EFS | P (%) | R (%) | mAP50 (%) | mAP50:95 (%) | GFLOPs (G) | Param (M) |
|---|---|---|---|---|---|---|---|---|
| | | | 57.0 | 52.7 | 57.0 | 37.1 | 28.7 | 11.1 |
| √ | | | 58.7 | 52.2 | 58.1 | 37.5 | 30.3 | 11.3 |
| | √ | | 56.9 | 56.7 | 58.5 | 37.9 | 29.4 | 10.5 |
| | | √ | 53.5 | 61.0 | 58.8 | 38.4 | 29.1 | 11.1 |
| √ | √ | | 55.6 | 55.9 | 59.0 | 38.3 | 31.0 | 10.6 |
| √ | | √ | 57.1 | 57.0 | 58.5 | 37.9 | 30.4 | 11.3 |
| | √ | √ | 55.8 | 58.7 | 58.9 | 38.1 | 30.0 | 10.5 |
| √ | √ | √ | 60.1 | 54.2 | 59.2 | 38.4 | 31.3 | 10.6 |
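The net effect of the three modules can be read off by subtracting the baseline row (no modules enabled) from the final row (all three enabled). The short sketch below does that arithmetic on the values copied from the ablation table; the dictionary keys are illustrative names, with `mAP_multi` standing for the stricter multi-threshold mAP column and `params_M` for the Param column.

```python
# First row of the ablation table: no module enabled.
baseline = {"P": 57.0, "R": 52.7, "mAP50": 57.0, "mAP_multi": 37.1, "params_M": 11.1}
# Last row of the ablation table: ASF, Head, and EFS all enabled.
full = {"P": 60.1, "R": 54.2, "mAP50": 59.2, "mAP_multi": 38.4, "params_M": 10.6}

# Absolute change per column, rounded to the table's one-decimal precision.
delta = {key: round(full[key] - baseline[key], 1) for key in baseline}

# Relative change in parameter count, in percent.
param_change_pct = round(100 * (full["params_M"] - baseline["params_M"]) / baseline["params_M"], 1)
```

On these numbers the full model gains 2.2 points of mAP50 and 3.1 points of precision over the baseline while the parameter count drops by 0.5 M.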

| Model | P (%) | R (%) | mAP50 (%) | mAP50:95 (%) | Param (M) | GFLOPs (G) |
|---|---|---|---|---|---|---|
| YOLOv3-tiny | 49.5 | 54.5 | 53.7 | 32.6 | 12.1 | 19.1 |
| YOLOv5s | 55.8 | 54.4 | 57.7 | 37.4 | 9.1 | 24.0 |
| YOLOv6 | 56.7 | 54.8 | 57.2 | 36.9 | 16.6 | 45.6 |
| YOLOv7 | 53.9 | 53.3 | 54.5 | 34.0 | 37.0 | 104.9 |
| YOLOv8s | 57.0 | 52.0 | 57.0 | 37.1 | 11.1 | 28.7 |
| YOLOv9s | 51.2 | 57.6 | 56.0 | 36.5 | 7.2 | 26.9 |
| YOLOv11s | 57.6 | 51.7 | 57.6 | 37.4 | 9.4 | 21.5 |
| YOLOv12s | 55.7 | 53.6 | 56.3 | 36.4 | 9.1 | 19.5 |
| Rtdetr-r18 | 52.1 | 45.6 | 42.6 | 26.3 | 20.0 | 57.4 |
| Ours | 60.1 | 54.2 | 59.2 | 38.4 | 11.1 | 29.1 |

| Model | P (%) | R (%) | mAP50 (%) | mAP50:95 (%) | Param (M) | GFLOPs (G) |
|---|---|---|---|---|---|---|
| PestLite [21] | 57.2 | 56.4 | 57.1 | - | 6.34 | 16.3 |
| Yolo-Pest [22] | - | - | 57.1 | - | 5.8 | - |
| C3M-YOLO [23] | 57.4 | 57.5 | 57.2 | 34.9 | 7.1 | 16.1 |
| CFR [24] | - | - | 57.3 | - | 41.4 | 261.2 |
| CAFPN [40] | - | - | 49.7 | 29.8 | 32.19 | 211.37 |
| DCF-YOLOv8 [41] | 53 | 60.4 | 60.8 | 39.4 | 25.8 | - |
| CLT-YOLOX [42] | - | - | 57.7 | - | 10.5 | 35.4 |
| Ours | 60.1 | 54.2 | 59.2 | 38.4 | 11.1 | 29.1 |

| Model | P (%) | R (%) | mAP50 (%) | F1 (%) |
|---|---|---|---|---|
| YOLOv8s | 70.8 | 60.6 | 65.2 | 65.3 |
| IP-YOLOv8 | 68.4 | 63.6 | 65.9 | 65.9 |
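The F1 column is consistent with the standard definition of F1 as the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below recomputes it from the P and R values in the Pest24 table; `f1_score` is a hypothetical helper name, and the inputs are the table's percentages.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R) pairs in percent, copied from the Pest24 comparison table.
rows = {"YOLOv8s": (70.8, 60.6), "IP-YOLOv8": (68.4, 63.6)}

# Round to one decimal place to match the table's precision.
f1 = {name: round(f1_score(p, r), 1) for name, (p, r) in rows.items()}
```

Because the harmonic mean penalises imbalance, IP-YOLOv8 reaches the higher F1 despite its lower precision: its precision and recall are closer together.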

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, C.; Wang, Y.; Yun, L.; Wang, H.; Han, Y.; Chen, Z. IP-YOLOv8: A Multi-Scale Pest Detection Algorithm for Field-Scale Applications. Horticulturae 2025, 11, 1109. https://doi.org/10.3390/horticulturae11091109
