Abstract

Accurate walnut yield prediction is crucial for the development of the walnut industry. Traditional manual counting is constrained by labor and time costs, which leads to inaccurate estimates of walnut quantity. In this paper, we propose a walnut detection method based on unmanned aerial vehicle (UAV) remote sensing imagery to improve the accuracy of walnut yield prediction. Building on the YOLOv11 network, we propose several improvements that enhance multi-scale object detection while making the model structure more lightweight. Specifically, we reconstruct the feature fusion network with a hierarchical scale-based feature pyramid structure and apply lightweight improvements to the feature extraction component. These modifications yield RSWD-YOLO (RSWD stands for remote sensing walnut detection; YOLO, short for ‘You Only Look Once’, is the name of a family of object detection algorithms), a network designed specifically for walnut detection. Furthermore, to optimize detection performance under hardware resource constraints, we apply knowledge distillation to RSWD-YOLO, further improving its detection accuracy. We demonstrate the feasibility of the proposed method by deploying and testing the model on small edge devices. The detection algorithm achieves 86.1% mean Average Precision (mAP) on the walnut dataset while remaining operational on small edge devices. The experimental results show that the proposed UAV remote sensing-based walnut detection method has significant practical value and can provide useful insights for future research in related fields.

1. Introduction

Walnut yield prediction is a critical aspect of walnut cultivation and management, playing a key role in the overall production process [1]. Accurate yield estimation enables enterprises and governments to proactively develop production strategies and formulate relevant policies, while also facilitating market supply–demand assessments to mitigate market risks. In the absence of an effective yield prediction, reliance on post-harvest measurements can result in delays in production planning and policy-making, ultimately impeding the sustainable development of the walnut industry.

Currently, manual fruit counting remains the primary method for walnut yield prediction. In small-scale walnut orchards, farmers can obtain the number of walnuts on each tree through direct observation and counting. They then aggregate the counts from all trees in the orchard and predict the current year’s walnut yield based on historical data. Although this method produces relatively accurate data, it has several limitations. First, it requires substantial labor and time. Second, manual counting is subject to unavoidable errors arising from subjective judgment. Finally, it is poorly suited to large-scale walnut orchards. To predict walnut yields over extensive areas, manual counting must be combined with per-unit-area yield estimation: a sample plot is selected, the walnuts within it are counted manually, and the characteristics of the sample plot are used to extrapolate the walnut yield of the entire orchard. However, this approach can introduce significant errors, which may in turn affect subsequent harvesting plans.

With the rise of Industry 4.0, traditional agriculture has begun transitioning towards digital agriculture. In recent years, various aspects of the walnut industry—including harvesting, processing, and supply–demand matching—have gradually integrated with information and automation technologies. Several related studies have already emerged. Guo et al. [2] developed a deep learning-based algorithm to detect walnut maturity and predict the walnut oil content at different maturity stages. Ni et al. [3] proposed a machine vision-based device for walnut shell–kernel separation, providing new technical support for the automation transformation of the walnut processing industry. Li et al. [4] developed a sentiment analysis-based method for matching creative agri-product scheme demanders and suppliers. This approach improves walnut production efficiency, optimizes supply–demand relationship management, and promotes the modernization and sustainable development of the walnut industry. To facilitate digital agriculture advancement, numerous studies have investigated multi-rotor UAV remote sensing technology and demonstrated its feasibility in walnut orchards [5].

Current fruit detection tasks predominantly employ deep learning-based object detection algorithms, which can be divided into two-stage detection algorithms [6] and one-stage detection algorithms [7]. Two-stage detection algorithms, including R-CNN [8], Fast R-CNN [9], Faster R-CNN [10], and Mask R-CNN [11], suffer from high computational complexity, slow processing speed, and substantial computational resource requirements, making them unsuitable for edge devices. In contrast, one-stage detection algorithms are better suited to this research scenario because of their fast detection speed and low computational resource requirements. One-stage detection algorithms primarily include SSD [12], CenterNet [13], and the You Only Look Once (YOLO) series [14,15,16]. Among these, YOLO series algorithms have been widely applied owing to their high detection accuracy and excellent real-time performance, in fields including transportation [17], healthcare [18], agriculture [19], education [20], and industrial production [21].

YOLO series algorithms have also been applied to fruit detection in recent studies. Wu et al. [22] proposed MTS-YOLO, an improved YOLOv8-based model, to address inaccurate tomato maturity recognition and unclear picking-position localization. MTS-YOLO achieved a detection accuracy of 92.0% while reducing the model size, effectively lowering the computational cost and inference time and providing a lightweight, efficient solution for intelligent tomato harvesting. Qiu et al. [23] introduced GSE-YOLO, a lightweight improved model based on YOLOv8n, to tackle the low efficiency of dragon fruit maturity detection. By replacing the backbone network, incorporating an attention mechanism, and optimizing the loss function, the improved model achieved 85.2% detection accuracy in complex environments, significantly improving the accuracy of dragon fruit maturity identification. To address the low efficiency, high costs, and inconsistent quality of traditional oyster mushroom cultivation, Shi et al. [24] proposed OMC-YOLO, a lightweight model that optimizes the backbone network, neck network, and loss function of YOLOv8n. It achieved a detection accuracy of 94.95%, surpassing other lightweight object detection models, and provides technical support for the automated grading and detection of oyster mushrooms.

To overcome the limitations of manual fruit counting, this paper proposes a walnut detection method based on UAV remote sensing imagery, drawing on research in UAV remote sensing technology [25] and crop detection [26]. The method consists of two main steps: (1) acquiring comprehensive images of walnut trees through low-altitude UAV flights and (2) detecting walnuts in the acquired images in real time using a walnut detection model deployed on an edge device carried by the UAV. For this method, detection accuracy and lightweight deployment are equally important. Therefore, this study adopts YOLOv11 [27], the latest algorithm in the YOLO series, which balances detection accuracy and model size, as the baseline model. The research aims to maximize detection accuracy while ensuring successful deployment on edge devices, thereby achieving precise walnut detection. In summary, the proposed UAV remote sensing-based walnut detection method addresses the cost and subjectivity issues inherent in manual counting and provides sufficient, reliable data to support walnut yield prediction.

The main contributions of this study can be summarized as follows:

- We propose RSWD-YOLO, a UAV remote sensing-based model for detecting walnuts from aerial perspectives. Building upon YOLOv11s, the proposed method reconstructs the feature fusion component according to hierarchical scale principles to enhance multi-scale detection. The feature extraction component incorporates partial convolution and an Efficient Multi-Scale Attention module, making the model more lightweight without compromising detection accuracy and thereby ensuring practical deployability.

- Knowledge distillation is applied to RSWD-YOLO, improving the walnut detection accuracy without increasing the model complexity or computational costs. This enables the model to achieve high-accuracy walnut detection while remaining compatible with edge device deployment constraints.

- RSWD-YOLO is successfully deployed and tested on Raspberry Pi 5. The results demonstrate an average processing time of 492.28 ms per image, meeting practical deployment requirements and proving RSWD-YOLO’s capability for deployment on UAV-mounted edge devices.

2. Related Works

2.1. Crop Detection Based on CNN and UAV Remote Sensing Images

Convolutional Neural Networks (CNNs) are a class of deep learning models designed for processing grid-like data such as images. CNNs use convolutional layers to extract spatial features hierarchically, making them highly effective for tasks such as image classification, object detection, and segmentation. In recent years, numerous studies have explored CNN-based object detection algorithms for crop detection. Liu et al. [28] proposed YOLOv5s-BC, an enhanced YOLOv5-based model for apple detection, which improved mAP by 4.6% over the original model. Fu et al. [29] developed an improved YOLOv7-tiny model for detecting walnuts during their oil conversion period. Yang et al. [30] proposed GDAD-YOLOv5s for fruit-picking robots, achieving 95.2% detection accuracy on a self-built walnut dataset. Sun et al. [31] addressed the background similarity problem in green tomato detection by improving the Faster R-CNN network, which significantly increased average precision. Although current object detection algorithms perform relatively well in crop detection, challenges persist because of limited image acquisition angles and an insufficient field of view.

Integrating UAV remote sensing imagery with convolutional neural networks offers an effective solution to the field-of-view limitations in crop detection. Zhao et al. [32] proposed an automated wheat lodging detection method using high-resolution remote sensing imagery and developed specific models for different growth stages. Zhang et al. [33] introduced SSPD-YOLO, a transfer learning approach based on YOLOv8, which enables high-accuracy cotton monitoring and provides reliable support for yield estimation. Jia et al. [34] developed CA-YOLO, a UAV remote sensing-based method for precise corn tassel monitoring, which achieved 96% average detection accuracy. Li et al. [35] addressed occlusion challenges in orchard pear detection by proposing YOLOv5s-FP, a multi-scale collaborative perception network. This method leverages UAV-acquired imagery of occluded pears through aerial perspectives, achieving a mean average precision (mAP) of 96.12% on a custom-built dataset. Junos et al. [36] implemented the precise detection of loose oil palm fruits by integrating UAV-captured imagery with YOLO algorithms, thereby establishing visual information foundations for subsequent automated harvesting system research.

While the aforementioned studies effectively address crop detection and field-of-view limitations by combining object detection with UAV remote sensing, they generally demand substantial computational resources, which makes real-time onboard detection difficult. In contrast, our study requires real-time detection, which means limiting the model size and minimizing computational resource demands. This constraint typically comes at the cost of some model accuracy. Therefore, this research seeks methods that keep the model lightweight while improving detection accuracy as much as possible.

2.2. Applications of Knowledge Distillation in Object Detection Tasks

Deep learning demonstrates superior detection capabilities across various domains. However, the training and deployment of deep learning models typically require substantial computational resources, limiting their effectiveness in hardware-constrained environments. Knowledge distillation [37] has emerged as a solution to address poor detection performance under resource constraints and has quickly become a research focus. This method effectively improves the model detection accuracy without increasing the model size. Inspired by transfer learning concepts, knowledge distillation aims to transfer knowledge from a complex but high-performing large model (typically called the teacher model) to a simpler small model (typically called the student model). Through this process, the student model maintains its lightweight architecture while inheriting the superior detection capabilities of the teacher model, which enables better deployment on edge devices.

In object detection tasks, numerous studies have employed knowledge distillation to overcome resource constraints. Liu et al. [38] proposed a lightweight method for detecting the wine mash surface temperature, transferring knowledge from a YOLOv5s teacher model to a YOLOv5n student model. This approach improved the model’s mAP by 0.6% while achieving lightweight optimization, providing a theoretical foundation for practical deployment on edge devices. Zhu et al. [39] introduced Csb-yolo for classroom student behavior detection, achieving 71.1% mAP through post-pruning distillation, enabling deployment on low-performance devices. Liu et al. [40] developed APHS-YOLO for Stropharia rugoso-annulata sorting, using YOLOv8s to distill YOLOv8n, achieving a 0.9% average precision improvement in the lightweight model, providing a reference for the development of automated sorting equipment.

The aforementioned studies show that knowledge distillation can restore or even enhance detection accuracy while keeping the model lightweight. However, most of these studies remain at the theoretical research stage, and their practical deployment has not been tested. In contrast, this study aims to demonstrate the practical value of the proposed method, so the model must also be deployed and tested on edge devices.

2.3. Deep Learning Model Deployment

Among the many object detection studies, most remain theoretical, and only a few address deployment, which is essential for demonstrating practical applicability. Panigrahy et al. [41] proposed a single-stage object detector for the continuous monitoring and inspection of high-voltage insulators to prevent failures and emergencies, and later deployed it on a Raspberry Pi 4 to validate the feasibility of the system. Lawal et al. [42] introduced three lightweight fruit detection algorithms based on YOLOv5; their YOLO-Litest model achieved 4.69 FPS on a Raspberry Pi 4B, which is sufficient for real-time fruit detection tasks. Feng et al. [43] developed an improved YOLOv8s model for the automated detection of Bemisia tabaci to reduce associated losses and deployed it on a Raspberry Pi to demonstrate its deployability.

Practical deployment studies of object detection models have also emerged in the crop detection domain. Wang et al. [44] developed an enhanced YOLOv5 model to detect endogenous foreign matter in walnuts and subsequently deployed and tested it on a Raspberry Pi. Their results confirmed that the model met real-time detection requirements, providing a reference for subsequent development in related industries. Song et al. [45] deployed their YOLO-Rice model on a Raspberry Pi 5, achieving 2.233 FPS and demonstrating that rice panicles per unit area can be detected with low-cost devices.

Among these deployment-focused studies, we observe that Raspberry Pi series development boards, being low-cost and high-performance single-board computers, are particularly suitable for deploying and testing lightweight models. Consequently, many researchers validate the practical deployment capabilities of their models on Raspberry Pi. Therefore, to complete our model deployment work, we selected Raspberry Pi 5 as the practical deployment platform to evaluate the feasibility of our proposed method.

3. Materials and Methods

3.1. Dataset

3.1.1. Study Area

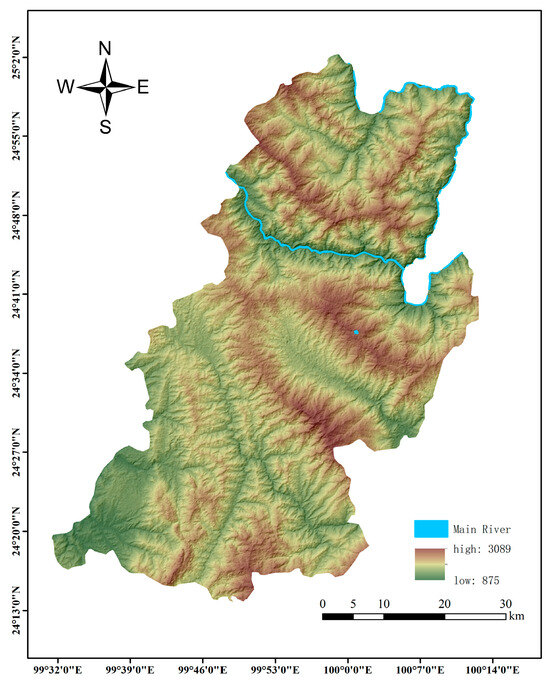

This study was conducted in Fengqing County, Lincang City, Yunnan Province, China (geographic coordinates: 24°13′ N–25°03′ N, 99°31′ E–100°13′ E; as shown in Figure 1), situated in the southwestern Yunnan–Kweichow Plateau along the middle reaches of the Lancang River. The terrain exhibits a gradual north-to-south topographic gradient, featuring undulating mountain ranges and interconnected valleys, with a mean elevation of 1569 m. Characterized by a low-latitude plateau, mid-subtropical monsoon climate, the study area maintains mild temperatures and distinct wet–dry seasonality, providing optimal ecological conditions for walnut cultivation. As a principal walnut production base in Yunnan Province, the region supports intensive walnut cultivation practices.

Figure 1.

Study area of this research.

3.1.2. Image Acquisition

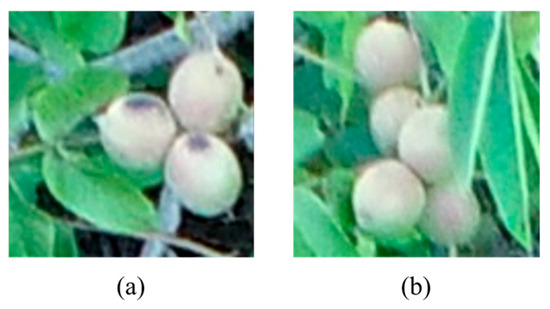

Aerial images were acquired in September 2022 using a DJI Phantom 4 Pro UAV equipped with an FC6310R photogrammetric camera; their technical specifications are listed in Table 1 and Table 2. The UAV was flown in manual mode to acquire standard walnut images and was kept relatively close to the walnut tree canopy to capture clear, detailed images. A total of 180 aerial walnut images of 5472 × 3648 pixels were collected and stored in JPG format. The captured walnuts appear in both unobstructed and obstructed states, as illustrated in Figure 2.

Table 1.

DJI Phantom 4 PRO.

Table 2.

FC6310R photogrammetry camera.

Figure 2.

States of walnut obstruction. (a) Unobstructed. (b) Obstructed.

3.1.3. Dataset Creation

To build the dataset, the 180 aerial images were cropped into 640 × 640 pixel tiles. After blurred tiles and tiles without walnut targets were removed, 2347 valid walnut images were retained.
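The cropping step can be sketched as follows. This is a minimal illustration, assuming non-overlapping 640 × 640 tiles with partial tiles at the right and bottom borders discarded; the paper does not state how borders or overlaps were handled, and the helper name `tile_origins` is hypothetical.

```python
def tile_origins(width, height, tile=640):
    """Return the top-left (x, y) corners of non-overlapping tile x tile crops.

    Assumption: partial tiles that would extend past the right or bottom edge
    are discarded rather than padded or shifted inward.
    """
    xs = range(0, width - tile + 1, tile)
    ys = range(0, height - tile + 1, tile)
    return [(x, y) for y in ys for x in xs]


origins = tile_origins(5472, 3648)
print(len(origins))        # tiles per aerial image
print(len(origins) * 180)  # candidate tiles before blurred/empty tiles are removed
```

Under these assumptions each 5472 × 3648 image yields 8 × 5 = 40 tiles, or 7200 candidate tiles for 180 images, before filtering. Each origin could then be cropped with Pillow's `Image.crop((x, y, x + 640, y + 640))`.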

Because walnut fruits on trees are obstructed to varying degrees, we defined two annotation categories, “obstructed” and “unobstructed”, to improve detection accuracy for occluded targets. This labeling strategy enables the detection model to learn discriminative features under different occlusion conditions, including partial contours, walnut texture patterns, and characteristics of potential occluders, thereby improving fruit detection precision. Following this labeling scheme, all walnut images were annotated using LabelImg v1.8.6 [46], and the labels were stored in TXT format. The annotated dataset of 2347 images was then divided into training and validation sets at an 8:2 ratio for subsequent model training. This dataset may also serve as a useful resource for future research on agricultural fruit-harvesting robots.
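The labeling and splitting steps can be sketched as below. This is a minimal sketch, assuming LabelImg's YOLO TXT format (one `class x_center y_center width height` line per box, with coordinates normalized to [0, 1]) and a hypothetical class mapping of 0 = unobstructed, 1 = obstructed; the helper names and the fixed random seed are illustrative only.

```python
import random


def to_yolo_line(cls_id, x_min, y_min, x_max, y_max, img_w=640, img_h=640):
    """Convert a pixel-space box to one YOLO TXT label line.

    Format: '<class> <x_center> <y_center> <width> <height>', all normalized.
    Hypothetical class ids: 0 = unobstructed, 1 = obstructed.
    """
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{cls_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def split_dataset(names, train_ratio=0.8, seed=0):
    """Shuffle image names reproducibly and split them into train/val lists."""
    names = list(names)
    random.Random(seed).shuffle(names)
    n_train = int(len(names) * train_ratio)  # truncation is an assumption
    return names[:n_train], names[n_train:]


print(to_yolo_line(0, 160, 160, 320, 320))
train, val = split_dataset(f"walnut_{i:04d}.jpg" for i in range(2347))
print(len(train), len(val))
```

With truncation, 2347 images split into 1877 training and 470 validation images; the exact counts depend on the rounding rule, which the paper does not state.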

3.2. YOLOv11

As the latest iteration in the YOLO series, YOLOv11 demonstrates superior detection accuracy and faster inference speed compared to its predecessors. The architecture comprises four main components: input layer, backbone network, neck network, and detection head. Images enter the network through the input layer in a base format of 640 × 640 pixels. The backbone network extracts target feature information from input images, while the neck network integrates these features thoroughly using the FPN [47]-PAN [48] method. The detection head then completes the detection task. YOLOv11 offers five model variants: YOLOv11n, YOLOv11s, YOLOv11m, YOLOv11l, and YOLOv11x, with incrementally increasing parameter counts and model sizes. Taking into account both detection accuracy and real-time performance requirements, this paper selects YOLOv11s with its relatively low parameter count and small model size as the baseline model. Based on the YOLOv11s model, we perform model optimization and improvement to build a lightweight detection model specifically designed for walnut target detection tasks.

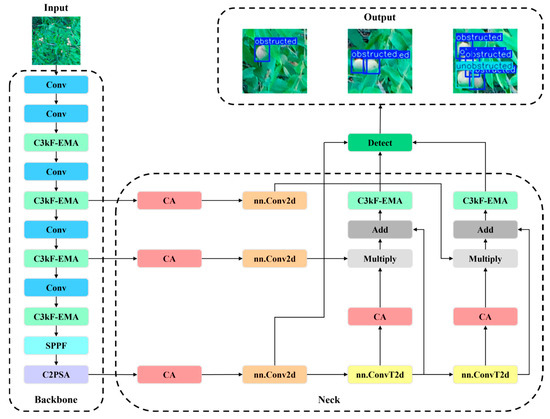

3.3. RSWD-YOLO

In walnut yield prediction, the accuracy of yield prediction, the precision of walnut count, and the accuracy of walnut object detection are positively correlated. The higher the detection accuracy of the model for walnuts, the more precise the walnut count will be, leading to more accurate yield predictions in subsequent studies. To achieve higher detection accuracy while enabling efficient deployment on drone edge devices, this study proposes the RSWD-YOLO object detection model specifically designed for walnut detection. Figure 3 illustrates the overall architecture of RSWD-YOLO.

Figure 3.

Overall architecture of RSWD-YOLO.

The RSWD-YOLO model introduces three critical enhancements to the original YOLOv11 architecture. First, we enhanced the feature fusion network to improve multi-scale target detection capabilities, addressing the varied sizes of walnuts in remote sensing images. Second, to improve the model’s compatibility with hardware deployment, we refined the feature extraction component by reducing redundant computations, making the model more lightweight. Third, we integrated a cross-spatial learning attention module to mitigate the accuracy loss caused by model lightweight optimization. Like YOLOv11, RSWD-YOLO retains four primary components: the input layer, backbone network, neck network, and detection head.

3.3.1. Feature Fusion Enhancement

In UAV-based walnut detection, walnuts of the same physical size appear at varying scales in images due to differences in their distance from the UAV. This scale variation presents a significant challenge for object detection models, making it difficult to maintain high detection accuracy across multiple scales. To address this issue, YOLOv11 incorporates an FPN-PAN structure in its neck network, which facilitates multi-scale feature fusion. However, disparities in resolution among input feature maps often hinder its ability to effectively integrate multi-scale features, limiting the overall detection performance.

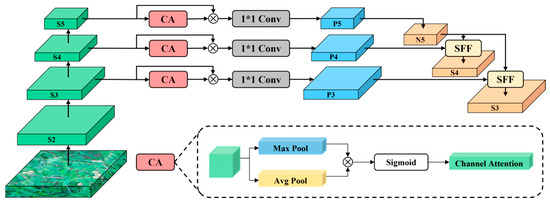

To address this multi-scale detection challenge, we replaced YOLOv11’s original FPN-PAN structure with a Hierarchical Scale-based Feature Pyramid Network (HSFPN) [49], as shown in Figure 4. HSFPN comprises two main components: a feature selection module and a feature fusion module. The feature selection module utilizes Channel Attention (CA) to weigh and adjust features at different scales, ensuring that key features are preserved during the fusion process. Additionally, a dimension-matching mechanism is employed to enable the unified fusion of multi-scale features, preventing issues such as scale mismatches. The feature fusion module adopts a Selective Feature Fusion (SFF) strategy, where high-level features filter critical information from low-level features and guide their adjustments. This allows low-level features to retain rich, detailed information while enhancing their focus on key regions. Compared to the traditional FPN-PAN structure, HSFPN leverages high-level features to guide the selection of low-level features. This interactive feature selection approach not only generates feature maps with richer semantic information, but also optimizes the feature fusion pathway, reducing computational redundancy and making the model more lightweight.

Figure 4.

Structure of HSFPN. In the structure, “1 × 1 Conv” denotes a 1 × 1 convolutional layer.
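The core idea of Selective Feature Fusion, high-level features deciding which low-level channels to emphasize, can be illustrated with a toy example on nested lists shaped [C][H][W]. This is a simplified sketch, not the HSFPN implementation: it assumes channel attention computed as sigmoid(global average pool + global max pool), assumes the high-level map has already been upsampled and dimension-matched to the low-level map, and omits all convolutions. The function names are hypothetical.

```python
import math


def channel_weights(feat):
    """Per-channel weights: sigmoid(global average pool + global max pool)."""
    weights = []
    for ch in feat:  # feat: list of C channels, each an H x W list of lists
        values = [v for row in ch for v in row]
        avg = sum(values) / len(values)
        weights.append(1.0 / (1.0 + math.exp(-(avg + max(values)))))
    return weights


def selective_fuse(high, low):
    """Toy SFF: weights from the high-level map re-weight the low-level map
    channel by channel, and the result is added to the high-level map.
    Assumes both maps already share the same C, H, and W."""
    w = channel_weights(high)
    fused = []
    for c, (h_ch, l_ch) in enumerate(zip(high, low)):
        fused.append([[hv + w[c] * lv for hv, lv in zip(h_row, l_row)]
                      for h_row, l_row in zip(h_ch, l_ch)])
    return fused


high = [[[0.0, 0.0], [0.0, 0.0]]]  # one channel, 2 x 2
low = [[[2.0, 4.0], [6.0, 8.0]]]
print(selective_fuse(high, low))
```

Because the weights are derived from the high-level map, channels that carry strong high-level responses pass more of their low-level detail into the fused output.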

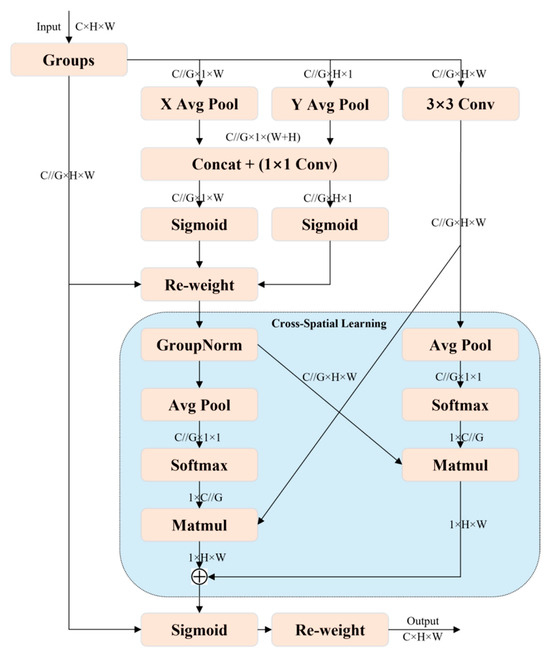

3.3.2. Efficient Multi-Scale Attention

To further enhance multi-scale target detection accuracy, we introduced an efficient multi-scale attention module with cross-spatial learning (EMA) [50], which strengthens the model’s feature extraction capability. EMA combines multi-scale attention mechanisms with efficient feature fusion strategies to achieve multi-scale feature extraction, preserving channel information while reducing computational costs. The structure of EMA is illustrated in Figure 5.

Figure 5.

Structure of EMA.

EMA extracts attention weights for grouped feature maps through three parallel branches: two 1 × 1 branches and one 3 × 3 branch. To capture dependencies across all channels, EMA models cross-channel interactions along the channel dimension. Specifically, the two 1 × 1 branches encode channel information along the two spatial directions, allowing the channel descriptors to capture global positional information. Meanwhile, the 3 × 3 branch applies a 3 × 3 convolution to extract multi-scale features. In each branch, EMA encodes global information and recalibrates channel weights, and it captures pixel-level relationships through cross-dimensional interactions, thereby strengthening the model’s feature extraction capability.
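The directional encoding used by the two 1 × 1 branches can be illustrated on a single H × W channel. This toy sketch assumes 1D global average pooling along each spatial axis followed by sigmoid gating; it omits the channel grouping, the shared 1 × 1 convolution, group normalization, and the cross-spatial interaction with the 3 × 3 branch, so it is not a full EMA module. The function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def directional_pool(ch):
    """1D global average pooling of one H x W channel along both spatial axes."""
    h, w = len(ch), len(ch[0])
    along_w = [sum(row) / w for row in ch]                              # one value per row
    along_h = [sum(ch[i][j] for i in range(h)) / h for j in range(w)]  # one value per column
    return along_w, along_h


def recalibrate(ch):
    """Scale each pixel by sigmoid gates built from its row and column averages,
    so the reweighting carries positional information in both directions."""
    rows, cols = directional_pool(ch)
    return [[ch[i][j] * sigmoid(rows[i]) * sigmoid(cols[j])
             for j in range(len(ch[0]))] for i in range(len(ch))]


print(directional_pool([[1.0, 3.0], [5.0, 7.0]]))
print(recalibrate([[1.0, 3.0], [5.0, 7.0]]))
```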

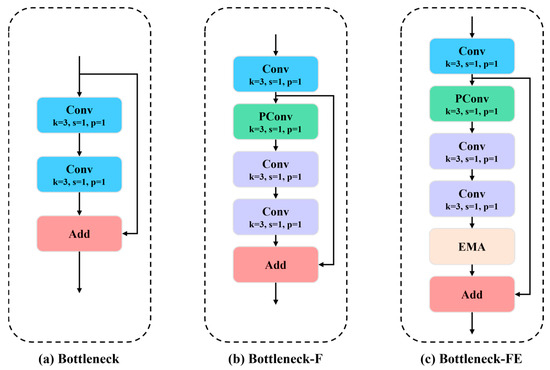

3.3.3. Feature Extraction Enhancement

The C3k2 module is an improved version of YOLOv8’s C2f module that introduces a Boolean parameter, C3k. When C3k is set to False, the C3k2 module functions as a standard C2f module; when it is set to True, the Bottleneck structure in the C2f module is replaced with a C3k structure, enhancing feature extraction. Although the C3k2 module extracts richer gradient flow information, it introduces some computational redundancy, which can reduce efficiency.

Since this study aims to deploy the model on edge devices, further lightweight optimization is necessary while minimizing the loss of detection accuracy. Therefore, inspired by the partial convolution (PConv) concept proposed in FasterNet [51], we reconstructed the Bottleneck structure within the C3k2 module and named it Bottleneck-F. Specifically, PConv applies a conventional convolution to only 1/4 of the input channels for feature extraction, while the remaining 3/4 bypass the convolution. Skipping the convolution on most channels removes redundant computation, and concatenating the convolved and untouched channels preserves the original channel information. This approach substantially reduces the number of parameters and the model size without a substantial loss of accuracy, making the model more lightweight.
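The saving from convolving only a quarter of the channels can be checked with simple arithmetic. Following the FasterNet analysis, a k × k convolution that maps c_p channels to c_p channels on an h × w map costs h·w·k²·c_p² multiply-accumulates, so with c_p = c/4 it costs (1/4)² = 1/16 of a full c → c convolution. The sketch below counts only the partial convolution itself (stride 1, same padding); any pointwise layers around it in Bottleneck-F are not included, and the 80 × 80 × 128 feature-map size is an illustrative assumption.

```python
def conv_flops(h, w, k, c_in, c_out):
    """Multiply-accumulates of a standard k x k convolution (stride 1, same padding)."""
    return h * w * k * k * c_in * c_out


def pconv_flops(h, w, k, c, ratio=0.25):
    """PConv convolves only c_p = ratio * c channels (c_p -> c_p);
    the remaining channels are passed through unchanged at no cost."""
    c_p = int(c * ratio)
    return conv_flops(h, w, k, c_p, c_p)


full = conv_flops(80, 80, 3, 128, 128)
partial = pconv_flops(80, 80, 3, 128)
print(full, partial, partial / full)
```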

To compensate for potential accuracy degradation during the lightweight optimization process and further enhance the model’s multi-scale object detection capabilities, we integrated the EMA attention mechanism into the Bottleneck-F structure. The EMA attention mechanism allows the model to focus more on the central regions of feature maps, while leveraging multi-scale feature extraction and spatial position information to enhance feature representation. As a result, the final feature maps are enriched with multi-scale contextual information. A comparison of the Bottleneck structure before and after the improvements is shown in Figure 6.

Figure 6.

Structure of bottleneck before and after the improvements.

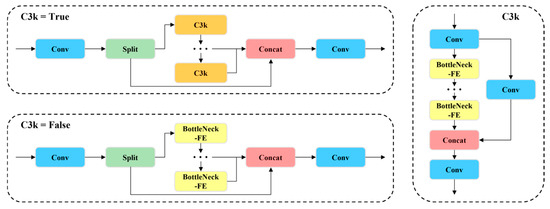

We replaced the Bottleneck structure in the C3k2 module with the improved Bottleneck-FE structure (Bottleneck-F with the integrated EMA attention mechanism) and named the resulting module C3kF-EMA, as illustrated in Figure 7. Compared with the original C3k2 module, C3kF-EMA reduces computational redundancy, making the model more lightweight, and enhances feature extraction through the integrated EMA attention mechanism. This design better balances model lightweighting and feature richness.

Figure 7.

Structure of C3kF-EMA module.

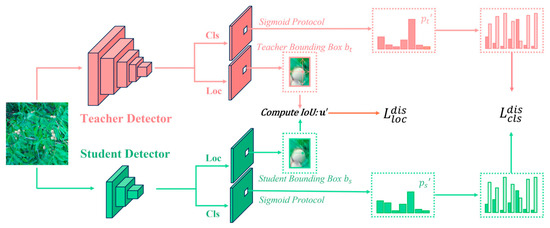

3.4. Knowledge Distillation

Current mainstream knowledge distillation methods can be categorized into Logit-Based Knowledge Distillation and Feature-Based Knowledge Distillation. Logit-Based Knowledge Distillation guides student models to learn the final predictions of teacher models so as to achieve comparable predictive performance, whereas Feature-Based Knowledge Distillation aligns the intermediate layer outputs of student and teacher models. In this study, we adopted BCKD [52], a Logit-Based Knowledge Distillation method, using a larger version of RSWD-YOLO as the teacher model to distill knowledge into a smaller version (the student model).

BCKD is a knowledge distillation method designed for dense object detection; its architecture is illustrated in Figure 8. It addresses the cross-task protocol inconsistency between dense object detection and classification distillation through a specially designed classification distillation loss and localization distillation loss. The classification distillation loss treats the classification logits as multiple binary maps and transfers classification knowledge using binary cross-entropy. The localization distillation loss, based on IoU [53], transfers knowledge from the teacher model to the student model by minimizing the IoU loss between their predicted bounding boxes. The total distillation loss is a weighted sum of the classification and localization distillation losses. This loss design mitigates foreground–background class imbalance and improves bounding box localization accuracy, thereby improving the training of the student model.

Figure 8.

Flow chart of BCKD.
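The two BCKD loss terms described above can be sketched in plain Python for a handful of predictions. This is a simplified illustration, not the published implementation: it assumes per-class sigmoid logits, uses the teacher's sigmoid probabilities as soft binary targets, uses (1 − IoU) as the localization loss, and omits details of the original method such as which locations are distilled and how samples are weighted. The weights `w_cls` and `w_loc` are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def binary_cls_distill(student_logits, teacher_logits, eps=1e-7):
    """Mean binary cross-entropy with teacher probabilities as soft targets;
    each class logit is treated as its own binary prediction."""
    total = 0.0
    for s, t in zip(student_logits, teacher_logits):
        p_s = min(max(sigmoid(s), eps), 1 - eps)  # clamp for numerical safety
        p_t = sigmoid(t)
        total += -(p_t * math.log(p_s) + (1 - p_t) * math.log(1 - p_s))
    return total / len(student_logits)


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def loc_distill(student_boxes, teacher_boxes):
    """Mean (1 - IoU) between student boxes and teacher boxes used as targets."""
    pairs = list(zip(student_boxes, teacher_boxes))
    return sum(1 - iou(s, t) for s, t in pairs) / len(pairs)


def total_distill(s_logits, t_logits, s_boxes, t_boxes, w_cls=1.0, w_loc=1.0):
    """Weighted sum of the classification and localization distillation terms."""
    return (w_cls * binary_cls_distill(s_logits, t_logits)
            + w_loc * loc_distill(s_boxes, t_boxes))


print(total_distill([0.5, -1.0], [2.0, -3.0],
                    [(0, 0, 2, 2)], [(1, 1, 3, 3)]))
```

Note that binary cross-entropy against soft targets is minimized, but not zero, when the student's probabilities equal the teacher's; the gradient, not the absolute value, is what pulls the student toward the teacher.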

3.5. Model Deployment

The practical deployment platform of this paper is Raspberry Pi 5. It is centered on the Broadcom BCM2712 system-on-chip (SoC), which integrates a quad-core 64-bit ARM Cortex-A76 CPU operating at 2.4 GHz. The memory subsystem is equipped with 8 GB LPDDR4X-4267 SDRAM, achieving a peak bandwidth of 34.1 GB/s. Peripheral connectivity includes dual USB 3.0 (5 Gbps) ports, dual USB 2.0 (480 Mbps) ports, dual-band Wi-Fi 5 (802.11ac), Bluetooth 5.0, and a 40-pin GPIO header maintaining backward compatibility with legacy expansion modules.

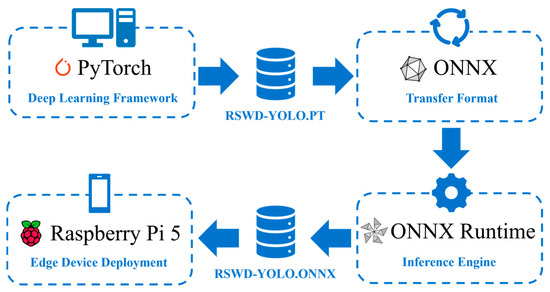

ONNX (Open Neural Network Exchange) is an open format for neural network models introduced by Microsoft and Facebook in 2017. By defining a standardized format that is independent of specific platforms and frameworks, ONNX allows deep learning models from different platforms and frameworks to be saved, exchanged, and used under a unified standard. ONNX Runtime is a high-performance inference engine for the cross-platform deployment and execution of ONNX-format models.

The deployment process begins by loading the RSWD-YOLO model, which was designed and trained in PyTorch v2.2.2, and restoring its trained weights from the PT file into the corresponding PyTorch neural network module. The model is then exported to ONNX format through PyTorch’s torch.onnx interface and optimized with ONNX Runtime tools to improve inference efficiency. Finally, the RSWD-YOLO model is deployed on the edge device (Raspberry Pi 5) and executed with the ONNX Runtime inference engine for on-device inference. Experimental validation shows that this workflow resolves framework compatibility issues and completes the transfer of RSWD-YOLO from the training machine to the edge platform. The complete deployment procedure is shown in Figure 9.

Figure 9.

Flow chart of deployment.

4. Experiments and Results

4.1. Training Environment

The model was trained on a Windows 11 operating system. The hardware comprised an NVIDIA GeForce RTX 4090 GPU with 24 GB of memory, an Intel(R) Core(TM) i7-13700K CPU at 3.40 GHz, and 32 GB of RAM. Model construction, training, and evaluation were carried out in Python v3.10 using the PyCharm integrated development environment. The main libraries were ultralytics, OpenCV, NumPy, and Pillow, with PyTorch 2.2.2 as the deep learning framework.

The training configuration was as follows: an input image resolution of 640 × 640, a batch size of 16, 300 training epochs, an initial learning rate of 0.001, and a weight decay coefficient of 0.0005. We used the SGD optimizer with a momentum of 0.937. For FPS testing, the batch size was set to 1. Mosaic data augmentation was applied during training, and validation followed the predefined validation protocol of YOLOv11. The parameter settings for data augmentation and model validation are listed in Table 3. These configurations and hyperparameters were kept identical across all experiments in this study.
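To make the roles of the learning rate, momentum, and weight decay concrete, the sketch below performs plain SGD updates on a scalar parameter using the values from this section (lr = 0.001, momentum = 0.937, weight decay = 0.0005). It follows the update rule documented for PyTorch's `torch.optim.SGD` with zero dampening and Nesterov momentum disabled. Ultralytics' trainer builds separate parameter groups (applying weight decay to only some of them) and may use Nesterov momentum, so this is an illustration rather than the exact optimizer used in training.

```python
def sgd_step(param, grad, velocity, lr=0.001, momentum=0.937, weight_decay=0.0005):
    """One SGD update with momentum and L2 weight decay (PyTorch-style, no Nesterov)."""
    d_p = grad + weight_decay * param      # weight decay is added to the gradient
    velocity = momentum * velocity + d_p   # momentum buffer accumulates past gradients
    param = param - lr * velocity          # step against the accumulated direction
    return param, velocity


# Two updates of a scalar parameter with a constant gradient of 0.5.
p, v = sgd_step(1.0, 0.5, 0.0)
p, v = sgd_step(p, 0.5, v)
print(p, v)
```

With a constant gradient g and ignoring weight decay, repeated calls drive the momentum buffer toward g / (1 − 0.937), roughly 15.9 g, which is why a high momentum pairs naturally with a small learning rate.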

Table 3.

The parameter settings for data augmentation and model validation.

4.2. Evaluation Metrics

To evaluate the performance of the proposed RSWD-YOLO model, we used four evaluation metrics: Precision (P), Recall (R), F1-Score, and mean Average Precision (mAP). True Positives (TP) are predicted boxes that correctly match a ground-truth walnut, i.e., whose IoU with an unmatched ground-truth box of the same class exceeds the chosen threshold. False Positives (FP) are predictions that do not correspond to any ground-truth object, while False Negatives (FN) are ground-truth objects that the model fails to detect.

Precision is the proportion of correct detections among all samples predicted as positive. The calculation formula is as follows:

$$P = \frac{TP}{TP + FP}$$

where TP and FP denote the numbers of true-positive and false-positive detections, respectively.

Recall is the proportion of actual positive samples that are correctly detected. The calculation formula is as follows:

$$R = \frac{TP}{TP + FN}$$

where FN (False Negatives) represents the count of positive samples incorrectly classified as negative.

The F1-Score, the harmonic mean of Precision and Recall, combines the two into a single measure of detection quality. It ranges from 0 to 1, with higher values indicating better performance. The calculation formula is as follows:

$$F1 = \frac{2 \times P \times R}{P + R}$$
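The precision, recall, and F1 definitions in this section translate directly into code. The helper names below are illustrative, and the detection counts are hypothetical. As a consistency check, plugging the baseline values reported in Section 4.3 (P = 78.8%, R = 79.5%) into the F1 formula gives 0.791, matching the F1-Score listed for YOLOv11s.

```python
def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(p: float, r: float) -> float:
    # Harmonic mean of precision and recall.
    return 2 * p * r / (p + r) if p + r else 0.0


# Hypothetical counts for illustration only.
tp, fp, fn = 80, 20, 10
p, r = precision(tp, fp), recall(tp, fn)
print(f"P={p:.3f}, R={r:.3f}, F1={f1_score(p, r):.3f}")

# Baseline YOLOv11s values from Table 4: P = 0.788, R = 0.795.
print(f"baseline F1 = {f1_score(0.788, 0.795):.3f}")
```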

AP (Average Precision) is the area under the Precision–Recall curve, and mAP is the mean of the AP values across all classes. mAP0.5 is the mAP at an IoU threshold of 0.5, and mAP0.5–0.95 is the mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05. The calculation formulas are defined as:

$$AP = \int_{0}^{1} P(R)\,dR$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where N is the number of classes (N = 2 in this study) and $AP_i$ denotes the AP value of the i-th class.
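The area under the Precision–Recall curve can be computed from detections sorted by descending confidence. The sketch below uses all-point interpolation: precision is first replaced by its monotone upper envelope, then the area is summed wherever recall increases. Implementations differ in this detail (Ultralytics, for instance, samples the envelope at 101 recall points), so values may differ slightly; the function names and the example inputs are our own.

```python
def pr_curve(is_tp, num_gt):
    """Cumulative recall/precision for detections sorted by descending confidence."""
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    return recalls, precisions


def average_precision(recalls, precisions):
    """All-point interpolated AP: area under the monotone precision envelope."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from right to left (the envelope).
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_ap(ap_values):
    """Mean over classes (for mAP) or over IoU thresholds (for mAP0.5-0.95)."""
    return sum(ap_values) / len(ap_values)


# IoU thresholds averaged for mAP0.5-0.95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [0.5 + 0.05 * k for k in range(10)]
```

For example, four detections marked TP, TP, FP, TP against four ground-truth walnuts give an AP of 0.6875; with the two classes used in this study, mAP is simply the mean of the two per-class AP values.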

In addition to these detection metrics, we also evaluate each model by FPS, GFLOPs, parameter count, and model size. FPS is the number of image frames processed per second; higher FPS indicates better real-time performance and greater suitability for real-time detection tasks. GFLOPs is the number of floating-point operations, in billions, required for one forward pass (a count, not a per-second rate); higher values reflect greater computational cost. The parameter count and model size reflect the model's complexity and storage requirements. Higher GFLOPs, parameter count, and model size all imply greater model complexity and higher hardware demands for deployment.
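The difference between parameters and GFLOPs can be made concrete for a single standard convolution layer: parameters depend only on the kernel and channel sizes, whereas operations also scale with the output resolution. The sketch below counts each multiply–accumulate as two floating-point operations. We believe this matches how Ultralytics reports GFLOPs, but other tools report multiply–accumulates directly, so GFLOPs figures are only comparable when computed the same way. The layer sizes in the example are hypothetical.

```python
def conv2d_cost(c_in, c_out, k, h_out, w_out, bias=False, groups=1):
    """Return (parameters, FLOPs) of a 2-D convolution layer.

    FLOPs count one multiply-accumulate as two operations.
    """
    weights = c_out * (c_in // groups) * k * k
    params = weights + (c_out if bias else 0)
    macs = weights * h_out * w_out  # every weight is applied at every output pixel
    return params, 2 * macs


# A 3x3 convolution from 64 to 128 channels on an 80x80 output map
# (the stride-8 feature map of a 640x640 input).
params, flops = conv2d_cost(64, 128, 3, 80, 80)
print(params, flops / 1e9)
```

This layer has 73,728 weights but needs about 0.94 GFLOPs, which illustrates why a model can shrink in size while its computational cost changes by a different proportion.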

Together, these criteria allow a comprehensive assessment of each model's performance and guide the selection of the detection model best suited to this study.

4.3. Ablation Experiments

We conducted ablation studies to evaluate the contribution of each improvement module in RSWD-YOLO; the results are presented in Table 4. YOLOv11s served as the baseline model, achieving a precision of 78.8%, recall of 79.5%, F1-Score of 0.791, and mAP0.5 of 84.4%, with 9.41 M parameters and a model size of 19.2 MB. The module experiments were conducted without pre-trained weights to isolate each module's contribution to performance.

Table 4.

Ablation experiment results.

In the ablation results, Experiment Group A replaced the feature fusion structure with the HSFPN structure, reducing the parameters and model size by 2.64 M and 5.3 MB, respectively, a notable lightweight improvement. The model's mAP0.5 increased by 0.4%, whereas precision, recall, and F1-Score decreased by 0.1%, 0.7%, and 0.004, respectively, which we attribute to the lightweight optimization. Replacing the original C3k2 structure with C3kF lowered mAP0.5 by 0.4% but further reduced the parameters and model size by 0.64 M and 1.3 MB, respectively, advancing the goal of a lightweight model. To compensate for the accuracy loss caused by lightweighting, we introduced the EMA attention mechanism into Bottleneck-F, refining it into the Bottleneck-FE structure. EMA strengthens multi-scale feature representation and thus improves detection accuracy for multi-scale targets; with it, mAP0.5 and recall increased by 0.7% and 2.6%, respectively. Finally, as shown in Experiment E, applying the BCKD knowledge distillation method improved mAP0.5, precision, and F1-Score by 1.0%, 4.6%, and 0.018, respectively, without increasing the model size. Overall, compared with the baseline YOLOv11s model, RSWD-YOLO improves mAP0.5 by 1.7%, precision by 0.5%, recall by 2.3%, and F1-Score by 0.014, while reducing the parameter count by 3.27 M and the model size by 6.6 MB. These results show that our improvements enhance both detection accuracy and model efficiency.

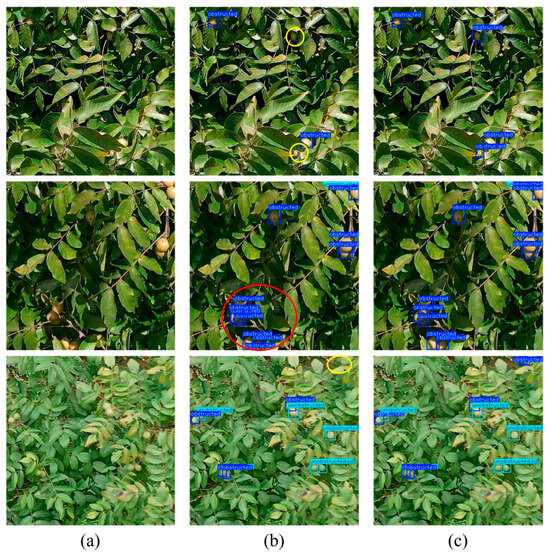

Figure 10 compares the detection results of RSWD-YOLO and YOLOv11s. Yellow circles indicate walnut targets detected by RSWD-YOLO but missed by YOLOv11s, while red circles highlight duplicate detections. These examples illustrate RSWD-YOLO's better detection performance relative to the baseline YOLOv11s model.

Figure 10.

Comparison of detection results between RSWD-YOLO and YOLOv11s. (a) Original image; (b) Detection results of YOLOv11s; (c) Detection results of RSWD-YOLO.

Figure 11 shows the confusion matrices of YOLOv11s and RSWD-YOLO obtained during training on the walnut dataset. The results highlight RSWD-YOLO’s improved detection accuracy over YOLOv11s.

Figure 11.

Confusion matrices of YOLOv11s and RSWD-YOLO.

In summary, the ablation studies confirm the effectiveness of the proposed enhancement strategies for RSWD-YOLO: they improve overall detection accuracy while reducing model complexity. RSWD-YOLO achieves an mAP0.5 of 86.1% with modest hardware requirements, making it suitable for deployment on drone-mounted edge devices for walnut detection.

4.4. Comparative Experiments

To evaluate the overall performance of RSWD-YOLO, we compared it with current mainstream YOLO models; detailed metrics are given in Table 5. RSWD-YOLO achieves the best recall, mAP0.5, mAP0.5–0.95, and F1-Score among the compared models.

Table 5.

Comparative experiments’ results of different models.

In terms of precision, RSWD-YOLO is 1.8% below the highest value, but its recall is at least 4.5% higher than that of YOLOv3, YOLOv3-spp, YOLOv5m, and YOLOv5x, indicating a lower probability of missed detections. Because it also leads all other mainstream YOLO models in mAP0.5, mAP0.5–0.95, and F1-Score, RSWD-YOLO delivers the best overall detection performance despite not having the highest precision.

In terms of parameters, GFLOPs, and model size, RSWD-YOLO is less lightweight than the YOLO-n series models but smaller than most of the other models, and the deployment experiments in Section 5.3 confirm that it runs on the edge device (Raspberry Pi 5). Moreover, its walnut detection accuracy (mAP0.5) is 2.3–10.6% higher than that of the n-series models. RSWD-YOLO therefore trades a small amount of compactness for substantially higher detection accuracy without compromising deployment feasibility, which makes it well suited to UAV-based walnut detection tasks.

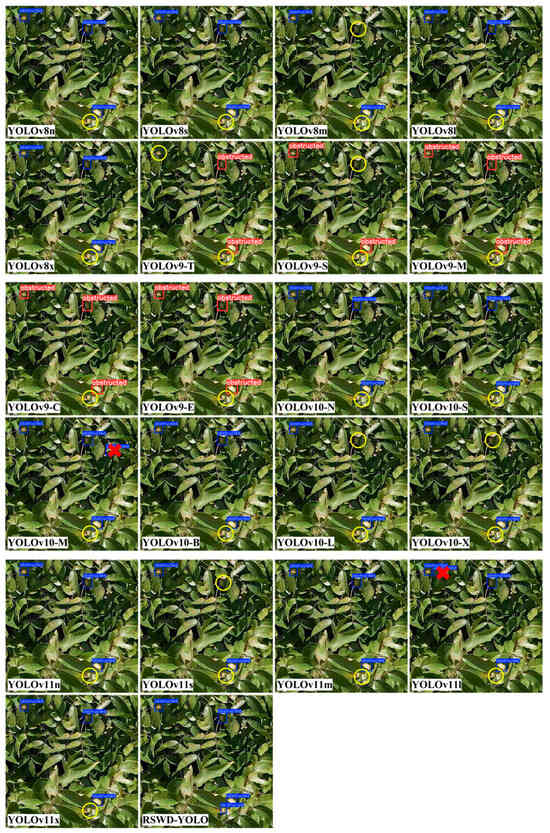

Figure 12 shows the detection results across various models. Green circles indicate walnut targets in the original image, yellow circles mark missed walnut targets, while red crosses indicate false detections. Notably, all models except RSWD-YOLO show varying levels of missed detections in identifying walnut targets. Additionally, YOLOv10-M and YOLOv11l misclassified non-walnut objects as walnuts. This visualization highlights RSWD-YOLO’s superior capability in walnut detection compared to the other models.

Figure 12.

Comparison of detection results across different models (model names are shown in the lower left corner of each image).

To further demonstrate the superiority of the RSWD-YOLO model, we conducted comparative experiments with the w-YOLO model, GDA-YOLOv5 model, GDAD-YOLOv5 model, and OW-YOLO model, all of which are also designed for walnut object detection. The experimental results are shown in Table 6.

Table 6.

Comparative experimental results with other walnut detection models.

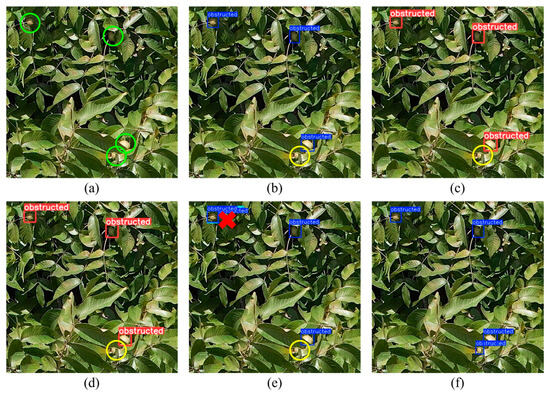

As shown in Table 6, RSWD-YOLO outperforms the other four walnut detection models not only over all classes combined but also in each category: occluded walnut targets (obstructed) and non-occluded walnut targets (unobstructed). Figure 13 presents the detection results of the five models. Green circles indicate walnut targets in the original image, yellow circles mark missed walnut targets, and red crosses indicate false detections. Except for RSWD-YOLO, all four models miss some walnuts, and OW-YOLO additionally misclassifies non-walnut objects as walnuts. These examples further illustrate RSWD-YOLO's advantage over existing walnut detection models.

Figure 13.

Comparison of detection results across five models. (a) Original image; (b) Detection results of w-YOLO; (c) Detection results of GDA-YOLOv5; (d) Detection results of GDAD-YOLOv5; (e) Detection results of OW-YOLO; (f) Detection results of RSWD-YOLO.

In conclusion, the comparative experiments with mainstream YOLO models and dedicated walnut detection models confirm the effectiveness of our targeted improvements to YOLOv11s. RSWD-YOLO combines a smaller model size than most compared models with the highest mAP among them, making it well suited to UAV-based walnut detection tasks. These findings can serve as a reference for future research in related fields.

5. Discussion

5.1. Discussion on Neck Network Performance

In RSWD-YOLO, the HSFPN structure addresses the multi-scale challenges of walnut detection. To verify that HSFPN is the most suitable neck structure, we compared it with mainstream neck structures, including GFPN [58], AFPN [59], BiFPN [60], goldyolo [61], slimneck [62], asf [63], and RCSOSA. The results are summarized in Table 7.

Table 7.

Comparative experiment results of different neck networks.

Table 7 compares the neck structures on five key metrics: mAP0.5, parameters, GFLOPs, model size, and FPS. Relative to the baseline model, HSFPN substantially improves mAP0.5, parameters, GFLOPs, and model size, while FPS does not improve. Overall, these results indicate that HSFPN is better suited to walnut detection than the alternative neck structures.

5.2. Discussion on Knowledge Distillation Performance

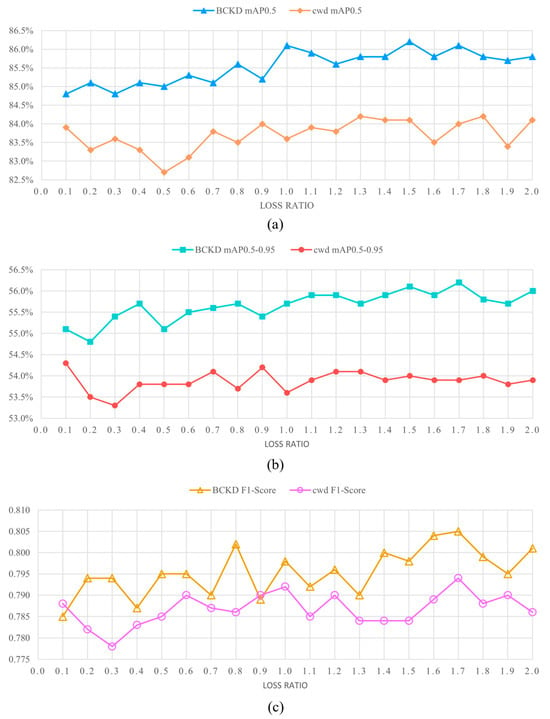

Knowledge distillation methods fall into two main categories: logit-based and feature-based. To determine the best distillation method and parameters for RSWD-YOLO, we compared BCKD (logit-based) with cwd [64] (feature-based) in extensive experiments, as shown in Figure 14. The horizontal axis represents the distillation loss rate, and the vertical axes show mAP0.5, mAP0.5–0.95, and F1-Score, respectively. BCKD outperforms cwd, so we adopted the BCKD method. Regarding parameter configuration, the best mAP0.5 and mAP0.5–0.95 are obtained at distillation loss rates of 1.5 and 1.7, respectively, and the difference between these two settings is only 0.1%. However, at a loss rate of 1.7 the model achieves a noticeably better F1-Score than at 1.5. We therefore set the distillation loss rate to 1.7.
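For contrast with BCKD, the feature-based alternative cwd (channel-wise distillation) can be sketched as follows. This follows our understanding of the published CWD formulation: each channel's activation map is normalized into a probability distribution over spatial locations with a temperature τ, and the student is trained to match the teacher through the KL divergence, scaled by τ². Plain Python lists stand in for feature tensors, τ is a free parameter chosen here for illustration, and how the distillation loss rate scales this term in the total loss is not shown.

```python
import math


def spatial_softmax(values, tau):
    """Softmax over the spatial positions of one channel, with temperature tau."""
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp((v - m) / tau) for v in values]
    s = sum(exps)
    return [e / s for e in exps]


def cwd_loss(student, teacher, tau=1.0):
    """Channel-wise distillation loss.

    `student` and `teacher` are lists of channels; each channel is a flat list
    of activations over its H*W spatial positions. KL(teacher || student) is
    computed per channel, averaged over channels, and scaled by tau**2.
    """
    total = 0.0
    for s_ch, t_ch in zip(student, teacher):
        p_s = spatial_softmax(s_ch, tau)
        p_t = spatial_softmax(t_ch, tau)
        total += sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s))
    return (tau ** 2) * total / len(student)
```

Because each channel is normalized independently, the loss ignores a constant offset in a channel's activations and focuses on where within the feature map each channel responds most strongly.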

Figure 14.

Comparison results of knowledge distillation experiments. (a) The mAP0.5 results of the model under different distillation loss rates; (b) The mAP0.5-0.95 results of the model under different distillation loss rates; (c) The F1-Score results of the model under different distillation loss rates.

5.3. Discussion on Model Deployment Performance

To assess the deployment feasibility of the proposed RSWD-YOLO model on drone-mounted edge devices, we deployed the model on a Raspberry Pi 5 and conducted inference tests. The trained PT weight files were converted to the ONNX format with an opset version of 13. We selected 100 representative walnut images for inference testing on the edge device, with results presented in Table 8. The baseline YOLOv11s model had an average inference time of 559.53 ms per image, and the RSWD-YOLO model achieved an average inference time of 492.28 ms per image.
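Average latency translates into throughput as FPS = 1000 / latency (ms), which puts the reported figures into perspective: about 1.79 FPS for YOLOv11s and 2.03 FPS for RSWD-YOLO on the Raspberry Pi 5, a latency reduction of roughly 12.0%. The sketch below shows one way to measure mean per-image latency and derive these quantities. `infer` is a hypothetical callable standing in for a single ONNX Runtime inference (for example, a wrapper around `InferenceSession.run`), and the warm-up count is our own choice, not a setting reported in this study.

```python
import statistics
import time


def mean_latency_ms(infer, images, warmup=3):
    """Average wall-clock latency (ms) of `infer` over `images`.

    A few warm-up calls run first so that one-time setup costs are excluded.
    """
    for img in images[:warmup]:
        infer(img)
    times = []
    for img in images:
        start = time.perf_counter()
        infer(img)
        times.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(times)


def fps_from_latency(ms):
    return 1000.0 / ms


def relative_reduction(baseline_ms, new_ms):
    return (baseline_ms - new_ms) / baseline_ms


# Reported averages over 100 images on the Raspberry Pi 5 (Table 8).
print(fps_from_latency(559.53), fps_from_latency(492.28))
print(relative_reduction(559.53, 492.28))
```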

Table 8.

Model deployment results of YOLOv11s and RSWD-YOLO models.

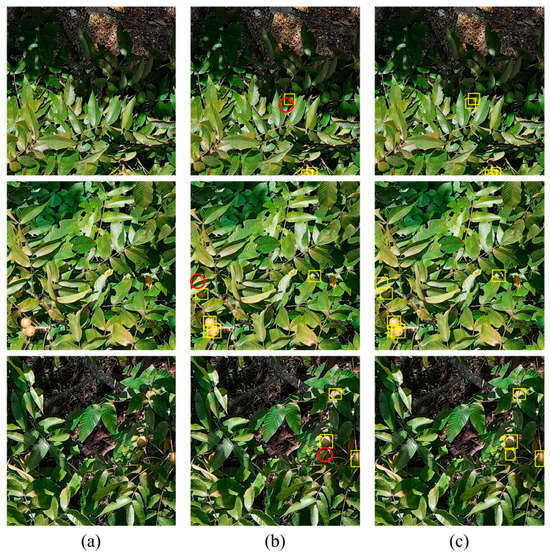

The detection results on the Raspberry Pi 5 are shown in Figure 15, where (a) is the original image, (b) shows the detections of YOLOv11s, and (c) shows the detections of RSWD-YOLO. Yellow squares indicate walnut targets detected by RSWD-YOLO, while red circles highlight walnut targets that were not detected. Together with the latency results in Table 8, these examples indicate that RSWD-YOLO both improves walnut detection accuracy and reduces inference time on edge devices, meeting the requirements for practical deployment.

Figure 15.

Detection results on the Raspberry Pi 5. (a) Original image; (b) Detection results of YOLOv11s; (c) Detection results of RSWD-YOLO.

6. Conclusions

High-precision walnut detection is essential for yield prediction, because accurate detection enables reliable counting of walnut fruits and, in turn, yield estimation. Manual counting is subjective and labor-intensive, which makes it impractical for large-scale walnut surveys. Building on recent advances in deep learning, this paper proposes a walnut detection method that combines UAV remote sensing imagery with object detection. The research faced three challenges. The first was to strengthen the model's ability to detect multi-scale targets while keeping its structure lightweight. The second was to minimize the accuracy loss caused by lightweighting, with the aim of restoring or even improving the detection accuracy of the lightweight model. The third was to deploy the model on edge devices in practice, providing technical support for subsequent studies.

To address these challenges, we first collected walnut images with cameras mounted on UAVs and built a walnut dataset for this research. Based on the characteristics of this dataset, we improved the feature extraction and feature fusion components of YOLOv11 and incorporated an efficient multi-scale attention module, resulting in the RSWD-YOLO detection model optimized for walnut detection. We then applied knowledge distillation to further improve detection accuracy and offset the accuracy loss due to lightweighting, reaching an mAP0.5 of 86.1% without increasing the model size and outperforming the mainstream YOLO models we compared. Finally, we deployed RSWD-YOLO on an edge device and validated it through inference testing, confirming that it can perform walnut detection on edge hardware and has practical deployment potential.

In summary, RSWD-YOLO achieves the highest detection accuracy among the mainstream YOLO models compared in this study, and inference tests on an edge device confirm that it meets the anticipated objectives: sufficiently high accuracy for walnut detection combined with practical deployability. This provides both data and technical support for subsequent research. The approach nevertheless has limitations. On the edge device, RSWD-YOLO has an average detection latency of 492.28 ms per image (about 2 FPS); this is workable for the inference tests reported here but still falls short of true real-time detection. Future work will therefore focus on accelerating inference on edge devices to enable real-time walnut detection from a UAV perspective. We also plan to design a counting system that tallies detected walnuts in real time, which would greatly increase the practical value of our approach for walnut yield prediction. Beyond yield prediction, we intend to develop an agricultural fruit-picking robot tailored to the characteristics of the proposed dataset, and to further improve the detection of occluded targets to support that robot and, ultimately, increase the efficiency of walnut harvesting.

Author Contributions

Conceptualization, Y.W. and L.Y.; methodology, Y.W.; software, Y.W.; validation, Y.W., H.W. (Haoyu Wang), and X.Y.; formal analysis, Y.W. and H.W. (Haoyu Wang); investigation, Y.W. and X.Y.; resources, Y.W. and L.Y.; data curation, Y.W. and H.W. (Haoyu Wang); writing—original draft preparation, Y.W.; writing—review and editing, Y.W. and H.W. (Huihua Wang); visualization, Y.W.; supervision, X.Y.; project administration, Z.C.; funding acquisition, L.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Project of Yunnan Province Basic Research Program (grant number 202401AS070034) and the Yunnan Provincial Forestry and Grass Science and Technology Innovation Joint Project (grant number 202404CB090002).

Data Availability Statement

Data are contained within the article. Additional data can be obtained by contacting the corresponding author of the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Prentović, M.; Radišić, P.; Smith, M.; Šikoparija, B. Predicting walnut (Juglans spp.) crop yield using meteorological and airborne pollen data. Ann. Appl. Biol. 2014, 165, 249–259. [Google Scholar] [CrossRef]

- Guo, P.; Chen, F.; Zhu, X.; Yu, Y.; Lin, J. Phenotypic-Based Maturity Detection and Oil Content Prediction in Xiangling Walnuts. Agriculture 2024, 14, 1422. [Google Scholar] [CrossRef]

- Ni, P.; Hu, S.; Zhang, Y.; Zhang, W.; Xu, X.; Liu, Y.; Ma, J.; Liu, Y.; Niu, H.; Lan, H. Design and Optimization of Key Parameters for a Machine Vision-Based Walnut Shell–Kernel Separation Device. Agriculture 2024, 14, 1632. [Google Scholar] [CrossRef]

- Li, Y.H.; Zheng, J.; Fan, Z.P.; Wang, L. Sentiment analysis-based method for matching creative agri-product scheme demanders and suppliers: A case study from China. Comput. Electron. Agric. 2021, 186, 106196. [Google Scholar] [CrossRef]

- Jafarbiglu, H.; Pourreza, A. A comprehensive review of remote sensing platforms, sensors, and applications in nut crops. Comput. Electron. Agric. 2022, 197, 106844. [Google Scholar] [CrossRef]

- Staff, A.C. The two-stage placental model of preeclampsia: An update. J. Reprod. Immunol. 2019, 134, 1–10. [Google Scholar] [CrossRef]

- Kecen, L.; Xiaoqiang, W.; Hao, L.; Leixiao, L.; Yanyan, Y.; Chuang, M.; Jing, G. Survey of one-stage small object detection methods in deep learning. J. Front. Comput. Sci. Technol. 2022, 16, 41. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Duan, K.; Bai, S.; Xie, L.; Huang, Q.; Tian, Q. Centernet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578. [Google Scholar]

- Redmon, J. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 2778–2788. [Google Scholar]

- Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A small-object-detection model based on improved YOLOv8 for UAV aerial photography scenarios. Sensors 2023, 23, 7190. [Google Scholar] [CrossRef] [PubMed]

- Liang, S.; Wu, H.; Zhen, L.; Hua, Q.; Garg, S.; Kaddoum, G.; Hassan, M.M.; Yu, K. Edge YOLO: Real-time intelligent object detection system based on edge-cloud cooperation in autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 25345–25360. [Google Scholar] [CrossRef]

- Kang, M.; Ting, C.M.; Ting, F.F.; Phan, R.C.W. RCS-YOLO: A fast and high-accuracy object detector for brain tumor detection. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Vancouver, BC, Canada, 8–12 October 2023; Springer Nature: Cham, Switzerland, 2023; pp. 600–610. [Google Scholar]

- Wu, M.; Yang, X.; Yun, L.; Yang, C.; Chen, Z.; Xia, Y. A General Image Super-Resolution Reconstruction Technique for Walnut Object Detection Model. Agriculture 2024, 14, 1279. [Google Scholar] [CrossRef]

- Liu, Q.; Jiang, R.; Xu, Q.; Wang, D.; Sang, Z.; Jiang, X.; Wu, L. YOLOv8n_BT: Research on Classroom Learning Behavior Recognition Algorithm Based on Improved YOLOv8n. IEEE Access 2024, 12, 36391–36403. [Google Scholar] [CrossRef]

- Guo, Z.; Wang, C.; Yang, G.; Huang, Z.; Li, G. Msft-yolo: Improved yolov5 based on transformer for detecting defects of steel surface. Sensors 2022, 22, 3467. [Google Scholar] [CrossRef]

- Wu, M.; Lin, H.; Shi, X.; Zhu, S.; Zheng, B. MTS-YOLO: A Multi-Task Lightweight and Efficient Model for Tomato Fruit Bunch Maturity and Stem Detection. Horticulturae 2024, 10, 1006. [Google Scholar] [CrossRef]

- Qiu, Z.; Huang, Z.; Mo, D.; Tian, X.; Tian, X. GSE-YOLO: A Lightweight and High-Precision Model for Identifying the Ripeness of Pitaya (Dragon Fruit) Based on the YOLOv8n Improvement. Horticulturae 2024, 10, 852. [Google Scholar] [CrossRef]

- Shi, L.; Wei, Z.; You, H.; Wang, J.; Bai, Z.; Yu, H.; Ji, R.; Bi, C. OMC-YOLO: A lightweight grading detection method for Oyster Mushrooms. Horticulturae 2024, 10, 742. [Google Scholar] [CrossRef]

- Hassler, S.C.; Baysal-Gurel, F. Unmanned aircraft system (UAS) technology and applications in agriculture. Agronomy 2019, 9, 618. [Google Scholar] [CrossRef]

- Gongal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Sensors and systems for fruit detection and localization: A review. Comput. Electron. Agric. 2015, 116, 8–19. [Google Scholar] [CrossRef]

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725. [Google Scholar]

- Liu, J.; Liu, Z. YOLOv5s-BC: An improved YOLOv5s-based method for real-time apple detection. J. Real-Time Image Process. 2024, 21, 1–16. [Google Scholar] [CrossRef]

- Fu, X.; Wang, J.; Zhang, F.; Pan, W.; Zhang, Y.; Zhao, F. Study on Target Detection Method of Walnuts during Oil Conversion Period. Horticulturae 2024, 10, 275. [Google Scholar] [CrossRef]

- Yang, C.; Cai, Z.; Wu, M.; Yun, L.; Chen, Z.; Xia, Y. Research on Detection Algorithm of Green Walnut in Complex Environment. Agriculture 2024, 14, 1441. [Google Scholar] [CrossRef]

- Sun, J.; He, X.; Ge, X.; Wu, X.; Shen, J.; Song, Y. Detection of key organs in tomato based on deep migration learning in a complex background. Agriculture 2018, 8, 196. [Google Scholar] [CrossRef]

- Zhao, B.; Li, J.; Baenziger, P.S.; Belamkar, V.; Ge, Y.F.; Zhang, J.; Shi, Y.Y. Automatic wheat lodging detection and mapping in aerial imagery to support high-throughput phenotyping and in-season crop management. Agronomy 2020, 10, 1762. [Google Scholar] [CrossRef]

- Zhang, M.; Chen, W.; Gao, P.; Li, Y.; Tan, F.; Zhang, Y.; Ruan, S.; Xing, P.; Guo, L. YOLO SSPD: A small target cotton boll detection model during the boll-spitting period based on space-to-depth convolution. Front. Plant Sci. 2024, 15, 1409194. [Google Scholar] [CrossRef]

- Jia, Y.; Fu, K.; Lan, H.; Wang, X.; Su, Z. Maize tassel detection with CA-YOLO for UAV images in complex field environments. Comput. Electron. Agric. 2024, 217, 108562. [Google Scholar] [CrossRef]

- Li, Y.; Rao, Y.; Jin, X.; Jiang, Z.; Wang, Y.; Wang, T.; Wang, F.; Luo, Q.; Liu, L. YOLOv5s-FP: A novel method for in-field pear detection using a transformer encoder and multi-scale collaboration perception. Sensors 2022, 23, 30. [Google Scholar] [CrossRef]

- Junos, M.H.; Mohd Khairuddin, A.S.; Thannirmalai, S.; Dahari, M. Automatic detection of oil palm fruits from UAV images using an improved YOLO model. Vis. Comput. 2022, 38, 2341–2355. [Google Scholar] [CrossRef]

- Gou, J.; Yu, B.; Maybank, S.J.; Tao, D. Knowledge distillation: A survey. Int. J. Comput. Vis. 2021, 129, 1789–1819. [Google Scholar] [CrossRef]

- Liu, X.; Gong, S.; Hua, X.; Chen, T.; Zhao, C. Research on temperature detection method of liquor distilling pot feeding operation based on a compressed algorithm. Sci. Rep. 2024, 14, 13292. [Google Scholar] [CrossRef] [PubMed]

- Zhu, W.; Yang, Z. Csb-yolo: A rapid and efficient real-time algorithm for classroom student behavior detection. J. Real-Time Image Process. 2024, 21, 140. [Google Scholar] [CrossRef]

- Liu, R.M.; Su, W.H. APHS-YOLO: A Lightweight Model for Real-Time Detection and Classification of Stropharia Rugoso-Annulata. Foods 2024, 13, 1710. [Google Scholar] [CrossRef] [PubMed]

- Panigrahy, S.; Karmakar, S. Real-time Condition Monitoring of Transmission Line Insulators Using the YOLO Object Detection Model with a UAV. IEEE Trans. Instrum. Meas. 2024, 73, 1–9. [Google Scholar] [CrossRef]

- Lawal, O.M.; Zhao, H.; Zhu, S.; Chuanli, L.; Cheng, K. Lightweight fruit detection algorithms for low-power computing devices. IET Image Process. 2024, 18, 2318–2328. [Google Scholar] [CrossRef]

- Feng, Z.; Wang, N.; Jin, Y.; Cao, H.; Huang, X.; Wen, S.; Ding, M. Enhancing cotton whitefly (Bemisia tabaci) detection and counting with a cost-effective deep learning approach on the Raspberry Pi. Plant Methods 2024, 20, 161. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, C.; Wang, Z.; Liu, M.; Zhou, D.; Li, J. Application of lightweight YOLOv5 for walnut kernel grade classification and endogenous foreign body detection. J. Food Compos. Anal. 2024, 127, 105964. [Google Scholar] [CrossRef]

- Song, Z.; Ban, S.; Hu, D.; Xu, M.; Yuan, T.; Zheng, X.; Sun, H.; Zhou, S.; Tian, M.; Li, L. A Lightweight YOLO Model for Rice Panicle Detection in Fields Based on UAV Aerial Images. Drones 2024, 9, 1. [Google Scholar] [CrossRef]

- Yakovlev, A.; Lisovychenko, O. An approach for image annotation automatization for artificial intelligence models learning. Адаптивні системи автoматичнoгo управління 2020, 1, 32. [Google Scholar] [CrossRef]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- Chen, Y.; Zhang, C.; Chen, B.; Huang, Y.; Sun, Y.; Wang, C.; Fu, X.; Dai, Y.; Qin, F.; Peng, Y.; et al. Accurate leukocyte detection based on deformable-DETR and multi-level feature fusion for aiding diagnosis of blood diseases. Comput. Biol. Med. 2024, 170, 107917. [Google Scholar] [CrossRef] [PubMed]

- Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient multi-scale attention module with cross-spatial learning. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5. [Google Scholar]

- Chen, J.; Kao, S.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031. [Google Scholar]

- Yang, L.; Zhou, X.; Li, X.; Qiao, L.; Li, Z.; Yang, Z.; Wang, G.; Li, X. Bridging cross-task protocol inconsistency for distillation in dense object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 17175–17184. [Google Scholar]

- Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. Unitbox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520. [Google Scholar]

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2025; pp. 1–21.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Xu, X.; Jiang, Y.; Chen, W.; Huang, Y.; Zhang, Y.; Sun, X. DAMO-YOLO: A report on real-time object detection design. arXiv 2022, arXiv:2211.15444.

- Yang, G.; Lei, J.; Zhu, Z.; Cheng, S.; Feng, Z.; Liang, R. AFPN: Asymptotic feature pyramid network for object detection. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Oahu, HI, USA, 1–4 October 2023; pp. 2184–2189.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Wang, Y.; Han, K. Gold-YOLO: Efficient object detector via gather-and-distribute mechanism. Adv. Neural Inf. Process. Syst. 2023, 36, 51094–51112.

- Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles. arXiv 2022, arXiv:2206.02424.

- Kang, M.; Ting, C.M.; Ting, F.F.; Phan, R.C.W. ASF-YOLO: A novel YOLO model with attentional scale sequence fusion for cell instance segmentation. Image Vis. Comput. 2024, 147, 105057.

- Shu, C.; Liu, Y.; Gao, J.; Yan, Z.; Shen, C. Channel-wise knowledge distillation for dense prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 5311–5320.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).