Universal Image Segmentation with Arbitrary Granularity for Efficient Pest Monitoring

Abstract

1. Introduction

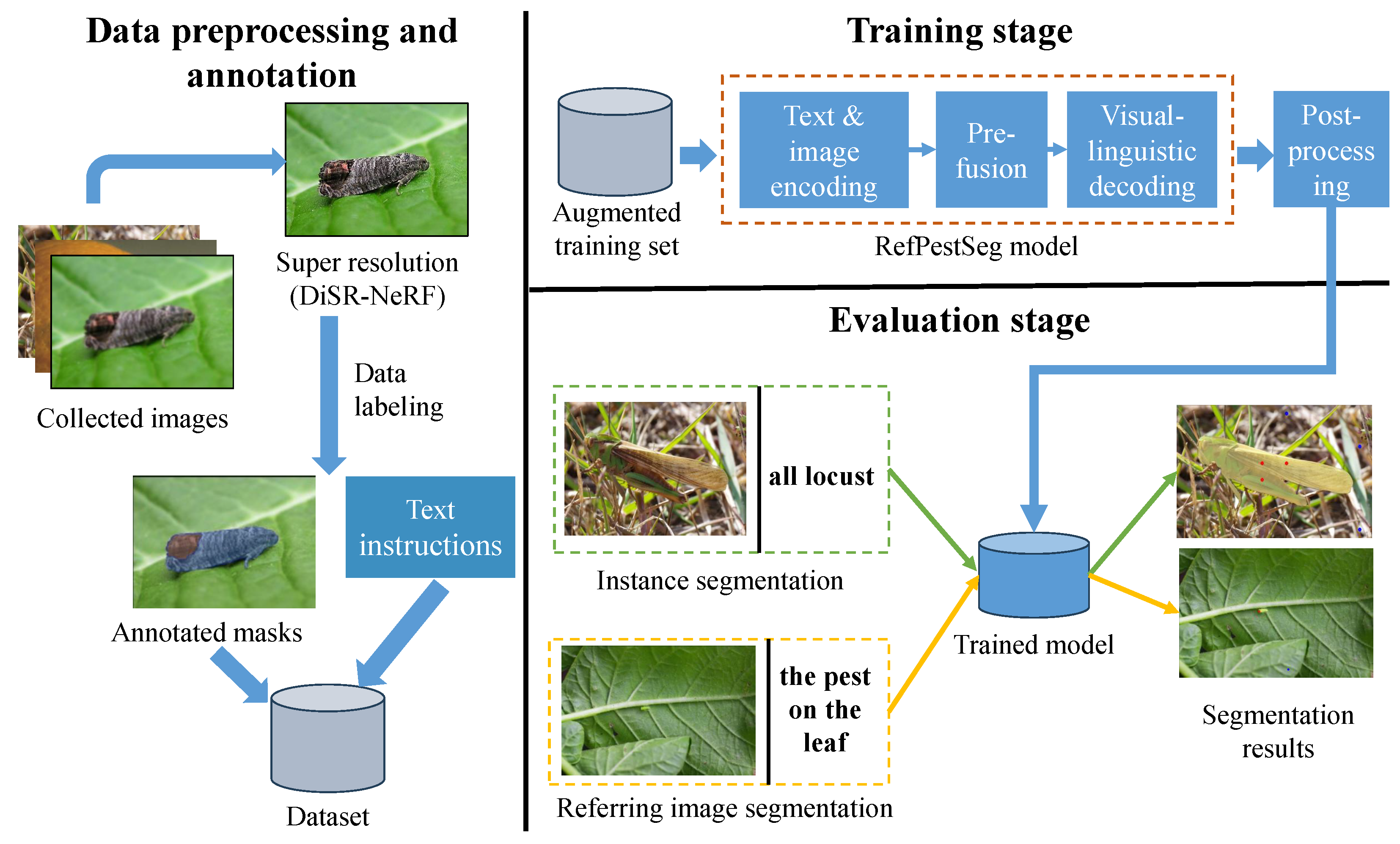

- RefPestSeg, a universal pest segmentation model that unifies visual features with textual guidance for precise pest localization.

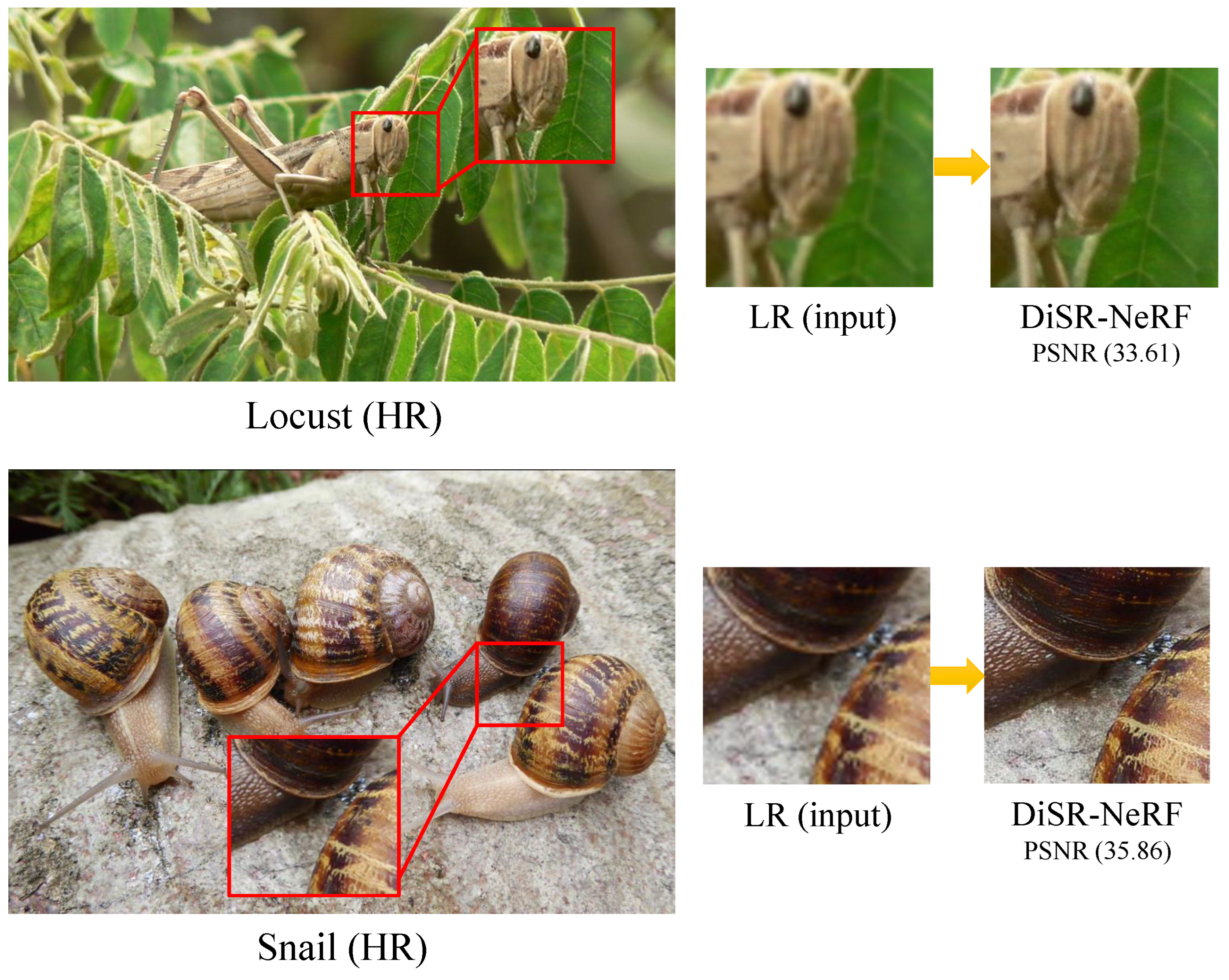

- An enhanced super-resolution (SR) pipeline that improves image quality for precise pest analysis.

- A refined postprocessing module that resolves overlapping segmentation masks and suppresses noise.

2. Related Work

2.1. Pest Segmentation and Detection in Agriculture

2.2. Language-Guided and Referring Image Segmentation

3. Methodology

3.1. Data Preprocessing

3.2. RefPestSeg Model

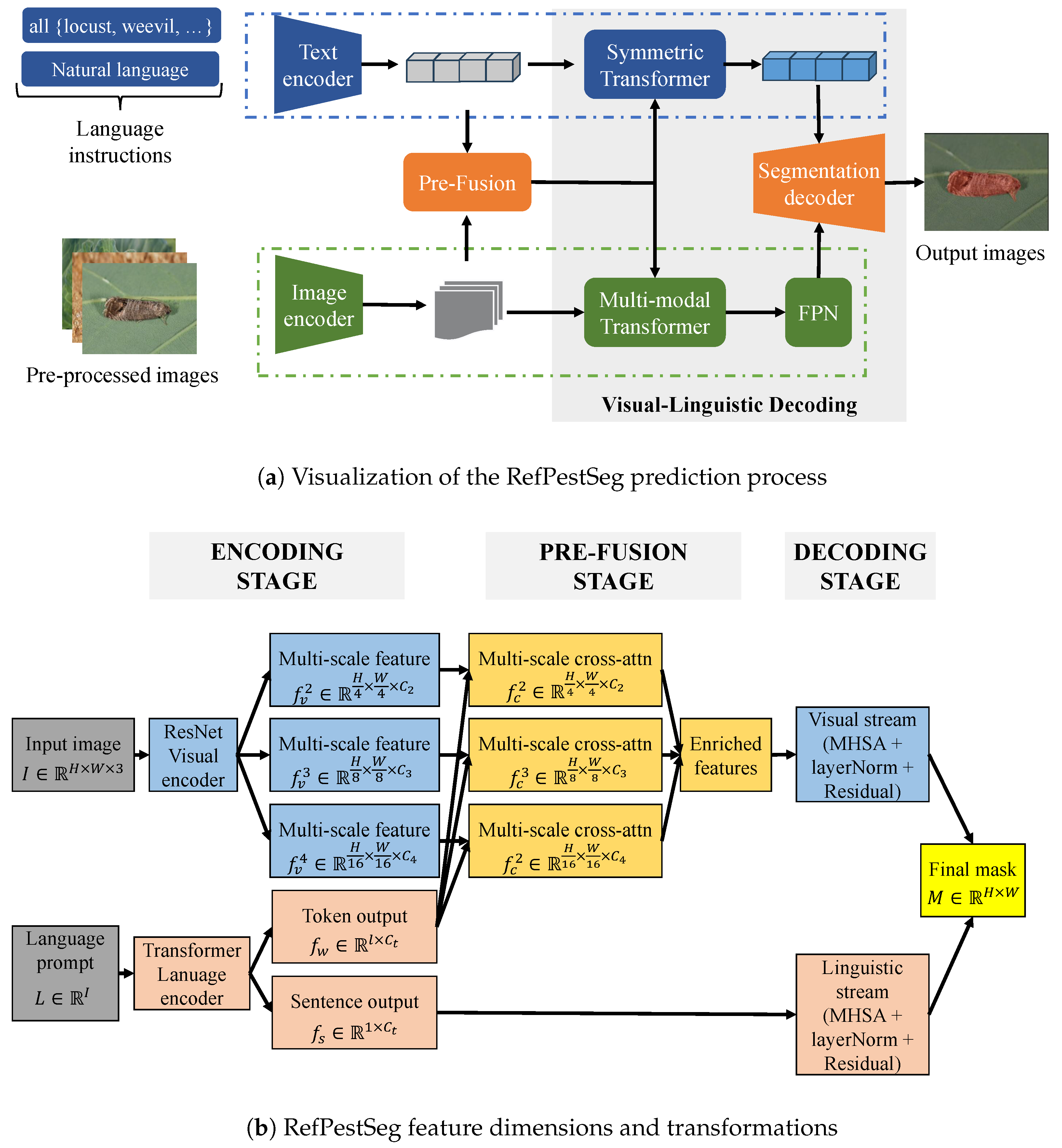

- Encoding Stage: Visual and linguistic features are extracted in parallel. A ResNet visual encoder generates multi-scale hierarchical features at three resolutions from the input image. Concurrently, a transformer-based language encoder processes the prompt to yield token-level embeddings and a global sentence-level embedding.

- Pre-fusion Stage: Multi-scale cross-attention mechanisms align visual and linguistic features at each resolution. This fuses semantic context from the language embeddings into the visual features, producing three enriched representations, one per scale, that preserve spatial dimensions while integrating visual structure and linguistic semantics.

- Decoding Stage: A symmetric dual-stream decoder processes the fused features. The visual stream refines the enriched features through multi-head self-attention (MHSA) blocks with layer normalization and residual connections. The linguistic stream dynamically refines the text embeddings via cross-attention with the visual features, followed by identical MHSA-normalization blocks. The outputs of the two streams are fused to generate the segmentation mask.
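The pre-fusion idea can be illustrated with a rough, dependency-free sketch: each visual location queries the token embeddings through single-head scaled dot-product cross-attention, and the attended language context is added back residually, so the number of spatial locations is unchanged. The function name `cross_attend`, the single head, the absence of learned projections, and the toy dimensions are all assumptions made for illustration; this section does not specify the actual layers.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attend(visual, tokens):
    """Fuse language context into visual features (hypothetical, no learned weights).

    visual: list of N feature vectors (flattened H*W locations), each of length C
    tokens: list of T token embeddings, each of length C
    Returns N fused vectors of length C, so the spatial size is preserved.
    """
    scale = math.sqrt(len(tokens[0]))
    fused = []
    for q in visual:
        # Similarity between this location and every token (scaled dot product).
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in tokens]
        weights = softmax(scores)
        # Weighted sum of token embeddings: the language context for this location.
        context = [sum(w * k[c] for w, k in zip(weights, tokens)) for c in range(len(q))]
        # Residual connection keeps the original visual structure.
        fused.append([x + y for x, y in zip(q, context)])
    return fused

# Toy example: a 2x2 feature map (4 locations), 3 tokens, channel dimension 2.
visual = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
fused = cross_attend(visual, tokens)
```

In a multi-scale setting the same operation would be applied independently to the flattened feature map of each resolution, which is why the enriched features keep the spatial dimensions of their inputs.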

3.2.1. Encoding Process

- Visual Encoding: A ResNet backbone extracts multi-scale hierarchical features from the input image I. The features are indexed by scale i over three resolution levels; each feature map has its own height and width (the spatial dimensions at the i-th scale) and its own number of channels.

- Linguistic Encoding: A transformer-based language encoder processes the tokenized prompt L to generate (i) token-level embeddings capturing word semantics and syntactic relationships; and (ii) a sentence-level embedding representing global semantic meaning. Both embeddings share the same channel dimension for cross-modal compatibility.
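To make the per-scale spatial dimensions concrete, the following sketch computes the height, width, and channel count of each feature map for a given input size. The strides (8, 16, 32) and channel counts (512, 1024, 2048) are assumptions that match the last three stages of a standard ResNet-50; the section does not state which ResNet variant or which stages are used, and the 480×480 input size is likewise hypothetical.

```python
def feature_shapes(height, width, strides=(8, 16, 32), channels=(512, 1024, 2048)):
    """Return (H_i, W_i, C_i) for each scale.

    Strides and channels are ASSUMED (ResNet-50 stages 3 to 5), not taken from the paper.
    """
    shapes = []
    for stride, ch in zip(strides, channels):
        # Ceiling division: padded strided layers round odd sizes up.
        shapes.append((-(-height // stride), -(-width // stride), ch))
    return shapes

# Hypothetical 480x480 input image.
shapes = feature_shapes(480, 480)
```

Under these assumptions the three maps are 60×60, 30×30, and 15×15, which shows why cross-modal fusion has to be repeated at every scale rather than applied once.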

3.2.2. Pre-Fusion Process

3.2.3. Visual–Linguistic Decoding

3.2.4. Mask Postprocessing

Algorithm 1. Postprocessing algorithm

Require: MaskList, , , min_area, max_area
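Since only the inputs of Algorithm 1 survive here, the following is a minimal sketch of one plausible postprocessing routine, not the authors' algorithm. It uses `min_area` and `max_area` to suppress noise and resolves overlaps by letting higher-scoring masks claim contested pixels first. The per-mask confidence score, the greedy ordering, and the helper `rect` are assumptions; the two arguments missing from the `Require` line are not reconstructed.

```python
def postprocess(mask_list, min_area, max_area):
    """Hypothetical sketch: area filtering plus greedy overlap resolution.

    mask_list: list of (score, pixels), where pixels is a set of (row, col) tuples.
    Returns a list of (score, pixels) with pairwise-disjoint pixel sets.
    """
    # 1) Suppress noise: drop masks that are implausibly small or large.
    kept = [(s, set(p)) for s, p in mask_list if min_area <= len(p) <= max_area]
    # 2) Resolve overlaps: higher-scoring masks claim contested pixels first.
    kept.sort(key=lambda item: item[0], reverse=True)
    claimed = set()
    result = []
    for score, pixels in kept:
        remaining = pixels - claimed
        # A mask that shrinks below min_area after overlap removal is treated as noise.
        if len(remaining) >= min_area:
            result.append((score, remaining))
            claimed |= remaining
    return result

def rect(r0, c0, r1, c1):
    """Pixel set of the half-open rectangle [r0, r1) x [c0, c1)."""
    return {(r, c) for r in range(r0, r1) for c in range(c0, c1)}

masks = [
    (0.9, rect(0, 0, 4, 4)),    # 16 px, most confident
    (0.6, rect(2, 2, 6, 6)),    # 16 px, overlaps the first mask in a 2x2 block
    (0.8, rect(9, 9, 10, 10)),  # 1 px speck, removed as noise
]
clean = postprocess(masks, min_area=4, max_area=100)
```

In this toy case the speck is discarded, the first mask is kept whole, and the second mask loses the four contested pixels, so the surviving masks no longer overlap.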

4. Dataset and Evaluation Metric

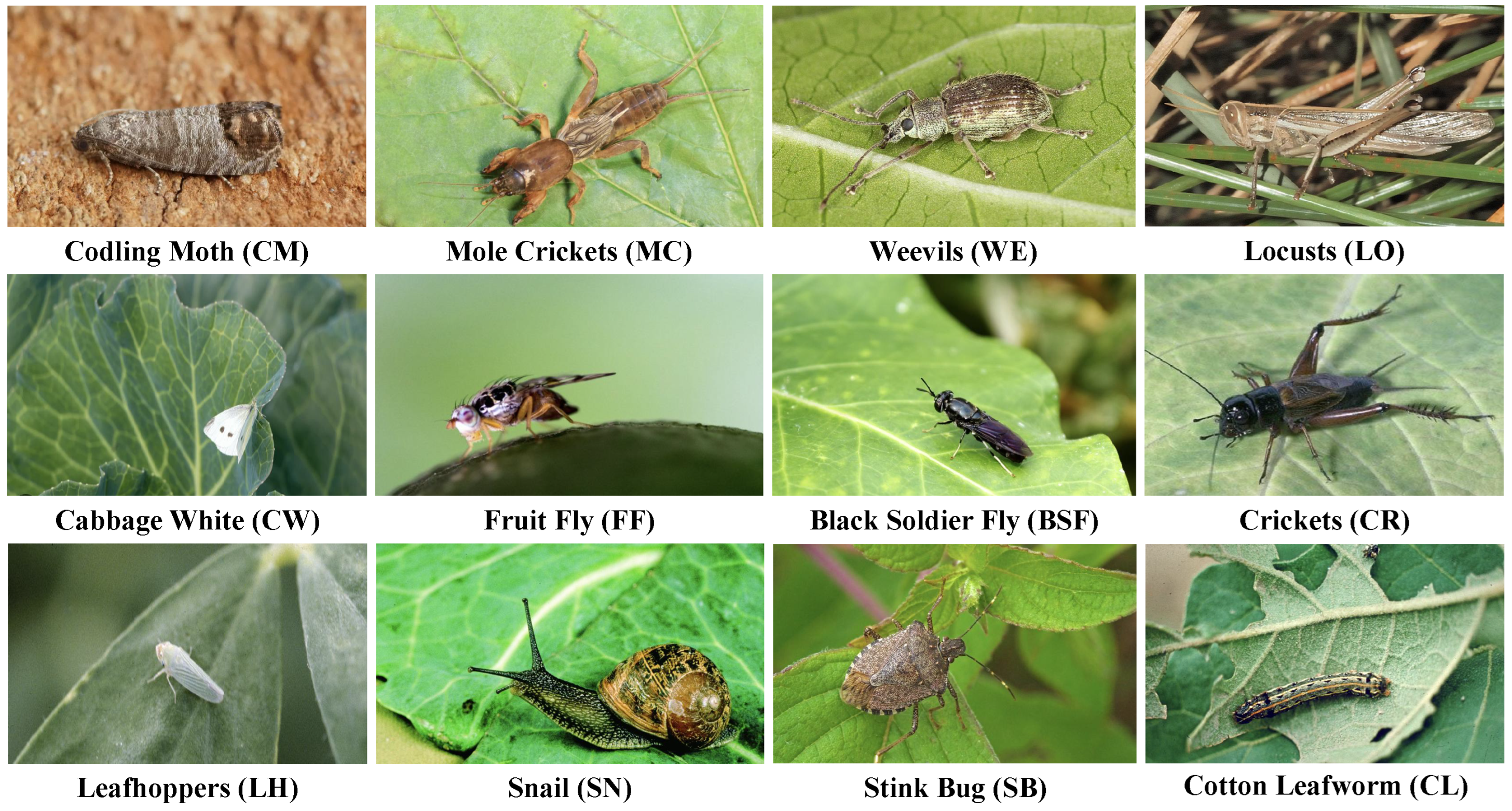

4.1. Data Overview

4.2. Unified Annotation Protocol

4.2.1. RGB Image Constraints

4.2.2. Image Annotation Protocol

- Each visible pest instance receives a separate polygonal mask enclosing its complete body, including head, thorax, abdomen, wings, legs, etc.

- Background elements (foliage, soil, non-pest objects) are treated as a single background class without annotation.

- Each mask is paired with a species-level categorical label.

4.2.3. Task-Specific Language Caption Annotation Protocol

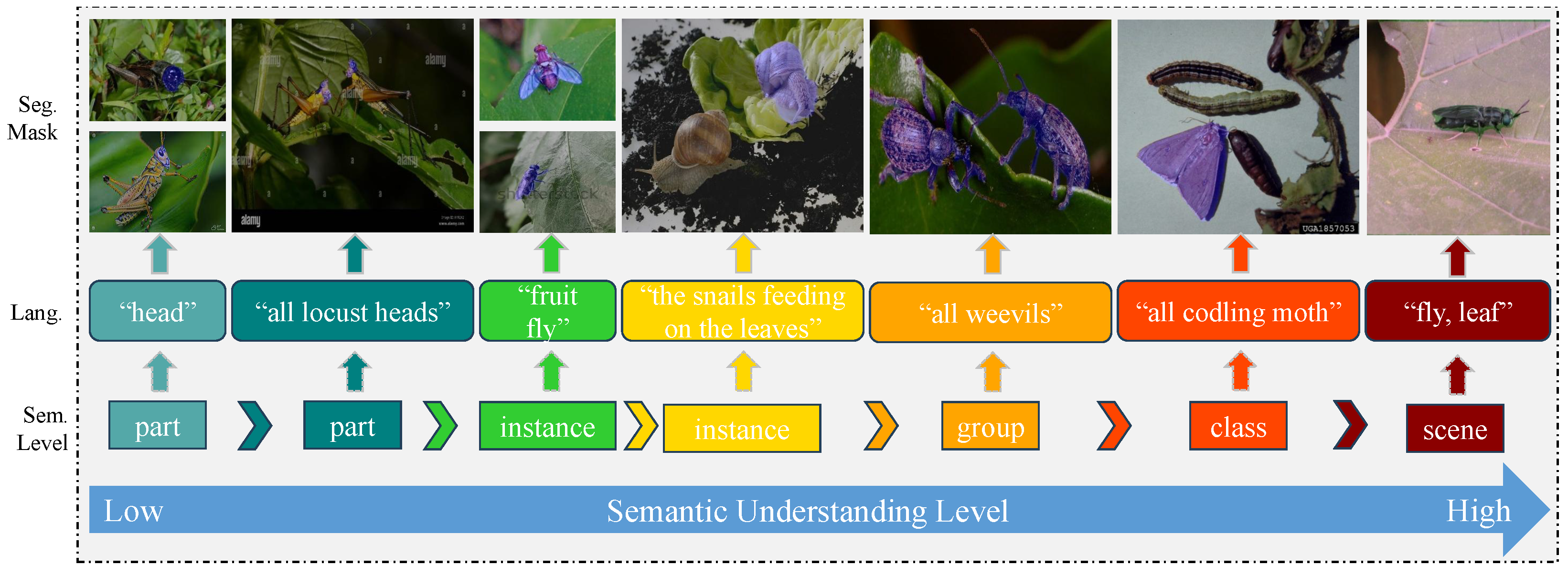

- Semantic segmentation: Template-based prompts (“all {species}”) using category names as concise textual expressions.

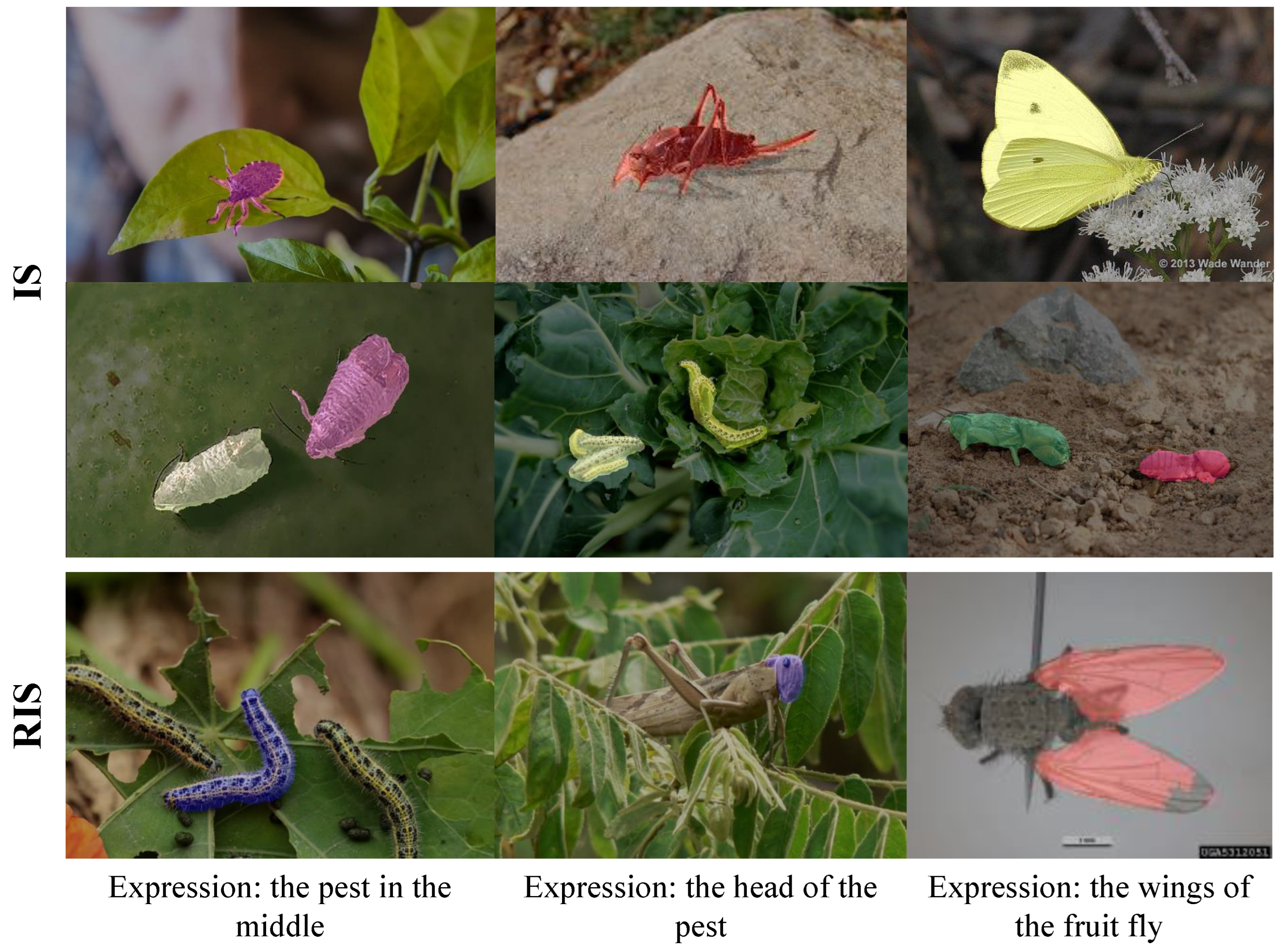

- Referring image segmentation (RIS): Instance-specific descriptions generated via BLIP [29], conditioned on pest category and visual context. This approach enables “arbitrary-granularity segmentation” at inference time, where natural language queries (e.g., “the leftmost locust” or “wings of the fruit fly”) dynamically define target regions. Crucially, while part-level queries (e.g., “wings”) are supported during inference, no part-level ground truth exists in the annotations. Predictions for such queries are constrained to lie within full-instance masks.
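Two parts of the caption protocol above lend themselves to a short sketch: building the template prompt “all {species}” for semantic segmentation, and constraining a part-level prediction to its full-instance mask. The function names and the representation of masks as sets of (row, col) pixels are assumptions for illustration; the paper does not describe how the constraint is implemented.

```python
def semantic_prompt(species):
    """Template-based prompt from the annotation protocol: "all {species}"."""
    return "all {species}".format(species=species)

def constrain_to_instance(part_pixels, instance_pixels):
    """Keep only predicted pixels that fall inside the full-instance mask."""
    return part_pixels & instance_pixels

prompt = semantic_prompt("locusts")

# Hypothetical 5x5 instance mask and a part prediction that spills outside it.
instance = {(r, c) for r in range(0, 5) for c in range(0, 5)}
wings_pred = {(r, c) for r in range(3, 7) for c in range(3, 7)}
wings = constrain_to_instance(wings_pred, instance)
```

Intersecting with the instance mask is one simple way to guarantee that a part-level answer never extends beyond the annotated pest body, even though no part-level ground truth is available.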

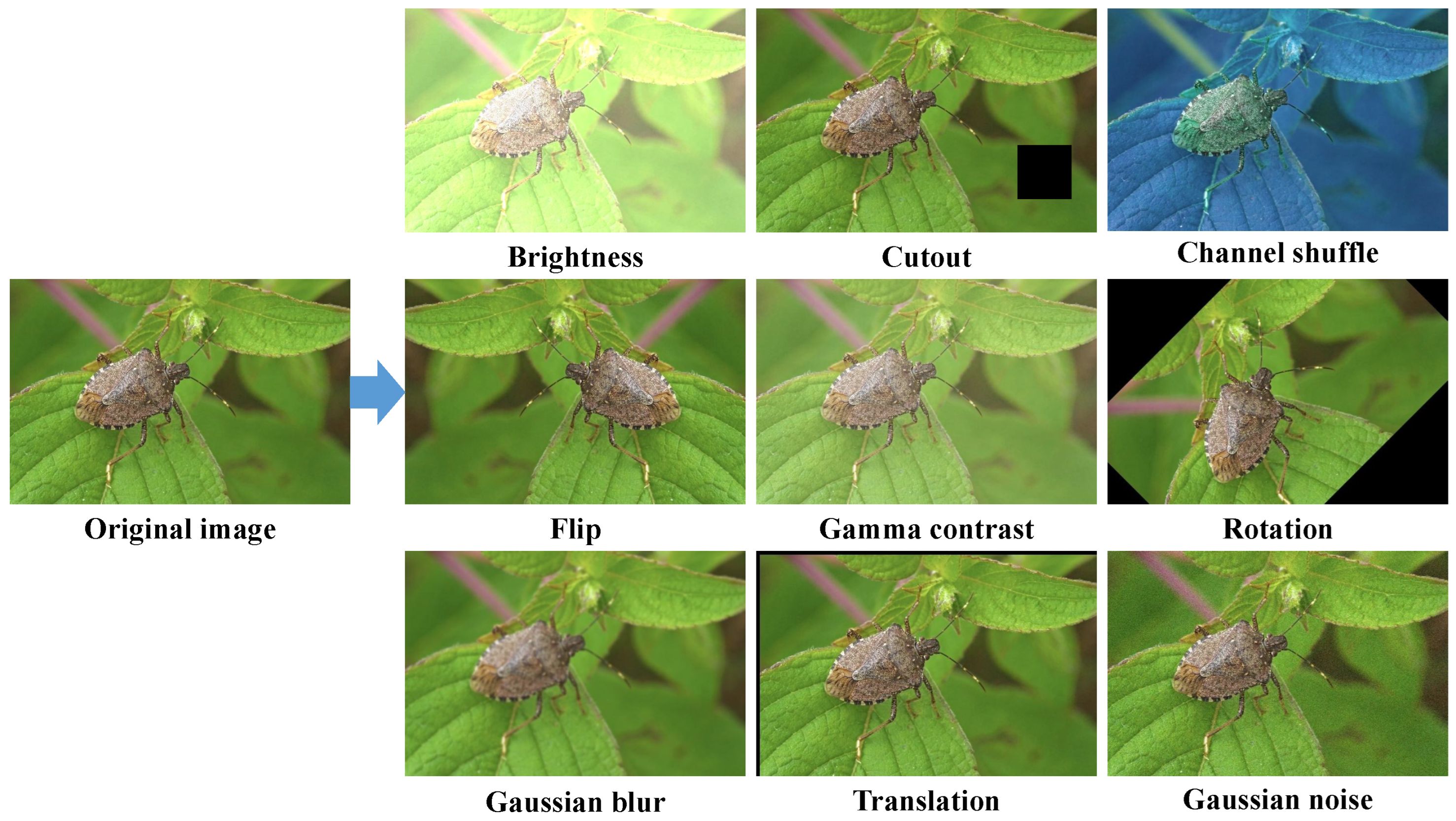

4.3. Data Augmentation

4.4. Evaluation Metrics

5. Experimental Results

- Preprocessing impact (Section 5.2.1): Quantifies accuracy gains from the preprocessing pipeline.

- Segmentation performance (Section 5.2.2): Reports quantitative metrics and visual results across diverse scenarios.

- Granularity analysis (Section 5.2.3): Evaluates segmentation fidelity at multiple semantic levels (low to high granularity).

- Comparative benchmarking (Section 5.2.5): Validates RefPestSeg’s performance against state-of-the-art segmentation baselines.

5.1. Implementation Descriptions

5.2. Pest Identification Framework Performance Assessment

5.2.1. Performance Analysis of the Preprocessing Process

5.2.2. RefPestSeg Performance Evaluations

5.2.3. Analysis of RefPestSeg Segmentation Results at Various Semantic Levels

5.2.4. Ablation Study

5.2.5. Comparison Study for RefPestSeg

6. Discussion

7. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Passias, A.; Tsakalos, K.A.; Rigogiannis, N.; Voglitsis, D.; Papanikolaou, N.; Michalopoulou, M.; Broufas, G.; Sirakoulis, G.C. Insect Pest Trap Development and DL-Based Pest Detection: A Comprehensive Review. IEEE Trans. Agrifood Electron. 2024, 2, 323–334.

2. Wang, R.; Liu, L.; Xie, C.; Yang, P.; Li, R.; Zhou, M. Agripest: A large-scale domain-specific benchmark dataset for practical agricultural pest detection in the wild. Sensors 2021, 21, 1601.

3. Su, H.; Li, Y.; Xu, Y.; Fu, X.; Liu, S. A review of deep-learning-based super-resolution: From methods to applications. Pattern Recognit. 2024, 157, 110935.

4. Wang, H.; Li, Y.; Dang, L.M.; Moon, H. An efficient attention module for instance segmentation network in pest monitoring. Comput. Electron. Agric. 2022, 195, 106853.

5. Jing, Y.; Kong, T.; Wang, W.; Wang, L.; Li, L.; Tan, T. Locate then segment: A strong pipeline for referring image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 9858–9867.

6. Gong, H.; Liu, T.; Luo, T.; Guo, J.; Feng, R.; Li, J.; Ma, X.; Mu, Y.; Hu, T.; Sun, Y.; et al. Based on FCN and DenseNet framework for the research of rice pest identification methods. Agronomy 2023, 13, 410.

7. Ye, W.; Lao, J.; Liu, Y.; Chang, C.C.; Zhang, Z.; Li, H.; Zhou, H. Pine pest detection using remote sensing satellite images combined with a multi-scale attention-UNet model. Ecol. Inform. 2022, 72, 101906.

8. Bose, K.; Shubham, K.; Tiwari, V.; Patel, K.S. Insect image semantic segmentation and identification using UNET and DeepLab V3+. In ICT Infrastructure and Computing: Proceedings of ICT4SD 2022, Goa, India, 29–30 July 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 703–711.

9. Li, H.; Shi, H.; Du, A.; Mao, Y.; Fan, K.; Wang, Y.; Shen, Y.; Wang, S.; Xu, X.; Tian, L.; et al. Symptom recognition of disease and insect damage based on Mask R-CNN, wavelet transform, and F-RNet. Front. Plant Sci. 2022, 13, 922797.

10. Rong, M.; Wang, Z.; Ban, B.; Guo, X. Pest Identification and Counting of Yellow Plate in Field Based on Improved Mask R-CNN. Discret. Dyn. Nat. Soc. 2022, 2022, 1913577.

11. Zhang, C.; Zhang, Y.; Xu, X. Dilated inception U-Net with attention for crop pest image segmentation in real-field environment. Smart Agric. Technol. 2025, 11, 100917.

12. Zhang, Y.; Lv, C. TinySegformer: A lightweight visual segmentation model for real-time agricultural pest detection. Comput. Electron. Agric. 2024, 218, 108740.

13. Biradar, N.; Hosalli, G. Segmentation and detection of crop pests using novel U-Net with hybrid deep learning mechanism. Pest Manag. Sci. 2024, 80, 3795–3807.

14. Hu, R.; Rohrbach, M.; Darrell, T. Segmentation from natural language expressions. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 108–124.

15. Liu, C.; Lin, Z.; Shen, X.; Yang, J.; Lu, X.; Yuille, A. Recurrent multimodal interaction for referring image segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1271–1280.

16. Huang, S.; Hui, T.; Liu, S.; Li, G.; Wei, Y.; Han, J.; Liu, L.; Li, B. Referring image segmentation via cross-modal progressive comprehension. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10488–10497.

17. Yu, L.; Lin, Z.; Shen, X.; Yang, J.; Lu, X.; Bansal, M.; Berg, T.L. MAttNet: Modular attention network for referring expression comprehension. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1307–1315.

18. Yang, Z.; Wang, J.; Tang, Y.; Chen, K.; Zhao, H.; Torr, P.H. LAVT: Language-aware vision transformer for referring image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18155–18165.

19. Liu, J.; Ding, H.; Cai, Z.; Zhang, Y.; Satzoda, R.K.; Mahadevan, V.; Manmatha, R. Polyformer: Referring image segmentation as sequential polygon generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 18653–18663.

20. Yan, Y.; He, X.; Wang, W.; Chen, S.; Liu, J. Eavl: Explicitly align vision and language for referring image segmentation. arXiv 2023, arXiv:2308.09779.

21. Zhang, M.; Liu, Y.; Yin, X.; Yue, H.; Yang, J. Risam: Referring image segmentation via mutual-aware attention features. arXiv 2023, arXiv:2311.15727.

22. Meng, C.; Shu, L.; Han, R.; Chen, Y.; Yi, L.; Deng, D.J. Farmsr: Super-resolution in precision agriculture field production scenes. In Proceedings of the 2024 IEEE 22nd International Conference on Industrial Informatics (INDIN), Beijing, China, 17–20 August 2024; pp. 1–6.

23. Moser, B.B.; Shanbhag, A.S.; Raue, F.; Frolov, S.; Palacio, S.; Dengel, A. Diffusion models, image super-resolution, and everything: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 11793–11813.

24. Lee, J.L.; Li, C.; Lee, G.H. DiSR-NeRF: Diffusion-Guided View-Consistent Super-Resolution NeRF. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 20561–20570.

25. Liu, Y.; Zhang, C.; Wang, Y.; Wang, J.; Yang, Y.; Tang, Y. Universal segmentation at arbitrary granularity with language instruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 3459–3469.

26. Zou, X.; Dou, Z.Y.; Yang, J.; Gan, Z.; Li, L.; Li, C.; Dai, X.; Behl, H.; Wang, J.; Yuan, L.; et al. Generalized decoding for pixel, image, and language. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 15116–15127.

27. Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Conditional prompt learning for vision-language models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16816–16825.

28. Russell, B.C.; Torralba, A.; Murphy, K.P.; Freeman, W.T. LabelMe: A database and web-based tool for image annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

29. Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proceedings of the International Conference on Machine Learning PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 12888–12900.

30. Wang, Z.; Lu, Y.; Li, Q.; Tao, X.; Guo, Y.; Gong, M.; Liu, T. CRIS: CLIP-driven referring image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11686–11695.

31. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

32. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

33. Yeung, M.; Sala, E.; Schönlieb, C.B.; Rundo, L. Unified focal loss: Generalising dice and cross entropy-based losses to handle class imbalanced medical image segmentation. Comput. Med. Imaging Graph. 2022, 95, 102026.

34. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

35. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

36. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

37. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

| Setting | IoU | Precision@0.5 | Precision@0.7 | Precision@0.9 |
|---|---|---|---|---|
| w/o preprocessing | 64.50 | 73.22 | 68.25 | 58.10 |
| w/ preprocessing | 69.08 | 77.94 | 73.31 | 63.67 |
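The IoU and Precision@X columns above can be computed along the following lines. This is a sketch under the common referring-segmentation convention, which is assumed here rather than taken from the paper: IoU is the intersection over union of predicted and ground-truth pixels, and Precision@X is the percentage of test samples whose IoU exceeds the threshold X. Whether the comparison is strict and how per-sample IoU is aggregated are not specified in this section.

```python
def iou(pred, gt):
    """Intersection over union of two pixel sets; 0.0 when both are empty."""
    union = len(pred | gt)
    return len(pred & gt) / union if union else 0.0

def precision_at(ious, threshold):
    """Percentage of samples whose IoU exceeds the threshold (assumed definition)."""
    return 100.0 * sum(v > threshold for v in ious) / len(ious)

# Hypothetical per-sample IoU values, not data from the paper.
ious = [0.95, 0.80, 0.60, 0.40]
summary = {t: precision_at(ious, t) for t in (0.5, 0.7, 0.9)}
```

Because a higher threshold can only exclude samples, Precision@0.9 is never larger than Precision@0.7, which in turn is never larger than Precision@0.5; the rows of the table follow this ordering.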

| Pest | IoU | Pr@50 | Pr@70 | Pr@90 |
|---|---|---|---|---|
| Black Soldier Fly | 70.25 | 80.43 | 75.12 | 65.37 |
| Mole Crickets | 63.18 | 69.87 | 64.53 | 55.29 |
| Weevils | 68.49 | 78.36 | 73.84 | 63.57 |
| Locusts | 65.22 | 72.19 | 68.63 | 60.15 |
| Cabbage White | 66.78 | 74.62 | 70.55 | 61.38 |
| Snail | 75.42 | 85.12 | 80.97 | 70.25 |
| Codling Moth | 73.19 | 83.47 | 78.84 | 68.32 |
| Crickets | 72.35 | 82.76 | 77.93 | 67.54 |
| Leafhoppers | 67.89 | 75.62 | 71.48 | 62.97 |
| Fruit Fly | 64.57 | 71.93 | 67.28 | 58.46 |
| Stink Bug | 69.74 | 79.28 | 74.15 | 64.02 |
| Cotton Leafworm | 71.84 | 81.59 | 76.43 | 66.78 |
| Average | 69.08 | 77.94 | 73.31 | 63.67 |
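As a consistency check, the Average row can be reproduced as the unweighted mean of the twelve per-species rows. The snippet below copies the values from the table; treating the average as an unweighted mean over classes is an assumption, although the numbers agree with the reported row to within rounding.

```python
# Per-species rows copied from the table: (IoU, Pr@50, Pr@70, Pr@90).
rows = [
    (70.25, 80.43, 75.12, 65.37), (63.18, 69.87, 64.53, 55.29),
    (68.49, 78.36, 73.84, 63.57), (65.22, 72.19, 68.63, 60.15),
    (66.78, 74.62, 70.55, 61.38), (75.42, 85.12, 80.97, 70.25),
    (73.19, 83.47, 78.84, 68.32), (72.35, 82.76, 77.93, 67.54),
    (67.89, 75.62, 71.48, 62.97), (64.57, 71.93, 67.28, 58.46),
    (69.74, 79.28, 74.15, 64.02), (71.84, 81.59, 76.43, 66.78),
]
reported = (69.08, 77.94, 73.31, 63.67)

# Column-wise unweighted mean over the twelve species.
means = [sum(col) / len(col) for col in zip(*rows)]
max_gap = max(abs(m - r) for m, r in zip(means, reported))
```

The largest gap between a recomputed mean and the reported value stays below 0.01, so the Average row is consistent with a simple per-class mean.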

| Method | IoU | Precision@50 |
|---|---|---|
| Baseline | 58.42 | 67.31 |
| + Pre-fusion | 61.85 | 70.48 |
| + Pre-fusion, + Vision path | 65.23 | 74.12 |
| + Pre-fusion, + Language path | 64.97 | 73.56 |
| + Pre-fusion, + Vision path, + Language path | 69.08 | 77.94 |
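The contribution of each component in the ablation table can be read off as a difference in IoU. The short calculation below uses only the values from the table; the variable names and the short configuration labels are illustrative.

```python
# IoU values copied from the ablation table.
iou_by_config = {
    "baseline": 58.42,
    "prefusion": 61.85,
    "prefusion+vision": 65.23,
    "prefusion+language": 64.97,
    "full": 69.08,
}

# Gain of every configuration over the baseline, in IoU points.
gain_over_baseline = {
    name: round(value - iou_by_config["baseline"], 2)
    for name, value in iou_by_config.items() if name != "baseline"
}

# Gains of the two decoder paths measured on top of pre-fusion.
pre = iou_by_config["prefusion"]
vision_gain = round(iou_by_config["prefusion+vision"] - pre, 2)
language_gain = round(iou_by_config["prefusion+language"] - pre, 2)
joint_gain = round(iou_by_config["full"] - pre, 2)
```

On top of pre-fusion, the vision path alone adds 3.38 IoU points and the language path alone adds 3.12, while enabling both adds 7.23, slightly more than the sum of the individual gains (6.50). This suggests that the two paths are complementary, although the table by itself does not establish why.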


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dang, L.M.; Danish, S.; Fayaz, M.; Khan, A.; Arzu, G.E.; Tightiz, L.; Song, H.-K.; Moon, H. Universal Image Segmentation with Arbitrary Granularity for Efficient Pest Monitoring. Horticulturae 2025, 11, 1462. https://doi.org/10.3390/horticulturae11121462
