Active Laser-Camera Scanning for High-Precision Fruit Localization in Robotic Harvesting: System Design and Calibration

Abstract

1. Introduction

- A hardware system consisting of a red line laser, an RGB camera, and a linear motion slide, coupled with an active scanning scheme, is developed for fruit localization based on the laser-triangulation principle.

- A high-fidelity extrinsic model is developed to capture 3D measurements by matching the laser illumination source with the RGB pixels. A robust calibration scheme is then developed to calibrate the model parameters by leveraging random sample consensus (RANSAC) techniques to detect and remove data outliers.

- The effectiveness of the developed model and calibration scheme is evaluated through comprehensive experiments. The results show that the calibrated active laser-camera scanning (ALACS) system can achieve high-precision localization with millimeter-level accuracy.

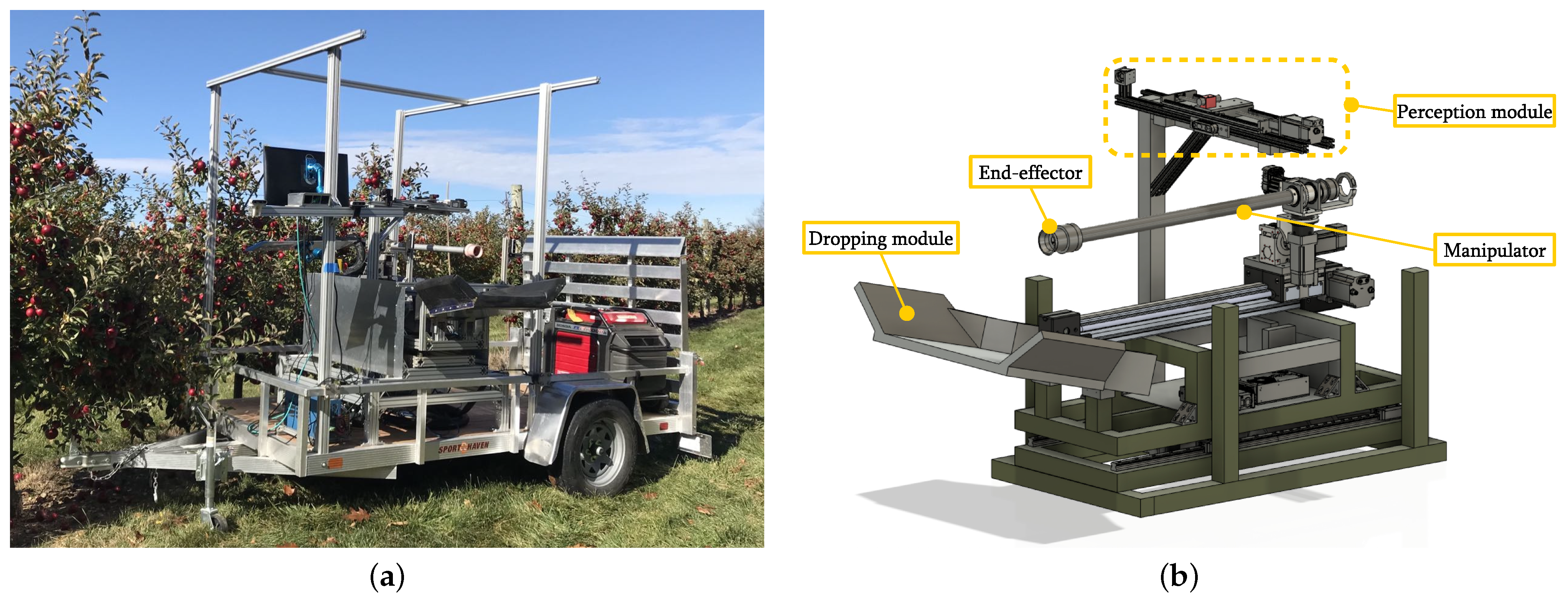

2. Overview of the Robotic Apple Harvesting System

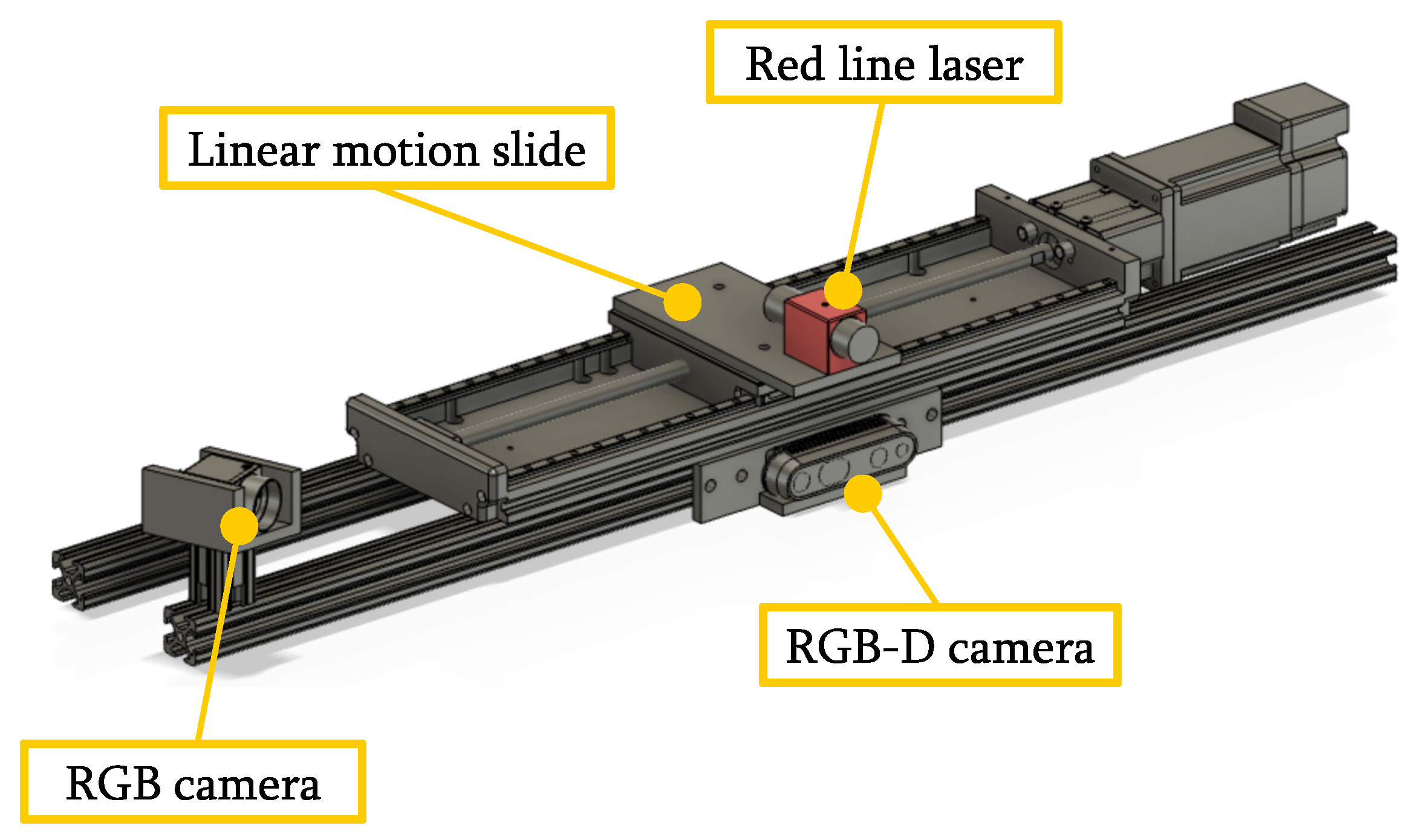

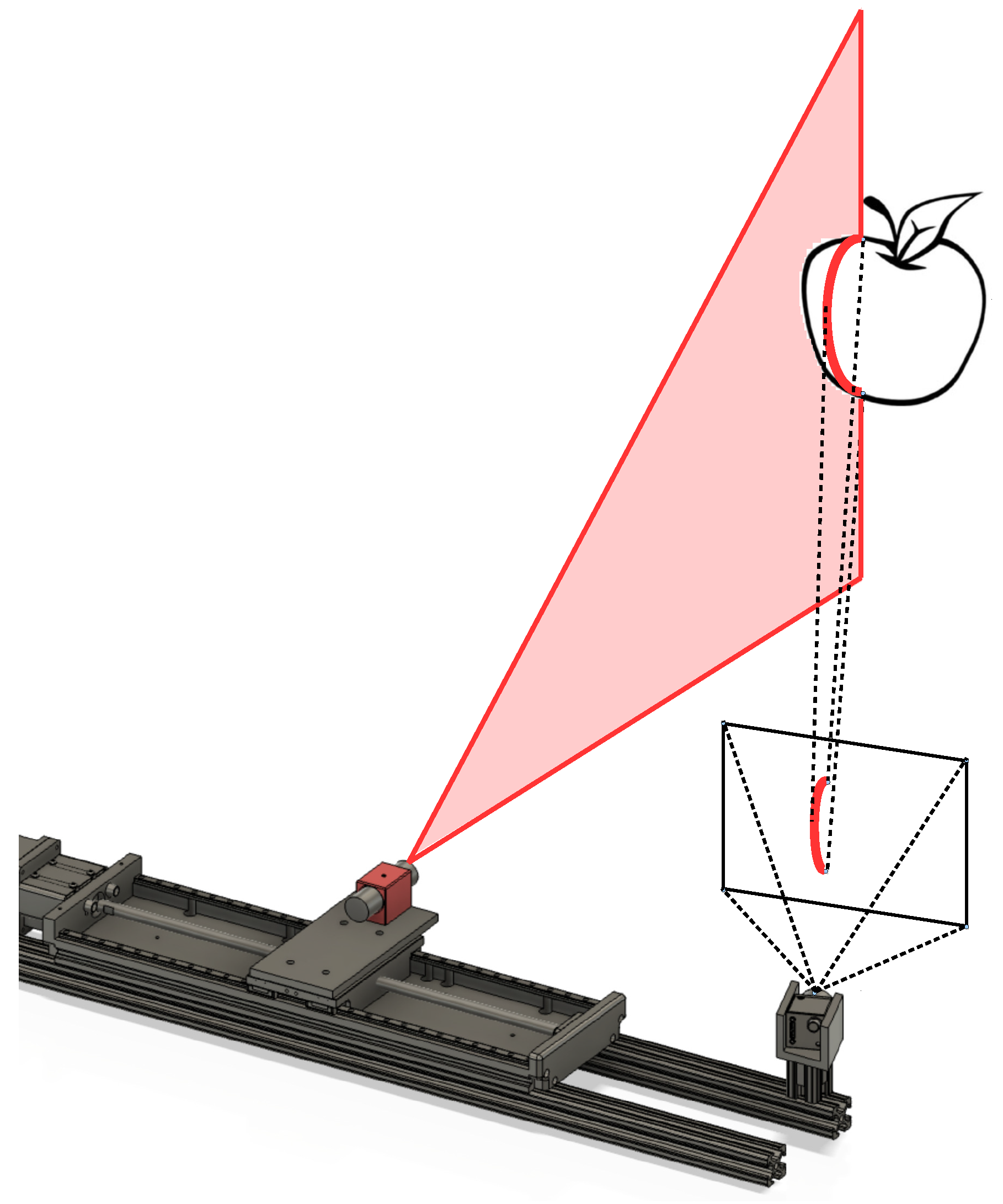

3. Design of the Active Laser-Camera Scanner

- Initialization. The linear motion slide moves the laser to an initial position at which the red laser line is projected onto the left half of the target apple. This initial position is obtained by transforming the rough target apple location provided by the RGB-D camera into the coordinate frame of the ALACS unit.

- Interval scanning. When the laser reaches the initial position, the FLIR camera is activated to capture an image. The linear motion slide then travels four centimeters to the right in one-centimeter increments, pausing at each increment so that the FLIR camera can capture an image. This procedure yields five images in total, with the laser line projected at a different position in each. Because the laser line provides high-spatial-resolution localization information for the target fruit, this scanning strategy is used to mitigate the impact of occlusion: when the target apple is partially occluded by foliage, moving the laser to multiple positions reduces the likelihood that every laser line is entirely blocked by the obstacle.

- Refinement of 3D position. For each image captured by the FLIR camera, the laser line projected on the target apple surface is extracted and then used to generate a 3D location candidate. Laser line extraction relies on computer vision approaches, and the position candidates are computed with laser triangulation-based techniques. This yields five position candidates, and a holistic evaluation function selects one of them as the final target apple location.
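The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: `scan_positions`, `select_location`, and `closeness` are hypothetical names introduced here, and because the text does not specify the holistic evaluation function, the score below (closeness to the rough RGB-D estimate) is only a placeholder.

```python
import math
from typing import Callable, List, Optional, Tuple

Point3D = Tuple[float, float, float]


def scan_positions(initial_cm: float, travel_cm: float = 4.0,
                   step_cm: float = 1.0) -> List[float]:
    """Slide positions for one scan: the initial position plus each increment."""
    n_steps = int(round(travel_cm / step_cm))
    return [initial_cm + i * step_cm for i in range(n_steps + 1)]


def select_location(candidates: List[Optional[Point3D]],
                    score: Callable[[Point3D], float]) -> Optional[Point3D]:
    """Return the highest-scoring candidate; None entries (no usable laser line) are skipped."""
    valid = [c for c in candidates if c is not None]
    return max(valid, key=score) if valid else None


# Hypothetical data: one candidate per image; None marks a fully occluded laser line.
rough_estimate: Point3D = (0.0, 0.0, 1000.0)  # assumed rough RGB-D location, in mm
candidates = [(2.0, 1.0, 1004.0), None, (1.0, 0.5, 1001.0),
              (9.0, 4.0, 1030.0), (3.0, 2.0, 1008.0)]


def closeness(p: Point3D) -> float:
    """Placeholder score (assumption): prefer candidates near the rough estimate."""
    return -math.dist(p, rough_estimate)


positions = scan_positions(initial_cm=12.0)   # five slide positions, 1 cm apart
best = select_location(candidates, closeness)
```

Skipping `None` entries mirrors the motivation given for interval scanning: an image whose laser line is fully blocked simply contributes no candidate, and the remaining images still produce a result.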

4. Extrinsic Model and Calibration

4.1. Modeling of the ALACS Unit

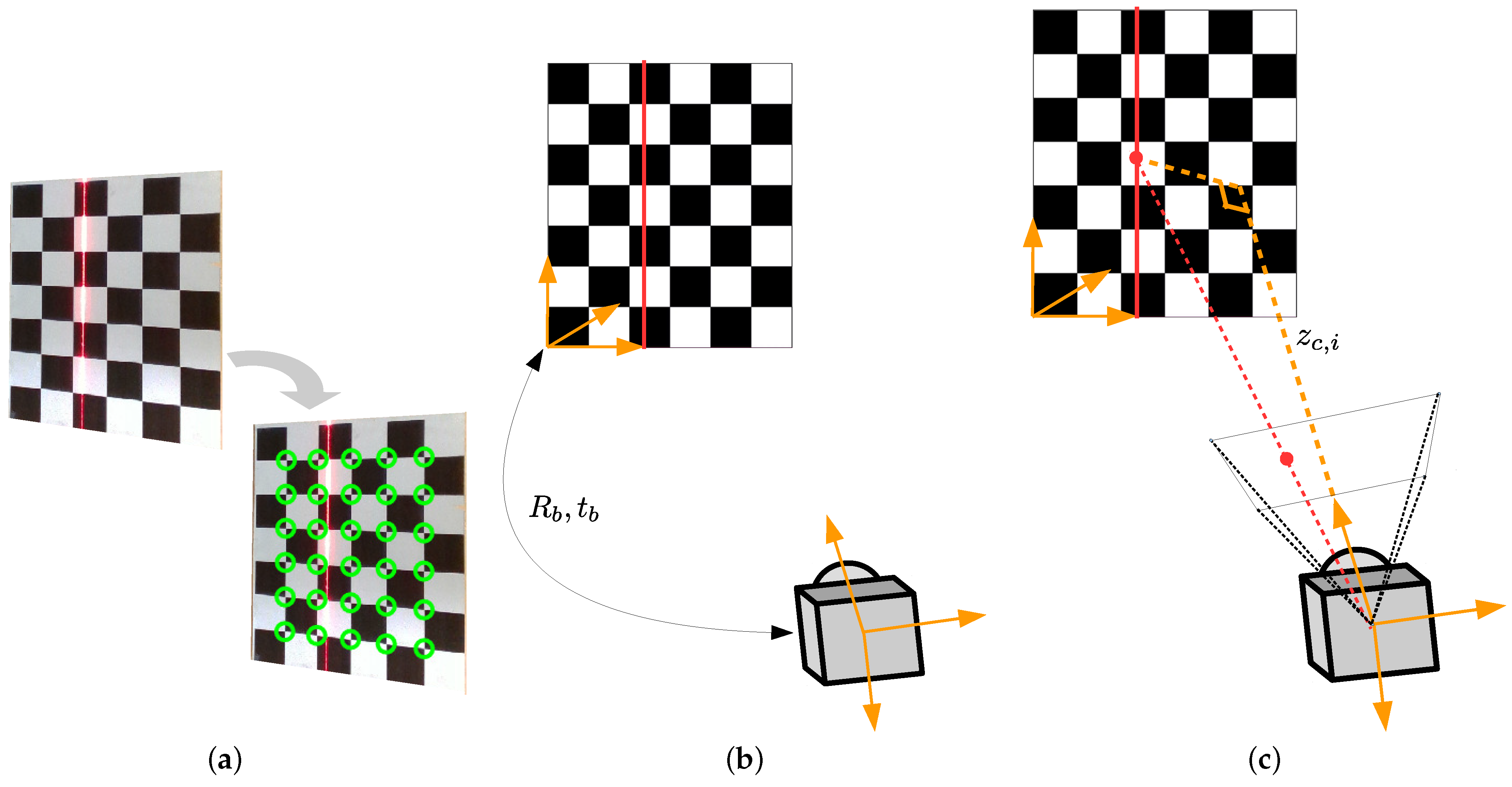

4.2. Robust Calibration Scheme

- Corner Detection. The checkerboard corners are detected from the image by using the algorithm developed in [45].

- Pose Reconstruction. Based on the detected checkerboard corners and the known checkerboard square size, the relative pose between the planar checkerboard and the camera is reconstructed [46]. The pose is described by a rotation matrix and a translation vector.

- Computation of . Based on the relative pose information , and the normalized coordinate , is calculated with projection geometry [46].
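The projection-geometry step can be illustrated with a ray-plane intersection. The sketch below assumes that the computed quantity is the 3D point, in the camera frame, at which the camera ray through a normalized image coordinate meets the checkerboard plane; this reading, the function name `intersect_ray_with_board`, and the convention that the board lies in its own z = 0 plane with `X_cam = R @ X_board + t` are assumptions introduced here rather than details taken from the text.

```python
import numpy as np


def intersect_ray_with_board(R, t, xy_normalized):
    """Intersect the camera ray through (x, y, 1) with the checkerboard plane.

    R, t: board-to-camera rotation (3x3) and translation (3,), assumed convention.
    xy_normalized: normalized image coordinate (x, y) of the pixel.
    Returns the 3D intersection point in the camera frame.
    """
    R = np.asarray(R, dtype=float)
    t = np.asarray(t, dtype=float).reshape(3)
    ray = np.array([xy_normalized[0], xy_normalized[1], 1.0])
    normal = R[:, 2]              # board z-axis expressed in the camera frame
    denom = normal @ ray
    if abs(denom) < 1e-12:
        raise ValueError("Camera ray is parallel to the checkerboard plane.")
    scale = (normal @ t) / denom  # solves normal . (scale * ray - t) = 0
    return scale * ray


# Hypothetical example: a board facing the camera, 500 mm away.
point = intersect_ray_with_board(np.eye(3), [0.0, 0.0, 500.0], (0.1, 0.2))
```

The intersection depends only on the board normal and one point on the board, so the in-plane rotation of the checkerboard does not affect the result.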

| Algorithm 1 RANSAC-based robust calibration |
|---|
| Repeat until the iteration loop terminates: |
| 1. Hypothesis generation. Randomly select 4 data samples to construct a subset, then estimate the parameters based on this subset and (9). |
| 2. Verification. Initialize the inlier set as {}. For each data sample, add it to the inlier set if it satisfies the inlier condition. If the resulting inlier set satisfies the update condition, record it. |
| After the loop: estimate the parameters based on the recorded inlier set and (9). |
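The hypothesize-and-verify loop of Algorithm 1 can be sketched as follows. This is a generic RANSAC illustration, not the paper's calibration code: the estimation step based on (9) is replaced by an ordinary linear least-squares fit, and the names `fit_parameters` and `ransac_calibrate`, the residual threshold, the iteration count, and the synthetic data are all assumptions made for the example. Only the sample size of 4 is taken from the algorithm.

```python
import numpy as np


def fit_parameters(X, y):
    """Stand-in for the estimation step based on (9): linear least squares (assumption)."""
    params, *_ = np.linalg.lstsq(X, y, rcond=None)
    return params


def ransac_calibrate(X, y, n_iters=200, sample_size=4, threshold=1.0, seed=0):
    """Return parameters fitted on the largest inlier set found, plus the inlier mask."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(y), dtype=bool)
    for _ in range(n_iters):
        # 1. Hypothesis generation: fit the model to a random minimal subset.
        idx = rng.choice(len(y), size=sample_size, replace=False)
        params = fit_parameters(X[idx], y[idx])
        # 2. Verification: collect samples whose residual is below the threshold.
        inliers = np.abs(X @ params - y) < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    # Final estimate uses every sample in the best inlier set.
    return fit_parameters(X[best_inliers], y[best_inliers]), best_inliers


# Synthetic demonstration data (hypothetical): 50 clean samples and 10 gross outliers.
data_rng = np.random.default_rng(1)
true_params = np.array([2.0, -1.0, 0.5])
X = np.column_stack([data_rng.uniform(-5, 5, 60),
                     data_rng.uniform(-5, 5, 60),
                     np.ones(60)])
y = X @ true_params + data_rng.normal(0.0, 0.05, 60)
y[50:] += 20.0  # corrupt the last 10 samples

params, inlier_mask = ransac_calibrate(X, y)
```

Refitting on the whole inlier set at the end, rather than keeping the parameters from the best 4-sample subset, uses all of the data that survived outlier removal.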

5. Experiments

5.1. Calibration Methods and Results

- Method 1: This method uses the low-fidelity model for calibration. Specifically, the low-fidelity model considers only two extrinsic parameters, an angle and the distance L. In this case, the depth measurement mechanism of the ALACS unit degenerates into the simplified model (10). Model (10) and all collected data samples are used to estimate these two extrinsic parameters.

- Method 2: Both the low-fidelity model (10) and RANSAC techniques are used for calibration. Compared with Method 1, this method leverages RANSAC to remove outlier data.

- Method 3: This method uses the high-fidelity model together with all collected data samples for calibration, without RANSAC-based outlier removal.

- Method 4: This is the method developed in this work, which combines the high-fidelity model with RANSAC techniques for calibration. The method is detailed in Algorithm 1.
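For readers unfamiliar with two-parameter laser triangulation, the sketch below shows a textbook geometry of that kind. It is an illustrative assumption and not the paper's model (10), which is not reproduced in this text and may differ: the camera sits at the origin looking along +z, the laser source is offset by a baseline along +x, and the laser plane is tilted by an angle toward the optical axis, so that points on the plane satisfy x = baseline - z tan(angle). The function name `triangulate_depth` and its parameter names are hypothetical.

```python
import math


def triangulate_depth(x_norm: float, alpha_deg: float, baseline_mm: float) -> float:
    """Depth of a laser point under the assumed textbook geometry.

    The camera ray satisfies x = x_norm * z and the laser plane satisfies
    x = baseline_mm - z * tan(alpha), so z = baseline_mm / (x_norm + tan(alpha)).
    """
    denom = x_norm + math.tan(math.radians(alpha_deg))
    if denom <= 0.0:
        raise ValueError("Camera ray does not intersect the laser plane in front of the camera.")
    return baseline_mm / denom


# Hypothetical example: 45 degree laser plane, 100 mm baseline.
depth_on_axis = triangulate_depth(0.0, 45.0, 100.0)
depth_off_axis = triangulate_depth(1.0, 45.0, 100.0)
```

In this simplified geometry the depth depends on a single image coordinate, which is why small errors in the angle or baseline translate directly into depth errors and why careful calibration matters.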

5.2. Localization Accuracy

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Fathallah, F.A. Musculoskeletal disorders in labor-intensive agriculture. Appl. Ergon. 2010, 41, 738–743.

2. Zhao, D.A.; Lv, J.; Ji, W.; Zhang, Y.; Chen, Y. Design and control of an apple harvesting robot. Biosyst. Eng. 2011, 110, 112–122.

3. Mehta, S.; Burks, T. Vision-based control of robotic manipulator for citrus harvesting. Comput. Electron. Agric. 2014, 102, 146–158.

4. De Kleine, M.E.; Karkee, M. A semi-automated harvesting prototype for shaking fruit tree limbs. Trans. ASABE 2015, 58, 1461–1470.

5. Silwal, A.; Davidson, J.R.; Karkee, M.; Mo, C.; Zhang, Q.; Lewis, K. Design, integration, and field evaluation of a robotic apple harvester. J. Field Robot. 2017, 34, 1140–1159.

6. Xiong, J.; He, Z.; Lin, R.; Liu, Z.; Bu, R.; Yang, Z.; Peng, H.; Zou, X. Visual positioning technology of picking robots for dynamic litchi clusters with disturbance. Comput. Electron. Agric. 2018, 151, 226–237.

7. Williams, H.A.; Jones, M.H.; Nejati, M.; Seabright, M.J.; Bell, J.; Penhall, N.D.; Barnett, J.J.; Duke, M.D.; Scarfe, A.J.; Ahn, H.S.; et al. Robotic kiwifruit harvesting using machine vision, convolutional neural networks, and robotic arms. Biosyst. Eng. 2019, 181, 140–156.

8. Hohimer, C.J.; Wang, H.; Bhusal, S.; Miller, J.; Mo, C.; Karkee, M. Design and field evaluation of a robotic apple harvesting system with a 3D-printed soft-robotic end-effector. Trans. ASABE 2019, 62, 405–414.

9. Zhang, X.; He, L.; Karkee, M.; Whiting, M.D.; Zhang, Q. Field evaluation of targeted shake-and-catch harvesting technologies for fresh market apple. Trans. ASABE 2020, 63, 1759–1771.

10. Zhang, K.; Lammers, K.; Chu, P.; Li, Z.; Lu, R. System design and control of an apple harvesting robot. Mechatronics 2021, 79, 102644.

11. Bu, L.; Chen, C.; Hu, G.; Sugirbay, A.; Sun, H.; Chen, J. Design and evaluation of a robotic apple harvester using optimized picking patterns. Comput. Electron. Agric. 2022, 198, 107092.

12. Zhang, K.; Lammers, K.; Chu, P.; Dickinson, N.; Li, Z.; Lu, R. Algorithm Design and Integration for a Robotic Apple Harvesting System. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Kyoto, Japan, 23–27 October 2022; pp. 9217–9224.

13. Meng, F.; Li, J.; Zhang, Y.; Qi, S.; Tang, Y. Transforming unmanned pineapple picking with spatio-temporal convolutional neural networks. Comput. Electron. Agric. 2023, 214, 108298.

14. Wang, C.; Li, C.; Han, Q.; Wu, F.; Zou, X. A Performance Analysis of a Litchi Picking Robot System for Actively Removing Obstructions, Using an Artificial Intelligence Algorithm. Agronomy 2023, 13, 2795.

15. Ye, L.; Wu, F.; Zou, X.; Li, J. Path planning for mobile robots in unstructured orchard environments: An improved kinematically constrained bi-directional RRT approach. Comput. Electron. Agric. 2023, 215, 108453.

16. Bulanon, D.; Kataoka, T.; Ota, Y.; Hiroma, T. AE—Automation and emerging technologies: A segmentation algorithm for the automatic recognition of Fuji apples at harvest. Biosyst. Eng. 2002, 83, 405–412.

17. Zhao, J.; Tow, J.; Katupitiya, J. On-tree fruit recognition using texture properties and color data. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 263–268.

18. Wachs, J.P.; Stern, H.; Burks, T.; Alchanatis, V. Low and high-level visual feature-based apple detection from multi-modal images. Precis. Agric. 2010, 11, 717–735.

19. Zhou, R.; Damerow, L.; Sun, Y.; Blanke, M.M. Using colour features of cv. ‘Gala’ apple fruits in an orchard in image processing to predict yield. Precis. Agric. 2012, 13, 568–580.

20. Nguyen, T.T.; Vandevoorde, K.; Wouters, N.; Kayacan, E.; De Baerdemaeker, J.G.; Saeys, W. Detection of red and bicoloured apples on tree with an RGB-D camera. Biosyst. Eng. 2016, 146, 33–44.

21. Lin, G.; Tang, Y.; Zou, X.; Xiong, J.; Fang, Y. Color-, depth-, and shape-based 3D fruit detection. Precis. Agric. 2020, 21, 1–17.

22. Li, T.; Feng, Q.; Qiu, Q.; Xie, F.; Zhao, C. Occluded Apple Fruit Detection and localization with a frustum-based point-cloud-processing approach for robotic harvesting. Remote. Sens. 2022, 14, 482.

23. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

24. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

25. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

26. Chu, P.; Li, Z.; Lammers, K.; Lu, R.; Liu, X. Deep learning-based apple detection using a suppression mask R-CNN. Pattern Recognit. Lett. 2021, 147, 206–211.

27. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

28. Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

29. Kang, H.; Chen, C. Fast implementation of real-time fruit detection in apple orchards using deep learning. Comput. Electron. Agric. 2020, 168, 105108.

30. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

31. Gongal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Sensors and systems for fruit detection and localization: A review. Comput. Electron. Agric. 2015, 116, 8–19.

32. Gené-Mola, J.; Gregorio, E.; Guevara, J.; Auat, F.; Sanz-Cortiella, R.; Escolà, A.; Llorens, J.; Morros, J.R.; Ruiz-Hidalgo, J.; Vilaplana, V.; et al. Fruit detection in an apple orchard using a mobile terrestrial laser scanner. Biosyst. Eng. 2019, 187, 171–184.

33. Fu, L.; Gao, F.; Wu, J.; Li, R.; Karkee, M.; Zhang, Q. Application of consumer RGB-D cameras for fruit detection and localization in field: A critical review. Comput. Electron. Agric. 2020, 177, 105687.

34. Neupane, C.; Koirala, A.; Wang, Z.; Walsh, K.B. Evaluation of depth cameras for use in fruit localization and sizing: Finding a successor to kinect v2. Agronomy 2021, 11, 1780.

35. Kang, H.; Wang, X.; Chen, C. Accurate fruit localisation using high resolution LiDAR-camera fusion and instance segmentation. Comput. Electron. Agric. 2022, 203, 107450.

36. Xiong, Y.; Peng, C.; Grimstad, L.; From, P.J.; Isler, V. Development and field evaluation of a strawberry harvesting robot with a cable-driven gripper. Comput. Electron. Agric. 2019, 157, 392–402.

37. Tian, Y.; Duan, H.; Luo, R.; Zhang, Y.; Jia, W.; Lian, J.; Zheng, Y.; Ruan, C.; Li, C. Fast recognition and location of target fruit based on depth information. IEEE Access 2019, 7, 170553–170563.

38. Arad, B.; Balendonck, J.; Barth, R.; Ben-Shahar, O.; Edan, Y.; Hellström, T.; Hemming, J.; Kurtser, P.; Ringdahl, O.; Tielen, T.; et al. Development of a sweet pepper harvesting robot. J. Field Robot. 2020, 37, 1027–1039.

39. Kang, H.; Zhou, H.; Chen, C. Visual perception and modeling for autonomous apple harvesting. IEEE Access 2020, 8, 62151–62163.

40. Lehnert, C.; English, A.; McCool, C.; Tow, A.W.; Perez, T. Autonomous sweet pepper harvesting for protected cropping systems. IEEE Robot. Autom. Lett. 2017, 2, 872–879.

41. Liu, J.; Yuan, Y.; Zhou, Y.; Zhu, X.; Syed, T.N. Experiments and analysis of close-shot identification of on-branch citrus fruit with realsense. Sensors 2018, 18, 1510.

42. Milella, A.; Marani, R.; Petitti, A.; Reina, G. In-field high throughput grapevine phenotyping with a consumer-grade depth camera. Comput. Electron. Agric. 2019, 156, 293–306.

43. Chu, P.; Li, Z.; Zhang, K.; Chen, D.; Lammers, K.; Lu, R. O2RNet: Occluder-Occludee Relational Network for Robust Apple Detection in Clustered Orchard Environments. Smart Agric. Technol. 2023, 5, 100284.

44. Zhang, K.; Lammers, K.; Chu, P.; Li, Z.; Lu, R. An Automated Apple Harvesting Robot—From System Design to Field Evaluation. J. Field Robot. 2023; in press.

45. Geiger, A.; Moosmann, F.; Car, Ö.; Schuster, B. Automatic camera and range sensor calibration using a single shot. In Proceedings of the IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 3936–3943.

46. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

47. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

48. Raguram, R.; Chum, O.; Pollefeys, M.; Matas, J.; Frahm, J.M. USAC: A Universal Framework for Random Sample Consensus. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2022–2038.

49. Intel. Intel RealSense Product Family D400 Series Datasheet. 2023. Available online: https://www.intelrealsense.com/wp-content/uploads/2023/07/Intel-RealSense-D400-Series-Datasheet-July-2023.pdf?_ga=2.51357024.85065052.1690338316-873175694.1690172632 (accessed on 1 October 2023).

| Method | (deg) | L (mm) | (deg) | Mean Error (mm) |
|---|---|---|---|---|
| Method 1 (Low-fidelity model + All data) | 19.03 | 382.83 | / | 4.91 |
| Method 2 (Low-fidelity model + RANSAC) | 19.28 | 386.37 | / | 3.80 |
| Method 3 (High-fidelity model + All data) | 19.01 | 381.09 | 0.73 | 1.84 |
| Method 4 (High-fidelity model + RANSAC) | 19.07 | 381.98 | 0.69 | 0.39 |

| Method | Computation Time (s) |
|---|---|
| Method 1 (Low-fidelity model + All data) | 0.015 |
| Method 2 (Low-fidelity model + RANSAC) | 1.741 |
| Method 3 (High-fidelity model + All data) | 0.023 |
| Method 4 (High-fidelity model + RANSAC) | 1.824 |
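To make the comparison between the four methods easier to read, the short script below computes the relative reduction in mean error and the ratio of computation times from the values reported in the two tables above. The helper name `relative_reduction` and the dictionary names are introduced here for illustration; the numbers are copied from the tables, and no new measurements are implied.

```python
# Values copied from the calibration results and computation time tables.
mean_error_mm = {"Method 1": 4.91, "Method 2": 3.80, "Method 3": 1.84, "Method 4": 0.39}
computation_time_s = {"Method 1": 0.015, "Method 2": 1.741, "Method 3": 0.023, "Method 4": 1.824}


def relative_reduction(baseline: float, value: float) -> float:
    """Fractional reduction of `value` relative to `baseline`."""
    return (baseline - value) / baseline


# Error reduction of Method 4 relative to each of the other methods.
error_reduction = {
    name: relative_reduction(mean_error_mm[name], mean_error_mm["Method 4"])
    for name in ("Method 1", "Method 2", "Method 3")
}

# Cost of adding RANSAC to the high-fidelity model (Method 4 versus Method 3).
ransac_time_ratio = computation_time_s["Method 4"] / computation_time_s["Method 3"]

for name, reduction in error_reduction.items():
    print(f"Method 4 vs {name}: mean error reduced by {reduction:.1%}")
print(f"Method 4 takes {ransac_time_ratio:.0f}x the computation time of Method 3")
```

Read together, the two tables indicate that the RANSAC-based methods trade a longer computation time, still under two seconds, for a lower mean error.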

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, K.; Chu, P.; Lammers, K.; Li, Z.; Lu, R. Active Laser-Camera Scanning for High-Precision Fruit Localization in Robotic Harvesting: System Design and Calibration. Horticulturae 2024, 10, 40. https://doi.org/10.3390/horticulturae10010040
