Evaluation of a Probability-Based Predictive Tool on Pathologist Agreement Using Urinary Bladder as a Pilot Tissue

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Sample Selection and Processing

2.2. Building the Predictive Tool

2.3. Pathologists

2.4. Statistical Analysis

3. Results

3.1. Data Overview

3.2. Statistical Analysis of Inter-Pathologist Agreement

3.2.1. Digital Whole-Slide Images

3.2.2. Glass Slides

3.3. Evaluation of Concurrence of Pathologist Diagnosis with the Reference Diagnosis

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Column Heading | Potential Answers * |
|---|---|
| Slide code | Provided |
| Ulceration | Yes, No |
| SM_oedema | Yes, No |
| SM_haem | Yes, No |
| SM_inflamm | Yes, No |
| SM_inflamm_type | Lymphocytic, Lymphoplasmacytic, Neutrophilic, Granulomatous, No inflammation |
| Det_inflamm | Yes, No |
| Det_inflamm_type | Lymphocytic, Lymphoplasmacytic, Neutrophilic, Granulomatous, No inflammation |
| Organisms | Yes, No |
| Morphological diagnosis | Free form box |
| Etiological diagnosis | Normal, Other, Cystitis, Neoplasia, Urolithiasis |
| Comments | Free form box |

| Column Heading | Potential Answers * |
|---|---|
| Slide code | Provided |
| Urothelial ulceration | Yes, No |
| Submucosal lymphoid aggregates | Yes, No |
| Neutrophilic submucosal inflammation | Yes, No |
| Urothelial inflammation | Yes, No |
| Amount of submucosal hemorrhage | Mild, Moderate, Severe |
| Your diagnosis | Normal, Other, Cystitis, Neoplasia, Urolithiasis |
| Comments | Free form box |

| | | No Animal Information | | | | Signalment and History | | | | With Predictive Tool | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Diagnosis | Reference | P1 | P2 | P3 | P4 | P1 | P2 | P3 | P4 | P1 | P2 | P3 | P4 |
| Cystitis | 7 | 7 | 14 | 17 | 6 | 6 | 14 | 11 | 5 | 7 | 14 | 11 | 4 |
| Neoplasia | 6 | 4 | 4 | 3 | 3 | 4 | 4 | 3 | 3 | 4 | 4 | 3 | 3 |
| Urolithiasis | 6 | 9 | 0 | 1 | 6 | 9 | 0 | 8 | 5 | 7 | 0 | 8 | 3 |
| Normal | 6 | 5 | 2 | 2 | 1 | 6 | 2 | 2 | 5 | 5 | 2 | 2 | 5 |
| Other | 0 | 0 | 5 | 2 | 3 | 0 | 5 | 1 | 3 | 2 | 5 | 1 | 4 |
| Total | 25 | 25 | 25 | 25 | 19 * | 25 | 25 | 25 | 21 * | 25 | 25 | 25 | 17 * |

| | | No Animal Information | | | | Signalment and History | | | | With Predictive Tool | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Diagnosis | Reference | P1 | P2 | P3 | P4 | P1 | P2 | P3 | P4 | P1 | P2 | P3 | P4 |
| Cystitis | 7 | 6 | 15 | 15 | 10 | 6 | 15 | 9 | 10 | 6 | 14 | 11 | 11 |
| Neoplasia | 6 | 3 | 3 | 2 | 3 | 2 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |
| Urolithiasis | 6 | 8 | 0 | 1 | 3 | 9 | 0 | 4 | 3 | 8 | 0 | 4 | 1 |
| Normal | 6 | 5 | 2 | 4 | 4 | 4 | 0 | 4 | 4 | 5 | 2 | 4 | 4 |
| Other | 0 | 0 | 2 | 0 | 2 | 0 | 0 | 2 | 2 | 0 | 3 | 0 | 3 |
| Total | 25 * | 22 | 22 | 22 | 22 | 21 ** | 22 | 22 | 22 | 22 | 22 | 22 | 22 |

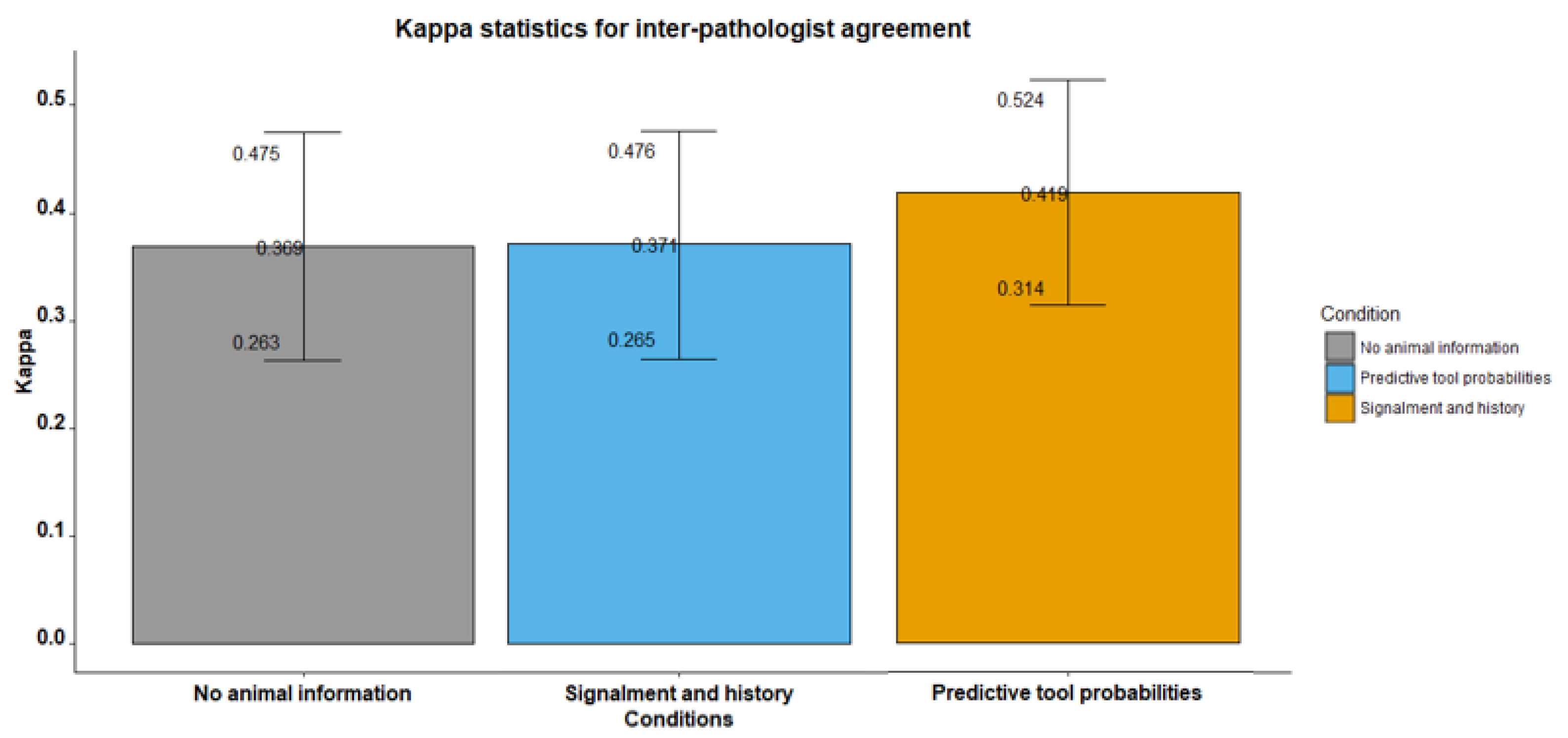

Inter-Pathologist Agreement: Fleiss Kappa Statistics (overall kappa and detailed kappa for each diagnosis)

| Information Provided | Category | Kappa | Z-Value | p-Value |
|---|---|---|---|---|
| No animal information | overall | 0.074 | 1.5 | 0.134 |
| | cystitis | 0.01 | 0.118 | 0.906 |
| | neoplasia | 0.558 | 6.833 | <0.001 |
| | normal | 0.204 | 2.501 | 0.012 |
| | other | −0.02 | −0.25 | 0.803 |
| | urolithiasis | −0.159 | −1.943 | 0.052 |
| Signalment and history | overall | 0.227 | 4.668 | <0.001 |
| | cystitis | 0.558 | 6.833 | <0.001 |
| | neoplasia | 0.765 | 9.366 | <0.001 |
| | normal | 0.268 | 3.278 | 0.001 |
| | other | −0.01 | −0.124 | 0.902 |
| | urolithiasis | 0.049 | 0.604 | 0.546 |
| Predictive tool probabilities | overall | 0.311 | 6.873 | <0.001 |
| | cystitis | 0.204 | 2.501 | 0.012 |
| | neoplasia | 0.551 | 6.75 | <0.001 |
| | normal | 0.391 | 4.788 | <0.001 |
| | other | 0.054 | 0.666 | 0.505 |
| | urolithiasis | 0.307 | 3.755 | <0.001 |
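
The p-values tabulated with each kappa appear consistent with a two-sided test of the Z-value against a standard normal distribution. The source does not state how they were computed, so the following is only a minimal sketch under that assumption; the helper name `two_sided_p` is hypothetical, and the Z-values are copied from the "No animal information" rows above.

```python
import math


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal test statistic.

    Assumption: the tabulated p-values come from a normal approximation.
    erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)).
    """
    return math.erfc(abs(z) / math.sqrt(2))


# Z-values taken from the "No animal information" block of the table.
z_values = {"overall": 1.5, "normal": 2.501, "urolithiasis": -1.943}

for category, z in z_values.items():
    print(f"{category}: z = {z}, p = {two_sided_p(z):.3f}")
```

Under this assumption the three rows give 0.134, 0.012, and 0.052, matching the tabulated p-values to three decimals.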

Inter-Pathologist Agreement: Fleiss Kappa Statistics (overall kappa and detailed kappa for each diagnosis)

| Information Provided | Category | Kappa | Z-Value | p-Value |
|---|---|---|---|---|
| No animal information | overall | 0.369 | 6.813 | <0.001 |
| | cystitis | 0.362 | 4.163 | <0.001 |
| | neoplasia | 0.688 | 7.908 | <0.001 |
| | normal | 0.604 | 6.935 | <0.001 |
| | urolithiasis | −0.045 | −0.517 | 0.605 |
| Signalment and history | overall | 0.371 | 6.901 | <0.001 |
| | cystitis | 0.688 | 7.908 | <0.001 |
| | neoplasia | 0.688 | 7.908 | <0.001 |
| | normal | 0.545 | 6.257 | <0.001 |
| | urolithiasis | 0.152 | 1.741 | 0.082 |
| Predictive tool probabilities | overall | 0.419 | 7.84 | <0.001 |
| | cystitis | 0.604 | 6.935 | <0.001 |
| | neoplasia | 0.678 | 7.794 | <0.001 |
| | normal | 0.652 | 7.488 | <0.001 |
| | urolithiasis | 0.127 | 1.464 | 0.143 |
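
For readers unfamiliar with the statistic reported in these tables, the overall Fleiss kappa can be sketched as below. This is an illustration of the textbook formula, not the authors' code (the analysis itself was run in R). The function name `fleiss_kappa` and the toy ratings are hypothetical, and the sketch assumes every slide was rated by the same number of pathologists, so slides with missing ratings would need to be handled separately.

```python
from collections import Counter


def fleiss_kappa(ratings):
    """Overall Fleiss kappa.

    ratings: one list per slide, holding one category label per rater.
    Assumes the same number of raters for every slide.
    """
    n_subjects = len(ratings)
    n_raters = len(ratings[0])
    counts = [Counter(row) for row in ratings]
    categories = {label for row in ratings for label in row}

    # Proportion of all assignments that fall in each category.
    p_j = [
        sum(c[cat] for c in counts) / (n_subjects * n_raters)
        for cat in categories
    ]
    # Observed agreement per slide: agreeing rater pairs over all pairs.
    p_i = [
        (sum(v * v for v in c.values()) - n_raters)
        / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_bar = sum(p_i) / n_subjects          # mean observed agreement
    p_e = sum(p * p for p in p_j)          # agreement expected by chance
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical toy data: three slides, four raters each.
toy = [
    ["cystitis"] * 4,
    ["cystitis", "cystitis", "neoplasia", "neoplasia"],
    ["neoplasia"] * 4,
]
print(round(fleiss_kappa(toy), 3))
```

A value of 1 indicates complete agreement, 0 indicates agreement no better than chance, and negative values (as in some urolithiasis rows) indicate agreement below chance.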

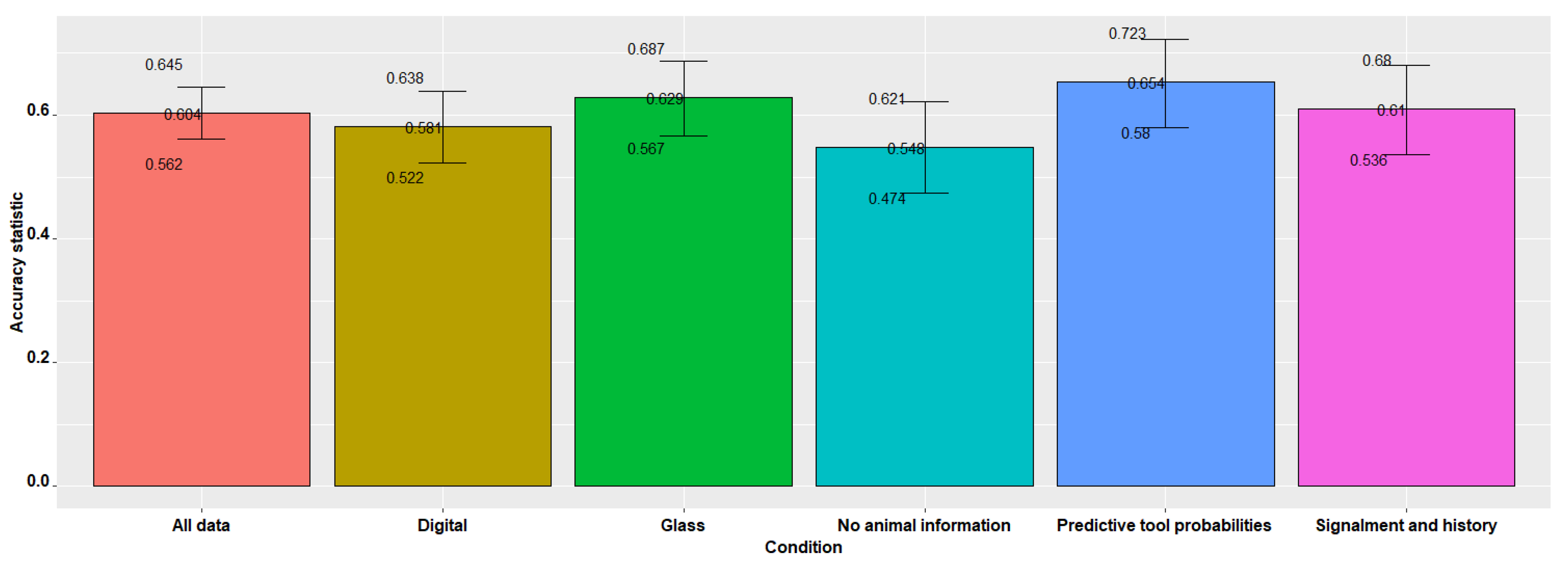

Concurrence and Kappa Statistics

| Data | Concurrence | LCL | UCL | p-Value (Concurrence) | Kappa | p-Value (Agreement) |
|---|---|---|---|---|---|---|
| All data | 0.604 | 0.562 | 0.645 | <0.001 | 0.460 | <0.001 |
| No animal information | 0.548 | 0.474 | 0.621 | <0.001 | 0.384 | <0.001 |
| Signalment and history | 0.610 | 0.536 | 0.680 | <0.001 | 0.470 | 0.002 |
| Predictive tool probabilities | 0.654 | 0.580 | 0.723 | <0.001 | 0.528 | 0.001 |
| Glass | 0.629 | 0.567 | 0.687 | <0.001 | 0.486 | <0.001 |
| Digital | 0.581 | 0.522 | 0.638 | <0.001 | 0.436 | <0.001 |
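
As a rough illustration of the two quantities in this table, concurrence can be read as the proportion of pathologist diagnoses that match the reference diagnosis, and kappa as that agreement corrected for chance. The sketch below assumes a two-rater (Cohen) kappa between pathologist and reference, which the source does not confirm; the function names and the toy diagnoses are hypothetical, and no confidence limits are computed because the interval method is not stated.

```python
from collections import Counter


def concurrence(reference, diagnoses):
    """Proportion of diagnoses that match the reference diagnosis."""
    matches = sum(r == d for r, d in zip(reference, diagnoses))
    return matches / len(reference)


def cohen_kappa(reference, diagnoses):
    """Chance-corrected agreement between two label sequences.

    Assumption: Cohen's kappa is used for pathologist vs reference.
    """
    n = len(reference)
    p_o = concurrence(reference, diagnoses)
    ref_counts = Counter(reference)
    dx_counts = Counter(diagnoses)
    # Expected agreement from the product of the marginal proportions.
    p_e = sum(ref_counts[c] * dx_counts[c] for c in ref_counts) / (n * n)
    return (p_o - p_e) / (1 - p_e)


# Hypothetical toy data: six slides.
reference = ["cystitis", "cystitis", "normal", "normal",
             "urolithiasis", "urolithiasis"]
pathologist = ["cystitis", "cystitis", "normal", "cystitis",
               "urolithiasis", "normal"]

print(round(concurrence(reference, pathologist), 3))
print(round(cohen_kappa(reference, pathologist), 3))
```

Kappa is always lower than raw concurrence when some agreement is expected by chance, which is the pattern seen in every row of the table.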

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jones, E.; Woldeyohannes, S.; Castillo-Alcala, F.; Lillie, B.N.; Sula, M.-J.M.; Owen, H.; Alawneh, J.; Allavena, R. Evaluation of a Probability-Based Predictive Tool on Pathologist Agreement Using Urinary Bladder as a Pilot Tissue. Vet. Sci. 2022, 9, 367. https://doi.org/10.3390/vetsci9070367