1. Introduction

In contemporary society, driven by rapid advancements in medical expertise and computer technology, there has been a notable increase in the use of diverse medical imaging modalities. These include a wide range of techniques, such as computed tomography (CT), magnetic resonance imaging (MRI), and facial scanning, all aimed at enhancing diagnostic accuracy and visualizing anticipated treatment outcomes. Differences in acquisition equipment and imaging methodologies lead to variations in initial poses and clinical interpretations across multimodal medical images. Spiral CT is distinguished by its precision in reconstructing bone tissue using distinct X-ray absorption coefficients, offering exceptional accuracy in bone reconstruction. Conversely, although MRI provides high accuracy for soft tissue reconstruction, its drawbacks include lengthy acquisition times and relatively high costs. In contrast, facial scanning offers the advantage of capturing intricate details, such as high-resolution color and texture features, thereby augmenting diagnostic capabilities in unique ways.

In cosmetic surgery and orthodontics, the integration of cone-beam computed tomography (CBCT) with facial scanning data represents a groundbreaking advancement with multiple benefits. By combining CBCT, which provides detailed insights into dental and skeletal structures, with facial scanning, which captures intricate facial features and soft tissue dynamics, practitioners can obtain a comprehensive understanding of patients’ anatomical characteristics. This holistic approach facilitates precise treatment planning and execution, resulting in improved surgical outcomes and increased patient satisfaction. One of the primary advantages of fusing CBCT and facial scanning data is its ability to provide three-dimensional visualization of the craniofacial complex. This comprehensive view enables surgeons and orthodontists to accurately assess the interplay between facial aesthetics and underlying skeletal structures, facilitating personalized treatment strategies tailored to each patient’s unique anatomy. Moreover, the fusion of CBCT and facial scanning data streamlines the diagnostic process by providing a seamless workflow from preoperative planning to postoperative evaluation. Clinicians can precisely simulate surgical interventions and orthodontic movements in virtual environments, enabling meticulous preoperative analysis and optimization of treatment plans. Subsequently, during surgical or orthodontic procedures, this preplanned approach translates into greater efficiency and accuracy, minimizing intraoperative complications and optimizing surgical outcomes.

The integration of multimodal medical images has shown significant utility in various medical applications. For example, Monfardini et al. [1] applied ultrasound and cone-beam CT fusion techniques for liver ablation procedures, while Sakthivel et al. [2] used PET-MR fusion for postoperative surveillance. Furthermore, Dong et al. [3] demonstrated the effectiveness of CT-MR image fusion in reconstructing complex 3D extremity tumor regions. Kraeima et al. [4] incorporated multimodal image fusion into a workflow for 3D virtual surgical planning (VSP), highlighting its potential to enhance surgical precision. However, it is worth noting that although CT visualization is currently the standard in routine 3D VSP procedures [5], the inclusion of additional modalities, such as MRI, holds promise for further refining surgical planning and execution.

A neural network for landmark detection introduces a feature point extraction module, which is employed within the algorithm to explain the process of learning feature extraction and to facilitate subsequent registration tasks. A fundamental distinction between processing 3D point cloud data and traditional 2D planar image data is the inherent disorder of point cloud data, in contrast to the sequential relationships used in image convolution operations. In addition, 3D point cloud data exhibit spatial correlation and translational–rotational invariance, which present challenges for keypoint extraction. Traditional methods for 3D point cloud feature extraction often involve dividing the data into voxel grids with spatial dependence [6] or using recurrent neural networks to eliminate input point cloud data sequences [7]. However, these approaches may result in unnecessarily large data representations and the associated computational burden. PointNet [8] introduced a novel neural network architecture designed to process point cloud data directly while preserving the permutation invariance of the input points. This enables effective classification and semantic segmentation of scenes without the need for voxelization or image conversion. However, PointNet has limitations in capturing the local features of point cloud data, which hinders its ability to effectively analyze complex scenes and limits its generalization capabilities.

The Iterative Closest Point (ICP) algorithm is a widely used method for point cloud registration, particularly for rigid model transformations. At its core, the algorithm operates by greedily establishing correspondences between two point clouds and then applying the least-squares method to determine the parameters for rigid transformation. Although originally designed for rigid registration, the ICP algorithm has been extended to accommodate nonrigid transformations [9], in which adjustable ground rigidity parameters are introduced to optimize the transformation under specified rigidity constraints. In practice, optimization of the ICP algorithm often involves approximate least-squares methods, which can be computationally intensive [10]. Despite its effectiveness, ICP presents challenges, notably its susceptibility to local minima and dependence on initialization variables. Various strategies have been proposed to mitigate these issues. For example, Go-ICP [11] employs a branch-and-bound approach to explore the entire 3D motion space, achieving global optimization by bounding a new error function. However, this method incurs significant computational overhead compared to traditional ICP, even with local acceleration.

Nevertheless, several challenges arise due to the disparities between CBCT and facial scanning data, which affect the fusion process. First, during acquisition, facial scanning captures information related to hair and clothing, which is absent in CBCT data. Second, while CBCT includes both soft tissue and bone density information, facial scanning primarily provides surface details and color information. Third, because CBCT and facial scanning data are not synchronized, variations in patients’ facial expressions and gestures between the two datasets are inevitable.

To address these challenges, we propose a novel methodology that effectively fuses disparate data types while ensuring precision and coherence in the integration process. Unlike conventional approaches that typically focus on detecting specific features and aligning corresponding points between datasets, our method leverages a pre-trained deep learning model (MediaPipe) without additional re-training. This model facilitates the detection of facial meshes in both CBCT and facial scanning data and subsequently aligns their positions and orientations according to a predefined standard. An iterative closest point (ICP)–based algorithm is then employed to refine the registration of the transformed data and ensure accurate fusion. Finally, the results were validated based on the achieved accuracy, which served as the benchmark for evaluation and quality assessment. Beyond technical accuracy, multimodal fusion of craniofacial data raises important questions of data privacy and governance. Recent work has highlighted the ethical and cybersecurity risks of handling identifiable facial data and emphasized the need for privacy-by-design strategies when developing AI-based registration pipelines [12]. In this study, we address registration feasibility while also acknowledging the importance of integrating robust consent procedures and ethical safeguards in future clinical deployments.

2. Method

2.1. Data Preparation

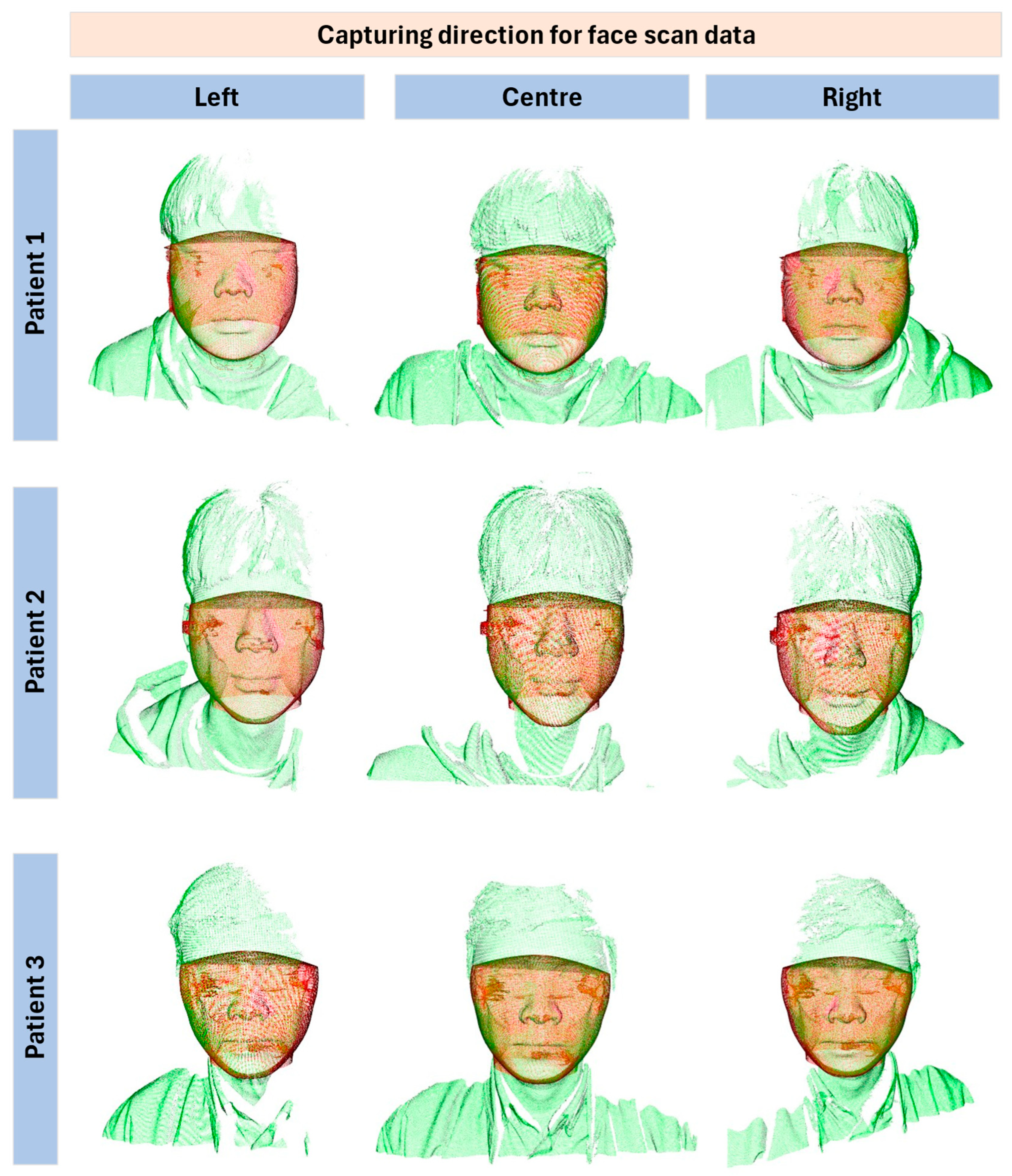

The data preparation phase involved the collection of CBCT and facial scan data from three male participants (ages 24–34). The data were obtained without issues in accordance with the standard medical imaging procedures routinely performed at the accredited Chung-Ang University Hospital. All three subjects were used consistently for both qualitative demonstration and quantitative evaluation. Each CBCT dataset contained 3D scanning data consisting of 576 slices at a resolution of 768 × 768 × 576 voxels, with a slice thickness of 0.3 mm. Full field-of-view (230 × 170 mm) images were acquired using an I-CAT CBCT scanner (KaVo Dental GmbH, Biberach, Germany) under the following operational parameters: 120 kV, 37.1 mA, voxel size of 0.3 mm, and a scan time of 8.9 s. Face scans were obtained using a Zivid-2 structured-light camera (Zivid Co., Ltd., Oslo, Norway), which produces RGB-D data (RGB image + depth). The acquisition parameters were a distance of ~0.8 m, an exposure of 6 ms, a resolution of 1920 × 1200, and a triangulation accuracy of ~0.2 mm. The CBCT and facial scans were collected independently, not simultaneously. This combination of high-resolution CBCT and 3D facial scanning provided a comprehensive dataset for analyzing facial structures in three dimensions.

To maintain the integrity of the dataset, strict data handling protocols were implemented. Each scan underwent a quality assurance process to ensure clarity and accuracy, with any data showing artifacts or inconsistencies being reacquired. CBCT and facial scans were then anonymized and catalogued in a secure database in compliance with data protection regulations. To prepare the data for the feature point learning algorithms, the raw scans were processed to align the CBCT and facial scan data, normalize the scales, and convert the data into a consistent format for subsequent analyses. This meticulous preparation was crucial for the success of the feature extraction and machine learning phases, ensuring that the dataset was robust, reliable, and ready for complex analytical tasks.

Participant demographics: all three were male, aged 24–34. Inclusion criteria: no prior facial surgery or orthodontic appliances. Exclusion criteria: significant facial hair or medical conditions altering craniofacial anatomy. Pre-scan instructions included neutral facial expression, removal of glasses, and hair tied back to avoid occlusion of landmarks. These details are summarized in Supplementary Table S1.

2.2. Overall Flowchart for Automatic Registration

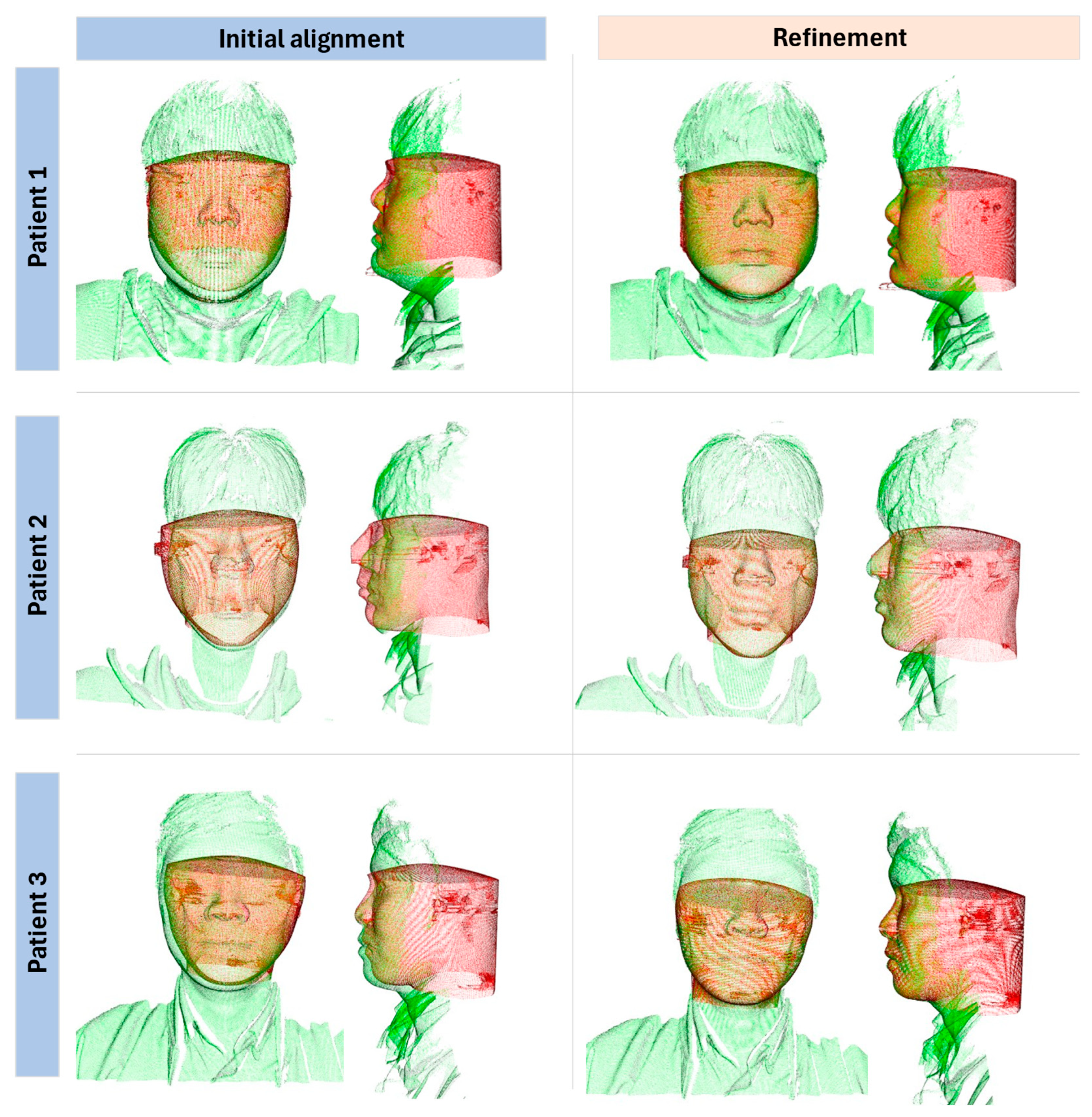

Figure 1 presents a detailed workflow designed for facial recognition and alignment, utilizing two distinct 3D environments derived from CBCT and facial scans. These environments are constructed from point cloud data obtained from the CBCT and facial scanners. Each environment incorporates a virtual camera responsible for capturing 2D images from a predefined position and orientation.

From the 2D images captured by the virtual cameras in these environments, MediaPipe [13], a widely recognized face mesh detection framework, was employed to predict the 3D feature points of the facial mesh. This prediction provides the orientation and position of the face, which serve as the reference points for matching the face to the virtual camera’s view. Subsequently, a refinement process was implemented using the ICP method to enhance registration precision. This step further optimized the accuracy of the alignment procedure.

2.3. Initial Alignment

After loading each input datum, including the CBCT and facial scan, into the corresponding 3D virtual environments, a camera was initially positioned at the origin of the coordinate system with its viewing direction aligned along the positive z–axis. The objective was to transform the point cloud such that its center aligned with a specific point along the z–axis, specifically at z = d, where d = 300 mm represents the distance from the camera to the center of the point cloud.

The transformation involved two main steps: calculating the centroid of the point cloud and translating the point cloud so that its centroid was positioned at coordinates (0, 0, d). The point cloud comprised n points $\{P_1, P_2, \ldots, P_n\}$, where each point $P_i = (x_i, y_i, z_i)$. The centroid $C = (C_x, C_y, C_z)$ of the point cloud was the average of all the points and was computed as follows:

$$C = \frac{1}{n}\sum_{i=1}^{n} P_i = \left(\frac{1}{n}\sum_{i=1}^{n} x_i,\ \frac{1}{n}\sum_{i=1}^{n} y_i,\ \frac{1}{n}\sum_{i=1}^{n} z_i\right) \tag{1}$$

To align the centroid of the point cloud with the point (0, 0, d) on the z–axis, each point in the point cloud must be translated. The translation vector $\mathbf{t}$ is calculated by subtracting the centroid coordinates from (0, 0, d) as follows:

$$\mathbf{t} = (0, 0, d) - C = (-C_x,\ -C_y,\ d - C_z) \tag{2}$$

By applying this translation to each point, the new coordinates of each point after translation are given by:

$$P_i' = P_i + \mathbf{t} = (x_i - C_x,\ y_i - C_y,\ z_i + d - C_z) \tag{3}$$
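The centering step described above can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: points are `(x, y, z)` tuples in millimetres, and the helper names `centroid` and `center_at_depth` are hypothetical rather than taken from the study's code.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def centroid(points: List[Point]) -> Point:
    """Mean of all points, i.e. C = (1/n) * sum(P_i)."""
    n = len(points)
    if n == 0:
        raise ValueError("point cloud is empty")
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def center_at_depth(points: List[Point], d: float = 300.0) -> List[Point]:
    """Translate the cloud so that its centroid lands on (0, 0, d)."""
    cx, cy, cz = centroid(points)
    # Translation vector: (0, 0, d) minus the centroid.
    tx, ty, tz = -cx, -cy, d - cz
    return [(x + tx, y + ty, z + tz) for x, y, z in points]


# Tiny made-up cloud, only to exercise the functions.
cloud = [(10.0, 20.0, 30.0), (14.0, 24.0, 34.0), (12.0, 22.0, 32.0)]
moved = center_at_depth(cloud)
print(centroid(moved))
```

Because the translation is rigid, the shape of the cloud is unchanged; only its position relative to the virtual camera moves.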

In this method, a pre-trained MediaPipe model is employed to extract feature points from the face, which are then used to determine the orientation of a person in three-dimensional space. Specifically, the rotations around the x–, y–, and z–axes are calculated. In addition, the distance between two critical facial feature points, such as the top-left and top-right points, is measured to estimate the scale or size of the face in the image.

In particular, the following parameters are computed:

1. The roll (rotation around the z–axis) is calculated by analyzing the slope of the line connecting the feature points at the outer corners of the eyes, specifically landmarks 162 and 389, as shown in Equation (4).

$$\text{roll} = \arctan\left(\frac{y_{389} - y_{162}}{x_{389} - x_{162}}\right) \tag{4}$$

where $(x_{162}, y_{162})$ and $(x_{389}, y_{389})$ are the coordinates of the respective points.

2. The yaw (rotation around the y–axis) and pitch (rotation around the x–axis) are estimated using an arrangement of points on the left and right sides of the facial mesh, including landmarks 162, 234, and 132 on the left side, landmarks 389, 454, and 361 on the right side, and landmark 19 at the top of the nose, as follows:

3. Distance between landmarks 234 and 454: These points are typically located on the left and right sides of the face near the outer edges of the eyes, respectively. The distance between these points serves as a reliable proxy for facial width, as follows:
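As a rough illustration of the roll and face-width measurements listed above, the sketch below works on a dictionary that maps landmark indices to `(x, y, z)` tuples. The landmark indices come from the text; the dictionary layout, the function names, and the sample coordinates are assumptions made for this example, and the sign of the roll depends on whether the image y-axis points up or down. Yaw and pitch are left out because their exact formulas are not recoverable here.

```python
import math
from typing import Dict, Tuple

Landmark = Tuple[float, float, float]  # (x, y, z) per landmark

# Landmark indices quoted in the text; everything else is illustrative.
LEFT_EYE_SIDE, RIGHT_EYE_SIDE = 162, 389
LEFT_FACE_EDGE, RIGHT_FACE_EDGE = 234, 454


def roll_deg(landmarks: Dict[int, Landmark]) -> float:
    """Roll about the z-axis from the slope of the 162 -> 389 line, in degrees."""
    x1, y1, _ = landmarks[LEFT_EYE_SIDE]
    x2, y2, _ = landmarks[RIGHT_EYE_SIDE]
    # atan2 equals arctan(slope) when x2 > x1 and stays defined when x2 == x1.
    return math.degrees(math.atan2(y2 - y1, x2 - x1))


def face_width(landmarks: Dict[int, Landmark]) -> float:
    """Euclidean distance between landmarks 234 and 454 (proxy for face size)."""
    return math.dist(landmarks[LEFT_FACE_EDGE], landmarks[RIGHT_FACE_EDGE])


# Made-up coordinates for a slightly tilted face.
landmarks = {
    162: (100.0, 200.0, 0.0),
    389: (300.0, 220.0, 0.0),
    234: (90.0, 260.0, 0.0),
    454: (310.0, 282.0, 0.0),
}
print(round(roll_deg(landmarks), 2), round(face_width(landmarks), 2))
```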

The current roll, pitch, yaw, x, y, and size values of the point cloud or face are first compared with the predetermined target values. The differences between these values are then used to compute the corrections required to accurately align the point clouds with the targets. These corrections were calculated using a proportional controller (P-controller), which scales the errors by a proportional constant to determine the necessary adjustments.

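Let:

- $\theta_{roll}$ represent roll, $\theta_{yaw}$ represent yaw, and $\theta_{pitch}$ represent pitch.
- $s$ represent the current size of the face in an image.
- $(x, y)$ represent the current position of the top of the nose in the image.

The target values are:

- $\theta_{roll}^{*}$, $\theta_{yaw}^{*}$, and $\theta_{pitch}^{*}$ for orientation.
- $x^{*}$, $y^{*}$, and $s^{*}$ for position and size.

After the errors $e_v = v^{*} - v$ between the current and target values are computed for each quantity $v \in \{\theta_{roll}, \theta_{yaw}, \theta_{pitch}, x, y, s\}$, the corrections are obtained using a P-controller with gain $K_p$. A sensitivity analysis with gain values ranging from 0.005 to 0.05 confirmed that the chosen gain achieved the most stable convergence with the best trade-off between accuracy and speed (see Supplementary Table S2). The corrections are calculated as follows:

$$\Delta v = K_p\, e_v = K_p\,(v^{*} - v)$$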
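A proportional correction of this kind amounts to one multiplication per controlled quantity. The sketch below is a minimal illustration: the gain of 0.02 is simply a value inside the 0.005 to 0.05 range mentioned above, and the current and target poses are placeholders, not the study's settings.

```python
from typing import Dict

Pose = Dict[str, float]


def p_corrections(current: Pose, target: Pose, gain: float) -> Pose:
    """Scale each error (target - current) by the proportional gain."""
    return {key: gain * (target[key] - current[key]) for key in target}


# Placeholder values: angles in degrees, position and size in image units.
current = {"roll": 10.0, "yaw": -4.0, "pitch": 2.0, "x": 0.55, "y": 0.40, "size": 0.30}
target = {"roll": 0.0, "yaw": 0.0, "pitch": 0.0, "x": 0.50, "y": 0.50, "size": 0.35}

GAIN = 0.02  # assumed; picked from the reported sweep range only for illustration
step = p_corrections(current, target, GAIN)
print(step)
```

A small gain moves the pose only a fraction of the way toward the target per iteration, which trades speed for stability; this is the trade-off the sensitivity analysis refers to.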

The transformation of a point cloud typically involves multiple rotations and translations, as expressed in the following equations. These transformations are often combined into a single matrix operation that can be efficiently applied to each point in the cloud, as shown in Algorithm 1.

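To simplify the application of multiple transformations, they were combined into a 4 × 4 transformation matrix using homogeneous coordinates, which allowed rotation and translation to be handled in a single operation.

$$T = \begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^{\top} & 1 \end{bmatrix}$$

where $R$ is the product of the individual rotation matrices about the x–, y–, and z–axes and $\mathbf{t}$ is the translation vector.

Transformation matrix $T$ can be applied to each point $P$ in the point cloud. If $P$ is a point with coordinates $(x, y, z)$, represented in homogeneous coordinates as $\tilde{P} = (x, y, z, 1)^{\top}$, the transformed point $P'$ is obtained by:

$$\tilde{P}' = T\,\tilde{P}$$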
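The homogeneous-coordinate formulation can be illustrated with plain nested lists. In this sketch the rotation order $R = R_z R_y R_x$ is an assumption made only for the example, since the order is not fixed by the text above, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of two nested-list matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def rot_x(t: float) -> Matrix:
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(t: float) -> Matrix:
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(t: float) -> Matrix:
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def make_transform(roll: float, yaw: float, pitch: float,
                   t: Sequence[float]) -> Matrix:
    """Build a 4x4 matrix [[R, t], [0, 1]] with R = Rz(roll) Ry(yaw) Rx(pitch)."""
    r = matmul(rot_z(roll), matmul(rot_y(yaw), rot_x(pitch)))
    return [r[0] + [t[0]], r[1] + [t[1]], r[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def apply_transform(T: Matrix, p: Sequence[float]) -> Tuple[float, float, float]:
    """Lift p to (x, y, z, 1), multiply by T, and drop the last component."""
    h = (p[0], p[1], p[2], 1.0)
    x, y, z = (sum(T[i][j] * h[j] for j in range(4)) for i in range(3))
    return (x, y, z)


# Rotate 90 degrees about z, then shift 300 mm along z.
T = make_transform(math.pi / 2, 0.0, 0.0, (0.0, 0.0, 300.0))
print(apply_transform(T, (1.0, 0.0, 0.0)))
```

Packing rotation and translation into one matrix means that successive updates can be composed by matrix multiplication before touching the point cloud, so each point is transformed once.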

MediaPipe directly provides 3D landmark coordinates (x, y, z) defined with respect to the virtual camera system used to generate 2D projections of both CBCT and face scan data. Because both modalities are projected through the same virtual camera, their extracted 3D landmarks are inherently expressed in the same coordinate system. This enables us to use MediaPipe outputs directly for rigid pose initialization prior to ICP refinement, without any intermediate 2D-to-3D conversion. Since Figure 1 and Figure 2 already illustrate the projection and alignment workflow, no new figure was added.

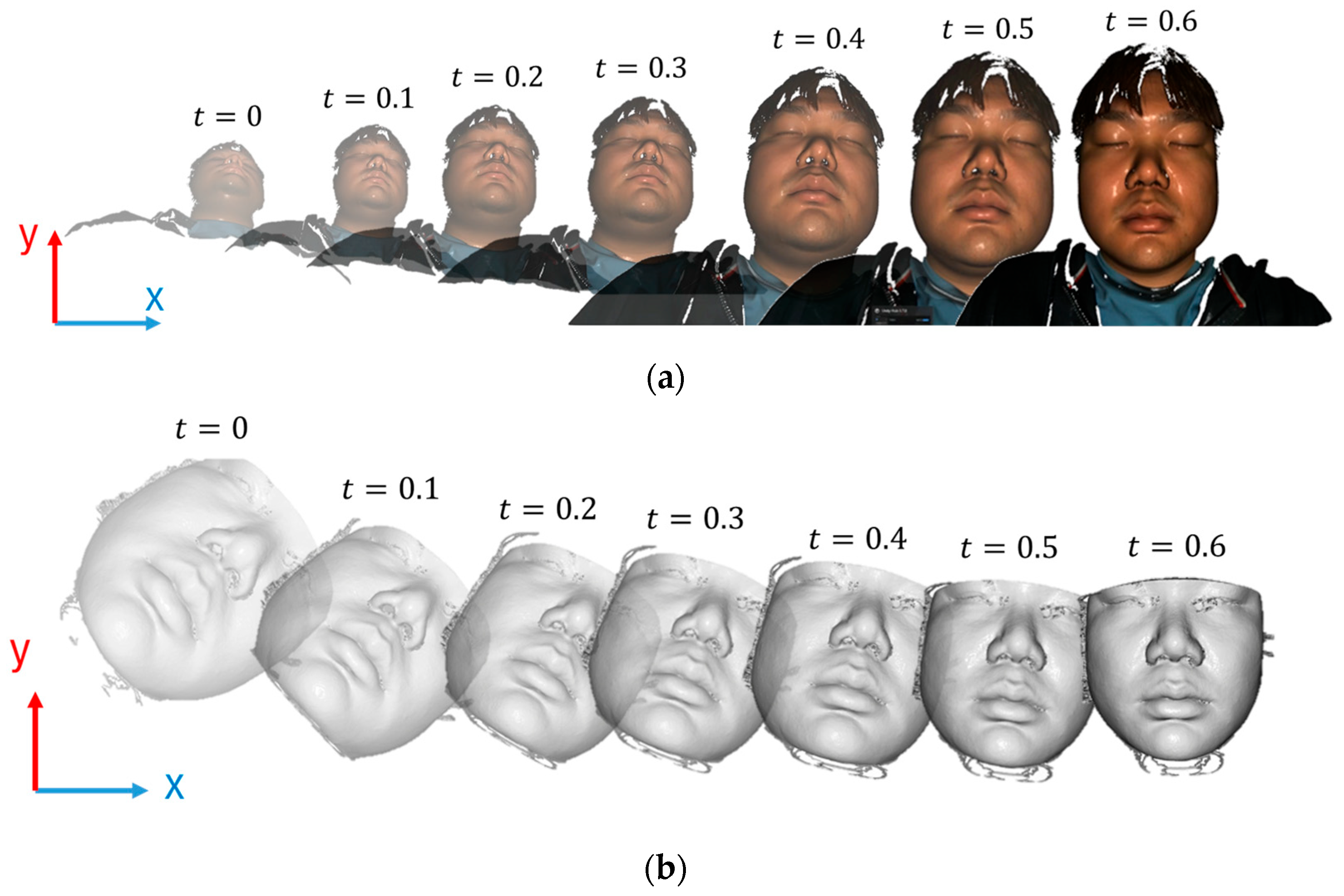

This operation, summarized in Algorithm 1, is performed for every point in the cloud, effectively and efficiently realigning the entire point cloud as required, as shown in Figure 2.

| Step | Algorithm 1: Pseudocode for initial alignment of CBCT and face scan data by face mesh detection (3D initial alignment with MediaPipe) |
| --- | --- |
| 1: | input: |
| 2: | |
| 3: | , , and |
| 4: | |
| 5: | |
| 6: | repeat |
| 7: | |
| 8: | Detect the feature points by MediaPipe |
| 9: | Calculate |
| 10: | Calculate |
| 11: | Calculate |
| 12: | Calculate transform matrix |
| 13: | Update |
| 14: | until , |
| 15: | |
| 16: | return |
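The repeat-until structure of Algorithm 1 can be mimicked with a small closed loop. The sketch below is a toy under stated assumptions: `measure` stands in for rendering the cloud and running the face mesh detector, `apply_correction` stands in for transforming the point cloud, and the gain, tolerance, and iteration cap are illustrative values rather than the study's settings.

```python
from typing import Callable, Dict

Pose = Dict[str, float]


def align_until_converged(
    measure: Callable[[], Pose],
    apply_correction: Callable[[Pose], None],
    target: Pose,
    gain: float = 0.05,   # assumed value, not the paper's setting
    tol: float = 1e-3,    # assumed stopping tolerance
    max_iter: int = 2000,
) -> int:
    """Repeat measure -> correct until every error is below tol.

    Returns the number of measurements taken.
    """
    for iteration in range(1, max_iter + 1):
        current = measure()  # in the real pipeline: render + detect landmarks
        errors = {k: target[k] - current[k] for k in target}
        if max(abs(e) for e in errors.values()) < tol:
            return iteration
        apply_correction({k: gain * e for k, e in errors.items()})
    return max_iter


# Toy stand-in for the renderer and detector: the pose is a plain dict.
state = {"roll": 10.0, "yaw": -6.0, "size": 0.8}
goal = {"roll": 0.0, "yaw": 0.0, "size": 1.0}


def measure() -> Pose:
    return dict(state)


def apply_correction(step: Pose) -> None:
    for key, delta in step.items():
        state[key] += delta


iterations = align_until_converged(measure, apply_correction, goal)
print(iterations, state)
```

In this toy each error shrinks by a factor of (1 - gain) per pass, which is why a proportional loop converges smoothly but needs many cheap iterations.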

2.4. Refinement via ICP Algorithm

After rough alignment, ICP (Iterative Closest Point), a well-known local alignment method, was applied for refinement.

To explain ICP, let $P$ denote the source point cloud and $Q$ denote the target point cloud. For each point $p$ in $P$, the algorithm attempts to find the nearest neighbor $q$ in $Q$. If the Euclidean distance between them is less than a specified threshold, they are considered a corresponding pair and included in the set $K$. The estimation then proceeds to find the transformation matrix $T$ that can be applied to the source point cloud such that the alignment between these two point clouds minimizes an objective function, denoted as E(T), thereby improving the registration.
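The correspondence step can be sketched with a brute-force nearest-neighbor search. This is purely illustrative: practical ICP implementations use a spatial index such as a k-d tree instead of the quadratic scan below, and the function name is hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def find_correspondences(
    source: List[Point], target: List[Point], threshold: float
) -> List[Tuple[Point, Point]]:
    """Pair each source point with its nearest target point if close enough."""
    pairs = []
    for p in source:
        q = min(target, key=lambda candidate: math.dist(p, candidate))
        if math.dist(p, q) < threshold:  # reject pairs farther than the threshold
            pairs.append((p, q))
    return pairs


source = [(0.0, 0.0, 0.0), (5.0, 5.0, 5.0)]
target = [(0.1, 0.0, 0.0), (9.0, 9.0, 9.0)]
print(find_correspondences(source, target, threshold=1.0))
```

The second source point has no target point within the threshold, so it contributes nothing to the transformation estimate; this filtering is what makes the result sensitive to the threshold value.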

There are two primary types of ICP algorithms that use different objective functions. The first is point-to-point ICP [14], which employs the following objective function:

$$E(T) = \sum_{(p, q) \in K} \lVert Tp - q \rVert^{2}$$

The second is the point-to-plane ICP [15] algorithm, which uses the objective function

$$E(T) = \sum_{(p, q) \in K} \left( (Tp - q) \cdot n_q \right)^{2}$$

where $n_q$ is the normal of the corresponding point $q$. After estimating the transformation matrix $T$ to minimize the objective function $E(T)$, the algorithm iterates, updating the new corresponding point pairs set $K$. This new set includes points from the source point cloud $P$ and the target point cloud $Q$ with Euclidean distances less than the threshold value. The updated set is then used to estimate another transformation $T$, which is applied to the source point cloud $P$ to minimize $E(T)$. The algorithm repeats until the change in the objective function $E(T)$ is less than a specified threshold or the maximum number of iterations is reached. The final transformation matrix is the product of the transformation matrices computed over all iterations.

$$T_{final} = T_k\, T_{k-1} \cdots T_1$$

where $k$ is the number of iterations performed to meet the objective function.
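To make the difference between the two objective functions concrete, the sketch below evaluates both for a list of correspondences in which the source points are assumed to be already transformed. The helper names are hypothetical, and the normals are taken at the target points, which is one common convention.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Pair = Tuple[Point, Point]  # (transformed source point, target point)


def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Point, b: Point) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def point_to_point_error(pairs: List[Pair]) -> float:
    """Sum of squared Euclidean distances between paired points."""
    return sum(_dot(_sub(p, q), _sub(p, q)) for p, q in pairs)


def point_to_plane_error(pairs: List[Pair], normals: List[Point]) -> float:
    """Sum of squared distances measured along each target normal."""
    return sum(_dot(_sub(p, q), n) ** 2 for (p, q), n in zip(pairs, normals))


# A source point that has slid sideways on a plane whose normal is +z.
pairs = [((1.0, 0.0, 0.0), (0.0, 0.0, 0.0))]
normals = [(0.0, 0.0, 1.0)]
print(point_to_point_error(pairs), point_to_plane_error(pairs, normals))
```

The point-to-plane error ignores motion within the tangent plane, so locally flat regions such as cheeks and forehead do not penalize sliding; this is one intuition for its faster convergence on smooth surfaces.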

Obviously, the transformation matrix depends on the threshold value set, as the threshold serves as a criterion to filter out corresponding points, and the set of corresponding points K provides the information needed to estimate the transformation matrix T. It can therefore be inferred that if the threshold value is set too high, points that do not truly correspond will be accepted, leading to a misleading estimate of the transformation matrix T. Conversely, if the threshold is set too low, the criteria become too strict and fewer points are accepted, which will similarly lead to misleading results.

Figure 3 clearly shows the dependence of the estimated result on the threshold value. In case (a), the threshold is too large, resulting in all points being selected and many incorrect predictions being accepted. Conversely, case (b) shows that the threshold is too small, leading to an insufficient number of points for accurately estimating the transformation matrix T. The optimal threshold value (c) lies somewhere within this range, producing a point set filter suitable for estimation. Choosing a reasonable threshold depends heavily on the input data, as there is no universal standard. Therefore, the method for determining an appropriate threshold value will be discussed in the following sections.

Owing to the particular manner in which the ICP algorithm works, it requires a sufficiently good initial alignment. Once the corresponding point-pair set K is predicted incorrectly, the estimation of the transformation matrix T will consequently be wrong, and subsequent iterations will rely on the erroneous information from the previous iteration to continue estimating. This leads to the objective function E(T) being stuck in a local minimum and unable to converge to the global minimum. Based on experiments, we found that our initial alignment step was effective in keeping the ICP algorithm on the right track.

Several studies have shown that the point-to-plane ICP algorithm offers the potential for faster convergence than traditional point-to-point ICP [16]. Therefore, in this study, we used the point-to-plane ICP algorithm implemented in the open-source library Open3D [17]. In addition, because iteration consumes substantial computational resources and considerable time, we stopped iterating once the change in E(T) fell below a preset tolerance, or after a maximum of 30 cycles if E(T) had not yet reached the threshold value. This limitation reduced the time required to estimate the final transformation matrix. The results show that it reliably reduces computation time in cases without a well-aligned initialization.

2.5. Refinement Optimization

Based on our experimental results, a discrepancy between theoretical expectations and practical outcomes was observed. Accordingly, setting a smaller threshold value for the objective function E(T) does not necessarily lead to better registration results. Since the final goal is to assist doctors in medical diagnosis, we aim for results where the alignment is visually acceptable to the naked eye rather than merely achieving an objective function with a sufficiently small value. Therefore, a metric that can be used to evaluate the quality of alignment and automatically determine the optimal threshold value is required.

Two basic metrics were used to measure the quality of alignment between two point clouds [16, 17]: (1) fitness, which represents the proportion of inlier correspondences relative to the total number of points in the source cloud (i.e., the overlap ratio between the two point clouds), and (2) inlier root mean square error (inlier RMSE), which represents the root mean square distance of all inlier pairs that satisfy the proximity condition (≤0.5 mm in this study). It should be noted that in multimodal fusion between CBCT (covering the full head volume) and a partial facial surface scan, the effective overlap region is inherently small; therefore, fitness values are expected to be lower than in unimodal or full-surface registration.

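Specifically, the metrics operate after refinement with a certain threshold value has been completed, and the algorithm continues to find the corresponding point set K = {(p, q)}, just as in the previous ICP algorithm. Corresponding point pairs with a Euclidean distance of less than a set value (in this study, the set value was chosen as 0.5 mm) were considered inlier correspondences. The two evaluation parameters were calculated as follows:

$$\text{fitness} = \frac{|K|}{|P|}$$

$$\text{inlier RMSE} = \sqrt{\frac{1}{|K|} \sum_{(p, q) \in K} \lVert p - q \rVert^{2}}$$

where $|P|$ is the number of points in the source cloud, $p$ is taken after applying the estimated transformation, and all point pairs with $\lVert p - q \rVert$ less than 0.5 mm are included in the calculation.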
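Both metrics can be computed directly from their definitions. The sketch below assumes that the source points have already been transformed, uses a brute-force nearest-neighbor search for brevity, and applies a strict "less than" test for the 0.5 mm inlier distance; the function name and sample data are illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def evaluate_alignment(
    source: List[Point], target: List[Point], inlier_dist: float = 0.5
) -> Tuple[float, float]:
    """Return (fitness, inlier RMSE) for an already-transformed source cloud."""
    squared = []
    for p in source:
        d = min(math.dist(p, q) for q in target)  # distance to nearest target point
        if d < inlier_dist:
            squared.append(d * d)
    fitness = len(squared) / len(source)
    rmse = math.sqrt(sum(squared) / len(squared)) if squared else 0.0
    return fitness, rmse


# Two of the four source points lie within 0.5 mm of the target surface.
source = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (10.0, 0.0, 0.0), (20.0, 0.0, 0.0)]
target = [(0.0, 0.0, 0.3), (1.0, 0.0, 0.4), (50.0, 0.0, 0.0)]
fitness, rmse = evaluate_alignment(source, target)
print(fitness, round(rmse, 4))
```

Because the RMSE is computed over inliers only, it can stay small even when fitness is low, which is why the two values need to be read together.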

To ensure efficiency, the algorithm limits the distance between corresponding pairs of points to less than a specified value. This approach minimizes the risk of falsely identifying noncorresponding points. Pairs of points that satisfy this criterion are considered inliers, indicating the true correspondence between the two point clouds. These inlier pairs were the only ones included in the evaluation parameters.

2.6. Reduction in Computing Time

In all the datasets used, the optimal threshold value was consistently achieved at approximately 3.0. However, to ensure that no potential threshold values were overlooked, the search range was expanded. In this study, a threshold value ranging from 0.5 to 10.0, with increments of 0.5, was chosen. Voxel downsampling with step sizes ranging from 0.5 to 1.5 mm was used adaptively to achieve stable alignment across subjects. This parameter was adjusted depending on the density of the input scans, ensuring robust performance without significant variance (<0.05 mm) in inlier RMSE.
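Voxel downsampling can be sketched as bucketing points into a regular grid and keeping one representative per occupied cell. Replacing each cell's points with their centroid, as done below, is one common variant and is assumed here for illustration; the function name is hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace all points that fall into the same voxel by their centroid."""
    buckets: Dict[Tuple[int, int, int], List[Point]] = defaultdict(list)
    for p in points:
        key = (
            math.floor(p[0] / voxel_size),
            math.floor(p[1] / voxel_size),
            math.floor(p[2] / voxel_size),
        )
        buckets[key].append(p)
    reduced = []
    for members in buckets.values():
        n = len(members)
        reduced.append((
            sum(m[0] for m in members) / n,
            sum(m[1] for m in members) / n,
            sum(m[2] for m in members) / n,
        ))
    return reduced


dense = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.1, 0.1)]
print(voxel_downsample(dense, voxel_size=1.0))
```

A larger voxel size removes more points and speeds up nearest-neighbor queries, at the cost of smoothing fine surface detail.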

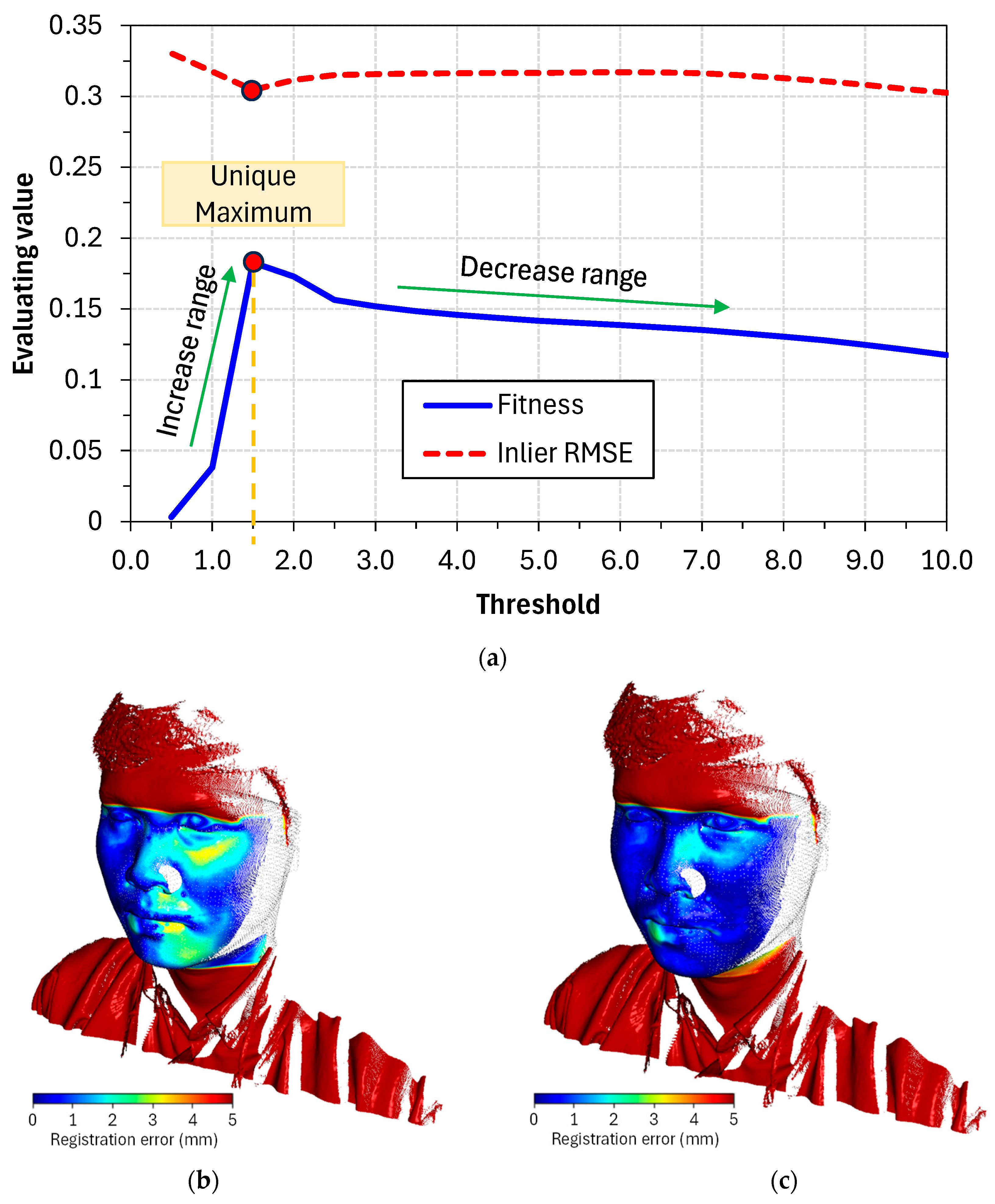

Through graph analysis, as shown in Figure 4, the fitness response depended on the threshold, and an important property was identified. Following the decreasing trend of the threshold, fitness gradually increased because the objective function E(T) raised its criterion. However, when the threshold decreased beyond a certain point, fitness ceased to increase and instead dropped sharply to zero. In other words, within the threshold range of 0 to 10, a distinct maximum always exists.

Based on the identified features, an effective search strategy was proposed. The algorithm began calculations with threshold values starting at 10 and decreasing incrementally by one. During this process, the fitness value was monitored and expected to increase. The calculation continued until the fitness value began to decline, at which point the algorithm stopped and commenced a localized search. This localized search explored neighboring points with a finer step size of 0.5 to identify the configuration that yielded the highest fitness value, which was then considered the optimal threshold value. This search methodology, as shown in Algorithm 2, minimized the computation required for neighboring threshold values while maintaining the precision of a 0.5-step-size search. In addition, by incorporating downsampling, which reduced the number of points from the original dataset before commencing the calculations, this strategy significantly improved processing speed.

| Step | Algorithm 2: Pseudocode for precise alignment based on the ICP-based method (search for optimal threshold value) |
| --- | --- |
| 1: | input: |
| 2: | Target point cloud |
| 3: | Source point cloud |
| 4: | |
| 5: | repeat: |
| 6–10: | Find transformation matrix at the current threshold; decrease the threshold by 1 |
| 11–14: | Calculate fitness; calculate inlier RMSE |
| 15: | = |
| 16: | until the fitness begins to decline |
| 17–27: | Local search around the best threshold with a step size of 0.5: find transformation matrix; calculate fitness; calculate inlier RMSE |
| 28: | return the transformation matrix with the highest fitness |
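The coarse-to-fine search described in this section can be sketched independently of ICP itself. In the sketch below, `fitness_at` is a hypothetical callable that would, in the real pipeline, run ICP at the given threshold and return the resulting fitness; here it is replaced by a toy curve with a single peak so that the example is self-contained.

```python
from typing import Callable, List, Tuple


def search_threshold(
    fitness_at: Callable[[float], float],
    start: float = 10.0,
    coarse_step: float = 1.0,
    fine_step: float = 0.5,
) -> Tuple[float, float]:
    """Walk the threshold downward until fitness drops, then refine locally."""
    best_t, best_f = start, fitness_at(start)
    t = start - coarse_step
    while t > 0:
        f = fitness_at(t)
        if f < best_f:
            break  # fitness began to decline: stop the coarse pass
        best_t, best_f = t, f
        t -= coarse_step
    # Local search on both sides of the coarse optimum with the finer step.
    for candidate in (best_t - fine_step, best_t + fine_step):
        if candidate > 0:
            f = fitness_at(candidate)
            if f > best_f:
                best_t, best_f = candidate, f
    return best_t, best_f


calls: List[float] = []


def toy_fitness(t: float) -> float:
    """Made-up fitness curve: peak at 3.5, collapse to zero below 2.0."""
    calls.append(t)
    return 0.0 if t < 2.0 else 0.2 - 0.01 * abs(t - 3.5)


best_t, best_f = search_threshold(toy_fitness)
print(best_t, best_f, len(calls))
```

On this toy curve the search evaluates far fewer thresholds than the 20 values of an exhaustive 0.5-step sweep from 0.5 to 10.0, which is the saving the strategy aims for; the exact count depends on where the peak lies.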

4. Discussion

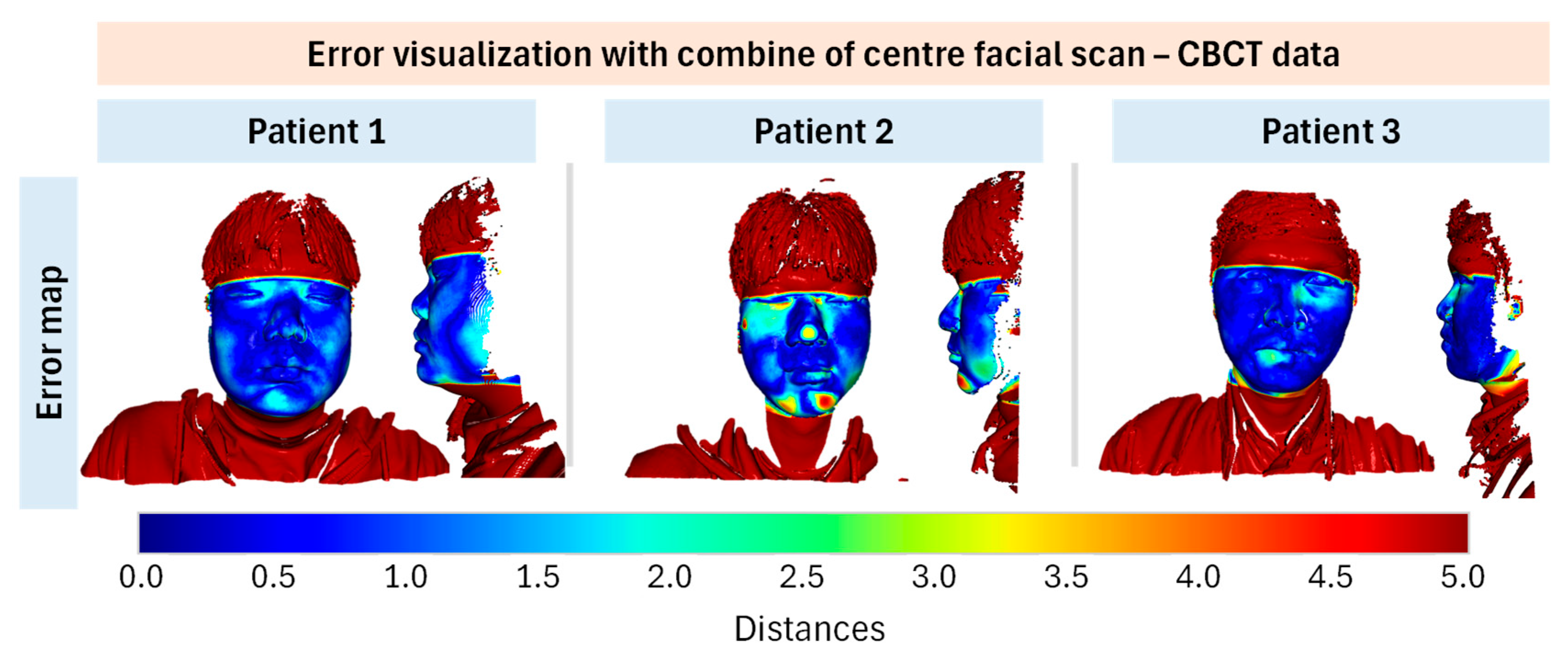

In validating the developed method on the registration of face scan and CBCT data, the matching results showed an average inlier RMSE of ~0.3 mm under our evaluation protocol. However, due to low overlap ratios (~15–20%), these values should not be over-interpreted as clinical accuracy. Future work will include fiducial-based target registration error, synchronized capture systems, and validation on larger cohorts. Limitations include small sample size, lack of anatomical ground truth, sensitivity to facial expressions and occlusions, and dependency on MediaPipe’s general-purpose training. Nevertheless, this feasibility study demonstrates the potential of face mesh–guided initialization for improving ICP-based multimodal registration.

While this feasibility study demonstrates promising results, the sample consisted of only three young adult male participants. This narrow cohort limits the generalizability of our findings across age ranges, sexes, and patients with diverse clinical presentations. In particular, facial hair, orthodontic appliances, surgical alterations, and other medical conditions may significantly affect surface quality and registration robustness. These factors must be systematically evaluated in future studies before clinical deployment.

Although inlier RMSE values remained consistently sub-millimeter, the fitness metric was relatively low (~0.15). This outcome mainly reflects the limited overlap between the full CBCT head volume and the partial facial surface scan, rather than failure of the registration algorithm. While local errors (RMSE, 95th percentile error, Hausdorff distance) confirmed alignment within sub-millimeter accuracy, the low fitness indicates that robustness must be carefully interpreted. Clinical feasibility will require further validation to assess how such overlap limitations influence treatment planning accuracy and reproducibility.

A prominent method involves the use of two-dimensional (2D) projection images to detect 3D facial landmarks, followed by fine registration using ICP. The primary advantage of this method is its high accuracy. By using 2D projections to detect landmarks, this method ensures precise alignment between the CBCT and facial scan data, which is crucial for applications such as 3D digital treatment planning and orthognathic surgery [18]. Additionally, the automation of this process reduces the need for manual intervention, thereby minimizing human error. However, this method is computationally intensive and requires significant processing power and time, particularly for high-resolution scans. Moreover, the accuracy of landmark detection can be affected by noise and artifacts in the 2D projection images, potentially leading to misregistration. This sensitivity to image quality necessitates high-quality input data, which may not always be available.

Deep learning techniques have been increasingly applied to the fusion of CBCT and intraoral scans to enhance the detail and accuracy of 3D dental models. A major advantage of using deep learning is its robustness. Deep learning models can handle various types of input data, thereby improving the overall robustness of the fusion process. Additionally, these models can significantly enhance the detail and accuracy of the resulting 3D models, which is beneficial for clinical applications [19]. However, deep learning models require large amounts of annotated training data, which can be difficult and expensive to obtain. This data-intensive requirement poses a significant challenge for the development and refinement of these models. Furthermore, the black-box nature of deep learning algorithms can render the decision-making process opaque. This lack of transparency can be problematic when verifying the accuracy and reliability of results, especially in clinical settings where precision is paramount.

CDFRegNet, an unsupervised network designed for CT-to-CBCT registration, addresses intensity differences and poor image quality in CBCT and achieves high registration accuracy. This method is advantageous because it does not require labeled training data, making it easier to implement and more flexible in various scenarios. It is particularly effective in handling typical issues associated with CBCT images, such as low contrast and noise [20]. However, similar to other complex models, CDFRegNet is computationally demanding. The training and execution of such networks require substantial computational resources that may not be readily available in all clinical settings. Additionally, although the method is unsupervised, it still relies on the quality of the input data and the architecture of the network, which means that suboptimal settings can lead to less accurate results.

The fusion of CT and 3D face scan data offers significant advantages, such as enhanced diagnostic accuracy by providing a comprehensive view of both bone and soft tissue structures and improved visualization, which aids in a better understanding of anatomical relationships. The integration of this method with IoT platforms facilitates real-time data sharing and remote diagnostics, which are beneficial for telemedicine [21]. In addition, it enhances patient comfort and safety by reducing the need for multiple imaging sessions, thereby minimizing radiation exposure. However, the process involves high computational complexity, requiring sophisticated algorithms and significant processing power to accurately align and merge the data.

A significant advantage of the proposed method over the feature point detection approach is its robustness to external regions, such as body parts below the neck, clothing, and other non-facial areas. Traditional methods often require manual or automated exclusion of these external regions or an expanded training dataset to accommodate these conditions in deep learning models. By contrast, the proposed method uses the automatic alignment of the input data based on face mesh detection, effectively mitigating the influence of these external regions. This approach ensures that registration performance remains unaffected by non-relevant areas, leading to more accurate and reliable outcomes.

In other research approaches, full-head data from both CBCT and face scans, encompassing both the face and back of the head, were required to extract features. However, this extensive data requirement is unnecessary for the proposed method. Our approach separately aligns the CBCT and face scan data based on the extracted face mesh. 3D data alignment is achieved gradually with continuous updates to the face mesh results and adjustments using a P-controller until convergence is reached. This method simplifies the process and enhances alignment accuracy without the need for full-head data.

However, this method presents two notable limitations. First, at the initial stage, the face mesh detection model must accurately recognize the patient’s face to initiate the alignment process. This necessitates an appropriate setup of the relative positioning between the 3D data and the virtual camera within the 3D environment to ensure reliable alignment. Second, the total alignment runtime is around 10 s, which is still suboptimal and warrants further reduction in future work. All experiments were performed on a workstation with an Intel® Core™ i9-13900H CPU, 32 GB RAM, and an NVIDIA RTX 4070 Laptop GPU. The median runtime per case was 10.2 s, comprising approximately 2.5 s for pose initialization and 7.7 s for ICP refinement. A detailed breakdown of runtimes for each patient is provided in Supplementary Table S3.

In addition to methodological limitations, ethical and governance considerations are central to deploying automated CBCT–face fusion in clinical workflows. As emphasized by Kováč et al. [12], facial analysis systems must be designed with privacy-by-design principles and explicit consent management to mitigate risks of misuse or unauthorized re-identification. Our pipeline, though technically promising, must therefore be accompanied by institutional safeguards, audit trails, and transparent data governance protocols before integration into clinical decision-making.